Designing Personalization Cues for Museum Robots: Docent Observation and Controlled Studies

Abstract

1. Introduction

2. Related Works

2.1. Public Service HRI

2.2. Personalization in HRI: Data vs. Immediate Cues

2.3. Social Cognitive Mechanisms Underlying Personalization

- RQ1. Do competence cues enhance users’ social impressions of a museum robot?

- RQ2. Does knowledge alignment increase perceived personalization in brief museum encounters?

- RQ3. Do preference verification and memory cues strengthen relational personalization in repeated encounters?

3. Methods

3.1. Hypotheses

- Hypothesis 1-1: Users will attribute greater human-likeness to a robot with higher recognition accuracy.

- Hypothesis 1-2: Users will perceive a robot with higher recognition accuracy as more animated and lifelike.

- Hypothesis 1-3: Users will express higher affinity and emotional acceptance toward a robot with higher recognition accuracy.

- Hypothesis 1-4: Users will assess a robot with higher recognition accuracy as having higher cognitive competence.

- Hypothesis 1-5: Users will feel more secure and relaxed in the presence of a robot with higher recognition accuracy.

- Hypothesis 2: When a robot’s explanation is misaligned with a visitor’s background knowledge, the perceived personalization of the service will be significantly reduced.

- Hypothesis 3: When a robot delivers personalized services based solely on inferred user preferences without explicit inquiry, the perceived personalization of the service will be significantly reduced.

- Hypothesis 4: When a robot fails to demonstrate memory of a user’s prior interaction (e.g., name, past choices, or preferences), the perceived personalization of the service will be significantly reduced.
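Hypotheses 1-1 to 1-5 line up one-to-one with the five dimensions of the Godspeed questionnaire (anthropomorphism, animacy, likeability, perceived intelligence, perceived safety; Bartneck et al., 2009). The sketch below assumes that instrument and shows one way the high- and low-accuracy groups could be compared. Each participant's subscale score is the mean of their 1-5 item ratings. Welch's t statistic is used only as an illustrative between-group test. The hypothesis-to-dimension mapping, the data layout, and the choice of test are assumptions, not the authors' analysis.

```python
from math import sqrt
from statistics import mean, variance
from typing import Dict, List, Tuple

# Assumed mapping of Hypotheses 1-1..1-5 onto Godspeed dimensions (illustrative only).
HYPOTHESIS_TO_DIMENSION = {
    "H1-1": "anthropomorphism",        # human-likeness
    "H1-2": "animacy",                 # animated and lifelike
    "H1-3": "likeability",             # affinity and emotional acceptance
    "H1-4": "perceived_intelligence",  # cognitive competence
    "H1-5": "perceived_safety",        # feeling secure and relaxed
}

# One participant: dimension name -> list of 1-5 item ratings.
Participant = Dict[str, List[int]]


def subscale_scores(participant: Participant) -> Dict[str, float]:
    """Average the item ratings within each dimension for one participant."""
    return {dim: mean(items) for dim, items in participant.items()}


def welch_t(a: List[float], b: List[float]) -> float:
    """Welch's t statistic for two independent samples with unequal variances."""
    standard_error = sqrt(variance(a) / len(a) + variance(b) / len(b))
    return (mean(a) - mean(b)) / standard_error


def compare_groups(
    high_accuracy: List[Participant], low_accuracy: List[Participant]
) -> Dict[str, Tuple[float, float]]:
    """For each H1 hypothesis, return (mean difference high - low, Welch's t)."""
    results = {}
    for hypothesis, dim in HYPOTHESIS_TO_DIMENSION.items():
        high = [subscale_scores(p)[dim] for p in high_accuracy]
        low = [subscale_scores(p)[dim] for p in low_accuracy]
        results[hypothesis] = (mean(high) - mean(low), welch_t(high, low))
    return results
```

A positive difference indicates the direction predicted by the corresponding hypothesis. Judging significance would also require the Welch-Satterthwaite degrees of freedom and a p-value, which this sketch omits.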

3.2. Study 1: Observation of Human Service Behavior as Design Basis

3.2.1. Participants

3.2.2. Measures

3.2.3. Data Collection Procedure

3.3. Study 2: Cognitive Performance Cues and Social Perception

3.3.1. Participants

3.3.2. Measures

3.3.3. Stimuli

3.3.4. Procedure

3.4. Study 3: Knowledge Alignment and Perceived Personalization

3.4.1. Participants

3.4.2. Measures

3.4.3. Stimuli

3.4.4. Procedure

3.5. Study 4: Preference Inquiry and Memory Continuity

3.5.1. Participants

3.5.2. Measures

3.5.3. Stimuli

3.5.4. Procedure

- Preference-asking group (n = 22): The robot explicitly asked for participants’ sticker preferences prior to service delivery.

- Preference-inferring group (n = 22): The robot inferred participants’ preferences from gender-based assumptions instead of asking.

- Introduction Phase: The robot greeted the participant, asked for their name, and (in the Preference-asking group only) asked which type of sticker they preferred: cute or stylish.

- Task Phase: The robot introduced itself, explained its capabilities (e.g., visual tracking, speech-to-text, and its system architecture), and maintained rapport through gaze and responsive dialogue.

- Reward Phase: In the Preference-asking group, the robot delivered the participant’s chosen sticker. In the Preference-inferring group, the robot delivered a sticker selected by its internal logic, without asking for preferences.

- Remember-user group (n = 10): The robot remembered the participant’s name and previous preference, greeting them accordingly and using this information during the reward phase.

- Forget-user group (n = 10): The robot failed to recall the prior interaction, asked for the name again, and re-queried the sticker preference.
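The two manipulations in Study 4 can be expressed as branching logic in the robot's dialogue. The following is a minimal sketch, not the system used in the study. `ask` is a hypothetical callback that stands in for speech output plus speech-to-text input. `inferred_choice` stands in for the robot's internal (gender-based) rule. `visitor_id` assumes the returning visitor has already been identified by some means (e.g., by the experimenter), a step the procedure does not specify.

```python
from typing import Callable, Dict, Tuple

# Hypothetical I/O hook: speak a prompt and return the visitor's transcribed reply.
Ask = Callable[[str], str]
PREFERENCE_QUESTION = "Which type of sticker do you prefer: cute or stylish?"


def reward_sticker(condition: str, ask: Ask, inferred_choice: str) -> str:
    """Preference manipulation: explicit inquiry vs. silent inference."""
    if condition == "preference-asking":
        return ask(PREFERENCE_QUESTION)
    if condition == "preference-inferring":
        # No question is asked; the sticker comes from the robot's internal rule.
        return inferred_choice
    raise ValueError(f"unknown condition: {condition}")


def returning_visit(
    condition: str,
    ask: Ask,
    memory: Dict[str, Tuple[str, str]],
    visitor_id: str,
) -> Tuple[str, str]:
    """Memory manipulation on a repeat encounter; returns (greeting, sticker)."""
    if condition == "remember-user" and visitor_id in memory:
        # Reuse the stored name and preference without asking again.
        name, preference = memory[visitor_id]
        return f"Welcome back, {name}! Another {preference} sticker for you?", preference
    if condition in ("remember-user", "forget-user"):
        # Forget-user (or no stored record): re-ask the name and the preference.
        name = ask("What is your name?")
        preference = ask(PREFERENCE_QUESTION)
        return f"Hello, {name}!", preference
    raise ValueError(f"unknown condition: {condition}")
```

Keeping each manipulation as a single explicit branch means paired conditions differ only in whether the question is asked or the stored record is used, which mirrors the between-group contrasts described above.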

4. Results by Individual Studies

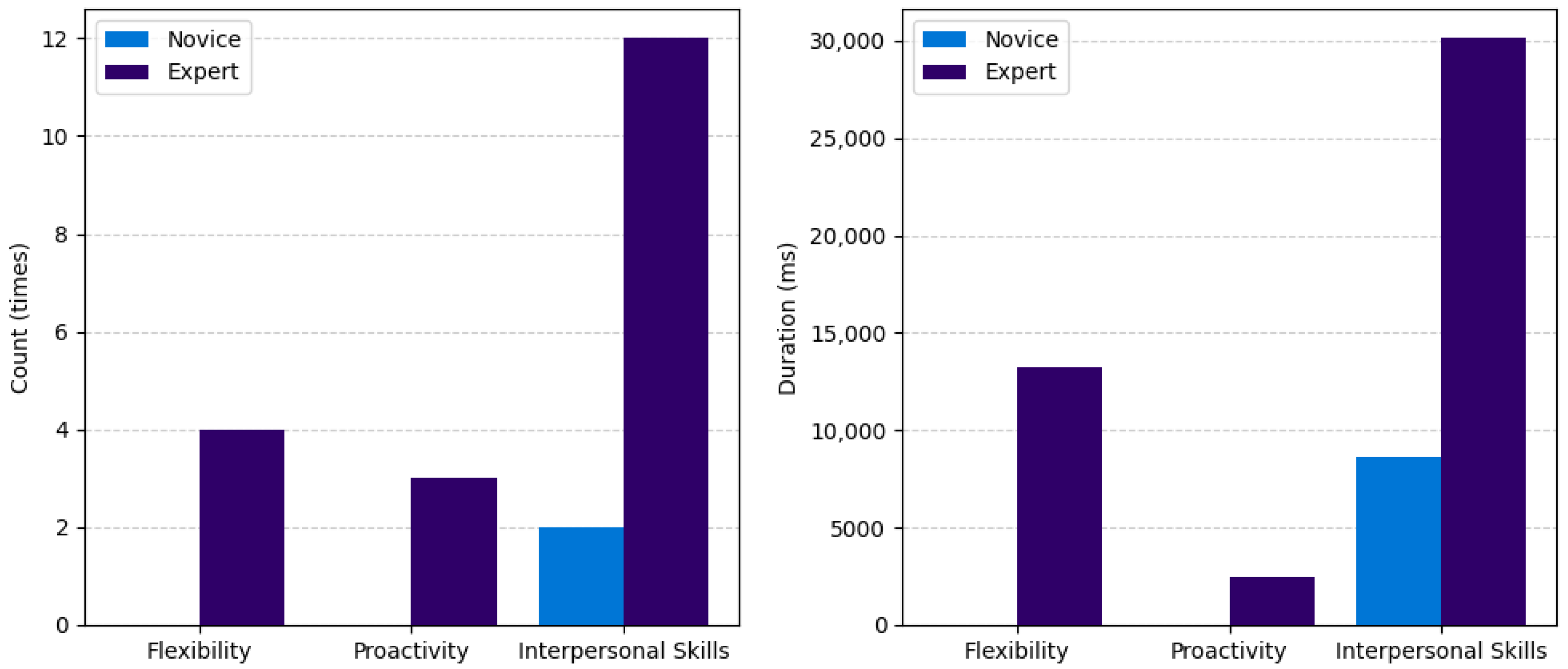

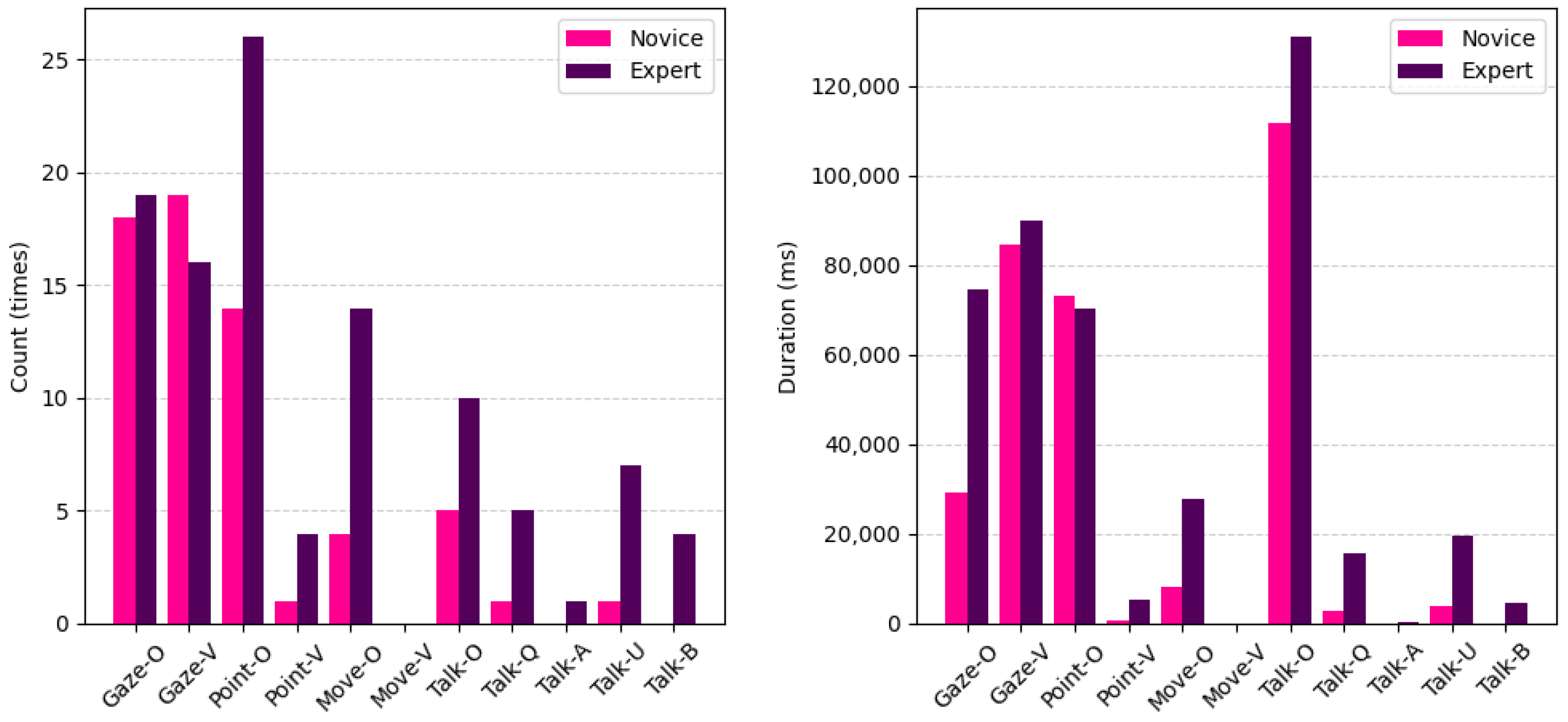

4.1. Study 1: Observation of Human Service Behavior as Design Basis

4.1.1. Differences in Service-Level Behaviors Between Novice and Expert Docents

4.1.2. Differences in Basic Actions Between Novice and Expert Docents

4.1.3. Interview-Based Analysis

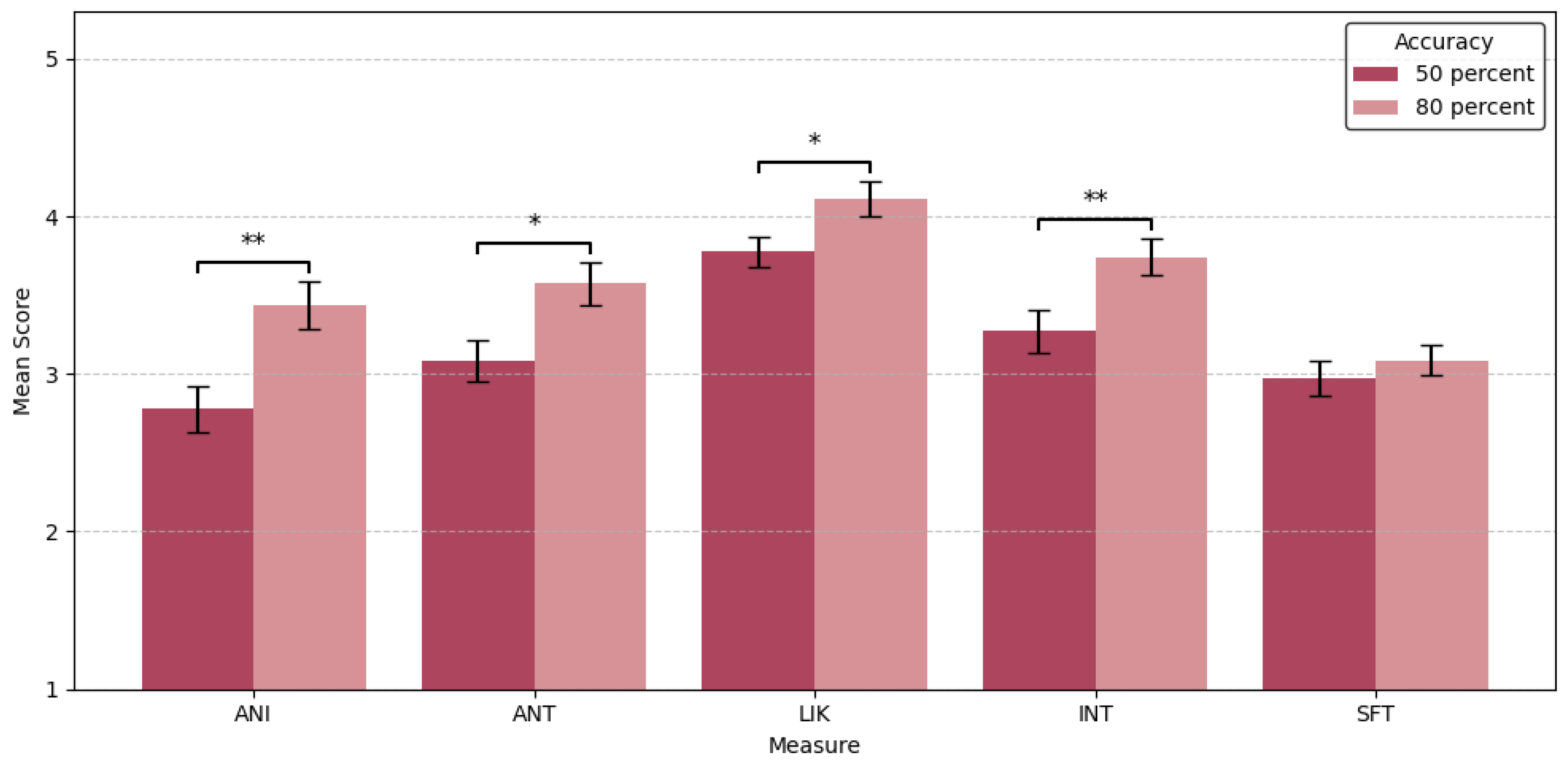

4.2. Study 2: Cognitive Performance Cues and Social Perception

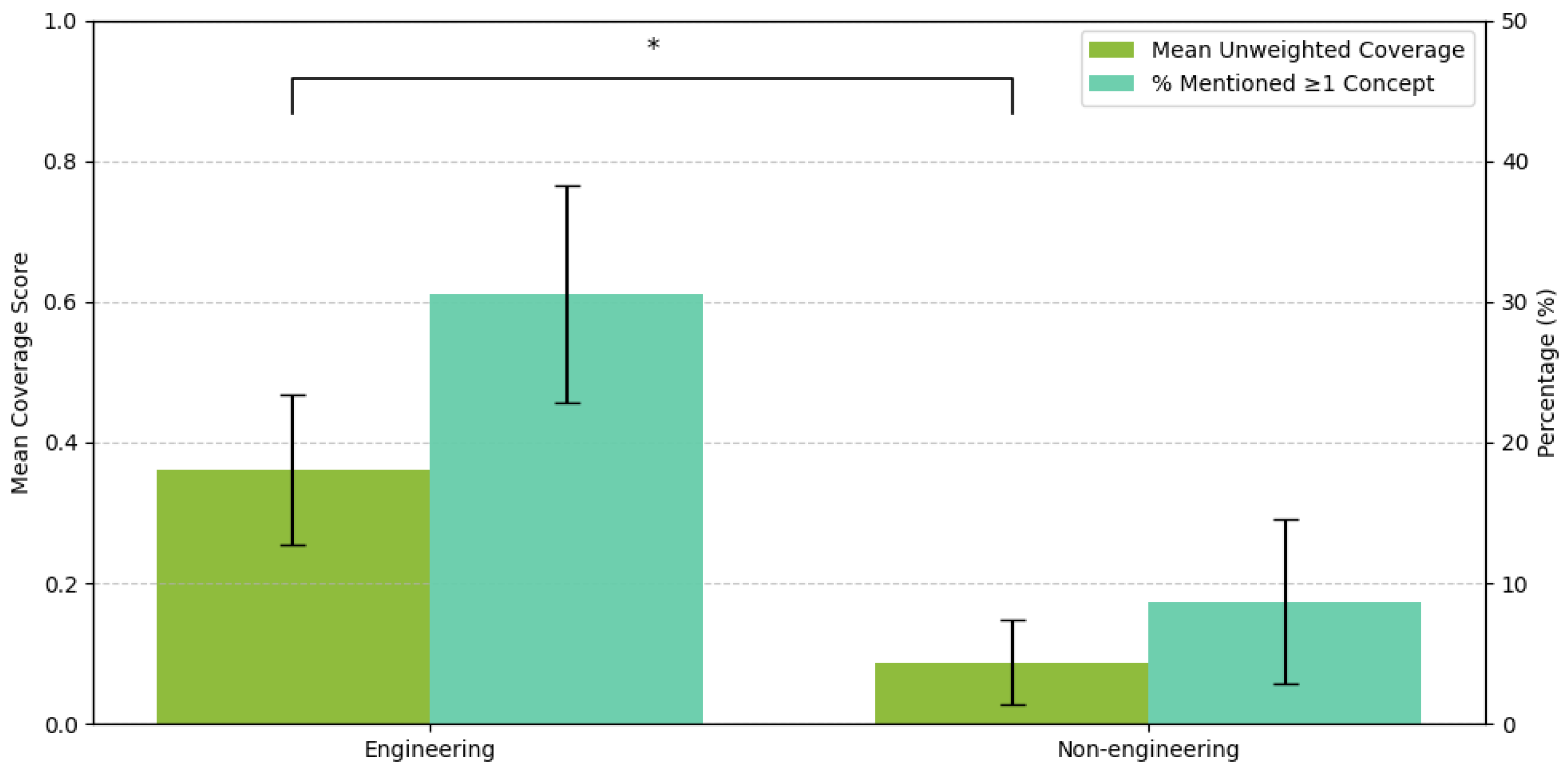

4.3. Study 3: Knowledge Alignment and Perceived Personalization

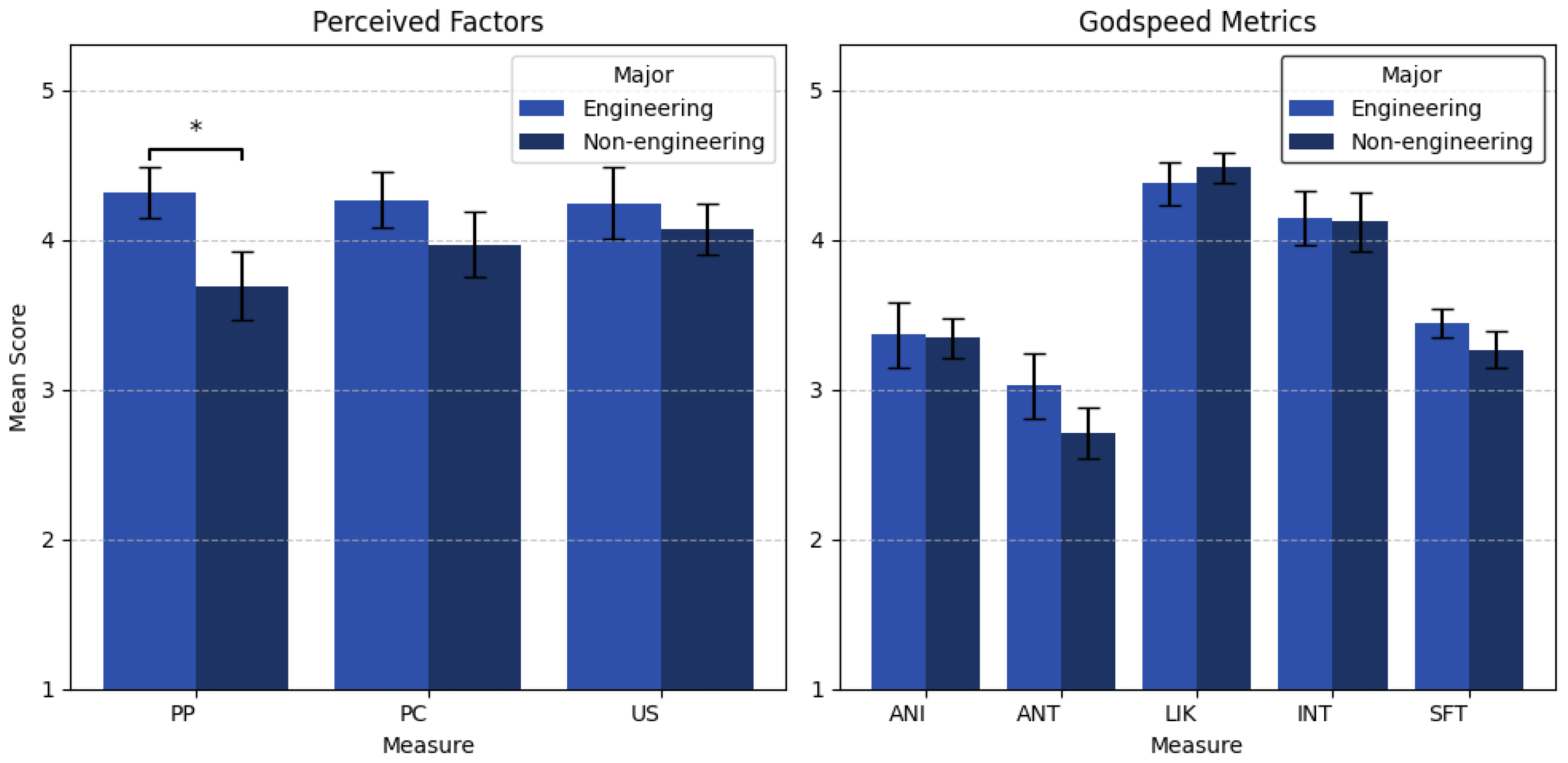

4.3.1. Group Difference in Perceived Personalization

4.3.2. Group Difference in Understanding

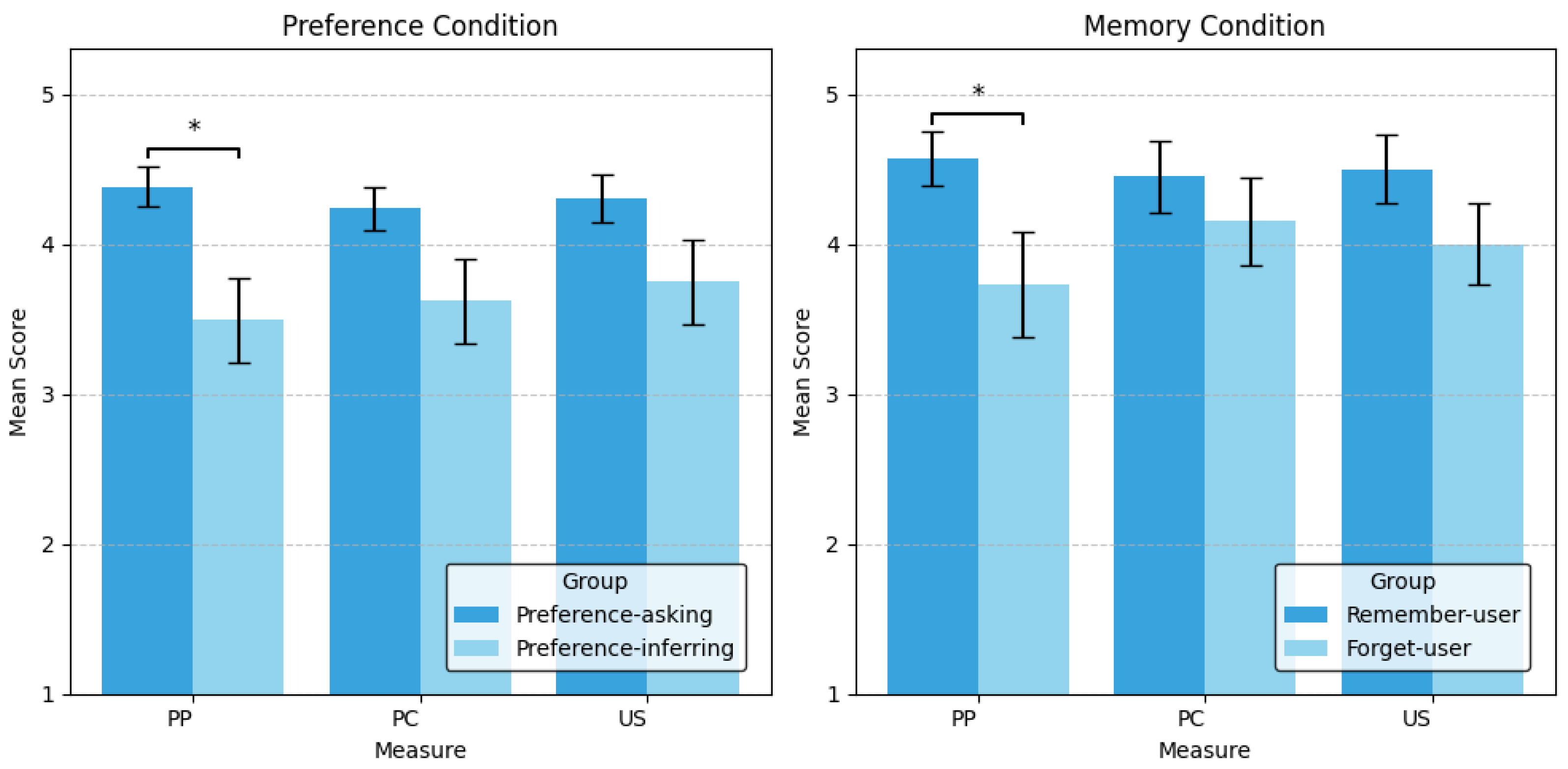

4.4. Study 4: Preference Inquiry and Memory Continuity

4.4.1. Personalization Through Explicit Preference Inquiry

4.4.2. Personalization Through Memory of Past User Information

5. General Discussion

5.1. Integrated Interpretation of Personalization Cues

5.2. Social Attribution Mechanisms

5.3. Design Implications

5.3.1. Interaction Design for Social Adaptivity

5.3.2. Scalable Personalization Under Real-World Constraints

5.4. Limitations

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Díaz-Boladeras, M.; Paillacho, D.; Angulo, C.; Torres, O.; González-Diéguez, J.; Albo-Canals, J. Evaluating group-robot interaction in crowded public spaces: A week-long exploratory study in the wild with a humanoid robot guiding visitors through a science museum. Int. J. Hum. Robot. 2015, 12, 1550022.

- Miraglia, L.; Di Dio, C.; Manzi, F.; Kanda, T.; Cangelosi, A.; Itakura, S.; Ishiguro, H.; Massaro, D.; Fonagy, P.; Marchetti, A. Shared knowledge in human-robot interaction (HRI). Int. J. Soc. Robot. 2024, 16, 59–75.

- Hetherington, N.J.; Croft, E.A.; Van der Loos, H.M. Hey robot, which way are you going? Nonverbal motion legibility cues for human-robot spatial interaction. IEEE Robot. Autom. Lett. 2021, 6, 5010–5015.

- Avelino, J.; Garcia-Marques, L.; Ventura, R.; Bernardino, A. Break the ice: A survey on socially aware engagement for human–robot first encounters. Int. J. Soc. Robot. 2021, 13, 1851–1877.

- Kraus, M.; Wagner, N.; Untereiner, N.; Minker, W. Including social expectations for trustworthy proactive human-robot dialogue. In Proceedings of the 30th ACM Conference on User Modeling, Adaptation and Personalization (UMAP 2022), Barcelona, Spain, 4–7 July 2022; pp. 23–33.

- Inoue, K.; Lala, D.; Yamamoto, K.; Takanashi, K.; Kawahara, T. Engagement-based adaptive behaviors for laboratory guide in human-robot dialogue. In Increasing Naturalness and Flexibility in Spoken Dialogue Interaction; Marchi, E., Siniscalchi, S.M., Cumani, S., Salerno, V.M., Li, H., Eds.; Springer: Singapore, 2021; pp. 129–139.

- Finkel, M.; Krämer, N.C. The robot that adapts too much? An experimental study on users’ perceptions of social robots’ behavioral and persona changes between interactions with different users. Comput. Hum. Behav. Artif. Hum. 2023, 1, 100018.

- Rossi, A.; Dautenhahn, K.; Koay, K.L.; Walters, M.L. How social robots influence people’s trust in critical situations. In Proceedings of the 2020 29th IEEE International Conference on Robot and Human Interactive Communication (RO-MAN 2020), Naples, Italy, 31 August–4 September 2020; pp. 1020–1025.

- Rato, D.; Couto, M.; Prada, R. Fitting the room: Social motivations for context-aware agents. In Proceedings of the 9th International Conference on Human-Agent Interaction (HAI 2021), Online, Japan, 9–11 November 2021; pp. 39–46.

- Belgiovine, G.; Gonzalez-Billandon, J.; Sandini, G.; Rea, F.; Sciutti, A. Towards an HRI tutoring framework for long-term personalization and real-time adaptation. In Adjunct Proceedings of the 30th ACM Conference on User Modeling, Adaptation and Personalization (UMAP 2022), Barcelona, Spain, 4–7 July 2022; pp. 139–145.

- Maroto-Gómez, M.; Malfaz, M.; Castillo, J.C.; Castro-González, Á.; Salichs, M.Á. Personalizing activity selection in assistive social robots from explicit and implicit user feedback. Int. J. Soc. Robot. 2024, 17, 1999–2017.

- Churamani, N.; Axelsson, M.; Caldır, A.; Gunes, H. Continual learning for affective robotics: A proof of concept for wellbeing. In Proceedings of the 2022 10th International Conference on Affective Computing and Intelligent Interaction Workshops and Demos (ACIIW 2022), Nara, Japan, 17–21 October 2022; pp. 1–8.

- Ivanov, R.; Velkova, V. Analyzing visitor behavior to enhance personalized experiences in smart museums: A systematic literature review. Computers 2025, 14, 191.

- Shemshack, A.; Kinshuk; Spector, J.M. A comprehensive analysis of personalized learning components. J. Comput. Educ. 2021, 8, 485–503.

- Todino, M.D.; Campitiello, L. Museum education. Encyclopedia 2025, 5, 3.

- Iocchi, L.; Lázaro, M.T.; Jeanpierre, L.; Mouaddib, A.I. Personalized short-term multi-modal interaction for social robots assisting users in shopping malls. In Social Robotics. ICSR 2015. Lecture Notes in Computer Science; Tapus, A., André, E., Martin, J.C., Ferland, F., Ammi, M., Eds.; Springer International Publishing: Cham, Switzerland, 2015.

- Torrey, C.; Powers, A.; Marge, M.; Fussell, S.R.; Kiesler, S. Effects of adaptive robot dialogue on information exchange and social relations. In Proceedings of the 1st ACM SIGCHI/SIGART Conference on Human-Robot Interaction (HRI 2006), Salt Lake City, UT, USA, 2–3 March 2006; pp. 126–133.

- Nagaya, R.; Seo, S.H.; Kanda, T. Measuring people’s boredom and indifference to the robot’s explanation in a museum scenario. In Proceedings of the 2023 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2023), Detroit, MI, USA, 1–5 October 2023; pp. 9794–9799.

- Ivanov, R. Advanced visitor profiling for personalized museum experiences using telemetry-driven smart badges. Electronics 2024, 13, 3977.

- Ligthart, M.E.; Neerincx, M.A.; Hindriks, K.V. Memory-based personalization for fostering a long-term child-robot relationship. In Proceedings of the 2022 17th ACM/IEEE International Conference on Human-Robot Interaction (HRI 2022), Sapporo, Japan, 7–10 March 2022; pp. 80–89.

- Oliveira, W.; Hamari, J.; Ferreira, W.; Toda, A.M.; Palomino, P.T.; Vassileva, J.; Isotani, S. The effects of gender stereotype-based interfaces on users’ flow experience and performance. J. Comput. Educ. 2024, 11, 95–120.

- Dhanaraj, N.; Jeon, M.; Kang, J.H.; Nikolaidis, S.; Gupta, S.K. Preference elicitation and incorporation for human-robot task scheduling. In Proceedings of the 2024 IEEE 20th International Conference on Automation Science and Engineering (CASE 2024), Bari, Italy, 28 August–1 September 2024; pp. 3103–3110.

- Lim, M.Y.; Aylett, R.; Vargas, P.A.; Ho, W.C.; Dias, J. Human-like memory retrieval mechanisms for social companions. In Proceedings of the Tenth International Conference on Autonomous Agents and Multiagent Systems (AAMAS 2011), Taipei, Taiwan, 2–6 May 2011; pp. 1117–1118.

- Bernotat, J.; Landolfi, L.; Pasquali, D.; Nardelli, A.; Rea, F. Remember me - user-centered implementation of working memory architectures on an industrial robot. Front. Robot. AI 2023, 10, 1257690.

- Gaudiello, I.; Zibetti, E.; Lefort, S.; Chetouani, M.; Ivaldi, S. Trust as indicator of robot functional and social acceptance. An experimental study on user conformation to iCub answers. Comput. Hum. Behav. 2016, 61, 633–655.

- Moussawi, S.; Koufaris, M. Perceived intelligence and perceived anthropomorphism of personal intelligent agents: Scale development and validation. In Proceedings of the 52nd Hawaii International Conference on System Sciences (HICSS 2019), Grand Wailea, Maui, HI, USA, 8–11 January 2019; pp. 115–124.

- Scheunemann, M.M.; Cuijpers, R.H.; Salge, C. Warmth and competence to predict human preference of robot behavior in physical human-robot interaction. In Proceedings of the 2020 29th IEEE International Conference on Robot and Human Interactive Communication (RO-MAN 2020), Naples, Italy, 31 August–4 September 2020; pp. 1340–1347.

- Kamide, H.; Mae, Y.; Kawabe, K.; Shigemi, S.; Hirose, M.; Arai, T. New measurement of psychological safety for humanoid. In Proceedings of the Seventh Annual ACM/IEEE International Conference on Human-Robot Interaction (HRI 2012), Boston, MA, USA, 5–8 March 2012; pp. 49–56.

- Barchard, K.A.; Lapping-Carr, L.; Westfall, R.S.; Fink-Armold, A.; Banisetty, S.B.; Feil-Seifer, D. Measuring the perceived social intelligence of robots. ACM Trans. Hum.-Robot. Interact. 2020, 9, 24.

- Galeas, J.; Bensch, S.; Hellström, T.; Bandera, A. Personalized causal explanations of a robot’s behavior. Front. Robot. AI 2025, 12, 1637574.

- Bartneck, C.; Kulić, D.; Croft, E.; Zoghbi, S. Measurement instruments for the anthropomorphism, animacy, likeability, perceived intelligence, and perceived safety of robots. Int. J. Soc. Robot. 2009, 1, 71–81.

- Woods, S.N.; Walters, M.L.; Koay, K.L.; Dautenhahn, K. Methodological issues in HRI: A comparison of live and video-based methods in robot to human approach direction trials. In Proceedings of the 15th IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN 2006), Hatfield, UK, 6–8 September 2006; pp. 51–58.

- Smink, A.R.; Van Reijmersdal, E.A.; Van Noort, G.; Neijens, P.C. Shopping in augmented reality: The effects of spatial presence, personalization and intrusiveness on app and brand responses. J. Bus. Res. 2020, 118, 474–485.

- Liang, T.P.; Chen, H.Y.; Turban, E. Effect of personalization on the perceived usefulness of online customer services: A dual-core theory. In Proceedings of the 11th International Conference on Electronic Commerce (ICEC 2009), Taipei, Taiwan, 12–15 August 2009; pp. 279–288.

- Kim, Y.; Lee, H.S. Quality, perceived usefulness, user satisfaction, and intention to use: An empirical study of ubiquitous personal robot service. Asian Soc. Sci. 2014, 10, 1.

- ELAN, version 6.2; Max Planck Institute for Psycholinguistics, The Language Archive: Nijmegen, The Netherlands, 2021. Available online: https://archive.mpi.nl/tla/elan/previous (accessed on 6 July 2021).

- Kosel, C.; Böheim, R.; Schnitzler, K.; Holzberger, D.; Pfeffer, J.; Bannert, M.; Seidel, T. Keeping track in classroom discourse: Comparing in-service and pre-service teachers’ visual attention to students’ hand-raising behavior. Teach. Teach. Educ. 2023, 128, 104142.

- Tolston, M.T.; Shockley, K.; Riley, M.A.; Richardson, M.J. Movement constraints on interpersonal coordination and communication. J. Exp. Psychol. Hum. Percept. Perform. 2014, 40, 1891.

- Kompatsiari, K.; Ciardo, F.; De Tommaso, D.; Wykowska, A. Measuring engagement elicited by eye contact in human-robot interaction. In Proceedings of the 2019 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS 2019), Macau, China, 4–8 November 2019; pp. 6979–6985.

- Song, J.; Gao, Y.; Huang, Y.; Chen, L. Being friendly and competent: Service robots’ proactive behavior facilitates customer value co-creation. Technol. Forecast. Soc. Chang. 2023, 196, 122861.

- Erel, H.; Shem Tov, T.; Kessler, Y.; Zuckerman, O. Robots are always social: Robotic movements are automatically interpreted as social cues. In Proceedings of the Extended Abstracts of the 2019 CHI Conference on Human Factors in Computing Systems (CHI EA 2019), Glasgow, UK, 4–9 May 2019; pp. 1–6.

- Rossi, A.; Andriella, A.; Rossi, S.; Torras, C.; Alenyà, G. Evaluating the effect of theory of mind on people’s trust in a faulty robot. In Proceedings of the 2022 31st IEEE International Conference on Robot and Human Interactive Communication (RO-MAN 2022), Naples, Italy, 29 August–1 September 2022; pp. 477–482.

- Cirillo, G.; Runnqvist, E.; Strijkers, K.; Nguyen, N.; Baus, C. Conceptual alignment in a joint picture-naming task performed with a social robot. Cognition 2022, 227, 105213.

- Lacroix, D.; Wullenkord, R.; Eyssel, F. Who’s in charge? Using personalization vs. customization distinction to inform HRI research on adaptation to users. In Proceedings of the Companion of the 2023 ACM/IEEE International Conference on Human-Robot Interaction (HRI 2023), Stockholm, Sweden, 13–16 March 2023; pp. 580–586.

- Souza, P.E.; Chanel, C.P.C.; Dehais, F.; Givigi, S. Towards human-robot interaction: A framing effect experiment. In Proceedings of the 2016 IEEE International Conference on Systems, Man, and Cybernetics (SMC 2016), Budapest, Hungary, 9–12 October 2016; pp. 001929–001934.

- Baker, T.L.; Cronin, J.J.; Hopkins, C.D. The impact of involvement on key service relationships. J. Serv. Mark. 2009, 23, 114–123.

- Orji, R.; Oyibo, K.; Tondello, G.F. A comparison of system-controlled and user-controlled personalization approaches. In Proceedings of the Adjunct publication of the 25th Conference on User Modeling, Adaptation and Personalization (UMAP 2017), Bratislava, Slovakia, 9–12 July 2017; pp. 413–418.

- Bui, H.D.; Dang, T.L.Q.; Chong, N.Y. Robot social emotional development through memory retrieval. In Proceedings of the 2019 7th International Conference on Robot Intelligence Technology and Applications (RiTA 2019), Daejeon, Republic of Korea, 1–3 November 2019; pp. 46–51.

- Nass, C.; Steuer, J.; Tauber, E.R. Computers are social actors. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (CHI 1994), Boston, MA, USA, 24–28 April 1994; pp. 72–78.

- Edwards, A.; Edwards, C. Does the correspondence bias apply to social robots?: Dispositional and situational attributions of human versus robot behavior. Front. Robot. AI 2022, 8, 788242.

- Fiore, S.M.; Wiltshire, T.J.; Lobato, E.J.; Jentsch, F.G.; Huang, W.H.; Axelrod, B. Toward understanding social cues and signals in human–robot interaction: Effects of robot gaze and proxemic behavior. Front. Psychol. 2013, 4, 859.

- García-Martínez, J.; Gamboa-Montero, J.J.; Castillo, J.C.; Castro-González, Á. Analyzing the impact of responding to joint attention on the user perception of the robot in human-robot interaction. Biomimetics 2024, 9, 769.

- Vossen, W.; Szymanski, M.; Verbert, K. The effect of personalizing a psychotherapy conversational agent on therapeutic bond and usage intentions. In Proceedings of the 29th International Conference on Intelligent User Interfaces (IUI 2024), Greenville, TX, USA, 18–21 March 2024; pp. 761–771.

- Urakami, J.; Seaborn, K. Nonverbal cues in human–robot interaction: A communication studies perspective. ACM Trans. Hum.-Robot. Interact. 2023, 12, 22.

- Naiseh, M.; Clark, J.; Akarsu, T.; Hanoch, Y.; Brito, M.; Wald, M.; Webster, T.; Shukla, P. Trust, risk perception, and intention to use autonomous vehicles: An interdisciplinary bibliometric review. AI Soc. 2025, 40, 1091–1111.

- Kuno, Y.; Sekiguchi, H.; Tsubota, T.; Moriyama, S.; Yamazaki, K.; Yamazaki, A. Museum guide robot with communicative head motion. In Proceedings of the 15th IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN 2006), Hatfield, UK, 6–8 September 2006; pp. 33–38.

- Cantucci, F.; Marini, M.; Falcone, R. The role of robot competence, autonomy, and personality on trust formation in human-robot interaction. arXiv 2025, arXiv:2503.04296.

- Schneider, J.; Handali, J. Personalized explanation in machine learning: A conceptualization. arXiv 2019, arXiv:1901.00770.

- Clark, N.; Shen, H.; Howe, B.; Mitra, T. Epistemic alignment: A mediating framework for user-LLM knowledge delivery. arXiv 2025, arXiv:2504.01205.

- Nass, C.; Moon, Y. Machines and mindlessness: Social responses to computers. J. Soc. Issues 2000, 56, 81–103.

- Leite, I.; Martinho, C.; Paiva, A. Social robots for long-term interaction: A survey. Int. J. Soc. Robot. 2013, 5, 291–308.

- Yang, J.; Vindolet, C.; Olvera, J.R.G.; Cheng, G. On the impact of robot personalization on human-robot interaction: A review. arXiv 2024, arXiv:2401.11776.

- Reig, S.; Luria, M.; Wang, J.Z.; Oltman, D.; Carter, E.J.; Steinfeld, A.; Forlizzi, J.; Zimmerman, J. Not some random agent: Multi-person interaction with a personalizing service robot. In Proceedings of the 2020 ACM/IEEE International Conference on Human-Robot Interaction (HRI 2020), Cambridge, UK, 23–26 March 2020; pp. 289–297.

- Balaji, M.S.; Sharma, P.; Jiang, Y.; Zhang, X.; Walsh, S.T.; Behl, A.; Jain, K. A contingency-based approach to service robot design: Role of robot capabilities and personalities. Technol. Forecast. Soc. Chang. 2024, 201, 123257.

| Category | Definition |
|---|---|
| Flexibility (F) | Behaviors that respond to changes in visitor demands or situational changes. |
| Proactivity (P) | Behaviors that attempt to identify or anticipate visitor needs or situational changes. |
| Interpersonal Skills (IS) | Behaviors that elicit responses from visitors through engaging actions (e.g., maintaining attention). |

| Category | Subcategory | Definition |
|---|---|---|
| Gaze | Object (Gaze-O) | Looking at objects (e.g., exhibits, tools). |
| | Visitor (Gaze-V) | Looking around at the visitor(s), including any accompanying persons. |
| Point | Object (Point-O) | Pointing to the object. |
| | Visitor (Point-V) | Pointing to the visitor. |
| Move | Toward Object (Move-O) | Moving toward the object and stopping. |
| | Toward Visitor (Move-V) | Moving toward the visitor and stopping. |
| Talk | Object (Talk-O) | Talking about the object. |
| | Question (Talk-Q) | Asking the visitor a question. |
| | Answer (Talk-A) | Responding to the visitor’s question. |
| | Unexpected situation (Talk-U) | Talking about special or emergent situations (e.g., emergencies, hazards). |
| | Backchannel (Talk-B) | Giving a brief verbal response to acknowledge the visitor’s statement. |
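To compare novice and expert docents on these action codes, coded segments can be reduced to per-code counts, rates per minute, and total durations. The following is a minimal sketch. It assumes the annotations have already been exported from the annotation tool as `(code, start_s, end_s)` tuples for a single docent session. The function name and data layout are hypothetical, not the authors' pipeline.

```python
from collections import Counter, defaultdict
from typing import Dict, Iterable, Tuple

# Action codes from the coding scheme above.
ACTION_CODES = {
    "Gaze-O", "Gaze-V", "Point-O", "Point-V", "Move-O", "Move-V",
    "Talk-O", "Talk-Q", "Talk-A", "Talk-U", "Talk-B",
}

Annotation = Tuple[str, float, float]  # (code, start time in s, end time in s)


def summarize_session(
    annotations: Iterable[Annotation], session_s: float
) -> Dict[str, Dict[str, float]]:
    """Per-code count, rate per minute, and total duration for one session."""
    counts: Counter = Counter()
    durations: Dict[str, float] = defaultdict(float)
    for code, start, end in annotations:
        if code not in ACTION_CODES:
            raise ValueError(f"unknown action code: {code}")
        if end < start:
            raise ValueError(f"segment ends before it starts: {code} {start}-{end}")
        counts[code] += 1
        durations[code] += end - start
    minutes = session_s / 60
    # Only codes that actually occur are reported, in alphabetical order.
    return {
        code: {
            "count": counts[code],
            "per_min": counts[code] / minutes,
            "total_s": durations[code],
        }
        for code in sorted(counts)
    }
```

Normalizing by session length matters because tours differ in duration. Raw counts alone would conflate a docent's style with the length of the tour.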

| Category | Interview Questions |
|---|---|
| Background & Experience | Q1. How long have you been working as a museum docent? Q2. What do you consider most important when interacting with visitors? |
| Assessing Visitor Characteristics | Q3. How do you adjust explanation style based on age differences? Q4. How do you recognize visitors with prior technical knowledge? Q5. How does your explanation change for technically knowledgeable visitors? |
| Verbal & Non-verbal Adaptation | Q6. How do your gestures, gaze, and expressions change when interacting with children? Q7. What actions do you take when visitors lose attention? Q8. How do you decide when to point to exhibits or move during explanations? |
| Flexibility & Situational Responses | Q9. How do you maintain explanations when unexpected situations occur (e.g., robot malfunction)? Q10. Can you describe a case where you adjusted complexity spontaneously? Q11. How do you manage communication when multiple visitors are present? |
| Relational Personalization | Q12. What strategies do you use to personalize one-off interactions? Q13. Do you ever ask for visitors’ names or personal details? Under what circumstances? |
| Contextual & Spatial Adaptation | Q14. What types of personalization are possible in lobby or transition spaces? Q15. Does your approach change depending on the exhibition area or space? |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Yoon, H.; Kim, M.-G.; Kim, S.; Suh, J.-H. Designing Personalization Cues for Museum Robots: Docent Observation and Controlled Studies. Sensors 2025, 25, 7095. https://doi.org/10.3390/s25227095

Yoon H, Kim M-G, Kim S, Suh J-H. Designing Personalization Cues for Museum Robots: Docent Observation and Controlled Studies. Sensors. 2025; 25(22):7095. https://doi.org/10.3390/s25227095

Chicago/Turabian Style: Yoon, Heeyoon, Min-Gyu Kim, SunKyoung Kim, and Jin-Ho Suh. 2025. "Designing Personalization Cues for Museum Robots: Docent Observation and Controlled Studies" Sensors 25, no. 22: 7095. https://doi.org/10.3390/s25227095

APA Style: Yoon, H., Kim, M.-G., Kim, S., & Suh, J.-H. (2025). Designing Personalization Cues for Museum Robots: Docent Observation and Controlled Studies. Sensors, 25(22), 7095. https://doi.org/10.3390/s25227095