1. Introduction

In underwater vision, there is an urgent demand for high-precision environmental perception techniques. As one of the most fundamental problems, depth estimation holds great potential to advance a broad range of underwater tasks, particularly coastal observation [1,2,3,4,5,6]. Among the available sensing approaches, including multibeam/side-scan sonar and bathymetric LiDAR, stereo-based underwater depth estimation remains one of the most promising because of its direct geometric formulation and high accuracy potential in shallow to mid-range waters. Sonar is robust to turbidity and operates at long ranges, but it typically provides coarser spatial resolution and weak textural cues. Bathymetric LiDAR performs best in clear shallow waters and requires specialized, costly hardware and careful logistics. In contrast, passive optical stereo can be built from commodity cameras and delivers centimeter-level structure with natural color. This makes it a practical and economical choice for many diver- and ROV-scale applications. Nevertheless, turbidity and available light limit the effective range of passive optical stereo. Our dual-branch denoising (AWB + RCP for the monocular branch; JBF for the stereo branch) is designed to mitigate these failure modes while keeping the low-cost footprint of passive sensing.

Despite the remarkable progress in terrestrial stereo depth estimation [7,8,9,10], transferring these advances underwater remains highly challenging. Wavelength-selective attenuation, backscatter, and inhomogeneous turbulence cause multiple degradations in underwater images, including edge blurring, haze, and detail loss. Stereo depth estimation for multi-degraded underwater images has therefore become one of the most challenging topics in current underwater vision [11,12].

Existing research generally follows three directions. The first transfers large pretrained terrestrial models directly. Models such as Foundation-Stereo [8] and MonSter [7] generalize well in adverse weather scenarios. When applied directly to underwater environments, however, they often misclassify hazy regions as objects and suffer from severe edge deviation, which significantly degrades performance. The second applies denoising before depth estimation. Traditional dehazing [13] and contrast enhancement [14,15] algorithms can improve visual perception, but they tend to sacrifice details or amplify noise. Deep learning-based enhancement networks [16,17] are mostly optimized for object detection [18,19] or segmentation [20,21], and these objectives do not match the geometric fidelity that depth estimation requires. A further, often-overlooked issue is that existing methods struggle to address the various challenges of underwater depth estimation simultaneously: contrast enhancement algorithms tend to intensify noise in hazy regions, while dehazing algorithms easily cause detail loss. The third direction avoids the high cost of acquiring real underwater stereo datasets. Mainstream works [22,23] synthesize large-scale training data from terrestrial stereo datasets using underwater imaging models, then train monocular or stereo matching networks on these data. However, the domain gap and insufficient realism inherent to such synthetic data limit their practical effectiveness.

In summary, synthetic underwater datasets lack real degradation priors, and large-model transfer ignores underwater domain differences. Image enhancement, for its part, fails to balance dehazing, edge preservation, and detail retention simultaneously. These issues cause significant errors in the final depth maps, particularly in occluded, low-texture, and distant regions.

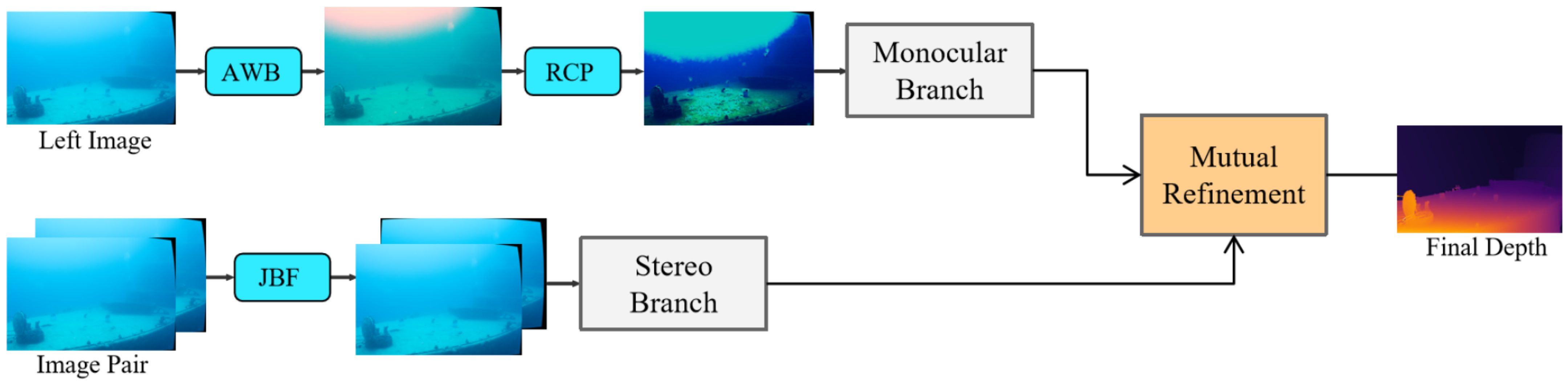

To address these challenges, we propose Joint Dual-Branch Denoising (JDBD), which is a plug-in framework embedded within a dual-branch depth estimation network. JDBD introduces targeted denoising for both monocular and stereo pathways and enables bidirectional refinement between them. The monocular branch integrates Adaptive White Balance (AWB) and Red Inverse Channel Prior (RCP) for color correction and depth-aware dehazing, while the stereo branch applies Joint Bilateral Filtering (JBF) to suppress scattering and preserve geometric structures. Through this dual-pathway interaction, JDBD generates high-fidelity, full-range underwater depth maps.

Experiments on the UWStereo [24] synthetic dataset and the SQUID [25] real-world dataset demonstrate that incorporating JDBD notably improves underwater depth estimation accuracy and visual quality, with robust performance under diverse conditions. The main contributions of this paper are summarized as follows:

We propose JDBD, a joint dual-branch denoising framework for underwater stereo depth estimation that balances dehazing, edge preservation, and detail retention.

We design three lightweight modules—AWB, RCP, and JBF—to perform targeted compensation for distinct degradations in monocular and stereo pathways.

We show that JDBD can serve as a plug-in for dual-branch depth networks, providing a transferable paradigm for the unified development of terrestrial and underwater depth estimation.

3. Method

We propose a Joint Dual-Branch Denoising framework to address the accuracy drop that multiple image degradations cause in underwater stereo depth estimation. The architecture comprises three lightweight modules: Adaptive White Balance (AWB), Red Inverse Channel Prior (RCP), and Joint Bilateral Filtering (JBF). All three are adapted from classical image processing and prior-based restoration, namely gray-world white balancing, red/underwater dark-channel priors [36,41], and joint bilateral filtering [42]. Each is re-parameterized and positioned for stereo depth fidelity. Concretely, AWB computes per-channel gains from a high-confidence luminance subset to expand the attenuated red band while preserving cross-view photometric consistency. RCP inverts the red channel and imposes channel-coupled transmission constraints under the underwater imaging model, suppressing haze without over-correction. JBF is applied only to the stereo branch as a cross-view-guided filter that reduces scattering while keeping epipolar-consistent edges. The dual-branch design integrates these modules (AWB→RCP on the monocular path; JBF on the stereo path) to provide complementary, task-aware denoising before the mutual refinement stage, as illustrated in Figure 1.

3.1. Targeted Denoising for the Monocular Branch

The preprocessing for the monocular branch follows an AWB→RCP order designed for task adaptation. Adaptive White Balance (AWB) is applied first as spatially invariant per-channel gains. It neutralizes the color cast and increases local contrast, which maximizes the retention of texture details and faint distant cues. This step, however, inevitably accentuates backscatter in turbid regions. RCP is therefore applied next to compensate for range-dependent attenuation and suppress backscatter, producing an input to the monocular branch that preserves details while remaining visually clear. Within the dual-branch architecture, the monocular branch uses this preprocessed image to capture rich textures and distant scene layout, whereas the stereo branch supplies precise depth for fine structures via disparity.

3.1.1. Notation and Windows

Let $I_c(x)$ be the observed intensity at pixel $x$ and channel $c \in \{R, G, B\}$. We denote the image domain by $\Omega$. Let $J_c(x)$ denote the scene radiance (haze-suppressed image), $A_c$ the background light, and $t_c(x) = e^{-\beta_c d(x)}$ the transmission, where $\beta_c$ is the attenuation coefficient and $d(x)$ the scene depth. For any pixel $x$, $\Omega_r(x)$ denotes a square spatial window of radius $r$ (size $(2r+1)\times(2r+1)$). For AWB, the $k$-th color-temperature bin is the intra-frame pixel set $S_k \subseteq \Omega$ with cardinality $|S_k|$.
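A small forward model makes this notation concrete. The NumPy sketch below assumes the simplified per-channel formation model $I_c(x) = J_c(x)\,t_c(x) + A_c\,(1 - t_c(x))$ with $t_c(x) = e^{-\beta_c d(x)}$. The section refers to the underwater imaging model without writing it out here, so treat this form, the function name `underwater_forward`, and the coefficient values as illustrative assumptions.

```python
import numpy as np

def underwater_forward(J, depth, beta, A):
    """Synthesize an observation under the assumed model
    I_c = J_c * t_c + A_c * (1 - t_c), with t_c = exp(-beta_c * d).

    J:     (H, W, 3) scene radiance in [0, 1], channel order R, G, B
    depth: (H, W) scene depth d(x), in units consistent with beta
    beta:  (3,) per-channel attenuation coefficients beta_c
    A:     (3,) background light A_c
    """
    beta = np.asarray(beta, dtype=float)
    A = np.asarray(A, dtype=float)
    t = np.exp(-depth[..., None] * beta)   # (H, W, 3) transmission t_c(x)
    I = J * t + A * (1.0 - t)              # attenuated signal + backscatter
    return I, t

# Illustrative values only: red attenuates fastest, background light is blue-green.
J = np.full((2, 2, 3), 0.5)
d = np.array([[0.0, 1.0], [2.0, 4.0]])
I, t = underwater_forward(J, d, beta=[0.6, 0.1, 0.08], A=[0.1, 0.6, 0.7])
```

At zero depth the observation equals the radiance. At larger depths the red transmission drops well below green and blue, which is the effect that AWB and RCP are meant to compensate.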

3.1.2. Adaptive White Balance (AWB)

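Define channel sums and means over $S_k$:

$$\Sigma_c^{(k)} = \sum_{x \in S_k} I_c(x), \qquad \bar{I}_c^{(k)} = \frac{\Sigma_c^{(k)}}{|S_k|}, \qquad c \in \{R, G, B\}.$$

Using $G$ as the neutral reference yields per-bin gains

$$g_c^{(k)} = \frac{\bar{I}_G^{(k)}}{\bar{I}_c^{(k)}},$$

and the corrected output for pixels $x \in S_k$:

$$I_c^{\mathrm{AWB}}(x) = g_c^{(k)}\, I_c(x).$$

We compute these statistics from a luminance-confident subset intersected with the $k$-th correlated color temperature (CCT) bin, which stabilizes gray-world estimation under wavelength-selective attenuation.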
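Here is a minimal sketch of per-bin gray-world balancing with $G$ as the reference. This section does not specify how pixels are assigned to CCT bins or how the luminance-confident subset is chosen. The `bin_labels` input, the mean-of-channels luminance proxy, and the thresholds `lum_lo`/`lum_hi` are therefore hypothetical placeholders.

```python
import numpy as np

def adaptive_white_balance(I, bin_labels, lum_lo=0.05, lum_hi=0.95, eps=1e-6):
    """Per-bin gray-world white balance using G as the neutral reference.

    I:          (H, W, 3) float image in [0, 1], channel order R, G, B
    bin_labels: (H, W) int array assigning each pixel to a CCT bin k
                (hypothetical input: the binning rule is not given here)
    lum_lo/hi:  hypothetical thresholds defining luminance-confident pixels
    """
    out = I.copy()
    lum = I.mean(axis=2)                       # simple luminance proxy
    confident = (lum > lum_lo) & (lum < lum_hi)  # drop dark / saturated pixels
    for k in np.unique(bin_labels):
        in_bin = bin_labels == k
        stats_mask = in_bin & confident        # subset used for the bin statistics
        if not stats_mask.any():
            continue                           # no reliable pixels: leave bin unchanged
        means = I[stats_mask].mean(axis=0)     # channel means over the subset
        gains = means[1] / np.maximum(means, eps)  # g_c = mean_G / mean_c, so g_G = 1
        out[in_bin] = I[in_bin] * gains        # apply the gains to every pixel of bin k
    return np.clip(out, 0.0, 1.0)

# A uniformly blue-green cast in a single bin is mapped to neutral gray.
I = np.tile([0.2, 0.5, 0.6], (4, 4, 1))
out = adaptive_white_balance(I, np.zeros((4, 4), dtype=int))
```

Because the gains are normalized by the green mean, the green channel is left unchanged and only red and blue are rescaled.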

3.1.3. Red Inverse Channel Prior (RCP)

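The classical dark channel prior (DCP) assumes that in a haze-free patch, at least one channel is nearly zero:

$$J^{\mathrm{dark}}(x) = \min_{y \in \Omega_r(x)} \; \min_{c \in \{R, G, B\}} J_c(y) \approx 0.$$

Underwater, strong red attenuation violates this assumption; we therefore invert the red channel before the min operator:

$$J^{\mathrm{RCP}}(x) = \min_{y \in \Omega_r(x)} \; \min\big\{1 - J_R(y),\; J_G(y),\; J_B(y)\big\} \approx 0.$$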
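The red-inverted dark channel can be sketched in NumPy as follows, with a window minimum implementing $\min_{y \in \Omega_r(x)}$. This section does not state how the red-channel transmission $t_R$ is estimated. `red_channel_transmission` therefore assumes the normalized form used in Galdran et al.'s red channel restoration, $t = 1 - \min_y \min\{(1 - I_R)/(1 - A_R),\, I_G/A_G,\, I_B/A_B\}$, with $A_c$ given. All function names are illustrative.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def min_filter(x, r):
    """Minimum over a (2r+1) x (2r+1) window with edge padding (the Omega_r(x) min)."""
    padded = np.pad(x, r, mode="edge")
    windows = sliding_window_view(padded, (2 * r + 1, 2 * r + 1))
    return windows.min(axis=(-2, -1))

def red_channel(I, r=7):
    """Red-inverted dark channel: window min of min(1 - I_R, I_G, I_B)."""
    per_pixel = np.minimum(np.minimum(1.0 - I[..., 0], I[..., 1]), I[..., 2])
    return min_filter(per_pixel, r)

def red_channel_transmission(I, A, r=7, eps=1e-6):
    """Assumed red-channel transmission estimate (Galdran-style normalization):
    t = 1 - window_min( min((1 - I_R)/(1 - A_R), I_G/A_G, I_B/A_B) )."""
    A = np.asarray(A, dtype=float)
    norm = np.stack([(1.0 - I[..., 0]) / max(1.0 - A[0], eps),
                     I[..., 1] / max(A[1], eps),
                     I[..., 2] / max(A[2], eps)], axis=-1)
    return 1.0 - min_filter(norm.min(axis=-1), r)

# One dark blue pixel lowers the red channel inside its 3x3 neighbourhood only.
I = np.empty((5, 5, 3)); I[...] = [0.8, 0.5, 0.4]; I[2, 2, 2] = 0.05
rc = red_channel(I, r=1)
# A region that is pure background light has zero estimated transmission.
A = np.array([0.2, 0.6, 0.7])
t_R = red_channel_transmission(np.broadcast_to(A, (4, 4, 3)), A, r=1)
```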

Spectral Coupling

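Using the scattering–attenuation relation

$$A_c \propto \frac{b_c}{\beta_c},$$

where $b_c$ is the scattering coefficient of channel $c$, we obtain

$$\frac{\beta_c}{\beta_R} = \frac{b_c\, A_R}{b_R\, A_c},$$

and thus channel-coupled transmissions

$$t_c(x) = t_R(x)^{\beta_c / \beta_R}, \qquad c \in \{G, B\}.$$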

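Finally, the restored radiance is

$$J_c(x) = \frac{I_c(x) - A_c}{\max\big(t_c(x),\, t_0\big)} + A_c,$$

with a small lower bound $t_0$ to avoid noise amplification where the transmission is low.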
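The spectral coupling and the final inversion can be sketched together. Here $t_R$ and $A_c$ are inputs. The scattering coefficients `b` are hypothetical values that the caller must supply; with equal $b_c$ the exponent reduces to $A_R / A_c$. The usage lines apply the simplified model $I_c = J_c t_c + A_c(1 - t_c)$, an assumption used only to check that the restoration inverts it wherever $t_c \ge t_0$.

```python
import numpy as np

def coupled_transmissions(t_R, A, b):
    """t_c = t_R ** (beta_c / beta_R), with beta_c / beta_R = (b_c * A_R) / (b_R * A_c).

    t_R: (H, W) red-channel transmission in (0, 1]
    A:   (3,) background light (R, G, B)
    b:   (3,) scattering coefficients (R, G, B); hypothetical, caller-supplied
    """
    A = np.asarray(A, dtype=float)
    b = np.asarray(b, dtype=float)
    ratio = (b * A[0]) / (b[0] * A)      # beta_c / beta_R; equals 1 for the red channel
    return t_R[..., None] ** ratio       # (H, W, 3)

def restore(I, t, A, t0=0.1):
    """J_c = (I_c - A_c) / max(t_c, t0) + A_c."""
    A = np.asarray(A, dtype=float)
    return (I - A) / np.maximum(t, t0) + A

rng = np.random.default_rng(0)
J = rng.uniform(0.1, 0.9, size=(4, 4, 3))
A = np.array([0.1, 0.5, 0.6])
t = coupled_transmissions(np.full((4, 4), 0.5), A, b=[1.0, 1.0, 1.0])
I = J * t + A * (1.0 - t)                # assumed forward model, used only for the check
J_hat = restore(I, t, A, t0=0.1)
```

Since $A_R < A_G, A_B$ here, the exponents for green and blue are below one, so their transmissions stay higher than $t_R$. This matches the faster attenuation of red light.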

3.2. Targeted Denoising for the Stereo Branch

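Before disparity estimation, we apply bilateral filtering to each view to suppress scattering while preserving edges. For a pixel $p$ in the left image $I^{L}$ and a spatial window $\Omega_r(p)$,

$$\hat{I}^{L}(p) = \frac{1}{W_p} \sum_{q \in \Omega_r(p)} G_{\sigma_s}\big(\lVert p - q \rVert\big)\, G_{\sigma_r}\big(\lvert I^{L}(p) - I^{L}(q) \rvert\big)\, I^{L}(q),$$

where $G_{\sigma}(z) = \exp\!\big(-z^2 / (2\sigma^2)\big)$ and

$$W_p = \sum_{q \in \Omega_r(p)} G_{\sigma_s}\big(\lVert p - q \rVert\big)\, G_{\sigma_r}\big(\lvert I^{L}(p) - I^{L}(q) \rvert\big).$$

When the guidance image differs (e.g., the right view guides the left), the range kernel is evaluated on the guidance image:

$$\hat{I}^{L}(p) = \frac{1}{W_p} \sum_{q \in \Omega_r(p)} G_{\sigma_s}\big(\lVert p - q \rVert\big)\, G_{\sigma_r}\big(\lvert I^{R}(\phi(p)) - I^{R}(\phi(q)) \rvert\big)\, I^{L}(q),$$

where $W_p$ is the sum of the same weights. Here, $\phi$ maps a left-image pixel $q$ to its guided correspondence in the right image; for rectified pairs, $\phi(q) = \big(q_x - d(q),\, q_y\big)$ using the current disparity $d$.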

The JBF suppresses scattering-induced blur and uneven illumination while maintaining geometric and photometric consistency between the stereo pair. By performing spatially and photometrically weighted filtering, it produces edge-preserving, disparity-consistent images that strengthen the reliability and stability of stereo correspondence estimation, particularly under turbid or low-contrast underwater conditions.
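Below is a direct NumPy sketch of the joint bilateral filter on a single-channel image. Passing the view itself as `guide` gives the ordinary bilateral filter. For the cross-view case, the right image is first warped to the left frame with $\phi(q) = (q_x - d(q), q_y)$, using nearest-pixel sampling as a simplifying assumption. The radius `r` and kernel widths `sigma_s` and `sigma_r` are illustrative defaults, not values from the paper.

```python
import numpy as np

def joint_bilateral_filter(target, guide, r=3, sigma_s=2.0, sigma_r=0.1):
    """Joint bilateral filter: spatial weights from pixel offsets, range weights from `guide`."""
    H, W = target.shape
    pad_t = np.pad(target, r, mode="edge")
    pad_g = np.pad(guide, r, mode="edge")
    num = np.zeros((H, W))
    den = np.zeros((H, W))
    for dy in range(-r, r + 1):
        for dx in range(-r, r + 1):
            w_s = np.exp(-(dx * dx + dy * dy) / (2.0 * sigma_s ** 2))
            t_q = pad_t[r + dy:r + dy + H, r + dx:r + dx + W]  # target at q = p + (dx, dy)
            g_q = pad_g[r + dy:r + dy + H, r + dx:r + dx + W]  # guide at q
            w = w_s * np.exp(-((guide - g_q) ** 2) / (2.0 * sigma_r ** 2))
            num += w * t_q
            den += w                                           # center weight is 1, so den > 0
    return num / den

def warp_right_to_left(right, disp):
    """Sample the right view at (x - d(x), y) with nearest rounding and edge clamping."""
    H, W = right.shape
    xs = np.clip(np.arange(W)[None, :] - np.rint(disp).astype(int), 0, W - 1)
    return right[np.arange(H)[:, None], xs]

step = np.zeros((6, 8)); step[:, 4:] = 1.0          # a sharp edge
const = np.full((6, 8), 0.3)
left = np.tile(np.arange(8.0), (3, 1))
right = left + 2.0                                  # right[y, x] = left[y, x + 2]
warped = warp_right_to_left(right, np.full((3, 8), 2.0))
# Cross-view use: left_hat = joint_bilateral_filter(left, warped)
```

For color images, one option is to filter each channel separately with a shared guide. Looping over window offsets keeps memory at $O(HW)$ at the cost of $(2r+1)^2$ passes.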

3.3. Mutual Refinement

Following the mutual refinement framework of MonSter [7], our network establishes an iterative coupling between the denoised monocular and stereo branches. After branch-wise denoising (AWB and RCP for the monocular path, and JBF for the stereo path; see Figure 1), the two branches exchange complementary cues to achieve consistent, noise-suppressed depth estimation. The refinement consists of three essential stages: global alignment, alternating update, and weighted supervision.

3.3.1. Global Alignment

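The relative monocular depth is converted into disparity $D_M$ and coarsely aligned with the stereo domain through a global scale–shift pair $(s, \tau)$ estimated on reliable pixels $\mathcal{P}$:

$$(s^{*}, \tau^{*}) = \arg\min_{s, \tau} \sum_{x \in \mathcal{P}} \big(s\, D_M(x) + \tau - D_S^{N_1}(x)\big)^2, \qquad \tilde{D}_M = s^{*} D_M + \tau^{*},$$

where $D_S^{N_1}$ is the stereo disparity after the $N_1$ initial stereo-only iterations.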

This step provides a unified depth scale, enabling the two branches to operate within the same geometric domain.
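The scale–shift fit is an ordinary linear least-squares problem, so it can be sketched with `numpy.linalg.lstsq`. This section does not specify how the reliable set $\mathcal{P}$ is selected, so the boolean `reliable` mask is an assumed input.

```python
import numpy as np

def global_scale_shift(D_M, D_S, reliable):
    """Least-squares (s, tau) minimizing sum over reliable pixels of (s*D_M + tau - D_S)^2."""
    x = D_M[reliable]
    y = D_S[reliable]
    design = np.stack([x, np.ones_like(x)], axis=1)   # columns: [D_M, 1]
    (s, tau), *_ = np.linalg.lstsq(design, y, rcond=None)
    return s, tau

D_M = np.linspace(0.0, 1.0, 20).reshape(4, 5)
D_S = 3.0 * D_M + 1.5
D_S[0, 0] = 100.0                                    # outlier excluded by the mask
reliable = np.ones_like(D_M, dtype=bool)
reliable[0, 0] = False
s, tau = global_scale_shift(D_M, D_S, reliable)
D_M_aligned = s * D_M + tau
```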

3.3.2. Alternating Update

The refinement alternates between the monocular and stereo branches for $N_2$ rounds after $N_1$ initial stereo-only iterations. Each update stage integrates cross-branch cues through learned refinement operators $\mathcal{F}_M$ and $\mathcal{F}_S$:

$$D_M^{j+1} = \mathcal{F}_M\big(D_M^{j}, D_S^{j}\big), \qquad D_S^{j+1} = \mathcal{F}_S\big(D_S^{j}, D_M^{j+1}\big),$$

where $j = N_1, \dots, N_1 + N_2 - 1$ and $D_M^{N_1} = \tilde{D}_M$ is the globally aligned monocular disparity. The preceding denoising modules ensure that each branch provides stable, low-noise structural priors, allowing the iterative updates to jointly enhance fine-scale consistency and suppress scattering-related artifacts. This cooperative process tightly couples the dual-branch denoising and depth estimation, enabling the progressive fusion of geometric and photometric information.
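The alternating schedule can be sketched as a plain loop. The network's refinement operators are learned. The averaging function in the usage lines is a hypothetical stand-in that only shows the data flow: a monocular update from the current stereo estimate, then a stereo update from the new monocular estimate.

```python
from typing import Callable, List, Tuple
import numpy as np

Array = np.ndarray
Operator = Callable[[Array, Array], Array]

def mutual_refinement(D_S: Array, D_M: Array, F_S: Operator, F_M: Operator,
                      n_rounds: int) -> Tuple[List[Array], List[Array]]:
    """Alternate monocular and stereo updates for n_rounds and return all iterates."""
    stereo_hist: List[Array] = []
    mono_hist: List[Array] = []
    for _ in range(n_rounds):
        D_M = F_M(D_M, D_S)   # monocular update guided by the current stereo estimate
        D_S = F_S(D_S, D_M)   # stereo update guided by the refreshed monocular estimate
        mono_hist.append(D_M)
        stereo_hist.append(D_S)
    return stereo_hist, mono_hist

def avg(a: Array, b: Array) -> Array:
    """Hypothetical stand-in for a learned refinement operator."""
    return 0.5 * (a + b)

stereo_hist, mono_hist = mutual_refinement(np.zeros((2, 2)), np.ones((2, 2)),
                                           avg, avg, n_rounds=20)
```

With this toy operator, the gap between the two branches shrinks by a factor of four per round. This only illustrates the coupling; the learned operators carry no such guarantee.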

3.3.3. Loss Function

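The network is trained with L1 supervision across all iterations, using exponentially decayed weights to emphasize later refinements. The total loss combines the stereo branch loss $\mathcal{L}_S$ and the monocular branch loss $\mathcal{L}_M$ as follows:

$$\mathcal{L} = \mathcal{L}_S + \mathcal{L}_M = \sum_{i=1}^{N_1+N_2} \gamma^{\,N_1+N_2-i}\, \big\lVert D_S^{i} - D^{\mathrm{gt}} \big\rVert_1 + \sum_{j=N_1+1}^{N_1+N_2} \gamma^{\,N_1+N_2-j}\, \big\lVert D_M^{j} - D^{\mathrm{gt}} \big\rVert_1,$$

where $\gamma \in (0, 1)$ is the exponential decay coefficient, $D^{\mathrm{gt}}$ is the ground-truth disparity, and $N_1$ and $N_2$ are the numbers of stereo-only iterations and refinement rounds. After $N_2$ rounds, the refined stereo disparity $D_S^{N_1+N_2}$ serves as the final output.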
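The weighted supervision can be sketched as follows, with the last prediction of each branch weighted by $\gamma^0 = 1$. Several details are assumptions rather than settings stated in this section: the per-pixel mean (rather than a sum) of the absolute error, the optional `valid` mask, and the default `gamma=0.9`, a value commonly used in RAFT-style iterative stereo training.

```python
import numpy as np

def sequence_l1_loss(stereo_preds, mono_preds, gt, gamma=0.9, valid=None):
    """Exponentially weighted L1 over all iterations; later iterations weigh more.

    stereo_preds: list of N1 + N2 stereo disparities
    mono_preds:   list of N2 monocular disparities
    """
    if valid is None:
        valid = np.ones(gt.shape, dtype=bool)

    def weighted(preds):
        n = len(preds)
        # Weight gamma**(n - 1 - i): the final prediction gets weight 1.
        return sum(gamma ** (n - 1 - i) * np.abs(p - gt)[valid].mean()
                   for i, p in enumerate(preds))

    return weighted(stereo_preds) + weighted(mono_preds)

gt = np.zeros((2, 2))
total = sequence_l1_loss([np.ones((2, 2)), np.zeros((2, 2))], [np.ones((2, 2))], gt, gamma=0.9)
```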

This dual-branch refinement effectively integrates denoising and depth estimation: the monocular path provides dehazed and spectrally corrected priors, while the stereo path enforces geometric accuracy. Through iterative cross-guidance, both branches converge toward a unified, noise-robust underwater depth representation.