A Review of Methods for Predicting Driver Take-Over Time in Conditionally Automated Driving

Abstract

1. Introduction

- RQ1: What are the key factors that influence take-over time (TOT), and how do they interact? This question aims to synthesize the multifaceted influences on TOT, encompassing driver states, environmental conditions, and take-over request (TOR) characteristics, in order to move beyond analyses of isolated factors.

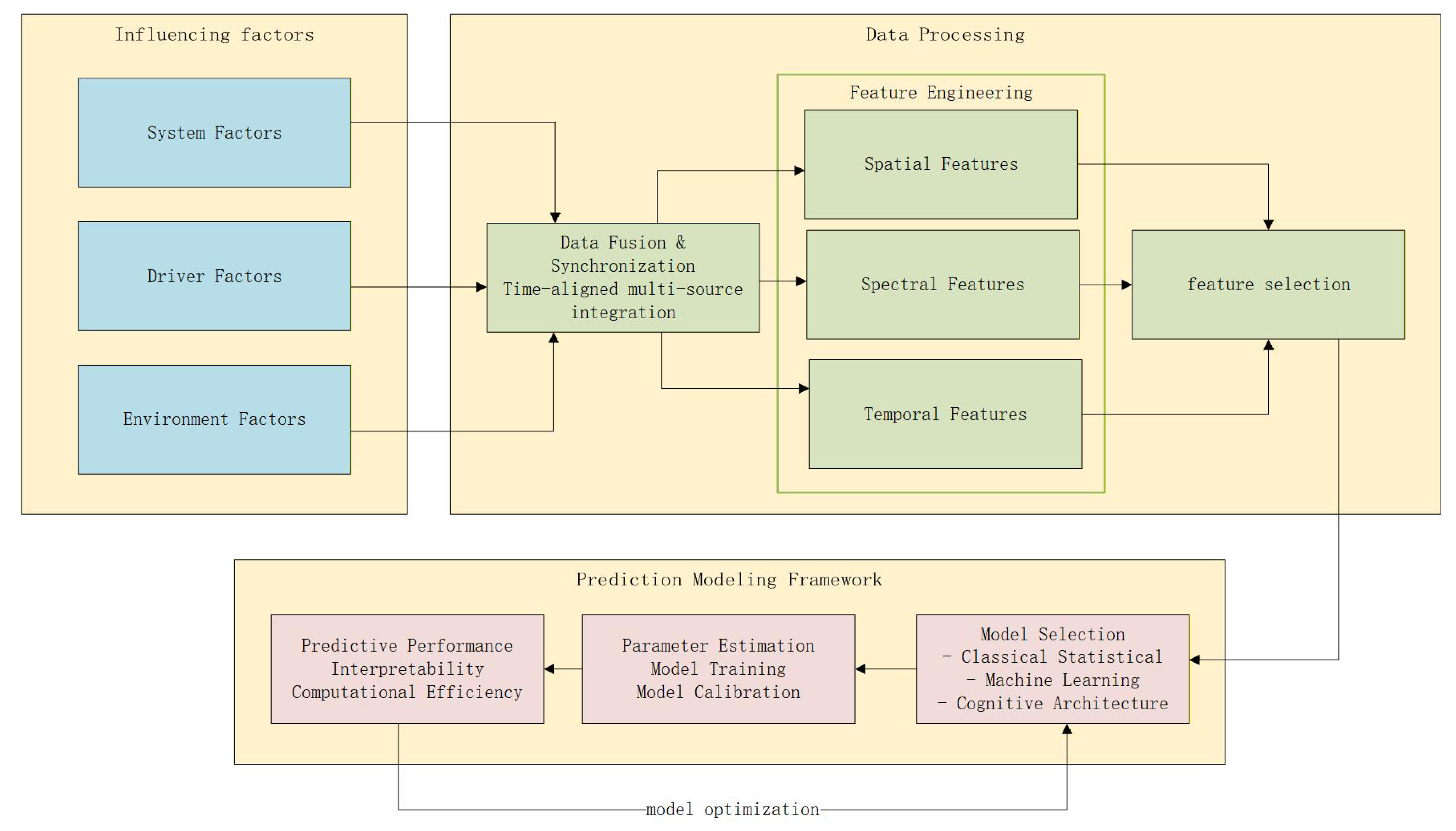

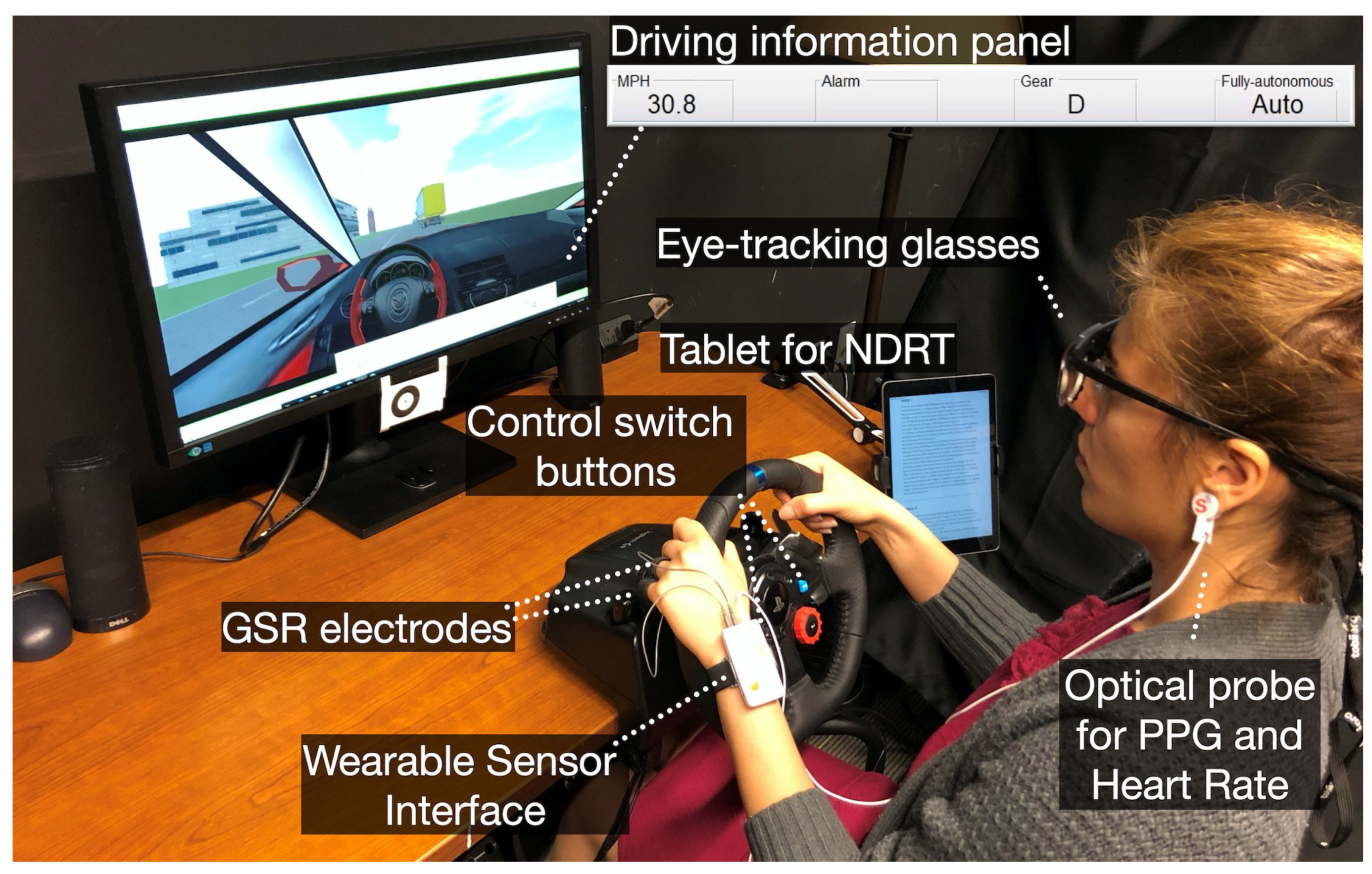

- RQ2: What are the primary methods for data collection and processing in TOT research, and what are their respective challenges? This question seeks to critically outline and compare experimental paradigms, data acquisition techniques, and preprocessing methods, with a particular focus on the gap between simulator-based and real-world data.

- RQ3: What are the prevailing methodological approaches for predicting TOT, and how do their performance and applicability compare across scenarios? This question focuses on reviewing, classifying, and evaluating the prediction models themselves, ranging from classical statistical models to machine learning and cognitive architecture models, to clarify their strengths and limitations.

2. Literature Search Information

2.1. Literature Type

2.2. Keywords of the Literature

2.3. Countries and Regions

2.4. Institutions and Journals

3. Factors Affecting Take-Over Time

3.1. Driver Factors

3.1.1. Engagement in Non-Driving Related Tasks

| Non-Driving Related Tasks | Sensory | Movement | Language | Memory | Ref. |
|---|---|---|---|---|---|
| Observe Surrounding Environment | Visual | N/A | F | T | [9,15,16,17] |
| Watch Video | Visual, Audio | N/A | F | N/A | [14,15,18] |
| Make Phone Call | Audio | T | T | T | [9] |
| Have a Conversation | Audio | N/A | T | T | [9,14] |
| Answer Questions | Visual, Audio | F | T | T | [14,19] |
| Read Book | Visual | N/A | F | N/A | [9] |
| Listen to Music | Audio | F | F | N/A | [14] |
| Listen to Audiobook | Audio | N/A | F | T | [16] |
| Read Magazine | Visual | T | F | N/A | [14,16] |
| 2-Back (Visual) | Visual | T | F | T | [20] |
| 2-Back (Audio) | Audio | F | T | T | [21] |
| Rest with Eyes Closed | N/A | F | F | F | [9] |
| Send Text Messages | Visual | T | F | T | [9] |
| Count Change | Visual | T | F | T | [9] |
| Search Task | Visual | T | F | T | [16] |
| SuRT | Visual | T | F | T | [10,20,22,23,24] |
| Play Tetris | Visual | T | F | T | [16] |
| Play 2048 | Visual | T | F | T | [25,26] |
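Attribute codings like those in the table above can be turned into numeric predictors for TOT models. The Python sketch below shows one possible encoding. It assumes that "T" means the task involves the attribute, "F" means it does not, and "N/A" means the attribute was not reported. The class `NDRTFeatures` and the helpers `encode_row` and `as_vector` are hypothetical names used only for illustration.

```python
from dataclasses import dataclass
from typing import List, Optional

# Assumed reading of the table's cell codes: "T" = the task involves the
# attribute, "F" = it does not, "N/A" = not reported / not applicable.
CODE = {"T": 1, "F": 0, "N/A": None}


@dataclass
class NDRTFeatures:
    """Hypothetical numeric encoding of one row of the NDRT table."""
    name: str
    visual: int                # 1 if the task uses the visual channel
    audio: int                 # 1 if the task uses the auditory channel
    movement: Optional[int]    # None when the table reports N/A
    language: Optional[int]
    memory: Optional[int]


def encode_row(name: str, sensory: str, movement: str,
               language: str, memory: str) -> NDRTFeatures:
    # "Visual, Audio" -> {"Visual", "Audio"}; "N/A" -> {"N/A"} (no channel)
    channels = {c.strip() for c in sensory.split(",")}
    return NDRTFeatures(
        name=name,
        visual=int("Visual" in channels),
        audio=int("Audio" in channels),
        movement=CODE[movement],
        language=CODE[language],
        memory=CODE[memory],
    )


def as_vector(f: NDRTFeatures) -> List[Optional[int]]:
    """Flatten to [visual, audio, movement, language, memory]; None marks N/A."""
    return [f.visual, f.audio, f.movement, f.language, f.memory]


# A few rows copied from the table above.
rows = [
    ("Watch Video", "Visual, Audio", "N/A", "F", "N/A"),
    ("Make Phone Call", "Audio", "T", "T", "T"),
    ("Rest with Eyes Closed", "N/A", "F", "F", "F"),
    ("SuRT", "Visual", "T", "F", "T"),
]
tasks = [encode_row(*r) for r in rows]
for task in tasks:
    print(task.name, as_vector(task))
```

In this sketch, "N/A" stays `None` rather than being coerced to 0. That leaves the choice of imputation, or of dropping the attribute, to the downstream model.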

3.1.2. Individual Differences

3.2. Autonomous Driving System

3.2.1. Take-Over Request

| Take-Over Request Modality | Specific Content | Ref. |
|---|---|---|
| Auditory | Audio Alarm | [41] |
| | Beeper | [22] |
| | Beep Sound | [14,42,43] |
| | Mixed Frequency Alert Tone | [39] |
| Visual | Display Icon and Steering Wheel LED Flashing | [44] |
| | Flashing Red Image | [39] |
| | Changing Color Lighting | [45] |
| Tactile | Seatbelt and Seat Vibration | [46] |
| | Seat Vibration | [47] |
| | Bottom Seat Vibration | [39] |
| Visual + Auditory | Screen Icon + Mixed Frequency Alert Tone | [48] |
| | Screen Text and Ambient Light + Bell/Beep Sound | [40] |
| | Beep Sound + Red Text Image | [42] |
| | Screen Icon + Buzz Sound | [18] |
| | Visual Cue + Female Voice | [43] |
| | Screen Image + Standard Warning Tone (Beep) | [38] |
| | Display Text + Non-verbal Alert Sound | [49] |
| Visual + Tactile | Flashing Red Image + Bottom Seat Vibration | [39] |
| | Changing Color Lighting + Seat Vibration | [45] |
| | Screen Image + Seatbelt Vibration | [38] |
| Auditory + Tactile | Mixed Frequency Alert Tone + Bottom Seat Vibration | [39] |
| Visual + Auditory + Tactile | Flashing Red Image + Mixed Frequency Alert Tone + Bottom Seat Vibration | [39] |
| | Bar LED + Boeing 747 Alarm Sound + Backrest Vibration | [50] |
| | Screen Image + Standard Warning Tone + Seatbelt Vibration | [38] |

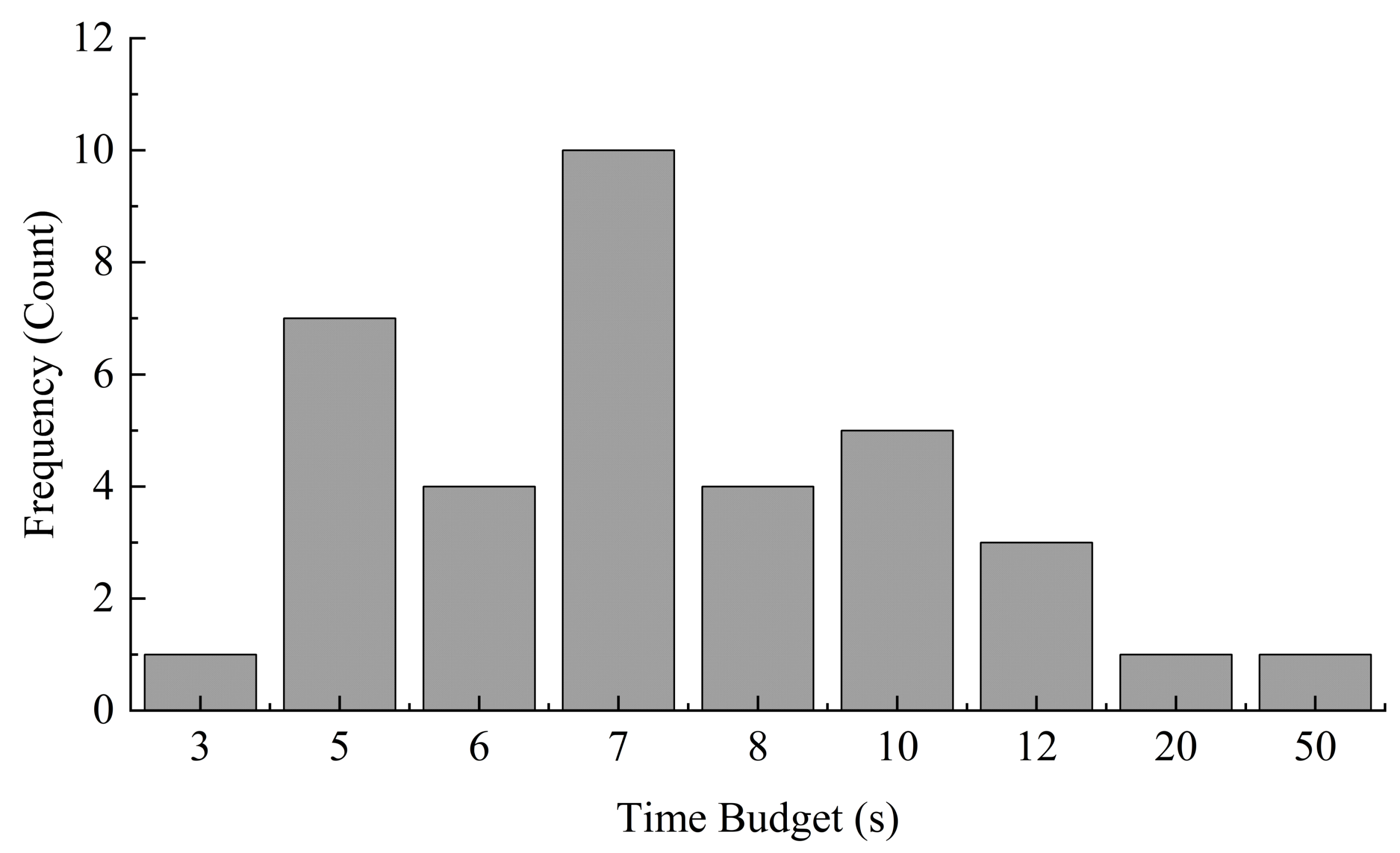

3.2.2. Time Budget

3.2.3. Take-Over Methods

3.3. Driving Environment

3.3.1. Environmental Factors

3.3.2. Take-Over Events

4. Data Acquisition and Processing Methods

4.1. Data Acquisition

| Data Type | Specific Content | Ref. |
|---|---|---|
| Visual Data | Gaze | [14,16,56,58,63,88] |
| | Saccade | [56,58] |
| | Pupil area | [56,58,63] |
| | Blinking | [56] |
| | Facial direction | [48] |
| | Head posture | [56,89] |
| Experiment Type Data | Age/Gender/NDRTs/Take-over mode | [90] |
| Psychometric Data | Drowsiness | [16] |
| | NDRT engagement | [14] |
| | Distraction score | [18] |
| | Risky driving tendency | [83] |
| | System trust | [26] |
| Physiological Data | Respiration | [59] |
| | Heart rate | [22,56,63] |
| | Skin conductance response | [22,56,63] |
| | Electrocardiography | [48] |
| | Electroencephalography | [91] |
| Limb Data | Hand position | [14,48,88,90] |
| | Foot position | [48,88,90] |
| | Body posture | [48] |
| Vehicle Data | Position/Speed/Acceleration/Steering angle | [19,41,63] |
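Most data types in the table above arrive as time-stamped streams. A trial-level prediction model needs them reduced to a fixed set of features. The Python sketch below shows one common way to do this: a fixed window ending at TOR onset. Several parts are assumptions for illustration rather than a procedure taken from the reviewed studies:

- each stream is a list of `(timestamp, value)` pairs;
- the window is 5 s long;
- gaze samples carry a `"road"` label;
- the helper names are hypothetical.

```python
from statistics import mean
from typing import Dict, List, Optional, Sequence, Tuple, TypeVar

V = TypeVar("V")


def in_window(stream: Sequence[Tuple[float, V]], tor_onset: float,
              window_s: float) -> List[V]:
    """Values whose timestamp lies in [tor_onset - window_s, tor_onset)."""
    return [v for ts, v in stream if tor_onset - window_s <= ts < tor_onset]


def summarize(stream: Sequence[Tuple[float, float]], tor_onset: float,
              window_s: float = 5.0) -> Optional[Dict[str, float]]:
    """Mean/min/max of a continuous signal (e.g. heart rate) before the TOR."""
    vals = in_window(stream, tor_onset, window_s)
    if not vals:
        return None  # no samples fell inside the window
    return {"mean": mean(vals), "min": min(vals), "max": max(vals)}


def gaze_on_road_ratio(gaze: Sequence[Tuple[float, str]], tor_onset: float,
                       window_s: float = 5.0) -> Optional[float]:
    """Share of gaze samples labelled 'road' in the window before the TOR."""
    labels = in_window(gaze, tor_onset, window_s)
    if not labels:
        return None
    return sum(1 for g in labels if g == "road") / len(labels)


# Toy trial: TOR issued at t = 5.0 s (hypothetical values).
hr = [(0.0, 70.0), (2.0, 72.0), (4.0, 74.0), (6.0, 90.0)]
gaze = [(1.0, "road"), (2.0, "phone"), (3.0, "phone"),
        (4.0, "road"), (5.5, "road")]
features = {"hr": summarize(hr, 5.0),
            "gaze_on_road": gaze_on_road_ratio(gaze, 5.0)}
print(features)
```

The window deliberately excludes samples at or after TOR onset (the 6.0 s heart-rate sample and the 5.5 s gaze sample above). Otherwise the features would leak information about the very response whose timing is being predicted.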

4.2. Data Processing

5. Take-Over Time Prediction Methods

5.1. Classical Statistical Models

5.2. Machine Learning Models

5.3. Cognitive Architecture Models

5.4. Comparative Analysis Across Modeling Paradigms

6. Discussion

6.1. Experimental Limitations

6.2. Model Limitations

7. Future Directions

8. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

1. Gangadharaiah, R.; Mims, L.; Jia, Y.; Brooks, J. Opinions from Users Across the Lifespan about Fully Autonomous and Rideshare Vehicles with Associated Features. SAE Int. J. Adv. Curr. Prac. Mobil. 2024, 6, 309–323.
2. Mohammed, K.; Abdelhafid, M.; Kamal, K.; Ismail, N.; Ilias, A. Intelligent driver monitoring system: An Internet of Things-based system for tracking and identifying the driving behavior. Comput. Stand. Interfaces 2023, 84, 103704.
3. Cigno, R.L.; Segata, M. Cooperative driving: A comprehensive perspective, the role of communications, and its potential development. Comput. Commun. 2022, 193, 82–93.
4. Zhang, B.; De Winter, J.; Varotto, S.; Happee, R.; Martens, M. Determinants of take-over time from automated driving: A meta-analysis of 129 studies. Transp. Res. Part F Traffic Psychol. Behav. 2019, 64, 285–307.
5. Wang, W.; Li, Q.; Zeng, C.; Li, G.; Zhang, J.; Li, S.; Cheng, B. Review of Take-over Performance of Autonomous Driving: Influencing Factors, Models, and Evaluation Methods. China J. Highw. Transp. 2023, 36, 2022–2224.
6. Wang, C.; Ren, W.; Xu, C.; Zheng, N.; Peng, C.; Tong, H. Exploring the Impact of Conditionally Automated Driving Vehicles Transferring Control to Human Drivers on the Stability of Heterogeneous Traffic Flow. IEEE Trans. Intell. Veh. 2025, 10, 912–928.
7. Aria, M.; Cuccurullo, C. bibliometrix: An R-tool for comprehensive science mapping analysis. J. Informetr. 2017, 11, 959–975.
8. Minhas, S.; Hernández-Sabaté, A.; Ehsan, S.; McDonald-Maier, K.D. Effects of non-driving related tasks during self-driving mode. IEEE Trans. Intell. Transp. Syst. 2020, 23, 1391–1399.
9. Rangesh, A.; Deo, N.; Greer, R.; Gunaratne, P.; Trivedi, M.M. Predicting take-over time for autonomous driving with real-world data: Robust data augmentation, models, and evaluation. arXiv 2021, arXiv:2107.12932.
10. Ban, G.; Park, W. Effects of in-vehicle touchscreen location on driver task performance, eye gaze behavior, and workload during conditionally automated driving: Nondriving-related task and take-over. Hum. Factors 2024, 66, 2651–2668.
11. Müller, A.L.; Fernandes-Estrela, N.; Hetfleisch, R.; Zecha, L.; Abendroth, B. Effects of non-driving related tasks on mental workload and take-over times during conditional automated driving. Eur. Transp. Res. Rev. 2021, 13, 16.
12. Merlhiot, G.; Bueno, M. How drowsiness and distraction can interfere with take-over performance: A systematic and meta-analysis review. Accid. Anal. Prev. 2022, 170, 106536.
13. Pan, H.; He, H.; Wang, Y.; Cheng, Y.; Dai, Z. The impact of non-driving related tasks on the development of driver sleepiness and takeover performances in prolonged automated driving. J. Saf. Res. 2023, 86, 148–163.
14. Yoon, S.H.; Lee, S.C.; Ji, Y.G. Modeling takeover time based on non-driving-related task attributes in highly automated driving. Appl. Ergon. 2021, 92, 103343.
15. Zhang, Q.; Esterwood, C.; Pradhan, A.K.; Tilbury, D.; Yang, X.J.; Robert, L.P. The impact of modality, technology suspicion, and NDRT engagement on the effectiveness of AV explanations. IEEE Access 2023, 11, 81981–81994.
16. Berghöfer, F.L.; Purucker, C.; Naujoks, F.; Wiedemann, K.; Marberger, C. Prediction of take-over time demand in conditionally automated driving: Results of a real world driving study. In Proceedings of the Human Factors and Ergonomics Society Europe, Berlin, Germany, 8–10 October 2018; pp. 69–81.
17. Bai, J.; Sun, X.; Cao, S.; Wang, Q.; Wu, J. Exploring the timing of disengagement from nondriving related tasks in scheduled takeovers with pre-alerts: An analysis of takeover-related measures. Hum. Factors 2024, 66, 2669–2690.
18. Li, Q.; Hou, L.; Wang, Z.; Wang, W.; Zeng, C.; Yuan, Q.; Cheng, B. Drivers’ visual-distracted take-over performance model and its application on adaptive adjustment of time budget. Accid. Anal. Prev. 2021, 154, 106099.
19. Hwang, S.; Banerjee, A.G.; Boyle, L.N. Predicting driver’s transition time to a secondary task given an in-vehicle alert. IEEE Trans. Intell. Transp. Syst. 2020, 23, 4739–4745.
20. Deng, C.; Cao, S.; Wu, C.; Lyu, N. Modeling driver take-over reaction time and emergency response time using an integrated cognitive architecture. Transp. Res. Rec. 2019, 2673, 380–390.
21. Du, N.; Zhou, F.; Tilbury, D.M.; Robert, L.P.; Yang, X.J. Behavioral and physiological responses to takeovers in different scenarios during conditionally automated driving. Transp. Res. Part F Traffic Psychol. Behav. 2024, 101, 320–331.
22. Yi, B.; Cao, H.; Song, X.; Wang, J.; Guo, W.; Huang, Z. Measurement and real-time recognition of driver trust in conditionally automated vehicles: Using multimodal feature fusions network. Transp. Res. Rec. 2023, 2677, 311–330.
23. Huang, C.; Yang, B.; Nakano, K. Impact of Personality on Takeover Time and Maneuvers Shortly After Takeover Request. IEEE Trans. Intell. Transp. Syst. 2024, 25, 10712–10724.
24. Huang, C.; Yang, B.; Nakano, K. Impact of duration of monitoring before takeover request on takeover time with insights into eye tracking data. Accid. Anal. Prev. 2023, 185, 107018.
25. Sanghavi, H.; Zhang, Y.; Jeon, M. Exploring the influence of driver affective state and auditory display urgency on takeover performance in semi-automated vehicles: Experiment and modelling. Int. J. Hum.-Comput. Stud. 2023, 171, 102979.
26. Ko, S.; Zhang, Y.; Jeon, M. Modeling the effects of auditory display takeover requests on drivers’ behavior in autonomous vehicles. In Proceedings of the 11th International Conference on Automotive User Interfaces and Interactive Vehicular Applications: Adjunct Proceedings, Utrecht, The Netherlands, 21–25 September 2019; pp. 392–398.
27. Gasne, C.; Paire-Ficout, L.; Bordel, S.; Lafont, S.; Ranchet, M. Takeover performance of older drivers in automated driving: A review. Transp. Res. Part F Traffic Psychol. Behav. 2022, 87, 347–364.
28. Muslim, H.; Itoh, M.; Liang, C.K.; Antona-Makoshi, J.; Uchida, N. Effects of gender, age, experience, and practice on driver reaction and acceptance of traffic jam chauffeur systems. Sci. Rep. 2021, 11, 17874.
29. Becker, S.; Brandenburg, S.; Thüring, M. Driver-initiated take-overs during critical evasion maneuvers in automated driving. Accid. Anal. Prev. 2024, 194, 107362.
30. Samani, A.R.; Mishra, S.; Dey, K. Assessing the effect of long-automated driving operation, repeated take-over requests, and driver’s characteristics on commercial motor vehicle drivers’ driving behavior and reaction time in highly automated vehicles. Transp. Res. Part F Traffic Psychol. Behav. 2022, 84, 239–261.
31. Han, Y.; Wang, T.; Shi, D.; Ye, X.; Yuan, Q. The Effect of Multifactor Interaction on the Quality of Human–Machine Co-Driving Vehicle Take-Over. Sustainability 2023, 15, 5131.
32. Sanghavi, H.K. Exploring the Influence of Anger on Takeover Performance in Semi-Automated Vehicles. Ph.D. Thesis, Virginia Tech, Blacksburg, VA, USA, 2020.
33. Rydström, A.; Mullaart, M.S.; Novakazi, F.; Johansson, M.; Eriksson, A. Drivers’ performance in non-critical take-overs from an automated driving system—An on-road study. Hum. Factors 2023, 65, 1841–1857.
34. Li, S.; Blythe, P.; Zhang, Y.; Edwards, S.; Guo, W.; Ji, Y.; Goodman, P.; Hill, G.; Namdeo, A. Analysing the effect of gender on the human-machine interaction in level 3 automated vehicles. Sci. Rep. 2022, 12, 11645.
35. Roberts, S.C.; Hanson, W.; Ebadi, Y.; Talreja, N.; Knodler, M.A., Jr.; Fisher, D.L. Evaluation of a 3M (mistakes, mentoring, and mastery) training program for transfer of control situations in a level 2 automated driving system. Appl. Ergon. 2024, 116, 104215.
36. Janssen, C.; Praetorius, L.; Borst, J. PREDICTOR: A tool to predict the timing of the take-over response process in semi-automated driving. Transp. Res. Interdiscip. Perspect. 2024, 27, 101192.
37. Niu, L.; Gao, S.; Shi, J.; Wu, C.; Wang, Y.; Ma, S.; Wang, D.; Yang, Z.; Li, H. Are warnings suitable for presentation in head-up display? A meta-analysis for the effect of head-up display warning on driving performance. Transp. Res. Rec. 2024, 2678, 336–359.
38. Laakmann, F.; Seyffert, M.; Herpich, T.; Saupp, L.; Ladwig, S.; Kugelmeier, M.; Vollrath, M. Benefits of Tactile Warning and Alerting of the Driver through an Active Seat Belt System. In Proceedings of the 27th International Technical Conference on the Enhanced Safety of Vehicles (ESV 2023), Yokohama, Japan, 3–6 April 2023; Volume 24.
39. Yun, H.; Yang, J.H. Multimodal warning design for take-over request in conditionally automated driving. Eur. Transp. Res. Rev. 2020, 12, 1–11.
40. Lee, S.; Hong, J.; Jeon, G.; Jo, J.; Boo, S.; Kim, H.; Jung, S.; Park, J.; Choi, I.; Kim, S. Investigating effects of multimodal explanations using multiple in-vehicle displays for takeover request in conditionally automated driving. Transp. Res. Part F Traffic Psychol. Behav. 2023, 96, 1–22.
41. Wang, C.; Xu, C.; Peng, C.; Tong, H.; Ren, W.; Jiao, Y. Predicting the duration of reduced driver performance during the automated driving takeover process. J. Intell. Transp. Syst. 2024, 29, 218–233.
42. Yang, W.; Wu, Z.; Tang, J.; Liang, Y. Assessing the effects of modalities of takeover request, lead time of takeover request, and traffic conditions on takeover performance in conditionally automated driving. Sustainability 2023, 15, 7270.
43. Zhang, Y.; Ma, Q.; Qu, J.; Zhou, R. Effects of driving style on takeover performance during automated driving: Under the influence of warning system factors. Appl. Ergon. 2024, 117, 104229.
44. Diederichs, F.; Muthumani, A.; Feierle, A.; Galle, M.; Mathis, L.A.; Bopp-Bertenbreiter, V.; Widlroither, H.; Bengler, K. Improving driver performance and experience in assisted and automated driving with visual cues in the steering wheel. IEEE Trans. Intell. Transp. Syst. 2022, 23, 4843–4852.
45. Capallera, M.; Meteier, Q.; De Salis, E.; Widmer, M.; Angelini, L.; Carrino, S.; Sonderegger, A.; Abou Khaled, O.; Mugellini, E. A contextual multimodal system for increasing situation awareness and takeover quality in conditionally automated driving. IEEE Access 2023, 11, 5746–5771.
46. Martinez, K.D.; Huang, G. In-vehicle human machine interface: Investigating the effects of tactile displays on information presentation in automated vehicles. IEEE Access 2022, 10, 94668–94676.
47. Huang, G.; Pitts, B.J. To inform or to instruct? An evaluation of meaningful vibrotactile patterns to support automated vehicle takeover performance. IEEE Trans. Hum.-Mach. Syst. 2022, 53, 678–687.
48. Teshima, T.; Niitsuma, M.; Nishimura, H. Determining the onset of driver’s preparatory action for take-over in automated driving using multimodal data. Expert Syst. Appl. 2024, 246, 123153.
49. Ko, S.; Kutchek, K.; Zhang, Y.; Jeon, M. Effects of non-speech auditory cues on control transition behaviors in semi-automated vehicles: Empirical study, modeling, and validation. Int. J. Hum.-Comput. Interact. 2022, 38, 185–200.
50. Gruden, T.; Tomažič, S.; Sodnik, J.; Jakus, G. A user study of directional tactile and auditory user interfaces for take-over requests in conditionally automated vehicles. Accid. Anal. Prev. 2022, 174, 106766.
51. Shahini, F.; Park, J.; Welch, K.; Zahabi, M. Effects of unreliable automation, non-driving related task, and takeover time budget on drivers’ takeover performance and workload. Ergonomics 2023, 66, 182–197.
52. Gold, C.; Damböck, D.; Lorenz, L.; Bengler, K. “Take over!” How long does it take to get the driver back into the loop? In Proceedings of the Human Factors and Ergonomics Society Annual Meeting, San Diego, CA, USA, 30 September–4 October 2013; Volume 57, pp. 1938–1942.
53. Wu, H.; Wu, C.; Lyu, N.; Li, J. Does a faster takeover necessarily mean it is better? A study on the influence of urgency and takeover-request lead time on takeover performance and safety. Accid. Anal. Prev. 2022, 171, 106647.
54. Griffith, M.; Akkem, R.; Maheshwari, J.; Seacrist, T.; Arbogast, K.B.; Graci, V. The effect of a startle-based warning, age, gender, and secondary task on takeover actions in critical autonomous driving scenarios. Front. Bioeng. Biotechnol. 2023, 11, 1147606.
55. Oh, H.; Yun, Y.; Myung, R. Driver behavior and mental workload for takeover safety in automated driving: ACT-R prediction modeling approach. Traffic Inj. Prev. 2024, 25, 381–389.
56. Du, N.; Zhou, F.; Pulver, E.M.; Tilbury, D.M.; Robert, L.P.; Pradhan, A.K.; Yang, X.J. Predicting driver takeover performance in conditionally automated driving. Accid. Anal. Prev. 2020, 148, 105748.
57. Leitner, J.; Miller, L.; Stoll, T.; Baumann, M. Overtake or not—A computer-based driving simulation experiment on drivers’ decisions during transitions in automated driving. Transp. Res. Part F Traffic Psychol. Behav. 2023, 96, 285–300.
58. Wu, Y.; Kihara, K.; Takeda, Y.; Sato, T.; Akamatsu, M.; Kitazaki, S.; Nakagawa, K.; Yamada, K.; Oka, H.; Kameyama, S. Eye movements predict driver reaction time to takeover request in automated driving: A real-vehicle study. Transp. Res. Part F Traffic Psychol. Behav. 2021, 81, 355–363.
59. de Salis, E.; Meteier, Q.; Capallera, M.; Angelini, L.; Sonderegger, A.; Khaled, O.A.; Mugellini, E.; Widmer, M.; Carrino, S. Predicting takeover quality in conditionally automated vehicles using machine learning and genetic algorithms. In Proceedings of the 4th International Conference on Intelligent Human Systems Integration, Virtual, 22–24 February 2021; pp. 84–89.
60. DinparastDjadid, A.; Lee, J.D.; Domeyer, J.; Schwarz, C.; Brown, T.L.; Gunaratne, P. Designing for the extremes: Modeling drivers’ response time to take back control from automation using Bayesian quantile regression. Hum. Factors 2021, 63, 519–530.
61. Körber, M.; Weißgerber, T.; Kalb, L.; Blaschke, C.; Farid, M. Prediction of take-over time in highly automated driving by two psychometric tests. Dyna 2015, 82, 195–201.
62. Dillmann, J.; Den Hartigh, R.J.R.; Kurpiers, C.M.; Raisch, F.K.; Kadrileev, N.; Cox, R.F.A.; De Waard, D. Repeated conditionally automated driving on the road: How do drivers leave the loop over time? Accid. Anal. Prev. 2023, 181, 106927.
63. Pakdamanian, E.; Sheng, S.; Baee, S.; Heo, S.; Kraus, S.; Feng, L. DeepTake: Prediction of Driver Takeover Behavior using Multimodal Data. In Proceedings of the ACM CHI Conference on Human Factors in Computing Systems, Yokohama, Japan, 8–13 May 2021; pp. 1–13.
64. Heo, J.; Lee, H.; Yoon, S.; Kim, K. Responses to take-over request in autonomous vehicles: Effects of environmental conditions and cues. IEEE Trans. Intell. Transp. Syst. 2022, 23, 23573–23582.
65. Kaduk, S.I.; Roberts, A.P.J.; Stanton, N.A. Driving performance, sleepiness, fatigue, and mental workload throughout the time course of semi-automated driving—Experimental data from the driving simulator. Hum. Factors Ergon. Manuf. Serv. Ind. 2021, 31, 143–154.
66. Li, S.; Blythe, P.; Guo, W.; Namdeo, A. Investigation of older driver’s takeover performance in highly automated vehicles in adverse weather conditions. IET Intell. Transp. Syst. 2018, 12, 1157–1165.
67. Li, Y.; Xuan, Z. Take-Over Safety Evaluation of Conditionally Automated Vehicles under Typical Highway Segments. Systems 2023, 11, 475.
68. Scharfe-Scherf, M.S.L.; Russwinkel, N. Familiarity and complexity during a takeover in highly automated driving. Int. J. Intell. Transp. Syst. Res. 2021, 19, 525–538.
69. Pipkorn, L.; Victor, T.; Dozza, M.; Tivesten, E. Automation aftereffects: The influence of automation duration, test track and timings. IEEE Trans. Intell. Transp. Syst. 2021, 23, 4746–4757.
70. Li, Y.; Xuan, Z.; Li, X. A study on the entire take-over process-based emergency obstacle avoidance behavior. Int. J. Environ. Res. Public Health 2023, 20, 3069.
71. Chen, K.T.; Chen, H.Y.W.; Bisantz, A. Adding visual contextual information to continuous sonification feedback about low-reliability situations in conditionally automated driving: A driving simulator study. Transp. Res. Part F Traffic Psychol. Behav. 2023, 94, 25–41.
72. Zhao, X.; Chen, H.; Li, Z.; Li, H.; Gong, J.; Fu, Q. Influence characteristics of automated driving takeover behavior in different scenarios. China J. Highw. Transp. 2022, 35, 195–214.
73. Tan, X.; Zhang, Y. A computational cognitive model of driver response time for scheduled freeway exiting takeovers in conditionally automated vehicles. Hum. Factors 2024, 66, 1583–1599.
74. Hong, S.; Yue, T.; You, Y.; Lv, Z.; Tang, X.; Hu, J.; Yin, H. A Resilience Recovery Method for Complex Traffic Network Security Based on Trend Forecasting. Int. J. Intell. Syst. 2025, 2025, 3715086.
75. Nilsson, J.; Strand, N.; Falcone, P.; Vinter, J. Driver performance in the presence of adaptive cruise control related failures: Implications for safety analysis and fault tolerance. In Proceedings of the 43rd Annual IEEE/IFIP International Conference on Dependable Systems and Networks Workshop (DSN-W), Budapest, Hungary, 24–27 June 2013; pp. 1–10.
76. Wei, R.; McDonald, A.D.; Garcia, A.; Alambeigi, H. Modeling driver responses to automation failures with active inference. IEEE Trans. Intell. Transp. Syst. 2022, 23, 18064–18075.
77. Jung, K.H.; Labriola, J.T.; Baek, H. Projecting the planned trajectory of a Level-2 automated vehicle in the windshield: Effects on human drivers’ take-over response to silent failures. Appl. Ergon. 2023, 111, 104047.
78. Pipkorn, L.; Dozza, M.; Tivesten, E. Driver visual attention before and after take-over requests during automated driving on public roads. Hum. Factors 2024, 66, 336–347.
79. Riegler, A.; Riener, A.; Holzmann, C. Towards personalized 3D augmented reality windshield displays in the context of automated driving. Front. Future Transp. 2022, 3, 810698.
80. Radlmayr, J.; Gold, C.; Lorenz, L.; Farid, M.; Bengler, K. How traffic situations and non-driving related tasks affect the take-over quality in highly automated driving. In Proceedings of the Human Factors and Ergonomics Society Annual Meeting, Chicago, IL, USA, 27–31 October 2014; Volume 58, pp. 2063–2067.
81. Gold, C.; Körber, M.; Lechner, D.; Bengler, K. Taking over control from highly automated vehicles in complex traffic situations: The role of traffic density. Hum. Factors 2016, 58, 642–652.
82. Gold, C.; Berisha, I.; Bengler, K. Utilization of drivetime–performing non-driving related tasks while driving highly automated. In Proceedings of the Human Factors and Ergonomics Society Annual Meeting, Los Angeles, CA, USA, 26–30 October 2015; Volume 59, pp. 1666–1670.
83. Kim, H.; Kim, W.; Kim, J.; Lee, S.J.; Yoon, D.; Kwon, O.C.; Park, C.H. Study on the Take-over Performance of Level 3 Autonomous Vehicles Based on Subjective Driving Tendency Questionnaires and Machine Learning Methods. ETRI J. 2023, 45, 75–92.
84. Shi, E.; Bengler, K. Non-driving related tasks’ effects on takeover and manual driving behavior in a real driving setting: A differentiation approach based on task switching and modality shifting. Accid. Anal. Prev. 2022, 178, 106844.
85. Chen, R.; Kusano, K.D.; Gabler, H.C. Driver behavior during overtaking maneuvers from the 100-car naturalistic driving study. Traffic Inj. Prev. 2015, 16, S176–S181.
86. Victor, T.; Dozza, M.; Bärgman, J.; Boda, C.N.; Engström, J.; Flannagan, C.; Lee, J.D.; Markkula, G. Analysis of Naturalistic Driving Study Data: Safer Glances, Driver Inattention, and Crash Risk; Technical Report; The National Academies Press: Washington, DC, USA, 2015.
87. Zhu, M.; Wang, X.; Tarko, A.; Fang, S. Modeling car-following behavior on urban expressways in Shanghai: A naturalistic driving study. Transp. Res. Part C Emerg. Technol. 2018, 93, 425–445.
88. Pipkorn, L.; Tivesten, E.; Flannagan, C.; Dozza, M. Driver response to take-over requests in real traffic. IEEE Trans. Hum.-Mach. Syst. 2023, 53, 823–833.
89. Lotz, A.; Weissenberger, S. Predicting take-over times of truck drivers in conditional autonomous driving. In Advances in Human Aspects of Transportation: AHFE 2018 Advances in Intelligent Systems and Computing; Springer: Cham, Switzerland, 2019; pp. 329–338.
90. Rangesh, A.; Deo, N.; Greer, R.; Gunaratne, P.; Trivedi, M.M. Autonomous vehicles that alert humans to take-over controls: Modeling with real-world data. In Proceedings of the IEEE International Intelligent Transportation Systems Conference (ITSC), Indianapolis, IN, USA, 19–22 September 2021; pp. 231–236.
91. Rahman, S.U.; O’Connor, N.; Lemley, J.; Healy, G. Using pre-stimulus EEG to predict driver reaction time to road events. In Proceedings of the 44th Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC), Glasgow, UK, 11–15 July 2022; pp. 4036–4039.
92. Li, Q.; Wang, Z.; Wang, W.; Zeng, C.; Wu, C.; Li, G.; Heh, J.S.; Cheng, B. A human-centered comprehensive measure of take-over performance based on multiple objective metrics. IEEE Trans. Intell. Transp. Syst. 2023, 24, 4235–4250.
93. Yi, B.; Cao, H.; Song, X.; Wang, J.; Zhao, S.; Guo, W.; Cao, D. How can the trust-change direction be measured and identified during takeover transitions in conditionally automated driving? Using physiological responses and takeover-related factors. Hum. Factors 2024, 66, 1276–1301.
94. Dogan, D.; Bogosyan, S.; Acarman, T. Evaluation of takeover time performance of drivers in partially autonomous vehicles using a wearable sensor. J. Sens. 2022, 2022, 7924444.
95. Li, Q.; Wang, Z.; Wang, W.; Zeng, C.; Li, G.; Yuan, Q.; Cheng, B. An adaptive time budget adjustment strategy based on a take-over performance model for passive fatigue. IEEE Trans. Hum.-Mach. Syst. 2021, 52, 1025–1035.
96. Araluce, J.; Bergasa, L.M.; Ocaña, M.; López-Guillén, E.; Gutiérrez-Moreno, R.; Arango, J.F. Driver take-over behaviour study based on gaze focalization and vehicle data in CARLA simulator. Sensors 2022, 22, 9993.
97. Ayoub, J.; Du, N.; Yang, X.J.; Zhou, F. Predicting driver takeover time in conditionally automated driving. IEEE Trans. Intell. Transp. Syst. 2022, 23, 9580–9589.
98. Gruden, T.; Jakus, G. Determining key parameters with data-assisted analysis of conditionally automated driving. Appl. Sci. 2023, 13, 6649.
99. Liu, W.; Li, Q.; Wang, W.; Wang, Z.; Zeng, C.; Cheng, B. Deep Learning Based Take-Over Performance Prediction and Its Application on Intelligent Vehicles. IEEE Trans. Intell. Veh. 2024, 1–15.
100. Gold, C.; Happee, R.; Bengler, K. Modeling take-over performance in level 3 conditionally automated vehicles. Accid. Anal. Prev. 2018, 116, 3–13.
101. Chen, H.; Zhao, X.; Li, H.; Gong, J.; Fu, Q. Predicting driver’s takeover time based on individual characteristics, external environment, and situation awareness. Accid. Anal. Prev. 2024, 203, 107601.
102. Jin, M.; Lu, G.; Chen, F.; Shi, X.; Tan, H.; Zhai, J. Modeling takeover behavior in level 3 automated driving via a structural equation model: Considering the mediating role of trust. Accid. Anal. Prev. 2021, 157, 106156.
103. El Jouhri, A.; El Sharkawy, A.; Paksoy, H.; Youssif, O.; He, X.; Kim, S.; Happee, R. The influence of a color themed HMI on trust and take-over performance in automated vehicles. Front. Psychol. 2023, 14, 1128285.

| Country | Total Cited Count |
|---|---|
| Germany | 901 |
| United Kingdom | 461 |
| Netherlands | 345 |
| United States | 308 |
| Australia | 219 |
| China | 158 |
| Republic of Korea | 138 |
| Austria | 53 |
| Japan | 51 |
| France | 48 |

| Country | Average Cited Count |
|---|---|
| Australia | 109.50 |
| Netherlands | 57.50 |
| United Kingdom | 51.20 |
| Germany | 42.90 |
| Austria | 17.70 |
| Morocco | 13.00 |
| Republic of Korea | 12.50 |
| United States | 11.00 |
| France | 9.60 |
| Japan | 7.30 |
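Read together, the two country tables show why the rankings differ. Assume the average cited count is the total cited count divided by the number of publications attributed to a country. That definition is an assumption, since it is not stated alongside the tables. Under it, the ratio of the two columns recovers an approximate publication count. The Python sketch below makes that inference for the countries present in both tables. It also checks that each reported average is consistent with rounding to one decimal place: for example, 138 / 11 ≈ 12.55 is reported as 12.50. The variable names are hypothetical.

```python
# Values copied from the two country tables above.
total_cited = {
    "Germany": 901, "United Kingdom": 461, "Netherlands": 345,
    "United States": 308, "Australia": 219, "China": 158,
    "Republic of Korea": 138, "Austria": 53, "Japan": 51, "France": 48,
}
average_cited = {
    "Australia": 109.50, "Netherlands": 57.50, "United Kingdom": 51.20,
    "Germany": 42.90, "Austria": 17.70, "Morocco": 13.00,
    "Republic of Korea": 12.50, "United States": 11.00, "France": 9.60,
    "Japan": 7.30,
}

# Assumption: average = total / publications, hence
# publications ~= total / average. Only countries in both tables qualify.
implied_pubs = {
    c: round(total_cited[c] / average_cited[c])
    for c in total_cited if c in average_cited
}

# Sanity check: if the assumption holds, total / implied count should lie
# within 0.05 of the reported average (i.e. agree after rounding to 0.1).
consistent = {
    c: abs(total_cited[c] / n - average_cited[c]) < 0.05
    for c, n in implied_pubs.items()
}

for c, n in sorted(implied_pubs.items(), key=lambda kv: -kv[1]):
    print(f"{c}: ~{n} publications, consistent={consistent[c]}")
```

If this assumption holds, Australia's leading average rests on roughly two highly cited publications. Germany's larger total, by contrast, is spread over roughly 21.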

| Institution | Number of Publications |
|---|---|
| University of Michigan | 19 |
| Tsinghua University | 15 |
| Beihang University | 11 |
| Delft University of Technology | 11 |
| Technical University of Berlin | 11 |
| Technical University of Munich | 11 |
| Chalmers University of Technology | 9 |
| University of Ljubljana | 9 |
| University of Southampton | 8 |
| Wuhan University of Technology | 8 |

| Journal | Number of Publications |
|---|---|
| Transportation Research Part F: Traffic Psychology and Behaviour | 21 |
| Accident Analysis and Prevention | 18 |
| Human Factors | 15 |
| IEEE Transactions on Intelligent Transportation Systems | 9 |
| Applied Ergonomics | 6 |
| IEEE Access | 5 |
| IEEE Transactions on Human–Machine Systems | 4 |
| Transportation Research Record | 4 |
| Applied Sciences-Basel | 3 |
| International Journal of Human-Computer Interaction | 3 |

| Take-Over Mode | Specific Content | Ref. |
|---|---|---|
| Steering | Turn the steering wheel beyond a certain angle | [54,55] |
| Pedal | Press the brake pedal beyond a certain percentage | [30] |
| Button | Press a fixed button (on the display, steering wheel, or gear position) | [41,49,56,57] |
| Pedal or Steering | Either pedal or steering input | [31,32,48,51,58,59,60] |
| Pedal/Steering/Button | Any of pedal, steering, or button input | [22,61] |
| Custom Methods | Press the lever behind the steering wheel | [16] |
| | Touch the steering wheel and press the button | [62] |
| | Press two buttons on the steering wheel simultaneously | [63] |
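
The threshold-based modes above (steering angle, brake-pedal travel, or whichever comes first) are usually turned into a TOT value by locating the first qualifying control input after the TOR. The sketch below illustrates that idea under stated assumptions: time-aligned samples and hypothetical thresholds of a 2° steering-angle change and 10% brake-pedal travel. The function name `detect_takeover_time` and both threshold values are illustrative choices, not parameters reported by the cited studies.

```python
from typing import Optional, Sequence

# Hypothetical thresholds, for illustration only; each cited study defines its own.
STEERING_THRESHOLD_DEG = 2.0  # change in steering-wheel angle relative to the TOR instant
BRAKE_THRESHOLD_PCT = 10.0    # brake-pedal travel in percent


def detect_takeover_time(
    t: Sequence[float],
    steering_deg: Sequence[float],
    brake_pct: Sequence[float],
    tor_time: float,
    steer_thr: float = STEERING_THRESHOLD_DEG,
    brake_thr: float = BRAKE_THRESHOLD_PCT,
) -> Optional[float]:
    """Return TOT in seconds: the delay from the TOR to the first sample at which
    either the steering change or the brake travel reaches its threshold
    ("pedal or steering" mode). Returns None if no take-over is detected."""
    if not len(t) == len(steering_deg) == len(brake_pct):
        raise ValueError("signals must have the same length")
    baseline = None
    for ti, steer, brake in zip(t, steering_deg, brake_pct):
        if ti < tor_time:
            continue  # ignore samples recorded before the request
        if baseline is None:
            baseline = steer  # steering angle at the first sample after the TOR
        if abs(steer - baseline) >= steer_thr or brake >= brake_thr:
            return ti - tor_time
    return None


# Hypothetical 2 Hz trace with a TOR issued at t = 1.0 s.
t = [0.0, 0.5, 1.0, 1.5, 2.0, 2.5]
steering = [0.0, 0.1, 0.3, 0.5, 2.6, 5.0]
brake = [0.0, 0.0, 0.0, 0.0, 0.0, 20.0]
print(detect_takeover_time(t, steering, brake, tor_time=1.0))
```

Because studies differ in their thresholds and in which inputs count as a take-over, TOT values obtained under different modes in the table may not be directly comparable.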

| Event Type | Specific Content | Ref. |
|---|---|---|
| System Longitudinal Function Limited | Obstacle ahead | [40,55,59,69] |
| | Steep slope | [59] |
| | Stationary vehicle ahead | [22,26,70] |
| | Construction site | [22,23,40,48] |
| | Vehicle ahead braking | [15,23] |
| | Obstacle during lead-vehicle lane change | [58] |
| | Pedestrian or animal intrusion | [26,59,71] |
| | Sudden vehicle entry | [15,22,23] |
| | Overtaking | [23,42] |
| | Rainy day | [59,71] |
| | Foggy day | [23,71,72] |
| System Lateral Function Limited | Blurred lane markings | [15,59] |
| | Ramp entrance/exit | [17,40,67,72,73] |
| System Failure | Partial system function failure | [71,74,75,76,77] |

| Experimental Equipment | Ref. |
|---|---|
| Desktop Simulator | [8,31,34,47,79] |
| Cockpit Simulator | [25,30,32,33,35,80,81,82,83] |
| Real Vehicle | [23,51,68,84,85] |

| Statistical Model | Independent Variables | Year | Key Methodology | Ref. |
|---|---|---|---|---|
| Generalized Non-linear Model | TB, traffic density, NDRTs, task repetitiveness, lane, driver age | 2018 | Multicollinearity diagnosis via VIF; significant predictors (p < 0.05) | [100] |
| Multiple Regression Model | Visual behavior, drowsiness, attitude, ACC experience, reaction speed, age, gender | 2018 | Significant predictors (p < 0.05) | [16] |
| Linear Mixed-Effects Model | Event urgency, device usage, visual NDRTs, TOR type, driver experience | 2019 | Within-study: condition-wise TOT differences (Wilcoxon); between-study: correlations of TOT with study variables (Pearson/Spearman) | [4] |
| Multiple Linear Regression Model | Physical/visual/cognitive NDRTs | 2021 | Feature selection: backward elimination (p < 0.05); collinearity check: VIF values 1.35–3.51, below the critical threshold (VIF > 10) | [14] |
| Multiple Regression Model | Visual characteristics | 2021 | Preliminary analysis: Pearson correlations between eye-movement measures and RT; model building: stepwise regression with backward elimination (p < 0.05) | [58] |
| Generalized Additive Model | Fatigue, traffic situations, TB | 2022 | Validation: Spearman correlation (POF, MSRD, TTBT); data: 357 take-overs, train/test split (286/71); VIF: low values, no multicollinearity | [95] |
| Generalized Linear Mixed Model | Preceding speed, autonomy duration, TB, trajectory, behavior | 2024 | Feature selection: EMD-based screening for the optimal GMM variable combination; driving-state classification: GMM to detect unstable–stable transitions; model validation: GLMM compared with GLM via likelihood ratio test | [41] |
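
Several of the models above screen predictors for multicollinearity with the variance inflation factor (VIF); [14], for example, reports values of 1.35–3.51 against a critical threshold of 10. A minimal NumPy sketch of the standard definition, VIF_j = 1/(1 − R_j²), where R_j² comes from regressing predictor j on the remaining predictors, is shown below. The function name and the synthetic data are assumptions for illustration only.

```python
import numpy as np


def variance_inflation_factors(X: np.ndarray) -> np.ndarray:
    """VIF for each column of X (n samples x p predictors, without an intercept column).

    VIF_j = 1 / (1 - R_j^2), where R_j^2 is from an OLS fit of predictor j on all
    other predictors plus an intercept.
    """
    X = np.asarray(X, dtype=float)
    n, p = X.shape
    vifs = np.empty(p)
    for j in range(p):
        target = X[:, j]
        # Design matrix: intercept column plus every predictor except j.
        design = np.column_stack([np.ones(n), np.delete(X, j, axis=1)])
        beta, *_ = np.linalg.lstsq(design, target, rcond=None)
        residuals = target - design @ beta
        r2 = 1.0 - (residuals @ residuals) / np.sum((target - target.mean()) ** 2)
        vifs[j] = np.inf if r2 >= 1.0 else 1.0 / (1.0 - r2)
    return vifs


# Synthetic predictors: x3 is nearly a copy of x1, x2 is independent of both.
rng = np.random.default_rng(0)
n = 200
x1 = rng.normal(size=n)
x2 = rng.normal(size=n)
x3 = x1 + 0.1 * rng.normal(size=n)
vifs = variance_inflation_factors(np.column_stack([x1, x2, x3]))
print(np.round(vifs, 2))
```

With this construction the near-duplicate pair x1 and x3 should exceed the VIF > 10 threshold while x2 stays close to 1. Backward elimination, as used in [14] and [58], would wrap a fitted regression in a loop that repeatedly drops the predictor with the largest p-value until all remaining ones fall below 0.05; that loop is not shown here.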

| Model | Goodness-of-Fit | Error Metrics | Statistical Significance | Ref. |
|---|---|---|---|---|
| Generalized Non-linear Model | R² = 0.43 | RMSE = 0.81 s | – | [100] |
| Multiple Regression Model | Adjusted R² = 0.182 | – | p < 0.001 | [16] |
| Linear Mixed-Effects Model | – | – | Most predictors significant | [4] |
| Multiple Linear Regression (Component Models) | MRT: R² = 0.326 (Adj. R² = 0.313); PARST: R² = 0.304 (Adj. R² = 0.274); GT: R² = 0.373 (Adj. R² = 0.364) | – | Validation correlation (individual; mean by NDRT) | [14] |
| Multiple Regression Model | R² = 0.40 | – | F-statistic, 0.001 | [58] |
| Generalized Additive Model | Training Adj. R² = 0.747 | Test set: MAE = 0.72 s, RMSE = 0.90 s; adaptive strategy: MAE = 0.71 s, RMSE = 0.86 s | – | [95] |
| Mixed Model (GMM & GLMM) | Critical scenario: Adj. R² = 0.839; non-critical scenario: Adj. R² = 0.846 | – | Likelihood ratio test: GLMM > GLM (p < 0.005) | [41] |
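
The goodness-of-fit and error metrics in the table follow standard definitions. The sketch below computes R², adjusted R² (for a given number of predictors), MAE, RMSE, and MAPE from observed and predicted TOT values; the function name and the toy numbers are hypothetical. When reading across rows, note that a training-set adjusted R² (as reported for [95]) and a held-out test error describe different things and are not directly comparable.

```python
import math
from typing import Dict, Sequence


def regression_metrics(
    y_true: Sequence[float], y_pred: Sequence[float], n_predictors: int
) -> Dict[str, float]:
    """R^2, adjusted R^2, MAE and RMSE (same unit as TOT) and MAPE (%)."""
    n = len(y_true)
    if n != len(y_pred):
        raise ValueError("y_true and y_pred must have the same length")
    if n <= n_predictors + 1:
        raise ValueError("adjusted R^2 needs n > n_predictors + 1")
    errors = [p - t for t, p in zip(y_true, y_pred)]
    mean_true = sum(y_true) / n
    ss_res = sum(e * e for e in errors)                 # residual sum of squares
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)  # total sum of squares
    r2 = 1.0 - ss_res / ss_tot
    adj_r2 = 1.0 - (1.0 - r2) * (n - 1) / (n - n_predictors - 1)
    mae = sum(abs(e) for e in errors) / n
    rmse = math.sqrt(ss_res / n)
    # MAPE assumes no observed TOT equals zero.
    mape = 100.0 * sum(abs(e / t) for e, t in zip(errors, y_true)) / n
    return {"r2": r2, "adj_r2": adj_r2, "mae": mae, "rmse": rmse, "mape": mape}


# Toy example: four observed TOTs (s) and the outputs of a one-predictor model.
m = regression_metrics([2.0, 3.0, 4.0, 5.0], [2.5, 2.5, 4.5, 4.5], n_predictors=1)
print(m)
```

Because RMSE squares errors before averaging, it is always at least as large as MAE; a wide gap between the two indicates a few large misses rather than uniformly moderate errors.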

| Model | Features | Year | Key Methodology | Ref. |
|---|---|---|---|---|
| SVM | Eye movements, posture | 2019 | Feature selection: MANOVA | [89] |
| RF | Heart rate, skin conductance, eye tracking, scene type, traffic density | 2020 | Method: random forest permutation importance ranking; process: sequential addition of top-ranked features | [56] |
| DeepTake | Visual features, skin conductance, heart rate | 2021 | SMOTE for class imbalance; LASSO stability selection; random forest importance ranking | [63] |
| LSTM | Driving conditions, driver state, distractions, control transfer timing | 2021 | Ablation studies on feature combinations | [90] |
| Extra Trees | 150 s of psychophysiological data | 2021 | Variance threshold; PCA | [59] |
| Bayesian Ridge + ANN | EEG spectral features | 2022 | Validation: leave-one-subject-out cross-validation | [91] |
| M5′ nonlinear regression tree | 41 factors (demographics, driving attributes, take-over characteristics) | 2023 | The dataset is partitioned by rules such as "time to first braking/steering input", and an optimal linear model is fitted to each subset | [98] |
| ACTNet | Driver state, demographics, traffic situations, interaction features | 2024 | Dual-input ACTNet fusing CNN-processed heatmaps with tabular features | [99] |
| XGBoost | Personal traits, environment, situational awareness | 2024 | Model interpretation: SHAP analysis for global/local explanations; ablation analysis: base model (BM) vs. enhanced model (BM+SA) | [101] |
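
[91] validates its models with leave-one-subject-out cross-validation, in which all take-overs from one driver are held out together so that driver-specific patterns cannot leak from training into testing. A dependency-free sketch of the fold generator follows. It should produce the same folds as scikit-learn's `LeaveOneGroupOut` with the driver ID as the group label (possibly in a different order), but the function name, the mean-TOT baseline, and the toy data are illustrative assumptions.

```python
from typing import Dict, Hashable, Iterator, List, Sequence, Tuple


def leave_one_subject_out(
    subject_ids: Sequence[Hashable],
) -> Iterator[Tuple[Hashable, List[int], List[int]]]:
    """Yield (held-out subject, train indices, test indices), one fold per subject."""
    groups: Dict[Hashable, List[int]] = {}
    for idx, sid in enumerate(subject_ids):
        groups.setdefault(sid, []).append(idx)  # folds follow order of first appearance
    for sid, test_idx in groups.items():
        held_out = set(test_idx)
        train_idx = [i for i in range(len(subject_ids)) if i not in held_out]
        yield sid, train_idx, test_idx


# Hypothetical data: six take-overs from three drivers, TOT in seconds.
tot = [2.1, 2.5, 3.0, 1.8, 2.2, 2.0]
subjects = ["P1", "P1", "P2", "P3", "P3", "P3"]
for sid, train, test in leave_one_subject_out(subjects):
    baseline = sum(tot[i] for i in train) / len(train)  # mean TOT of the other drivers
    mae = sum(abs(tot[i] - baseline) for i in test) / len(test)
    print(f"held out {sid}: MAE = {mae:.3f} s")
```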

| Model | Primary Task | Classification Metrics | Regression Error Metrics | Goodness-of-Fit | Ref. |
|---|---|---|---|---|---|
| SVM | Classification (online vs. offline) | Online MR: 38.7%; offline MR: 22.5%; with posture: 37.7% | – | – | [89] |
| RF | Classification (good/bad take-over) | Accuracy: 84.3%; F1: 64.0%; precision: 64.5%; recall: 63.9% | – | – | [56] |
| DeepTake | Classification (3-class TOT level) | Accuracy: 92.8%; weighted F1: 0.87; AUC: 0.96 | – | – | [63] |
| LSTM | Regression (multiple targets) | – | TOT MAE: 0.9144 s; eyes MAE: 0.2497 s; foot MAE: 0.4650 s; hands MAE: 0.8055 s | – | [90] |
| Extra Trees | Regression | – | RT MSE: 1.6906; MaxSWA MSE: 161.93 | – | [59] |
| Bayesian Ridge + ANN | Regression | – | Best MAE: 0.51–0.54 s (alpha/theta bands) | – | [91] |
| M5′ | Mixed (regression & classification) | Accuracy: 88.59% | Reaction time: 43.57%; lateral acceleration: 85.41% | – | [98] |
| ACTNet | Regression | – | MAE: 1.25 ± 0.21 s; RMSE: 1.60 ± 0.20 s | R²: 0.62 ± 0.04 | [99] |
| XGBoost | Regression | – | MAE: 0.1507 s; RMSE: 0.2763 s | Adj. R²: 0.7746 | [101] |
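
For the classification rows, accuracy, precision, recall, and F1 follow the usual confusion-matrix definitions. The sketch below computes accuracy, a misclassification rate taken as 1 − accuracy, and support-weighted F1 for a multi-class TOT label, as in the 3-class setting of [63]. The class names and predictions are invented, and it is an assumption that MR in [89] is defined this way. Reporting F1 alongside accuracy matters because accuracy alone can look favourable when one class dominates.

```python
from collections import Counter
from typing import Dict, Hashable, Sequence


def classification_metrics(
    y_true: Sequence[Hashable], y_pred: Sequence[Hashable]
) -> Dict[str, float]:
    """Accuracy, misclassification rate, and support-weighted F1 for multi-class labels."""
    if len(y_true) != len(y_pred) or len(y_true) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    n = len(y_true)
    pairs = list(zip(y_true, y_pred))
    accuracy = sum(t == p for t, p in pairs) / n
    support = Counter(y_true)  # number of true instances per class
    weighted_f1 = 0.0
    for c in set(y_true) | set(y_pred):
        tp = sum(t == c and p == c for t, p in pairs)
        fp = sum(t != c and p == c for t, p in pairs)
        fn = sum(t == c and p != c for t, p in pairs)
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        weighted_f1 += f1 * support[c] / n  # classes absent from y_true get weight 0
    return {
        "accuracy": accuracy,
        "misclassification_rate": 1.0 - accuracy,
        "weighted_f1": weighted_f1,
    }


# Hypothetical 3-class TOT labels for six take-overs.
y_true = ["low", "low", "mid", "high", "high", "high"]
y_pred = ["low", "mid", "mid", "high", "high", "low"]
metrics = classification_metrics(y_true, y_pred)
print(metrics)
```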

| Model | Predictors | Year | Key Methodology | Ref. |
|---|---|---|---|---|
| QN-ACTR | Road/traffic situations, driver attention/fatigue | 2019 | Modeling: production-rule-based single-task models integrated via QN-ACTR's multi-task scheduling | [20] |
| QN-MHP | Emotional states, sound cue frequency/repetition | 2020 | Statistical tests chosen according to the normality of residuals: parametric tests (e.g., ANOVA) for normal data, non-parametric tests (e.g., Mann–Whitney U) otherwise | [32] |
| QN-MHP | Sound characteristics (loudness/semantics/acoustics) | 2021 | Statistical analysis: repeated-measures ANOVA with Bonferroni correction for multiple comparisons | [49] |
| ACT-R | Trust, system/environment characteristics, individual differences | 2021 | Measurement model validated with confirmatory factor analysis, followed by path analysis to test the structural relationships | [102] |
| QN-MHP | Visual redirection, task priority, situational awareness, trust | 2022 | Decision-making mechanism modeled with Markov chains to simulate real-time transitions between monitoring, NDRTs, and take-over | [73] |
| ACT-R | Mental workload in take-over scenarios | 2024 | Workload quantified via ACT-R module activation/decay; adaptive decision-making between take-over and NDRTs simulated | [55] |
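
[73] models the decision process as a Markov chain over monitoring, NDRT engagement, and take-over. As a rough illustration of how such a chain can yield a TOT estimate (not a reproduction of the QN-MHP model), the sketch below treats take-over as an absorbing state, computes the expected time to absorption with the fundamental-matrix identity t = (I − Q)⁻¹·1, and cross-checks it by Monte Carlo simulation. The 0.1 s time step and every transition probability are hypothetical.

```python
import numpy as np

DT = 0.1  # hypothetical time step in seconds

# Hypothetical per-step transition probabilities among the transient states
# after a TOR (0 = engaged in NDRT, 1 = monitoring the road). The probability
# of entering the absorbing take-over state is 1 minus each row sum:
# 0.00 from NDRT and 0.08 from monitoring.
Q = np.array([
    [0.90, 0.10],
    [0.02, 0.90],
])


def expected_takeover_time(Q: np.ndarray, dt: float) -> np.ndarray:
    """Expected time (s) to absorption from each transient state: dt * (I - Q)^-1 1."""
    n = Q.shape[0]
    expected_steps = np.linalg.solve(np.eye(n) - Q, np.ones(n))
    return expected_steps * dt


def simulate_takeover_time(Q: np.ndarray, dt: float, start: int, rng) -> float:
    """Draw one take-over time (s) by stepping the chain until absorption."""
    cum = np.cumsum(Q, axis=1)  # cumulative transition probabilities per row
    n = Q.shape[0]
    state, steps = start, 0
    while state < n:  # index n stands for the absorbing take-over state
        steps += 1
        state = int(np.searchsorted(cum[state], rng.random(), side="right"))
    return steps * dt


expected = expected_takeover_time(Q, DT)
rng = np.random.default_rng(42)
sims = [simulate_takeover_time(Q, DT, start=0, rng=rng) for _ in range(5000)]
print("analytic, from NDRT / monitoring (s):", expected)
print("simulated mean, from NDRT (s):", np.mean(sims))
```

With these made-up probabilities a driver who starts in an NDRT is expected to take over after 2.5 s and one who is already monitoring after 1.5 s; the simulation additionally gives the full TOT distribution rather than only its mean.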

| Model | Goodness-of-Fit (R²) | Error Metrics | Model Fit Indices | Ref. |
|---|---|---|---|---|
| QN-ACTR | R² = 0.96 | RMSE = 0.5 s; MAPE = 9% | – | [20] |
| QN-MHP | All data: R² = 0.4997; excluding 8-rep/s warnings: R² = 0.6892 | – | – | [32] |
| QN-MHP | R² = 0.997 | RMSE = 0.148 s | – | [49] |
| ACT-R | – | – | χ²/df = 1.684 (< 3); CFI = 0.948 (> 0.9); RMSEA = 0.071 (< 0.08); GFI = 0.901 (> 0.9) | [102] |
| QN-MHP | Method 1 (by driver): R² = 0.76; Method 2 (by event): R² = 0.97 | Method 1: RMSE = 8.10 s; Method 2: RMSE = 3.02 s | – | [73] |
| ACT-R | Take-over response time: R² = 0.9669; mental workload: R² = 0.9705 | – | – | [55] |
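
The fit indices reported for [102] come from structural equation modeling. The χ²/df ratio, RMSEA, and CFI can be computed from the model and baseline (independence-model) chi-square statistics and then compared against the cut-offs quoted in the table, as sketched below; GFI is omitted because it requires the full observed and implied covariance matrices. The function names and example numbers are hypothetical, and some software packages use N rather than N − 1 in the RMSEA denominator.

```python
import math
from typing import Dict


def sem_fit_indices(
    chi2_model: float, df_model: int, chi2_base: float, df_base: int, n: int
) -> Dict[str, float]:
    """chi2/df, RMSEA and CFI from model and baseline (independence) chi-square values."""
    excess_model = max(chi2_model - df_model, 0.0)  # misfit beyond what df alone explains
    excess_base = max(chi2_base - df_base, 0.0)
    # RMSEA with the N - 1 convention; some packages use N instead.
    rmsea = math.sqrt(excess_model / (df_model * (n - 1)))
    denom = max(excess_model, excess_base)
    cfi = 1.0 if denom == 0 else 1.0 - excess_model / denom
    return {"chi2_df": chi2_model / df_model, "rmsea": rmsea, "cfi": cfi}


def check_cutoffs(ix: Dict[str, float]) -> Dict[str, bool]:
    """Apply the conventional cut-offs quoted in the table."""
    return {
        "chi2/df < 3": ix["chi2_df"] < 3,
        "CFI > 0.9": ix["cfi"] > 0.9,
        "RMSEA < 0.08": ix["rmsea"] < 0.08,
    }


# Hypothetical values: model chi2 = 100 on 50 df, baseline chi2 = 1000 on 60 df, N = 201.
ix = sem_fit_indices(100.0, 50, 1000.0, 60, n=201)
print(ix, check_cutoffs(ix))
```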

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

MDPI and ACS Style: Wu, H.; Zhou, X.; Lyu, N.; Wang, Y.; Xu, L.; Yang, Z. A Review of Methods for Predicting Driver Take-Over Time in Conditionally Automated Driving. Sensors 2025, 25, 6931. https://doi.org/10.3390/s25226931

AMA Style: Wu H, Zhou X, Lyu N, Wang Y, Xu L, Yang Z. A Review of Methods for Predicting Driver Take-Over Time in Conditionally Automated Driving. Sensors. 2025; 25(22):6931. https://doi.org/10.3390/s25226931

Chicago/Turabian Style: Wu, Haoran, Xun Zhou, Nengchao Lyu, Yugang Wang, Linli Xu, and Zhengcai Yang. 2025. "A Review of Methods for Predicting Driver Take-Over Time in Conditionally Automated Driving" Sensors 25, no. 22: 6931. https://doi.org/10.3390/s25226931

APA Style: Wu, H., Zhou, X., Lyu, N., Wang, Y., Xu, L., & Yang, Z. (2025). A Review of Methods for Predicting Driver Take-Over Time in Conditionally Automated Driving. Sensors, 25(22), 6931. https://doi.org/10.3390/s25226931