Early Detection of Jujube Shrinkage Disease by Multi-Source Data on Multi-Task Deep Network

Abstract

1. Introduction

2. Materials and Methods

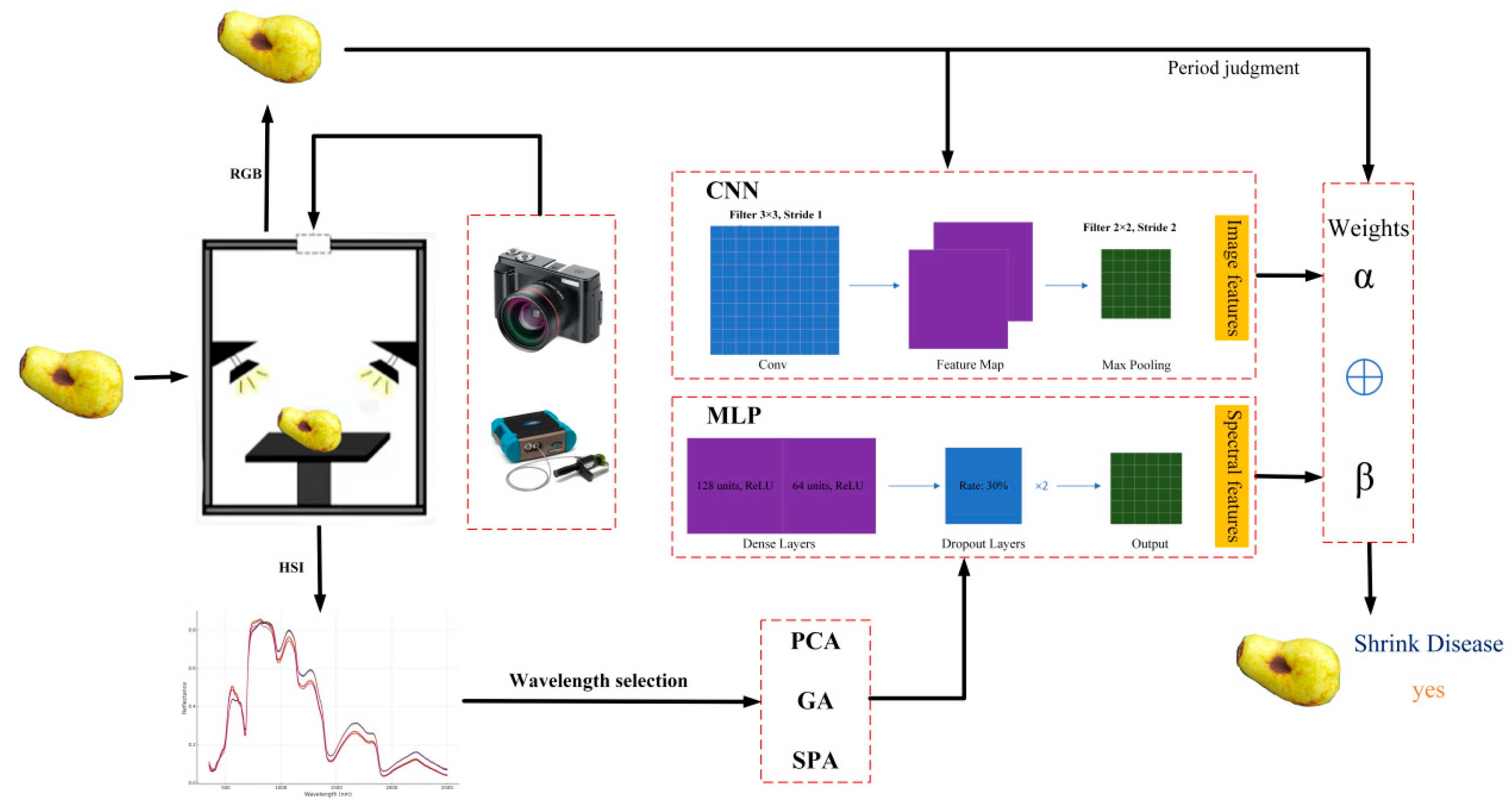

2.1. Overview

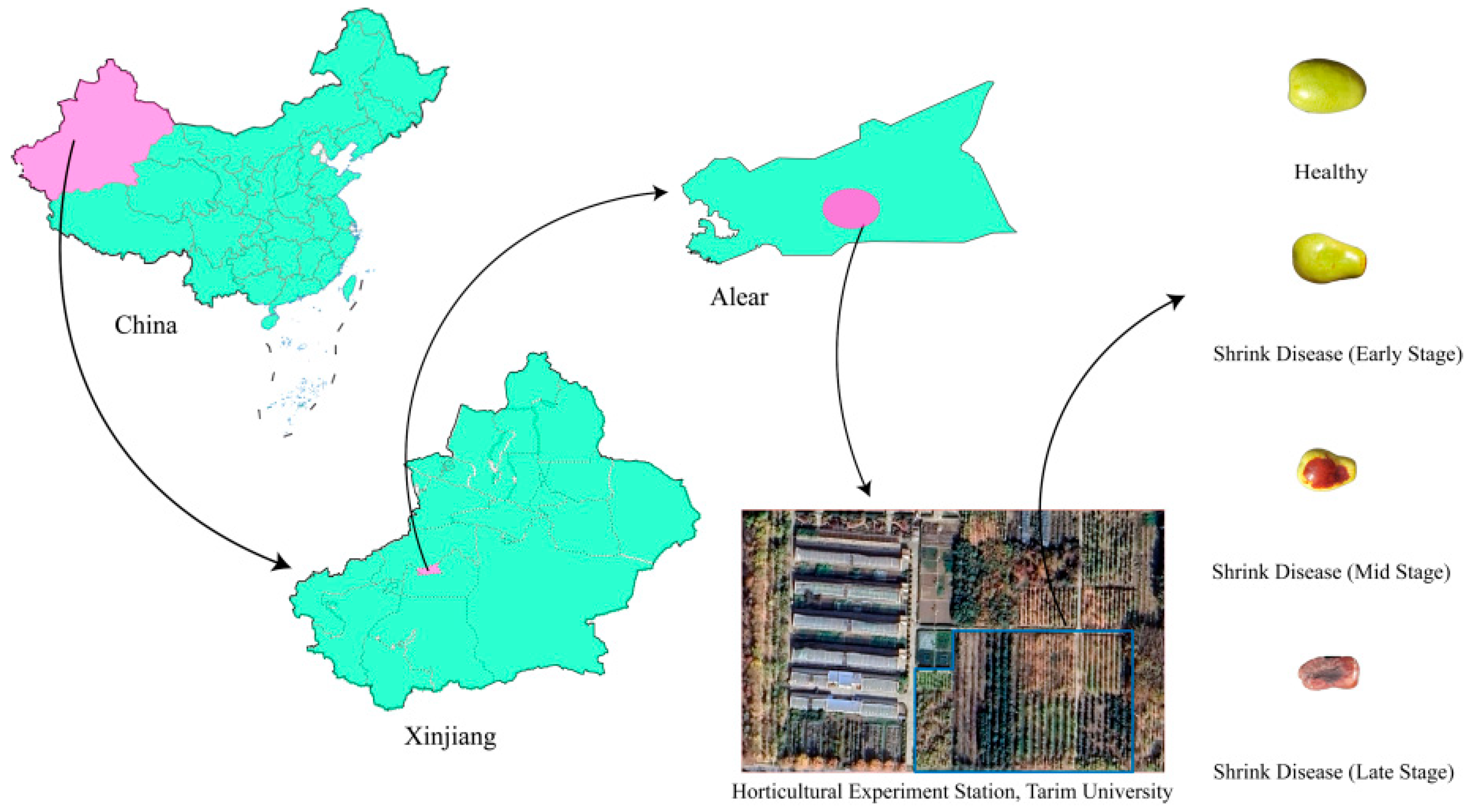

2.2. Shrinkage Disease Dataset for Jujubes

2.2.1. Data Acquisition

- (1) RGB Imaging

- (2) Hyperspectral Imaging

2.2.2. Data Preprocessing

- (1)

- RGB Feature Extraction

- (2)

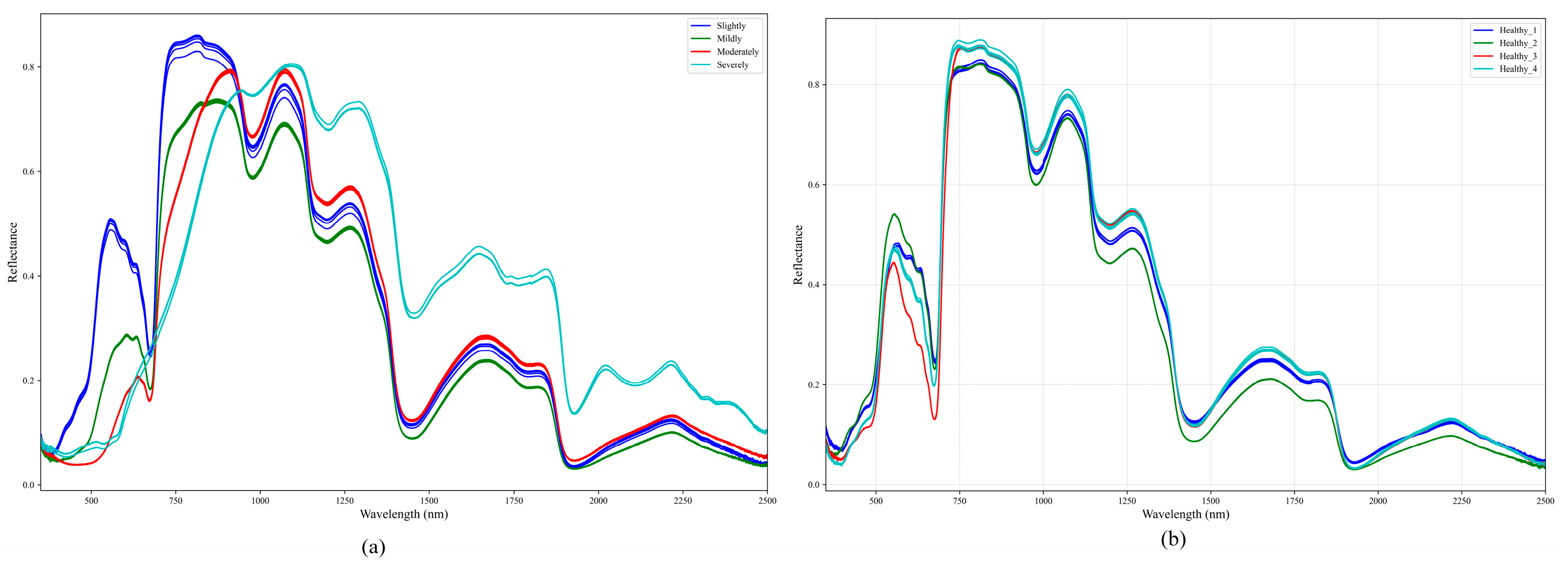

- Hyperspectral Data Wavelength Selection

2.2.3. Dataset Characteristics

2.3. Multimodal Feature Fusion Strategy

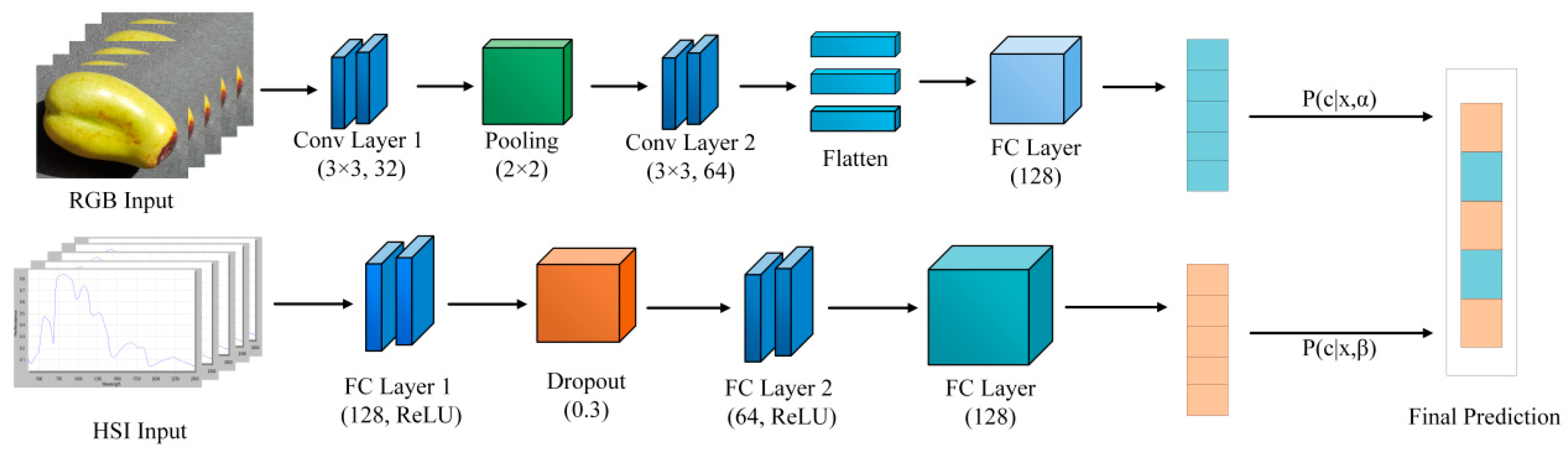

2.3.1. CNN-Based Feature Learning

2.3.2. MLP-Based Feature Learning

2.3.3. Dynamic Multimodal Feature Fusion

2.4. Performance Evaluation

3. Results

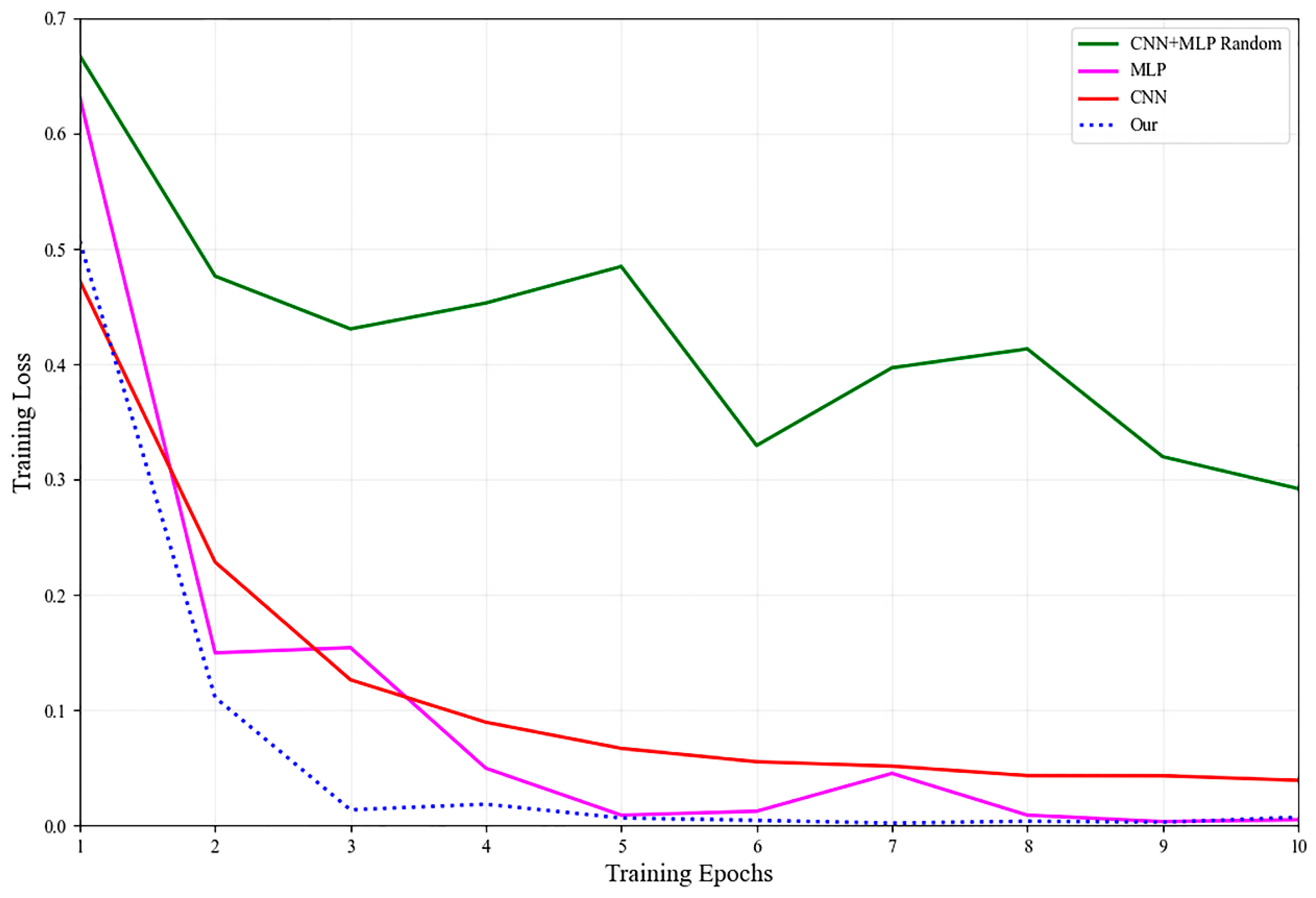

3.1. Model Training

3.2. Feature Band Selection

3.2.1. Hyperspectral Feature Band Analysis

3.2.2. Spectral Feature Selection

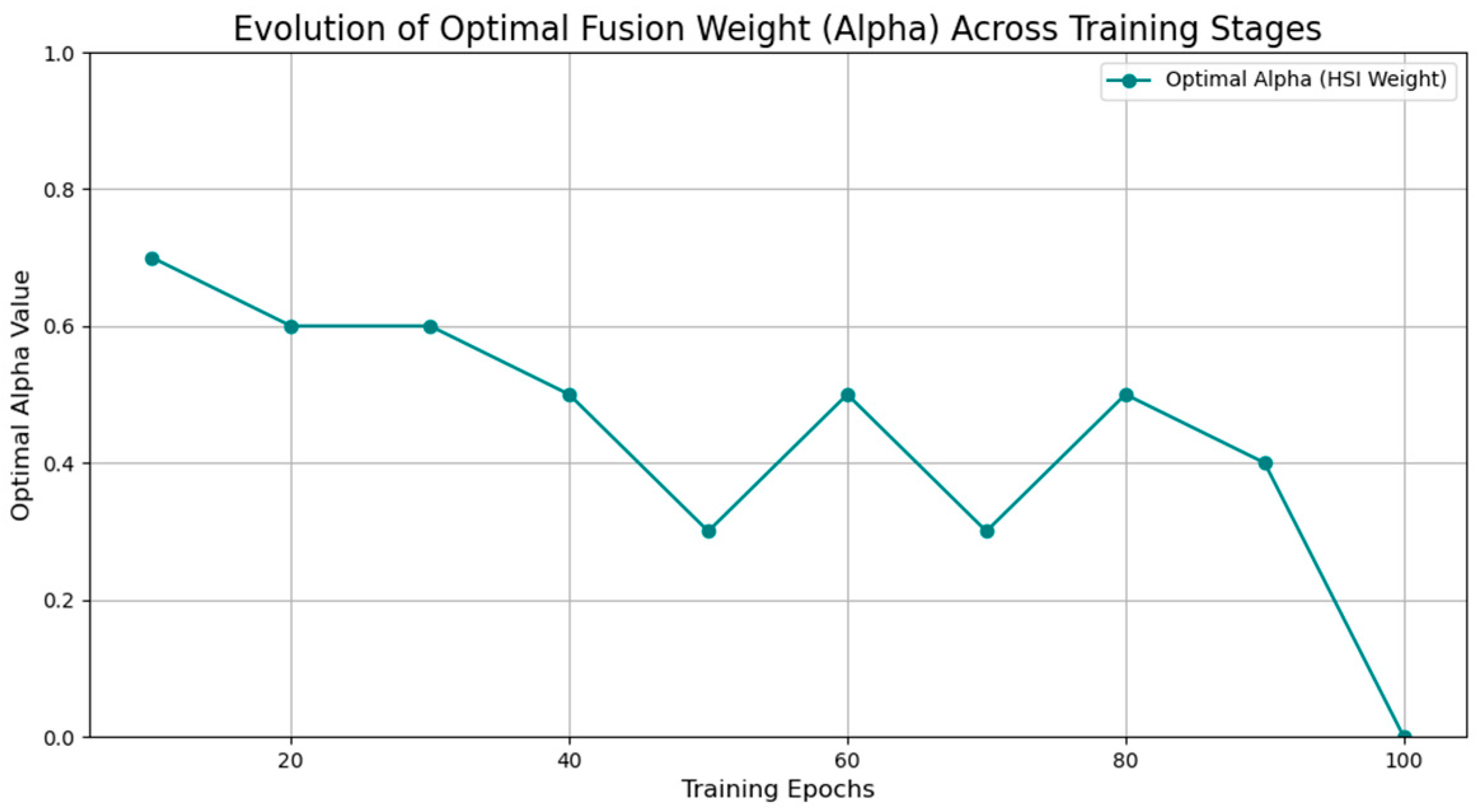

3.3. Fusion Weight Selection

3.4. Detection of Shrinkage Disease in Jujubes

3.4.1. Quantitative Evaluation

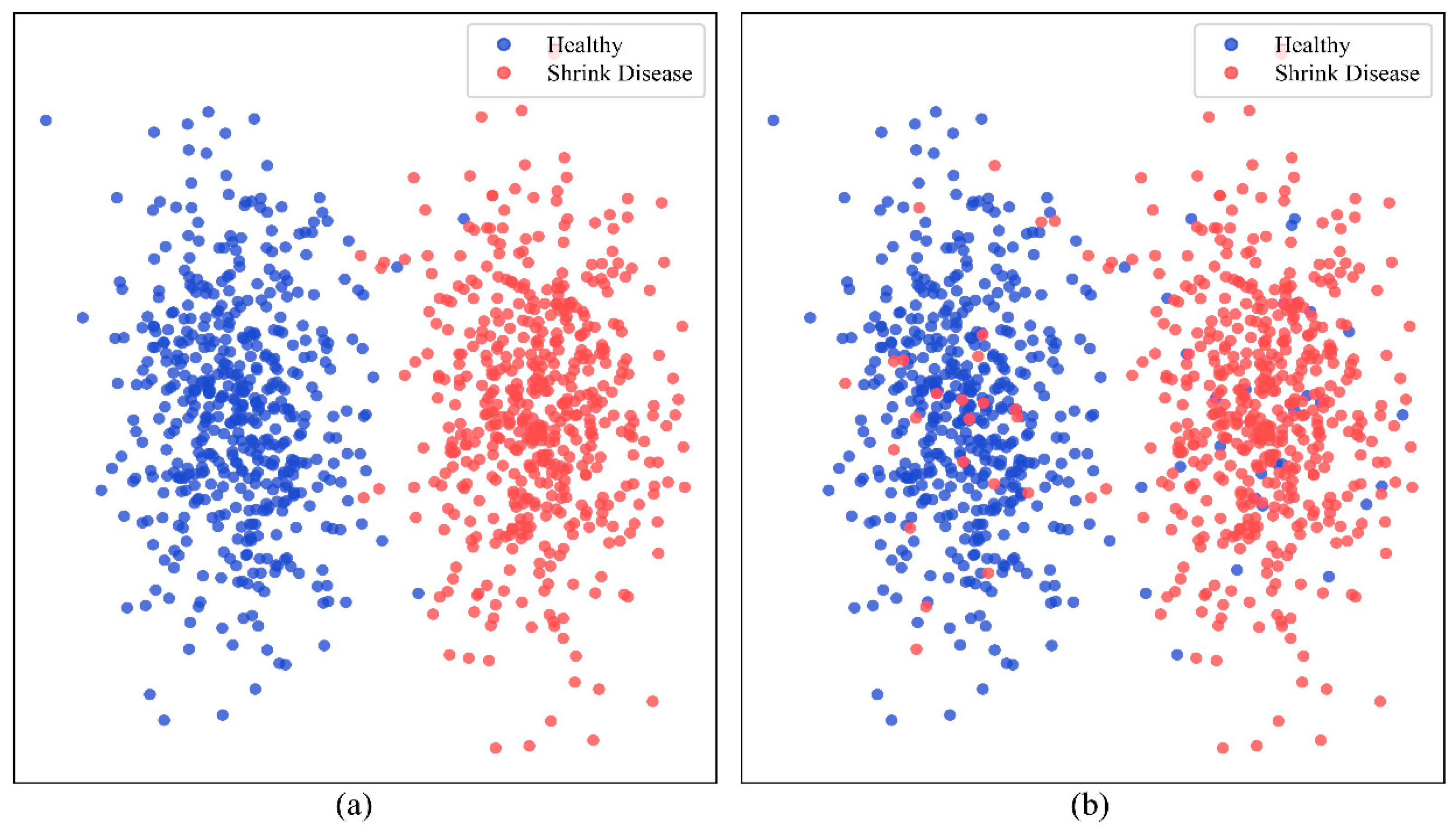

3.4.2. Qualitative Evaluation

3.5. Ablation Studies

3.5.1. Ablation on Detection Components

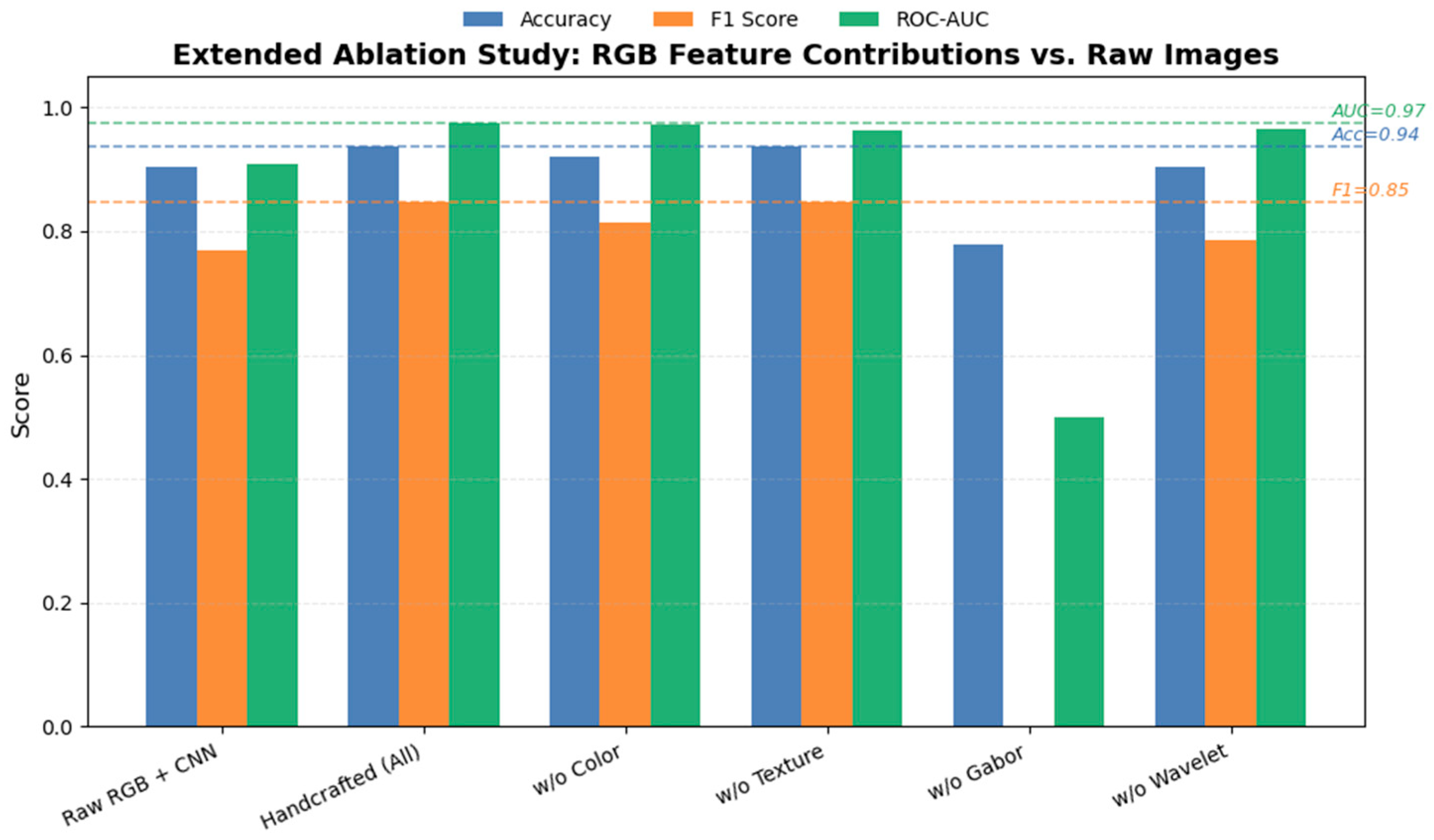

3.5.2. Ablation on RGB Feature Factors

4. Discussion

4.1. Quality Evaluation of Jujube

4.2. Hyperspectral and RGB Imaging Quality Detection in Other Fruits

4.3. Failure Samples Analysis

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Samples | Raw Data | Number |
|---|---|---|
| Training set | Image | 257 |
| | Jujube | 1285 |
| Validation/Test set | Image | 30 |
| | Jujube | 150 |

| Wavelength No. | GA (nm) | PCA (nm) | SPA (nm) |
|---|---|---|---|
| 1 | 389 | 535 | 902 |
| 2 | 393 | 536 | 553 |
| 3 | 528 | 537 | 1394 |
| 4 | 553 | 538 | 747 |
| 5 | 677 | 539 | 688 |
| 6 | 747 | 545 | 2267 |
| 7 | 778 | 546 | 1273 |
| 8 | 878 | 547 | 603 |
| 9 | 1070 | 553 | 1900 |
| 10 | 1075 | 554 | 483 |
| 11 | 1110 | 555 | 702 |
| 12 | 1239 | 596 | 350 |
| 13 | 1245 | 597 | 651 |
| 14 | 1579 | 598 | 1620 |
| 15 | 1624 | 684 | 412 |
| 16 | 1674 | 685 | 1075 |
| 17 | 1861 | 747 | 353 |
| 18 | 1935 | 883 | 808 |
| 19 | 2010 | 1070 | 673 |
| 20 | 2276 | 1075 | 1070 |
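One way to read the band-selection table is to ask which wavelengths the three methods agree on. The sketch below is only an illustration of that comparison, not the authors' selection procedure: the helper name `shared_bands` and its `min_votes` parameter are hypothetical, and the wavelength lists are copied from the table.

```python
from collections import Counter

# Wavelengths (nm) copied from the GA, PCA, and SPA columns of the table.
GA = [389, 393, 528, 553, 677, 747, 778, 878, 1070, 1075,
      1110, 1239, 1245, 1579, 1624, 1674, 1861, 1935, 2010, 2276]
PCA = [535, 536, 537, 538, 539, 545, 546, 547, 553, 554,
       555, 596, 597, 598, 684, 685, 747, 883, 1070, 1075]
SPA = [902, 553, 1394, 747, 688, 2267, 1273, 603, 1900, 483,
       702, 350, 651, 1620, 412, 1075, 353, 808, 673, 1070]


def shared_bands(*selections, min_votes=None):
    """Return the sorted bands chosen by at least `min_votes` methods.

    Hypothetical helper: by default a band must appear in every selection.
    """
    if min_votes is None:
        min_votes = len(selections)
    # Count each band once per method, even if a method lists it twice.
    votes = Counter(band for sel in selections for band in set(sel))
    return sorted(band for band, n in votes.items() if n >= min_votes)


common = shared_bands(GA, PCA, SPA)
print(common)  # [553, 747, 1070, 1075]
```

With exact matching, only four wavelengths are selected by all three methods. Allowing a tolerance of a few nanometres would be a different design choice and is not attempted here.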

| Methods | Accuracy | F1 Score | Precision | Recall | ROC-AUC |
|---|---|---|---|---|---|
| SVM | 0.794 | 0.381 | 0.333 | 0.444 | 0.680 |
| RF | 0.921 | 0.667 | 0.833 | 0.556 | 0.955 |
| KNN | 0.921 | 0.615 | 1.000 | 0.444 | 0.935 |
| GBM | 0.905 | 0.571 | 0.800 | 0.444 | 0.892 |
| CNN+MLP Random | 0.841 | 0.545 | 0.600 | 0.500 | 0.837 |
| Ours | 0.937 | 0.857 | 0.667 | 0.750 | 0.979 |
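The threshold-based metrics in the comparison table all follow from a binary confusion matrix. The minimal sketch below shows those standard definitions. The function name `binary_metrics` is hypothetical, and the counts (TP = 5, FP = 1, FN = 4, TN = 53) are not reported in the source: they are an assumption chosen because they reproduce the RF row after rounding. ROC-AUC is omitted because it requires per-sample scores rather than counts.

```python
def binary_metrics(tp, fp, fn, tn):
    """Standard binary-classification metrics from confusion-matrix counts."""
    total = tp + fp + fn + tn
    accuracy = (tp + tn) / total
    # Guard against empty denominators when a class is never predicted or present.
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    return {"accuracy": accuracy, "f1": f1,
            "precision": precision, "recall": recall}


# Assumed counts (not from the source) that match the RF row when rounded.
rf = binary_metrics(tp=5, fp=1, fn=4, tn=53)
rounded = {name: round(value, 3) for name, value in rf.items()}
print(rounded)
# {'accuracy': 0.921, 'f1': 0.667, 'precision': 0.833, 'recall': 0.556}
```

Because F1 is the harmonic mean of precision and recall, it always lies between the two, which gives a quick consistency check on any row of such a table.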

| Methods | Accuracy | F1 Score | Precision | Recall | ROC-AUC |
|---|---|---|---|---|---|
| MLP | 0.857 | 0.471 | 0.500 | 0.444 | 0.897 |
| CNN | 0.889 | 0.533 | 0.667 | 0.444 | 0.897 |
| CNN+KNN | 0.762 | 0.750 | 0.286 | 0.444 | 0.883 |
| SVM+MLP | 0.810 | 0.333 | 0.333 | 0.333 | 0.660 |
| CNN+MLP Random | 0.841 | 0.545 | 0.600 | 0.500 | 0.837 |
| Ours | 0.937 | 0.857 | 0.667 | 0.750 | 0.984 |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Pan, J.; Zhou, L.; Geng, H.; Zhang, P.; Yan, F.; Shi, M.; Si, C.; Chen, J. Early Detection of Jujube Shrinkage Disease by Multi-Source Data on Multi-Task Deep Network. Sensors 2025, 25, 6763. https://doi.org/10.3390/s25216763