1. Introduction

The rapid development of unmanned aerial vehicle (UAV) technology has promoted its application in various industries [1,2,3], but it has also led to incidents such as illegal flights and malicious misuse [4,5]. These incidents threaten not only personal privacy but also public safety, so technologies for detecting and monitoring drones are becoming increasingly important. Visual inspection based on object detection can recognize drones more intuitively and accurately by capturing and analyzing their visual features.

Object detection based on deep learning has been extensively researched. Common object detection frameworks include Faster Regions with Convolutional Neural Networks (Faster R-CNN) [6] and the You Only Look Once (YOLO) series [7]. These detectors typically rely on Non-Maximum Suppression (NMS) to eliminate redundant prediction boxes and obtain the final detection results. With the application of Transformers to image processing, detectors based on Transformer architectures have been proposed, such as the Detection Transformer (DETR) [8]. By employing an object query mechanism, DETR eliminates the need for NMS, and its end-to-end detection paradigm has attracted increasing research attention. However, current object detection algorithms still have shortcomings in drone detection. For example, repeated downsampling in deep networks tends to discard shallow details, leading to missed detections of small objects at medium to long distances. Additionally, colors and textures in complex backgrounds act as interference that further increases the risk of detection errors.
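For readers unfamiliar with the NMS step that DETR-style detectors remove, the following is a minimal sketch of the classic greedy algorithm in NumPy. The box format `(x1, y1, x2, y2)`, the function names, and the 0.5 IoU threshold are illustrative assumptions, not details taken from the detectors cited above.

```python
import numpy as np

def iou(box, boxes):
    """IoU between one box and an array of boxes, all in (x1, y1, x2, y2) format."""
    x1 = np.maximum(box[0], boxes[:, 0])
    y1 = np.maximum(box[1], boxes[:, 1])
    x2 = np.minimum(box[2], boxes[:, 2])
    y2 = np.minimum(box[3], boxes[:, 3])
    inter = np.clip(x2 - x1, 0, None) * np.clip(y2 - y1, 0, None)
    area = (box[2] - box[0]) * (box[3] - box[1])
    areas = (boxes[:, 2] - boxes[:, 0]) * (boxes[:, 3] - boxes[:, 1])
    return inter / (area + areas - inter)

def nms(boxes, scores, iou_thresh=0.5):
    """Greedy NMS: keep the highest-scoring box, drop boxes overlapping it, repeat."""
    order = np.argsort(scores)[::-1]          # indices sorted by descending score
    keep = []
    while order.size > 0:
        i = order[0]
        keep.append(int(i))
        rest = order[1:]
        order = rest[iou(boxes[i], boxes[rest]) <= iou_thresh]
    return keep

boxes = np.array([[0, 0, 10, 10], [1, 1, 11, 11], [20, 20, 30, 30]], dtype=float)
scores = np.array([0.9, 0.8, 0.7])
print(nms(boxes, scores))  # the near-duplicate second box is suppressed
```

The second box overlaps the first with an IoU of about 0.68, so only boxes 0 and 2 survive. This hand-tuned post-processing step is what DETR's one-to-one query matching makes unnecessary.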

Torralba [9] proposed improving object detection by analyzing scene context information, addressing the problem of small object detection. This work demonstrated that object detection relies not only on the appearance features of the target itself but also on the relationship between the target and the background. In small object detection in particular, target information is easily lost in deep networks, leaving insufficient semantic information. In this case, modeling the relationship between the target and its context provides richer discriminative cues and guides attention toward the correct regions.

However, traditional detection algorithms mainly rely on stacking small convolution kernels. Although this extracts local information efficiently, its ability to perceive contextual information is relatively weak, which hinders modeling the relationship between the target and the scene in drone detection tasks. When small targets lose information through downsampling, the relationship between the target and the scene becomes even more valuable for detection. It is therefore necessary to find network mechanisms that capture contextual information while avoiding additional computational cost.
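The weakness of stacked small kernels can be made concrete with the standard theoretical receptive-field recurrence. The short sketch below is only an illustration of that arithmetic. The function name and the `(kernel_size, stride, dilation)` layer encoding are our own choices, and it computes the theoretical receptive field, not the usually smaller effective receptive field.

```python
def receptive_field(layers):
    """Theoretical receptive field of a stack of conv layers.
    layers: list of (kernel_size, stride, dilation) tuples, input side first."""
    rf, jump = 1, 1
    for k, s, d in layers:
        k_eff = d * (k - 1) + 1        # spatial span of a (possibly dilated) kernel
        rf += (k_eff - 1) * jump       # growth is scaled by the accumulated stride
        jump *= s
    return rf

print(receptive_field([(3, 1, 1)] * 3))   # 7: three stacked 3x3 convs
print(receptive_field([(3, 1, 1)] * 10))  # 21: ten stacked 3x3 convs
print(receptive_field([(3, 1, 3)]))       # 7: one 3x3 conv with dilation 3
```

At stride 1, stacked 3 × 3 layers grow the receptive field by only two pixels per layer. A single dilated 3 × 3 kernel reaches the same span as three stacked layers with one third of the weights, which is the kind of cheap context enlargement the paragraph above calls for.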

In addition, mainstream detection algorithms usually model features in the spatial domain. When the background contains similar information or the target lacks distinctive texture, spatial-domain processing is prone to misjudgment. In this case, the contours and boundaries of the target are more reliable features. Compared with spatial-domain methods such as Convolutional Neural Networks (CNNs) or Transformer networks, which extract features from the entire image, frequency-domain processing therefore offers an advantage: it guides the model to perceive changes in the contours and boundaries of the target. Specifically, high-frequency components correspond to sharply changing boundaries, while low-frequency components correspond to the smoother regions inside the boundaries. This provides a new perspective for extracting features for small target detection.
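The claim that high frequencies concentrate at boundaries can be checked on a one-dimensional toy signal. The sketch below is a simplified illustration and not part of SFA-DETR. It builds a flat "object" with two sharp edges, keeps only the lowest frequency bins using a centered FFT mask, and treats the remainder as the high-frequency part. The cut-off `keep=4` is an arbitrary choice.

```python
import numpy as np

def split_low_high(x, keep):
    """Split a 1-D signal into low/high-frequency parts with a centered FFT mask.
    keep: number of frequency bins retained on each side of zero frequency."""
    n = x.shape[0]
    spec = np.fft.fftshift(np.fft.fft(x))          # zero frequency moved to index n // 2
    mask = np.zeros(n)
    mask[n // 2 - keep : n // 2 + keep + 1] = 1.0  # symmetric band, so the result is real
    low = np.fft.ifft(np.fft.ifftshift(spec * mask)).real
    return low, x - low

x = np.zeros(64)
x[24:40] = 1.0                      # flat object with boundaries at indices 24 and 40
low, high = split_low_high(x, keep=4)
print(abs(high[24]), abs(high[32]))  # boundary vs. object interior
```

The high-frequency residual is several times larger at the boundary sample than in the middle of the object. The interior is not exactly zero because truncating the spectrum causes ringing.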

Based on the above, this article proposes a new UAV detection algorithm, the Spatial-Frequency Aware Detection Transformer (SFA-DETR), which captures features of small targets through joint spatial- and frequency-domain processing to improve UAV detection performance in complex scenes. The main contributions of this study are as follows:

In terms of spatial-domain modeling, a new backbone network, IncepMix, is proposed, which combines the parallel multi-branch design of Inception with multi-receptive-field information. It improves the modeling of the target and its context by dynamically fusing information from different receptive fields, and it reduces computational cost through a channel compression strategy.

In terms of frequency-domain modeling, a Frequency-Guided Attention (FGA) Block is designed that uses frequency information in the image to steer the attention mechanism toward the boundary and contour information of the target, thereby improving localization accuracy.

We adopt an adaptive sparse attention mechanism [10] to replace the standard attention in the Attention-based Intra-scale Feature Interaction (AIFI), in order to highlight important semantics and suppress redundant interference.

3. Proposed Method

3.1. Overall Architecture

RT-DETR employs a CNN backbone for feature extraction, followed by a Transformer module at the end of the backbone that enables global information interaction. The Cross-scale Feature Fusion (CCFF) module integrates multi-level features from the backbone, and its output is fed into the decoder. The model achieves end-to-end detection through an object query mechanism. To accelerate training, an IoU-aware query selection strategy is incorporated, which introduces IoU constraints during training to guide the queries toward higher-quality candidate regions.
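The IoU-aware query selection idea can be illustrated with a small NumPy sketch. It reflects our reading of RT-DETR and is not its actual code. During training, the classification target of a matched prediction is its IoU with the ground truth instead of 1, so high scores come to indicate well-localized boxes. At selection time, the top-K encoder features by class score become the initial queries. The helper names are hypothetical, and the prediction-to-ground-truth matching (e.g., Hungarian matching) is assumed to be done already.

```python
import numpy as np

def paired_iou(a, b):
    """IoU between corresponding rows of a and b, boxes as (x1, y1, x2, y2)."""
    iw = np.clip(np.minimum(a[:, 2], b[:, 2]) - np.maximum(a[:, 0], b[:, 0]), 0, None)
    ih = np.clip(np.minimum(a[:, 3], b[:, 3]) - np.maximum(a[:, 1], b[:, 1]), 0, None)
    inter = iw * ih
    area_a = (a[:, 2] - a[:, 0]) * (a[:, 3] - a[:, 1])
    area_b = (b[:, 2] - b[:, 0]) * (b[:, 3] - b[:, 1])
    return inter / (area_a + area_b - inter)

def iou_aware_targets(pred_boxes, gt_boxes, gt_labels, num_classes):
    """Soft classification targets: the matched class gets the IoU instead of 1.
    Assumes row i of pred_boxes is already matched to row i of gt_boxes."""
    targets = np.zeros((pred_boxes.shape[0], num_classes))
    targets[np.arange(len(gt_labels)), gt_labels] = paired_iou(pred_boxes, gt_boxes)
    return targets

def select_queries(class_scores, k):
    """Indices of the k encoder features with the highest class score (initial queries)."""
    best = class_scores.max(axis=1)
    return np.argsort(-best)[:k]

pred = np.array([[0, 0, 10, 10], [0, 0, 10, 5]], dtype=float)
gt = np.array([[0, 0, 10, 10], [0, 0, 10, 10]], dtype=float)
targets = iou_aware_targets(pred, gt, np.array([0, 1]), num_classes=2)
scores = np.array([[0.1, 0.7], [0.9, 0.2], [0.3, 0.4], [0.05, 0.6]])
chosen = select_queries(scores, k=2)
```

The half-overlapping second prediction receives a target of 0.5 rather than 1. This soft target is what ties the score used for top-K selection to localization quality.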

To further enhance drone detection performance, this paper builds on RT-DETR and proposes a detection algorithm based on joint spatial–frequency perception: Spatial-Frequency Aware DETR (SFA-DETR). Its overall architecture is shown in Figure 1. The method combines the advantages of spatial-domain and frequency-domain processing to obtain richer perceptual information that guides the model toward key areas. For spatial-domain modeling, this paper proposes the IncepMix backbone network, which dynamically fuses multi-scale receptive field information so that the model can make more effective use of contextual cues, enhancing its detection ability. For frequency-domain modeling, a Frequency-Guided Attention block (FGA Block) is proposed that integrates shallow backbone features with the semantic features from CCFF. By extracting multi-level frequency information, it enhances the model's sensitivity to edge changes and thereby improves discrimination under background interference. Finally, the adaptive sparse attention mechanism replaces the standard attention mechanism in AIFI, highlighting key semantic information and suppressing redundant information.

3.2. IncepMix Network Design

To acquire richer receptive field information and enhance the model's detection capability, this paper proposes the IncepMix network, which adopts Inception's design philosophy of parallel multi-branch fusion. Specifically, for the S3 layer, a shallow feature layer that tends to capture local information such as detailed textures, ResNet [34] is adopted to fully preserve critical details. For the S4 and S5 layers, two substructures, IncepMix-A and IncepMix-B, are designed, respectively. By integrating information from different receptive fields, these substructures enhance the model's multi-scale perception and contextual understanding.

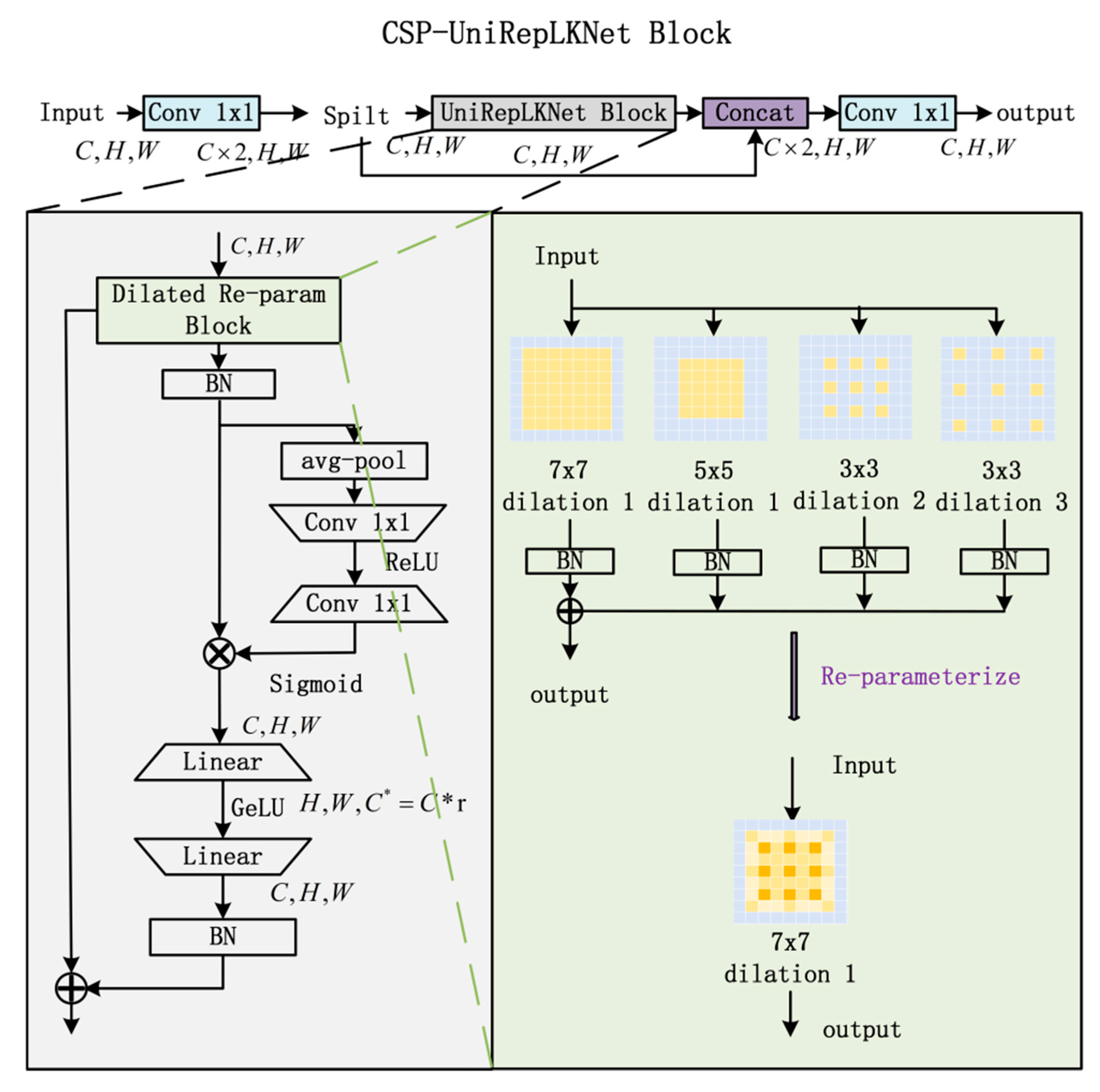

1. CSP-UniRepLKNet

The UniRepLKNet module is introduced to capture broader receptive field information. By incorporating the design concept of the Cross-Stage Partial Network (CSP), the CSP-UniRepLKNet block is proposed to improve stability and efficiency, as illustrated in Figure 2. UniRepLKNet employs a dilated reparameterization structure, which improves representational capacity by combining a large-kernel convolution in parallel with small-kernel dilated convolutions. Channel-wise attention and spatial feature aggregation are then achieved using a Squeeze-and-Excitation (SE) mechanism followed by a nonlinear transformation.
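The reparameterization idea relies on one property: a dilated small-kernel convolution equals a plain convolution with a zero-inserted kernel, and parallel linear branches can be summed into a single kernel. The single-channel NumPy sketch below demonstrates this equivalence. It is a simplified illustration under stated assumptions: one channel, odd square kernels, and no batch-normalization fusion, which the real UniRepLKNet block also performs. The kernel sizes (7 × 7 large, 3 × 3 with dilation 2) are arbitrary examples.

```python
import numpy as np

def conv2d_same(x, k, dilation=1):
    """Single-channel 2-D cross-correlation with zero 'same' padding (odd kernel sizes)."""
    kh, kw = k.shape
    eh, ew = dilation * (kh - 1) + 1, dilation * (kw - 1) + 1   # effective kernel size
    xp = np.pad(x, ((eh // 2, eh // 2), (ew // 2, ew // 2)))
    h, w = x.shape
    out = np.zeros((h, w))
    for i in range(kh):
        for j in range(kw):
            out += k[i, j] * xp[i * dilation : i * dilation + h, j * dilation : j * dilation + w]
    return out

def dilate_kernel(k, dilation):
    """Insert (dilation - 1) zeros between taps; a plain conv with this kernel equals the dilated conv."""
    kh, kw = k.shape
    out = np.zeros((dilation * (kh - 1) + 1, dilation * (kw - 1) + 1))
    out[::dilation, ::dilation] = k
    return out

def merge_branches(k_large, k_small, dilation):
    """Fold a parallel dilated small-kernel branch into the large kernel (square, odd sizes)."""
    kd = dilate_kernel(k_small, dilation)
    pad = (k_large.shape[0] - kd.shape[0]) // 2
    return k_large + np.pad(kd, pad)

rng = np.random.default_rng(0)
x = rng.standard_normal((16, 16))
k_large = rng.standard_normal((7, 7))
k_small = rng.standard_normal((3, 3))

# Training-time form: two parallel branches whose outputs are summed.
y_parallel = conv2d_same(x, k_large) + conv2d_same(x, k_small, dilation=2)
# Inference-time form: one 7x7 convolution with the merged kernel.
y_merged = conv2d_same(x, merge_branches(k_large, k_small, dilation=2))
print(np.allclose(y_parallel, y_merged))
```

Because the parallel branches collapse into one kernel after training, the extra receptive-field branches add no inference cost.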

2. IncepMix

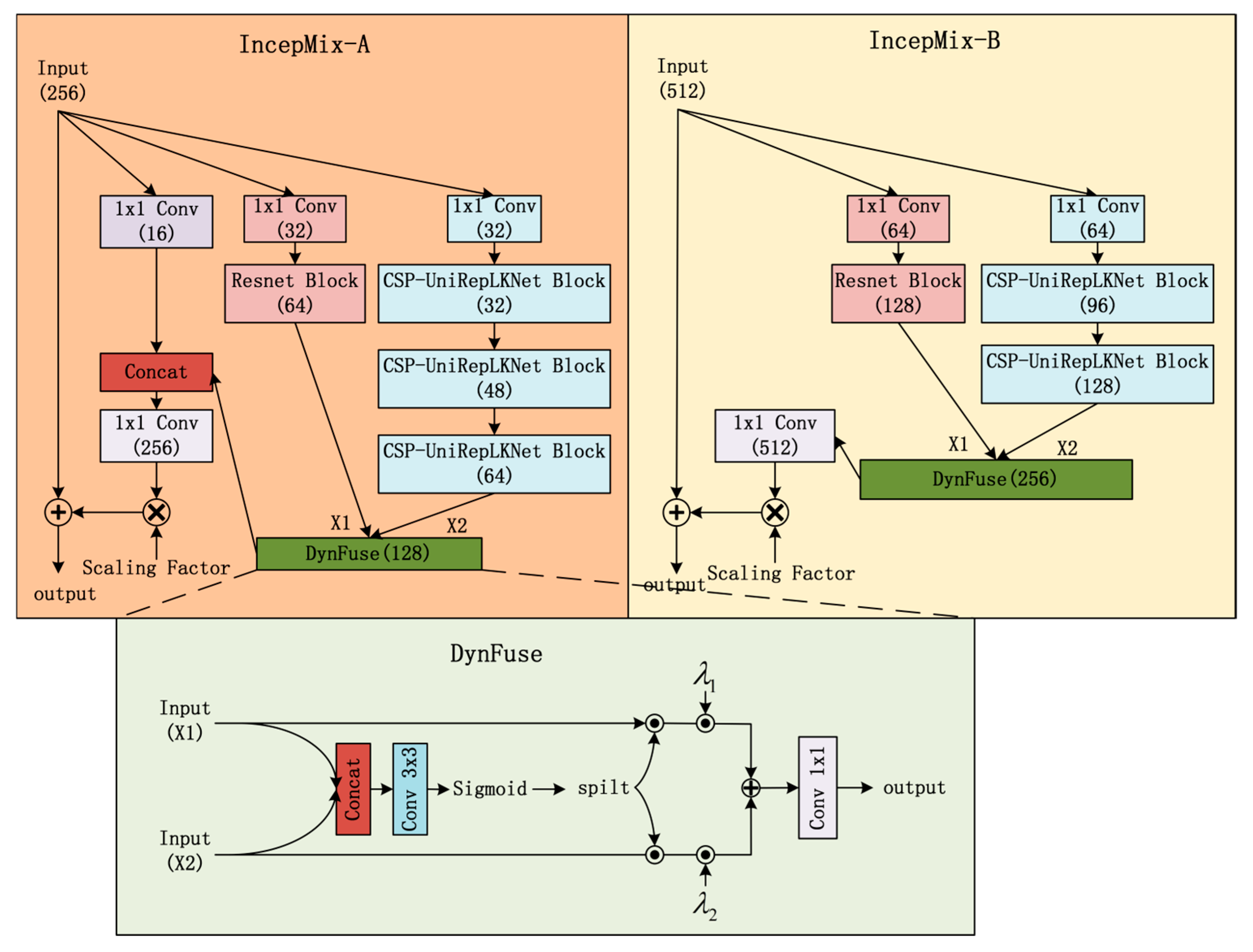

As shown in Figure 3, IncepMix uses CSP-UniRepLKNet to capture contextual information and ResNet to extract local features. The channel count of each branch is reduced to lower the computational cost, and a progressive channel expansion strategy is applied in the large receptive field branch to strengthen feature modeling layer by layer. The outputs of the two branches are fused dynamically by a DynFuse module, which performs a weighted fusion using attention-generated weights and trainable parameters, as expressed in Equation (1):

Here, the fusion coefficients are trainable weights, and ⊙ denotes element-wise multiplication. The fused features are scaled by a factor and then added to the input through a residual connection.
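Because Equation (1) is not reproduced in this text, the following is only a hypothetical NumPy sketch of a DynFuse-style fusion consistent with the description. An attention map is computed from both branches; the two branch outputs are weighted element-wise by the map and by trainable scalars; and the result is scaled and added to the input. The 1 × 1 attention convolution with a sigmoid, the complementary weighting `att` / `1 - att`, and the names `alpha`, `beta`, `gamma` are all our assumptions.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def dynfuse(x, f_ctx, f_loc, w_att, alpha, beta, gamma):
    """Hypothetical DynFuse sketch (not the paper's exact Equation (1)).
    x: block input; f_ctx: large-receptive-field branch; f_loc: local (ResNet) branch;
    all have shape (C, H, W). w_att: (C, 2C) weights of an assumed 1x1 attention conv.
    alpha, beta: assumed trainable branch weights; gamma: scaling factor before the residual."""
    stacked = np.concatenate([f_ctx, f_loc], axis=0)            # (2C, H, W)
    att = sigmoid(np.einsum('oc,chw->ohw', w_att, stacked))      # (C, H, W), values in (0, 1)
    fused = alpha * att * f_ctx + beta * (1.0 - att) * f_loc     # element-wise weighting
    return x + gamma * fused                                     # scaled residual connection

rng = np.random.default_rng(0)
c, h, w = 4, 8, 8
x, f_ctx, f_loc = rng.standard_normal((3, c, h, w))
w_att = rng.standard_normal((c, 2 * c)) * 0.1
y = dynfuse(x, f_ctx, f_loc, w_att, alpha=1.0, beta=1.0, gamma=0.5)
```

The complementary weights let each spatial position lean toward the contextual or the local branch. Initializing `gamma` small keeps the block close to an identity mapping early in training, a common choice for residual fusion.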

Based on the functional characteristics of different backbone stages, IncepMix-A and IncepMix-B are designed for the S4 and S5 layers, respectively. Because the S4 layer retains both spatial and semantic information, IncepMix-A adds a lightweight 1 × 1 convolutional branch to preserve the original feature structure and facilitate the transmission of fine-grained details. In contrast, because the S5 layer is primarily responsible for high-level semantic modeling, IncepMix-B employs a more compact channel expansion strategy to reduce computational redundancy and omits the 1 × 1 convolutional branch to focus exclusively on high-level semantics.

3.3. Design of Frequency-Guided Mechanism

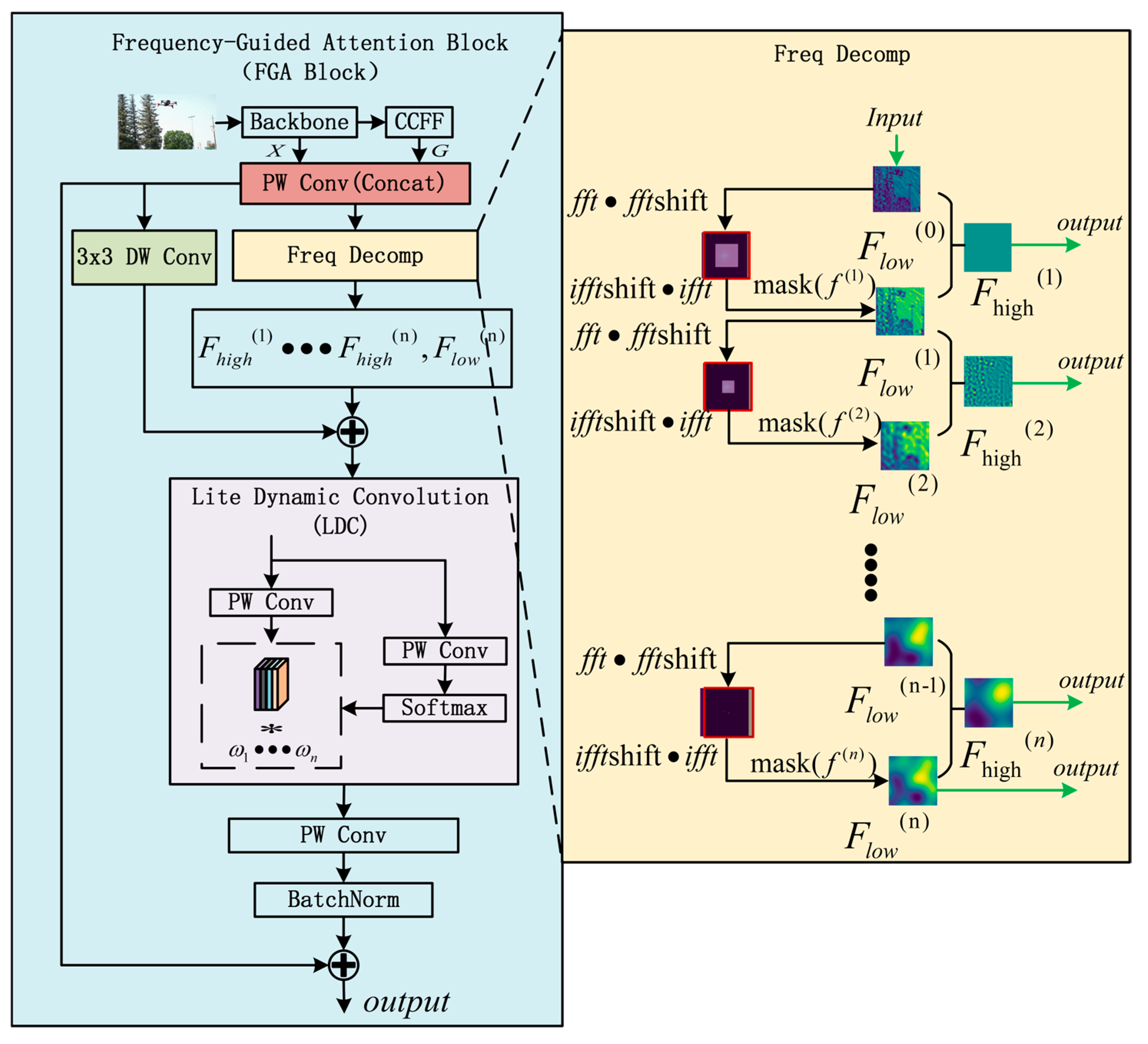

To address the challenges of distinguishing object–background boundaries and mitigating interference from similar background patterns, this paper proposes the Frequency-Guided Attention Block (FGA Block), a module that enhances localization precision through frequency-domain processing. As illustrated in Figure 4, the block integrates shallow features from the backbone network with features from the Cross-scale Feature Fusion (CCFF) module, preserving fine-grained details of small objects in deep layers while retaining the semantic richness of deep features.

The core of the method involves multi-level frequency information extraction via Fast Fourier Transform (FFT). Leveraging the non-trainable nature of FFT, the model maintains computational efficiency by avoiding additional gating mechanisms on frequency components. Since frequency representations lack spatial information, Depthwise Separable Convolution (DW Conv) is employed outside the frequency domain to capture spatial relationships. A lightweight channel attention-based dynamic convolution is subsequently applied to emphasize informative channels.

Extensive ablation studies in Section 4.4.2 validate the effectiveness of the architectural design. The detailed algorithm proceeds as follows:

1. Multi-level frequency information extraction

The FGA Block takes two feature maps as inputs, one from the backbone network and one from the CCFF module, with dimensions given by the batch size B, the spatial size, and the number of channels. These features are concatenated and integrated through a fusion operation to produce a single fused feature map.

A list of frequency parameters is defined, in which each parameter controls the mask size at one frequency level; larger parameter values produce smaller masks. Each mask preserves the central region of the shifted spectrum while suppressing the peripheral areas, enabling focused frequency-domain processing, as expressed in Equation (2):

The input feature map is first transformed into the frequency domain via the Fast Fourier Transform (FFT), with the low-frequency components shifted to the centre. The mask of each level is applied to extract the low-frequency information at that level. The high-frequency information at this level is obtained by subtracting the current level's low-frequency components from the features of the previous level. Finally, the multi-level high-frequency information and the low-frequency information from the final level are aggregated to produce the output representation, as expressed in Equation (3):
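The multi-level decomposition can be sketched in NumPy as follows. The sketch rests on our assumptions about Equations (2) and (3), which are not reproduced here. Each mask keeps a centered box of roughly (H/α) × (W/α) spectrum bins, so a larger α gives a smaller mask. Each level's high-frequency band is the previous level's output minus the current low-pass result, with the input as level zero. The function names and the list `[2, 4, 8]` are illustrative only.

```python
import numpy as np

def center_mask(h, w, alpha):
    """Hypothetical mask: keep a centered box of roughly (h/alpha) x (w/alpha) bins.
    Larger alpha -> smaller box -> lower cut-off frequency."""
    mask = np.zeros((h, w))
    r0, r1 = int(round(h / 2 - h / (2 * alpha))), int(round(h / 2 + h / (2 * alpha)))
    c0, c1 = int(round(w / 2 - w / (2 * alpha))), int(round(w / 2 + w / (2 * alpha)))
    mask[r0:r1, c0:c1] = 1.0
    return mask

def multilevel_frequency_split(x, freq_list):
    """x: real feature map of shape (..., H, W); freq_list: increasing alphas.
    Returns one high-frequency map per level plus the final low-frequency map."""
    h, w = x.shape[-2:]
    spec = np.fft.fftshift(np.fft.fft2(x), axes=(-2, -1))   # low frequencies at the centre
    prev, highs = x, []
    for alpha in freq_list:
        shifted_back = np.fft.ifftshift(spec * center_mask(h, w, alpha), axes=(-2, -1))
        low = np.fft.ifft2(shifted_back).real                # low-pass result at this level
        highs.append(prev - low)                             # band between previous and current level
        prev = low
    return highs, prev

rng = np.random.default_rng(0)
feat = rng.standard_normal((2, 8, 32, 32))                   # (B, C, H, W)
highs, low = multilevel_frequency_split(feat, [2, 4, 8])
```

By construction, the bands telescope: the sum of all high-frequency maps plus the final low-frequency map reproduces the input exactly, so no information is discarded before aggregation (for example, by concatenation). The FFT itself has no trainable parameters, which matches the efficiency argument made earlier in this section.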

2. Spatial information enhancement and feature aggregation

Depthwise separable convolution is applied to the feature map to introduce spatial information, as shown in Formula (4):

The frequency-domain features are then fused with this spatial information, as shown in Formula (5):
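Depthwise separable convolution factorizes a standard convolution into a per-channel spatial filter followed by a 1 × 1 channel-mixing step. The NumPy sketch below shows both steps and the resulting parameter savings. It is a generic illustration: the 3 × 3 kernel, the channel counts, and the function names are our choices, and the fusion of Formula (5) is not modeled.

```python
import numpy as np

def depthwise_conv3x3(x, k):
    """Depthwise 3x3 conv: channel c of x (C, H, W) is filtered only by k[c] (zero padding 1)."""
    c, h, w = x.shape
    xp = np.pad(x, ((0, 0), (1, 1), (1, 1)))
    out = np.zeros((c, h, w))
    for i in range(3):
        for j in range(3):
            out += k[:, i, j][:, None, None] * xp[:, i:i + h, j:j + w]
    return out

def pointwise_conv(x, w):
    """1x1 conv mixing channels: w has shape (C_out, C_in)."""
    return np.einsum('oc,chw->ohw', w, x)

def separable_params(c_in, c_out, k=3):
    return k * k * c_in + c_in * c_out   # depthwise + pointwise weights (biases ignored)

def standard_params(c_in, c_out, k=3):
    return k * k * c_in * c_out

rng = np.random.default_rng(0)
x = rng.standard_normal((8, 16, 16))
y = pointwise_conv(depthwise_conv3x3(x, rng.standard_normal((8, 3, 3))),
                   rng.standard_normal((4, 8)))
print(y.shape, separable_params(64, 64), standard_params(64, 64))
```

For 64 input and output channels, the separable form needs 4,672 weights instead of 36,864. This is why it is a cheap way to reinject spatial structure next to the frequency branch.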

3. Lightweight Dynamic Convolution (LDC)

Lightweight dynamic convolution with a channel-wise weighting mechanism is applied to the aggregated features. First, point-wise convolution (PW Conv) compresses the number of channels; one branch generates feature responses, and the other generates channel-wise weights using softmax. In this way, the more important channel information can be selected at each spatial position, suppressing redundant features and achieving dynamic channel weighting, as shown in Formula (6). Finally, the output is connected with the earlier features to maintain the flow of the original information.

Here, the softmax weight denotes the attention weight of the i-th channel at a given spatial position, and the feature response denotes the value of the i-th channel at that position.
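A minimal NumPy sketch of this channel-weighting scheme follows, assuming details that Formula (6) may define differently. Two point-wise convolutions compress C channels to C_r; a softmax over the channel axis at each position produces the weights; and the weights multiply the feature responses element-wise. A further hypothetical point-wise convolution then restores C channels so the result can be added back to the input. Residual addition is one possible reading of "connected with the earlier features", and all names here are illustrative.

```python
import numpy as np

def softmax(z, axis):
    z = z - z.max(axis=axis, keepdims=True)   # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def pw_conv(x, w):
    """Point-wise (1x1) convolution: w has shape (C_out, C_in), x has shape (C_in, H, W)."""
    return np.einsum('oc,chw->ohw', w, x)

def lightweight_dynamic_conv(x, w_feat, w_att, w_out):
    """Hypothetical LDC sketch (not the paper's exact Formula (6)).
    x: (C, H, W); w_feat, w_att: (C_r, C) compress channels; w_out: (C, C_r) restores them."""
    feat = pw_conv(x, w_feat)                 # branch 1: feature responses, (C_r, H, W)
    att = softmax(pw_conv(x, w_att), axis=0)  # branch 2: softmax over channels at each position
    weighted = att * feat                     # dynamic per-position channel weighting
    return x + pw_conv(weighted, w_out)       # restore channels and keep the original information

rng = np.random.default_rng(0)
c, cr = 8, 4
x = rng.standard_normal((c, 16, 16))
w_feat, w_att = rng.standard_normal((2, cr, c))
w_out = rng.standard_normal((c, cr))
y = lightweight_dynamic_conv(x, w_feat, w_att, w_out)
```

Because the softmax weights at each position sum to one, channels compete for emphasis locally, which is how redundant channels get suppressed position by position.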

3.4. Adaptive Sparse Attention Mechanism

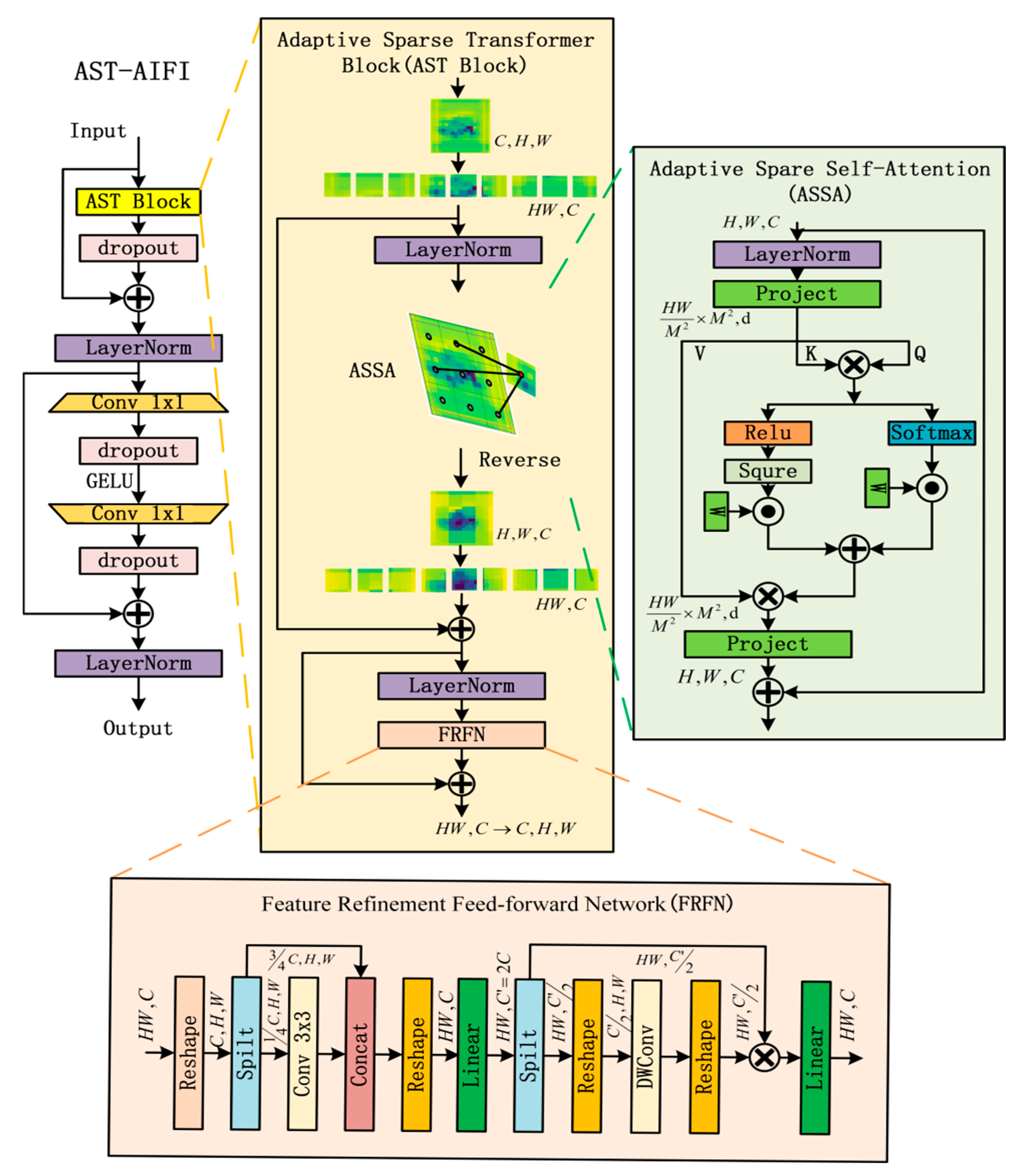

AIFI employs the standard attention mechanism to enable information interaction between features. However, in UAV detection tasks, interactions among all tokens may introduce redundant information from non-critical regions, diluting the response of key areas and thus degrading detection accuracy. To mitigate this issue, this paper replaces the standard attention mechanism in AIFI with an adaptive sparse attention mechanism and designs AST-AIFI, whose structure is illustrated in Figure 5. The input feature map is processed by Adaptive Sparse Self-Attention (ASSA) to capture global contextual relationships and highlight critical spatial information. The Feature Refinement Feed-forward Network (FRFN) then reshapes the features into a two-dimensional feature map for convolutional operations, extracting channel-wise information. Finally, a feed-forward network is applied to further enhance the feature representation. The computational process of ASSA is as follows:

The input feature map is reshaped into a sequence of tokens, which is then divided into non-overlapping windows of a fixed size. For each window, the feature representation is obtained, from which the query matrix Q, key matrix K, and value matrix V are generated. The attention is computed as:

$$A = f\left(\frac{QK^{\top}}{\sqrt{d}} + B\right)V \tag{7}$$

where A denotes the final attention output, B represents a learnable relative position bias, f(·) refers to the scoring function, and d is the dimension of the query and key vectors.

Feature interaction is performed via Sparse Self-Attention (SSA) and Dense Self-Attention (DSA), as shown in Equations (8) and (9), respectively. SSA suppresses negative values through squared-ReLU sparsification, filtering out query–key pairs with low matching scores while highlighting salient correlations. To prevent potential information loss, DSA enables global interaction among all elements via softmax, emphasizing critical information while suppressing less important components.

$$\mathrm{SSA} = \mathrm{ReLU}^{2}\left(\frac{QK^{\top}}{\sqrt{d}} + B\right) \tag{8}$$

$$\mathrm{DSA} = \mathrm{SoftMax}\left(\frac{QK^{\top}}{\sqrt{d}} + B\right) \tag{9}$$

The outputs of SSA and DSA are fused using learnable weights, as defined in Equation (10):

$$A = \left(w_1 \odot \mathrm{SSA} + w_2 \odot \mathrm{DSA}\right)V, \qquad w_n = \frac{e^{\alpha_n}}{\sum_{i=1}^{2} e^{\alpha_i}},\; n \in \{1, 2\} \tag{10}$$

Here, w_1 and w_2 are normalized weights, and α_1 and α_2 are learnable parameters.
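The SSA/DSA computation described in this section can be sketched for one window and one attention head as follows. Several simplifications are our assumptions: random projection matrices stand in for learned ones; there is a single head; the relative position bias is set to zero; and the two branch weights come from a softmax over two learnable logits. The function names are illustrative and are not taken from the ASSA reference implementation.

```python
import numpy as np

def softmax(z, axis=-1):
    z = z - z.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def window_partition(x, m):
    """(H, W, C) -> (num_windows, m*m, C) non-overlapping windows; H, W divisible by m."""
    h, w, c = x.shape
    x = x.reshape(h // m, m, w // m, m, c).transpose(0, 2, 1, 3, 4)
    return x.reshape(-1, m * m, c)

def adaptive_sparse_attention(q, k, v, bias, alpha):
    """Single-window, single-head sketch. q, k, v: (N, d); bias: (N, N); alpha: (2,) logits."""
    d = q.shape[-1]
    scores = q @ k.T / np.sqrt(d) + bias       # (N, N) query-key matching scores
    ssa = np.maximum(scores, 0.0) ** 2         # sparse branch: squared ReLU zeroes weak pairs
    dsa = softmax(scores, axis=-1)             # dense branch: every pair keeps some weight
    w = softmax(alpha)                         # normalized fusion weights, sum to 1
    return (w[0] * ssa + w[1] * dsa) @ v, ssa, w

rng = np.random.default_rng(0)
x = rng.standard_normal((8, 8, 16))                      # (H, W, C) feature map
windows = window_partition(x, 4)                         # (4 windows, 16 tokens, 16 channels)
wq, wk, wv = rng.standard_normal((3, 16, 16)) * 0.1
t = windows[0]
out, ssa, w = adaptive_sparse_attention(t @ wq, t @ wk, t @ wv, np.zeros((16, 16)), np.zeros(2))
```

With equal logits, both branches contribute half of the result. During training, the learnable logits let the model shift toward the sparse branch when background tokens add noise, or toward the dense branch when wider interaction helps.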

5. Conclusions

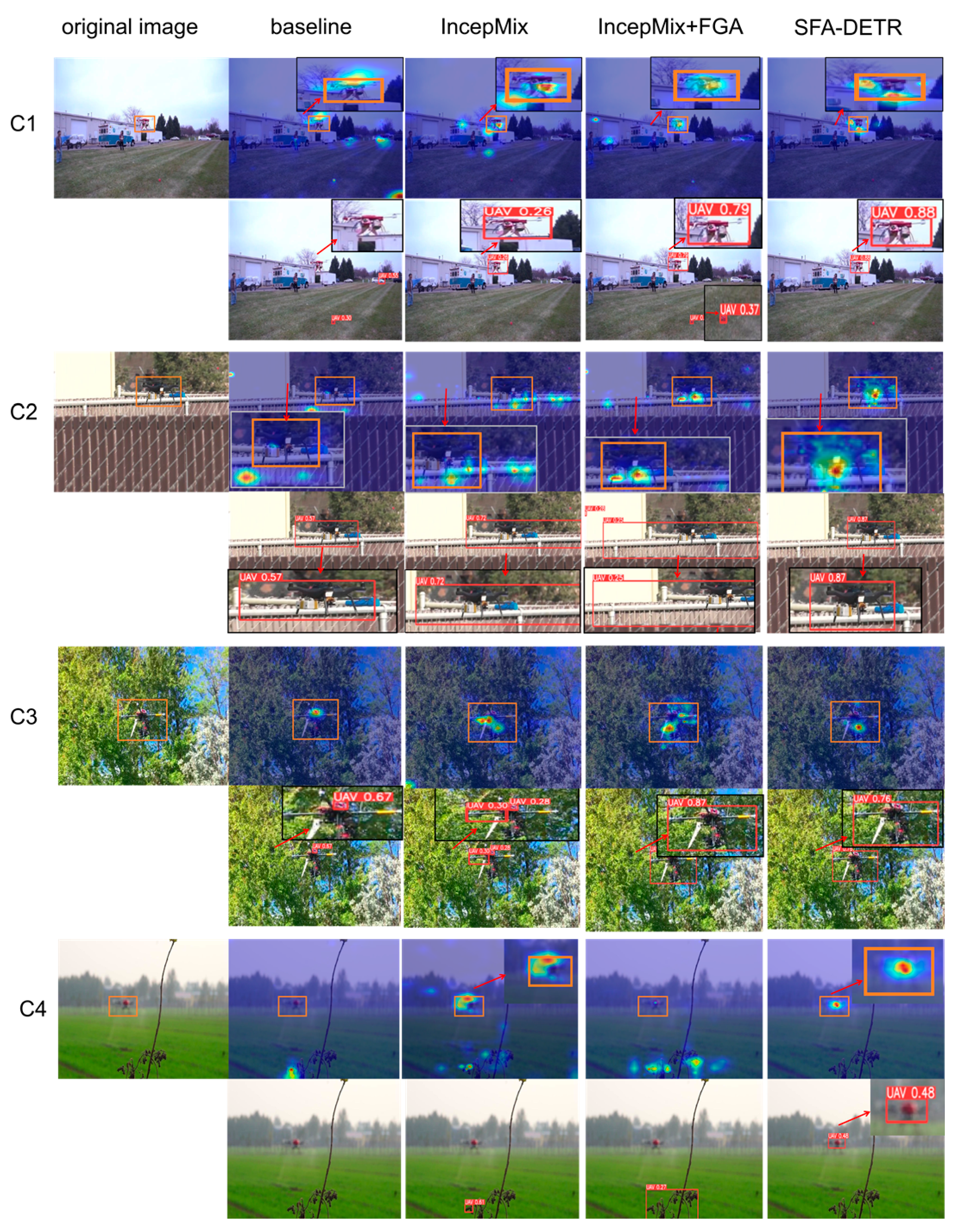

To address the challenges of detecting small UAV targets susceptible to background interference in complex environments, this paper proposes SFA-DETR, a detection algorithm based on joint spatial–frequency-domain perception. Built upon the RT-DETR architecture, the method enhances model perception from both spatial and frequency domains.

For spatial modeling, the IncepMix backbone network is designed to dynamically integrate multi-scale receptive field information, effectively improving contextual understanding while reducing computational overhead through a channel compression strategy. For frequency-domain modeling, the Frequency-Guided Attention (FGA) Block is introduced, which incorporates both backbone and CCFF features to capture local and global semantics. By extracting multi-level high- and low-frequency information and applying lightweight dynamic convolution based on channel attention, the model more accurately focuses on target boundaries and contours, enhancing detection performance in cluttered backgrounds. Additionally, an adaptive sparse attention mechanism is integrated into AIFI to emphasize critical semantics and suppress redundant information, further improving detection accuracy.

Experimental results validate that the proposed improvements work synergistically, boosting the model’s detection capability in challenging environments. However, the proposed method still has certain limitations. When the background contains dense high-frequency patterns similar to the target, distinguishing object boundaries becomes difficult, and the adaptive sparse attention may incorrectly dilute crucial edge information, reducing detection performance. When the texture or boundary information of the target is severely degraded, the localization accuracy may also be compromised. Future work will focus on enhancing the model’s boundary perception ability and strengthening the collaboration between spatial and frequency modeling to improve both detection accuracy and overall robustness.