FFformer: A Lightweight Feature Filter Transformer for Multi-Degraded Image Enhancement with a Novel Dataset †

Abstract

1. Introduction

- We propose the Robust Multi-Type Degradation (RMTD) dataset, the first large-scale, comprehensive multi-degradation benchmark that includes both synthetic and real degraded images, providing a valuable resource for complex-scene image enhancement research.

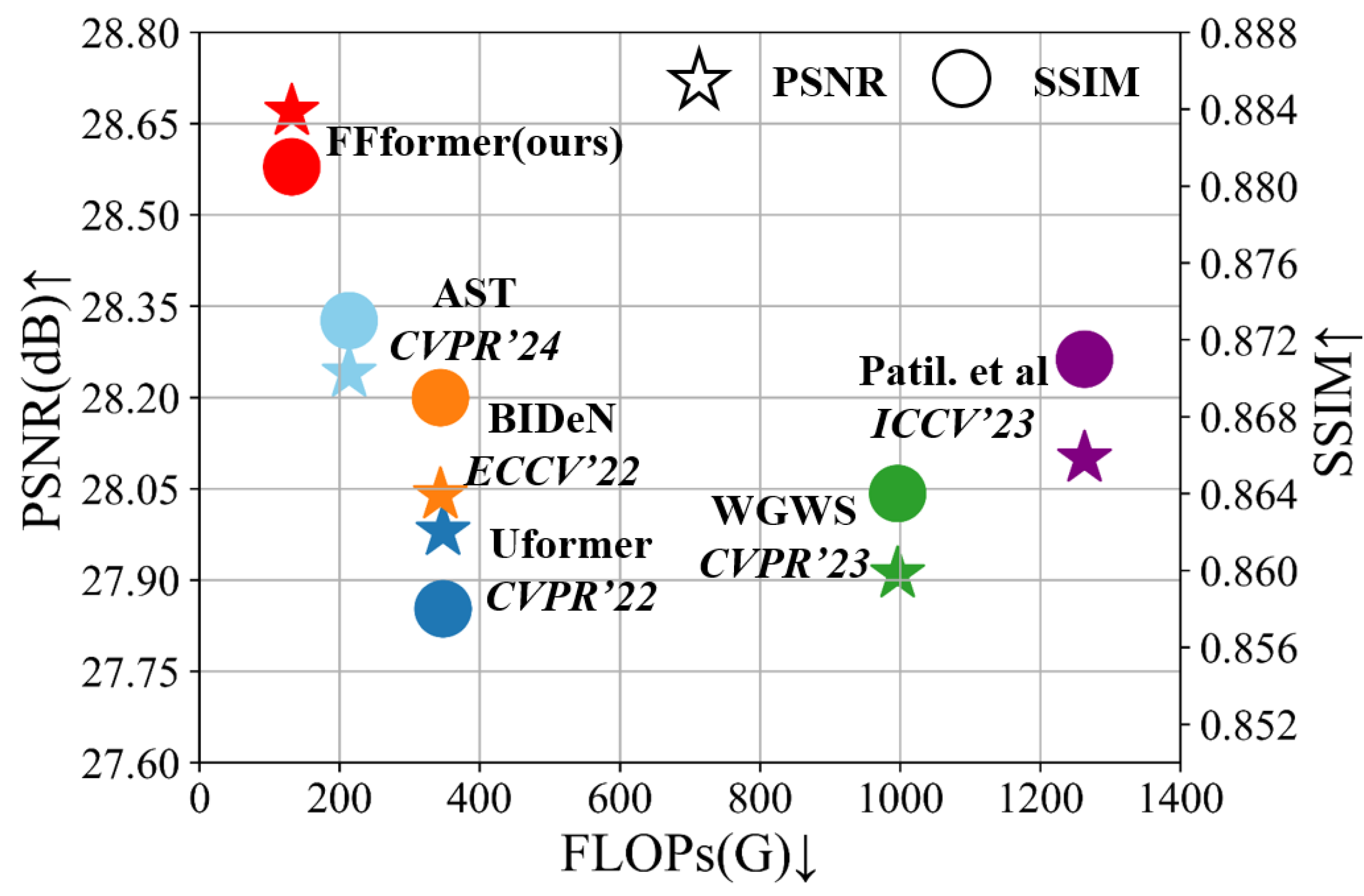

- We introduce FFformer, an efficient image enhancement model based on the Vision Transformer (ViT). It filters out redundant features arising from compound degradations through its Gaussian Filter Self-Attention (GFSA) mechanism and its Feature Shrinkage Feed-Forward Network (FSFN).

- A Scale Conversion Module (SCM) and a Feature Aggregation Module (FAM) are introduced in the image encoder to fully exploit features from layers at different scales. In addition, a Feature Enhancement Block (FEB) is integrated into the residual structure of the decoder to reinforce clear background features.

- Extensive experiments on RMTD and other synthetic and real-image datasets show that FFformer achieves leading performance across a range of complex scenes, supporting the effectiveness of the proposed dataset and method for complex-scene image enhancement.

2. Related Work

2.1. Image Restoration Datasets

2.2. Image Restoration Methods

2.2.1. Specific Degraded Image Restoration

2.2.2. General Degraded Image Restoration

2.3. ViT in Image Restoration

2.4. Feature Enhancement in Multi-Modal and Multi-Scale Learning

3. The Robust Multi-Type Degradation Dataset

3.1. Dataset Construction

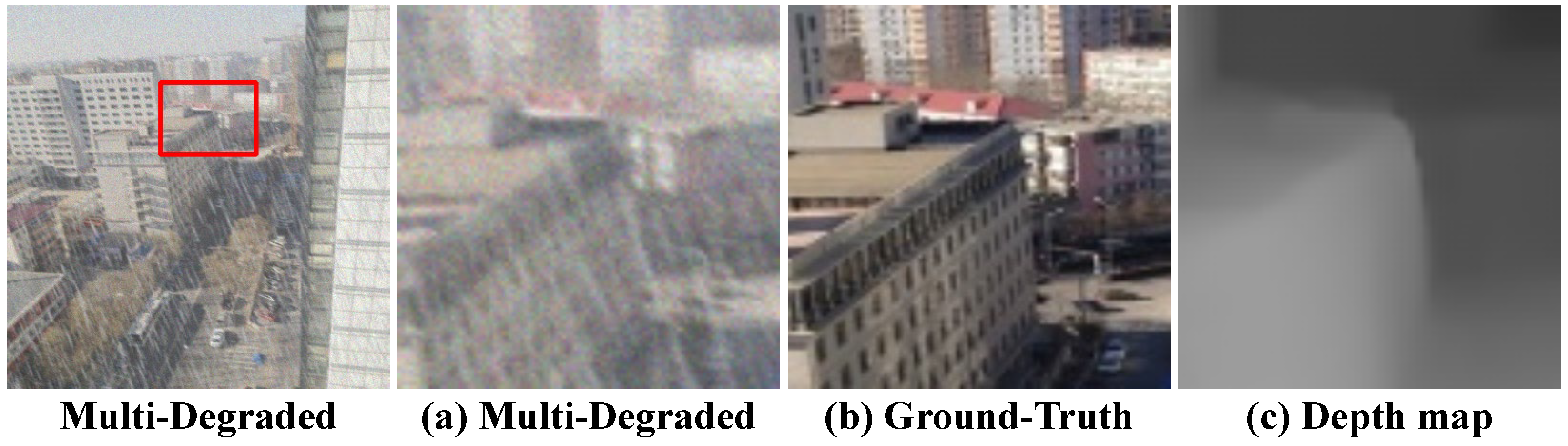

3.1.1. Synthetic Multi-Degraded Image Generation

3.1.2. Haze Simulation

3.1.3. Rain Simulation

3.1.4. Blur Simulation

3.1.5. Noise Simulation

3.1.6. Collection of Real-World Degraded Images

3.1.7. Object Detection Annotations

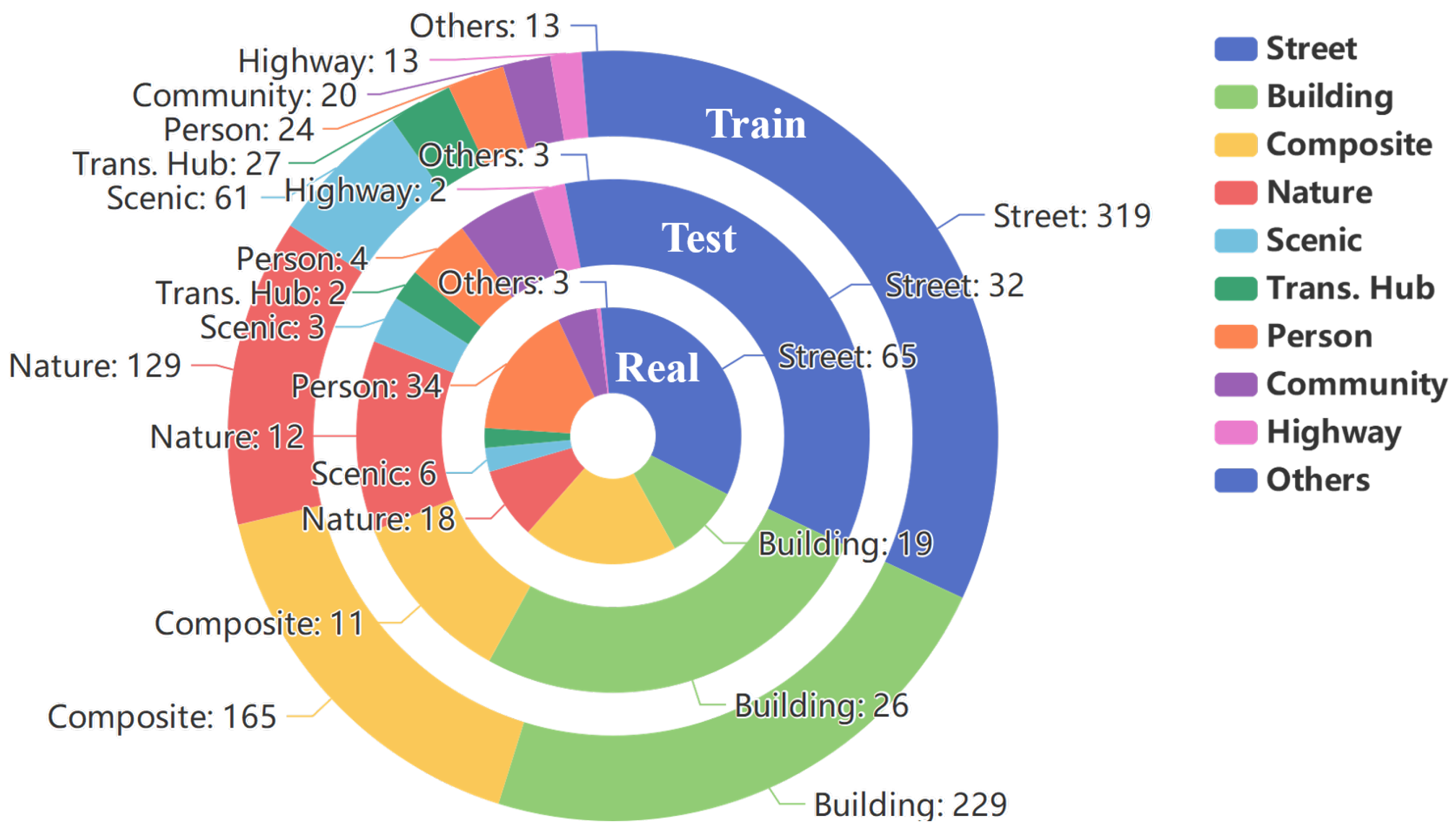

3.2. Dataset Statistics and Analysis

3.2.1. Scene Distribution

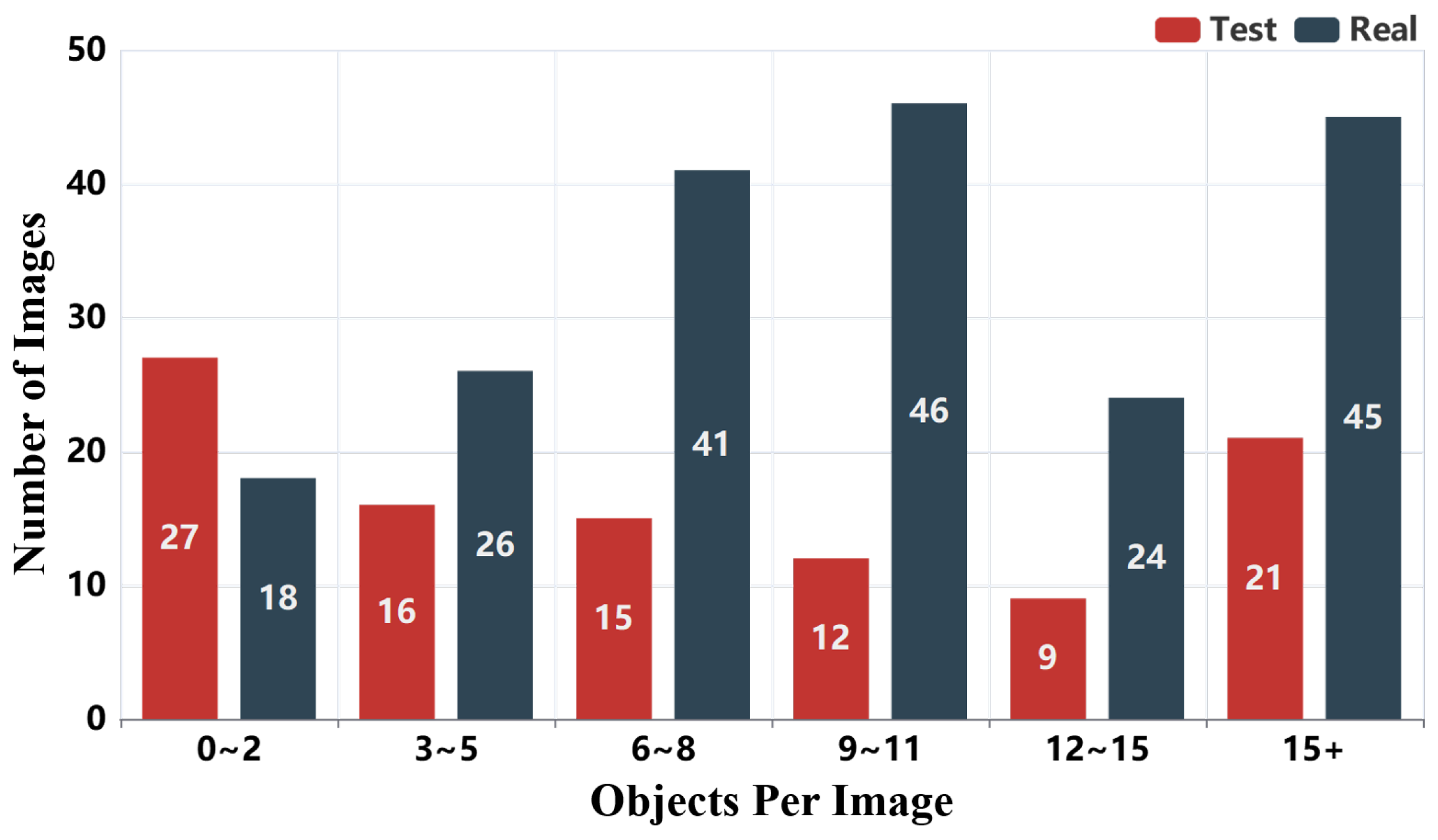

3.2.2. Object Annotation Statistics

3.2.3. Summary

4. Method

4.1. Overall Pipeline

4.2. Gaussian Filter Self-Attention

4.3. Feature Shrinkage Feed-Forward Network

- Sparsity Promotion: The soft-thresholding function sets to zero every feature element whose absolute value is below the threshold. This promotes sparsity in the feature representation, filtering out the many weak or non-significant activations that are likely to be noise or less informative components.

- Noise Reduction: In signal processing, soft-thresholding is the proximal operator of the L1 norm, and soft-thresholding estimators are known to be near-optimal (in the minimax sense) for recovering sparse signals corrupted by additive white Gaussian noise, as in classical wavelet shrinkage. Applying this principle to feature maps, the FSFN module acts as an adaptive feature denoiser: it shrinks the magnitude of every feature by the threshold, pushing insignificant ones (presumed noise) to exactly zero while retaining the significant ones (presumed signal).
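The two properties above can be checked numerically. The NumPy sketch below is illustrative only and is not the FFformer FSFN code. It applies element-wise soft-thresholding, S_τ(x) = sign(x)·max(|x| − τ, 0). It then confirms by brute-force search that this closed form minimises ½(z − x)² + τ|z|, which is the proximal operator of the L1 norm. Finally, it shows the operator lowering the error on a toy sparse signal in white Gaussian noise. The threshold τ = 0.5, the "universal" threshold σ√(2 ln n), and the toy signal are assumptions chosen for the demonstration. How FFformer sets its threshold is not specified in this section.

```python
import numpy as np


def soft_threshold(x: np.ndarray, tau: float) -> np.ndarray:
    """Element-wise soft-thresholding: sign(x) * max(|x| - tau, 0)."""
    return np.sign(x) * np.maximum(np.abs(x) - tau, 0.0)


def prox_l1_bruteforce(x: float, tau: float) -> float:
    """Minimise 0.5 * (z - x)**2 + tau * |z| by exhaustive search over a fine grid."""
    z = np.linspace(-5.0, 5.0, 100_001)
    objective = 0.5 * (z - x) ** 2 + tau * np.abs(z)
    return float(z[np.argmin(objective)])


# 1) Sparsity: entries with |x| <= tau become zero; larger ones shrink toward zero by tau.
features = np.array([-2.0, -0.3, 0.1, 0.4, 1.5])
sparse_features = soft_threshold(features, tau=0.5)
print(sparse_features)

# 2) Proximal operator of tau * |z|: the closed form matches the brute-force minimiser.
for x0 in (-3.0, -0.2, 0.0, 0.7, 2.5):
    closed_form = float(soft_threshold(np.array(x0), 0.5))
    assert abs(prox_l1_bruteforce(x0, 0.5) - closed_form) < 1e-3

# 3) Denoising: a sparse signal corrupted by additive white Gaussian noise.
rng = np.random.default_rng(0)
n, sigma = 1000, 1.0
clean = np.zeros(n)
clean[rng.choice(n, size=20, replace=False)] = 10.0
noisy = clean + sigma * rng.standard_normal(n)
tau_universal = sigma * np.sqrt(2.0 * np.log(n))  # Donoho-Johnstone "universal" threshold
denoised = soft_threshold(noisy, tau_universal)

mse_noisy = float(np.mean((noisy - clean) ** 2))
mse_denoised = float(np.mean((denoised - clean) ** 2))
print(f"MSE noisy: {mse_noisy:.3f}  MSE soft-thresholded: {mse_denoised:.3f}")
```

Nearly all pure-noise entries are zeroed, while the few large entries survive, each reduced by τ. That bias is the price of the sparsity. In a feature filter such as FSFN, τ would plausibly be learned or predicted from the features rather than fixed as it is here; that detail is an assumption.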

4.4. Scale Conversion Module and Feature Aggregation Module

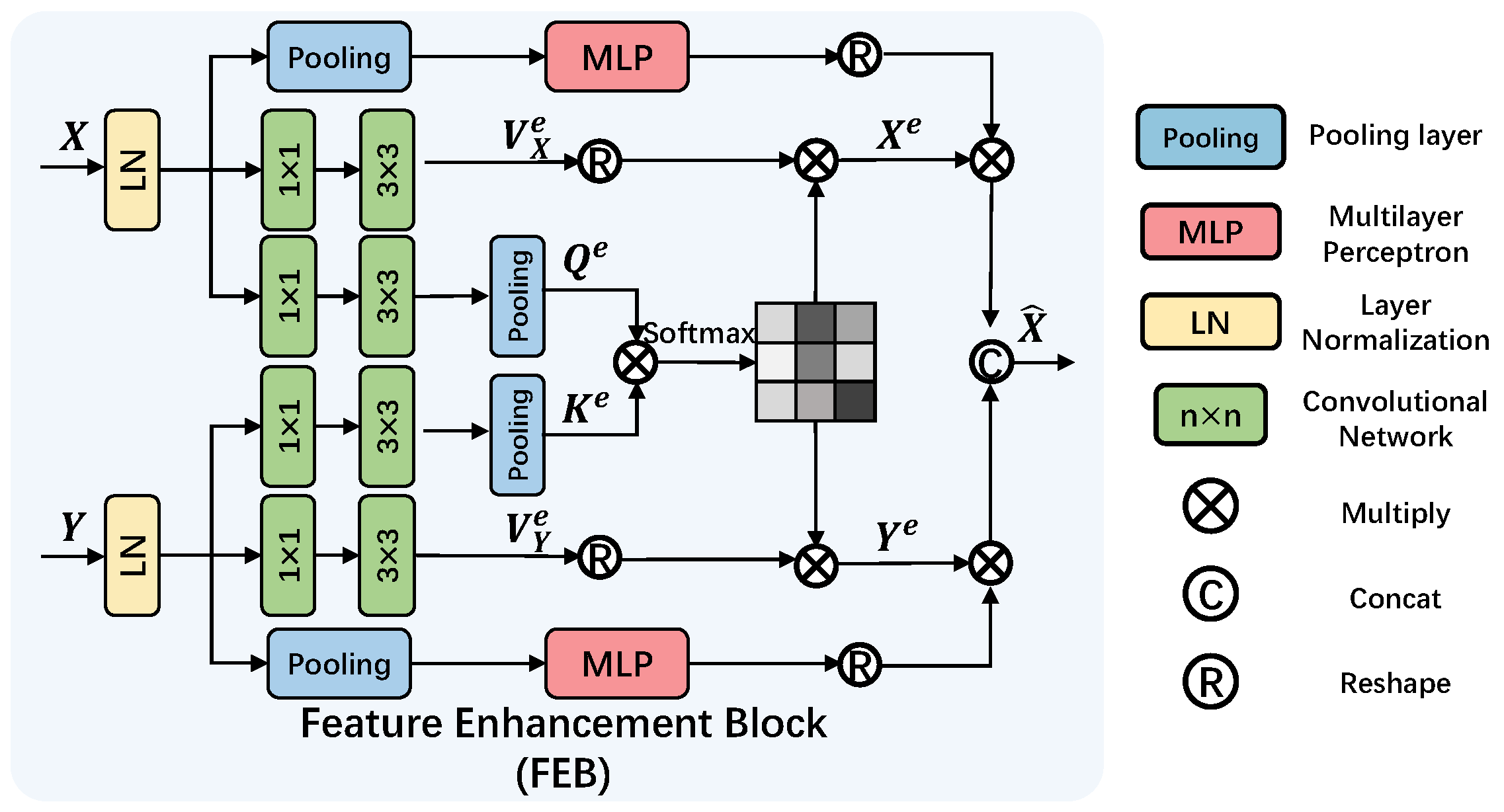

4.5. Feature Enhancement Block

5. Experiments and Analysis

5.1. Datasets

5.2. Implementation Details

5.3. Evaluation Metrics

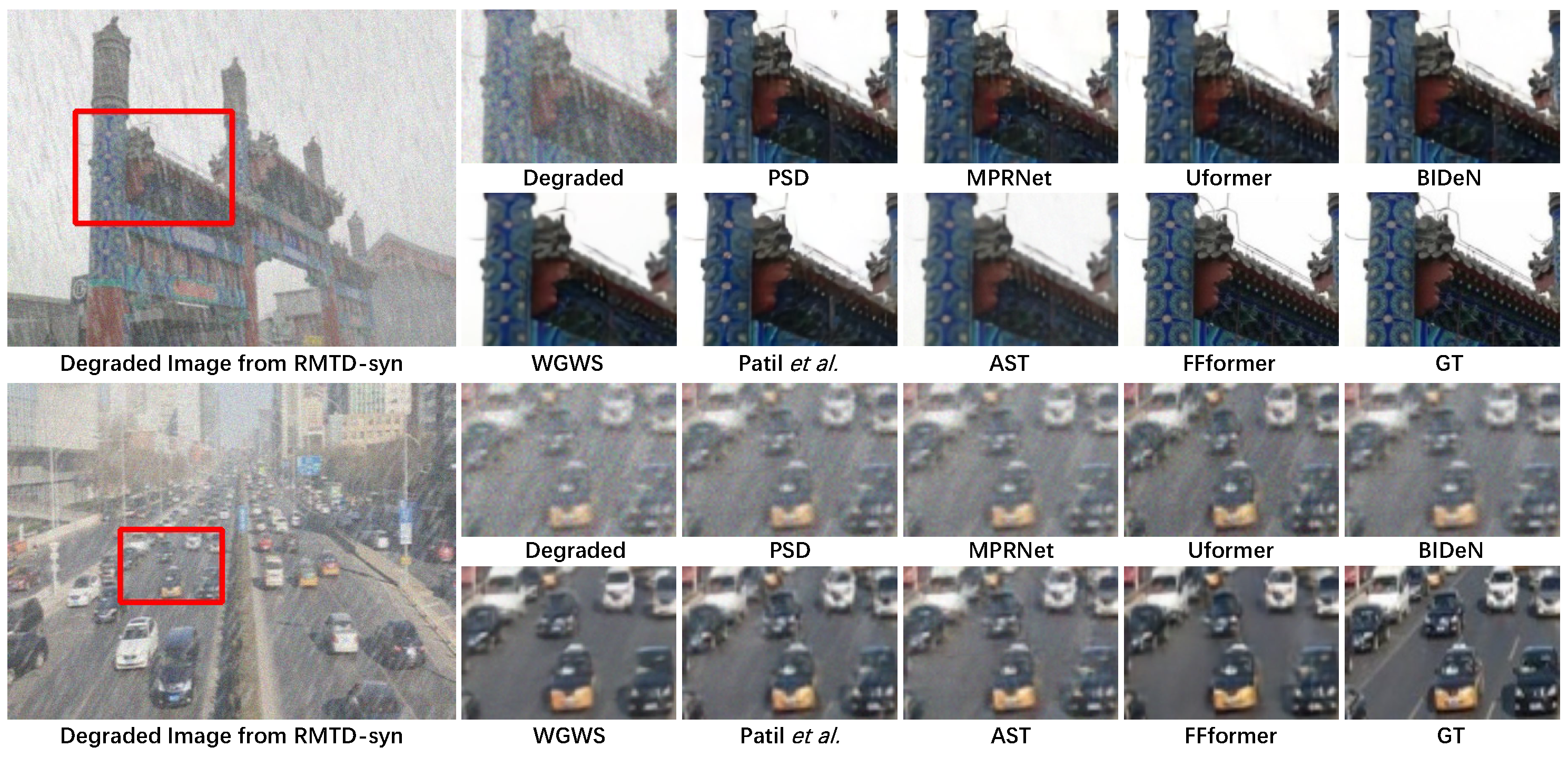

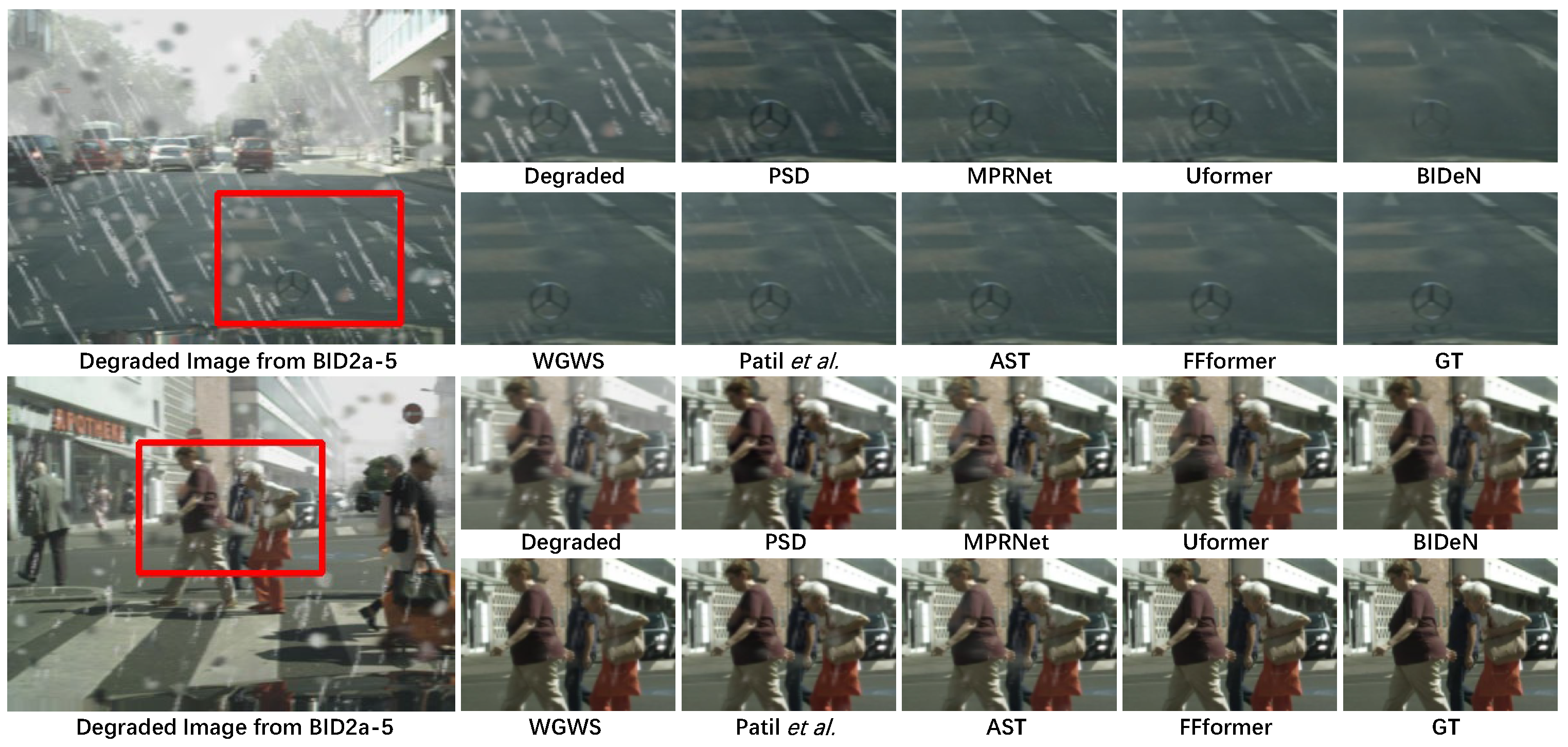

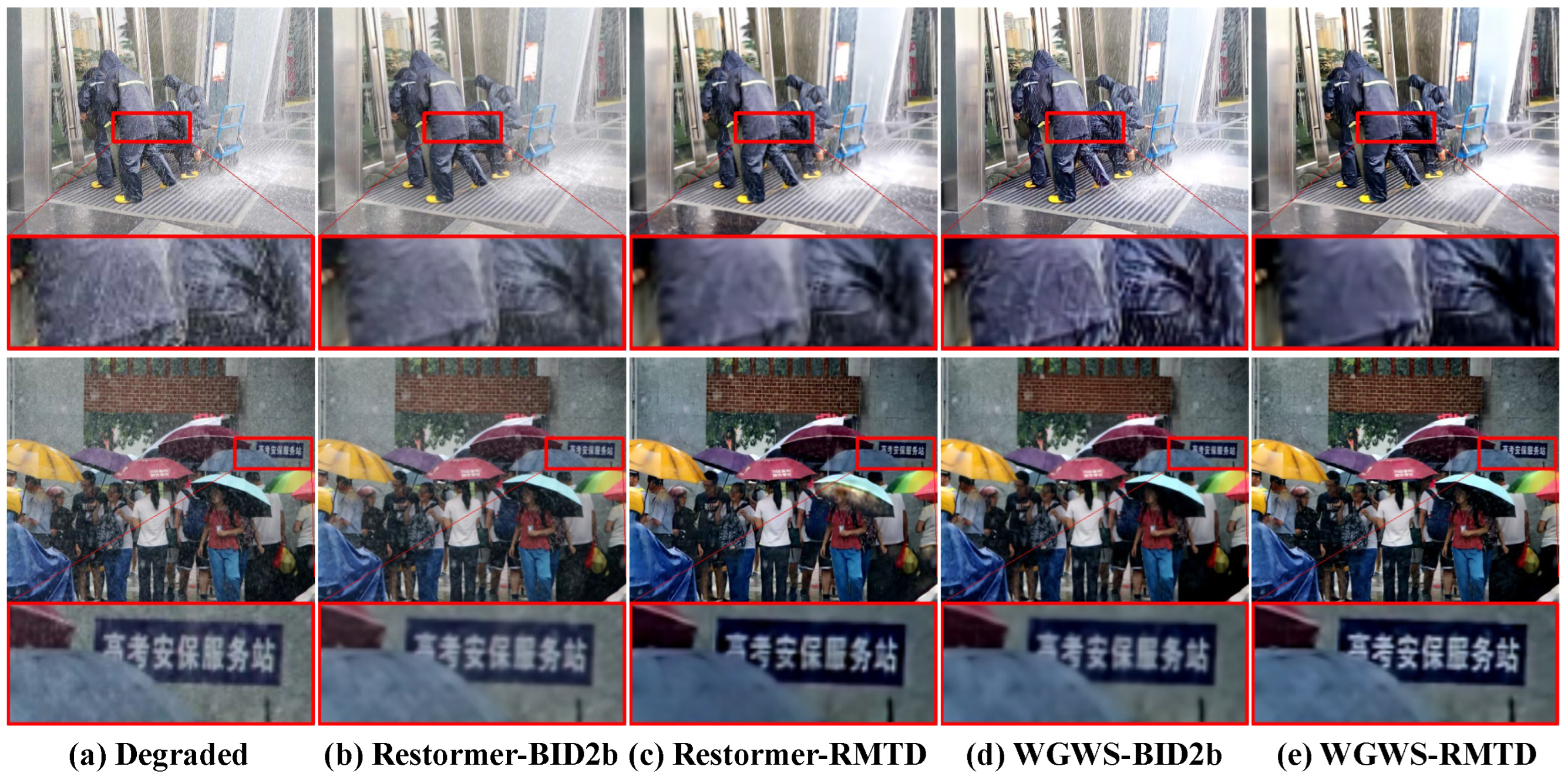

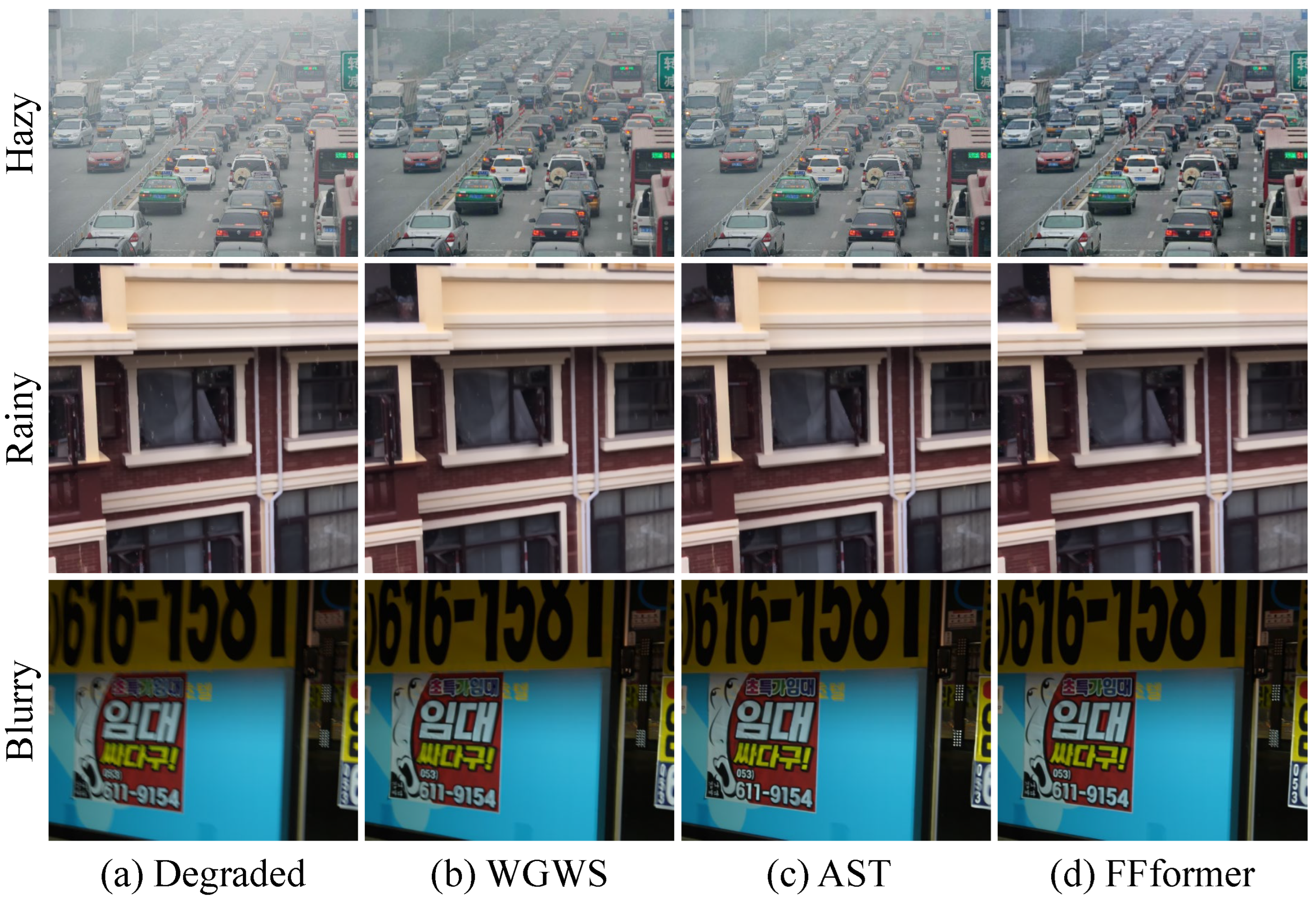

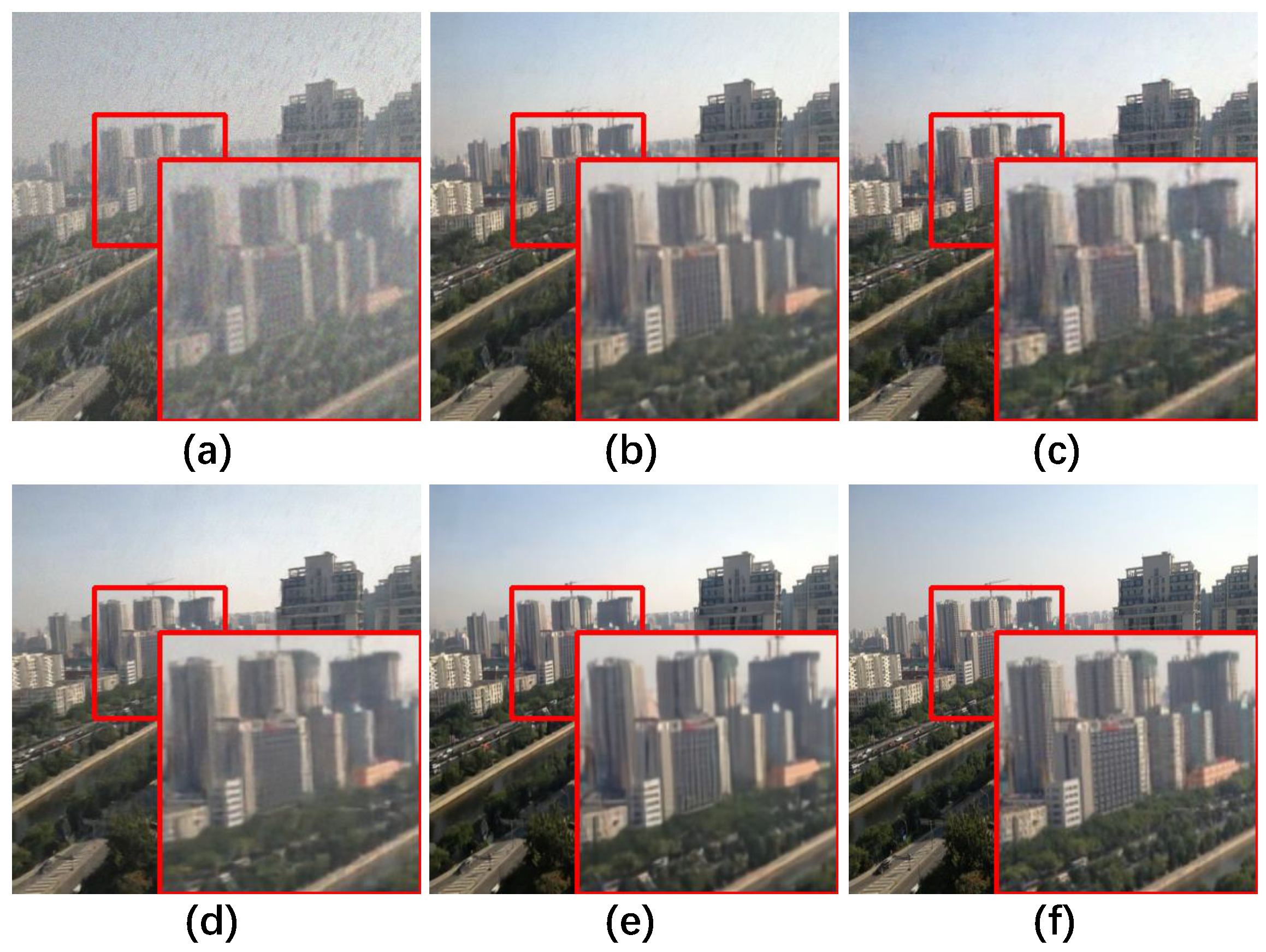

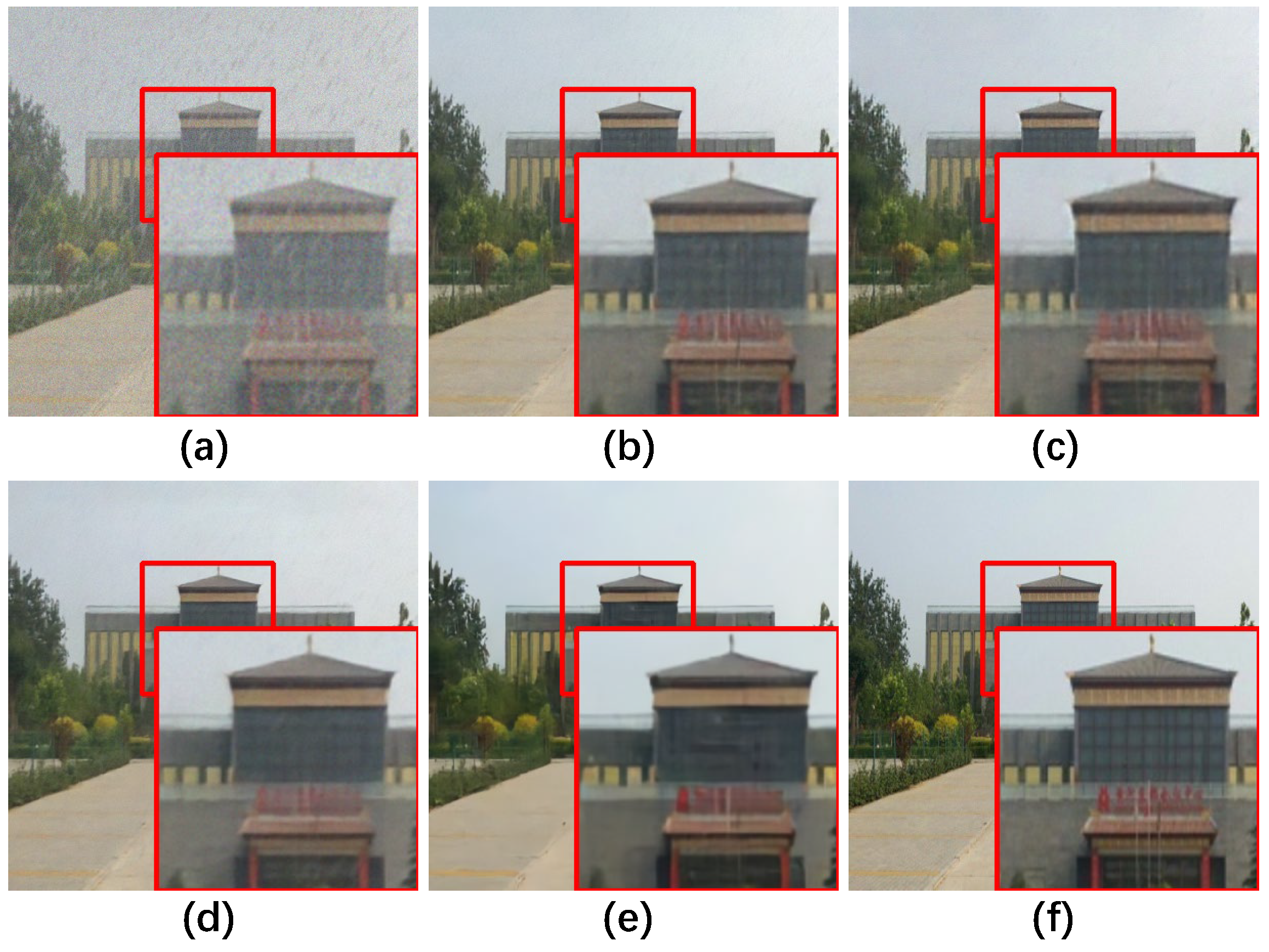

5.4. Comparisons with State-of-the-Art Methods

5.5. Ablation Studies

5.6. Study of Hyper-Parameters and Model Complexity

6. Conclusions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest

References

[1] Li, B.; Ren, W.; Fu, D.; Tao, D.; Feng, D.; Zeng, W.; Wang, Z. Benchmarking single-image dehazing and beyond. IEEE Trans. Image Process. 2018, 28, 492–505.

[2] Zhang, X.; Dong, H.; Pan, J.; Zhu, C.; Tai, Y.; Wang, C.; Li, J.; Huang, F.; Wang, F. Learning to restore hazy video: A new real-world dataset and a new method. In Proceedings of the CVPR, Nashville, TN, USA, 19–25 June 2021; pp. 9239–9248.

[3] Fu, X.; Huang, J.; Zeng, D.; Huang, Y.; Ding, X.; Paisley, J. Removing rain from single images via a deep detail network. In Proceedings of the CVPR, Honolulu, HI, USA, 21–26 July 2017; pp. 3855–3863.

[4] Wang, T.; Yang, X.; Xu, K.; Chen, S.; Zhang, Q.; Lau, R.W. Spatial attentive single-image deraining with a high quality real rain dataset. In Proceedings of the CVPR, Long Beach, CA, USA, 16–20 June 2019; pp. 12270–12279.

[5] Nah, S.; Hyun Kim, T.; Mu Lee, K. Deep multi-scale convolutional neural network for dynamic scene deblurring. In Proceedings of the CVPR, Honolulu, HI, USA, 21–26 July 2017; pp. 3883–3891.

[6] Rim, J.; Lee, H.; Won, J.; Cho, S. Real-world blur dataset for learning and benchmarking deblurring algorithms. In Proceedings of the ECCV, Glasgow, UK, 23–28 August 2020; pp. 184–201.

[7] Abdelhamed, A.; Lin, S.; Brown, M.S. A high-quality denoising dataset for smartphone cameras. In Proceedings of the CVPR, Salt Lake City, UT, USA, 18–22 June 2018; pp. 1692–1700.

[8] Han, J.; Li, W.; Fang, P.; Sun, C.; Hong, J.; Armin, M.A.; Petersson, L.; Li, H. Blind image decomposition. In Proceedings of the ECCV, Tel Aviv, Israel, 23–27 October 2022; pp. 218–237.

[9] Liu, Y.F.; Jaw, D.W.; Huang, S.C.; Hwang, J.N. DesnowNet: Context-aware deep network for snow removal. IEEE Trans. Image Process. 2018, 27, 3064–3073.

[10] Chen, W.T.; Fang, H.Y.; Ding, J.J.; Tsai, C.C.; Kuo, S.Y. JSTASR: Joint size and transparency-aware snow removal algorithm based on modified partial convolution and veiling effect removal. In Proceedings of the Computer Vision–ECCV 2020: 16th European Conference, Glasgow, UK, 23–28 August 2020; pp. 754–770.

[11] Chen, W.T.; Fang, H.Y.; Hsieh, C.L.; Tsai, C.C.; Chen, I.; Ding, J.J.; Kuo, S.Y. All snow removed: Single image desnowing algorithm using hierarchical dual-tree complex wavelet representation and contradict channel loss. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 4196–4205.

[12] He, K.; Sun, J.; Tang, X. Single image haze removal using dark channel prior. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 33, 2341–2353.

[13] Berman, D.; Treibitz, T.; Avidan, S. Non-local image dehazing. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 1674–1682.

[14] Li, Y.; Tan, R.T.; Guo, X.; Lu, J.; Brown, M.S. Rain streak removal using layer priors. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 2736–2744.

[15] Hu, Z.; Cho, S.; Wang, J.; Yang, M.H. Deblurring low-light images with light streaks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 3382–3389.

[16] Xu, J.; Zhao, W.; Liu, P.; Tang, X. An improved guidance image based method to remove rain and snow in a single image. Comput. Inf. Sci. 2012, 5, 49.

[17] Pan, J.; Sun, D.; Pfister, H.; Yang, M.H. Blind image deblurring using dark channel prior. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 1628–1636.

[18] Meng, G.; Wang, Y.; Duan, J.; Xiang, S.; Pan, C. Efficient image dehazing with boundary constraint and contextual regularization. In Proceedings of the IEEE International Conference on Computer Vision, Sydney, Australia, 1–8 December 2013; pp. 617–624.

[19] Li, Y.; Tan, R.T.; Brown, M.S. Nighttime haze removal with glow and multiple light colors. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 11–18 December 2015; pp. 226–234.

[20] Chen, B.H.; Huang, S.C. An advanced visibility restoration algorithm for single hazy images. ACM Trans. Multimed. Comput. Commun. Appl. (TOMM) 2015, 11, 1–21.

[21] Qiu, C.; Yao, Y.; Du, Y. Nested Dense Attention Network for Single Image Super-Resolution. In Proceedings of the 2021 International Conference on Multimedia Retrieval, Taipei, Taiwan, 21–24 August 2021; pp. 250–258.

[22] Du, X.; Yang, X.; Qin, Z.; Tang, J. Progressive Image Enhancement under Aesthetic Guidance. In Proceedings of the 2019 International Conference on Multimedia Retrieval, Ottawa, ON, Canada, 10–13 June 2019; pp. 349–353.

[23] Dong, H.; Pan, J.; Xiang, L.; Hu, Z.; Zhang, X.; Wang, F.; Yang, M.H. Multi-scale boosted dehazing network with dense feature fusion. In Proceedings of the IEEE/CVF Conference on CVPR, Seattle, WA, USA, 14–19 June 2020; pp. 2157–2167.

[24] Li, L.; Pan, J.; Lai, W.S.; Gao, C.; Sang, N.; Yang, M.H. Dynamic scene deblurring by depth guided model. IEEE Trans. Image Process. 2020, 29, 5273–5288.

[25] Wang, C.; Xing, X.; Yao, G.; Su, Z. Single image deraining via deep shared pyramid network. Vis. Comput. 2021, 37, 1851–1865.

[26] Cheng, B.; Li, J.; Chen, Y.; Zeng, T. Snow mask guided adaptive residual network for image snow removal. Comput. Vis. Image Underst. 2023, 236, 103819.

[27] Jiang, K.; Wang, Z.; Yi, P.; Chen, C.; Huang, B.; Luo, Y.; Ma, J.; Jiang, J. Multi-scale progressive fusion network for single image deraining. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 8346–8355.

[28] Zamir, S.W.; Arora, A.; Khan, S.; Hayat, M.; Khan, F.S.; Yang, M.H.; Shao, L. Multi-stage progressive image restoration. In Proceedings of the CVPR, Nashville, TN, USA, 19–25 June 2021; pp. 14821–14831.

[29] Chen, W.T.; Huang, Z.K.; Tsai, C.C.; Yang, H.H.; Ding, J.J.; Kuo, S.Y. Learning multiple adverse weather removal via two-stage knowledge learning and multi-contrastive regularization: Toward a unified model. In Proceedings of the IEEE/CVF Conference on CVPR, New Orleans, LA, USA, 19–24 June 2022; pp. 17653–17662.

[30] Wang, Z.; Cun, X.; Bao, J.; Zhou, W.; Liu, J.; Li, H. Uformer: A general U-shaped transformer for image restoration. In Proceedings of the IEEE/CVF Conference on CVPR, New Orleans, LA, USA, 19–24 June 2022; pp. 17683–17693.

[31] Patil, P.W.; Gupta, S.; Rana, S.; Venkatesh, S.; Murala, S. Multi-weather Image Restoration via Domain Translation. In Proceedings of the ICCV, Paris, France, 2–6 October 2023; pp. 21696–21705.

[32] Zhu, Y.; Wang, T.; Fu, X.; Yang, X.; Guo, X.; Dai, J.; Qiao, Y.; Hu, X. Learning Weather-General and Weather-Specific Features for Image Restoration Under Multiple Adverse Weather Conditions. In Proceedings of the CVPR, Vancouver, BC, Canada, 18–22 June 2023; pp. 21747–21758.

[33] Zhou, S.; Chen, D.; Pan, J.; Shi, J.; Yang, J. Adapt or perish: Adaptive sparse transformer with attentive feature refinement for image restoration. In Proceedings of the CVPR, Seattle, WA, USA, 17–21 June 2024; pp. 2952–2963.

[34] Monga, A.; Nehete, H.; Kumar Reddy Bollu, T.; Raman, B. Dairnet: Degradation-Aware All-in-One Image Restoration Network with Cross-Channel Feature Interaction. SSRN 2025. SSRN:5365629.

[35] Zamir, S.W.; Arora, A.; Khan, S.; Hayat, M.; Khan, F.S.; Yang, M.H. Restormer: Efficient transformer for high-resolution image restoration. In Proceedings of the CVPR, New Orleans, LA, USA, 19–24 June 2022; pp. 5728–5739.

[36] Liang, J.; Cao, J.; Sun, G.; Zhang, K.; Van Gool, L.; Timofte, R. SwinIR: Image restoration using Swin transformer. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 1833–1844.

[37] Lee, H.; Choi, H.; Sohn, K.; Min, D. KNN local attention for image restoration. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 19–24 June 2022; pp. 2139–2149.

[38] Song, Y.; He, Z.; Qian, H.; Du, X. Vision transformers for single image dehazing. IEEE Trans. Image Process. 2023, 32, 1927–1941.

[39] Chen, X.; Li, H.; Li, M.; Pan, J. Learning A Sparse Transformer Network for Effective Image Deraining. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 18–22 June 2023; pp. 5896–5905.

[40] Yang, S.; Hu, C.; Xie, L.; Lee, F.; Chen, Q. MG-SSAF: An advanced vision Transformer. J. Vis. Commun. Image Represent. 2025, 112, 104578.

[41] Li, B.; Cai, Z.; Wei, H.; Su, S.; Cao, W.; Niu, Y.; Wang, H. A quality enhancement method for vehicle trajectory data using onboard images. Geo-Spat. Inf. Sci. 2025, 1–26.

[42] Yang, J.; Wu, C.; Du, B.; Zhang, L. Enhanced multiscale feature fusion network for HSI classification. IEEE Trans. Geosci. Remote Sens. 2021, 59, 10328–10347.

[43] Tour-Beijing. Available online: https://www.tour-beijing.com/real_time_weather_photo/ (accessed on 1 August 2023).

[44] McCartney, E.J. Optics of the Atmosphere: Scattering by Molecules and Particles; American Institute of Physics: New York, NY, USA, 1976.

[45] Li, Z.; Snavely, N. MegaDepth: Learning single-view depth prediction from internet photos. In Proceedings of the CVPR, Salt Lake City, UT, USA, 18–22 June 2018; pp. 2041–2050.

[46] Flusser, J.; Farokhi, S.; Höschl, C.; Suk, T.; Zitova, B.; Pedone, M. Recognition of images degraded by Gaussian blur. IEEE Trans. Image Process. 2015, 25, 790–806.

[47] Gong, D.; Yang, J.; Liu, L.; Zhang, Y.; Reid, I.; Shen, C.; Van Den Hengel, A.; Shi, Q. From motion blur to motion flow: A deep learning solution for removing heterogeneous motion blur. In Proceedings of the CVPR, Honolulu, HI, USA, 21–26 July 2017; pp. 2319–2328.

[48] Consul, P.C.; Jain, G.C. A generalization of the Poisson distribution. Technometrics 1973, 15, 791–799.

[49] Tzutalin. LabelImg. Open Annotation Tool. Available online: https://github.com/HumanSignal/labelImg (accessed on 1 August 2024).

[50] Everingham, M.; Van Gool, L.; Williams, C.K.; Winn, J.; Zisserman, A. The PASCAL visual object classes (VOC) challenge. Int. J. Comput. Vis. 2010, 88, 303–338.

[51] Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional networks for biomedical image segmentation. In Proceedings of the Medical Image Computing and Computer-Assisted Intervention–MICCAI 2015: 18th International Conference, Munich, Germany, 5–9 October 2015; pp. 234–241.

[52] Shi, W.; Caballero, J.; Huszár, F.; Totz, J.; Aitken, A.P.; Bishop, R.; Rueckert, D.; Wang, Z. Real-time single image and video super-resolution using an efficient sub-pixel convolutional neural network. In Proceedings of the IEEE Conference on CVPR, Las Vegas, NV, USA, 27–30 June 2016; pp. 1874–1883.

[53] Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; pp. 5998–6008.

[54] Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.

[55] Li, Y.; Zhang, K.; Cao, J.; Timofte, R.; Van Gool, L. LocalViT: Bringing locality to vision transformers. arXiv 2021, arXiv:2104.05707.

[56] Loshchilov, I.; Hutter, F. SGDR: Stochastic gradient descent with warm restarts. arXiv 2016, arXiv:1608.03983.

[57] Huynh-Thu, Q.; Ghanbari, M. Scope of validity of PSNR in image/video quality assessment. Electron. Lett. 2008, 44, 800–801.

[58] Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

[59] Mittal, A.; Moorthy, A.K.; Bovik, A.C. No-reference image quality assessment in the spatial domain. IEEE Trans. Image Process. 2012, 21, 4695–4708.

[60] Mittal, A.; Soundararajan, R.; Bovik, A.C. Making a “completely blind” image quality analyzer. IEEE Signal Process. Lett. 2012, 20, 209–212.

[61] Chen, Z.; Wang, Y.; Yang, Y.; Liu, D. PSD: Principled synthetic-to-real dehazing guided by physical priors. In Proceedings of the CVPR, Nashville, TN, USA, 19–25 June 2021; pp. 7180–7189.

[62] Quan, R.; Yu, X.; Liang, Y.; Yang, Y. Removing raindrops and rain streaks in one go. In Proceedings of the CVPR, Nashville, TN, USA, 19–25 June 2021; pp. 9147–9156.

[63] Ma, L.; Li, X.; Liao, J.; Zhang, Q.; Wang, X.; Wang, J.; Sander, P.V. Deblur-NeRF: Neural radiance fields from blurry images. In Proceedings of the CVPR, New Orleans, LA, USA, 19–24 June 2022; pp. 12861–12870.

[64] Fu, X.; Huang, J.; Ding, X.; Liao, Y.; Paisley, J. Clearing the skies: A deep network architecture for single-image rain removal. IEEE Trans. Image Process. 2017, 26, 2944–2956.

[65] Jocher, G.; Chaurasia, A.; Qiu, J. YOLOv8. Available online: https://github.com/ultralytics/ultralytics (accessed on 20 October 2025).

| Dataset | Synthetic or Real | Number of Images | Degradation Types | Real Test Set | Annotation |
|---|---|---|---|---|---|
| SIDD [7] | Real | 30,000 | Noise | Yes | No |
| RealBlur [6] | Real | 4556 | Blur | Yes | No |
| Rain1400 [3] | Synthetic | 14,000 | Rain | No | No |
| SPA [4] | Real | 29,500 | Rain | Yes | No |
| RESIDE [1] | Synthetic + Real | 86,645 + 4322 | Haze | Yes | RTTS |
| BID2a [8] | Synthetic | 3475 | 4 types | No | No |
| BID2b [8] | Synthetic + Real | 3661 + 1763 | 3 for train, 1 for test | Yes | No |
| RMTD | Synthetic + Real | 48,000 + 200 | 8 types | Yes | Test, Real |

| Category | Methods | Source |
|---|---|---|
| Task-specific Methods | DerainNet [64] | TIP 2017 |
| | PSD [61] | CVPR 2021 |
| | CCN [62] | CVPR 2021 |
| | Deblur-NeRF [63] | CVPR 2022 |
| Multiple-Degradation Removal Methods | MPRNet [28] | CVPR 2021 |
| | Uformer [30] | CVPR 2022 |
| | Restormer [35] | CVPR 2022 |
| | BIDeN [8] | ECCV 2022 |
| | WGWS [32] | CVPR 2023 |
| | Patil et al. [31] | ICCV 2023 |
| | AST [33] | CVPR 2024 |

| Datasets (PSNR↑/SSIM↑) | BID2a-5 [8] | BID2a-6 [8] | RMTD-Syn |
|---|---|---|---|
| Degraded | 14.05/0.616 | 12.38/0.461 | 15.09/0.410 |
| DerainNet [64] | 18.68/0.805 | 17.53/0.721 | 21.43/0.772 |
| PSD [61] | 22.17/0.855 | 20.57/0.809 | 26.97/0.839 |
| CCN [62] | 20.86/0.831 | 19.74/0.782 | 25.41/0.830 |
| Deblur-NeRF [63] | 21.10/0.840 | 20.12/0.797 | 26.87/0.843 |
| MPRNet [28] | 21.18/0.846 | 20.76/0.812 | 27.31/0.860 |
| Uformer [30] | 25.20/0.880 | 25.14/0.850 | 27.98/0.858 |
| Restormer [35] | 25.24/0.884 | 25.37/0.859 | 28.02/0.868 |
| BIDeN [8] | 27.11/0.898 | 26.44/0.870 | 28.04/0.869 |
| WGWS [32] | 26.87/0.899 | 25.89/0.856 | 27.91/0.864 |
| Patil et al. [31] | 26.55/0.884 | 26.20/0.861 | 28.10/0.871 |
| AST [33] | 27.15/0.901 | 26.32/0.865 | 28.24/0.873 |
| FFformer (ours) | 27.41/0.905 | 26.51/0.871 | 28.67/0.880 |

| Datasets (BRISQUE↓/NIQE↓) | BID2a-5 [8] | BID2a-6 [8] | RMTD-Syn |
|---|---|---|---|
| Degraded | 34.420/5.793 | 33.574/6.150 | 32.436/9.917 |
| DerainNet [64] | 33.877/5.742 | 32.015/6.062 | 30.617/4.967 |
| PSD [61] | 34.876/5.665 | 33.116/6.156 | 29.317/4.101 |
| CCN [62] | 33.624/5.736 | 34.394/6.134 | 30.261/4.575 |
| Deblur-NeRF [63] | 31.733/5.695 | 31.531/5.864 | 29.411/4.038 |
| MPRNet [28] | 31.348/5.567 | 32.377/5.969 | 28.518/3.950 |
| Uformer [30] | 30.627/5.324 | 31.001/5.599 | 29.690/4.183 |
| Restormer [35] | 29.137/5.346 | 30.482/5.504 | 30.234/3.870 |
| BIDeN [8] | 27.967/5.242 | 28.395/5.386 | 28.043/3.902 |
| WGWS [32] | 28.495/5.201 | 28.897/5.431 | 27.917/3.824 |
| Patil et al. [31] | 28.365/5.197 | 28.172/5.354 | 27.710/3.833 |
| AST [33] | 28.144/5.166 | 27.814/5.301 | 27.967/3.764 |
| FFformer (ours) | 27.134/5.084 | 26.313/5.146 | 26.451/3.685 |

| Training Set (BRISQUE↓/NIQE↓) | BID2a [8] | BID2b [8] | RMTD-Syn |
|---|---|---|---|
| Degraded | 28.443/3.850 | 28.443/3.850 | 28.443/3.850 |
| DerainNet [64] | 29.431/3.871 | 29.991/3.955 | 29.624/3.844 |
| PSD [61] | 27.970/3.860 | 27.317/3.851 | 27.246/3.717 |
| CCN [62] | 28.412/3.812 | 27.961/3.974 | 28.791/3.901 |
| Deblur-NeRF [63] | 28.011/3.784 | 29.411/3.978 | 27.664/3.695 |
| MPRNet [28] | 27.318/3.759 | 27.118/3.801 | 26.417/3.672 |
| Uformer [30] | 28.682/3.647 | 29.013/3.661 | 27.011/3.590 |
| Restormer [35] | 27.302/3.756 | 27.034/3.720 | 26.181/3.604 |
| BIDeN [8] | 26.704/3.688 | 26.430/3.667 | 25.448/3.506 |
| WGWS [32] | 25.813/3.670 | 25.517/3.657 | 24.682/3.547 |
| Patil et al. [31] | 25.710/3.667 | 25.613/3.671 | 24.827/3.598 |
| AST [33] | 26.124/3.733 | 25.867/3.715 | 24.961/3.568 |
| FFformer (ours) | 25.012/3.562 | 25.334/3.541 | 23.437/3.427 |

| Datasets | RMTD-Syn | RMTD-Real |
|---|---|---|
| Degraded | 0.1580 | 0.5259 |
| Uformer [30] | 0.3710 | 0.5789 |
| BIDeN [8] | 0.3804 | 0.5893 |
| Patil et al. [31] | 0.3821 | 0.5876 |
| AST [33] | 0.3841 | 0.5955 |
| FFformer | 0.3965 | 0.6012 |
| Ground Truth | 0.4153 | - |

| Network | Component | PSNR ↑ | SSIM ↑ |
|---|---|---|---|
| Baseline | MSA + FN [54] | 27.85 | 0.862 |
| Multi-head Attention | GFSA + FN [54] | 28.11 | 0.869 |
| Feed-forward Network | MSA + DFN [55] | 28.04 | 0.867 |
| | MSA + FSFN | 28.12 | 0.871 |
| Overall | GFSA + FSFN | 28.67 | 0.880 |

| Setting | Cross-Feature Attention | Intra-Feature Attention | PSNR ↑ | SSIM ↑ |
|---|---|---|---|---|
| (a) | | | 27.91 | 0.858 |
| (b) | ✓ | | 28.29 | 0.869 |
| (c) | | ✓ | 28.17 | 0.865 |
| (d) | ✓ | ✓ | 28.67 | 0.880 |

| Settings | Number of Layers | Attention Heads | PSNR ↑/SSIM ↑ |
|---|---|---|---|
| (a) | 4, 4, 4, 4 | 2, 2, 4, 4 | 26.97/0.862 |
| (b) | 4, 4, 4, 4 | 1, 2, 4, 8 | 28.10/0.868 |
| (c) | 2, 4, 4, 6 | 2, 2, 4, 4 | 28.17/0.867 |
| (d) | 2, 4, 4, 6 | 1, 2, 4, 8 | 28.67/0.880 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhang, Y. FFformer: A Lightweight Feature Filter Transformer for Multi-Degraded Image Enhancement with a Novel Dataset. Sensors 2025, 25, 6684. https://doi.org/10.3390/s25216684
