Highlights

An integrated architecture for real-time event detection and response has been proposed, addressing the critical challenge of effective detection of unexpected events in wide-area surveillance. Utilizing the wide coverage of narrowband cellular networks (e.g., NB-IoT), the system ensures efficient image data transmission even in remote or rural areas, providing real-time alerts.

What are the main findings?

- The system detects abnormal events by analyzing sequential image frames using intelligent algorithms and stores images only upon anomaly detection, improving storage efficiency.

- By using the CoAP protocol to transmit encapsulated JPEG images and leveraging the MQTT protocol to deliver image data to client applications, the system achieves efficient data transmission and processing.

What is the implication of the main finding?

- This study offers an intelligent, scalable, and responsive solution for wide-area surveillance systems, overcoming limitations of traditional systems such as low storage efficiency, limited transmission range, and complex operation.

- The intelligent anomaly detection algorithms reduce the risks and costs associated with manual monitoring, enhancing both the efficiency and accuracy of anomaly detection.

Abstract

Effective detection of unexpected events in wide-area surveillance remains a critical challenge in the development of intelligent monitoring systems. Recent advancements in Narrowband Internet of Things (NB-IoT) and 5G technologies provide a robust foundation to address this issue. This study presents an integrated architecture for real-time event detection and response. The system utilizes the Constrained Application Protocol (CoAP) to transmit encapsulated JPEG images from NB-IoT modules to an Internet of Things (IoT) server. Upon receipt, images are decoded, processed, and archived in a centralized database. Image data are then transmitted to client applications via WebSocket, leveraging the Message Queuing Telemetry Transport (MQTT) protocol. By performing temporal image comparison, the system identifies abnormal events within the monitored area. Once an anomaly is detected, a visual alert is generated and presented through an interactive interface. Test results show that image recognition accuracy consistently exceeds 98%. This approach enables reliable, intelligent, scalable, and responsive wide-area surveillance, overcoming the constraints of conventional isolated and passive monitoring systems.

1. Introduction

Initially, electronic surveillance systems operated using analog signal transmission, commonly referred to as Closed-Circuit Television (CCTV). These systems predominantly employed analog matrix control and were constrained by several limitations, including short transmission distances, high operational complexity, limited storage capacity, and susceptibility to electromagnetic interference [1]. In the 1990s, the introduction of Digital Video Recorder (DVR) technology enabled the digitization and storage of analog video signals on high-capacity hard drives, significantly improving system capabilities. DVR systems offered enhanced video data retention, extended transmission ranges, and improved operational convenience compared to traditional analog solutions [2].

By the late 20th century, the proliferation of IP Video Surveillance (IPVS) systems, which leveraged internet-based technologies, markedly expanded surveillance coverage beyond earlier generations. These systems enabled integration with various platforms, utilized disk array architectures to increase storage capacity, and incorporated fault tolerance mechanisms through redundant network device configurations and failover protocols [3,4]. In recent years, the application of image processing techniques combined with intelligent algorithms has driven the evolution of intelligent surveillance technologies, resulting in increasingly large-scale, intelligent, and networked video monitoring systems [5,6,7]. As surveillance systems are deployed across a broader range of industries, public reliance on such technologies to ensure industrial and residential security continues to rise, accompanied by growing expectations for intelligent functionality.

With the emergence of the 5G era, Narrowband Internet of Things (NB-IoT) technology has laid a foundational framework for massive Machine-Type Communications (mMTC) within 5G networks. Its core advantage lies in providing efficient, reliable connectivity for a large number of low-power, low-cost, and widely distributed IoT devices. The benefits of NB-IoT modules are well established [8], including near-complete nationwide base station coverage, narrow bandwidth utilization (180 kHz), and exceptionally low power consumption, with an average current draw of just 0.3 μA in Power Saving Mode (PSM) at a supply voltage of 3.3–5 V. Although Low-Power Wide-Area (LPWA) services have existed since the 2G era, their global expansion revealed several challenges, including excessive terminal power consumption, high data transmission volumes, limited coverage, and elevated operational costs. Next-generation NB-IoT technology effectively addresses these limitations.

Moreover, video surveillance systems inherently involve processing large volumes of image data. The integration of intelligent algorithms has begun to resolve long-standing issues associated with manual monitoring, such as “footage exists but anomalies are hard to identify,” “anomalies can be found, but detection is time-consuming,” and “services are available, but the cost is prohibitive.” These innovations also mitigate the risk of missed anomalies due to human oversight.

Therefore, in this paper, we propose an intermittent image capture approach in which intelligent algorithms detect anomalies through comparative analysis of sequential image frames, and images are stored only when an anomaly is detected. Utilizing the extensive coverage of narrowband cellular networks (e.g., NB-IoT), the proposed system enables efficient image data transmission even from remote or rural areas and provides users with real-time alerts. Our experimental results show that the transmission success rate reaches 100% at a baud rate of 115,200 bps. Under high-concurrency request loads, the server maintains a 100% success rate, and the image recognition accuracy is consistently above 98%. This paper provides a practical case study and performance verification of NB-IoT, a new-generation low-power wide-area network communication technology, for video detection applications.

2. Related Work

Remote detection of various parameters based on the new generation of low-power wide-area network (LPWAN) IoT technology has been researched extensively. For instance, Reference [9] presents the design of a smart city environment monitoring and optimization system based on NB-IoT technology. This system uses NB-IoT communication to acquire environmental monitoring data across a wide area; the monitoring data are then fed into a back-propagation (BP) neural network optimized by particle swarm optimization (PSO) for environmental risk prediction. Similar studies can be found in [10,11,12,13,14]. Other researchers have combined data acquisition with control operations in NB-IoT systems. Reference [15] introduces an intelligent street lamp control system based on NB-IoT that automatically or remotely adjusts the brightness and on/off state of street lamps according to demand and environmental conditions, while also monitoring and recording their current, voltage, power, and other operating data. The NB-IoT-based monitoring system for UAV networks presented in [16] additionally tackles the absence of a global IP address in the existing NB-IoT infrastructure. In recent years, research has progressively expanded into video detection. The video surveillance unit (VSU) introduced in [17] incorporates a motion detection function: upon detecting motion within the camera’s field of view, images are captured, processed, compressed, and segmented so that they fit within the maximum payload size of LoRaWAN for transmission.
Reference [18] presents a smart IoT-based mobile sensor unit that collects information about a cane user and the surrounding obstacles while the user is on the move, with an embedded machine learning algorithm that identifies the detected obstacles and alerts the user to their nature. Reference [19] focuses on video-based passenger counting. However, like the studies in [18,19], research in this area has not yet been applied to wide-area scenarios. Other researchers specialize in video image processing. Reference [20] presents enhancement techniques and synthetic image generation methods based on the YOLO, SSD, and EfficientDet deep learning models to improve sea mine detection. Reference [21] introduces a searchable and revocable attribute-based encryption scheme tailored to dynamic video anomaly detection scenarios, enhancing the security and privacy of video data. Reference [22] develops image compression and data transmission methods for stable low-rate transmission of images and data in IoT systems. Some of these studies are limited to detecting and managing environmental parameters and therefore do not capture the on-site visual scene. Others focus on deep learning algorithms for video images, whose bandwidth and computational demands are difficult to reconcile with remote narrowband IoT communication. The proposed system therefore combines IoT communication with an optimized background subtraction method to detect moving targets, which makes it well suited to video surveillance in wide-area environments.

3. System Design

3.1. System Architecture

The proposed system adopts a three-tier architecture comprising: (i) the embedded and sensor perception layer, (ii) the network layer utilizing narrowband cellular communication, and (iii) the Web backend service and application layer, integrating functionalities from the backend to the frontend [23,24,25].

First, the perception layer (Embedded Devices & Sensors) is built around an ARM Cortex-M4 main control chip, which acquires image data from a camera sensor via the RS232 protocol.

Next, the network layer (Narrowband Cellular Communication) employs NB-IoT for connectivity. In this stage, the NB module receives hex-encoded JPEG image data processed by the ARM processor and transmits it transparently to the server using the CoAP protocol, with data forwarding handled by base stations [26].

Finally, the service/application layer (Web Backend) is implemented using a Spring Boot-based microservices framework with a Vue.js frontend. The system integrates Eureka for service registration, Feign for service invocation, and Nginx for load balancing and IoT data collection. Image data is delivered via WebSocket, decoded, stored in a distributed MySQL cluster managed by Mycat, and made accessible through RESTful and Webservice APIs to support real-time monitoring and anomaly detection.

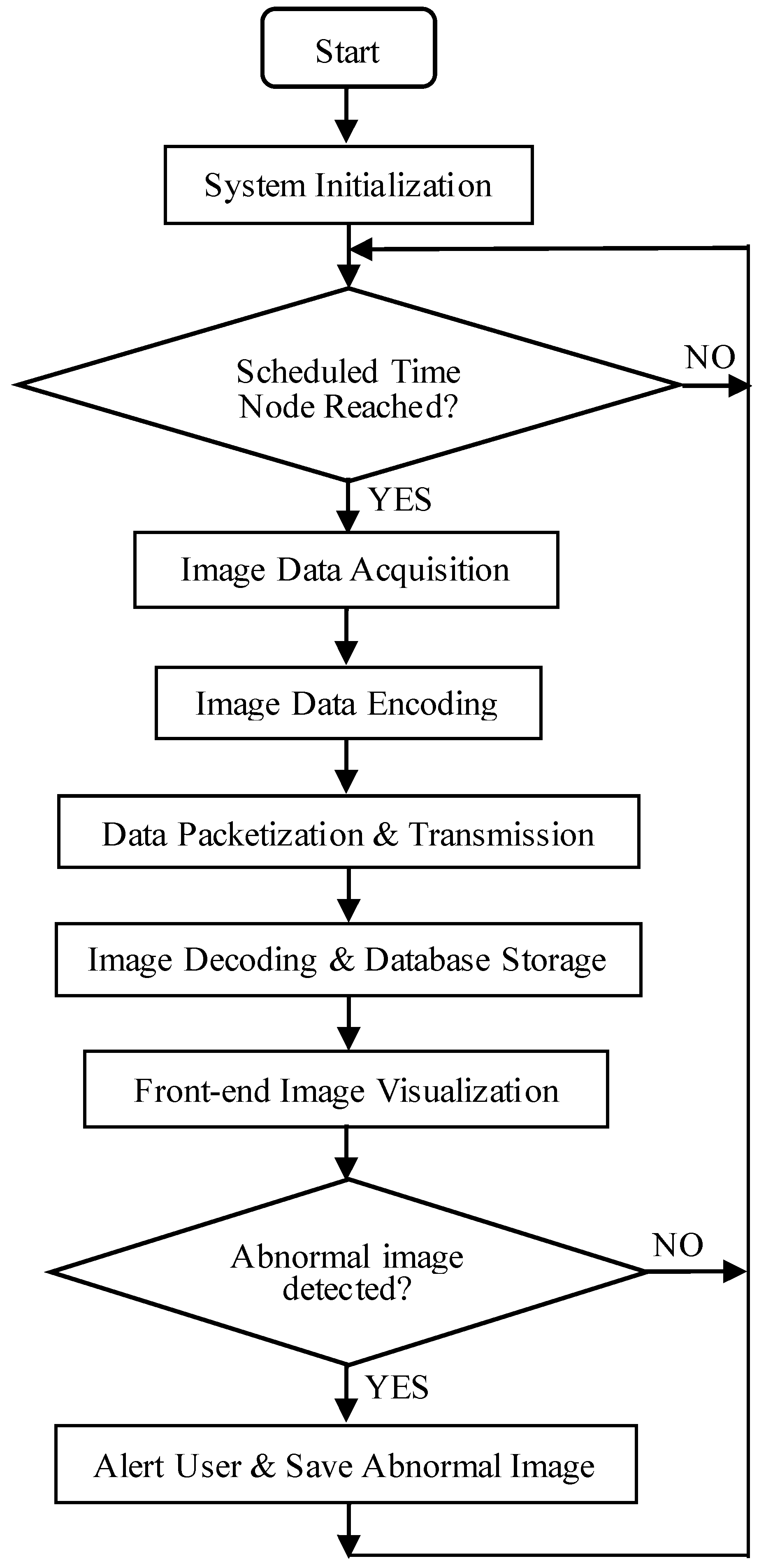

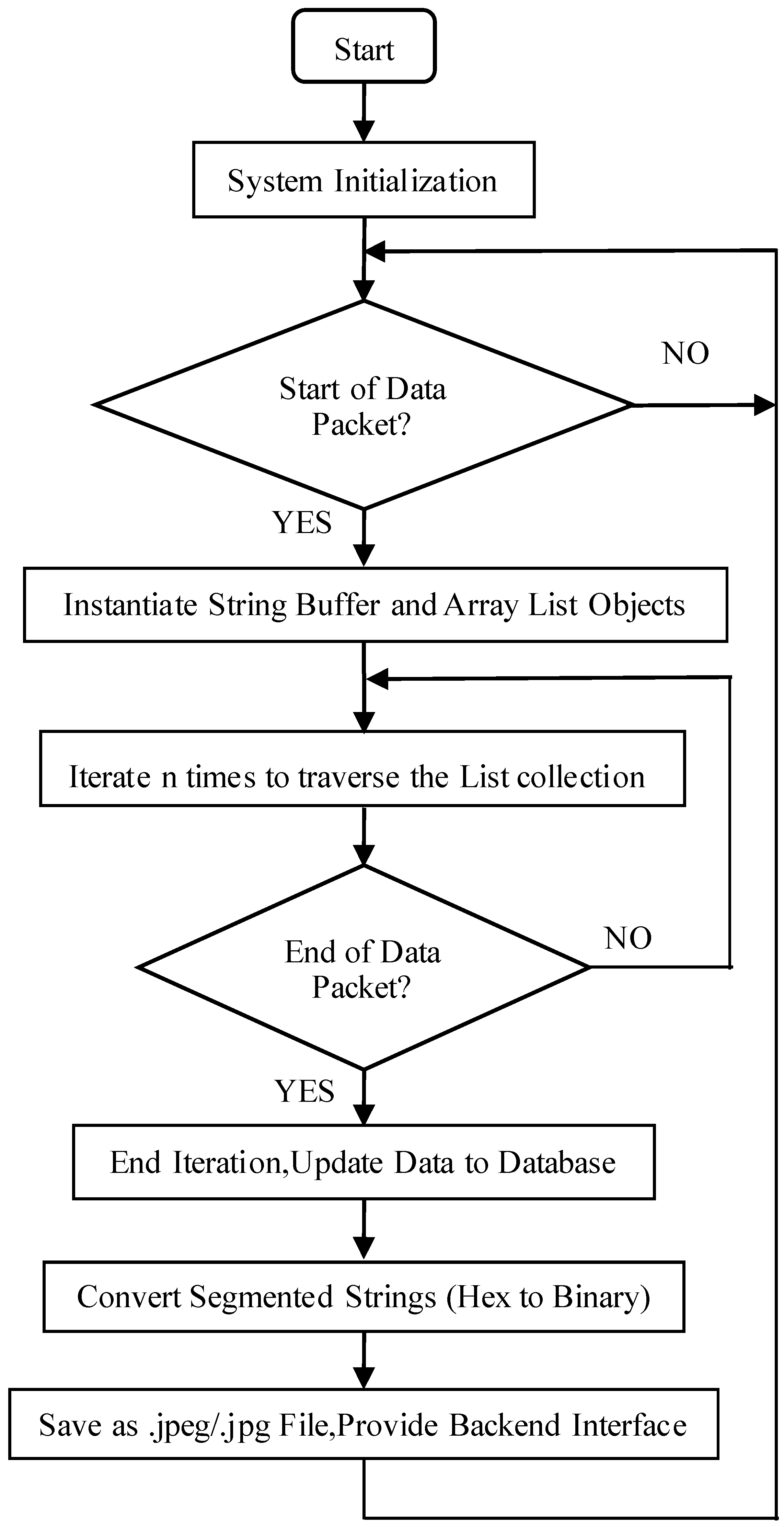

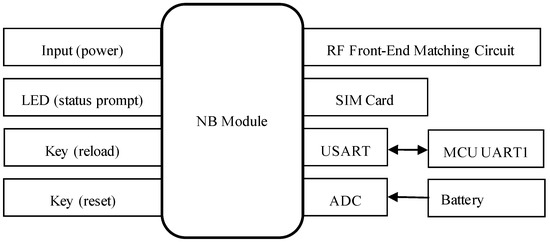

The design of the system software encompasses the encoding algorithms for raw image data, the serial communication protocols, the communication interfaces among the NB-IoT modules, base stations, and IoT servers, and the interaction between the Web service backend and the IoT servers. It also includes the image encoding and decoding algorithms, as illustrated in Figure 1. The system first captures image data at predefined, fixed intervals and encodes these images in hexadecimal format. The data are then transmitted via a serial port to the STM32F407 main controller, which forwards them to the NB-IoT module through a second serial port. The NB-IoT module relays the data packets to the IoT service backend via the NB communication base station. Upon receipt, the backend processes the data using the business logic implemented in the Service layer and uses the Spring Data JPA persistence framework to store the processed results in a MySQL database. Finally, the frontend, developed with Vue.js, sends requests to the Spring Boot backend to retrieve the stored data, which are then rendered on the user interface. When an anomaly is detected in an image, the image is archived and users are promptly notified.

Figure 1.

Flowchart of the overall architecture of the system program.

3.2. System Perception Layer

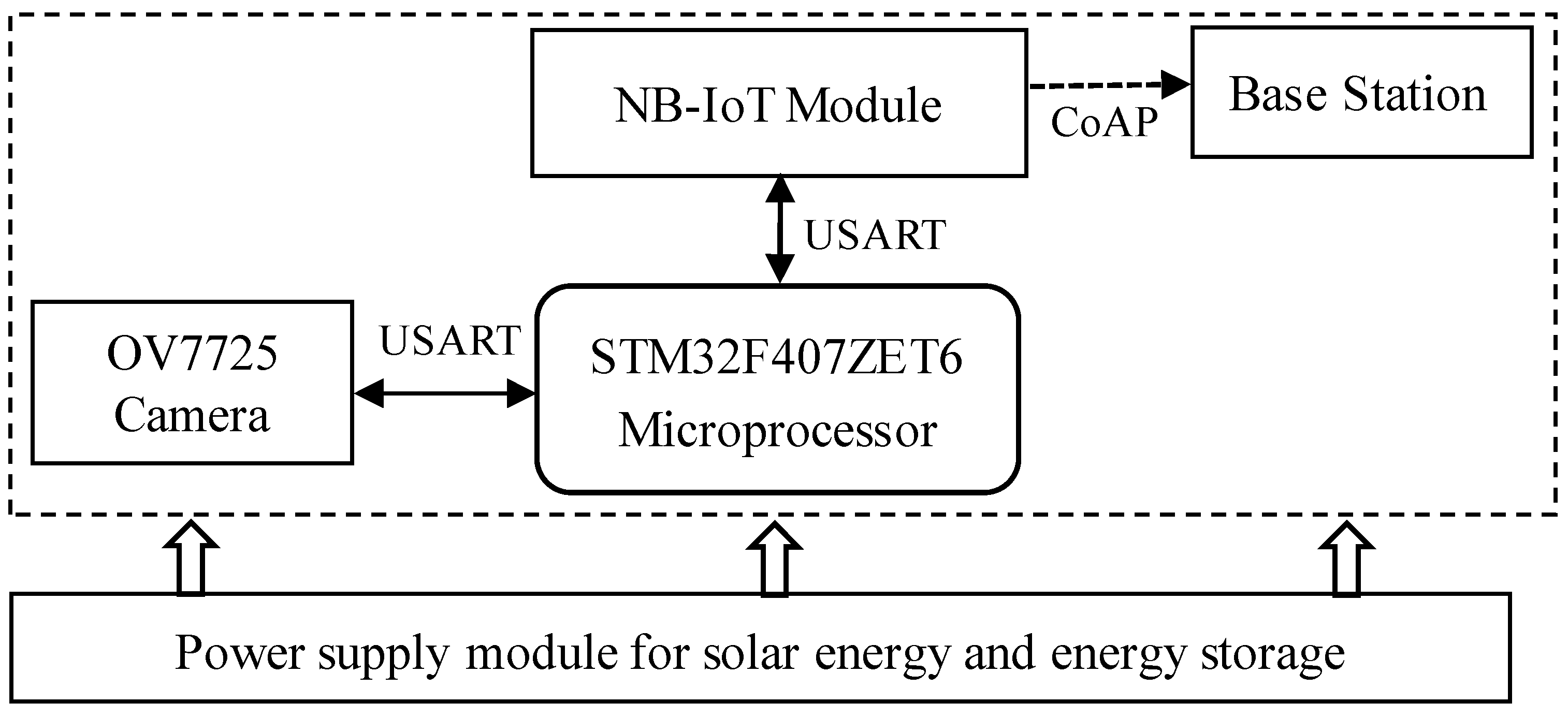

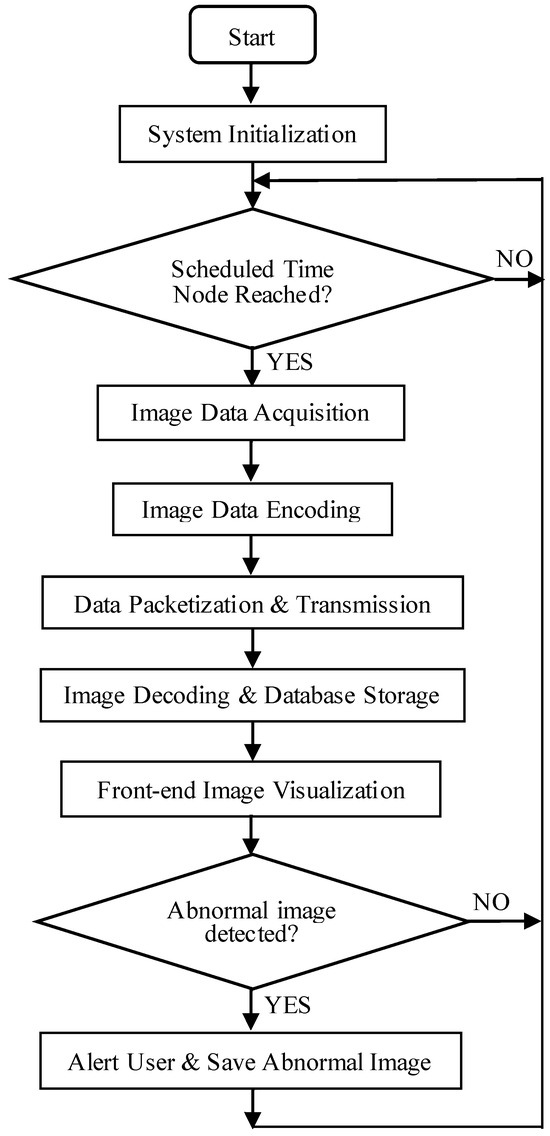

The system hardware is primarily a wide-area visual monitoring setup consisting of four core components: an OV7725 camera sensor (OmniVision, Shanghai, China), an STM32F407ZET6 processor (STMicroelectronics, Lausanne, Switzerland), an NB-IoT communication module (China Mobile IoT, Chongqing, China), and a solar-powered energy supply unit. The OV7725 camera sensor captures image data at scheduled intervals and transmits them to the ARM-based processor for subsequent analysis. The perception layer is solar powered. During the day, the solar energy system charges the solid-state energy storage capacitor while also powering modules such as the ARM processor, the camera, and the NB-IoT module. At night, the solid-state energy storage capacitor releases its stored energy to power the perception layer.

3.2.1. Hardware Architecture of the System Perception Layer

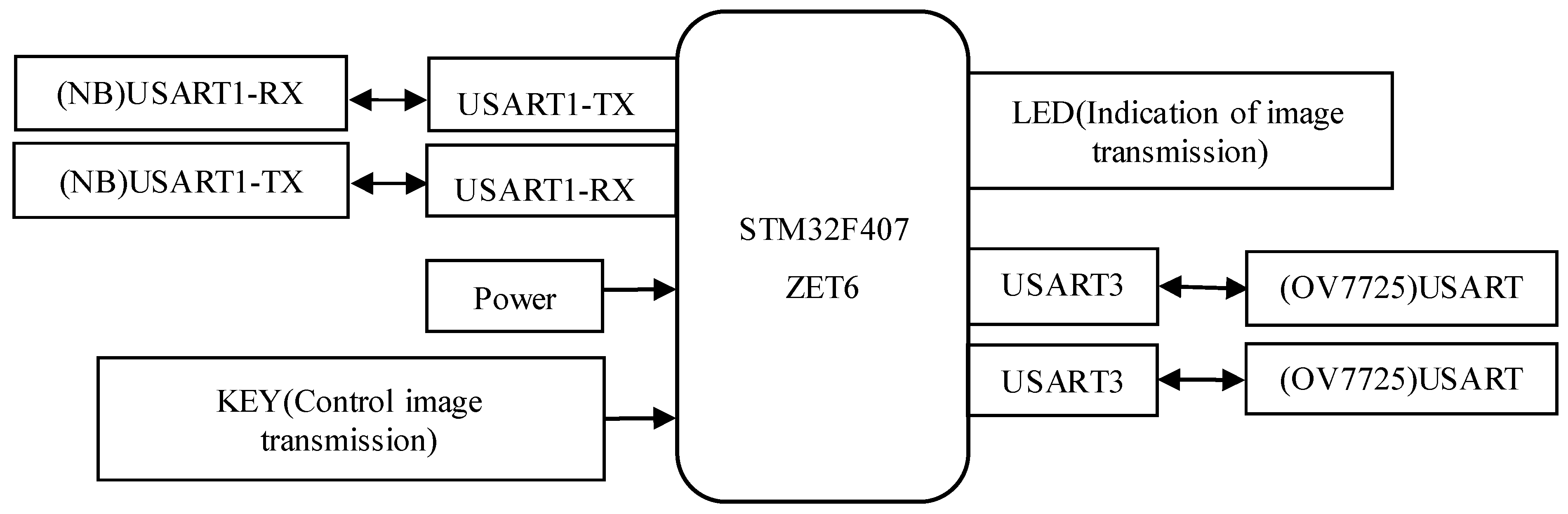

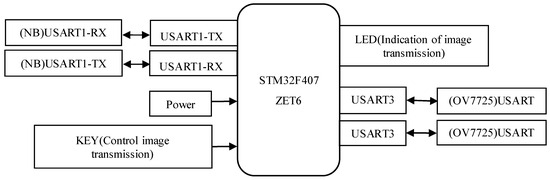

In the proposed design, the STM32F407ZET6 microcontroller, based on the ARM Cortex-M4 core, is selected as the central processing unit. Communication with the NB module is established via an RS-232 serial interface, while the OV7725 camera sensor interfaces with the main control unit through a USART serial connection. Furthermore, the system is powered by a solar energy supply coupled with an energy storage module. The hardware architecture of the system is illustrated in Figure 2.

Figure 2.

System hardware architecture diagram.

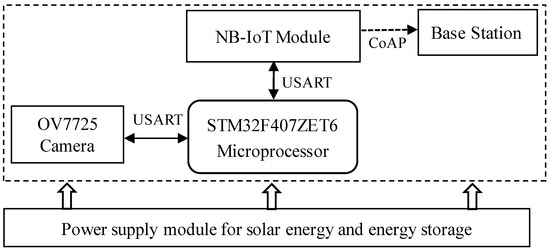

3.2.2. Design of the Hardware Circuitry for the Perception Layer

NB-IoT Module

The maximum power consumption and transmission ranges of various communication modules are presented in Table 1. In wireless wide-area communication, longer transmission distances inherently require higher power consumption because of propagation losses. These communication technologies also differ in latency and cost, and the best choice depends on the requirements of the specific application scenario. The current IoT industry has a pressing demand for both extended communication range and cost-effective terminals. These requirements are best met by next-generation IoT chipsets such as NB-IoT and LoRa, which are specifically designed to balance long-distance connectivity with low power consumption. Both NB-IoT and LoRa are new-generation low-power wide-area network communication technologies, and their sleep current in low-power mode is only about 1 μA. In terms of transmission distance, NB-IoT compensates for its coverage limitations through base station density and achieves coverage of up to 35 km in suburban areas, but it depends on the coverage of 4G/LTE networks. LoRa achieves a maximum single-point coverage of up to 15 km through spread spectrum modulation, but this limits its maximum transmission rate to typically below 50 kbps, much lower than the 250 kbps of NB-IoT. Overall, LoRa is better suited to low-data-volume, long-distance applications such as agricultural monitoring and smart manhole covers, whereas NB-IoT is better suited to applications with frequent data exchange or low-latency requirements, such as smart meter reading and real-time monitoring.

Table 1.

Relevant indicator information of communication module.

NB-IoT (Narrowband Internet of Things) is a dedicated communication network built on cellular wireless infrastructure. It was introduced and promoted by network operators and telecommunications equipment manufacturers [27]. Because it operates within the licensed frequency bands of cellular networks, NB-IoT provides stable and secure communication and minimizes interference from other wireless devices. It supports CoAP-based data transmission over extensive geographic areas [28,29,30]. By reusing existing LTE spectrum resources, NB-IoT can significantly reduce hardware costs. As an emerging wide-area wireless access solution, it is well suited to large-scale deployment across diverse regions. From the outset, the NB-IoT standard was designed for large-scale, low-cost, and low-power IoT deployment scenarios. To handle network congestion, we adopt PSM/eDRX signaling reduction for prevention, and optimized RACH, multi-carrier operation, ACB/EAB access control, and QoS priority scheduling for mitigation. To handle latency, we use control plane optimization for fast transmission of small data packets, a power-saving mode that trades real-time responsiveness for energy savings on delay-tolerant traffic, and priority scheduling to ensure low latency for critical services. To ensure QoS, we adopt QCI-based differentiated services, intelligent wireless resource scheduling, dedicated bearers in the core network, and retransmission for high-reliability transmission. For example, in Power Saving Mode (PSM), the system enters a deep sleep state in which only essential components, such as the clock, remain active, with microampere-level current consumption, thereby minimizing energy usage. During this state, the NB-IoT module remains registered on the remote CoAP network.

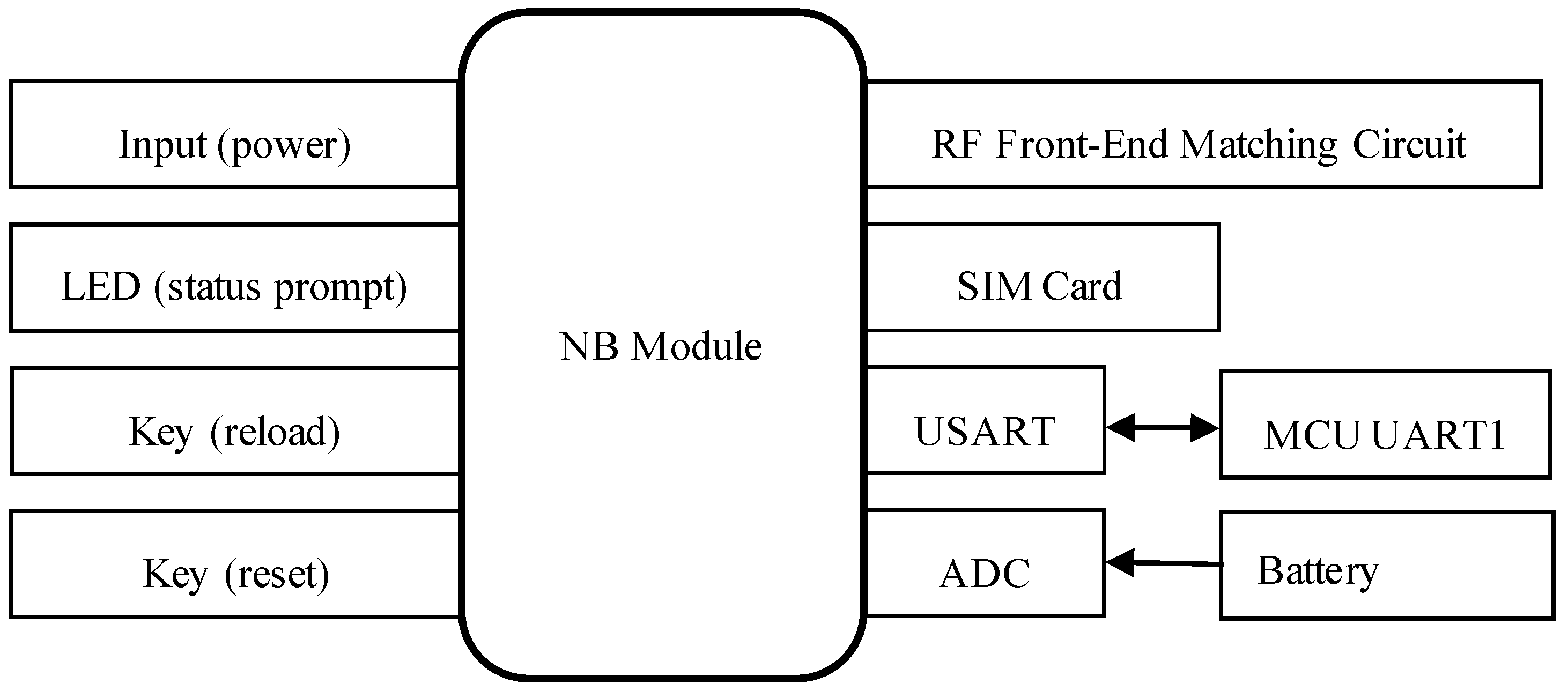

The peripheral hardware of the NB module includes a power supply unit, a status indicator LED, a reload button, a reset button, an antenna, and a SIM card. The baud rate for serial communication between the NB module and the main control MCU is set to 9600 bps. The schematic diagram of the NB module’s peripheral circuitry is presented in Figure 3. When designing the antenna interface, a π-type impedance matching network is required for proper antenna connection. In addition, the supply voltage should be kept as close as possible to the recommended 3.7 V to ensure optimal performance of the NB module.

Figure 3.

Design diagram of the peripheral circuit of the NB module.

STM32F407ZET6 Processor

The STM32F4 series, developed by STMicroelectronics, is a high-performance microcontroller family based on the ARM Cortex-M4 core, with a CPU clock frequency of up to 168 MHz. The series provides single-cycle DSP instructions and an integrated floating-point unit (FPU), which significantly enhance computational performance. The design requires high-speed serial communication, and the USART interface of the STM32F4 series supports data rates of up to 10.5 Mbit/s. Compared with the STM32F1 series, the STM32F4 serial interface communicates reliably with the camera module’s serial port, achieving a reception accuracy of up to 100%. This resolves the problem of incomplete image data caused by data loss at the lower serial transmission rates of the STM32F1 series [31,32]. Early testing revealed that when USART3 of the STM32F103ZET6 rapidly received hexadecimal data from the OV7725 image sensor, the combined error and loss rate reached as high as 80%.

The peripheral circuit of the STM32F407ZET6 main control unit is shown in Figure 4. The two serial ports, USART1 and USART3, communicate with the NB module and the OV7725 camera, respectively, at baud rates of 9600 bps and 115,200 bps. When a single capture command is triggered via the button, the processor transparently transmits the data to an IoT terminal supporting the CoAP protocol, with an LED indicating the transmission status.
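To make the serial-link budget concrete, the following sketch estimates how long one image spends on each hop. It is an illustration under stated assumptions only: the 10 KB JPEG size is a hypothetical value, 8N1 UART framing (10 bit-times per byte) is assumed, the camera link is assumed to carry raw JPEG bytes, the MCU-to-NB link is assumed to carry the hex-encoded frame as ASCII (two characters per byte), and the 250 kbps NB-IoT rate quoted earlier in this section is used as an optimistic upper bound that ignores protocol overhead.

```python
"""Back-of-envelope transfer times for one frame (illustrative assumptions only)."""


def uart_seconds(n_bytes: int, baud: int, bits_per_byte: int = 10) -> float:
    """Time to move n_bytes over a UART; 8N1 framing = 1 start + 8 data + 1 stop bit."""
    return n_bytes * bits_per_byte / baud


def radio_seconds(n_bytes: int, bit_rate_bps: float) -> float:
    """Idealized air time at a given bit rate, ignoring all protocol overhead."""
    return n_bytes * 8 / bit_rate_bps


JPEG_BYTES = 10 * 1024       # hypothetical compressed frame size
HEX_BYTES = 2 * JPEG_BYTES   # ASCII hex uses two characters per byte

if __name__ == "__main__":
    print(f"camera -> MCU (USART3, 115200 bps): {uart_seconds(JPEG_BYTES, 115_200):.2f} s")
    print(f"MCU -> NB module (USART1, 9600 bps): {uart_seconds(HEX_BYTES, 9_600):.2f} s")
    print(f"NB-IoT air time at 250 kbps (bound): {radio_seconds(HEX_BYTES, 250_000):.2f} s")
```

Under these assumptions the 9600 bps MCU-to-NB link dominates (about 21 s per frame, versus under 1 s for each of the other two hops), which is one reason an intermittent capture schedule, rather than continuous video, fits this hardware.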

Figure 4.

Design diagram of the peripheral circuit for the main control STM32F407ZET6.

OV7725 Camera Sensor

CMOS cameras are currently used extensively across diverse application scenarios, including products from manufacturers such as Hynix, Micron, and Samsung. The OV7725 camera module, developed by SmartView, supports continuous video output at resolutions up to 640 × 480 pixels at 60 frames per second, offers high sensitivity, and incorporates a standard SCCB interface. It provides multiple advanced features, including automatic edge enhancement, adaptive noise suppression, frame synchronization modes, automatic band-pass filtering, and automatic white balance, all of which can be configured as required [33,34]. In this design, the OV7725 camera is used for surveillance image acquisition and interfaces with the STM32F407 main control unit via the RS232 communication standard. A single-shot command is executed to capture an instantaneous image, which is obtained in JPEG format.

3.2.3. Design of Image Encoding

The system employs the OV7725 camera sensor to capture raw data in JPEG format. The communication protocol specifications for the OV7725 camera sensor are summarized in Table 2, with the communication baud rate configured at 115,200 bps.

Table 2.

OV7725 camera sensor communication protocol.

The system processor communicates with the OV7725 camera sensor over the serial link according to the specified protocol, sending commands in hexadecimal format and processing the received data. In hexadecimal, JPEG image data begin with the Start of Image (SOI) marker 0xFF 0xD8 and end with the End of Image (EOI) marker 0xFF 0xD9.
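A minimal sketch of how a receiver could locate frames in a serial byte stream using these two markers is shown below. It is not the STM32 firmware described here; the function names are hypothetical, the stream is assumed to carry raw JPEG bytes, and the scan is naive: it stops at the first EOI after each SOI, so a JPEG that embeds a thumbnail with its own EOI would be cut short.

```python
from typing import List

SOI = b"\xff\xd8"  # JPEG Start of Image marker
EOI = b"\xff\xd9"  # JPEG End of Image marker


def extract_jpeg_frames(stream: bytes) -> List[bytes]:
    """Return every complete SOI...EOI span in a raw byte stream, in order."""
    frames: List[bytes] = []
    pos = 0
    while True:
        start = stream.find(SOI, pos)
        if start < 0:
            break                      # no further frame start
        end = stream.find(EOI, start + len(SOI))
        if end < 0:
            break                      # frame still incomplete; wait for more bytes
        frames.append(stream[start:end + len(EOI)])
        pos = end + len(EOI)
    return frames


def to_hex(frame: bytes) -> str:
    """Uppercase hexadecimal text representation of a frame."""
    return frame.hex().upper()


if __name__ == "__main__":
    raw = b"\x00\x11" + b"\xff\xd8\x01\x02\xff\xd9" + b"\x76"
    print(to_hex(extract_jpeg_frames(raw)[0]))  # FFD80102FFD9
```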

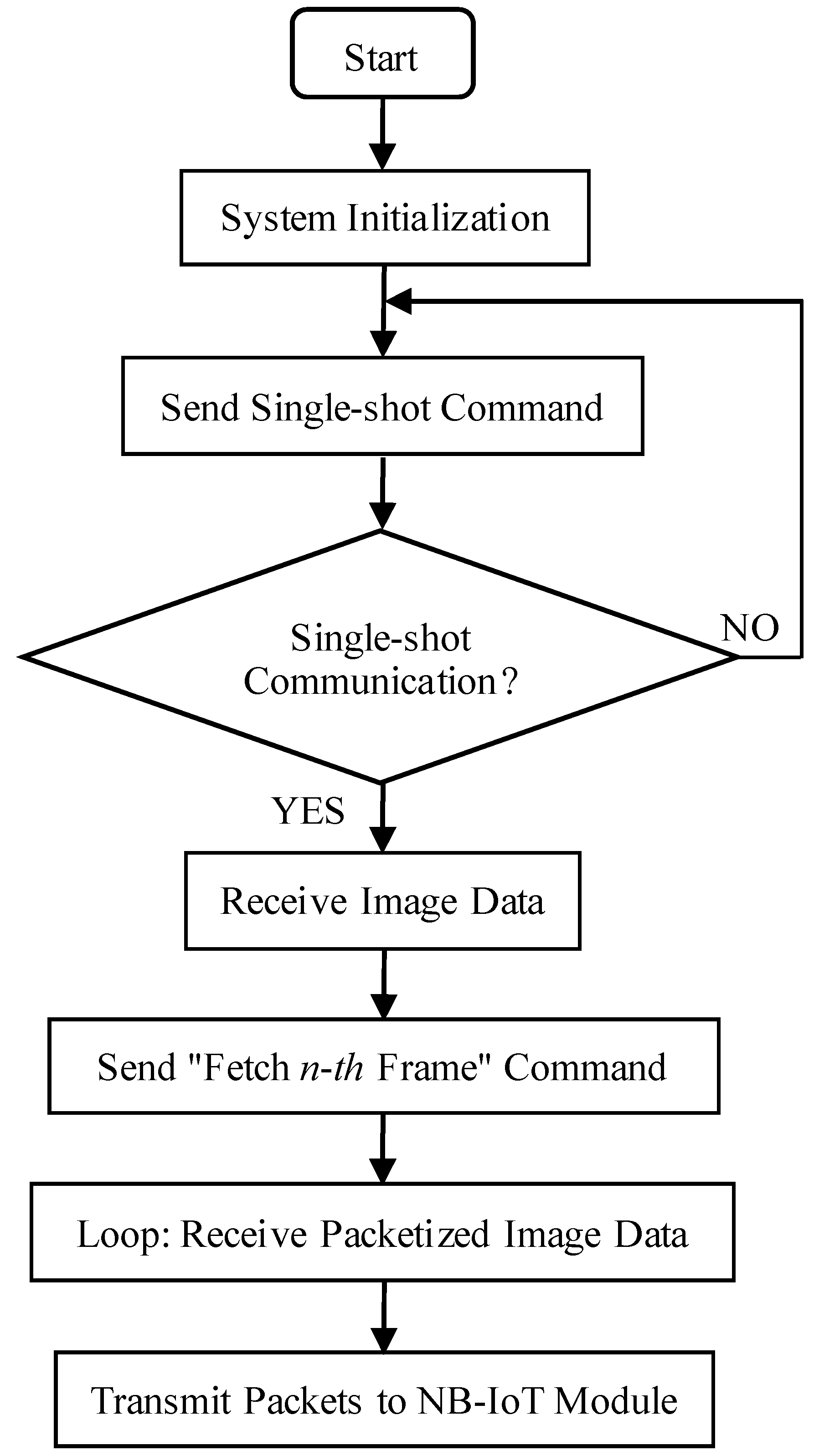

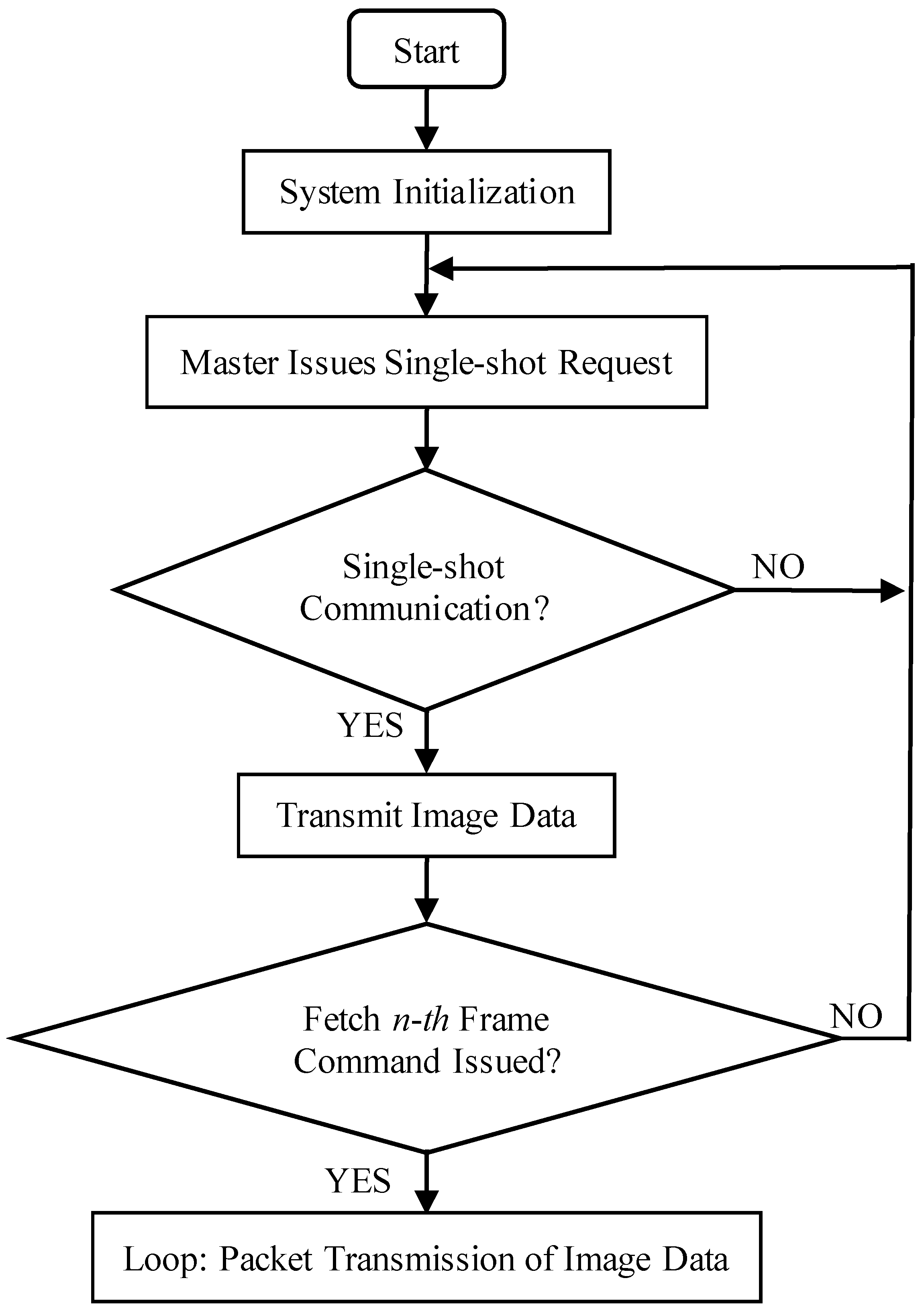

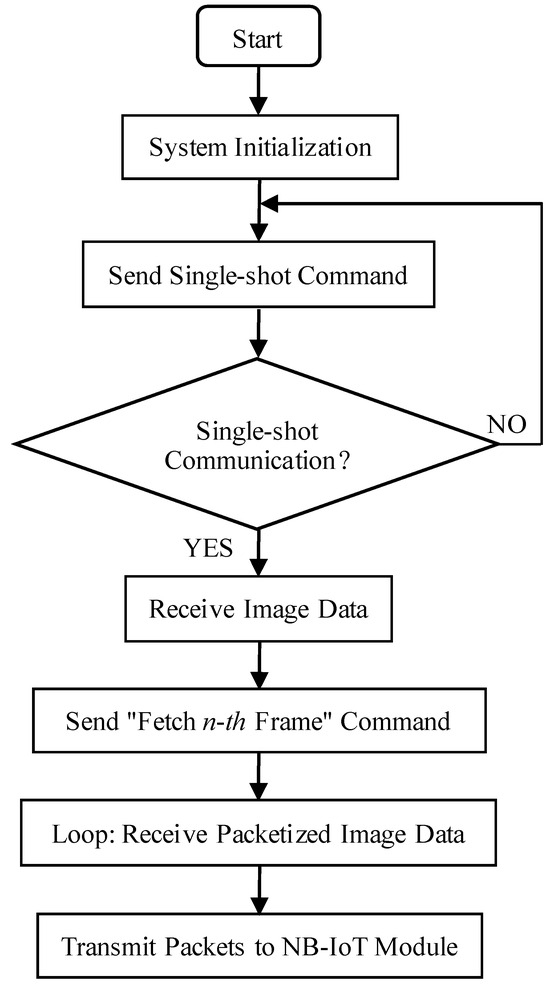

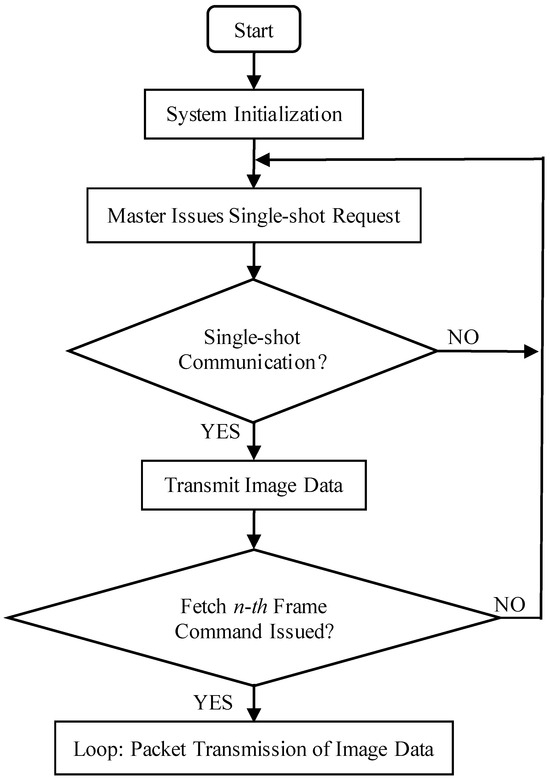

The communication process between the processor and the OV7725 camera sensor is illustrated in Figure 5, and the corresponding communication flowchart is presented in Figure 6. First, the hexadecimal single-frame capture command is transmitted via the SCCB interface (see Table 2 for details). Upon receiving this command, the OV7725 immediately transfers the image format data to the STM32F407 processor. Once the processor detects that the camera has completed the single-frame capture, it issues the command to retrieve the first frame of image data, as specified in Table 2, and then starts the data acquisition process.

Figure 5.

Master UART communication flowchart.

Figure 6.

OV7725 communication flowchart.

The acquisition process iterates in a loop while predefined conditions hold (e.g., a valid HREF level), sequentially reading the single-frame image data, where 0xn denotes the n-th frame. After the data are collected through these conditional checks and iterative reads, the processor transmits them directly to the NB module. The send buffer of the USART3 serial port must be sized appropriately (e.g., 1 KB) and cleared after each transmission cycle to prepare for subsequent transfers.
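The buffer discipline described above can be sketched as follows. The 1 KB chunk size comes from the example in the text; the `write` callback is a hypothetical stand-in for the UART driver, and the sketch is written in Python for readability rather than as MCU firmware.

```python
from typing import Callable, List


def chunk_hex_payload(hex_payload: str, buffer_size: int = 1024) -> List[str]:
    """Split a hex-encoded frame into pieces that fit the send buffer."""
    if buffer_size <= 0:
        raise ValueError("buffer_size must be positive")
    return [hex_payload[i:i + buffer_size] for i in range(0, len(hex_payload), buffer_size)]


def send_frame(hex_payload: str, write: Callable[[bytes], None], buffer_size: int = 1024) -> int:
    """Push a frame through a fixed-size buffer, clearing it after every cycle."""
    buffer = bytearray()
    sent = 0
    for chunk in chunk_hex_payload(hex_payload, buffer_size):
        buffer.extend(chunk.encode("ascii"))  # fill the send buffer
        write(bytes(buffer))                  # hand one cycle to the (hypothetical) driver
        sent += len(buffer)
        buffer.clear()                        # clear before the next cycle
    return sent
```

Clearing the buffer after each cycle, rather than reusing it with stale contents, is what prevents bytes from one cycle leaking into the next.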

3.3. Design of the System Service Layer

The design of the service application layer is implemented using the Spring Boot framework, integrated with Spring Cloud distributed microservice components. Service registration is performed through Eureka. Feign, in conjunction with Nginx, is employed to achieve a two-tier software load balancing mechanism. RabbitMQ serves as the message-oriented middleware. Mycat is utilized as the distributed MySQL middleware. WebSocket technology is applied to establish full-duplex communication with the IoT server. Upon receiving underlying image data, the system performs decoding operations, stores the processed results in a distributed MySQL cluster database, and exposes both RESTful and Web Service APIs. The frontend design adopts the Vue.js framework, deployed within a Node.js runtime environment. Data communication with backend RESTful and Web Service interfaces is implemented using the Axios library, encapsulated within Vue, to execute asynchronous AJAX requests. This architecture supports real-time data retrieval, enabling the visualization of monitoring information directly on the user interface. In cases of anomaly detection, the system issues timely alerts to users to ensure prompt responses.

3.3.1. Design of Image Decoding

Before images are transmitted from the perception layer, a verification header and a verification tail are appended to the data, ensuring that the enclosed data conform to the hexadecimal JPEG image format. MQTT (Message Queuing Telemetry Transport) is a lightweight, TCP-based publish/subscribe messaging protocol [35,36]. To receive the data that the device publishes to the broker, the service layer must first subscribe to the designated topic.

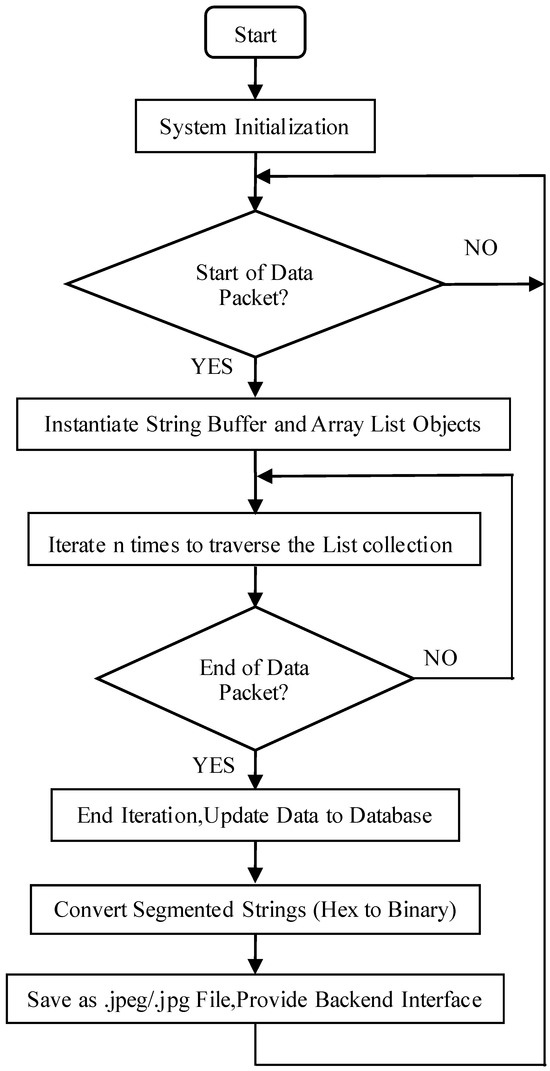

After successfully subscribing to the device topic, the system continuously receives real-time data from the perception layer and establishes full-duplex WebSocket communication with the Spring Boot backend. Upon receiving data, the backend first checks for the data header. If a header is detected, a ‘StringBuffer’ object and an ‘ArrayList’ collection object are instantiated. Each incoming data segment is then appended to the ‘StringBuffer’, so that after multiple iterations the ‘StringBuffer’ contains the complete image data in the required hexadecimal format.

When the image encoding is transmitted from the perception layer to the service layer, it arrives as a continuous stream of segments. Receiving it into a ‘String’ is problematic because Java ‘String’ objects are immutable, so every concatenation creates a new object. The service layer therefore uses a mutable ‘StringBuffer’ object to accumulate the variable-length data. However, improper state management of the ‘StringBuffer’ can leave residual data from previous transmissions, causing concatenation errors. To prevent this, the ‘StringBuffer’ must be cleared each time new image data begin to arrive.

Once a complete image data packet has been assembled, it is extracted and stored in a ‘List’ collection object. Furthermore, during the WebSocket connection between the frontend and backend, variables must be cleared promptly after each transmission cycle to prepare for subsequent data reception. Failure to do so may result in data overwriting, thereby preventing the correct retrieval of hexadecimal-encoded JPEG image data.
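The reassembly logic described above can be sketched in Python, with `io.StringIO` playing the role of Java's ‘StringBuffer’ and a list standing in for the ‘ArrayList’. The header and tail values `AA55` and `55AA` are hypothetical, since the text does not specify them. The sketch also assumes that the tail never straddles two segments and that the markers never occur by chance inside image data; a production parser would need stricter framing to guarantee both.

```python
import io
from typing import List

HEADER = "AA55"  # hypothetical verification header
TAIL = "55AA"    # hypothetical verification tail


class FrameAssembler:
    """Accumulates hex segments between HEADER and TAIL into complete images."""

    def __init__(self) -> None:
        self._buffer = io.StringIO()
        self._active = False
        self.images: List[str] = []

    def feed(self, segment: str) -> None:
        if segment.startswith(HEADER):
            # A new image begins: discard residue from any unfinished transfer.
            self._buffer = io.StringIO()
            self._active = True
            segment = segment[len(HEADER):]
        if not self._active:
            return  # ignore data that arrive before any header
        if segment.endswith(TAIL):
            self._buffer.write(segment[:-len(TAIL)])
            self.images.append(self._buffer.getvalue())  # complete packet -> list
            self._buffer = io.StringIO()                 # clear state for the next image
            self._active = False
        else:
            self._buffer.write(segment)
```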

After preprocessing, the data are mapped to the persistence layer via Spring Data JPA and stored in a MySQL database. Once the image data are stored, the hexadecimal representation is converted into binary and saved as a file in the project’s root directory with a .jpg or .jpeg extension. A backend interface then maps the stored files to static image resources. The detailed JPEG decoding procedure is illustrated in Figure 7.
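The final hex-to-binary step can be illustrated as follows. The output directory, the file naming, and the SOI/EOI sanity check are assumptions added for illustration, not details of the backend implementation, which is Java-based.

```python
import tempfile
from pathlib import Path

SOI, EOI = b"\xff\xd8", b"\xff\xd9"


def save_hex_jpeg(hex_image: str, out_dir: Path, name: str) -> Path:
    """Decode a hex-encoded JPEG and write it as <out_dir>/<name>.jpg."""
    data = bytes.fromhex(hex_image)  # hex text -> raw bytes
    if not (data.startswith(SOI) and data.endswith(EOI)):
        raise ValueError("payload is not a complete JPEG (missing SOI/EOI)")
    out_dir.mkdir(parents=True, exist_ok=True)
    path = out_dir / f"{name}.jpg"
    path.write_bytes(data)
    return path


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        saved = save_hex_jpeg("FFD80102FFD9", Path(tmp), "frame_0001")
        print(saved.name, saved.stat().st_size)  # frame_0001.jpg 6
```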

Figure 7.

JPEG image data decoding workflow.

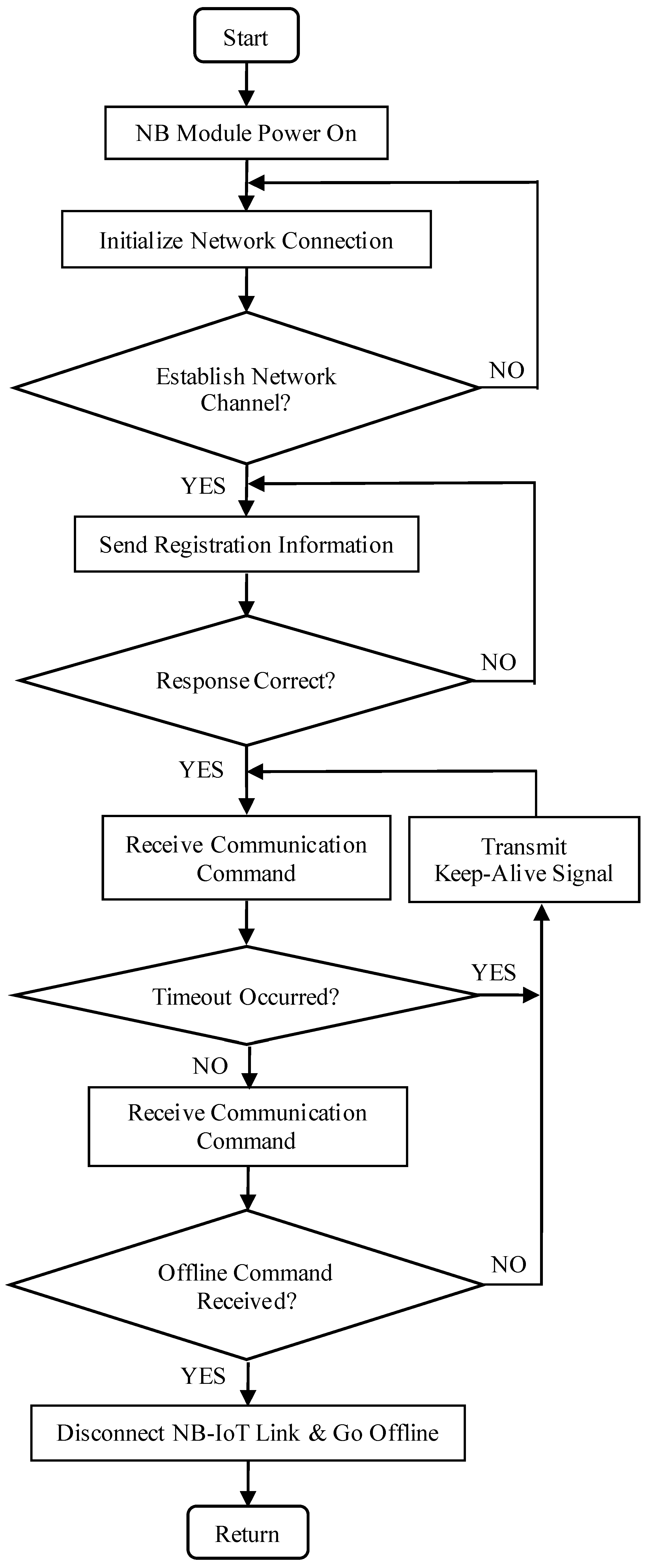

3.3.2. NB-IoT Communication Process

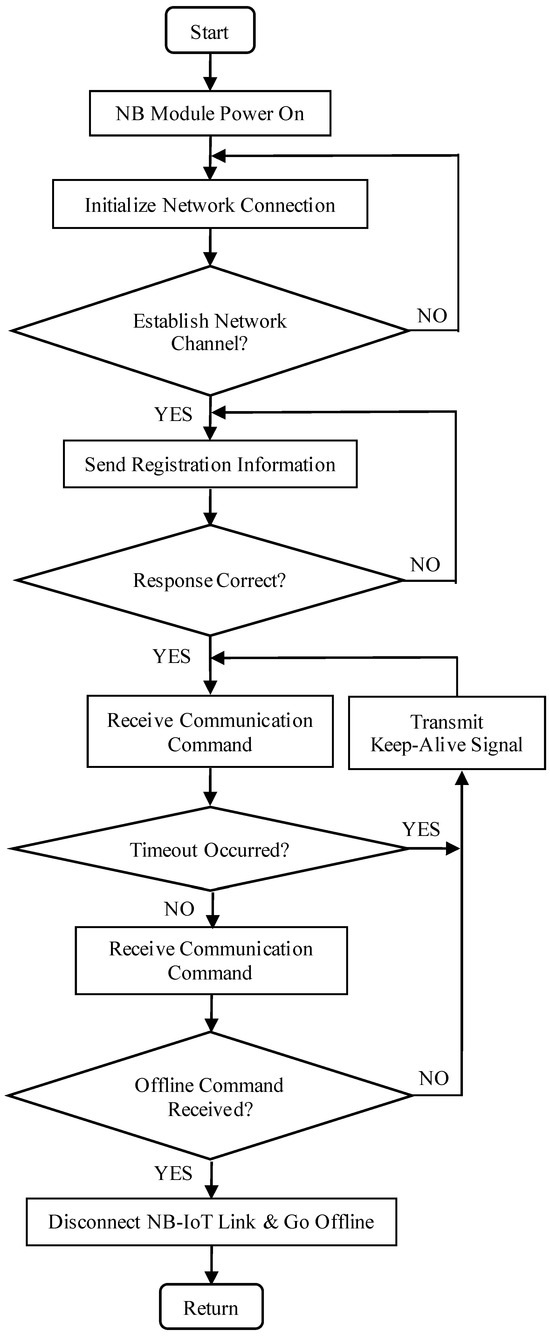

The NB module connects to the internet via a SIM card and uses the UDP/IP protocol stack, encapsulating data with the CoAP application-layer protocol for transparent transmission. The encapsulated data are relayed to nearby base stations, enabling communication with remote IoT servers, which then forward the image data packets to the service layer for further processing. The communication workflow for NB-IoT network registration, which includes network initialization, communication establishment, command reception, and other steps, is illustrated in Figure 8.

Figure 8.

NB-IoT-to-server communication protocol flow.

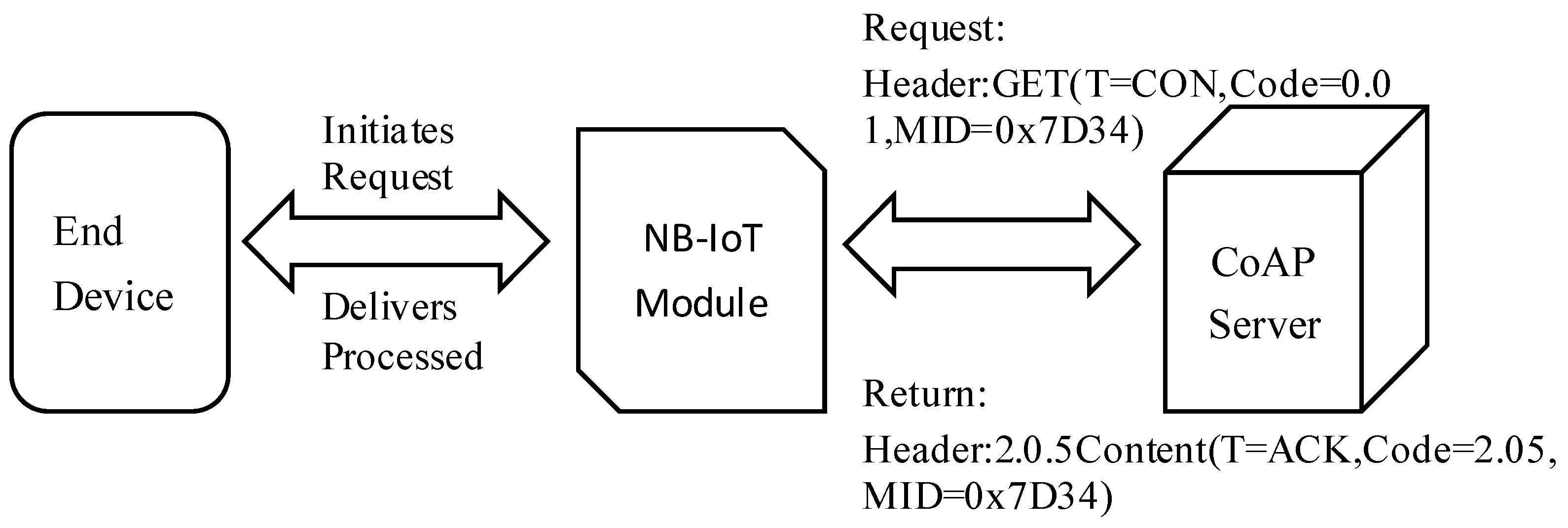

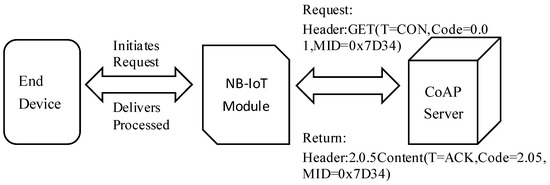

3.3.3. Transparent Transmission Principle Based on CoAP Protocol

NB-IoT applications primarily employ the lightweight CoAP protocol for transparent data transmission [37]. Terminal devices encapsulate service data in CoAP format and pass them to the NB-IoT module. The module issues a GET request with an optimized set of parameters embedded in the header. Upon receiving the GET request, the network-side service performs resource queries or device management operations and returns a corresponding response to the NB-IoT module. The response carries the relevant service data, which the NB-IoT module parses before transparently delivering the payload to the terminal device. This architecture exploits the short-packet characteristics of CoAP over UDP together with the NB-IoT power-saving modes (PSM/eDRX), substantially reducing terminal power consumption and air-interface signaling overhead. The transparent transmission flow within the NB-IoT module is illustrated in Figure 9.
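For reference, the sketch below builds a CoAP message with the fixed 4-byte header defined in RFC 7252 (2-bit version, 2-bit type, 4-bit token length, 8-bit code, 16-bit message ID), followed by the token, Uri-Path options, and an optional payload after the 0xFF marker. In the described system the NB-IoT module constructs these messages internally; the resource path `img` in the example is hypothetical, and only the option encoding needed for Uri-Path is implemented.

```python
import struct

COAP_VERSION = 1
TYPE_CON, TYPE_NON = 0, 1  # confirmable / non-confirmable
CODE_GET = 0x01            # code 0.01
OPT_URI_PATH = 11          # option number for Uri-Path
PAYLOAD_MARKER = 0xFF


def _nibble(value: int):
    """Encode an option delta or length as (4-bit nibble, extended bytes)."""
    if value < 13:
        return value, b""
    if value < 269:
        return 13, bytes([value - 13])
    return 14, struct.pack("!H", value - 269)


def encode_coap(code: int, message_id: int, token: bytes = b"", uri_path=(),
                payload: bytes = b"", msg_type: int = TYPE_CON) -> bytes:
    if len(token) > 8:
        raise ValueError("token must be 0-8 bytes")
    first = (COAP_VERSION << 6) | (msg_type << 4) | len(token)
    out = bytearray(struct.pack("!BBH", first, code, message_id))
    out += token
    previous = 0
    for segment in uri_path:  # one Uri-Path option per path segment
        value = segment.encode("utf-8")
        delta_nib, delta_ext = _nibble(OPT_URI_PATH - previous)
        len_nib, len_ext = _nibble(len(value))
        out.append((delta_nib << 4) | len_nib)
        out += delta_ext + len_ext + value
        previous = OPT_URI_PATH
    if payload:
        out.append(PAYLOAD_MARKER)
        out += payload
    return bytes(out)


if __name__ == "__main__":
    print(encode_coap(CODE_GET, 0x1234, token=b"\x01", uri_path=("img",)).hex())
    # 4101123401b3696d67
```

The whole GET request above is 9 bytes, which illustrates the short-packet property that makes CoAP over UDP attractive on NB-IoT.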

Figure 9.

CoAP-based data passthrough mechanism diagram.

3.4. Design of the System Network Application Layer

3.4.1. Database Construction

Mainstream relational databases include Oracle, MySQL, SQL Server, and SQLite. This study adopts a distributed MySQL deployment. A master-replica cluster is constructed with MySQL middleware to enable read-write separation [38]. The database is vertically partitioned according to business function modules, and data are horizontally sharded using the modulo (Mod) algorithm. Routing rules and cluster strategies are specified in the Mycat middleware configuration (conf) files.
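The routing behavior implied by modulo sharding combined with read-write separation can be sketched as follows. Mycat's actual configuration is declarative rather than code; the node names and host addresses here are hypothetical, and choosing among replicas with the same modulo is a simplification.

```python
from dataclasses import dataclass
from typing import Sequence, Tuple


@dataclass(frozen=True)
class DataNode:
    """One shard: a write master plus zero or more read replicas (hypothetical hosts)."""
    name: str
    master: str
    replicas: Tuple[str, ...] = ()


NODES = (
    DataNode("dn0", "mysql-m0:3306", ("mysql-s0a:3306",)),
    DataNode("dn1", "mysql-m1:3306", ("mysql-s1a:3306", "mysql-s1b:3306")),
    DataNode("dn2", "mysql-m2:3306", ("mysql-s2a:3306",)),
)


def route(image_id: int, is_write: bool, nodes: Sequence[DataNode] = NODES) -> str:
    """Pick the host for a statement touching the row with this sharding key."""
    node = nodes[image_id % len(nodes)]          # horizontal sharding by modulo
    if is_write or not node.replicas:
        return node.master                       # writes always go to the master
    return node.replicas[image_id % len(node.replicas)]  # reads go to a replica
```

A practical consequence of modulo sharding is that changing the number of shards remaps most keys, so the shard count is best fixed in advance.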

The primary dataset in the proposed design comprises image data. As the image files are stored in hexadecimal JPEG format, the LONGBLOB data type is employed to accommodate this type of content, with the character encoding uniformly set to utf8mb4. Given that the backend development environment is Java-based, the persistence layer framework leverages the Spring ecosystem, specifically the Spring Data JPA framework. This framework automates the generation of Data Definition Language (DDL) table creation statements and facilitates interaction with the MySQL database through the JDBC driver.

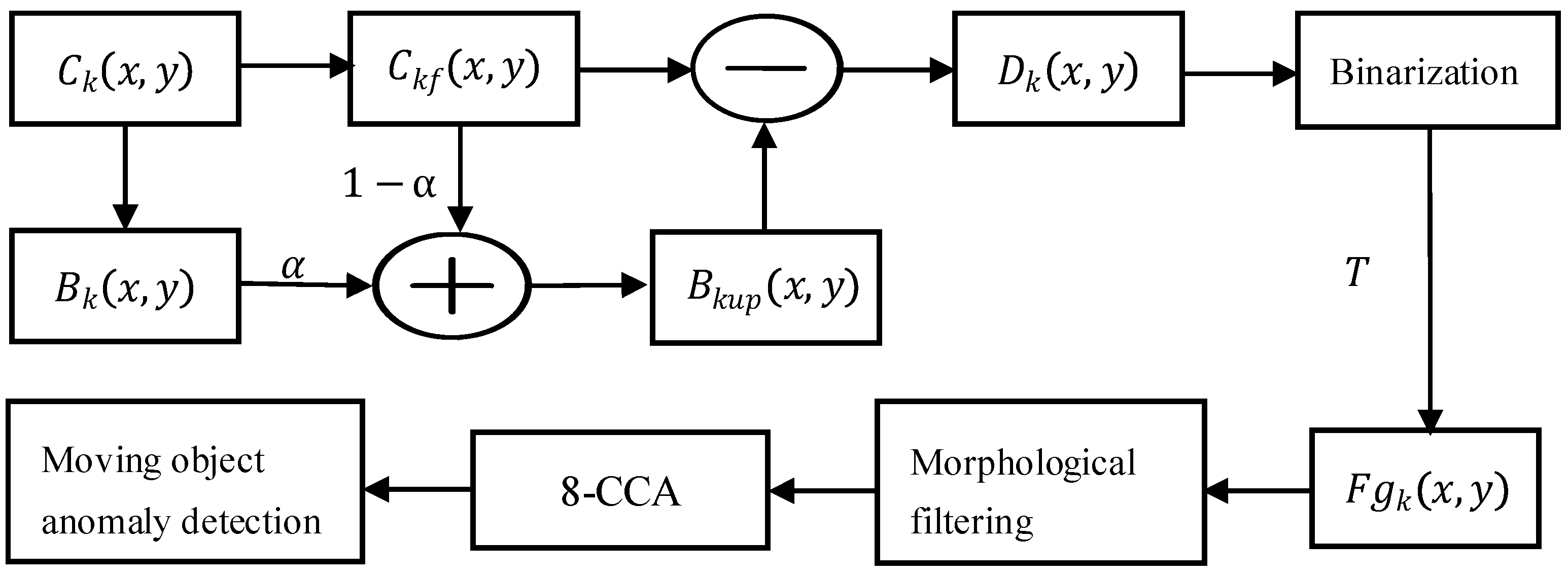

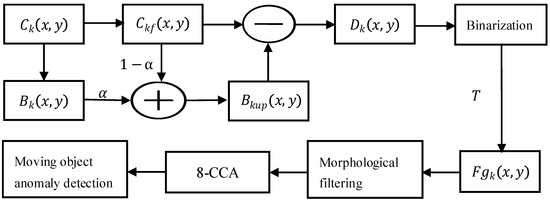

3.4.2. Image Processing Algorithms Design

For video content analysis in wide-area surveillance scenarios with minimal background variation, an optimized background subtraction method is often sufficient for effective implementation. This technique fundamentally operates by computing the difference between the current frame and a reference background frame to identify moving objects and associated anomalies [39,40]. The primary implementation challenge lies in constructing an accurate background model, as its precision directly influences the performance of moving object detection. To ensure robust operation, the background model must adapt dynamically to real-world environmental changes through continuous updates, thereby enhancing detection accuracy [41,42,43]. To meet this requirement, our design integrates background subtraction with adaptive background updating for reliable moving object detection. The overall detection workflow is illustrated in Figure 10.

Figure 10.

Processing pipeline of optimized background subtraction.

Sensor quantization artifacts cause non-uniform grayscale distributions in the background imagery, which appear as both granular and impulse noise. To mitigate these effects, Gaussian smoothing with the canonical 1/16 kernel coefficients is applied before the subsequent processing stages. This convolution-based filter computes a pixel-wise weighted average by sliding the kernel across the image plane, as follows [44]:

$$g_k(x,y)=\sum_{i=-1}^{1}\sum_{j=-1}^{1} w(i,j)\,f_k(x+i,\,y+j),\qquad w=\frac{1}{16}\begin{bmatrix}1&2&1\\2&4&2\\1&2&1\end{bmatrix} \tag{1}$$

Per Equation (1), and , respectively, signify the unfiltered and Gaussian-filtered pixel values at coordinates in frame k. The convolution kernel assigns weights per spatial position (e.g., center weight . Boundary handling employs pixel replication to address incomplete neighborhoods.
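The Gaussian smoothing step can be sketched in pure Python to make the weighting and boundary handling explicit. This is a minimal sketch, assuming the canonical 1/16 kernel has the usual coefficients 1, 2, 1 / 2, 4, 2 / 1, 2, 1 and that frames are grayscale images given as lists of rows; the function name is illustrative, and a deployed system would normally call an optimized library routine instead of explicit loops.

```python
# Canonical 3x3 Gaussian kernel; the weights sum to 16, hence the 1/16 factor.
KERNEL = [[1, 2, 1],
          [2, 4, 2],
          [1, 2, 1]]


def gaussian_smooth(frame):
    """Apply the 3x3 Gaussian kernel to a grayscale frame (list of rows).

    Neighbours outside the image are replaced by the nearest edge pixel
    (pixel replication), matching the boundary handling described above.
    """
    height, width = len(frame), len(frame[0])
    smoothed = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            acc = 0
            for dy in (-1, 0, 1):
                for dx in (-1, 0, 1):
                    yy = min(max(y + dy, 0), height - 1)  # clamp row index
                    xx = min(max(x + dx, 0), width - 1)   # clamp column index
                    acc += KERNEL[dy + 1][dx + 1] * frame[yy][xx]
            smoothed[y][x] = acc / 16
    return smoothed


impulse = [[0, 0, 0], [0, 160, 0], [0, 0, 0]]
print(gaussian_smooth(impulse)[1][1])  # 40.0: the centre keeps 4/16 of the impulse
```

The single-pixel impulse shows why this filter suppresses impulse noise: an isolated spike is spread over its neighbourhood and its peak drops to a quarter of its original value.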

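An adaptive background update strategy refines the background estimate $B_k(x, y)$ using the filtered current frame $g_k(x, y)$, as defined in Equation (2):

$$B_k(x, y) = \alpha\, B_{k-1}(x, y) + (1 - \alpha)\, g_k(x, y) \tag{2}$$

where $\alpha \in [0, 1]$ is the learning-rate parameter. Each updated background pixel is thus a weighted average of its historical value and the current observation. In the implementation, α = 0.9, so each update retains 90% of the previous background and absorbs 10% of the current frame.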
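The adaptive update is a per-pixel running average. The sketch below assumes it takes the form B_k = α·B_{k−1} + (1 − α)·g_k, in which α weights the historical background, with α = 0.9 as reported; the function name is hypothetical.

```python
ALPHA = 0.9  # reported value; assumed here to weight the historical background


def update_background(background, frame, alpha=ALPHA):
    """Running-average background update applied pixel by pixel
    (assumed form: B_k = alpha * B_{k-1} + (1 - alpha) * g_k)."""
    return [
        [alpha * b + (1 - alpha) * f for b, f in zip(b_row, f_row)]
        for b_row, f_row in zip(background, frame)
    ]


# A lasting scene change (e.g. a lighting step from 100 to 200) is absorbed gradually.
background = [[100.0]]
for k in range(1, 4):
    background = update_background(background, [[200.0]])
    print(k, round(background[0][0], 2))  # 110.0, then 119.0, then 127.1
```

Under this assumption a persistent change is blended into the background over many frames, while a brief passer-by barely alters it and therefore still stands out in the difference map.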

Next, the absolute difference map $D_k(x, y)$ between the filtered current frame $g_k(x, y)$ and the updated background $B_k(x, y)$ is computed according to Equation (3), and the result is then binarized:

$$D_k(x, y) = \left| g_k(x, y) - B_k(x, y) \right| \tag{3}$$

The foreground region (representing moving objects) is then segmented by applying the threshold $T$ to the absolute difference map $D_k(x, y)$, as defined in Equation (4), where T = 59.87 was determined through iterative optimization:

$$M_k(x, y) = \begin{cases} 1, & D_k(x, y) > T \\ 0, & \text{otherwise} \end{cases} \tag{4}$$

where $M_k(x, y) = 1$ marks a foreground pixel.
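Differencing and thresholding together turn each filtered frame into a binary foreground mask. A minimal sketch, assuming the difference map is the per-pixel absolute difference |g_k − B_k| and that a pixel is foreground when that difference strictly exceeds T = 59.87; the function names and sample values are hypothetical.

```python
THRESHOLD_T = 59.87  # threshold value reported in the text


def difference_map(frame, background):
    """Absolute per-pixel difference between filtered frame and background."""
    return [[abs(f - b) for f, b in zip(f_row, b_row)]
            for f_row, b_row in zip(frame, background)]


def foreground_mask(diff, threshold=THRESHOLD_T):
    """Binary mask: 1 where the difference exceeds the threshold, else 0."""
    return [[1 if d > threshold else 0 for d in row] for row in diff]


frame = [[120, 125, 250], [118, 240, 122]]
background = [[119, 121, 124], [120, 123, 121]]
mask = foreground_mask(difference_map(frame, background))
print(mask)  # [[0, 0, 1], [0, 1, 0]]
```

Small fluctuations (differences of 1 to 4 grey levels here) fall below T, so only the two pixels with large deviations survive as foreground.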

The segmented surveillance image is thus a binary image with pixel values {0, 1}, in which contour pixels take the value 1. Based on the general principle that the contours of moving objects do not occupy identical positions in consecutive frames, further processing is applied: (1) contour pixels retained from previous frames are suppressed (set to 0) when they are no longer detected, and (2) newly emerged or persistent contours are preserved (set to 1). This procedure removes static background artifacts while retaining the true contours of moving objects. The system then checks whether each moving object's trajectory persists toward the image boundaries; moving objects whose trajectories do not persist in this way trigger automated alerts, and the corresponding frames are captured as evidence.

4. System Testing and Analysis

4.1. Image Transmission Success Rate Testing and Analysis

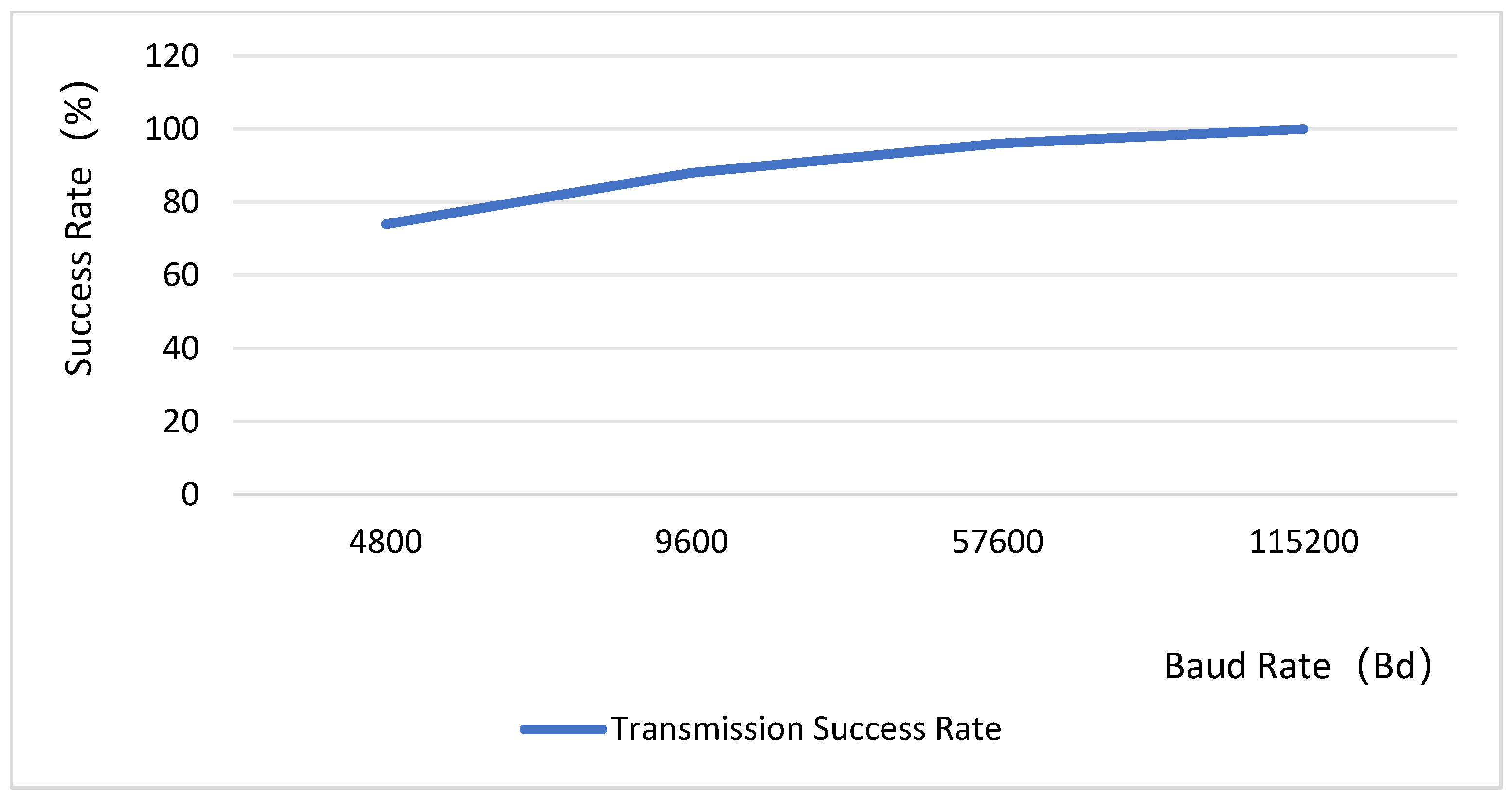

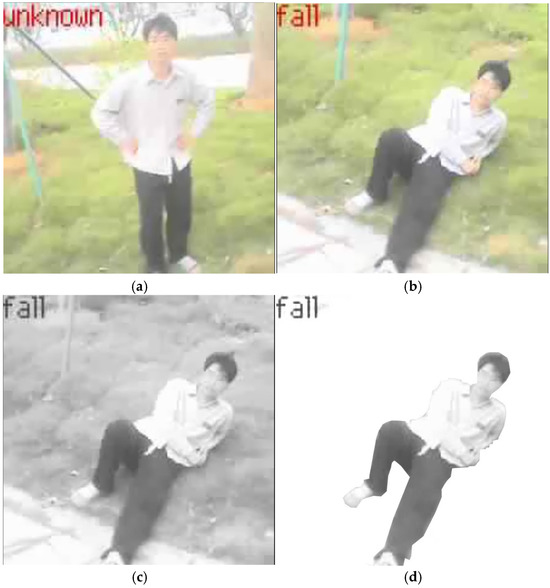

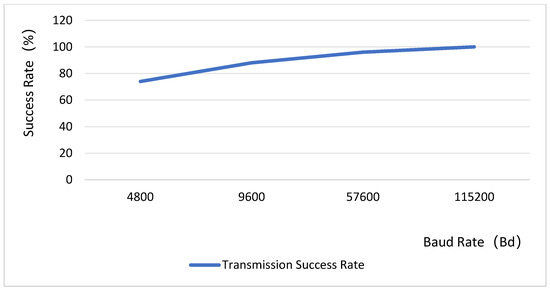

In this design, the baud rate of the low-level image link between the camera and the main controller is set to 115,200 bps. Because the baud rate is inversely related to the transmission distance, a higher baud rate typically shortens the effective communication range. The system test images are shown in Figure 11, and the experimental data are presented in Figure 12. Given the very short distance between the camera and the main control chip, the transmission success rate reaches 100% at 115,200 bps.

The test data reveal a rough positive correlation between the image transmission success rate and the baud rate, which indicates a gap between the general theoretical model (Shannon’s theorem) and this specific engineering setting. The link between the camera and the main controller is a highly favorable channel with high bandwidth and a high signal-to-noise ratio. Even at a high baud rate, the actual data rate remains far below the channel capacity, so a higher baud rate does not raise the bit error rate. Instead, the dominant source of errors becomes clock desynchronization. As the baud rate increases, the total time needed to transmit one image frame decreases, while the absolute clock deviation remains constant. The higher the baud rate, therefore, the smaller the accumulated clock deviation relative to the entire transmission period, and the less likely the sampling point is to drift beyond the edge of a bit. Consequently, in this board-level interconnection, increasing the baud rate is one of the most effective ways to reduce transmission errors and raise the success rate. The test results show that the system achieves a high image transmission success rate, which is mainly attributed to a baud rate configuration that minimizes clock-synchronization errors. This characteristic also ensures that basic data transmission remains largely unaffected by variations in illumination.
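The clock-drift argument above rests on how bit period and frame transfer time scale with baud rate. A quick calculation, assuming the camera-to-controller link is an asynchronous serial (UART-style) interface with 8N1 framing (10 line bits per byte) and a hypothetical 20 KB JPEG (the actual image size is not stated here), makes the scaling concrete: going from 9600 to 115,200 bps shortens the bit period from about 104 µs to about 8.7 µs and the image transfer from roughly 20.8 s to about 1.7 s.

```python
def uart_transfer_time(num_bytes, baud, bits_per_char=10):
    """Seconds needed to send num_bytes over a UART-style link.

    bits_per_char=10 assumes 8N1 framing: 1 start bit, 8 data bits, 1 stop bit.
    """
    return num_bytes * bits_per_char / baud


IMAGE_BYTES = 20_000  # hypothetical JPEG size, for illustration only
for baud in (9_600, 57_600, 115_200):
    bit_time_us = 1e6 / baud  # duration of one bit in microseconds
    print(f"{baud:>7} bps: bit time {bit_time_us:6.2f} us, "
          f"image time {uart_transfer_time(IMAGE_BYTES, baud):6.3f} s")
```

A shorter total transfer also narrows the window in which a constant absolute clock offset can accumulate, which is the effect the measurements are attributed to.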

Figure 11.

System experiment images. (a) Normal walking image on site. (b) Abnormal walking image on site. (c) Abnormal walking grayscale image. (d) Abnormal walking grayscale image with background removed.

Figure 12.

System image transmission success rate test.

4.2. Server Load Balancing Testing and Analysis

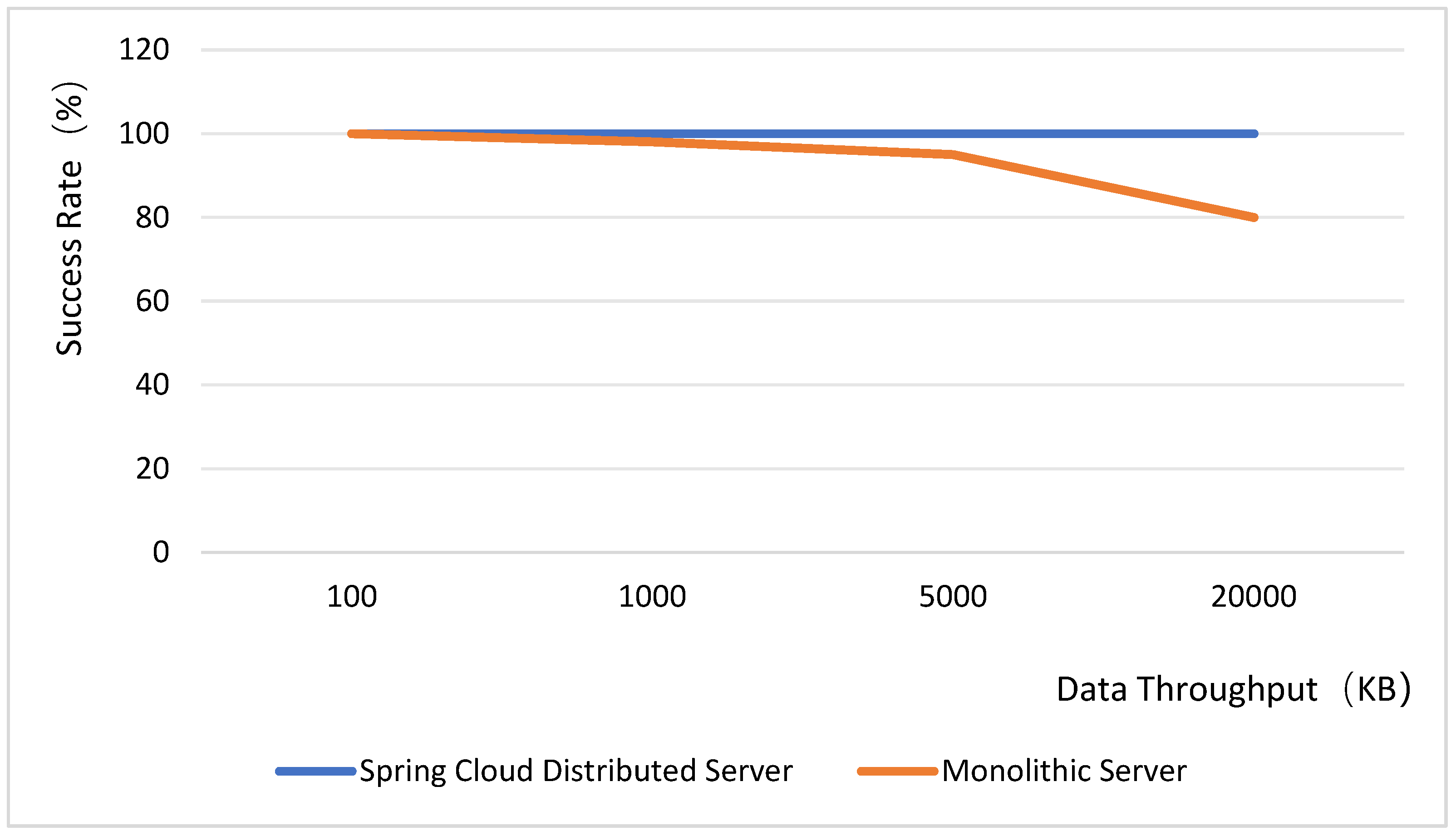

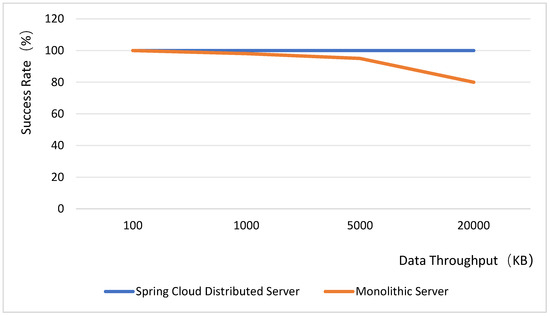

The backend uses the Spring Boot framework for the web server, integrates Spring Cloud microservices, and employs RabbitMQ as the message queue. Because images are transmitted concurrently from multiple sources, the server may face surges in request traffic that could cause it to crash. To mitigate this, Feign, the declarative HTTP client integrated in Spring Cloud, is adopted together with its client-side load balancing. For the database architecture, MyCat is used as database middleware, enabling database sharding and read–write splitting through its configuration files. Concurrency tests based on JUC (java.util.concurrent) are conducted to compare the high-concurrency resilience and load performance of the Spring Cloud database services with those of general service loads. The test results are presented in Figure 13. Only the backend framework is tested here; thread synchronization and security aspects are not considered. With pessimistic and optimistic locking mechanisms incorporated into the tests, the success rate of the Spring Cloud distributed microservices under high-concurrency request volumes remains at 100% in both scenarios. These tests indicate that the Spring Cloud microservice architecture remains stable under high concurrency. Combined with load balancing and database sharding, it effectively handles the load from simultaneous image transmission at multiple locations, which is crucial for the system’s stability across diverse operational scenarios.
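The two locking strategies mentioned above can be illustrated with a small concurrency test. This sketch is written in Python rather than Java/JUC, and its classes are hypothetical stand-ins: a pessimistic counter holds a lock for the whole read-modify-write, while an optimistic record uses a version check with retry, mimicking an `UPDATE ... WHERE version = ?` statement. The meaningful check is that no update is lost, i.e., the final value equals the number of requests.

```python
import threading
from concurrent.futures import ThreadPoolExecutor


class PessimisticCounter:
    """Holds a lock for the whole read-modify-write (pessimistic locking)."""

    def __init__(self):
        self.value = 0
        self._lock = threading.Lock()

    def increment(self):
        with self._lock:
            self.value += 1
        return True


class VersionedRecord:
    """Hypothetical row with a version column (optimistic locking).

    The internal guard stands in for the database's atomic row update.
    """

    def __init__(self):
        self.value = 0
        self.version = 0
        self._guard = threading.Lock()

    def read(self):
        with self._guard:
            return self.value, self.version

    def compare_and_set(self, expected_version, new_value):
        with self._guard:
            if self.version != expected_version:
                return False  # another writer updated the row first
            self.value = new_value
            self.version += 1
            return True

    def increment(self):
        while True:  # retry until no concurrent update intervenes
            value, version = self.read()
            if self.compare_and_set(version, value + 1):
                return True


def run_load_test(task, requests=1000, workers=50):
    """Fire `requests` concurrent calls and return the fraction that succeeded."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = list(pool.map(lambda _: task(), range(requests)))
    return sum(results) / requests


pessimistic, optimistic = PessimisticCounter(), VersionedRecord()
pess_rate = run_load_test(pessimistic.increment)
opt_rate = run_load_test(optimistic.increment)
print(pess_rate, pessimistic.value)  # 1.0 1000
print(opt_rate, optimistic.value)    # 1.0 1000
```

Pessimistic locking serializes writers up front; optimistic locking lets them proceed and pays with retries only when conflicts actually occur, which tends to suit workloads where contention on the same row is rare.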

Figure 13.

Server concurrent load capacity test.

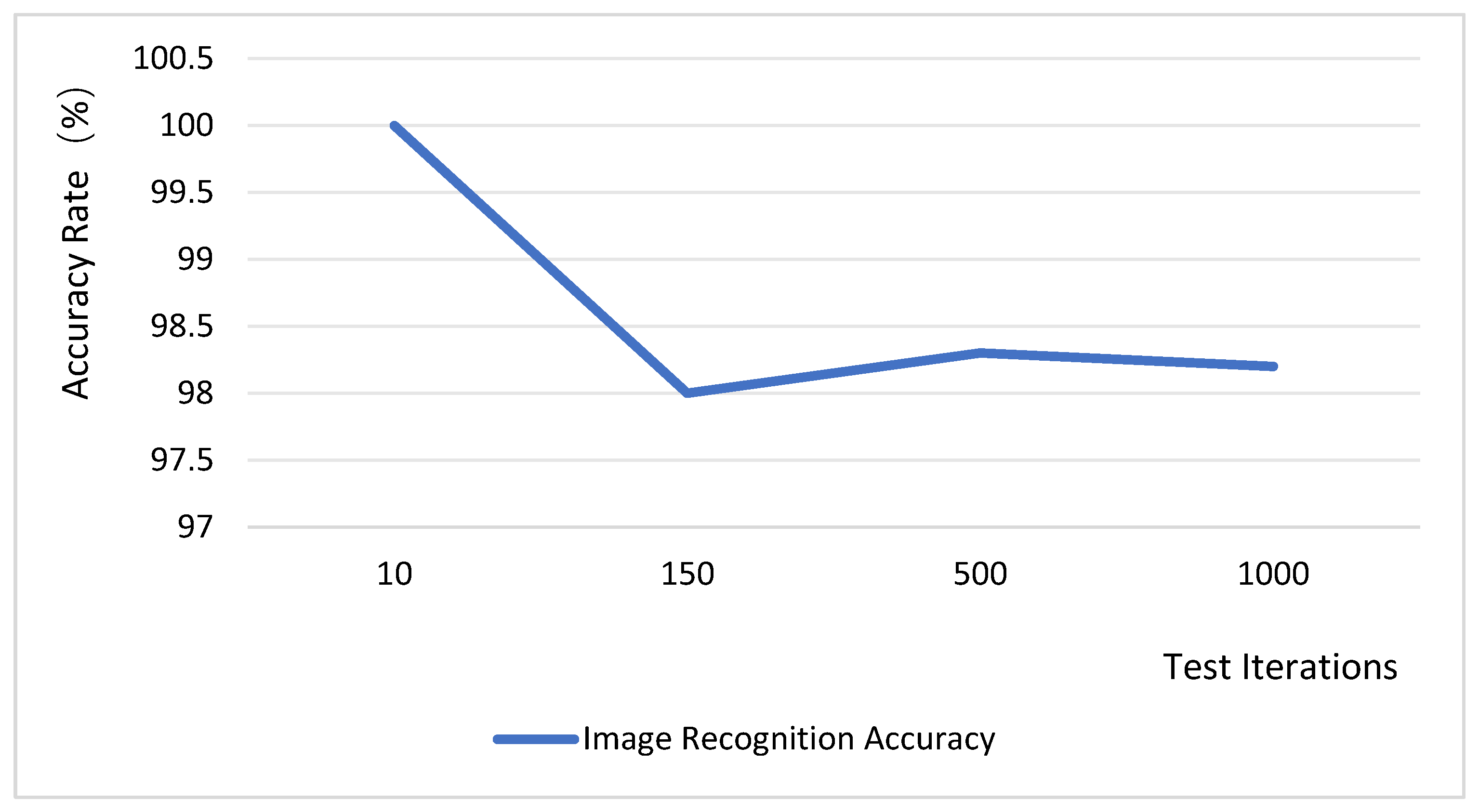

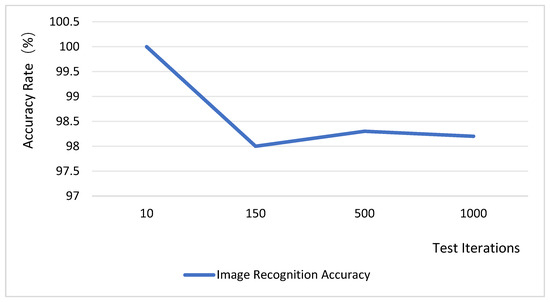

4.3. Testing and Analysis of Accuracy in Identifying Anomalous Images

The system performs threshold segmentation on the decoded JPEG image data acquired from the underlying layer, setting pixel values above the threshold to 255 (pure white) and those below it to 0 (pure black). Image anomaly detection uses the abnormal-image threshold as the classification criterion, against which inter-frame difference metrics are compared. The data processing success rate was evaluated over 1000 trials, with the results presented in Figure 14. According to these results, the image recognition accuracy remains consistently above 98%, indicating that the system design is highly reliable. Although the binarization method employed by the system is simple, it shows some adaptability to illumination changes, albeit with potential difficulties under complex lighting conditions. Future work will explore a dynamic threshold adjustment mechanism or complementary multi-sensor data (e.g., from an illuminance sensor) to correct image acquisition, thereby improving the system’s overall adaptability to varying illumination.
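The classification step can be sketched as binarization to 0/255, an inter-frame difference metric, and a comparison against an abnormal-image threshold, plus the accuracy measure used over the trials (with 1000 trials, accuracy above 98% means more than 980 correct classifications). The choice of metric (fraction of changed binary pixels) and both threshold values are hypothetical assumptions, since the text does not specify them.

```python
def binarize(gray, threshold):
    """Threshold segmentation: pixels above the threshold become 255, the rest 0."""
    return [[255 if p > threshold else 0 for p in row] for row in gray]


def changed_fraction(prev_bin, curr_bin):
    """Assumed inter-frame metric: share of pixels whose binary value changed."""
    total = changed = 0
    for prev_row, curr_row in zip(prev_bin, curr_bin):
        for a, b in zip(prev_row, curr_row):
            total += 1
            changed += a != b
    return changed / total


def is_anomalous(prev_gray, curr_gray, pixel_threshold, anomaly_threshold):
    """Flag a frame whose changed fraction exceeds the abnormal-image threshold."""
    metric = changed_fraction(binarize(prev_gray, pixel_threshold),
                              binarize(curr_gray, pixel_threshold))
    return metric > anomaly_threshold


def accuracy(predictions, labels):
    """Fraction of trials in which the predicted label matches the ground truth."""
    return sum(p == t for p, t in zip(predictions, labels)) / len(labels)


# Hypothetical 2x2 frames and thresholds, for illustration only.
previous = [[10, 10], [10, 10]]
current = [[10, 200], [10, 10]]
print(is_anomalous(previous, current, pixel_threshold=128, anomaly_threshold=0.1))  # True
```

A fixed pixel threshold is what makes this approach sensitive to lighting; the dynamic threshold adjustment proposed as future work would replace the constant `pixel_threshold` with a value estimated per frame.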

Figure 14.

Image recognition accuracy rate test.

In the current tests, the system shows considerable robustness to the illumination variations encountered, and its image processing approach proves reliable and efficient under stable lighting. It also adapts well to demanding operating conditions such as high concurrency, where the integrated software–hardware architecture keeps intensive multi-node operation stable. Applications involving extreme illumination fluctuations or requiring recognition of fine image detail will need further optimization, such as adaptive thresholds and refined node collaboration mechanisms.

5. Conclusions

The system architecture supports reliable and stable performance across the entire workflow: from data acquisition via communication between the underlying ARM processor and the OV7725 camera sensor, through image processing on the STM32F407 master control chip, to server-side operations including decoding of the image data transmitted to the user application server, MySQL database storage, and image format conversion. The final processing stages comprise image binarization, user notification of abnormal images, and comprehensive data visualization. Experimental tests show that the system’s transmission success rate reaches 100%, and that under high concurrent request volumes the server’s success rate also remains stable at 100%. Although the abnormal-image recognition success rate stays above 98%, it has not yet reached 100%, indicating that there is still room for improvement in our image processing algorithms.

By leveraging Narrowband Internet of Things (NB-IoT) technology, the design enables outdoor wide-area monitoring and early warning integrated with data visualization. This approach overcomes the inherent limitations of traditional wide-area solutions, namely excessive bandwidth consumption, high power requirements, and constrained device connectivity, thereby achieving extensive coverage, ultra-low power consumption, and massive device connectivity for networked monitoring and visualized data operations. For wide-area visual monitoring over NB-IoT, our future work will focus on transmitting more efficient image formats, improving the system’s robustness across lighting conditions and operational scenarios, and improving the accuracy and timeliness of abnormal image detection.

Author Contributions

Conceptualization, R.-C.H. and C.X.; methodology, G.Q.; software, G.Q.; validation, R.-C.H. and C.X.; formal analysis, R.-C.H.; resources, R.-C.H.; data curation, G.Q. and W.T.; writing—original draft preparation, W.T.; writing—review and editing, R.-C.H.; visualization, W.T. and C.X.; supervision, R.-C.H.; project administration, R.-C.H. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by Fujian Universities and Colleges Engineering Research Center of Modern Facility Agriculture (Grant No.: G2KF2204), and the Computer Basic Education Teaching Research Project (number: 2025-AFCEC-320).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The original contributions presented in the study are included in the article; further inquiries can be directed to the corresponding authors.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Jeon, H.; Kim, H.; Kim, D.; Kim, J. PASS-CCTV: Proactive Anomaly surveillance system for CCTV footage analysis in adverse environmental conditions. Expert Syst. Appl. 2024, 254, 124391.

- Balsa, J.; Fresnedo, Ó.; García-Naya, J.A.; Domínguez-Bolaño, T.; Castedo, L. JSCC-Cast: A Joint Source Channel Coding Video Encoding and Transmission System with Limited Digital Metadata. Sensors 2021, 21, 6208.

- Sato, T.; Katsuyama, Y.; Qi, X.; Wen, Z.; Tamesue, K.; Kameyama, W.; Nakamura, Y.; Katto, J.; Sato, T. Compensation of Communication Latency in Remote Monitoring Systems by Video Prediction. IEICE Trans. Commun. 2024, E107-B, 945–954.

- Enides, S. Unlock New Possibilities with IP-Based Video Surveillance Systems. Available online: https://qgis.org/ (accessed on 1 May 2022).

- Pradhan, G.; Prusty, M.R.; Negi, V.S.; Chinara, S. Advanced IoT-integrated parking systems with automated license plate recognition and payment management. Sci. Rep. 2025, 15, 2388.

- Chuanhui, Z.; Zihao, W.; Zhiming, Z.; Jichang, G. Research on pipeline intelligent welding based on combined line structured lights vision sensing: A partitioned time–frequency-space image processing algorithm. Int. J. Adv. Manuf. Technol. 2024, 134, 5463–5479.

- Iftikhar, K.; Anwar, S.; Khan, M.T.; Djawad, Y.A. An Intelligent Automatic Fault Detection Technique Incorporating Image Processing and Fuzzy Logic. J. Phys. Conf. Ser. 2019, 1244, 012035.

- Mudita; Deepali, G. A Comprehensive Study of Recommender Systems for the Internet of Things. J. Phys. Conf. Ser. 2021, 1969, 012045.

- Wang, L.; Yang, H. Design of smart city environment monitoring and optimisation system based on NB-IoT technology. Int. J. Inf. Commun. Technol. 2024, 25, 47–61.

- Siva Balan, R.V.; Gouri, M.S.; Senthilnathan, T.; Gondkar, S.R.; Gondar, R.R.; Loveline, Z.J.; Jothikumar, R. Development of smart energy monitoring using NB-IOT and cloud. Meas. Sens. 2023, 29, 100884.

- Sanz, E.; Trincado, J.; Martínez, J.; Payno, J.; Morante, O.; Almeida-Ñaulay, A.F.; Berlanga, A.; Molina, J.M.; Zubelzu, S.; Patricio, M.A. Cloud-based system for monitoring event-based hydrological processes based on dense sensor network and NB-IoT connectivity. Environ. Model. Softw. 2024, 182, 106186.

- Lin, J.; Xu, X. Design of a textile storage environment fire detection system based on ZigBee and NB-IoT. J. Phys. Conf. Ser. 2024, 2797, 012031.

- Yan, Q.; Guo, D.; Chen, N.; Lv, X.; Li, S. Design of NB-IoT based Portable pH Detector. J. Phys. Conf. Ser. 2025, 2975, 012021.

- Liu, T.; Qin, F. Study on Industrial Wastewater Pollution Monitoring Technology Based on NB-IoT Wireless Communication Technology. Int. J. Grid High Perform. Comput. 2025, 17, 1–20.

- Mai, Y.; Li, M.; Pei, Y.; Wu, H.; Su, Z. Research and Design of an Intelligent Street Lamp Control System Based on NB-IoT. Autom. Control. Comput. Sci. 2024, 58, 78–89.

- Tang, J.; Zhu, X.; Lin, L.; Dong, C.; Zhang, L. Monitoring routing status of UAV networks with NB-IoT. J. Supercomput. 2023, 79, 19064–19094.

- Ada, F.; Giacomo, P.; Alessandro, P. Quasi-real time remote video surveillance unit for lorawan-based image transmission. In Proceedings of the 2021 IEEE International Workshop on Metrology for Industry 4.0 & IoT (MetroInd4.0&IoT), Rome, Italy, 7–9 June 2021; pp. 588–593.

- Dhou, S.; Alnabulsi, A.; Al-Ali, A.R.; Arshi, M.; Darwish, F.; Almaazmi, S.; Alameeri, R. An IoT Machine Learning-Based Mobile Sensors Unit for Visually Impaired People. Sensors 2022, 22, 5202.

- Pronello, C.; Garzón Ruiz, X.R. Evaluating the performance of video-based automated passenger counting systems in real-world conditions: A comparative study. Sensors 2023, 23, 7719.

- Munteanu, D.; Moina, D.; Zamfir, C.G.; Petrea, S.M.; Cristea, D.S.; Munteanu, N. Sea Mine Detection Framework Using YOLO, SSD and EfficientDet Deep Learning Models. Sensors 2022, 22, 9536.

- Jiang, L.; Yan, J.; Xian, W.; Wei, X.; Liao, X. Efficient Access Control for Video Anomaly Detection Using ABE-Based User-Level Revocation with Ciphertext and Index Updates. Appl. Sci. 2025, 15, 5128.

- Cai, F.F.Z.; Jiang, C.Q.; Cheung, R.C.C.; Lam, A.H.F. An AIoT LoRaWAN Control System With Compression and Image Recovery Algorithm (CIRA) for Extreme Weather. IEEE Internet Things J. 2024, 11, 32701–32713.

- Magaia, N.; Gomes, P.; Silva, L.; Sousa, B.; Mavromoustakis, C.X.; Mastorakis, G. Development of Mobile IoT Solutions: Approaches, Architectures, and Methodologies. IEEE Internet Things J. 2021, 8, 16452–16472.

- Benbuk, A.A.; Kouzayha, N.; Costantine, J.; Dawy, Z. Charging and Wake-Up of IoT Devices using Harvested RF Energy with Near-Zero Power Consumption. IEEE Internet Things Mag. 2023, 6, 162–167.

- Wei, Z. The design of library database management system based on MySQL. Appl. Comput. Eng. 2024, 38, 41–50.

- Zhang, W. Greenhouse monitoring system integrating NB-IOT technology and a cloud service framework. Nonlinear Eng. 2024, 13, 20240053.

- Lin, J.-C. NB-IoT Physical Random Access Channels (NPRACHs) With Intercarrier Interference (ICI) Reduction. IEEE Internet Things J. 2023, 11, 5427–5438.

- Dumay, M.; Hassan, H.A.H.; Surbayrole, P.; Artis, T.; Barthel, D.; Pelov, A. Enabling Extremely Energy-Efficient End-to-End Secure Communications for Smart Metering Internet of Things Applications Using Static Context Header Compression. Appl. Sci. 2023, 13, 11921.

- Han, C.; Zhang, W.; Li, M.; Tian, Y. Design of Smart Home System Based on Nb-Iot. J. Phys. Conf. Ser. 2022, 2254, 012039.

- Ou, G.; Chen, Y.; Han, Y.; Sun, Y.; Zheng, S.; Ma, R. Design and Experiment of an Internet of Things-Based Wireless System for Farmland Soil Information Monitoring. Agriculture 2025, 15, 467.

- Wei, W.; Tang, M.; Liu, J. A Method for FM Signal Localization Based on Wavelet Transform and Swin Transformer. In Proceedings of the 2024 2nd International Conference on Artificial Intelligence and Automation Control (AIAC), Guangzhou, China, 20–22 December 2024; pp. 356–364.

- Yue, Z.; Linwei, T. Multi-Channel Data Acquisition System Based on FPGA and STM32. J. Northwestern Polytech. Univ. 2020, 38, 351–358.

- Zhang, Y.; Li, H.; Zuo, Y.; Li, J.; Meng, S.; Ren, Y. Design of intelligent vehicle for thermal engine power based on OPENMV. In Proceedings of the Third International Conference on Intelligent Mechanical and Human-Computer Interaction Technology (IHCIT 2024), Hangzhou, China, 5–7 July 2024; pp. 8–90.

- Enhao, T.; Tong, L.; Kaijun, F.; Junhao, F. Research on Video Image Transmission Method Based on FPGA. IOP Conf. Ser. Mater. Sci. Eng. 2023, 382, 042031.

- Chatterjee, R.; Sinha, D.; Bhattacharya, S.; Biswal, L.; Mondal, B.; Bandyopadhyay, C. An IoT-Based Smart Tracking Application Integrated with Global Positioning System (GPS). In Proceedings of the International Conference on Recent Advances in Artificial Intelligence & Smart Applications, Kolkata, India, 14–15 December 2024; pp. 235–246.

- Sonam; Johari, R.; Garg, S.; Bawa, P.; Aggarwal, D. MIAWM: MQTT based IoT Application for Weather Monitoring. J. High Speed Netw. 2024, 30, 333–354.

- Bansal, S.; Kumar, D. Enhancing constrained application protocol using message options for internet of things. Clust. Comput. 2022, 26, 1917–1934.

- Tigrine, A.; Houamria, M.; Sahraoui, H.; Dahani, A.; Doumi, N.; Dine, K. A web-based system for real-time ECG monitoring using MySQL database and DigiMesh technology: Design and implementation. Med. Biol. Eng. Comput. 2025, 1–25.

- Zhuo, Y.; Han, D.; Xu, Z.; Yu, Y. Improved Mixed Gaussian Model for Background Subtraction Based on Color Channel Fusion. In Proceedings of the 2023 42nd Chinese Control Conference (CCC), Tianjin, China, 24–26 July 2023; pp. 7965–7970.

- Dai, Y.; Yang, L. Background subtraction for video sequence using deep neural network. Multimedia Tools Appl. 2024, 83, 82281–82302.

- Xie, B. A fast video coding algorithm using data mining for video surveillance. Front. Phys. 2025, 13, 1633909.

- Ruan, W.; Liang, C.; Yu, Y.; Wang, Z.; Liu, W.; Chen, J.; Ma, J. Correlation Discrepancy Insight Network for Video Re-identification. ACM Trans. Multimedia Comput. Commun. Appl. 2020, 16, 1–21.

- Xu, X.; Hao, X.; Liu, Z.; Królczyk, G.; Stanislawski, R.; Gardoni, P.; Li, Z. A New Deep Model for Detecting Multiple Moving Targets in Real Traffic Scenarios: Machine Vision-Based Vehicles. Sensors 2022, 22, 3742.

- Szeliski, R. Computer Vision: Algorithms and Applications; Springer: Cham, Switzerland, 2022.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).