Deep Learning-Driven Automatic Segmentation of Weeds and Crops in UAV Imagery

Abstract

1. Introduction

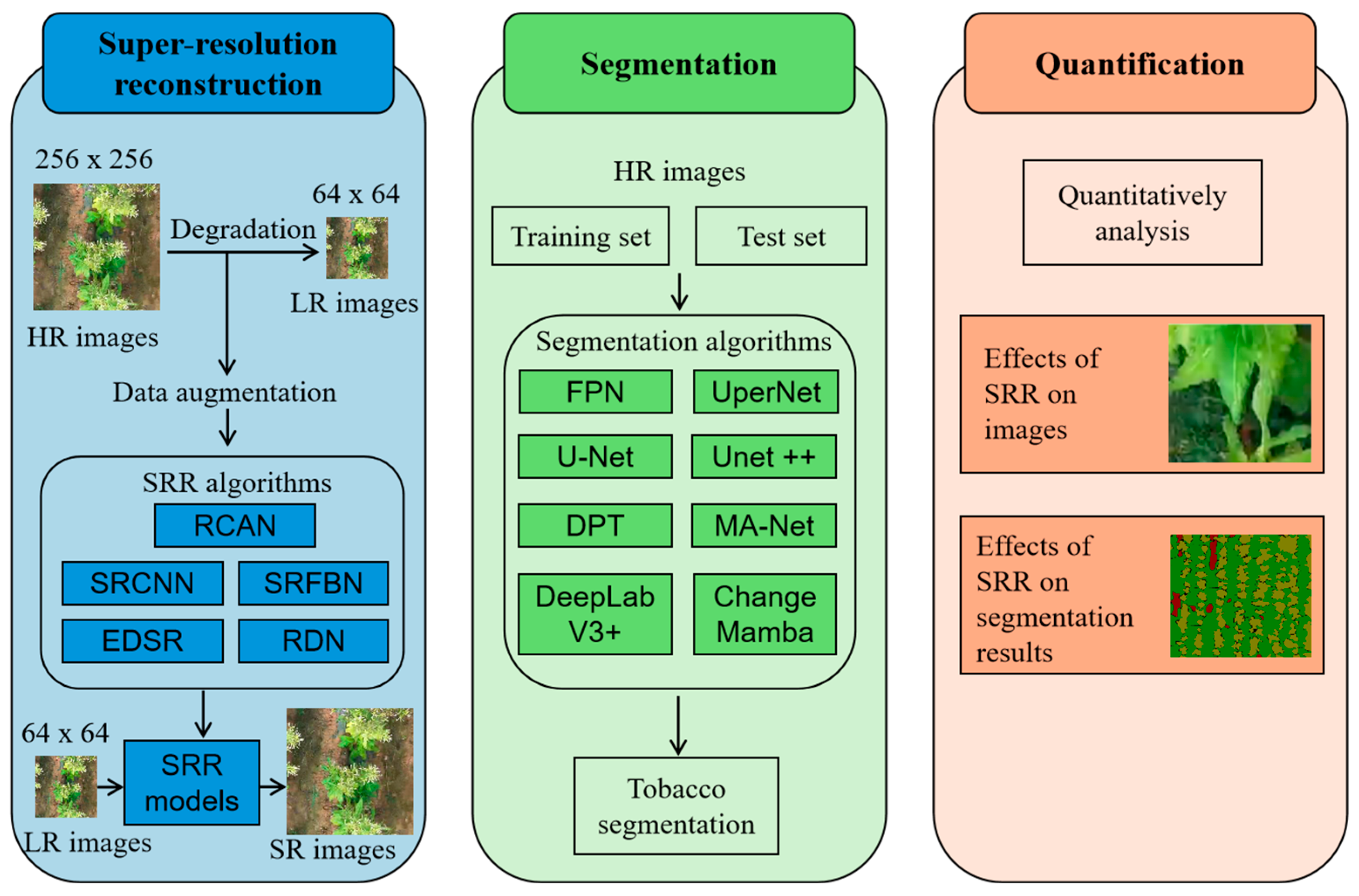

2. Methodology

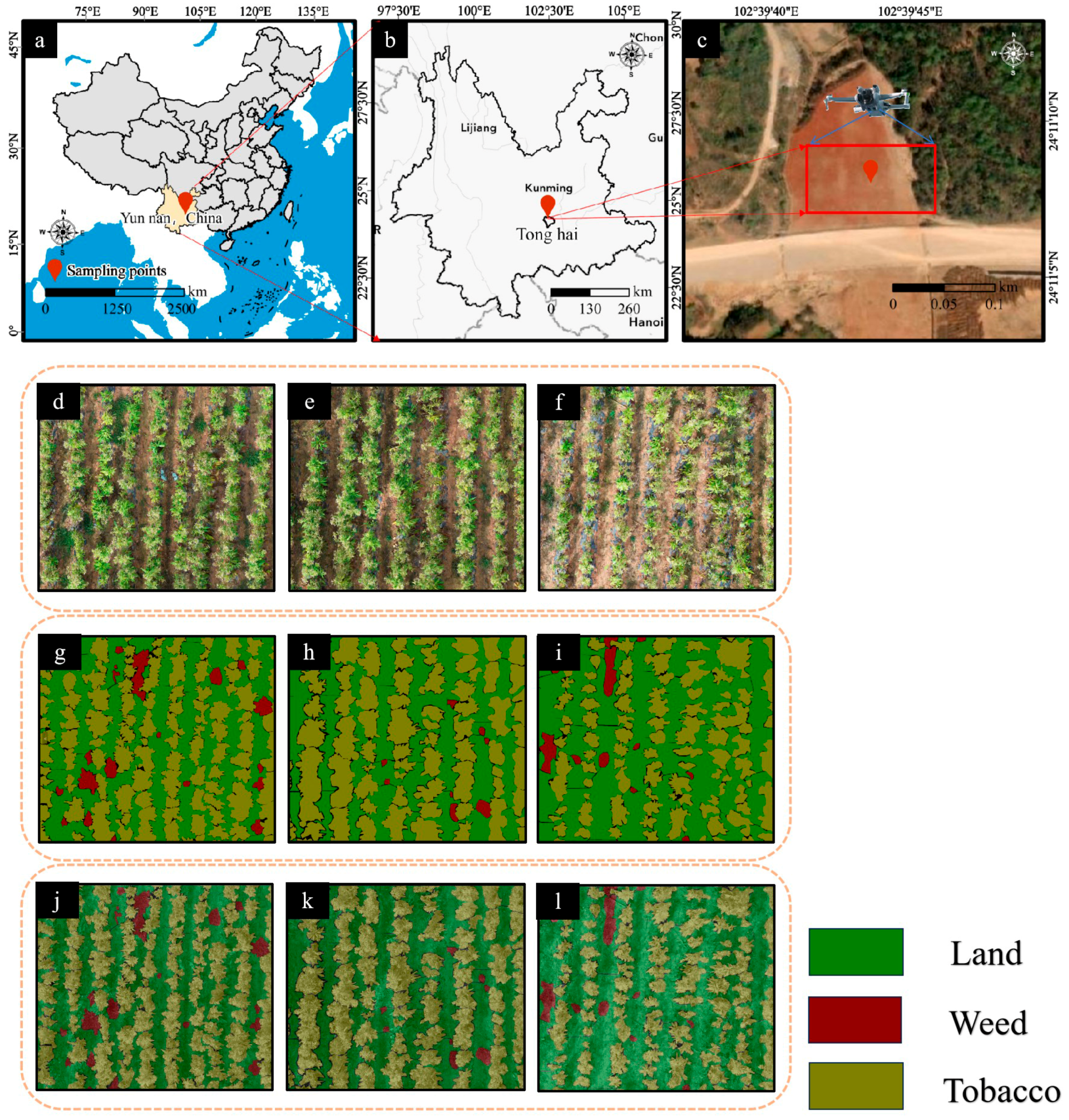

2.1. Survey Site

2.2. Data Collection

2.3. Image Preprocessing

2.4. Super-Resolution Reconstruction

2.4.1. Architecture of SRR Networks

2.4.2. Training of the Networks

2.4.3. Evaluation Metrics

2.5. Tobacco Segmentation

2.5.1. Network Architecture

FPN

UperNet

U-Net

Unet++

DeepLabV3+

MA-Net

DPT

ChangeMamba

2.5.2. Training of the Networks

2.5.3. Evaluation Metrics

3. Results

3.1. Dataset and Experiment Setting

3.1.1. Dataset Description

3.1.2. Experimental Setup

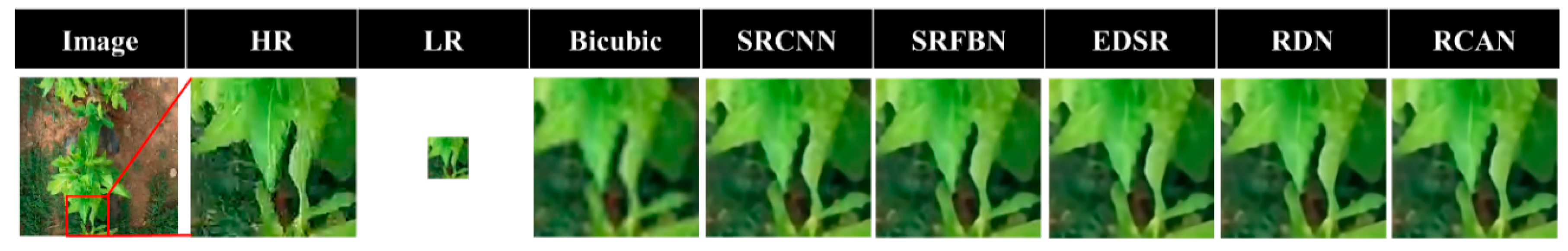

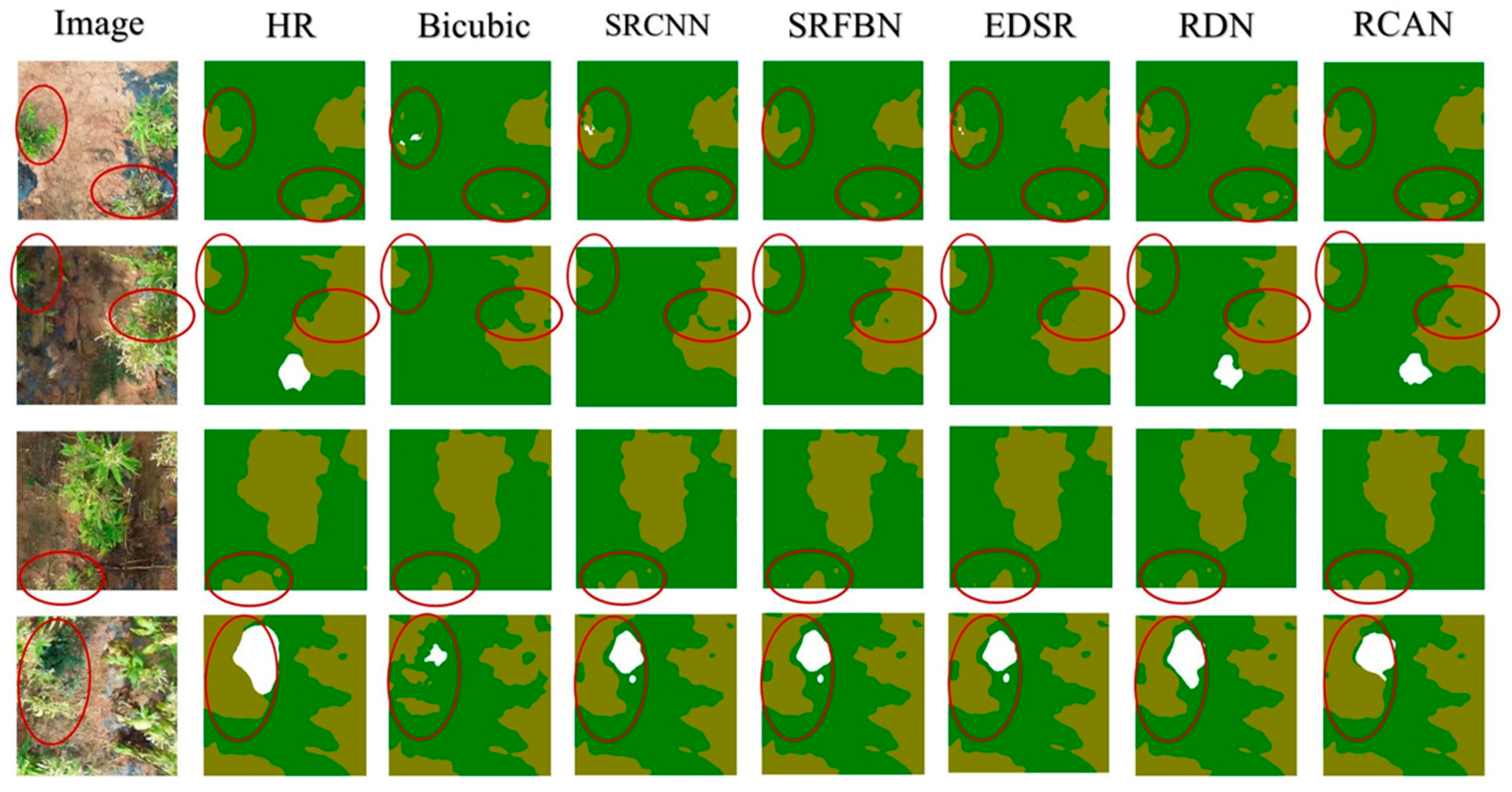

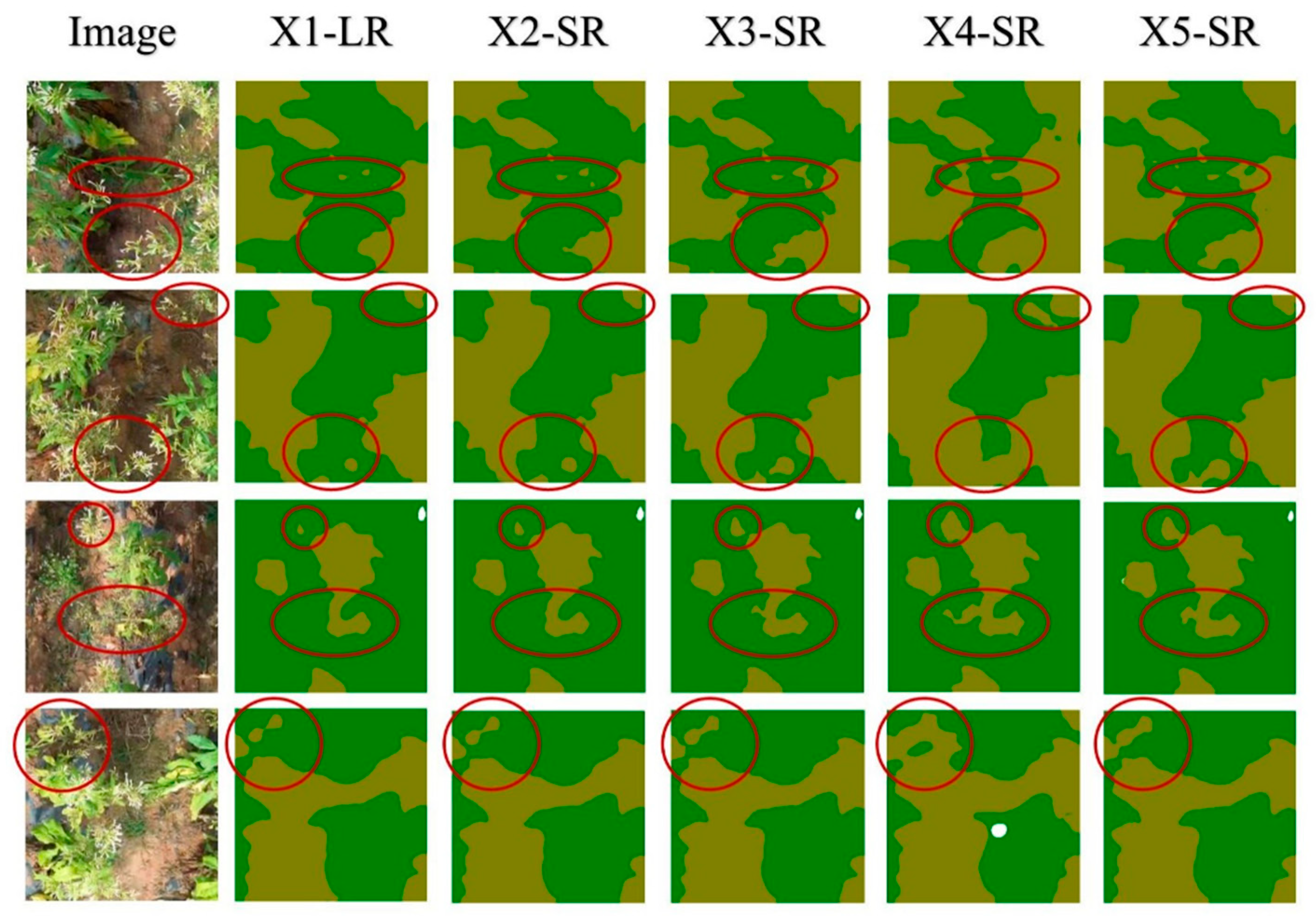

3.2. Analysis of the Super-Resolution Reconstruction

| Metrics (%) | HR | Bicubic | SRCNN | SRFBN | EDSR | RDN | RCAN |
|---|---|---|---|---|---|---|---|
| mIoU | 90.75 | 82.79 | 86.46 | 87.86 | 88.27 | 88.96 | 89.18 |
| IoU Green | 94.90 | 88.25 | 91.68 | 92.27 | 93.04 | 93.23 | 93.44 |

3.3. Analysis of Semantic Segmentation

3.3.1. Single Model Performance

3.3.2. Ensemble Learning Approach

4. Discussion

4.1. Impact of Magnification Factor

4.2. Computational Efficiency and Sensor Constraints

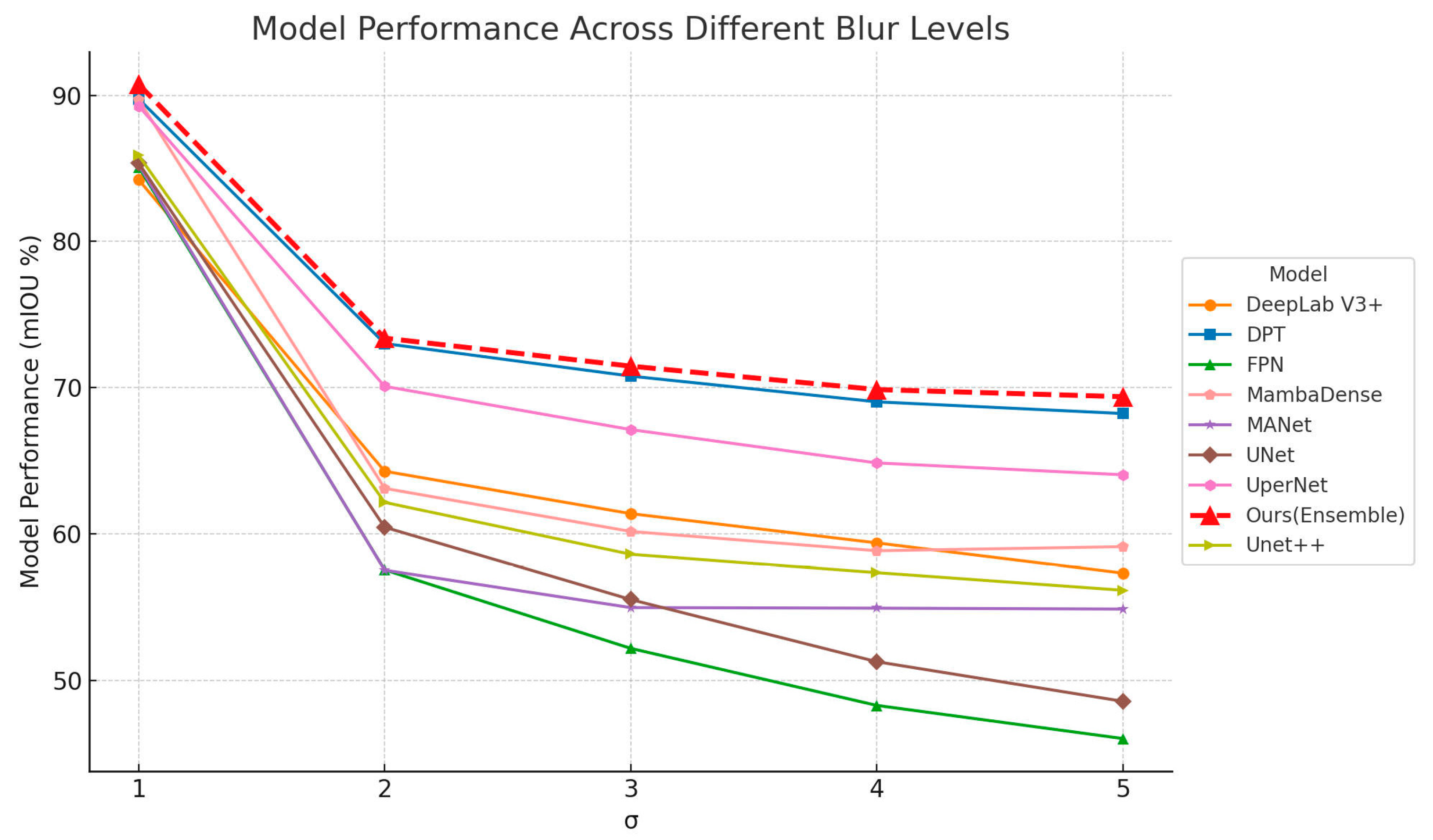

4.3. Impact of the Gaussian Blur

4.4. Impact of Gaussian Noise

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Metrics | Bicubic | SRCNN | SRFBN | EDSR | RDN | RCAN |
|---|---|---|---|---|---|---|
| PSNR (dB) | 23.90 | 24.61 | 24.89 | 24.96 | 24.97 | 24.98 |
| SSIM (%) | 63.44 | 67.81 | 68.95 | 69.36 | 69.47 | 69.48 |
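The PSNR row above follows the standard definition, PSNR = 10·log10(MAX² / MSE), where MSE is the mean squared error between the reference and the reconstructed image. The sketch below illustrates that definition only. The function name `psnr`, the nested-list image representation, and the 8-bit peak value of 255 are assumptions made for illustration, not the evaluation code used in the paper.

```python
import math


def psnr(reference, restored, max_value=255.0):
    """Return the peak signal-to-noise ratio in dB.

    Both images are nested lists (rows of pixel intensities) of equal size.
    max_value is the assumed peak intensity (255 for 8-bit images).
    """
    ref = [p for row in reference for p in row]
    out = [p for row in restored for p in row]
    if len(ref) != len(out) or not ref:
        raise ValueError("images must be non-empty and of equal size")

    # Mean squared error over all pixels.
    mse = sum((a - b) ** 2 for a, b in zip(ref, out)) / len(ref)
    if mse == 0:
        return math.inf  # identical images: PSNR is unbounded

    return 10.0 * math.log10(max_value ** 2 / mse)


if __name__ == "__main__":
    hr = [[100, 100], [100, 100]]   # hypothetical reference patch
    sr = [[110, 90], [100, 100]]    # hypothetical reconstruction
    print(f"PSNR: {psnr(hr, sr):.2f} dB")
```

A higher PSNR means a smaller pixel-wise error. SSIM, reported in the second row, measures structural similarity instead and is not covered by this sketch.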

| # | Decoder | Encoder | IoU Green | IoU White | IoU Brown | mIoU |
|---|---|---|---|---|---|---|
| CNN-based Encoder | | | | | | |
| 1 | FPN | EfficientNet-b5 | 75.18 | 63.73 | 78.46 | 85.09 |
| 2 | UNet | EfficientNet-b5 | 76.39 | 64.52 | 79.17 | 85.36 |
| 3 | DeepLabV3+ | EfficientNet-b5 | 71.04 | 59.04 | 75.34 | 84.21 |
| 4 | UNet++ | EfficientNet-b5 | 77.49 | 64.91 | 79.69 | 85.88 |
| 5 | FPN | ResNeXt101_32x8d | 77.95 | 66.06 | 80.26 | 86.65 |
| 6 | UNet | ResNeXt101_32x8d | 78.64 | 66.16 | 80.53 | 86.37 |
| 7 | DeepLabV3+ | ResNeXt101_32x8d | 75.96 | 64.38 | 78.95 | 86.78 |
| 8 | UNet++ | ResNeXt101_32x8d | 79.93 | 67.70 | 81.54 | 87.97 |
| 9 | FPN | ResNet101 | 75.32 | 63.30 | 78.36 | 86.11 |
| 10 | UNet | ResNet101 | 77.34 | 64.66 | 79.54 | 86.33 |
| 11 | DeepLabV3+ | ResNet101 | 74.35 | 60.94 | 77.08 | 86.59 |
| 12 | UNet++ | ResNet101 | 78.82 | 66.39 | 80.64 | 86.64 |
| 13 | MANet | EfficientNet-b5 | 73.82 | 63.98 | 78.08 | 85.23 |
| 14 | MANet | ResNeXt101_32x8d | 77.71 | 66.20 | 80.24 | 86.40 |
| 15 | MANet | ResNet101 | 76.21 | 64.48 | 79.12 | 86.09 |
| Transformer-based Encoder | | | | | | |
| 16 | FPN | SegFormer(mit_b5) | 77.47 | 64.00 | 79.33 | 89.34 |
| 17 | UNet | SegFormer(mit_b5) | 75.49 | 64.50 | 78.88 | 89.41 |
| 18 | MANet | SegFormer(mit_b5) | 77.52 | 64.25 | 79.44 | 88.91 |
| 19 | DPT | DINOv2(vit_l) | 80.67 | 67.99 | 81.87 | 90.04 |
| 20 | DPT | DINOv2(vit_b) | 80.50 | 66.79 | 81.37 | 90.18 |
| 21 | DPT | DINOv2(vit_s) | 80.10 | 66.87 | 81.31 | 89.75 |
| Mamba-based Encoder | | | | | | |
| 22 | ChangeMamba | VMamba (base) | 78.27 | 65.73 | 80.25 | 89.69 |
| 23 | ChangeMamba | VMamba (tiny) | 78.38 | 66.23 | 80.48 | 89.28 |
| 24 | ChangeMamba | VMamba (small) | 78.09 | 65.17 | 79.98 | 89.49 |
| 25 | UperNet | VMamba (base) | 77.92 | 64.38 | 79.61 | 89.26 |
| 26 | UperNet | VMamba (tiny) | 78.17 | 65.31 | 80.06 | 89.28 |
| 27 | UperNet | VMamba (small) | 77.53 | 64.33 | 79.47 | 89.52 |
| Ensemble Model | | | | | | |
| 17 + 19 + 20 + 22 (ours) | | | 94.90 | 81.43 | 95.91 | 90.75 |
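The IoU and mIoU columns can be read against the usual definitions: per-class IoU is the intersection of predicted and ground-truth pixels divided by their union, and mIoU is the unweighted mean over classes. The sketch below is a minimal illustration under those assumptions. The helper names `class_iou` and `mean_iou`, the flat integer label lists, and the class indexing are hypothetical and do not come from the paper.

```python
def class_iou(pred, target, num_classes):
    """Return per-class IoU for two flat sequences of integer class labels."""
    if len(pred) != len(target):
        raise ValueError("pred and target must have the same length")

    inter = [0] * num_classes
    union = [0] * num_classes
    for p, t in zip(pred, target):
        if p == t:
            # Pixel counted once in both intersection and union of its class.
            inter[p] += 1
            union[p] += 1
        else:
            # Mismatch: pixel belongs to the union of both classes involved.
            union[p] += 1
            union[t] += 1

    # A class absent from both pred and target has no defined IoU (NaN).
    return [inter[c] / union[c] if union[c] else float("nan")
            for c in range(num_classes)]


def mean_iou(ious):
    """Unweighted mean of the defined (non-NaN) per-class IoU values."""
    valid = [v for v in ious if v == v]  # NaN != NaN, so this drops NaNs
    return sum(valid) / len(valid)


if __name__ == "__main__":
    # Hypothetical 6-pixel example with three classes (0, 1, 2).
    pred = [0, 0, 1, 1, 2, 2]
    target = [0, 1, 1, 1, 2, 0]
    print(class_iou(pred, target, 3))

    # Ensemble row of the table: Green, White, Brown IoU values in percent.
    print(round(mean_iou([94.90, 81.43, 95.91]), 2))
```

Applied to the ensemble row, the mean of the three class IoU values rounds to the reported mIoU of 90.75.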

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Tao, J.; Qiao, Q.; Song, J.; Sun, S.; Chen, Y.; Wu, Q.; Liu, Y.; Xue, F.; Wu, H.; Zhao, F. Deep Learning-Driven Automatic Segmentation of Weeds and Crops in UAV Imagery. Sensors 2025, 25, 6576. https://doi.org/10.3390/s25216576