Aero-Engine Ablation Defect Detection with Improved CLR-YOLOv11 Algorithm

Abstract

1. Introduction

2. Related Work

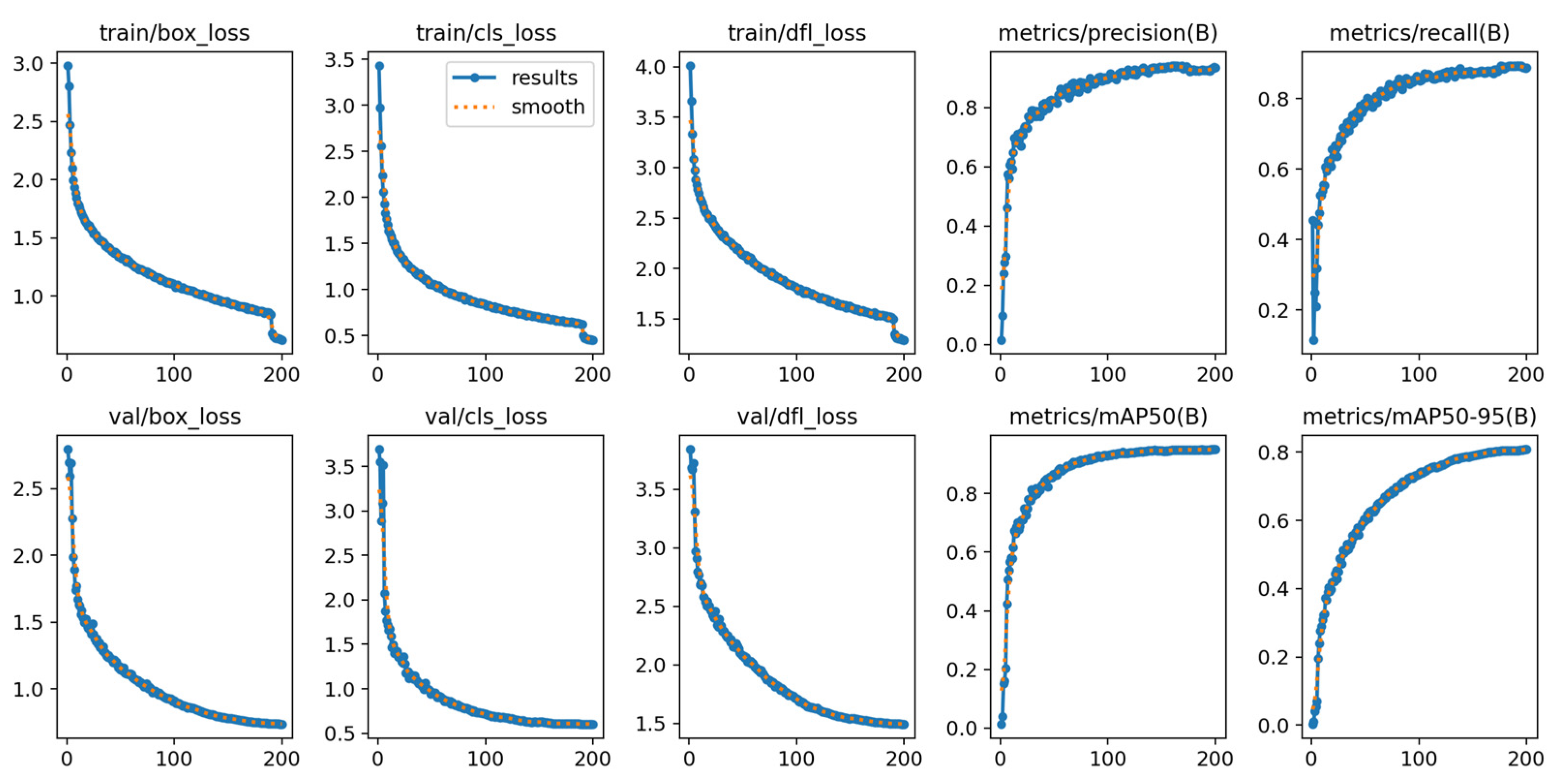

3. Methodology

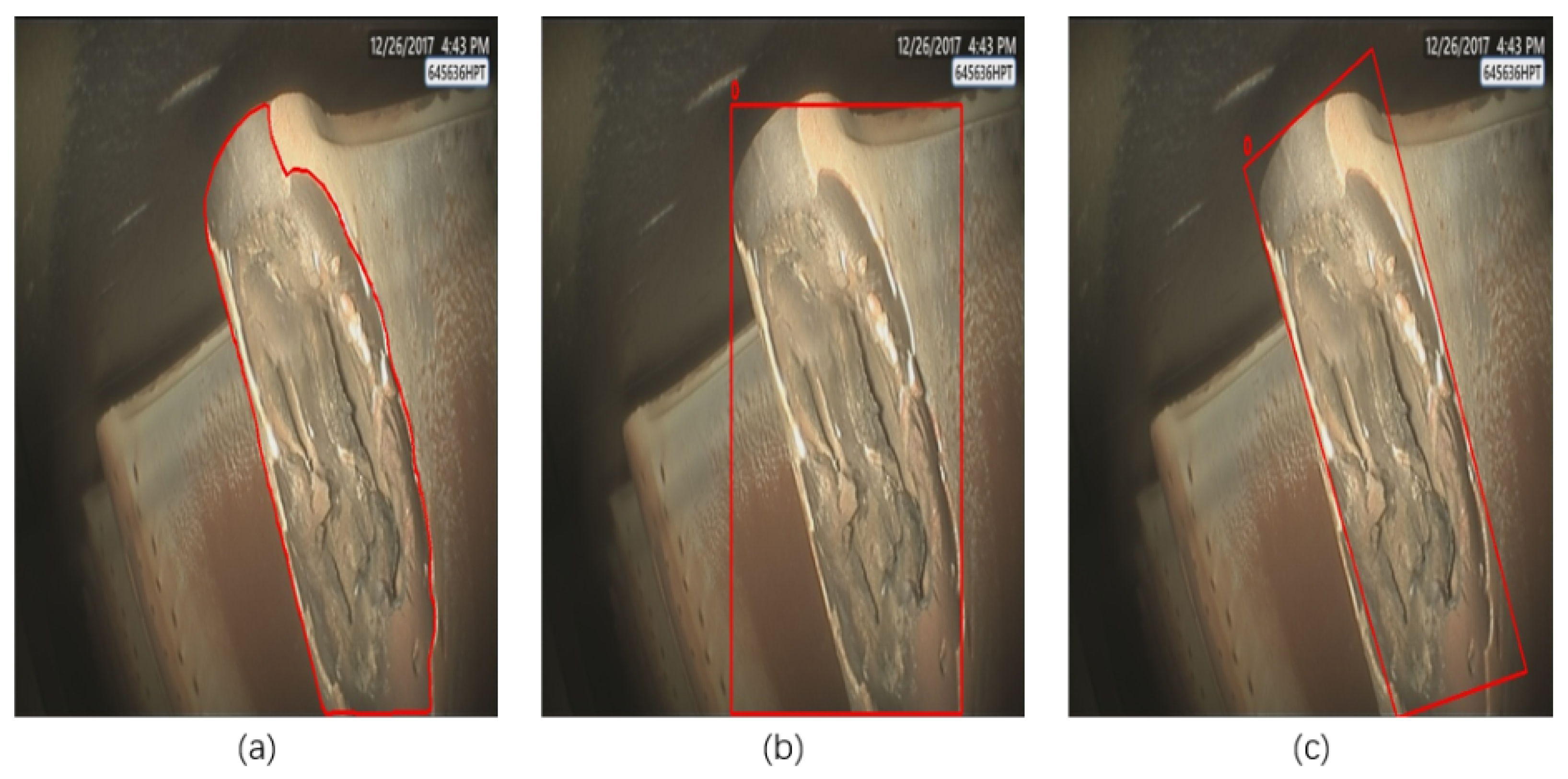

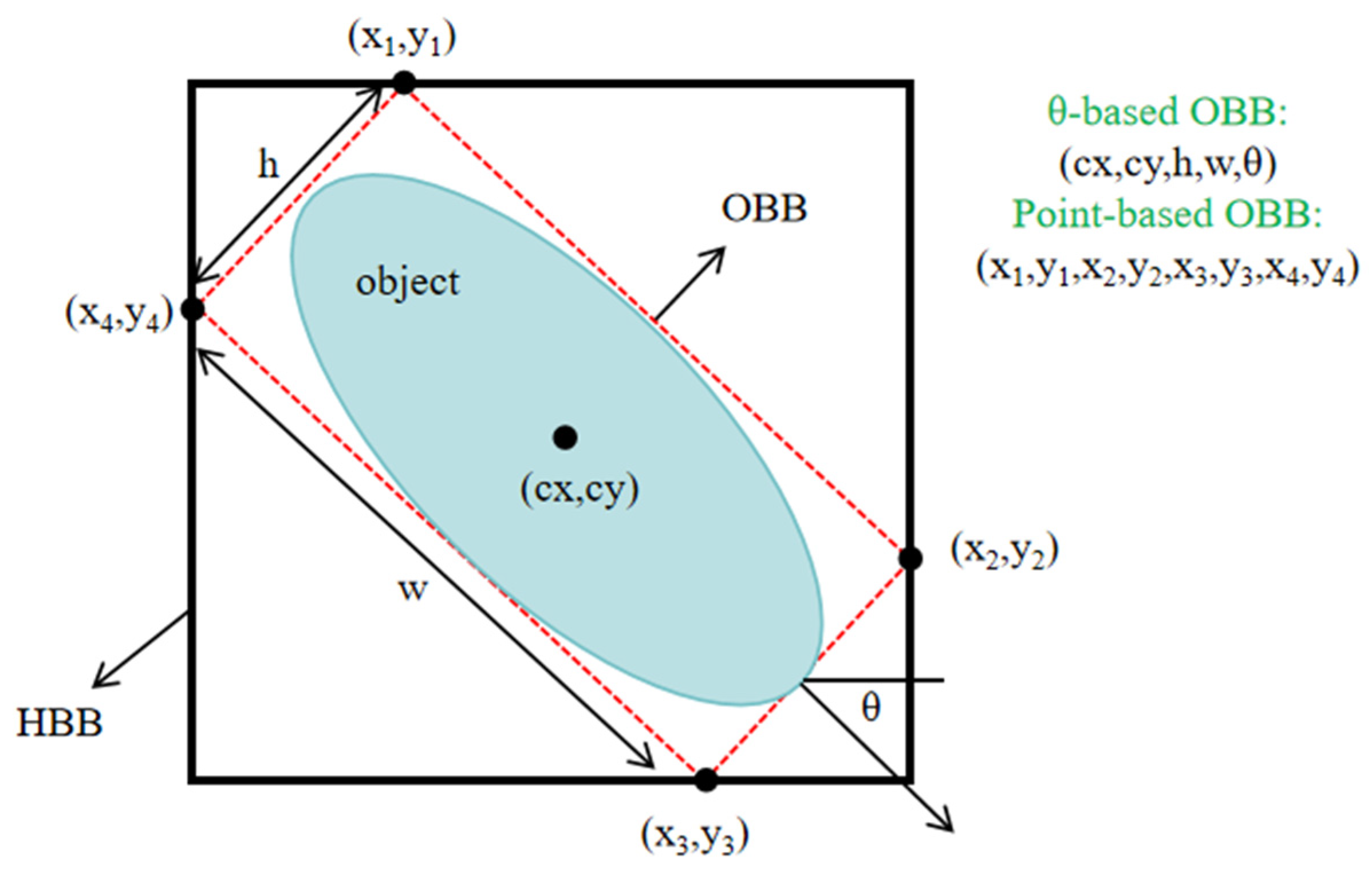

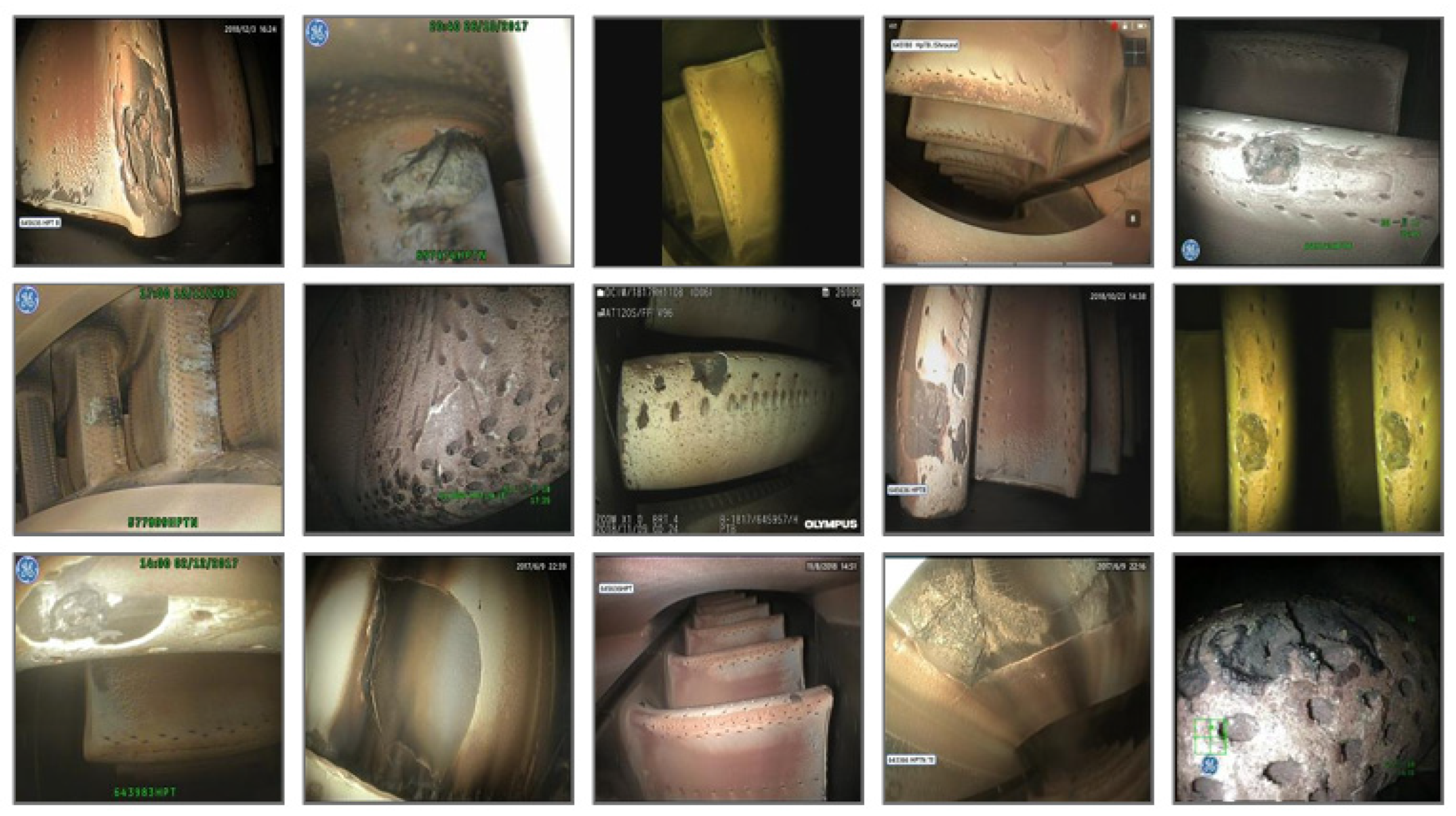

3.1. Data Processing

3.2. Architecture

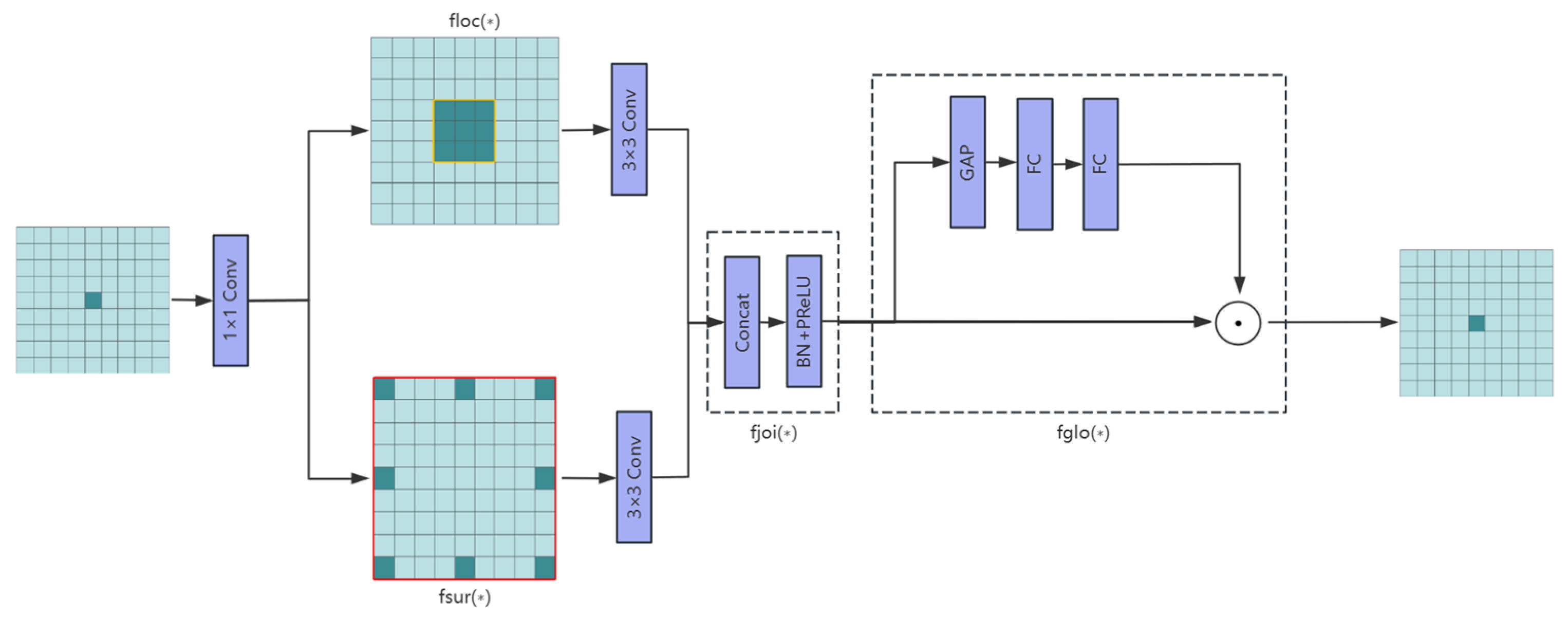

3.2.1. Context-Guided Module

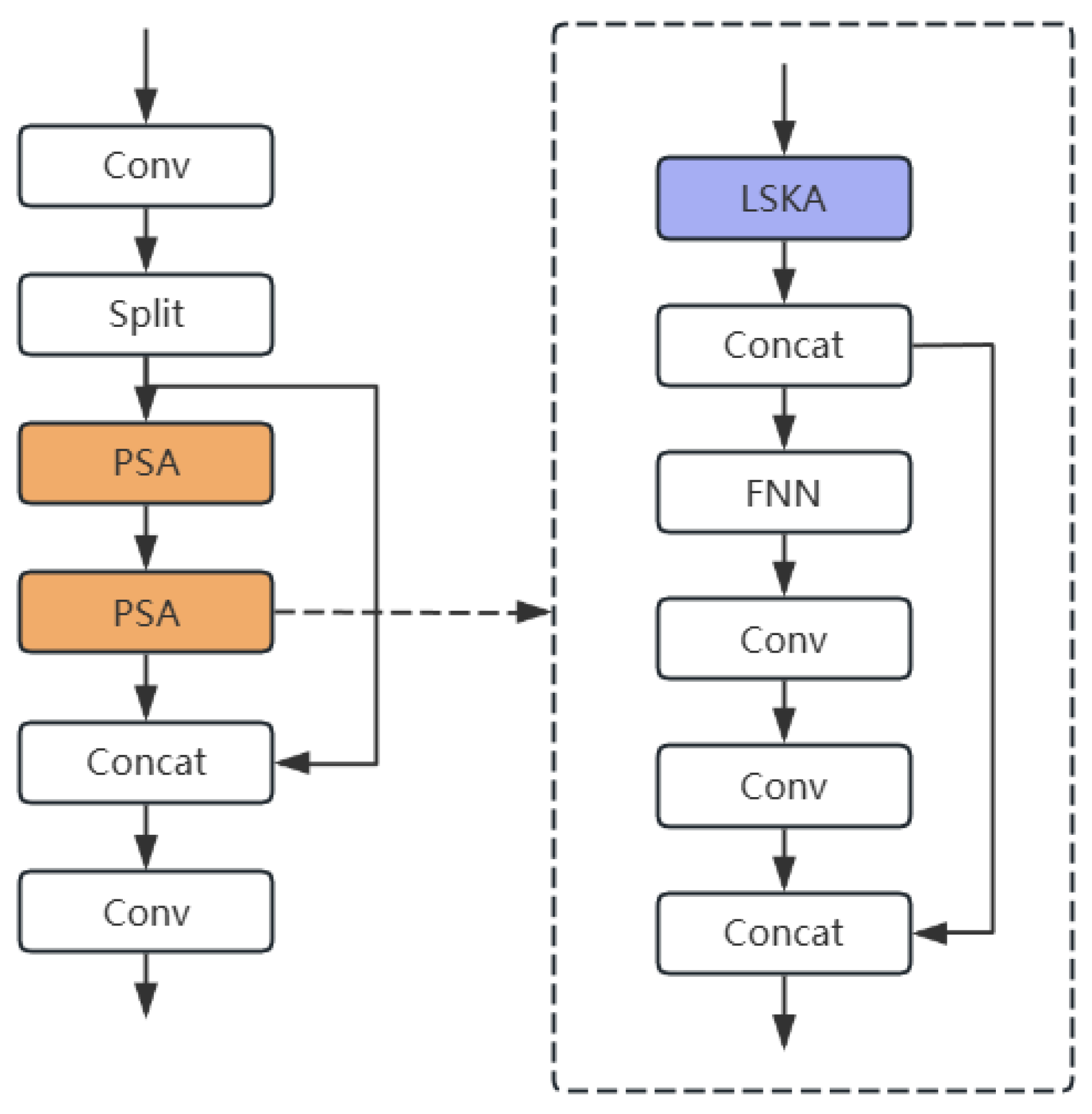

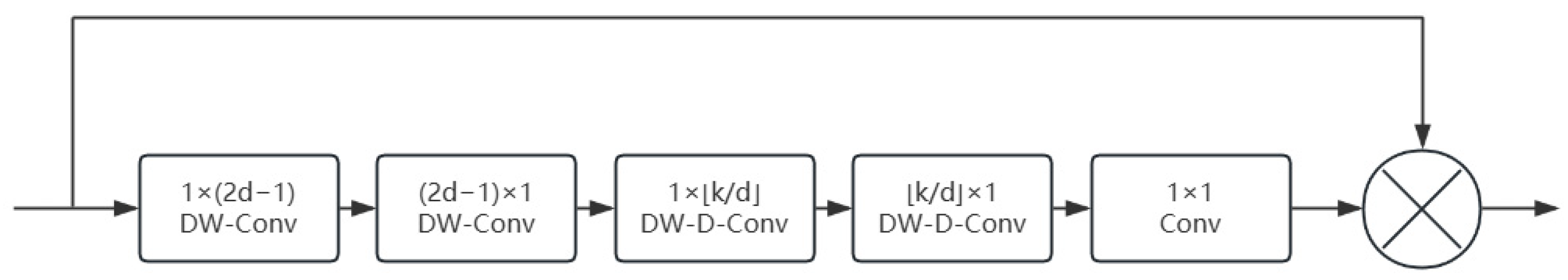

3.2.2. Separable Large-Kernel Attention Mechanism Module

4. Experiments and Results

4.1. Data Collection

4.2. Experimental Environment

4.3. Evaluation Metrics

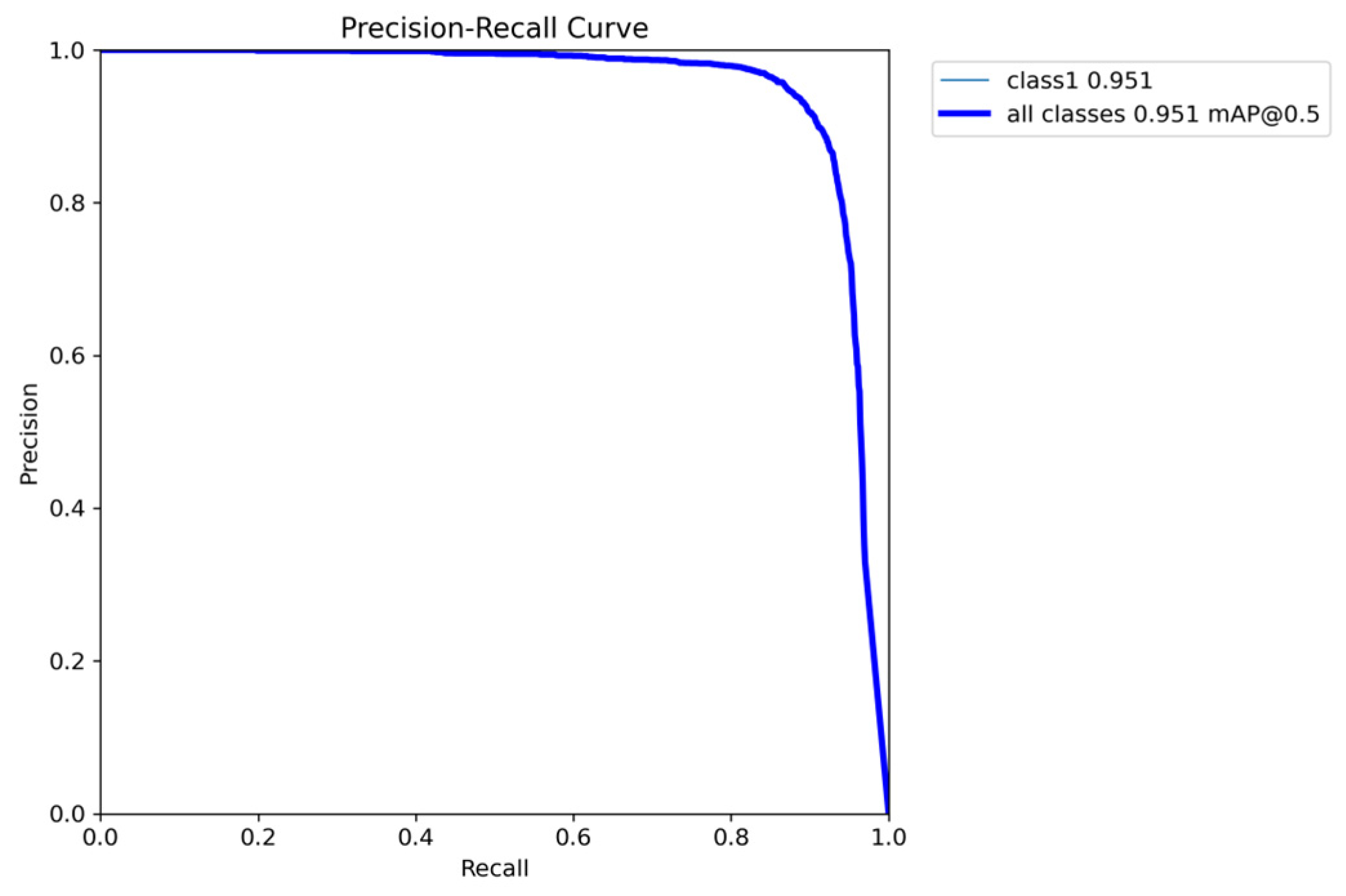

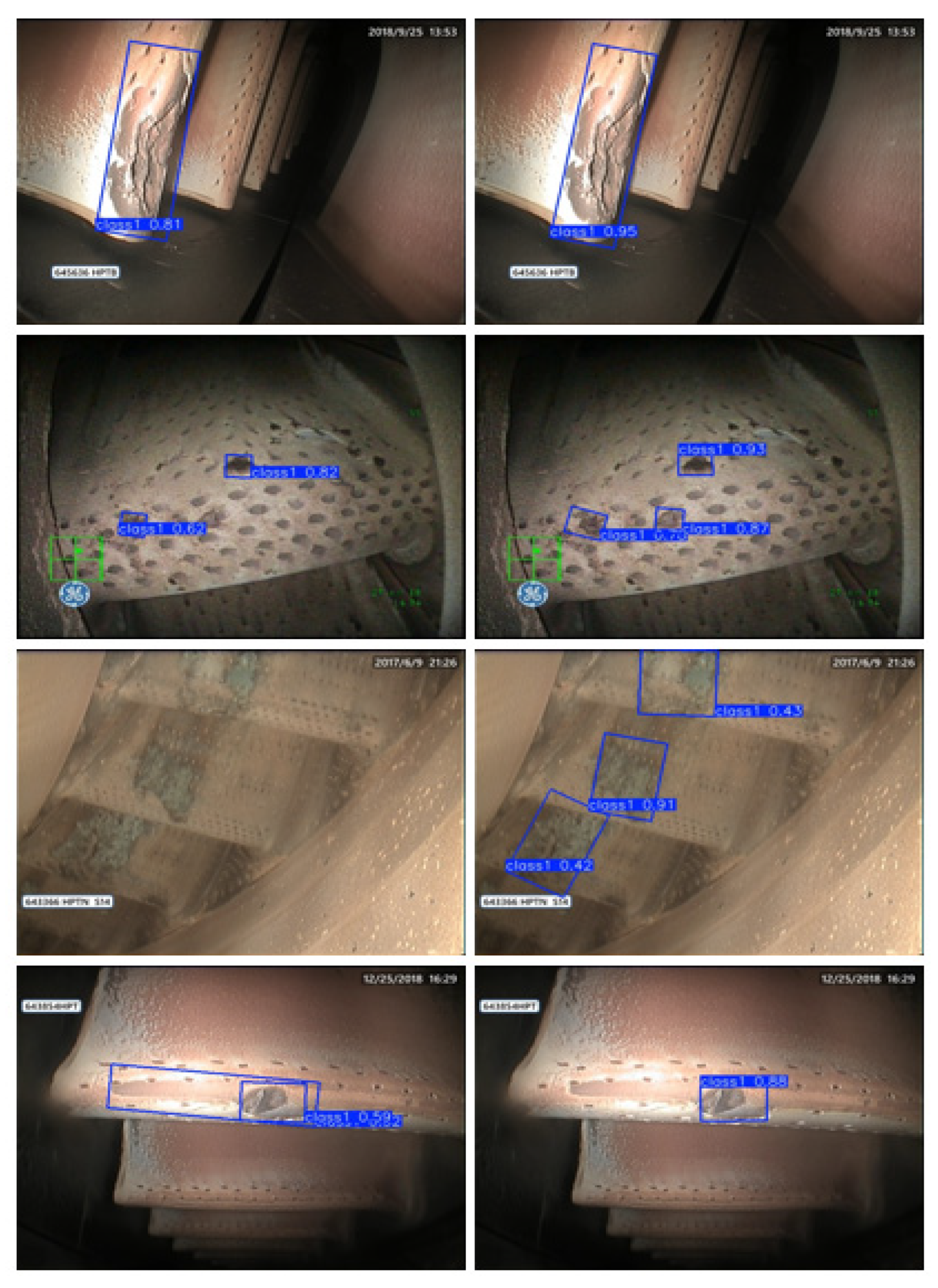

4.4. Comparative Experiments

4.5. Ablation Study

- Model 1 is the baseline YOLOv11-OBB model without the specialized data augmentation. To keep the dataset size the same, it uses six augmentation methods (random flipping, vertical flipping, horizontal flipping, random scaling, random cropping, and random translation), so that the input dataset contains 5614 images, the same as for CLR-YOLOv11.

- Model 2 is a CLR-YOLOv11 model with an alternative data augmentation pipeline: CLAHE is applied to the original dataset first, followed by six augmentations (random rotation, random flipping, random translation, Mixup, Mosaic, and Gaussian filtering), and finally Z-score normalization.

- Model 3 is another CLR-YOLOv11 variant with an alternative augmentation strategy; it differs from Model 2 by replacing Gaussian filtering with random cropping.

- Model 4 is the YOLOv11-OBB model enhanced with the specialized data augmentation strategy.

- Model 5 builds upon YOLOv11-OBB by incorporating both the specialized data augmentation and the C3K2CG module.

- Model 6 enhances YOLOv11-OBB with the specialized data augmentation and the C2PSLA module.

- Model 7 is the CLR-YOLOv11 model proposed in this study, which integrates the specialized data augmentation, the C3K2CG module, and the C2PSLA module into the YOLOv11-OBB architecture.
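
The seven configurations listed above can be written down as a small factor table, which makes it easy to see which pairs of models differ in exactly one factor and therefore isolate a single contribution. The following is a minimal sketch, not the authors' code: the class `AblationConfig`, the augmentation labels, and the helper names are hypothetical, and it assumes that Models 2 and 3, being variants of the proposed model, include both the C3K2CG and C2PSLA modules.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AblationConfig:
    """One row of the ablation design (hypothetical representation)."""
    model_id: int
    augmentation: str  # illustrative label, not an identifier from the paper
    c3k2cg: bool       # True if the C3K2CG module is used
    c2psla: bool       # True if the C2PSLA module is used


# Assumption: Models 2 and 3 carry both modules, like the proposed model.
ABLATION = [
    AblationConfig(1, "generic", False, False),
    AblationConfig(2, "alternative_gaussian", True, True),
    AblationConfig(3, "alternative_crop", True, True),
    AblationConfig(4, "specialized", False, False),
    AblationConfig(5, "specialized", True, False),
    AblationConfig(6, "specialized", False, True),
    AblationConfig(7, "specialized", True, True),
]

FACTORS = ("augmentation", "c3k2cg", "c2psla")


def differing_factors(a, b):
    """Return the names of the factors on which two configurations differ."""
    return [f for f in FACTORS if getattr(a, f) != getattr(b, f)]


def single_factor_pairs(configs):
    """List (id_a, id_b, factor) for every pair differing in exactly one factor."""
    pairs = []
    for i, a in enumerate(configs):
        for b in configs[i + 1:]:
            diff = differing_factors(a, b)
            if len(diff) == 1:
                pairs.append((a.model_id, b.model_id, diff[0]))
    return pairs


for id_a, id_b, factor in single_factor_pairs(ABLATION):
    print(f"Model {id_a} vs Model {id_b}: isolates {factor}")
```

Under these assumptions, Model 1 versus Model 4 isolates the augmentation strategy on the baseline, while Models 4 to 7 form a 2 × 2 design over the two modules.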

5. Discussion

5.1. Discussion on Model Parameter Selection and Data Augmentation Techniques

5.2. Model Interpretability Analysis

5.3. Limitations and Future Work

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Model | Input Size | P (%) | R (%) | mAP@0.5 (%) | mAP@0.5:0.95 (%) | F1-Score (%) |
|---|---|---|---|---|---|---|
| YOLOv5 | 640 × 640 | 87.1 ± 0.3 | 82.5 ± 0.4 | 85.7 ± 0.7 | 65.5 ± 0.5 | 84.7 ± 0.2 |
| YOLOv8 | 640 × 640 | 88.4 ± 0.4 | 83.5 ± 1.2 | 86.4 ± 0.3 | 67.6 ± 0.5 | 85.9 ± 0.5 |
| YOLOv11 | 640 × 640 | 88.4 ± 0.1 | 85.0 ± 0.8 | 87.7 ± 0.4 | 68.8 ± 1.0 | 86.7 ± 0.2 |
| YOLOv12 | 640 × 640 | 84.5 ± 0.4 | 81.2 ± 0.5 | 84.7 ± 0.6 | 64.9 ± 0.7 | 82.8 ± 0.4 |
| YOLOv11-obb | 640 × 640 | 91.1 ± 0.2 | 90.4 ± 0.4 | 91.8 ± 0.6 | 74.3 ± 0.6 | 90.7 ± 0.2 |
| Rotated-Faster R-CNN | 1024 × 1024 | - | 84.5 ± 0.3 | 75.9 ± 0.2 | - | - |
| R3Det | 1024 × 1024 | - | 84.6 ± 0.2 | 78.1 ± 0.2 | - | - |
| S2ANet | 1024 × 1024 | - | 86.4 ± 0.2 | 79.7 ± 0.3 | - | - |
| Ours | 640 × 640 | 93.9 ± 0.6 | 88.4 ± 0.6 | 94.6 ± 0.4 | 78.5 ± 0.5 | 91.1 ± 0.5 |
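
As a consistency check on the comparison table, the F1-score column can be recomputed from the precision and recall columns. The sketch below assumes the standard definition of F1 as the harmonic mean of precision and recall, and uses only the mean values copied from the table; the function name `f1_score` and the dictionary `REPORTED` are illustrative. Because the table reports means over repeated runs, an F1 computed from mean P and mean R need not match the reported mean F1 exactly, so small differences are expected.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (same units as the inputs)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (mean P %, mean R %, reported mean F1 %) copied from the comparison table.
REPORTED = {
    "YOLOv5": (87.1, 82.5, 84.7),
    "YOLOv8": (88.4, 83.5, 85.9),
    "YOLOv11": (88.4, 85.0, 86.7),
    "YOLOv12": (84.5, 81.2, 82.8),
    "YOLOv11-obb": (91.1, 90.4, 90.7),
    "Ours": (93.9, 88.4, 91.1),
}

for name, (p, r, f1_reported) in REPORTED.items():
    f1 = f1_score(p, r)
    print(f"{name:12s} computed F1 = {f1:.2f}  reported F1 = {f1_reported:.1f}")
```

For every row that reports both P and R, the recomputed value agrees with the reported F1 to within 0.1 percentage points.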

| Model | P (%) | R (%) | mAP@0.5 (%) | mAP@0.5:0.95 (%) | F1-Score (%) |
|---|---|---|---|---|---|
| 1 | 0.2 | 0.4 | 0.6 | 0.6 | 0.2 |
| 2 | 0.2 | 0.8 | 0.4 | 1.0 | 0.2 |
| 3 | 0.2 | 0.6 | 0.2 | 0.6 | 0.3 |
| 4 | 0.3 | 0.8 | 0.4 | 0.6 | 0.5 |
| 5 | 0.4 | 0.3 | 0.2 | 0.5 | 0.3 |
| 6 | 0.4 | 0.6 | 0.3 | 0.6 | 0.4 |
| 7 | 0.6 | 0.6 | 0.4 | 0.5 | 0.5 |

| Model | Parameters | Computation (GFLOPs) | Weights (MB) | Average Detection Time (ms) |
|---|---|---|---|---|
| YOLOv11-obb | 2,653,918 | 6.6 | 7.5 | 1.3 |
| C3K2CG-YOLOv11-obb | 2,995,018 | 7.9 | 8.2 | 1.4 |
| C2PSLA-YOLOv11-obb | 2,607,070 | 6.5 | 7.4 | 1.5 |
| CLR-YOLOv11 | 2,948,170 | 7.9 | 8.1 | 1.4 |
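
The parameter counts in the complexity table can be broken down into the contribution of each module relative to the YOLOv11-obb baseline. The sketch below uses only the counts from the table; the helper names `delta` and `relative_change` are illustrative.

```python
# Parameter counts copied from the complexity table.
PARAMS = {
    "YOLOv11-obb": 2_653_918,
    "C3K2CG-YOLOv11-obb": 2_995_018,
    "C2PSLA-YOLOv11-obb": 2_607_070,
    "CLR-YOLOv11": 2_948_170,
}

BASELINE = PARAMS["YOLOv11-obb"]


def delta(name):
    """Absolute change in parameter count relative to the baseline."""
    return PARAMS[name] - BASELINE


def relative_change(name):
    """Change in parameter count relative to the baseline, in percent."""
    return 100.0 * delta(name) / BASELINE


for name in PARAMS:
    print(f"{name:20s} delta = {delta(name):+9,d}  ({relative_change(name):+.2f} %)")

# The two single-module deltas sum exactly to the delta of the combined model.
combined = delta("C3K2CG-YOLOv11-obb") + delta("C2PSLA-YOLOv11-obb")
print("additive:", combined == delta("CLR-YOLOv11"))
```

On these figures, C3K2CG adds 341,100 parameters, C2PSLA removes 46,848, and the combined model ends up 294,252 parameters (about 11.1 %) above the baseline, which matches the sum of the two individual changes.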

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Liu, Y.; Liu, J.; Xu, Y.; Fu, Q.; Qian, J.; Wang, X. Aero-Engine Ablation Defect Detection with Improved CLR-YOLOv11 Algorithm. Sensors 2025, 25, 6574. https://doi.org/10.3390/s25216574
