Abstract

Achieving high-precision anomaly detection with incomplete sensor data is a critical challenge in industrial automation and intelligent manufacturing. This incompleteness often results from sensor failures, environmental interference, occlusions, or acquisition cost constraints. This study explicitly targets both types of incompleteness commonly encountered in industrial multimodal inspection: (i) incomplete sensor data within a given modality, such as partial point cloud loss or image degradation, and (ii) incomplete modalities, where one sensing channel (RGB or 3D) is entirely unavailable. By jointly addressing intra-modal incompleteness and cross-modal absence within a unified cross-distillation framework, our approach enhances anomaly detection robustness under both conditions. First, a teacher–student cross-modal distillation mechanism enables robust feature learning from both RGB and 3D modalities, allowing the student network to accurately detect anomalies even when a modality is missing during inference. Second, a dynamic voxel resolution adjustment with edge-retention strategy alleviates the computational burden of 3D point cloud processing while preserving crucial geometric features. By jointly enhancing robustness to missing modalities and improving computational efficiency, our method offers a resilient and practical solution for anomaly detection in real-world manufacturing scenarios. Extensive experiments demonstrate that the proposed method achieves both high robustness and efficiency across multiple industrial scenarios, establishing new state-of-the-art performance that surpasses existing approaches in both accuracy and speed. This method provides a robust solution for high-precision perception under complex detection conditions, significantly enhancing the feasibility of deploying anomaly detection systems in real industrial environments.

1. Introduction

3D anomaly detection, as a pivotal technology in intelligent manufacturing and quality control, has garnered significant attention in recent years. Within industrial visual inspection, traditional approaches predominantly rely on single modalities: either utilising RGB colour images [1] or depending on 3D point cloud data [2]. Methods relying solely on RGB appearance information often struggle with subtle structural defects, while approaches utilising only point cloud geometric information remain insensitive to colour and texture anomalies [3,4,5,6,7,8]. Consequently, integrating colour and shape cues has emerged as a crucial direction for enhancing detection performance, becoming a significant focus of current research.

In this paper, we make a clear distinction between incomplete sensor data and incomplete modality. Incomplete sensor data refers to degraded or partially missing measurements within a given modality. For example, in RGB images, this may appear as motion blur or overexposed regions, while in 3D point clouds, it may manifest as sparse or noisy regions caused by reflective or occluded surfaces. Such cases are directly reflected in public datasets such as MVTec 3D-AD, where structured-light scanners often produce incomplete or noisy point clouds due to surface reflectivity or acquisition artefacts. In these cases, the modality itself remains available, but the fidelity of its captured information is compromised. Incomplete modality, on the other hand, denotes the complete absence of an entire sensing channel, such as when RGB images are unavailable but 3D point clouds are still captured (or vice versa). This situation often arises in practice due to sensor failures, cost constraints, or deployment limitations in industrial production lines.

Nevertheless, deploying multimodal anomaly detection on actual production lines presents two major challenges. Firstly, real-world data acquisition environments in industrial settings are often complex and variable, exacerbating the incompleteness and noise inherent in multimodal data. Specular reflections from glossy or metallic surfaces can cause overexposure in certain regions of RGB images, obscuring subtle defects and disrupting local textures. Simultaneously, these reflections interfere with depth sensors, resulting in sparse or noisy point cloud data acquisition [9]. Secondly, the high-speed movement of workpieces on production lines may induce motion blur in RGB images and cause temporal desynchronization in 3D acquisition, a phenomenon exacerbated under low-light conditions [5]. Moreover, sensor lenses frequently become contaminated by dust, oil, or vapour (common in industrial settings such as metal fabrication or packaging) [7], obscuring critical surface features and causing misclassification. Coupled with cost constraints and system complexity, deploying multiple high-resolution sensors at each inspection station is often impractical, compelling systems to adopt selective modal acquisition strategies. These practical challenges make acquiring comprehensive, high-quality multimodal data in industrial settings a significant hurdle. Moreover, processing high-density 3D point cloud data incurs substantial computational overhead. Point clouds of industrial workpieces typically contain millions of points. Unselectively feeding entire models into inference not only causes time-consuming processing that severely impacts production cycles but may also lead to inefficient model training and inference due to redundant points. Consequently, reducing the complexity of point cloud data processing while maintaining detection accuracy remains an urgent challenge [4].

To address the challenges of modality loss and computational overhead, this paper proposes an efficient cross-modal framework for industrial anomaly detection. The framework combines cross-modal pre-training with modality-elastic inference, alongside a 3D feature extraction strategy that incorporates dynamic voxel subsampling and edge retention. First, during training, the teacher model utilises complete RGB and point cloud features to generate high-quality anomaly annotations. The student model, trained with either modality randomly withheld, learns to retain cross-modal complementary information through a distillation loss comprising cross-entropy and KL divergence terms. During inference, the student model detects anomalies using only the available modality inputs, leveraging the cross-modal knowledge acquired during training. This combination of cross-modal pre-training and inference that is resilient to missing modalities enhances the method’s robustness to incomplete modalities. Second, we design a dynamic voxel resolution adjustment strategy to efficiently extract 3D structural features. Specifically, this strategy adaptively adjusts the voxel size for downsampling based on local point cloud density, while preserving key geometric edge points to minimise the loss of useful information. This reduces the volume of point cloud data and the computational cost while maintaining sensitivity to anomalous regions, enabling real-time processing of large-scale point clouds. Consequently, our method balances accuracy and efficiency in detecting industrial product defects.

The principal contributions of this work are summarised as follows:

- Integrated Cross-Modal Pre-training and Missing-Modal Resilient Inference Framework: Proposes a multimodal learning framework for industrial anomaly detection. During training, it fuses RGB and point cloud modalities to learn rich feature representations, while supporting missing-modal inputs during inference. This framework effectively addresses performance degradation caused by incomplete modalities in practical applications, substantially enhancing the robustness of anomaly detection.

- A dynamic voxel subsampling method with an edge-preserving strategy is designed for highly efficient feature extraction from large-scale point cloud data. It dynamically adjusts voxel subsampling ratios based on point cloud density while extracting structural features that preserve geometric edge information. This approach substantially reduces data volume and computational overhead while maintaining the ability to detect minute defects, laying the foundation for real-time 3D anomaly detection.

- Performance Enhancement and Industrial Applicability: The method’s efficacy was validated on public benchmarks such as MVTec 3D-AD [3], where it achieves new best results, with particularly strong performance in single-modal inference and high-noise scenarios. Combining high accuracy with high efficiency, it demonstrates strong potential for industrial implementation.

2. Related Work

2.1. Two-Dimensional Industrial Anomaly Detection

As a key link in quality control, industrial anomaly detection has received extensive attention in recent years. In particular, feature embedding-based methods have shown excellent performance in recent studies.

Feature-embedding-based anomaly detection relies on pre-trained feature extractors (typically visual models trained on large-scale datasets) and specially designed anomaly detection modules [9]. Approaches such as PatchCore [10] and PaDiM [11] identify anomalies by constructing a feature memory bank of normal samples and computing distances between test samples and this memory bank during inference. Methods like SPADE and UniAD further incorporate self-supervised learning strategies, enhancing the discriminative power of feature representations through tasks such as contrastive learning or mask reconstruction [12]. Memory-based approaches have achieved notable success in industrial anomaly detection, offering the advantage of capturing multi-scale semantic and textural features while maintaining low computational complexity.

Leveraging the common principles underlying 2D and 3D anomaly detection, this paper extends memory-based methods to three-dimensional and multimodal settings. By designing a geometry-guided feature fusion mechanism, our approach effectively integrates complementary information from different modalities, significantly enhancing detection accuracy and robustness—particularly when handling small-sized, low-contrast industrial defects. Experimental results demonstrate that the proposed method achieves state-of-the-art performance across multiple industrial anomaly detection benchmarks.

2.2. Three-Dimensional Industrial Anomaly Detection

The field of industrial anomaly detection has expanded progressively from two-dimensional to three-dimensional spaces in recent years, addressing the challenges of detecting complex geometries and surface defects. In unsupervised three-dimensional anomaly detection (3D UIAD) for industrial scenarios, mainstream approaches typically utilise raw 3D point clouds or corresponding depth maps as input, aiming to identify and localise anomalous regions through spatial layout, geometric morphology, and depth features. Unlike RGB images, three-dimensional data lacks colour information. Consequently, detection accuracy is often low for minute anomalies, such as surface stains or rust spots, that exhibit no pronounced geometric or abrupt changes. However, for defects with distinct geometric features—such as cracks, holes, or misalignments—3D UIAD methods can achieve more reliable detection outcomes. Currently, the number of UIAD methods that rely solely on pure three-dimensional inputs remains limited.

To address this gap, 3D-ST [1] pioneered the introduction of a student–teacher framework within the three-dimensional point cloud domain. This approach constructs a teacher network specifically tailored for point clouds, pre-training it using local geometric descriptors and supplementary annotation data. This enables the teacher to learn high-dimensional representations of normal geometric structures. Subsequently, the student network learns to extract geometric features from raw point clouds under the guidance of the teacher. Consequently, during inference, accurate detection of geometric anomalies can be achieved using only unannotated point cloud input. Complementing this, Reg3D-AD [13] pioneered the application of a memory bank mechanism in 3D UIAD. It stores feature sets from normal samples within the memory bank, enabling rapid retrieval of similar features through comparative queries. This enables the system to identify whether a given point cloud exhibits geometric anomalies that deviate from the normal distribution, thereby effectively addressing the evaluation challenges posed by insufficient baseline data.

When processing high-resolution point clouds, some studies have further optimised approaches from both feature representation and computational efficiency perspectives. Group3AD [14] proposed a method based on group-level representations, partitioning the input point cloud into several semantic subgroups. It constructs contrastive learning tasks within individual samples, enabling the network to maintain sensitivity to minute geometric changes across different point cloud resolutions and scales. This achieves robust anomaly detection for high-resolution point clouds. In contrast, PointCore [15] focuses on integrating multi-source features within limited computational resources by tightly coupling locally co-occurring features stored in memory with global features extracted by the PointMAE pre-trained model, effectively avoiding the high computational overhead and feature mismatch issues associated with multi-memory schemes.

Additionally, PointCore employs a ranking-based normalisation strategy to mitigate the impact of distribution discrepancies between coordinate anomaly scores and PointMAE anomaly scores for extreme value pairs, thereby balancing the multi-channel anomaly scoring system. Addressing generalization challenges under sparse training data, R3D-AD [16] employs anomaly simulation strategies to generate representative pseudo-anomaly samples by simulating geometric distortions, such as “bulging,” “sinking,” and “damage,” in point clouds. Subsequently, diffusion models reconstruct these pseudo-samples, enabling the detection and localisation of minute anomalies in real point clouds through reconstruction error analysis.

In our research, we adopt a feature embedding-based methodological framework and propose an innovative teacher-student model. Unlike existing approaches that focus solely on local-level cross-modal alignment while neglecting global feature consistency, we design a multi-scale feature fusion mechanism. This simultaneously optimises intra-modal feature compactness and cross-modal information exchange, thereby enhancing anomaly detection accuracy and robustness while maintaining computational efficiency.

2.3. Voxel-Based Point Cloud Downsampling

Voxel-based downsampling represents a widely adopted data simplification technique in point cloud processing, originally proposed by Rusinkiewicz and Levoy in 2002 [17]. This method achieves dimensionality reduction and sparsification by partitioning three-dimensional space into a regular cubic grid (voxels) and replacing the original point set with the centroid of each non-empty voxel [11]. The core advantage of voxel subsampling lies in its ability to transform unstructured point cloud data into a regular, discrete representation. This significantly reduces computational complexity in subsequent processing while preserving key features of the original geometric structure [18].
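The basic operation described above (a regular cubic grid, with each non-empty voxel replaced by the centroid of its points) can be sketched in a few lines of NumPy. This is a minimal illustration only; the function name `voxel_downsample` and the synthetic point cloud are assumptions made for the example and are not taken from any cited implementation.

```python
import numpy as np

def voxel_downsample(points: np.ndarray, voxel_size: float) -> np.ndarray:
    """Replace the points inside each non-empty voxel by their centroid."""
    # Integer voxel index of every point along x, y and z.
    idx = np.floor((points - points.min(axis=0)) / voxel_size).astype(np.int64)
    # Group points that share a voxel index.
    _, inverse, counts = np.unique(idx, axis=0, return_inverse=True, return_counts=True)
    inverse = inverse.reshape(-1)
    sums = np.zeros((counts.size, 3))
    np.add.at(sums, inverse, points)      # accumulate coordinates per voxel
    return sums / counts[:, None]         # centroid = sum / count

# Hypothetical data: 10,000 random points in the unit cube.
rng = np.random.default_rng(0)
cloud = rng.uniform(0.0, 1.0, size=(10_000, 3))
reduced = voxel_downsample(cloud, voxel_size=0.1)

# Tiny hand-checkable case: two points share a voxel, one is alone.
tiny = np.array([[0.0, 0.0, 0.0], [0.05, 0.0, 0.0], [1.0, 1.0, 1.0]])
tiny_reduced = voxel_downsample(tiny, voxel_size=0.5)
```

With a fixed `voxel_size`, every region is simplified at the same scale, which is exactly the limitation that density-adaptive variants try to remove.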

In recent years, researchers have proposed various improved voxel subsampling methods to address the limitations of traditional approaches. Yang et al. introduced an adaptive voxel size-based point sampling method (AVS-Net), which dynamically adjusts voxel size according to the local density distribution of the point cloud, effectively balancing computational efficiency and geometric fidelity [5,9]. Xiao et al. proposed a hierarchical voxel segmentation subsampling method that balances point cloud subsampling effects by increasing the number of segmentation layers, achieving efficient subsampling while preserving key features [19].

Despite voxel down-sampling’s commendable performance with large-scale point cloud data, inherent limitations persist: fixed voxel sizes may cause geometric detail loss, particularly in regions with significant density variations; simple centroid calculations can blur high-curvature features like edges and corners; furthermore, quantisation errors during voxelisation may introduce new noise [20,21,22,23,24,25].

To address these limitations, we propose an adaptive downsampling algorithm based on a dynamic voxel resolution adjustment strategy, with a focus on preserving critical structural information and detailed textures across different scales. The algorithm comprises two core modules: an adaptive downsampling module and a point cloud contour extraction module. The adaptive downsampling module dynamically adjusts voxel parameters based on the local geometric characteristics of the point cloud, whilst the contour extraction module concentrates on preserving key structural features within the point cloud.

2.4. Cross-Modal Knowledge Distillation

Cross-Modal Knowledge Distillation (CMKD), an effective knowledge transfer paradigm, has made significant progress in multimodal learning over the past few years. Traditional multimodal architectures typically require complete modal inputs during both training and inference phases, severely limiting their applicability in practical scenarios involving modal deficiencies or sensor failures. Cross-modal knowledge distillation establishes knowledge bridges between different modalities, enabling models to maintain high performance under single-modal conditions while retaining the advantages of multimodal complementary information.

The theoretical foundation of cross-modal knowledge distillation can be traced back to Vapnik’s Learning Using Privileged Information (LUPI) framework [26], in which the teacher model accesses additional “privileged” modal information, while the student model must reason under conditions where this privileged information is absent. Unlike traditional knowledge distillation, cross-modal knowledge distillation faces the core challenge of domain gaps between modalities. This often renders direct application of unimodal knowledge distillation methods ineffective in cross-modal scenarios.

Recent research indicates that the domain gap manifests primarily in two aspects: modality imbalance and soft label misalignment [27]. The former reflects significant disparities in prediction confidence across modalities for target categories, while the latter reveals divergent prediction distributions for non-target categories. These discrepancies render straightforward imitation of the teacher modality by the student modality both challenging and limited in effectiveness.

In industrial anomaly detection, cross-modal knowledge distillation demonstrates unique advantages. Traditional approaches, such as the hallucination network proposed by Garcia et al., enhance video action recognition by generating RGB-D cross-modal representations. Wang et al.’s LCKD effectively addresses missing modality issues by identifying the most pertinent modality as the teacher [28,29,30,31].

However, existing research has overly focused on completing training for missing modalities to improve performance, while neglecting in-depth exploration of the intrinsic correlations between modalities. Our experimental results demonstrate that studying inter-modal correlations holds greater significance for guiding cross-modal knowledge distillation. Particularly in industrial anomaly detection scenarios, understanding the complementary relationships between different modalities (such as RGB images, depth maps, and thermal imaging) enables more effective utilization of existing modalities in quality control and leveraging human experts’ prior knowledge. This approach ultimately enhances the defect identification rate in multimodal industrial anomaly detection.

3. Methodology

3.1. Overview

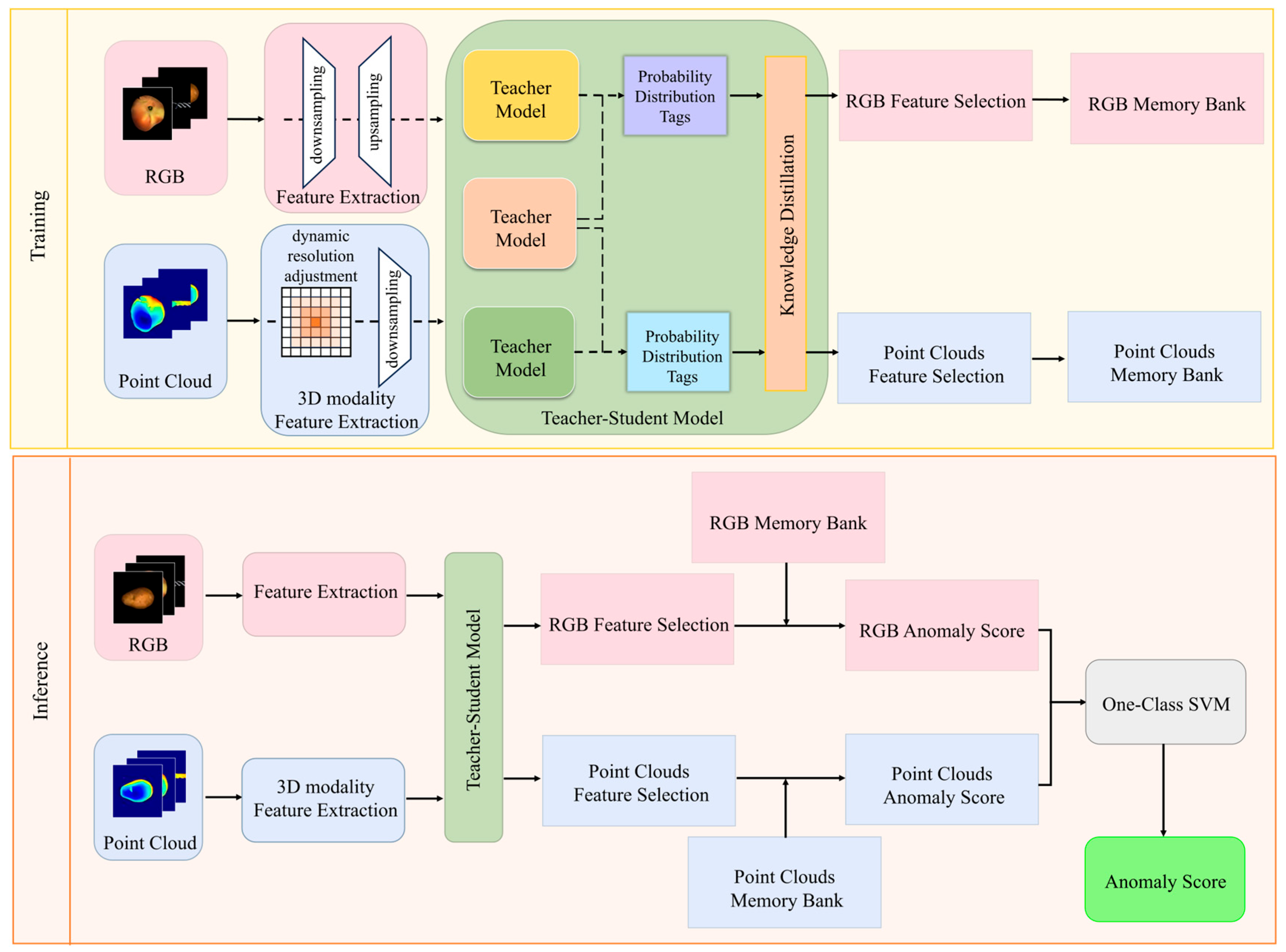

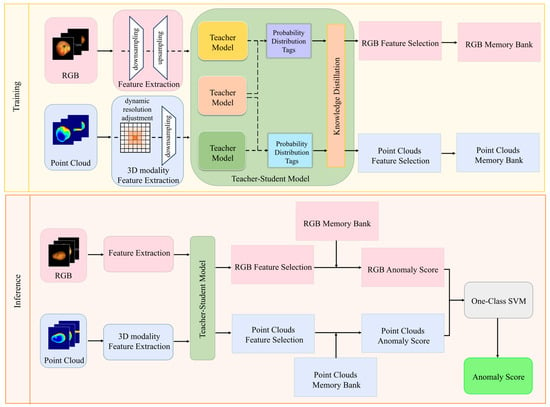

This paper presents an integrated framework, which is sequentially unfolded in Section 3, as illustrated in Figure 1.

Figure 1. Dual-modal complementary learning during training, coupled with knowledge transfer perception for missing modalities during inference.

The core workflow comprises the following steps. First, the pre-trained image and point cloud networks described in Section 3.2 are frozen and used to extract high-level RGB and 3D features, providing consistent input for subsequent distillation and memory construction. Next, in Section 3.3, a teacher model generates bimodal “hallucinated” soft labels. Under a training scheme comprising 40% bimodal data, 30% RGB-deficient data, and 30% point cloud-deficient data, the student model learns robust joint representations guided by a distillation loss that combines cross-entropy and KL divergence. Anomalous data consist of samples containing real industrial defects such as surface scratches, dents, cracks, contaminations, or structural misalignments. Then, in Section 3.4.1, the normal-sample features extracted by the student model are reduced in dimension by orthogonal random projection, and K-means clustering selects representative prototypes to construct a compact and efficient normal feature memory bank. Finally, in Section 3.4.2, anomaly scores are computed via pixel-level and image-level nearest-neighbour distance matching against the memory bank, and a one-class SVM fuses the two levels of decision, enabling high-precision detection and localisation of industrial defects. Through this modular design, our method balances detection accuracy and operational efficiency while enhancing robustness in modality-deficient scenarios.
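The memory-bank part of this workflow (random orthogonal projection, K-means prototype selection, nearest-neighbour scoring) can be illustrated with the following sketch. It is a simplified stand-in under stated assumptions: the feature dimensions, the number of prototypes, the plain Lloyd's K-means, and all function names are hypothetical, and random vectors replace real student features.

```python
import numpy as np

def orthogonal_projection(dim_in, dim_out, rng):
    """Random matrix with orthonormal columns (QR of a Gaussian matrix)."""
    q, _ = np.linalg.qr(rng.standard_normal((dim_in, dim_out)))
    return q                                   # shape (dim_in, dim_out)

def kmeans_prototypes(feats, k, rng, iters=20):
    """Plain Lloyd's k-means; returns k prototype vectors."""
    centres = feats[rng.choice(len(feats), size=k, replace=False)]
    for _ in range(iters):
        d = np.linalg.norm(feats[:, None, :] - centres[None, :, :], axis=2)
        assign = d.argmin(axis=1)
        for c in range(k):
            members = feats[assign == c]
            if len(members):                   # keep the old centre if a cluster empties
                centres[c] = members.mean(axis=0)
    return centres

def anomaly_scores(test_feats, bank):
    """Distance from each test feature to its nearest prototype."""
    d = np.linalg.norm(test_feats[:, None, :] - bank[None, :, :], axis=2)
    return d.min(axis=1)

rng = np.random.default_rng(0)
normal = rng.standard_normal((500, 64))        # stand-in for normal-sample features
proj = orthogonal_projection(64, 16, rng)
bank = kmeans_prototypes(normal @ proj, k=32, rng=rng)

# Five in-distribution test vectors followed by five strongly shifted ones.
test = np.vstack([rng.standard_normal((5, 64)),
                  8.0 + rng.standard_normal((5, 64))])
scores = anomaly_scores(test @ proj, bank)
```

In this toy setting the shifted vectors receive clearly larger scores than the in-distribution ones; the one-class SVM fusion stage is not shown.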

3.2. Integrated Framework for Cross-Modal Pre-Training and Elastic Inference Under Modal Deficiency

Recent work such as the multimodal 3D detection model M3DM [30], the self-supervised Transformer framework DINO [32], and the point cloud masked autoencoder Point-MAE shows that pre-trained Transformers perform exceptionally well at distinguishing the features of normal and anomalous data. Given their proficiency in capturing data transformation relationships, this paper first employs them as deep feature extractors ($\mathcal{F}_{rgb}$ and $\mathcal{F}_{pt}$) to obtain high-level representations suitable for data compression and storage. Specifically, the multimodal data input to the feature extractors are denoted as $I_{rgb}$ and $P$, while the extracted output features are denoted as $f_{rgb}$ and $f_{pt}$.

3.2.1. RGB Feature Extraction

To ensure stable feature extraction during pre-training, basic requirements were defined for the input RGB images. A minimum resolution of 224 × 224 pixels was adopted, consistent with the configuration of vision transformers such as DINO, which balances computational efficiency with the retention of fine-grained texture and defect details. Therefore, in industrial settings, we recommend imaging sensors with at least HD resolution (≥1280 × 720) and adequate illumination to minimise overexposure and specular reflections. These conditions set the lower bound for acceptable image quality and support reproducibility across diverse sensor sources.

For a given RGB image $I_{rgb} \in \mathbb{R}^{H \times W \times 3}$, we employ the pre-trained image feature extractor $\mathcal{F}_{rgb}$ to map it into a low-dimensional semantic space, generating the feature map

$$f_{rgb} = \mathcal{F}_{rgb}(I_{rgb}) \in \mathbb{R}^{h \times w \times d},$$

where $h$ and $w$ denote the spatial dimensions after downsampling, and $d$ represents the feature dimension. To achieve cross-modal alignment with point cloud features, bilinear upsampling is applied to this feature map, yielding the aligned feature representation $\hat{f}_{rgb}$.
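As an illustration of the bilinear upsampling step, the sketch below resizes a coarse feature map to a finer grid in NumPy. The 14 × 14 × 8 input, the 224 × 224 target, the align-corners sampling convention, and the name `bilinear_upsample` are all assumptions for the example; the paper does not state these details.

```python
import numpy as np

def bilinear_upsample(feat: np.ndarray, out_h: int, out_w: int) -> np.ndarray:
    """Bilinearly resize an (h, w, d) feature map to (out_h, out_w, d)."""
    h, w, _ = feat.shape
    # Sample positions in source coordinates (align-corners convention, assumed).
    ys = np.linspace(0, h - 1, out_h)
    xs = np.linspace(0, w - 1, out_w)
    y0 = np.floor(ys).astype(int)
    x0 = np.floor(xs).astype(int)
    y1 = np.minimum(y0 + 1, h - 1)
    x1 = np.minimum(x0 + 1, w - 1)
    wy = (ys - y0)[:, None, None]              # vertical interpolation weights
    wx = (xs - x0)[None, :, None]              # horizontal interpolation weights
    top = feat[y0][:, x0] * (1 - wx) + feat[y0][:, x1] * wx
    bot = feat[y1][:, x0] * (1 - wx) + feat[y1][:, x1] * wx
    return top * (1 - wy) + bot * wy

rng = np.random.default_rng(0)
coarse = rng.standard_normal((14, 14, 8))      # hypothetical patch-level features
aligned = bilinear_upsample(coarse, 224, 224)
```

Because each output value is a convex combination of four neighbouring inputs, the upsampled map never exceeds the value range of the original features.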

3.2.2. Three-Dimensional Point Cloud Feature Extraction

To efficiently process large-scale 3D point cloud data and effectively mitigate feature information loss, this paper proposes an adaptive downsampling algorithm based on a voxel-based dynamic resolution adjustment strategy. This method comprises two core modules: dynamic voxel resolution adjustment and adaptive downsampling. Working synergistically, they adaptively adjust the sampling scale to accommodate variations in local point density while precisely preserving critical geometric edge information within the point cloud. This achieves an optimal balance between data simplification and feature fidelity.

For the input point cloud $P = \{p_i\}_{i=1}^{N}$ with $p_i \in \mathbb{R}^3$, the inherent sparsity and non-uniform distribution of the data must be carefully considered. To address this, farthest point sampling (FPS) [33] is applied to choose well-spaced centroids, which act as reference points for forming local neighbourhoods through K-nearest neighbour (KNN) search over the original points. These subsets are subsequently forwarded to the feature extractor $\mathcal{F}_{pt}$, which converts them into latent feature embeddings of dimension $d_{pt}$. In practice, following PointNet++, a common setting is to sample 1024 centroids at the input layer, while larger-scale scene segmentation tasks typically adopt 4096 points to ensure sufficient coverage. Traditional fixed voxel sizes, however, often fail to balance global consistency with local detail when handling regions exhibiting pronounced local density variations. To overcome this limitation, we propose a dynamic voxel segmentation strategy that adaptively adjusts voxel size based on local point density.
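The FPS and KNN grouping step can be sketched as follows. This is a brute-force NumPy illustration, assuming a small synthetic cloud and hypothetical function names; production code would normally use a spatial index or a GPU kernel rather than dense distance matrices.

```python
import numpy as np

def farthest_point_sampling(points: np.ndarray, m: int, start: int = 0) -> np.ndarray:
    """Greedily pick m indices so each new point is farthest from those already chosen."""
    chosen = np.empty(m, dtype=np.int64)
    chosen[0] = start
    dist = np.linalg.norm(points - points[start], axis=1)
    for i in range(1, m):
        chosen[i] = int(dist.argmax())
        # Distance of every point to its nearest chosen centroid so far.
        dist = np.minimum(dist, np.linalg.norm(points - points[chosen[i]], axis=1))
    return chosen

def knn_groups(points: np.ndarray, centre_idx: np.ndarray, k: int) -> np.ndarray:
    """Indices of the k nearest original points around each centroid."""
    d = np.linalg.norm(points[centre_idx][:, None, :] - points[None, :, :], axis=2)
    return np.argsort(d, axis=1)[:, :k]

rng = np.random.default_rng(0)
cloud = rng.uniform(size=(2000, 3))            # hypothetical point cloud
centres = farthest_point_sampling(cloud, m=64)
groups = knn_groups(cloud, centres, k=16)      # shape (64, 16)

# Hand-checkable case on a line: the far end is picked first, then the widest gap.
line = np.array([[0.0, 0, 0], [1.0, 0, 0], [2.0, 0, 0], [3.0, 0, 0], [10.0, 0, 0]])
line_pick = farthest_point_sampling(line, 3)
```

Each row of `groups` is one local neighbourhood that would be passed to the point cloud feature extractor.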

To perform spatial voxelisation of the point cloud, we first compute the maximum and minimum values of the point cloud in the $x$, $y$, and $z$ directions, denoted respectively as $x_{\max}, y_{\max}, z_{\max}$ and $x_{\min}, y_{\min}, z_{\min}$.

Let

$$L_x = x_{\max} - x_{\min}, \quad L_y = y_{\max} - y_{\min}, \quad L_z = z_{\max} - z_{\min}.$$

The maximum voxel block encompassing the entire point cloud can then be constructed, with edge lengths $L_x$, $L_y$, and $L_z$ in the respective directions. Within this maximum voxel block, we subdivide the three-dimensional space to generate a series of initial voxel cells for subsequent adaptive segmentation.
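A minimal sketch of this initial subdivision is given below: it computes the enclosing block from the coordinate extrema, splits it into cubic cells, and counts the points falling into each occupied cell. The cell edge length `cell`, the clamping of boundary points into the last cell, and the function name are assumptions for illustration.

```python
import numpy as np

def initial_voxel_grid(points: np.ndarray, cell: float):
    """Enclosing block, grid shape, occupied cell indices and per-cell point counts."""
    lo, hi = points.min(axis=0), points.max(axis=0)
    extent = hi - lo                                   # edge lengths of the enclosing block
    n_cells = np.maximum(np.ceil(extent / cell).astype(np.int64), 1)
    # Points lying exactly on the upper boundary are clamped into the last cell.
    idx = np.minimum(np.floor((points - lo) / cell).astype(np.int64), n_cells - 1)
    occupied, counts = np.unique(idx, axis=0, return_counts=True)
    return extent, n_cells, occupied, counts

pts = np.array([[0.0, 0.0, 0.0],
                [0.1, 0.1, 0.1],
                [1.0, 1.0, 1.0],
                [2.0, 2.0, 2.0]])
extent, n_cells, occupied, counts = initial_voxel_grid(pts, cell=1.0)
density = counts / 1.0 ** 3                            # points per unit volume in each cell
```

Dividing each count by the cell volume gives a per-cell point density, the quantity on which a density-adaptive refinement can operate.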

For each voxel unit $v$, denote its internal point set as $P_v$, the number of points as $N_v = |P_v|$, and the voxel volume as $V_v$. We define the local point density

$$\rho_v = \frac{N_v}{V_v}.$$

Based on this, we construct the voxel size function

where $s_{\max}$ and $s_{\min}$ denote the upper and lower bounds of the voxel size, respectively, and $\lambda$ is the adjustment parameter. This strategy ensures that smaller voxels are employed in dense regions to capture fine details, whilst larger voxels are used in sparse areas to enhance sampling efficiency.
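To make the behaviour of a density-dependent voxel size concrete, the sketch below uses an exponential decay between the two bounds. This functional form is purely an assumption chosen because it satisfies the stated properties (bounded between the lower and upper limits, smaller in dense regions, controlled by one adjustment parameter); it is not claimed to be the exact function used in this paper, and the names `voxel_size`, `s_min`, `s_max`, and `lam` are hypothetical.

```python
import numpy as np

def voxel_size(density, s_min: float, s_max: float, lam: float) -> np.ndarray:
    """Assumed form: s = s_min + (s_max - s_min) * exp(-lam * density)."""
    density = np.asarray(density, dtype=float)
    return s_min + (s_max - s_min) * np.exp(-lam * density)

# Empty, moderately dense and very dense cells (hypothetical densities).
sizes = voxel_size([0.0, 10.0, 1000.0], s_min=0.01, s_max=0.1, lam=0.05)
```

An empty cell receives the upper bound, and the size decreases monotonically towards the lower bound as density grows; a larger `lam` makes the transition sharper.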

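Following adaptive sampling, for each set of points $P_v$ within a voxel, we construct local geometric features reflecting the point cloud’s shape and edge information. First, for each point $p_i$, we compute the covariance matrix using its neighbourhood set $\mathcal{N}(p_i)$:

$$C_i = \frac{1}{|\mathcal{N}(p_i)|} \sum_{p_j \in \mathcal{N}(p_i)} (p_j - \bar{p}_i)(p_j - \bar{p}_i)^{\top},$$

where $\bar{p}_i = \frac{1}{|\mathcal{N}(p_i)|} \sum_{p_j \in \mathcal{N}(p_i)} p_j$ is the centroid of the neighbourhood.

By performing an eigenvalue decomposition on $C_i$, the eigenvector corresponding to the smallest eigenvalue is taken as the normal vector $n_i$ for point $p_i$. Based on this, we define the local edge response metric: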

This metric exhibits lower values in flat regions while significantly increasing at edges or areas of structural discontinuity, thereby providing effective guidance for subsequent edge preservation.
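The covariance-based normal estimation, together with one possible edge response, can be sketched as follows. The normal is taken as the eigenvector of the smallest eigenvalue, as described above. The edge response used here is the surface-variation ratio (smallest eigenvalue divided by the eigenvalue sum), which is an assumption standing in for the paper's metric; it shares the stated behaviour of being near zero on flat regions and larger at creases. The neighbourhood size `k` and the synthetic L-shaped cloud are also hypothetical.

```python
import numpy as np

def normals_and_edge_response(points: np.ndarray, k: int = 12):
    """Per-point normal (smallest-eigenvalue eigenvector) and surface-variation response."""
    d = np.linalg.norm(points[:, None, :] - points[None, :, :], axis=2)
    nbrs = np.argsort(d, axis=1)[:, :k]            # k nearest neighbours, including the point itself
    normals = np.empty_like(points)
    response = np.empty(len(points))
    for i, nb in enumerate(nbrs):
        q = points[nb] - points[nb].mean(axis=0)   # centre the neighbourhood
        cov = q.T @ q / k
        evals, evecs = np.linalg.eigh(cov)         # eigenvalues in ascending order
        normals[i] = evecs[:, 0]
        response[i] = evals[0] / max(evals.sum(), 1e-12)   # assumed edge metric
    return normals, response

# Two perpendicular planes meeting along the y-axis (a sharp crease).
g = np.linspace(0.0, 1.0, 11)
plane_a = np.array([(x, y, 0.0) for x in g for y in g])
plane_b = np.array([(0.0, y, z) for y in g for z in g[1:]])
cloud = np.vstack([plane_a, plane_b])
normals, resp = normals_and_edge_response(cloud)

crease = (cloud[:, 0] == 0.0) & (cloud[:, 2] == 0.0)   # points on the fold line
flat = (cloud[:, 0] >= 0.5) & (cloud[:, 2] == 0.0)     # points well inside plane A
```

On this toy cloud, points deep inside a plane have a response of essentially zero and a normal along the z-axis, while points on the crease respond strongly.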

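To smoothly map the sparsely extracted local features back onto the entire point cloud, we employ an improved inverse distance weighting (IDW) interpolation method. Let the neighbourhood sampling set of the point $p$ to be interpolated be denoted as $\mathcal{N}(p)$. Its feature vector $f(p)$ is given by the weighted average of the neighbouring features $f_j$:

$$f(p) = \frac{\sum_{p_j \in \mathcal{N}(p)} w_j \, f_j}{\sum_{p_j \in \mathcal{N}(p)} w_j},$$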

where the weighting function simultaneously accounts for spatial distance and normal vector similarity, defined as

where $\sigma_d$ and $\sigma_n$ represent the standard deviation parameters for distance and normal vector similarity, respectively. This refinement not only ensures spatial smoothness but also enhances feature discrimination in edge regions.
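The following sketch illustrates an interpolation whose weights depend on both spatial distance and normal similarity. The product of two Gaussian kernels used here is an assumption, chosen because the text describes two standard deviation parameters; the exact weighting function of the paper may differ. All argument names are hypothetical.

```python
import numpy as np

def interpolate_feature(p, n, nbr_pts, nbr_normals, nbr_feats, sigma_d, sigma_n):
    """Weighted average of neighbour features; weights fall off with
    distance and with disagreement between normals (assumed Gaussian kernels)."""
    dist2 = np.sum((nbr_pts - p) ** 2, axis=1)
    dissim = 1.0 - np.abs(nbr_normals @ n)         # 0 when normals are parallel
    w = np.exp(-dist2 / (2 * sigma_d ** 2)) * np.exp(-dissim ** 2 / (2 * sigma_n ** 2))
    return (w[:, None] * nbr_feats).sum(axis=0) / w.sum()

p = np.zeros(3)
n = np.array([0.0, 0.0, 1.0])
nbr_pts = np.array([[0.1, 0.0, 0.0], [-0.1, 0.0, 0.0]])      # equidistant neighbours
nbr_normals = np.array([[0.0, 0.0, 1.0], [1.0, 0.0, 0.0]])   # parallel vs perpendicular normal
nbr_feats = np.array([[1.0, 0.0], [0.0, 1.0]])
out = interpolate_feature(p, n, nbr_pts, nbr_normals, nbr_feats, sigma_d=0.2, sigma_n=0.5)
```

Although both neighbours are equally close, the one whose normal agrees with the query point dominates the result, which is the mechanism that keeps features from bleeding across an edge.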

Finally, through average pooling, we obtain the downsampled feature map $f_{pt}$. By merging the detected edge point set with the downsampled centroid point set, the final point set is formed, preserving key geometric features of the point cloud while significantly reducing computational overhead.
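The final merging step can be sketched as a simple union of the voxel centroids and the points with the strongest edge response. The quantile threshold used to decide which points count as edges is an assumption for illustration, as are the function and parameter names.

```python
import numpy as np

def merge_edge_and_centroids(points, response, centroids, edge_quantile=0.9):
    """Stack voxel centroids with the points whose edge response is in the top quantile."""
    threshold = np.quantile(response, edge_quantile)   # assumed selection rule
    edge_pts = points[response >= threshold]
    return np.vstack([centroids, edge_pts])

rng = np.random.default_rng(0)
points = rng.uniform(size=(10, 3))
response = np.arange(10, dtype=float)      # hypothetical edge responses
centroids = rng.uniform(size=(3, 3))       # hypothetical voxel centroids
merged = merge_edge_and_centroids(points, response, centroids)
```

Here only the single highest-response point passes the threshold, so the merged set holds the three centroids plus that one edge point.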

Through the aforementioned design, this module achieves density-adaptive sampling and structure-aware feature extraction for point cloud data, subsequently restoring global features via geometry-guided interpolation. Experimental validation demonstrates that this method accurately preserves local geometric structures and edge information while ensuring data reduction efficiency, providing substantial support for subsequent feature fusion and anomaly detection.

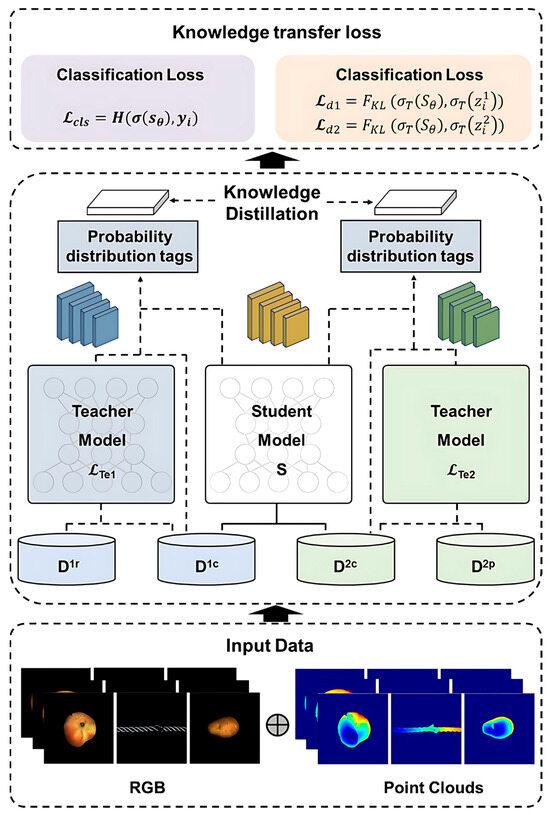

3.3. Cross-Distillation Mode

Industrial data acquisition environments are complex and variable, frequently resulting in missing or damaged modalities. This causes traditional multimodal models to rapidly degrade in performance during inference. Therefore, we propose a cross-modal knowledge distillation method based on a teacher-student framework. This enables the model to fully utilise all available data during training, achieving stable and accurate anomaly detection during inference even when certain modalities are missing.

Having acquired features from each modality, we now describe our cross-modal training strategy. We propose a teacher-student model capable of fully utilising all training samples (even those with missing modalities), while ensuring stable anomaly detection during testing, even when only partial modalities are provided. The core idea is to train teacher models separately for each modality (using all available data for that modality), then use the knowledge from these teacher models to guide the training of a multimodal student model. This ensures that even if a sample lacks a particular modality, it can still provide valuable information for training the student model.

First, train two monomodal teacher models using all available data. Represent multimodal samples as . RGB samples are denoted as , while 3D point cloud samples are represented as . Train the two monomodal teacher models, respectively:

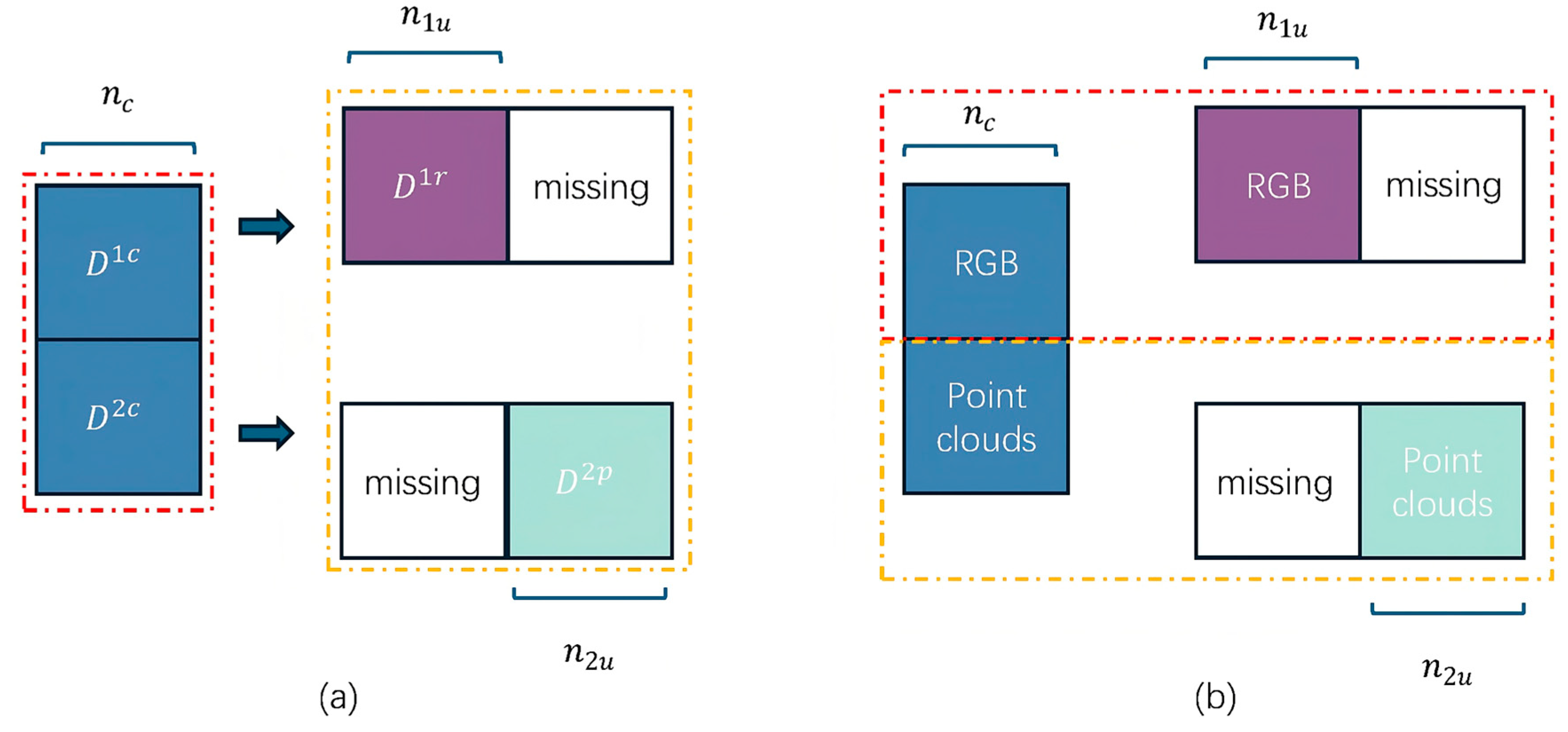

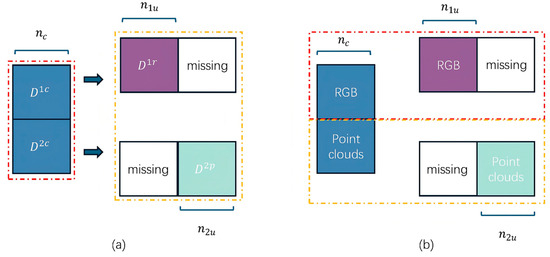

Figure 2a illustrates the data structure. Samples within the blue dashed box possess complete modalities, while those within the yellow dashed box contain only one modality.

where denotes the cross-entropy loss, represents the Softmax function, and .

Figure 2. (a) The dataset comprises two categories: complete bimodal samples and single-modal samples. (b) Sample selection strategy for constructing the dual-teacher model: samples within the red dashed box are used to train the first single-modal teacher model, while those within the yellow dashed box are used to train the second single-modal teacher model. This ensures that each teacher maximises the utilisation of information specific to its dedicated modality.

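We then use these two teachers to annotate the samples in $\{X_1^c, X_2^c\}$. The logits for the $i$-th sample are

$$z_i^{j} = \mathcal{T}_j\big(x_{j,i}^{c}\big), \quad j \in \{1, 2\}, \tag{14}$$

where $z_i^{j}$ denotes the logit score assigned by teacher $j$ to the $i$-th sample.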

Employing cross-modal knowledge distillation, we train a multimodal student model $S$ that takes images and point clouds (where available) as input and outputs anomaly predictions. The student model is designed to fuse information from both modalities, enabling it to outperform the single-modal teachers while adapting to diverse input scenarios. We construct $S$ using a multimodal deep neural network (M-DNN) [34] with a dual-branch architecture, where one branch processes image features and the other handles point cloud features. If the M-DNN is trained following the approach in [35], that is, using only samples with complete modalities $\{X_1^c, X_2^c\}$, the effective training set is restricted to these complete-modality samples. When their number is substantially smaller than the number of samples available for each individual modality, a considerable portion of potentially valuable information is discarded. As a result, the number of available training samples becomes insufficient, which may hinder the learning capacity and generalisation ability of the model. Therefore, we propose to train the M-DNN by distilling knowledge from two teacher models, $\mathcal{T}_1$ and $\mathcal{T}_2$, which are pre-trained on substantially larger datasets. Although the classification performance of each teacher may be limited by its reliance on a single modality, each nevertheless captures modality-specific discriminative patterns. By providing this specialised expertise to the student, the teachers guide it toward learning a more comprehensive multimodal representation. Through this process, the student can effectively integrate complementary knowledge from both modalities, thereby achieving improved performance beyond what unimodal supervision alone can offer (see Algorithm 1).

| Algorithm 1: Algorithm of the proposed method for two modalities. |
| --- |
| , , , , , |
| for each training iteration do |
| Train teacher model with {[, ], [, ]} |
| end for |
| for each training iteration do |
| Train teacher model with {[X2c, X2u], [,]} |
| end for |
| Label the samples to train student model with the teachers with Equation (14). |
| for each training iteration do |
| Train student model with Equation (15) |
| end for |

We denote the student network as , where represents its learnable parameters. The proposed method is trained by minimising the following global objective function:

where α and β are hyperparameters regulating the extent to which the student model acquires knowledge from the two teacher models. A larger value makes the student network rely more heavily on the corresponding teacher model.

The classification loss is defined in cross-entropy form to measure the discrepancy between the student network’s predictions and the true labels:

where denotes cross-entropy and represents the softmax function.

To further enhance the student network’s performance, we conduct knowledge distillation from two teacher models: and . Specifically, the distillation loss measures the distributional difference between the student network’s output and the teacher models’ outputs using Kullback–Leibler divergence (KL divergence). Let the output vectors of the teacher models on sample be denoted as and , respectively. The distillation loss is then defined as follows:

where denotes the softmax function with temperature coefficient T, employed to smooth the output distribution while preserving greater dark knowledge.
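The interplay of the classification and distillation terms can be illustrated with a minimal sketch. It assumes that the global objective is the cross-entropy loss plus the two teacher-to-student KL terms weighted by α and β, and that the KL divergence is taken from each teacher's softened distribution to the student's; neither the exact combination nor the KL direction is confirmed by the text above. The function names (`softmax`, `kl_divergence`, `total_loss`) are hypothetical.

```python
import math

def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax; a larger T gives a smoother distribution."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(student_logits, label):
    """Cross-entropy between softmax(student_logits) and a hard label index."""
    return -math.log(softmax(student_logits)[label])

def kl_divergence(p, q, eps=1e-12):
    """KL(p || q) for two discrete distributions of equal length."""
    return sum(pi * math.log((pi + eps) / (qi + eps))
               for pi, qi in zip(p, q) if pi > 0)

def total_loss(student_logits, label, teacher1_logits, teacher2_logits,
               alpha=0.5, beta=0.5, temperature=5.0):
    """Assumed form: L = L_cls + alpha * KD(teacher 1) + beta * KD(teacher 2)."""
    s_soft = softmax(student_logits, temperature)
    kd1 = kl_divergence(softmax(teacher1_logits, temperature), s_soft)
    kd2 = kl_divergence(softmax(teacher2_logits, temperature), s_soft)
    return cross_entropy(student_logits, label) + alpha * kd1 + beta * kd2
```

When the student already reproduces both teachers, the two distillation terms vanish and only the classification loss remains. Any additional scaling of the distillation terms (for example by the squared temperature, as in some distillation formulations) is omitted here.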

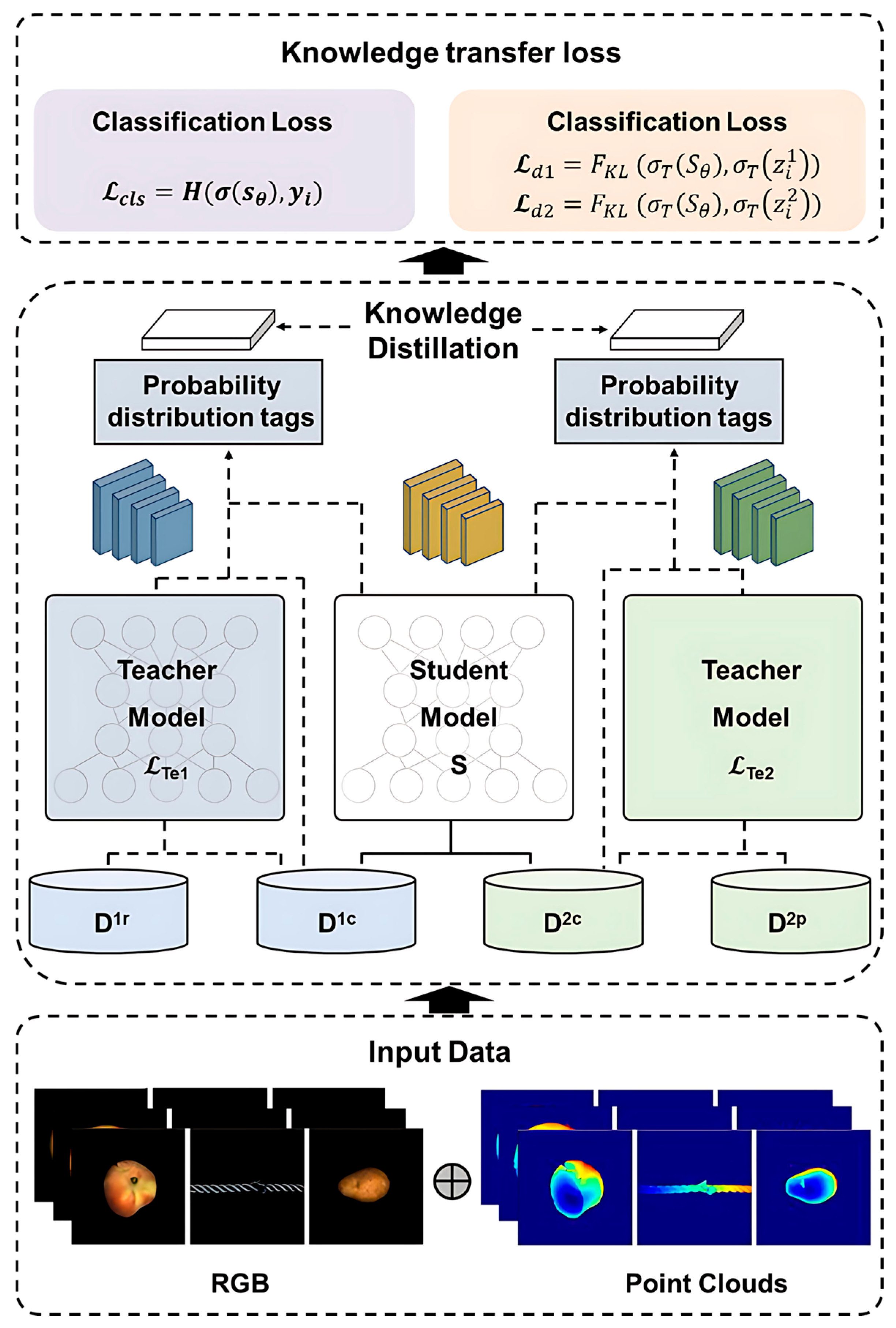

Through this training process, the student model S learns a fused representation that fully utilises information from both modalities and, via the corresponding teacher guidance, has already encountered scenarios in which one modality is missing. Consequently, during inference, the student model can reliably predict anomalies regardless of whether the input is bimodal or unimodal. Figure 3 illustrates the overall structure of the teacher-student distillation framework.

Figure 3.

We first pre-train the teacher network on a full dataset containing both complete and missing modality samples, enabling it to learn robust feature representations across both multi-source information and incomplete input scenarios. Consequently, the student network effectively inherits the teacher network’s cross-modal representation capabilities even when receiving only partial modality inputs, achieving high-precision anomaly detection.

3.4. Unsupervised Feature Fusion

Inference Phase: For any input sample (potentially containing only RGB or point cloud data), the trained student model first extracts its multimodal feature representation. The extracted features are then matched against a pre-constructed normal feature memory bank using nearest neighbour matching, calculating anomaly scores at both image and pixel levels. Finally, a one-class SVM discriminator outputs anomaly detection results (including whether the entire image is anomalous and the pixel-level anomalous regions).

3.4.1. Core Set Selection for Memory Repository Construction

Reference feature library methods quantify abnormality by comparing test sample features with those in a library of normal sample features. Whilst directly storing all normal sample features is feasible, it is prohibitively costly, requiring substantial storage space and time-consuming matching. We therefore construct a feature memory bank that is both comprehensive and compact: it should cover the diversity of normal data as fully as possible to maintain accuracy, while discarding redundant features to reduce storage and computational overhead.

To effectively address this challenge, we employ a clustering algorithm.

The fundamental approach involves partitioning the original feature space into k clusters (where k represents the desired core set size), then selecting one representative point from each cluster as an element within the memory repository. Clustering methods naturally group similar features and extract representative samples from each cluster.

Compared to directly storing all training features, our memory repository retains only k core features, substantially reducing storage and comparison costs (the cost of matching one test feature decreases from O(N) to O(k)). At the same time, clustering ensures that these core features cover the feature space of normal data as fully as possible.

Specifically, given a feature set (containing feature vectors of dimensions), we employ the following steps:

- Apply the k-means clustering algorithm to partition into clusters

- For each cluster , select the sample closest to the cluster centre as its representative:where is the distance metric function. This metric should align with that used for calculating anomaly scores to ensure consistency between feature selection and anomaly detection processes.

- All selected representative points form the final core set
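The three steps above can be sketched as follows. This is an illustrative implementation only: it uses plain Lloyd's k-means with a deterministic farthest-first initialisation (an assumption made for reproducibility, not something the text specifies) and the Euclidean distance as the metric. All function names are hypothetical.

```python
import math

def euclidean(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def init_centres(features, k):
    """Farthest-first initialisation (deterministic; an illustrative choice)."""
    centres = [list(features[0])]
    while len(centres) < k:
        far = max(features, key=lambda f: min(euclidean(f, c) for c in centres))
        centres.append(list(far))
    return centres

def kmeans(features, k, iterations=50):
    """Plain Lloyd's k-means; returns (centres, assignments)."""
    centres = init_centres(features, k)
    assignments = None
    for _ in range(iterations):
        # Assignment step: attach each feature to its nearest centre.
        new_assignments = [
            min(range(k), key=lambda j: euclidean(f, centres[j])) for f in features
        ]
        if new_assignments == assignments:
            break  # converged: assignments did not change
        assignments = new_assignments
        # Update step: move each centre to the mean of its members.
        for j in range(k):
            members = [f for f, a in zip(features, assignments) if a == j]
            if members:  # keep the old centre if a cluster becomes empty
                centres[j] = [sum(col) / len(members) for col in zip(*members)]
    return centres, assignments

def select_core_set(features, k, distance=euclidean):
    """Pick, for each cluster, the stored feature closest to the cluster centre."""
    centres, assignments = kmeans(features, k)
    core = []
    for j in range(k):
        members = [f for f, a in zip(features, assignments) if a == j]
        if members:
            core.append(min(members, key=lambda f: distance(f, centres[j])))
    return core
```

Because each representative is an actual stored feature rather than the cluster mean, every entry of the memory repository corresponds to a real normal sample. The `distance` argument should be the same metric later used for anomaly scoring.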

In determining the number of clusters , we adopted the Elbow Method, which calculates the Within-Cluster Sum of Squares (WCSS) for different values of k and plots the curve. As k increases, the WCSS decreases progressively, but after a certain point, the rate of decrease slows down markedly, resulting in an “elbow” shape in the curve. The corresponding value of at this elbow is regarded as the optimal choice. At this point, adding more clusters yields only marginal improvements. By applying this criterion, we ensure sufficient coverage of the diversity of normal data features while avoiding unnecessary redundancy in storage and computation, thereby achieving a balance between accuracy and efficiency. Consequently, the value of used in our experiments was determined based on this principle.
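As a small illustration of the elbow criterion, the sketch below computes the WCSS for a given clustering and locates the elbow as the point of largest second difference of the WCSS curve. The second-difference rule is an assumed stand-in for the visual inspection described above, and the function names are hypothetical.

```python
def wcss(features, centres, assignments):
    """Within-cluster sum of squared distances to the assigned centre."""
    return sum(
        sum((x - c) ** 2 for x, c in zip(f, centres[a]))
        for f, a in zip(features, assignments)
    )

def elbow_index(wcss_values):
    """Index of the largest second difference of the WCSS curve.

    Assumed heuristic: the elbow is where the decrease slows down most sharply.
    Requires at least three WCSS values.
    """
    best, best_bend = None, float("-inf")
    for i in range(1, len(wcss_values) - 1):
        bend = wcss_values[i - 1] - 2 * wcss_values[i] + wcss_values[i + 1]
        if bend > best_bend:
            best, best_bend = i, bend
    return best

# Illustrative (made-up) WCSS values for k = 1..5; the curve flattens after k = 3.
ks = [1, 2, 3, 4, 5]
curve = [100.0, 60.0, 12.0, 10.0, 9.0]
chosen_k = ks[elbow_index(curve)]
```

With these made-up values the heuristic selects k = 3, the last point before the curve flattens.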

However, as the sample size increases, similarity calculations among high-dimensional features remain highly computationally intensive. To address this, we introduce Orthogonal Random Projection (Li et al., 2022) [36] to reduce feature dimensions. This method preserves relative distance relationships between features, significantly accelerating computation while maintaining acceptable representation quality. Experiments demonstrate that applying this technique increases feature extraction speed by approximately 3.5 times, while incurring a loss of less than 2% in detection accuracy.
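The idea of an orthogonal random projection can be sketched in a few lines. The example below draws Gaussian rows and orthonormalises them with Gram-Schmidt; this is a generic construction assumed for illustration and is not necessarily the exact procedure of [36]. The function names and the rescaling factor are likewise assumptions.

```python
import math
import random

def orthogonal_projection_matrix(d_in, d_out, seed=0):
    """Draw Gaussian rows and orthonormalise them with Gram-Schmidt (d_out <= d_in)."""
    rng = random.Random(seed)
    rows = []
    while len(rows) < d_out:
        v = [rng.gauss(0.0, 1.0) for _ in range(d_in)]
        for r in rows:  # remove the components along the rows already accepted
            dot = sum(a * b for a, b in zip(v, r))
            v = [a - dot * b for a, b in zip(v, r)]
        norm = math.sqrt(sum(a * a for a in v))
        if norm > 1e-9:  # skip the (very unlikely) degenerate draw
            rows.append([a / norm for a in v])
    return rows

def project(feature, matrix):
    """Project a feature to len(matrix) dimensions.

    The factor sqrt(d_in / d_out) keeps squared norms comparable in expectation.
    """
    scale = math.sqrt(len(feature) / len(matrix))
    return [scale * sum(a * b for a, b in zip(row, feature)) for row in matrix]
```

The same matrix must be applied to both the memory repository and the test features so that distances are compared in the same reduced space.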

Following the aforementioned teacher-student training, we obtained a student model capable of extracting fused features. Subsequently, we utilise this model to extract features from normal samples and construct a reference memory bank for anomaly detection.

3.4.2. Decision Layer Fusion

Previous anomaly detection approaches predominantly calculated a single anomaly score at the image level or focused solely on pixel-level heatmaps. Our method, however, integrates both image-level and pixel-level assessments, employing one-class Support Vector Machines (SVMs) to fuse these two information streams. This dual-level decision fusion is intended to enhance detection reliability: it ensures overall accuracy at the image level while providing precise localisation at the pixel level.

During inference, the model calculates anomaly scores by comparing each real or generated feature map ( and ) against the corresponding memory repository. Two one-class support vector machines optimised by stochastic gradient descent, and , serve as the decision-layer fusion for image classification and segmentation:

C and S denote the final prediction scores for image classification and pixel segmentation, respectively. To balance the average anomaly scores across modalities, correction factors and are introduced and represent the anomaly scores for classification and segmentation, formulated as (Roth et al., 2022) [37]:

is the distance metric. The anomaly score for an image is determined by the maximum distance between its features and the most similar features in its memory bank:

During inference, to fully leverage knowledge acquired through multimodal training, we employ a memory bank matching strategy to assign anomaly scores to input feature maps. Specifically, let feature maps extracted from point clouds and RGB images be denoted as and , respectively, with corresponding memory banks and . To balance scoring scale differences across modalities, we introduce correction factors and δ. Ultimately, two One-Class Support Vector Machine (One-Class SVM) decision functions are employed to determine image classification and pixel-level segmentation, respectively. Their fusion formula is defined as:

where and represent the classification and segmentation decision functions, respectively (both implemented using One-Class SVM optimised by stochastic gradient descent), while and denote the overall image anomaly score and the anomaly response for each pixel, respectively.

To construct the scoring function, we derive two anomaly scoring metrics based on the distance between features and the memory repository:

- Classification Anomaly Score

Define the function to quantify the most “anomalous” local features within the feature map . Its construction proceeds as follows: First, identify the most difficult-to-match feature within that satisfies the following condition:

Then, let the most similar memory entry corresponding to be

Finally, the classification anomaly score is defined as

This score reflects the maximum degree of mismatch between the input sample’s overall feature distribution and that of the normal sample database.

- Segmentation Anomaly Score

To characterise pixel-level anomalies, we define the function to compute the anomaly response at each position in the feature map, i.e.,

This definition represents the distance between the input feature at position and the most similar feature in the memory database. A larger distance indicates a higher likelihood of an anomaly at that position.
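The two scores can be illustrated with a minimal nearest-neighbour sketch. It assumes the Euclidean distance as the metric and represents a feature map as a dictionary from positions to feature vectors; both are illustrative choices, and the function names are hypothetical. The correction factors and the One-Class SVM fusion are not modelled here.

```python
import math

def euclidean(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def nearest_distance(feature, memory_bank, distance=euclidean):
    """Distance from a feature to its most similar memory entry."""
    return min(distance(feature, m) for m in memory_bank)

def segmentation_scores(feature_map, memory_bank):
    """Per-position anomaly response: distance to the nearest memory entry."""
    return {pos: nearest_distance(f, memory_bank) for pos, f in feature_map.items()}

def classification_score(feature_map, memory_bank):
    """Image-level score: the response of the hardest-to-match local feature."""
    return max(segmentation_scores(feature_map, memory_bank).values())

# Toy example: two normal memory entries and a 3-position feature map.
memory = [[0.0, 0.0], [1.0, 1.0]]
fmap = {(0, 0): [0.0, 0.0], (0, 1): [1.0, 1.0], (1, 0): [4.0, 5.0]}
pixel_scores = segmentation_scores(fmap, memory)
image_score = classification_score(fmap, memory)
```

In this toy example the feature at position (1, 0) lies far from every memory entry, so it dominates both the pixel-level map and the image-level score.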

In summary, by computing anomaly scores for both modalities and applying scale normalisation via correction factors, the decision-layer fusion module comprehensively utilises multimodal information across classification and segmentation tasks. This enhances the overall accuracy and robustness of anomaly detection.

4. Experiments

4.1. Experimental Details

4.1.1. Dataset and Evaluation Metrics

Three-dimensional industrial anomaly detection remains in its infancy, and the MVTec 3D-AD dataset [3] serves as the standard benchmark for this task; we conducted our experiments on it. The dataset comprises 10 categories, with 2656 training samples and 1137 test samples. All samples were acquired using industrial 3D sensors based on structured light, with positional information stored as a 3-channel tensor representing x, y, and z coordinates. Additionally, each point records RGB information aligned with its corresponding three-dimensional point cloud (PC). As all samples originate from the same viewpoint, the RGB information for each sample can be stored as a single image, so each sample provides multimodal data comprising point cloud coordinates in 3D space alongside the corresponding RGB image.

Recent multimodal industrial anomaly detection research (e.g., Bergmann et al., 2022) [4] predominantly utilises the MVTec 3D-AD dataset, which records aligned point clouds and RGB images of real objects. Each category contains 3 to 5 defect types, annotated at pixel level. The 3D sensor scans objects using structured light technology to acquire point cloud data, which is combined with RGB images to provide multimodal input for model training. Additionally, we evaluated our method on the purely synthetic RGB + 3D IAD dataset Eyecandies [38]. During evaluation, image-level detection was assessed using the area under the receiver operating characteristic curve (I-AUROC), and pixel-level detection using P-AUROC and the area under the per-region overlap curve (AUPRO). Compared to P-AUROC, AUPRO places greater emphasis on the overlap between predicted results and each connected segment in the ground truth, effectively mitigating the impact of anomaly size on detection performance.
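For reference, the AUROC underlying both I-AUROC and P-AUROC can be computed directly from its ranking interpretation: it is the probability that a randomly chosen anomalous item receives a higher score than a randomly chosen normal one. The sketch below is a minimal pairwise version for illustration (quadratic in the number of samples); the function name is hypothetical, and AUPRO, which additionally requires connected-component analysis of the ground truth, is not covered.

```python
def auroc(scores, labels):
    """AUROC as the fraction of (anomalous, normal) pairs ranked correctly.

    labels: 1 for anomalous, 0 for normal. Ties count as half a correct pair.
    """
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

# Toy example: one anomalous sample (0.35) is ranked below a normal one (0.4).
example = auroc([0.1, 0.4, 0.35, 0.8], [0, 0, 1, 1])  # 3 of 4 pairs correct
```

For I-AUROC the scores are per-image anomaly scores; for P-AUROC the same computation is applied to per-pixel scores and masks.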

4.1.2. Data Preprocessing

This study conducted experiments in the Ubuntu 22.04 operating system environment using an NVIDIA RTX 3090 graphics card (manufactured by NVIDIA Corporation, Santa Clara, CA, USA). The environment dependencies and their corresponding versions are shown in Table 1.

Table 1.

List of environmental dependencies.

To mitigate the detrimental impact of background artefacts on classifier performance, the 3D point cloud preprocessing stage first employs the RANSAC algorithm to robustly fit the background plane within the point cloud. All points within 0.005 meters of this plane are subsequently removed, eliminating irrelevant planar noise. This operation not only reduces computational overhead during 3D feature extraction in both training and inference phases but also minimizes background interference during anomaly detection, thereby enhancing detection accuracy. Finally, to keep input dimensions consistent with the subsequent multimodal feature extraction network, which uses DINO for RGB images and adaptive down-sampling via a dynamic voxel resolution adjustment strategy for point clouds, the coordinates of the background-removed point cloud and the corresponding RGB images are uniformly resampled to 224 × 224 pixels. This ensures seamless integration between the preprocessing workflow and model input.
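The background removal step can be sketched as a basic RANSAC plane fit followed by a distance filter. The version below is a minimal illustration under stated assumptions: the iteration count, the random seed, and all function names are hypothetical, and only the 0.005 m threshold is taken from the text.

```python
import math
import random

def plane_from_points(p1, p2, p3):
    """Return (unit normal, offset d) of the plane through three points, or None."""
    u = [b - a for a, b in zip(p1, p2)]
    v = [b - a for a, b in zip(p1, p3)]
    n = [u[1] * v[2] - u[2] * v[1],
         u[2] * v[0] - u[0] * v[2],
         u[0] * v[1] - u[1] * v[0]]
    norm = math.sqrt(sum(c * c for c in n))
    if norm < 1e-12:
        return None  # the three points are (nearly) collinear
    n = [c / norm for c in n]
    return n, -sum(a * b for a, b in zip(n, p1))

def point_plane_distance(p, plane):
    n, d = plane
    return abs(sum(a * b for a, b in zip(n, p)) + d)

def ransac_plane(points, threshold=0.005, iterations=200, seed=0):
    """Keep the candidate plane supported by the largest number of inliers."""
    rng = random.Random(seed)
    best_plane, best_inliers = None, -1
    for _ in range(iterations):
        plane = plane_from_points(*rng.sample(points, 3))
        if plane is None:
            continue
        inliers = sum(1 for p in points if point_plane_distance(p, plane) <= threshold)
        if inliers > best_inliers:
            best_plane, best_inliers = plane, inliers
    return best_plane

def remove_background(points, threshold=0.005):
    """Drop every point within `threshold` (metres) of the fitted background plane."""
    plane = ransac_plane(points, threshold)
    if plane is None:
        return list(points)
    return [p for p in points if point_plane_distance(p, plane) > threshold]
```

The same threshold is used both to count inliers during fitting and to filter points afterwards, so the removed set is exactly the inlier set of the winning plane.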

4.1.3. Experimental Implementation Details

For feature extraction, we employed models pre-trained on large-scale datasets: DINO for RGB images, pre-trained on the ImageNet dataset [39]; and an algorithm utilizing a dynamic voxel resolution adjustment strategy for adaptive down-sampling of point cloud data. This combined feature extraction approach references the design outlined in [40]. Specifically, the input RGB images are resized to 224 × 224 × 3, which is the standard input resolution required by the pre-trained DINO vision transformer. Following feature extraction by the pre-trained models, the feature representations are upsampled to a spatial dimension of 56 × 56, yielding a feature map with 768 channels. For point cloud data, we further introduced a dynamic voxel resolution adjustment mechanism based on local density to adaptively down-sample different regions: finer voxels are allocated in high-density areas, while larger voxels are employed in sparse regions. This approach minimises redundant points while maximising the preservation of geometric detail. Subsequently, structure-aware feature extraction is performed on the point set within each voxel unit: a neighbourhood point cloud is constructed via k-nearest neighbour search, its covariance matrix is computed, and principal normal vectors are obtained through eigendecomposition. Edge response metrics are defined based on normal vector differences to identify and preserve geometric edges within the point cloud. Then, using an enhanced inverse distance weighting [41] method, the sparsely extracted structure-aware features are smoothly interpolated onto a 56 × 56 spatial grid identical to that of the RGB feature map. The channel count is expanded to 768 dimensions to achieve cross-modal alignment. Finally, at the decision layer, we feed the aligned RGB and point cloud features in parallel to two One-Class SVMs. Optimization is performed using stochastic gradient descent [42] with a learning rate of 1.3 × 10⁻⁴ over 900 iterations, constructing a multimodal discriminative model that supports both image-level classification and pixel-level segmentation.
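The density-dependent voxel allocation described above can be illustrated with a simple two-level scheme. This is a sketch under stated assumptions rather than the proposed algorithm: it uses only two fixed voxel sizes and a point-count threshold as the density measure, it omits the edge-retention step, and all names and default values are hypothetical.

```python
import math
from collections import defaultdict

def voxel_key(point, size):
    """Integer grid index of the voxel containing `point`."""
    return tuple(math.floor(c / size) for c in point)

def centroid(points):
    n = len(points)
    return [sum(col) / n for col in zip(*points)]

def adaptive_voxel_downsample(points, coarse=0.02, fine=0.005, dense_threshold=20):
    """Two-level scheme: a sparse coarse voxel collapses to one centroid, while a
    dense coarse voxel is re-partitioned with the finer voxel size."""
    coarse_cells = defaultdict(list)
    for p in points:
        coarse_cells[voxel_key(p, coarse)].append(p)
    output = []
    for members in coarse_cells.values():
        if len(members) < dense_threshold:
            output.append(centroid(members))  # sparse region: one large voxel
        else:
            fine_cells = defaultdict(list)    # dense region: finer voxels
            for p in members:
                fine_cells[voxel_key(p, fine)].append(p)
            output.extend(centroid(m) for m in fine_cells.values())
    return output
```

For example, with `coarse=1.0`, `fine=0.5` and `dense_threshold=4`, a coarse voxel holding five points spread over three fine voxels yields three output points, whereas a coarse voxel holding two points yields a single centroid.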

For multimodal cross-modal distillation, to simulate incomplete modalities in real-world scenarios, 40% of samples were randomly selected according to a discrete uniform distribution, ensuring that each sample had an equal probability of being chosen to retain both modalities. For the remaining samples, one modality was independently removed with a 30% dropout rate. All teacher networks employed a three-layer fully connected architecture, with hidden node counts optimised via validation sets within the range {16, 32, 64, 128, 256}. The student network within the TS (Teacher–Student) framework and the multimodal DNN (M-DNN) baseline retained identical network topologies to those used in the data generation phase, with weights initialized from scratch and trained anew. To prevent bias, the two sets of weight coefficients α and β in the distillation loss are consistently enforced to be equal, with their optimal values sought within {0.1, 0.2, …, 0.9}; the temperature parameter T is selected from five levels: {1, 5, 10, 15, 20}.

4.2. Comparative Experiments

Table 2, Table 3, Table 4 and Table 5 present our method’s category-wise and average performance comparisons for I-AUROC and AUPRO metrics across 10 classes on the MVTec 3D-AD dataset. To comprehensively evaluate our approach, we contrast it with existing methods encompassing single-RGB, single-3D point cloud, depth image, and multimodal approaches combining RGB with either point cloud or depth image. Experimental results demonstrate that, compared to methods utilising solely RGB data, our approach achieves a 2.1% improvement in I-AUROC and a 0.7% improvement in AUPRO relative to the best single-modality methods. When compared to single 3D point cloud approaches, our method achieves a 0.3% improvement in I-AUROC and a 0.4% improvement in AUPRO. Compared to state-of-the-art methods based on RGB plus point cloud or depth images, our approach achieves optimal performance with I-AUROC and AUPRO scores of 0.938 and 0.947, respectively. This represents a 2.2 percentage point improvement in I-AUROC and a 0.5 percentage point gain in AUPRO over the current best methods. These results fully validate the effectiveness and superiority of cross-modal knowledge distillation in industrial anomaly detection tasks.

Table 2.

I-AUROC results for MVTec 3D-AD using RGB-based methods.

Table 3.

AUPRO results for MVTec 3D-AD based on RGB method.

Table 4.

I-AUROC results for MVTec 3D-AD based on the 3D method.

Table 5.

AUPRO results for MVTec 3D-AD based on the 3D method.

The point cloud simplification ratio (PCR) is a widely used quantitative indicator of the effect of point cloud down-sampling. Its calculation is given by the following formula [50]. This indicator directly reflects the proportional change in the number of points before and after down-sampling, thus providing a quantitative basis for analysing the trade-off between point cloud compression efficiency and geometric fidelity. In existing research on point cloud processing and map construction, the simplification ratio has been widely used to evaluate the effectiveness of different down-sampling strategies.

where NUM is the total number of points in the original point cloud, is the total number of points in the down-sampled point cloud, and R is the point cloud simplification ratio.

To evaluate the effectiveness of our proposed dynamic down-sampling algorithm, we conducted comparative experiments against several conventional down-sampling strategies, including voxel-based, uniform, random and farthest point sampling (FPS). The experiments were performed on raw point clouds containing 216,942 points. Table 6 summarizes the comparative analysis, demonstrating that our method achieves an excellent balance between simplification ratio (91.89%) and processing speed (0.0139 s), thereby highlighting its efficiency and suitability for real-time applications. Specifically, the algorithm reduces the number of points from 216,942 to 17,537 while preserving essential structural details, without requiring the extensive manual parameter tuning demanded by many traditional approaches. This adaptability not only simplifies the down-sampling process but also enhances its practicality and applicability in diverse industrial scenarios.

Table 6.

Quantitative comparison of different point cloud down-sampling strategies in the proposed framework.

To further validate that the observed performance gains are not attributable to random fluctuations, we conducted paired-sample t-tests comparing our method with representative baselines across the ten categories of the MVTec 3D-AD dataset. The results in Table 7, Table 8 and Table 9 demonstrate that our method achieves significantly higher I-AUROC scores than several representative approaches, including FPFH (t = 3.969, p < 0.05), AST (t = 2.191, p < 0.05), BTF (t = 2.251, p < 0.05), Shape-Guided (t = 0.694, p < 0.05), and M3DM (t = 2.045, p < 0.05). For AUPRO, our model also significantly surpasses the single point cloud baseline (t = 4.586, p < 0.05), whereas the difference with the single-RGB model is not statistically significant (t = 2.357, p > 0.05). Importantly, the t-test results obtained from Table 3, Table 4 and Table 5 reveal patterns consistent with those observed in Table 2: our method exhibits statistically significant improvements over the majority of baselines, with only a few comparisons against unimodal RGB models showing non-significant differences. Moreover, the ablation study confirms that dual-modality RGB + point cloud variants substantially outperform unimodal baselines (e.g., Dual-RGB vs. Single-RGB: t = 12.366, p < 0.05 for I-AUROC; t = 3.306, p < 0.05 for AUPRO), underscoring the effectiveness of multimodal knowledge transfer. Collectively, these findings provide compelling statistical evidence that the improvements of our approach are not only numerically superior but also robust, reproducible, and generalizable across datasets, modalities, and evaluation metrics. This reinforces the practical significance of cross-modal knowledge distillation for advancing industrial anomaly detection.
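For readers who wish to reproduce this kind of comparison, the paired-sample t statistic is computed from the per-category score differences between two methods. The sketch below shows the standard computation; the function name is hypothetical and the input scores in the usage note are placeholders, not values from the tables. The resulting statistic is compared against the t distribution with n − 1 degrees of freedom, where n is the number of categories.

```python
import math

def paired_t_statistic(scores_a, scores_b):
    """Paired-sample t statistic and degrees of freedom for two matched score lists."""
    diffs = [a - b for a, b in zip(scores_a, scores_b)]
    n = len(diffs)
    mean = sum(diffs) / n
    # Unbiased sample variance of the paired differences.
    var = sum((d - mean) ** 2 for d in diffs) / (n - 1)
    return mean / math.sqrt(var / n), n - 1
```

As a usage example, `paired_t_statistic([2.0, 4.0, 6.0], [1.0, 2.0, 3.0])` gives differences of 1, 2 and 3, a mean difference of 2, and therefore t = 2·√3 ≈ 3.464 with 2 degrees of freedom.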

Table 7.

Experimental comparison table of various down-sampling methods.

Table 8.

Paired-sample t-test results for I-AUROC scores in the ablation study.

Table 9.

Paired-sample t-test results for AUPRO scores in the ablation study.

4.3. Ablation Experiments

We conducted ablation experiments to validate the efficacy of the multi-modal training, few-modal inference (MTFI) workflow, and assessed its generalisation capability for asymmetric performance by swapping feature extractors and datasets. In our experiments, we replaced the original RGB feature extractor, DINO ViT-B/8 [51], with Swin Transformer and DINO ViT-S/8. We substituted the 3D point cloud feature extractor, Point-MAE, with PointNet and PointCNN, and switched the dataset from MVTec 3D-AD to Eyecandies. Experimental results, as summarized in Table 10, demonstrate that the MTFI-based approach significantly outperforms unimodal methods and, in certain scenarios, even surpasses dual-modal RGB-3D point cloud memory-based methods. However, when performing inference using RGB data, the MTFI process does not yield a substantial performance improvement over a single RGB memory. Collectively, these findings indicate that the MTFI process enhances the utilisation of multimodal training data chiefly when inference is performed using the primary modality (3D point cloud).

Table 10.

Ablation study for different feature extractors. The top and bottom of each row are the average I-AUROC and AUPRO.

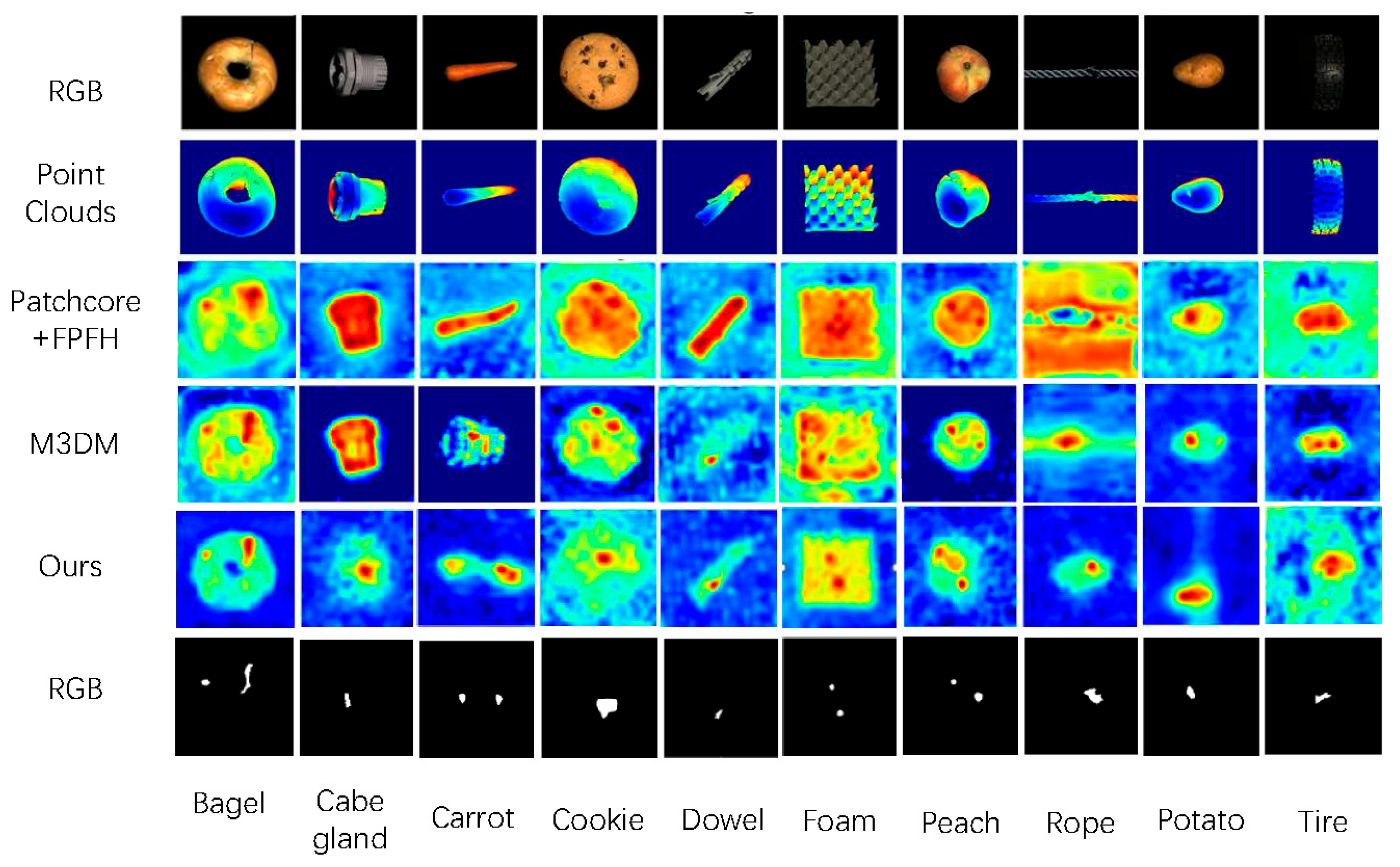

4.4. Visualisation Results

In this section, we present a more intuitive visualisation of the segmentation results for all classes in the MVTec 3D-AD dataset. As shown in Figure 4, we display the heatmap results for our method and PatchCore + FPFH, which also uses multimodal inputs. Compared to the results from PatchCore + FPFH, our method produces more accurate segmentation maps.

Figure 4.

In comparison with existing approaches, the proposed method demonstrates robustness against noise and achieves a more precise delineation of the segmentation region.

5. Conclusions

This study presents an innovative framework for industrial anomaly detection that integrates cross-modal pre-training, missing-modality-resilient inference, and dynamic voxel down-sampling. The framework explicitly addresses two major types of data incompleteness encountered in industrial inspection: (i) incomplete sensor data within a modality, such as partial or noisy measurements, and (ii) incomplete modalities, where an entire sensing channel becomes unavailable. By jointly solving these challenges and mitigating the computational cost of 3D point-cloud processing, the proposed method achieves state-of-the-art performance on the MVTec 3D-AD dataset. It delivers stable results under single-modal or degraded-data conditions while maintaining high efficiency, providing a practical solution for real-world industrial deployment. These results confirm the framework’s capability to preserve both accuracy and efficiency under varying levels of information loss.

Nevertheless, the robustness of anomaly detection has an intrinsic upper bound: when data corruption or modality loss exceeds a critical threshold, the remaining signals may no longer contain sufficient discriminative information for reliable detection. From an information-theoretic perspective, once the mutual information between the observable input and the underlying anomaly distribution approaches zero, detection becomes theoretically infeasible. Although our cross-modal distillation pushes this boundary further than previous approaches, it cannot compensate for a complete absence of informative content. Future work should empirically and analytically quantify this limit through controlled ablation experiments and mutual-information analysis. This theoretical insight provides valuable guidance for enhancing network robustness and developing adaptive strategies under uncertain sensor availability.

When only intra-modal degradation occurs, the edge-preserving voxelization module can operate independently to improve geometric fidelity. In contrast, under total modality loss, the distilled student network performs inference by leveraging the cross-modal priors learned during training. Beyond these scenarios, future research may extend the framework to additional sensing modalities such as thermal imaging and X-ray, and explore automated selection of optimal modality combinations to further improve precision, efficiency, and robustness in industrial inspection. Overall, this work advances the development of modality-resilient multimodal learning for intelligent manufacturing and related industrial applications.

Author Contributions

J.X.: Writing—original draft, Methodology, Formal analysis, Conceptualization, Investigation, Validation. J.Y.: Writing—review and editing, Validation, Software. M.Y.: Supervision, Resources, Investigation, Funding acquisition. W.Y.: Supervision, Resources, Investigation, Funding acquisition. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding authors.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Zheng, J.; Huang, L. 3D ST-Net: A Large Kernel Simple Transformer for Brain Tumor Segmentation, and Cross-Modality Domain Adaptation for Medical Image Segmentation. In Brain Tumor Segmentation, and Cross-Modality Domain Adaptation for Medical Image Segmentation; Springer Nature: Cham, Switzerland, 2024; p. 106. [Google Scholar]

- Wang, Q.; Kim, M.K. Applications of 3D point cloud data in the construction industry: A fifteen-year review from 2004 to 2018. Adv. Eng. Inform. 2019, 39, 306–319. [Google Scholar] [CrossRef]

- Bergmann, P.; Jin, X.; Sattlegger, D.; Steger, C. The MVtec 3D-AD dataset for unsupervised 3D anomaly detection and localization. arXiv 2021, arXiv:2112.09045. [Google Scholar] [CrossRef]

- Bergmann, P.; Fauser, M.; Sattlegger, D.; Steger, C. MVTec AD—A Comprehensive Real-World Dataset for Unsupervised Anomaly Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 9592–9600. [Google Scholar]

- Gong, D.; Liu, L.; Le, V.; Saha, B.; Mansour, M.R.; Venkatesh, S.; Hengel, A.V.D. Memorising Normality to Detect Anomaly: Memory-Augmented Deep Autoencoder for Unsupervised Anomaly Detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 1705–1714. [Google Scholar]

- Zavrtanik, V.; Kristan, M.; Skočaj, D. Reconstruction by inpainting for visual anomaly detection. Pattern Recognit. 2021, 112, 107706. [Google Scholar] [CrossRef]

- Deng, H.; Li, X. Anomaly detection via reverse distillation from one-class embedding. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 9737–9746. [Google Scholar]

- Nayar, S.K.; Sanderson, A.C.; Weiss, L.E.; Simon, D.A. Specular surface inspection s using structured highlight and Gaussian images. IEEE Trans. Robot. Autom. 1990, 6, 208–218. [Google Scholar] [CrossRef]

- Jia, Z.; Wang, M.; Zhao, S. A review of deep learning-based approaches for defect detection in smart manufacturing. J. Opt. 2024, 53, 1345–1351. [Google Scholar] [CrossRef]

- Hattori, K.; Izumi, T.; Meng, L. Defect detection of apples using PatchCore. In Proceedings of the 2023 International Conference on Advanced Mechatronic Systems (ICAMechS), Melbourne, Australia, 4–7 September 2023; IEEE: New York, NY, USA; pp. 1–6. [Google Scholar]

- Liu, Y.; Zhang, C.; Dong, X. A survey of real-time surface defect inspection methods based on deep learning. Artif. Intell. Rev. 2023, 56, 12131–12170. [Google Scholar] [CrossRef]

- Perera, P.; Nallapati, R.; Xiang, B. Ocgan: One-Class Novelty Detection Using GANs with Constrained Latent Representations. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 2898–2906. [Google Scholar]

- Liu, J.; Xie, G.; Chen, R.; Li, X.; Wang, J.; Liu, Y.; Wang, C.; Zheng, F. Real3d-ad: A dataset for point cloud anomaly detection. Adv. Neural Inf. Process. Syst. 2023, 36, 30402–30415. [Google Scholar]

- Zhu, H.; Xie, G.; Hou, C.; Shen, L. Towards High-resolution 3D Anomaly Detection via Group-Level Feature Contrastive Learning. In Proceedings of the 32nd ACM International Conference on Multimedia, Chicago, IL, USA, 28 October–1 November 2024; pp. 4680–4689. [Google Scholar]

- Zhao, B.; Xiong, Q.; Zhang, X.; Guo, J.; Liu, Q.; Xing, X.; Xu, X. Pointcore: Efficient unsupervised point cloud anomaly detector using local-global features. arXiv 2024, arXiv:2403.01804. [Google Scholar]

- Zhou, Z.; Wang, L.; Fang, N.; Wang, Z.; Qiu, L.; Zhang, S. R3D-AD: Reconstruction via Diffusion for 3D Anomaly Detection. In Proceedings of the European Conference on Computer Vision, Milan, Italy, 29 September–4 October 2024; Springer Nature: Cham, Switzerland, 2024; pp. 91–107. [Google Scholar]

- Rusinkiewicz, S.; Hall-Holt, O.; Levoy, M. Real-time 3D model acquisition. ACM Trans. Graph. (TOG) 2002, 21, 438–446. [Google Scholar] [CrossRef]

- Sohail, S.S.; Himeur, Y.; Kheddar, H.; Amira, A.; Fadli, F.; Atalla, S.; Copiaco, A.; Mansoor, W. Advancing 3D point cloud understanding through deep transfer learning: A comprehensive survey. Inf. Fusion 2024, 113, 102601. [Google Scholar] [CrossRef]

- Lyu, W.; Ke, W.; Sheng, H.; Ma, X.; Zhang, H. Dynamic downsampling algorithm for 3D point cloud map based on voxel filtering. Appl. Sci. 2024, 14, 3160.

- He, Q.; Wang, Z.; Zeng, H.; Zeng, Y.; Liu, Y. SVGA-Net: Sparse Voxel-Graph Attention Network for 3D Object Detection from Point Clouds. In Proceedings of the AAAI Conference on Artificial Intelligence, Virtual, 22 February–1 March 2022; Volume 36, pp. 870–878.

- Gelfand, N.; Ikemoto, L.; Rusinkiewicz, S.; Levoy, M. Geometrically stable sampling for the ICP algorithm. In Proceedings of the Fourth International Conference on 3-D Digital Imaging and Modelling, 2003, 3DIM 2003, Banff, AB, Canada, 6–10 October 2003; IEEE: New York, NY, USA, 2003; pp. 260–267.

- Zhou, Q.; Sun, B. Adaptive K-means clustering-based under-sampling methods to solve the class imbalance problem. Data Inf. Manag. 2024, 8, 100064.

- Yang, H.; Liang, D.; Zhang, D.; Liu, Z.; Zou, Z.; Jiang, X.; Zhu, Y. AVS-Net: Point sampling with adaptive voxel size for 3D scene understanding. arXiv 2024, arXiv:2402.17521.

- Que, Z.; Lu, G.; Xu, D. VoxelContext-Net: An Octree-Based Framework for Point Cloud Compression. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 6042–6051.

- Zhou, Y.; Tuzel, O. VoxelNet: End-to-End Learning for Point Cloud-Based 3D Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4490–4499.

- Vapnik, V.; Vashist, A. A new learning paradigm: Learning using privileged information. Neural Netw. 2009, 22, 544–557.

- Garcia, N.C.; Morerio, P.; Murino, V. Modality Distillation with Multiple Stream Networks for Action Recognition. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 8–14 September 2018; pp. 103–118.

- Huo, F.; Xu, W.; Guo, J.; Wang, H.; Guo, S. C2KD: Bridging the modality gap for cross-modal knowledge distillation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 16006–16015.

- Nugroho, M.A.; Woo, S.; Lee, S.; Kim, C. AHFu-Net: Align, Hallucinate, and Fuse Network for Missing Multimodal Action Recognition. In Proceedings of the 2023 IEEE International Conference on Visual Communications and Image Processing (VCIP), Jeju, Republic of Korea, 4–7 December 2023; pp. 1–5.

- Chu, Y.M.; Chieh, L.; Hsieh, T.I.; Chen, H.T.; Liu, T.L. Shape-guided dual-memory learning for 3D anomaly detection. In Proceedings of the ICML 2023, Honolulu, HI, USA, 23–29 July 2023.

- Wang, H.; Ma, C.; Zhang, J.; Zhang, Y.; Avery, J.; Hull, L.; Carneiro, G. Learnable Cross-Modal Knowledge Distillation for Multi-Modal Learning with Missing Modality. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Vancouver, BC, Canada, 8–12 October 2023; pp. 216–226.

- Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Li, F.-F. ImageNet: A Large-Scale Hierarchical Image Database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 248–255.

- Qi, C.R.; Yi, L.; Su, H.; Guibas, L.J. PointNet++: Deep hierarchical feature learning on point sets in a metric space. In Proceedings of the Advances in Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; Volume 30.

- Cohen, G.; Sapiro, G.; Giryes, R. DNN or k-NN: That is the generalize vs. memorize question. arXiv 2018, arXiv:1805.06822.

- Roy, S.; Butman, J.A.; Reich, D.S.; Calabresi, P.A.; Pham, D.L. Multiple sclerosis lesion segmentation from brain MRI via fully convolutional neural networks. arXiv 2018, arXiv:1803.09172.

- Chen, F.W.; Liu, C.W. Estimation of the spatial rainfall distribution using inverse distance weighting (IDW) in the middle of Taiwan. Paddy Water Environ. 2012, 10, 209–222.

- Sui, W.; Lichau, D.; Lefèvre, J.; Phelippeau, H. Incomplete Multimodal Industrial Anomaly Detection via Cross-Modal Distillation. arXiv 2024, arXiv:2405.13571.

- Zhang, Y.; Xiang, T.; Hospedales, T.M.; Lu, H. Deep Mutual Learning. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Salt Lake City, UT, USA, 18–23 June 2018; pp. 4320–4328.

- Chen, Z.; Li, L.; Niu, K.; Wu, Y.; Hua, B. Pose measurement of non-cooperative spacecraft based on point cloud. In Proceedings of the 2018 IEEE CSAA Guidance, Navigation and Control Conference (CGNCC), Xiamen, China, 10–12 August 2018; IEEE: New York, NY, USA, 2018; pp. 1–6.

- Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.

- Thoker, F.M.; Gall, J. Cross-modal knowledge distillation for action recognition. In Proceedings of the 2019 IEEE International Conference on Image Processing (ICIP), Taipei, Taiwan, 22–25 September 2019; IEEE: New York, NY, USA, 2019; pp. 6–10.

- Parker, G.J.M.; Haroon, H.A.; Wheeler-Kingshott, C.A.M. A framework for a streamline-based probabilistic index of connectivity (PICo) using a structural interpretation of MRI diffusion measurements. J. Magn. Reson. Imaging Off. J. Int. Soc. Magn. Reson. Med. 2003, 18, 242–254.

- Wang, Y.; Peng, J.; Zhang, J.; Liu, Z.; Gu, J.; Liu, Y. Multimodal Industrial Anomaly Detection via Hybrid Fusion. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 8032–8041.

- Horwitz, E.; Hoshen, Y. Back to the Feature: Classical 3D Features are (Almost) All You Need for 3D Anomaly Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 2968–2977.

- Defard, T.; Setkov, A.; Loesch, A.; Audigier, R. PaDiM: A Patch Distribution Modelling Framework for Anomaly Detection and Localisation. In Proceedings of the International Conference on Pattern Recognition, Milan, Italy, 10–15 January 2021; pp. 475–489.

- Roth, K.; Pemula, L.; Zepeda, J.; Schölkopf, B.; Brox, T.; Gehler, P. Towards Total Recall in Industrial Anomaly Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 14318–14328.

- Balakrishnan, G.; Zhao, A.; Sabuncu, M.R.; Guttag, J.; Dalca, A.V. VoxelMorph: A learning framework for deformable medical image registration. IEEE Trans. Med. Imaging 2019, 38, 1788–1800.

- Zhang, H.; Li, F.; Liu, S.; Zhang, L.; Su, H.; Zhu, J.; Ni, L.M.; Shum, H.Y. DINO: DETR with improved denoising anchor boxes for end-to-end object detection. arXiv 2022, arXiv:2203.03605.

- Bonfiglioli, L.; Toschi, M.; Silvestri, D.; Fioraio, N.; De Gregorio, D. The Eyecandies dataset for unsupervised multimodal anomaly detection and localization. In Proceedings of the Asian Conference on Computer Vision, Macao, China, 4–8 December 2022; pp. 3586–3602.

- Klimke, J. Web-Based Provisioning and Application of Large-Scale Virtual 3D City Models. Ph.D. Thesis, Universität Potsdam, Potsdam, Germany, 2018.

- Kenyeres, M.; Kenyeres, J.; Hassankhani Dolatabadi, S. Distributed Consensus Gossip-Based Data Fusion for Suppressing Incorrect Sensor Readings in Wireless Sensor Networks. J. Low Power Electron. Appl. 2025, 15, 6.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).