Self-Supervised Hierarchical Dilated Transformer Network for Hyperspectral Soil Microplastic Identification and Detection

Abstract

1. Introduction

2. Materials and Methods

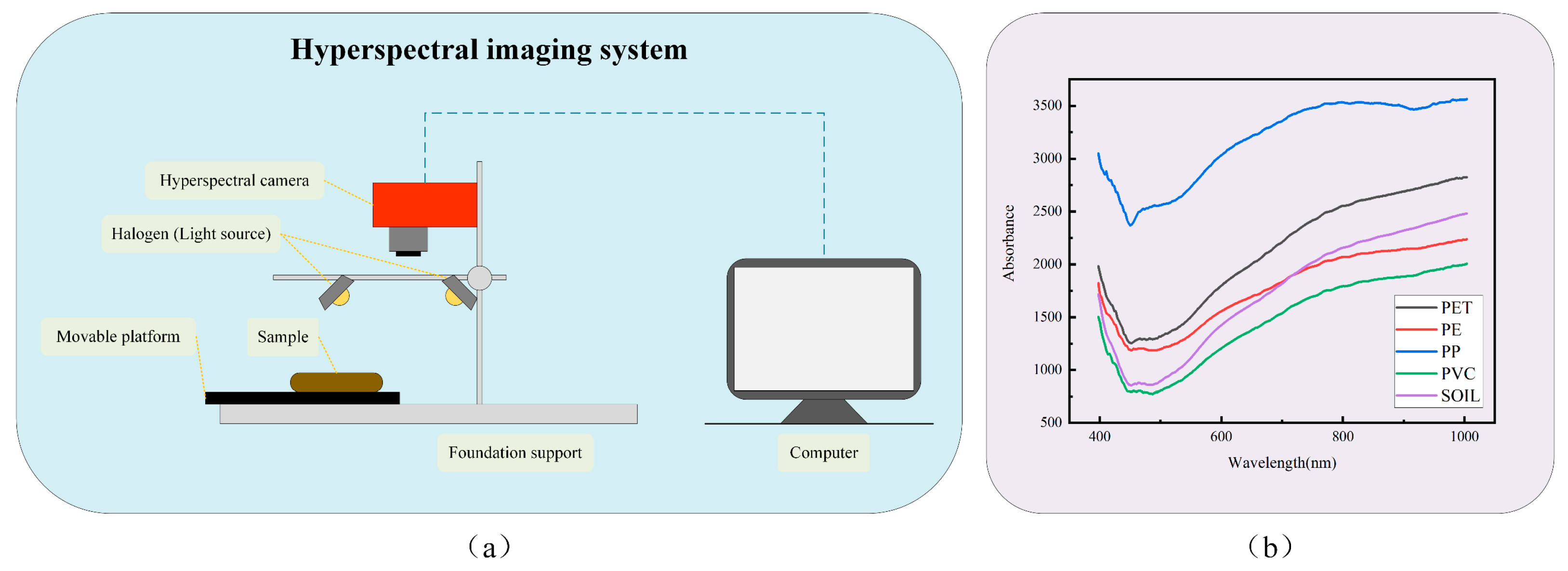

2.1. Dataset and Preprocessing

2.2. Proposed Method

2.2.1. Overview of Self-Supervised Hierarchical Dilated Transformer Networks

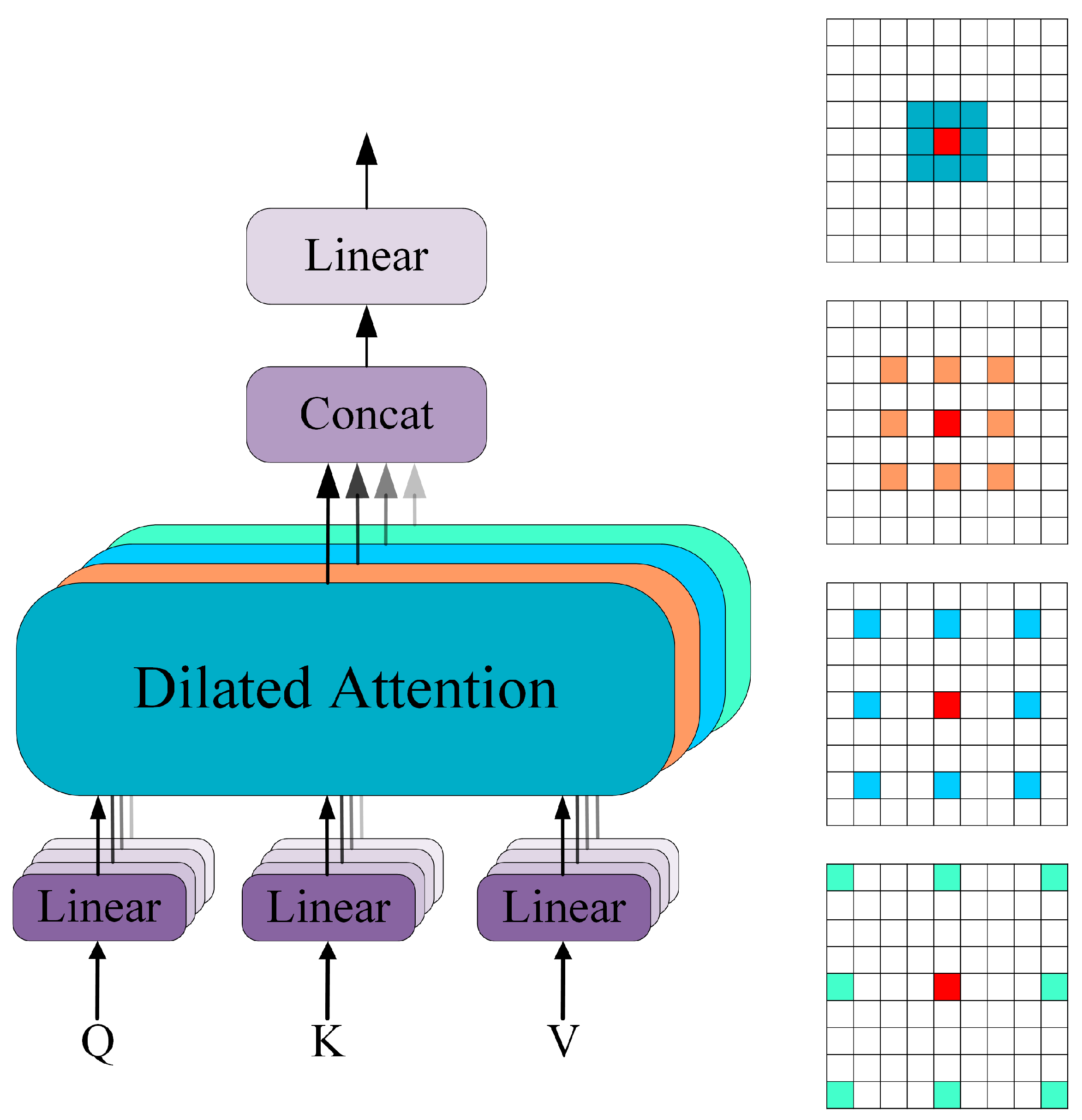

2.2.2. Hierarchical Dilated Transformer Network (HDTNet)

3. Results and Discussion

3.1. Evaluation Indicators

3.2. Model Training

3.3. Comparison of Detection Effects

4. Conclusions

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Akdogan, Z.; Guven, B. Microplastics in the environment: A critical review of current understanding and identification of future research needs. Environ. Pollut. 2019, 254, 113011.

- Guo, J.-J.; Huang, X.-P.; Xiang, L.; Wang, Y.-Z.; Li, Y.-W.; Li, H.; Cai, Q.-Y.; Mo, C.-H.; Wong, M.-H. Source, migration and toxicology of microplastics in soil. Environ. Int. 2020, 137, 105263.

- Issac, M.N.; Kandasubramanian, B. Effect of microplastics in water and aquatic systems. Environ. Sci. Pollut. Res. 2021, 28, 19544–19562.

- Zhang, S.; Liu, X.; Hao, X.; Wang, J.; Zhang, Y. Distribution of low-density microplastics in the mollisol farmlands of northeast China. Sci. Total Environ. 2020, 708, 135091.

- Allen, S.; Allen, D.; Phoenix, V.R.; Le Roux, G.; Durántez Jiménez, P.; Simonneau, A.; Binet, S.; Galop, D. Atmospheric transport and deposition of microplastics in a remote mountain catchment. Nat. Geosci. 2019, 12, 339–344.

- Bläsing, M.; Amelung, W. Plastics in soil: Analytical methods and possible sources. Sci. Total Environ. 2018, 612, 422–435.

- Li, Q.; Wu, J.; Zhao, X.; Gu, X.; Ji, R. Separation and identification of microplastics from soil and sewage sludge. Environ. Pollut. 2019, 254, 113076.

- Zhang, Y.; Kang, S.; Allen, S.; Allen, D.; Gao, T.; Sillanpää, M. Atmospheric microplastics: A review on current status and perspectives. Earth-Sci. Rev. 2020, 203, 103118.

- Chen, Y.; Leng, Y.; Liu, X.; Wang, J. Microplastic pollution in vegetable farmlands of suburb Wuhan, central China. Environ. Pollut. 2020, 257, 113449.

- Geyer, R.; Jambeck, J.R.; Law, K.L. Production, use, and fate of all plastics ever made. Sci. Adv. 2017, 3, e1700782.

- Wang, J.; Li, J.; Liu, S.; Li, H.; Chen, X.; Peng, C.; Zhang, P.; Liu, X. Distinct microplastic distributions in soils of different land-use types: A case study of Chinese farmlands. Environ. Pollut. 2021, 269, 116199.

- Dissanayake, P.D.; Kim, S.; Sarkar, B.; Oleszczuk, P.; Sang, M.K.; Haque, M.N.; Ahn, J.H.; Bank, M.S.; Ok, Y.S. Effects of microplastics on the terrestrial environment: A critical review. Environ. Res. 2022, 209, 112734.

- Huerta Lwanga, E.; Gertsen, H.; Gooren, H.; Peters, P.; Salánki, T.; van der Ploeg, M.; Besseling, E.; Koelmans, A.A.; Geissen, V. Microplastics in the Terrestrial Ecosystem: Implications for Lumbricus terrestris (Oligochaeta, Lumbricidae). Environ. Sci. Technol. 2016, 50, 2685–2691.

- Ng, E.-L.; Huerta Lwanga, E.; Eldridge, S.M.; Johnston, P.; Hu, H.-W.; Geissen, V.; Chen, D. An overview of microplastic and nanoplastic pollution in agroecosystems. Sci. Total Environ. 2018, 627, 1377–1388.

- Zhang, S.; Wang, J.; Liu, X.; Qu, F.; Wang, X.; Wang, X.; Li, Y.; Sun, Y. Microplastics in the environment: A review of analytical methods, distribution, and biological effects. TrAC Trends Anal. Chem. 2019, 111, 62–72.

- Bank, M.S.; Mitrano, D.M.; Rillig, M.C.; Sze Ki Lin, C.; Ok, Y.S. Embrace complexity to understand microplastic pollution. Nat. Rev. Earth Environ. 2022, 3, 736–737.

- Ai, W.; Chen, G.; Yue, X.; Wang, J. Application of hyperspectral and deep learning in farmland soil microplastic detection. J. Hazard. Mater. 2023, 445, 130568.

- Tirkey, A.; Upadhyay, L.S.B. Microplastics: An overview on separation, identification and characterization of microplastics. Mar. Pollut. Bull. 2021, 170, 112604.

- Ding, G.; Wang, J.; Wang, Y.; Wang, X.; Zhao, K.; Li, R.; Geng, X.; Wen, J.; Chen, B.; Zhao, X.; et al. An Innovative Miniaturized Cell Imaging System Based on Integrated Coaxial Dual Optical Path Structure and Microfluidic Fixed Frequency Sample Loading Strategy. IEEE Trans. Instrum. Meas. 2023, 72, 1–17.

- Sun, J.; Dai, X.; Wang, Q.; van Loosdrecht, M.C.M.; Ni, B.-J. Microplastics in wastewater treatment plants: Detection, occurrence and removal. Water Res. 2019, 152, 21–37.

- Ye, Y.; Yu, K.; Zhao, Y. The development and application of advanced analytical methods in microplastics contamination detection: A critical review. Sci. Total Environ. 2022, 818, 151851.

- Elert, A.M.; Becker, R.; Duemichen, E.; Eisentraut, P.; Falkenhagen, J.; Sturm, H.; Braun, U. Comparison of different methods for MP detection: What can we learn from them, and why asking the right question before measurements matters? Environ. Pollut. 2017, 231, 1256–1264.

- Simon-Sánchez, L.; Grelaud, M.; Garcia-Orellana, J.; Ziveri, P. River Deltas as hotspots of microplastic accumulation: The case study of the Ebro River (NW Mediterranean). Sci. Total Environ. 2019, 687, 1186–1196.

- Wolff, S.; Kerpen, J.; Prediger, J.; Barkmann, L.; Müller, L. Determination of the microplastics emission in the effluent of a municipal waste water treatment plant using Raman microspectroscopy. Water Res. X 2019, 2, 100014.

- Asensio-Montesinos, F.; Oliva Ramírez, M.; González-Leal, J.M.; Carrizo, D.; Anfuso, G. Characterization of plastic beach litter by Raman spectroscopy in South-western Spain. Sci. Total Environ. 2020, 744, 140890.

- Primpke, S.; Dias, P.A.; Gerdts, G. Automated identification and quantification of microfibres and microplastics. Anal. Methods 2019, 11, 2138–2147.

- Simon, M.; van Alst, N.; Vollertsen, J. Quantification of microplastic mass and removal rates at wastewater treatment plants applying Focal Plane Array (FPA)-based Fourier Transform Infrared (FT-IR) imaging. Water Res. 2018, 142, 1–9.

- Lodhi, V.; Chakravarty, D.; Mitra, P. Hyperspectral Imaging System: Development Aspects and Recent Trends. Sens. Imaging 2019, 20, 35.

- Yang, J.; Wu, C.; Du, B.; Zhang, L. Enhanced Multiscale Feature Fusion Network for HSI Classification. IEEE Trans. Geosci. Remote Sens. 2021, 59, 10328–10347.

- Liao, J.; Wang, L. ATN-Hybrid: A hybrid attention network with deterministic-probabilistic mechanism for hyperspectral image classification. Geo-Spat. Inf. Sci. 2025, 1–22.

- Liu, R.; Liang, J.; Yang, J.; He, J.; Zhu, P. Dual Classification Head Self-training Network for Cross-scene Hyperspectral Image Classification. arXiv 2025, arXiv:2502.17879.

- Vidal, C.; Pasquini, C. A comprehensive and fast microplastics identification based on near-infrared hyperspectral imaging (HSI-NIR) and chemometrics. Environ. Pollut. 2021, 285, 117251.

- Moroni, M.; Mei, A.; Leonardi, A.; Lupo, E.; Marca, F. PET and PVC Separation with Hyperspectral Imagery. Sensors 2015, 15, 2205–2227.

- Xu, L.; Chen, Y.; Feng, A.; Shi, X.; Feng, Y.; Yang, Y.; Wang, Y.; Wu, Z.; Zou, Z.; Ma, W.; et al. Study on detection method of microplastics in farmland soil based on hyperspectral imaging technology. Environ. Res. 2023, 232, 116389.

- Liu, F.; Rasmussen, L.A.; Klemmensen, N.D.R.; Zhao, G.; Nielsen, R.; Vianello, A.; Rist, S.; Vollertsen, J. Shapes of Hyperspectral Imaged Microplastics. Environ. Sci. Technol. 2023, 57, 12431–12441.

- Yurtsever, M.; Yurtsever, U. Use of a convolutional neural network for the classification of microbeads in urban wastewater. Chemosphere 2019, 216, 271–280.

- Padarian, J.; Minasny, B.; McBratney, A.B. Using deep learning to predict soil properties from regional spectral data. Geoderma Reg. 2019, 16, e00198.

- Lorenzo-Navarro, J.; Castrillón-Santana, M.; Sánchez-Nielsen, E.; Zarco, B.; Herrera, A.; Martínez, I.; Gómez, M. Deep learning approach for automatic microplastics counting and classification. Sci. Total Environ. 2021, 765, 142728.

- Park, H.; Park, S.; De Guzman, M.K.; Baek, J.Y.; Cirkovic Velickovic, T.; Van Messem, A.; De Neve, W. MP-Net: Deep learning-based segmentation for fluorescence microscopy images of microplastics isolated from clams. PLoS ONE 2022, 17, e0269449.

- Wang, J.; Dong, J.; Tang, M.; Yao, J.; Li, X.; Kong, D.; Zhao, K. Identification and detection of microplastic particles in marine environment by using improved faster R–CNN model. J. Environ. Manag. 2023, 345, 118802.

- Wang, D.; Hu, M.; Jin, Y.; Miao, Y.; Yang, J.; Xu, Y. HyperSIGMA: Hyperspectral Intelligence Comprehension Foundation Model. IEEE Trans. Pattern Anal. Mach. Intell. 2025, 47, 6427–6444.

- Grill, J.-B.; Strub, F.; Altché, F.; Tallec, C.; Richemond, P.H.; Buchatskaya, E.; Doersch, C.; Pires, B.A.; Guo, Z.D.; Azar, M.G.; et al. Bootstrap your own latent: A new approach to self-supervised Learning. arXiv 2020.

- Jiao, J.; Tang, Y.-M.; Lin, K.-Y.; Gao, Y.; Ma, J.; Wang, Y.; Zheng, W.-S. DilateFormer: Multi-Scale Dilated Transformer for Visual Recognition. arXiv 2023.

- Mei, S.; Song, C.; Ma, M.; Xu, F. Hyperspectral Image Classification Using Group-Aware Hierarchical Transformer. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–14.

- Zhang, X.; Su, Y.; Gao, L.; Bruzzone, L.; Gu, X.; Tian, Q. A Lightweight Transformer Network for Hyperspectral Image Classification. IEEE Trans. Geosci. Remote Sens. 2023, 61, 1–17.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Yu, W.; Luo, M.; Zhou, P.; Si, C.; Zhou, Y.; Wang, X.; Feng, J.; Yan, S. MetaFormer Is Actually What You Need for Vision. arXiv 2021.

- Melgani, F.; Bruzzone, L. Classification of hyperspectral remote sensing images with support vector machines. IEEE Trans. Geosci. Remote Sens. 2004, 42, 1778–1790.

- Ben Hamida, A.; Benoit, A.; Lambert, P.; Ben Amar, C. 3-D Deep Learning Approach for Remote Sensing Image Classification. IEEE Trans. Geosci. Remote Sens. 2018, 56, 4420–4434.

- Hong, D.; Han, Z.; Yao, J.; Gao, L.; Zhang, B.; Plaza, A.; Chanussot, J. SpectralFormer: Rethinking Hyperspectral Image Classification with Transformers. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–15.

| Configuration | Parameters |
|---|---|
| Computer operating system | Windows 11 Education, 64-bit |
| Computer processor | Intel(R) Core(TM) i7-8750H |
| Spectral coverage | 400–1000 nm |
| Number of bands | 224 |
| Rated power of the halogen lamp | At least 35 W per lamp |

| Configuration | Version |
|---|---|
| System | Ubuntu 20.04.1, 64-bit |
| Processor | Intel(R) Xeon(R) Silver 4210R CPU @ 2.40 GHz |
| GPU | NVIDIA GeForce RTX 3090 |
| Language | Python 3.7.10 |
| CUDA | 11.7 |

| BYOL | Fusion Convolution Module | Dilation Attention Module | Poolformer Module | OA (%) | AA (%) | Kappa (×100) | Train Time (min) |
|---|---|---|---|---|---|---|---|
| - | √ | √ | √ | 98.65 | 96.74 | 94.39 | - |
| √ | - | √ | √ | 98.31 | 95.90 | 92.95 | - |
| √ | √ | - | √ | 97.78 | 95.36 | 90.86 | - |
| √ | √ | √ | - | 99.01 | 98.19 | 95.95 | 254 |
| √ | √ | √ | √ | 99.35 | 98.54 | 97.33 | 239 |

| Dataset | Metric | SVM [48] | 3DCNN [49] | SpectralFormer [50] | Proposed |
|---|---|---|---|---|---|
| PP | OA | 94.03 | 97.10 | 97.46 | 99.35 |
| PP | AA | 85.48 | 93.36 | 94.32 | 98.54 |
| PP | Kappa | 78.50 | 87.90 | 89.45 | 97.33 |
| PVC | OA | 89.37 | 93.69 | 93.68 | 98.33 |
| PVC | AA | 68.58 | 85.47 | 86.77 | 95.57 |
| PVC | Kappa | 46.34 | 73.43 | 74.11 | 91.76 |
| PE | OA | 91.89 | 94.86 | 95.02 | 98.05 |
| PE | AA | 71.27 | 82.40 | 84.84 | 93.82 |
| PE | Kappa | 51.23 | 71.94 | 74.00 | 90.12 |
| PET | OA | 96.80 | 97.94 | 98.12 | 98.82 |
| PET | AA | 90.30 | 93.67 | 94.61 | 97.28 |
| PET | Kappa | 84.10 | 89.90 | 90.88 | 94.37 |
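The tables above report overall accuracy (OA), average accuracy (AA), and the Kappa coefficient. The formulas the authors used are not reproduced in this section, so the following is only a minimal sketch under the conventional definitions: OA as the fraction of correctly classified samples, AA as the mean per-class recall, and Cohen's kappa as agreement corrected for chance, all scaled to 0–100. The function name `classification_metrics` and the example confusion matrix are hypothetical and are not taken from the paper.

```python
from typing import List, Tuple


def classification_metrics(confusion: List[List[int]]) -> Tuple[float, float, float]:
    """Return (OA, AA, Kappa) scaled to 0-100 from a square confusion matrix.

    Assumed convention: rows are true classes, columns are predicted classes.
    """
    n_classes = len(confusion)
    row_totals = [sum(row) for row in confusion]
    col_totals = [sum(confusion[r][c] for r in range(n_classes)) for c in range(n_classes)]
    total = sum(row_totals)
    if total == 0:
        raise ValueError("confusion matrix contains no samples")

    # OA: correctly classified samples over all samples.
    correct = sum(confusion[i][i] for i in range(n_classes))
    oa = correct / total

    # AA: mean recall over classes that have at least one true sample.
    recalls = [confusion[i][i] / row_totals[i] for i in range(n_classes) if row_totals[i] > 0]
    aa = sum(recalls) / len(recalls)

    # Kappa: (observed agreement - chance agreement) / (1 - chance agreement).
    chance = sum(r * c for r, c in zip(row_totals, col_totals)) / (total * total)
    if chance == 1.0:
        # Degenerate case (a single class only); kappa is undefined, this sketch reports 0.
        kappa = 0.0
    else:
        kappa = (oa - chance) / (1.0 - chance)

    return 100.0 * oa, 100.0 * aa, 100.0 * kappa


if __name__ == "__main__":
    # Hypothetical two-class example: 1000 background pixels, 100 microplastic pixels.
    example = [[950, 50],
               [20, 80]]
    oa, aa, kappa = classification_metrics(example)
    print(f"OA={oa:.2f} AA={aa:.2f} Kappa={kappa:.2f}")
    # prints: OA=93.64 AA=87.50 Kappa=66.08
```

In this imbalanced example OA exceeds AA, which in turn exceeds Kappa. The same ordering appears in every row of the comparison table, which is what one would expect if background pixels greatly outnumber microplastic pixels.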


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wang, P.; Li, X.; Zhang, R.; Gu, Q.; Zhang, L.; Lv, J. Self-Supervised Hierarchical Dilated Transformer Network for Hyperspectral Soil Microplastic Identification and Detection. Sensors 2025, 25, 6517. https://doi.org/10.3390/s25216517
