8.2. Single-Event Dataset Evaluation

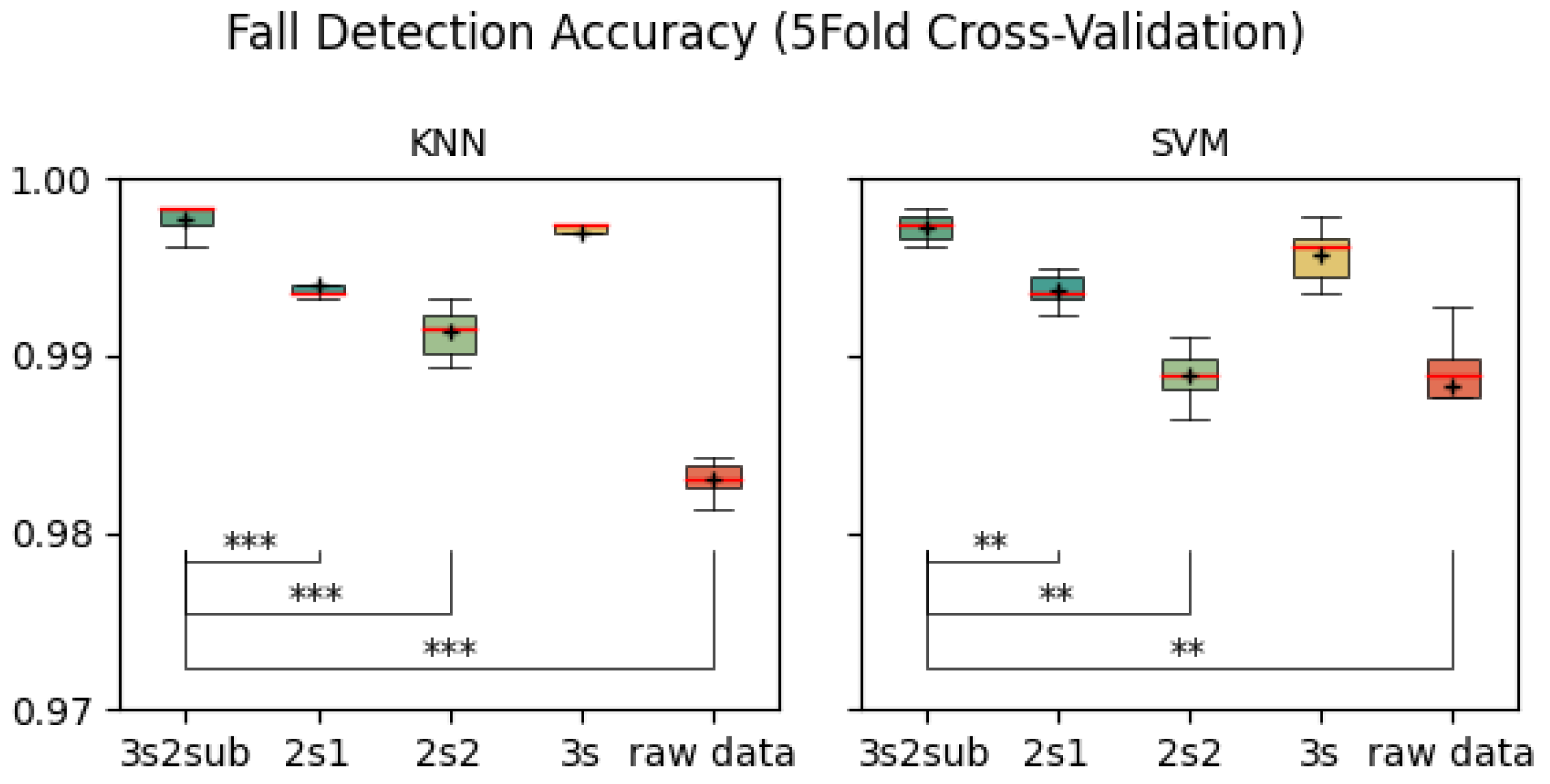

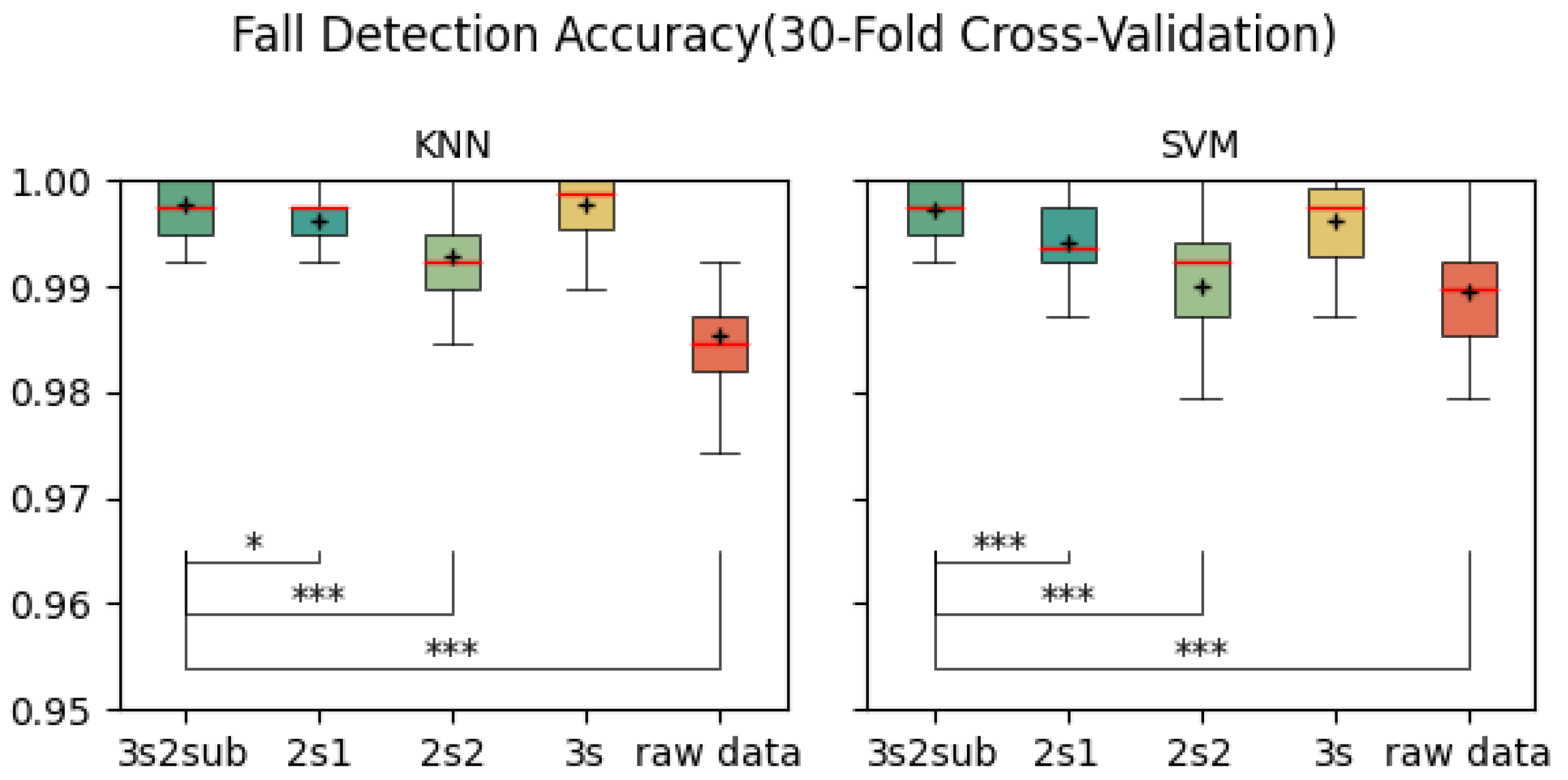

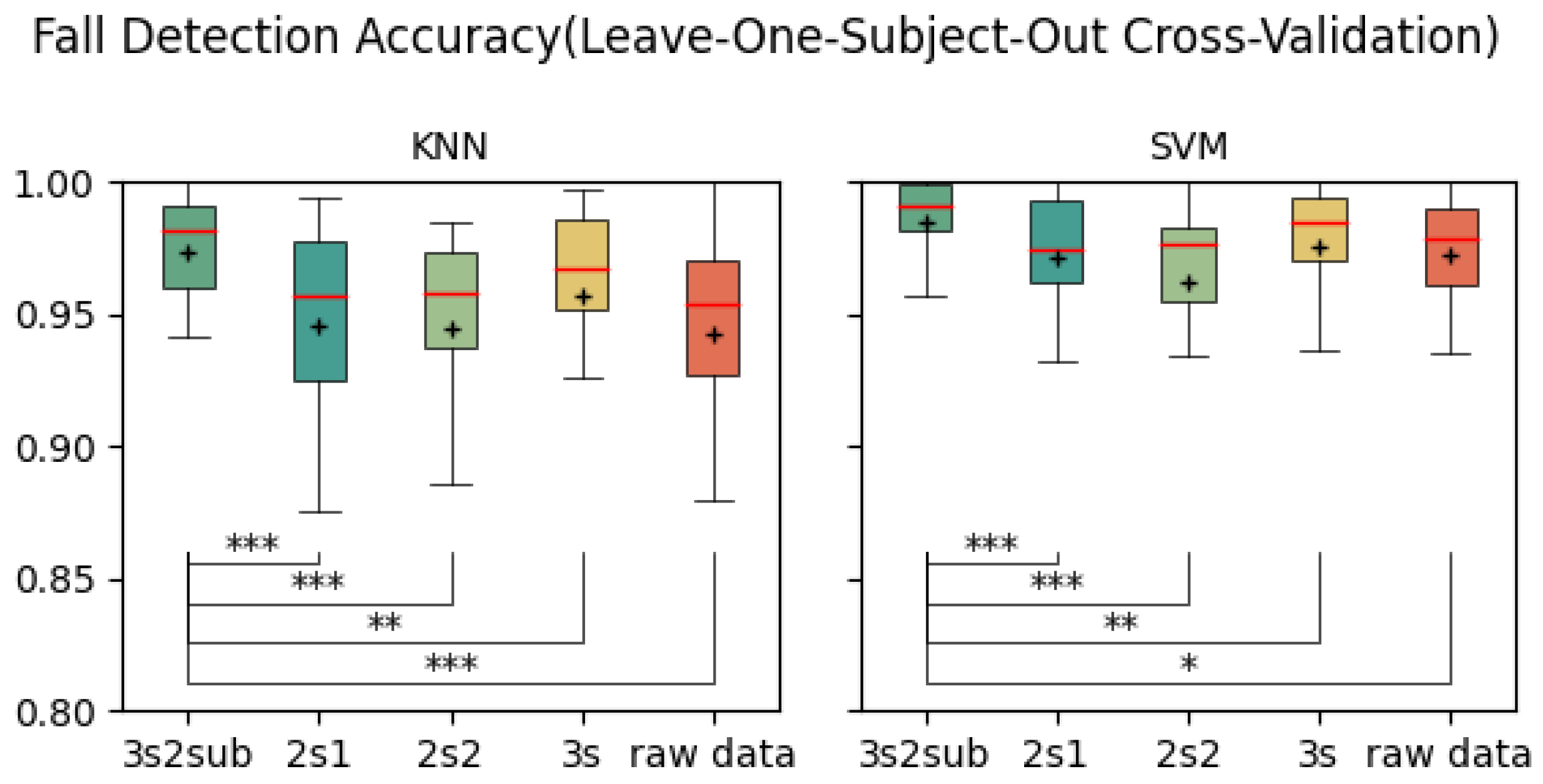

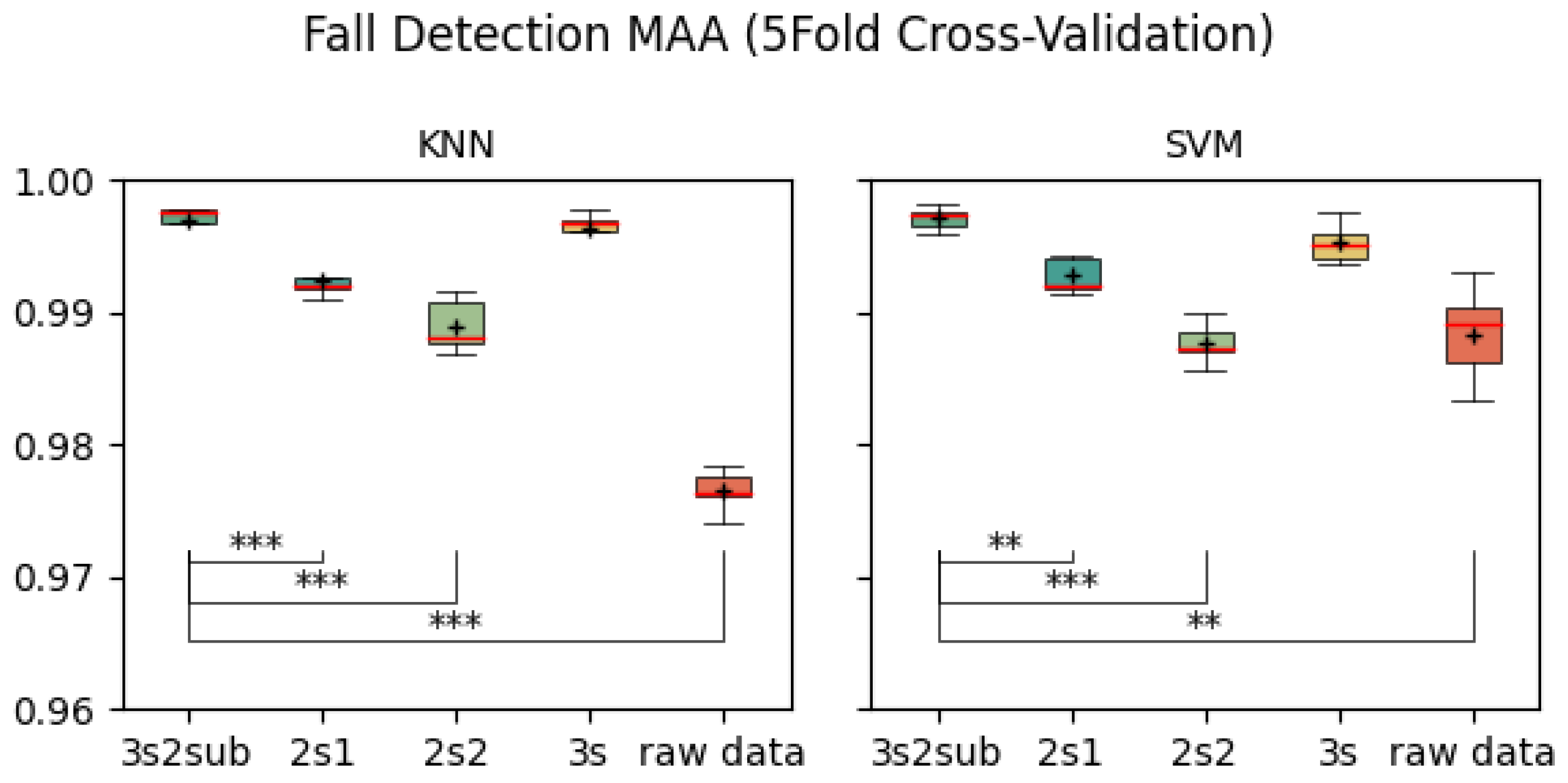

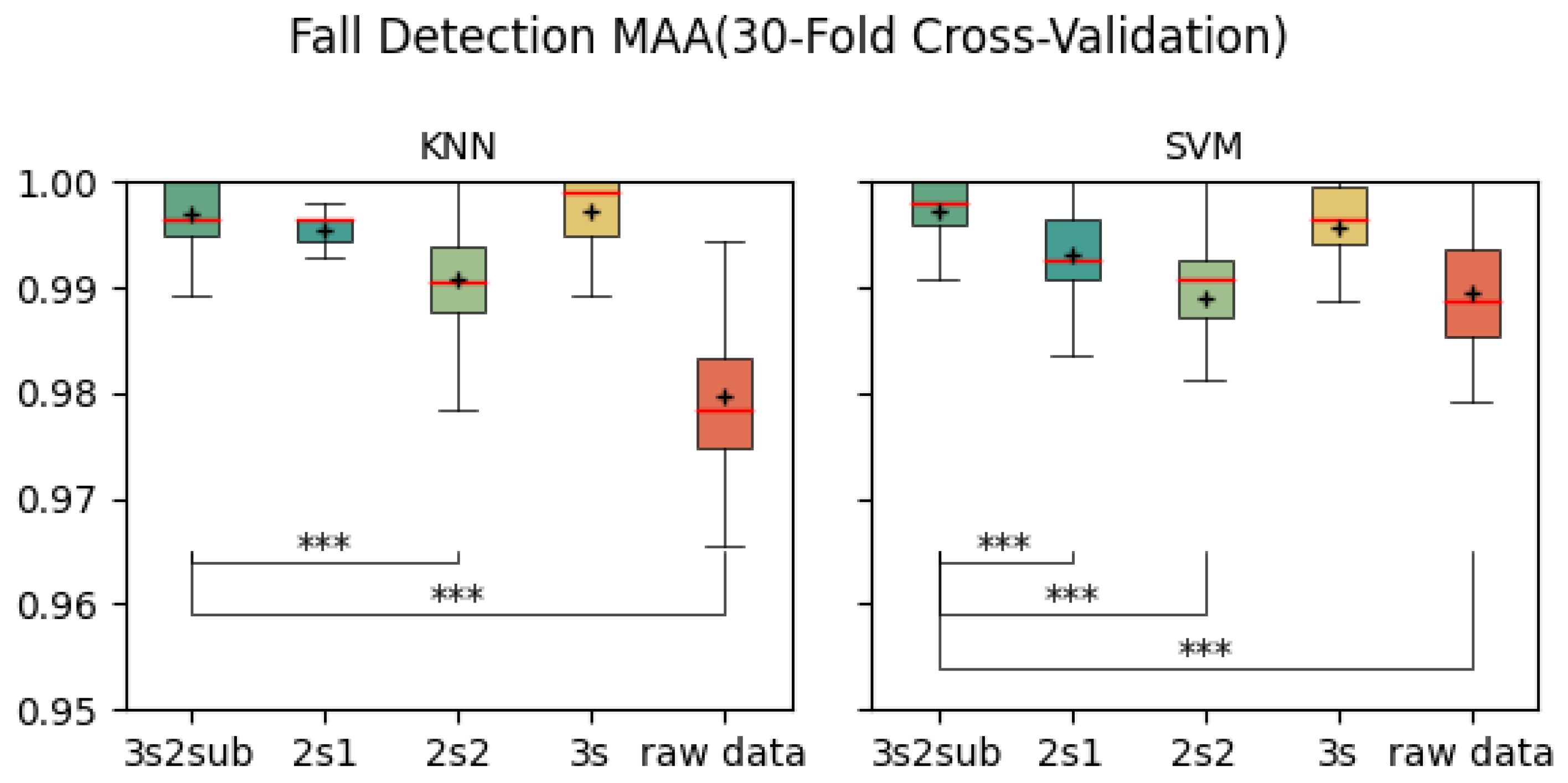

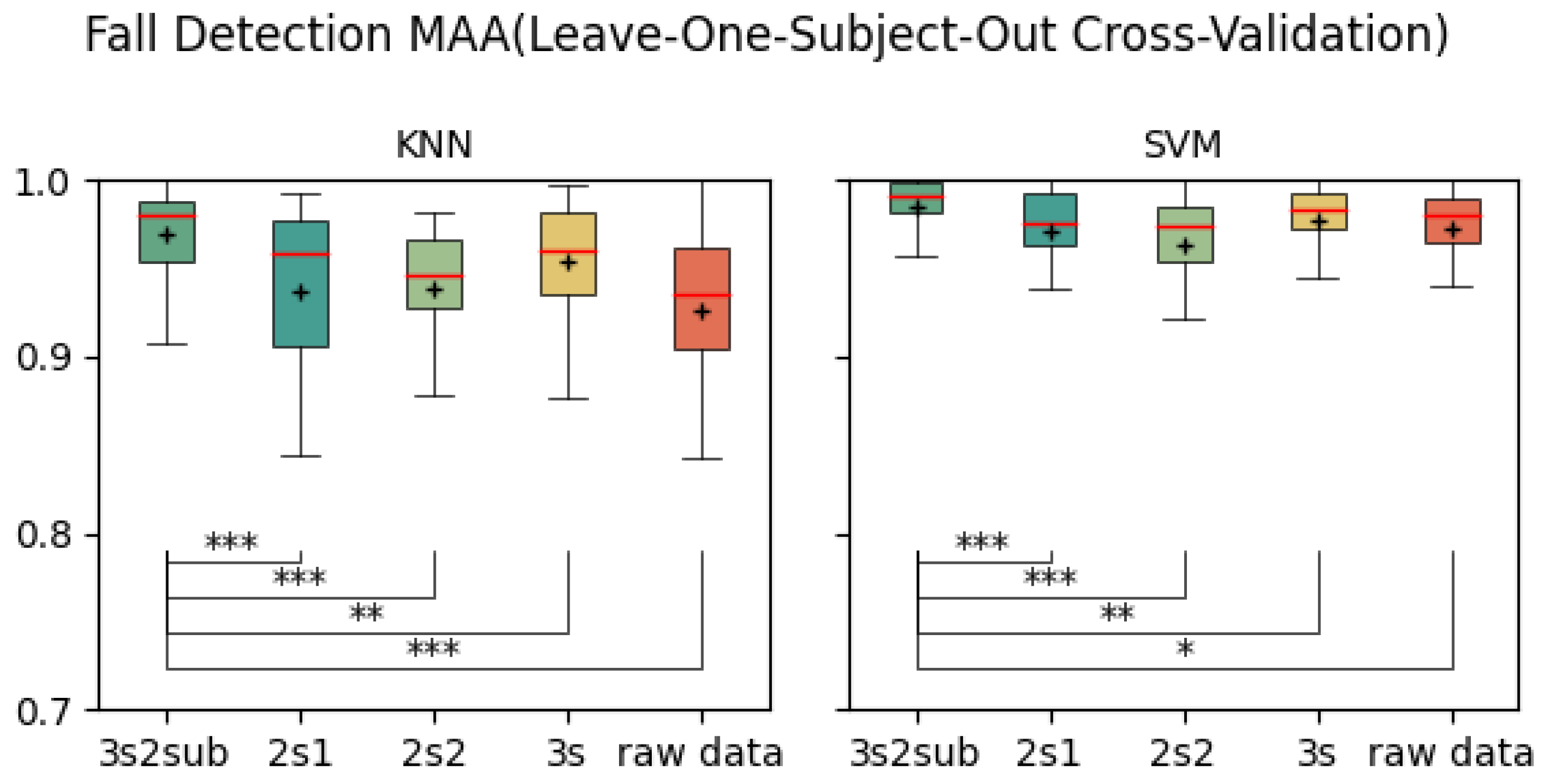

In both 5-Fold and 30-Fold Cross-Validation, 3s2sub outperformed all the control groups. Notably, there was a significant difference between 3s2sub and the 2s1, 2s2, and Raw Data groups; 3s2sub was the most effective among them. Here, we examine whether separating the window into two sub-windows is justified. In Figure 5, Figure 6, Figure 8, and Figure 9, although there is no significant difference between 3s2sub and 3s, 3s2sub has shorter boxes, meaning that the distribution of test results is more concentrated, so we consider 3s2sub more stable than 3s in these cases. In practice, we hope to reduce user effort; in other words, we hope the FDS does not need to collect data from users and be retrained. The results of the LOSO Cross-Validation show a significant difference between 3s2sub and 3s at the 0.1% level, so we believe that 3s2sub can detect falls from unseen users more effectively. Additionally, the result distribution of 3s2sub is more concentrated, so it is more stable. Overall, comparing the results of 3s2sub and 3s, we conclude that 3s2sub is more effective than 3s. Thus, dividing the window into two sub-windows is justified. Beyond 3s2sub, we also tested 3s3sub (dividing the 3 s window into three 1.5 s sub-windows with 50% overlap between adjacent sub-windows). The results are not shown because, in 5-fold, 30-fold, and LOSO cross-validation, the t-test found no significant difference. Thus, we believe it is not necessary to divide the whole window into three sub-windows, because doing so increases computational complexity without a significant gain.
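The 3s3sub geometry described above (three 1.5 s sub-windows with 50% overlap inside a 3 s window) can be checked with a minimal sketch. The function name `split_subwindows` and the 100 Hz sampling rate are assumptions made for illustration only; they are not taken from the method itself.

```python
def split_subwindows(window, n_sub, overlap):
    """Split `window` into `n_sub` equal-length sub-windows that overlap
    by the fraction `overlap` and together span the whole window."""
    if n_sub < 1 or not 0 <= overlap < 1:
        raise ValueError("need n_sub >= 1 and 0 <= overlap < 1")
    n = len(window)
    # The sub-windows must cover the window exactly:
    #   sub_len + (n_sub - 1) * sub_len * (1 - overlap) = n
    sub_len = n / (1 + (n_sub - 1) * (1 - overlap))
    step = sub_len * (1 - overlap)
    bounds = [(round(k * step), round(k * step + sub_len)) for k in range(n_sub)]
    return [window[s:e] for s, e in bounds], bounds


FS_HZ = 100  # hypothetical sampling rate, chosen so the indices are integers
window = list(range(3 * FS_HZ))  # stand-in for one 3 s window of samples
subs, bounds = split_subwindows(window, n_sub=3, overlap=0.5)
print(bounds)  # [(0, 150), (75, 225), (150, 300)]
```

With these assumptions each sub-window holds 150 samples (1.5 s), which matches the 3s3sub description. Three sub-windows also mean three rounds of feature extraction per window, which is the extra computation mentioned above.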

As shown in Table 4, Table 5, and Table 6, 3s2sub achieved the highest sensitivity, specificity, and F1 score in 5-Fold and 30-Fold Cross-Validation among all groups. In LOSO Cross-Validation, 3s2sub achieved the highest sensitivity, precision, and F1 score with SVM. With KNN, it achieved the highest sensitivity and F1 score. The specificity of 3s2sub (98.31%) was slightly lower than that of the Raw Data group (98.94%), a difference of 0.63 percentage points, so the two remain comparable. However, the precision and F1 score of Raw Data (92.59% and 95.60%) were clearly lower than those of 3s2sub (97.59% and 97.89%). In addition, the accuracy and MAA of Raw Data were also lower than those of 3s2sub. Thus, taking these indicators together, we believe that 3s2sub showed an advantage.
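For reference, the indicators compared above can all be derived from one binary confusion matrix. The sketch below uses the standard definitions. It assumes that MAA (macro average accuracy) reduces to the mean of the two per-class recalls in the binary case, and the counts are hypothetical, not taken from Tables 4 to 6.

```python
def binary_metrics(tp, fp, tn, fn):
    """Standard binary metrics, with 'fall' as the positive class."""
    sensitivity = tp / (tp + fn)  # recall on falls
    specificity = tn / (tn + fp)  # recall on ADLs
    precision = tp / (tp + fp)
    f1 = 2 * precision * sensitivity / (precision + sensitivity)
    accuracy = (tp + tn) / (tp + fp + tn + fn)
    # Assumption: MAA is the mean of the per-class recalls.
    maa = (sensitivity + specificity) / 2
    return {
        "sensitivity": sensitivity,
        "specificity": specificity,
        "precision": precision,
        "f1": f1,
        "accuracy": accuracy,
        "maa": maa,
    }


# Hypothetical counts with twice as many ADL windows as fall windows.
metrics = binary_metrics(tp=90, fp=20, tn=180, fn=10)
for name, value in metrics.items():
    print(f"{name}: {value:.4f}")
```

In this example sensitivity and specificity are both 0.90, yet precision is lower because the negative class is larger. This is why precision and F1 score can separate two methods whose specificities look nearly identical.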

We performed an evaluation with the KNN and SVM classifiers. For the single-event data evaluation, as shown in Table 4, Table 5, and Table 6, in the non-subject-difference (5-Fold and 30-Fold) cross-validation, KNN showed higher specificity and F1 score, while SVM showed higher sensitivity. In LOSO cross-validation, SVM showed higher precision and F1 score; KNN showed slightly higher specificity, but the difference was less than 1%. Overall, for single-event dataset evaluation, KNN and SVM showed similar performance.

8.3. Continuous-Event Dataset Evaluation

In the evaluation on the MobiAct dataset, as shown in Table 7, SVM and KNN exhibit similar performance in same-dataset validation, and all evaluation metrics are at a level similar to those on UniMiB SHAR. SVM shows slightly higher sensitivity, while KNN shows higher specificity. These results are consistent with those on UniMiB SHAR.

In cross-dataset validation on UniMiB SHAR and MobiAct, the results in Table 8 indicate that KNN significantly outperforms SVM. Concretely, SVM is more sensitive to fall events (5% higher than KNN), which is consistent with the same-dataset evaluations (on both UniMiB SHAR and MobiAct), but its accuracy (8% lower), F1 score (13% lower), and specificity (12% lower) are worse than those of KNN. Such low specificity is unacceptable in a practical FDS. In addition, compared with the results of same-dataset validation (shown in Table 7), the performance of SVM drops significantly, while KNN appears to be more resilient. Although the performance of KNN drops slightly, it remains acceptable. We consider this a reasonable decline given the differences between the two datasets.

We attribute this performance gap primarily to domain shift between the training (UniMiB SHAR) and testing (MobiAct) datasets, which manifests in two major forms:

Subject domain shift: Motion patterns vary across individuals even for the same activity. This is evident even within a single dataset: as shown in Table 5, Table 6, and Table 7, Leave-One-Subject-Out (LOSO) cross-validation consistently produces lower accuracy than random 30-fold validation, highlighting the impact of inter-subject variability.

Activity domain shift: The activity definitions and distributions differ substantially between UniMiB SHAR and MobiAct. UniMiB SHAR contains short-duration, well-segmented activities such as “Sitting down,” “Jumping,” and “Syncope,” while MobiAct includes more transitional activities that are not contained in UniMiB SHAR, such as “Step into a car” (CSI) and “Step out of a car” (CSO).
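The subject-level gap noted above comes from how LOSO builds its folds: every sample of one subject is held out together, so the classifier never sees that person during training. A minimal sketch of such a split follows; the helper name `loso_splits` and the subject labels are illustrative assumptions.

```python
def loso_splits(subject_ids):
    """Yield (held_out_subject, train_indices, test_indices), one fold per subject."""
    for subject in sorted(set(subject_ids)):
        test_idx = [i for i, s in enumerate(subject_ids) if s == subject]
        train_idx = [i for i, s in enumerate(subject_ids) if s != subject]
        yield subject, train_idx, test_idx


# Hypothetical subject label for each of six windows.
subject_ids = ["s1", "s1", "s2", "s3", "s3", "s3"]
splits = list(loso_splits(subject_ids))
for subject, train_idx, test_idx in splits:
    print(subject, train_idx, test_idx)
```

In a random k-fold split, windows from the same subject usually appear on both sides of the split, which is one plausible reason why 30-fold scores exceed LOSO scores on the same data.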

Interestingly, while SVM appears more robust to subject-level variation—exhibiting a smaller drop from 30-fold to LOSO within the same dataset—it performs considerably worse than KNN in the cross-dataset scenario. This suggests that SVM may be more sensitive to activity-level domain shift, while KNN offers greater robustness under heterogeneous activity structures and execution contexts.

Based on this analysis, we selected the 3s2sub method with the KNN classifier for subsequent cross-dataset evaluation on the FARSEEING dataset, as it provides a better trade-off between sensitivity and generalization under domain shift conditions.

Table 12 shows a brief comparison of some smartphone-based fall detection algorithms, including two works evaluated on UniMiB SHAR and two evaluated on MobiAct. Our method achieved results comparable to state-of-the-art works while using fewer vectors, which implies less computation.

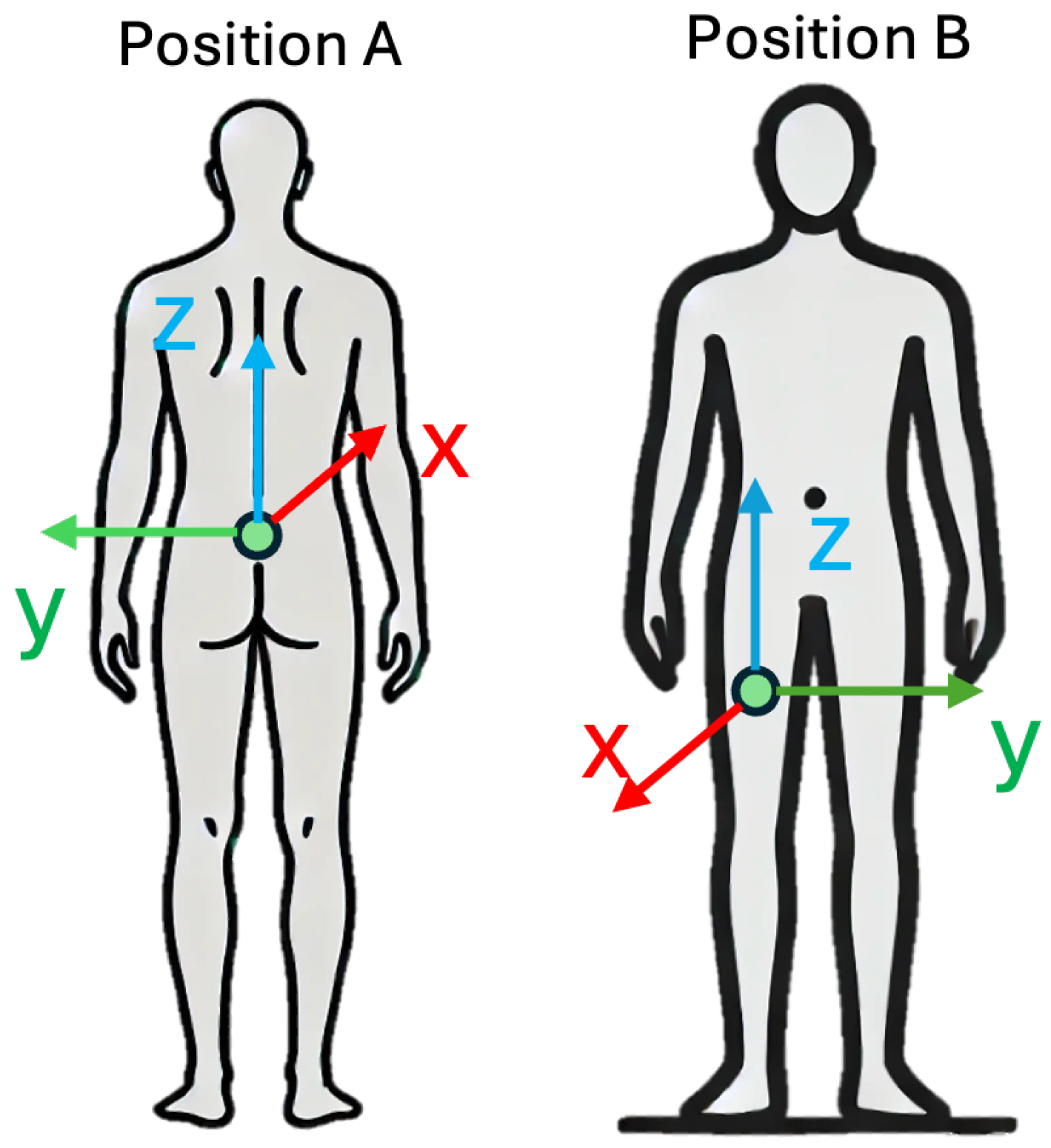

In addition, we performed cross-dataset evaluation on the FARSEEING dataset, a long-term (1200 s) real-world fall dataset that includes data collected from 15 subjects. Although the FARSEEING data were not collected by smartphones, and the device positions were not exactly the same as in our training set (UniMiB SHAR), we still used it to evaluate our method, for three reasons: (1) real-world data may differ from lab-simulated data, so evaluation on real-world data is necessary; (2) the sampling range (

or

) is the same as that of the smartphone’s built-in accelerometer; (3) the two positions in FARSEEING can be regarded as two cases in which the smartphone is placed in a very shallow trousers pocket (Position A) and a very deep trousers pocket (Position B). As shown in Table 11, our method achieved 95.45% sensitivity (one fall sample was not detected) and 98.12% specificity on data from both positions, with 20% overlap of the sliding window. Concretely, the specificity was 98.51% for data collected at Position A and 97.28% for data collected at Position B.

We attribute this difference to the fact that Position A is closer to a normal trousers pocket and to the body’s center of gravity, so the data at this position better reflect the overall movement of the body, whereas Position B is too low, and the data collected there may contain more noise caused by leg-only movements.
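As a rough illustration of the sliding-window scan over a long recording, the sketch below enumerates window start indices for a 3 s window with 20% overlap across a 1200 s trace, then checks the reported sensitivity arithmetic (one miss among the 22 verified falls). The 100 Hz sampling rate and the helper name `sliding_window_starts` are assumptions made for illustration.

```python
def sliding_window_starts(n_samples, win_len, overlap):
    """Start indices of fixed-length windows that overlap by `overlap` (0 <= overlap < 1)."""
    step = round(win_len * (1 - overlap))
    if step < 1:
        raise ValueError("overlap too large for this window length")
    return list(range(0, n_samples - win_len + 1, step))


FS_HZ = 100                # hypothetical sampling rate
WIN_LEN = 3 * FS_HZ        # 3 s window
N_SAMPLES = 1200 * FS_HZ   # one 1200 s recording

starts = sliding_window_starts(N_SAMPLES, WIN_LEN, overlap=0.2)
print(len(starts), starts[:3])  # 499 [0, 240, 480]

# Sensitivity when 21 of 22 falls are detected.
sensitivity_pct = round(100 * 21 / 22, 2)
print(sensitivity_pct)  # 95.45
```

Under these assumptions each recording yields 499 windows, nearly all of them non-fall windows, so even a small drop in specificity translates into many false alarms per recording.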

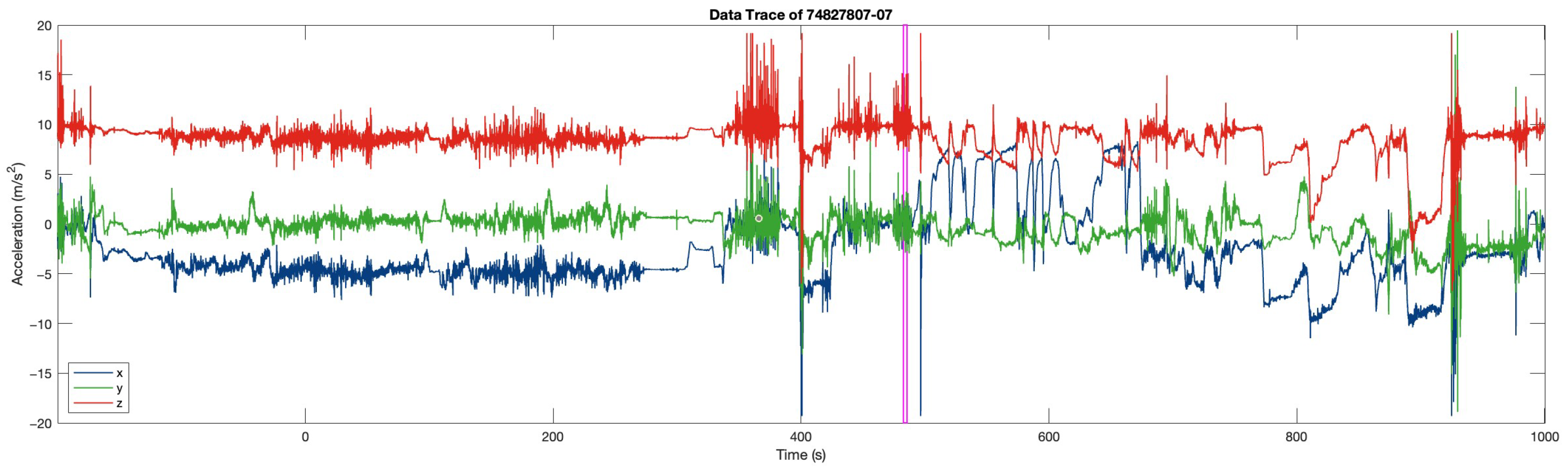

Figure 15 shows an example data trace from FARSEEING. The magenta rectangle marks the detected true positive (fall) window.

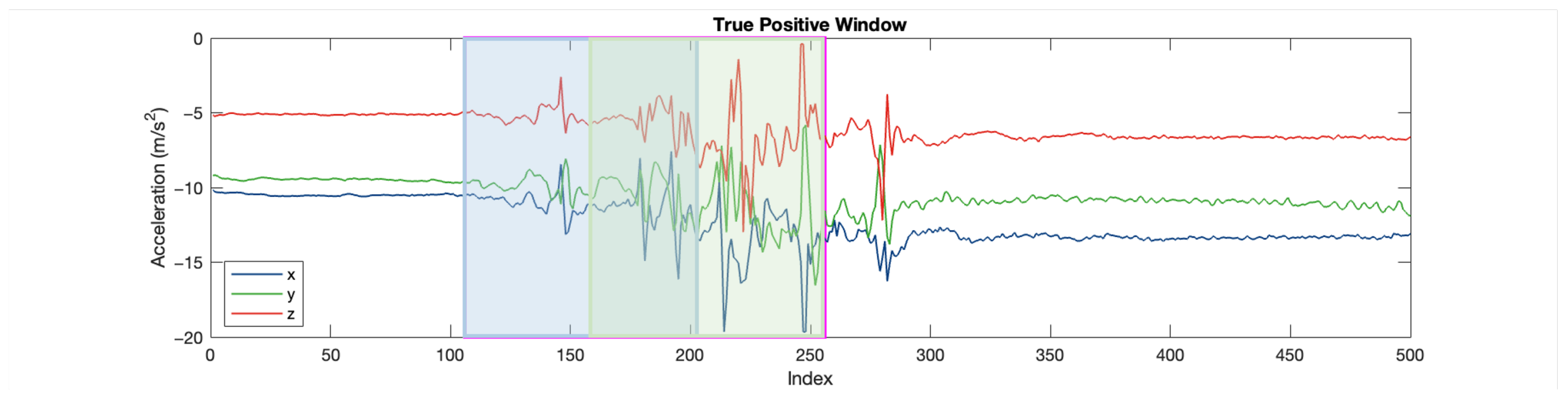

Figure 16 shows a 10 s excerpt around the true positive window. The blue and green rectangles represent the two sub-windows. In the first sub-window (in blue), the acceleration changes noticeably, but with a smaller amplitude than in the second sub-window (in green). This difference is consistent with our expectation: the acceleration increases at the beginning of the fall, the speed of the body increases during the fall, and the speed drops to zero at the moment of hitting the ground (the end of the fall), resulting in a greater acceleration at the end of the fall.
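The per-sub-window comparison can be sketched by computing the acceleration magnitude and its peak-to-peak amplitude in each sub-window. Treating "amplitude" as the peak-to-peak range of the magnitude is an assumption here, and the sample values are synthetic placeholders rather than FARSEEING data.

```python
import math


def magnitude(samples):
    """Euclidean norm of each (x, y, z) acceleration sample."""
    return [math.sqrt(x * x + y * y + z * z) for x, y, z in samples]


def peak_to_peak(values):
    """Difference between the largest and smallest value."""
    return max(values) - min(values)


# Synthetic (x, y, z) samples in g: hypothetical values, for illustration only.
first_sub = [(0.0, 0.0, 1.0), (0.1, 0.0, 0.6), (0.0, 0.1, 0.3), (0.1, 0.1, 0.2)]
second_sub = [(0.2, 0.1, 0.4), (1.5, 0.5, 2.5), (0.3, 0.2, 1.2), (0.0, 0.0, 1.0)]

amp_first = peak_to_peak(magnitude(first_sub))
amp_second = peak_to_peak(magnitude(second_sub))
print(f"first: {amp_first:.2f} g, second: {amp_second:.2f} g")
```

Statistics computed separately on each sub-window preserve this contrast between the onset and the impact, whereas a single statistic over the whole window would merge the two phases.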

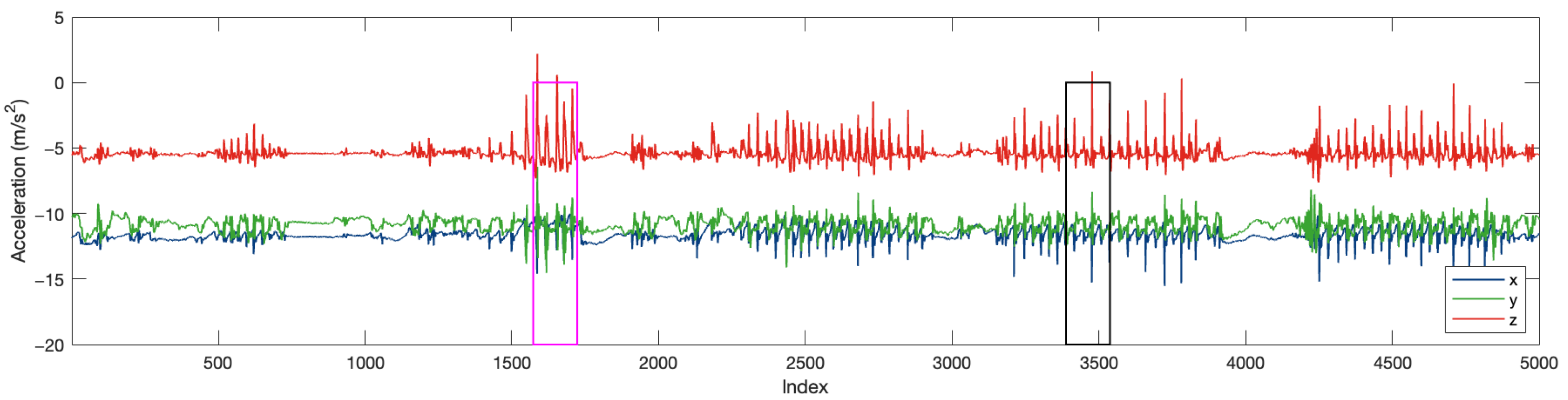

Figure 17 shows an excerpt of the data trace around the false negative, that is, the one fall sample that was not detected.

Table 13 summarizes the works that performed cross-dataset validation on FARSEEING. J. Silva et al. [32] proposed a transfer learning-based approach for FDS. They used a 7.5 s window without overlap. Trained on a self-collected simulated dataset and tested on FARSEEING, their method achieved 92% sensitivity. A. K. Bourke et al. [33] proposed an ML-based fall detection method that used a Decision Tree (DT) as the classifier. They evaluated the model on a more complete version of FARSEEING, including 89 falls and 368 ADLs, all collected at Position A, and reached an accuracy of 88% and a specificity of 87%. Yu, X. [34] proposed the KFall dataset, with data collected at Position A using an inertial sensor. They used KFall to train a ConvLSTM model and tested it on FARSEEING, achieving 93.33% sensitivity and 73.33% specificity. Koo, B. [35] proposed a neural network-based approach called TinyFallNet. They trained it on KFall [34] and achieved 86.67% sensitivity.

As shown, our method achieved sensitivity and specificity comparable to those of these works.

Evaluation on the FARSEEING dataset is a key test of our approach, as it addresses the well-known “simulation-to-real world” gap faced by many fall detection systems. The scarcity of large-scale, publicly available real-world fall datasets is a major bottleneck in the research community, making any evaluation on such data, regardless of scale, extremely valuable.

However, we must acknowledge a key limitation of the FARSEEING dataset: its small sample size of only 22 verified fall events. This requires careful interpretation of the results, as it limits their broad statistical reliability. Therefore, our discussion of these results focuses not on claims of absolute real-world superiority, but rather on how this successful cross-dataset validation reveals the robustness and generalization capabilities of our 3s2sub feature set.

Despite being trained exclusively on simulated, laboratory-collected data (UniMiB SHAR), our model performs remarkably well on unseen, noisy, and heterogeneous real-world data such as FARSEEING. As shown in Table 13, our method achieves the highest reported sensitivity (95.45%) and specificity (98.12%) compared to other published studies using this challenging dataset. The key to this result lies not only in the high scores themselves, but also in the fact that our feature engineering approach successfully transfers from the simulated to the real-world domain. This suggests that the statistical features we designed capture physical patterns of fall events that remain largely invariant across domains, making them less susceptible to the domain shifts that can degrade the performance of other models.

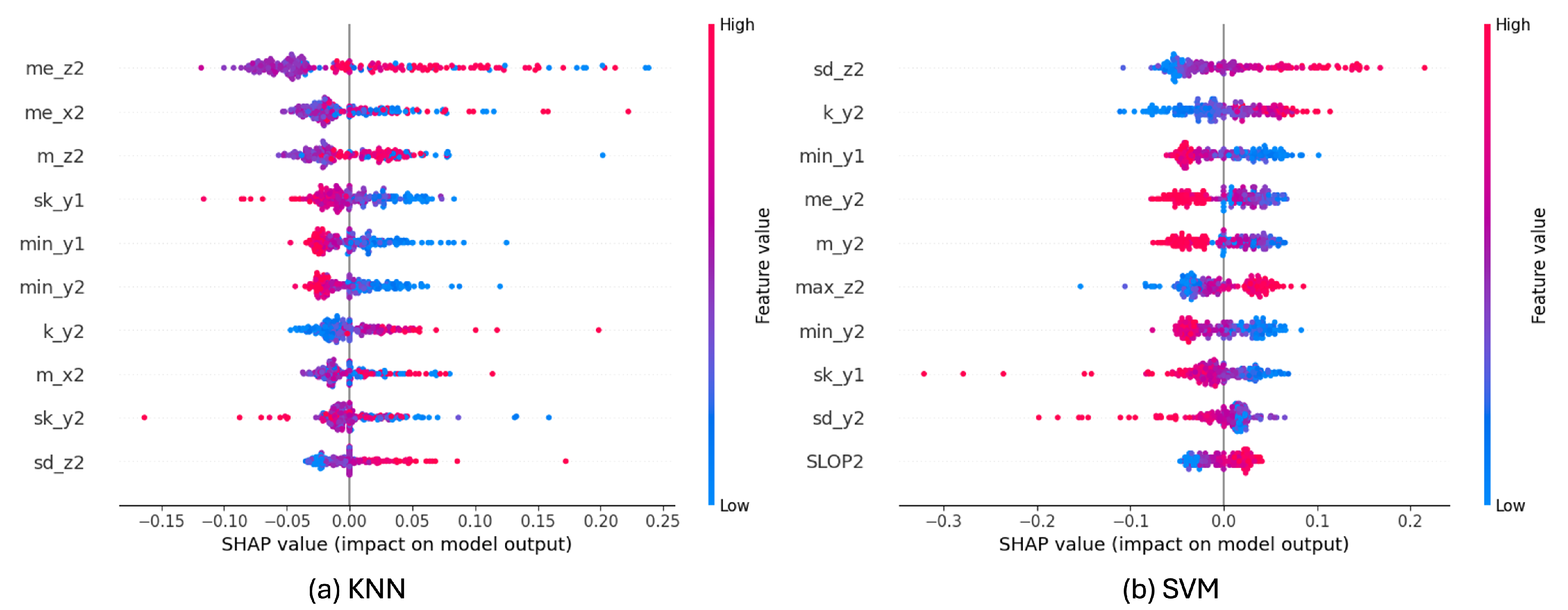

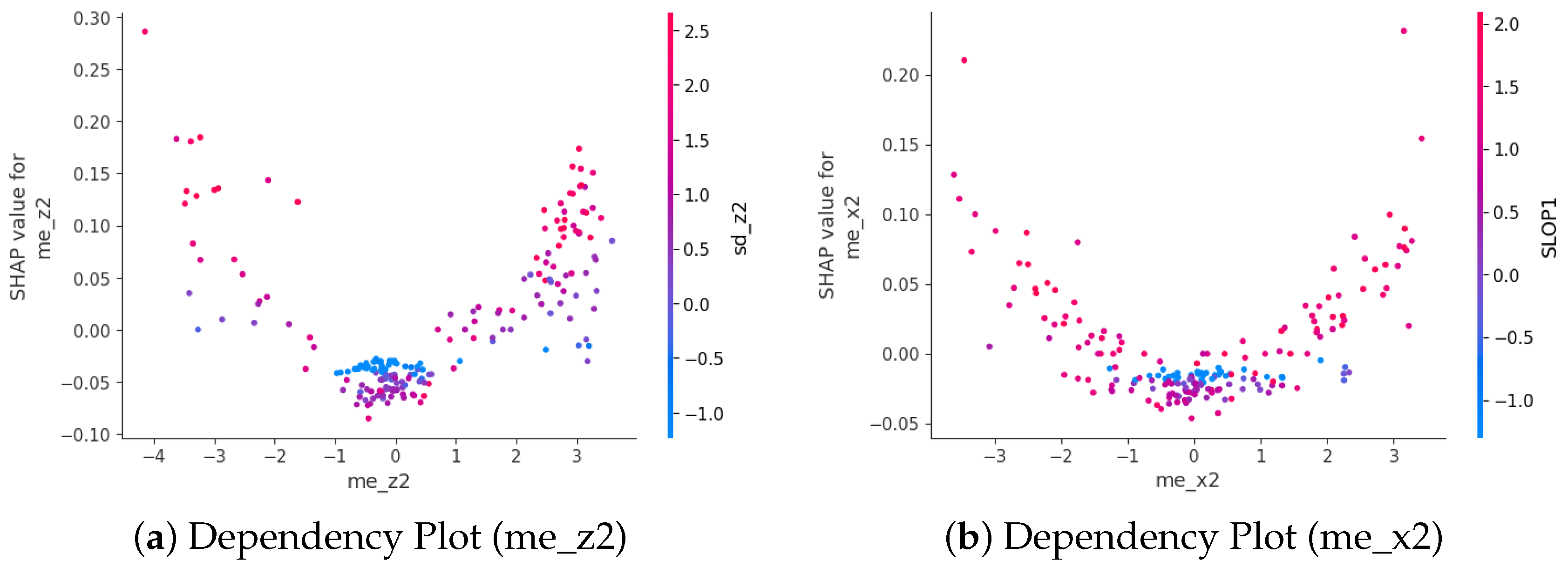

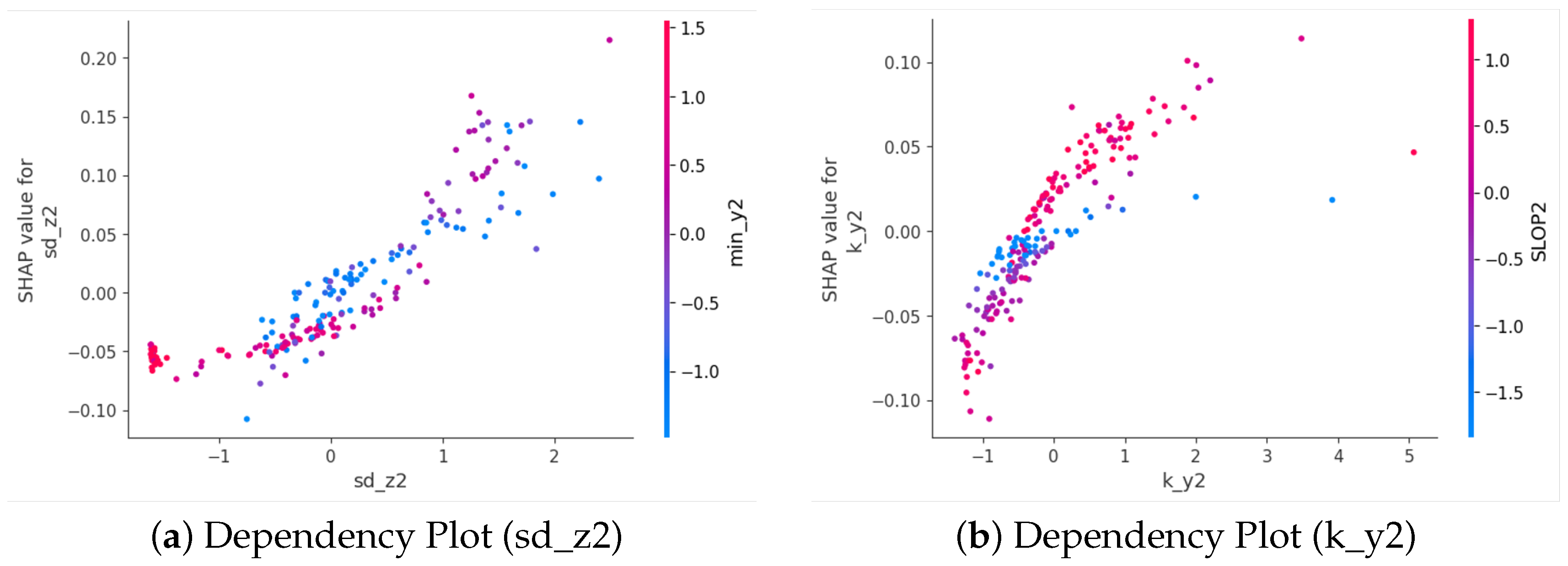

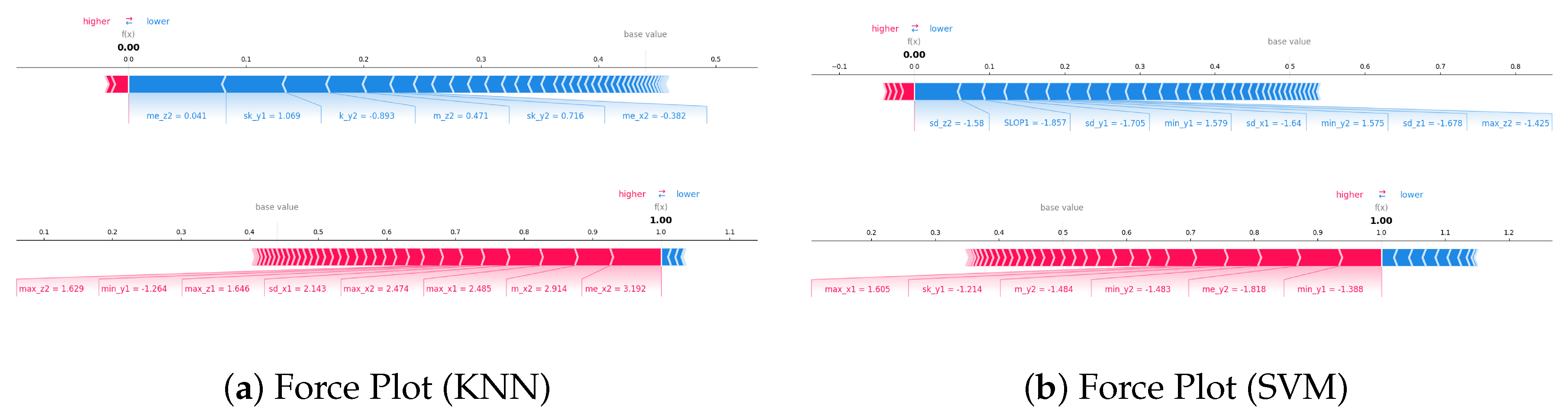

In summary, despite the limitations of the evaluation on FARSEEING, it provides strong evidence for the robustness of the 3s2sub framework. It demonstrates that a well-designed feature-based approach can achieve generalization from simulation to the real world, a critical and often difficult requirement for practical fall detection systems. We believe the success in such data-scarce conditions can be attributed to several factors: (1) the discriminative power of the proposed 3s2sub feature extraction method; (2) the resampling strategy to counteract class imbalance; and (3) the model’s reliance on generalizable, interpretable features, as confirmed by the SHAP analysis. Overall, these results indicate that the proposed approach can robustly handle both class imbalance and few-shot learning challenges in fall detection, making it well-suited for deployment in real-world settings where fall data is limited and imbalanced.

8.5. Failure Case Analysis

Our approach achieved strong results. To provide a deeper understanding of our method and its practical feasibility, we analyzed false positives and false negatives. This analysis sheds light on some of the model’s limitations. The majority of false positives are generated by specific activities of daily living (ADLs) that involve rapid postural changes and sudden decelerations, closely resembling the characteristics of real falls. In the UniMiB SHAR dataset, the most frequently misclassified ADLs are “sitting down” and “lying down from standing.” In the MobiAct dataset, “step into a car” (CSI) is the primary source of false positives. The underlying physical reason for these misclassifications is that these activities produce sharp, high-intensity acceleration spikes upon impact (for example, with a chair or bed), which our model can confuse with the impact phase of a fall. This is a classic challenge in the field, and our analysis identifies the specific ADLs that require more sophisticated feature differentiation in future work.

Our investigation of false negatives reveals that missed falls are primarily atypical or complex events, with acceleration profiles that differ from standard hard-impact falls. In the UniMiB SHAR dataset, missed falls include events such as “syncope” (fainting), “hitting an obstacle,” and “falling backward into a chair.” Similarly, in the MobiAct dataset, events such as “falling backward into a chair” (BSC) and “falling forward onto one’s knees” (FKL) are sometimes missed. These events share a common characteristic: non-standard impact features. For example, a fall cushioned by a chair (BSC) results in significantly reduced peak acceleration, and a fall caused by syncope may manifest as a slow slide rather than a rapid fall. These findings are important because they highlight the model’s limitations with soft-impact or multi-stage impact falls and indicate the need for features sensitive to more subtle fall patterns.

In addition, Figure 17 shows the data trace for the only false negative on FARSEEING. We observe that the sensor recording range for this particular sample differs from the range of our entire training set. This failure highlights the challenges posed by heterogeneity in real-world data, such as variations in sensor hardware. It indicates that, although the model already performs well, its robustness can be further improved by incorporating more diverse data during training.