1. Introduction

Gait analysis enables the identification of age-related musculoskeletal diseases and neurological movement disorders and supports the quantification of disease progression. For instance, gait patterns characterized by shuffling, small steps or lower gait velocities are associated with an increased risk of falling [1]. A previous study demonstrated correlations, with correlation coefficients ranging from 0.595 to 0.798, between the Berg Balance Scale and gait velocity, step length, and stride length [2]. Moreover, it has been shown that gait consistency and variability differ in individuals who fell within a 3-day study phase compared with healthy controls [3]. Another study indicated that gait velocity is associated with survival in the elderly population [4]. Changes in gait patterns can also manifest in the early stages of Parkinson’s disease (PD) [5,6]. Therefore, it is crucial to recognize such patterns as early as possible. Nonetheless, regular in-person screenings by specialists are often impractical due to limited healthcare resources, patient discomfort, and lack of acceptance stemming from long travel distances, time constraints, or patients’ belief in their own good health [7].

Gait analysis systems, such as floor-based gait tracking [8,9] and camera-based systems utilizing 3D or RGB cameras, with or without markers [10,11,12,13], are valuable tools for estimating spatio-temporal gait parameters, including step length, velocity, and swing/stance time. However, these systems are typically confined to specific research settings due to their requirement for complex room setups. This includes sensor carpet implementation and advanced camera configurations, as well as extended subject preparation times, including marker placement. Continuous monitoring via camera-based systems in everyday life is also impractical due to privacy concerns that arise in private environments. Moreover, the installation of sensor carpets or camera-based systems in home environments incurs substantial costs, limiting monitoring capabilities to certain areas within the living space. Therefore, such ambient sensors are unsuitable for continuous gait analysis in everyday living.

IMU-based systems provide a cost-effective alternative. IMUs are widely available in consumer devices and have proven effective for gait analysis, typically when placed at the ankle or shank [14]. However, these placements can be inconvenient. In contrast, studies have shown that waist- or hip-mounted sensors, tested in populations with PD or Multiple Sclerosis (MS) [15,16], are both unobtrusive and comfortable, supporting their use for daily monitoring. Frequent monitoring supports clinically meaningful applications including fall-risk screening in older adults (e.g., discriminating high vs. low fall risk using IMU features) [17]; early detection and monitoring in Parkinson’s disease, where IMU gait parameters correlate with disease status [18]; and rehabilitation tracking in MS or post-stroke patients, where IMU-derived gait features track recovery and asymmetries [19,20]. Bonanno et al. [21] systematically reviewed wearable-sensor fall-risk approaches in neurological populations, noting the presence of methodological heterogeneity and the importance of clinically grounded, standardized techniques.

In this work, we investigate gait event detection and parameter estimation using data from a single waist-worn IMU. We compare ML approaches including CNN and LSTM Seq2Seq models against GAITRite® reference data. We further introduce an improved evaluation method using dynamic event-centered segmentation and demonstrate that waist-mounted IMUs can serve as a practical alternative to lower-limb sensors for accurate gait analysis. Because the waist offers a stable placement that is unobtrusive and easily implemented in a belt, it is especially suited for daily-life monitoring. While previous studies with waist-level sensors have predominantly focused on event timing, we extend this by demonstrating that a single waist-mounted IMU can reliably estimate temporal, spatial, and spatio-temporal gait parameters. This contribution broadens the scope of waist-worn IMUs and supports their use as a practical tool for everyday gait analysis.

2. Related Work

2.1. Use Cases

Two recent reviews have highlighted that IMU-based gait analysis has been applied across diverse populations. Mobbs et al. [22] reported that most studies focused on healthy individuals, while others examined older adults, patients with PD, stroke survivors, and individuals with ataxia, Huntington’s disease, or lower-limb amputations. Similarly, Prasanth et al. [14] found that stroke-related impairments were the most common focus, followed by amputations, cerebral palsy, and PD. In both reviews, these conditions were identified retrospectively from the included studies rather than being used as explicit search criteria.

2.2. Sensor Positions

The choice of sensor placement varies considerably across studies. Reviews show that foot and shank sensors remain the most common locations, often complemented by thigh or trunk placements in multi-sensor configurations [14]. Other studies report different distributions, with frequent use of wrist as well as waist, hip, and chest sensors [23]. Notably, when restricted to single-sensor setups, the waist emerges as the predominant location, selected in the majority of cases [22]. Evaluations highlight a sensor position trade-off: lower-limb placements (foot/shank) yield the most accurate detection of heel strike (HS) and toe-off (TO) events and better estimates of stance and swing times, whereas trunk/waist sensors are more practical to wear but can lead to reduced precision in these measures [24]. Cross-cohort tests further show that performance, particularly for variability and asymmetry measures, is sensitive to sensor location and recording context (e.g., indoor vs. outdoor walking, healthy adults vs. PD), underscoring the importance of selecting the placement that best matches the target application [25].

2.3. Algorithms

Support vector machines have long been applied to gait analysis in the context of neurodegenerative diseases such as Alzheimer’s disease, PD, Multiple Sclerosis (MS), and Amyotrophic Lateral Sclerosis (ALS) [26]. More recently, deep learning methods such as CNNs and LSTMs have emerged, offering strong performance when sufficient training data are available, while instance-based and tree-based methods remain useful for smaller datasets.

Rule-based techniques, typically relying on thresholds or peak detection, are still widely used for gait event detection, particularly for HS and TO [14]. Their low computational complexity makes them suitable for real-time clinical applications, but they are generally limited to event detection and do not provide spatial or spatio-temporal parameters.

3. Materials and Methods

This section outlines the methodology used in the study, beginning with a description of the dataset collected using an IMU sensor and the GAITRite® system. Two ML-based gait event detection algorithms are explained. The methodology also includes step length estimation and the calculation of additional gait parameters, which, in combination with the gait event detection approach, form a two-stage algorithm. Finally, the evaluation methods and performance metrics for the algorithms are presented. We chose CNN and LSTM Seq2Seq architectures because they are widely established baselines for time-series and wearable-sensor modeling, including human activity and gait from IMUs. Prior work shows CNN/LSTM as standard, high-performing choices in time-series reviews and in wearable sensing/HAR and gait estimation specifically [27,28,29,30,31,32,33].

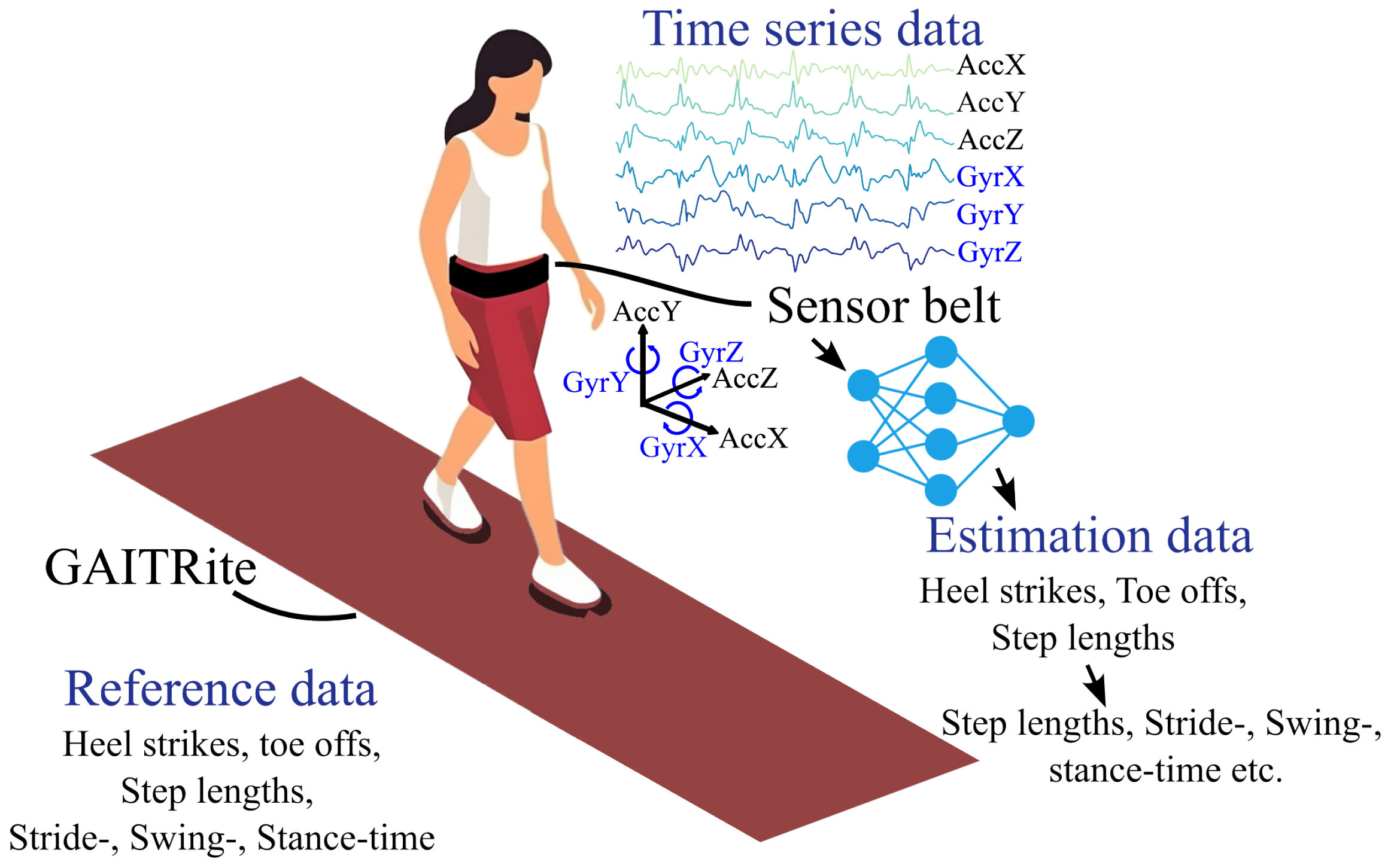

3.1. Dataset

The study dataset was collected at the University of Oldenburg using a single IMU sensor that was integrated into a waist-worn Humotion sensor belt, positioned between the L3 and L5 lumbar vertebrae [2,34,35]. This IMU sensor includes a triaxial accelerometer, gyroscope, and magnetometer, with data sampled at 100 Hz. The placement and orientation of the sensor were adjusted according to the hip circumference of each participant to ensure stability and uniformity, crucial for accurate gait recording (see Figure 1 for a schematic overview).

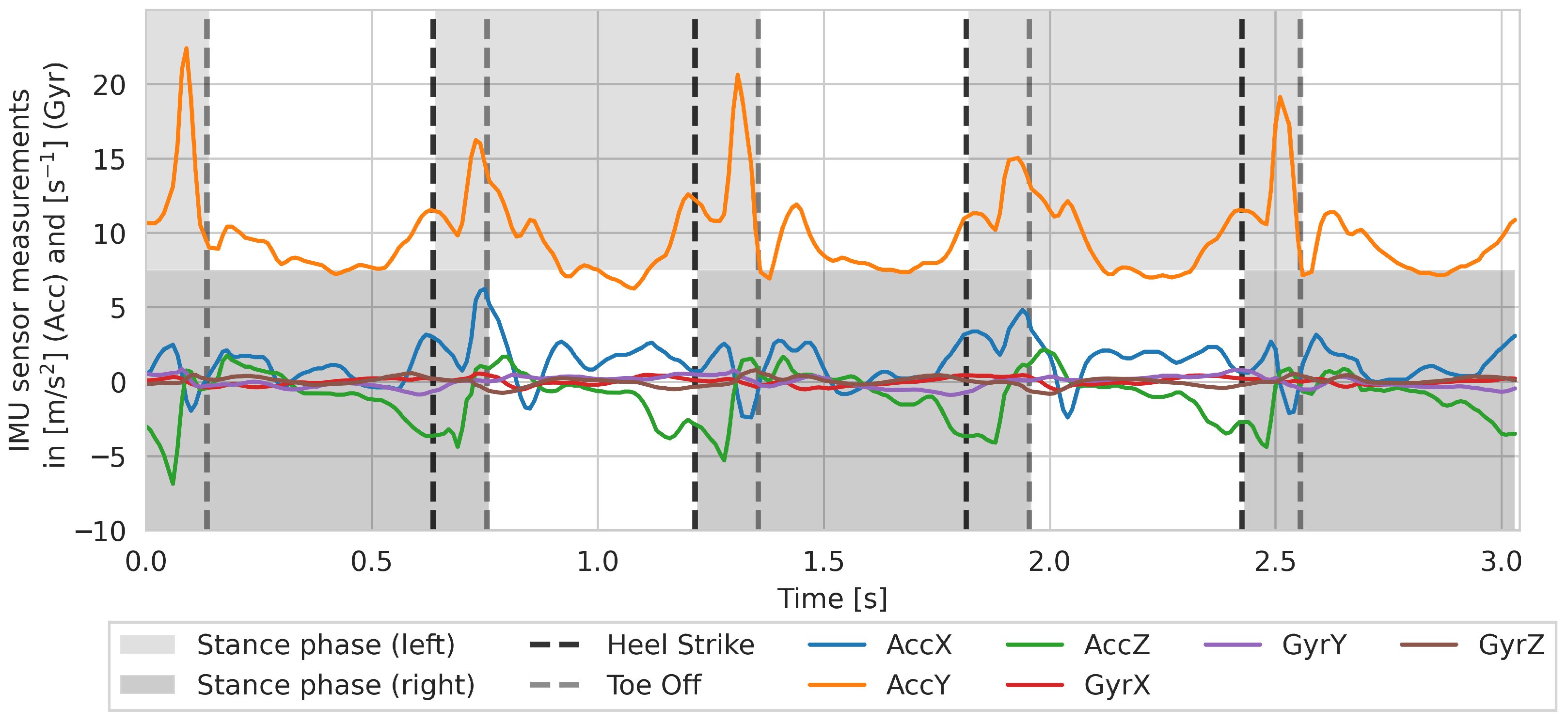

Figure A1 shows the sensor data of a single walk with gait events (HS and TO) during a 4 m walk.

The GAITRite® system, a validated tool for gait parameter estimation [36], was used as a reference, recording gait alongside the sensor belt. It records event-based measurements, capturing the exact time and location of foot contacts on its sensor grid, which are spaced at a minimum of 1.27 cm apart [9].

As summarized in Table A1, 69 subjects with ages ranging from 21 to 82 years are included in the dataset. The participants were healthy, with a median age of 57 years (standard deviation (SD) = 24; range: 21–82 years), of whom about 43% were female and 57% were male. Participants were instructed to walk continuously back and forth across the 4 m long GAITRite® for 6 min. To capture a broader range of walking speeds, the walking test was conducted twice: once at a comfortable self-chosen (normal) pace and once at a self-chosen fast pace. Each walk was followed by short rest breaks. The walking speed conditions were not randomized across participants [34].

The raw triaxial accelerometer and gyroscope signals from the waist-worn IMU were normalized channel-wise to zero mean and unit variance. Magnetometer data were not considered, since the aim was to use a purely body-centered representation without incorporating global information. Step lengths were obtained from GAITRite® as the Euclidean distance between consecutive HS positions. IMU and GAITRite streams were synchronized by estimating a constant time offset and shifting the IMU series accordingly. The raw IMU signals were used without additional filtering or transformation, apart from synchronization to GAITRite® events and normalization, as described. Walks were excluded if either modality was missing, if the duration was shorter than 2.64 s (264 samples), or if GAITRite failed to detect at least one step. Missed steps were inferred when the preceding step time exceeded 150% of the median step time for that walk, which led to the removal of 36 walks (about 0.8%). Double steps were defined as step times shorter than 10% of the median and resulted in discarding 32 walks (about 0.7%), where the first of each double-step pair was removed. Data before the first HS and after the last HS were trimmed, and each IMU sample was assigned the GAITRite-derived step length of its enclosing HS interval, creating sample-aligned labels for Seq2Seq training.
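The exclusion rules for missed and double steps can be illustrated with a short sketch. The function name `classify_walk` and the example timestamps are hypothetical; only the thresholds of 150% and 10% of the median step time are taken from the description above.

```python
from statistics import median

def classify_walk(hs_times, missed_factor=1.5, double_factor=0.1):
    """Flag a walk based on its reference heel-strike (HS) timestamps in seconds.

    Returns "missed_step", "double_step", or "ok".
    """
    step_times = [b - a for a, b in zip(hs_times, hs_times[1:])]
    if not step_times:
        return "ok"  # fewer than two HS: nothing to compare (assumption)
    med = median(step_times)
    # A step time above 150% of the median suggests a missed step.
    if any(t > missed_factor * med for t in step_times):
        return "missed_step"
    # A step time below 10% of the median suggests a double step.
    if any(t < double_factor * med for t in step_times):
        return "double_step"
    return "ok"

regular = [0.0, 0.5, 1.0, 1.5, 2.0]
gap = [0.0, 0.5, 1.0, 2.0, 2.5]       # one interval is twice the median
double = [0.0, 0.5, 0.52, 1.0, 1.5]   # one interval is far below the median
print(classify_walk(regular), classify_walk(gap), classify_walk(double))
```

In this sketch a walk is only flagged; how a flagged walk is then discarded or repaired is left to the surrounding pipeline.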

3.2. Gait Event Detection

For comparison, two gait event detection algorithms were implemented. Both are designed as Seq2Seq models, one based on a convolutional neural network (CNN) and one on a recurrent neural network (RNN), and both are described in this section. First, the common principles of both algorithms are introduced, followed by an explanation of the respective network architectures and the training phase.

The GAITRite® data contain the timings of the HS and TO events per walk. Each walk of a subject i consists of a sequence of HS timestamps and a sequence of TO timestamps. The number of gait events varies from walk to walk. To make these data available to the neural network for training, each walk is encoded as a binary time series. Each HS marks a new step and is the start of the double-support phase, meaning that both feet are touching the ground simultaneously. In contrast, the single-support phase is the phase in which one foot touches the ground while the other is in the swing phase. Accordingly, the binary gait-support representation encodes for each time step whether it belongs to a single-support (0) or double-support (1) phase. In this representation, gait events are identifiable at the transition points from 0 to 1 or vice versa, as depicted in Figure 2.
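The binary gait-support encoding can be sketched as follows. This is a minimal illustration, not the authors' implementation: the function name `encode_gait_support` is hypothetical, and it is assumed that each double-support phase runs from an HS to the next TO and that timestamps are given in seconds at the 100 Hz sampling rate.

```python
def encode_gait_support(hs_times, to_times, n_samples, fs=100):
    """Encode a walk as a binary series: 1 = double support, 0 = single support.

    Assumption: double support starts at each HS and ends at the next TO.
    """
    labels = [0] * n_samples
    to_sorted = sorted(to_times)
    for hs in sorted(hs_times):
        # First toe-off that follows this heel strike.
        next_to = next((t for t in to_sorted if t > hs), None)
        if next_to is None:
            continue  # no closing TO inside the recording
        start = round(hs * fs)
        stop = min(round(next_to * fs), n_samples)
        for i in range(start, stop):
            labels[i] = 1
    return labels

labels = encode_gait_support([0.10, 0.60], [0.20, 0.70], n_samples=100)
print(labels[8:22])
```

With these example timestamps, samples 10 to 19 and 60 to 69 are marked as double support, so each HS appears as a 0-to-1 transition and each TO as a 1-to-0 transition.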

Both neural networks process overlapping segments of the IMU signal. The six raw channels (three axes of acceleration and three axes of angular velocity) are divided into fixed-length windows, extracted with a stride of one sample to ensure full coverage of the time series. For each window, the network outputs a sequence of the same length as the input segment, but with a single channel representing the estimated gait-support state at each time step (Figure 3). Because consecutive windows overlap, each time point is predicted multiple times.

Among the overlapping windows, the median of all binary gait-support estimations for a time step (rounded if necessary) is taken as the final output estimation. Therefore, for each input time series, the network produces an output time series of the same length, in which each value represents the type of gait-support phase. The transitions in that time series are used to identify the HS and TO events, resulting in two output vectors, one for HS and one for TO, where each value represents the estimated timestamp of the respective event in that walk.
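The aggregation of overlapping window outputs and the extraction of events from transitions can be sketched as below. The helper names `aggregate_windows` and `find_events` are hypothetical, the window outputs are assumed to be already binarized, and resolving a median of 0.5 towards 1 is an assumption, since the rounding rule is not specified in detail.

```python
from statistics import median

def aggregate_windows(window_preds, n_samples):
    """Combine per-window outputs (stride 1) into one binary series via the median."""
    per_sample = [[] for _ in range(n_samples)]
    for start, preds in enumerate(window_preds):  # window k covers samples k..k+len-1
        for offset, p in enumerate(preds):
            per_sample[start + offset].append(p)
    # A tie between 0 and 1 gives a median of 0.5; rounding up is an assumption.
    return [1 if median(v) >= 0.5 else 0 for v in per_sample]

def find_events(support):
    """Return (HS, TO) sample indices from 0->1 and 1->0 transitions."""
    hs = [i for i in range(1, len(support)) if support[i - 1] == 0 and support[i] == 1]
    to = [i for i in range(1, len(support)) if support[i - 1] == 1 and support[i] == 0]
    return hs, to

truth = [0, 0, 1, 1, 0, 0, 0, 1, 1, 0]
windows = [truth[k:k + 4] for k in range(7)]  # window length 4, stride 1
windows[1] = [0, 0, 0, 0]                     # simulate one faulty window
support = aggregate_windows(windows, len(truth))
print(support, find_events(support))
```

In this toy example the faulty window is outvoted by its neighbours, which illustrates why predicting each time point several times can make the final series more robust.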

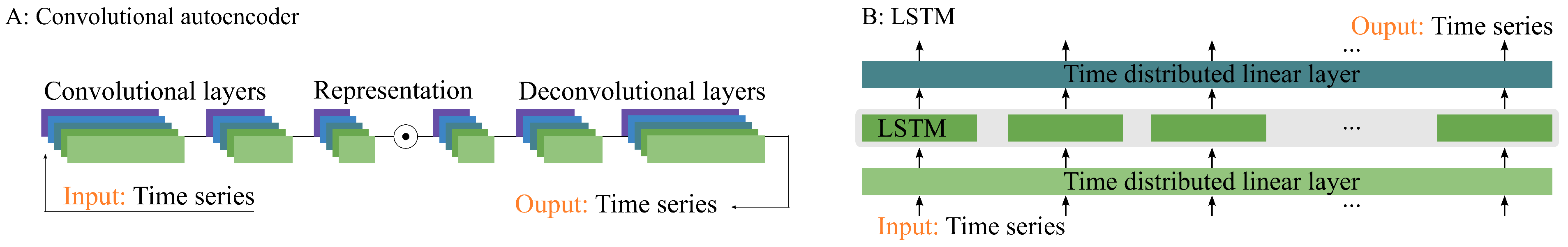

As visualized in Figure 4, the two neural network architectures are based on a CNN and an LSTM. The CNN consists of a three-layer 1D-CNN as an encoder, followed by a symmetrical set of deconvolutional layers. The first two convolutional layers consist of 64 kernels with ReLU activation, batch normalization, and dropout. The third convolutional layer employs 32 kernels with a ReLU activation. The convolutional layers use a stride of 2 and maintain a fixed padding of 1 with a kernel size of 3. Subsequent deconvolutional layers mirror the same structure, leading to a single, sigmoid-activated layer.
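The effect of the stated kernel size, stride, and padding on the sequence length can be checked with the standard output-length formulas for 1D (transposed) convolutions. The sketch below is plain arithmetic, not the network itself; the `output_padding=1` in the decoder is an assumption that is not stated in the text, chosen here so that the mirrored layers restore the input length.

```python
def conv_out_len(n, kernel=3, stride=2, padding=1):
    """Output length of a 1D convolution (standard formula, dilation 1)."""
    return (n + 2 * padding - kernel) // stride + 1

def deconv_out_len(n, kernel=3, stride=2, padding=1, output_padding=1):
    """Output length of a 1D transposed convolution (dilation 1)."""
    return (n - 1) * stride - 2 * padding + kernel + output_padding

length = 264  # window size in time steps
encoder_lengths = [length]
for _ in range(3):  # three stride-2 convolutional layers
    encoder_lengths.append(conv_out_len(encoder_lengths[-1]))

decoder_lengths = [encoder_lengths[-1]]
for _ in range(3):  # three mirrored transposed convolutions
    decoder_lengths.append(deconv_out_len(decoder_lengths[-1]))

print(encoder_lengths)  # [264, 132, 66, 33]
print(decoder_lengths)  # [33, 66, 132, 264]
```

Since 264 = 8 × 33, three successive halvings divide the window evenly, so under these assumptions the decoder output has exactly one value per input time step.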

The LSTM network is a three-layer stacked LSTM. It takes a linearly encoded sequence as input, where each step in the sequence is individually encoded into 64 features using the same linear layer (also known as a time-distributed layer). The LSTM’s output sequence is then decoded from 64 features to a single feature using another time-distributed linear layer, followed by a sigmoid-activated layer.

During training, time series of length 264 are sampled from the IMU data. A random offset is applied to each sample, and a sub-sequence of 264 time steps is then cut out. The AdamW optimizer [37] with a learning rate of 0.002, a weight decay of 0.01, and a batch size of 128 is used. The learning rate is halved as soon as the loss does not decrease over a span of 6 epochs. The binary cross-entropy loss is used as the loss function for gait event estimation. Leave-one-out cross-validation (LOOCV) at the subject level is used to maximize the size of the training dataset. With LOOCV, the complete data of a single subject is withheld at a time and the training is executed with the data of the remaining subjects. Only the unseen data of the left-out subject is evaluated, and the evaluation results are averaged over all folds. The training is stopped early if the loss does not decrease over a span of 24 epochs, or once the maximum number of 72 epochs has been completed.
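The interplay of learning-rate halving, early stopping, and the epoch limit can be illustrated by replaying a list of per-epoch losses. This is a simplified sketch with a hypothetical function `run_schedule`; the exact counting convention (for example, whether the rate is halved after the sixth or the seventh epoch without improvement) depends on the scheduler implementation and is an assumption here.

```python
def run_schedule(losses, lr=0.002, lr_patience=6, stop_patience=24, max_epochs=72):
    """Replay per-epoch losses and return (epochs_run, final_lr)."""
    best = float("inf")
    since_best = 0      # epochs since the last improvement (early stopping)
    since_lr_step = 0   # epochs without improvement since the last LR change
    epochs_run = 0
    for loss in losses[:max_epochs]:
        epochs_run += 1
        if loss < best:
            best = loss
            since_best = 0
            since_lr_step = 0
        else:
            since_best += 1
            since_lr_step += 1
        if since_lr_step >= lr_patience:
            lr /= 2          # halve the learning rate on a plateau
            since_lr_step = 0
        if since_best >= stop_patience:
            break            # early stopping
    return epochs_run, lr

plateau = [1.0, 0.8, 0.7] + [0.7] * 100      # improves for 3 epochs, then stalls
improving = [1 / (k + 1) for k in range(100)]  # improves in every epoch
print(run_schedule(plateau))
print(run_schedule(improving))
```

In the stalled example the learning rate is halved four times before early stopping ends training after 27 epochs, whereas the steadily improving example runs until the limit of 72 epochs with an unchanged learning rate.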

3.3. Step Length Regression

In the following section, the algorithm for step length estimation for each consecutive HS event is described, which is visualized in Figure 5. The estimation of step lengths is based on the same neural network architecture (either CNN or LSTM) as the ML-based gait event detector. Only the last activation function (sigmoid) is replaced by the identity function.

The GAITRite® data contain a step length annotation for each consecutive HS. As a preparatory step, the step lengths of each walk are converted into a time series with the same length as the corresponding IMU data of that walk. Each time step within the time span between two consecutive HS is assigned the same step length, as given by the GAITRite® data. The resulting time series, which has one value per time step of the walk, therefore resembles a step function. It serves as the target to be estimated by the neural network, with the IMU data of the walk as input.

Next, the HS event estimations are used to calculate the final step length estimation for each pair of consecutive estimated HS, where k is the number of estimated HS. For this purpose, the median of all network estimations within the time span between two consecutive HS is calculated. Additionally, a step length is estimated for the time span from time step 0 to the first estimated HS. Note that the number of predicted step lengths may differ from the total number of step lengths annotated by the GAITRite®.
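The interval-wise median can be sketched as follows. The function name `step_lengths_from_series` and the example values are hypothetical; the sketch assumes that the network output is available as one step length value per sample and that estimated HS events are given as sample indices.

```python
from statistics import median

def step_lengths_from_series(pred_series, hs_indices):
    """Median of the per-sample network output within each HS-delimited interval.

    The first interval runs from sample 0 to the first estimated HS.
    """
    bounds = [0] + sorted(hs_indices)
    estimates = []
    for start, stop in zip(bounds, bounds[1:]):
        segment = pred_series[start:stop]
        if segment:  # skip empty intervals, e.g. an HS at sample 0
            estimates.append(median(segment))
    return estimates

# Per-sample step length output in cm; the value 90.0 is a simulated outlier.
pred = [60.0, 61.0, 59.0, 60.0, 70.0, 71.0, 90.0, 69.0, 70.0, 65.0, 65.0]
print(step_lengths_from_series(pred, hs_indices=[4, 9]))
```

Taking the median rather than the mean keeps a single outlying sample, such as the 90.0 above, from shifting the step length estimate of its interval.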

For training, the same randomized sampling strategy as for ML-based gait event detection is applied. Furthermore, LOOCV, optimizer, learning rate, weight decay, batch size, learning rate scheduler, and early stopping strategy remain the same. The loss function used is Soft-DTW [38].

3.4. Additional Gait Parameter Estimation

The following gait parameters are calculated by using the HS and the respective step length estimations from the ML-based algorithm:

Cadence: The inverse of the time between each pair of consecutive HS is calculated, and these values are averaged over each walk.

Velocity: The velocity is computed by dividing each estimated step length by its corresponding step time.

Step time: The step time is computed by subtracting the time of the previous HS from the time of each estimated HS.

Stance time: The stance time is computed by subtracting the time of the previous HS from the time of each TO.

Swing time: The swing time is computed by subtracting the time of the previous TO from the time of each HS.

These gait parameters are all evaluated walk-wise.
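The parameter definitions above can be written out as a small sketch. The function names and example values are hypothetical. Three conventions are assumptions rather than statements from the text: "previous" is taken to mean the most recent preceding event regardless of foot, the i-th step length is paired with the i-th step time, and cadence is reported in steps per minute.

```python
from statistics import mean

def previous(events, t):
    """Most recent event strictly before time t, or None."""
    earlier = [e for e in events if e < t]
    return max(earlier) if earlier else None

def gait_parameters(hs, to, step_lengths):
    """Walk-level means from HS/TO times (s) and step lengths (cm)."""
    step_times = [b - a for a, b in zip(hs, hs[1:])]
    # Stance: each TO minus the most recent preceding HS.
    stance = [t - previous(hs, t) for t in to if previous(hs, t) is not None]
    # Swing: each HS minus the most recent preceding TO.
    swing = [h - previous(to, h) for h in hs if previous(to, h) is not None]
    return {
        "step_time_s": mean(step_times),
        "cadence_steps_per_min": 60 * mean(1 / t for t in step_times),
        "velocity_cm_per_s": mean(l / t for l, t in zip(step_lengths, step_times)),
        "stance_time_s": mean(stance),
        "swing_time_s": mean(swing),
    }

params = gait_parameters(
    hs=[0.0, 0.5, 1.0, 1.5],
    to=[0.1, 0.6, 1.1],
    step_lengths=[60.0, 62.0, 64.0],
)
print(params)
```

Because every derived parameter depends on the HS and TO timestamps, timing errors in event detection carry over directly into these values.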

3.5. Evaluation

3.5.1. Evaluation of Gait Event Detection

For the evaluation of gait event detection, each walk is divided into segments based on the HS and TO timestamps annotated by the GAITRite®. Each segment starts at the midpoint between a reference gait event (either HS or TO) and its predecessor and ends at the midpoint between that event and its successor. The left boundary of the first segment is set to 0, and the right boundary of the last segment is set to the end of the walk. This segmentation ensures that each segment corresponds to exactly one reference gait event, allowing every estimated event to be uniquely assigned.

A predicted event (HS or TO) is considered a true positive if it is the only detected event within the corresponding segment. Segments with multiple predictions are treated as over-estimations (false positives), while segments without any predictions are counted as false negatives. True negatives are not defined in this context, since by construction each segment is associated with one reference event. Based on this scheme, evaluation metrics such as accuracy, precision, recall, and F1-score are computed. In addition, the temporal precision of the detector is assessed by calculating the mean time difference between the estimated and reference events for all true positives.

This dynamic segmentation approach enables a meaningful assessment of temporal displacement between detected and reference events. Unlike fixed-window evaluation strategies, which can bias results through arbitrary window boundaries, the segmentation based on event midpoints provides an unbiased alignment to the reference data and captures the true temporal accuracy of the detectors.
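The event-centered segmentation and matching can be sketched as follows. The function name `evaluate_events` is hypothetical, and several details are assumptions: the events of one type (HS or TO) are evaluated at a time, segments are treated as half-open intervals, and a segment with several detections contributes one false positive.

```python
def evaluate_events(ref, est, walk_end):
    """Match estimated events to midpoint-delimited segments around reference events.

    ref, est: sorted event times (s) of one type; walk_end: walk duration (s).
    Returns (tp, fp, fn, errors), where errors holds est - ref for true positives.
    """
    mids = [(a + b) / 2 for a, b in zip(ref, ref[1:])]
    bounds = [0.0] + mids + [walk_end]
    tp = fp = fn = 0
    errors = []
    for r, lo, hi in zip(ref, bounds, bounds[1:]):
        inside = [e for e in est if lo <= e < hi]  # half-open segment [lo, hi)
        if len(inside) == 1:
            tp += 1
            errors.append(inside[0] - r)
        elif not inside:
            fn += 1  # reference event was missed
        else:
            fp += 1  # several detections in one segment: over-estimation
    return tp, fp, fn, errors

tp, fp, fn, errors = evaluate_events(
    ref=[0.5, 1.0, 1.5, 2.0], est=[0.52, 1.45, 1.55, 2.01], walk_end=2.5
)
precision = tp / (tp + fp)
recall = tp / (tp + fn)
f1 = 2 * precision * recall / (precision + recall)
print(tp, fp, fn, round(f1, 3))
```

In this example the second reference event is missed and the third is detected twice, so two of the four segments yield true positives whose time differences enter the temporal error.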

3.5.2. Evaluation of Step Length Regression

In the GAITRite® reference data, each walk has a varying number of annotated step lengths, corresponding to the number of steps taken during the walk, which is defined by the number of HS occurring within it. As a basis for evaluating step lengths, the steps must also be detected by the algorithm. When an HS is correctly detected (fulfilling the definition of a true positive according to Section 3.5.1), the corresponding step length estimation is used to calculate the error (mean error) with respect to the corresponding GAITRite® step length.

4. Results

Prior to the evaluation, we investigated the influence of the window size parameter on the performance of the CNN-based gait event detection model. The window size determines the temporal span of input data considered for each prediction. We experimented with various window sizes, ranging from 8 to 496 time steps, with a step size of 16. In general, larger window sizes tend to produce better results. Beyond window sizes of 264 time steps, fewer walks meet the minimum length requirement, shrinking the training set. We found that this reduction in data outweighed any contextual gains from a longer window. Therefore, we selected a window size of 264 time steps for all subsequent evaluations, as it represented the best compromise between capturing sufficient gait event context and maximizing the utilization of our available data.

4.1. Evaluation of Gait Event Detection

The evaluation of the CNN- and LSTM-based gait event detectors is presented in Table 1 and Table 2. Both approaches achieved accuracy, precision, recall, and F1-scores consistently well above 98%. In terms of temporal precision, the CNN-based detector provided the lowest mean errors for HS and TO events (Table 2).

4.2. Evaluation of Step Length Regression and Additional Gait Parameters

Table 3 summarizes the performance of our step length regressions and the gait parameters derived from it. The CNN-based method outperforms the LSTM-based method for most gait parameters. Consequently, the CNN-based step length estimation is considered for further evaluation, as it demonstrated better performance overall compared to the LSTM-based approach. The mean bias was 0.09 cm (with SD 4.64 cm; 95% CI [0.026, 0.163] cm).

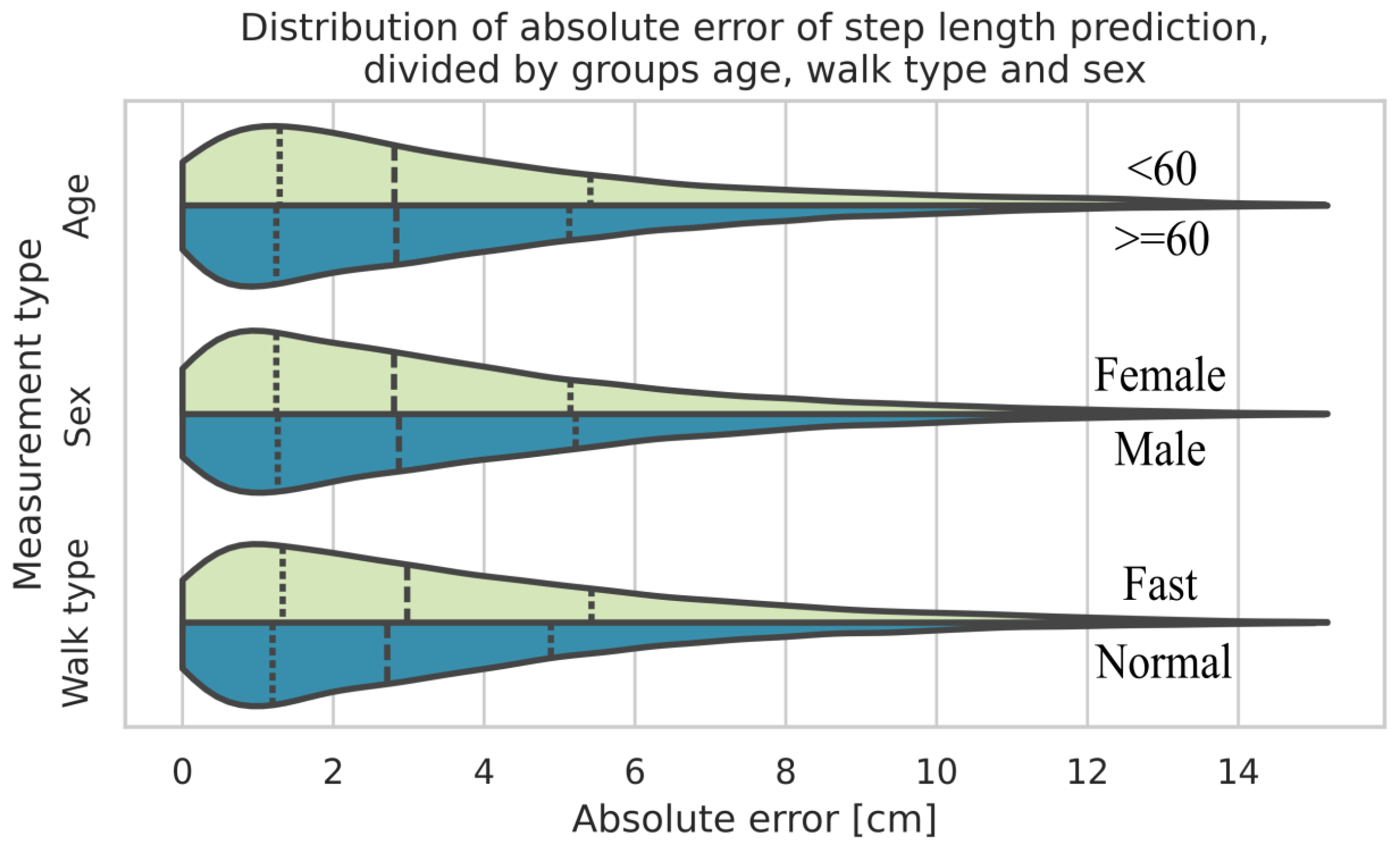

When the data are grouped by walk type (normal and fast), age, and sex, the largest difference between groups arises for walk type, where fast walks show a larger estimation error than normal walks. The percentage error, however, differs only slightly between the walk types (5.97% for normal walks compared to 6.28% for fast walks).

Figure 6 presents a violin plot [39] that shows the kernel density estimations for each individual group in direct comparison with their counterparts. The dotted lines represent the median value and the quartiles, respectively.

The intraclass correlation coefficient (ICC) reflecting mean differences between subjects for the step length errors was 0.35. This indicates that about a third of the total variance in errors can be attributed to differences between subjects.

Figure 7 shows the Bland–Altman [40] plot for the step length estimation of the ML-based algorithm. The mean difference is 0.09 cm with a 1.96 SD interval of [−9.08 cm, 9.27 cm].
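For readers unfamiliar with the Bland–Altman interval, the computation of the mean difference and the 1.96 SD limits can be sketched as below. The function name `bland_altman` and the example values are hypothetical and unrelated to the study data; taking differences as estimate minus reference and using the sample standard deviation are assumptions.

```python
from statistics import mean, stdev

def bland_altman(estimates, references):
    """Return (bias, lower limit, upper limit) for paired measurements."""
    diffs = [e - r for e, r in zip(estimates, references)]
    bias = mean(diffs)
    sd = stdev(diffs)  # sample standard deviation (assumption)
    return bias, bias - 1.96 * sd, bias + 1.96 * sd

# Toy step lengths in cm, not taken from the study.
est = [61.0, 59.0, 63.0, 57.0]
ref = [60.0, 60.0, 60.0, 60.0]
bias, lower, upper = bland_altman(est, ref)
print(round(bias, 2), round(lower, 2), round(upper, 2))
```

If the differences are approximately normally distributed, about 95% of them are expected to lie between the lower and upper limits.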

If the error is calculated separately for each test subject, large differences become apparent, as the error for some subjects exhibits a systematic bias. The per-subject mean error ranges from −8.32 cm (largest average underestimate) to +7.69 cm (largest average overestimate), with the smallest absolute value being 0.01 cm. The standard deviation ranges from 1.71 cm to 6.47 cm across individual subjects.

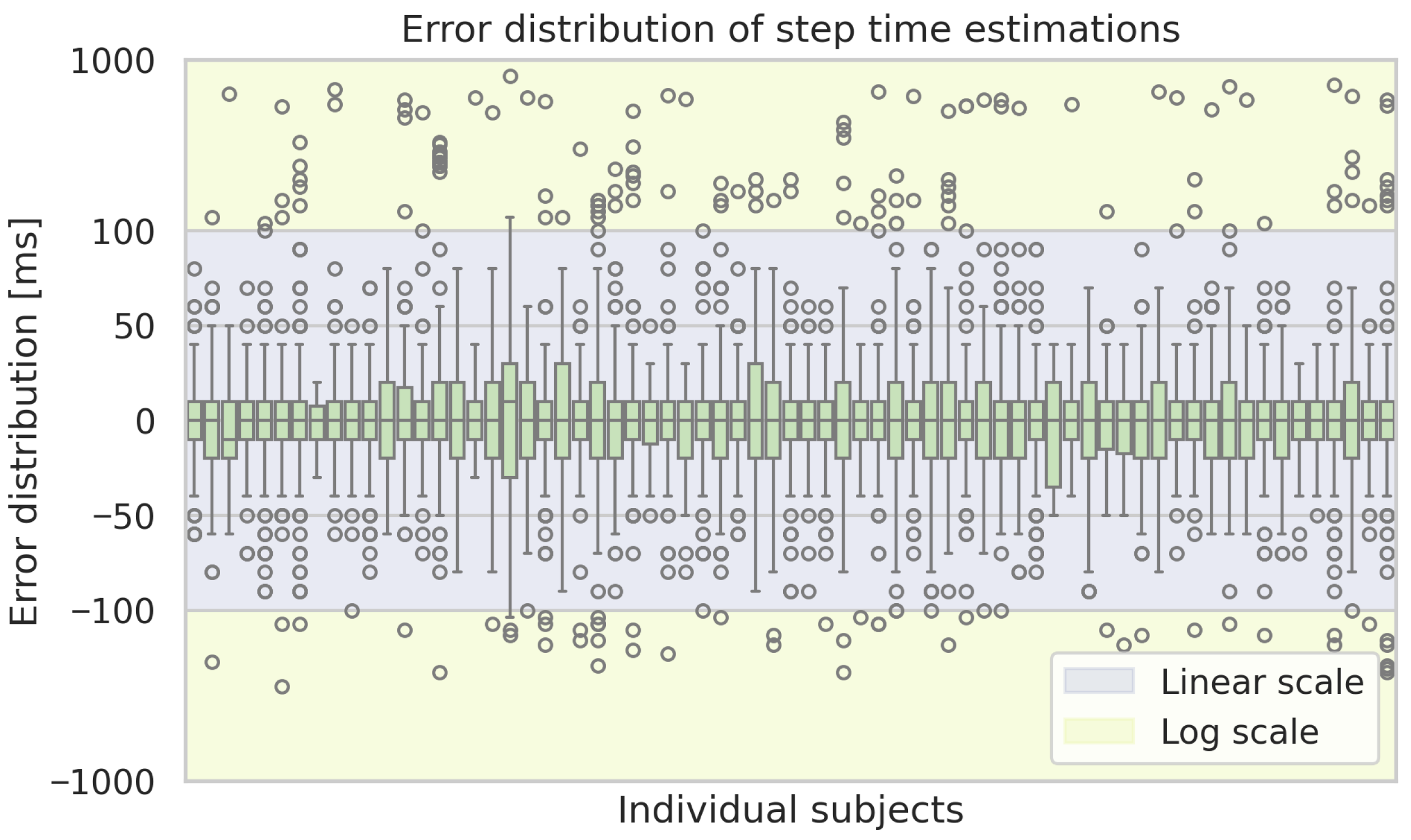

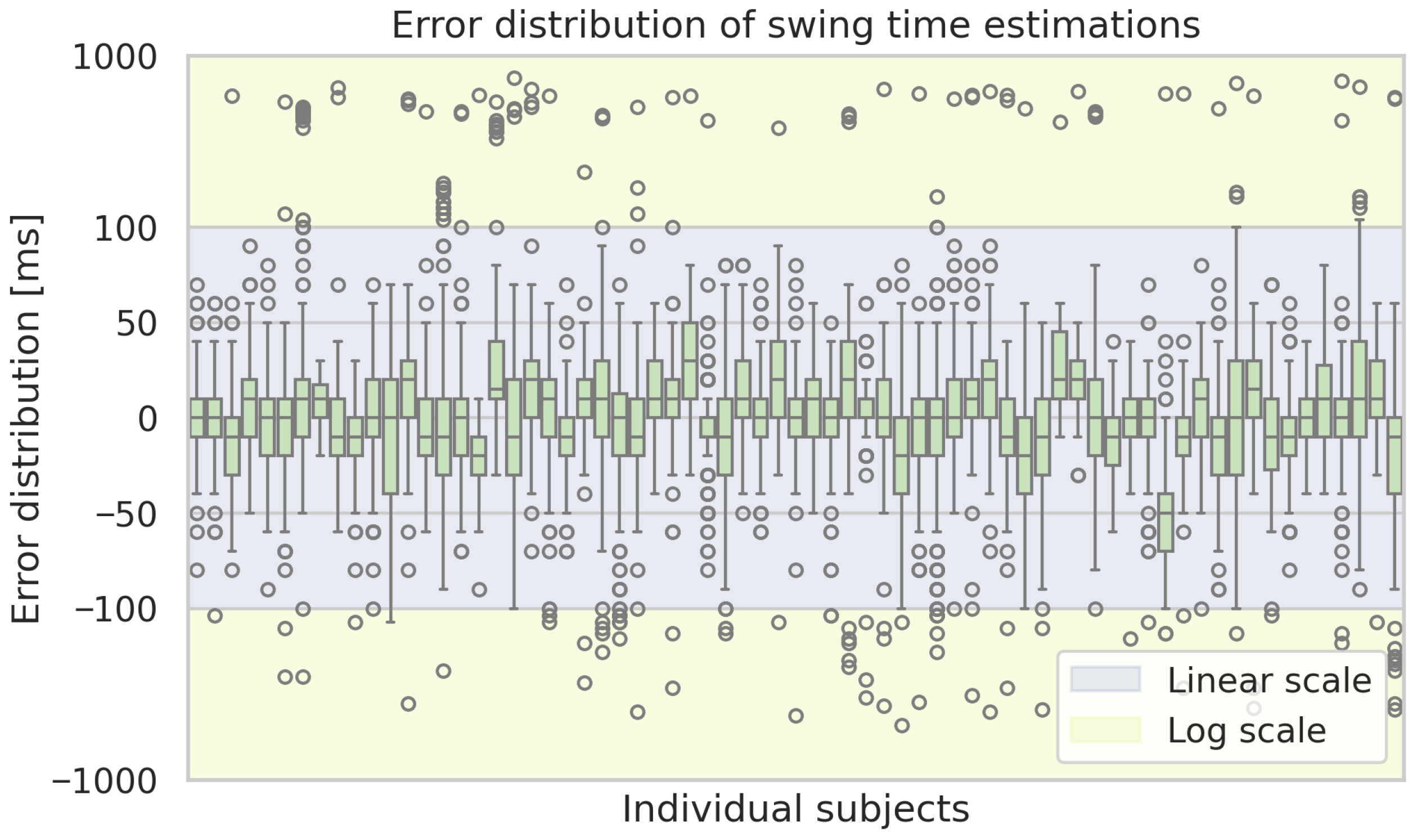

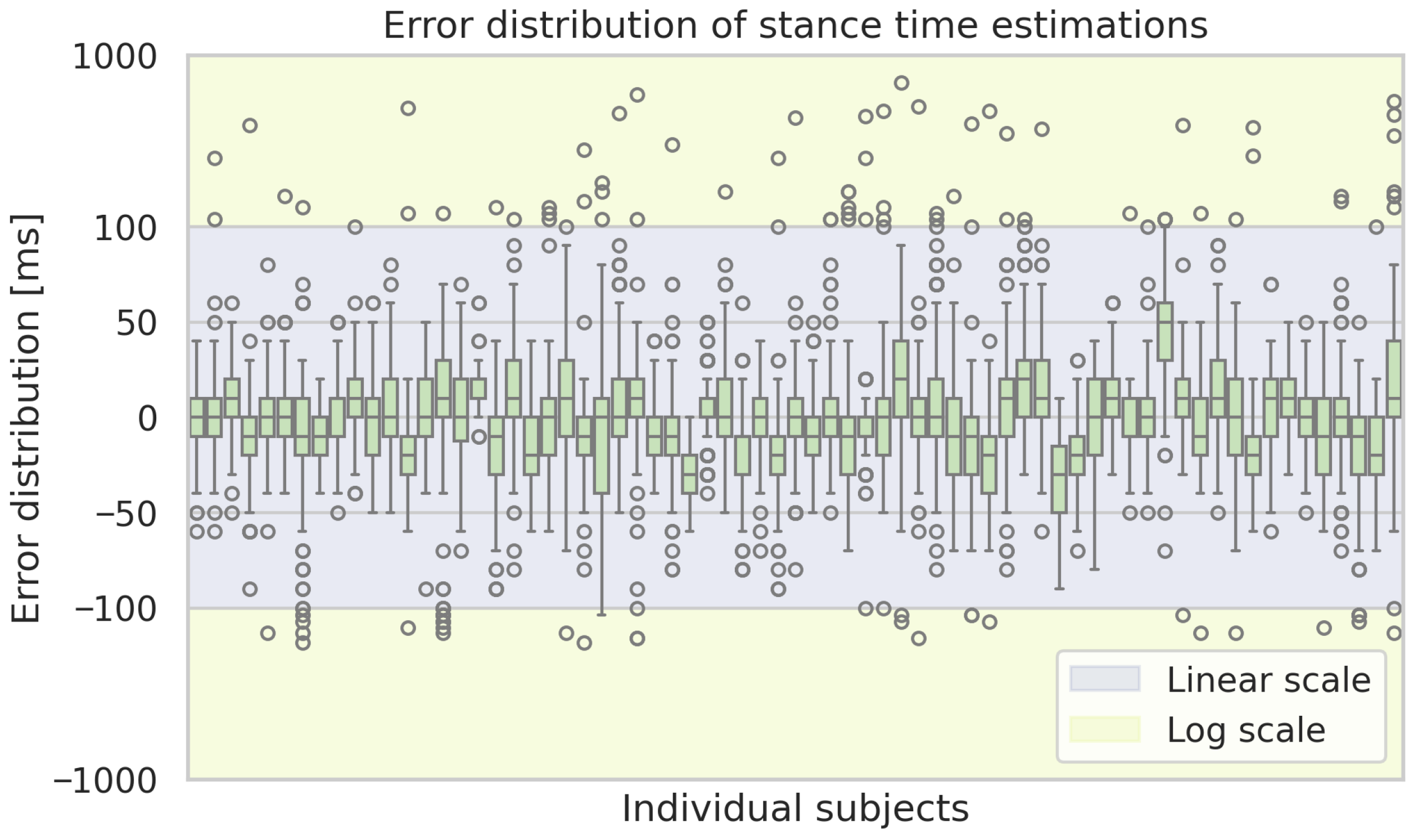

Figure A2 displays box plots of the step length error per subject. The same kind of plot for the remaining considered gait parameters is shown in Appendix A.

5. Discussion

By comparison of two ML-based methods for detecting gait events, the study found that the CNN-based approach performed best overall.

Continuing with the results from the ML-based approach, the Bland–Altman plot showed a mean bias of 0.09 cm between the GAITRite® system and the prediction model, indicating good overall agreement. Most differences (95%) fall within the limits of agreement (−9.08 cm to 9.27 cm). The random scatter of points around the mean difference line indicates no systematic error.

It is challenging to make direct comparisons between our results and related work due to the influence of various factors. These include differences in dataset size, reference systems, the course of the route (e.g., straight ahead, on a staircase, or going around a bend), evaluation metrics, or subject cohorts (e.g., healthy or diseased individuals, or other demographic characteristics). However, we do compare individual gait parameters by focusing on publications that implement gait analysis primarily on healthy subjects walking on a straight course.

Table 4 and Table 5 show the results of previous studies in comparison with the results of our best-performing CNN-based approach. Compared to these studies, our algorithm estimates the respective gait parameters with a low error; with respect to step length, for example, it exhibits the lowest mean offset and standard deviation among the considered alternatives. Nevertheless, the algorithm from Verbiest et al. [41] performs particularly well regarding stride length, achieving a better mean and standard deviation compared to our approach for step length. For stance and swing time, our algorithm demonstrates mean and standard deviation errors that are intermediate compared to the other algorithms under consideration. With regard to step time, our algorithm has the second-smallest mean offset, while Vavasour et al. [24] have the smallest standard deviation (1 ms compared with 31 ms for ours).

These differences can be partly attributed to variations in dataset characteristics and sensor placement across studies. Shank- and foot-mounted sensors capture more localized limb motion with higher acceleration amplitudes and less soft-tissue damping, which facilitates the precise identification of gait events and spatial parameters. In contrast, waist-mounted sensor data includes trunk motion and may be more sensitive to clothing movement and body composition, which may introduce additional variability. Moreover, study protocols differ in walk length, number of steps, and reference systems used, each of which can influence reported accuracy and comparability. Hence, some of the observed performance gaps likely reflect methodological and biomechanical differences rather than purely algorithmic limitations.

Our ML-based approach employs a windowing approach and requires a minimum time series length of 2.64 s in this instance. Consequently, the algorithm can be used in a streaming approach, whereby the results may be updated with each new time step in real time. In the initial phase, a minimum of 2.64 s of data history is required. The proposed algorithm is expandable to accommodate an arbitrary number of sensor channels. It is also possible to combine several sensors. In our case, we used one sensor, which resulted in six channels for the acceleration and angular velocity. To date, the algorithm has been developed for the purpose of detecting gait parameters during walking. During running and sprinting, the body experiences periods of no ground contact, complicating the detection of gait events using the binary representation of the gait cycle, which indicates single- and double-support phases. Nevertheless, an extension of the algorithm is planned to be developed to detect gait events during these gaits. This extension will take into account not only the single- and double-support phases but also a phase of no support. Currently, the algorithm is limited to identifying HS and TO events. Other gait events, such as heel-off and toe-on, are not detectable using our method.

While the proposed approach achieved high accuracy for gait event detection and strong agreement in step length estimation, performance on some derived temporal parameters was more modest, with only moderate correlations (0.55–0.57) for swing and stance times. This indicates that the method is best suited to the detection of gait events and step length, while secondary (derived) parameters may require further refinement. The moderate correlations for swing and stance times result from temporal inaccuracies at HS and TO detection that lead to error propagation in derived measures. Future work could address this by improving the event detection algorithm and incorporating more temporal context, for example, through transformer-based sequence models or temporal attention mechanisms.

Beyond this, our evaluation was limited to 69 healthy adults performing short, repeated 4 m walks in a laboratory setting, which restricts generalizability to pathological populations and real-world walking conditions. The 4 m length of the GAITRite® runway also required frequent turning, which could in principle influence gait patterns and IMU signals. However, only straight-ahead walking segments, as instructed to the participants, were recorded for analysis. The GAITRite® reference system itself has finite spatial resolution, which may introduce small errors. In addition, the algorithm requires 2.64 s of data history before producing first outputs, and accuracy may be affected by variability in waist sensor placement. Finally, the current event detection is restricted to HS and TO, while other gait events (e.g., heel-off, toe-on) remain undetected. The ICC of 0.35 indicates that about one-third of the variance in step length errors is explained by mean differences between subjects. This suggests that the model does not perform uniformly across participants, and an ICC closer to zero would be preferable for subject-invariant generalization.

6. Conclusions

Because waist-worn IMUs are unobtrusive, wearable, and cost-effective, they hold promise for use in continuous monitoring. While a significant amount of previous research has focused on determining gait parameters from IMU sensors placed on the foot or the shank, we introduced an algorithm for gait parameter estimation that achieves comparable accuracy to existing methods for foot and shank placements. Furthermore, the set of gait parameters derived from a single waist-worn sensor in our study addresses a gap in existing research. Notably, similar sensor setups have primarily concentrated on gait event detection and temporal parameters.

Our algorithm demonstrates convincing results when compared to existing works on shank-, foot-, or multi-sensor settings. These results are well within the range of corresponding common age- and disease-related variations. This could lead to earlier detection of gait abnormalities and improved management of gait-related disorders. Early detection of these conditions is crucial for timely intervention and improved patient outcomes. For instance, changes in gait patterns, such as shuffled, small steps, or lower gait velocities, are associated with increased fall risks and can be early indicators of neurological issues. The ability of our method to accurately estimate gait parameters from a waist-worn IMU sensor, being a minimally invasive approach, makes it particularly suitable for long-term continuous monitoring in everyday settings. Thus, we believe that the proposed system has the potential to impact the field of gait analysis by enabling continuous monitoring of gait patterns in everyday life settings.

Validating our algorithms on publicly accessible datasets would enhance comparability with other methods. However, to the best of our knowledge, there are currently no open-access datasets available that are comparable to ours regarding waist-worn IMU sensors with a GAITRite® reference sensor. Nevertheless, we have maximized comparability by primarily comparing our approach to studies that utilize a similar dataset (i.e., healthy adults walking in a straight line). We intend to further evaluate the algorithm’s suitability for everyday living conditions in an upcoming study covering subjects in ecological gait conditions. In addition, potential applications include home monitoring and rehabilitation, where long-term gait tracking could inform timely interventions and therapy adjustments. Practical limitations such as battery life during continuous monitoring and variability in belt placement must be considered; these can be mitigated via power-aware operation and robust orientation handling (e.g., frequent self-calibration via instructions). Finally, integration into real-time wearable systems may enable applications in rehabilitation, fall-risk screening, or mobility monitoring.

Author Contributions

Conceptualization, R.S. and J.T.; methodology, R.S. and J.T.; software, R.S.; validation, R.S.; formal analysis, R.S. and H.H.P.; investigation, R.S. and H.H.P.; resources, R.S. and H.H.P.; data curation, R.S. and H.H.P.; writing—original draft preparation, R.S. and H.H.P.; writing—review and editing, R.S., H.H.P., and S.F.; visualization, R.S.; supervision, A.H. and S.F.; project administration, A.H. and S.F.; funding acquisition, A.H. and S.F. All authors have read and agreed to the published version of the manuscript.

Funding

This research received funding from the German Federal Ministry of Education and Research (01ZZ2007).

Institutional Review Board Statement

This study was granted ethical approval by the ethical committee of the University of Oldenburg, with the approval code number Drs. 33/2016.

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study for the use of the data for scientific purposes.

Data Availability Statement

The code for the algorithms presented in this study is available upon reasonable request from the corresponding author.

Acknowledgments

The authors thank all participants of the study for their time and effort. We acknowledge financial support by the German Federal Ministry of Education and Research (01ZZ2007).

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Definition |
|---|---|
| IMU | Inertial Measurement Unit |
| HS | Heel Strike |
| TO | Toe Off |
| ML | Machine Learning |
| CNN | Convolutional Neural Network |
| LSTM | Long Short-Term Memory (network) |
| Seq2Seq | Sequence-to-Sequence (model) |
| LOOCV | Leave-One-Out Cross-Validation |
| SD | Standard Deviation |
| CC | Correlation Coefficient |
| MAE | Mean Absolute Error |
| ME | Mean Error |
| PD | Parkinson’s Disease |
| MS | Multiple Sclerosis |
| ALS | Amyotrophic Lateral Sclerosis |

Appendix A

Figure A1.

Exemplary time series of measurements from the sensor belt (IMU sensor) during a subject's walk over a distance of 4 m. The y-axis points in the vertical direction, which is why AccY shows an increased acceleration.

Table A1.

Statistics of the study cohort and the recorded data.

| | Parameter | Mean (Std) | Min/Max |
|---|---|---|---|
| Subjects | N = 69 | | |
| | Height [cm] | 173 (10) | 151/196 |
| | Weight [kg] | 77 (13) | 53/103 |
| | Age [years] | 57 (24) | 21/82 |
| | Walks per subject | 66 (16) | 28/92 |
| Walks | N = 3588 | | |
| | Walk duration [ms] | 3210 (725) | 2000/7100 |
| | Steps per walk | 4.6 (1.2) | 2/12 |
| Steps | N = 17,643 | | |
| | Step length [cm] | 59.22 (6.57) | 36.85/85.09 |
| | Stride time [ms] | 574 (69) | 410/2160 |
| | Stance time [ms] | 161 (37) | 20/850 |
| | Swing time [ms] | 416 (55) | 290/767 |
| | Cadence [1/min] | 105.7 (11.2) | 81.0/146.0 |
| | Speed [m/s] | 0.86 (0.10) | 0.63/1.20 |

Figure A2.

Box plots of the estimation errors of the ML-based approach for step lengths for each subject. Boxes show the interquartile range with medians, whiskers indicate the spread, and dots represent outliers.

Figure A3.

Box plots of the step time estimation errors of the ML-based approach for each subject. Boxes show the interquartile range with medians, whiskers indicate the spread, and dots represent outliers.

Figure A4.

Box plots of the swing time estimation errors of the ML-based approach for each subject. Boxes show the interquartile range with medians, whiskers indicate the spread, and dots represent outliers.

Figure A5.

Box plots of the stance time estimation errors of the ML-based approach for each subject. Boxes show the interquartile range with medians, whiskers indicate the spread, and dots represent outliers.

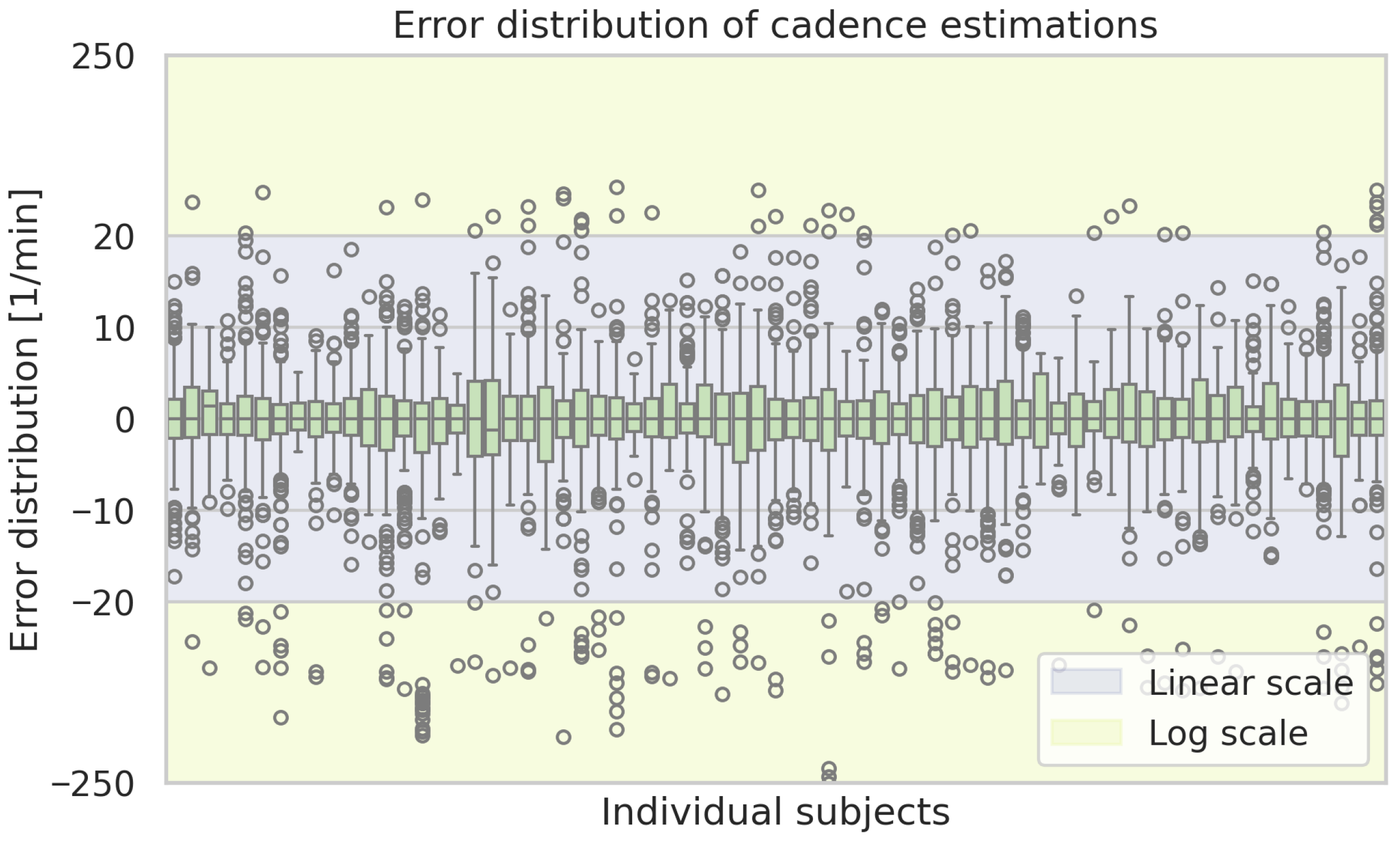

Figure A6.

Box plots of the cadence estimation errors of the ML-based approach for each subject. Boxes show the interquartile range with medians, whiskers indicate the spread, and dots represent outliers.

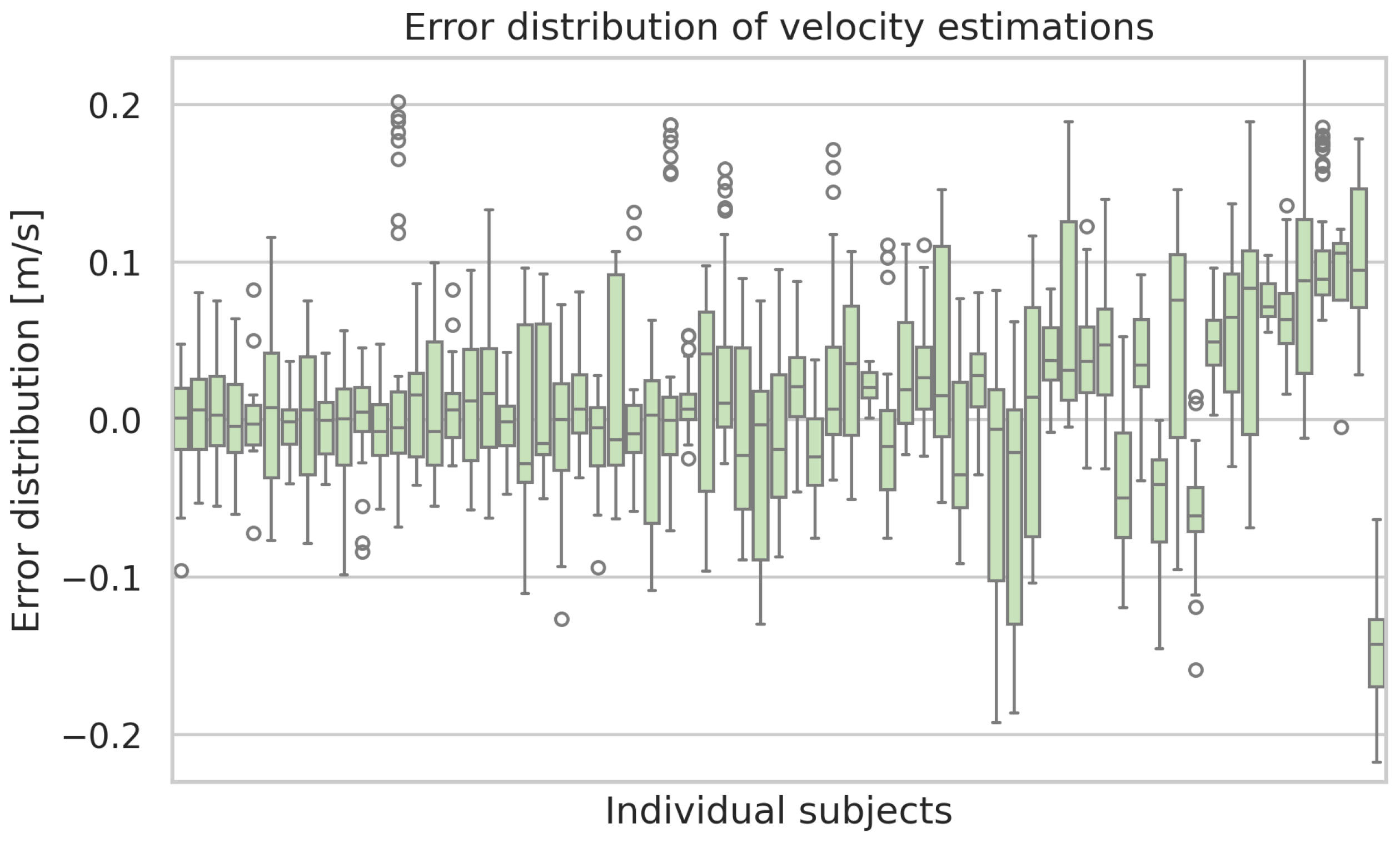

Figure A7.

Box plots of the velocity estimation errors of the ML-based approach for each subject. Boxes show the interquartile range with medians, whiskers indicate the spread, and dots represent outliers.

References

1. Verghese, J.; Holtzer, R.; Lipton, R.B.; Wang, C. Quantitative Gait Markers and Incident Fall Risk in Older Adults. J. Gerontol. Ser. A Biol. Sci. Med. Sci. 2009, 64A, 896–901.

2. Fudickar, S.; Kiselev, J.; Stolle, C.; Frenken, T.; Steinhagen-Thiessen, E.; Wegel, S.; Hein, A. Validation of a Laser Ranged Scanner-Based Detection of Spatio-Temporal Gait Parameters Using the aTUG Chair. Sensors 2021, 21, 1343.

3. Weiss, A.; Brozgol, M.; Dorfman, M.; Herman, T.; Shema, S.; Giladi, N.; Hausdorff, J.M. Does the Evaluation of Gait Quality During Daily Life Provide Insight Into Fall Risk? A Novel Approach Using 3-Day Accelerometer Recordings. Neurorehabilit. Neural Repair 2013, 27, 742–752.

4. Studenski, S. Gait Speed and Survival in Older Adults. JAMA 2011, 305, 50–58.

5. Pistacchi, M.; Gioulis, M.; Sanson, F.; Giovannini, E.D.; Filippi, G.; Rossetto, F.; Marsala, S.Z. Gait analysis and clinical correlations in early Parkinson’s disease. Funct. Neurol. 2017, 32, 28–34.

6. Wilson, J.; Alcock, L.; Yarnall, A.J.; Lord, S.; Lawson, R.A.; Morris, R.; Taylor, J.P.; Burn, D.J.; Rochester, L.; Galna, B. Gait Progression Over 6 Years in Parkinson’s Disease: Effects of Age, Medication, and Pathology. Front. Aging Neurosci. 2020, 12, 577435.

7. Chien, S.Y.; Chuang, M.C.; Chen, I.P. Why People Do Not Attend Health Screenings: Factors That Influence Willingness to Participate in Health Screenings for Chronic Diseases. Int. J. Environ. Res. Public Health 2020, 17, 3495.

8. Vítečková, S.; Horáková, H.; Poláková, K.; Krupička, R.; Růžička, E.; Brožová, H. Agreement between the GAITRite® System and the Wearable Sensor BTS G-Walk® for measurement of gait parameters in healthy adults and Parkinson’s disease patients. PeerJ 2020, 8, e8835.

9. Webster, K.E.; Wittwer, J.E.; Feller, J.A. Validity of the GAITRite® walkway system for the measurement of averaged and individual step parameters of gait. Gait Posture 2005, 22, 317–321.

10. Steinert, A.; Sattler, I.; Otte, K.; Röhling, H.; Mansow-Model, S.; Müller-Werdan, U. Using New Camera-Based Technologies for Gait Analysis in Older Adults in Comparison to the Established GAITRite System. Sensors 2019, 20, 125.

11. Büker, L.C.; Zuber, F.; Hein, A.; Fudickar, S. HRDepthNet: Depth Image-Based Marker-Less Tracking of Body Joints. Sensors 2021, 21, 1356.

12. Stenum, J.; Rossi, C.; Roemmich, R.T. Two-dimensional video-based analysis of human gait using pose estimation. PLoS Comput. Biol. 2021, 17, e1008935.

13. Dunn, M.; Kennerley, A.; Murrell-Smith, Z.; Webster, K.; Middleton, K.; Wheat, J. Application of video frame interpolation to markerless, single-camera gait analysis. Sports Eng. 2023, 26, 22.

14. Prasanth, H.; Caban, M.; Keller, U.; Courtine, G.; Ijspeert, A.; Vallery, H.; von Zitzewitz, J. Wearable Sensor-Based Real-Time Gait Detection: A Systematic Review. Sensors 2021, 21, 2727.

15. Ullrich, M.; Roth, N.; Kuderle, A.; Richer, R.; Gladow, T.; Gasner, H.; Marxreiter, F.; Klucken, J.; Eskofier, B.M.; Kluge, F. Fall Risk Prediction in Parkinson’s Disease Using Real-World Inertial Sensor Gait Data. IEEE J. Biomed. Health Inform. 2023, 27, 319–328.

16. Kirk, C.; Küderle, A.; Micó-Amigo, M.E.; Bonci, T.; Paraschiv-Ionescu, A.; Ullrich, M.; Soltani, A.; Gazit, E.; Salis, F.; Alcock, L.; et al. Mobilise-D insights to estimate real-world walking speed in multiple conditions with a wearable device. Sci. Rep. 2024, 14, 1754.

17. Lockhart, T.E.; Soangra, R.; Yoon, H.; Wu, T.; Frames, C.W.; Weaver, R.; Roberto, K.A. Prediction of fall risk among community-dwelling older adults using a wearable system. Sci. Rep. 2021, 11, 20976.

18. Tsakanikas, V.; Ntanis, A.; Rigas, G.; Androutsos, C.; Boucharas, D.; Tachos, N.; Skaramagkas, V.; Chatzaki, C.; Kefalopoulou, Z.; Tsiknakis, M.; et al. Evaluating Gait Impairment in Parkinson’s Disease from Instrumented Insole and IMU Sensor Data. Sensors 2023, 23, 3902.

19. Voisard, C.; de l’Escalopier, N.; Ricard, D.; Oudre, L. Automatic gait events detection with inertial measurement units: Healthy subjects and moderate to severe impaired patients. J. NeuroEng. Rehabil. 2024, 21, 104.

20. Felius, R.A.W.; Geerars, M.; Bruijn, S.M.; van Dieën, J.H.; Wouda, N.C.; Punt, M. Reliability of IMU-Based Gait Assessment in Clinical Stroke Rehabilitation. Sensors 2022, 22, 908.

21. Bonanno, M.; Ielo, A.; De Pasquale, P.; Celesti, A.; De Nunzio, A.M.; Quartarone, A.; Calabrò, R.S. Use of Wearable Sensors to Assess Fall Risk in Neurological Disorders: Systematic Review. JMIR mHealth uHealth 2025, 13, e67265.

22. Mobbs, R.J.; Perring, J.; Raj, S.M.; Maharaj, M.; Yoong, N.K.M.; Sy, L.W.; Fonseka, R.D.; Natarajan, P.; Choy, W.J. Gait metrics analysis utilizing single-point inertial measurement units: A systematic review. mHealth 2022, 8, 9.

23. Keogh, A.; Argent, R.; Anderson, A.; Caulfield, B.; Johnston, W. Assessing the usability of wearable devices to measure gait and physical activity in chronic conditions: A systematic review. J. Neuroeng. Rehabil. 2021, 18, 138.

24. Vavasour, G.; Giggins, O.M.; Flood, M.W.; Doyle, J.; Doheny, E.; Kelly, D. Waist—What? Can a single sensor positioned at the waist detect parameters of gait at a speed and distance reflective of older adults’ activity? PLoS ONE 2023, 18, e0286707.

25. Celik, Y.; Stuart, S.; Woo, W.L.; Godfrey, A. Wearable Inertial Gait Algorithms: Impact of Wear Location and Environment in Healthy and Parkinson’s Populations. Sensors 2021, 21, 6476.

26. Cicirelli, G.; Impedovo, D.; Dentamaro, V.; Marani, R.; Pirlo, G.; D’Orazio, T.R. Human Gait Analysis in Neurodegenerative Diseases: A Review. IEEE J. Biomed. Health Inform. 2022, 26, 229–242.

27. Romijnders, R.; Warmerdam, E.; Hansen, C.; Schmidt, G.; Maetzler, W. A Deep Learning Approach for Gait Event Detection from a Single Shank-Worn IMU: Validation in Healthy and Neurological Cohorts. Sensors 2022, 22, 3859.

28. Dehzangi, O.; Taherisadr, M.; ChangalVala, R. IMU-Based Gait Recognition Using Convolutional Neural Networks and Multi-Sensor Fusion. Sensors 2017, 17, 2735.

29. Moura Coelho, R.; Gouveia, J.; Botto, M.A.; Krebs, H.I.; Martins, J. Real-time walking gait terrain classification from foot-mounted Inertial Measurement Unit using Convolutional Long Short-Term Memory neural network. Expert Syst. Appl. 2022, 203, 117306.

30. Arshad, M.Z.; Jamsrandorj, A.; Kim, J.; Mun, K.R. Gait Events Prediction Using Hybrid CNN-RNN-Based Deep Learning Models through a Single Waist-Worn Wearable Sensor. Sensors 2022, 22, 8226.

31. Sharifi Renani, M.; Myers, C.A.; Zandie, R.; Mahoor, M.H.; Davidson, B.S.; Clary, C.W. Deep Learning in Gait Parameter Prediction for OA and TKA Patients Wearing IMU Sensors. Sensors 2020, 20, 5553.

32. Hannink, J.; Kautz, T.; Pasluosta, C.F.; Gasmann, K.G.; Klucken, J.; Eskofier, B.M. Sensor-Based Gait Parameter Extraction with Deep Convolutional Neural Networks. IEEE J. Biomed. Health Inform. 2017, 21, 85–93.

33. Skvortsov, D.; Chindilov, D.; Painev, N.; Rozov, A. Heel-Strike and Toe-Off Detection Algorithm Based on Deep Neural Networks Using Shank-Worn Inertial Sensors for Clinical Purpose. J. Sensors 2023, 2023, 1–9.

34. Fudickar, S.; Stolle, C.; Volkening, N.; Hein, A. Scanning Laser Rangefinders for the Unobtrusive Monitoring of Gait Parameters in Unsupervised Settings. Sensors 2018, 18, 3424.

35. Fudickar, S.; Kiselev, J.; Frenken, T.; Wegel, S.; Dimitrowska, S.; Steinhagen-Thiessen, E.; Hein, A. Validation of the ambient TUG chair with light barriers and force sensors in a clinical trial. Assist. Technol. 2018, 32, 1–8.

36. McDonough, A.L.; Batavia, M.; Chen, F.C.; Kwon, S.; Ziai, J. The validity and reliability of the GAITRite system’s measurements: A preliminary evaluation. Arch. Phys. Med. Rehabil. 2001, 82, 419–425.

37. Loshchilov, I.; Hutter, F. Decoupled Weight Decay Regularization. arXiv 2017, arXiv:1711.05101.

38. Cuturi, M.; Blondel, M. Soft-DTW: A differentiable loss function for time-series. In Proceedings of the 34th International Conference on Machine Learning, ICML’17, Sydney, Australia, 6–11 August 2017; Volume 70, pp. 894–903.

39. Hintze, J.L.; Nelson, R.D. Violin Plots: A Box Plot-Density Trace Synergism. Am. Stat. 1998, 52, 181–184.

40. Martin Bland, J.; Altman, D. Statistical Methods for Assessing Agreement Between Two Methods of Clinical Measurement. Lancet 1986, 327, 307–310.

41. Verbiest, J.R.; Bonnechère, B.; Saeys, W.; Van de Walle, P.; Truijen, S.; Meyns, P. Gait Stride Length Estimation Using Embedded Machine Learning. Sensors 2023, 23, 7166.

42. Storm, F.A.; Buckley, C.J.; Mazzà, C. Gait event detection in laboratory and real life settings: Accuracy of ankle and waist sensor based methods. Gait Posture 2016, 50, 42–46.

43. Ducharme, S.W.; Lim, J.; Busa, M.A.; Aguiar, E.J.; Moore, C.C.; Schuna, J.M.; Barreira, T.V.; Staudenmayer, J.; Chipkin, S.R.; Tudor-Locke, C. A Transparent Method for Step Detection Using an Acceleration Threshold. J. Meas. Phys. Behav. 2021, 4, 311–320.

44. Teufl, W.; Lorenz, M.; Miezal, M.; Taetz, B.; Fröhlich, M.; Bleser, G. Towards Inertial Sensor Based Mobile Gait Analysis: Event-Detection and Spatio-Temporal Parameters. Sensors 2018, 19, 38.

Figure 1.

Schematic overview of the data that is used in this work. The reference data comes from GAITRite®, while the algorithms make estimations based on the measurements of the IMU sensor.

Figure 2.

Representation of the human gait cycle through a binary time series. A value of 1 means that the step is in the double-support phase at the current time, while a value of 0 indicates single-support. This representation is used as a trainable label. The transitions between the two phases mark HS or TO events.
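
The label encoding described in the Figure 2 caption can be illustrated with a minimal sketch. It assumes that a 0 to 1 transition (single- to double-support) marks an HS, as stated in the Figure 5 caption, and that the reverse 1 to 0 transition marks a TO. The function name `detect_gait_events` and the 100 Hz sampling rate are hypothetical choices for illustration, not taken from the study.

```python
import numpy as np

def detect_gait_events(double_support, sampling_rate_hz):
    """Return (hs_times_ms, to_times_ms) from a binary double-support series."""
    labels = np.asarray(double_support).astype(int)
    diff = np.diff(labels)
    # A 0 -> 1 transition (single- to double-support) marks a heel strike.
    hs_idx = np.flatnonzero(diff == 1) + 1
    # A 1 -> 0 transition (double- to single-support) is assumed to mark a toe off.
    to_idx = np.flatnonzero(diff == -1) + 1
    ms_per_sample = 1000.0 / sampling_rate_hz
    return hs_idx * ms_per_sample, to_idx * ms_per_sample

# Toy label sequence; the 100 Hz sampling rate is an assumption for this example.
labels = [0, 0, 1, 1, 0, 0, 0, 1, 1, 0]
hs_ms, to_ms = detect_gait_events(labels, sampling_rate_hz=100)
print(hs_ms, to_ms)
```

Event times are reported at the first sample of the new phase; a different convention (for example, the midpoint between the two samples) would shift all events by half a sampling interval.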

Figure 3.

Overview of our windowing approach to sampling the time series (1), for same-length inputs (2), and for the CNN (3). To create a final estimation, overlapping fixed-length windows (264 time steps) with median filtering are used to derive event predictions (4–6).
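
One possible reading of the windowing scheme in the Figure 3 caption is sketched below: the recording is cut into overlapping windows of 264 time steps, a model predicts a value for every time step of each window, and the predictions that overlap at a given time step are combined by their median. The stride of 66 samples, the six input channels, and the toy threshold "model" are hypothetical placeholders; the actual stride and aggregation details are not given in this section.

```python
import numpy as np

WINDOW = 264  # window length in time steps, as stated in the Figure 3 caption

def predict_with_overlap(signal, model, window=WINDOW, stride=66):
    """Median-aggregate per-time-step predictions over overlapping windows.

    signal: array of shape (T, channels) with T >= window.
    model:  callable mapping a (window, channels) array to a (window,) array.
    stride: hop between window starts (hypothetical value, not from the paper).
    """
    signal = np.asarray(signal, dtype=float)
    n = signal.shape[0]
    if n < window:
        raise ValueError("signal shorter than one window; pad it first")
    if not 0 < stride <= window:
        raise ValueError("stride must be in (0, window] to avoid gaps")
    starts = list(range(0, n - window + 1, stride))
    if starts[-1] != n - window:
        starts.append(n - window)  # make sure the tail of the signal is covered
    # One row per window; NaN where a window does not cover a time step.
    stacked = np.full((len(starts), n), np.nan)
    for row, start in enumerate(starts):
        stacked[row, start:start + window] = model(signal[start:start + window])
    # Median over all windows that cover each time step.
    return np.nanmedian(stacked, axis=0)

# Toy usage: random data and a stand-in model that thresholds the first channel.
rng = np.random.default_rng(0)
toy_signal = rng.standard_normal((400, 6))
toy_model = lambda w: (w[:, 0] > 0).astype(float)
smoothed = predict_with_overlap(toy_signal, toy_model)
```

With a real network, the windows would produce slightly different predictions for the same time step, and the median suppresses isolated disagreements before events are extracted.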

Figure 4.

Two neural network architectures. (A) A Seq2Seq model using 1-dimensional convolutional and deconvolutional kernels, e.g., a convolutional autoencoder. Architecture (B) is based on an LSTM with time-distributed linear layers as encoder and decoder.

Figure 5.

Combined HS detection and step length regression using a CNN or an LSTM as a Seq2Seq model with a windowing approach. The time series data from the sensor belt are fed as input (1) to the neural network (2a), which estimates whether each time step lies within a single- or double-support phase (3a). Based on this, the HS are identified as phase changes from the single-support to the double-support phase (4a). The neural network for step length estimation uses the same input data (2b) and estimates a step length for each time step. The timestamps of the HS estimations are then used to average the step length estimations over the section between neighboring events (3b). These averages are then used as the final step length estimations (4b).
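
Steps (3b) and (4b) of the Figure 5 caption can be sketched as follows: given a per-time-step step length series and the indices of the detected HS events, the final estimate for each step is the mean of the series between two neighboring HS. The function name `step_lengths_from_series` and the toy values are hypothetical; the sketch assumes the HS indices are distinct and given in samples.

```python
import numpy as np

def step_lengths_from_series(per_step_estimates, hs_indices):
    """Average a per-time-step step length series between neighboring HS events."""
    series = np.asarray(per_step_estimates, dtype=float)
    hs = np.sort(np.asarray(hs_indices, dtype=int))
    # One averaged value per pair of consecutive heel strikes.
    return np.array([series[a:b].mean() for a, b in zip(hs[:-1], hs[1:])])

# Toy per-time-step estimates in cm and three detected HS events (sample indices).
series = [58, 60, 62, 60, 64, 66, 65, 65, 70, 70]
hs = [0, 4, 8]
lengths = step_lengths_from_series(series, hs)
print(lengths)
```

Averaging over the whole inter-event section makes the final value less sensitive to noise in individual time steps than reading off a single sample at the HS.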

Figure 6.

Distribution of absolute errors of estimated step lengths of the CNN-based approach, segmented according to age groups, sex, and types of walk.

Figure 7.

Bland–Altman plot comparing the step length estimations of the ML-based approach with the reference values, showing the mean difference (Mean diff.) and the standard deviation (SD).
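
The quantities behind a Bland–Altman plot such as Figure 7 can be computed with a few lines; the following is a minimal sketch, not the plotting code used in the study. It assumes paired estimates and reference values, differences defined as estimate minus reference, the sample standard deviation, and the conventional multiplier of 1.96 for the limits of agreement; the caption itself only names the mean difference and the SD.

```python
import numpy as np

def bland_altman(estimates, references, k=1.96):
    """Return pair means, differences, mean difference, and limits of agreement."""
    est = np.asarray(estimates, dtype=float)
    ref = np.asarray(references, dtype=float)
    diff = est - ref                 # y-axis of the plot
    pair_mean = (est + ref) / 2.0    # x-axis of the plot
    mean_diff = diff.mean()
    sd = diff.std(ddof=1)            # sample standard deviation (assumption)
    limits = (mean_diff - k * sd, mean_diff + k * sd)
    return pair_mean, diff, mean_diff, limits

# Toy step lengths in cm (made-up values for illustration only).
est_cm = [60, 62, 58, 61]
ref_cm = [59, 63, 59, 60]
pair_mean, diff, mean_diff, limits = bland_altman(est_cm, ref_cm)
print(mean_diff, limits)
```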

Table 1.

Accuracy, precision, recall, and F1-score of the HS and TO detections. The better performance is marked in bold.

| Metric | Method | HS | TO |
|---|---|---|---|
| Accuracy | LSTM | 99.03% | 98.54% |
| Accuracy | CNN | 98.94% | 98.65% |
| Precision | LSTM | 99.05% | 98.92% |
| Precision | CNN | 98.93% | 98.76% |
| Recall | LSTM | 99.98% | 99.61% |
| Recall | CNN | 99.99% | 99.80% |
| F1-score | LSTM | 99.51% | 99.26% |
| F1-score | CNN | 99.46% | 99.28% |

Table 2.

Temporal deviation between estimated gait event timings and the true event timings, which is quantified by the mean absolute error (MAE) and mean error (ME) with standard deviation (SD). The better performance is marked in bold.

| Gait Event | Method | MAE | ME ± SD |
|---|---|---|---|
| HS [ms] | LSTM | 27.45 | −2.34 ± 37.17 |
| HS [ms] | CNN | 20.43 | −1.45 ± 31.30 |
| TO [ms] | LSTM | 26.89 | −2.52 ± 40.82 |
| TO [ms] | CNN | 21.75 | −2.01 ± 36.56 |
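
The error measures reported in Table 2 can be reproduced from matched event timings as in the following minimal sketch. It assumes that each estimated event has already been paired with its reference event, that the error is defined as estimate minus reference, and that the SD is the sample standard deviation of the signed errors; the sign convention and the pairing procedure are assumptions, and the toy timings are made up.

```python
import numpy as np

def timing_errors(estimated_ms, reference_ms):
    """Return MAE, ME, and SD of the signed timing errors (all in ms)."""
    err = np.asarray(estimated_ms, dtype=float) - np.asarray(reference_ms, dtype=float)
    return {
        "MAE": float(np.abs(err).mean()),  # mean absolute error
        "ME": float(err.mean()),           # mean (signed) error, i.e., bias
        "SD": float(err.std(ddof=1)),      # spread of the signed errors
    }

# Toy example: three matched heel strikes, timings in ms.
metrics = timing_errors([100, 620, 1190], [110, 600, 1200])
print(metrics)
```

An ME close to zero combined with a larger MAE, as in Table 2, indicates that early and late detections largely cancel out rather than that individual events are detected without error.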

Table 3.

Gait parameter estimation errors with mean error (ME), standard deviation (SD), and the Pearson correlation coefficient (CC) for the CNN. All results are highly significant (p ≪ 0.001).

| Gait Parameter | ME ± SD | CC |
|---|---|---|
| Step length [cm] | 0.09 ± 4.69 | 0.78 |
| Cadence [1/min] | −0.266 ± 7.736 | 0.79 |
| Velocity [m/s] | 0.0047 ± 0.069 | 0.75 |
| Stride time [ms] | −1.11 ± 43.48 | 0.79 |
| Step time [ms] | 0.88 ± 31.18 | 0.57 |
| Swing time [ms] | 1.01 ± 50.01 | 0.55 |
| Stance time [ms] | 0.88 ± 31.18 | 0.57 |

Table 4.

Comparison of results (mean ± standard deviation) from previous studies with our ML-based approach—temporal gait parameters. For comparison, we considered only studies processing data from healthy subjects walking in a straight line.

| Sensor Placement | Author | Stride Time [ms] | Step Time [ms] | Stance Time [ms] | Swing Time [ms] | Cadence [1/min] |
|---|---|---|---|---|---|---|
| Waist | Ours | −1 ± 43 | 1 ± 31 | 1 ± 31 | 1 ± 50 | −0.27 ± 7.74 |
| Waist | [42] | 6 ± 1 | 9 ± 3 | 13 ± 12 | - | - |
| Waist | [24] | 0.2 ± 2 | 0.1 ± 1 | - | - | - |
| Waist | [43] | - | - | - | - | 0.33 ± 1.9 |
| Shank | [42] | 6 ± 2 | 9 ± 4 | 44 ± 13 | - | - |
| Shank | [31] | 0 ± 30 | - | −10 ± 40 | 0 ± 30 | 0.6 ± 5.4 |
| Shank | [33] | −21 ± 91 | −8 ± 41 | - | - | 0.589 ± 1.144 |
| Feet | [31] | −10 ± 40 | - | −10 ± 30 | −10 ± 30 | 1.2 ± 6 |
| Feet | [32] | 0 ± 70 | - | 0 ± 70 | 0 ± 50 | - |
| Wrist | [43] | - | - | - | - | −0.07 ± 5.17 |
| Multiple pos. | [44] | 2 ± 20 | 2 ± 30 | −8 ± 30 | 10 ± 30 | −0.296 ± 6.05 |

Table 5.

Comparison of results from previous studies with our ML-based approach (ML-Seq2Seq): spatial and spatio-temporal gait parameters. For comparison, we considered only studies processing data from healthy subjects walking in a straight line.

| Sensor Placement | Author | Step Length [cm] | Stride Length [cm] | Step Width [cm] | Velocity [m/s] |
|---|---|---|---|---|---|
| Waist | Ours | 0.09 ± 4.69 | - | - | 0.005 ± 0.069 |
| Shank | [42] | - | - | - | - |
| Shank | [31] | −0.6 ± 5.6 | 0.4 ± 9.7 | 0.85 ± 4.6 | - |
| Shank | [33] | - | - | - | - |
| Foot/Feet | [31] | −1.7 ± 5.2 | −3.0 ± 8.7 | 1.1 ± 5.1 | - |
| Foot/Feet | [32] | - | −0.15 ± 6.09 | −0.09 ± 4.22 | - |
| Foot/Feet | [41] | - | 0.07 ± 4.3 | - | - |
| Multiple pos. | [44] | 0.6 ± 8 | 0.5 ± 7 | 0.8 ± 6 | 0.003 ± 0.05 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).