An Intelligent Joint Identification Method and Calculation of Joint Attitudes in Underground Mines Based on Smartphone Image Acquisition

Abstract

1. Introduction

- (1) Smartphone images + RC-Unet enable pixel-accurate joint segmentation.

- (2) CBAM + ASPP boosts thin-joint perception under uneven lighting.

- (3) Image cutting + image rotation yields a large, balanced underground dataset.

- (4) PCP converts 2D masks to 3D attitudes with ≈degree-level accuracy.

- (5) Lightweight pipeline deploys easily in underground environments.
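
The cutting-plus-rotation augmentation named in the highlights can be sketched as follows. This is a minimal illustration only: the tile size, the restriction to 90° steps, and the function names `cut_tiles`, `rotate90`, and `augment` are assumptions made here, not values or code from the paper. Images and label masks are plain nested lists so the sketch has no dependencies.

```python
from typing import List, Tuple

Image = List[List[int]]  # grayscale image or binary mask as rows of pixel values


def cut_tiles(image: Image, tile: int) -> List[Image]:
    """Cut an image into non-overlapping tile x tile patches (edge remainders are dropped)."""
    rows, cols = len(image), len(image[0])
    tiles = []
    for r in range(0, rows - tile + 1, tile):
        for c in range(0, cols - tile + 1, tile):
            tiles.append([row[c:c + tile] for row in image[r:r + tile]])
    return tiles


def rotate90(image: Image) -> Image:
    """Rotate an image 90 degrees clockwise."""
    return [list(row) for row in zip(*image[::-1])]


def augment(image: Image, mask: Image, tile: int) -> List[Tuple[Image, Image]]:
    """Return (image_patch, mask_patch) pairs for every tile at 0, 90, 180 and 270 degrees.

    The image and its label mask are always transformed together so that
    each augmented patch keeps a pixel-aligned label.
    """
    pairs = []
    for img_t, msk_t in zip(cut_tiles(image, tile), cut_tiles(mask, tile)):
        for _ in range(4):
            pairs.append((img_t, msk_t))
            img_t, msk_t = rotate90(img_t), rotate90(msk_t)
    return pairs


# Hypothetical 4 x 4 image cut into 2 x 2 tiles: 4 tiles x 4 rotations.
demo = [[r * 4 + c for c in range(4)] for r in range(4)]
print(len(augment(demo, demo, tile=2)))
```

Applying the same transform to the image and its mask is the one design point that matters here; any cutting or rotation scheme that breaks that pairing would corrupt the labels.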

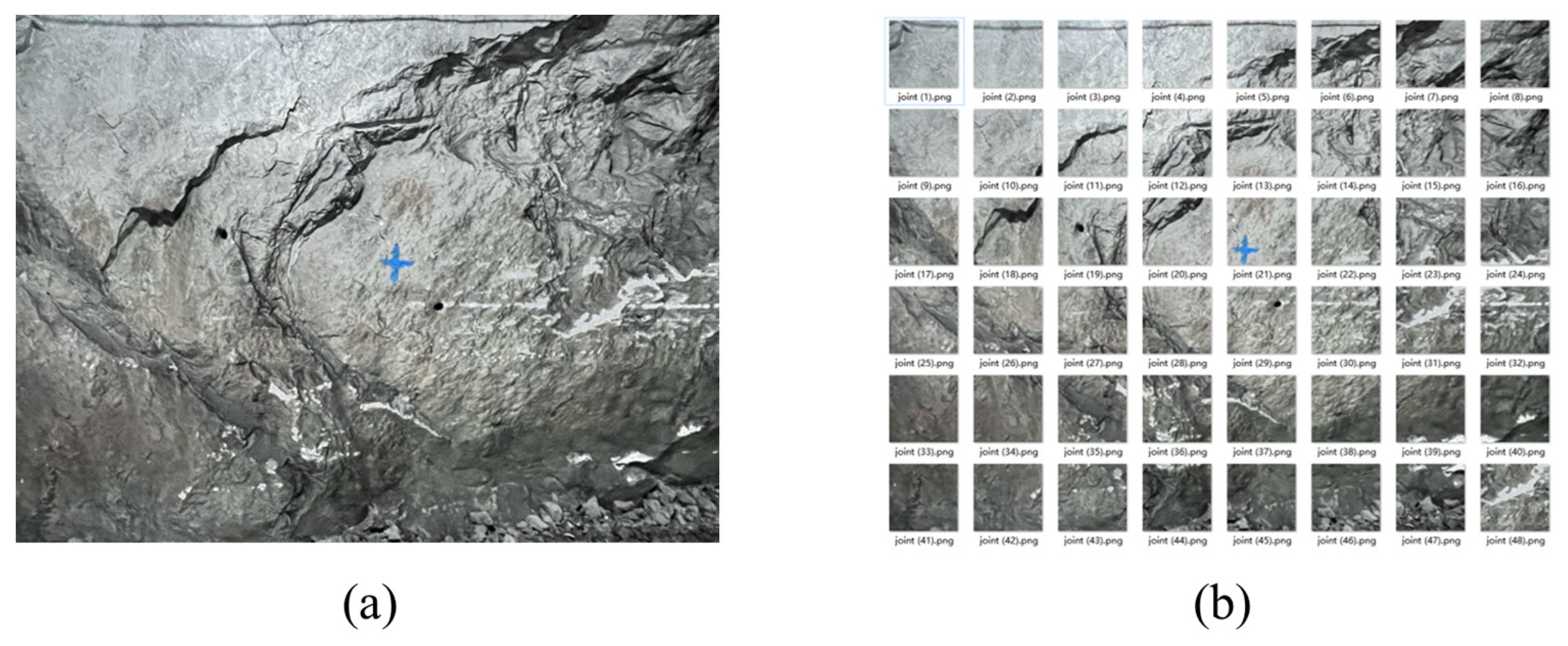

2. Joint Information Acquisition of Rocks Based on Smartphone Image Acquisition

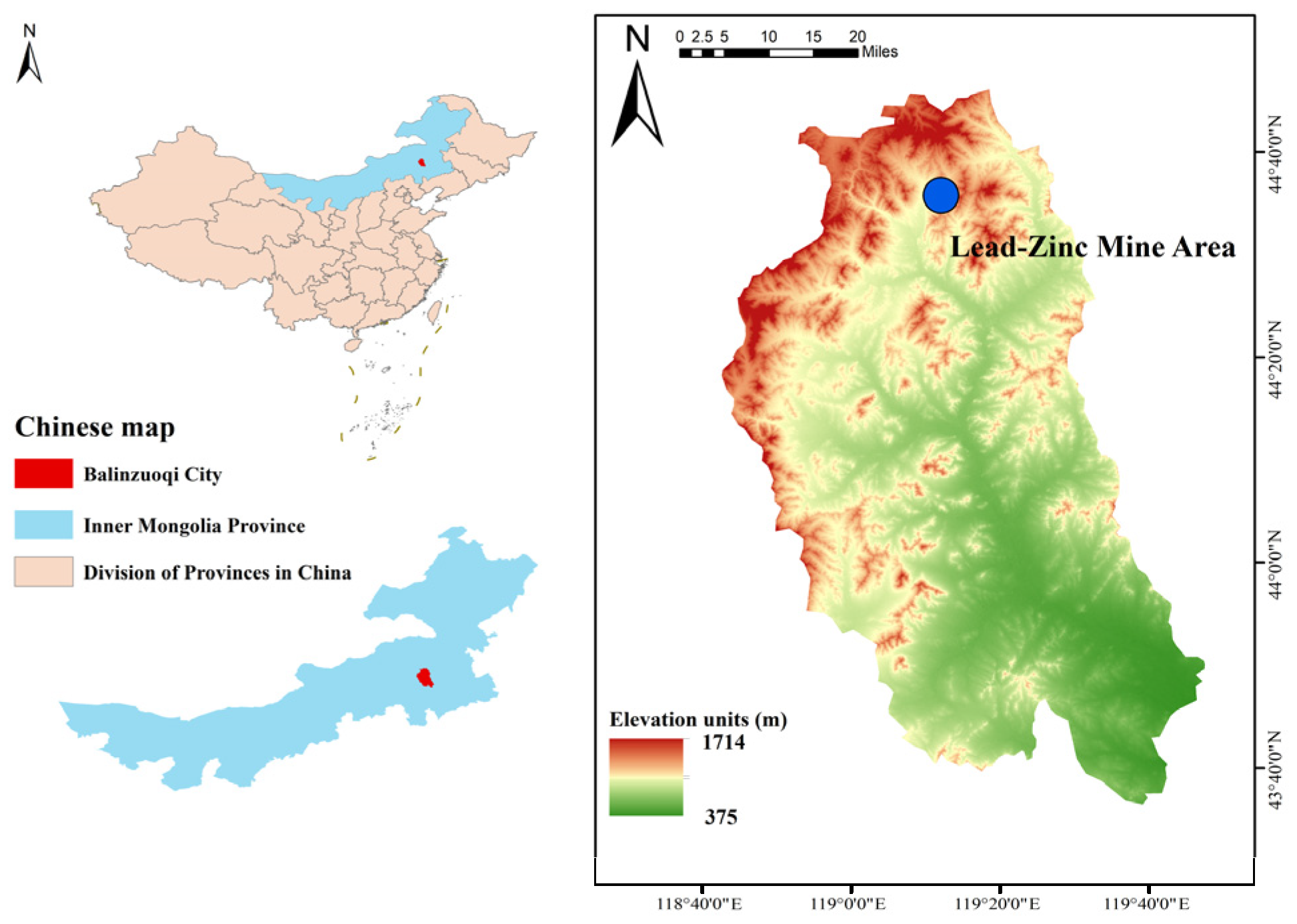

2.1. Joint Information Acquisition Points of Rocks

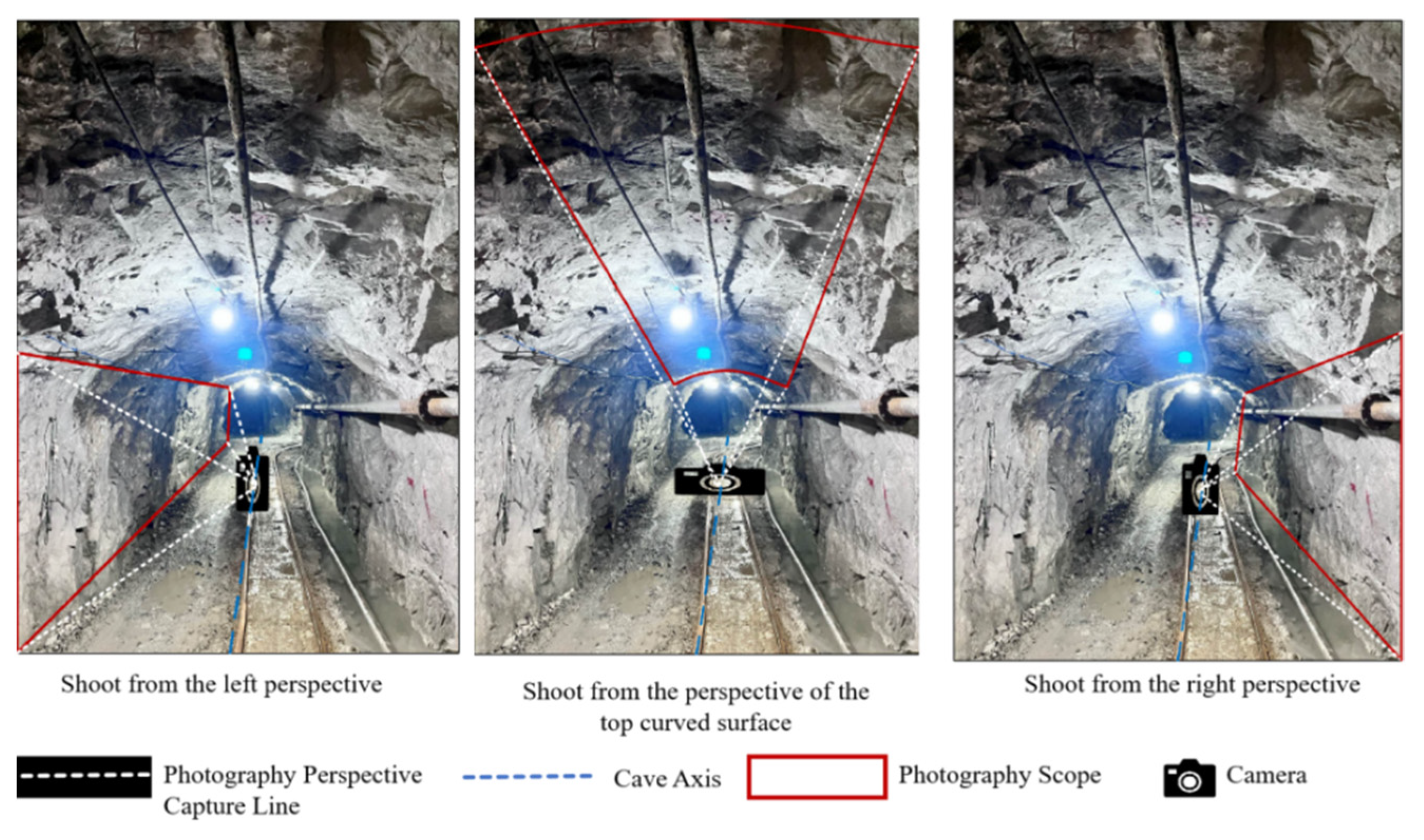

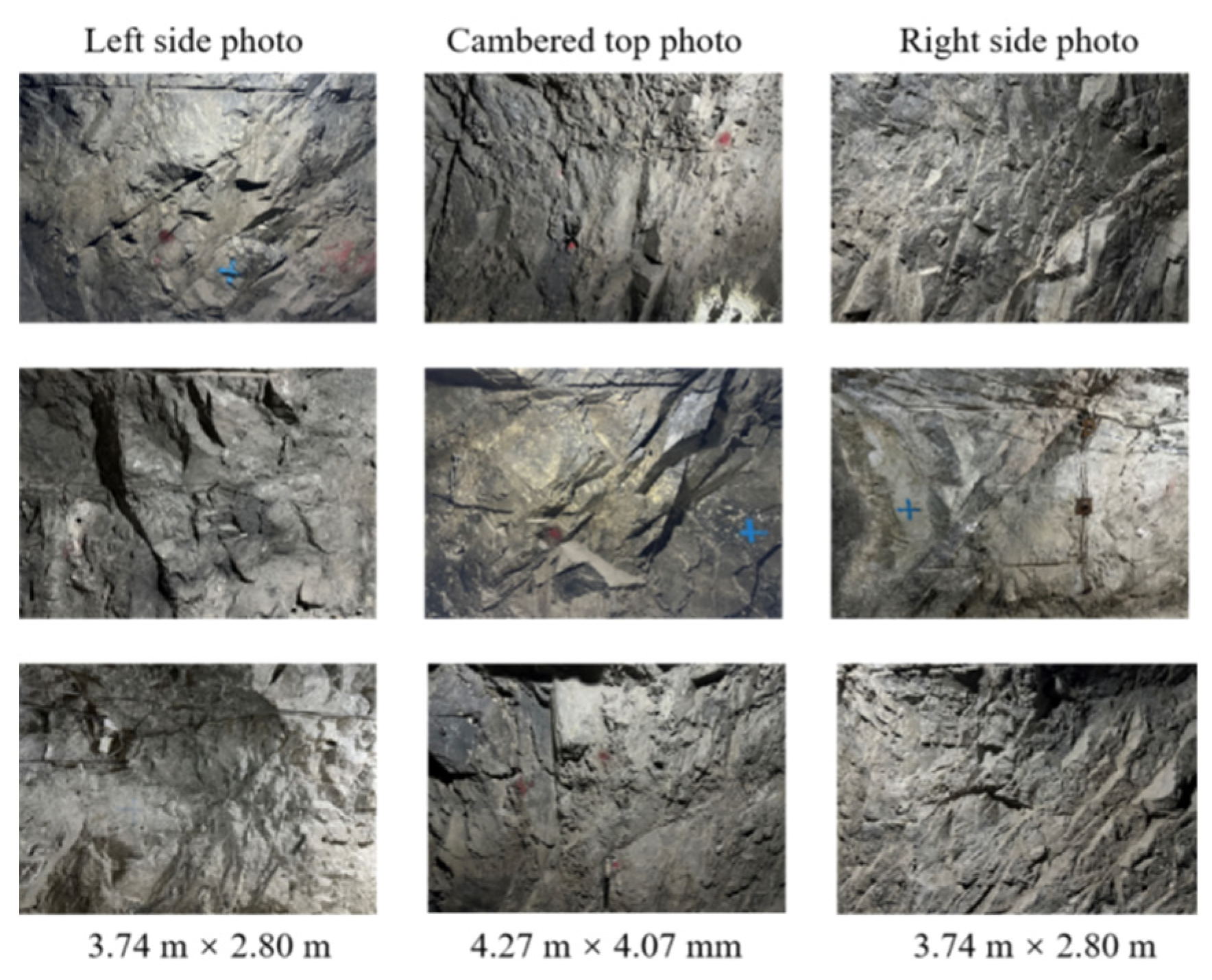

2.2. Data Acquisition

3. Dataset Creation

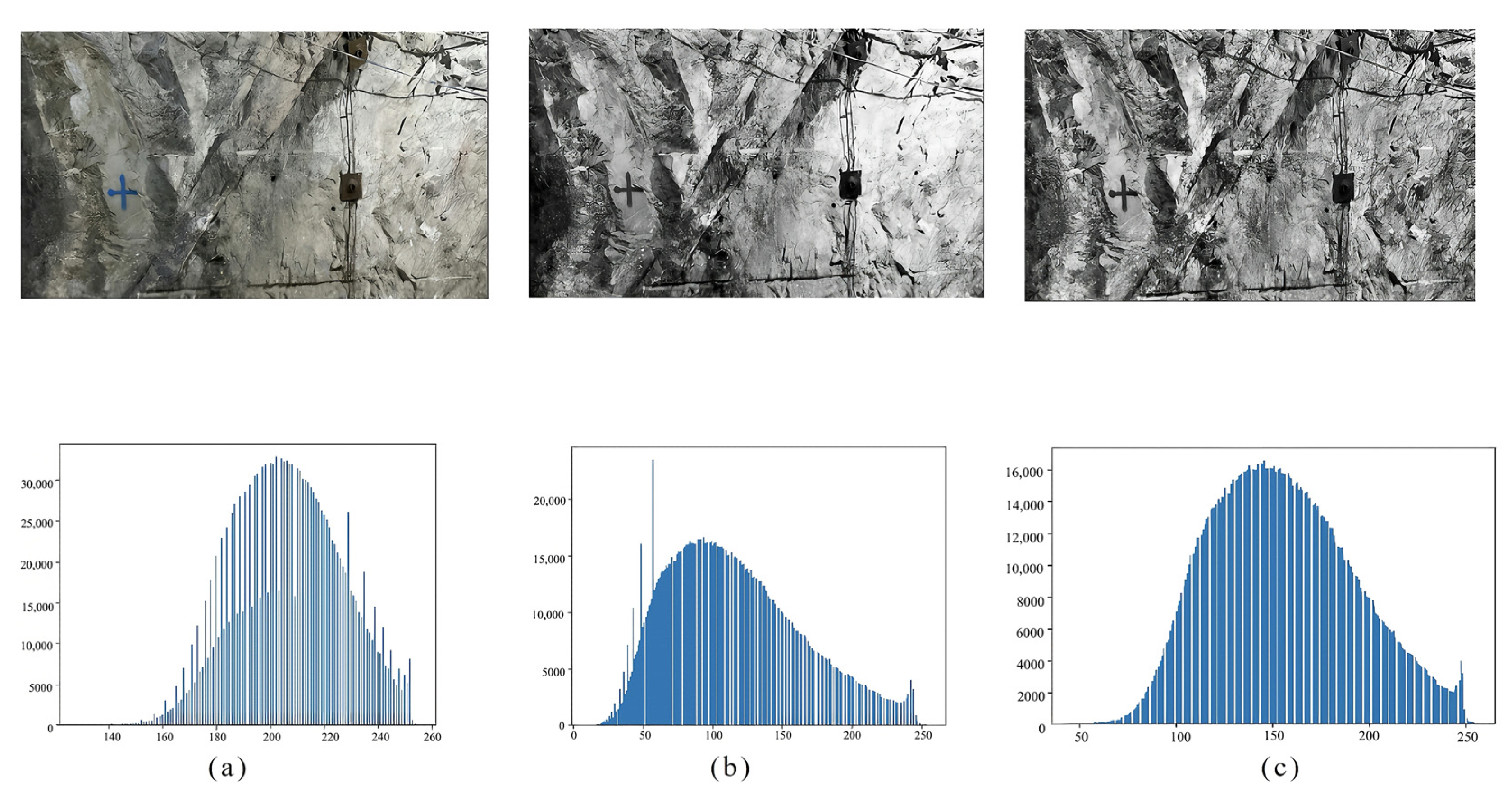

3.1. Preprocessing of Images in the Dataset

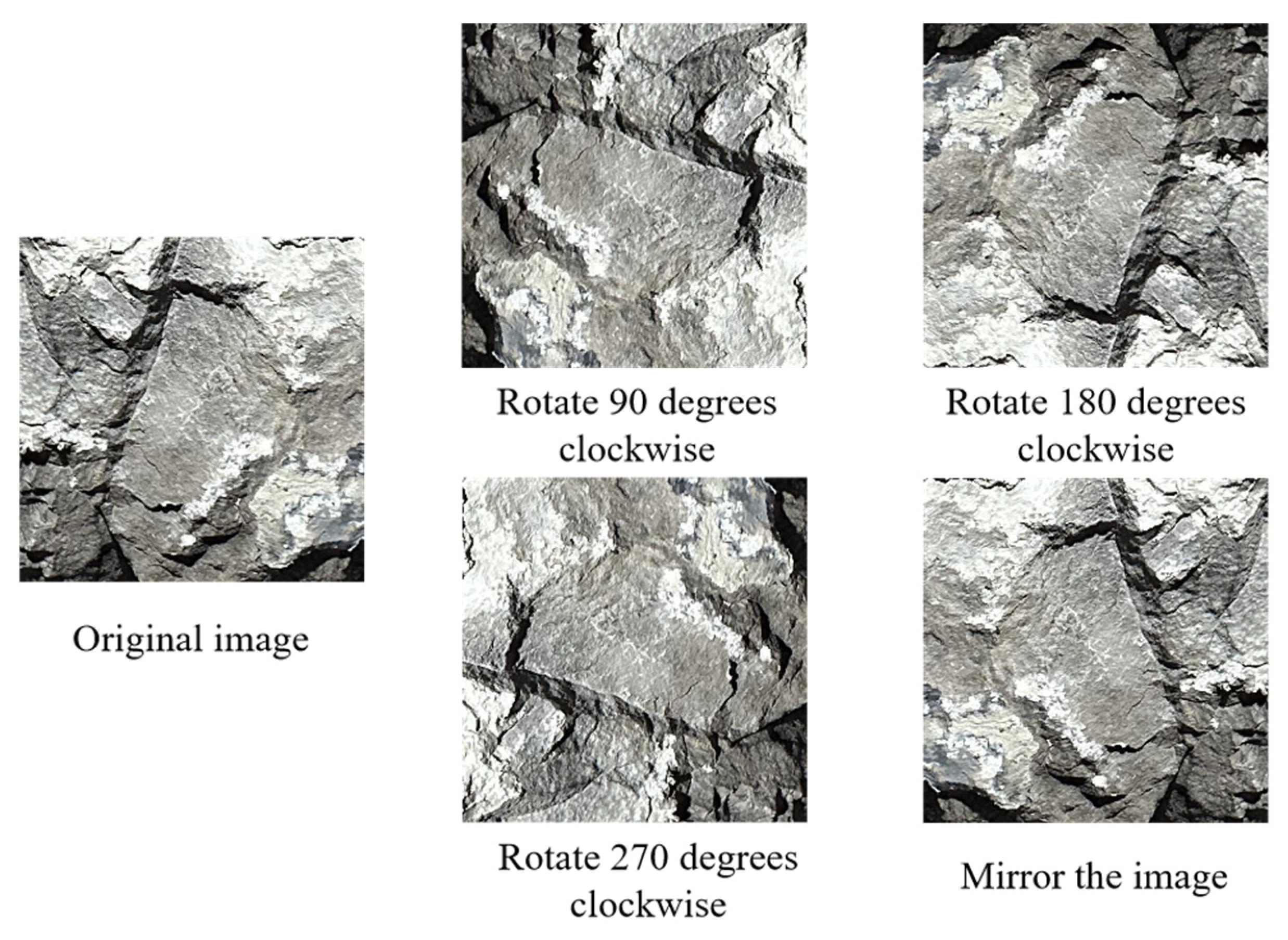

3.2. Image Data Augmentation

3.2.1. Data Augmentation by Image Cutting

3.2.2. Data Augmentation by Using the Image Rotation Method

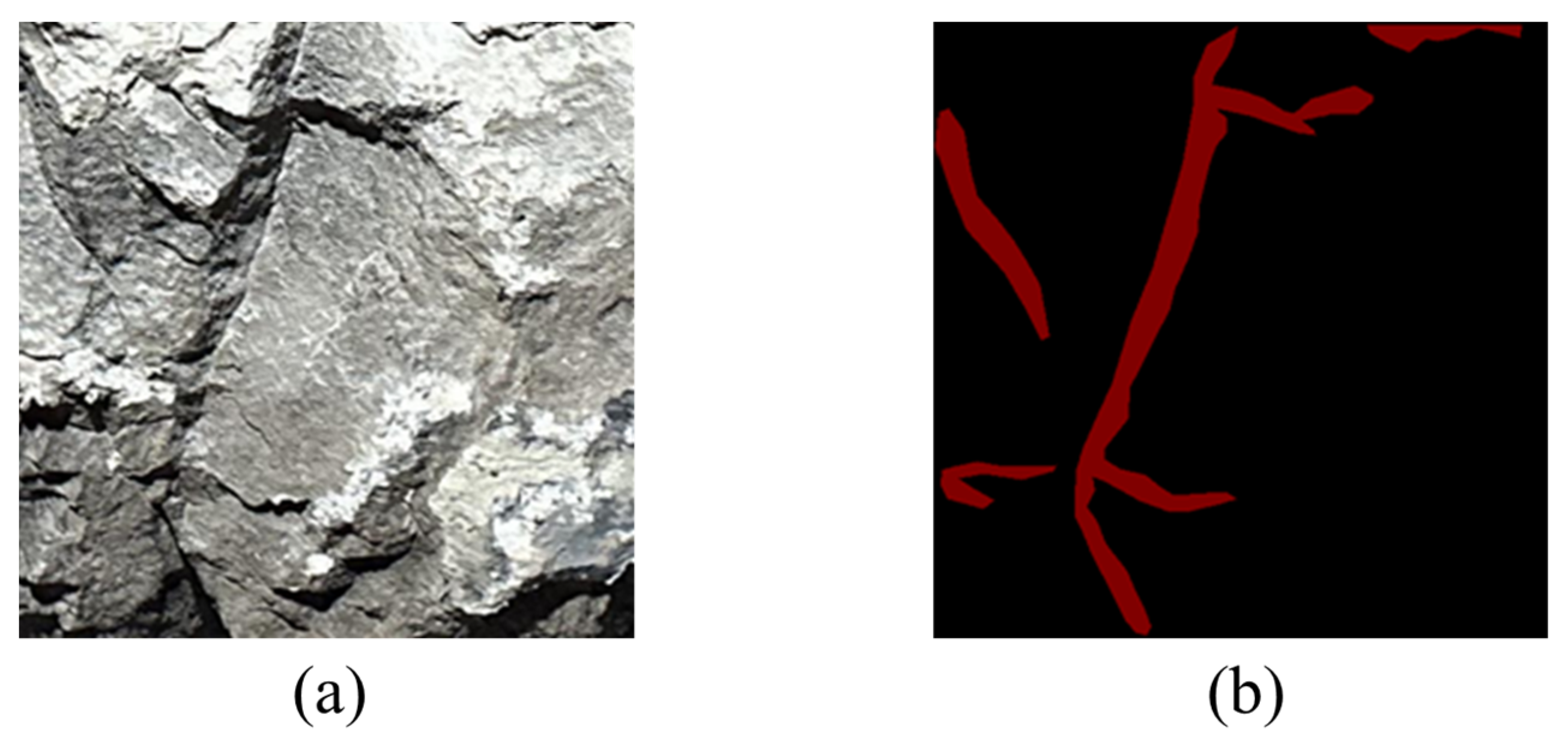

3.3. Dataset Labeling and Partitioning

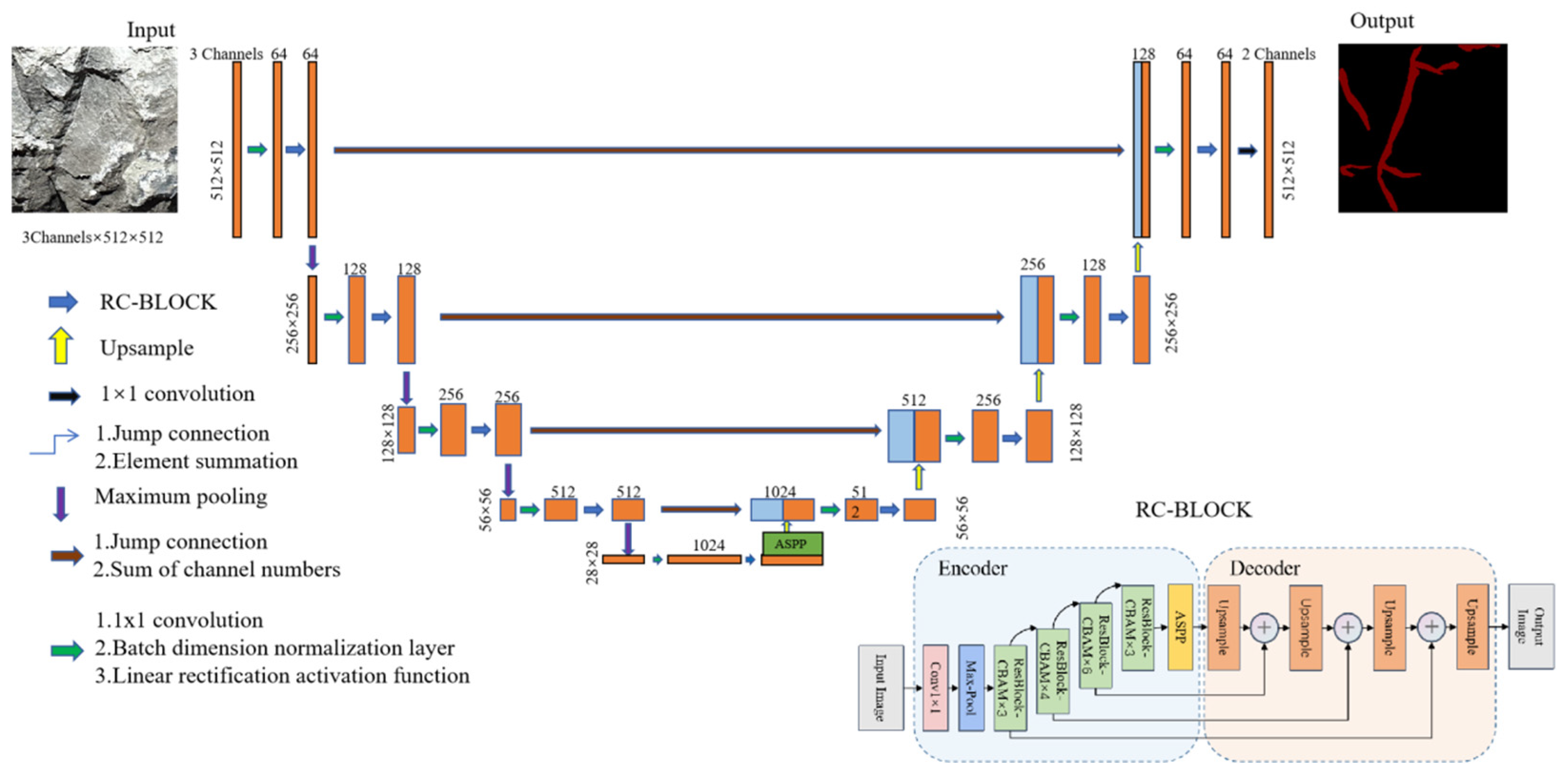

4. Selection and Improvement of Joint Trace Identification Algorithms

4.1. Comparison and Selection of Algorithms

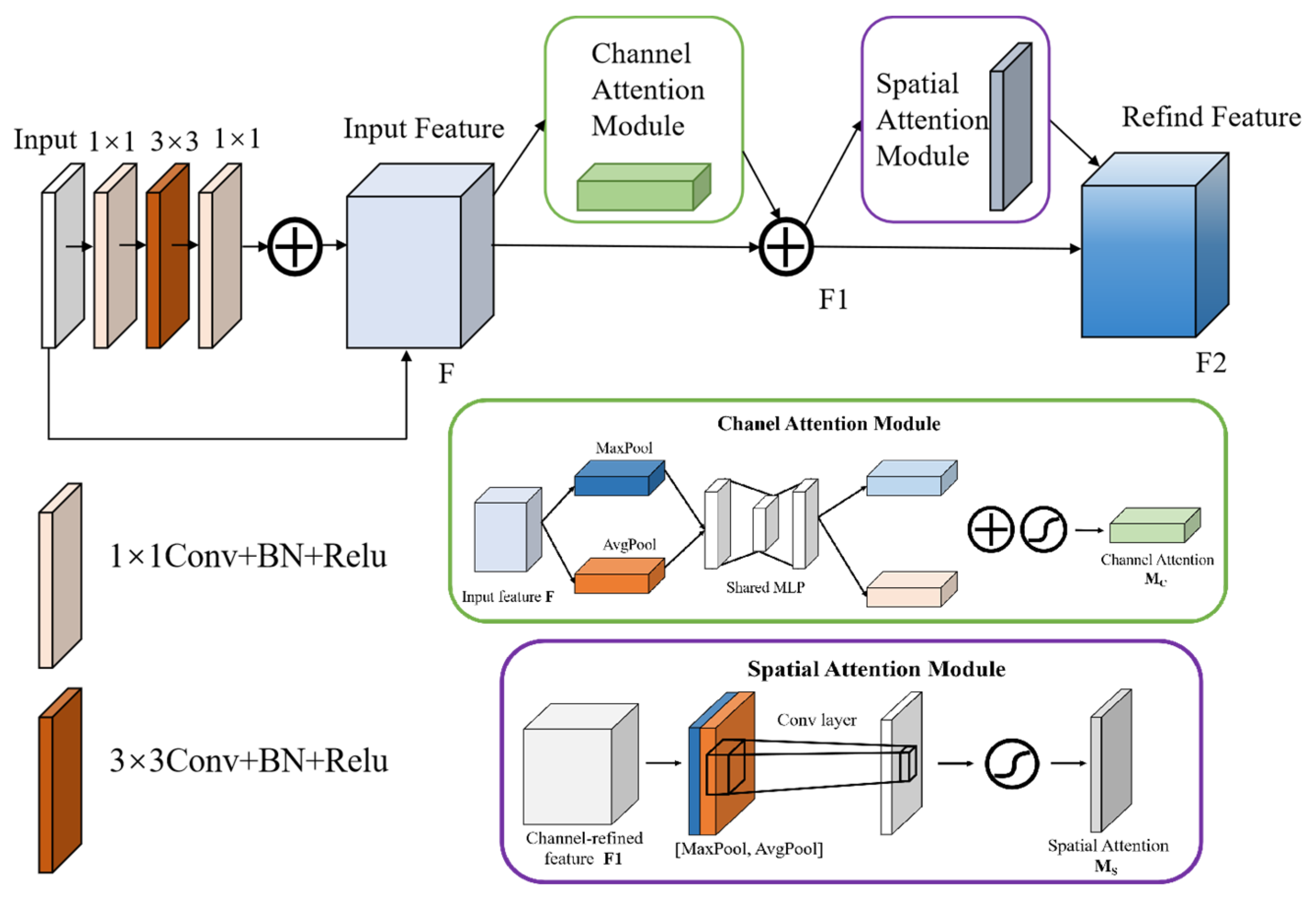

4.2. Improvement of the RC-Unet Model

4.2.1. Res-Net

4.2.2. Introduction of the Attention Mechanism

4.2.3. RC-Unet Workflow and Pseudocode

Algorithm 1. Forward Propagation of the Proposed RC-Unet

5. Effect Analysis and Indices of the Joint Identification Model

5.1. Parameter Selection

5.2. Selection of Loss Functions

5.3. Evaluation Indices

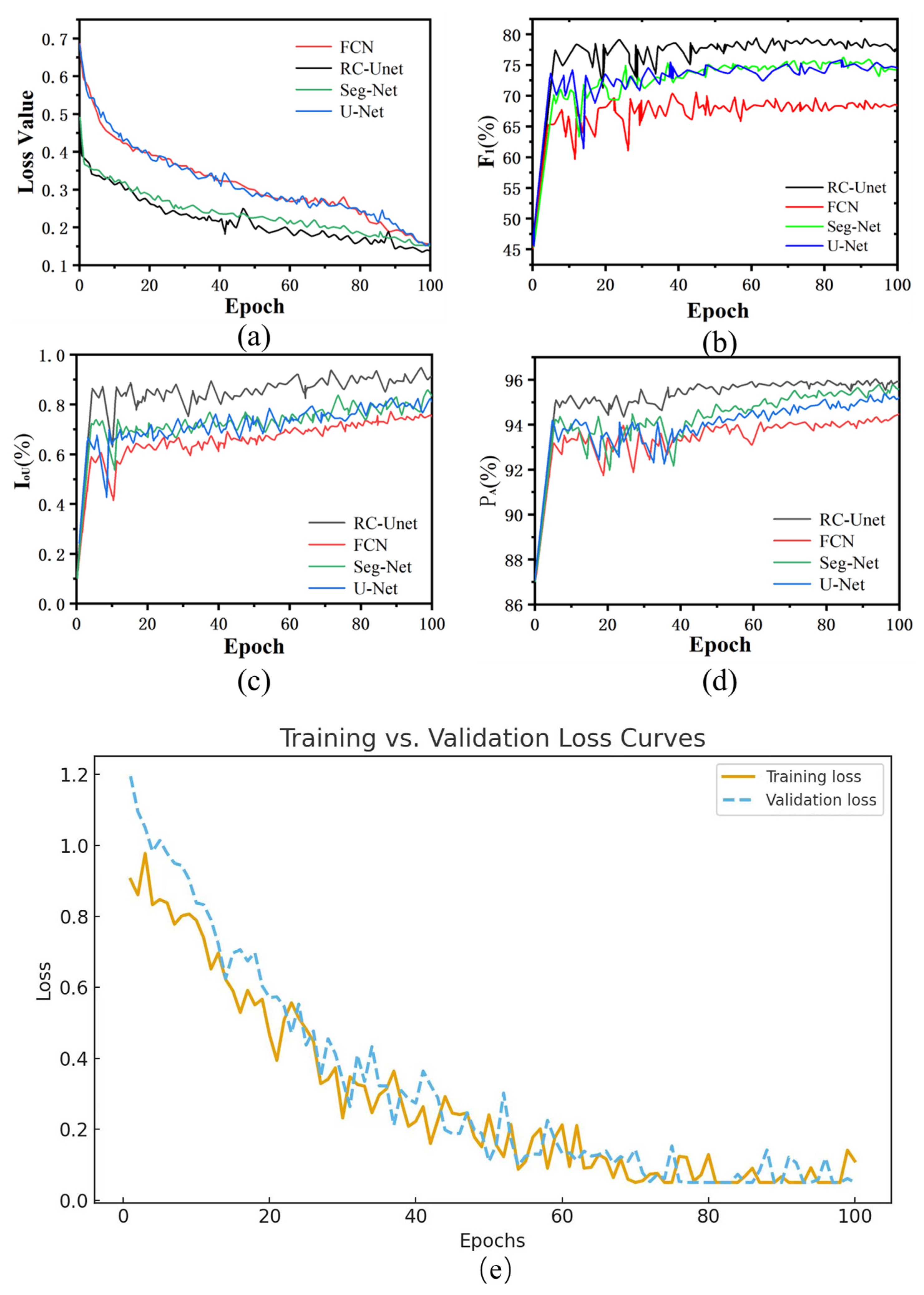

5.4. Model Comparison and Experimental Data Analysis

5.5. Analysis of Classification and Identification Experimental Data of Joint Images

5.6. Ablation Experiments

5.7. Statistical Validation

5.8. Comparison with Recent Methods

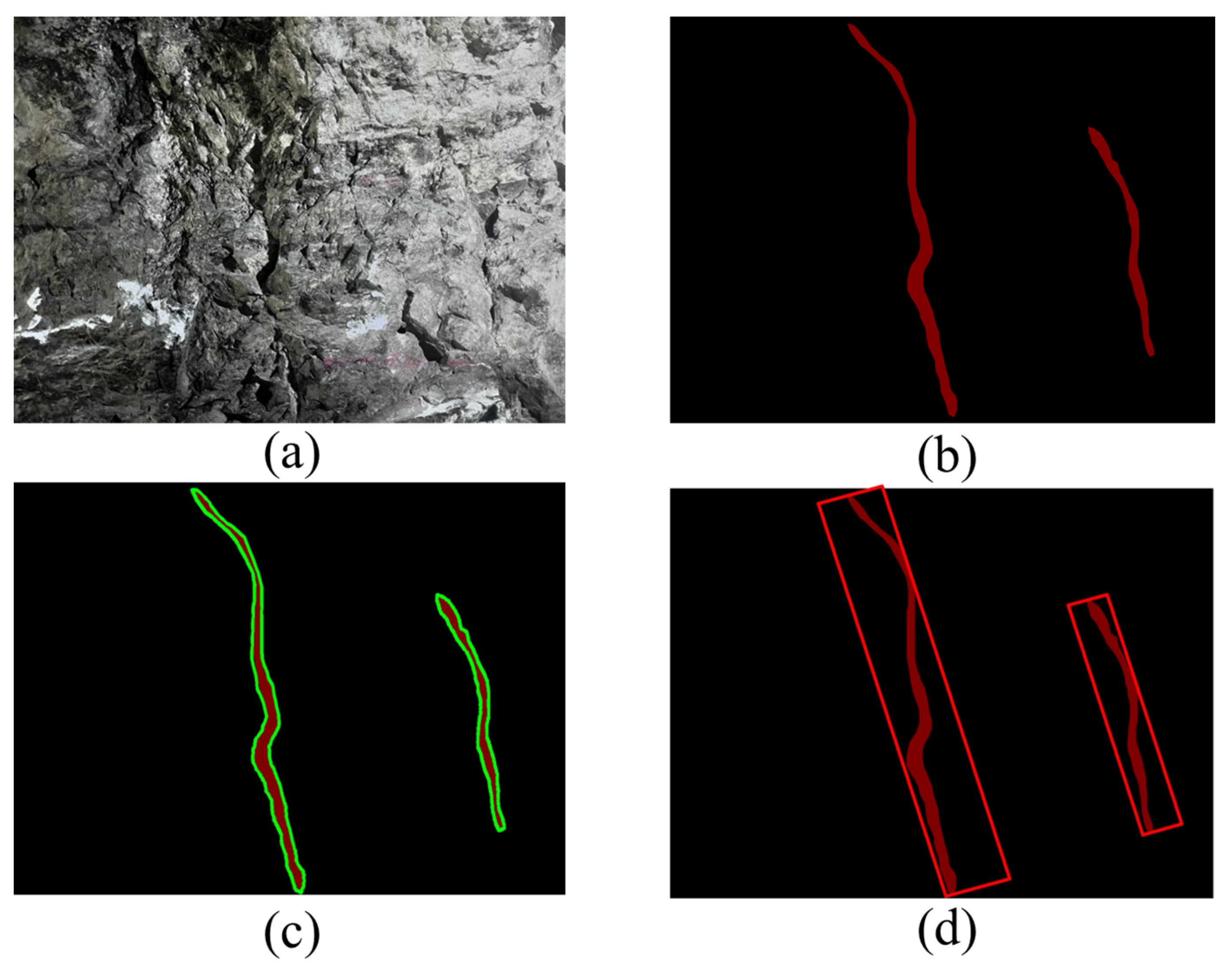

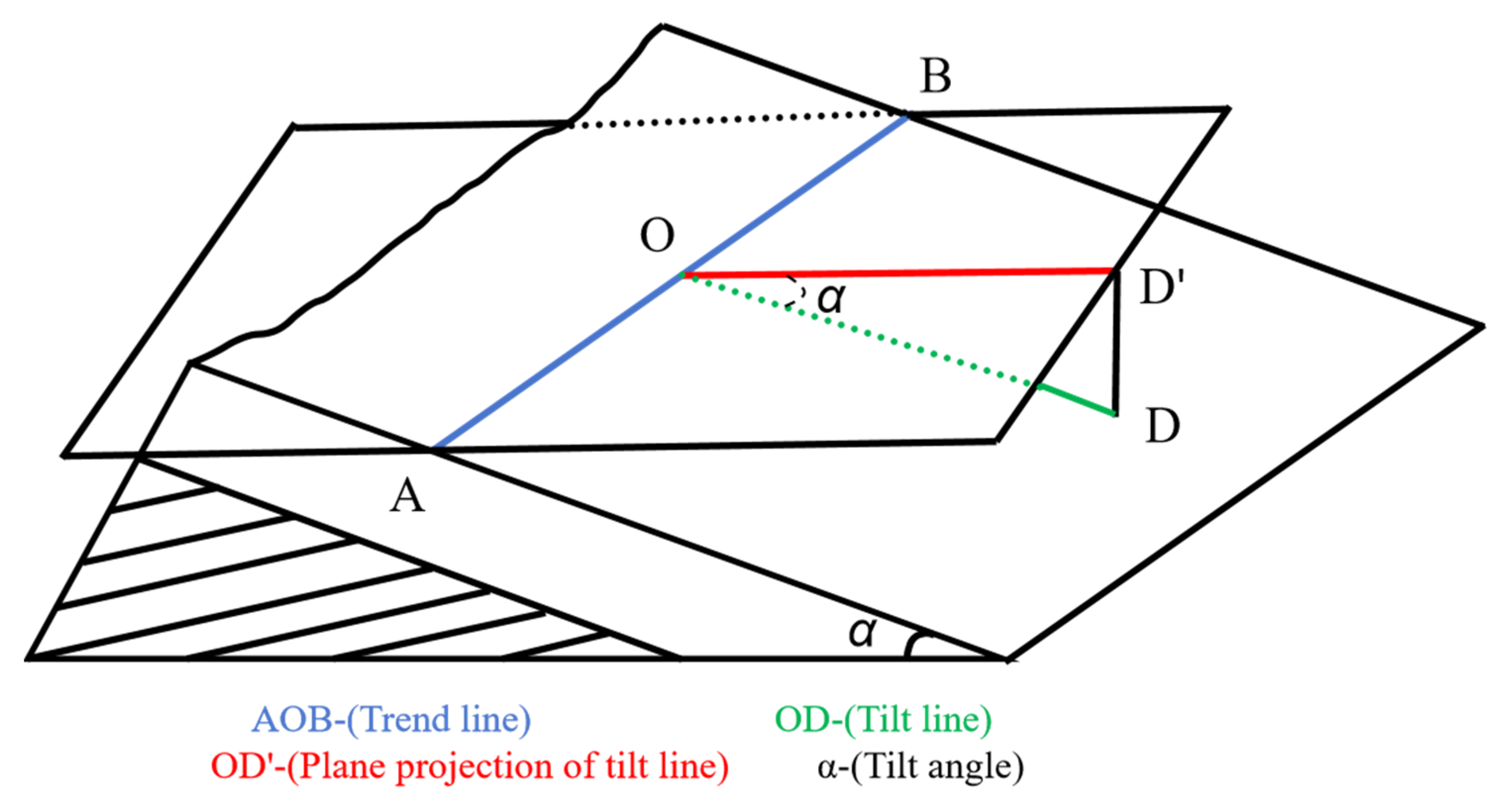

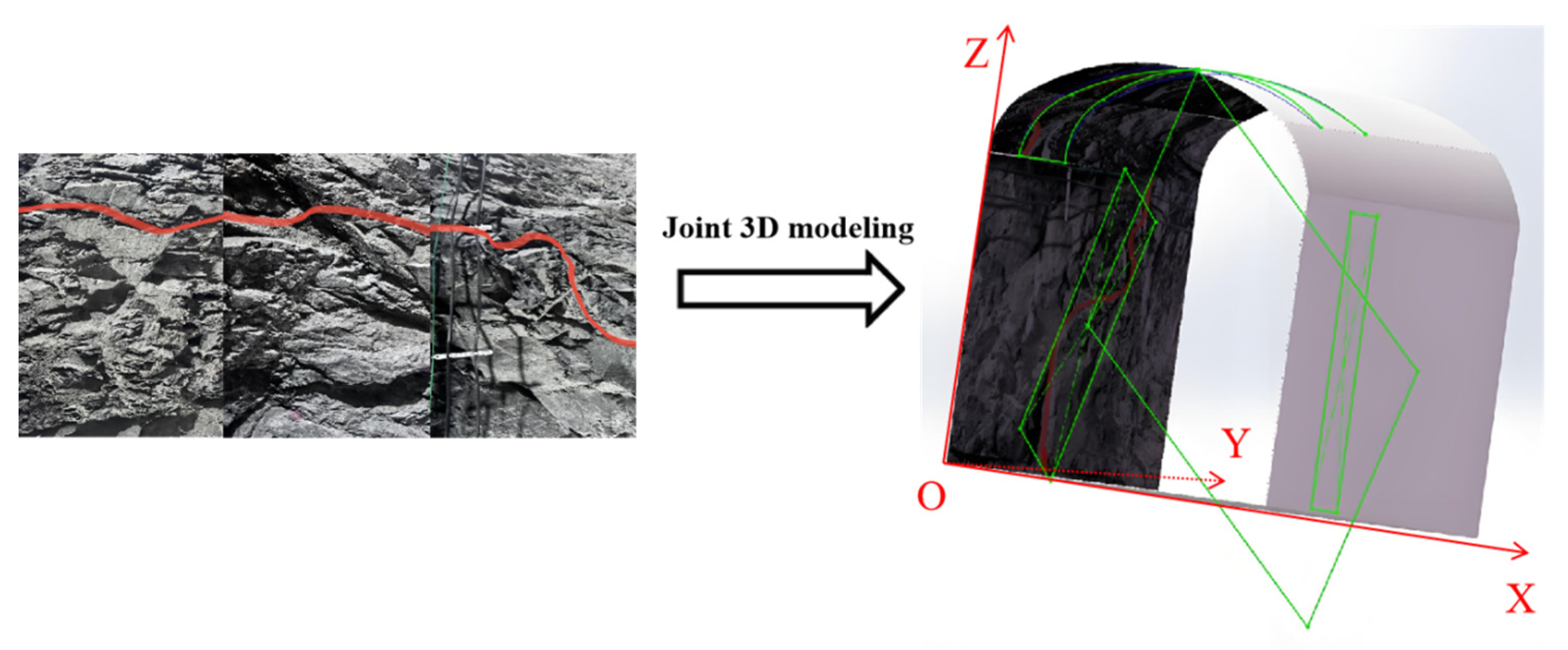

6. Joint Data Extraction and Attitude Calculation

6.1. Statistical and Calculation Methods of Pixel Areas of Joints in the Area

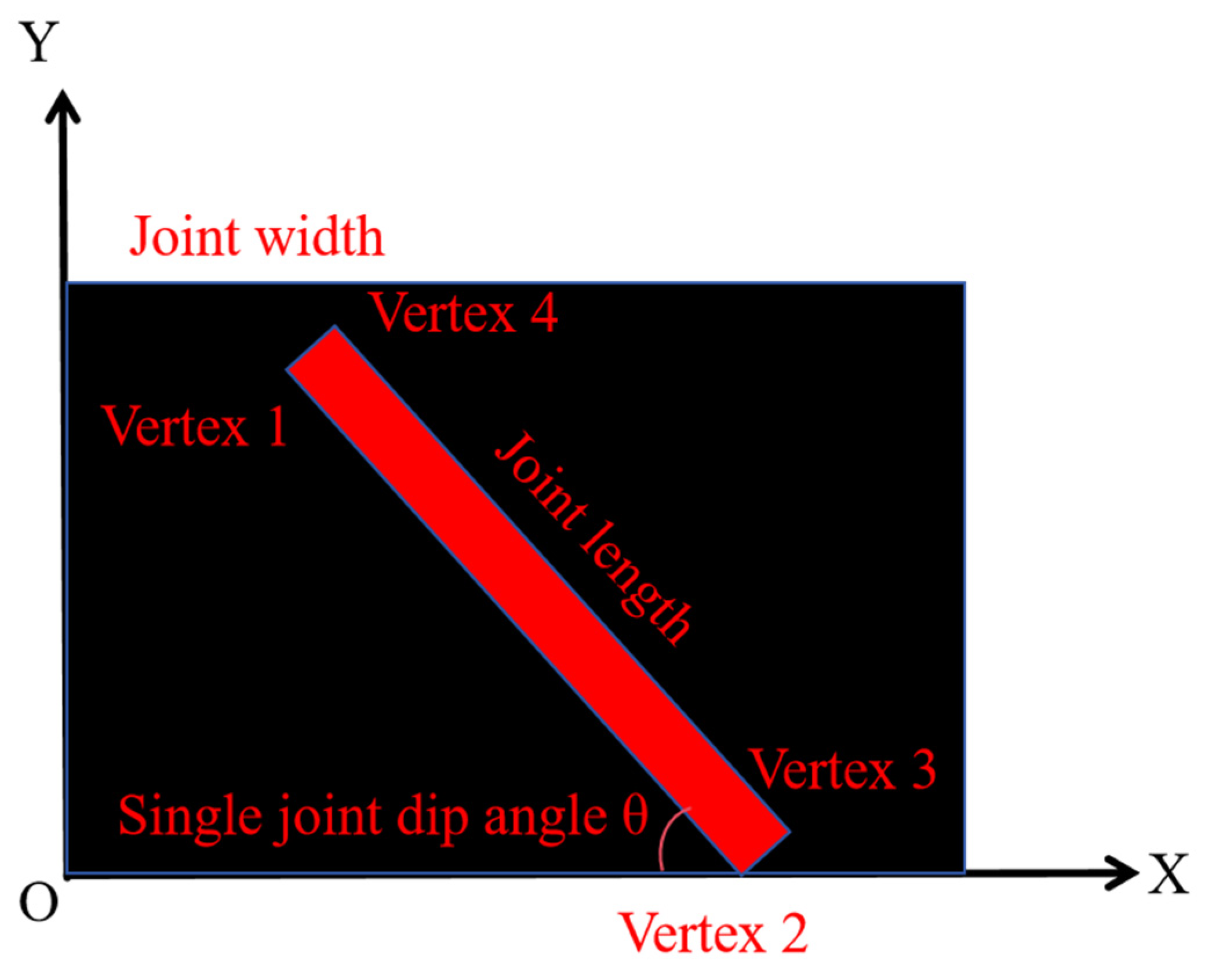

6.2. Statistical Method for the Length and Width of a Single Joint

6.3. Attitude Calculation of 3D Joint Planes Using the PCP Algorithm

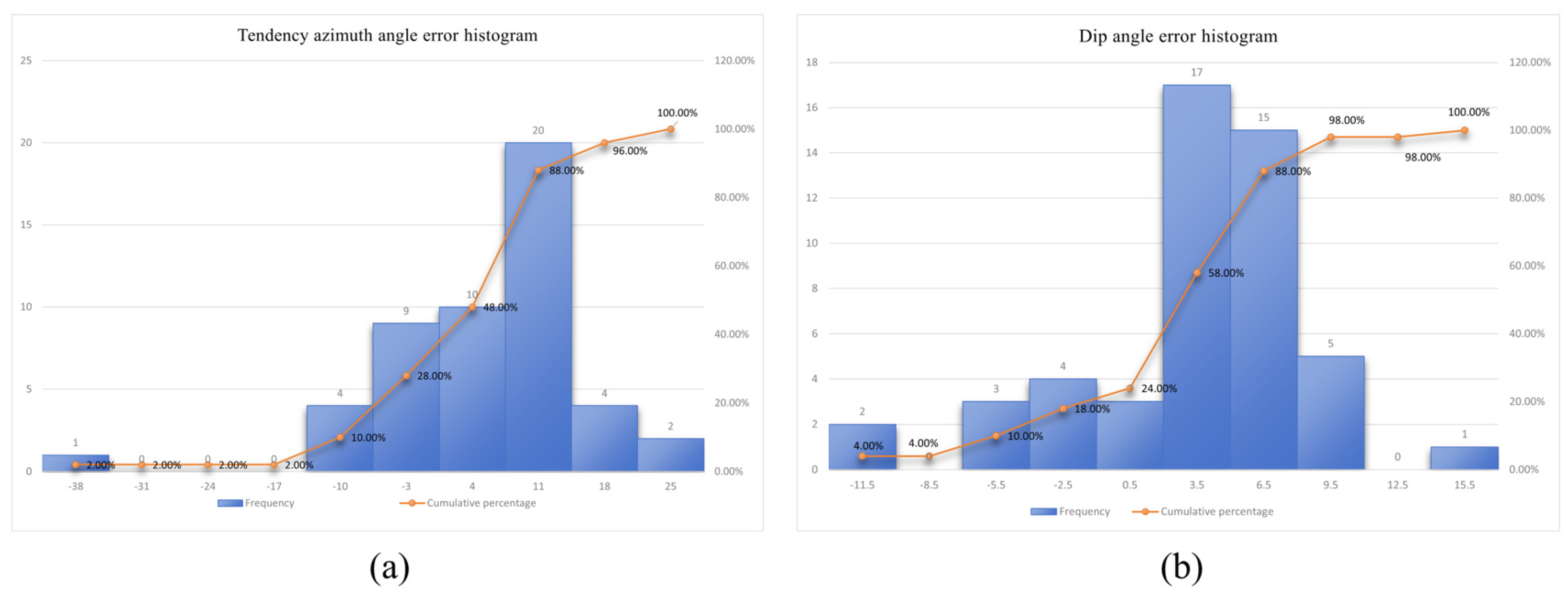

6.4. Analysis of Joint Attitude Calculation Results

7. Conclusions

- (1) To address the low efficiency and subjectivity of manual geological logging, the multi-module RC-Unet was used to identify joints in underground rock masses automatically. Compared with manually traced results, the identification accuracy exceeds 90%, so the method can serve as an auxiliary tool for geological logging.

- (2) RC-Unet reaches a loss of only 0.147 in joint identification, and its F1, IoU, and PA scores all exceed those of the FCN, Seg-Net, and U-Net models. RC-Unet is therefore better suited to joint identification.

- (3) The PCP attitude algorithm, implemented with the OpenCV library, yields results that agree well with manual measurements. For the 50 joints tested, the errors are below 2°, which indicates that the PCP attitude algorithm is applicable to the geological description of underground surrounding rock.

- (4) With the rapid development of artificial intelligence and smartphone hardware, manual geological logging in complex underground environments can gradually be replaced. The findings not only reduce operational risk for underground workers but also offer a new approach to large-scale collection and intelligent auxiliary analysis of geological information, which is significant for the intelligent construction of mines.

- (1) The accuracy of 3D attitude calculation is bounded by the 2D segmentation quality; joints narrower than ~2 px or severely occluded may be missed.

- (2) CLAHE parameters and exposure vary across sites; domain shifts caused by camera devices, lighting, or lithology require light re-tuning or fine-tuning.

- (3) The PCP plane assumption presumes locally planar walls and a valid arch proportion (λ); strong curvature or camera pose errors may degrade 3D mapping accuracy.

- (4) Compared with SAM-/transformer-based approaches, RC-Unet is lighter and more deployment-friendly underground, but it lacks promptable interaction and large-scale pretraining. Future work will explore SAM-/DINOv2-style adapters for low-shot adaptation and self-calibration using multi-view constraints.
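
Both the attitude conclusion and the planarity limitation above rest on treating a joint as a plane. As a generic illustration of what an attitude is, and explicitly not the paper's PCP algorithm, the sketch below converts a plane normal into dip direction and dip angle. The axis convention (x = east, y = north, z = up) and the function name `attitude_from_normal` are assumptions introduced here.

```python
import math
from typing import Tuple


def attitude_from_normal(nx: float, ny: float, nz: float) -> Tuple[float, float]:
    """Return (dip_direction_deg, dip_deg) of a plane from its normal vector.

    Assumed axes: x = east, y = north, z = up. Dip direction is measured
    clockwise from north. For a horizontal plane the dip direction is
    undefined and 0.0 is returned.
    """
    norm = math.sqrt(nx * nx + ny * ny + nz * nz)
    if norm == 0.0:
        raise ValueError("normal vector must be non-zero")
    # Use the upward-pointing normal: its horizontal component points down-dip.
    if nz < 0.0:
        nx, ny, nz = -nx, -ny, -nz
    # Dip is the angle between the plane and the horizontal, which equals
    # the angle between the normal and the vertical axis.
    dip = math.degrees(math.acos(min(1.0, nz / norm)))
    dip_direction = math.degrees(math.atan2(nx, ny)) % 360.0
    return dip_direction, dip


# A plane descending toward the east at 45 degrees has upward normal (1, 0, 1).
print(attitude_from_normal(1.0, 0.0, 1.0))
```

The plane normal itself would have to come from the 3D mapping step, which this excerpt does not describe, so the sketch starts from a given normal.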

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Model | F1 (%) | IoU (%) | PA (%) |
|---|---|---|---|
| FCN | 70.56 | 76.18 | 94.03 |
| U-Net | 75.18 | 83.97 | 95.38 |
| Seg-Net | 76.78 | 85.16 | 95.67 |
| RC-Unet | 78.97 | 94.55 | 95.83 |
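
For readers who want to reproduce indices of this kind, the following is a minimal sketch of F1, IoU, and PA computed for a single foreground (joint) class from binary masks. The per-class definitions used here are the standard ones; how the paper averages over classes or images is not shown in this excerpt, so the numbers in the table need not follow these exact formulas. The function names and the toy masks are illustrative assumptions.

```python
from typing import Dict, List, Tuple

Mask = List[List[int]]  # binary mask: 1 = joint pixel, 0 = background


def confusion(pred: Mask, truth: Mask) -> Tuple[int, int, int, int]:
    """Count true positives, false positives, false negatives and true negatives."""
    tp = fp = fn = tn = 0
    for p_row, t_row in zip(pred, truth):
        for p, t in zip(p_row, t_row):
            if p and t:
                tp += 1
            elif p:
                fp += 1
            elif t:
                fn += 1
            else:
                tn += 1
    return tp, fp, fn, tn


def segmentation_metrics(pred: Mask, truth: Mask) -> Dict[str, float]:
    """Return F1, IoU and pixel accuracy (PA) for the foreground class."""
    tp, fp, fn, tn = confusion(pred, truth)
    total = tp + fp + fn + tn
    denom = tp + fp + fn
    return {
        "F1": 2 * tp / (2 * tp + fp + fn) if denom else 0.0,
        "IoU": tp / denom if denom else 0.0,
        "PA": (tp + tn) / total if total else 0.0,
    }


# Toy 3 x 4 masks (hypothetical data): 2 TP, 1 FP, 1 FN, 8 TN.
pred = [[1, 1, 0, 0], [0, 1, 0, 0], [0, 0, 0, 0]]
truth = [[1, 0, 0, 0], [0, 1, 1, 0], [0, 0, 0, 0]]
print(segmentation_metrics(pred, truth))
```

Because joints occupy few pixels, PA is dominated by correctly labeled background, which is why it stays high for every model while F1 and IoU separate them more clearly.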

| Sequence | Image | Label file | RC-Unet | U-Net | Seg-Net | FCN |
|---|---|---|---|---|---|---|
| Complex combined joints |  |  |  |  |  |  |
| Simple combined joints |  |  |  |  |  |  |
| Complex dense joints |  |  |  |  |  |  |
| Simple dense joints |  |  |  |  |  |  |
| Simple sparse joints |  |  |  |  |  |  |

| Combination | U | U + A | U + RC | U + RC + A |
|---|---|---|---|---|
| IoU (%) | 83.97 | 84.12 | 94.55 | 77.22 |

| Metric | Comparison | Mean Difference | 95% CI | p-Value | Effect Size (d) |
|---|---|---|---|---|---|
| F1 | RC-Unet vs. U-Net | +0.07 | [0.04, 0.10] | 0.001 | 0.85 |
| IoU | RC-Unet vs. Seg-Net | +0.05 | [0.03, 0.08] | 0.002 | 0.70 |
| PA | RC-Unet vs. FCN | +0.06 | [0.02, 0.09] | 0.004 | 0.65 |

| Seq | Scanner Dip (°) | Scanner Azimuth (°) | Algorithm Dip (°) | Algorithm Azimuth (°) | Dip Difference (°) | Azimuth Difference (°) |
|---|---|---|---|---|---|---|
| 1 | 156.5 | 76.9 | 157.3 | 77.4 | 0.8 | 0.5 |
| 2 | 186.7 | 15.3 | 189.5 | 16.5 | 2.8 | 1.2 |
| 3 | 351.2 | 8.9 | 359.3 | 17.4 | 8.1 | 8.5 |
| 4 | 125.2 | 19.6 | 136.3 | 25.6 | 11.1 | 6.0 |
| 5 | 268.4 | 58.6 | 274.1 | 74.1 | 5.7 | 15.5 |
| 6 | 114.2 | 23.6 | 118.7 | 15.2 | 4.5 | −8.4 |
| 7 | 214.6 | 44.4 | 223.6 | 49.6 | 9.0 | 5.2 |
| 8 | 341.2 | 59.6 | 336.9 | 47.3 | −4.3 | −12.3 |
| 9 | 187.6 | 6.3 | 196.3 | 14.7 | 8.7 | 8.4 |
| 10 | 25.3 | 29.9 | 30.2 | 35.6 | 4.9 | 5.7 |
| ⋯ |  |  |  |  |  |  |
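
The last two columns of the table above are the algorithm value minus the scanner value for each angle pair. The sketch below recomputes them from the ten rows shown. The wrap-around handling in `angular_difference` is an assumption added here for angles measured on a 0° to 360° circle (it changes nothing for these rows, but a plain subtraction would fail for a pair such as 358° and 2°); the tuple layout simply follows the table's column order.

```python
from typing import List, Tuple


def angular_difference(measured: float, reference: float, period: float = 360.0) -> float:
    """Signed difference measured - reference, wrapped into [-period/2, period/2)."""
    return (measured - reference + period / 2) % period - period / 2


# Rows copied from the table: (scanner col 1, scanner col 2, algorithm col 1, algorithm col 2).
ROWS: List[Tuple[float, float, float, float]] = [
    (156.5, 76.9, 157.3, 77.4),
    (186.7, 15.3, 189.5, 16.5),
    (351.2, 8.9, 359.3, 17.4),
    (125.2, 19.6, 136.3, 25.6),
    (268.4, 58.6, 274.1, 74.1),
    (114.2, 23.6, 118.7, 15.2),
    (214.6, 44.4, 223.6, 49.6),
    (341.2, 59.6, 336.9, 47.3),
    (187.6, 6.3, 196.3, 14.7),
    (25.3, 29.9, 30.2, 35.6),
]


def differences(rows: List[Tuple[float, float, float, float]]) -> Tuple[List[float], List[float]]:
    """Return the two difference columns, rounded to one decimal like the table."""
    first = [round(angular_difference(a1, s1), 1) for s1, _, a1, _ in rows]
    second = [round(angular_difference(a2, s2), 1) for _, s2, _, a2 in rows]
    return first, second


def mean_absolute(values: List[float]) -> float:
    """Mean of absolute values, a simple summary of the error magnitude."""
    return sum(abs(v) for v in values) / len(values)


first_diff, second_diff = differences(ROWS)
print(first_diff)
print(second_diff)
print(mean_absolute(first_diff), mean_absolute(second_diff))
```

Only ten of the measured joints appear in the table, so any summary computed this way describes the listed rows and not the full test set.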


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, G.; Zhu, J.; Jin, C.; Mao, X.; Wang, Q. An Intelligent Joint Identification Method and Calculation of Joint Attitudes in Underground Mines Based on Smartphone Image Acquisition. Sensors 2025, 25, 6410. https://doi.org/10.3390/s25206410
