An Improved Step Detection Algorithm for Indoor Navigation Problems with Pre-Determined Types of Activity

Abstract

1. Introduction

2. Materials and Methods

2.1. State of the Art Applications of Machine Learning in Indoor Navigation

2.2. Step-Detection—Overview of Techniques and Knowledge

2.3. Proposed Approach

- A mobile application that records sensor data and activity parameters (steps, type of activity);

- A server application that stores the collected data, which were later processed and used for training.

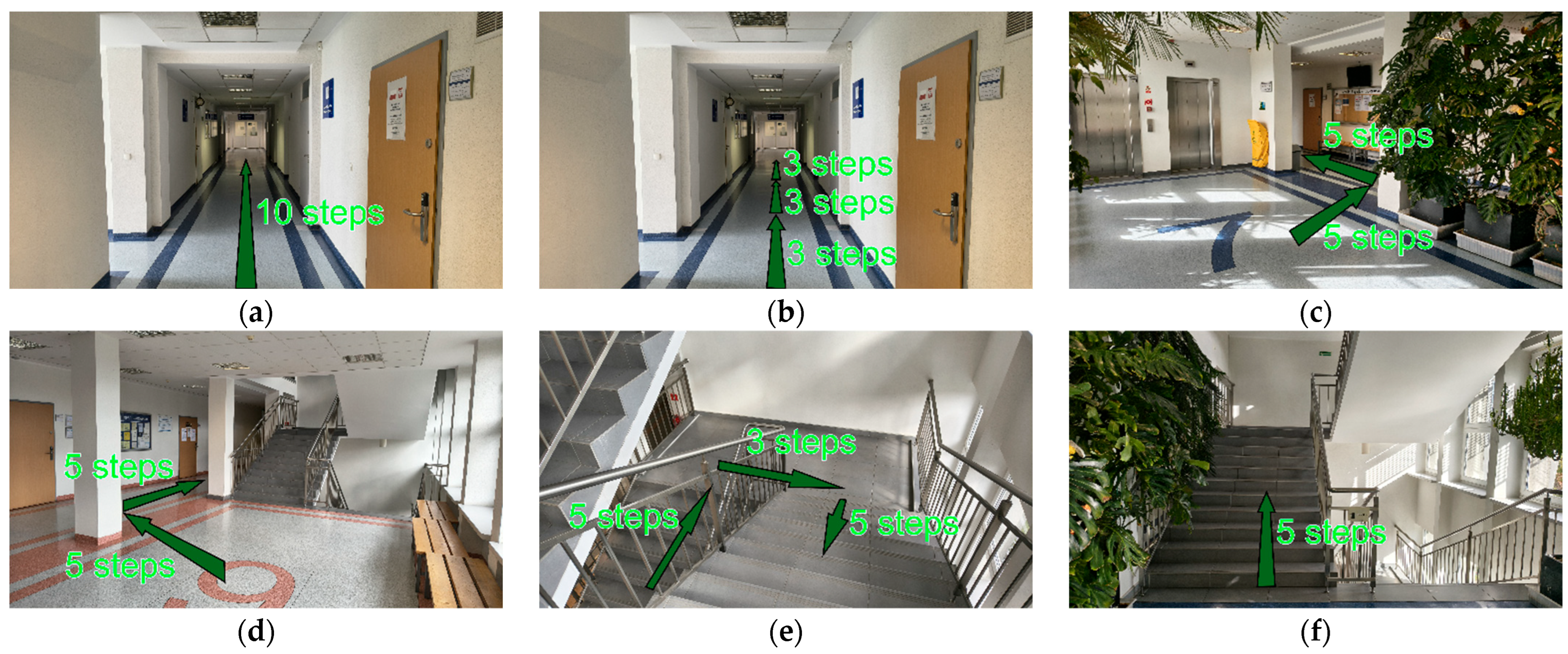

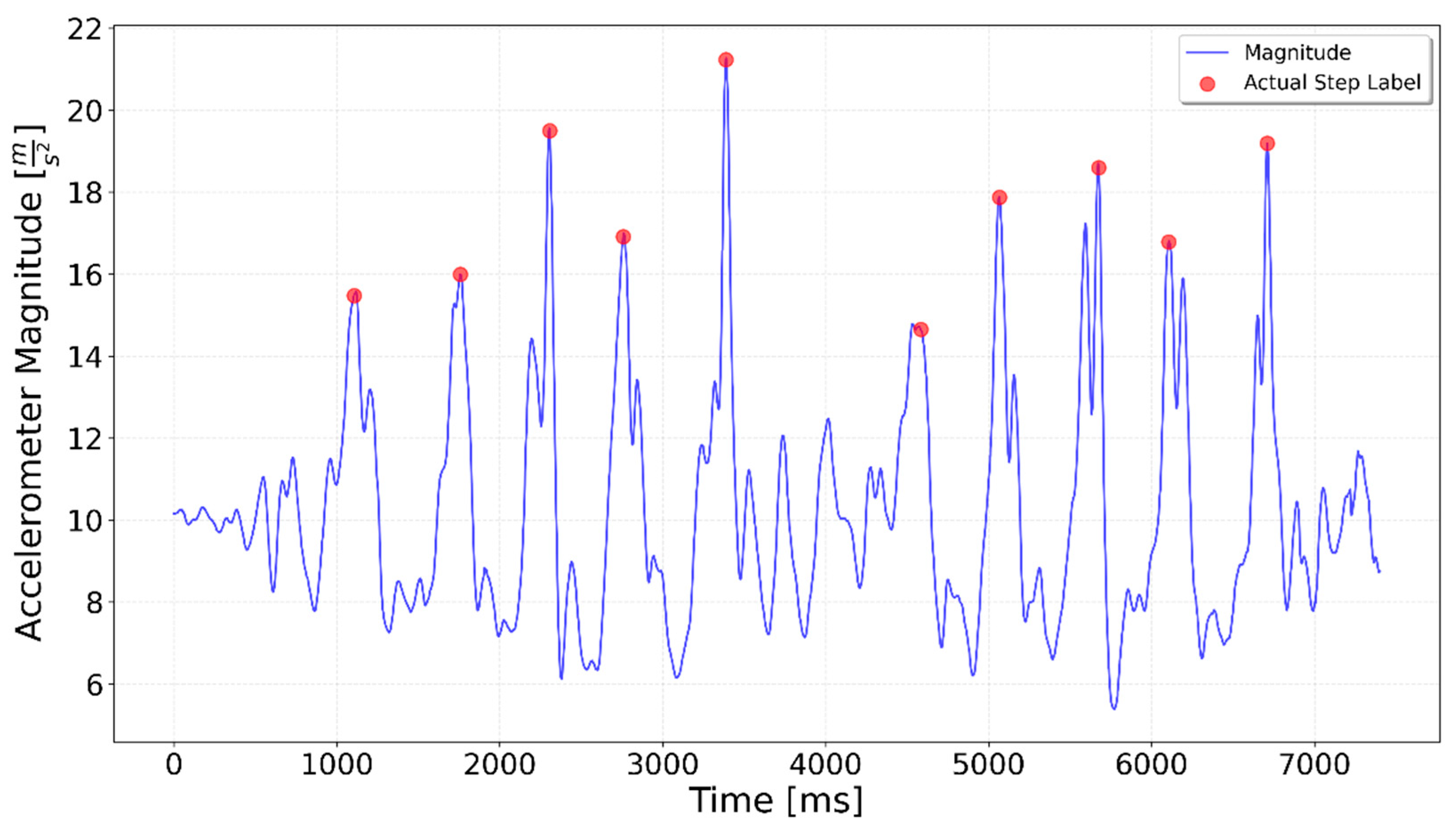

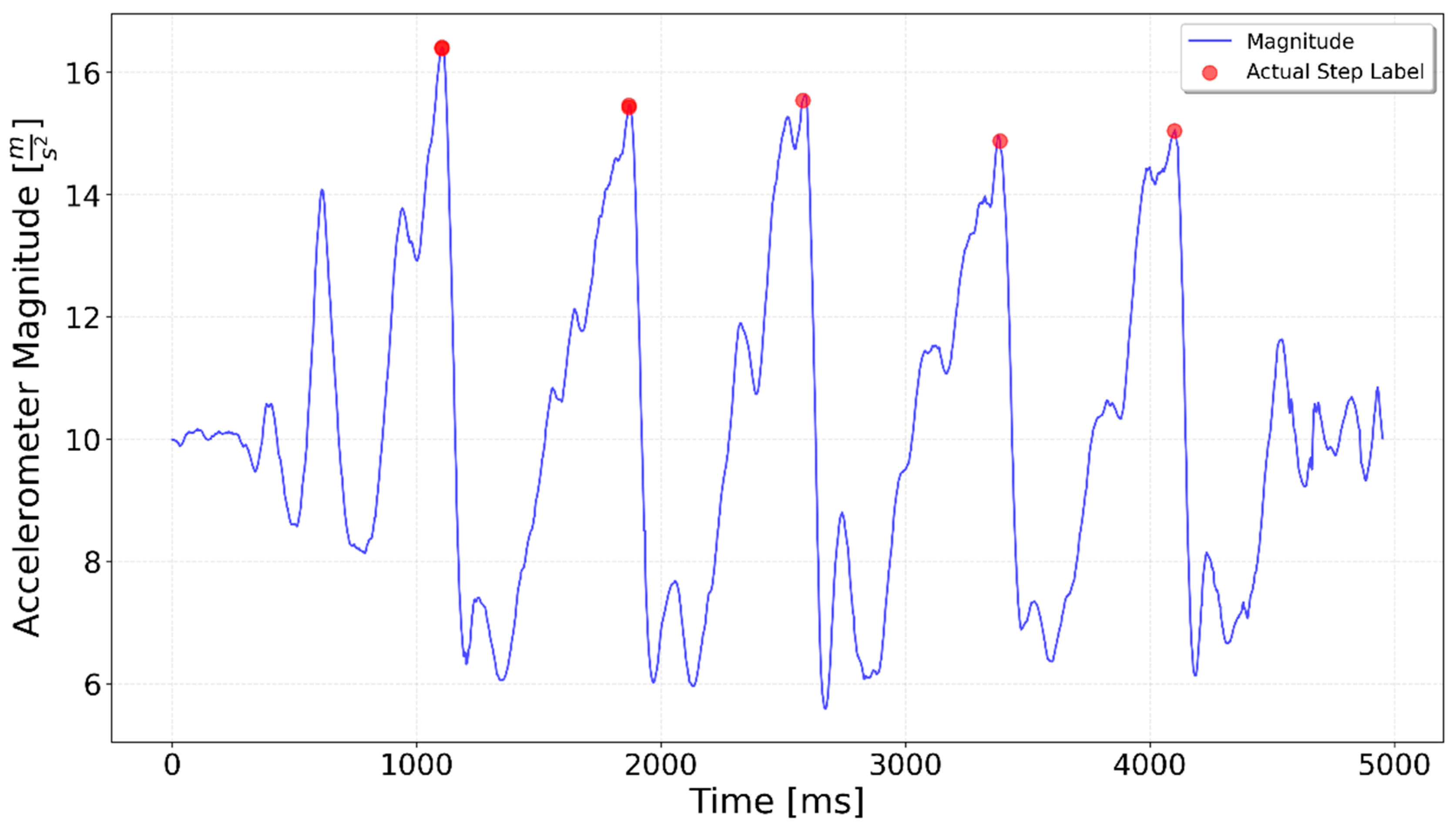

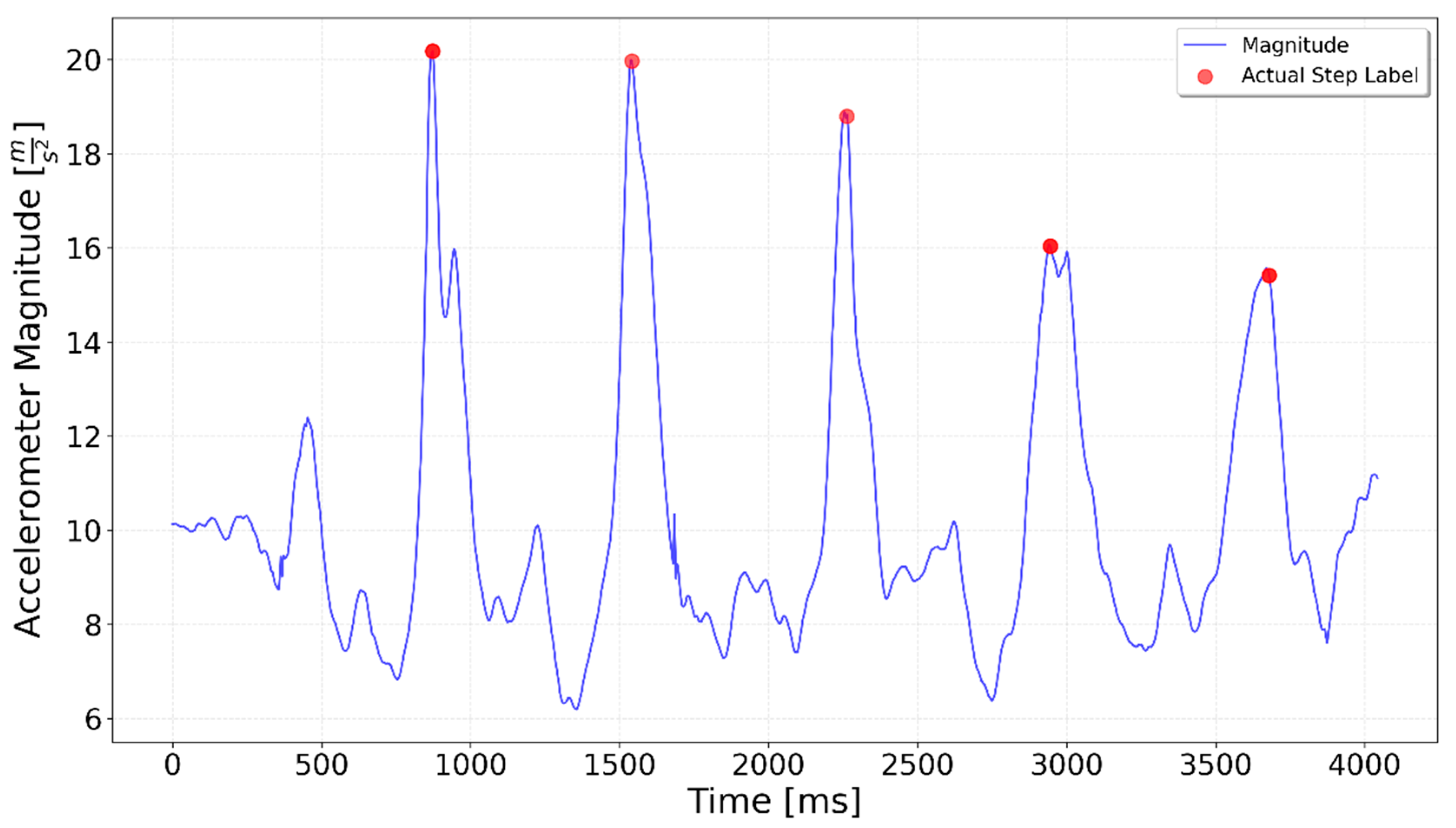

- Flat 10: 10 steps in a straight line. A baseline test to evaluate the accuracy of straightforward step counting;

- Interrupted 3 × 3: 3 steps, pause, 3 steps, pause, 3 steps. Designed to test the algorithm’s ability to handle interrupted walking patterns;

- Left Turn: 5 steps, left turn, 5 steps. Introduces a change in direction to assess the sensitivity of the step detection mechanism to turns;

- Right Turn: 5 steps, right turn, 5 steps. Similar to the previous test but involving a right turn to evaluate symmetry in directional changes;

- Stairs Transition: 5 steps up/down stairs, 3 flat steps, 5 steps. Designed to simulate walking up and down stairs with a transition to flat ground, testing the mechanism’s ability to adapt to elevation changes;

- Stairs: 5 steps up/down stairs. A simplified version of the previous test focused solely on elevation changes.
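The scenarios above can be written down as data, which makes the expected step count of each scripted walk explicit. The following is a minimal sketch, not the authors' tooling: the `Scenario` class and the segment labels are hypothetical, and it assumes that pauses and turns contribute no steps of their own. Only the step counts per segment come from the list.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class Scenario:
    """One scripted walk: a name plus ordered (segment label, step count) pairs."""
    name: str
    segments: Tuple[Tuple[str, int], ...]

    @property
    def expected_steps(self) -> int:
        # Ground-truth step count is the sum over all segments.
        return sum(steps for _, steps in self.segments)


# Assumption: "pause" and "turn" segments add zero steps.
# The final segment of Stairs Transition is not characterised in the list,
# so it is labelled "unspecified" here.
SCENARIOS = (
    Scenario("Flat 10", (("flat", 10),)),
    Scenario("Interrupted 3 × 3",
             (("flat", 3), ("pause", 0), ("flat", 3), ("pause", 0), ("flat", 3))),
    Scenario("Left Turn", (("flat", 5), ("left turn", 0), ("flat", 5))),
    Scenario("Right Turn", (("flat", 5), ("right turn", 0), ("flat", 5))),
    Scenario("Stairs Transition", (("stairs", 5), ("flat", 3), ("unspecified", 5))),
    Scenario("Stairs", (("stairs", 5),)),
)

for scenario in SCENARIOS:
    print(f"{scenario.name}: {scenario.expected_steps} expected steps")
```

Keeping the ground truth next to the scenario definition lets a recorded walk be checked against its script before it is used for training or evaluation.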

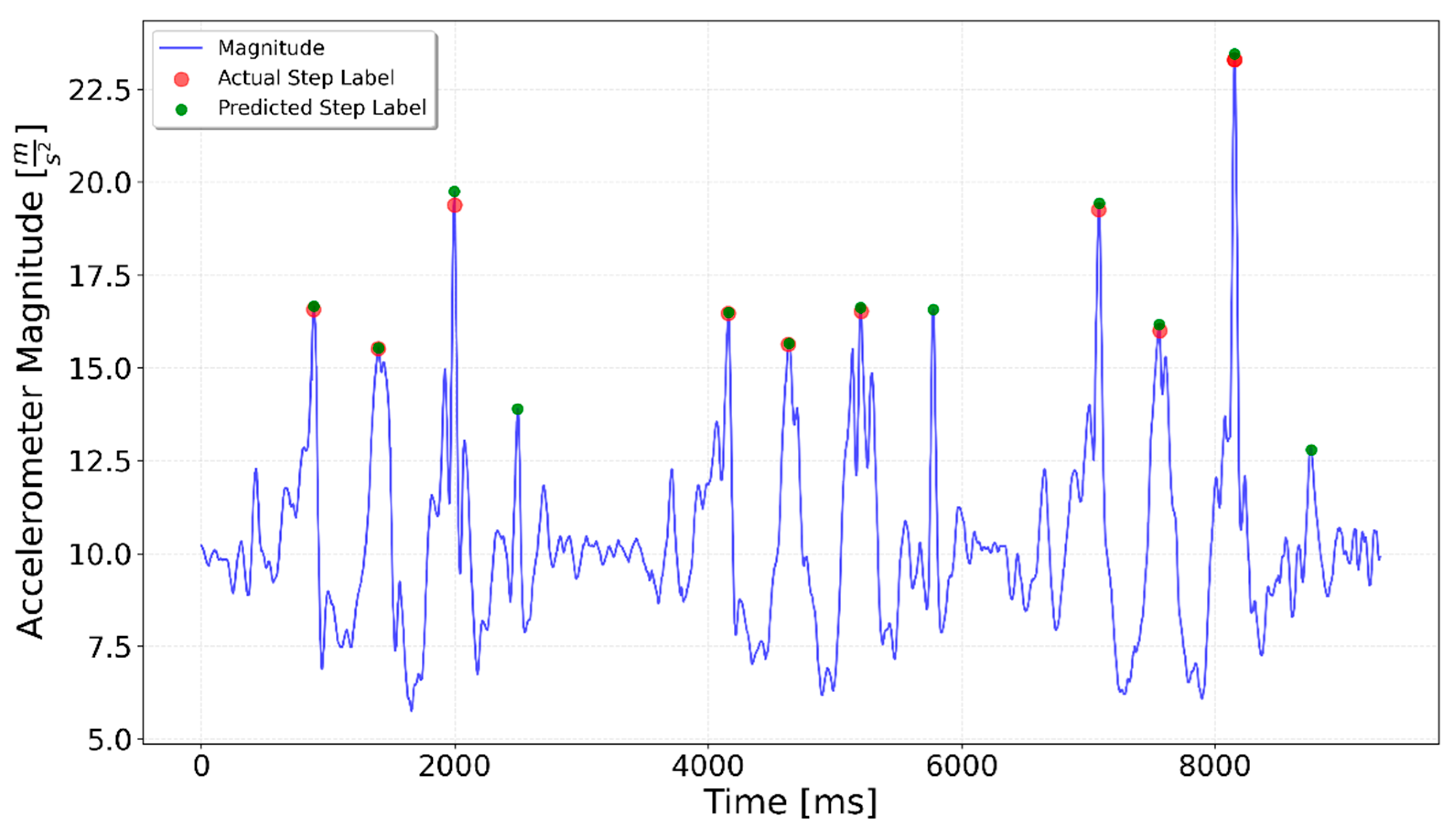

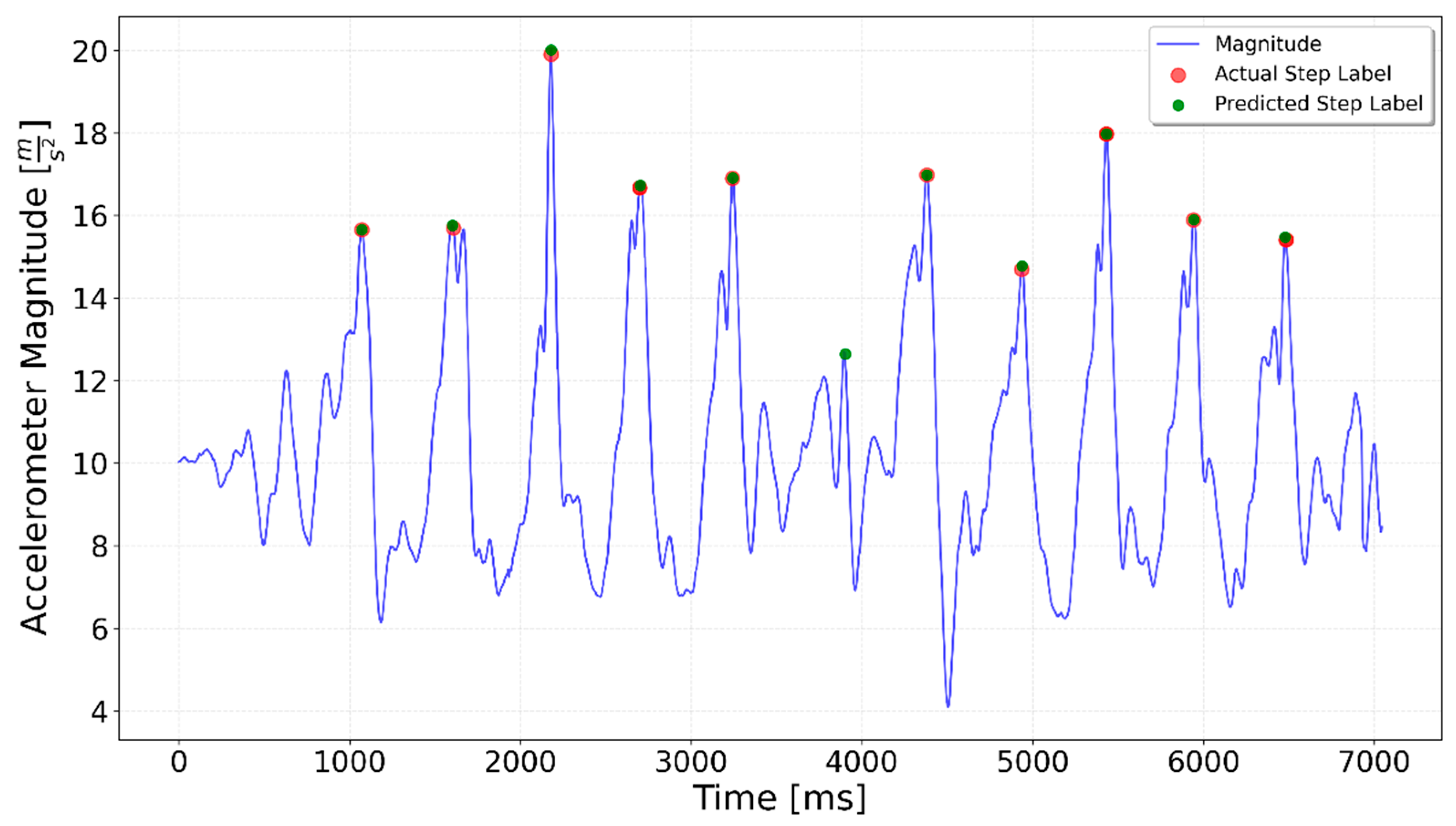

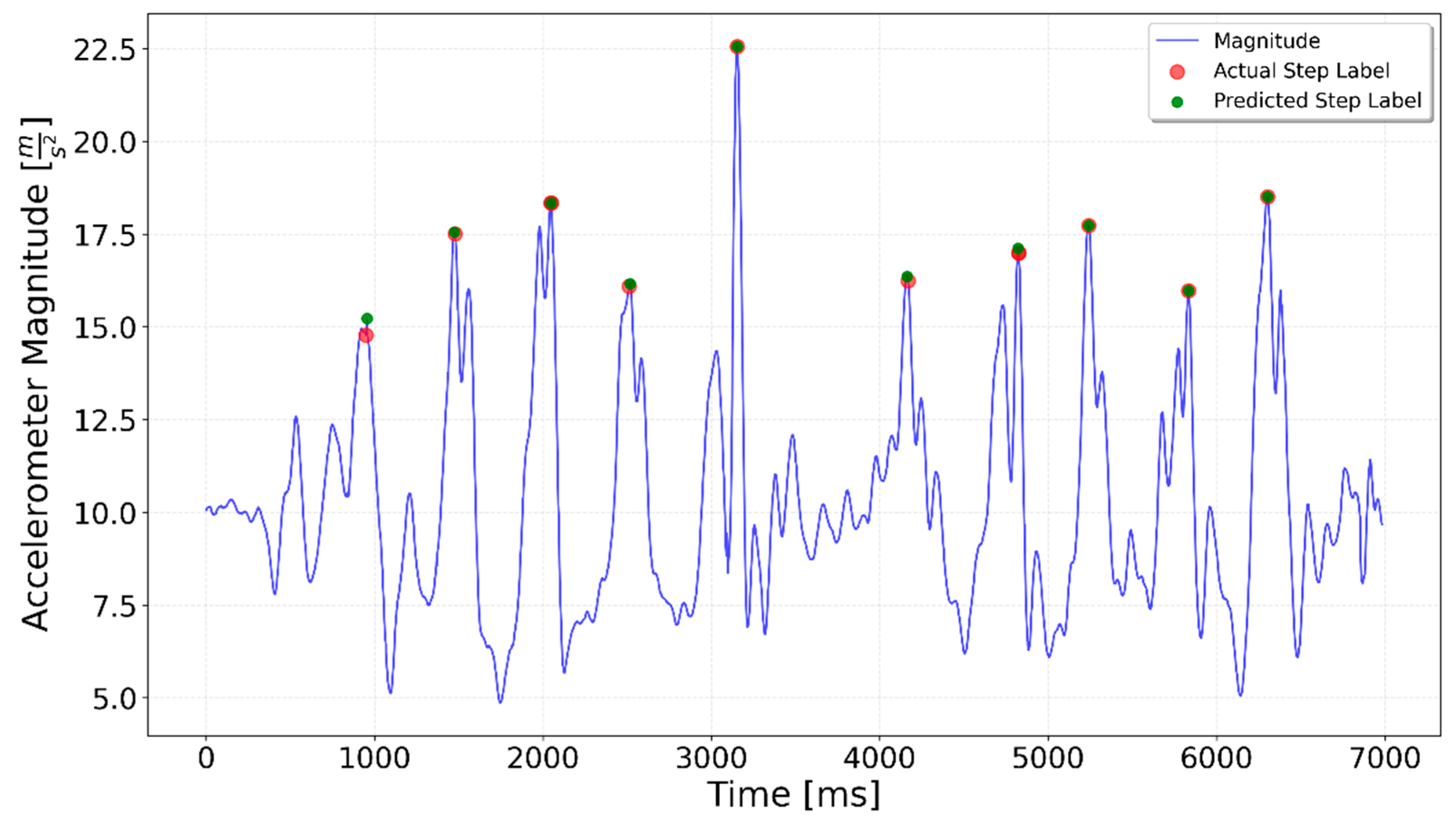

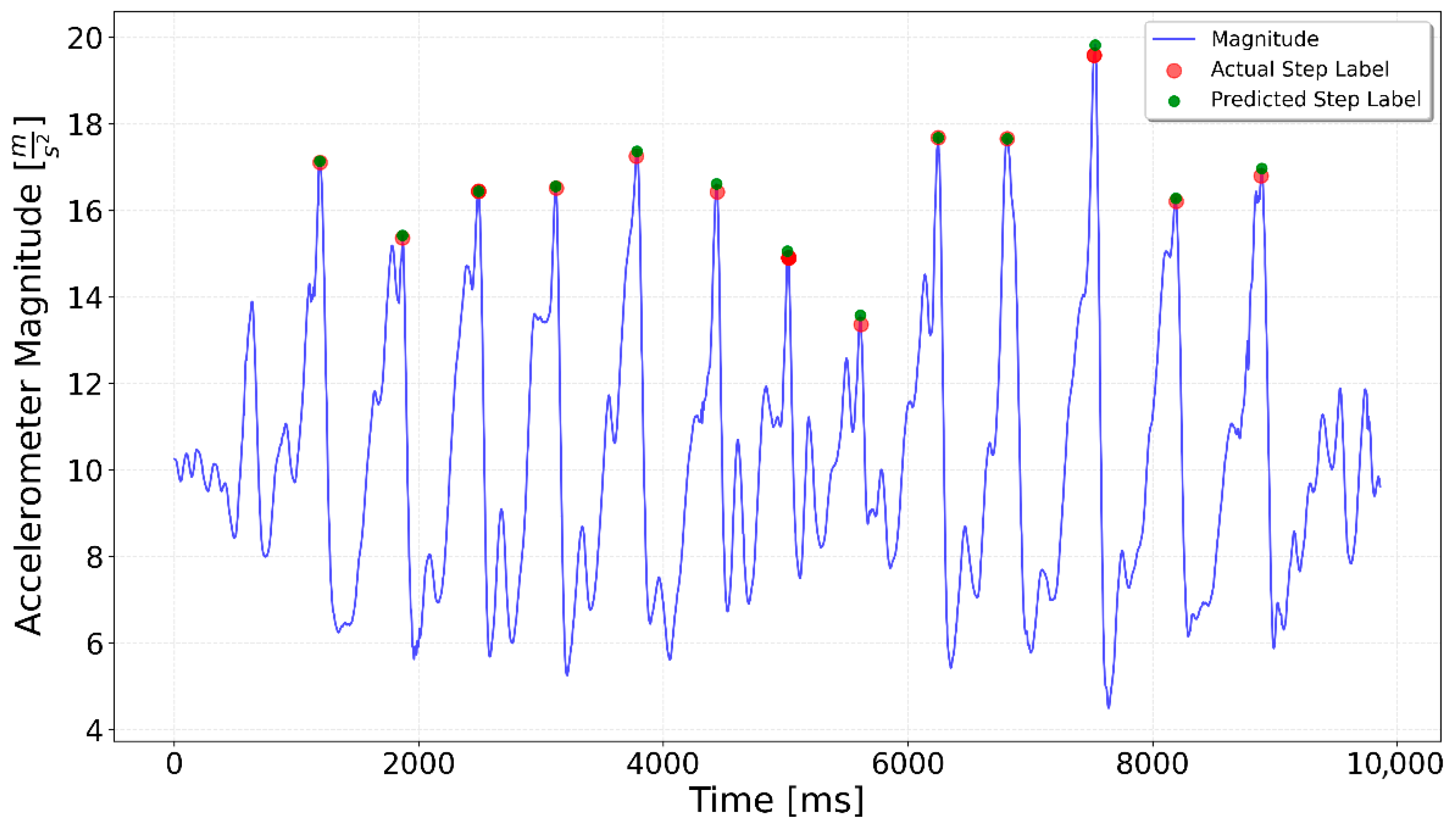

3. Results

4. Discussion

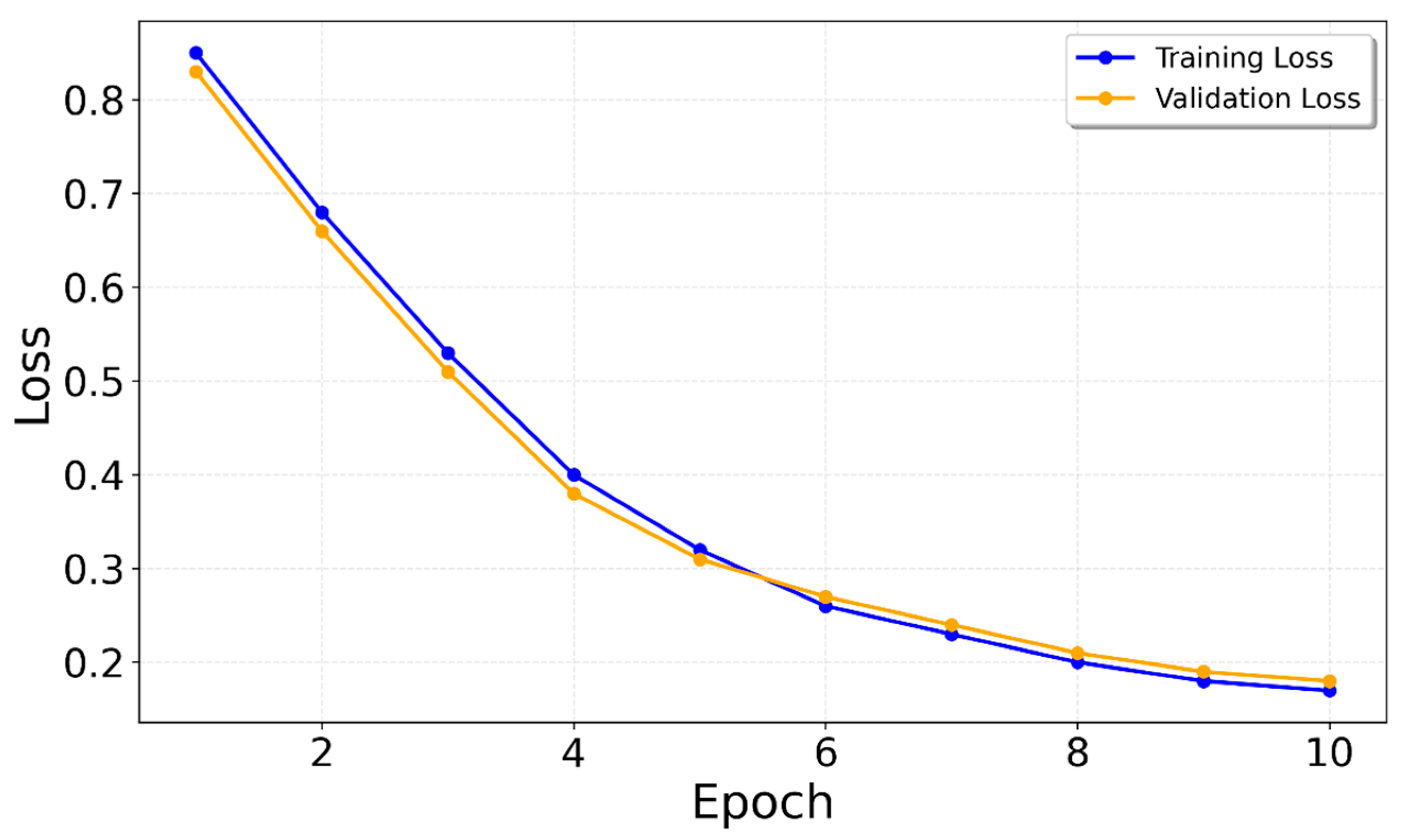

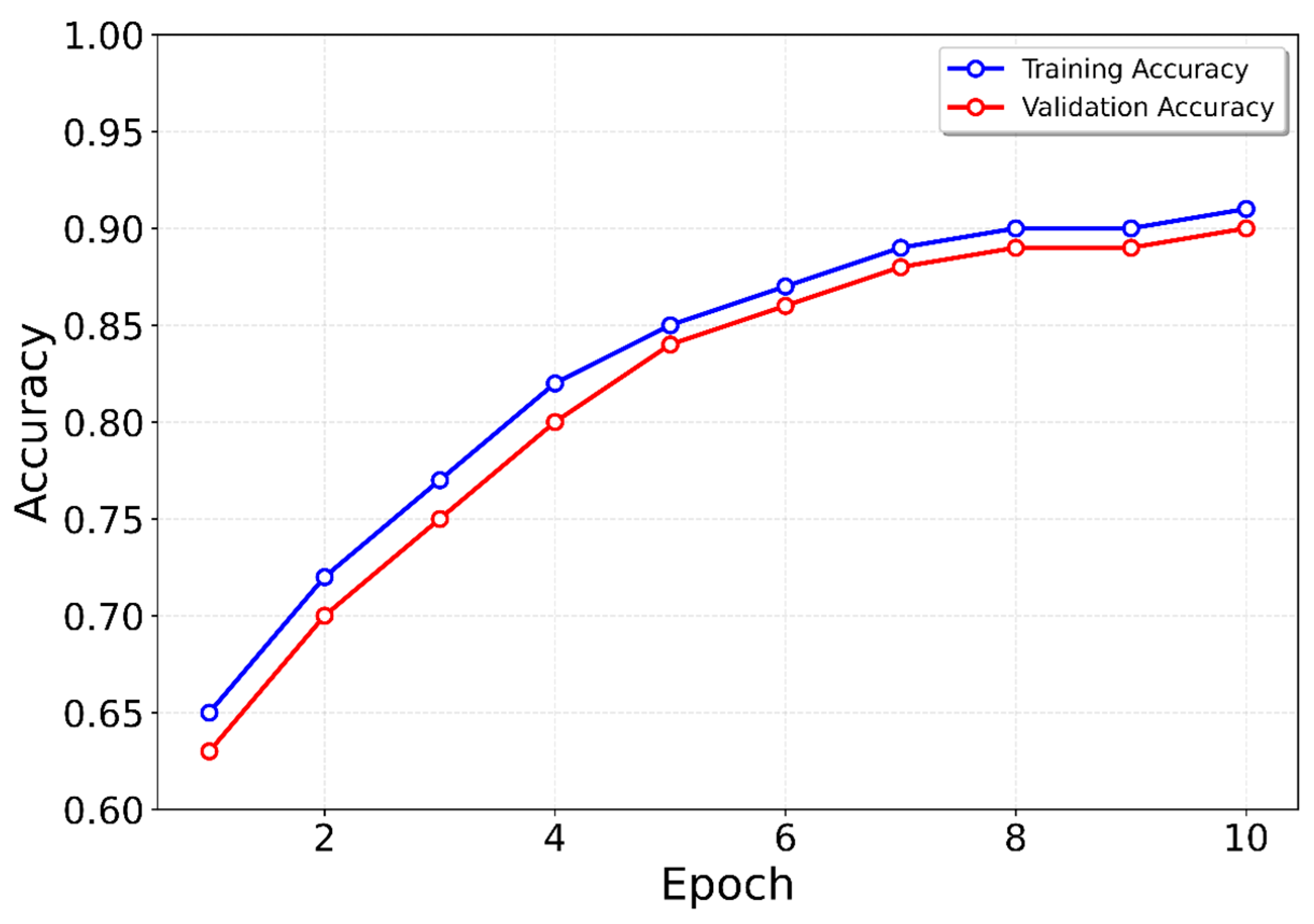

4.1. Generalized Network Approach

4.2. Specialized Network Approach

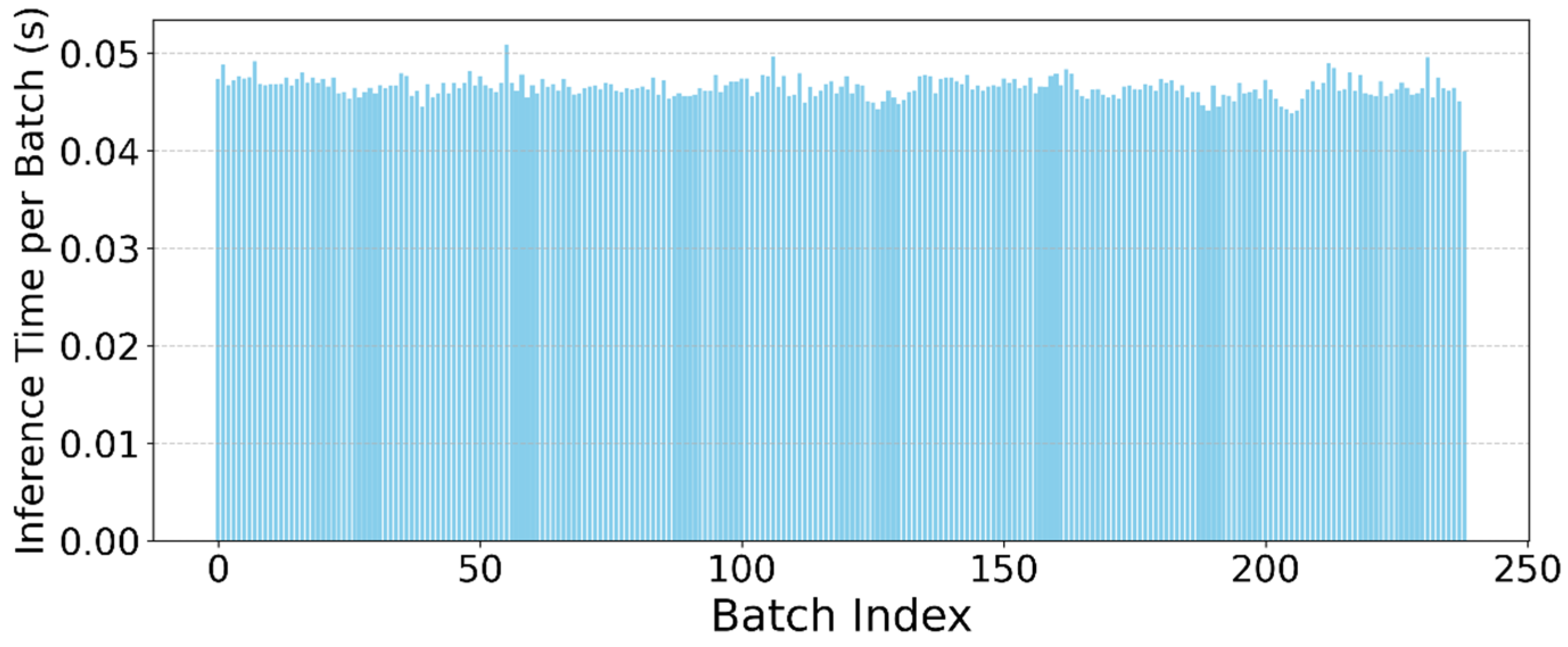

4.3. Algorithm Inference Efficiency Considerations

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| BLE | Bluetooth low energy |
| CEEMDAN-HT | Complete Ensemble Empirical Mode Decomposition with Adaptive Noise Hilbert Transform |
| CNN | Convolutional neural network |
| CSI | Channel state information |
| CPU | Central processing unit |
| GPS | Global positioning system |
| GPU | Graphics processing unit |
| GRU | Gated recurrent unit |
| IMU | Inertial measurement unit |
| IN | Indoor navigation |
| LSTM | Long short-term memory |
| MCCIF-SDE | Multicondition constraints and instantaneous frequency-based SD and estimation |
| PIR | Passive infrared |
| RF | Radio frequency |
| RSSI | Received signal strength indicator |
| SVM | Support vector machine |
| YOLO | You Only Look Once |


| Type | Real per Type | Detected per Type | Accuracy | Recall | F1-Score |
|---|---|---|---|---|---|
| Flat 10 | 104 | 95 | 0.93 | 0.85 | 0.89 |
| Left Turn | 107 | 103 | 0.94 | 0.91 | 0.92 |
| Right Turn | 103 | 100 | 0.96 | 0.93 | 0.95 |
| Downstairs Transition | 115 | 105 | 0.90 | 0.82 | 0.86 |
| Upstairs Transition | 116 | 109 | 0.91 | 0.85 | 0.88 |
| Upstairs | 114 | 104 | 0.92 | 0.84 | 0.88 |
| Downstairs | 102 | 103 | 0.93 | 0.94 | 0.93 |
| Interrupted 3 × 3 | 105 | 111 | 0.88 | 0.93 | 0.90 |
| Total | | | 0.93 | 0.88 | 0.90 |
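The Real and Detected columns of the table above also allow a simple count-level comparison. The sketch below computes a relative count error per activity type. This error measure and the `count_error` helper are illustrative assumptions, not metrics reported in the table; the counts themselves are copied from it.

```python
# (real, detected) step counts per activity type, copied from the table above.
COUNTS = {
    "Flat 10": (104, 95),
    "Left Turn": (107, 103),
    "Right Turn": (103, 100),
    "Downstairs Transition": (115, 105),
    "Upstairs Transition": (116, 109),
    "Upstairs": (114, 104),
    "Downstairs": (102, 103),
    "Interrupted 3 × 3": (105, 111),
}


def count_error(real: int, detected: int) -> float:
    """Relative difference between detected and real step counts."""
    return abs(detected - real) / real


for name, (real, detected) in COUNTS.items():
    print(f"{name}: {count_error(real, detected):.1%}")

total_real = sum(real for real, _ in COUNTS.values())
total_detected = sum(detected for _, detected in COUNTS.values())
print(f"Total: {total_real} real, {total_detected} detected")
```

A count error near zero does not by itself imply correct detection, because missed steps and spurious detections can cancel out. The Downstairs row illustrates this: 103 steps are detected against 102 real ones, yet Recall is 0.94. This is why the per-step metrics in the remaining columns are needed alongside the counts.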

| Type | Real per Type | Detected per Type | Accuracy | Recall | F1-Score |
|---|---|---|---|---|---|
| Flat 10 | 104 | 104 | 0.99 | 0.99 | 0.99 |
| Left Turn | 107 | 108 | 0.97 | 0.98 | 0.97 |
| Right Turn | 103 | 100 | 0.97 | 0.94 | 0.95 |
| Downstairs Transition | 115 | 114 | 0.97 | 0.96 | 0.96 |
| Upstairs Transition | 116 | 112 | 0.96 | 0.93 | 0.94 |
| Upstairs | 114 | 113 | 0.97 | 0.96 | 0.96 |
| Downstairs | 102 | 102 | 0.95 | 0.95 | 0.95 |
| Interrupted 3 × 3 | 105 | 106 | 0.95 | 0.96 | 0.95 |
| Total | | | 0.96 | 0.96 | 0.96 |
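The F1-Score column in both tables is numerically consistent with the harmonic mean of the Accuracy and Recall columns, up to rounding of the two-decimal inputs. The check below is a sketch under that assumption: treating the Accuracy column as the precision-like term is an inference from the numbers, not something the tables state, and `f1_from` is a hypothetical helper. The rows are copied from the table directly above.

```python
def f1_from(precision_like: float, recall: float) -> float:
    """Harmonic mean of two rates, the usual form of the F1-score."""
    return 2 * precision_like * recall / (precision_like + recall)


# (type, accuracy, recall, reported F1) copied from the table directly above.
ROWS = [
    ("Flat 10", 0.99, 0.99, 0.99),
    ("Left Turn", 0.97, 0.98, 0.97),
    ("Right Turn", 0.97, 0.94, 0.95),
    ("Downstairs Transition", 0.97, 0.96, 0.96),
    ("Upstairs Transition", 0.96, 0.93, 0.94),
    ("Upstairs", 0.97, 0.96, 0.96),
    ("Downstairs", 0.95, 0.95, 0.95),
    ("Interrupted 3 × 3", 0.95, 0.96, 0.95),
    ("Total", 0.96, 0.96, 0.96),
]

# Largest gap between the recomputed and the reported F1 over all rows.
max_gap = max(abs(f1_from(acc, rec) - f1) for _, acc, rec, f1 in ROWS)

for name, acc, rec, f1 in ROWS:
    print(f"{name}: recomputed {f1_from(acc, rec):.3f}, reported {f1:.2f}")
print(f"Largest gap: {max_gap:.4f}")
```

Gaps below 0.01 are what one would expect when the inputs are themselves rounded to two decimals, so the check supports the reading but cannot confirm how the metrics were originally defined.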

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zieliński, M.; Chybicki, A.; Borsuk, A. An Improved Step Detection Algorithm for Indoor Navigation Problems with Pre-Determined Types of Activity. Sensors 2025, 25, 6358. https://doi.org/10.3390/s25206358