1. Introduction

In 2019, China set the goal of building a strong transportation country by 2035, with the major cities of the country reachable within 3 h. To achieve this goal, trains must run at higher operational speeds, and a more comprehensive safety monitoring system is needed for train operations. However, high-speed railways (with speeds exceeding 200 km/h) involve multiple advanced technologies and face safety challenges at multiple levels [1,2,3]. Specifically, the extensive track lines span complex and dynamic environments, and the resulting challenges can be categorised into natural and external factors. Natural factors include extreme weather conditions, such as heavy snowstorms and strong winds, as well as geological disasters. In addition, external threats, such as foreign object intrusions, falling debris, and rockfalls from high slopes in mountainous terrain, further add risk to the system.

To address these safety challenges, cameras can provide real-time monitoring and rapid response to various potential safety threats. Furthermore, they offer wide coverage, uninterrupted operation in all weather conditions, and over-the-horizon detection [4], and they reduce the need for frequent on-site inspections by personnel. Compared with trackside cameras, on-board cameras are more expensive for the same image clarity because of the high train speed, and they have scarcer computing resources in their operating environment. However, existing video systems generate a large amount of data, and processing these videos through manual review and post hoc review would incur substantial labour and material costs. Computer vision now offers new solutions to this challenge [4,5]. Its powerful data analysis capabilities enable intelligent video analysis, helping to ensure the safe operation of trains in harsh environments.

In practice, most of the data obtained are unlabelled. Moreover, owing to the particular operating conditions of high-speed trains, it is impossible to generate or collect enough foreign object intrusion videos, which leads to data imbalance [6]. Object detection usually requires a large amount of precisely labelled data, whereas weakly supervised anomaly detection [7] requires only coarse (video-level) labels, and unsupervised anomaly detection does not require labelled data at all. For track scenes, these approaches greatly reduce the cost of detecting a large number of anomalies. Because foreign object intrusions in track environments are unpredictable, weakly supervised and unsupervised methods are better suited to railway applications: they generalise better to new anomalies without prior knowledge of all possible intrusion types. The scientific objective of this paper is to develop an intelligent vision system for real-time monitoring of railway perimeter intrusion. By integrating a dual-framework approach of weakly supervised video anomaly detection and unsupervised foreground segmentation, the system aims to overcome the core challenges of data annotation cost, adaptability to complex environments, and real-time processing efficiency. The main contributions of this paper are as follows.

- (1) Based on the X3D [8] network, a novel approach is proposed that integrates the non-local module [9] and multiscale temporal attention. It combines the Top-K sample selection strategy with amplitude score and amplitude feature loss functions to sharpen the classification boundary between normal and abnormal samples. In addition, a time-smoothing constraint is incorporated to ensure consistent detection across consecutive video frames.

- (2) An improved foreground segmentation algorithm based on the Variational Autoencoder (VAE) [10] is proposed. It incorporates spatial position encoding to improve the accuracy of target localisation. The down/upsampling modules are optimised to improve the efficiency of image feature extraction, and the model size is reduced, which accelerates computation.

- (3) The proposed foreground segmentation algorithm adopts a pixel-level background weight distribution and incorporates it into the design of the loss function, jointly optimising background fidelity and noise robustness.

The remaining sections of this paper are organised as follows. Section 2 reviews vision-based methods for detecting foreign object intrusion on railway tracks, video anomaly detection methods, and the current state of background extraction and foreground segmentation. Section 3 describes the weakly supervised video anomaly detection model and the unsupervised foreground segmentation model in detail. Section 4 discusses the ablation and comparative experiments. Finally, Section 5 summarises the paper and outlines directions for future work.

2. Related Work

Vision-based technologies for foreign object intrusion detection encompass a diverse array of methods, which can be broadly categorised into traditional vision detection algorithms and deep learning-based detection algorithms. Traditional vision detection includes motion difference or background modelling techniques [11], weighted classification approaches that integrate scene priors and motion patterns, and methods based on feature description and multiscale analysis [12,13]. From the perspective of motion difference, Sehchan et al. [11] develop a multicamera monitoring system for real-time observation of platform areas that uses frame differencing to detect intrusion by persons or obstacles. However, this method is sensitive to environmental changes, which leads to high false alarm rates, and the choice of threshold significantly affects accuracy. Regarding feature description and multiscale analysis, Thanh et al. [13] introduce a real-time pedestrian detection scheme for embedded vision systems. The scheme uses a histogram of oriented gradients (HOG) with selective search to reduce processing time, narrows the candidate region search via adaptive Gaussian mixture model (AGMM) background subtraction, and employs approximate HOG computation for speed. Li et al. [12] propose an automatic intrusion detection algorithm that takes advantage of multiscale images and dynamic candidate regions. Changes in the grey projection curve are analysed to screen candidate regions, and targets are accurately extracted from high-resolution images using background differencing. Comparative experiments on three railway monitoring videos demonstrate the efficiency and accuracy of the method in real-time detection.
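To make the threshold sensitivity of frame differencing concrete, the sketch below flags pixels whose grey level changes by more than a threshold between two frames and raises an alarm when enough pixels change. It is a generic illustration, not the system of Sehchan et al.; the function names, `threshold`, and `min_pixels` are hypothetical, and frames are assumed to be single-channel lists of lists.

```python
def frame_difference_mask(prev, curr, threshold):
    """Binary mask: 1 where the absolute grey-level change exceeds `threshold`."""
    return [[1 if abs(c - p) > threshold else 0 for p, c in zip(prow, crow)]
            for prow, crow in zip(prev, curr)]


def intrusion_alarm(prev, curr, threshold, min_pixels):
    """Return (alarm, changed_pixel_count) for a pair of consecutive frames."""
    mask = frame_difference_mask(prev, curr, threshold)
    changed = sum(map(sum, mask))
    return changed >= min_pixels, changed


prev = [[10, 10, 10], [10, 10, 10]]
curr = [[10, 80, 10], [10, 90, 12]]
print(intrusion_alarm(prev, curr, threshold=30, min_pixels=2))  # alarm raised
print(intrusion_alarm(prev, curr, threshold=85, min_pixels=2))  # same event missed
```

The same pair of frames triggers an alarm at a threshold of 30 but not at 85, which is the sensitivity the paragraph above attributes to this family of methods.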

Track foreign object intrusion detection technologies based on deep learning can generally be divided into two categories: detection based on 2D images [14,15,16,17] and detection based on 3D vision [18,19,20,21]. Detection based on 2D images is further classified into static image analysis [16] and video sequence analysis [22]. Static image analysis employs techniques such as image segmentation [23] and object detection algorithms [24], such as Faster R-CNN and YOLO, to accurately identify and localise foreign objects on tracks. Video sequence analysis uses approaches such as optical flow and background differencing; by analysing consecutive video frames, it effectively captures transient foreign object intrusion events in the track area [25,26,27]. In 3D vision, detection focuses on point cloud processing and stereo vision. Researchers combine 3D LiDAR and other sensing devices to gather point cloud data and employ deep learning architectures such as PointNet [28] and PointCNN [29] to precisely position foreign objects in three-dimensional space. Stereo vision uses binocular or multi-camera systems to construct depth images from parallax calculations, and deep learning is then used to improve the identification of foreign objects. Qi et al. [30] improve detection by preprocessing radar data to filter out interference while retaining targets within a safe range, effectively combining radar and machine vision for joint obstacle detection. Jin et al. [15] introduce a microwave radar-based method to address the limitations of machine vision in real-time performance and environmental adaptability. Their technique involves offline error correction, the construction of a clearance area in radar coordinates, and tracking of moving targets, achieving a comprehensive detection rate of around 90% in complex railway environments. Although computer vision applications in track intrusion detection have made significant strides across various technologies, current research often overlooks the complexity of dataset construction and its impact on model generalisation. In this paper, weakly supervised and unsupervised techniques are therefore used to streamline dataset production and to improve real-time response, detection accuracy, and adaptability to challenging environments.

Video anomaly detection is a key technology for identifying and locating abnormal events in surveillance videos [31]. It has a wide range of applications, such as security monitoring, autonomous driving, and industrial production monitoring. Track perimeter foreign object intrusion detection is, in essence, also a video anomaly detection task. According to the data and methods used, video anomaly detection [32] can be roughly divided into four categories: supervised, unsupervised, weakly supervised, and self-supervised learning. The following paragraphs review representative research in the unsupervised, weakly supervised, and self-supervised categories.

In the field of unsupervised video anomaly detection, Generative Cooperative Learning (GCL) [33] exploits the low frequency of abnormal events to establish cross-supervision between a generator and a discriminator, improving performance without the need for labelled data. MyeongAh et al. [34] develop the Implicit Two-Path Autoencoder (ITAE), which uses two encoders to capture appearance and motion features; combined with a normalising flow model, it effectively models the distribution of normal features to detect anomalies. Che et al. [35] introduce an adversarial 3D convolutional autoencoder within a joint learning framework to learn deep representations of normal spatiotemporal patterns and their correlations, demonstrating superior performance on multiple public datasets.

In the field of weakly supervised video anomaly detection, Didik et al. [23] propose a method that combines relational feature extraction, self-attention, and conditional random fields to capture spatiotemporal interactions, multiscale dependencies, and local/global relationships among the CNN features of videos. Li et al. [36] design a scale-aware learning method that incorporates a spatial relationship module and multiscale patch aggregation. It effectively identifies local abnormal patterns and captures spatiotemporal dynamics, achieving state-of-the-art results on the UCF-Crime and ShanghaiTech datasets.

In the field of self-supervised video anomaly detection, Wu et al. [37] propose the self-supervised sparse representation framework (S3R), which combines dictionary learning and self-supervision to distinguish normal and abnormal segment features in both one-class and weakly supervised settings. Wang et al. [22] develop a spatiotemporal puzzle pretext task that decouples the spatial and temporal dimensions and trains models on puzzles of varying difficulty. This approach effectively captures subtle spatiotemporal differences in anomalies and outperforms traditional reconstruction-based and prediction-based methods, particularly on the ShanghaiTech dataset.

Current video anomaly detection methods primarily focus on anomalies in foreground appearance and motion patterns and pay insufficient attention to the contextual semantics of the foreground. This results in high false-positive rates when anomalies must be defined across diverse scenes and behaviours. Feature extraction should therefore place more emphasis on the spatiotemporal information in videos. These gaps motivate this paper, particularly the use of video anomaly detection methods to address track intrusion detection.

Background extraction and foreground segmentation technologies aim to accurately separate objects of interest (i.e., the foreground) from the relatively fixed background or non-key areas in images or videos [38]. These technologies have been widely applied in many real-world scenarios, covering frontier fields such as security monitoring, autonomous driving, and remote sensing from unmanned aerial vehicles [39,40,41,42]. According to their research methods, background extraction and foreground segmentation technologies can be divided into two categories: traditional methods and deep learning-based methods [43,44,45,46,47,48]. The following paragraphs review the performance and achievements of these methods in research and practical applications.

Among traditional methods, the ViBe algorithm [49] compares the current value of each pixel with its historical samples. It adaptively updates the background model by randomly selecting which stored sample to replace and propagates updates to neighbouring pixels for robust background modelling. St-Charles et al. [50] design a method named PAWCS, which uses a robust dictionary model based on colour and local binary features and self-adjusts its parameters through a feedback mechanism to adapt to complex conditions. St-Charles [51] later proposes a universal pixel-level segmentation method called SuBSENSE. By fusing spatiotemporal binary features with colour information, it detects camouflaged foreground objects more accurately while ignoring most illumination changes. The method uses a pixel-level feedback loop to dynamically adjust internal parameters without manual intervention.
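The ViBe update scheme summarised above (sample matching, random conservative update, and spatial propagation) can be sketched for a single-channel image as follows. This is a simplified illustration, not the reference implementation: initialisation copies each pixel's first value instead of sampling its neighbourhood, and the default parameters are the values commonly reported for ViBe, treated here as assumptions. The class name is hypothetical.

```python
import random


class ViBeSketch:
    """Simplified ViBe-style background model; frames are lists of lists of grey levels."""

    NEIGHBOURS = [(-1, -1), (-1, 0), (-1, 1), (0, -1), (0, 1), (1, -1), (1, 0), (1, 1)]

    def __init__(self, first_frame, n_samples=20, radius=20, min_matches=2, phi=16, seed=0):
        self.rng = random.Random(seed)
        self.h, self.w = len(first_frame), len(first_frame[0])
        self.radius, self.min_matches, self.phi = radius, min_matches, phi
        # Simplification: all samples start as the pixel's own first value
        # (original ViBe draws initial samples from the 8-neighbourhood).
        self.samples = [[[first_frame[i][j]] * n_samples for j in range(self.w)]
                        for i in range(self.h)]

    def _replace_random_sample(self, i, j, value):
        model = self.samples[i][j]
        model[self.rng.randrange(len(model))] = value

    def apply(self, frame):
        """Return a foreground mask (1 = foreground) and update the model in place."""
        mask = [[0] * self.w for _ in range(self.h)]
        for i in range(self.h):
            for j in range(self.w):
                value = frame[i][j]
                # Count historical samples within `radius` of the current value.
                matches = sum(1 for s in self.samples[i][j] if abs(s - value) < self.radius)
                if matches < self.min_matches:
                    mask[i][j] = 1  # foreground pixels never update the model
                    continue
                # Conservative random update of the pixel's own model (probability 1/phi).
                if self.rng.randrange(self.phi) == 0:
                    self._replace_random_sample(i, j, value)
                # Spatial propagation into a random neighbour's model (probability 1/phi).
                if self.rng.randrange(self.phi) == 0:
                    di, dj = self.rng.choice(self.NEIGHBOURS)
                    ni = min(max(i + di, 0), self.h - 1)
                    nj = min(max(j + dj, 0), self.w - 1)
                    self._replace_random_sample(ni, nj, value)
        return mask


model = ViBeSketch([[50] * 3 for _ in range(3)])
print(model.apply([[50, 50, 50], [50, 200, 50], [50, 50, 50]]))
```

Only pixels classified as background feed the model, so a stationary intruder is absorbed only gradually through neighbour propagation, which is the trade-off ViBe makes for robustness.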

In the field of deep learning, to address the challenges of segmenting moving objects in complex environments, Long et al. [52] propose two architectures for moving object segmentation: one employs a triple encoder to capture multiscale information, and the other integrates a feature pooling module to handle scene complexity. Both use transposed convolutions for precise segmentation in the decoding stage. Tezcan et al. [53] introduce spatiotemporal data augmentation techniques to address the insufficient generalisation of background subtraction algorithms to unseen videos, and apply them to the video-agnostic supervised background subtraction algorithm BSUV-Net, upgrading it to version 2.0. Yang et al. [54] combine multiscale spatiotemporal features using a novel layer structure to improve the detection of moving objects in video sequences. Akilan et al. [55] propose a 3D CNN-LSTM model that adopts a dual encoding and slow decoding strategy to improve the quality of the foreground representation. By making full use of spatiotemporal cues, it mitigates the inaccurate foreground boundaries caused by single-frame detection.

Although background extraction and foreground segmentation technologies have made significant progress in various scenarios, some challenges and deficiencies remain. For example, these methods may show limitations when dealing with drastic illumination changes, complex background dynamics, and large-scale scenes. Although there have been breakthroughs in moving object segmentation in complex environments, problems such as the large demand for training samples and limited generalisation ability remain. A comparison of the studies reviewed above is shown in Table 1.

3. Materials and Methods

In the detection of railway video anomalies, two key issues must be addressed: one is the timing of anomalies in the video stream and the other is the category and location of these anomalies. To address the first issue, a weakly supervised video anomaly detection method is proposed. After the specific anomalous video frames are obtained, an unsupervised foreground segmentation technique is introduced to locate the anomalies within the image.

3.1. Weakly Supervised Video Anomaly Detection Based on the Trackside Camera

In railway environments, foreign object intrusion events are rare and diverse, which makes it costly to obtain a large number of accurately labelled abnormal samples. Weakly supervised learning requires only video-level labels to train the model, which significantly reduces the labelling burden while retaining the ability to generalise to unknown anomaly types. Therefore, this paper adopts a weakly supervised video anomaly detection method.

To address the issues of complex dataset annotation and unknown target detection, the proposed framework for weakly supervised railway foreign object intrusion detection based on video surveillance is shown in Figure 1. It comprises the following components: video input settings; a video preprocessing module; a backbone pre-trained on large video datasets; an attention enhancement module, which includes a non-local module for establishing global dependencies and a multiscale temporal attention module for establishing local temporal dependencies; a linear classifier module; Top-K selection; and a loss function comprising the amplitude score loss, the amplitude feature loss, and the time-smoothing loss.

3.1.1. Input Mask and Far-Point Information Amplification

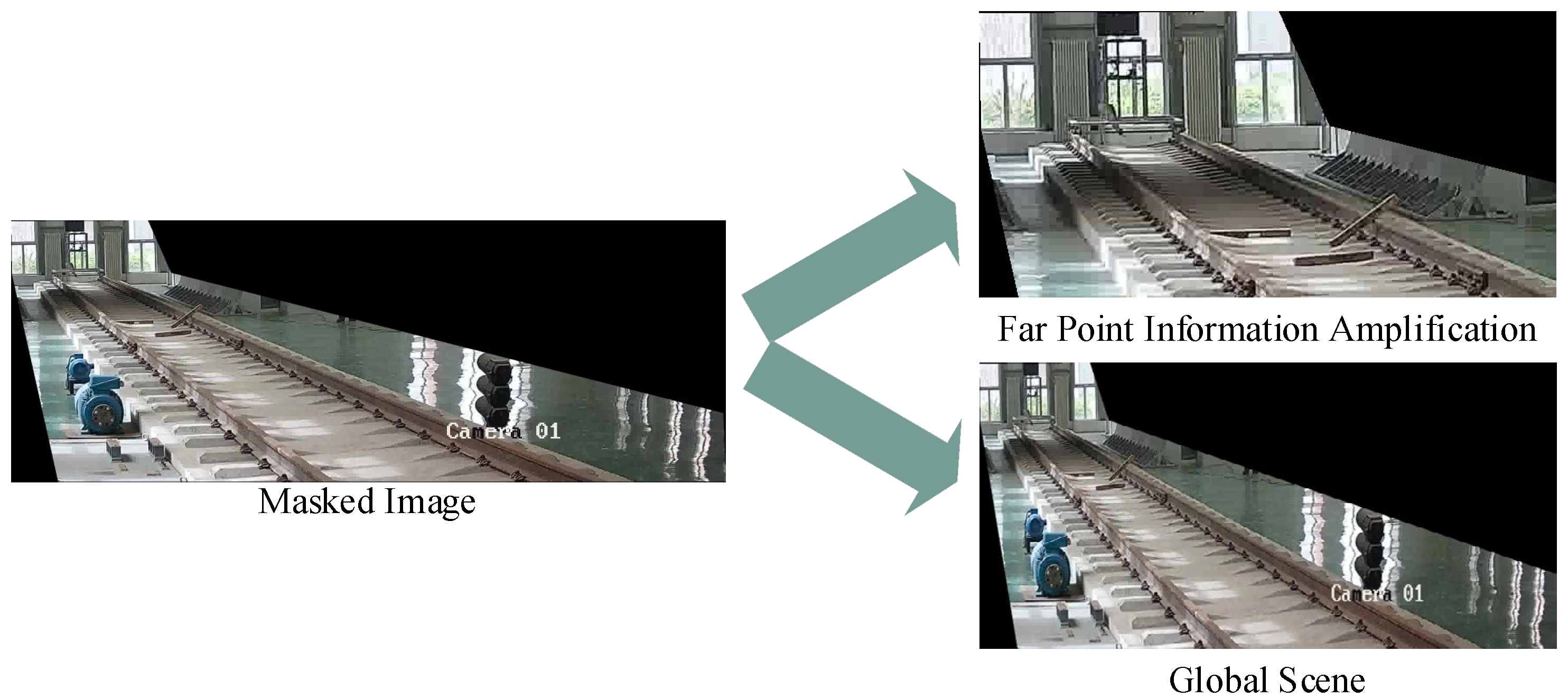

Railway scenes often contain considerable interference, so video preprocessing is required to highlight key areas within the railway clearance. This preprocessing involves image mask cropping and far-point information amplification, as shown in Figure 2. Image mask cropping uses image masks based on the track boundaries to select regions of interest (ROIs), and areas outside the ROIs are set to the background colour to simplify subsequent processing. For far-point information amplification, local images of the far-point area of the track (the area of the image where targets are far from the camera) are cropped separately and combined with the uncropped complete images to form sets of complementary image samples. This dual-perspective approach preserves both the macro-context and the micro-details: the cropped samples highlight detailed features of distant targets in the current frame, while the complete images reflect the global scene, providing an additional perspective for anomaly detection and improving detection efficiency. Combining the two image types thus addresses the challenge of detecting small or distant objects in complex railway environments.
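The two preprocessing steps described above can be sketched as follows. This is an illustration under stated assumptions: the ROI mask and the far-point box `(top, left, height, width)` are supplied by hand, the background colour is 0, and the far-point crop is resized to full frame size with nearest-neighbour interpolation; the paper does not specify these details, and all function names are hypothetical.

```python
def apply_roi_mask(frame, roi_mask, background=0):
    """Keep pixels inside the ROI (mask value 1); set the rest to a background colour."""
    return [[px if m else background for px, m in zip(frow, mrow)]
            for frow, mrow in zip(frame, roi_mask)]


def crop(frame, top, left, height, width):
    """Cut a rectangular window out of a list-of-lists image."""
    return [row[left:left + width] for row in frame[top:top + height]]


def upscale_nearest(img, out_h, out_w):
    """Nearest-neighbour resize (assumed; any interpolation would do)."""
    in_h, in_w = len(img), len(img[0])
    return [[img[i * in_h // out_h][j * in_w // out_w] for j in range(out_w)]
            for i in range(out_h)]


def make_complementary_pair(frame, roi_mask, far_box):
    """Return (masked full frame, enlarged far-point crop) as one complementary sample."""
    masked = apply_roi_mask(frame, roi_mask)
    far = crop(masked, *far_box)
    return masked, upscale_nearest(far, len(frame), len(frame[0]))


frame = [[1, 2, 3, 4], [5, 6, 7, 8], [9, 10, 11, 12], [13, 14, 15, 16]]
roi = [[0, 1, 1, 1] for _ in range(4)]  # hypothetical track ROI
full, far = make_complementary_pair(frame, roi, far_box=(0, 2, 2, 2))
```

Enlarging the far-point crop gives distant objects roughly as many pixels in the second view as nearby objects occupy in the first, which is the intuition behind pairing the two images.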

3.1.2. Backbone Network and Attention Modules

The backbone X3D is pre-trained by Kinetics-400 (K400), a large-scale action recognition benchmark dataset. Meanwhile, non-local attention is adopted to process the feature maps obtained by the X3D. To ensure clarity and reproducibility, the framework is constructed from publicly available components: the X3D backbone [

8] and the non-local attention module [

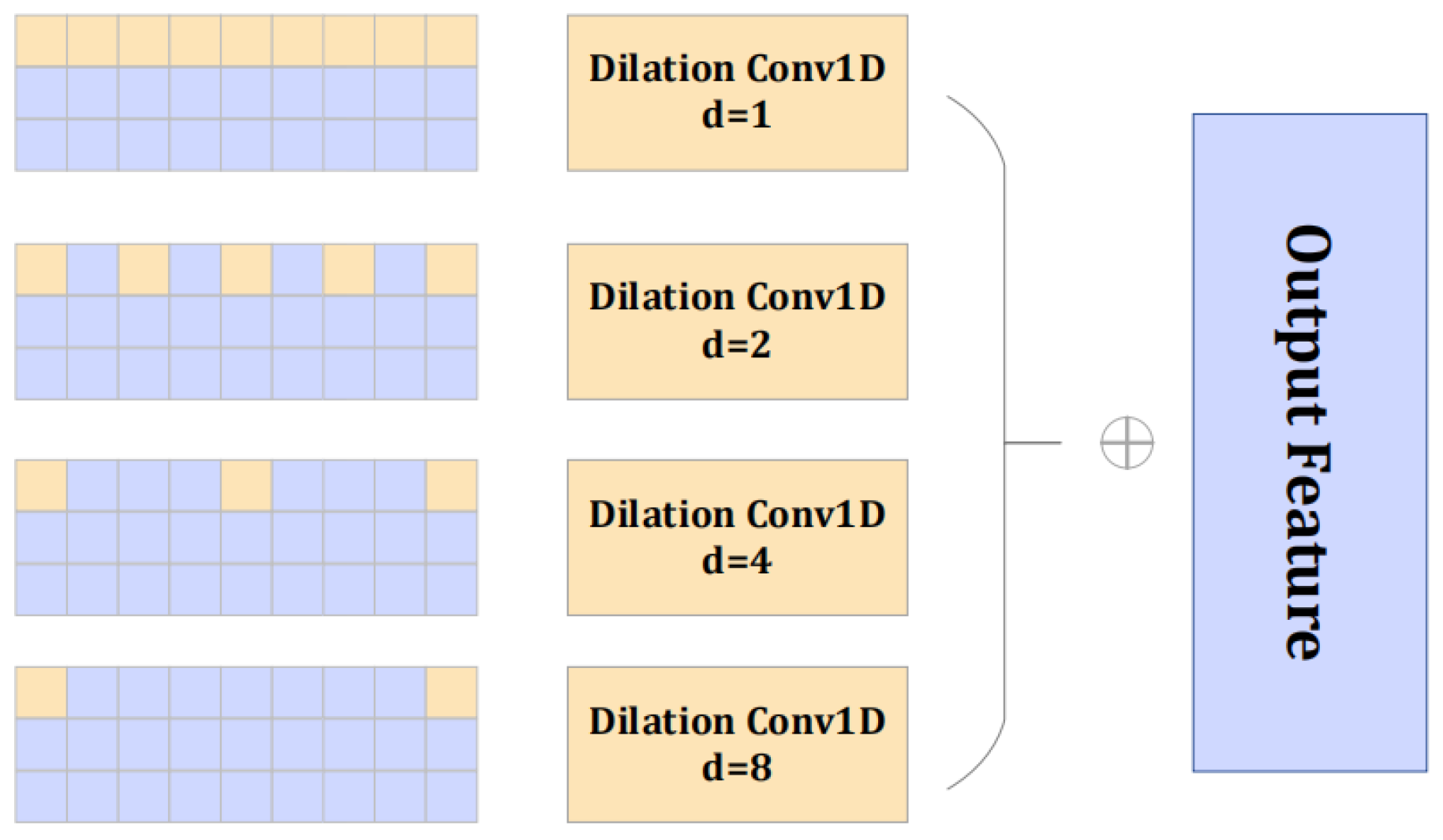

9] are implemented according to their original publications. Our key contribution lies in the novel integration of these components with a dedicated multiscale temporal attention module and a tailored loss function, detailed in the following sections. These modifications are modular and can be implemented within standard deep-learning frameworks such as PyTorch (The version number is 1.13.0). However, in the anomaly detection task, the local correlation in the temporal dimension is also of indisputable importance. For this reason, a pyramid structure is introduced, and one-dimensional dilated convolution is used to deeply explore the multiscale characteristics of video segments in the time dimension. The multiscale temporal attention module helps learn multiscale feature from the pre-extracted features

. Given the features

, the kernel

of the one-dimensional dilated convolution operator, where

,

,

. The multiscale temporal attention can be represented by Equation (1) and

Figure 3.

where

represents the index of the dilated convolution operator,

represents the output feature after applying the dilated convolution in the temporal dimension, and the different dilated convolution operators with different are

.
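The pyramid of one-dimensional dilated convolutions described above can be sketched in plain Python. Each operator sums dilated convolutions over all input channels to produce each output channel, and the outputs of all operators are concatenated. The kernel size of 3, zero "same" padding, and the example dilation factors are assumptions for illustration; function names are hypothetical. In PyTorch the same operation corresponds to `torch.nn.Conv1d(..., dilation=r, padding=r)` for a kernel of size 3.

```python
def dilated_conv1d(signal, kernel, dilation):
    """Same-length 1D dilated convolution with zero padding.

    Implemented as cross-correlation, as deep learning libraries do.
    """
    T, centre = len(signal), len(kernel) // 2
    out = []
    for t in range(T):
        acc = 0.0
        for m, w in enumerate(kernel):
            idx = t + (m - centre) * dilation
            if 0 <= idx < T:  # positions outside the sequence count as zero
                acc += w * signal[idx]
        out.append(acc)
    return out


def multiscale_temporal(features, kernels, dilations):
    """features[d]: length-T sequence of channel d.
    kernels[l][k][d]: 1D kernel from input channel d to output channel k of operator l.
    Returns all operators' output channels concatenated along the channel axis."""
    outputs = []
    for l, dilation in enumerate(dilations):
        for kernel_row in kernels[l]:  # one output channel k
            acc = [0.0] * len(features[0])
            for f_d, w in zip(features, kernel_row):  # sum over input channels d
                conv = dilated_conv1d(f_d, w, dilation)
                acc = [a + c for a, c in zip(acc, conv)]
            outputs.append(acc)
    return outputs


impulse = [[0, 0, 0, 1, 0, 0, 0]]
print(multiscale_temporal(impulse, [[[[1, 1, 1]]], [[[1, 1, 1]]]], dilations=(1, 2)))
```

With an impulse input, dilation 1 spreads the response over adjacent segments, while dilation 2 reaches segments two steps away, showing how larger dilations widen the temporal receptive field without extra parameters.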

3.1.3. Top-K Sample Selection and Loss Function

After enhancement by the non-local and multiscale temporal attention modules, the optimised feature vectors pass through the classifier, which generates a score for each video frame. The K scores with the highest absolute values are then selected from the scores of the normal and abnormal video frames, respectively; this is Top-K sample selection. The amplitude score loss is calculated from these selected scores. Subsequently, the feature vectors at the frame indices of these K highest scores are extracted to calculate the amplitude feature loss of the K most prominent features. The optimal K value is selected by quantitatively analysing the differences in amplitude among these maximum frame-level scores. The experimental results show that the difference between the maximum scores is significant when K = 3, indicating that the model performs best with this value.
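Top-K selection as described above can be sketched as follows: rank frames by the absolute value of their scores, keep the K largest, and gather the feature vectors at the same frame indices. K = 3 follows the text; returning indices in temporal order and breaking ties by the earlier frame are choices made for this sketch, and the function names are hypothetical.

```python
def top_k_indices(scores, k=3):
    """Indices (in temporal order) of the k frames with the largest absolute scores."""
    ranked = sorted(range(len(scores)), key=lambda t: (-abs(scores[t]), t))
    return sorted(ranked[:k])


def select_top_k(scores, features, k=3):
    """Return the selected indices, their scores, and the features at those frames."""
    idx = top_k_indices(scores, k)
    return idx, [scores[t] for t in idx], [features[t] for t in idx]


scores = [0.1, 0.9, 0.3, 0.8, 0.2, 0.7]
features = [[t] for t in range(6)]  # placeholder feature vectors
idx, top_scores, top_feats = select_top_k(scores, features)
```

Here frames 1, 3, and 5 are selected, and the same indices pick out the features that enter the amplitude feature loss.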

Based on Top-K selection, losses are calculated for the selected scores and their corresponding features. The score loss is based on the cross-entropy loss function, but its input consists only of the selected scores of the normal and abnormal video frames. The specific representation is shown in Equation (2):

$$L_{s} = -\left[\, y \log f(x) + (1 - y) \log\bigl(1 - f(x)\bigr) \right], \tag{2}$$

where $x$ represents a selected video frame, $y$ represents the label of the video containing the frame, and $f(\cdot)$ represents the model operation.
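The score loss above is a binary cross-entropy restricted to the selected frames. A minimal sketch, assuming the scores are probabilities in [0, 1] and that the per-frame losses of the K selected frames are averaged (the averaging and the clamping constant are assumptions; the function name is hypothetical):

```python
import math


def topk_bce(selected_scores, video_label, eps=1e-7):
    """Binary cross-entropy over the Top-K frame scores of one video, averaged over K."""
    total = 0.0
    for s in selected_scores:
        s = min(max(s, eps), 1.0 - eps)  # clamp to avoid log(0)
        total += -(video_label * math.log(s) + (1 - video_label) * math.log(1.0 - s))
    return total / len(selected_scores)


loss_abnormal = topk_bce([0.9, 0.8, 0.7], video_label=1)  # small: scores agree with label
loss_normal = topk_bce([0.9, 0.8, 0.7], video_label=0)    # large: normal video scored high
```

Because only the K most confident frames contribute, a normal video is penalised exactly where the model is most wrongly confident, while an abnormal video needs only a few high-scoring frames, which matches the video-level label setting.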

To make the loss function better distinguish normal from abnormal videos, Top-K selection is also applied to the features, selecting those with the largest differences. The L2 norm converts each feature vector into a non-negative scalar, which can be interpreted as the score corresponding to that feature. The L2 norms of the features of the K selected video frames in a video are calculated as shown in Equation (3):

$$g(X) = \frac{1}{K} \sum_{t \in \Omega_K(X)} \lVert \mathbf{x}_t \rVert_2, \tag{3}$$

where $\Omega_K(X)$ is the set of the K selected frame indices in video $X$ and $\mathbf{x}_t$ is the feature of frame $t$. Next, the feature amplitude loss is calculated by Equations (4) and (5), where $X^{-}$ denotes a normal sample and $X^{+}$ denotes an anomaly.
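Equations (4) and (5) are not recoverable here, so the sketch below swaps in a plainly different but related formulation: the margin-based feature magnitude loss used by RTFM, which penalises a video pair unless the mean Top-K feature magnitude of the abnormal video exceeds that of the normal video by a margin. The margin value and function names are assumptions, and the paper's own loss may differ.

```python
import math


def l2_norm(vec):
    """Euclidean (L2) norm of a feature vector."""
    return math.sqrt(sum(v * v for v in vec))


def mean_topk_magnitude(features, indices):
    """Average L2 norm of the features at the selected Top-K frame indices."""
    return sum(l2_norm(features[t]) for t in indices) / len(indices)


def magnitude_margin_loss(normal_feats, normal_idx, abnormal_feats, abnormal_idx, margin=1.0):
    """Hinge loss: zero once the abnormal magnitude exceeds the normal one by `margin`."""
    gap = (mean_topk_magnitude(abnormal_feats, abnormal_idx)
           - mean_topk_magnitude(normal_feats, normal_idx))
    return max(0.0, margin - gap)
```

For example, a normal video whose selected feature has norm 1 and an abnormal video whose selected feature has norm 5 give a gap of 4, so the loss is 6 with a margin of 10 and 0 with a margin of 3.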

Normal and abnormal frames in a video usually appear in continuous runs; that is, the frames adjacent to a normal frame are usually also normal, and the frames adjacent to an abnormal frame are usually also abnormal. Therefore, a smoothness constraint loss (SCL) is added so that, after training, adjacent frames in a video receive similar scores. The loss is shown in Equation (6):

$$L_{\mathrm{smooth}} = \lambda \sum_{t} \left( s_t - s_{t+1} \right)^2, \tag{6}$$

where $\lambda$ is a preset hyperparameter that keeps the value of the time-smoothing loss within a reasonable range, $s_t$ is the score of frame $t$, and $t$ represents the index of the current frame in the video.

Finally, the total loss function consists of three parts, namely the amplitude score loss, the amplitude feature loss, and the time-smoothing loss, as shown in Equation (7).
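The time-smoothing term and the three-part objective of Equation (7) can be sketched as follows. The squared difference between consecutive frame scores is a common form of this constraint and is assumed here; so is the equal weighting of the three terms (the preset hyperparameter is passed as `lam`). Function names are hypothetical.

```python
def temporal_smoothness_loss(scores, lam):
    """lam * sum_t (s_t - s_{t+1})**2 over consecutive frame scores (assumed form)."""
    return lam * sum((a - b) ** 2 for a, b in zip(scores, scores[1:]))


def total_anomaly_loss(score_loss, feature_loss, scores, lam):
    """Unweighted sum of the three parts (equal weighting is an assumption)."""
    return score_loss + feature_loss + temporal_smoothness_loss(scores, lam)


smooth = temporal_smoothness_loss([0.0, 0.5, 0.5, 1.0], lam=0.1)
total = total_anomaly_loss(0.3, 0.2, [0.0, 0.5, 0.5, 1.0], lam=0.1)
```

Abrupt jumps between neighbouring scores are penalised quadratically, so isolated single-frame spikes cost more than a gradual rise into an anomalous segment.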

3.2. Track Anomaly Location Algorithm Based on Unsupervised Background Extraction

After detecting abnormal video frames, an unsupervised foreground segmentation method is introduced in this paper to accurately locate the position of foreign objects. This method does not rely on pixel-level annotations, making it suitable for scenarios where annotation resources are limited in practical engineering. Through the multi-scale VAE (Variational Autoencoder) structure and pixel-level background weight design, the model can still maintain high segmentation accuracy in complex background and small target scenarios.

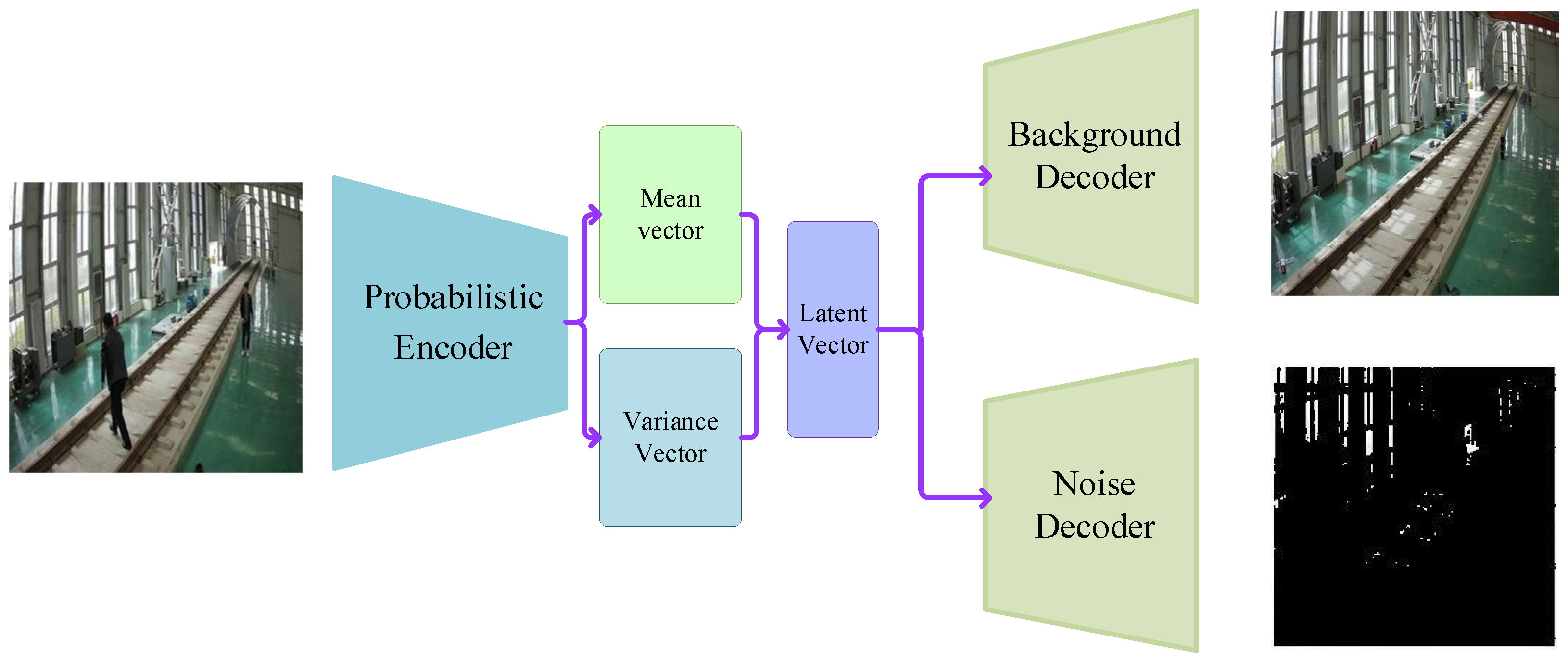

For the segmentation task, as shown in Figure 4, the VAE first uses the encoder to analyse the input image and generates a mean vector and a variance vector representing its internal information. Based on these statistical parameters, the model samples a latent vector that encodes the input image. This latent vector then passes through two different decoding paths: the background decoder focuses on analysing and reconstructing the background of the image, whereas the noise decoder captures and separates random noise in the input image.

The basic VAE is highly sensitive to lighting: it misclassifies the foreground in areas with dramatic lighting changes and may miss small foreign object details in poorly lit environments. In addition, the backgrounds it generates are blurry, especially as the input image size increases, which makes it difficult to keep the output background sharp. Therefore, the proposed model divides the encoder and decoder modules into three progressive levels. In each of the first two levels, two layers of upsampling/downsampling modules are configured; after these layers, the low-dimensional mean and variance feature vectors are extracted and the latent representation space is constructed. The third level integrates one conventional upsampling/downsampling layer and one dynamic upsampling/downsampling layer, the latter being enabled as needed to generate higher-dimensional latent variables. The latent variables at the different levels capture image features at different scales, from fine- to coarse-grained. This architecture is illustrated in Figure 5.

With this multi-level VAE architecture, the model effectively integrates latent image features at various scales. Notably, because parameters are shared, the three VAEs operating in parallel have the same number of parameters as the single most complex VAE. This design enables efficient parameter reuse between the low-level and high-level VAE structures.

In the decoder, the separate background image decoder and noise image decoder are merged into a unified decoder that outputs four-channel features, with the aim of promoting deep fusion among the different latent variables. In addition, an adaptively adjustable upsampling/downsampling layer is introduced into the two-layer upsampling/downsampling modules of the third level to improve the adaptability and flexibility of the model for input images of different sizes.

To improve adaptability and generalisation for input images of different sizes, the number of sampling layers is determined by the input image size. Specifically, for smaller images (for example, no larger than 500 × 500 pixels), 5 sampling blocks are used and the numbers of convolutional channels are set to {3, 64, 160, 160, 32, 16}. For larger images, 6 sampling blocks are used and the numbers of convolutional channels are set to {3, 64, 160, 160, 160, 32, 16}. This channel allocation allows the model to capture image features fully with a limited number of parameters, achieving efficient and accurate feature extraction. It keeps computational complexity low while extracting and integrating image features at a deeper level, further improving the performance and robustness of the model in complex scenarios.
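The size-dependent configuration above can be expressed as a small lookup. In this sketch, "no larger than 500 × 500" is interpreted as both height and width being at most 500 pixels, which is an assumption; the channel lists are copied from the text, and the function name is hypothetical. Note that each list has one more entry than there are blocks, so consecutive pairs give the input and output channels of each block.

```python
def sampling_config(height, width, limit=500):
    """Return (number of sampling blocks, channel list) for an input image size."""
    if height <= limit and width <= limit:
        return 5, [3, 64, 160, 160, 32, 16]
    return 6, [3, 64, 160, 160, 160, 32, 16]


blocks, channels = sampling_config(480, 640)
block_io = list(zip(channels[:-1], channels[1:]))  # (in, out) channels per block
```

A 480 × 640 frame exceeds the limit in width, so it receives the deeper six-block configuration.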

3.2.1. Design of Encoder and Decoder Architecture

To locate foreign objects more accurately, spatial position encoding is added in the form of two encoding channels: a row encoding channel and a column encoding channel. These channels are included in each convolutional block of the encoder and each deconvolutional block of the decoder, where $E_h(i, j)$ and $E_w(i, j)$ represent the encoding of the height and width of the image, respectively, and $i$ and $j$ are the row index and column index, respectively.
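The row and column encoding channels described above can be sketched as follows. The exact encoding formula is not given here, so the sketch assumes a CoordConv-style linear normalisation of the row and column indices to [-1, 1]; the function names are hypothetical.

```python
def position_channels(h, w):
    """Row and column encoding channels, linearly normalised to [-1, 1] (assumed)."""
    def norm(idx, n):
        return 0.0 if n == 1 else 2.0 * idx / (n - 1) - 1.0

    rows = [[norm(i, h) for _ in range(w)] for i in range(h)]  # varies down the image
    cols = [[norm(j, w) for j in range(w)] for _ in range(h)]  # varies across the image
    return rows, cols


def append_position_channels(feature_map):
    """feature_map: list of C channels, each h x w. Returns C + 2 channels."""
    h, w = len(feature_map[0]), len(feature_map[0][0])
    rows, cols = position_channels(h, w)
    return feature_map + [rows, cols]


rows, cols = position_channels(3, 2)
```

Because convolutions are translation-equivariant, they cannot by themselves tell where in the frame a pattern occurs; the two extra channels give every block explicit access to position, which helps localisation.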

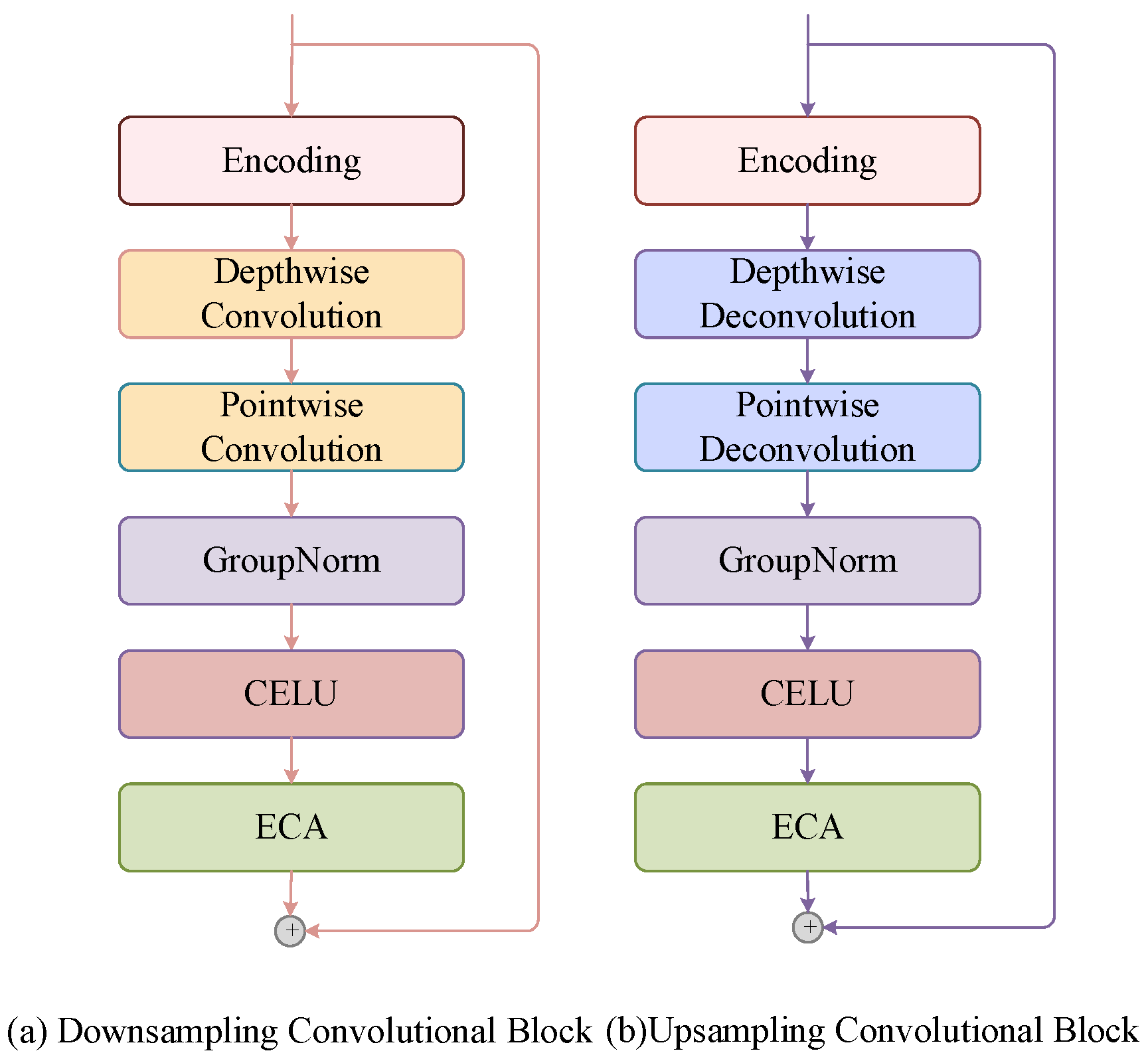

Standard convolutional modules involve heavy computation and numerous parameters. Therefore, as shown in Figure 6, this paper replaces conventional convolution operations with depth-wise and point-wise convolutions [56]. Group Normalisation layers and the Continuously Differentiable Exponential Linear Unit (CELU) activation function are then used to reduce overfitting and improve generalisation. To strengthen the ability of the model to distinguish and focus on the features of each convolutional channel and to improve its robustness to illumination noise, Efficient Channel Attention (ECA) [57] is introduced into the network. With this attention module, the model can exploit multichannel information more thoroughly, improving the quality with which complex backgrounds are depicted and reconstructed.
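The parameter saving from replacing a standard convolution with a depth-wise plus point-wise pair can be checked with simple arithmetic. The sketch counts weights (biases optional) for a 64 → 160 channel layer, taken from the channel list above, with an assumed 3 × 3 kernel. In PyTorch the depth-wise step is usually written as `nn.Conv2d(c_in, c_in, k, groups=c_in)` followed by a point-wise `nn.Conv2d(c_in, c_out, 1)`.

```python
def conv_params(c_in, c_out, k, bias=False):
    """Weights of a standard k x k convolution."""
    return k * k * c_in * c_out + (c_out if bias else 0)


def separable_params(c_in, c_out, k, bias=False):
    """Weights of a depth-wise k x k convolution followed by a 1 x 1 point-wise one."""
    depthwise = k * k * c_in + (c_in if bias else 0)
    pointwise = c_in * c_out + (c_out if bias else 0)
    return depthwise + pointwise


standard = conv_params(64, 160, 3)         # 92,160 weights
separable = separable_params(64, 160, 3)   # 576 + 10,240 = 10,816 weights
print(round(standard / separable, 2))      # roughly an 8.5x reduction
```

The multiply-accumulate count per output pixel shrinks by the same factor, which is where the speed-up of the smaller model comes from.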

3.2.2. Pixel-by-Pixel Background Weight Coefficient

Compared with other image generation tasks, background generation places greater emphasis on distinguishing background from foreground information. A background weight coefficient is therefore introduced into the reconstruction loss, which originally focused solely on image generation, to make it more suitable for the background generation subtask. The calculation process is detailed in Equations (9)–(14).

Here, $x_{c,i,j}$ denotes the value of pixel $(i, j)$ in the $c$-th channel of image $x$, where $i \in \{1, \dots, h\}$, $j \in \{1, \dots, w\}$, and $h$ and $w$ represent the height and width of the image, respectively. Similarly, $\hat{b}_{c,i,j}$ represents the pixel value of the reconstructed background at the same position. The local reconstruction loss of pixel $(i, j)$ based on the L1 norm can then be described by Equation (9), i.e., $e_{i,j} = \sum_{c} \lvert x_{c,i,j} - \hat{b}_{c,i,j} \rvert$. The mask obtained in this way is blurred and incoherent, so a smoothing filter is applied to make it smoother: a square kernel is used to compute the local average of the mask. This reflects the spatial continuity of foreground and background: pixels near foreground pixels are probably also foreground pixels, and pixels near background pixels are most likely background pixels. Additionally, the number of pixels occupied by the foreground mask varies with the size of the input image. Therefore, $k$ is set as $k = \lfloor \gamma h w \rceil$, where $\gamma$ is a positive hyperparameter that adjusts the threshold distinguishing background from foreground and $\lfloor \cdot \rceil$ denotes the rounding function. Finally, the error caused by the foreground is amplified, so that the reconstruction loss, controlled by a hyperparameter, tends to reconstruct the background rather than the entire image.
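The weight computation described above can be sketched with a simplified hard threshold: compute the per-pixel L1 error, smooth it with a square mean filter, treat the k = round(γ·h·w) pixels with the largest smoothed error as foreground (weight 0), and give all other pixels weight 1. The paper's Equations (10)–(14) are not recoverable here and describe weights that are only close to 0 or 1, so this binary version, the 3 × 3 kernel, and zero padding at the borders are assumptions; the function names are hypothetical.

```python
def pixel_l1_error(image, background):
    """e[i][j] = sum over channels c of |x[c][i][j] - b[c][i][j]|."""
    C, h, w = len(image), len(image[0]), len(image[0][0])
    return [[sum(abs(image[c][i][j] - background[c][i][j]) for c in range(C))
             for j in range(w)] for i in range(h)]


def box_mean(err, size=3):
    """Square mean filter; cells outside the image count as zero."""
    h, w, r = len(err), len(err[0]), size // 2
    return [[sum(err[a][b]
                 for a in range(max(0, i - r), min(h, i + r + 1))
                 for b in range(max(0, j - r), min(w, j + r + 1))) / (size * size)
             for j in range(w)] for i in range(h)]


def background_weights(image, background, gamma, size=3):
    """Hard background weights: 0 for the k highest-error pixels, 1 elsewhere."""
    err = box_mean(pixel_l1_error(image, background), size)
    h, w = len(err), len(err[0])
    k = round(gamma * h * w)  # foreground budget scales with image size
    ranked = sorted(((err[i][j], i, j) for i in range(h) for j in range(w)), reverse=True)
    weights = [[1.0] * w for _ in range(h)]
    for _, i, j in ranked[:k]:
        weights[i][j] = 0.0
    return weights


image = [[[0] * 5 for _ in range(5)]]
image[0][2][2] = 9  # a single bright "foreign object" pixel
background = [[[0] * 5 for _ in range(5)]]
weights = background_weights(image, background, gamma=0.36)
```

Smoothing spreads the object's error over its 3 × 3 neighbourhood, so with γ = 0.36 on a 5 × 5 image (k = 9) the whole neighbourhood is excluded from the background loss rather than a single pixel.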

3.2.3. Loss Function

As the weight changes, the reconstruction loss will change according to the different weight values of each pixel. For pixels considered background pixels, their weights are close to 1, and the reconstruction loss will be more affected by these pixels. For pixels that are not background pixels, their weights are close to 0, and the reconstruction loss is less affected by these pixels.

Therefore, the VAE adds a decoder with a single output channel, which decodes an estimate $\hat{e}_{i,j}$ of the pixel error $e_{i,j}$. The previously calculated background weight coefficients are applied to this estimate as weights so that the loss is mainly confined to the background area, as shown in Equation (13).

The multi-scale VAE architecture contains latent variables at multiple scales (levels). The latent variables at each scale have their own means and variances, and all of them contribute to the KL divergence loss. For a multi-scale hierarchical VAE, the total KL divergence is defined by Equation (14), where $z_l$ represents the latent variable at the $l$-th scale level, $L$ is the total number of scale levels, $q(z_l \mid x, z_{l-1})$ is the approximate posterior distribution of the high-level latent variable $z_l$ given the observed data $x$ and the low-level latent variable $z_{l-1}$, and $p(z_l)$ is the corresponding prior distribution.
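For reference, one way to write the total KL divergence under these definitions is shown below. It assumes, as is common for VAEs but not stated explicitly in the text, that each prior $p(z_l)$ is a standard normal and each approximate posterior is a diagonal Gaussian with mean $\mu_l$ and variance $\sigma_l^2$ over $d_l$ latent dimensions; $z_0$ is taken as empty.

```latex
\mathcal{L}_{KL} = \sum_{l=1}^{L} D_{KL}\!\left( q(z_l \mid x, z_{l-1}) \,\middle\|\, p(z_l) \right)

D_{KL}\!\left( \mathcal{N}(\mu_l, \operatorname{diag}\sigma_l^2) \,\middle\|\, \mathcal{N}(0, I) \right)
  = \frac{1}{2} \sum_{j=1}^{d_l} \left( \mu_{l,j}^2 + \sigma_{l,j}^2 - \log \sigma_{l,j}^2 - 1 \right)
```

The closed form follows from the Gaussian densities and is what makes the per-level term depend only on the encoder's means and variances.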

Then the KL divergence can be represented by Equation (15), where $\mu$ is the mean and $\sigma^2$ is the variance of the latent variable generated by the encoder network. The final loss function is the weighted sum of the background-weighted reconstruction loss, the background-weighted noise estimation loss, and the KL regularization loss, as shown in Equation (16), in which three weight coefficients balance the contributions of these terms.
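A minimal sketch of how the KL term and the weighted sum could be computed is given below. It assumes a standard normal prior at every level and a log-variance parameterisation of the encoder output (a common numerical choice, not confirmed by the text); the default weight values are placeholders, not the paper's coefficients.

```python
import numpy as np


def gaussian_kl(mu, log_var):
    """KL(N(mu, diag(exp(log_var))) || N(0, I)), summed over latent dimensions."""
    return float(0.5 * np.sum(mu ** 2 + np.exp(log_var) - log_var - 1.0))


def hierarchical_kl(levels):
    """Total KL over scales; `levels` holds one (mu, log_var) pair per scale level."""
    return sum(gaussian_kl(mu, log_var) for mu, log_var in levels)


def total_loss(rec_loss, est_loss, levels, w_rec=1.0, w_est=1.0, w_kl=1.0):
    """Weighted sum of the three loss terms; the default weights are placeholders."""
    return w_rec * rec_loss + w_est * est_loss + w_kl * hierarchical_kl(levels)
```

A level whose posterior already matches the prior (zero mean, unit variance) contributes nothing, so the KL term only penalises deviations from the prior.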

3.3. Dataset

The experiment is conducted on a real track at the experimental base. The camera is installed on one side of the track to detect foreign objects along a single direction of train travel. The horizontal and vertical distances between the camera and the centre of the track line are both 2 m. The cameras used at the experimental base are Hikvision Smart265 cameras (manufactured by Hangzhou Hikvision Digital Technology Co., Ltd., China) with a focal length of 6 mm. The video resolution is  and the frame rate is 25 frames per second (FPS).

Foreign objects intruding onto the track typically include hard objects such as stones and concrete blocks, soft objects such as plastic sheets and kites, liquid substances such as debris flows and torrential floods, and living organisms such as humans and animals. In the experiment, several typical foreign objects are simulated, including persons, helmets, boxes of various sizes, bags, long steel objects, and ponding. The track videos filmed in the Huanghua laboratory are sampled evenly at intervals of about 20 s to obtain video clips with a duration of 2 s. These clips are classified by type of foreign object into four categories: normal, abnormal (human), abnormal (object), and abnormal (ponding). The dataset is divided into a training set and a testing set at a ratio of 8:2.
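The sampling and splitting procedure can be illustrated with the sketch below, assuming clips are cut at a fixed 20 s spacing from the start of each recording at 25 FPS. The function names are hypothetical, and the split shown is a plain seeded random split, which is not necessarily the per-category division reported in Table 2.

```python
import random

FPS = 25  # frame rate of the recorded track videos


def clip_frame_ranges(total_frames, interval_s=20.0, clip_s=2.0, fps=FPS):
    """Evenly spaced clips: one clip of clip_s seconds starting every interval_s seconds.

    Returns a list of (start, end) frame indices with `end` exclusive.
    """
    step = int(round(interval_s * fps))
    length = int(round(clip_s * fps))
    return [(s, s + length) for s in range(0, total_frames - length + 1, step)]


def split_train_test(items, train_ratio=0.8, seed=0):
    """Deterministic random split (8:2 by default); not stratified by category."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    cut = int(len(shuffled) * train_ratio)
    return shuffled[:cut], shuffled[cut:]
```

For example, a 60 s recording (1,500 frames) yields three 50-frame clips starting at frames 0, 500, and 1,000.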

Table 2 shows the division of the training set and the testing set of the Huanghua track anomaly detection dataset.

LabelMe [

58] can mark the standard contours of abnormal objects, pedestrians, or ponds, which are converted into mask images of the foreground. The number of images in the training set and the test set is shown in

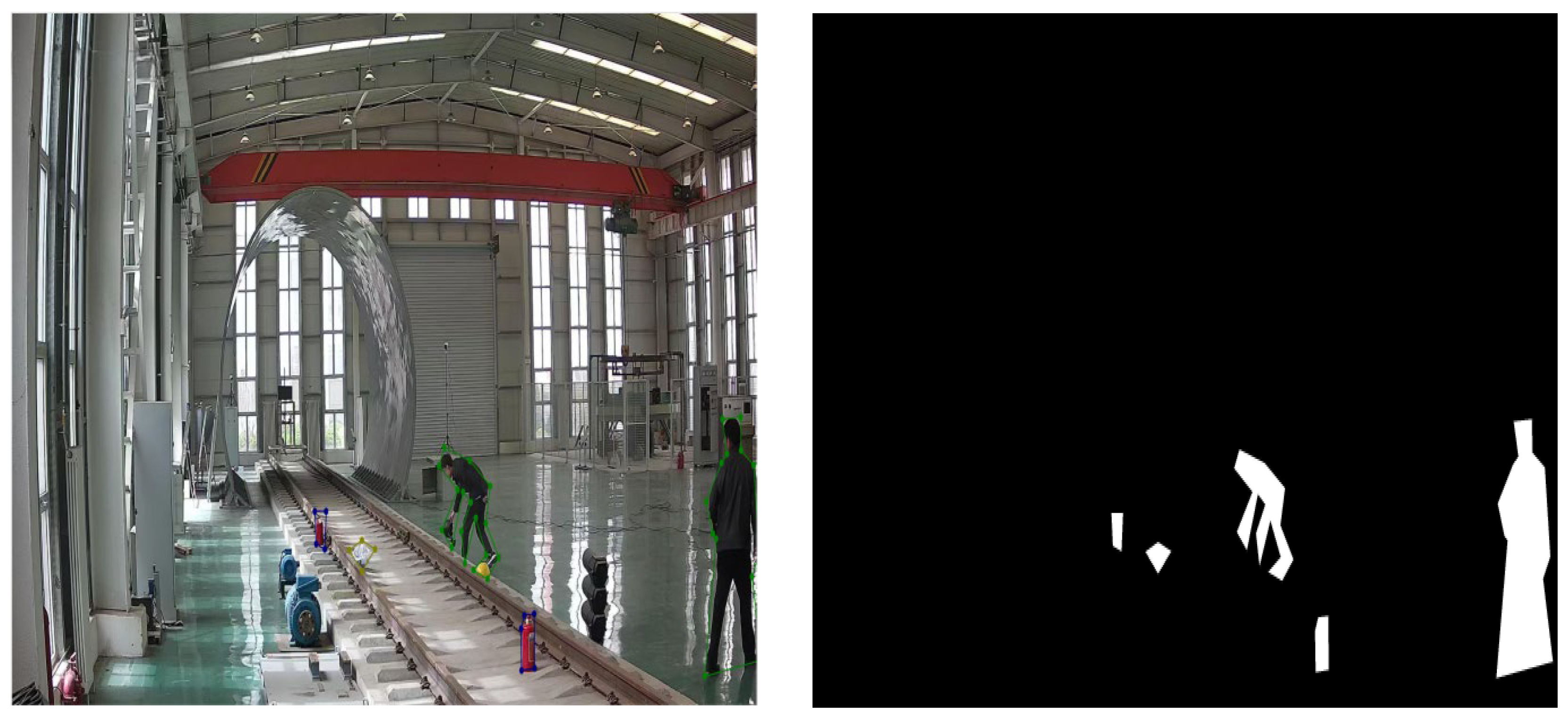

Table 3. The target contour and foreground mask are shown in

Figure 7. In foreign object intrusion scenarios, the training set uses only normal samples for model training, with no need for abnormal samples. During testing, normal and abnormal samples are used. However, image samples containing foreign objects are difficult to collect, resulting in a scarcity of abnormal samples. Since only normal samples are used during training, the use of a large number of normal samples for testing would lead to artificially inflated test accuracy. Therefore, only a small number of normal samples and abnormal samples are used in the testing process.

3.4. Experimental Environment and Evaluation Metrics

All models are optimized using Adam with a weight decay of 0.005 and a momentum (first-moment decay rate β1) of 0.9. The learning rate is initialised at 0.001 and is divided by 10 after epochs 8 and 11. For X3D, the width, bottleneck (neck), and depth expansion factors are 1, 2.25, and 2.2, respectively. The remaining three hyperparameters are set to 0.25, 6, and 320, respectively. All deep learning experiments are carried out on an NVIDIA RTX A5000 GPU and an Intel(R) Xeon(R) E5-2620 v3 CPU @ 2.40 GHz. The operating system is Ubuntu 20.04, and the CUDA version is 12.0.
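The step schedule described above can be checked with a small dependency-free function. In PyTorch, the same setup would plausibly be `torch.optim.Adam(params, lr=1e-3, betas=(0.9, 0.999), weight_decay=0.005)` combined with `torch.optim.lr_scheduler.MultiStepLR(optimizer, milestones=[8, 11], gamma=0.1)`, assuming the scheduler is stepped once per epoch and that β2 keeps its default of 0.999 (the text does not state β2).

```python
from bisect import bisect_right

BASE_LR = 1e-3
MILESTONES = (8, 11)  # epochs after which the learning rate is divided by 10
GAMMA = 0.1


def learning_rate(epoch, base_lr=BASE_LR, milestones=MILESTONES, gamma=GAMMA):
    """Step schedule: base_lr * gamma ** (number of milestones already reached)."""
    return base_lr * gamma ** bisect_right(milestones, epoch)
```

Epochs 0 to 7 therefore use 1e-3, epochs 8 to 10 use 1e-4, and epoch 11 onwards uses 1e-5, matching the multi-step rule that `MultiStepLR` applies.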

The area under the receiver operating characteristic (ROC) curve (AUC) is used to evaluate the classification performance of the model and is calculated as follows. Suppose the number of positive samples is $P$ and the number of negative samples is $N$, and let $TPR$ denote the True Positive Rate and $FPR$ the False Positive Rate. Then $TPR$ and $FPR$ can be expressed by Equation (17):

$$TPR = \frac{TP}{TP + FN} = \frac{TP}{P}, \qquad FPR = \frac{FP}{FP + TN} = \frac{FP}{N} \tag{17}$$

where $TP$ is the number of True Positives, $FN$ is the number of False Negatives, $FP$ is the number of False Positives, and $TN$ is the number of True Negatives. The AUC is the area under the ROC curve and can be calculated by Equation (18):

$$AUC = \int_{0}^{1} TPR\left(FPR^{-1}(t)\right) \, dt \tag{18}$$

where $FPR^{-1}$ represents the inverse function of $FPR$ and $t$ is a variable ranging from 0 to 1.
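As a worked illustration of Equations (17) and (18), the sketch below builds the ROC curve by sweeping every distinct score as a threshold and integrates it with the trapezoidal rule. It also computes the equivalent rank form of the AUC (the probability that a random positive outscores a random negative) as a cross-check. Both functions are illustrative and assume labels in {0, 1} with at least one sample of each class.

```python
def tpr_fpr(labels, scores, threshold):
    """TPR = TP / (TP + FN) and FPR = FP / (FP + TN), predicting positive when score >= threshold."""
    tp = fn = fp = tn = 0
    for y, s in zip(labels, scores):
        if y == 1:
            if s >= threshold:
                tp += 1
            else:
                fn += 1
        else:
            if s >= threshold:
                fp += 1
            else:
                tn += 1
    return tp / (tp + fn), fp / (fp + tn)


def roc_auc(labels, scores):
    """Area under the ROC curve via the trapezoidal rule over all distinct thresholds."""
    points = [(0.0, 0.0)]  # (FPR, TPR) for a threshold above every score
    for t in sorted(set(scores), reverse=True):
        tpr, fpr = tpr_fpr(labels, scores, t)
        points.append((fpr, tpr))
    return sum((x1 - x0) * (y0 + y1) / 2 for (x0, y0), (x1, y1) in zip(points, points[1:]))


def pairwise_auc(labels, scores):
    """Rank form: P(score of a positive > score of a negative), with ties counted as 1/2."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y != 1]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))
```

For labels `[0, 0, 1, 1]` and scores `[0.1, 0.4, 0.35, 0.8]`, both functions give 0.75: three of the four positive/negative pairs are ranked correctly.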

The pixel-level F1 score evaluates the similarity between the generated foreground mask and the ground-truth mask by measuring how well their foreground pixels align. By combining precision and recall, it provides a comprehensive assessment of the quality of the generated foreground mask.

Precision is the proportion of pixels classified as foreground in the generated mask that are truly foreground. Recall is the proportion of pixels in the ground-truth foreground mask that are correctly classified as foreground. With $TP$, $FP$, and $FN$ counted over pixels, they are calculated by Equation (19) as $Precision = TP/(TP + FP)$ and $Recall = TP/(TP + FN)$.

The F1 score is the harmonic mean of precision and recall. It ranges from 0 to 1, and a higher value indicates a better match between the generated foreground mask and the ground-truth mask. The F1 score is calculated by Equation (20) as $F1 = 2 \cdot Precision \cdot Recall / (Precision + Recall)$.
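The pixel-level metrics can be computed directly from two binary masks, as in the sketch below. It assumes both masks have the same shape and treats any nonzero value as foreground; returning 0 when a denominator is empty is a convention chosen here, not one stated in the text.

```python
import numpy as np


def mask_precision_recall_f1(pred, gt):
    """Pixel-level precision, recall, and F1 for two (H, W) foreground masks."""
    pred = np.asarray(pred, dtype=bool)
    gt = np.asarray(gt, dtype=bool)
    tp = np.logical_and(pred, gt).sum()    # foreground in both masks
    fp = np.logical_and(pred, ~gt).sum()   # predicted foreground, actually background
    fn = np.logical_and(~pred, gt).sum()   # missed foreground
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return float(precision), float(recall), float(f1)
```

For instance, if the prediction covers two pixels of which one is correct, and the ground truth also has two foreground pixels, precision, recall, and F1 are all 0.5.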

In addition, eight groups of experiments are conducted with the proposed model using different values of K (from 1 to 8) to determine whether the choice of K affects the final AUC.

Figure 8 shows that as K increases from 1 to 3, the AUC of the model increases, whereas as K increases from 3 to 8, the AUC decreases. The highest AUC obtained is 0.9964 and the lowest is 0.9732, indicating that the setting of K does affect the final result and that a properly chosen K value improves the performance of the model.
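To make the role of K concrete, the sketch below shows Top-K pooling as it is commonly used in weakly supervised video anomaly detection: the video-level score is the mean of the K highest snippet-level scores. This is an assumption about how the Top-K selection is applied; the function name and inputs are hypothetical.

```python
import numpy as np


def topk_video_score(snippet_scores, k):
    """Mean of the k highest snippet-level anomaly scores (common multiple-instance practice)."""
    if k < 1:
        raise ValueError("k must be at least 1")
    scores = np.asarray(snippet_scores, dtype=float)
    k = min(k, scores.size)  # cannot select more snippets than the video has
    return float(np.sort(scores)[-k:].mean())
```

Under this reading, a small K lets a short intrusion dominate the video score but is sensitive to a single noisy snippet, while a large K dilutes the abnormal snippets with normal ones, which is one plausible explanation of the rise-then-fall trend in Figure 8.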

4. Results

To verify the effectiveness of the improved algorithm for detecting foreign objects in videos, the impacts of three improvement modules on the performance of the video anomaly detection model, namely the video preprocessing strategy, attention enhancement, and loss function optimisation, are systematically explored through ablation experiments, as shown in Table 4. Compared to the baseline, preprocessing, the attention mechanism, and the loss function improve the AUC by 7.29%, 2.23%, and 7.95%, respectively. Amplifying the far-point information and applying the mask both improve the AUC of the model, which demonstrates the importance of constructing the track clearance as the ROI and processing the far-point information in railway scenes. Implementing Top-K selection on the baseline X3D and introducing the corresponding amplitude score/feature loss function increases the AUC by 7.01%, which confirms the effectiveness of Top-K selection and loss function optimisation in improving the discriminative ability of the model. The results in Table 4 show that the optimisation measures in all three aspects improve the accuracy of the model, as reflected in the increase in AUC.

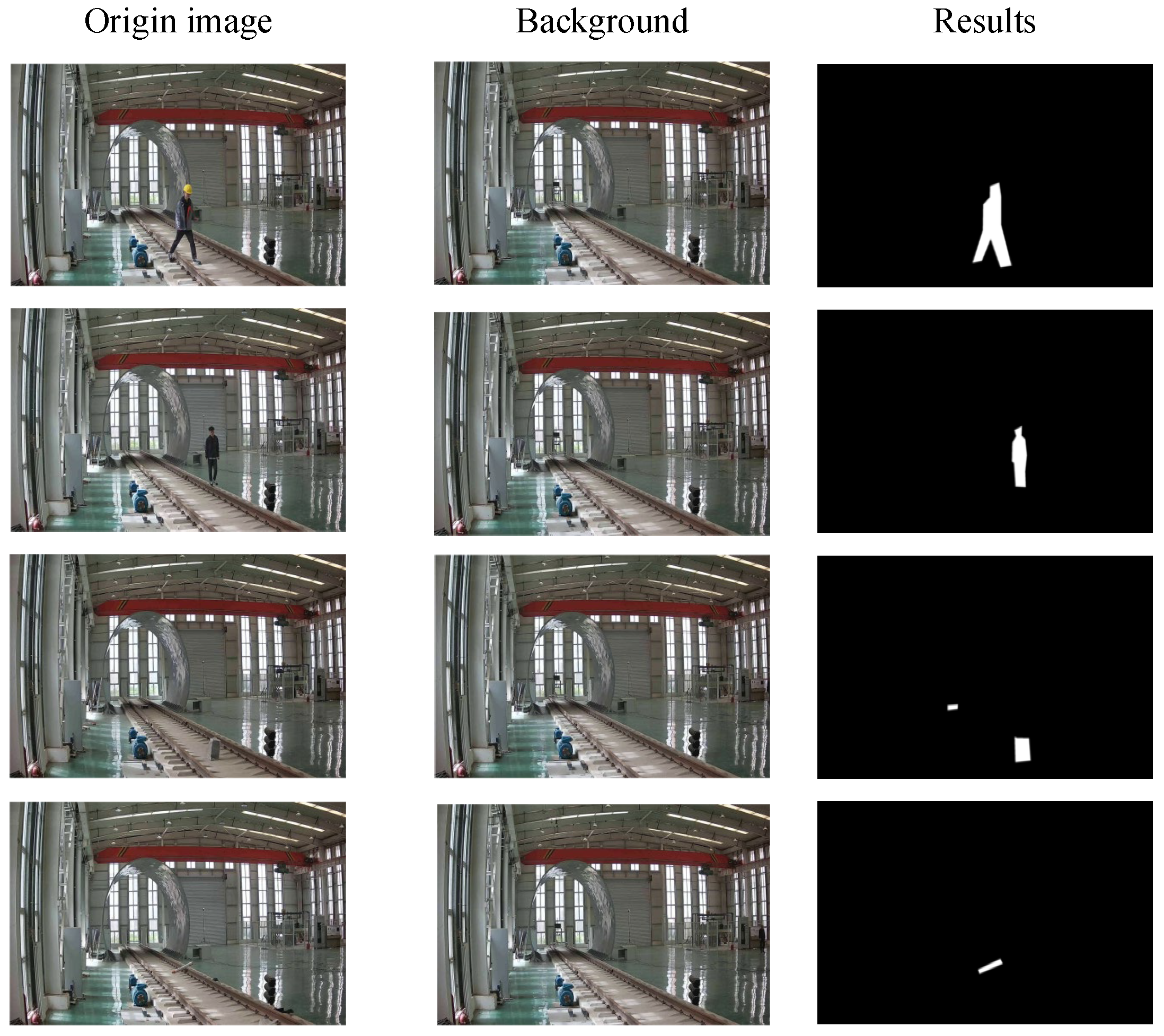

To further locate the foreign objects, anomaly localisation experiments are conducted on the Huanghua rail anomaly localisation dataset using the unsupervised foreground segmentation algorithm. In this experiment, the algorithm is compared in detail with the basic VAE and AE-NE. As Table 5 shows, the proposed algorithm outperforms the other models on most evaluation metrics. Specifically, without the ROI setting and image cropping operations, the algorithm improves precision, recall, and F1 score by 3.34%, 2.80%, and 3.08%, respectively. After the ROI setting and image cropping strategies are incorporated, the improvements over the baseline on the same three metrics become 2.54%, 3.46%, and 3.03%. These results support the effectiveness of the proposed algorithm. In the typical sample cases shown in the third row of Figure 9, the baseline method fails to identify tiny foreign objects under faint shadows, whereas the proposed algorithm detects and highlights them. Therefore, compared to the baseline, the segmentation algorithm markedly improves recognition performance in low-light environments and for tiny objects.

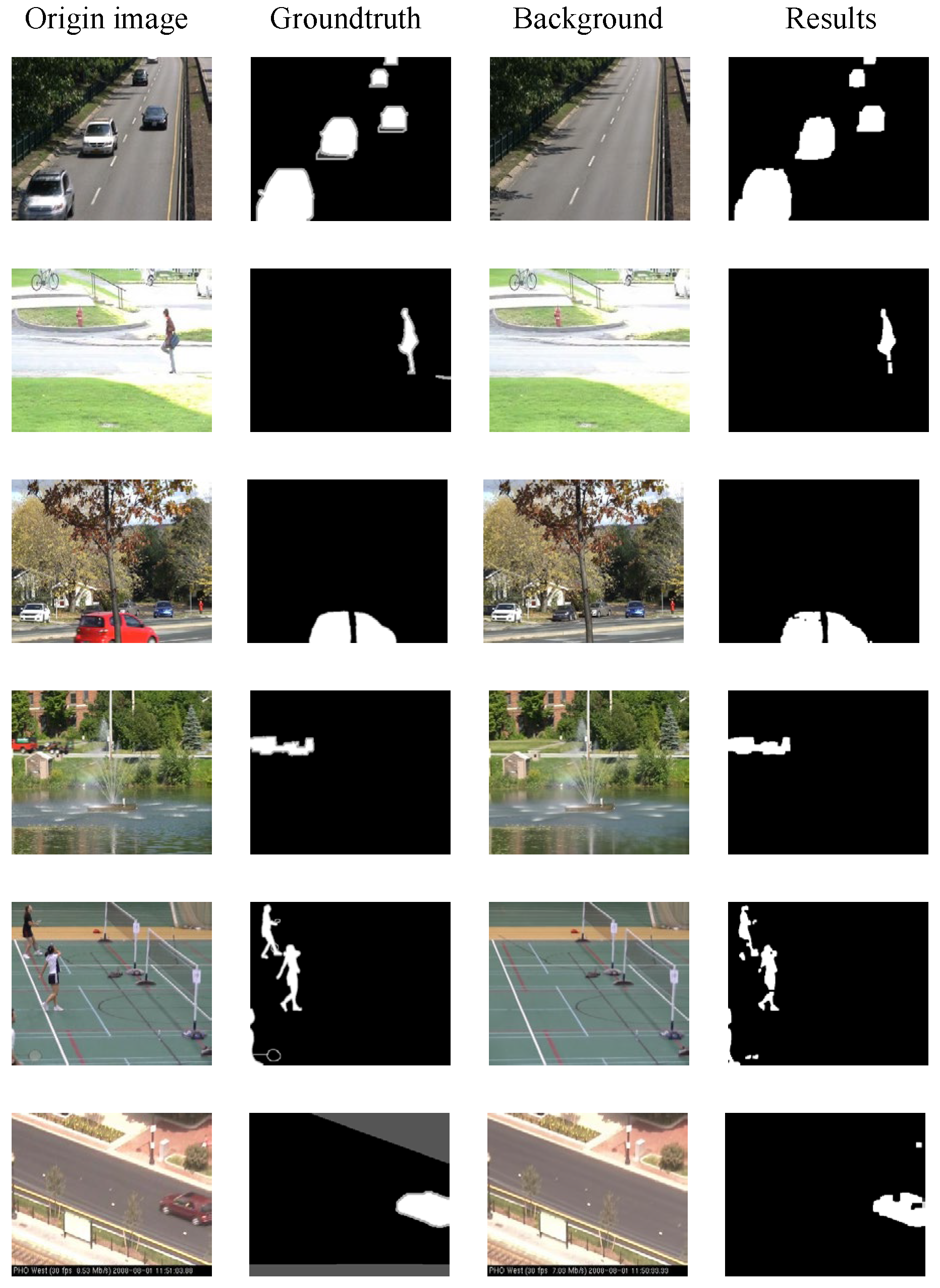

To further demonstrate the advantages of the proposed unsupervised foreground segmentation algorithm, Table 6 compares the F1 scores of the proposed algorithm and other current advanced models on the CDnet2014 dataset. In terms of overall average performance, the proposed model achieves an improvement of 5.34% over the second-best model, the Autoencoder with Noise Estimation (AE-NE) [41]. Furthermore, our model achieves the best performance in the Bad Weather, Camera Jitter, Dynamic Background, Intermittent Object Motion, and Thermal categories, with improvements over AE-NE of 5.76%, 3.67%, 26.78%, 0.15%, and 11.95%, respectively.

These results highlight the robust performance of the unsupervised foreground segmentation algorithm in a variety of complex environments, as shown in Figure 10. The algorithm is applicable to scenarios with low illumination, heat-flow disturbances, and low frame rates, as well as to ordinary scenes.

5. Discussion

With the increasing speed of trains and the growing number of risk factors, all-weather video-based monitoring of foreign object intrusion plays a crucial role in ensuring the safe operation of railways. To address data imbalance and the labour-intensive nature of data annotation in railway scenarios, a weakly supervised video anomaly detection method is proposed. It uses track clearance ROI masks, far-point amplification, and global scene analysis for video preprocessing. Enhanced feature extraction is achieved using X3D with nonlocal and multiscale temporal attention mechanisms, and the model is optimized through Top-K selection and tailored loss functions. After specific abnormal video frames are obtained, an unsupervised VAE-based segmentation algorithm refines the anomaly location by integrating spatial position encoding and optimising the sampling module to balance accuracy and efficiency. Comprehensive experiments on relevant datasets demonstrate the generalisability and effectiveness of the proposed approach in detecting foreign object intrusions. Upon successful detection and segmentation of an intrusion, the system triggers real-time audiovisual alarms at the railway operation control centre. More critically, this alert can be directly integrated with the signalling system to automatically prompt approaching trains to slow down or initiate an emergency stop, thereby preventing potential collisions, ensuring the safety of passengers and infrastructure, and minimising service disruptions.
Additionally, the method proposed in this paper not only meets the requirements of high-speed railways but can also be applied in various other scenarios that require video-based intrusion detection, such as maglev railways, highways, and airports.

Despite promising results, the proposed framework has certain limitations. In terms of processing speed, while the system processes a 2 s clip in 0.41 s, achieving an end-to-end latency low enough for instantaneous braking decisions at the highest train speeds (e.g., over 300 km/h) remains a challenge, as the cumulative delay from detection to alarm dissemination and driver reaction must be critically minimized. Regarding potential hazards, the system is primarily designed for intrusion detection rather than risk assessment; it cannot distinguish between a high-risk obstacle (e.g., a large metal object) and a lower-risk one (e.g., a plastic bag), which could lead to unnecessary emergency braking if not coupled with a risk assessment module. Furthermore, the reliance on technical solutions based purely on visual data makes the system susceptible to performance degradation under extreme weather conditions (e.g., heavy fog or torrential rain) that severely obscure camera visibility, and it does not leverage complementary sensors such as LiDAR or radar for redundancy in such scenarios.
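To put the processing time in context, the short calculation below converts latency into distance travelled, assuming constant train speed. The second figure additionally assumes that detection can only start once the full 2 s clip has been captured; both are illustrative assumptions rather than measured end-to-end delays.

```python
def distance_travelled_m(speed_kmh, latency_s):
    """Distance in metres covered at a constant speed during the given latency."""
    return speed_kmh / 3.6 * latency_s


# 0.41 s processing time at 300 km/h (figures from the text)
print(round(distance_travelled_m(300, 0.41), 1))  # 34.2
# Adding the 2 s clip duration, assuming detection waits for the full clip
print(round(distance_travelled_m(300, 2.41), 1))  # 200.8
```

Even before alarm dissemination and driver reaction are added, a train at 300 km/h covers roughly 200 m under these assumptions, which illustrates why end-to-end latency remains a limitation.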

Although the detection of foreign objects is addressed in this paper, the specific location and size of these objects still need to be further estimated. Therefore, in future work, video foreign object intrusion detection under multi-scenario conditions will be further considered, particularly focusing on the robustness of the model under complex scenario conditions. By combining binocular vision and camera self-calibration, we aim to effectively estimate and evaluate the size and position of foreign objects, thus assessing the risk degree of foreign object intrusion on the track.