Quality Assessment of Solar EUV Remote Sensing Images Using Multi-Feature Fusion

Abstract

Highlights

- A novel hybrid framework for assessing solar EUV image quality was developed, combining deep features extracted by a HyperIQA (content-aware hypernetwork) model with 22 handcrafted physical and statistical indicators.

- Fusing the two feature types improved image quality classification for the tree-based classifiers; the best configuration (XGBoost on the fused features) reached an accuracy of 97.91% and an AUC of 0.9992.

- This method provides a robust and scalable solution for the automated quality control of large-scale solar EUV observation data streams, which is crucial for space weather forecasting.

- The research demonstrates the effectiveness of a multi-feature fusion approach for complex image quality assessment tasks, offering a new direction for similar applications in remote sensing.

1. Introduction

2. Materials and Methods

2.1. Data Acquisition from the Detector

2.2. Dataset and ROI Selection Strategy

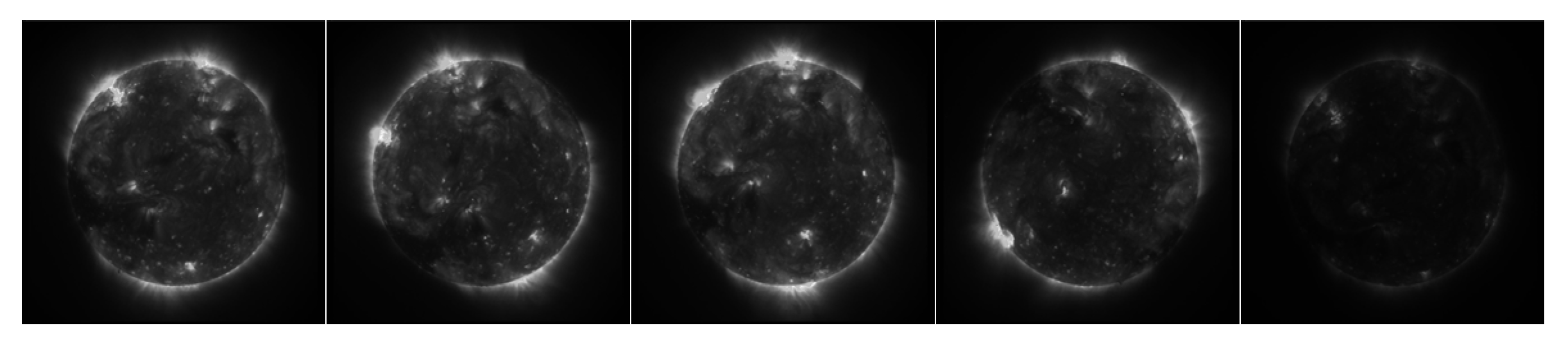

2.2.1. Image Degradation Simulation and Labeling

2.2.2. Implementation Details

2.2.3. ROI Selection Strategy

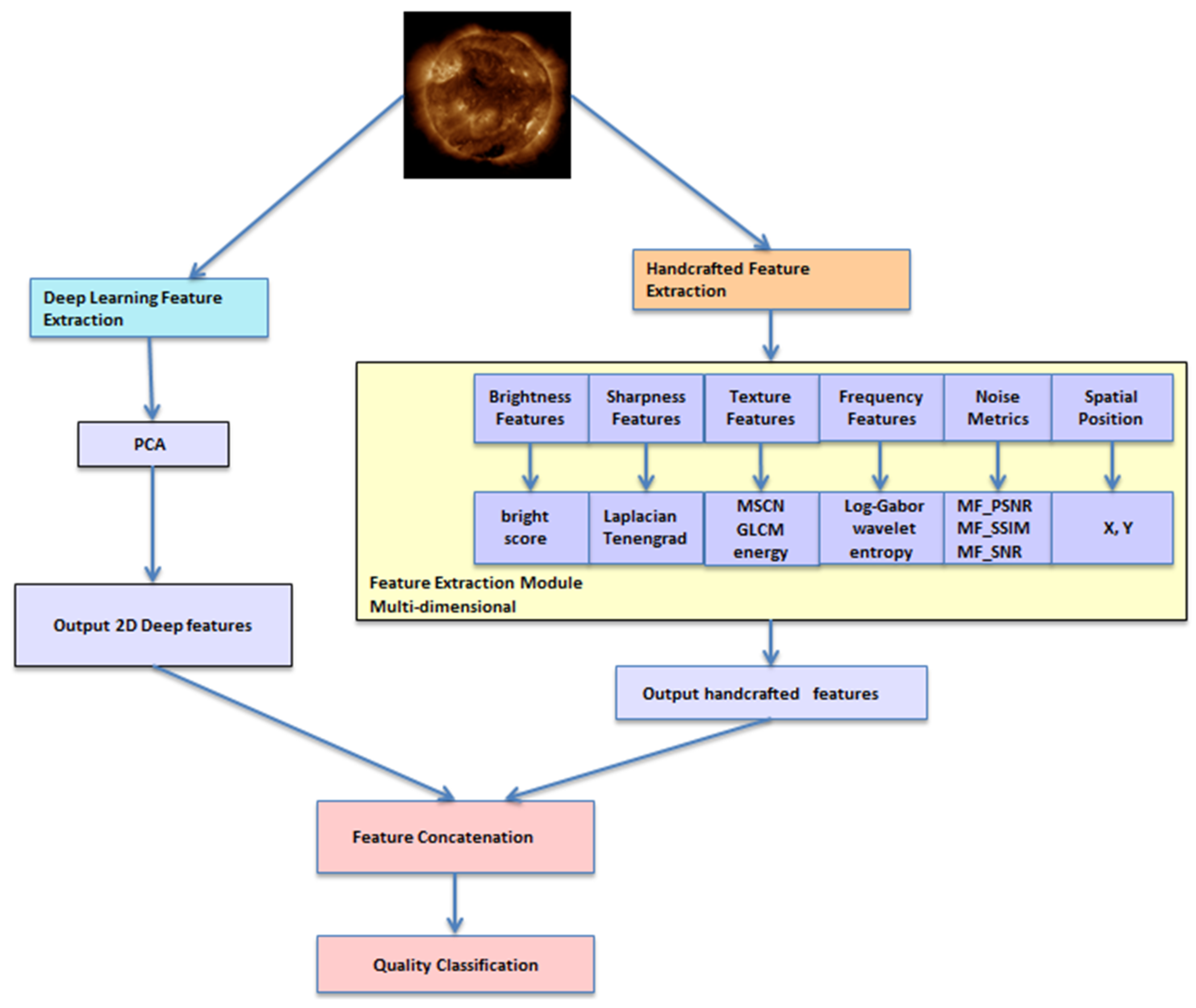

2.3. Feature Extraction and Fusion

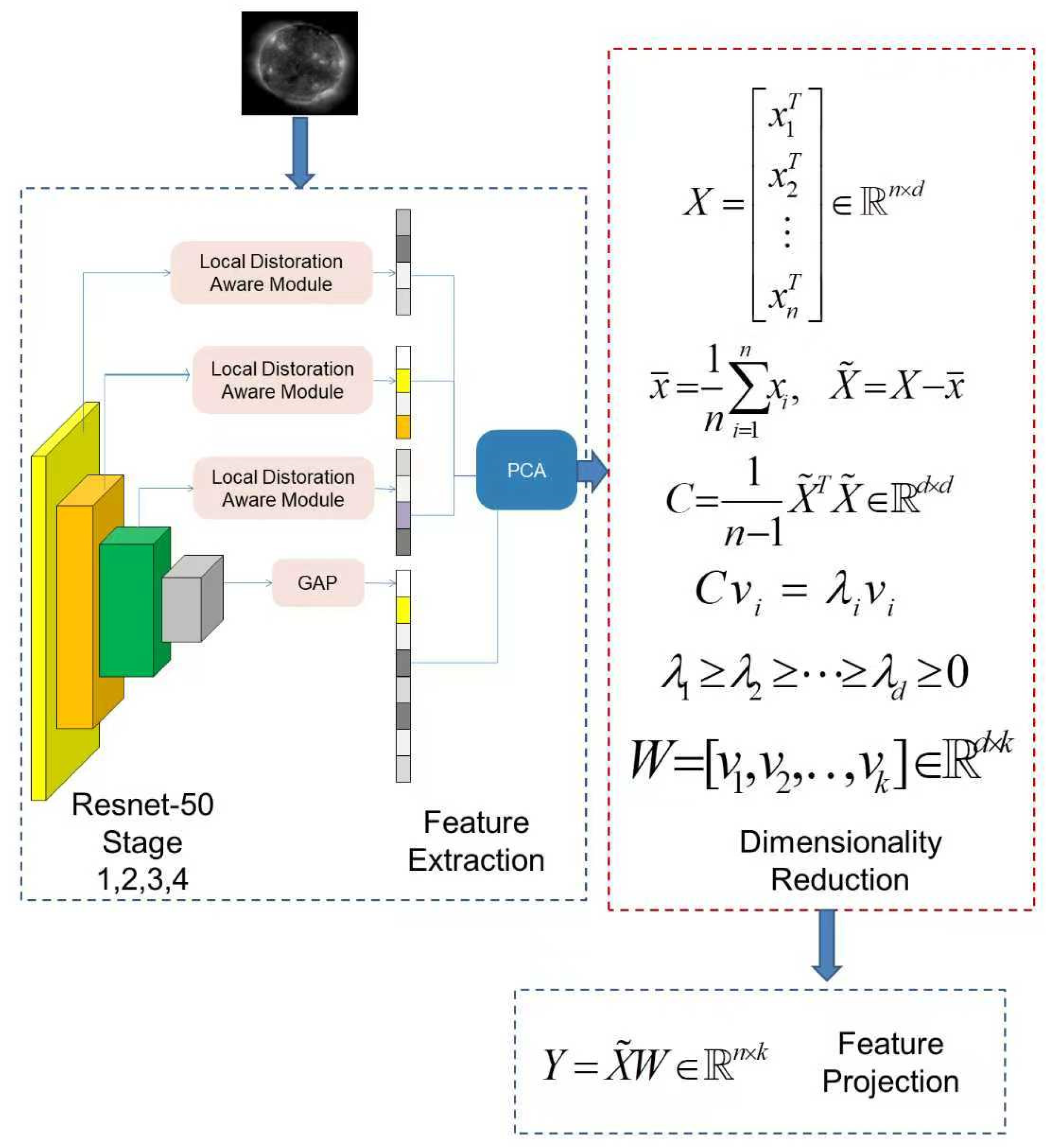

2.3.1. Deep Learning Feature Extraction

2.3.2. Handcrafted Physical–Statistical Features

2.3.3. Feature Fusion and Classification

3. Results

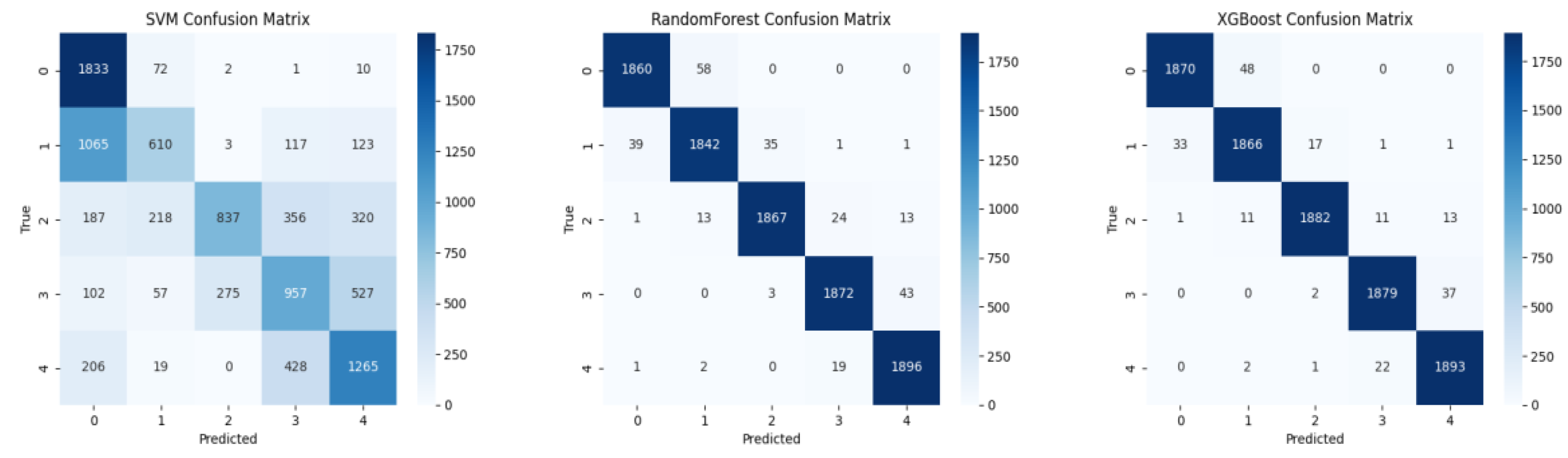

3.1. Performance Analysis

3.2. Feature Analysis

3.3. Ablation Study

3.4. On-Orbit Measurement Verification and Solar Physics Interpretation

3.4.1. Normal Observation Images and Stable Physical Features

3.4.2. Exposure Variation and Image Photometric Attenuation

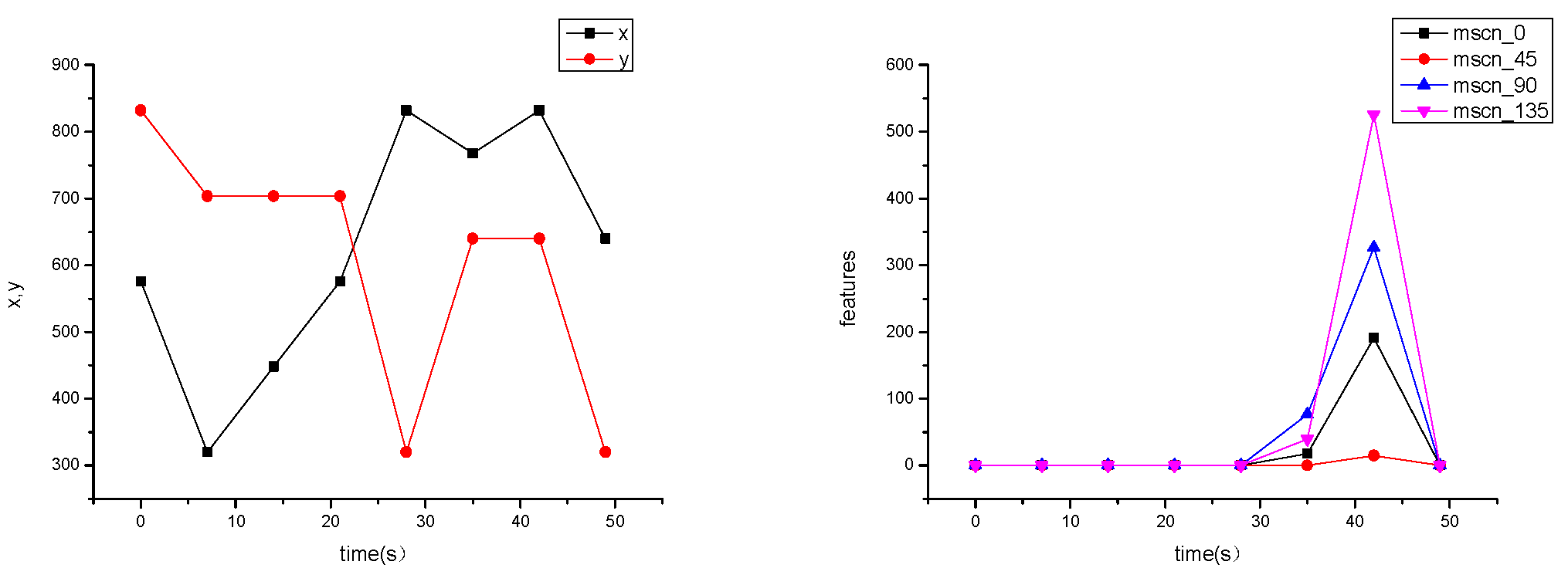

3.4.3. Field-of-View Deviation

3.5. Cross-Dataset Validation Considerations

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

| Feature | Definition | Solar EUV Image Meaning |
|---|---|---|
| brightness | Mean brightness of the image block | Indicates local radiative intensity; higher brightness corresponds to heated coronal regions. |
| score | Normalized image quality score | Combines brightness and gradient, reflecting both illumination and structural activity of solar regions. |
| gradient | Average gradient magnitude of the block | Highlights edges such as coronal loop boundaries, filaments, or CME fronts. |
| laplacian | Variance of the Laplacian (focus measure) | Sensitive to small-scale variations, revealing fine coronal structures. |
| sharpness | Sharpness based on edge strength | High sharpness indicates well-resolved loop systems or active region cores. |
| mscn_0 | MSCN (mean-subtracted contrast-normalized) coefficient in the 0° direction | Captures local structural correlation along the horizontal direction. |
| mscn_45 | MSCN coefficient in the 45° direction | Captures local structural correlation along the diagonal direction (45°). |
| mscn_90 | MSCN coefficient in the 90° direction | Captures local structural correlation along the vertical direction. |
| mscn_135 | MSCN coefficient in the 135° direction | Captures local structural correlation along the diagonal direction (135°). |
| glcm_contrast | GLCM (gray-level co-occurrence matrix) contrast | High contrast values reveal strong magnetic neutral lines or flare kernels. |
| glcm_homogeneity | GLCM homogeneity | Quiet Sun regions show high homogeneity; active regions show lower values. |
| glcm_energy | GLCM energy | Indicates textural regularity; high energy corresponds to repetitive loop structures. |
| log_gabor | Log-Gabor filter response | Captures multi-scale curved structures, useful for identifying CME fronts or loops. |
| MFGS | Median filter–gradient similarity metric | A solar-specific focus measure; evaluates the clarity of coronal fine structures. |
| PSNR | Peak signal-to-noise ratio | Reflects overall fidelity of the image, useful for quantifying degradation effects. |
| SSIM | Structural similarity index | Sensitive to luminance, contrast, and structural changes in solar features. |
| SNR | Signal-to-noise ratio | Indicates detectability of faint eruptions or coronal dimmings against background noise. |
| LH_entropy | Wavelet entropy of the LH sub-band | Reflects complexity of horizontal structures (e.g., plasma flows). |
| HL_entropy | Wavelet entropy of the HL sub-band | Reflects complexity of vertical structures (e.g., coronal loops). |
| HH_entropy | Wavelet entropy of the HH sub-band | Reflects diagonal structural complexity, often linked to turbulence. |
| x | x-coordinate of the upper-left corner of the block | Preserves spatial context, showing whether the region lies near disk center or limb. |
| y | y-coordinate of the upper-left corner of the block | Preserves spatial context, showing whether the region lies near disk center or limb. |
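The following NumPy sketch shows how several of the block-level indicators defined above could be computed for one image block. It covers `brightness`, `gradient`, `laplacian`, `sharpness` and the LH/HL/HH wavelet entropies. It is not the authors' implementation, and several choices are assumptions made only for illustration:

- the Sobel and 3 × 3 Laplacian kernels;
- Tenengrad (mean squared Sobel magnitude) as the `sharpness` measure;
- a one-level Haar transform;
- wavelet entropy defined as the Shannon entropy of the normalized coefficient energies.

```python
import numpy as np

SOBEL_X = np.array([[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]], dtype=float)
LAPLACIAN = np.array([[0, 1, 0], [1, -4, 1], [0, 1, 0]], dtype=float)


def filter2d(img, kernel):
    """'Same'-size 2-D correlation with edge padding (adequate for small blocks)."""
    kh, kw = kernel.shape
    padded = np.pad(img, ((kh // 2, kh // 2), (kw // 2, kw // 2)), mode="edge")
    out = np.zeros(img.shape, dtype=float)
    for i in range(kh):
        for j in range(kw):
            out += kernel[i, j] * padded[i:i + img.shape[0], j:j + img.shape[1]]
    return out


def haar_dwt2(block):
    """One-level 2-D Haar detail sub-bands; odd trailing rows/columns are cropped."""
    h, w = block.shape[0] // 2 * 2, block.shape[1] // 2 * 2
    p = block[:h, :w].astype(float)
    a, b = p[0::2, 0::2], p[0::2, 1::2]
    c, d = p[1::2, 0::2], p[1::2, 1::2]
    lh = (a + b - c - d) / 2  # row differences: responds to horizontal structures
    hl = (a - b + c - d) / 2  # column differences: responds to vertical structures
    hh = (a - b - c + d) / 2  # diagonal detail
    return lh, hl, hh


def energy_entropy(coeffs):
    """Shannon entropy (bits) of the normalized coefficient-energy distribution."""
    e = coeffs.ravel() ** 2
    total = e.sum()
    if total == 0:
        return 0.0
    p = e[e > 0] / total
    return float(-(p * np.log2(p)).sum())


def block_features(block):
    """Hypothetical helper returning a subset of the Appendix A indicators."""
    p = block.astype(float)
    gx, gy = filter2d(p, SOBEL_X), filter2d(p, SOBEL_X.T)
    grad_sq = gx ** 2 + gy ** 2
    lh, hl, hh = haar_dwt2(p)
    return {
        "brightness": float(p.mean()),
        "gradient": float(np.sqrt(grad_sq).mean()),
        "laplacian": float(filter2d(p, LAPLACIAN).var()),
        "sharpness": float(grad_sq.mean()),  # Tenengrad-style edge strength
        "LH_entropy": energy_entropy(lh),
        "HL_entropy": energy_entropy(hl),
        "HH_entropy": energy_entropy(hh),
    }
```

Some sanity checks follow directly from these definitions. A uniform block gives zero gradient, Laplacian variance, sharpness and entropies. A block of alternating bright and dark rows puts all of its detail energy into the LH sub-band.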

References

- Benz, A.O. Flare observations. Living Rev. Sol. Phys. 2017, 14, 2.

- Temmer, M. Space weather: The solar perspective. Living Rev. Sol. Phys. 2021, 18, 4.

- Yashiro, S.; Gopalswamy, N.; Michalek, G.; St. Cyr, O.C.; Plunkett, S.P.; Rich, N.B.; Howard, R.A. A Catalog of White Light Coronal Mass Ejections Observed by the SOHO Spacecraft. J. Geophys. Res. Space Phys. 2004, 109, A07105.

- BenMoussa, A.; Gissot, S.; Schühle, U.; Del Zanna, G.; Auchere, F.; Mekaoui, S.; Jones, A.R.; Dammasch, I.E.; Deutsch, W.; Dinesen, H.; et al. On-orbit degradation of solar instruments. Sol. Phys. 2013, 288, 389–434.

- Müller, D.; St. Cyr, O.C.; Zouganelis, I.; Gilbert, H.R.; Marsden, R.; Nieves-Chinchilla, T.; Antonucci, E.; Auchere, F.; Berghmans, D.; Horbury, T.S.; et al. The Solar Orbiter mission—Science overview. Astron. Astrophys. 2020, 642, A1.

- Raouafi, N.E.; Matteini, L.; Squire, J.; Badman, S.T.; Velli, M.; Klein, K.G.; Chen, C.H.K.; Whittlesey, P.L.; Laker, R.; Horbury, T.S.; et al. Parker Solar Probe: Four years of discoveries at solar cycle minimum. Space Sci. Rev. 2023, 219, 8.

- Zhu, Z.M.; Leng, X.Y.; Guo, Y.; Li, C.; Li, Z.; Lu, X.; Huang, F.; You, W.; Deng, Y.; Su, J.; et al. Research on the principle of multi-perspective solar magnetic field measurement. Res. Astron. Astrophys. 2025, 25, 045011.

- Deng, Y.; Zhou, G.; Dai, S.; Wang, Y.; Feng, X.; He, J.; Jiang, J.; Tian, H.; Yang, S.; Hou, J.; et al. Solar Polar Orbit Observatory. Sci. Bull. 2023, 68, 298–308.

- Mittal, A.; Moorthy, A.K.; Bovik, A.C. No-reference image quality assessment in the spatial domain. IEEE Trans. Image Process. 2012, 21, 4695–4708.

- Zhang, L.; Shen, Y.; Li, H. VSI: A visual saliency-induced index for perceptual image quality assessment. IEEE Trans. Image Process. 2014, 23, 4270–4281.

- Yang, X.; Li, F.; Liu, H. A survey of DNN methods for blind image quality assessment. IEEE Access 2019, 7, 123788–123806.

- Agnolucci, L.; Galteri, L.; Bertini, M.; Del Bimbo, A. ARNIQA: Learning distortion manifold for image quality assessment. In Proceedings of the 2024 IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), Waikoloa, HI, USA, 3–8 January 2024; pp. 6189–6198.

- Chiu, T.-Y.; Zhao, Y.; Gurari, D. Assessing Image Quality Issues for Real-World Problems. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 3643–3652.

- Madhusudana, P.C.; Birkbeck, N.; Wang, Y.; Adsumilli, B.; Bovik, A.C. Image quality assessment using contrastive learning. In Proceedings of the 2022 IEEE/CVF Winter Conference on Applications of Computer Vision Workshops (WACVW), Waikoloa, HI, USA, 4–8 January 2022.

- Golestaneh, S.A.; Dadsetan, S.; Kitani, K.M. No-reference image quality assessment via transformers, relative ranking, and self-consistency. In Proceedings of the 2022 IEEE/CVF Winter Conference on Applications of Computer Vision (WACV), Waikoloa, HI, USA, 3–8 January 2022.

- Kang, L.; Ye, P.; Li, Y.; Doermann, D. Convolutional neural networks for no-reference image quality assessment. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 1733–1740.

- Su, S.; Yan, Q.; Zhu, Y.; Zhang, C.; Ge, S.; Sun, W.; Zhang, Y. Blindly assess image quality in the wild with a content-aware hypernetwork. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020; pp. 3667–3676.

- Wang, C.; Lv, X.; Fan, X.; Ding, W.; Jiang, X. Two-channel deep recursive multi-scale network based on multi-attention for no-reference image quality assessment. Int. J. Mach. Learn. Cybern. 2023, 14, 2421–2437.

- Yang, S.; Wu, T.; Shi, S.; Lao, S.; Gong, Y.; Cao, M.; Wang, J.; Yang, Y. MANIQA: Multi-dimension attention network for no-reference image quality assessment. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), New Orleans, LA, USA, 19–20 June 2022; pp. 1191–1200.

- Jarolim, R.; Veronig, A.M.; Pötzi, W.; Podladchikova, T. Image-quality assessment for full-disk solar observations with generative adversarial networks. Astron. Astrophys. 2020, 643, A72.

- Deng, H.; Zhang, D.; Wang, T.; Liu, Z.; Xiang, Y.; Jin, Z.; Cao, W. Objective image-quality assessment for high-resolution photospheric images by median filter-gradient similarity. Sol. Phys. 2015, 290, 1479–1489.

- Popowicz, A.; Radlak, K.; Bernacki, K.; Orlov, V. Review of image quality measures for solar imaging. Sol. Phys. 2017, 292, 187.

- Denker, C.; Dineva, E.; Balthasar, H.; Verma, M.; Kuckein, C.; Diercke, A.; Manrique, S.J.G. Image quality in high-resolution and high-cadence solar imaging. Sol. Phys. 2018, 293, 44.

- Huang, Y.; Jia, P.; Cai, D.; Cai, B. Perception evaluation: A new solar image quality metric based on the multi-fractal property of texture features. Sol. Phys. 2019, 294, 133.

- So, C.W.; Yuen, E.L.H.; Leung, E.H.F.; Pun, J.C.S. Solar image quality assessment: A proof of concept using variance of Laplacian method and its application to optical atmospheric condition monitoring. Publ. Astron. Soc. Pac. 2024, 136, 044504.

- Chen, B.; Ding, G.X.; He, L.P. Solar X-ray and Extreme Ultraviolet Imager (X-EUVI) loaded onto China’s Fengyun-3E satellite. Light Sci. Appl. 2022, 11, 29.

- Hosu, V.; Lin, H.; Sziranyi, T.; Saupe, D. KonIQ-10k: An ecologically valid database for deep learning of blind image quality assessment. IEEE Trans. Image Process. 2020, 29, 4041–4056.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Jolliffe, I. Principal component analysis. In International Encyclopedia of Statistical Science; Lovric, M., Ed.; Springer: Berlin/Heidelberg, Germany, 2011; pp. 1094–1096.

- Yeganeh, H.; Wang, Z. Objective quality assessment of tone-mapped images. IEEE Trans. Image Process. 2012, 22, 657–667.

- Pertuz, S.; Puig, D.; Garcia, M.A. Analysis of focus measure operators for shape-from-focus. Pattern Recognit. 2013, 46, 1415–1432.

- Haralick, R.M.; Shanmugam, K.; Dinstein, I.H. Textural features for image classification. IEEE Trans. Syst. Man Cybern. 1973, SMC-3, 610–621.

- Deng, D.; Zhang, J.; Wang, T.; Su, J. A new algorithm of image quality assessment for photospheric images. Res. Astron. Astrophys. 2015, 15, 349–358.

- Krotkov, E. Focusing. Int. J. Comput. Vis. 1988, 1, 223–237.

- Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

- Kovesi, P. Image features from phase congruency. Videre: J. Comput. Vis. Res. 1999, 1, 1–26.

- Zhang, L.; Zhang, L.; Mou, X.; Zhang, D. FSIM: A feature similarity index for image quality assessment. IEEE Trans. Image Process. 2011, 20, 2378–2386.

- Cortes, C.; Vapnik, V. Support-vector networks. Mach. Learn. 1995, 20, 273–297.

- Chen, T.; Guestrin, C. XGBoost: A scalable tree boosting system. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016; pp. 785–794.

- Breiman, L. Random forests. Mach. Learn. 2001, 45, 5–32.

- Lemen, J.R.; Title, A.M.; Akin, D.J.; Boerner, P.F.; Chou, C.; Drake, J.F.; Duncan, D.W.; Edwards, C.G.; Friedlaender, F.M.; Heyman, G.F.; et al. The Atmospheric Imaging Assembly (AIA) on the Solar Dynamics Observatory (SDO). Sol. Phys. 2012, 275, 17–40.

| Parameter | Value |
|---|---|
| Type | Back-illuminated, frame transfer |
| Average quantum efficiency | 45% |
| Pixel resolution | 1024 × 1024 |
| Pixel size | 13 μm × 13 μm |
| Peak full well capacity | 100 ke− |
| Output responsivity | 3.5 μV/e− |
| Readout noise | 8 e− rms (1.33 MHz) |
| Output ports | 2 |
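As a worked check on the detector parameters above, the ideal per-pixel dynamic range and the output voltage swing follow directly from the tabulated full well, read noise and responsivity. The source does not state these derived quantities. The sketch ignores dark current, photon shot noise and ADC quantization, so treat the figures as upper bounds.

```python
import math

# Values transcribed from the detector table.
FULL_WELL_E = 100_000          # peak full well capacity (e-)
READ_NOISE_E = 8.0             # readout noise (e- rms at 1.33 MHz)
RESPONSIVITY_V_PER_E = 3.5e-6  # output responsivity (3.5 uV/e-)

# Ideal single-frame dynamic range: largest signal over the read-noise floor.
dynamic_range = FULL_WELL_E / READ_NOISE_E          # 12 500 : 1
dynamic_range_db = 20 * math.log10(dynamic_range)   # ~81.9 dB
dynamic_range_bits = math.log2(dynamic_range)       # ~13.6 bits

# Voltage at the output amplifier for a full well and for the noise floor.
full_well_swing_v = FULL_WELL_E * RESPONSIVITY_V_PER_E     # ~0.35 V
read_noise_uv = READ_NOISE_E * RESPONSIVITY_V_PER_E * 1e6  # ~28 uV rms

print(f"{dynamic_range:.0f}:1, {dynamic_range_db:.1f} dB, {dynamic_range_bits:.1f} bits")
print(f"full-well swing {full_well_swing_v:.2f} V, read noise {read_noise_uv:.0f} uV rms")
```

By the same arithmetic, a 14-bit converter spanning the full well would have a step of about 100 000 / 16 384 ≈ 6.1 e−. That step is just below the 8 e− read noise.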

| Degradation Type | L1 | L2 | L3 | L4 | L5 |
|---|---|---|---|---|---|
| Defocus Blur | Radius 3 | Radius 5 | Radius 9 | Radius 13 | Radius 17 |
| Motion Blur | 3 @ 0° | 5 @ 30° | 9 @ 45° | 13 @ 60° | 17 @ 90° |
| Gaussian Blur | 3, σ = 0.5 | 5, σ = 1.0 | 9, σ = 2.0 | 13, σ = 3.0 | 17, σ = 4.0 |
| Gaussian Noise | Var 0.0005 | Var 0.001 | Var 0.005 | Var 0.01 | Var 0.02 |
| Salt–Pepper Noise | Prob 0.0005 | Prob 0.001 | Prob 0.005 | Prob 0.01 | Prob 0.02 |
| Mixed Blur+Noise* | D3 + M3 @ 0° + G3,σ0.5 + N (G0.0005/S&P0.0005) | D5 + M5 @ 30° + G5,σ1.0 + N (G0.001/S&P0.001) | D9 + M9 @ 45° + G9,σ2.0 + N (G0.005/S&P0.005) | D13 + M13 @ 60° + G13,σ3.0 + N (G0.01/S&P0.01) | D17 + M17 @ 90° + G17,σ4.0 + N (G0.02/S&P0.02) |
| Overexposure | +10 | +15 | +20 | +30 | +40 |
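The degradation levels in the table above can be reproduced approximately with the NumPy sketch below, which operates on images normalized to [0, 1]. The source confirms none of the following conventions; they are assumptions:

- the PSF shapes: a uniform disk for defocus, a one-pixel-wide line of the given length and angle for motion blur, and a normalized Gaussian of the given kernel size and σ;
- that the noise variance and salt-and-pepper probability apply to the normalized intensities;
- that the overexposure offsets are additive 8-bit digital numbers.

The mixed row can be approximated by chaining the same functions.

```python
import numpy as np

# Level 1-5 parameters transcribed from the table above.
GAUSSIAN_BLUR = [(3, 0.5), (5, 1.0), (9, 2.0), (13, 3.0), (17, 4.0)]  # (size, sigma)
DEFOCUS_RADIUS = [3, 5, 9, 13, 17]
MOTION_BLUR = [(3, 0), (5, 30), (9, 45), (13, 60), (17, 90)]          # (length, degrees)
NOISE_VAR = [0.0005, 0.001, 0.005, 0.01, 0.02]
SALT_PEPPER_PROB = [0.0005, 0.001, 0.005, 0.01, 0.02]
OVEREXPOSURE_DN = [10, 15, 20, 30, 40]


def filter2d(img, kernel):
    """'Same'-size 2-D correlation with edge padding.

    The PSFs below are (near) point-symmetric, so correlation and convolution agree.
    """
    kh, kw = kernel.shape
    padded = np.pad(img, ((kh // 2, kh // 2), (kw // 2, kw // 2)), mode="edge")
    out = np.zeros(img.shape, dtype=float)
    for i in range(kh):
        for j in range(kw):
            out += kernel[i, j] * padded[i:i + img.shape[0], j:j + img.shape[1]]
    return out


def gaussian_kernel(size, sigma):
    ax = np.arange(size) - size // 2
    g = np.exp(-(ax ** 2) / (2 * sigma ** 2))
    k = np.outer(g, g)
    return k / k.sum()


def disk_kernel(radius):
    """Uniform disk PSF (assumed defocus model)."""
    ax = np.arange(-radius, radius + 1)
    yy, xx = np.meshgrid(ax, ax, indexing="ij")
    k = (xx ** 2 + yy ** 2 <= radius ** 2).astype(float)
    return k / k.sum()


def motion_kernel(length, angle_deg):
    """One-pixel-wide line PSF of odd `length` at `angle_deg` (0 = horizontal)."""
    k = np.zeros((length, length))
    c = length // 2
    t = np.deg2rad(angle_deg)
    for s in np.linspace(-c, c, 4 * length):  # dense sampling along the line
        k[int(round(c - s * np.sin(t))), int(round(c + s * np.cos(t)))] = 1.0
    return k / k.sum()


def add_gaussian_noise(img, var, rng):
    """Zero-mean Gaussian noise of variance `var`, clipped to [0, 1]."""
    return np.clip(img + rng.normal(0.0, np.sqrt(var), img.shape), 0.0, 1.0)


def add_salt_pepper(img, prob, rng):
    """Set a fraction `prob` of pixels to 0 or 1 with equal probability."""
    out = img.copy()
    u = rng.random(img.shape)
    out[u < prob / 2] = 0.0
    out[(u >= prob / 2) & (u < prob)] = 1.0
    return out


def overexpose(img, offset_dn, max_dn=255.0):
    """Additive brightness offset, assumed to be in 8-bit DN, then clipped."""
    return np.clip(img + offset_dn / max_dn, 0.0, 1.0)
```

For example, `filter2d(img, gaussian_kernel(*GAUSSIAN_BLUR[2]))` applies level-L3 Gaussian blur. `add_gaussian_noise(img, NOISE_VAR[2], np.random.default_rng(0))` adds level-L3 noise.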

| Category | Features | References |
|---|---|---|
| Brightness | Activity score, mean intensity | [30] |
| Sharpness | Mean gradient, Laplacian, Tenengrad | [31] |
| Texture | MSCN coefficient consistency; GLCM contrast, homogeneity, energy | [9,32] |
| Noise/Fidelity | MFGS; PSNR, SSIM, SNR vs. median-filtered version | [33,34,35] |
| Frequency | Log-Gabor responses, wavelet entropy | [36] |
| Spatial | Patch coordinates | [37] |
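The texture row above combines BRISQUE-style MSCN statistics [9] with Haralick GLCM descriptors [32]. A NumPy sketch of both for a single block follows. The 7 × 7 Gaussian window with σ = 7/6 and the stabilizing constant C = 1 are the usual BRISQUE choices for 8-bit-range data. The following are assumptions, not the authors' settings:

- using the mean product of neighboring MSCN coefficients as the directional statistic;
- the angle convention;
- the 16-level quantization;
- the one-pixel horizontal GLCM offset.

```python
import numpy as np


def filter2d(img, kernel):
    """'Same'-size 2-D correlation with edge padding."""
    kh, kw = kernel.shape
    padded = np.pad(img, ((kh // 2, kh // 2), (kw // 2, kw // 2)), mode="edge")
    out = np.zeros(img.shape, dtype=float)
    for i in range(kh):
        for j in range(kw):
            out += kernel[i, j] * padded[i:i + img.shape[0], j:j + img.shape[1]]
    return out


def gaussian_window(size=7, sigma=7 / 6):
    ax = np.arange(size) - size // 2
    g = np.exp(-(ax ** 2) / (2 * sigma ** 2))
    w = np.outer(g, g)
    return w / w.sum()


def mscn(block, c=1.0):
    """Mean-subtracted contrast-normalized coefficients (BRISQUE-style)."""
    p = block.astype(float)
    w = gaussian_window()
    mu = filter2d(p, w)
    sigma = np.sqrt(np.abs(filter2d(p * p, w) - mu * mu))
    return (p - mu) / (sigma + c)


def mscn_directional(block):
    """Mean product of neighboring MSCN coefficients (rows increase downward)."""
    m = mscn(block)
    return {
        "mscn_0": float((m[:, :-1] * m[:, 1:]).mean()),       # left-right pairs
        "mscn_45": float((m[1:, :-1] * m[:-1, 1:]).mean()),   # lower-left/upper-right
        "mscn_90": float((m[:-1, :] * m[1:, :]).mean()),      # up-down pairs
        "mscn_135": float((m[:-1, :-1] * m[1:, 1:]).mean()),  # upper-left/lower-right
    }


def glcm_features(block, levels=16, dx=1, dy=0):
    """Contrast, homogeneity and energy of a symmetric, normalized GLCM."""
    p = block.astype(float)
    span = p.max() - p.min()
    if span == 0:
        q = np.zeros(p.shape, dtype=int)
    else:
        q = np.minimum(((p - p.min()) / span * levels).astype(int), levels - 1)
    h, w = q.shape
    a, b = q[: h - dy, : w - dx], q[dy:, dx:]  # pixel pairs at offset (dy, dx)
    glcm = np.zeros((levels, levels))
    np.add.at(glcm, (a.ravel(), b.ravel()), 1.0)
    glcm = glcm + glcm.T
    glcm /= glcm.sum()
    i, j = np.indices(glcm.shape)
    return {
        "glcm_contrast": float((glcm * (i - j) ** 2).sum()),
        "glcm_homogeneity": float((glcm / (1.0 + (i - j) ** 2)).sum()),
        "glcm_energy": float(np.sqrt((glcm ** 2).sum())),  # sqrt(ASM), as in scikit-image
    }
```

On a checkerboard, horizontally and vertically adjacent MSCN coefficients have opposite signs, so `mscn_0` and `mscn_90` are negative. Diagonal neighbors share a sign, so the diagonal statistics are positive. This illustrates how the four directions separate oriented structure.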

| Feature Type | Classifier | Accuracy | Precision | Recall | F1 Score | AUC | Training Time (s) | Prediction Time (s) |
|---|---|---|---|---|---|---|---|---|
| Deep Features (HyperIQA PCA 2D) | SVM | 0.5311 | 0.6954 | 0.5311 | 0.5320 | 0.8328 | 161.11 | 21.85 |
| | XGBoost | 0.7727 | 0.7734 | 0.7727 | 0.7730 | 0.9641 | 0.66 | 0.015 |
| | Random Forest | 0.7951 | 0.7954 | 0.7951 | 0.7952 | 0.9691 | 6.65 | 0.208 |
| Handcrafted Features (22D) | SVM | 0.5742 | 0.5988 | 0.5742 | 0.5560 | 0.8887 | 209.34 | 29.65 |
| | XGBoost | 0.9750 | 0.9751 | 0.9750 | 0.9750 | 0.9990 | 1.49 | 0.024 |
| | Random Forest | 0.9696 | 0.9697 | 0.9696 | 0.9696 | 0.9985 | 10.68 | 0.146 |
| Fused Features (22 + 2D) | SVM | 0.5737 | 0.5986 | 0.5737 | 0.5556 | 0.8885 | 214.38 | 30.93 |
| | XGBoost * | 0.9791 | 0.9792 | 0.9791 | 0.9792 | 0.9992 | 1.25 | 0.024 |
| | Random Forest | 0.9736 | 0.9736 | 0.9736 | 0.9736 | 0.9988 | 10.75 | 0.157 |
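The "Fused Features (22 + 2D)" rows combine the 22 handcrafted indicators with deep HyperIQA features reduced to two dimensions by PCA. Below is a minimal NumPy sketch of that fusion step, together with support-weighted precision, recall and F1. It makes two assumptions:

- the deep features are already available as one row per block;
- PCA is fitted on the training split only and then applied unchanged to test data.

The text does not state how the authors fitted PCA or averaged the per-class metrics. However, support-weighted recall always reduces to accuracy, which is consistent with the identical Accuracy and Recall columns above.

```python
import numpy as np


def pca_fit(x, n_components=2):
    """Return the mean and the top principal axes of x (rows = samples)."""
    mean = x.mean(axis=0)
    _, _, vt = np.linalg.svd(x - mean, full_matrices=False)
    return mean, vt[:n_components]


def pca_transform(x, mean, components):
    """Project samples onto principal axes fitted on the training data."""
    return (x - mean) @ components.T


def fuse(handcrafted, deep, mean, components):
    """Concatenate handcrafted features with PCA-reduced deep features."""
    return np.hstack([handcrafted, pca_transform(deep, mean, components)])


def weighted_prf(y_true, y_pred):
    """Per-class precision/recall/F1 averaged with class-support weights."""
    y_true, y_pred = np.asarray(y_true), np.asarray(y_pred)
    labels = np.unique(y_true)
    weights = np.array([np.mean(y_true == c) for c in labels])
    prec, rec, f1 = [], [], []
    for c in labels:
        tp = np.sum((y_pred == c) & (y_true == c))
        fp = np.sum((y_pred == c) & (y_true != c))
        fn = np.sum((y_pred != c) & (y_true == c))
        p = tp / (tp + fp) if tp + fp > 0 else 0.0
        r = tp / (tp + fn) if tp + fn > 0 else 0.0
        prec.append(p)
        rec.append(r)
        f1.append(2 * p * r / (p + r) if p + r > 0 else 0.0)
    return float(weights @ np.array(prec)), float(weights @ np.array(rec)), float(weights @ np.array(f1))


# Usage with random stand-in data (shapes are illustrative only).
rng = np.random.default_rng(0)
hand_train, deep_train = rng.normal(size=(100, 22)), rng.normal(size=(100, 512))
mean, comps = pca_fit(deep_train)
x_train = fuse(hand_train, deep_train, mean, comps)  # 22 + 2 = 24 columns
```

The fused matrix can then be passed to any of the three classifiers in the table. XGBoost and Random Forest accept such a 24-column array directly.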

| Feature | Observed Variation |
|---|---|
| Low-level metrics (score, brightness, gradient, Laplacian, sharpness) | ≤0.1 (normalized units) |
| PSNR (Peak Signal-to-Noise Ratio) | ±3–4 dB |
| SNR (Signal-to-Noise Ratio) | ±3–4 dB |
| GLCM-based texture features | <0.01 |
| Spatial coordinates | Stable (no geometric misregistration) |
| Overall contrast (Michelson contrast) | 80 |
| Log-Gabor energy | 0.004 |
| MFGS (median filter–gradient similarity) | ≤0.025 |
| SSIM (structural similarity index) | <0.011 |
| HH entropy (high-frequency entropy) | 1.8 |
| PC1 (first principal component) | ≤0.05 |
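Several fidelity features in the table above are computed, per the feature-category table, against a median-filtered version of the same block rather than against a pristine reference. This turns full-reference metrics into no-reference ones. The median filter suppresses impulsive noise and very fine detail, so a large difference roughly indicates noise or sharp structure.

The NumPy sketch below computes PSNR, SNR, an MFGS-style score and the Michelson contrast for one block. The following are assumptions; consult Deng et al. for the exact MFGS definition:

- the 3 × 3 median window and the peak value;
- the forward-difference gradient;
- the form MFGS = 2 g_o g_m / (g_o² + g_m²), with g_o and g_m the summed gradient magnitudes of the original and median-filtered block.

SSIM is omitted for brevity.

```python
import numpy as np


def median3x3(block):
    """3x3 median filter with edge padding."""
    h, w = block.shape
    p = np.pad(block.astype(float), 1, mode="edge")
    stack = np.stack([p[i:i + h, j:j + w] for i in range(3) for j in range(3)])
    return np.median(stack, axis=0)


def psnr_db(ref, test, peak):
    mse = np.mean((ref - test) ** 2)
    return float("inf") if mse == 0 else float(10 * np.log10(peak ** 2 / mse))


def snr_db(ref, test):
    noise_energy = np.sum((ref - test) ** 2)
    if noise_energy == 0:
        return float("inf")
    return float(10 * np.log10(np.sum(ref ** 2) / noise_energy))


def total_gradient(a):
    """Sum of forward-difference gradient magnitudes."""
    dx = np.diff(a, axis=1)[:-1, :]
    dy = np.diff(a, axis=0)[:, :-1]
    return float(np.sum(np.hypot(dx, dy)))


def mfgs(block):
    """MFGS-style similarity in [0, 1]; 1 means median filtering changed nothing."""
    g_o = total_gradient(block.astype(float))
    g_m = total_gradient(median3x3(block))
    if g_o == 0 and g_m == 0:
        return 1.0
    return 2 * g_o * g_m / (g_o ** 2 + g_m ** 2)


def michelson_contrast(block):
    lo, hi = float(block.min()), float(block.max())
    return 0.0 if hi + lo == 0 else (hi - lo) / (hi + lo)


def fidelity_features(block, peak=255.0):
    """Hypothetical helper: metrics of the block against its median-filtered self."""
    b = block.astype(float)
    ref = median3x3(b)
    return {"PSNR": psnr_db(ref, b, peak), "SNR": snr_db(ref, b),
            "MFGS": mfgs(b), "contrast": michelson_contrast(b)}
```

Under this definition, an isolated hot pixel is removed entirely by the median filter and drives MFGS to 0. A smooth intensity ramp is almost unchanged by the filter and keeps MFGS close to 1.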

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Dai, S.; He, L.; Xu, S.; Sun, L.; Chen, H.; Yu, S.; Wu, K.; Wang, Y.; Xuan, Y. Quality Assessment of Solar EUV Remote Sensing Images Using Multi-Feature Fusion. Sensors 2025, 25, 6329. https://doi.org/10.3390/s25206329
