1. Introduction

Metal welding, as a critical process in modern manufacturing, is widely applied in industries such as construction and rail transport [

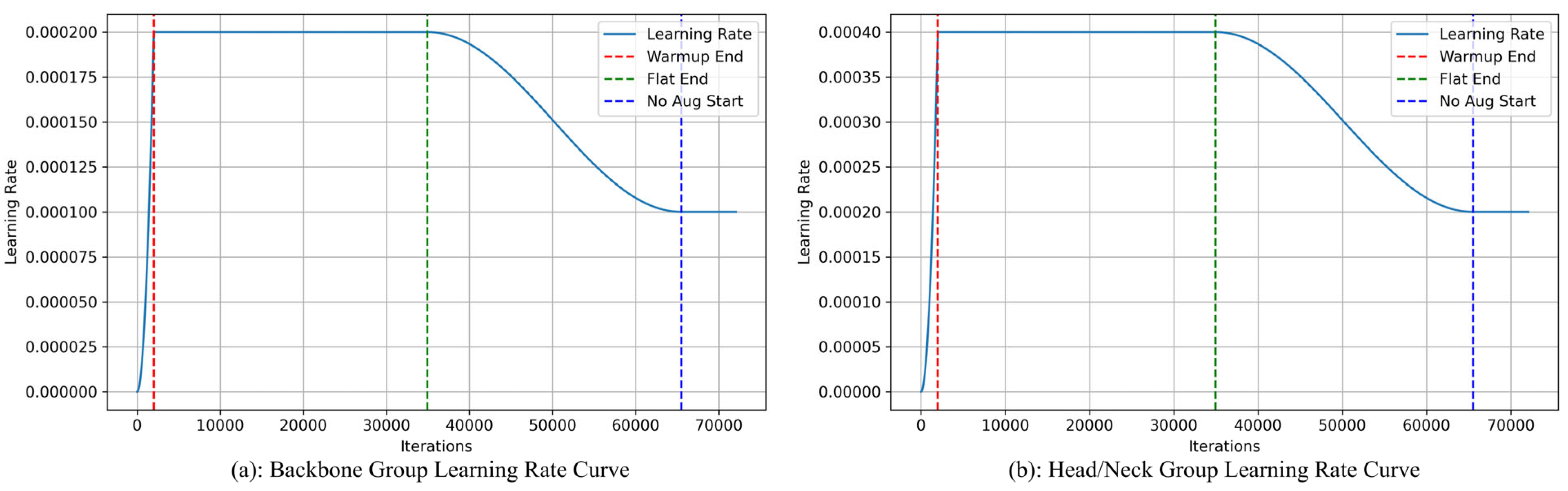

1]. The quality of welding directly impacts the structural strength, service life, and operational safety of products, thereby determining the reliability of manufacturing systems and the overall performance of engineering projects [

2]. In the context of increasingly complex industrial structures and continuously rising performance requirements, high-quality welding processes are not only a crucial foundation for ensuring the stable operation of equipment but also a key enabler for advancing high-end manufacturing, efficient assembly, and sustainable development. However, due to the involvement of multiple complex factors in the welding process, including material properties, welding process parameters, and external environmental conditions, welding defects are complex to avoid altogether [

3]. These defects not only weaken the mechanical properties of the welded joint but may also lead to equipment failure or even safety accidents, particularly in applications with extremely high structural integrity requirements, such as pressure vessels and rail transit. Even minor welding defects can result in catastrophic consequences. Therefore, welding defect detection is a critical step in improving welding process productivity and quality [

4]. Common defect types, such as cracks, porosity, and spatter [

5], are often difficult to detect in the early stages but can easily become stress concentration points during service, leading to fatigue failure, stress corrosion cracking, or even complete system dysfunction. Additionally, such hidden defects significantly increase post-production maintenance and operational costs, severely limiting quality control and sustainable development in manufacturing. Traditional welding defect detection primarily relies on non-destructive testing technologies such as X-rays, ultrasonic waves, thermographic testing, and visual inspection [

6]. Although these techniques provide a certain level of accuracy, performance is limited by the complexity of defects and irregular surface textures, leading to challenges in accuracy, real-time performance, and cost control. Furthermore, these methods rely heavily on manual operators, which in large-scale or high-precision inspection scenarios often leads to reduced detection efficiency, increased labour costs, and limited data processing capabilities [

7], particularly in complex structures or large-scale weld inspections, where modern manufacturing demands are not met. While some high-precision detection methods can identify minute defects, the application of such methods is often associated with high costs and low detection efficiency. Conversely, low-cost detection solutions may struggle to meet high-precision detection requirements. Therefore, achieving a reasonable trade-off between accuracy, processing speed, and resource cost is still a core challenge for welding defect detection technology.

With the rapid development of artificial intelligence technology, defect detection based on deep learning has gradually become a core approach in machine vision inspection. Deep learning object detection algorithms utilise convolutional neural networks (CNNs) to convert raw input information into more abstract, higher-dimensional features for learning, with strong feature representation and generalization capabilities that enable adaptation to more complex scenarios [8]. The application of deep learning methods to welding defect detection not only enables automatic identification and classification of defect types but also significantly improves detection efficiency and accuracy while reducing errors caused by human intervention, offering a promising solution for welding defect detection [9]. These techniques can be roughly divided into two categories. The first is the two-stage object detection algorithm, with typical representatives including Fast R-CNN and Mask R-CNN [10, 11]: it generates a set of candidate regions in the first stage and then performs classification and bounding box regression on these candidate regions in the second stage. These methods typically achieve high detection accuracy and are particularly suitable for accuracy-critical tasks. However, slow inference speeds limit their applicability to real-time detection tasks [12]. In contrast, one-stage detection methods represented by the You Only Look Once (YOLO) series [13, 14, 15, 16, 17], Real-Time Detection Transformer (RT-DETR) [18], and Single-Shot MultiBox Detector (SSD) [19] adopt an end-to-end architecture, directly predicting both the object category and location in the image without an intermediate candidate region generation process. These methods significantly improve inference speed and are suitable for applications with high real-time requirements. In recent years, such methods have garnered widespread attention for balancing detection accuracy and efficiency. However, these general-purpose object detection models still face significant challenges when directly applied to the highly specialized, fine-grained task of identifying welding defects. Welding defects typically exhibit small sizes, blurred boundaries, irregular shapes, and textures highly similar to the weld seam background [20].

In recent years, vision Transformer-based models have achieved impressive accuracy in object detection tasks [21]. DEIM (DETR with Improved Matching), a recently proposed end-to-end detection framework, demonstrates excellent detection performance in welding defect detection tasks. By introducing an optimized matching strategy, it significantly improves training convergence speed and matching stability, addressing the shortcomings of the original DETR in target allocation [22]. Additionally, DEIM designs a matching-aware loss function that dynamically adjusts the weights of low-quality matching samples, which improves the model’s resilience in complicated environments. Leveraging the Transformer’s global modelling capabilities, DEIM can better capture defect regions with complex structures and substantial background interference in weld seam images. However, despite DEIM’s advantages in detection accuracy and its simplified end-to-end workflow, its performance in weld defect detection still faces certain challenges. The standard convolutional backbone network adopted by DEIM has limited perception capabilities for minor, low-contrast defects, making the complete extraction of fine-grained semantic information challenging. Additionally, its feature fusion strategy is relatively shallow, posing a risk of missed detections for multi-scale defects, especially small ones [23]. Furthermore, while the global attention mechanism of the standard Transformer excels in overall context modelling, its perception capabilities remain insufficient when handling fine-grained defects such as minor cracks on weld surfaces, particularly in complex scenarios where defects have low contrast with the background, leading to feature expression confusion. In addition, the training process converges slowly, requiring numerous iterations to reach stability and thereby increasing both training time and computational cost. The one-to-one matching strategy based on the Hungarian algorithm yields very few positive samples and low matching quality, especially in small object detection, limiting the model’s learning effectiveness [24, 25].

To solve the aforementioned problems, this study proposes DEIM-SFA, a multi-module collaborative network for metal welding defect detection built on an improved DEIM algorithm. First, to strengthen feature retention and small object identification in the backbone during downsampling, a space-to-depth convolution (SPD-Conv) module is introduced at selected downsampling positions in the HGNetv2 backbone. Through a sub-pixel reordering mechanism [26], spatial information is mapped to the channel dimension, realizing lossless spatial-to-channel rearrangement and enabling efficient information transmission. Second, to strengthen feature learning and mitigate overfitting, the neck incorporates an FTPN module designed with a multi-level feature fusion strategy. This structure serves as the encoder component of the DEIM model, using deformable convolutional kernels to adapt to objects of different scales and effectively process features of varying scales. Finally, to more effectively retain channel information and enhance spatial modelling capabilities, the Efficient Multi-Scale Attention (EMA) mechanism [27] is introduced, which rearranges features and maps some channels to the batch dimension, enabling spatial semantic information to be expressed more evenly across each feature group and thereby enhancing the model’s contextual awareness. According to experimental results, the model enhances information retention, multi-scale feature fusion, and noise suppression in images, making the sensing process more robust to complex backgrounds and environmental disturbances. The primary contributions are as follows:

Proposing a downsampling method with a spatial-information-preserving design: The SPD-Conv module is integrated into the network backbone. Unlike traditional downsampling operations, SPD-Conv can rearrange feature maps without losing information, retaining fine spatial details and encoding them into the channel dimension, thereby providing the subsequent network with richer feature representations that are conducive to the identification of minor defects.

Designing a multi-scale dynamic feature fusion neck: The FTPN network was constructed as the model neck to replace the original feature fusion module. FTPN leverages its unique multi-scale dynamic focusing and feature diffusion mechanisms to adaptively aggregate and enhance features from different layers, significantly improving the model’s ability to model defects of varying sizes and blurred edges.

Incorporating an efficient context-aware attention mechanism: The EMA module is added to the network. This mechanism enables the model to efficiently establish global context dependencies through channel reorganisation and grouping across spatial dimensions, thereby more accurately focusing on the actual defect regions and effectively suppressing interference from complex background textures.

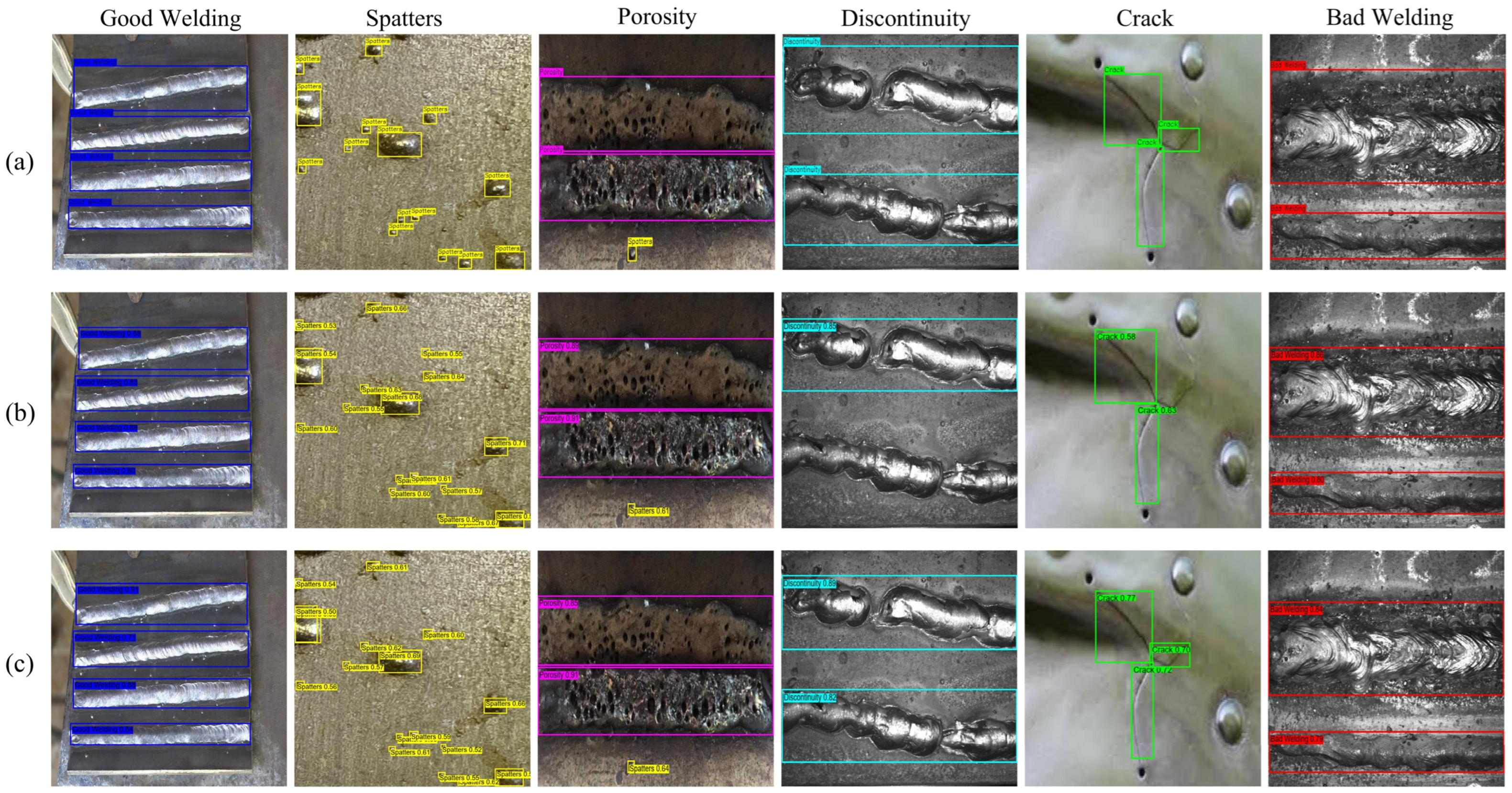

Constructing a high-quality industrial welding defect dataset: To realistically evaluate model performance and promote research in this field, an industrial welding defect dataset comprising 2733 images across six categories was collected, organised, and annotated. This dataset covers a wide range of welding defects encountered in actual applications and can serve as a rigorous standard for further research and verification.

2. Methodology

The architecture of the DEIM-SFA network is based on the current state-of-the-art single-stage object detector, DEIM (DETR with Improved Matching) [25]. DEIM was selected as the baseline model due to its excellent balance between detection accuracy and inference speed. The model adopts a highly modular design, offering five pre-configured versions: nano (n), small (s), medium (m), large (l), and extra-large (x). After comparative analysis, the DEIMs version was chosen as the fundamental framework for this investigation, as it offers the best compromise between model complexity and detection performance. The DEIM architecture consists of three main parts: an efficient backbone network, a bidirectional fusion neck, and a detection head with sophisticated optimization techniques. The backbone network is HGNetv2, composed of a Stem module, multiple HG-Blocks, and several convolutional modules, which together achieve multi-scale hierarchical feature extraction. These multi-scale features are then fed into a neck that combines a Feature Pyramid Network (FPN) and a Path Aggregation Network (PANet) [28, 29], which deeply fuses high-level semantic information with low-level spatial details through bidirectional information flow to generate feature maps rich in contextual information. Finally, the detection head uses these fused features for bounding box regression and class prediction. It combines two key training strategies for performance optimisation. First, the Dense One-to-One Matching strategy optimises the traditional Hungarian matching [30], assigning each object an appropriate prediction head to avoid neglecting small objects or minority-class objects. Second, a loss function sensitive to matching quality is introduced, enabling dynamic adjustment of weights for suboptimal samples to further enhance the model’s detection robustness in complex backgrounds.
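To make the one-to-one matching constraint concrete, the following minimal sketch assigns each ground-truth target to exactly one prediction query by minimising a total cost. It is an illustration only: the cost values are invented, the function name `one_to_one_match` is hypothetical, and exhaustive search stands in for the Hungarian algorithm, which reaches the same optimum far more efficiently. It does not reproduce DEIM’s dense matching strategy.

```python
from itertools import permutations


def one_to_one_match(cost):
    """Return the minimum-cost one-to-one assignment of targets to queries.

    cost[t][q] is a hypothetical matching cost between ground-truth target t
    and prediction query q. Brute force is used here for clarity; the
    Hungarian algorithm finds the same optimum in polynomial time.
    """
    num_targets = len(cost)
    num_queries = len(cost[0])
    best_total, best_assign = float("inf"), None
    # Try every way of giving each target its own distinct query
    for queries in permutations(range(num_queries), num_targets):
        total = sum(cost[t][q] for t, q in enumerate(queries))
        if total < best_total:
            best_total, best_assign = total, queries
    return list(enumerate(best_assign)), best_total


# Two targets, four queries (illustrative costs only)
cost = [
    [0.9, 0.2, 0.8, 0.7],
    [0.3, 0.6, 0.1, 0.9],
]
pairs, total = one_to_one_match(cost)
positives = len(pairs)  # only as many positive queries as there are targets
```

With two targets and four queries, only two queries receive positive supervision per image, which illustrates why one-to-one matching supplies few positive samples when targets are sparse or small.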

2.1. Improved Overall Network Architecture

The overall structure of DEIM-SFA is shown in Figure 1. To enhance the network’s performance in detecting targets of different scales, this paper modifies the HGNetv2 backbone structure of the original DEIM model. First, in the backbone network, the original Stage 2 spatial downsampling module is replaced with an SPD-Conv module. SPD-Conv achieves more efficient information compression through space-to-depth convolution while preserving rich spatial details and multi-scale semantic perception capabilities. Since SPD-Conv benefits from a large receptive field, its effect is not prominent in shallow feature maps; therefore, only the Stage 2 downsampling is replaced to achieve the best balance. Secondly, to address the issue of target details being weakened during continuous convolution, the EMA attention mechanism is introduced into all HG-Block modules. This module improves the network’s capacity to model small targets and salient features in complex backgrounds by adaptively reweighting spatial and channel information and directing the network to concentrate on crucial locations. Finally, to further improve feature fusion capabilities, the neck structure based on FPN and PANet in the original model is replaced with an improved FTPN architecture. The FTPN structure combines Multi-Scale Dynamic Fusion (MS-DF) with a Transformer encoder, which enables precise fusion of features across scales while incorporating global context, enhancing the recognition of defects of varying sizes and types. The selective application of the Transformer encoder to deep features ensures a balance between computational efficiency and performance. Collectively, this integration of three modules provides a framework that addresses the specific challenges of welding defect detection, including small target sizes, complex backgrounds, and diverse defect types, and provides superior accuracy and robustness compared to conventional approaches.

Our framework is the first to systematically integrate SPD-Conv, FTPN, and EMA within the DEIM network. This integration is theoretically motivated by the complementary strengths of the modules: SPD-Conv enhances local detail preservation, FTPN strengthens multi-scale fusion, and EMA improves contextual attention. Their joint design enables a balanced architecture tailored for welding defect detection. The model achieves a good balance between accuracy and computational cost, requiring only 11.87 million parameters and 36.35 GFLOPs. This lightweight design shows potential for deployment on edge devices and embedded sensors, which may enable real-time online monitoring in industrial environments.

2.2. SPD-Conv Module for Fine-Grained Feature Retention

In the task of detecting defects in metal welding, the accurate identification of small defects (such as welding cracks and spatter) has always been a significant challenge. Compared to large-scale structural changes, some defects exhibit characteristics such as small size, irregular shape, and blurred boundaries [31], so that they cover only a small portion of the pixels in the image. This leads to insufficient effective feature representation, thereby affecting the model’s detection accuracy. To address these concerns, the SPD-Conv module is deployed at the downsampling stage of the backbone network, replacing the original stride-based convolution and pooling layers. The theoretical motivation for this choice lies in its ability to preserve fine-grained spatial details while achieving efficient multi-scale feature representation. The general idea of SPD-Conv is that a Space-to-Depth transformation is first applied to redistribute spatial information into the channel dimension, and a stride-free convolution is then used to compress and fuse these features, thereby reducing spatial resolution while avoiding the loss of small-target details. Unlike strided convolution or pooling, which discard part of the activations, SPD-Conv keeps every input value by moving it into the channel dimension, which improves the modelling accuracy of weld defect boundaries, shapes, and distribution characteristics. Additionally, SPD-Conv enhances the expression capability of shallow-layer features while keeping the network lightweight, improving small object detection performance and overall robustness in weld defect detection tasks.

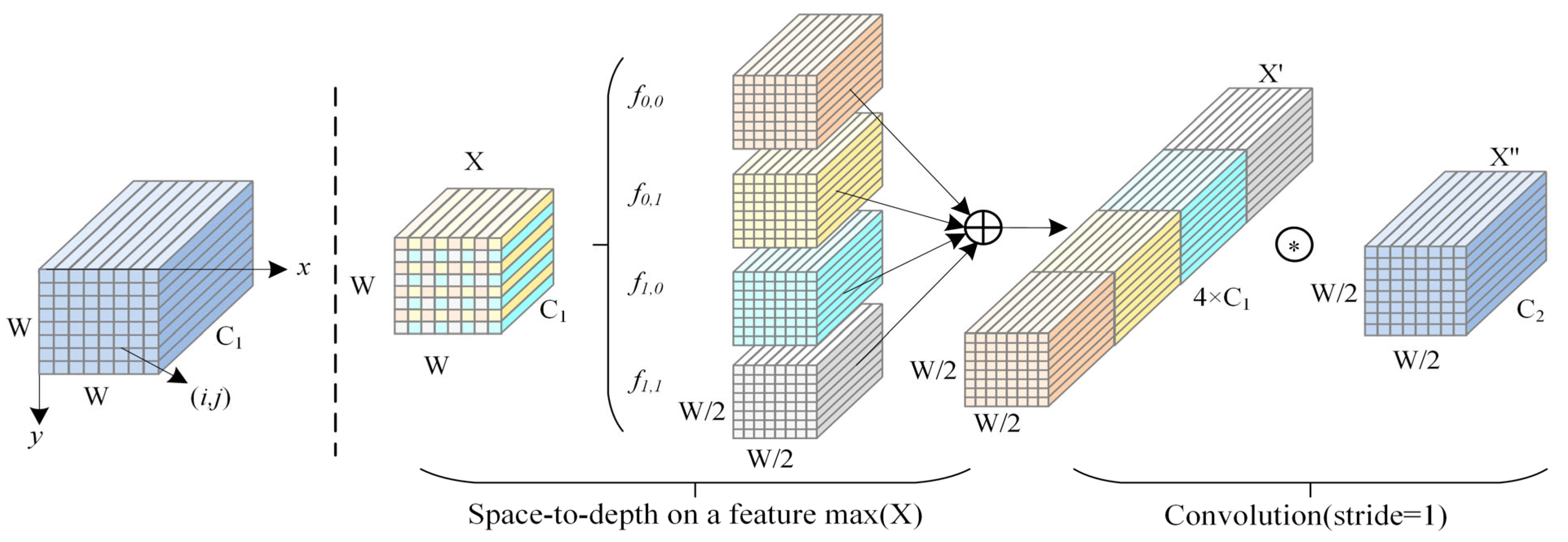

The SPD-Conv module consists of two core components: a Space-to-Depth transformation layer and a stride-free convolution layer [32]. These components enhance feature expression from both the spatial and channel perspectives, thereby improving the model’s ability to model structural details. The architecture of the SPD-Conv module is shown in Figure 2. First, a Space-to-Depth transformation is applied to the input feature map by specifying a stride $S$, which partitions and rearranges the spatial dimensions accordingly. The input feature map $X$ has a shape of $W \times W \times C_1$; it is divided into $S^2$ sub-feature maps $f_{x,y}$, defined as follows:

$$f_{x,y} = X[x : W : S,\; y : W : S], \qquad x, y \in \{0, 1, \dots, S-1\}$$

These sub-feature maps are then concatenated along the channel axis to generate a feature map $X'$ of size $\frac{W}{S} \times \frac{W}{S} \times S^2 C_1$. When $S = 2$, the input is divided into four sub-feature maps $f_{0,0}$, $f_{0,1}$, $f_{1,0}$, and $f_{1,1}$, each of dimension $\frac{W}{2} \times \frac{W}{2} \times C_1$. After concatenation, the resulting feature map has four times the original number of channels. This process preserves the fine-grained structural information of the original space and encodes it into the channel dimension in a more compact form, thereby significantly enhancing the network’s ability to model local textures and edge details.
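The Space-to-Depth rearrangement described above can be sketched in a few lines of plain Python. This is a minimal illustration on nested lists rather than the implementation used in the paper; the function name `space_to_depth` and the toy 4 × 4 × 1 input are assumptions made for the example.

```python
def space_to_depth(x, s=2):
    """Rearrange an H x W x C nested list into (H/s) x (W/s) x (s*s*C).

    No value is discarded: each s x s spatial block is moved into the
    channel axis, in the order f(0,0), f(0,1), ..., f(s-1,s-1).
    """
    h, w = len(x), len(x[0])
    if h % s or w % s:
        raise ValueError("height and width must be divisible by s")
    out = []
    for i in range(h // s):
        row = []
        for j in range(w // s):
            channels = []
            # Sub-map f(dx, dy) contributes the pixel at offset (dx, dy)
            for dx in range(s):
                for dy in range(s):
                    channels.extend(x[i * s + dx][j * s + dy])
            row.append(channels)
        out.append(row)
    return out


# Toy input: 4 x 4 map with one channel holding the values 0..15
x = [[[4 * r + c] for c in range(4)] for r in range(4)]
y = space_to_depth(x, s=2)  # 2 x 2 map with 4 channels
```

Here `y[0][0]` holds the four values of the top-left 2 × 2 block, so the spatial resolution is halved while all sixteen input values survive in the channel dimension.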

Finally, a stride-free convolution layer with $C_2$ filters (stride = 1) [33] is applied to $X'$ to aggregate and compress the high-dimensional channel features, extracting discriminative semantic representations and transforming $X'$ into $X''$, as shown in the following equation:

$$X'' = \mathrm{Conv}_{\mathrm{stride}=1}(X'), \qquad X' \in \mathbb{R}^{\frac{W}{S} \times \frac{W}{S} \times S^2 C_1} \;\rightarrow\; X'' \in \mathbb{R}^{\frac{W}{S} \times \frac{W}{S} \times C_2}$$

Unlike traditional downsampling using strided convolution, SPD-Conv avoids further compression of the spatial dimension. This convolution operation aims to retain as much distinctive feature information as possible, effectively preventing the loss of small target information. The final output feature map $X''$ has dimensions of $\frac{W}{S} \times \frac{W}{S} \times C_2$.
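As a worked shape example, consider illustrative sizes that are assumptions rather than values from the paper: $W = 80$, $C_1 = 64$, $S = 2$, and $C_2 = 128$.

```latex
\begin{aligned}
X   &\in \mathbb{R}^{80 \times 80 \times 64}
      && 80 \cdot 80 \cdot 64 = 409{,}600 \text{ values} \\
X'  &\in \mathbb{R}^{40 \times 40 \times 256}
      && S^2 C_1 = 4 \cdot 64 = 256,\quad 40 \cdot 40 \cdot 256 = 409{,}600 \text{ values} \\
X'' &\in \mathbb{R}^{40 \times 40 \times 128}
      && \text{stride-1 convolution with } C_2 = 128 \text{ filters}
\end{aligned}
```

The element count is unchanged between $X$ and $X'$, so the resolution reduction itself discards nothing; any compression happens only in the learned stride-1 convolution that maps 256 channels to 128.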

2.3. FTPN Neck Network for Deep Multi-Scale Fusion

In welding defect detection, the choice of the Feature-focused Diffusion Pyramid Network (FTPN) as the neck architecture is theoretically motivated by the need to enhance multi-scale feature representation and cross-level interaction. Traditional neck networks based on FPN and PANet employ linear top-down and bottom-up pathways. While this design establishes cross-level connections, the depth and breadth of feature interactions are limited, leading to insufficient non-linear fusion of high-resolution details from shallow layers with high-order semantic information from deep layers, thereby limiting the model’s ability to characterise defects with extreme sizes or ambiguous morphologies [

34,

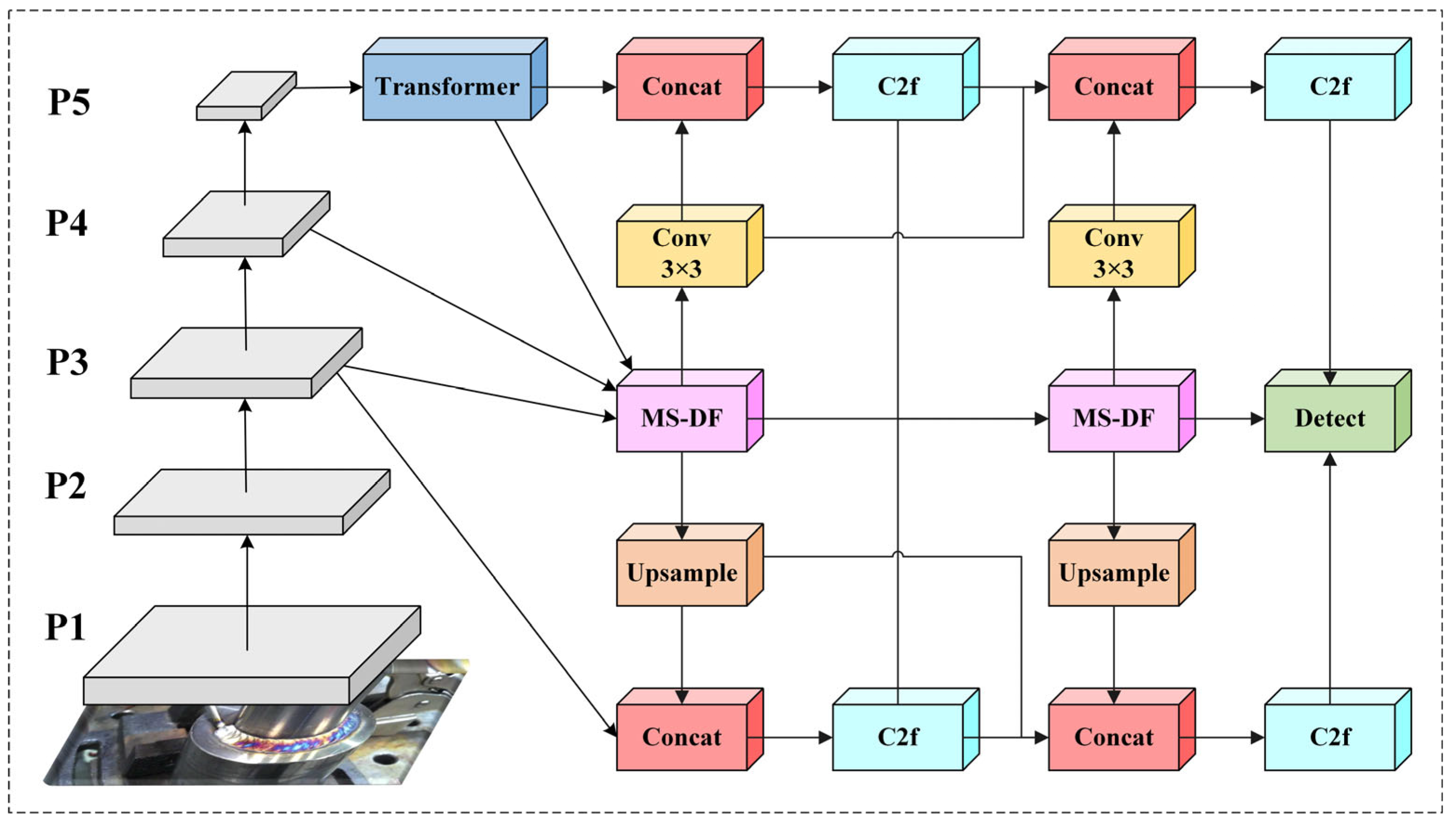

35]. To overcome this bottleneck, FTPN addresses this limitation by introducing an iterative, bidirectional feature refinement mechanism through the Multi-Scale Dynamic Fusion (MS-DF) module, enabling deeper and more effective multi-scale feature integration while minimizing semantic information loss. Furthermore, a lightweight Transformer encoder is applied to the highest-level feature map (P5), which contains the strongest semantic information, in order to enhance global context modeling and establish long-range dependencies via self-attention. This significantly improves the model’s ability to identify welding defects in complex backgrounds. Its structural diagram is shown in

Figure 3. The Transformer encoder process can be expressed as:

where

X represents the input high-level feature map,

Flatten () denotes flattening the input feature map along the spatial dimension into a sequence format, and PE is a learnable position encoding used to introduce spatial position information and preserve positional signals.

TransformerEncoder () represents the main body of the encoder, typically consisting of multiple layers of multi-head self-attention and feedforward networks, used to model global dependencies within the sequence.

Reshape () is used to restore the sequence features output by the encoder to their original spatial feature map shape; the final output Y is the high-level semantic feature map. Through this mechanism, the Transformer encoder significantly boosts the feature map’s spatial coherence and semantic representation capacity, providing more discriminative semantic support for subsequent multi-scale feature fusion and defect detection. Then, the three feature maps (P3, P4, P5) are fused at multiple scales through the first-stage MS-DF module, and the fused features are upsampled to the P3 layer and downsampled to the P5 layer for feature propagation. Subsequently, the second-stage MS-DF module performs a second multi-scale fusion on the output of the first stage and again conducts bidirectional feature propagation. Finally, three enhanced multi-scale feature maps are output, achieving efficient multi-scale feature fusion and bidirectional information flow.
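The flatten, encode, and reshape steps described above amount to simple tensor bookkeeping around the encoder. The sketch below shows only that plumbing on nested lists: the function name `encode_high_level_map` is hypothetical, and the `encoder` argument is a stand-in callable (an identity function in the usage lines), not a real self-attention stack.

```python
def encode_high_level_map(x, pos_enc, encoder):
    """Apply Reshape(encoder(Flatten(X) + PE)) to an H x W x C nested list.

    pos_enc holds one length-C vector per spatial position (H*W entries);
    encoder is any callable mapping a token sequence to a sequence of the
    same length.
    """
    h, w = len(x), len(x[0])
    # Flatten: H x W x C -> sequence of H*W tokens, each of length C
    tokens = [list(x[i][j]) for i in range(h) for j in range(w)]
    # Add the position encoding token by token
    tokens = [[v + p for v, p in zip(tok, pe)] for tok, pe in zip(tokens, pos_enc)]
    encoded = encoder(tokens)
    if len(encoded) != h * w:
        raise ValueError("encoder must preserve the sequence length")
    # Reshape: sequence of H*W tokens -> H x W x C
    return [[encoded[i * w + j] for j in range(w)] for i in range(h)]


# Toy 2 x 3 map with 2 channels, zero position encoding, identity "encoder"
x = [[[r, c] for c in range(3)] for r in range(2)]
zero_pe = [[0, 0] for _ in range(6)]
y = encode_high_level_map(x, zero_pe, encoder=lambda seq: seq)
```

With a zero position encoding and an identity encoder the output equals the input, which confirms that flattening and reshaping are exact inverses; in a real model the encoder would mix information across all H·W positions before the map is restored.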

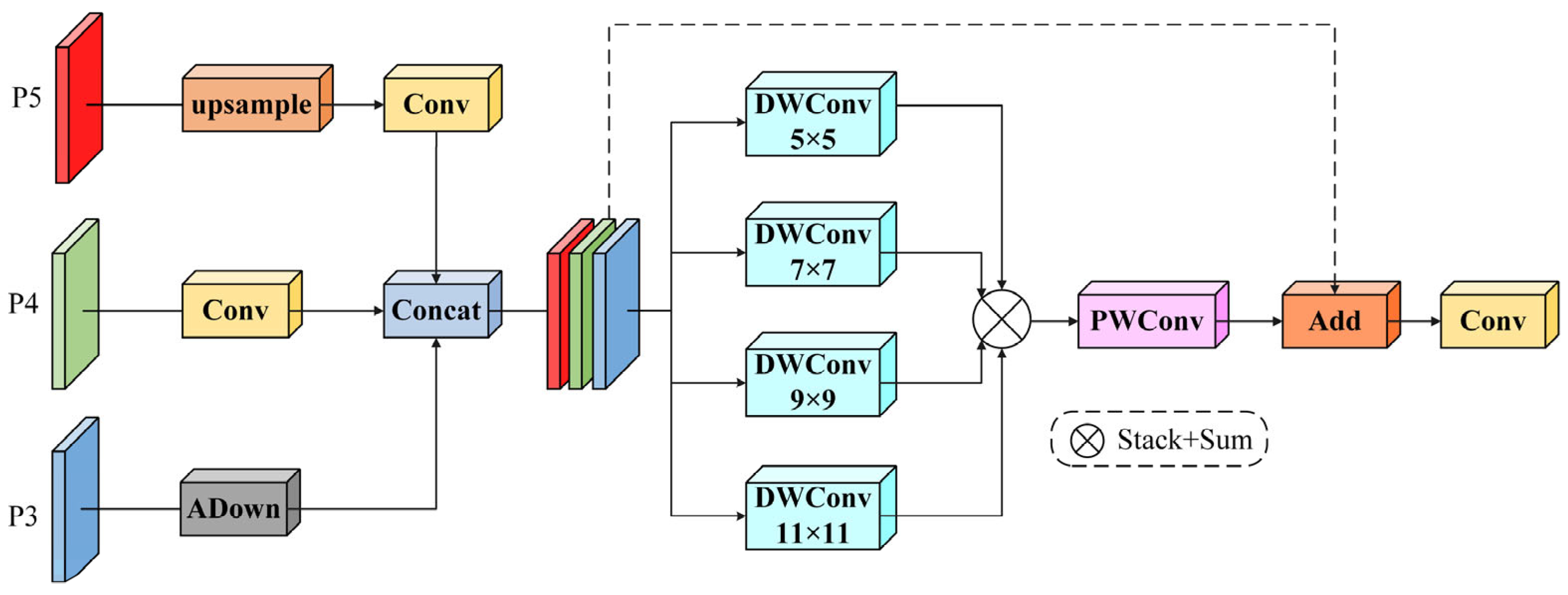

In FTPN, the MS-DF module is the core innovative unit, specifically designed to process feature inputs at three different scales. MS-DF dynamically integrates cross-scale features by simultaneously analyzing both adjacent-level and same-level feature representations, integrating cross-layer information to compensate for the lack of spatial detail in shallow feature maps and enabling more accurate capture of defect changes. The structure is shown in Figure 4. This module first separates the inputs by scale and applies a different operation to each. The input P3 layer undergoes feature downsampling via the Adown module, reducing the input feature size while extracting more representative features, thereby preserving low-level detail information. The input P4 layer passes through a convolutional layer, while the input P5 layer first undergoes upsampling followed by convolution. Subsequently, the concatenated features are processed through multi-kernel depthwise convolutional aggregation, which can be formally expressed as:

where $C$ denotes the concatenated feature maps. Residual connections are used to enhance feature expression, and finally, a 1 × 1 convolution is applied to adjust the output dimensions, ensuring that low-level high-resolution features and high-level semantic features are complementary and enhance each other under an optimized fusion strategy. The design of the MS-DF module implicitly adjusts the contribution of features across layers. Through an optimized feature fusion strategy, the high-frequency details of shallow features and the abstract semantics of deep features are deeply integrated, significantly improving the model’s context modeling capabilities.
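The scale alignment that precedes concatenation in MS-DF can be checked with simple shape bookkeeping. The sketch below rests on assumptions not stated in the text: pyramid strides of 8, 16, and 32 for P3, P4, and P5, resampling by a factor of 2, and a common hypothetical width `hidden_channels` for every branch. The function name `msdf_branch_shapes` is likewise illustrative.

```python
def msdf_branch_shapes(input_size=640, hidden_channels=128):
    """Return (height, width, channels) of the concatenated MS-DF input."""
    # Assumed pyramid strides for P3, P4, P5 (not given in the text)
    sizes = {
        "P3": input_size // 8,
        "P4": input_size // 16,
        "P5": input_size // 32,
    }
    aligned = {
        "P3": sizes["P3"] // 2,  # downsampling branch halves the resolution
        "P4": sizes["P4"],       # plain convolution keeps the resolution
        "P5": sizes["P5"] * 2,   # upsampling doubles it before convolution
    }
    if len(set(aligned.values())) != 1:
        raise ValueError("branches do not align for this input_size")
    size = aligned["P4"]
    # Three branches of hidden_channels each are concatenated channel-wise
    return size, size, 3 * hidden_channels


shape = msdf_branch_shapes(640, 128)
```

Under these assumptions a 640 × 640 input yields three 40 × 40 branches, so the concatenated map has 40 × 40 spatial positions and three times the branch width in channels before the depthwise aggregation and 1 × 1 convolution are applied.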

2.4. Efficient Multi-Scale Attention (EMA) Mechanism for Feature Refinement

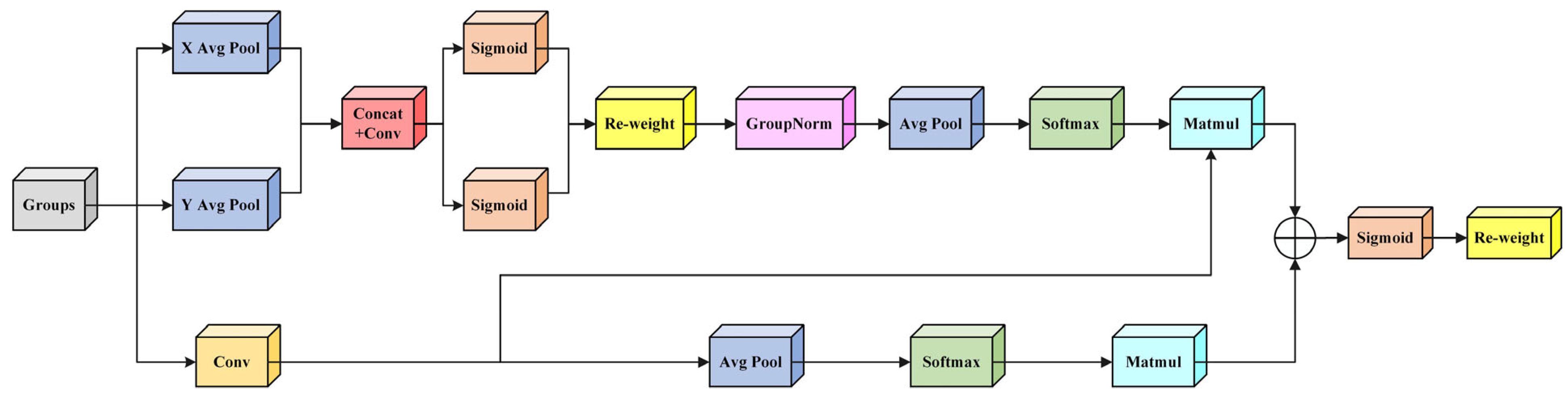

The selection of the Efficient Multi-scale Attention (EMA) mechanism is theoretically motivated by the intrinsic characteristics of welding defect detection tasks. The baseline model employs the HGNetv2 backbone network, which integrates the classic Efficient Squeeze-and-Excitation (ESE) attention mechanism [36]. Its core mechanism utilizes global spatial pooling operations to achieve dimensional compression from two-dimensional features to one-dimensional channel descriptors, thereby establishing dependencies between channels. While this method effectively captures inter-channel relationships, it comes at the cost of losing spatial structural information. For metal welding defect detection tasks, defects such as cracks, porosity, and spatter exhibit highly irregular and localised spatial distributions. If spatial position information is lost during feature extraction, the model will struggle to accurately identify the prominent features of these local regions, leading to reduced recognition capabilities for small targets or weakly textured defects. ESE is therefore a suboptimal choice for dense prediction tasks that require precise spatial localization. By contrast, EMA is explicitly designed to address this shortcoming. Its theoretical advantage lies in its joint modeling of multi-scale spatial dependencies and cross-channel interactions. Through directional pooling along height and width, EMA preserves global spatial context while maintaining orientation-aware representations. At the same time, local convolutions capture fine-grained structural details, and the cross-spatial attention mechanism adaptively calibrates features across regions. This synergistic design allows EMA to retain spatial awareness without sacrificing computational efficiency. The network architecture is shown in Figure 5. The EMA module is composed of two parts, parallel subnetworks and a cross-spatial attention mechanism, achieving synergistic optimization of directional perception and regional modeling. The spatial awareness of EMA is realized through directional pooling and cross-spatial interaction. Specifically, the input features are grouped along the channel dimension, and three parallel paths are used to extract attention weights. The first two paths lie in the 1 × 1 convolution branch and use two separate 1D adaptive pooling operations that aggregate features globally along each spatial dimension (height and width), capturing global contextual information in different spatial directions. The third path employs a 3 × 3 convolution to capture local spatial detail. The outputs of the branches are then combined through cross-spatial interaction (including feature calibration and matrix interaction): cross-spatial attention fusion is achieved through bidirectional softmax matrix multiplication, and finally, the original features are adaptively adjusted using the dynamically generated attention weights. The structure reduces computational complexity by folding the channel groups into the batch dimension. By exploiting hierarchical feature extraction across multiple scales and synergistic spatial-channel interactions, the framework achieves efficient feature enhancement while keeping the input and output dimensions unchanged.
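The difference between global pooling and the directional pooling used in the first two EMA paths can be seen on a toy feature map. The sketch below covers only the pooling step, not the full EMA module; the function name `directional_pool` and the 3 × 4 single-channel map with one bright "defect" pixel are assumptions made for illustration.

```python
def directional_pool(fmap):
    """Average a single-channel H x W map along width and along height."""
    h, w = len(fmap), len(fmap[0])
    # One value per row: pooling over the width keeps the vertical position
    pooled_h = [sum(row) / w for row in fmap]
    # One value per column: pooling over the height keeps the horizontal position
    pooled_w = [sum(fmap[i][j] for i in range(h)) / h for j in range(w)]
    return pooled_h, pooled_w


# Toy map with a single strong response at row 1, column 1
fmap = [
    [0.0, 0.0, 0.0, 0.0],
    [0.0, 8.0, 0.0, 0.0],
    [0.0, 0.0, 0.0, 0.0],
]
pooled_h, pooled_w = directional_pool(fmap)
# Global average pooling collapses the whole map to one number
global_avg = sum(map(sum, fmap)) / (len(fmap) * len(fmap[0]))
```

The two directional descriptors still peak at row 1 and column 1, so the location of the response can be recovered from them, whereas the single global average carries no positional information at all.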

The integration of EMA strengthens the network’s capacity to capture multi-scale contextual information and adaptively adjust channel feature responses. In the HGNetv2 backbone network, this improves the model’s ability to model long-range dependencies and multi-scale contextual information, significantly enhancing performance in object detection tasks. This module enables the network to focus on more discriminative features, thereby improving overall performance. Experimental results show that using EMA as the feature aggregation method achieves superior detection and segmentation accuracy compared to the original model’s ESE aggregation method in scenes with complex backgrounds. Overall, the EMA attention mechanism serves as an efficient feature aggregation module for the HGNetv2 backbone network, enhancing feature representation capabilities while maintaining computational efficiency, thereby providing a robust feature foundation for downstream visual tasks.

4. Conclusions

This paper addresses key challenges in industrial welding scenarios, such as low feature recognition accuracy in complex backgrounds and insufficient multi-scale information fusion, by proposing a high-performance detection network named DEIM-SFA. The model achieves this through systematic optimisation of the baseline architecture DEIMs in four respects: (1) introducing an information-lossless SPD-Conv downsampling module, inspired by the space-to-depth concept, to better preserve the fine-grained features required for identifying minor defects; (2) integrating an improved EMA attention mechanism, derived from existing attention structures but enhanced with spatial perception capability, thereby enabling adaptive refinement of key features in complex weld textures; (3) constructing an FTPN deep fusion neck to achieve more robust and efficient multi-scale feature interaction; (4) systematically combining these three modules within DEIM for the first time to construct a principled, task-oriented framework tailored to welding defect detection. Extensive experimental and analytical results demonstrate that the proposed DEIM-SFA significantly outperforms various mainstream detectors, including the latest YOLO series and RT-DETR, across multiple core evaluation metrics. Especially when handling defects with small sizes, blurred boundaries, and complex textures, the model strikes a better balance between computational cost and performance while maintaining high detection accuracy.

In practical deployment, DEIM-SFA can serve as an intelligent visual sensing node on edge devices within Industrial Internet of Things systems, providing high-precision defect detection data to enhance product quality, operational safety, and structural lifespan, thereby supporting smart manufacturing and critical infrastructure monitoring. Although this study focuses on visual sensing, the modular framework of DEIM-SFA can be extended to other sensing modalities, offering great potential in multimodal sensing applications. The overall performance of the improved model has been enhanced, but some limitations remain, particularly in detection speed. In future work, a systematic sensitivity analysis of key parameters will be conducted, which is expected to provide valuable insights into the robustness and optimal configuration of DEIM-SFA and to further guide model design and deployment. In addition, lightweight techniques such as model quantization and pruning will be explored to enable real-world deployment and validation, including assessments of memory consumption, latency, and robustness in practical industrial environments. Multimodal sensor data will also be integrated to enhance model stability and expand the application scope to more complex and diverse industrial scenarios.