DocCPLNet: Document Image Rectification via Control Point and Illumination Correction

Abstract

1. Introduction

2. Related Work

2.1. Geometric Unwarping

2.2. Document Illumination Correction

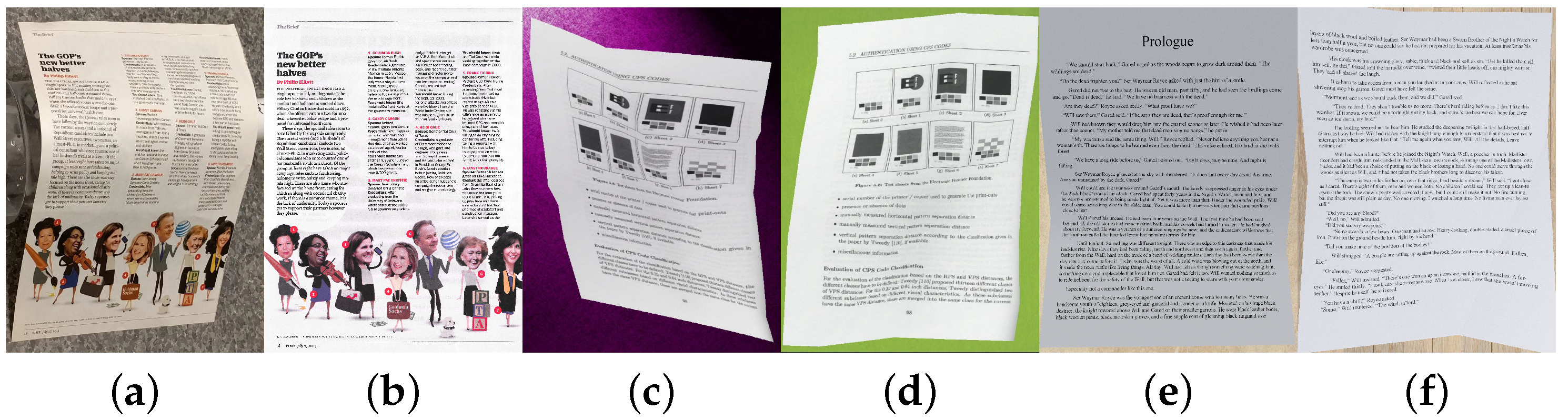

3. Dataset

4. Methodology

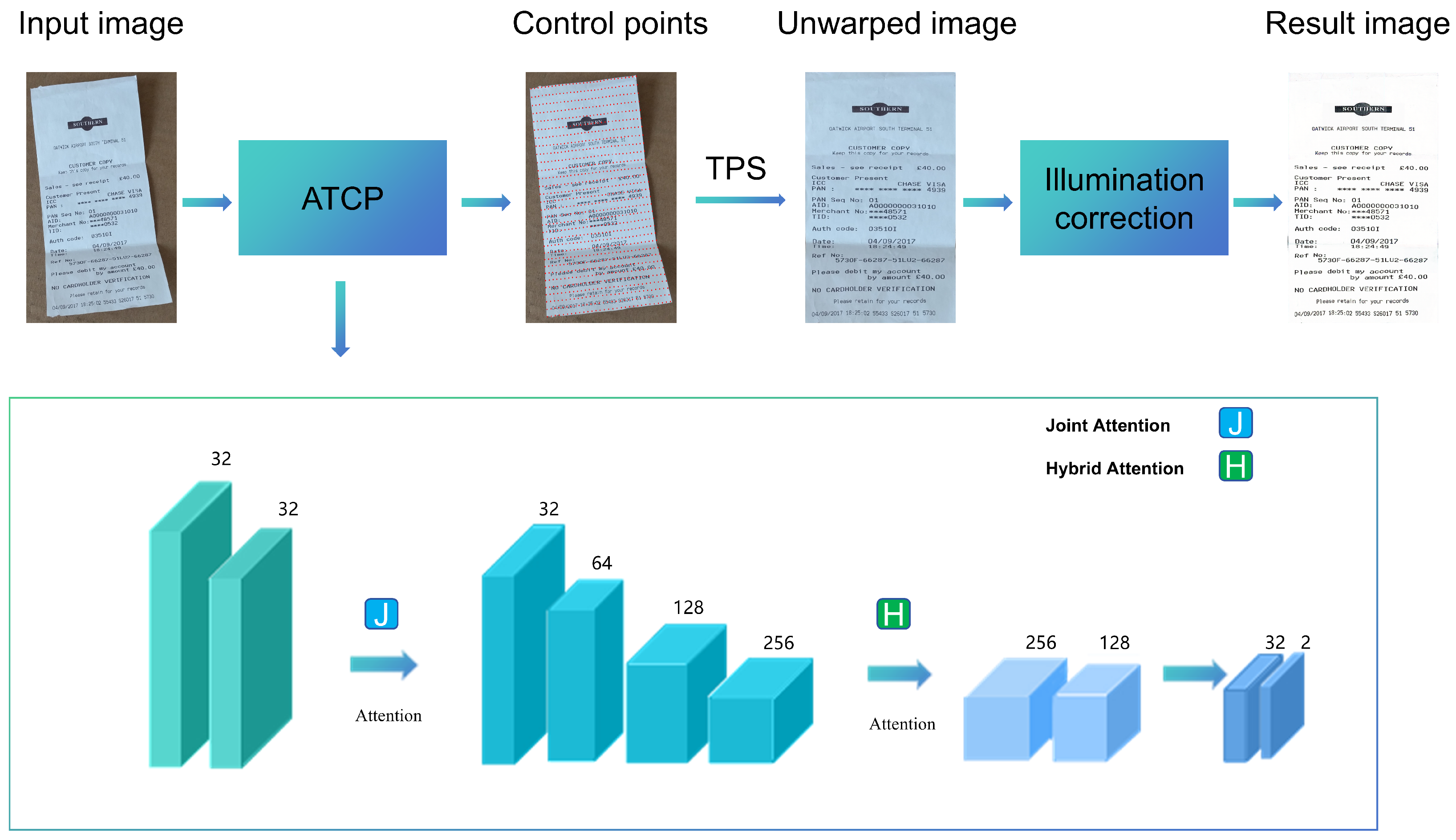

4.1. Architecture Overview

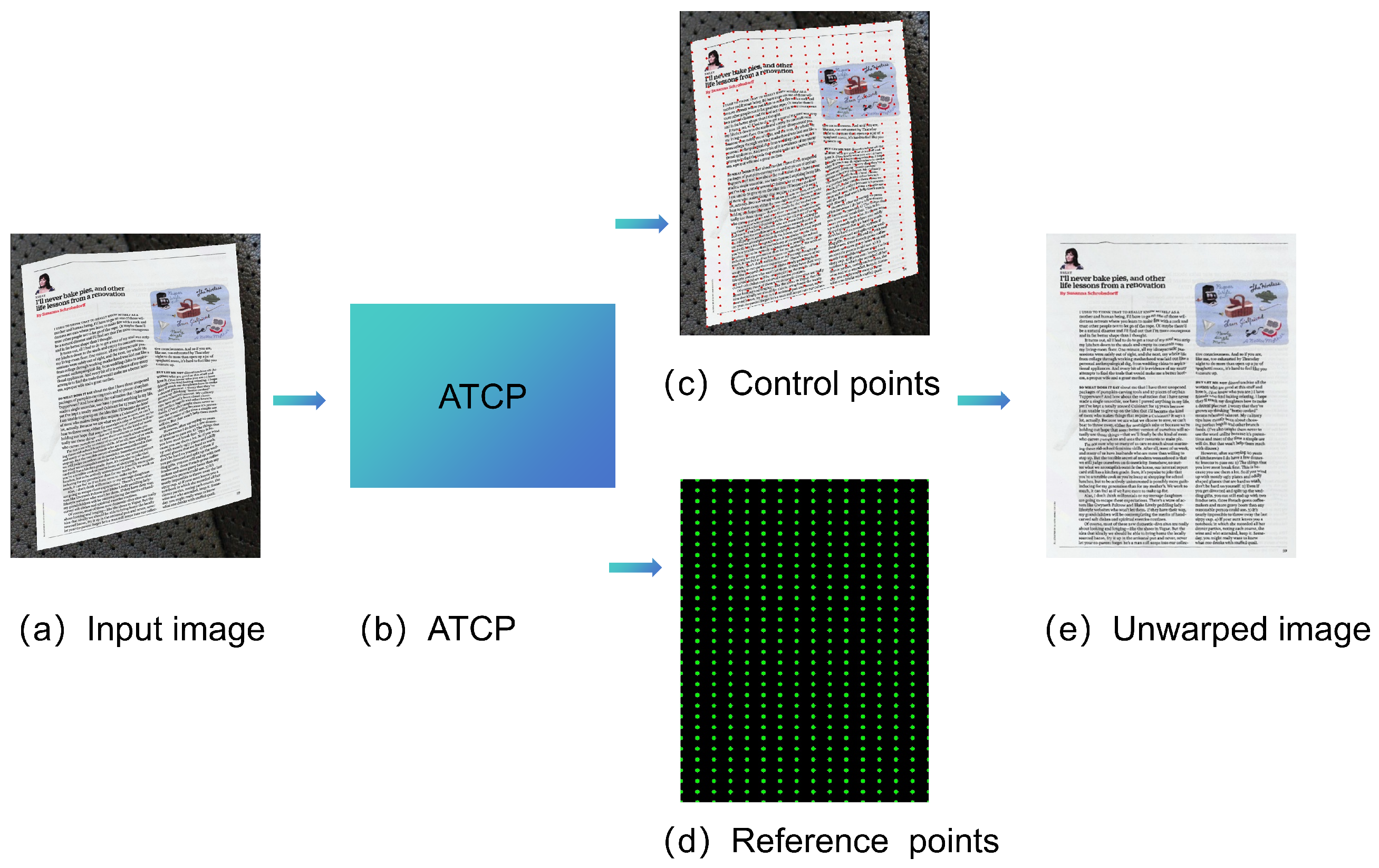

4.2. Geometric Unwarping Network

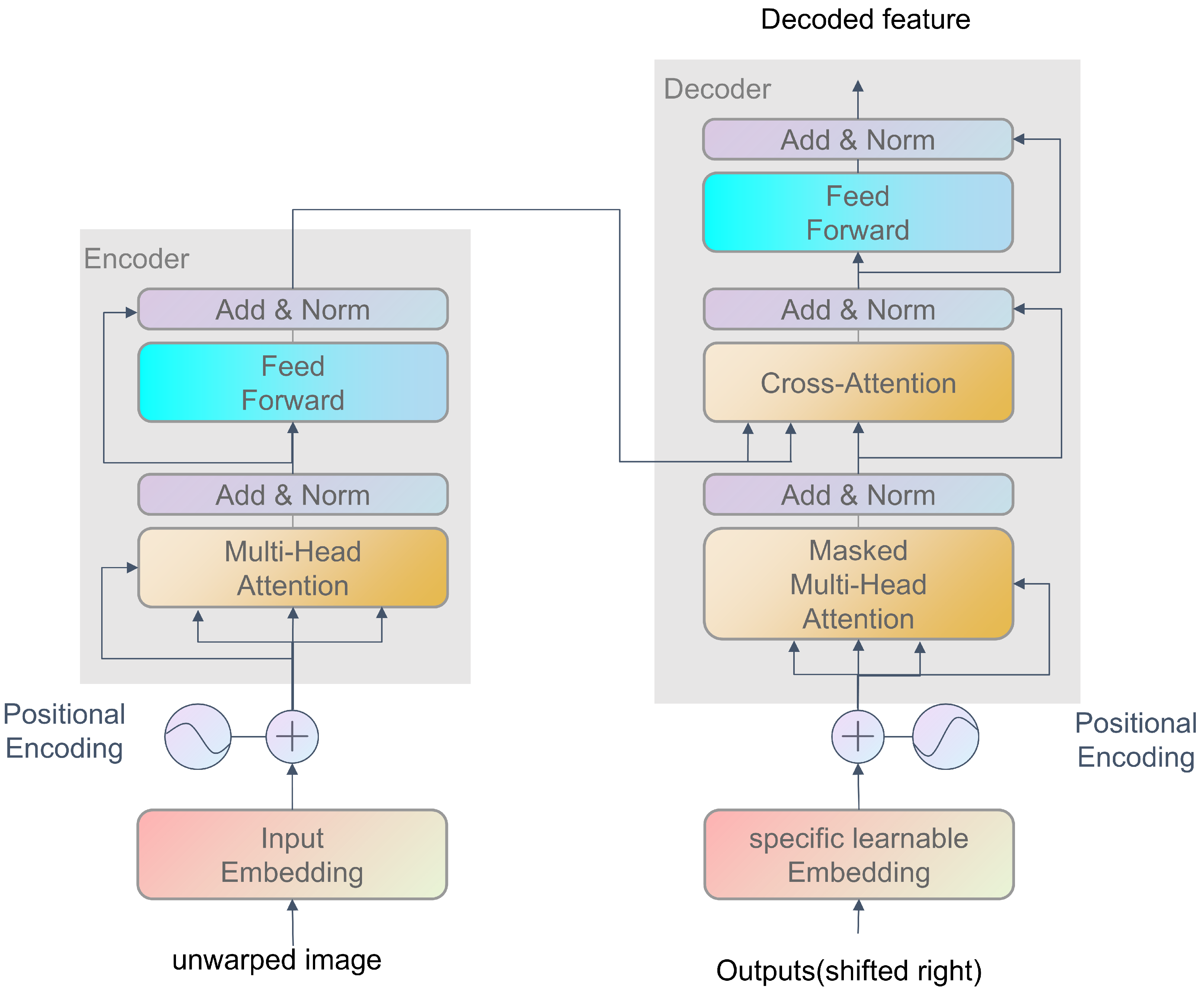

4.3. Illumination Correction Network

5. Experiments

5.1. Evaluation Metrics

5.2. Implementation Details

5.3. Experimental Results

5.4. Experimental Ablation

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Zhang, L.; Zhang, Y.; Tan, C. An improved physically-based method for geometric restoration of distorted document images. IEEE Trans. Pattern Anal. Mach. Intell. 2008, 30, 728–734.

2. Brown, M.S.; Seales, W.B. Document restoration using 3D shape: A general deskewing algorithm for arbitrarily warped documents. In Proceedings of the Eighth IEEE International Conference on Computer Vision, ICCV 2001, Vancouver, BC, Canada, 7–14 July 2001; Volume 2, pp. 367–374.

3. Meng, G.; Wang, Y.; Qu, S.; Xiang, S.; Pan, C. Active flattening of curved document images via two structured beams. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 3890–3897.

4. Ma, K.; Shu, Z.; Bai, X.; Wang, J.; Samaras, D. Docunet: Document image unwarping via a stacked u-net. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 4700–4709.

5. Xie, G.W.; Yin, F.; Zhang, X.Y.; Liu, C.L. Document dewarping with control points. In Proceedings of the Document Analysis and Recognition–ICDAR 2021: 16th International Conference, Lausanne, Switzerland, 5–10 September 2021; Proceedings, Part I 16. Springer: Berlin/Heidelberg, Germany, 2021; pp. 466–480.

6. Meijering, E. A chronology of interpolation: From ancient astronomy to modern signal and image processing. Proc. IEEE 2002, 90, 319–342.

7. Das, S.; Ma, K.; Shu, Z.; Samaras, D.; Shilkrot, R. Dewarpnet: Single-image document unwarping with stacked 3d and 2d regression networks. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 131–140.

8. Xue, C.; Tian, Z.; Zhan, F.; Lu, S.; Bai, S. Fourier document restoration for robust document dewarping and recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 4573–4582.

9. Zhang, L.; Yip, A.M.; Tan, C.L. Photometric and geometric restoration of document images using inpainting and shape-from-shading. In Proceedings of the National Conference on Artificial Intelligence, Vancouver, BC, Canada, 22–26 July 2007; Volume 22, p. 1121.

10. Wada, T.; Ukida, H.; Matsuyama, T. Shape from shading with interreflections under a proximal light source: Distortion-free copying of an unfolded book. Int. J. Comput. Vis. 1997, 24, 125–135.

11. Li, X.; Zhang, B.; Liao, J.; Sander, P.V. Document rectification and illumination correction using a patch-based CNN. ACM Trans. Graph. (TOG) 2019, 38, 168.

12. Feng, H.; Wang, Y.; Zhou, W.; Deng, J.; Li, H. Doctr: Document image transformer for geometric unwarping and illumination correction. arXiv 2021, arXiv:2110.12942.

13. Wang, Y.; Zhou, W.; Lu, Z.; Li, H. Udoc-gan: Unpaired document illumination correction with background light prior. In Proceedings of the 30th ACM International Conference on Multimedia, Lisboa, Portugal, 10–14 October 2022; pp. 5074–5082.

14. Tian, Y.; Narasimhan, S.G. Rectification and 3D reconstruction of curved document images. In Proceedings of the CVPR 2011, Providence, RI, USA, 20–25 June 2011; pp. 377–384.

15. Cao, H.; Ding, X.; Liu, C. Rectifying the bound document image captured by the camera: A model based approach. In Proceedings of the Seventh International Conference on Document Analysis and Recognition, Edinburgh, UK, 3–6 August 2003; pp. 71–75.

16. Liang, J.; DeMenthon, D.; Doermann, D. Geometric rectification of camera-captured document images. IEEE Trans. Pattern Anal. Mach. Intell. 2008, 30, 591–605.

17. Feng, H.; Zhou, W.; Deng, J.; Wang, Y.; Li, H. Geometric representation learning for document image rectification. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; pp. 475–492.

18. Yang, L.; Zhang, R.Y.; Li, L.; Xie, X. Simam: A simple, parameter-free attention module for convolutional neural networks. In Proceedings of the International Conference on Machine Learning, PMLR, Virtual Event, 18–24 July 2021; pp. 11863–11874.

19. Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. Cbam: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

20. Wang, Z.; Simoncelli, E.P.; Bovik, A.C. Multiscale structural similarity for image quality assessment. In Proceedings of the Thirty-Seventh Asilomar Conference on Signals, Systems & Computers, Pacific Grove, CA, USA, 9–12 November 2003; Volume 2, pp. 1398–1402.

21. You, S.; Matsushita, Y.; Sinha, S.; Bou, Y.; Ikeuchi, K. Multiview rectification of folded documents. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 40, 505–511.

22. Levenshtein, V.I. Binary codes capable of correcting deletions, insertions, and reversals. Sov. Phys. Dokl. 1966, 10, 707–710.

23. Paszke, A.; Gross, S.; Chintala, S.; Chanan, G.; Yang, E.; DeVito, Z.; Lin, Z.; Desmaison, A.; Antiga, L.; Lerer, A. Automatic differentiation in pytorch. In Proceedings of the 31st Conference on Neural Information Processing Systems (NIPS 2017), Long Beach, CA, USA, 4–9 December 2017.

24. Smith, R. An overview of the Tesseract OCR engine. In Proceedings of the Ninth International Conference on Document Analysis and Recognition (ICDAR 2007), Curitiba, Brazil, 23–26 September 2007; Volume 2, pp. 629–633.

25. Xie, G.W.; Yin, F.; Zhang, X.Y.; Liu, C.L. Dewarping document image by displacement flow estimation with fully convolutional network. In Proceedings of the Document Analysis Systems: 14th IAPR International Workshop, DAS 2020, Wuhan, China, 26–29 July 2020; Proceedings 14. Springer: Berlin/Heidelberg, Germany, 2020; pp. 131–144.

| Methods | MS-SSIM ↑ | LD ↓ | ED ↓ | CER ↓ |
|---|---|---|---|---|
| Distorted | 0.246 | 20.507 | 2789.1 | 0.613 |
| DocUNet [4] | 0.413 | 14.193 | 1239.12 | 0.384 |
| DocProj [11] | 0.272 | 19.51 | 1165.93 | 0.3818 |
| Doctr [12] | 0.496 | 8.014 | 339.59 | 0.1164 |
| DDCP [5] | 0.475 | 9.106 | 1248.06 | 0.34 |
| Geotr [17] | 0.502 | 8.287 | 592.29 | 0.183 |
| DewarpFlow [25] | 0.428 | 7.771 | 1260.83 | 0.35 |
| DewarpNet [7] | 0.4693 | 8.987 | 744.77 | 0.23 |
| DocCPLNet | 0.477 | 8.687 | 594.89 | 0.180 |
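The ED and CER columns are text-recognition metrics. The following is a minimal sketch of how such values are commonly computed, assuming ED is the Levenshtein distance [22] between the OCR output of a rectified image and its reference text, and CER is that distance divided by the number of reference characters. The function names `edit_distance` and `character_error_rate` are hypothetical and are not taken from the authors' evaluation code.

```python
def edit_distance(ref: str, hyp: str) -> int:
    """Levenshtein distance: the minimum number of single-character
    insertions, deletions, and substitutions turning ref into hyp."""
    # prev[j] holds the distance between the processed prefix of ref
    # and the first j characters of hyp.
    prev = list(range(len(hyp) + 1))
    for i, r in enumerate(ref, start=1):
        curr = [i]  # distance from ref[:i] to the empty string
        for j, h in enumerate(hyp, start=1):
            cost = 0 if r == h else 1
            curr.append(min(prev[j] + 1,          # deletion
                            curr[j - 1] + 1,      # insertion
                            prev[j - 1] + cost))  # substitution or match
        prev = curr
    return prev[-1]


def character_error_rate(ref: str, hyp: str) -> float:
    """Assumed convention: edit distance normalised by reference length."""
    if not ref:
        raise ValueError("reference text must be non-empty")
    return edit_distance(ref, hyp) / len(ref)
```

Under these assumptions, lower ED and CER both mean that the OCR engine reads the rectified image more accurately, which is consistent with the ↓ markers in the table header.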

| Methods (DocUNet) | (a) | (b) | (c) |
|---|---|---|---|
| +Joint Attention | ✓ | ✓ | ✓ |
| +Hybrid Attention | ✓ | ✓ | |
| +GIT | ✓ | | |
| MS-SSIM ↑ | 0.476 | 0.472 | 0.477 |
| LD ↓ | 9.043 | 8.541 | 8.687 |
| ED ↓ | 958.05 | 754.16 | 594.89 |
| CER ↓ | 0.25 | 0.21 | 0.18 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Ni, H.; Han, J.; Wang, C.; Zhang, S.; Li, R. DocCPLNet: Document Image Rectification via Control Point and Illumination Correction. Sensors 2025, 25, 6304. https://doi.org/10.3390/s25206304
