1. Introduction

Emotion is a complex psychological state formed in humans through external stimuli or internal drives, encompassing multiple dimensions such as subjective experience, external expression, and physiological response [

1]. Emotion recognition, as a vital branch of artificial intelligence, aims to enable computers to perceive, understand, and express emotions, thereby achieving more natural human computer interaction. In recent years, emotion recognition has been widely applied in scenarios such as intelligent assistants, adaptive education, medical psychological interventions, and entertainment interactions [

2]. For individuals with hearing impairments, the absence of auditory and linguistic abilities severely limits their emotional perception and expression, often leading to social communication barriers and psychological adaptation issues [

3]. Therefore, developing robust emotion recognition methods not only helps improve communication and quality of life for the hearing impaired but also provides new technological support for related psychological interventions and rehabilitation training.

Most current emotion recognition research predominantly concentrates on unimodal approaches. Facial expression, as the most direct manifestation of affective states, has achieved notable advancements in classification accuracy through the application of deep learning architectures such as the Convolutional Neural Networks (CNN) and the residual networks (ResNet) [

4]. However, facial expressions are significantly influenced by factors such as lighting, occlusion, and deliberate disguise, making their sole reliance for emotion recognition inherently limited. On the other hand, EEG signals directly reflect central nervous system activity, offering high physiological authenticity and objectivity. Researchers often extract emotion related information through features such as power spectra, Short Time Fourier transforms, and Differential Entropy (DE), and utilize models like Support Vector Machines (SVM), Long Short Term Memory Networks (LSTM), EEGNet, or multi-branch CNNs for classification. EEG based methods demonstrate strong performance on public datasets like DEAP and SEED [

5], but signals susceptibility to artifacts and significant inter-individual variability pose challenges for practical applications.

To overcome the limitations of single-modal approaches, multimodal emotion recognition has gradually emerged as a research hotspot. By integrating complementary information from different modalities, models can achieve higher robustness and accuracy in complex environments [

6]. Multimodal fusion methods primarily encompass input level fusion, feature level fusion, and decision level fusion. Input level fusion directly concatenates raw data from different modalities, which can easily lead to dimensional imbalance and noise propagation. Decision level fusion involves independent classification across modalities followed by weighting or voting, preserving modality independence but struggling to fully leverage cross-modal interaction information. Feature level fusion models analyze intermodal relationships within the feature space and are considered an effective approach for enhancing performance. However, most current methods still rely on simple concatenation or static weighting, lacking deep modeling of intermodal interactions [

7]. They are particularly challenged in dynamically adjusting the importance of different modalities based on context.

In the field of emotion recognition research for individuals with hearing impairments, existing studies have demonstrated distinct brain region response characteristics during emotional processing compared to the general population [

8]. For instance, their emotional functional brain regions exhibit heightened activity in the parietal and occipital lobes, with more pronounced interhemispheric differences in emotional responses [

9]. This provides new theoretical grounding for multimodal approaches integrating electroencephalographic signals and facial expressions. However, most existing studies on hearing impaired individuals remain confined to unimodal approaches or lack systematic cross-modal interaction modeling. Effectively capturing the spatiotemporal dependencies of EEG signals and deeply integrating them with the overt features of facial expressions represents a critical challenge in enhancing emotion recognition performance for this population.

Based on this, this paper proposes a Multimodal Multi-Head Attention Fusion Neural Network (MMHA-FNN) tailored for individuals with hearing impairments. This method utilizes DE and bilinear interpolation to generate EEG brain topography maps. It then employs an EEG feature extraction module based on the Mobile Inverted Bottleneck Convolution (MBConv) architecture to learn the spatiotemporal characteristics of brain regions. Concurrently, a facial feature extraction module using a residual network architecture captures the deep semantic information of facial expressions. Finally, during the feature fusion stage, the Transformer multi-head attention mechanism is introduced to dynamically model the interactive relationship between EEG and facial features, enabling adaptive weighting and information enhancement across modalities. Experimental results on the Multimodal Emotion Database for Hearing-Impaired Individuals (MED-HI) demonstrate that this method achieves an outstanding performance in the four-classification task.

2. Related Work

Emotion recognition, as one of the core tasks in the field of affective computing, has garnered significant attention in recent years. Research on different modalities has deepened, particularly the integration of physiological signals such as EEG with non-physiological signals like facial expressions, offering new approaches to enhance the robustness and accuracy of emotion recognition.

In single-modal research, EEG serves as a crucial signal source for emotion recognition due to its ability to directly reflect neural activity in the brain. Previous studies have demonstrated that DE serves as an effective feature for characterizing emotional states. Duan et al. [

10] found that DE outperforms features such as energy spectrum (ES) in emotion classification, with particularly significant recognition performance in the

band. On the publicly available DEAP dataset, a fusion model combining 2D convolutional layers based on DE features with LSTM achieves classification accuracies of 91.9% and 92.3% on the valence and arousal dimensions, respectively [

11]. On the SEED-V dataset, Yao et al. [

12] combined DE with the ResNet18 network to achieve five-category emotion classification, attaining an average accuracy of 95.61%. Multimodal fusion methods have also drawn significant research attention. Wu et al. [

13] conducted a comprehensive review of multimodal emotion recognition, highlighting that feature extraction and fusion strategies are crucial for enhancing system performance, while emphasizing that lightweight models represent a future challenge.

Improvements to EEG feature extraction and fusion mechanisms have also garnered significant attention. EEG FuseNet employs an unsupervised GAN-CNN-RNN hybrid architecture to achieve deep representation and fusion of EEG spatiotemporal features, demonstrating strong generalization capabilities [

14]. Wu et al. [

15] utilized EEG clustering coefficients and feature centrality to perform multimodal emotion recognition through DCCA. Furthermore, the introduction of Transformers in multimodal fusion has further propelled research advancements. The graph neural network framework proposed by Devarajan et al. integrates EEG, facial expressions, and other physiological signals for emotion recognition, demonstrating the advantages of graph structures in modal fusion [

16]. Recent reviews also highlight that designing fusion algorithms, modal representations, and classification mechanisms remains a core challenge in current work, demanding deeper alignment and modeling of intermodal relationships [

17]. Hu et al. [

18] proposed STRFLNet, a spatio-temporal representation fusion learning network that integrates EEG-based features with advanced cross-modal fusion, achieving state-of-the-art accuracy on public datasets. Cai et al. [

19] designed an EEG-SWTNS neural network that leverages spectral images of EEG signals, effectively capturing frequency domain patterns for emotion recognition. In addition, Pfeffer et al. explored Transformer-based models for EEG signal analysis in brain–computer interface tasks, highlighting the strong representational power of Transformer structures in biomedical signals [

20].

In summary, DE features combined with deep learning networks demonstrate high efficiency in EEG emotion recognition. Cross-modal fusion, particularly when incorporating attention mechanisms, significantly improves recognition accuracy. However, these studies primarily focus on general populations and publicly available datasets. Research on multimodal emotion recognition for the hearing-impaired population remains relatively scarce, with a notable gap in methods for deep interaction fusion. Therefore, this paper proposes an MMHA-FNN tailored for the hearing-impaired population. The model utilizes DE spatiotemporal feature matrices as input, extracting temporal and spatial features through MBConv and ResNet modules, respectively. During the fusion stage, a Transformer multi-head self-attention mechanism is introduced to enable dynamic feature level interaction between EEG signals and facial expressions.

3. Materials and Methods

3.1. The Model Architecture of MMHA-FNN

The model architecture of MMHAFNN is shown in

Figure 1. This network architecture is primarily divided into three components: the facial expression feature extraction module, the EEG feature extraction module, and the feature fusion module. The Facial Expression Feature Extraction Module (FEFEM) is a residual linkage-based CNN module that learns multi-level facial expression features to represent emotion related information in subjects’ faces. Its layer-by-layer feature extraction and subsampling mechanism enables the network to progressively capture subtle changes in facial expressions—from local details to global structures. EFEM is an EEG spatio-temporal feature extraction network based on the MBConv module, which learns spatio-temporal features between electrodes by generating differential entropy brain topography representations using DE features and bilinear interpolation. Ultimately, the deep features extracted by FEFEM and EFEM will be fed into FFM for information exchange across different modalities. The fusion strategy within FFM, based on a multi-head self-attention mechanism, can capture dependencies within features and adaptively balance the importance of different modalities under multimodal input.

3.2. Multimodal Data Preprocessing and Feature Extraction

The MED-HI dataset consists of 300 trials from 15 hearing-impaired subjects (12 males and 3 females, aged 18–25). Emotional states (happy, sad, fear, and calmness) were elicited using 20 subtitled film clips (~200 s each), with five clips per category. EEG signals were recorded with a 64-channel Neuroscan SymAmps2 system at 1000 Hz (downsampled to 200 Hz), while facial expressions were simultaneously captured at 30 Hz. Each trial included a 5 s cue, video viewing, 45 s self-report, and a short rest, with longer breaks after every four clips. Preprocessed data were segmented into 1 s non-overlapping windows, yielding 4407 samples for emotion recognition [

6].

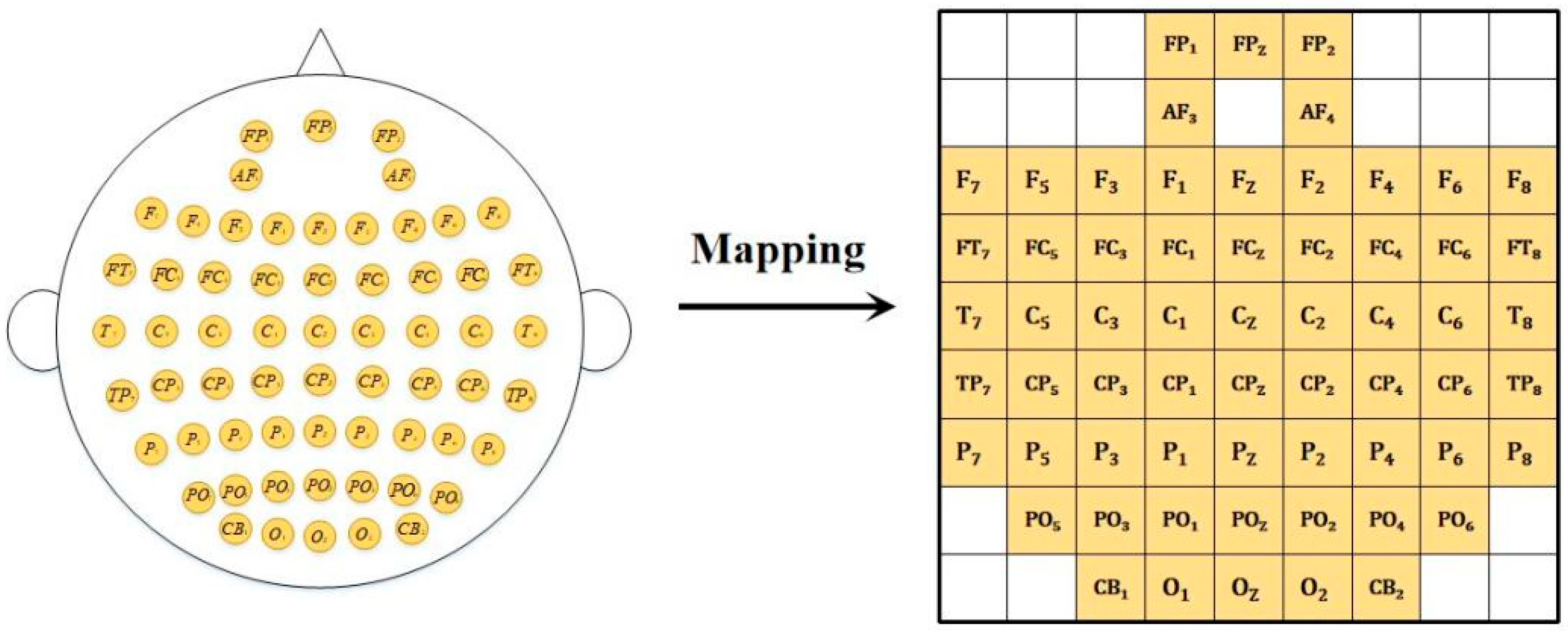

After preprocessing the EEG, DE features can be extracted for emotion recognition. For 1 s samples, DE features are extracted from the δ band (1–4 Hz),

θ band (4–8 Hz),

α band (8–12 Hz),

β band (12–30 Hz), and

γ band (30–50 Hz). Furthermore, to generate differential entropy brain topography maps, bilinear interpolation was applied to the DE features using the Cartesian coordinates of 62 electrodes obtained from the EEGLAB toolbox. Additionally, channels in the differential entropy brain topography correspond to distinct frequency bands, resulting in a data dimension of 4407 × 5 × 224 × 224 (number of samples × number of channels × width × height). The 3rd and 4th dimensions correspond to the interpolated EEG topography maps (224 × 224 resolution), obtained by bilinear interpolation of 62 electrodes across 5 frequency bands. Furthermore, zero padding was applied to fill the edge regions of the differential entropy brain topography. An example of the differential entropy brain topography is shown in

Figure 2. The original formula for differential entropy is

Here,

X denotes a continuous time series, and

f(

x) is the probability density function of

X. After frequency band decomposition, the EEG signal essentially follows a Gaussian distribution

N (

μ, σ2). Therefore, the calculation formula for the differential entropy feature can be expressed as

The formula for bilinear interpolation is

Here, represents the values of the four electrodes surrounding the interpolation point, while relates to the coordinate distances between the point of interest and the diagonal points.

Facial expression images with background information removed are resized to 256 × 256 pixels using center cropping. To accelerate convergence of image data during neural network training, facial expression data undergoes normalization processing:

Here, denotes the normalized value of the th feature in the j-th sample of the ith subject, denotes the maximum value of the th feature across all samples of the i-th subject, and denotes the minimum value of the th feature across all samples of the i-th subject. This transforms the feature values between −1 and 1, yielding the preprocessed facial expression features (3 × 256 × 256).

3.3. Facial Feature Learning Based on FEFEM

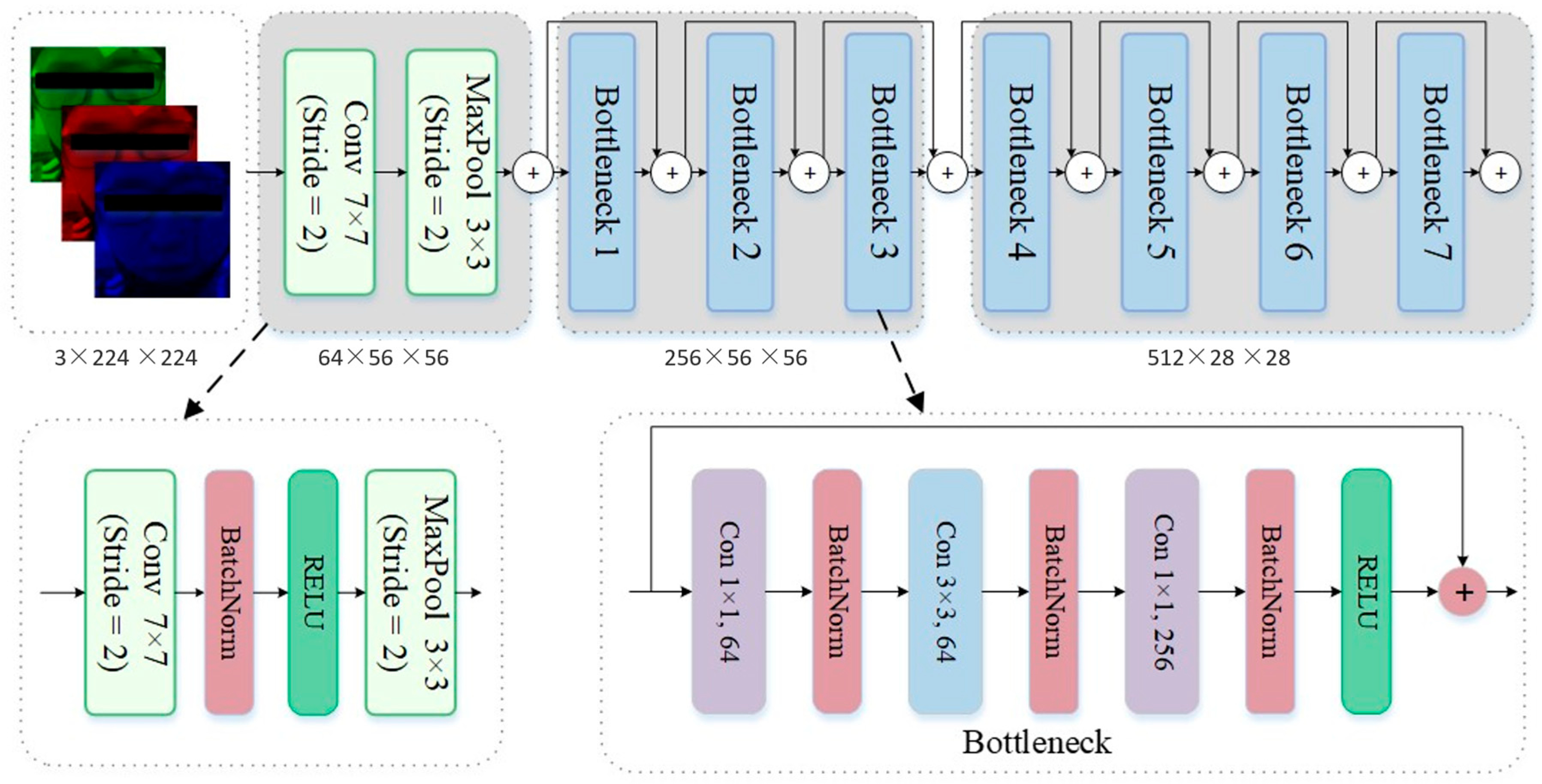

The FEFEM core employs Residual Network (ResNet) modules. ResNet is based on the structure of convolutional neural networks, with its core concept being the introduction of residual structures within the network.

Figure 3 illustrates a schematic diagram of a residual structure in a Bottleneck layer. In traditional convolutional networks, increasing the number of convolutional layers is necessary to enhance model performance. However, excessive convolutional layers can lead to the vanishing gradient problem. The introduction of residual connections effectively resolves this issue.

In the input layer of FEFEM, the input image passes through a 7 × 7 convolutional kernel with a stride of 2 to capture low-level edge features. Combined with a BatchNorm layer that performs batch normalization on each input channel, this accelerates training convergence and enhances model stability. The ReLU layer introduces a nonlinear activation function, enabling the model to process complex input data while increasing the sparsity of network outputs to prevent overfitting. Finally, a 3 × 3 max pooling layer with a stride of 2 reduces the feature map resolution. By selecting the maximum value, it preserves key information while reducing computational complexity.

The Bottleneck block in the feature extraction layer extracts deep features through three convolutional layers (1 × 1, 3 × 3, and 1 × 1 convolutions). It reduces and restores the number of channels via 1 × 1 convolutions while processing the spatial dimension with 3 × 3 convolutions, thereby achieving a balance between computational complexity and network performance. Each Bottleneck block introduces residual connections, mitigating the vanishing gradient problem in deep networks by directly adding inputs to outputs, ensuring smoother feature propagation. Some Bottleneck blocks also incorporate downsampling operations (Stride = 2) to reduce feature map resolution and enhance feature globality. Within each Bottleneck block, the number of channels progressively expands to extract richer facial expression features.

3.4. EEG Spatio-Temporal Feature Extraction Based on EFEM

The preprocessed differential entropy brain topography map is input into the EFEM framework to extract EEG spatiotemporal features. The EFEM architecture integrates CNN and Squeeze-and-Excitation (SE) attention mechanism modules, employing MBConv modules and dimensionality reduction strategies for feature extraction. The module structure is illustrated in

Figure 1.

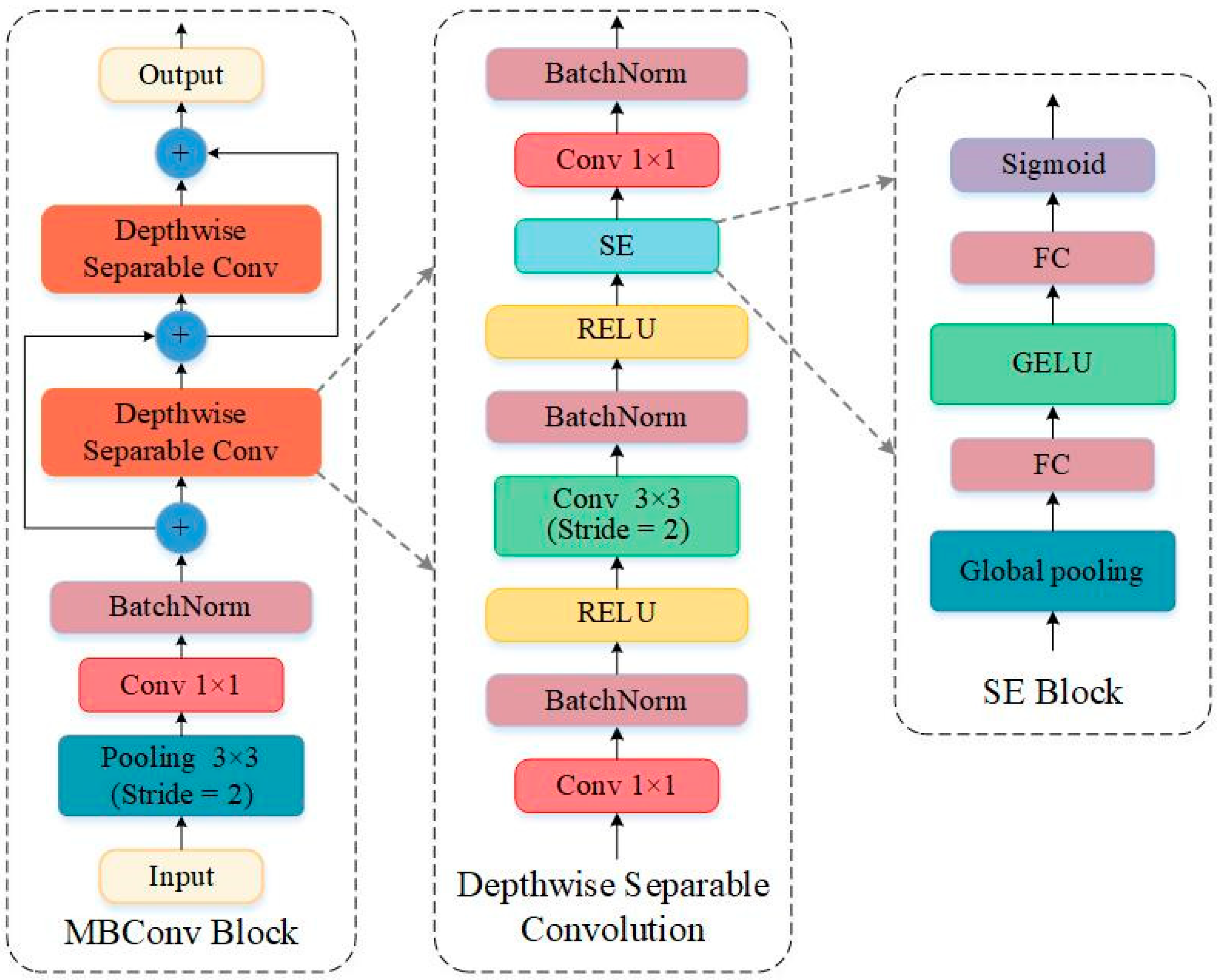

At the input layer, the 5-channel differential entropy brain topography features undergo preliminary feature extraction through a convolutional layer with a 3 × 3 kernel and stride of 2. Batch normalization and nonlinear mapping are then performed via BatchNorm and GELU layers. The GELU activation function captures the complexity of input data more effectively than the traditional ReLU activation function, particularly when processing neural signals, as it better handles nonlinear information. The second 3 × 3 convolutional layer is used for local feature encoding of the EEG.

At the deep feature extraction layer, the extracted differential entropy brain topography features are processed using MobileNet’s MBConv architecture to efficiently capture spatial and frequency domain characteristics. The MBConv module combines depthwise separable convolutions with an attention mechanism (SE Block) to further compress and expand features, as illustrated in

Figure 4. Specifically, the input features undergo spatial downsampling via a Max Pooling layer, followed by feature dimension reduction using a 1 × 1 convolutional layer. The reduced-dimension features then pass through two Depthwise Separable Conv layers to extract EEG spatiotemporal features.

The Depthwise Separable Conv layer consists of two main components. One is the Depthwise Convolution, which performs independent convolution operations on different channels of the input data—this distinguishes it from traditional convolution. It transforms a tensor of size into one of size . Traditional convolution requires parameters of size , whereas Depthwise Convolution requires only parameters. This is because in traditional convolution operations, each convolution kernel simultaneously acts on every input channel of the input data, leading to a dramatic increase in the number of parameters and computational complexity in deep learning. In Depthwise Convolution, each channel of the input data corresponds to a dedicated convolutional kernel. This approach significantly reduces the model’s parameter count and computational load, resulting in a lighter-weight model and lower training costs. The other component is Pointwise Convolution, which combines features from different channels. It transforms information from all input channels into a new feature channel, thereby generating a higher-dimensional feature representation that encompasses all channels. This enhances the model’s expressive power.

To enhance feature expressiveness, the SE module was incorporated during the design of Depthwise Separable Convolution. This module employs global average pooling to compute global features for each channel, utilizes a linear layer to model inter-channel relationships, and performs channel reweighting after obtaining channel weights via Sigmoid activation, thereby boosting the expressiveness of important features.

3.5. Multimodal Feature Fusion Based on FFM

The core of FFM is the Transformer module. After multimodal deep features are concatenated, they are fed into the FFM network for feature fusion and classification. As shown in

Figure 5, Layer Normalization (LN), Multi-head Self-Attention (MSA), and Multiple Layer Perception (MLP) form the main components of the Transformer encoder. Therefore, the operations within FFM can be expressed as

Here,

denotes the MSA operation,

denotes the MLP operation, and

denotes the LN operation. Additionally,

represents the number of blocks. It can be observed that residual connections are employed within the Transformer encoder, effectively mitigating the vanishing gradient problem and model degradation issues. Moreover, LN plays a crucial role in Transformer models by reducing training time and enhancing generalization capabilities. The multi-head self-attention module further boosts the model’s representational power, which will be detailed below.

Here,

Q denotes the Query vector,

K represents the Key vector, and

V signifies the Value vector. Within the self-attention mechanism, the input feature

Z must first undergo a linear transformation to yield

Q,

K, and

V of identical dimensions.

, and

are mapping matrices, and the dimensions of

,

, and

are all

. Multiplying

and

computes the similarity between each query vector and all key vectors, then the resulting similarity is used to calculate the weighted sum between each query vector and all value vectors. Note that the Scale function controls the numerical range of the

×

dot product via the scaling factor

, ensuring stability in softmax computation and gradient calculation. The attention function is expressed as

Here, Attention(∙) denotes the self-attention operation.

Finally, stacking multiple self-attention mechanisms yields a multi-head self-attention mechanism. This approach mirrors the use of multiple convolutional kernels in CNNs, where learning features with different semantic meanings enhances the model’s representational capacity. The formula for multi-head self-attention can be expressed as

Here, , .

4. Results

Each subject provided 20 sets of emotional data, comprising five instances of each emotion. For EEG, emotional features were extracted using one-second non-overlapping time windows; for facial expressions, the first frame of each video segment per second was selected for feature extraction. Each modality yielded 4407 samples. For experimental validation, five-fold cross-validation was employed. To prevent data leakage, the 20 emotion sets were divided into five folds, each containing one clip each of happy, calm, sad, and fearful expressions. The “feature concatenation” and “decision-layer fusion” used the same unimodal encoders (EFEM/FEFEM) and training protocol, so the only change is the fusion mechanism. During training, four folds served as the training set, with the remaining fold as the validation set. The final results represent the average outcome across all five-fold experiments.

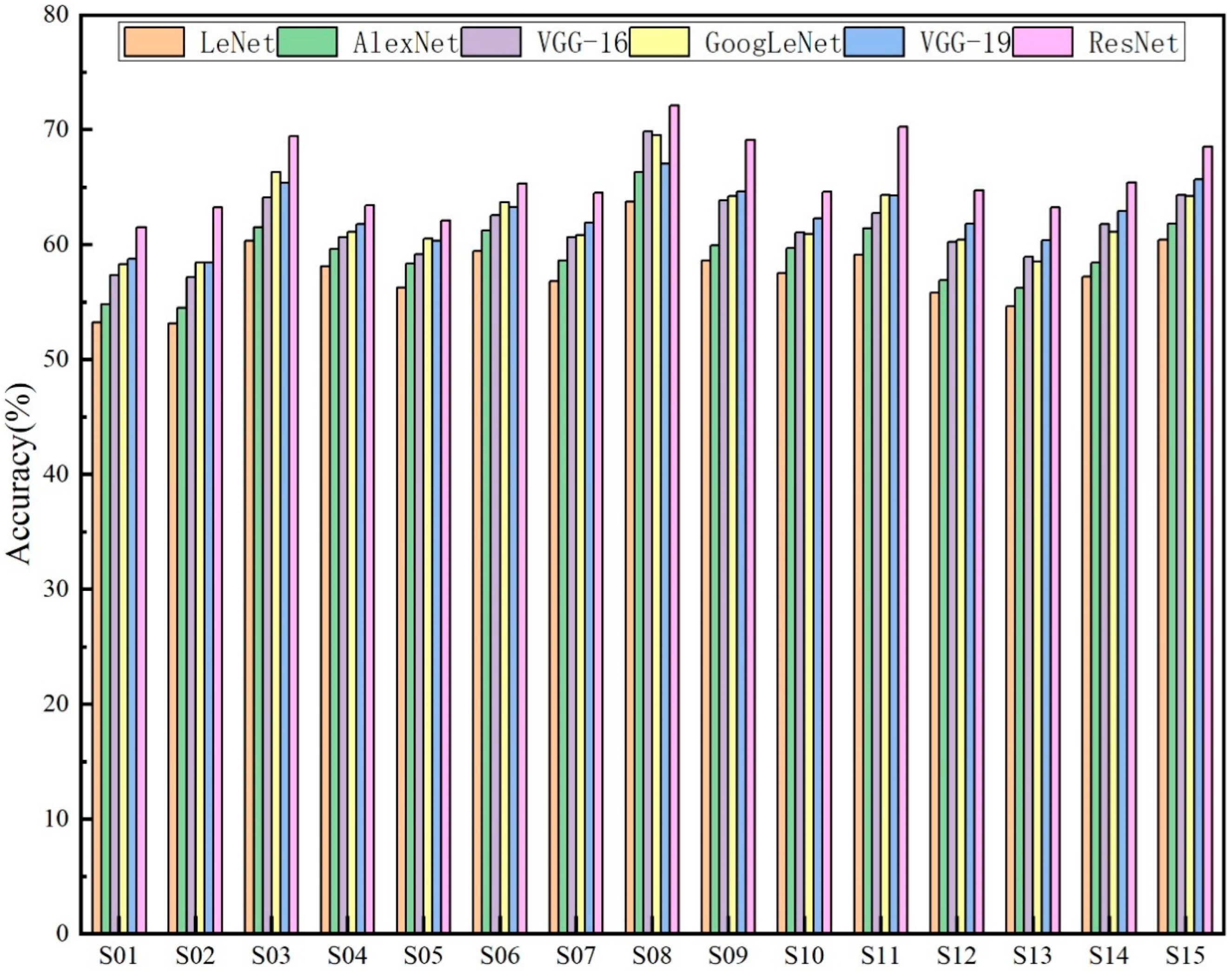

4.1. Experimental Results of FEFEM

When selecting facial expression feature extraction networks, we compared the emotional recognition performance of six established deep learning models (LeNet, AlexNet, VGG-16, GoogLeNet, VGG-19, and ResNet) on facial expression image data from 15 hearing-impaired subjects. The model hyperparameter settings are detailed in

Table 1. The optimizer employed was Adam, with a learning rate of 10

−3 and a batch size of 32. Training proceeded for 100 iterations, utilizing the cross-entropy loss function.

During the training of deep learning models, to prevent the model from becoming trapped in local optima, we employ a cosine annealing strategy (Cosine Annealing LR) to regulate the learning rate throughout the training process. The formula is as follows:

Here, denotes the current learning rate, while and represent the minimum and maximum values of the learning rate, respectively. indicates the current iteration count, and denotes the maximum iteration count. In this paper, the initial learning rate is set to 10−3, and the learning rate is updated once per iteration according to the cosine annealing rule.

The experimental results of FEFEM are shown in

Figure 6. The average accuracy of the ResNet model reached 65.75%, the VGG-19 model achieved an average accuracy of 62.91%, the GoogLeNet model reached 62.16%, the VGG-16 model attained 61.62%, the AlexNet model recorded 59.28%, and the LeNet model achieved 57.61%. Concurrently, from a holistic perspective, across the subject-dependent experiments involving 15 participants, the ResNet model demonstrated consistently superior emotional recognition performance compared to the other five models. This further indicates that the ResNet architecture is more effective at extracting deep features associated with emotions from facial expression images.

The box plot in

Figure 7 illustrates the classification accuracy performance of six distinct neural network architectures. By analysing the median, interquartile range, and outliers for each model’s effectiveness, a comprehensive understanding of their accuracy performance and variability in classification tasks is achieved. ResNet demonstrated the strongest performance across all models, achieving the highest median accuracy (65.31%) and exhibiting superior consistency in performance compared to others. This indicates that its deep architecture is better equipped to capture complex emotion features. VGG-16 and VGG-19 follow closely, exhibiting high median accuracy and relatively low variability. GoogLeNet and AlexNet also demonstrate stable performance, though their accuracy slightly trails the VGG variants. In contrast, LeNet5, owing to its shallow network structure, exhibits the lowest median accuracy and greatest variability, struggling to effectively handle more complex facial expression classification tasks.

4.2. Experimental Results of EFEM

To evaluate the effectiveness of the proposed EFEM feature extraction method, this paper compares its emotion recognition results with those of Support Vector Machine (SVM), K-Nearest Neighbours (KNN), Gaussian Naive Bayes (GNB), Random Forest (RF), Linear Discriminant Analysis (LDA), and Adaptive Boosting (AB). DE was employed as the feature input, with other parameter settings detailed in

Table 2.

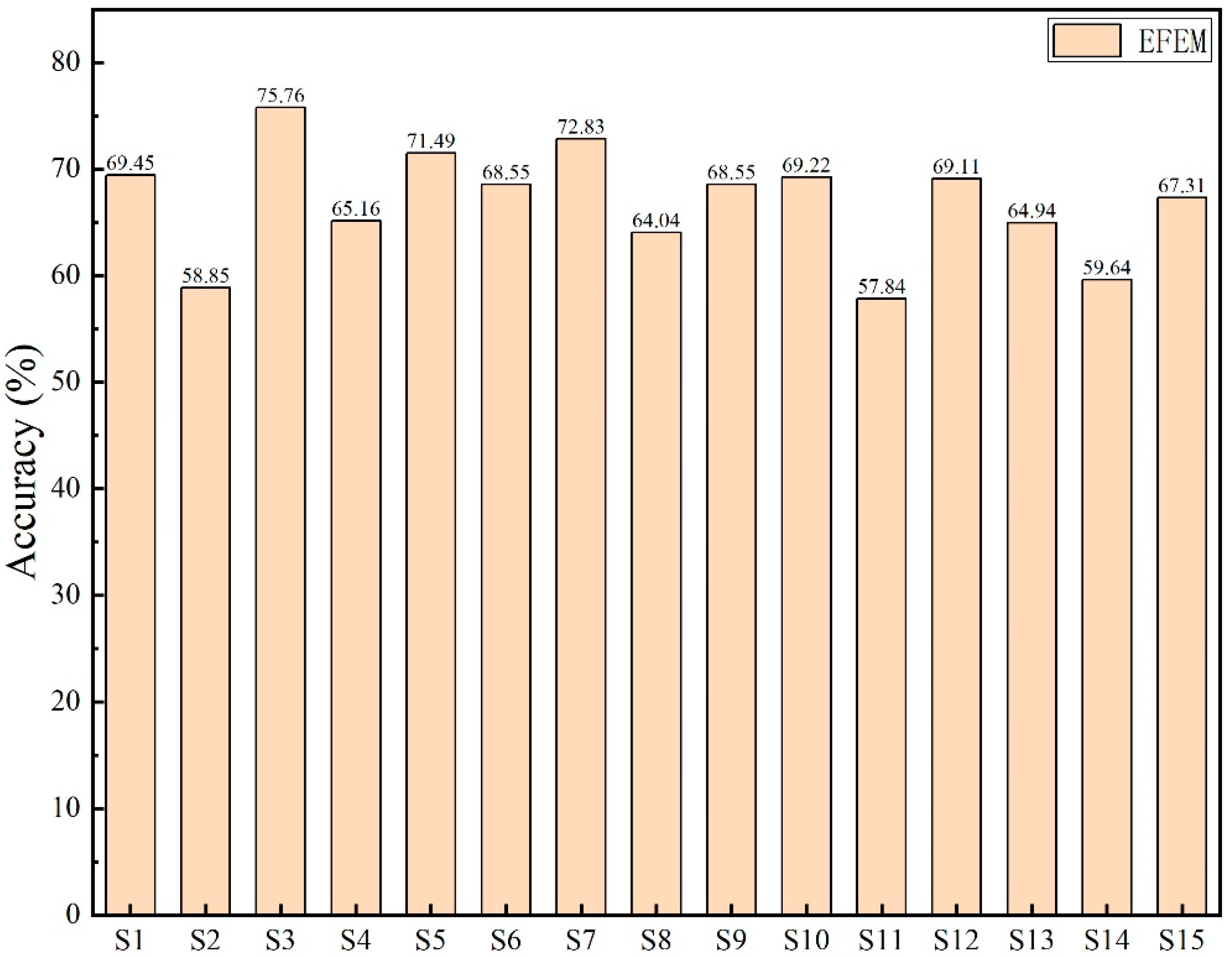

The emotion recognition results of various classifiers on 15 hearing-impaired subjects are shown in

Table 3. Overall, the EFEM deep learning approach demonstrated the best performance, achieving an average recognition accuracy of 66.85%, significantly higher than the other six machine learning classifiers. Compared to the other four machine learning classifiers, the SVM and LDA classifiers demonstrated relatively better performance. For several subjects, their accuracy rates also exceeded 60% (e.g., S05, S06, S11, and S12), though there were instances where accuracy fell below 30% (e.g., S03 and S08). This stems from the fact that machine learning classifiers rely on manually extracted features, which perform poorly when processing highly non-linear EEG signals.

The standard deviation (STD) of the results further indicates that machine learning exhibits unstable performance in EEG-based emotion recognition tasks. In contrast, EFEM not only achieves a high average accuracy but also demonstrates a smaller standard deviation in its results, suggesting that deep learning methods possess stronger feature learning capabilities and generalization abilities in emotion recognition tasks.

Figure 8 provides a more intuitive illustration of EFEM’s emotion recognition outcomes using single-modal EEG data. The figure reveals that subjects S02 and S11 exhibited relatively poor recognition results, achieving accuracy rates below 60%. Subject S03 demonstrated the highest emotion recognition accuracy, reaching 75.76%.

4.3. Experimental Results for MMHA-FNN

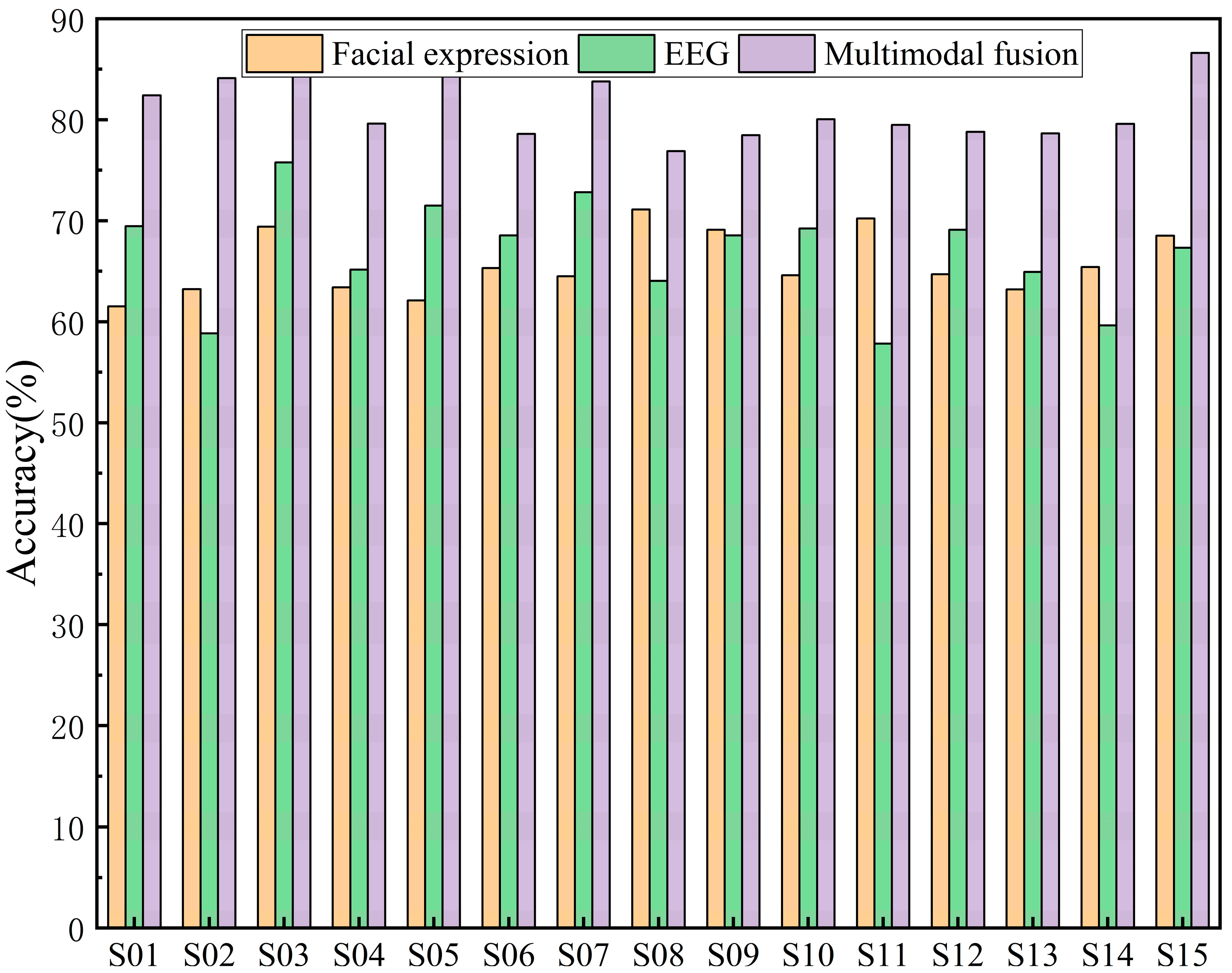

The comparison of classification accuracy among 15 hearing-impaired subjects in an emotion recognition task based on different modalities (facial expressions and EEG signals) and their multimodal fusion is presented in

Figure 9. The figure demonstrates that the multimodal fusion approach exhibits a significant advantage, with accuracy consistently higher than that of single-modality facial expressions or EEG alone. This indicates that multimodal fusion strategies effectively leverage the complementary nature of facial expressions and EEG signals to enhance emotion recognition performance. Overall, the EEG modality demonstrated superior emotion recognition accuracy for most subjects compared to the facial expression modality. This is because, as a physiological signal, EEG is less susceptible to masking than the non-physiological facial expression modality, thereby more accurately reflecting the subject’s genuine emotional state. However, for a minority of subjects, facial expressions demonstrated superior emotion recognition performance to EEG. This discrepancy arises because EEG signals are susceptible to noise interference. Consequently, multimodal fusion methods effectively mitigate this weakness, thereby enhancing the accuracy and robustness of emotion recognition.

As shown in

Table 4, the average results of single-modal versus multi-modal emotion recognition are compared. Experimental findings indicate that multi-modal fusion achieves optimal emotion recognition performance at 81.14%, surpassing both EEG single-modal (66.85%) and facial expression single-modal (65.75%) approaches. The average accuracy of EEG single-modal emotion recognition is marginally higher than that of facial expression single-modal.

To further evaluate the trade-off between computational overhead and performance of the proposed method, this study compared the results of different models across dimensions including number of parameters (in millions), computational effort (GFLOPs), training duration (hours), and classification accuracy (%), trained on an NVIDIA RTX 3090 GPU, as shown in

Table 5. It can be observed that the traditional ResNet-18 model exhibits the lowest parameter count and FLOPs, yet its accuracy rate of merely 65.7% falls short of meeting the demands of multimodal emotion recognition. Representative recent multimodal fusion approaches, such as Cross-Attention Transformer and Graph Fusion (GCN + Transformer), achieve significant accuracy improvements (79.2% and 80.5%), albeit with relatively higher parameter counts and computational complexity. We conducted a comparative analysis with reference [

6]. Although both studies employed MED-HI electroencephalogram topography and facial expression data, the proposed MMHA-FNN introduced feature-level interaction fusion technology based on Transformers, thereby achieving an approximate 3% improvement in accuracy. The proposed MMHA-FNN achieves an average accuracy of 81.1% while maintaining low computational overhead, outperforming existing comparative methods.

In addition, we performed Leave-One-Subject-Out (LOSO) cross-validation to evaluate subject-independent generalization. The proposed MMHA-FNN achieved an average accuracy of 78.2%, significantly higher than decision-level fusion (67.4%) and feature concatenation (73.1%). These results confirm that MMHA-FNN can generalize to unseen subjects, further supporting its practical applicability. Concurrently, we conducted a t-test experiment. Results show that the improvements of MMHA-FNN over single-modality approaches (ResNet and EFEM) and even advanced baselines (Cross-Attention and GNN Fusion) are statistically significant (p < 0.05).

5. Discussion

The proposed MMHA-FNN demonstrates significant advantages in multimodal emotion recognition tasks for hearing-impaired subjects. Experimental results indicate that EEG and facial expression features exhibit distinct complementarity: EEG directly reflects neural activity during emotional processing, offering robust physiological authenticity and stability; whereas facial expressions provide overt emotional cues more closely aligned with real-world communication scenarios. Their integration enables the model to simultaneously capture intrinsic physiological responses and extrinsic expression changes, thereby substantially enhancing overall recognition performance.

Analysis of the results indicates that multimodal fusion effectively enhances emotion recognition performance. To further investigate the complementarity between EEG and facial expression features during multimodal fusion, this study analysed the confusion matrices across different modalities for 15 hearing-impaired subjects. The results are presented in

Figure 10.

Figure 10a presents the confusion matrix results based solely on EEG. It is evident that EEG features demonstrate optimal performance in recognizing ‘happy’ and ‘neutral’, achieving 73% and 70% accuracy, respectively. However, recognition accuracy for ‘sad’ is notably lower, at only 56%.

Figure 10b presents the single-modality facial expression-based emotion recognition results. Here, the ‘fear’ emotion achieved the highest recognition accuracy at 75% on average. Conversely, the accuracy for ‘sad’ was low at 53%, with 19% of ‘fear’ emotion samples misclassified as ‘sad’. This may stem from both emotions belonging to the negative category, which are prone to confusion in single-modal facial expression recognition.

Figure 10c presents the confusion matrix for multimodal fusion-based emotion recognition. It demonstrates that the recognition accuracy for ‘sad’ improved by nearly 20% post-fusion, reaching 77%. Although the multimodal recognition accuracy for “fear” was slightly lower than that achieved through facial expression analysis alone, the overall multimodal fusion approach demonstrated greater robustness. This approach effectively leverages the complementary strengths of different modalities, thereby enhancing the overall effectiveness of emotion recognition.

To further examine how MMHA-FNN leverages cross-modal information, we quantified the average attention weights assigned to EEG frequency bands and facial regions across all subjects. As shown in

Table 6, the δ and

θ bands received the highest weights (0.28 and 0.24, respectively), indicating their strong role in capturing sadness and calmness. In contrast, β and γ bands were relatively more influential in happy and fear classification. For facial features, the eye and mouth regions contributed most (0.31 and 0.27), aligning with prior findings that emotional salience often manifests in these areas. One limitation is that we selected the first frame per second for facial streams. This may introduce sampling bias. Future work should average frames across each second or use a lightweight temporal module to better capture dynamics.

In addition to its methodological contributions, this work also provides important translational implications. The distinctive activation patterns observed in the parietal and occipital lobes of hearing-impaired subjects indicate that emotional processing in this population relies more heavily on visual and spatial pathways than auditory cues. This neurological characteristic not only supports the integration of EEG and facial modalities within the proposed MMHA-FNN framework but also motivates the development of multimodal attention mechanisms capable of adaptively weighting cross-modal information.

From a broader perspective, this study represents an early step toward practical affective interfaces for special-needs users. While the present implementation employs high-density EEG and laboratory-based recordings, future research will aim to translate these findings into portable, reduced-channel, and wearable systems that can monitor emotional states in real-world settings. Such developments could contribute to rehabilitation training, emotional feedback therapy, and assistive communication technologies for hearing-impaired individuals, ultimately bridging neuroscience insights with human–computer interaction applications.

6. Conclusions

This paper addresses the specific requirements for emotion recognition in hearing-impaired subjects by proposing a multi-modal fusion neural network (MMHA-FNN) based on a multi-head attention mechanism. The model extracts spatio-temporal features from EEG signals via DE brain topography, integrating these with facial expression feature representations derived from a ResNet-based architecture. At the feature layer, a Transformer multi-head self-attention mechanism facilitates dynamic cross-modal interaction and weighted fusion. Experimental results demonstrate limitations in single-modal methods’ recognition accuracy, with EEG and facial expressions achieving 66.85% and 65.75%, respectively. Multimodal fusion significantly enhances overall performance. The proposed MMHA-FNN achieves an average accuracy of 81.14% across four emotion recognition tasks in the MED-HI database, outperforming traditional concatenation fusion and decision-layer fusion approaches.

Author Contributions

Methodology, S.F. and Q.W.; Software, S.F.; Validation, Q.W. and Y.S.; Data curation, Q.W.; Writing—original draft, S.F.; Writing—review & editing, Y.S.; Visualization, K.Z.; Supervision, K.Z. and Y.S.; Project administration, Y.S.; Funding acquisition, K.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This work is Supported by National Natural Science Foundation of China (Grant No. 22304130), Key Technologies R&D Program of Tianjin (Grant No. 24YFZCSN00030).

Institutional Review Board Statement

The Ethics Committee of Tianjin University of Technology has approved this experiment for the emotion recognition research (May 2020).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The data presented in this study are available on request from the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Picard, R.W.; Vyzas, E.; Healey, J. Toward machine emotional intelligence: Analysis of affective physiological state. IEEE Trans. Pattern Anal. Mach. Intell. 2001, 23, 1175–1191. [Google Scholar] [CrossRef]

- Yu, P.; He, X.P.; Li, H.Y.; Dou, H.W.; Tan, Y.Y.; Wu, H.; Chen, B.D. FMLAN: A novel framework for cross-subject and cross-session EEG emotion recognition. Biomed. Signal Proces. 2025, 100, 106912. [Google Scholar] [CrossRef]

- Bai, Z.L.; Hou, F.Z.; Sun, K.X.; Wu, Q.Z.; Zhu, M.; Mao, Z.M.; Song, Y.; Gao, Q. SECT: A Method of Shifted EEG Channel Transformer for Emotion Recognition. IEEE J. Biomed. Health 2023, 27, 4758–4767. [Google Scholar] [CrossRef] [PubMed]

- Zheng, W.M.; Zhou, X.Y.; Zou, C.R.; Zhao, L. Facial expression recognition using kernel canonical correlation analysis (KCCA). IEEE Trans. Neural Netw. 2006, 17, 233–238. [Google Scholar] [CrossRef] [PubMed]

- Zhu, X.L.; Liu, C.; Zhao, L.; Wang, S.M. EEG Emotion Recognition Network Based on Attention and Spatiotemporal Convolution. Sensors 2024, 24, 3464. [Google Scholar] [CrossRef] [PubMed]

- Li, D.H.; Liu, J.Y.; Yang, Y.; Hou, F.Z.; Song, H.T.; Song, Y.; Gao, Q.; Mao, Z.M. Emotion Recognition of Subjects With Hearing Impairment Based on Fusion of Facial Expression and EEG Topographic Map. IEEE Trans. Neural Syst. Rehabil. Eng. 2023, 31, 437–445. [Google Scholar] [CrossRef] [PubMed]

- Hou, G.Q.; Yu, Q.W.; Chen, G.; Chen, F. A Novel and Powerful Dual-Stream Multi-Level Graph Convolution Network for Emotion Recognition. Sensors 2024, 24, 7377. [Google Scholar] [CrossRef] [PubMed]

- Bai, Z.L.; Liu, J.J.; Hou, F.Z.; Chen, Y.R.; Cheng, M.Y.; Mao, Z.M.; Song, Y.; Gao, Q. Emotion recognition with residual network driven by spatial-frequency characteristics of EEG recorded from hearing-impaired adults in response to video clips. Comput. Biol. Med. 2023, 152, 106344. [Google Scholar] [CrossRef] [PubMed]

- Zhu, M.; Bai, Z.L.; Wu, Q.Z.; Wang, J.C.; Xu, W.H.; Song, Y.; Gao, Q. CFBC: A Network for EEG Emotion Recognition by Selecting the Information of Crucial Frequency Bands. IEEE Sens. J. 2024, 24, 30451–30461. [Google Scholar] [CrossRef]

- Duan, R.-N.; Zhu, J.-Y.; Lu, B.-L. Differential entropy feature for EEG-based emotion classification. In Proceedings of the 2013 6th International IEEE/EMBS Conference on Neural Engineering (NER), SannDiego, CA, USA, 6–8 November 2013; pp. 81–84. [Google Scholar]

- Li, X.; Song, D.; Zhang, P.; Zhang, Y.; Hou, Y.; Hu, B. Exploring EEG features in cross-subject emotion recognition. Front. Neurosci. 2018, 12, 162. [Google Scholar] [CrossRef] [PubMed]

- Yao, L.X.; Lu, Y.; Qian, Y.K.; He, C.J.; Wang, M.J. High-Accuracy Classification of Multiple Distinct Human Emotions Using EEG Differential Entropy Features and ResNet18. Appl. Sci. 2024, 14, 6175. [Google Scholar] [CrossRef]

- Wu, Y.; Mi, Q.W.; Gao, T.H. A Comprehensive Review of Multimodal Emotion Recognition: Techniques, Challenges, and Future Directions. Biomimetics 2025, 10, 418. [Google Scholar] [CrossRef] [PubMed]

- Liang, Z.; Zhou, R.S.; Zhang, L.; Li, L.L.; Huang, G.; Zhang, Z.G.; Ishii, S. EEGFuseNet: Hybrid Unsupervised Deep Feature Characterization and Fusion for High-Dimensional EEG With an Application to Emotion Recognition. IEEE Trans. Neural Syst. Rehabil. Eng. 2021, 29, 1913–1925. [Google Scholar] [CrossRef] [PubMed]

- Wu, X.; Zheng, W.L.; Li, Z.Y.; Lu, B.L. Investigating EEG-based functional connectivity patterns for multimodal emotion recognition. J. Neural Eng. 2022, 19, 016012. [Google Scholar] [CrossRef] [PubMed]

- Devarajan, K.; Ponnan, S.; Perumal, S. Enhancing Emotion Recognition Through Multi-Modal Data Fusion and Graph Neural Networks. Intell.-Based Med. 2025, 12, 100291. [Google Scholar] [CrossRef]

- Pillalamarri, R.; Shanmugam, U. A review on EEG-based multimodal learning for emotion recognition. Artif. Intell. Rev. 2025, 58, 131. [Google Scholar] [CrossRef]

- Hu, F.; He, K.; Wang, C.; Zheng, Q.; Zhou, B.; Li, G.; Sun, Y. STRFLNet: Spatio-Temporal Representation Fusion Learning Network for EEG-Based Emotion Recognition. IEEE Trans. Affect. Comput. 2025, 1–16. [Google Scholar] [CrossRef]

- Cai, M.P.; Chen, J.X.; Hua, C.C.; Wen, G.L.; Fu, R.R. EEG emotion recognition using EEG-SWTNS neural network through EEG spectral image. Inf. Sci. 2024, 680, 121198. [Google Scholar] [CrossRef]

- Pfeffer, M.A.; Ling, S.S.H.; Wong, J.K.W. Exploring the frontier: Transformer-based models in EEG signal analysis for brain-computer interfaces. Comput. Biol. Med. 2024, 178, 108705. [Google Scholar] [CrossRef]

| Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).