Obstacle-Aware Crowd Surveillance with Mobile Robots in Transportation Stations

Abstract

1. Introduction

- First, we introduce a crowd surveillance system that uses mobile robots and intelligent vehicles to provide obstacle avoidance in transportation stations and smart buildings.

- Then, we formally define the main research problem: minimizing the total distance traveled by the robots to form crowd surveillance in a given transportation area with smart buildings. The area contains obstacles, each randomly assigned a priority that reflects its security level and monitoring importance.

- To solve the problem, we develop two schemes and evaluate them under various settings over multiple simulation runs. The first scheme divides the given area in half and forms the surveillance separately in each half. The second scheme draws lines across the whole area to generate coordinates and excludes the coordinates of previously visited parts when planning robot paths.

- Moreover, we evaluate the performance of the proposed schemes through extensive simulations under various settings and scenarios, and we discuss and analyze the numerical results.
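The objective above is stated only in words. One possible way to write it, offered as a sketch rather than the paper's own formulation, is shown below. The symbols $M$ (robots), $T$ (obstacles), $p$ (priority), and $b$ (priority bound) follow the algorithm listings in Section 3. The start position $\mathbf{s}_m$, the final position $\mathbf{g}_m$, the set $G$ of required surveillance positions, and the obstacle-avoiding path length $\ell_{T_b}$ are hypothetical notation introduced here for illustration.

$$
\min \; D = \sum_{m \in M} \ell_{T_b}\!\left(\mathbf{s}_m, \mathbf{g}_m\right),
\qquad
T_b = \{\, t \in T : p_t \ge b \,\},
$$

subject to every position in $G$ being occupied by some robot $m \in M$. Because all robots move at the same speed $v$, the total travel time is $D / v$, so under this assumption minimizing the total distance also minimizes the total travel time.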

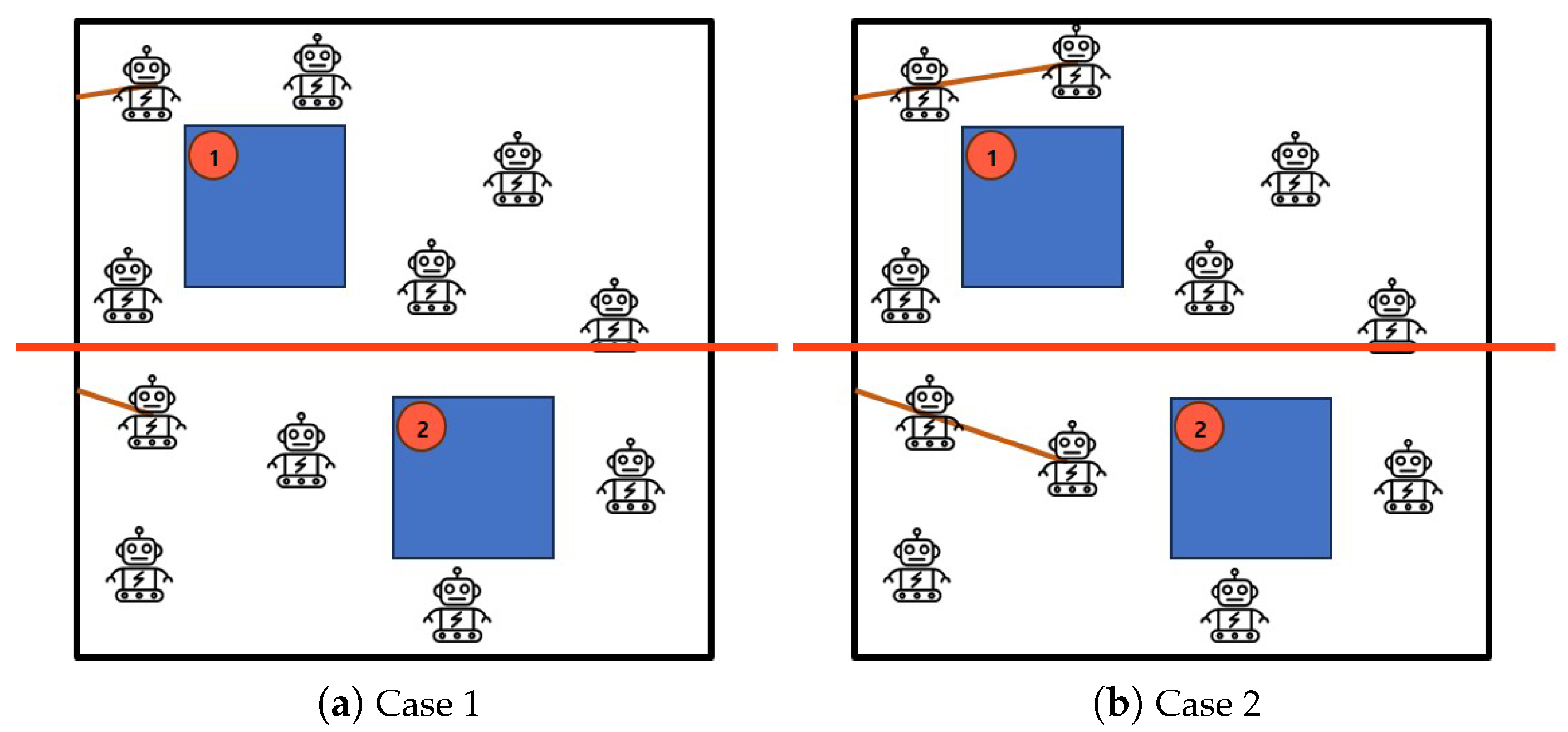

2. System Overview and Problem Definition

2.1. System Settings and Assumptions

- The given transportation station area is a 2D square area that may contain obstacles. Each obstacle is assigned a priority at random.

- The security importance of each obstacle in the transportation station area is determined by its assigned priority; an obstacle whose priority is lower than a given threshold can be ignored.

- The system components include mobile robots, all of which move at the same speed.

- Mobile robots have communication systems for efficient communication and cooperation.

- If a mobile robot crashes, it can no longer be used. Each mobile robot moves across the floor to specific positions to provide crowd surveillance.

- Mobile robots can move freely in all areas.

- Mobile robots work together to determine the optimal path and placement to create crowd surveillance.
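The settings above can be modeled with a few small data types. The sketch below is a hypothetical illustration, not the authors' simulator: it assumes obstacles sit on distinct integer cells of a `side` x `side` square, that priorities are drawn uniformly from `1..max_priority`, and that obstacles with priority below a `bound` are ignored. The names `Obstacle`, `make_obstacles`, `relevant_obstacles`, and `travel_time` are all introduced here for illustration.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Obstacle:
    x: int
    y: int
    priority: int  # randomly assigned security / monitoring priority


def make_obstacles(side: int, count: int, max_priority: int, seed: int = 0) -> list[Obstacle]:
    """Scatter `count` obstacles on distinct integer cells of a side x side square,
    each with a priority drawn uniformly from 1..max_priority (an assumption)."""
    rng = random.Random(seed)
    cells = rng.sample([(x, y) for x in range(side) for y in range(side)], count)
    return [Obstacle(x, y, rng.randint(1, max_priority)) for x, y in cells]


def relevant_obstacles(obstacles: list[Obstacle], bound: int) -> list[Obstacle]:
    """Ignore obstacles whose priority is lower than the security bound."""
    return [o for o in obstacles if o.priority >= bound]


def travel_time(total_distance: float, speed: float) -> float:
    """All robots share one speed, so total travel time is distance / speed."""
    return total_distance / speed
```

For example, `relevant_obstacles(make_obstacles(10, 15, 5, seed=1), bound=3)` keeps only the obstacles that still matter for surveillance. Because every robot has the same speed, `travel_time` is a simple rescaling, which is why the schemes below can optimize distance alone.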

2.2. System Conditions

2.3. Problem Definition

3. Proposed Schemes

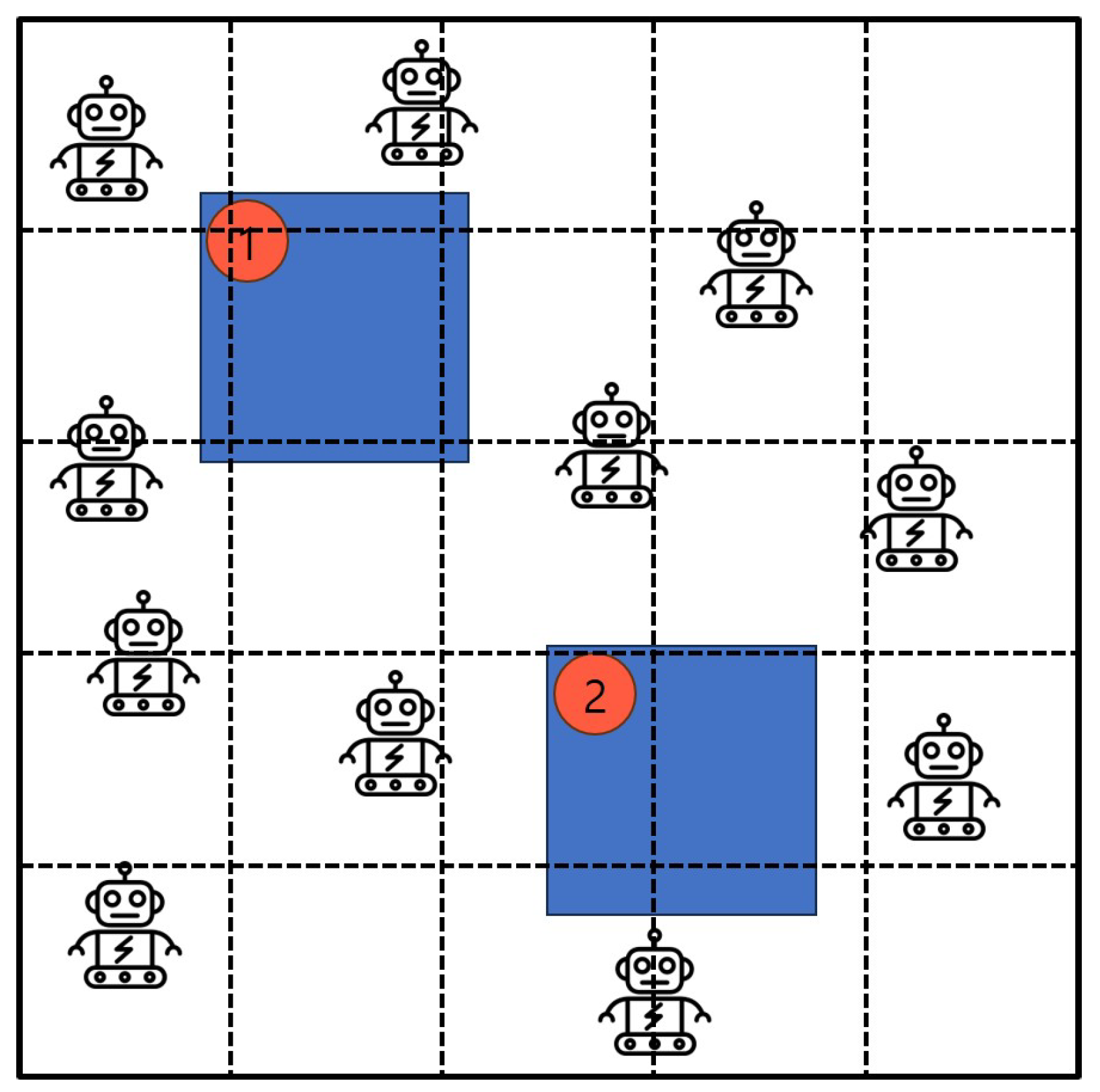

3.1. Algorithm 1: Half-Divided-Positioning

- Check the given square area.

- Identify the location of randomly scattered mobile robots.

- Verify the location of obstacles in the area and identify randomly assigned priorities.

- Obstacles whose priority is below a certain value are excluded.

- Divide the area in half horizontally.

- Form the crowd surveillance by moving one robot at a time within each of the two halves.

- Calculate the total distance traveled by the mobile robots and return it as the final output.

| Algorithm 1 Half-Divided-Positioning |
| --- |
| Inputs: , Output: |
| 1: accept the given area S; |
| 2: verify the positions of mobile robots M in S; |
| 3: set ; |
| 4: set total distance = 0; |
| 5: recognize the locations of obstacles T in S; |
| 6: assign the priority to each obstacle; |
| 7: divide S in half and set them as and ; |
| 8: while a crowd surveillance is not formed do |
| 9: select a robot m in M; |
| 10: move m to min(, ); |
| 11: estimate the moving distance and add it to total distance; |
| 12: if the crowd surveillance is generated completely in S then |
| 13: exit |
| 14: update total distance to ; |
| 15: return ; |
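Algorithm 1 can be sketched in Python under several stated assumptions, since the exact target rule in step 10 is not recoverable from this text. The sketch assumes the area is an `n` x `n` grid, robots move between 4-connected cells at one distance unit per move, obstacles with priority at or above `bound` block their cell, and the required surveillance positions are given as a hypothetical `targets` list. It splits the targets horizontally at row `n // 2`, alternates between the two halves, and in each turn moves the free robot with the shortest obstacle-free (breadth-first search) path to an open target in that half. All function and parameter names are illustrative.

```python
from collections import deque

Cell = tuple[int, int]


def bfs_distances(start: Cell, n: int, blocked: set[Cell]) -> dict[Cell, int]:
    """Shortest 4-connected path length from `start` to every reachable free cell."""
    dist = {start: 0}
    queue = deque([start])
    while queue:
        x, y = queue.popleft()
        for nxt in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
            if (0 <= nxt[0] < n and 0 <= nxt[1] < n
                    and nxt not in blocked and nxt not in dist):
                dist[nxt] = dist[(x, y)] + 1
                queue.append(nxt)
    return dist


def half_divided_positioning(n, robots, obstacles, bound, targets):
    """Hypothetical sketch of Algorithm 1 on an n x n grid.

    robots:    list of (x, y) start cells
    obstacles: list of (x, y, priority); those with priority < bound are ignored
    targets:   list of (x, y) surveillance cells that must all be occupied
    Returns the total number of unit moves made by all robots.
    """
    blocked = {(x, y) for x, y, p in obstacles if p >= bound}
    # Divide the area horizontally: lower half y < n // 2, upper half y >= n // 2.
    halves = [[t for t in targets if t[1] < n // 2],
              [t for t in targets if t[1] >= n // 2]]
    free_robots = list(robots)
    total = 0
    turn = 0
    while any(halves):  # crowd surveillance not yet formed
        open_targets = halves[turn % 2]  # alternate between the two halves
        turn += 1
        if not open_targets:
            continue
        # Pick the free robot/target pair in this half with the shortest path.
        best = None
        for r in free_robots:
            dist = bfs_distances(r, n, blocked)
            for t in open_targets:
                if t in dist and (best is None or dist[t] < best[0]):
                    best = (dist[t], r, t)
        if best is None:
            raise ValueError("remaining targets are unreachable or no robots are left")
        d, r, t = best
        total += d
        free_robots.remove(r)
        open_targets.remove(t)
    return total
```

As a usage example, `half_divided_positioning(3, [(0, 0)], [(1, 0, 5), (1, 1, 5)], 3, [(2, 0)])` forces the robot around the two high-priority obstacles (6 moves), whereas raising `bound` to 6 makes both obstacles negligible and the straight route costs 2 moves. Breadth-first search is used here only because it gives exact shortest paths on an unweighted grid; the paper does not state which path planner it uses.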

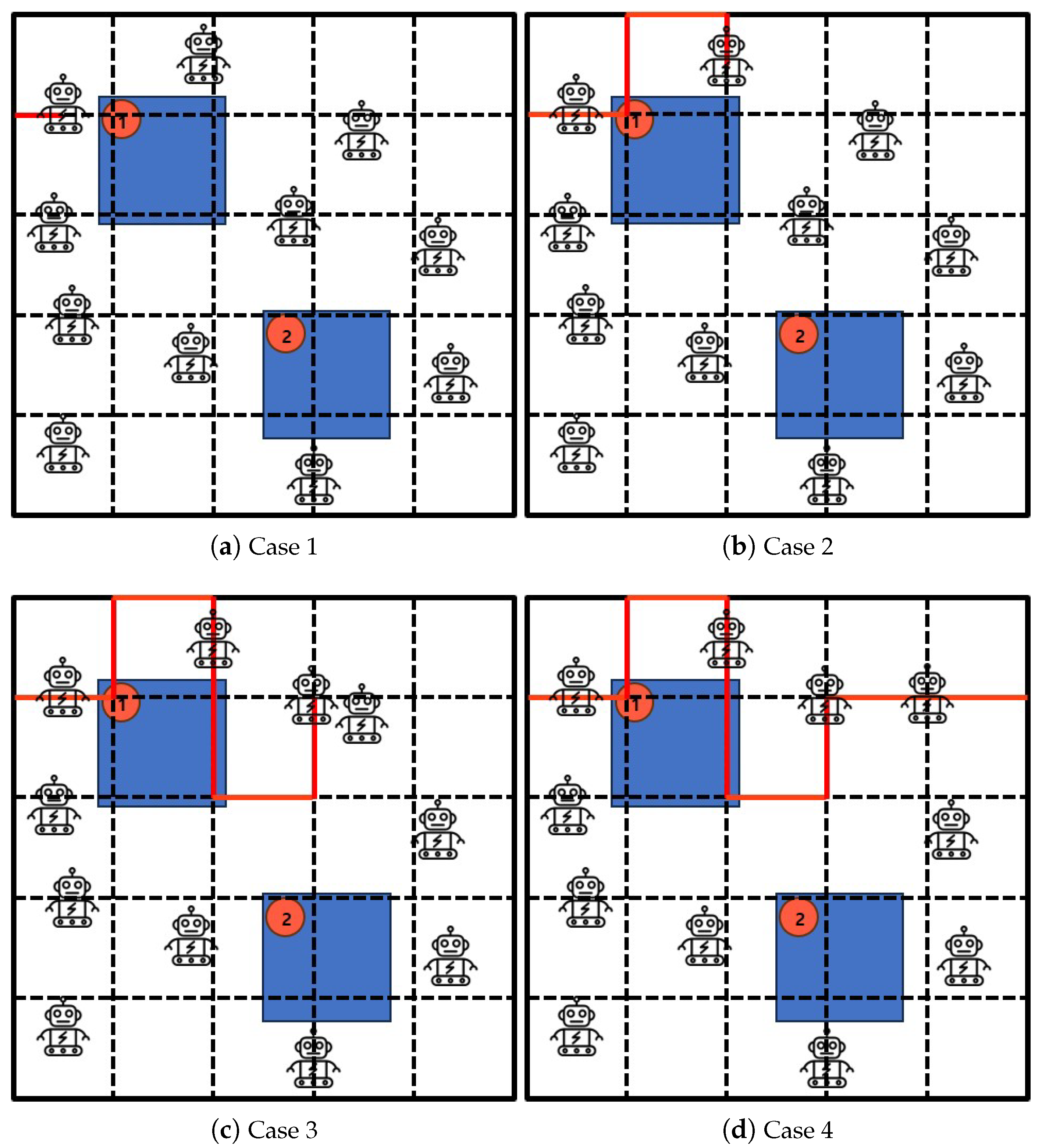

3.2. Algorithm 2: Excluded-Coordinated-Movement

- Verify the given transportation space.

- Place mobile robots randomly in the given space.

- Identify the arbitrary locations of the mobile robots throughout the area.

- Check the locations of obstacles within the area and identify their randomly assigned priorities.

- Exclude obstacles whose priorities do not exceed a certain value.

- Draw lines across the area and assign coordinates to all regions.

- Plan each robot's travel path, excluding the coordinates of previously visited parts.

- Move each robot along its planned path to form the crowd surveillance.

- Estimate the total distance traveled by the mobile robots and return it as the final output.

| Algorithm 2 Excluded-Coordinated-Movement |
| --- |
| Inputs: , Output: |
| 1: verify the given area S; |
| 2: place a set of mobile robots M in S; |
| 3: check the positions of M; |
| 4: set ; |
| 5: set total distance = 0; |
| 6: set a security priority bound b; |
| 7: identify the positions of obstacles T in S; |
| 8: while some obstacle in T has not been assigned a priority do |
| 9: assign the priority p to an obstacle t of T; |
| 10: if p < b then |
| 11: exclude t from T; |
| 12: generate the line-based coordinates in S; |
| 13: while a crowd surveillance is not formed do |
| 14: select a robot m in M; |
| 15: move m to a position for crowd surveillance, excluding the coordinates of previously visited parts; |
| 16: calculate the moving distance and add it to total distance; |
| 17: if the crowd surveillance is created completely in S then |
| 18: exit |
| 19: update total distance to ; |
| 20: return ; |
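A minimal Python sketch of Algorithm 2 follows, built on assumptions that the text leaves open. It interprets the "line-based coordinates" as the intersections of horizontal and vertical lines drawn every `spacing` units across a `side` x `side` area, indexed as `(i, j)`, with robots moving along the lines one intersection at a time. It interprets "excluding coordinates of previously visited parts" as treating intersections already taken by placed robots as impassable for later robots. Robots are processed in list order, and each moves to its nearest reachable open target in the hypothetical `targets` list. Every function and parameter name is illustrative.

```python
from collections import deque

Node = tuple[int, int]


def line_based_coordinates(side: int, spacing: int) -> list[Node]:
    """Index every intersection of the horizontal and vertical lines drawn
    every `spacing` units across a side x side area."""
    k = side // spacing  # number of gaps between adjacent lines
    return [(i, j) for i in range(k + 1) for j in range(k + 1)]


def hop_counts(start: Node, nodes: set[Node], blocked: set[Node]) -> dict[Node, int]:
    """BFS along the drawn lines: number of hops from `start` to each reachable node."""
    dist = {start: 0}
    queue = deque([start])
    while queue:
        i, j = queue.popleft()
        for nxt in ((i + 1, j), (i - 1, j), (i, j + 1), (i, j - 1)):
            if nxt in nodes and nxt not in blocked and nxt not in dist:
                dist[nxt] = dist[(i, j)] + 1
                queue.append(nxt)
    return dist


def excluded_coordinated_movement(side, spacing, robots, obstacles, bound, targets):
    """Hypothetical sketch of Algorithm 2; positions are intersection indices (i, j)."""
    nodes = set(line_based_coordinates(side, spacing))
    # Steps 6-11: exclude every obstacle whose priority p is below the bound b.
    blocked = {(i, j) for i, j, p in obstacles if p >= bound}
    occupied: set[Node] = set()  # coordinates already taken by placed robots
    open_targets = list(targets)
    total = 0
    for robot in robots:  # select one robot at a time
        if not open_targets:
            break  # crowd surveillance formed
        dist = hop_counts(robot, nodes, blocked | occupied)
        reachable = [t for t in open_targets if t in dist]
        if not reachable:
            continue  # this robot is cut off; try the next one
        target = min(reachable, key=lambda t: dist[t])
        total += dist[target] * spacing  # each hop spans one line spacing
        occupied.add(target)
        open_targets.remove(target)
    if open_targets:
        raise ValueError("crowd surveillance could not be completed")
    return total
```

The exclusion rule trades path length for safety: in `excluded_coordinated_movement(2, 1, [(0, 0), (0, 0)], [], 3, [(1, 0), (2, 0)])` the second robot must detour around the intersection already claimed by the first. A greedy, order-dependent choice like this one is simple but not guaranteed to minimize the total distance, and it can fail to complete the surveillance when an early placement cuts off a later robot.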

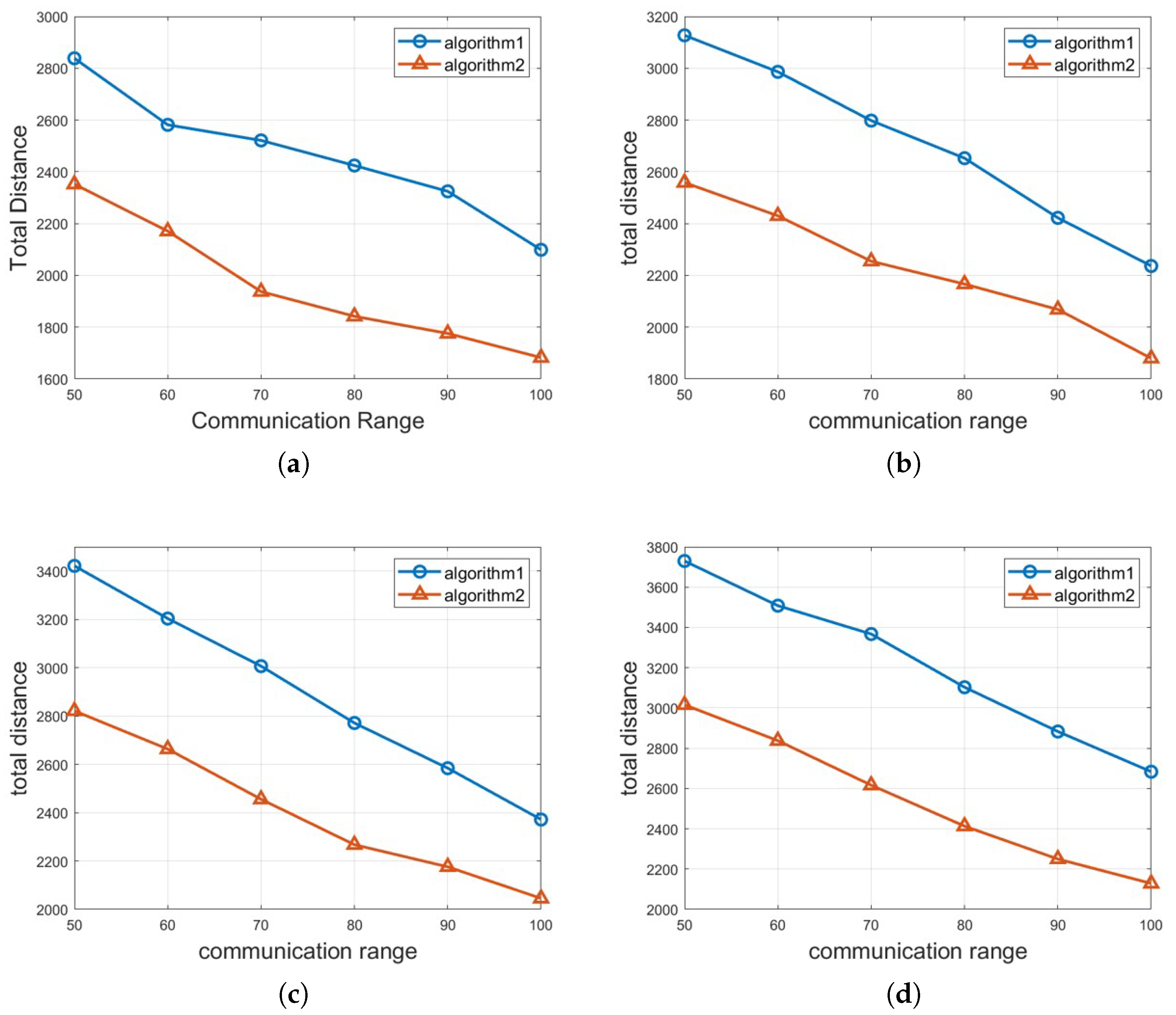

4. Experimental Evaluations and Discussions

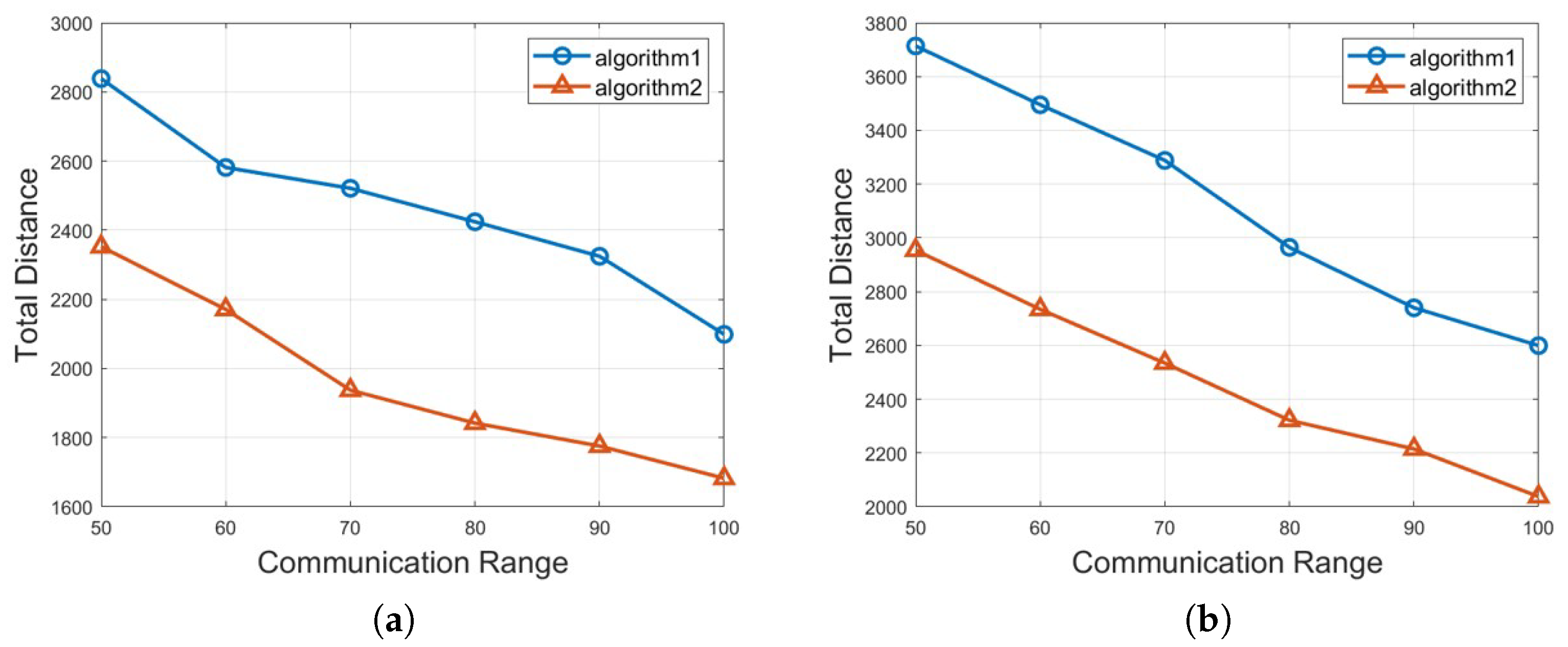

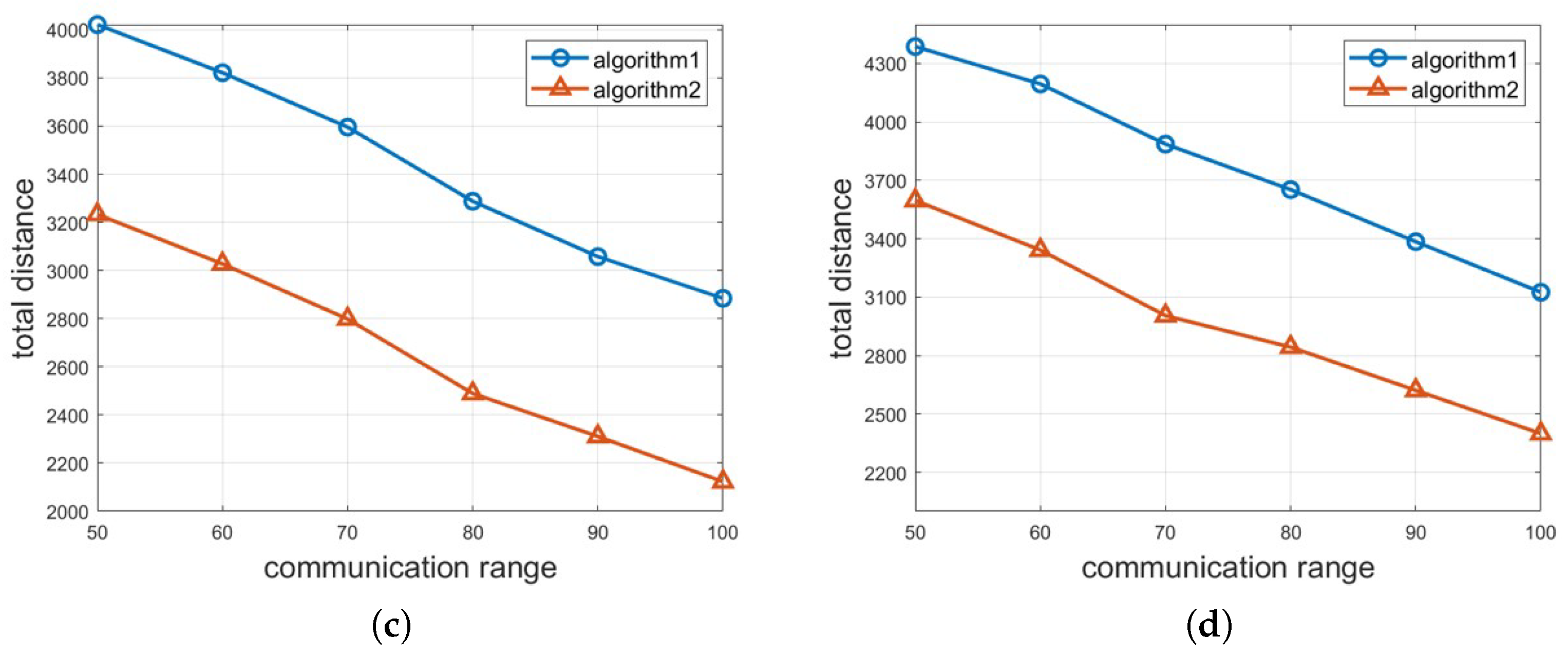

4.1. Simulation Results

4.2. Complexity Analysis

5. Concluding Remarks

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
| --- | --- |
| IoT | Internet of Things |
| IIoT | Industrial Internet of Things |
| RAN | Radio Access Network |


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Choi, Y.; Kim, H. Obstacle-Aware Crowd Surveillance with Mobile Robots in Transportation Stations. Sensors 2025, 25, 350. https://doi.org/10.3390/s25020350
