A DAG-Based Offloading Strategy with Dynamic Parallel Factor Adjustment for Edge Computing in IoV

Abstract

1. Introduction

2. Related Work

2.1. Offloading Scenario

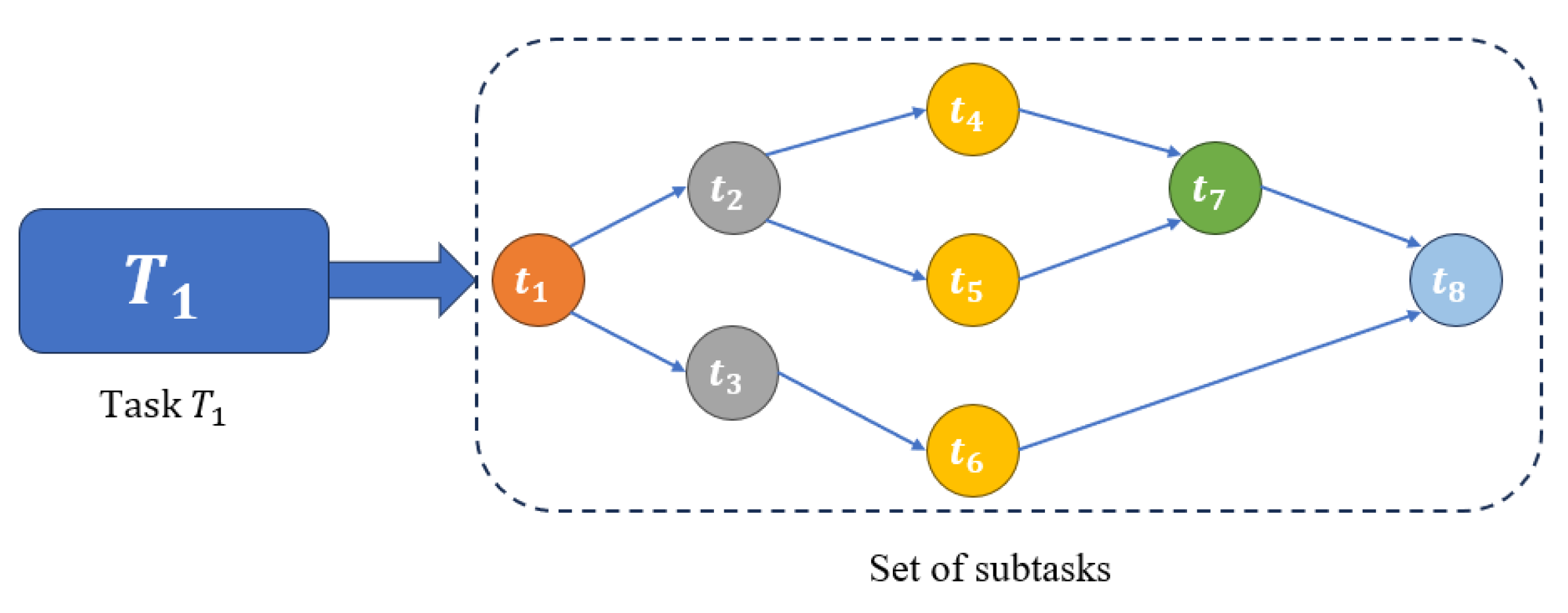

2.2. Task Model

2.3. Offloading Strategy

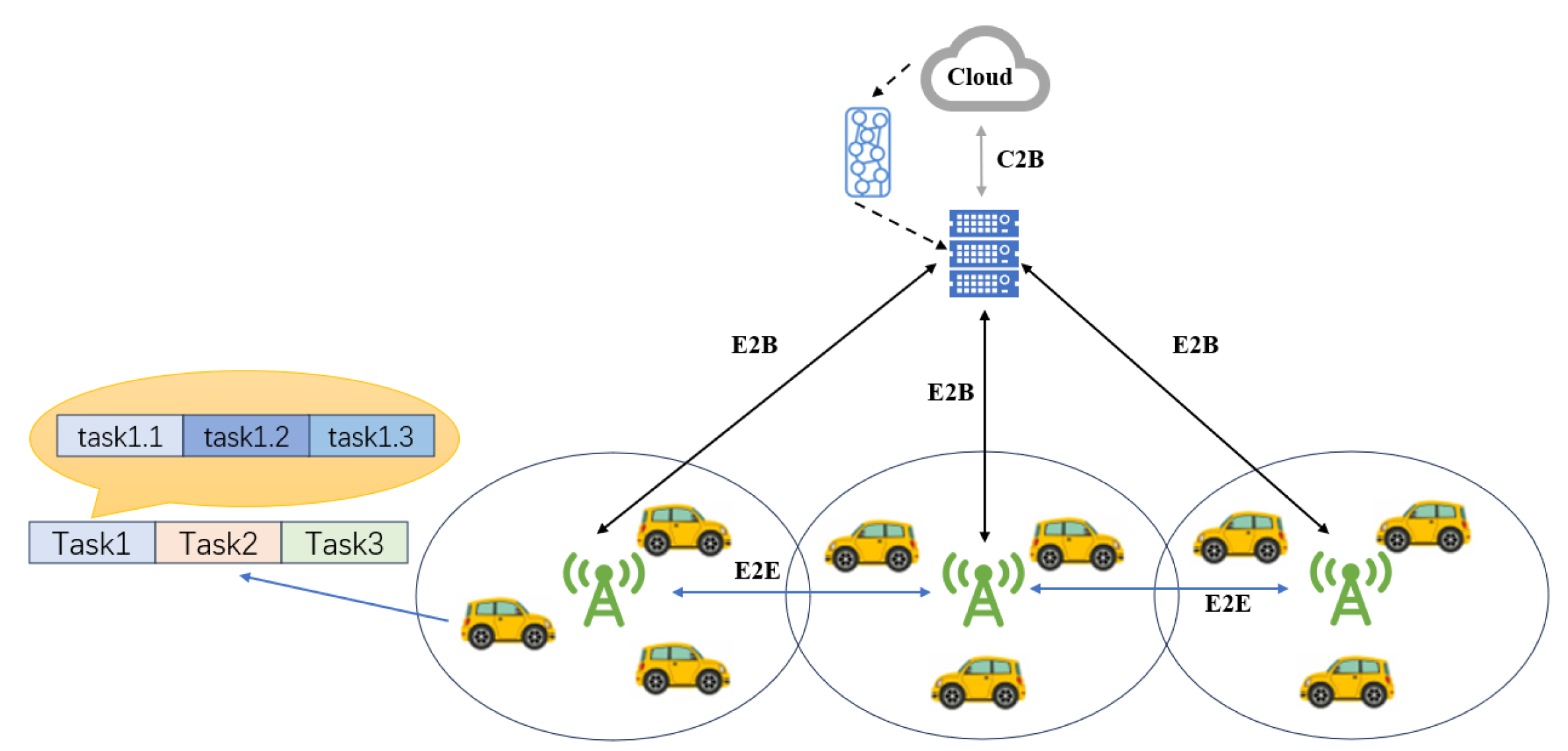

3. System Model

3.1. Network Model

3.2. Communication Model

3.3. Problem Formulation

4. Dynamic Adjustment of Parallel Factor Offloading Strategy

4.1. Subtask Scheduling and Prioritization

4.2. Parallel Factor and Load-Aware Scheduling Model

Algorithm 1: DPF Algorithm

5. Evaluation Analysis

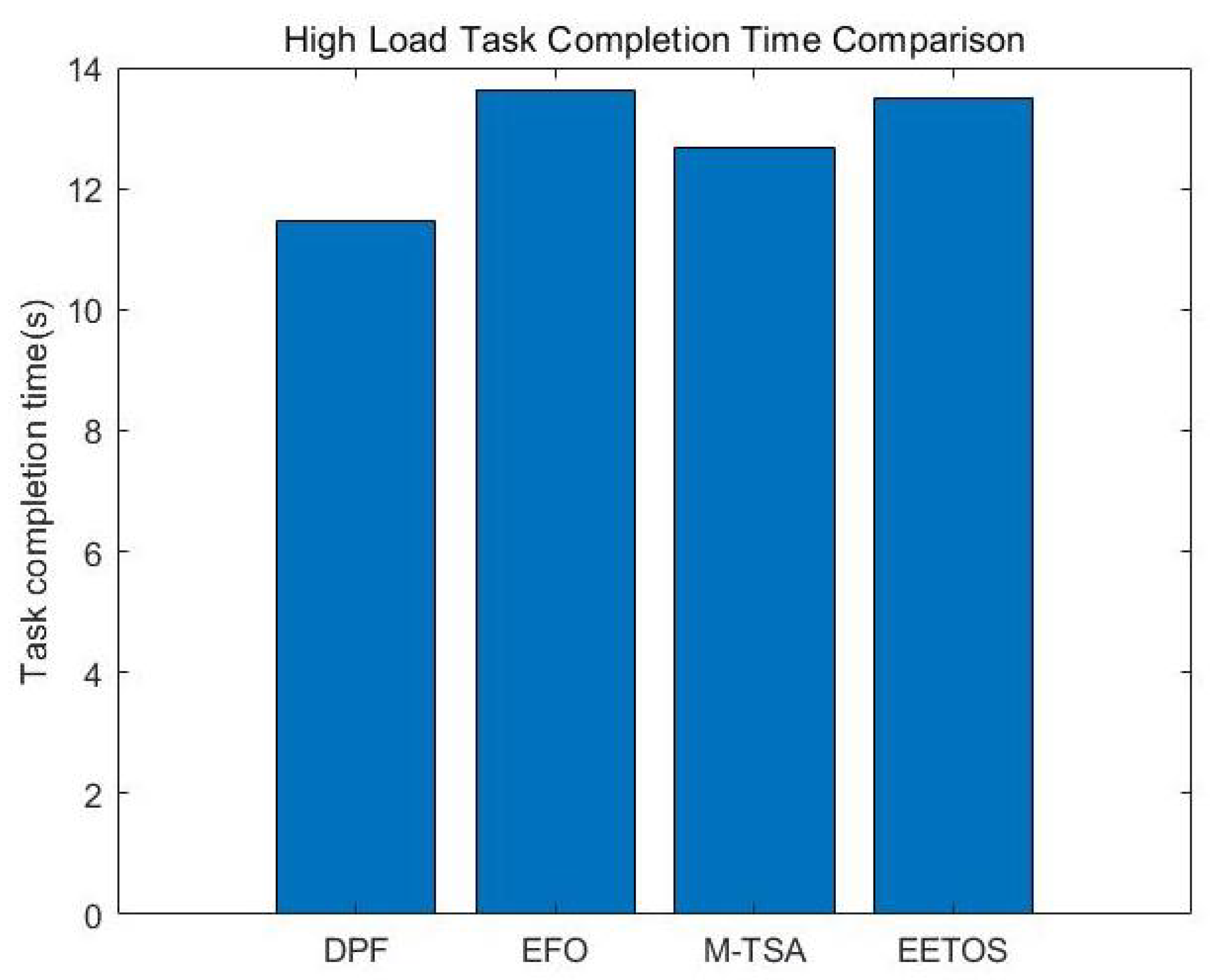

5.1. Task Completion Time

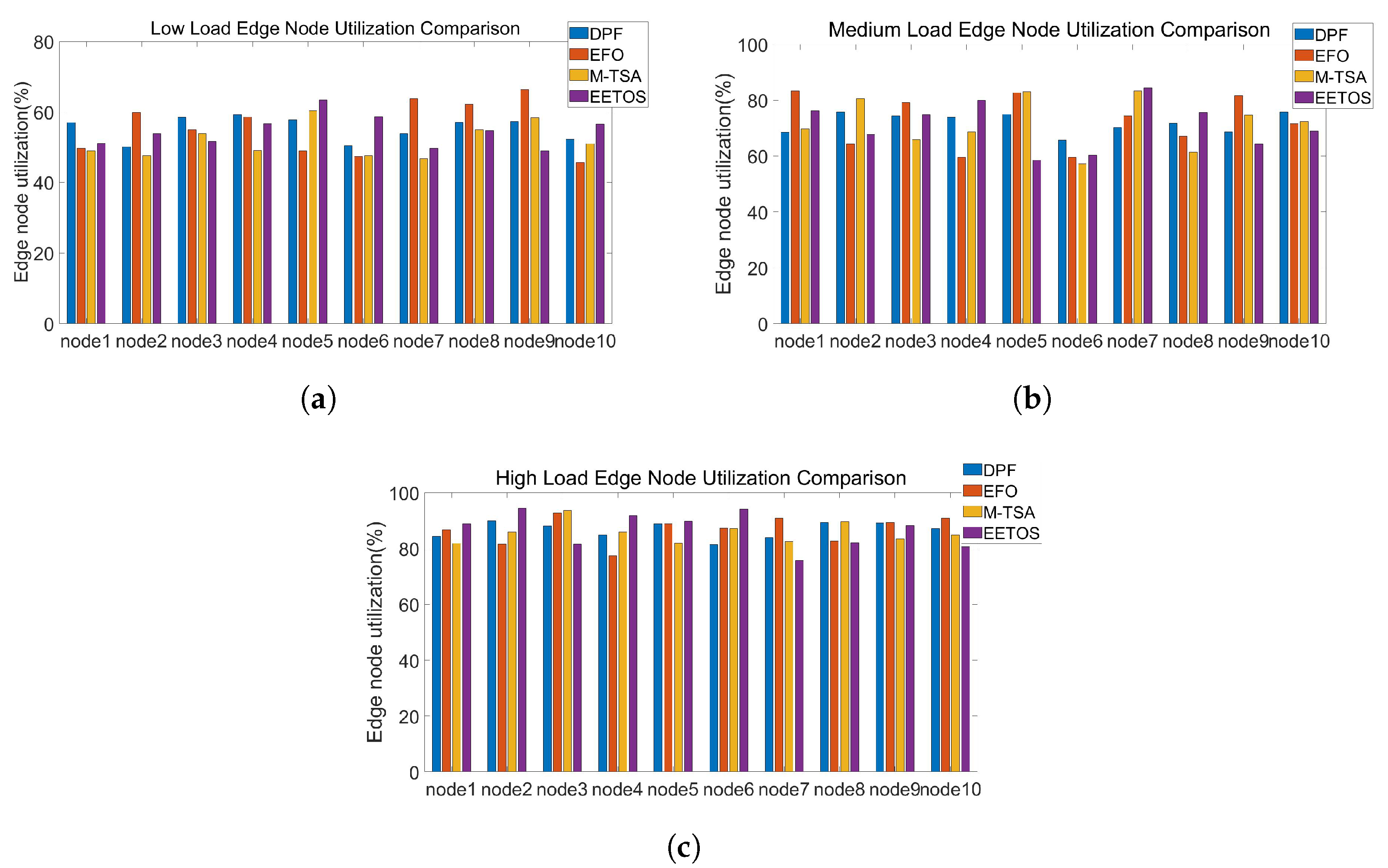

5.2. Server Utilization

5.3. System Scalability

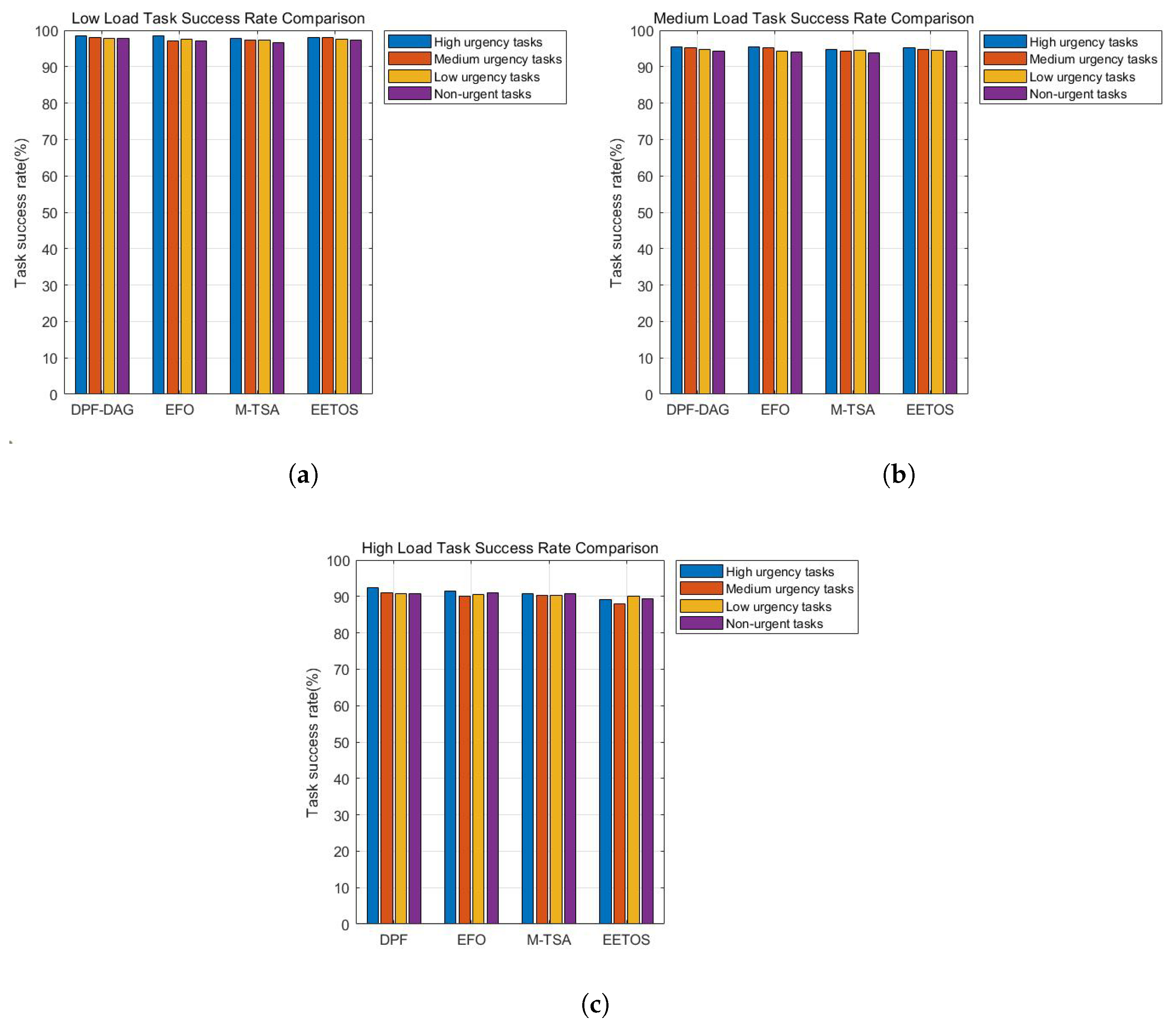

5.4. Task Success Rate

5.5. System Regulation Performance

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Guan, W.; Zheng, Q.; Lian, X.; Gao, C. A DAG-Based Offloading Strategy with Dynamic Parallel Factor Adjustment for Edge Computing in IoV. Sensors 2025, 25, 6198. https://doi.org/10.3390/s25196198
