Gesture-Based Physical Stability Classification and Rehabilitation System

Abstract

1. Introduction

2. Literature Review

2.1. Key Considerations for Method Selection

2.1.1. Sensor Placement Considerations

- Waist/Center of Mass: most common for overall stability assessment.

- Multiple Body Segments: enhanced detail but increased complexity.

- Chest/Sternum: good for respiratory and postural coupling.

2.1.2. Application-Specific Recommendations

- Clinical Assessment: force platforms remain gold-standard.

- Field Studies: IMU-based systems preferred for portability.

- Continuous Monitoring: wearable accelerometers most practical.

- Research Applications: machine learning approaches for pattern discovery.

2.1.3. Performance Metrics

- Accuracy: force platforms > IMU systems > observational methods.

- Portability: wearable sensors > clinical equipment.

- Cost-Effectiveness: IMU systems > force platforms > vision systems.

- Real-time Capability: accelerometers > force platforms > complex ML systems.

3. Methodology

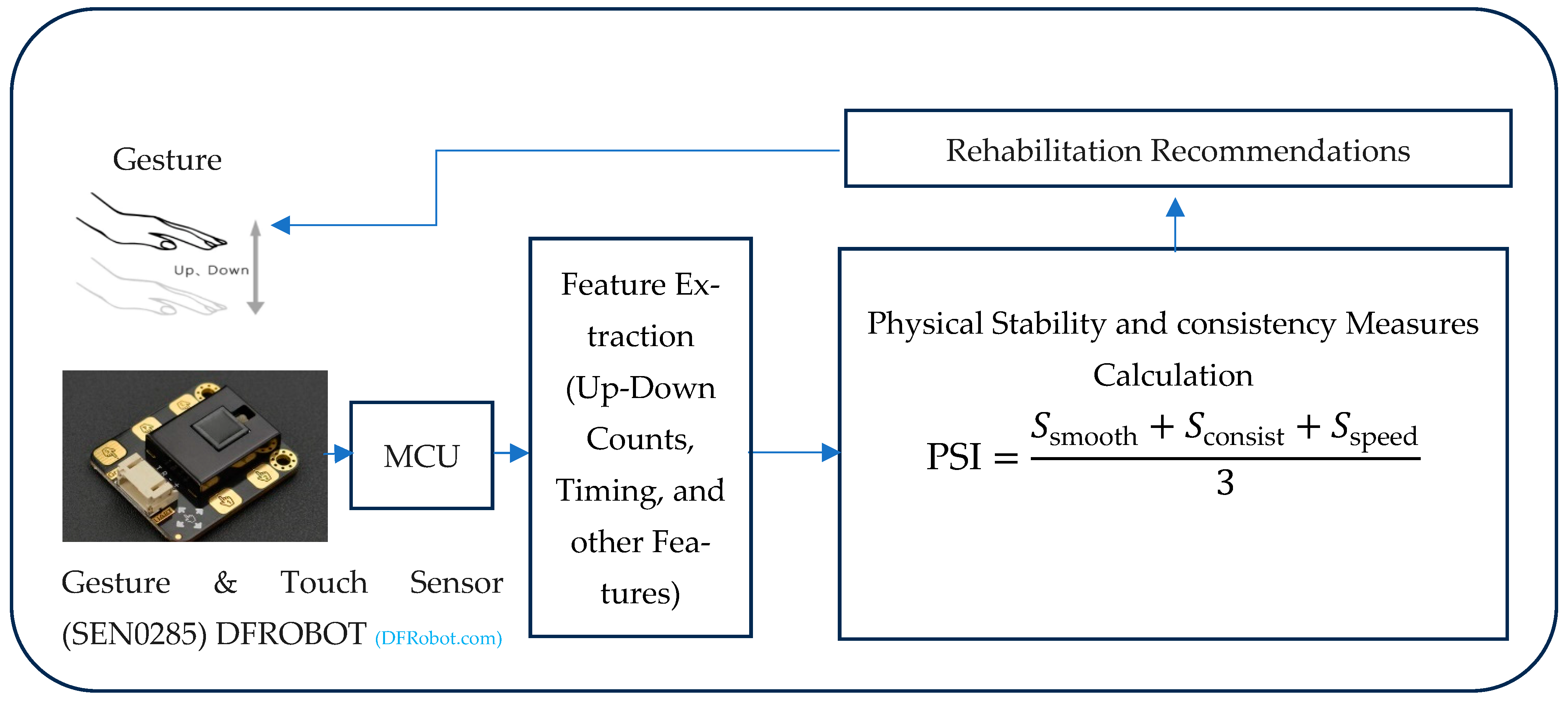

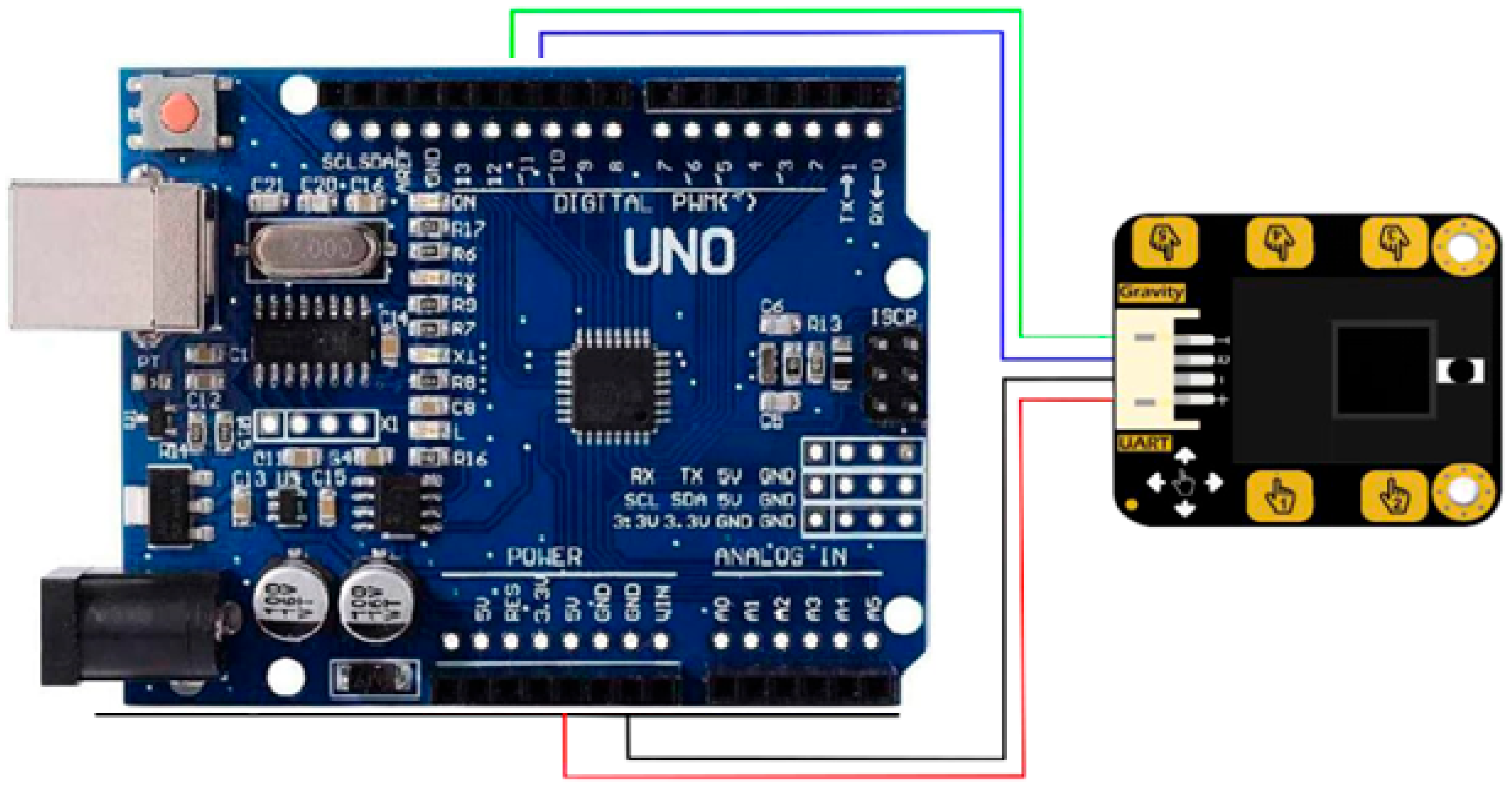

3.1. System Architecture

- Arduino Uno Microcontroller: serves as the central processing unit for data acquisition, processing, and control.

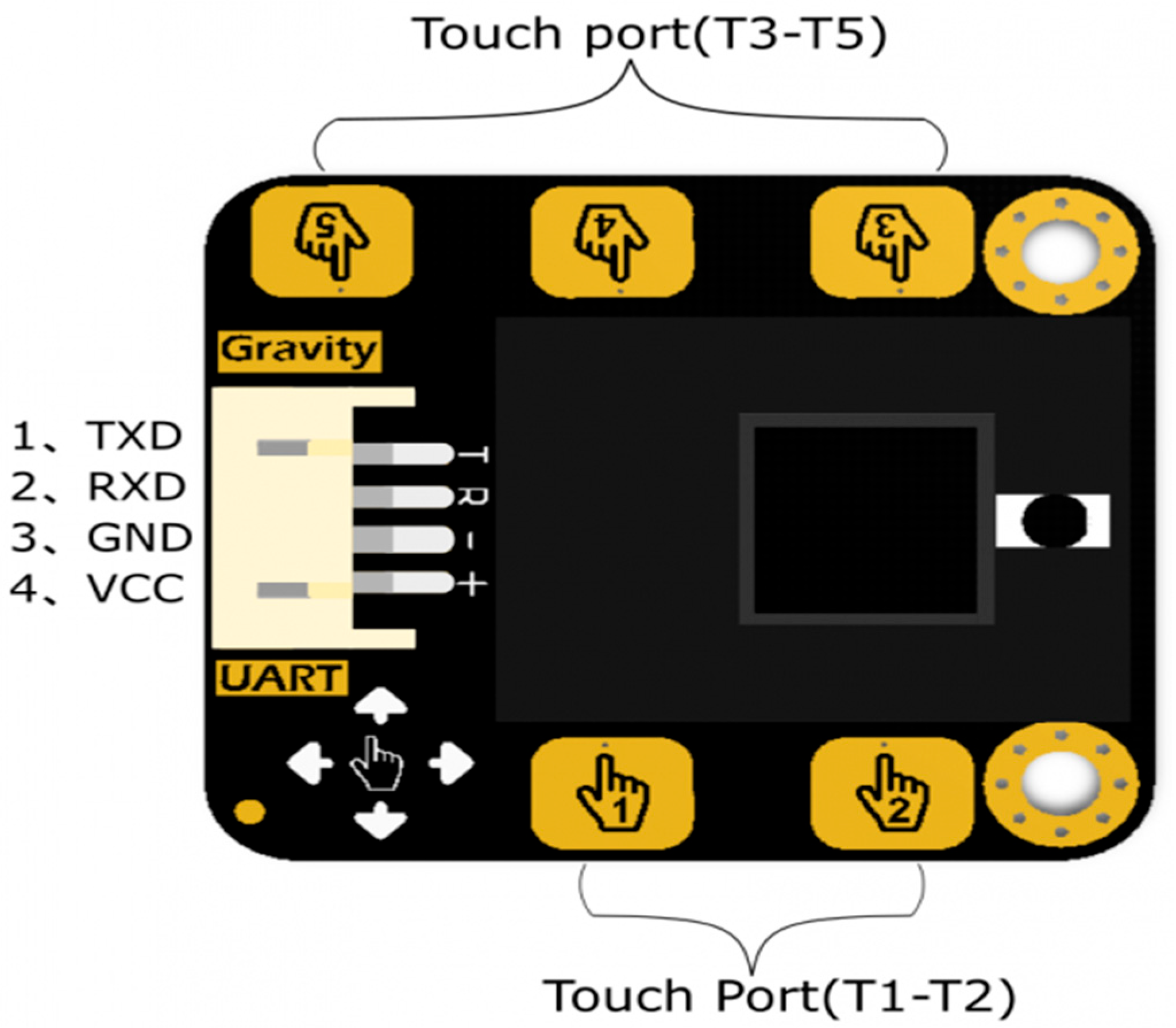

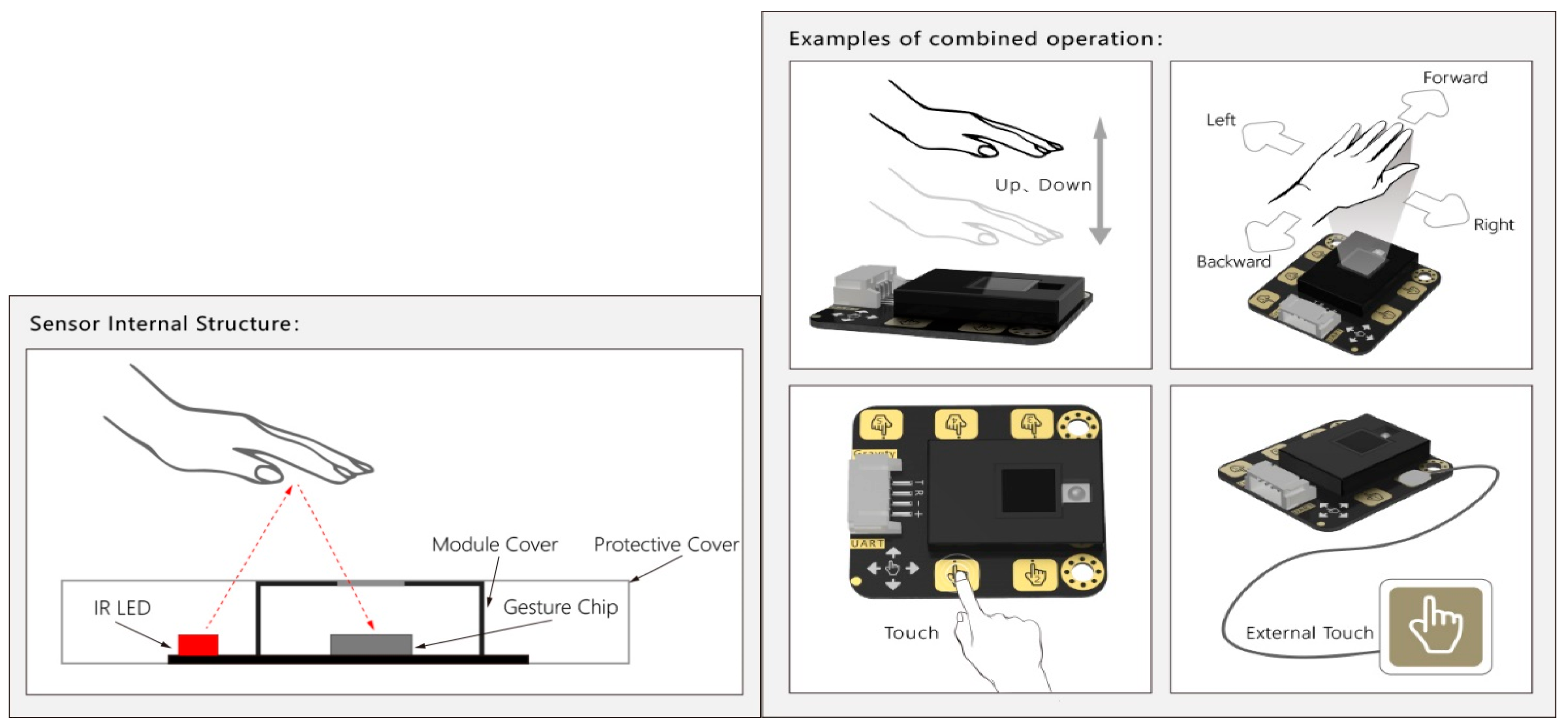

- DFRobot Gesture and Touch Sensor: Detects “up” and “down” hand gestures. This sensor integrates the APDS-9960 gesture and proximity sensor.

- Serial Communication: used for communication with a computer for data logging and visualization.

- Power Supply: provides power to the Arduino and sensor via USB.

3.2. Feature Extraction

3.2.1. Gesture Counting and Timing Feature Extraction

- Let $t_{\text{now}}$ be the current time in milliseconds.

- Let $t_{\text{last}}$ be the timestamp of the last gesture of the same type.

- Let $T_{\text{d}}$ be the debounce time used to filter out consecutive gestures.
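
The debounce rule implied by these definitions can be sketched as follows. This is a minimal Python illustration, not the authors' Arduino firmware: the helper names `accept_gesture` and `count_gestures` and the dictionary of last-accepted timestamps are hypothetical, and the 200 ms threshold is the `debounceTime` value quoted in Section 3.3.3.

```python
DEBOUNCE_MS = 200  # debounceTime quoted in Section 3.3.3


def accept_gesture(gesture, now_ms, last_seen, debounce_ms=DEBOUNCE_MS):
    """Return True if the gesture should be counted, updating last_seen.

    last_seen maps a gesture type ("up" or "down") to the timestamp (ms)
    of the last accepted gesture of that type. Hypothetical helper.
    """
    previous = last_seen.get(gesture)
    if previous is not None and now_ms - previous < debounce_ms:
        return False  # too soon after the previous gesture of this type
    last_seen[gesture] = now_ms
    return True


def count_gestures(events):
    """Count debounced gestures from (gesture, timestamp_ms) pairs."""
    last_seen = {}
    counts = {"up": 0, "down": 0}
    for gesture, now_ms in events:
        if accept_gesture(gesture, now_ms, last_seen):
            counts[gesture] += 1
    return counts
```

In this sketch a rejected gesture does not refresh the stored timestamp, so a rapid burst is counted once and the next gesture is accepted as soon as the debounce window after the accepted one has elapsed.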

3.2.2. Smoothness Score Calculation

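- Let $t^{\text{up}}_i$ be the timestamps for “Up” gestures.

- Let $t^{\text{down}}_i$ be the timestamps for “Down” gestures.

- Let $\Delta t^{\text{up}}_i = t^{\text{up}}_{i+1} - t^{\text{up}}_i$ be the interval between consecutive “Up” gestures.

- Let $\Delta t^{\text{down}}_i = t^{\text{down}}_{i+1} - t^{\text{down}}_i$ be the interval between consecutive “Down” gestures.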
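
One possible way to turn these intervals into a score is sketched below. Section 3.3.4 states only that the average deviation between the “Up” and “Down” intervals is computed and that a lower deviation gives a higher score; the pairwise comparison, the linear mapping to a 0 to 100 range, and the `max_deviation_ms` parameter are assumptions made for illustration and are not the published formula.

```python
def intervals(timestamps):
    """Differences between consecutive timestamps (ms)."""
    return [b - a for a, b in zip(timestamps, timestamps[1:])]


def smoothness_score(up_times, down_times, max_deviation_ms=1000.0):
    """Map the mean |up interval - down interval| to a 0-100 score.

    Assumed scaling: zero deviation gives 100, and a deviation of
    max_deviation_ms or more gives 0.
    """
    pairs = list(zip(intervals(up_times), intervals(down_times)))
    if not pairs:
        return 0.0  # not enough gestures to form an interval pair
    mean_dev = sum(abs(u - d) for u, d in pairs) / len(pairs)
    return max(0.0, 100.0 * (1.0 - mean_dev / max_deviation_ms))
```

For example, perfectly regular alternation (every interval equal to 500 ms) yields a score of 100, while intervals that differ by 100 ms on average yield 90 under this assumed scaling.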

3.2.3. Consistency Score Calculation

- Let $N_{\text{up}}$ be the count of “Up” gestures.

- Let $N_{\text{down}}$ be the count of “Down” gestures.

- Let $N = N_{\text{up}} + N_{\text{down}}$ be the total number of gestures.
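
A consistency score built from these counts could look like the sketch below. The text in Section 3.3.4 says only that deviations from an equal split of “Up” and “Down” gestures are penalized; the specific linear penalty used here, and the choice to return 0 when no gestures were recorded, are assumptions.

```python
def consistency_score(up_count, down_count):
    """Score 100 for an even split, falling linearly to 0 for a one-sided one.

    Assumed form: 100 * (1 - |up - down| / total).
    """
    total = up_count + down_count
    if total == 0:
        return 0.0  # no gestures recorded, nothing to score
    imbalance = abs(up_count - down_count) / total
    return 100.0 * (1.0 - imbalance)
```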

3.2.4. Speed Score

- Normalization:

- 50 ms represents the fastest possible gesture time.

- 1000 ms represents the slowest acceptable gesture time.

- Constraints:
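
One plausible reading of these normalization bounds is a linear mapping from gesture time to a score that is clamped to the 0 to 100 range. The sketch below assumes that the input is an average time per gesture in milliseconds and that faster gestures score higher; neither the function name nor the exact formula is taken from the source.

```python
FASTEST_MS = 50.0     # fastest possible gesture time (from the text)
SLOWEST_MS = 1000.0   # slowest acceptable gesture time (from the text)


def speed_score(avg_gesture_ms):
    """Linearly map gesture time to 0-100 and clamp to that range.

    Assumed form: 100 at FASTEST_MS or below, 0 at SLOWEST_MS or above.
    """
    fraction = (SLOWEST_MS - avg_gesture_ms) / (SLOWEST_MS - FASTEST_MS)
    return 100.0 * min(1.0, max(0.0, fraction))
```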

3.2.5. Physical Stability Index (PSI)

- Let $S$ be the smoothness score.

- Let $C$ be the consistency score.

- Let $V$ be the speed score.
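
Section 3.3.4 states that the PSI is the average of the three scores. The short sketch below applies that rule to the two cases reported in the results table of this article; the function name is illustrative.

```python
def physical_stability_index(smoothness, consistency, speed):
    """PSI as the unweighted mean of the three component scores."""
    return (smoothness + consistency + speed) / 3.0


# Values from the "Low Stability Case" and "High Stability Case" columns.
low_case = physical_stability_index(96.89, 98.85, 71.00)
high_case = physical_stability_index(94.79, 99.21, 82.00)
print(f"{low_case:.2f} {high_case:.2f}")  # prints "88.91 92.00"
```

Both results match the PSI values listed in the table, which supports the equal-weight reading of the index.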

3.2.6. Data Capture Duration

- Let $t_{\text{start}}$ be the start time of the data capture.

- Let $t_{\text{now}}$ be the current time.

- Let $T_{\text{capture}}$ be the data capture duration.

3.3. Physical Stability Index Calculation Algorithm

3.3.1. Initialization

- Initialize the serial communication for debugging and data logging.

- Configure the DFRobot Gesture and Touch sensor for “up” and “down” gesture detection. This includes setting the gesture distance and enabling the appropriate functions.

3.3.2. Data Acquisition

- Continuously monitor the DFRobot Gesture and Touch sensor for gesture events. The DFGT.getAnEvent() function is used to retrieve the detected gesture.

- Record the timestamps of “up” and “down” gestures using the millis() function.

3.3.3. Gesture Processing

- Debounce the gestures to prevent multiple counts for a single gesture. This is achieved by ensuring a minimum time interval (debounceTime = 200 ms) between consecutive gestures.

- Store the timestamps of the last 10 “up” and “down” gestures in circular buffers (upTimes[] and downTimes[]).
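
The fixed-size timestamp buffers can be illustrated in Python with `collections.deque`, which discards the oldest entry once its `maxlen` is reached. This is a sketch of the same idea rather than the Arduino implementation; the class name `GestureBuffers` is hypothetical, while the buffer length of 10 and the `up_times`/`down_times` names follow the text.

```python
from collections import deque

BUFFER_SIZE = 10  # last 10 gestures of each type, as stated in the text


class GestureBuffers:
    """Keep only the most recent timestamps for each gesture type."""

    def __init__(self, size=BUFFER_SIZE):
        self.up_times = deque(maxlen=size)
        self.down_times = deque(maxlen=size)

    def record(self, gesture, timestamp_ms):
        """Append a timestamp; the oldest one is dropped when full."""
        target = self.up_times if gesture == "up" else self.down_times
        target.append(timestamp_ms)
```

On a microcontroller the same behavior is usually obtained with a fixed array and a write index that wraps around with the modulo operator.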

3.3.4. Physical Stability Calculation

- Smoothness Score: Calculate the smoothness score based on the consistency of time intervals between consecutive gestures. The calculateSmoothness() function computes the average deviation between the intervals of “up” and “down” gestures. A lower deviation indicates smoother movement and yields a higher smoothness score.

- Consistency Score: Calculate the consistency score based on the ratio of “up” to “down” gestures. The calculateConsistency() function penalizes large deviations from an equal distribution of “up” and “down” gestures: the more evenly the two gesture types are distributed, the higher the score.

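- Speed Score: Calculate the speed score from the gesture time, normalized between the fastest possible gesture time (50 ms) and the slowest acceptable gesture time (1000 ms).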

- Overall PSI: Combine the smoothness, consistency, and speed scores to calculate the overall Physical Stability Index (PSI). The PSI is computed as the average of the three scores.

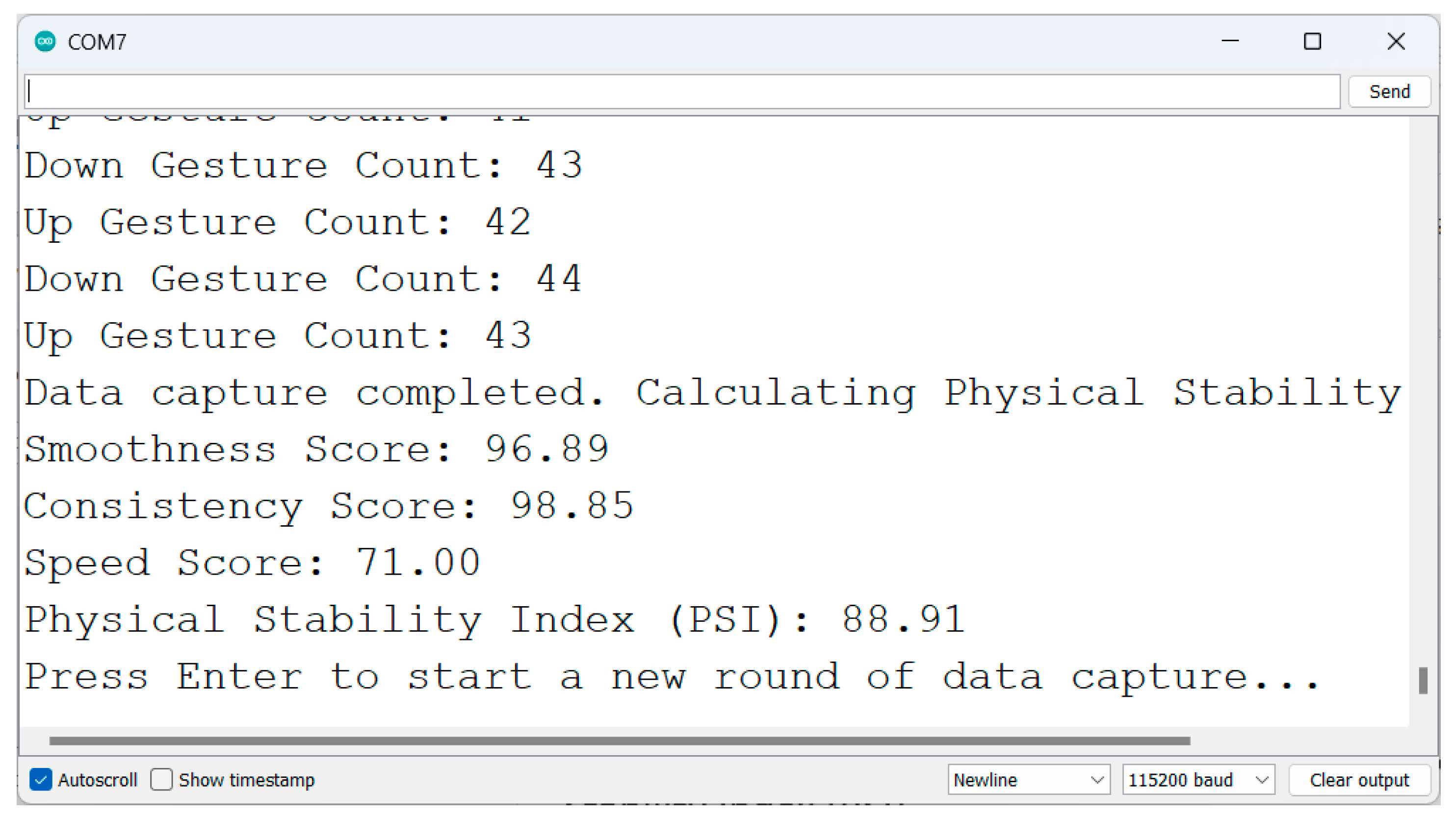

3.3.5. Data Output

- Print the smoothness score, consistency score, speed score, and PSI to the serial monitor.

3.3.6. Looping and Resetting

3.4. Models Architectures and Their Computational Complexities

3.5. Classifier Model Architectures

3.5.1. Transformer Neural Network Model Architecture

3.5.2. Convolutional Neural Network (CNN) Architecture

3.5.3. XGBoost (Extreme Gradient Boosting) Architecture

- XGBoost (Extreme Gradient Boosting) [57] is a highly optimized and widely used implementation of the gradient boosting framework, engineered for speed, efficiency, and predictive performance. Like standard gradient boosting, it is an ensemble learning approach that builds its classifier by training decision trees sequentially, in a stage-wise manner.

- This model accepts the three scaled input features (the Smoothness Score, Consistency Score, and Speed Score) derived from gesture-based stability assessments and builds an ensemble of decision trees. Trees are added iteratively, with each successive tree trained to predict and minimize residual errors (using gradient information) remaining from the previous ensemble. XGBoost’s key distinguishing features include integrated L1 and L2 regularization on leaf weights to prevent overfitting and various system optimizations for accelerated training.

- The model processes the gesture-derived features through multiple boosting rounds, where each decision tree learns to classify the four stability categories: Stable, Highly Stable, Unstable, and Highly Unstable. The ensemble approach is particularly effective for this application as it can capture complex non-linear relationships between the three input features and their corresponding stability classifications.

- Key parameters used for this XGBoost model include 100 boosting stages (n_estimators), a learning rate of 0.1, a maximum depth of 6 for individual trees (max_depth), and a random state of 42. The model optimizes a multi-class logarithmic loss (‘mlogloss’) function suitable for the four-class stability classification problem.
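
The parameters listed above map onto the scikit-learn style interface of the `xgboost` Python package roughly as shown below. This is a configuration sketch, not the authors' training script: the `objective` value, the integer encoding of the four classes, and the helper name `build_classifier` are assumptions.

```python
# Hyperparameters quoted in the text.
XGB_PARAMS = {
    "n_estimators": 100,      # boosting stages
    "learning_rate": 0.1,
    "max_depth": 6,           # maximum depth of each tree
    "random_state": 42,
    "objective": "multi:softprob",  # assumed multi-class objective
    "eval_metric": "mlogloss",      # multi-class logarithmic loss
}

# Assumed label encoding for the four stability categories.
CLASS_NAMES = ["Highly Unstable", "Unstable", "Stable", "Highly Stable"]


def build_classifier():
    """Create the classifier; requires the xgboost package to be installed."""
    from xgboost import XGBClassifier  # imported lazily on purpose
    return XGBClassifier(**XGB_PARAMS)
```

A model built this way would be trained with `build_classifier().fit(X_train, y_train)`, where `X_train` holds the three scaled scores per sample and `y_train` holds integer labels from 0 to 3.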

3.5.4. Kolmogorov–Arnold Network (KAN) Architecture

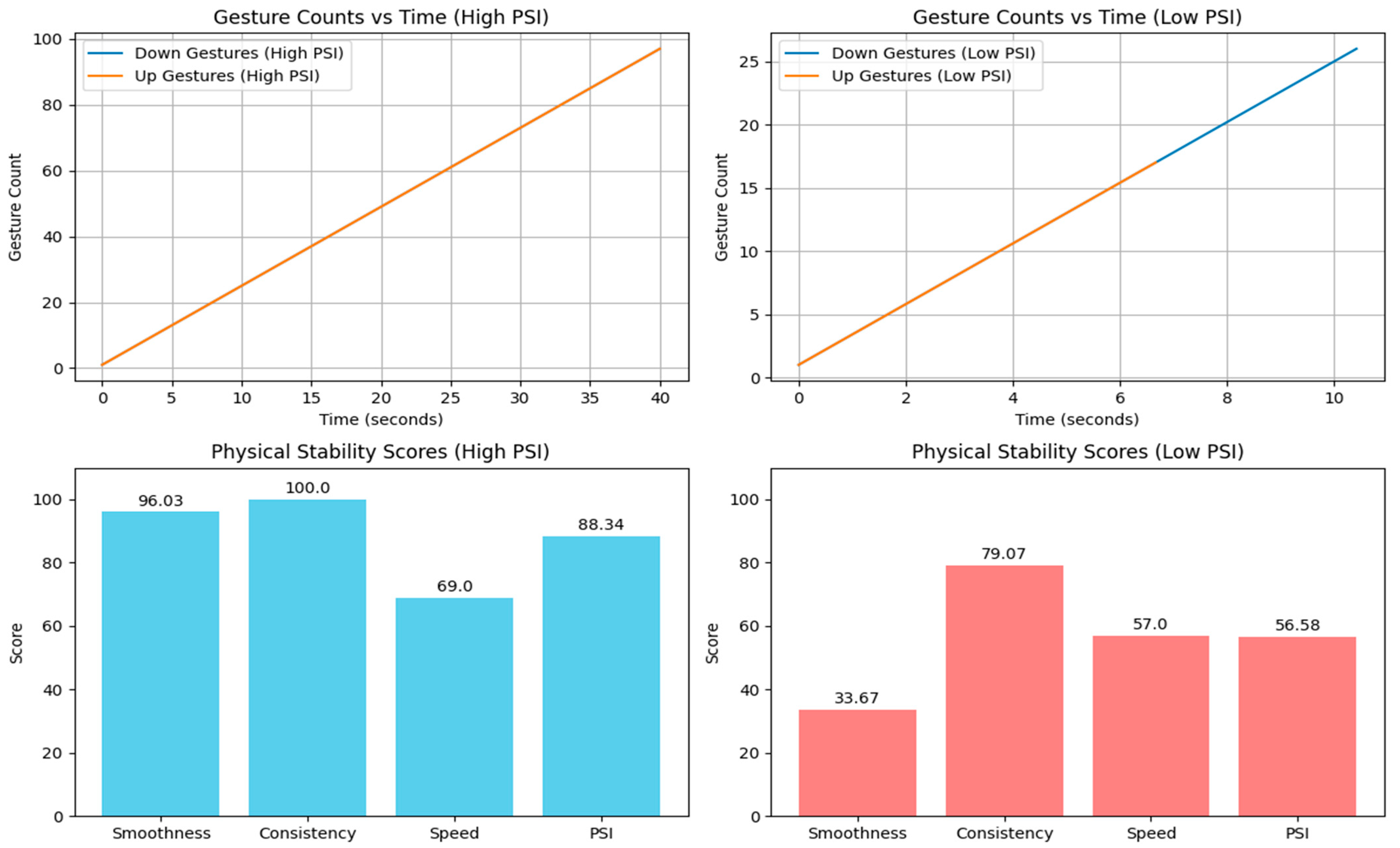

4. Results and Discussion of Physical Stability Calculation

5. Physical Stability Classification Using Both Deep Learning and Machine Learning

5.1. Gesture-Based Physical Stability Classification

5.1.1. Data Generation

- Stable: moderately high values for all features.

- Highly Stable: high values for smoothness, consistency, speed, and PSI.

- Unstable: moderate values for some features and lower values for others.

- Highly Unstable: low values for most features.

5.1.2. Data Preprocessing

- Standardization: features are scaled using StandardScaler so that every feature has zero mean and unit variance.

- Data Splitting: the dataset is divided into training and testing sets (or cross-validation folds) to evaluate the model performance.
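
The two preprocessing steps can be sketched with the standard library alone. The code mirrors what scikit-learn's StandardScaler computes (per-feature mean and population standard deviation) and fits the scaler on the training rows only; the 80/20 split, the seed, and all function names are assumptions, since the article does not state them.

```python
import random
from statistics import fmean, pstdev


def train_test_split_rows(rows, test_fraction=0.2, seed=42):
    """Shuffle rows reproducibly and split them into train and test lists."""
    shuffled = rows[:]
    random.Random(seed).shuffle(shuffled)
    n_test = int(round(len(shuffled) * test_fraction))
    return shuffled[n_test:], shuffled[:n_test]


def fit_scaler(rows):
    """Per-feature mean and population standard deviation."""
    columns = list(zip(*rows))
    means = [fmean(col) for col in columns]
    stds = [pstdev(col) or 1.0 for col in columns]  # guard constant columns
    return means, stds


def transform(rows, means, stds):
    """Apply (x - mean) / std to every feature of every row."""
    return [[(x - m) / s for x, m, s in zip(row, means, stds)] for row in rows]
```

Fitting the scaler on the training portion and reusing its statistics for the test portion avoids leaking test-set information into the model.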

5.1.3. Model Selection

- Deep Learning Models:

- Convolutional Neural Network (CNN): captures spatial patterns in the data.

- Transformer-based Classifier: handles sequential data effectively.

- Kolmogorov–Arnold Network (KAN): The Kolmogorov–Arnold representation theorem states that any continuous multivariate function can be represented as a superposition of univariate functions and additions. KANs are designed to learn this representation directly from data.

- Traditional Machine Learning Models:

5.1.4. Model Training

- Training and Validation Split: the training data are further divided into training and validation subsets with K-Fold Cross-Validation.

- Optimization: models are trained with a suitable optimizer and loss function (e.g., the Adam optimizer with cross-entropy loss).

- Early Stopping: training is stopped early if validation loss does not improve for a certain number of epochs to prevent overfitting.
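
The early-stopping rule can be expressed independently of any deep learning framework. The sketch below is illustrative: the class name, the default patience of 5 epochs, and the `min_delta` tolerance are assumptions, because the article specifies only "a certain number of epochs".

```python
class EarlyStopping:
    """Signal a stop when validation loss has not improved for `patience` epochs."""

    def __init__(self, patience=5, min_delta=0.0):
        self.patience = patience
        self.min_delta = min_delta
        self.best_loss = float("inf")
        self.bad_epochs = 0

    def step(self, val_loss):
        """Record one epoch's validation loss; return True to stop training."""
        if val_loss < self.best_loss - self.min_delta:
            self.best_loss = val_loss   # improvement: reset the counter
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1        # no improvement this epoch
        return self.bad_epochs >= self.patience
```

Inside a training loop, `step` would be called once per epoch with the validation loss, and the loop would break as soon as it returns `True`.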

5.1.5. Model Evaluation

- Accuracy: proportion of correctly classified samples.

- Precision, Recall, and F1-Score: metrics for evaluating the balance between false positives and false negatives.

- Confusion Matrix: a visual representation of the classification performance across classes.

- ROC Curves: plots of true-positive rate vs. false-positive rate for each class.
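
The first three metrics can be computed directly from a confusion matrix, as the following dependency-free sketch shows. It uses support-weighted averaging, in line with the weighted averages mentioned in Section 5.1.6; the function names are illustrative.

```python
def confusion_matrix(y_true, y_pred, labels):
    """Rows are true classes, columns are predicted classes."""
    index = {label: i for i, label in enumerate(labels)}
    matrix = [[0] * len(labels) for _ in labels]
    for t, p in zip(y_true, y_pred):
        matrix[index[t]][index[p]] += 1
    return matrix


def weighted_metrics(y_true, y_pred, labels):
    """Accuracy plus support-weighted precision, recall, and F1-score."""
    cm = confusion_matrix(y_true, y_pred, labels)
    total = len(y_true)
    accuracy = sum(cm[i][i] for i in range(len(labels))) / total
    precision = recall = f1 = 0.0
    for i in range(len(labels)):
        tp = cm[i][i]
        predicted = sum(row[i] for row in cm)  # column sum
        actual = sum(cm[i])                    # row sum (class support)
        p = tp / predicted if predicted else 0.0
        r = tp / actual if actual else 0.0
        f = 2 * p * r / (p + r) if p + r else 0.0
        weight = actual / total
        precision += weight * p
        recall += weight * r
        f1 += weight * f
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}
```

A useful sanity check when reading reported results is that support-weighted recall is always equal to accuracy, because both reduce to the number of correct predictions divided by the number of samples.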

5.1.6. Cross-Validation

- The dataset is split into K folds.

- Each fold is used once as a test set while the remaining folds are used for training.

- Performance metrics are aggregated across all folds for each model.

- Mean Accuracy: average accuracy across all folds.

- Precision, Recall, and F1-Score: weighted averages of these metrics across all folds.

- The best-performing model is identified based on these metrics.
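
The fold logic described in this list can be sketched as follows. The value K = 5, the shuffling seed, and the `evaluate` callback are assumptions for illustration; the article does not state the number of folds.

```python
import random


def k_fold_indices(n_samples, k=5, seed=42):
    """Yield (train_idx, test_idx) pairs; each sample is tested exactly once."""
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    folds = [indices[i::k] for i in range(k)]  # k roughly equal folds
    for i, test_idx in enumerate(folds):
        train_idx = [j for f, fold in enumerate(folds) if f != i for j in fold]
        yield train_idx, test_idx


def cross_validate(n_samples, evaluate, k=5):
    """Average the per-fold score returned by evaluate(train_idx, test_idx)."""
    scores = [evaluate(tr, te) for tr, te in k_fold_indices(n_samples, k)]
    return sum(scores) / len(scores)
```

Here `evaluate` stands for any routine that trains a model on the training indices and returns a metric, such as accuracy, on the test indices; repeating this for each candidate model gives the mean scores used to pick the best one.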

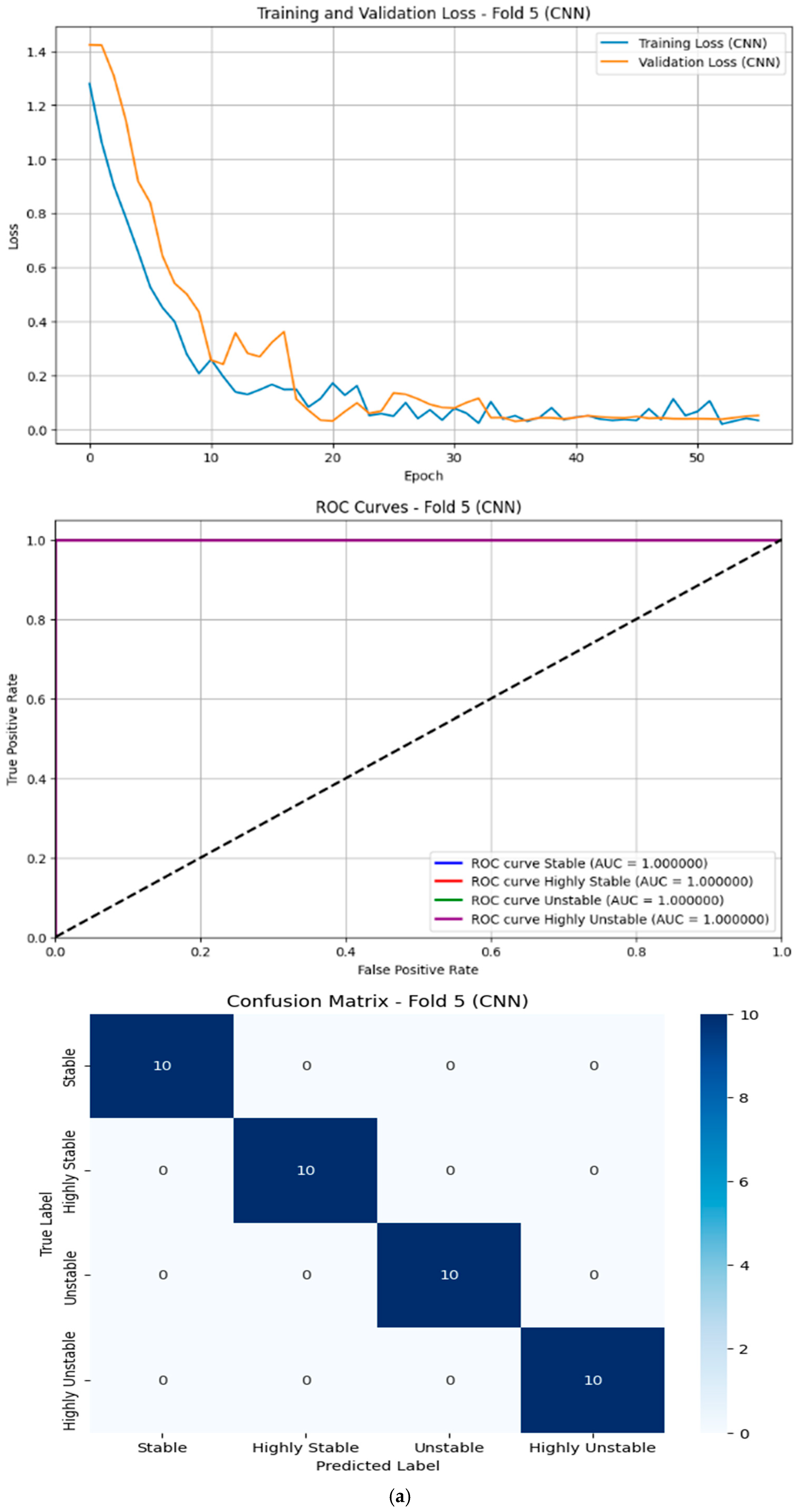

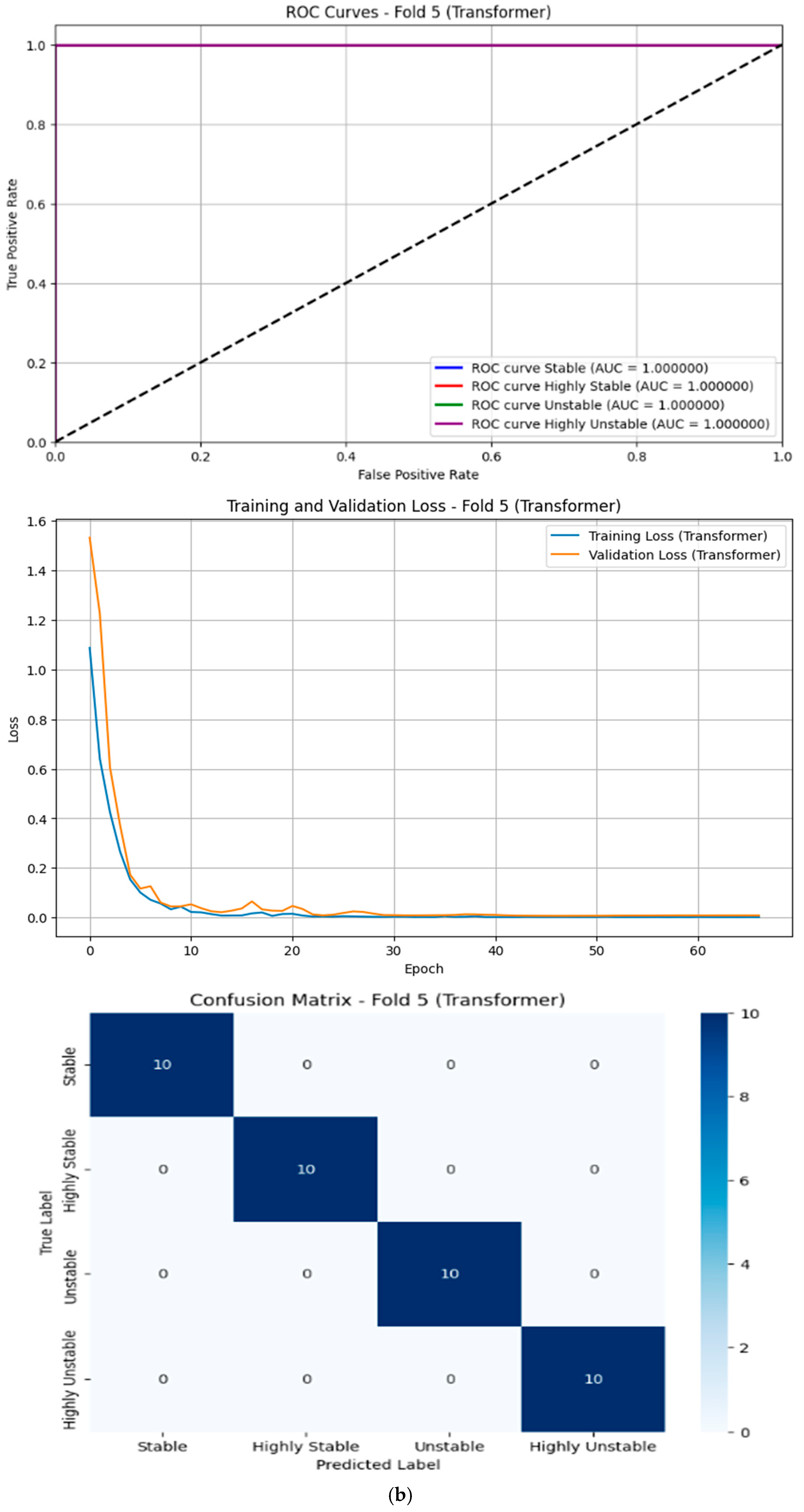

5.1.7. Results of Deep Learning Models

- Transformer:

- Achieved the highest scores of all models, approximately 99.50% across all metrics (F1-Score of 99.49%).

- This indicates that the transformer model excelled at capturing complex patterns in the data, likely due to its attention mechanism, which allows it to focus on relevant features effectively.

- However, its computational complexity is high due to quadratic scaling with the sequence length, making it less suitable for real-time or resource-constrained applications.

- CNN:

- Performed very well with an accuracy of 99.00% and an F1-Score of 98.99%.

- CNNs are effective at extracting spatial features, which may explain their strong performance on this dataset.

- The model’s computational cost is moderate compared to transformers, but still higher than traditional machine learning methods.

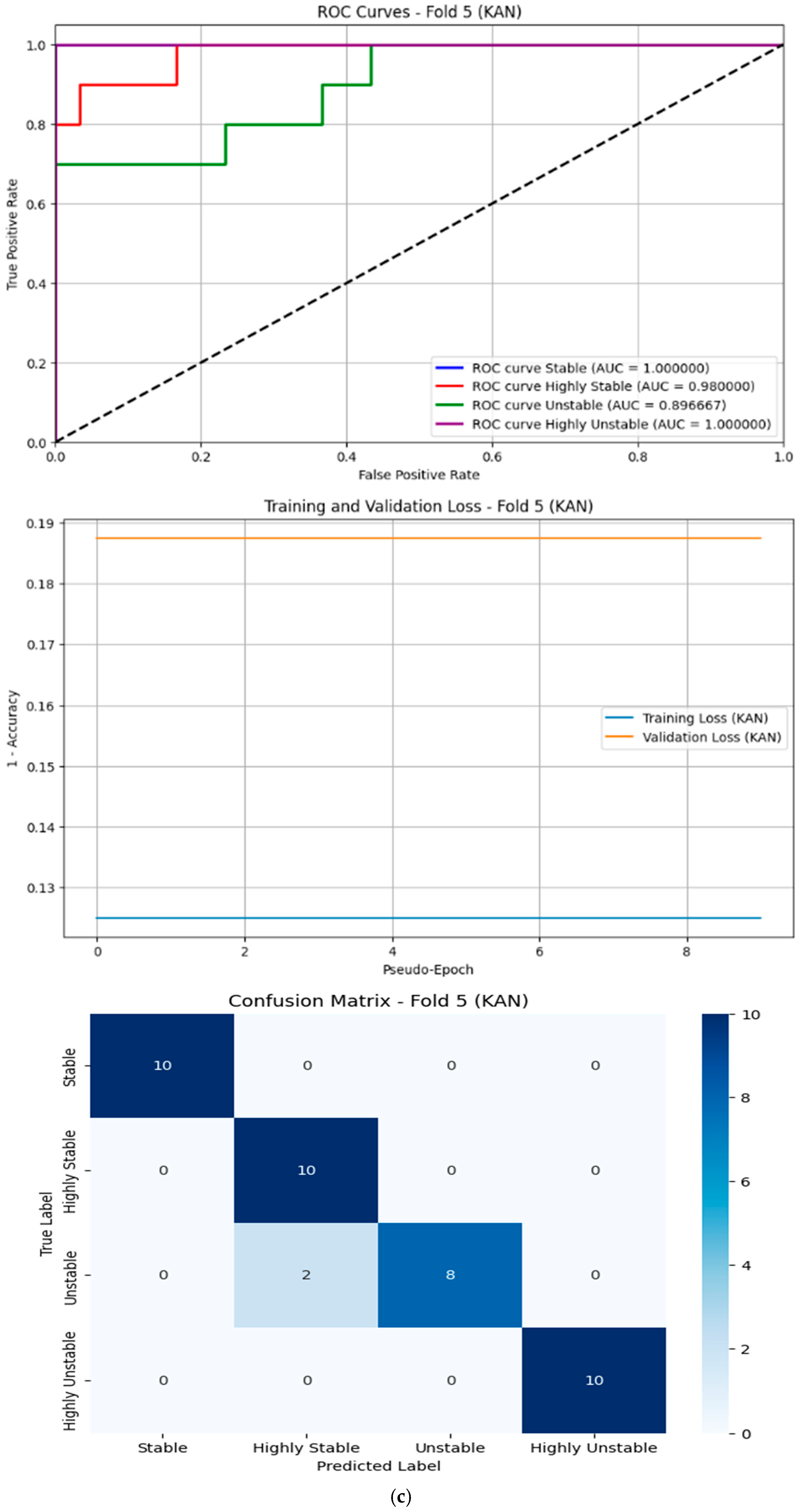

- KAN:

- Resulted in an accuracy of 88.8% and an F1-Score of 86.63%.

5.1.8. Results of Traditional Machine Learning Models

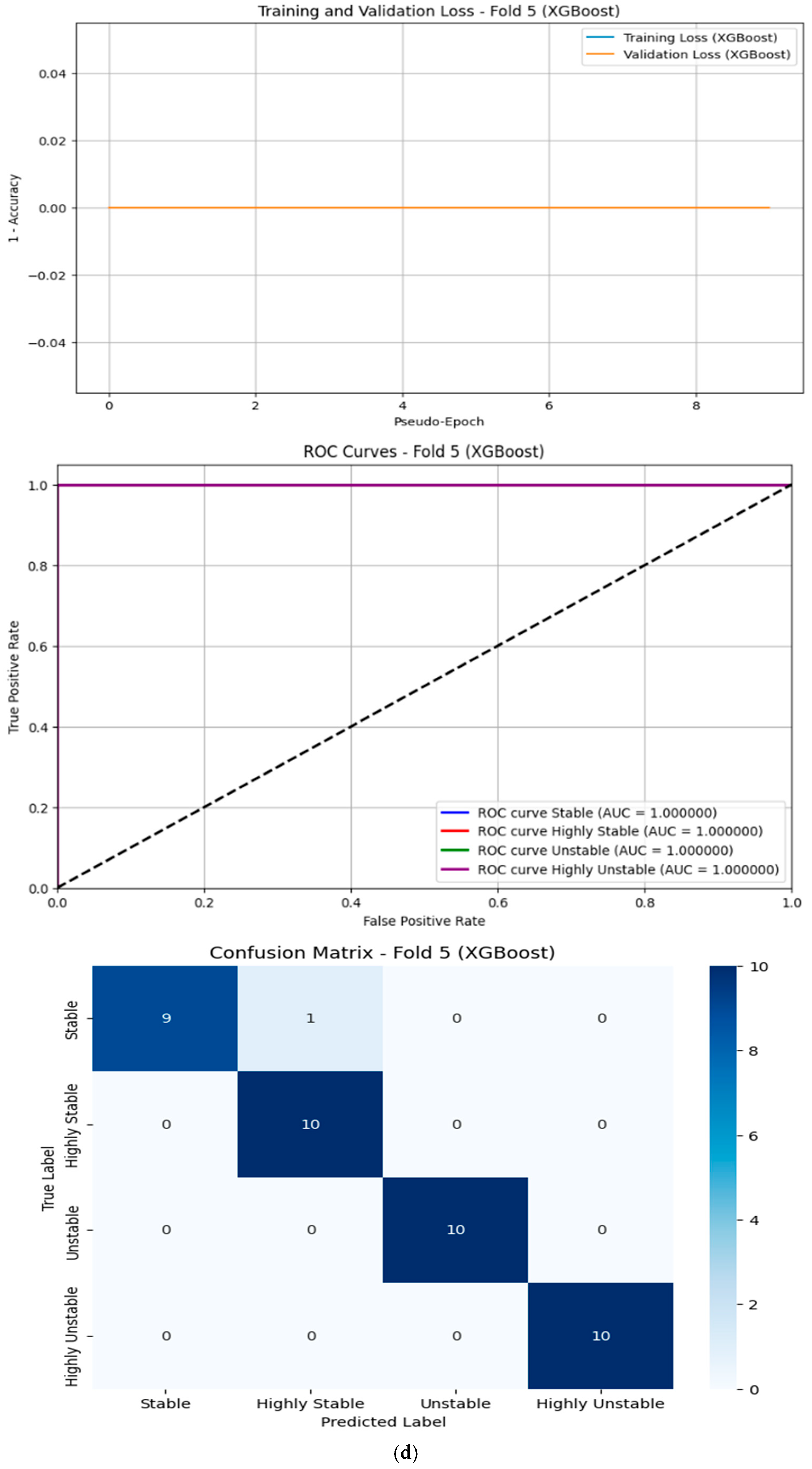

- XGBoost:

- Performed well, with an accuracy of 98.00% and an F1-Score of 97.99%.

- XGBoost is known for its efficiency and its ability to handle imbalanced datasets. Its scores were slightly lower than those of the Transformer and CNN models but well above those of the KAN.

6. Dataset Characteristics

7. Gesture-Based Stability Classification for Rehabilitation

7.1. Establishing a Baseline Stability

7.2. Designing Personalized Exercise Programs

- Smoothness Improvement: slow, controlled hand gestures executed with minimal deviation.

- Consistency Improvement: rhythmic alternation between Up and Down gestures.

- Speed Improvement: gradual increase in the pace of gesture execution over time.

7.3. Tracking Progress over Time

- Improvement: a shift from “Unstable” to “Stable” or “Highly Stable” indicates significant progress, warranting increased exercise difficulty.

- Plateau or Decline: persistent “Unstable” or “Highly Unstable” classifications necessitate re-evaluation and modification of the program.

7.4. Providing Real-Time Feedback

7.5. Gamifying the Rehabilitation Process

7.6. Collaborating with Healthcare Providers

7.7. Long-Term Monitoring and Maintenance

7.8. Enhancing Functionality with Additional Sensors

Benefits of Gesture-Based Stability Classification for Rehabilitation

- Personalization: tailored exercises address individual needs and weaknesses based on objective data, including stability classification.

- Engagement: gamification and real-time feedback maintain motivation and adherence, helping users achieve higher stability classifications.

- Accuracy: smoothness, consistency, and speed-based classifications provide quantitative measures of progress.

- Flexibility: the system supports remote use and complements in-person therapy, expanding accessibility.

- Cost-Effectiveness: reduced need for frequent in-person visits lowers costs while maintaining high-quality care.

8. Conclusions and Future Work

- Expanding Stability Assessment Parameters: adding accelerometer measurements to analyze the speed, range of motion, and tremor patterns for a more comprehensive stability evaluation.

- Validation on Diverse Populations: testing the system on larger and more varied groups, including elderly individuals and those with mobility impairments, to ensure its applicability across different demographics.

- Cloud Integration: developing a cloud-based platform for remote monitoring and data analysis to facilitate broader usage and accessibility.

- Real-Time Feedback Mechanisms: implementing feedback systems to assist users in improving their stability and preventing falls.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. World Health Organization. Falls. 2021. Available online: https://www.who.int/news-room/fact-sheets/detail/falls (accessed on 2 June 2025).

2. Berg, K.; Wood-Dauphinee, S.; Williams, J.; Maki, B. Measuring balance in the elderly: Validation of an instrument. Can. J. Public Health 1992, 83 (Suppl. 2), S7–S11.

3. Chandak, A.; Chaturvedi, N.; Dhiraj. Machine-Learning-Based Human Fall Detection Using Contact and Noncontact-Based Sensors. Comput. Intell. Neurosci. 2022, 2022, 9626170.

4. Jefiza, A.; Pramunanto, E.; Boedinoegroho, H.; Purnomo, M.H. Fall detection based on accelerometer and gyroscope using back propagation. In Proceedings of the 4th International Conference on Electrical Engineering, Computer Science and Informatics (EECSI), Yogyakarta, Indonesia, 19–21 September 2017; pp. 1–6.

5. Prieto, T.; Myklebust, J.; Hoffmann, R.; Lovett, E.; Myklebust, B. Measures of postural steadiness: Differences between healthy young and elderly adults. IEEE Trans. Biomed. Eng. 1996, 43, 956–966.

6. Quijoux, F.; Nicolaï, A.; Chairi, I.; Bargiotas, I.; Ricard, D.; Yelnik, A.; Oudre, L.; Bertin-Hugault, F.; Vidal, P.-P.; Vayatis, N.; et al. A review of center of pressure (COP) variables to quantify standing balance in elderly people. Physiol. Rep. 2021, 9, e15067.

7. Raymakers, J.; Samson, M.; Verhaar, H. The assessment of body sway and the choice of the stability parameter(s). Gait Posture 2005, 21, 48–58.

8. Nashner, L.; Black, F.; Wall, C., III. Adaptation to altered support and visual conditions during stance: Patients with vestibular deficits. J. Neurosci. 1982, 2, 536–544.

9. Peterka, R. Sensorimotor integration in human postural control. J. Neurophysiol. 2002, 88, 1097–1118.

10. Moe-Nilssen, R.; Helbostad, J. Estimation of gait cycle characteristics by trunk accelerometry. J. Biomech. 2004, 37, 121–126.

11. Mancini, M.; Horak, F. The relevance of clinical balance assessment tools to differentiate balance deficits. Eur. J. Phys. Rehabil. Med. 2010, 46, 239–248.

12. Tsebesebe, N.; Mpofu, K.; Sivarasu, S.; Mthunzi-Kufa, P. Arduino-based devices in healthcare and environmental monitoring. Discov. Internet Things 2025, 5, 64.

13. Sabatini, A. Inertial Sensing in Biomechanics: A Survey of Computational Techniques Bridging Motion Analysis and Personal Navigation. In Computational Intelligence for Movement Sciences: Neural Networks and Other Emerging Techniques; IGI Global: Hershey, PA, USA, 2006; pp. 70–100.

14. Roetenberg, D.; Luinge, H.; Slycke, P. Xsens MVN: Full 6DOF Human Motion Tracking Using Miniature Inertial Sensors; Technical Report 3; Xsens Motion Technologies BV: Enschede, The Netherlands, 2009.

15. Patel, S.; Park, P.; Bonato, P.; Chan, L.; Rodgers, M. A review of wearable sensors and systems with application in rehabilitation. J. Neuroeng. Rehabil. 2012, 9, 21.

16. Menz, H.; Lord, L.; Fitzpatrick, R. Acceleration patterns of the head and pelvis when walking on level and irregular surfaces. Gait Posture 2003, 18, 35–46.

17. Rispens, S.; Pijnappels, M.; van Schooten, K.; Beek, P.; Daffertshofer, A.; van Dieën, J. Consistency of gait characteristics as determined from acceleration data collected at different trunk locations. Gait Posture 2014, 40, 187–192.

18. Forth, K.E.; Wirfel, K.L.; Adams, S.D.; Rianon, N.J.; Lieberman Aiden, E.; Madansingh, S.I. A Postural Assessment Utilizing Machine Learning Prospectively Identifies Older Adults at a High Risk of Falling. Front. Med. 2020, 7, 591517.

19. Howcroft, J.; Kofman, J.; Lemaire, E. Review of fall risk assessment in geriatric populations using inertial sensors. J. Neuroeng. Rehabil. 2013, 10, 91.

20. Shany, T.; Wang, K.; Liu, Y.; Lovell, N.H.; Redmond, S.J. Review: Are we stumbling in our quest to find the best predictor? Over-optimism in sensor-based models for predicting falls in older adults. Healthc. Technol. Lett. 2015, 2, 79–88.

21. Lockhart, T.; Liu, J. Differentiating fall-prone and healthy adults using local dynamic stability. Ergonomics 2008, 51, 1860–1872.

22. Giggins, O.M.; Sweeney, K.T.; Caulfield, B. Rehabilitation exercise assessment using inertial sensors: A cross-sectional analytical study. J. NeuroEng. Rehabil. 2014, 11, 158.

23. Zhang, S.; Li, S.; Zhang, S.; Shahabi, F.; Xia, S.; Deng, Y.; Alshurafa, N. Deep Learning in Human Activity Recognition with Wearable Sensors: A Review on Advances. Sensors 2022, 22, 1476.

24. Uddin, M.Z.; Soylu, A. Human activity recognition using wearable sensors, discriminant analysis, and long short-term memory-based neural structured learning. Sci. Rep. 2021, 11, 16455.

25. Hammerla, N.; Halloran, S.; Ploetz, T. Deep, convolutional, and recurrent models for human activity recognition using wearables. In Proceedings of the IJCAI’16: 25th International Joint Conference on Artificial Intelligence, New York, NY, USA, 9–15 July 2016; pp. 1533–1540.

26. Abdelkhalik, A. Assessment of elderly awareness regarding Balance Disorders and Falls Prevention. Helwan Int. J. Nurs. Res. Pract. 2023, 2, 145–159.

27. Bogle, T.; Newton, R. Use of the Berg Balance Scale to predict falls in elderly persons. Phys. Ther. 1996, 76, 576–585.

28. Steffen, T.; Hacker, T.; Mollinger, L. Age- and gender-related test performance in community-dwelling elderly people: Six-Minute Walk Test, Berg Balance Scale, Timed Up & Go Test, and gait speeds. Phys. Ther. 2002, 82, 128–137.

29. Podsiadlo, D.; Richardson, S. The timed “Up & Go”: A test of basic functional mobility for frail elderly persons. J. Am. Geriatr. Soc. 1991, 39, 142–148.

30. Shumway-Cook, A.; Brauer, S.; Woollacott, M. Predicting the probability for falls in community-dwelling older adults using the Timed Up & Go Test. Phys. Ther. 2000, 80, 896–903.

31. Bischoff, H.; Stähelin, H.; Monsch, A.; Iversen, M.; Weyh, A.; von Dechend, M.; Akos, R.; Conzelmann, M.; Dick, W.; Theiler, R. Identifying a cut-off point for normal mobility: A comparison of the timed ‘up and go’ test in community-dwelling and institutionalised elderly women. Age Ageing 2003, 32, 315–320.

32. Costa, M.; Peng, C.-K.; Goldberger, A.; Hausdorff, J. Multiscale entropy analysis of human gait dynamics. Phys. A 2005, 330, 53–60.

33. Richman, J.; Moorman, J. Physiological time-series analysis using approximate entropy and sample entropy. Am. J. Physiol. Heart Circ. Physiol. 2000, 278, H2039–H2049.

34. Manor, B.; Costa, M.; Hu, K.; Newton, E.; Starobinets, O.; Kang, H.; Peng, C.-K.; Novak, V.; Lipsitz, L. Physiological complexity and system adaptability: Evidence from postural control dynamics of older adults. J. Appl. Physiol. 2010, 109, 1786–1791.

35. Stone, E.; Skubic, M. Fall detection in homes of older adults using the Microsoft Kinect. IEEE J. Biomed. Health Inf. 2015, 19, 290–301.

36. Fan, X.; Zhang, H.; Leung, C.; Shen, Z. Robust unobtrusive fall detection using infrared array sensors. In Proceedings of the 2017 IEEE International Conference on Multisensor Fusion and Integration for Intelligent Systems (MFI), Daegu, Republic of Korea, 16–18 November 2017; pp. 194–199.

37. Gasparrini, S.; Cippitelli, E.; Spinsante, S.; Gambi, E. A depth-based fall detection system using a Kinect® sensor. Sensors 2014, 14, 2756–2775.

38. Attal, F.; Mohammed, S.; Dedabrishvili, M.; Chamroukhi, F. Physical human activity recognition using wearable sensors. Sensors 2015, 15, 31314–31338.

39. Lara, O.; Labrador, M. A survey on human activity recognition using wearable sensors. IEEE Commun. Surv. Tutor. 2013, 15, 1192–1209.

40. Yang, C.-C.; Hsu, Y.-L. A review of accelerometry-based wearable motion detectors for physical activity monitoring. Sensors 2010, 10, 7772–7788.

41. Tinetti, M. Performance-oriented assessment of mobility problems in elderly patients. J. Am. Geriatr. Soc. 1986, 34, 119–126.

42. Kegelmeyer, D.; Kloos, A.; Thomas, K.; Kostyk, S. Reliability and validity of the Tinetti Mobility Test for individuals with Parkinson disease. Phys. Ther. 2007, 87, 1369–1378.

43. Duncan, P.; Weiner, D.; Chandler, J.; Studenski, S. Functional reach: A new clinical measure of balance. J. Gerontol. 1990, 45, M192–M197.

44. Weiner, D.; Duncan, P.; Chandler, J.; Studenski, S. Functional reach: A marker of physical frailty. J. Am. Geriatr. Soc. 1993, 41, 101–104.

45. Nooruddin, S.; Islam, M.; Sharna, F.; Alhetari, H.; Kabir, M. Sensor-based fall detection systems: A review. J. Ambient. Intell. Hum. Comput. 2022, 13, 2735–2751.

46. Gravity: Gesture & Touch Sensor (UART, 7 Gestures, 0~30 cm). Available online: https://www.dfrobot.com/product-1898.html?srsltid=AfmBOorkuqAS9Qv4bloeSWgFMZOuRzFgc9QoaZkCH8uJJ8V6mbLE2oak (accessed on 2 June 2025).

47. Olsson, T. Arduino Wearables, 1st ed.; Apress: New York, NY, USA, 2012.

48. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Polosukhin, I. Attention is all you need. In Proceedings of the NIPS’17: 31st International Conference on Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017.

49. Kim, B.J.; Mun, J.H.; Hwang, D.H.; Suh, D.I.; Lim, C.; Kim, K. An explainable and accurate transformer-based deep learning model for wheeze classification utilizing real-world pediatric data. Sci. Rep. 2025, 15, 5656.

50. Alzubaidi, L.; Zhang, J.; Humaidi, A.; Al-Dujaili, A.; Duan, Y.; Al-Shamma, O.; Santamaría, J.; Fadhel, M.; Al-Amidie, M.; Farhan, L. Review of deep learning: Concepts, CNN architectures, challenges, applications, future directions. J. Big Data 2021, 8, 53.

51. Ayeni, J. Convolutional Neural Network (CNN): The architecture and applications. Appl. J. Phys. Sci. 2022, 4, 42–50.

52. Liu, Z.; Wang, Y.; Vaidya, S.; Ruehle, F.; Halverson, J.; Soljacic, M.; Hou, T.; Tegmark, M. KAN: Kolmogorov–Arnold Networks. In Proceedings of the International Conference on Learning Representations (ICLR), Singapore, 24–28 April 2025.

53. Giannakas, F.; Troussas, C.; Krouska, A.; Sgouropoulou, C.; Voyiatzis, I. XGBoost and Deep Neural Network Comparison: The Case of Teams’ Performance. In Proceedings of the 17th International Conference on Intelligent Tutoring Systems (ITS 2021), Virtual Event, 7–11 June 2021; Springer: Cham, Switzerland; pp. 343–349.

54. Hogan, N.; Sternad, D. Sensitivity of Smoothness Measures to Movement Duration, Amplitude, and Arrests. J. Mot. Behav. 2009, 41, 529–534.

55. Rohrer, B.; Fasoli, S.; Krebs, H.; Hughes, R.; Volpe, B.; Frontera, W.; Hogan, N. Movement smoothness changes during stroke recovery. J. Neurosci. 2002, 22, 8297–8304.

56. Stergiou, N.; Decker, L. Human movement variability, nonlinear dynamics, and pathology: Is there a connection? Hum. Mov. Sci. 2011, 30, 869–888.

57. Mai, Y.; Sheng, Z.; Shi, H.; Liao, Q. Using improved XGBoost algorithm to obtain modified atmospheric refractive index. Int. J. Antennas Propag. 2021, 1, 5506599.

58. Xu, K.; Chen, L.; Wang, S. Kolmogorov-Arnold Networks for Time Series: Bridging Predictive Power and Interpretability. arXiv 2024, arXiv:2406.02496.

| Method | Sensors | Key References | Pros | Cons |
|---|---|---|---|---|
| Force Platform-Based Methods | | | | |
| Center of Pressure (COP) Analysis | Force plates, pressure sensors | [5,6,7] | | |
| Static Posturography | Multi-axis force platforms | [8,9] | | |
| Inertial Measurement Unit (IMU)-Based Methods | | | | |
| Accelerometer-Based Stability | Tri-axial accelerometers | [10,11,12] | | |
| Multi-Sensor IMU Systems | Accelerometers, gyroscopes, magnetometers | [13,14,15] | | |
| Pendant-Mounted Systems | Single IMU at center of mass | [16,17] | | |
| Machine Learning Classification Approaches | | | | |
| Supervised Learning (SVM, RF, k-NN) | Accelerometers, gyroscopes | [18,19,20] | | |
| Unsupervised Learning (k-Means, GMM, HMM) | Inertial sensors | [21,22] | | |
| Deep Learning Approaches | Multi-modal sensor data | [23,24,25] | | |
| Observational and Clinical Methods | | | | |
| Berg Balance Scale | Visual observation | [26,27,28] | | |
| Timed Up and Go (TUG) | Stopwatch, optional IMU | [29,30,31] | | |
| Multiscale Entropy (MSE) Analysis | | | | |
| Complexity Analysis | Force plates, accelerometers | [32,33,34] | | |
| Emerging Technologies | | | | |
| Computer Vision Systems | RGB cameras, depth sensors | [35,36,37] | | |
| Hybrid Sensor Systems | IMU + cameras + pressure sensors | [38,39,40] | | |
| Additional Clinical Assessment Tools | | | | |
| Tinetti Performance-Oriented Mobility Assessment (POMA) | Visual observation | [41,42] | | |
| Functional Reach Test | Measuring ruler | [43,44] | | |

| Model Name | Key Components/Stages |

|---|---|

| CNN | 1. Input reshaped to [batch, 1, features] for 1D processing. 2. Two Convolutional Blocks (Conv1D, BatchNorm, ReLU, MaxPool) for feature extraction. 3. Flattening of feature maps. 4. Fully Connected classification head (Linear, ReLU, Dropout, Linear output). |

| Transformer | 1. Input reshaped to [batch, 1, features] (sequence length 1). 2. Linear projection maps input features to d_model embedding space. 3. Stacked Transformer Encoder layers (Multi-Head Self-Attention, Feed-Forward Network, LayerNorm, Residuals) applied to the single sequence element. 4. Output representation extracted. 5. Classifier head (LayerNorm, MLP, Linear output). |

| KAN | 1. Fixed non-linear feature expansion using polynomial basis functions (up to degree 3). 2. Single linear layer maps expanded features directly to class outputs. 3. Training via one-shot pseudoinverse calculation, not iterative gradient descent. |

| XGBoost | 1. Ensemble of decision trees built sequentially (Gradient Boosting). 2. Each new tree fits the gradient of the loss w.r.t. the previous ensemble’s predictions. 3. Includes L1/L2 regularization on leaf weights. 4. Uses efficient histogram-based algorithm for finding splits. |
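
The KAN row in the table above describes a fixed polynomial feature expansion followed by a single linear layer fitted with a pseudoinverse. A minimal NumPy sketch of that idea is given below; expanding each feature separately (no cross terms), the one-hot target encoding, and the function names are assumptions rather than details confirmed by the article.

```python
import numpy as np


def expand(X, degree=3):
    """Per-feature polynomial basis [1, x, x^2, x^3] with no cross terms."""
    X = np.asarray(X, dtype=float)
    powers = [X ** d for d in range(1, degree + 1)]
    return np.hstack([np.ones((X.shape[0], 1))] + powers)


def fit(X, y, n_classes, degree=3):
    """One-shot fit of the linear output weights via the pseudoinverse."""
    targets = np.eye(n_classes)[np.asarray(y)]  # one-hot class labels
    return np.linalg.pinv(expand(X, degree)) @ targets


def predict(X, weights, degree=3):
    """Pick the class with the largest linear output."""
    return np.argmax(expand(X, degree) @ weights, axis=1)
```

Because the weights come from a closed-form least-squares solution, training involves no epochs or learning rate, which is consistent with the "not iterative gradient descent" note in the table.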

| Features and Their PSI | Low Stability Case | High Stability Case |

|---|---|---|

| Smoothness Score | 96.89 | 94.79 |

| Consistency Score | 98.85 | 99.21 |

| Speed Score | 71.00 | 82.00 |

| Physical Stability Index (PSI) | 88.91 | 92.00 |

| PSI Range | Classification | Physical Condition Indicators |
|---|---|---|
| >90 | Highly Stable | Optimal Performance |
| 76–90 | Stable | Good Performance with Minor Issues |
| 60–75 | Unstable | Moderate Impairments |
| <60 | Highly Unstable | Significant Impairments |
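
The PSI ranges in this table translate into a simple threshold function. The table leaves non-integer values between 75 and 76 unassigned; the sketch below assumes that they fall into the “Stable” class, and the function name is illustrative.

```python
def classify_psi(psi):
    """Map a PSI value (0-100) to one of the four stability classes."""
    if psi > 90:
        return "Highly Stable"
    if psi > 75:          # assumption: 75 < PSI < 76 counts as Stable
        return "Stable"
    if psi >= 60:
        return "Unstable"
    return "Highly Unstable"
```

Applied to the two PSI values reported in the results table of this article, the function returns “Stable” for 88.91 and “Highly Stable” for 92.00.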

| Model | Recall | Accuracy | Precision | F1-Score |

|---|---|---|---|---|

| Transformer | 0.9950 | 0.9950 | 0.9950 | 0.9949 |

| CNN | 0.9900 | 0.9903 | 0.9900 | 0.9899 |

| KAN | 0.8700 | 0.8880 | 0.8700 | 0.8663 |

| XGBoost | 0.9800 | 0.9800 | 0.9800 | 0.9799 |

| Expert Role | How They Use the Classification |

|---|---|

| Physiatrists (Physical Medicine and Rehabilitation Physicians) | They interpret the stability levels to guide overall rehabilitation planning, adjust medical management, and coordinate the rehabilitation team. |

| Physical Therapists | They use the classifications to design balance-training protocols, gait re-education, and fall-prevention programs, scaling exercise intensity according to the patient’s stability level. |

| Occupational Therapists | They integrate the stability ratings into ADL (activities of daily living) training, ensuring that tasks such as reaching or transferring are matched to the patient’s current stability status. |

| Rehabilitation Engineers/Assistive-technology Specialists | They embed the gesture-based stability classifier into smart exoskeletons or wearable devices (e.g., EMG-driven gloves) so that mechanical assistance is automatically modulated in real time. |

| Neurologists and Stroke-specialist Nurses | In post-stroke programs, they monitor stability trends over time and adjust pharmacologic or nursing interventions to minimize fall risk. |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Tolba, S.; Raafat, H.; Tolba, A.S. Gesture-Based Physical Stability Classification and Rehabilitation System. Sensors 2025, 25, 6098. https://doi.org/10.3390/s25196098
