2.2. Algorithm Principle of VMD

Variational Mode Decomposition (VMD) is a signal processing technique that decomposes a complex signal into sub-signal modes with distinct center frequencies. Introduced by Konstantin Dragomiretskiy and Dominique Zosso in 2014, VMD was developed to overcome the limitations of Empirical Mode Decomposition (EMD) [29]. VMD offers a robust approach to handling nonlinear and non-stationary signals. It is an adaptive, non-recursive modal decomposition model whose core idea is to recast signal decomposition as a variational problem: the intrinsic mode functions (IMFs) are obtained by minimizing an energy functional.

The objective of VMD is to decompose the original signal into a series of band-limited, compact modal components that are determined adaptively from the data. These components have well-defined center frequencies and minimized bandwidths, which reduces spectral overlap between modes. They also exhibit favorable local characteristics and reconstructability.

To avoid the mode mixing that may occur during signal decomposition, VMD abandons the recursive sifting that EMD uses to compute IMFs and instead adopts a fully non-recursive decomposition strategy in which all modes are estimated concurrently. Compared with conventional signal decomposition algorithms, VMD offers a non-recursive solution and explicit control over the number of modes. In contrast to EMD, VMD defines each IMF as an amplitude-modulated, frequency-modulated (AM-FM) function:

$$u_k(t) = A_k(t)\cos\big(\phi_k(t)\big) \tag{1}$$

where the instantaneous phase $\phi_k(t)$ is a non-decreasing function, that is, $\phi_k'(t) \ge 0$, and $A_k(t) \ge 0$ is the envelope amplitude of the signal $u_k(t)$.

Suppose the original signal s is composed of K components, each with a limited bandwidth. The center frequency of each IMF is denoted by $\omega_k$. The decomposition seeks modes whose bandwidths sum to a minimum, subject to the constraint that the sum of all modes equals the original signal. The VMD algorithm can therefore be interpreted as formulating and solving a variational problem. The detailed steps are as follows:

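(1) The analytic signal of each mode $u_k(t)$ is obtained by the Hilbert transform [34] and its one-sided spectrum is calculated. The spectrum of $u_k(t)$ is then shifted to baseband around its estimated center frequency $\omega_k$ by multiplication with the exponential operator $e^{-j\omega_k t}$:

$$\left[\left(\delta(t) + \frac{j}{\pi t}\right) * u_k(t)\right] e^{-j\omega_k t} \tag{2}$$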

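(2) Calculate the squared $L^2$ norm of the gradient of the demodulated signal to estimate the bandwidth of each modal component. The constrained variational model is:

$$\min_{\{u_k\},\{\omega_k\}} \left\{ \sum_{k=1}^{K} \left\| \partial_t \left[ \left( \delta(t) + \frac{j}{\pi t} \right) * u_k(t) \right] e^{-j\omega_k t} \right\|_2^2 \right\} \quad \text{s.t.} \quad \sum_{k=1}^{K} u_k(t) = s(t) \tag{3}$$

In the above formula, $u_k$ represents each IMF component after decomposition, and $\omega_k$ is the center frequency of the corresponding component.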

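To solve the constrained variational problem, it is transformed into an unconstrained one by introducing the Lagrangian multiplier τ(t) and the quadratic penalty factor α. The augmented Lagrangian is:

$$L(\{u_k\},\{\omega_k\},\tau) = \alpha \sum_{k=1}^{K} \left\| \partial_t \left[ \left( \delta(t) + \frac{j}{\pi t} \right) * u_k(t) \right] e^{-j\omega_k t} \right\|_2^2 + \left\| s(t) - \sum_{k=1}^{K} u_k(t) \right\|_2^2 + \left\langle \tau(t),\; s(t) - \sum_{k=1}^{K} u_k(t) \right\rangle \tag{4}$$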

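(3) The Alternating Direction Method of Multipliers (ADMM) [26] is then used to update each IMF in turn until the optimal solution of the original problem is reached. Each IMF is updated in the frequency domain by:

$$\hat{u}_k^{n+1}(\omega) = \frac{\hat{s}(\omega) - \sum_{i \ne k} \hat{u}_i(\omega) + \hat{\tau}(\omega)/2}{1 + 2\alpha(\omega - \omega_k)^2} \tag{5}$$

where ω represents the frequency, and $\hat{u}_k^{n+1}(\omega)$, $\hat{s}(\omega)$, $\hat{\tau}(\omega)$ are the Fourier transforms of $u_k^{n+1}(t)$, $s(t)$, $\tau(t)$, respectively.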

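(4) $\hat{u}_k^{n+1}(\omega)$ is the result of Wiener filtering the residual $\hat{s}(\omega) - \sum_{i \ne k} \hat{u}_i(\omega)$. The algorithm re-estimates each center frequency as the center of gravity of the power spectrum of the corresponding IMF:

$$\omega_k^{n+1} = \frac{\int_0^{\infty} \omega \left| \hat{u}_k^{n+1}(\omega) \right|^2 d\omega}{\int_0^{\infty} \left| \hat{u}_k^{n+1}(\omega) \right|^2 d\omega} \tag{6}$$

The specific process is: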

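(1) Initialize $\{\hat{u}_k^1\}$, $\{\omega_k^1\}$, $\hat{\tau}^1$, and n;

(2) Execution cycle: n = n + 1;

(3) For ω ≥ 0, update $\hat{u}_k$ according to Formula (5);

(4) Update $\omega_k$ according to Formula (6);

(5) Update $\hat{\tau}$ according to Equation (7), where β is the noise tolerance parameter; when the signal contains strong noise, β = 0 can be set to achieve a better denoising effect:

$$\hat{\tau}^{n+1}(\omega) = \hat{\tau}^{n}(\omega) + \beta \left( \hat{s}(\omega) - \sum_{k=1}^{K} \hat{u}_k^{n+1}(\omega) \right) \tag{7}$$

(6) Repeat steps (2) through (5) until the stopping condition is met:

$$\sum_{k=1}^{K} \frac{\left\| \hat{u}_k^{n+1} - \hat{u}_k^{n} \right\|_2^2}{\left\| \hat{u}_k^{n} \right\|_2^2} < \varepsilon \tag{8}$$

where ε is the convergence tolerance; the K modal components are output once the condition is satisfied.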
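The update rules referenced as Formulas (5) to (7) can be sketched on a discretized one-sided spectrum. This is a minimal illustration rather than a full VMD implementation: the function names, the plain-list spectrum representation, the toy two-peak spectrum, and the values of α and β are all assumptions chosen for the example, and the standard VMD update forms are assumed.

```python
def update_mode(s_hat, u_hats, tau_hat, k, omega, omega_k, alpha):
    """Wiener-filter update of mode k on the frequency grid `omega` (Formula (5))."""
    new = []
    for i, w in enumerate(omega):
        others = sum(u[i] for j, u in enumerate(u_hats) if j != k)
        residual = s_hat[i] - others + tau_hat[i] / 2
        new.append(residual / (1 + 2 * alpha * (w - omega_k) ** 2))
    return new


def update_center(u_hat, omega):
    """Center of gravity of the mode's power spectrum (Formula (6))."""
    power = [abs(u) ** 2 for u in u_hat]
    return sum(w * p for w, p in zip(omega, power)) / sum(power)


def update_multiplier(s_hat, u_hats, tau_hat, beta):
    """Dual ascent on the multiplier (Formula (7)); beta = 0 leaves it unchanged."""
    return [t + beta * (s - sum(u[i] for u in u_hats))
            for i, (s, t) in enumerate(zip(s_hat, tau_hat))]


# Toy one-sided spectrum (assumed data): peaks at normalized frequencies 0.1 and 0.3.
omega = [i / 100 for i in range(51)]
s_hat = [0j] * len(omega)
s_hat[10] = 1 + 0j
s_hat[30] = 0.5 + 0j

K, alpha, beta = 2, 2000.0, 0.0
u_hats = [[0j] * len(omega) for _ in range(K)]
tau_hat = [0j] * len(omega)
centers = [0.05, 0.35]  # rough initial guesses for the center frequencies

for _ in range(20):
    for k in range(K):
        u_hats[k] = update_mode(s_hat, u_hats, tau_hat, k, omega, centers[k], alpha)
        centers[k] = update_center(u_hats[k], omega)
    tau_hat = update_multiplier(s_hat, u_hats, tau_hat, beta)
```

On this toy spectrum the two center-frequency estimates move from their initial guesses toward the two spectral peaks, which is the behavior the center-of-gravity update is meant to produce. A fixed iteration count is used here in place of the ε-based stopping rule to keep the sketch short.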

2.3. Arithmetic Optimization Algorithm (AOA)

The Arithmetic Optimization Algorithm (AOA) is an optimization method inspired by arithmetic operations, proposed by Abualigah et al. in 2021 [35]. The algorithm uses the four basic arithmetic operations (addition, subtraction, multiplication, and division) as its search mechanism for exploring the solution space of an optimization problem. Compared with traditional heuristic algorithms, the AOA has strong global search capability and balances the exploration and exploitation stages through adaptive parameter adjustment, which improves convergence speed and reduces the risk of falling into local optima. In addition, AOA lends itself to parallel computing and is suitable for solving a wide range of complex optimization problems.

The design concept of the AOA algorithm embodies the intuition and simplicity of mathematical operations, resulting in fewer parameters, easy implementation, and high computational efficiency. Moreover, due to its structure that naturally supports parallel processing, the AOA shows good scalability and flexibility when dealing with large-scale complex optimization problems. This algorithm is applicable to various fields, including but not limited to engineering design optimization, machine learning model tuning, image and signal processing, combinatorial optimization problems, and feature selection. Its efficiency and stability demonstrated in practical applications have promoted the development trend of intelligent optimization algorithms, making it an increasingly favored tool among researchers and engineers.

The AOA first randomly initializes a set of candidate solutions in the solution space. The position of each solution corresponds to a potential solution to the optimization problem, and its quality is evaluated through a fitness function. The level of the fitness value directly reflects the quality of the solution, and the algorithm continuously optimizes the distribution of these solutions through iteration.

In the search process, the AOA adopts a dynamic update mechanism based on arithmetic operations, combining the two stages of exploration and exploitation to balance the search process between global and local. The exploration stage expands the coverage of solutions through random search to avoid falling into local optima, while the exploitation stage finely adjusts the individual positions near the current optimal solution to improve convergence accuracy. The search strategy is dynamically adjusted by control parameters to adapt to the characteristics of different optimization problems.

After each iteration, the population re-evaluates the quality of the solutions according to the fitness function and updates the current optimal solution. Through continuous optimization and adjustment, the AOA can effectively approach the global optimal solution while maintaining population diversity and enhancing search capabilities. This optimization strategy based on arithmetic operations enables the AOA to have high computational efficiency, stability, and robustness when dealing with complex optimization problems.

In the solution space, the AOA initializes a population containing multiple candidate solutions, where each individual is represented by a D-dimensional vector as a potential solution to the optimization problem. To evaluate the quality of these solutions, the AOA uses a predefined fitness function to calculate the fitness value of each individual, which measures how close the solution is to the optimal solution in the search space.

Fitness evaluation provides the optimization direction for the algorithm. By comparing the fitness values between individuals, the AOA dynamically adjusts the search strategy to balance global search and local exploitation. As the algorithm iterates, the population is continuously updated, so that individuals gradually converge to the optimal solution while maintaining diversity to avoid falling into local optima, thereby improving search efficiency and optimization accuracy. The AOA controls the search process through arithmetic operations. Compared with Particle Swarm Optimization (PSO) and the Genetic Algorithm (GA), it features fewer parameters and more direct calculation, and usually performs better in terms of convergence speed and global search performance [36].

The specific process of the AOA is as follows:

(1) Population parameter initialization

The optimization process first needs to set basic population parameters to formulate a preliminary plan for the entire search process. Parameter initialization defines the range of the search space, the number of candidate solutions, the maximum number of iterations, and the dynamic control factors used to balance global search and local exploitation, providing basic conditions for subsequent searches.

First, the AOA generates an initial population drawn from a uniform distribution. The initial population is obtained by the following formula:

$$X(i, j) = \mathrm{Rand} \times (Ub - Lb) + Lb$$

where Ub is the upper bound, Lb is the lower bound, Rand is a random number in [0, 1], and X(i, j) is the position of the i-th solution in the j-th dimension.
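The uniform initialization can be written in a few lines. The sketch below is illustrative only: the function name, the per-dimension bound lists, and the numeric bounds are assumptions and are not taken from the paper.

```python
import random


def init_population(n_solutions, lb, ub, rng=random):
    """X(i, j) = Rand * (Ub_j - Lb_j) + Lb_j, with Rand uniform in [0, 1)."""
    return [[rng.random() * (u - l) + l for l, u in zip(lb, ub)]
            for _ in range(n_solutions)]


random.seed(0)
lb, ub = [2.0, 100.0], [10.0, 5000.0]  # illustrative bounds only
population = init_population(5, lb, ub)
```

Each row of `population` is one candidate solution, and every coordinate lies between its lower and upper bound by construction.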

(2) Math Optimizer Accelerated function

The Math Optimizer Accelerated (MOA) function in the Arithmetic Optimization Algorithm is a key mechanism for dynamically adjusting the search strategy. It balances global exploration and local exploitation, maintaining a high degree of search dispersion in the early stage and gradually reducing the search step size in the later stage to achieve fine optimization. MOA is generally calculated as:

$$MOA(t) = Min + t \times \frac{Max - Min}{T}$$

where Min and Max are the minimum and maximum values of the acceleration function, T is the maximum number of iterations, and t is the current iteration. The function grows linearly over the iterations. While its value is low in the early stage, the algorithm favors high-dispersion operators such as multiplication and division to achieve global exploration. As t increases, MOA gradually increases, and the algorithm tends to use addition or subtraction to reduce the search step size and strengthen local exploitation.

A random number R1 in [0, 1] controls which stage the algorithm executes: when R1 > MOA(t), the algorithm enters the global exploration stage; otherwise, it enters the local exploitation stage. Because MOA(t) increases with t, exploration becomes progressively less likely and exploitation progressively more likely.

This strategy based on the mathematical function accelerator enables AOA to adaptively switch from rough search to fine optimization, effectively improving the convergence speed and reducing the risk of falling into local optima. Through the dynamic adjustment of MOA, the algorithm ensures the breadth of global search while being able to conduct detailed exploration of the solution space in the later stage, thereby improving the solution accuracy and overall performance.
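The switching behavior can be checked numerically. The sketch below assumes the convention of the original AOA publication, in which exploration is selected when R1 exceeds MOA(t), so that exploration becomes rarer as MOA(t) grows. The defaults Min = 0.2 and Max = 1.0 and the function names are assumptions made for illustration.

```python
import random


def moa(t, T, moa_min=0.2, moa_max=1.0):
    """Linear Math Optimizer Accelerated value at iteration t of T."""
    return moa_min + t * (moa_max - moa_min) / T


def choose_phase(t, T, rng=random):
    """Return 'exploration' when R1 > MOA(t), otherwise 'exploitation'."""
    r1 = rng.random()
    return "exploration" if r1 > moa(t, T) else "exploitation"


random.seed(0)
T = 100
early = sum(choose_phase(0, T) == "exploration" for _ in range(1000)) / 1000
late = sum(choose_phase(T, T) == "exploration" for _ in range(1000)) / 1000
```

With these assumed settings, roughly four out of five draws at t = 0 select exploration, while at t = T the threshold equals 1 and exploration is never selected.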

(3) Global exploration of the algorithm

In the initial stage, a large-step update strategy is adopted to achieve global exploration, so that the search traverses the solution space widely and avoids falling into local optima early. At this stage, a multiplication or division strategy is chosen based on the random number R2. Both strategies have high dispersion, which helps solutions spread across the search space. The update formulas are as follows:

$$X(t+1) = \begin{cases} X_b(t) \div (MOP + \varepsilon) \times \big((Ub - Lb) \times \mu + Lb\big), & R_2 < 0.5 \\ X_b(t) \times MOP \times \big((Ub - Lb) \times \mu + Lb\big), & \text{otherwise} \end{cases}$$

where X(t + 1) is the position of the next-generation particle, $X_b(t)$ is the position of the particle with the best current fitness, μ is the search process control coefficient (generally set to 0.5), ε is a very small positive number that prevents division by zero, and MOP is the Math Optimizer Probability, calculated as:

$$MOP(t) = 1 - \frac{t^{1/\alpha}}{T^{1/\alpha}}$$

Here, MOP(t) is the current Math Optimizer Probability, and α is the iteration sensitivity coefficient (not to be confused with the VMD penalty factor α). A higher value of α means that the number of iterations has a greater impact on MOP(t). In the early stage of the algorithm (i.e., when t is small), the value of MOP is relatively high, which produces large multiplication and division steps. This strategy has high dispersion, which is conducive to global search. As t increases, the value of MOP gradually decreases, thereby reducing the search step size and enhancing the local exploitation ability. This mechanism helps prevent the algorithm from falling into local optima prematurely and improves the overall convergence accuracy and stability.
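A short sketch of the MOP schedule and one exploration step follows. It assumes the update rule of the original AOA publication (division when R2 < 0.5, multiplication otherwise). The sensitivity value 5, the clipping of out-of-range coordinates, the function names, and the example bounds are assumptions made for illustration.

```python
import random


def mop(t, T, alpha=5.0):
    """Math Optimizer Probability: 1 - t^(1/alpha) / T^(1/alpha)."""
    return 1.0 - t ** (1.0 / alpha) / T ** (1.0 / alpha)


def explore(best, lb, ub, t, T, mu=0.5, eps=1e-12, rng=random):
    """One exploration update around the best position, coordinate by coordinate."""
    m = mop(t, T)
    new = []
    for b, l, u in zip(best, lb, ub):
        scale = (u - l) * mu + l
        if rng.random() < 0.5:          # R2 < 0.5: division operator
            x = b / (m + eps) * scale
        else:                           # otherwise: multiplication operator
            x = b * m * scale
        new.append(min(max(x, l), u))   # clipping to the bounds is an assumption
    return new


random.seed(1)
candidate = explore([5.0, 2000.0], [2.0, 100.0], [10.0, 5000.0], t=10, T=100)
```

Because both operators scale the best position by a term proportional to the search range, the raw step is large and often lands outside the bounds, which is why some form of boundary handling is needed in practice.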

(4) Local exploitation of the algorithm

In the later stage of the search, the update step size is gradually reduced so that the current better solutions can be adjusted precisely. At this stage, local exploitation is carried out mainly through addition and subtraction strategies. Both strategies have low dispersion, which makes it easier to approach the target and helps the algorithm find the optimal solution faster. The update formulas are as follows:

$$X(t+1) = \begin{cases} X_b(t) - MOP \times \big((Ub - Lb) \times \mu + Lb\big), & R_3 < 0.5 \\ X_b(t) + MOP \times \big((Ub - Lb) \times \mu + Lb\big), & \text{otherwise} \end{cases}$$

where R3 is a random number within [0, 1].
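The exploitation step differs from exploration only in that the scaled term is added to or subtracted from the best position. The sketch below assumes the update rule of the original AOA publication. The function name, the clipping step, and the numeric values are illustrative assumptions.

```python
import random


def exploit(best, lb, ub, mop_value, mu=0.5, rng=random):
    """One exploitation update: subtract when R3 < 0.5, otherwise add."""
    new = []
    for b, l, u in zip(best, lb, ub):
        step = mop_value * ((u - l) * mu + l)
        x = b - step if rng.random() < 0.5 else b + step
        new.append(min(max(x, l), u))   # clipping to the bounds is an assumption
    return new


random.seed(2)
refined = exploit([5.0], [0.0], [10.0], mop_value=0.1)
```

In this example the step is 0.1 × 5 = 0.5, so the new coordinate is either 4.5 or 5.5. As MOP shrinks toward zero in late iterations, the step shrinks with it.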

(5) Main loop of the algorithm (iteration process)

In the main loop, the algorithm iteratively updates the candidate solutions and adjusts the search strategy according to the current iteration number. In each iteration, the fitness values of all candidate solutions in the current population are first calculated to determine the global optimal solution. Then, based on the current values of the Math Optimizer Accelerated (MOA) function and the Math Optimizer Probability (MOP), the algorithm switches between global exploration and local exploitation. After the new candidate solutions are evaluated, the current optimal solution is identified, the global optimal solution is updated, and the boundaries are updated. The main loop terminates when the maximum number of iterations is reached or the convergence criterion is met.
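Putting the pieces together, the main loop can be sketched as below. This is a compact illustration under stated assumptions, not the authors' implementation: it uses the linear MOA, the convention that exploration is chosen when R1 > MOA(t), greedy replacement of individuals, clipping at the bounds, and a shifted sphere function as a stand-in fitness. All names and default values are hypothetical.

```python
import random


def aoa_minimize(fitness, lb, ub, n_solutions=20, T=100, moa_min=0.2,
                 moa_max=1.0, alpha=5.0, mu=0.5, eps=1e-12, seed=0):
    rng = random.Random(seed)
    dim = len(lb)
    pop = [[rng.random() * (ub[j] - lb[j]) + lb[j] for j in range(dim)]
           for _ in range(n_solutions)]
    fit = [fitness(x) for x in pop]
    best_i = min(range(n_solutions), key=fit.__getitem__)
    best, best_fit = pop[best_i][:], fit[best_i]
    history = [best_fit]

    for t in range(1, T + 1):
        moa = moa_min + t * (moa_max - moa_min) / T
        mop = 1.0 - t ** (1.0 / alpha) / T ** (1.0 / alpha)
        for i in range(n_solutions):
            cand = []
            for j in range(dim):
                scale = (ub[j] - lb[j]) * mu + lb[j]
                r1, r2, r3 = rng.random(), rng.random(), rng.random()
                if r1 > moa:    # exploration: division or multiplication
                    x = (best[j] / (mop + eps) * scale if r2 < 0.5
                         else best[j] * mop * scale)
                else:           # exploitation: subtraction or addition
                    x = (best[j] - mop * scale if r3 < 0.5
                         else best[j] + mop * scale)
                cand.append(min(max(x, lb[j]), ub[j]))  # keep inside the bounds
            f = fitness(cand)
            if f < fit[i]:      # greedy replacement (assumption)
                pop[i], fit[i] = cand, f
            if f < best_fit:
                best, best_fit = cand[:], f
        history.append(best_fit)
    return best, best_fit, history


def sphere(x):
    """Stand-in fitness with its minimum at (3, 3)."""
    return sum((v - 3.0) ** 2 for v in x)


best, best_fit, history = aoa_minimize(sphere, [0.0, 0.0], [10.0, 10.0])
```

Because the best solution is only ever replaced by a better one, `history` is non-increasing. How close the final value comes to the true optimum depends on the problem and the settings.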

Nonlinear MOA scheduling: slow in the early stage and steep in the later stage. In the early stage, more multiplication/division operations are used to achieve wide-range exploration; in the later stage, addition/subtraction operations are used to refine neighborhood search.

Fitness function: MEE (Mean Envelope Entropy). For each IMF, the normalized envelope is obtained through Hilbert transform, the entropy is calculated, and the average is taken and minimized. A small MEE indicates that the components are sparser and closer to the dominant cardiac cycle.

2.4. Algorithm Improvement and Overall Framework

2.4.1. Improvements

In practical applications, AOA may face problems such as insufficient solution accuracy, unsatisfactory convergence speed, and being easily trapped in local optimal solutions. To address these challenges, our work proposes improved position update rules and a scheme to adjust the optimizer probability in the algorithm, so as to enhance the exploration and exploitation capabilities of the algorithm. In addition, a set of mechanisms is designed to prevent the algorithm from falling into local optima, thereby improving the overall solution effect and the robustness of the algorithm.

(1) Position update strategy

In the Arithmetic Optimization Algorithm, the design of the position update strategy is crucial. Improper position update strategies may cause the algorithm to converge prematurely to local optimal solutions, reduce the global search capability, and affect the optimization effect. In addition, insufficient population diversity may also make it difficult for the algorithm to jump out of local optimal solutions, affecting the global search capability.

To improve this, a Tent chaotic mapping initialization strategy and a population mutation strategy are introduced. The Tent map is a piecewise linear chaotic mapping function, named for the tent-like shape of its graph. It is widely used in chaotic encryption systems, such as image encryption and the generation of chaotic spread spectrum codes. The Tent chaotic mapping is defined as follows:

$$x_{n+1} = \begin{cases} \dfrac{x_n}{\alpha}, & 0 \le x_n < \alpha \\[2mm] \dfrac{1 - x_n}{1 - \alpha}, & \alpha \le x_n \le 1 \end{cases}$$

where the parameter α satisfies 0 < α < 1.
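A Tent-map initialization can be sketched as follows, assuming the standard skewed Tent map (x/α below the breakpoint, (1 − x)/(1 − α) above it). The breakpoint 0.7, the seed value 0.37, the function names, and the example bounds are assumptions made for illustration. The breakpoint is written as `a` in the code to avoid confusion with other uses of α in this paper.

```python
def tent_sequence(x0, n, a=0.7):
    """Iterate the skewed Tent map n times starting from x0 in (0, 1)."""
    xs, x = [], x0
    for _ in range(n):
        x = x / a if x < a else (1.0 - x) / (1.0 - a)
        xs.append(x)
    return xs


def tent_init(n_solutions, lb, ub, x0=0.37, a=0.7):
    """Map a chaotic sequence in [0, 1] onto the search range of each dimension."""
    dim = len(lb)
    chaos = tent_sequence(x0, n_solutions * dim, a)
    return [[lb[j] + chaos[i * dim + j] * (ub[j] - lb[j]) for j in range(dim)]
            for i in range(n_solutions)]


pop = tent_init(20, [0.0, 0.0], [10.0, 10.0])
```

The sequence is deterministic for a given starting value, so repeated runs give the same initial population unless `x0` is varied. In floating-point arithmetic, a breakpoint of exactly 0.5 tends to collapse the sequence to zero after a few dozen iterations, which is one reason to choose a different breakpoint.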

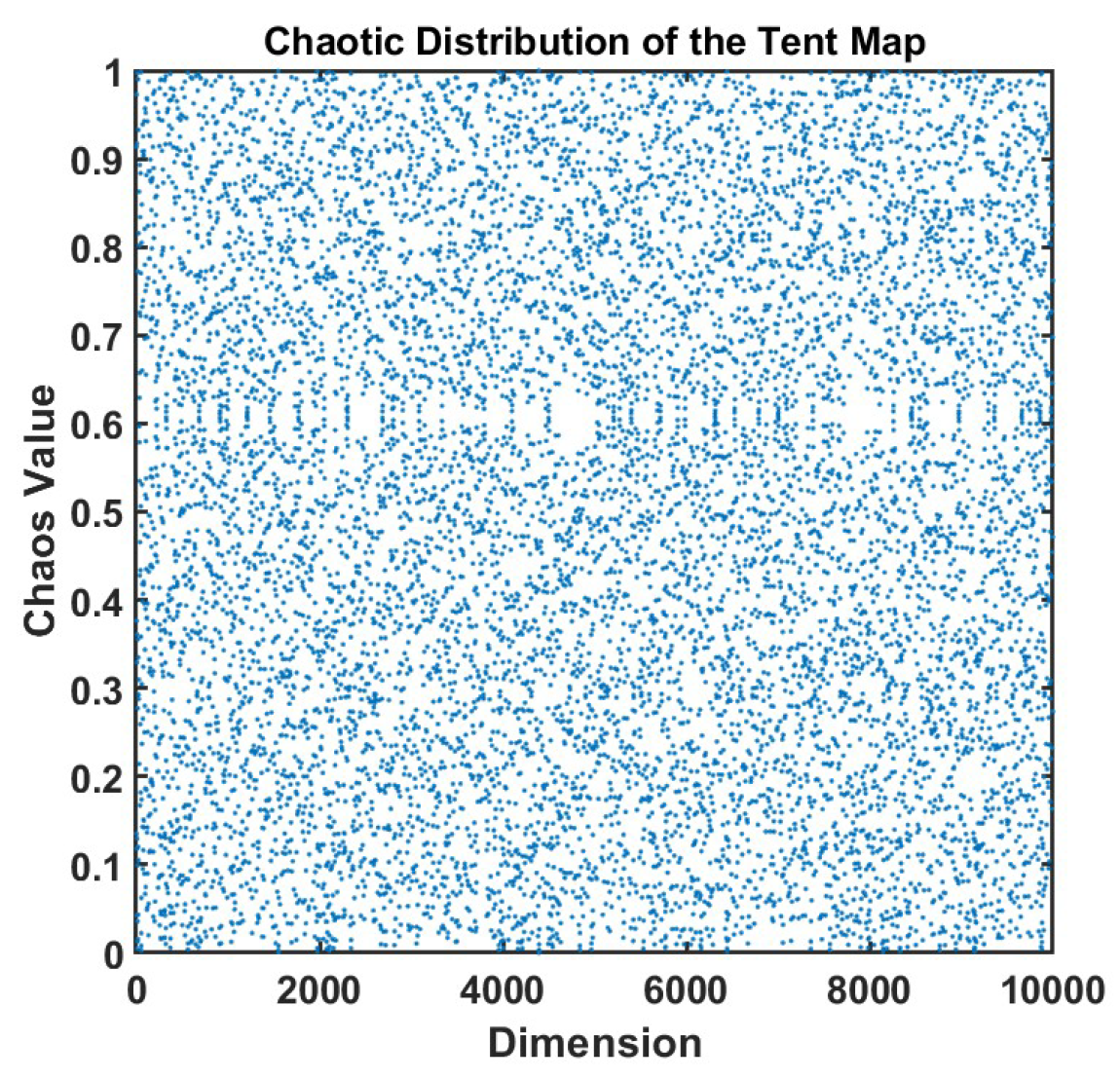

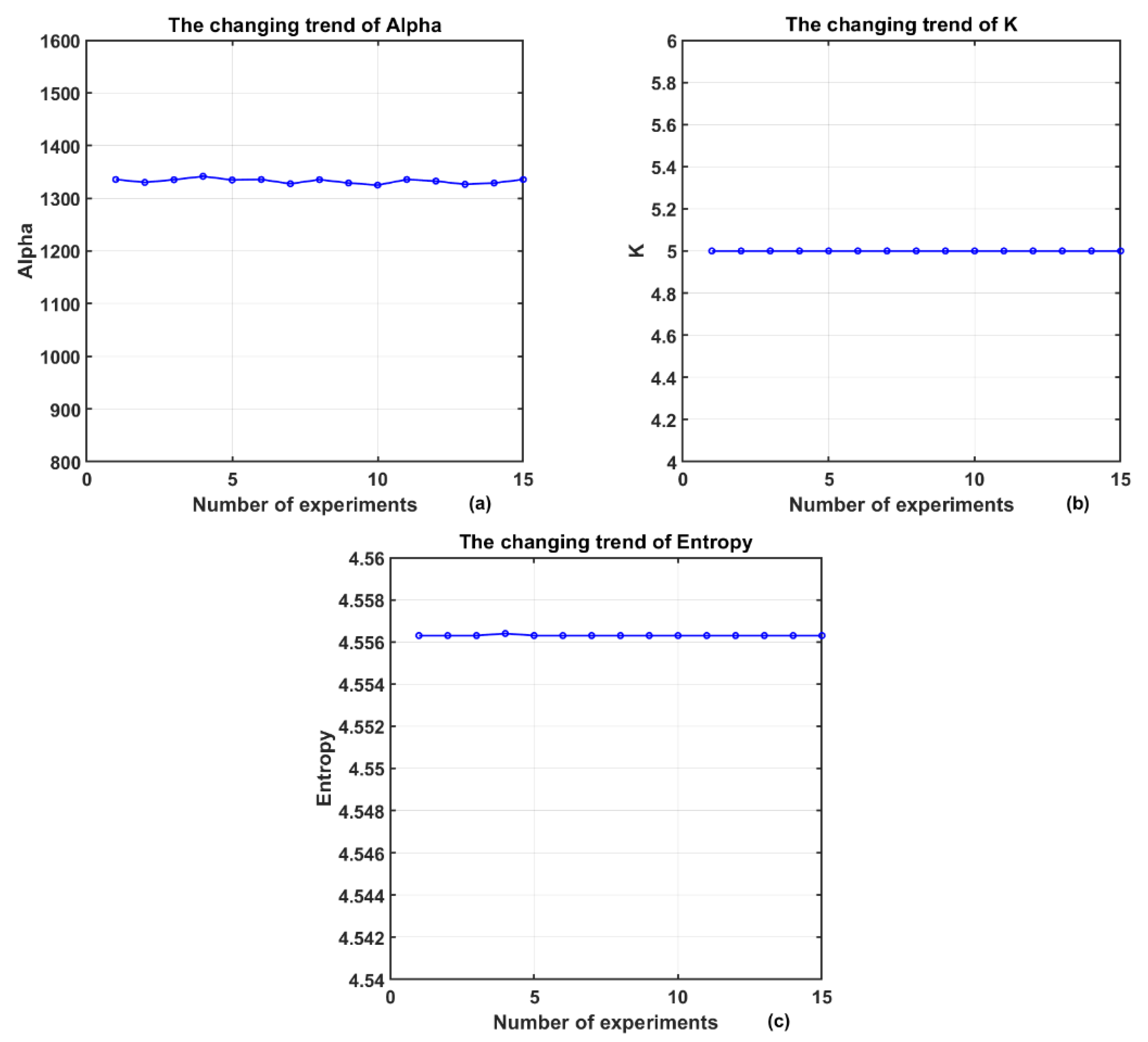

As shown in Figure 3, in the scatter plot of the chaotic mapping, the horizontal axis represents the dimension and the vertical axis represents the chaotic value. The data points in the figure are evenly distributed without obvious patterns, showing typical chaotic characteristics, which indicates that the mapping function has strong randomness and ergodicity.

Compared with traditional random initialization, chaotic mapping can significantly enhance the global search capability and convergence accuracy of optimization algorithms. Its ergodicity and pseudo-randomness enable initial individuals to be evenly distributed in the search space, avoiding local concentration and reducing the risk of falling into local optima. Meanwhile, the non-periodic characteristic enhances population diversity, which helps achieve more comprehensive global exploration in the early stage of search and improve the overall optimization efficiency.

In addition, the algorithm adds a population mutation strategy during each population position update to further enhance the search capability and the ability to jump out of local optima. By introducing chaotic disturbance or adaptive mutation mechanisms in the iteration process, individuals make fine adjustments on the basis of their current positions, enabling them to maintain high exploration capability in the search space and avoid premature convergence. Different from the traditional fixed mutation rate, this dynamic mutation method can appropriately adjust the mutation amplitude according to the search progress, further improving the algorithm’s ability to escape local optima. The formula is as follows:

Here, r1 is a random number within [0, 1], and Xrand is a randomly selected individual from the current population.

(2) Mathematical Optimizer Accelerated Function (MOA)

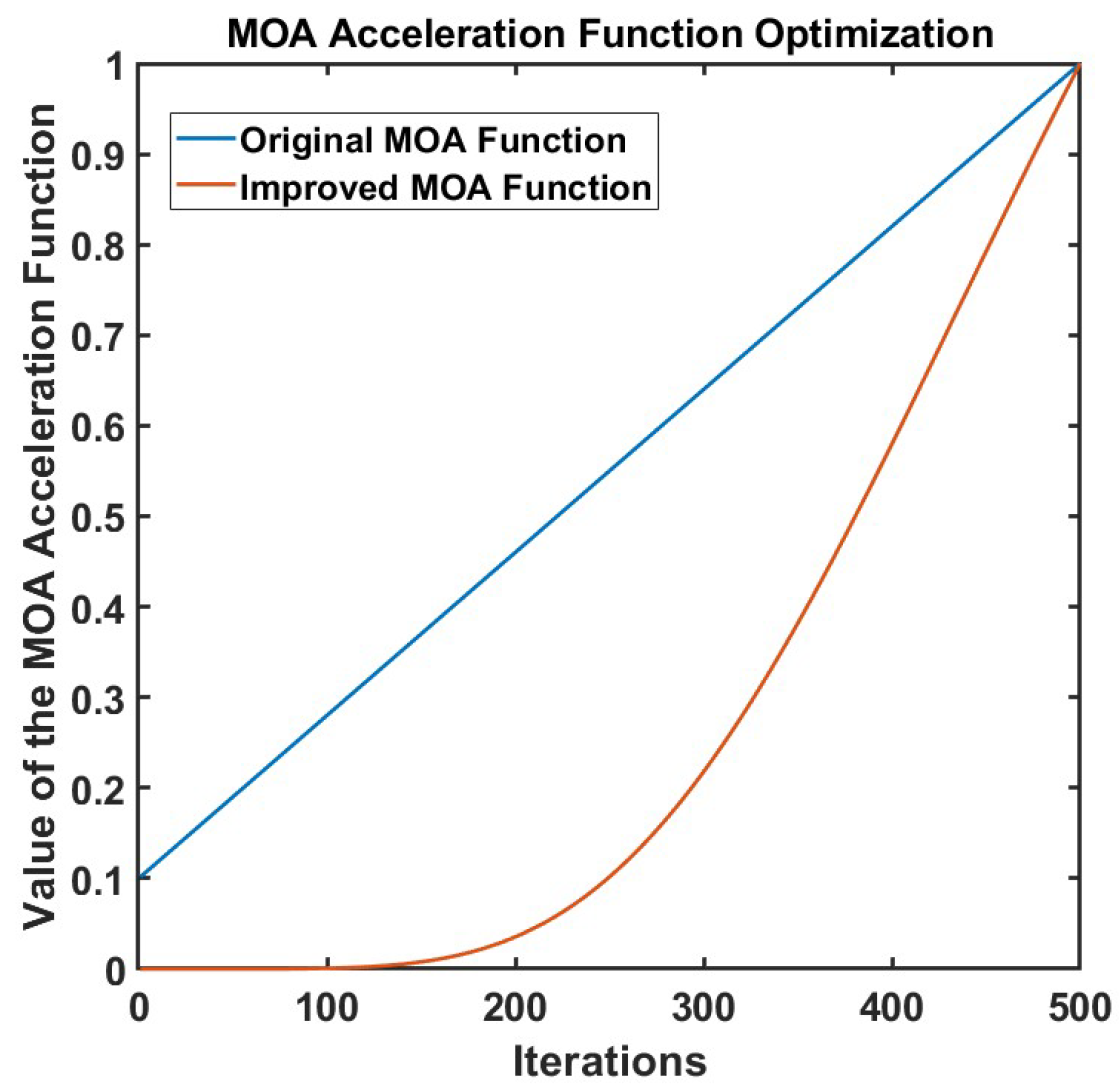

The MOA function is the core control mechanism of the AOA. It drives the algorithm performance by dynamically adjusting the balance between exploration and exploitation. Its core functions are reflected in: dynamically coordinating the switch between global search and local optimization, using a linearly increasing threshold (MOA value) to favor global exploration (multiplication and division operations) in the early stage, and gradually shifting to local exploitation (addition and subtraction operations) in the later stage; optimizing resource allocation, and adjusting parameter weights in coordination with the Math Optimizer Probability (MOP) to ensure that the algorithm takes both diversity (avoiding prematurity) and convergence (approaching the optimal solution) into account during iteration. However, the linear change strategy of MOA lacks adaptability to complex problems, which may lead to insufficient early exploration or delayed later exploitation; at the same time, its strong dependence on randomness and parameter sensitivity are prone to cause local optimal traps, which need to be improved through nonlinear reconstruction, adaptive mechanisms, etc., to enhance robustness. For this reason, this study proposes a nonlinear MOA acceleration function, and its formula is as follows:

A comparison between the improved MOA function and the original MOA function is shown in Figure 4. Compared with the linear MOA function, the nonlinear MOA function proposed in this work shows better performance in the algorithm. The figure shows that, as the number of iterations increases, the linear MOA function grows at a constant rate, while the nonlinear MOA function grows more slowly in the early stage. This avoids the local optimum trap caused by overly fast convergence early in the search and gives the algorithm stronger global exploration ability. As the iterations proceed, the nonlinear MOA function accelerates its growth and reaches the same final value as the linear MOA in the later stage, thereby enhancing the local search ability of the algorithm and improving the convergence accuracy and optimization efficiency. This improvement allows the algorithm to adjust the search step size dynamically at different stages, improving local convergence accuracy while maintaining global search ability and making the optimization more stable and reliable.
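The qualitative behavior described here (slower growth early, faster growth late, same final value) can be illustrated with a simple convex schedule. The power-law form below is a hypothetical stand-in chosen only because it has these properties. It is not the formula proposed in this work, and the exponent and the Min and Max values are assumptions.

```python
def moa_linear(t, T, lo=0.2, hi=1.0):
    """Original linear schedule."""
    return lo + (hi - lo) * t / T


def moa_power(t, T, lo=0.2, hi=1.0, p=2.0):
    """Hypothetical convex schedule: below the linear one until the final iteration."""
    return lo + (hi - lo) * (t / T) ** p


T = 100
table = [(t, moa_linear(t, T), moa_power(t, T)) for t in range(0, T + 1, 25)]
```

Under the convention that exploration is chosen when R1 exceeds MOA(t), a schedule that stays lower for longer keeps the exploration probability high during more of the run, which is the effect the text attributes to the nonlinear function.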

2.4.2. Algorithm Framework

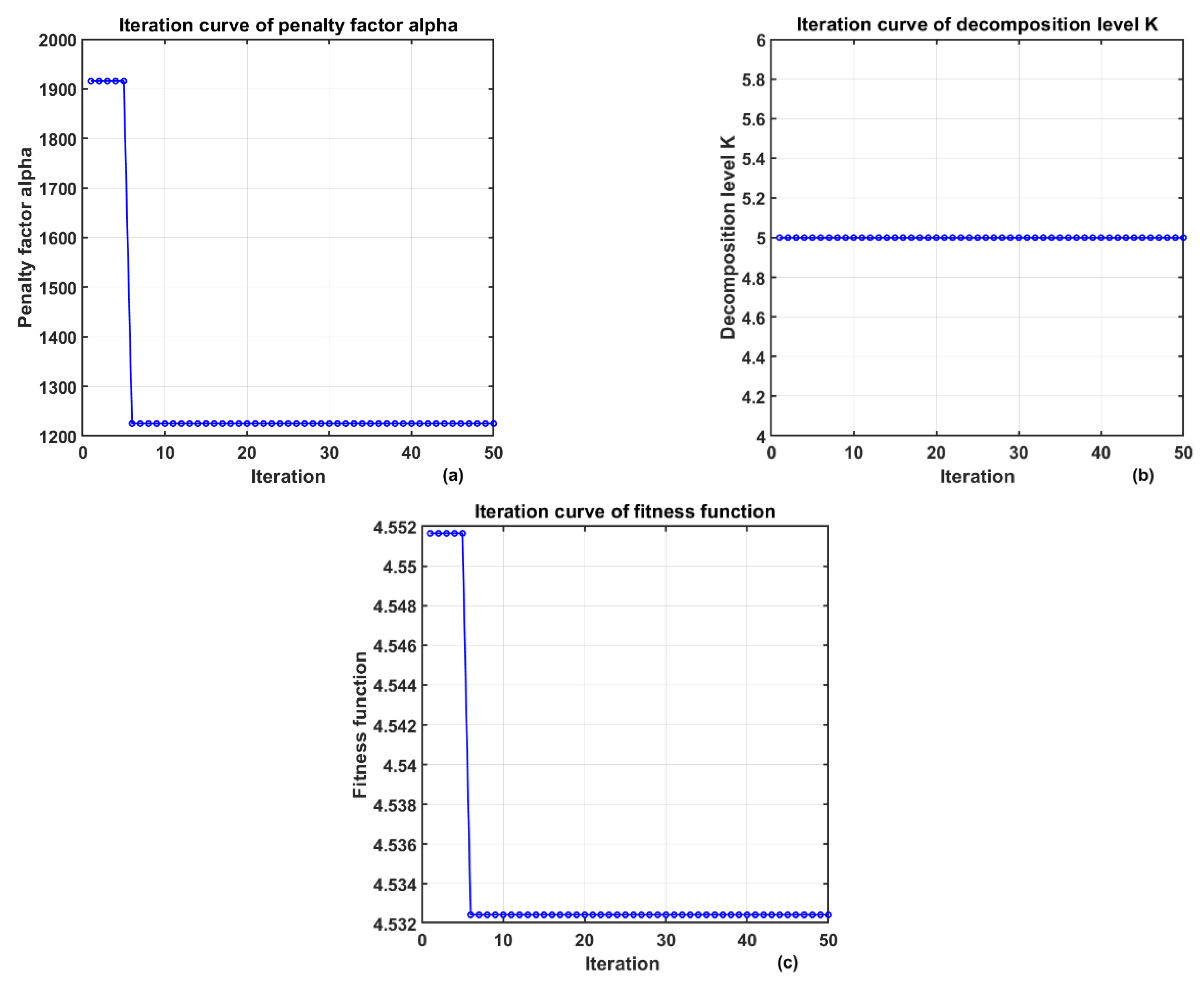

When applying VMD to process signals, the decomposition level K and the penalty factor α are key parameters that affect the decomposition effect. If K is set too small, it may lead to insufficient signal decomposition and incomplete information extraction; while an excessively large K tends to cause over-decomposition, increasing redundancy and computational complexity. Meanwhile, the selection of the penalty factor directly affects the bandwidth of Intrinsic Mode Function (IMF) components, thereby influencing the accuracy of signal reconstruction.

The traditional method of manually setting parameters is inefficient and difficult to ensure the optimal combination. Although existing studies have attempted to optimize K through the center frequency observation method, this method is cumbersome to operate and cannot optimize α simultaneously. For this reason, this work introduces the improved AOA algorithm to realize the joint adaptive optimization of K and α. AOA can efficiently search for the optimal solution in the continuous parameter space, significantly improving the accuracy and stability of VMD decomposition, while reducing manual intervention and enhancing the overall processing efficiency and the quality of signal feature extraction.

Mean envelope entropy is an indicator used to measure signal sparsity, reflecting the uniformity of the distribution of signal energy or information on the time axis. In this study, the VMD algorithm is used to decompose the magnetocardiographic signal into K intrinsic mode functions (IMFs). If an IMF component contains more noise, its pulse characteristics are not obvious and its periodicity is weak, which manifests as reduced sparsity and increased envelope entropy; conversely, if the IMF clearly retains the periodic characteristics of the original signal, its sparsity is stronger and the corresponding envelope entropy is smaller. When optimizing the VMD parameters [K, α], this work selects Mean Envelope Entropy (MEE) as the fitness function [37]. By minimizing MEE, the parameter optimization problem is transformed into a search by AOA for the minimum envelope entropy value in the solution space. Assuming that the input signal s(t) is decomposed into K IMFs, the mean envelope entropy is calculated as follows:

$$p_k(j) = \frac{a_k(j)}{\sum_{j=1}^{N} a_k(j)}, \qquad E_k = -\sum_{j=1}^{N} p_k(j) \log p_k(j), \qquad MEE = \frac{1}{K} \sum_{k=1}^{K} E_k$$

where N is the length of the signal, $a_k(j)$ is the envelope of the k-th IMF obtained by applying the Hilbert transform to it, $p_k(j)$ is the normalized envelope, and $E_k$ is the envelope entropy of the k-th IMF. The K IMFs are obtained through VMD decomposition, their normalized envelopes are computed, and the envelope entropy of each IMF is then calculated and averaged.
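The entropy calculation can be illustrated on two short synthetic signals. This sketch makes several assumptions: the envelope is the magnitude of the analytic signal, computed here with a direct O(N²) DFT so that no external library is needed; the natural logarithm is used; and the test signals and function names are invented for the example.

```python
import cmath
import math


def analytic_envelope(x):
    """Envelope |x + j*H[x]| via a direct DFT (adequate for short demo signals)."""
    n = len(x)
    spec = [sum(x[m] * cmath.exp(-2j * math.pi * k * m / n) for m in range(n))
            for k in range(n)]
    for k in range(n):
        if 0 < k < n / 2:
            spec[k] *= 2      # double the positive frequencies
        elif k > n / 2:
            spec[k] = 0       # remove the negative frequencies
    return [abs(sum(spec[k] * cmath.exp(2j * math.pi * k * m / n)
                    for k in range(n)) / n) for m in range(n)]


def envelope_entropy(imf):
    """Shannon entropy of the normalized envelope of one IMF."""
    env = analytic_envelope(imf)
    total = sum(env)
    p = [e / total for e in env]
    return -sum(v * math.log(v) for v in p if v > 0)


def mean_envelope_entropy(imfs):
    """Average envelope entropy over all IMFs."""
    return sum(envelope_entropy(u) for u in imfs) / len(imfs)


N = 64
tone = [math.cos(2 * math.pi * 4 * m / N) for m in range(N)]
burst = [math.exp(-((m - 32) / 4.0) ** 2) * math.cos(2 * math.pi * 8 * m / N)
         for m in range(N)]
mee = mean_envelope_entropy([tone, burst])
```

The steady tone has a flat envelope and therefore the maximum possible entropy for its length, while the short burst concentrates its envelope in a few samples and scores lower. This is the sense in which a smaller envelope entropy indicates a sparser, more impulsive component.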

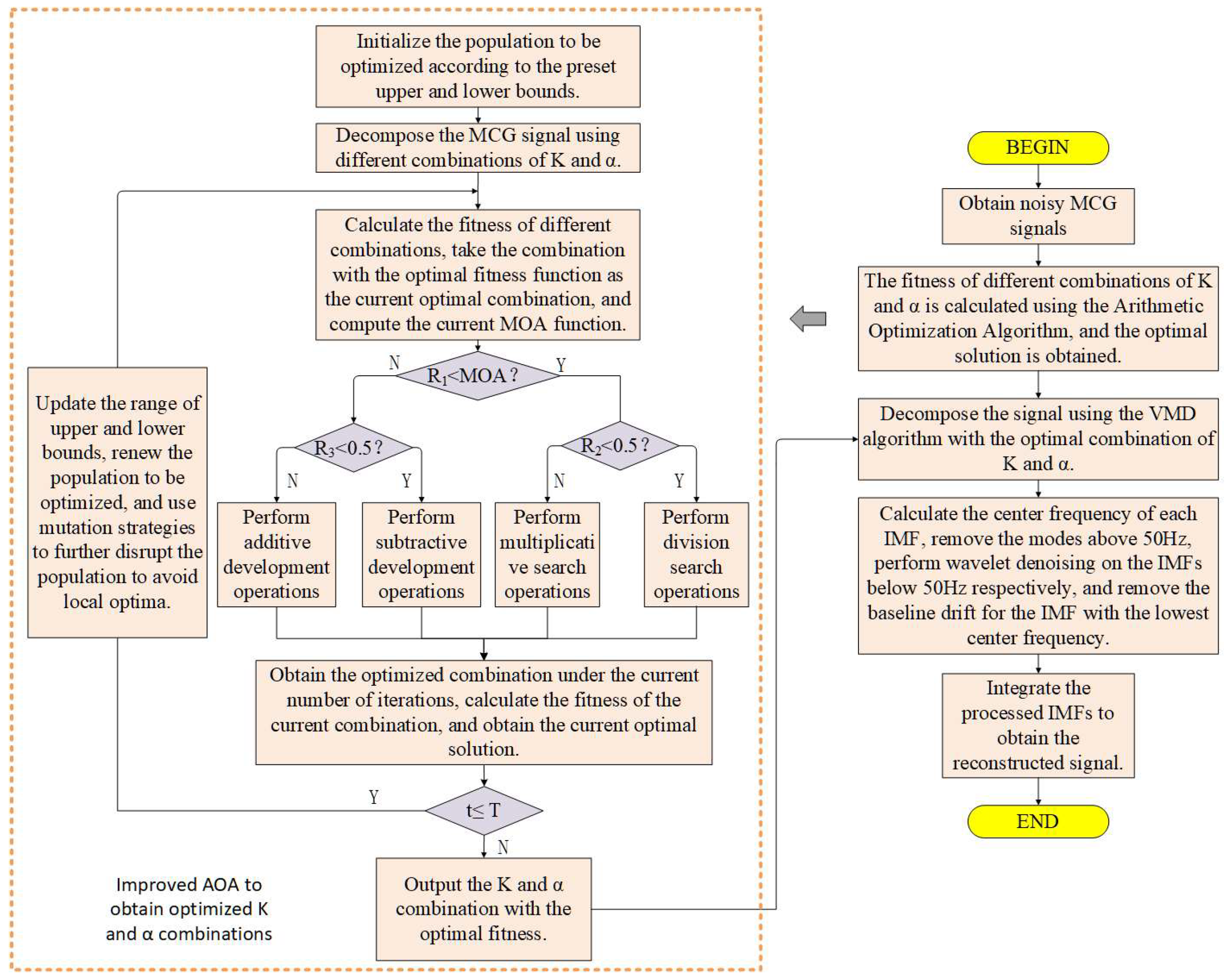

The Arithmetic Optimization Algorithm is used to optimize the penalty factor and decomposition level of VMD. The optimization steps are as follows:

(1) Set the value range of VMD parameters and randomly generate a set of initial parameters [K, α];

(2) Perform VMD decomposition according to the generated parameter set to obtain the corresponding IMF components;

(3) After calculating the mean envelope entropy, select the set of parameters with the smallest envelope entropy as the current optimal solution, and calculate the corresponding MOA function at the same time;

(4) Determine whether R1 is greater than the MOA function. If yes, perform global search (step 5); otherwise, perform local exploitation (step 6);

(5) If R2 < 0.5, perform division search operation; otherwise, perform multiplication search operation;

(6) If R3 < 0.5, perform subtraction exploitation operation; otherwise, perform addition exploitation operation;

(7) If the maximum number of iterations is reached, output the current optimal parameters; otherwise, return to step (2) to continue the iteration.
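One practical detail in the steps above is that the optimizer works with continuous positions, whereas K must be an integer. A possible way to connect the two is sketched below. The rounding rule, the parameter ranges, and the `vmd` and `mee` callables are hypothetical placeholders, not values or interfaces taken from this work.

```python
def decode(position, k_bounds=(2, 10), alpha_bounds=(100.0, 5000.0)):
    """Map a continuous position [K, alpha] to valid VMD parameters."""
    k = int(round(position[0]))                       # K must be an integer
    k = min(max(k, k_bounds[0]), k_bounds[1])
    alpha = min(max(position[1], alpha_bounds[0]), alpha_bounds[1])
    return k, alpha


def make_fitness(signal, vmd, mee):
    """Build f(K, alpha): the MEE of the IMFs returned by a user-supplied VMD routine."""
    def fitness(position):
        k, alpha = decode(position)
        imfs = vmd(signal, k, alpha)                  # placeholder decomposition call
        return mee(imfs)
    return fitness


# Stand-in callables so the sketch runs without a real VMD implementation.
def fake_vmd(signal, k, alpha):
    return [signal] * k


def fake_mee(imfs):
    return float(len(imfs))


fitness = make_fitness([0.0, 1.0, 0.0], fake_vmd, fake_mee)
value = fitness([3.6, 50.0])
```

The resulting `fitness` callable can be handed to any optimizer that minimizes a function of a position vector; in a real pipeline the two stand-ins would be replaced by an actual VMD routine and an envelope-entropy calculation.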

To sum up, the mathematical model of the parameters can be summarized as follows:

Here, f(K, α) is the mean envelope entropy of the IMFs obtained by VMD with the current parameters K and α.

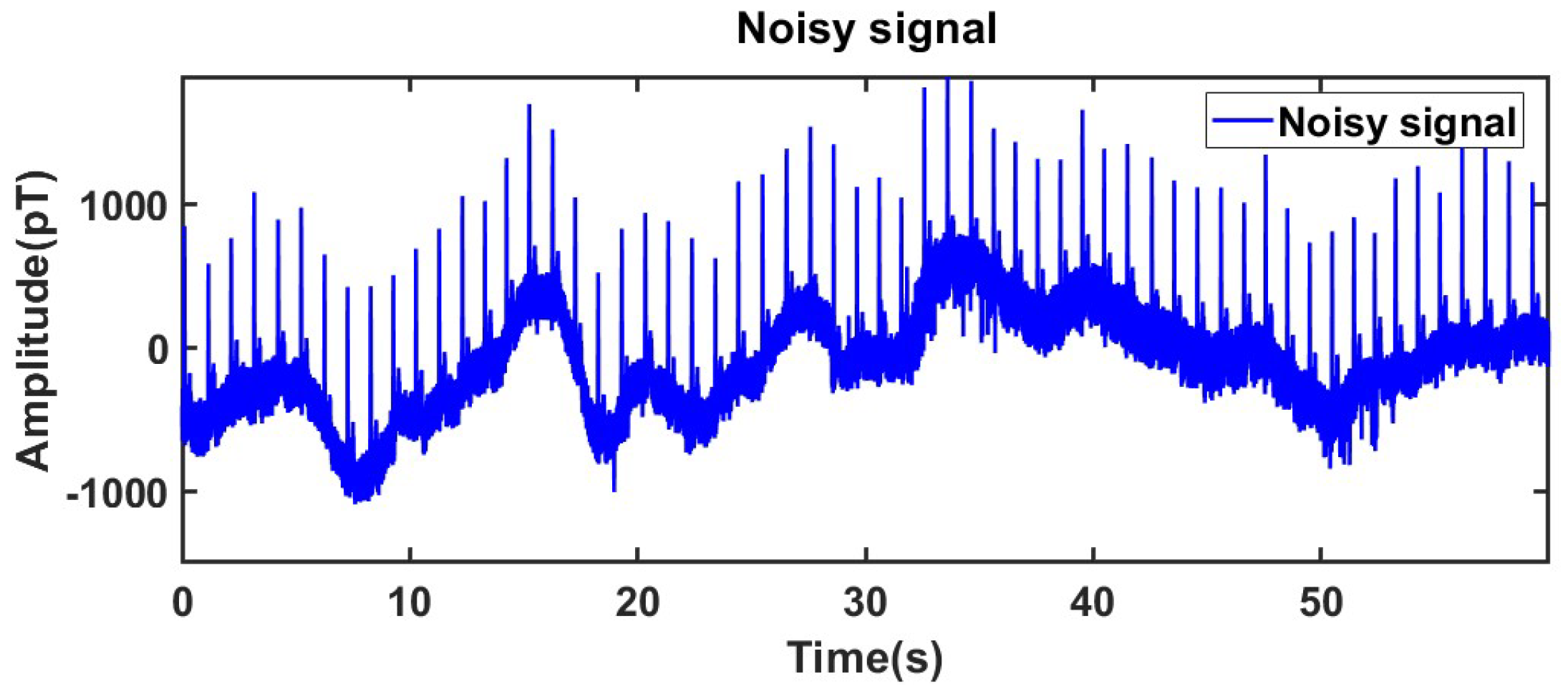

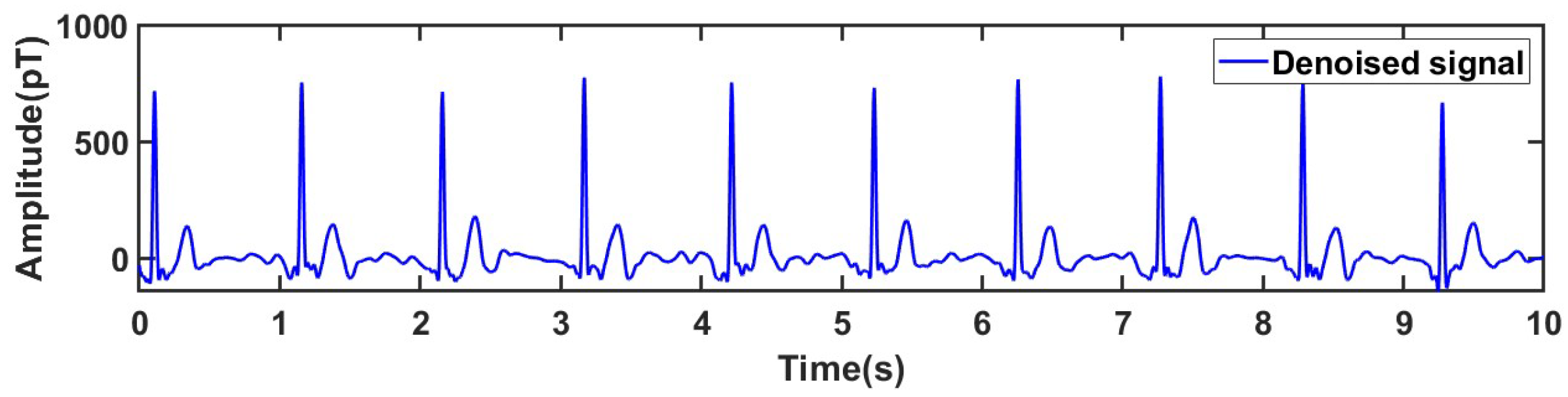

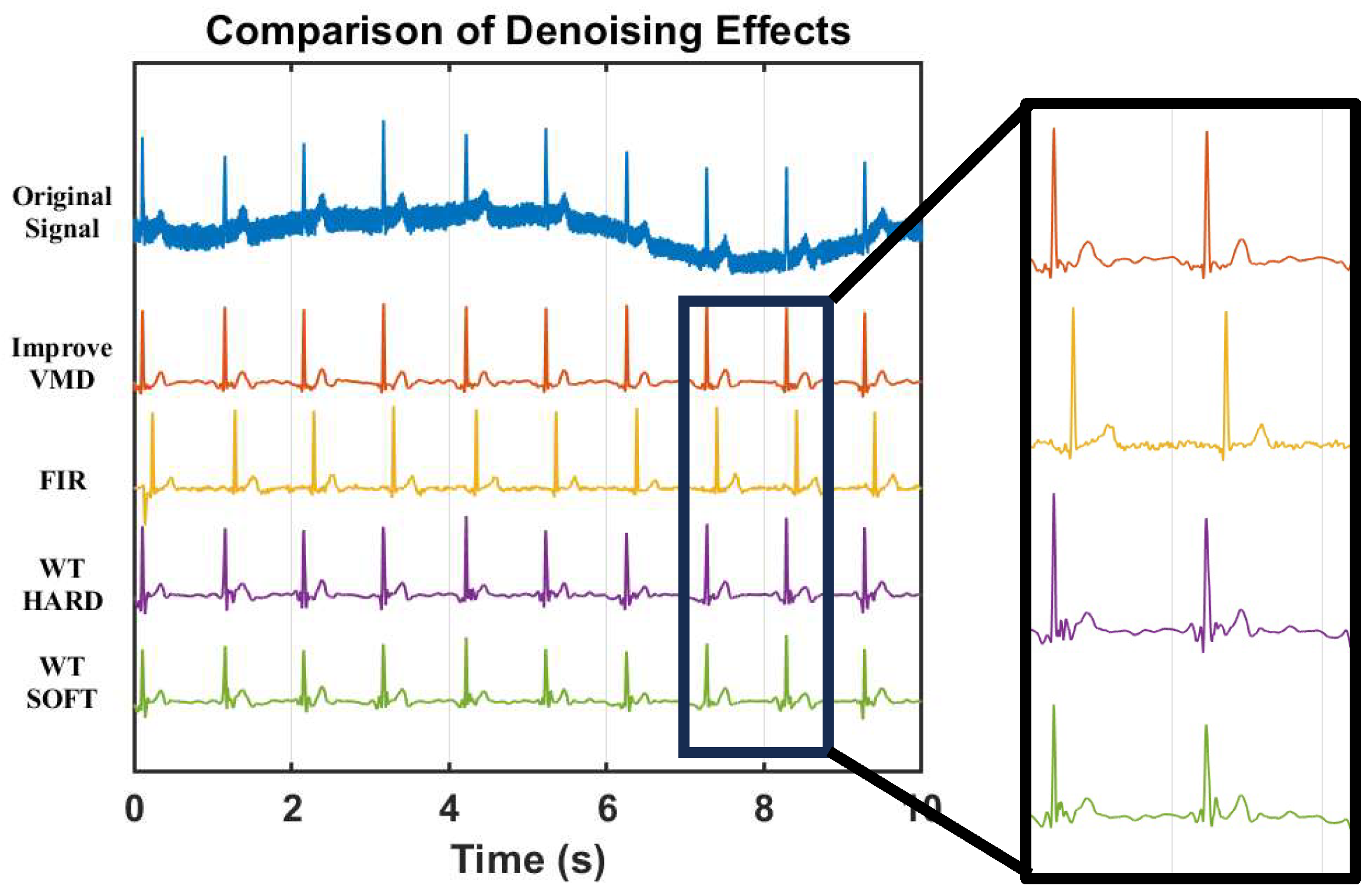

To sum up, the steps of the improved denoising algorithm are as follows:

(1) First, use AOA to adaptively optimize the key parameters of VMD. Specifically, the algorithm calculates the average envelope entropy of the IMFs decomposed under different parameter combinations through iteration, and takes the parameter combination [K, α] with the minimum entropy value as the optimal solution;

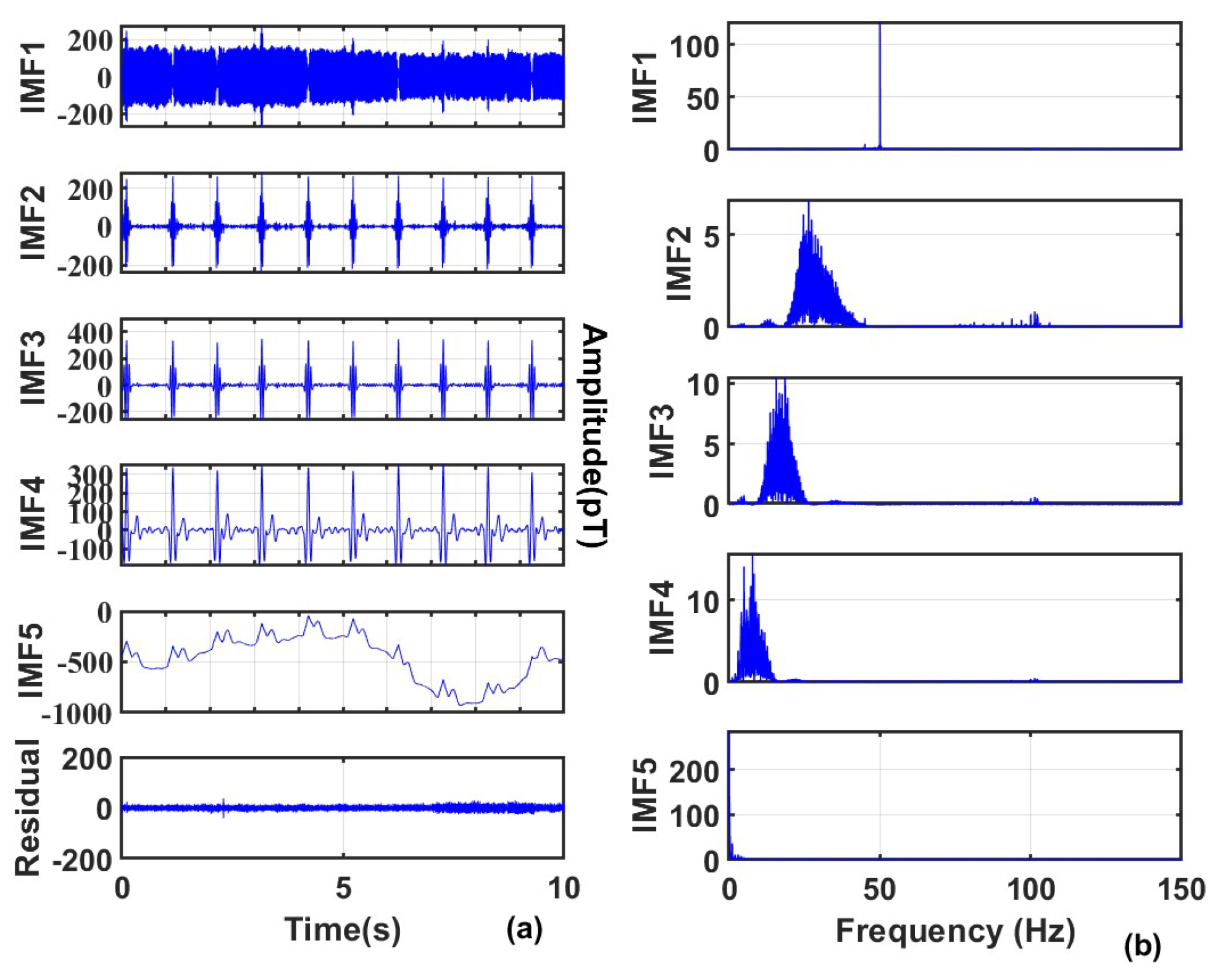

(2) Based on the optimized parameters K and α, perform VMD decomposition on the original MCG signal to obtain a series of IMF components;

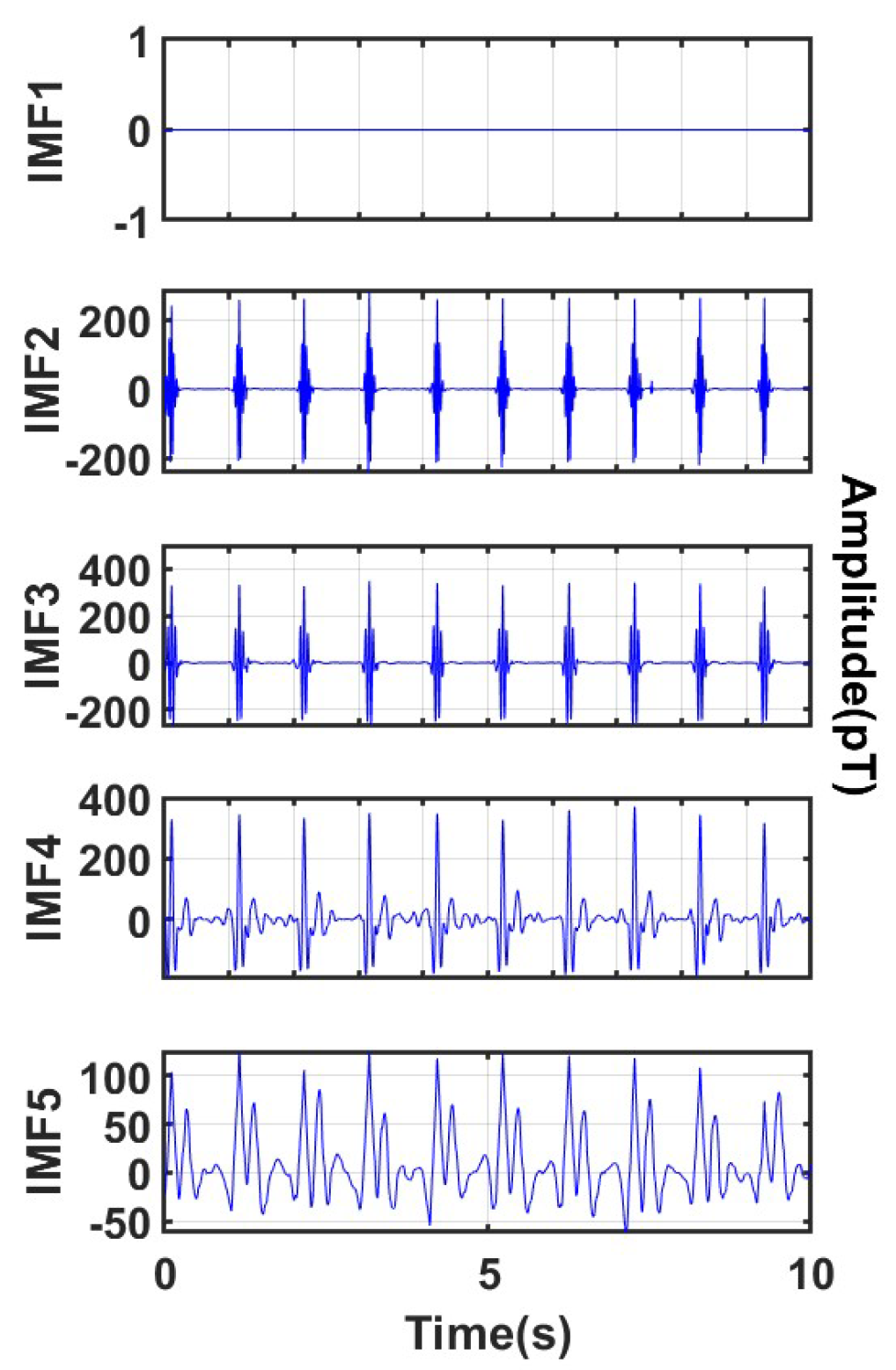

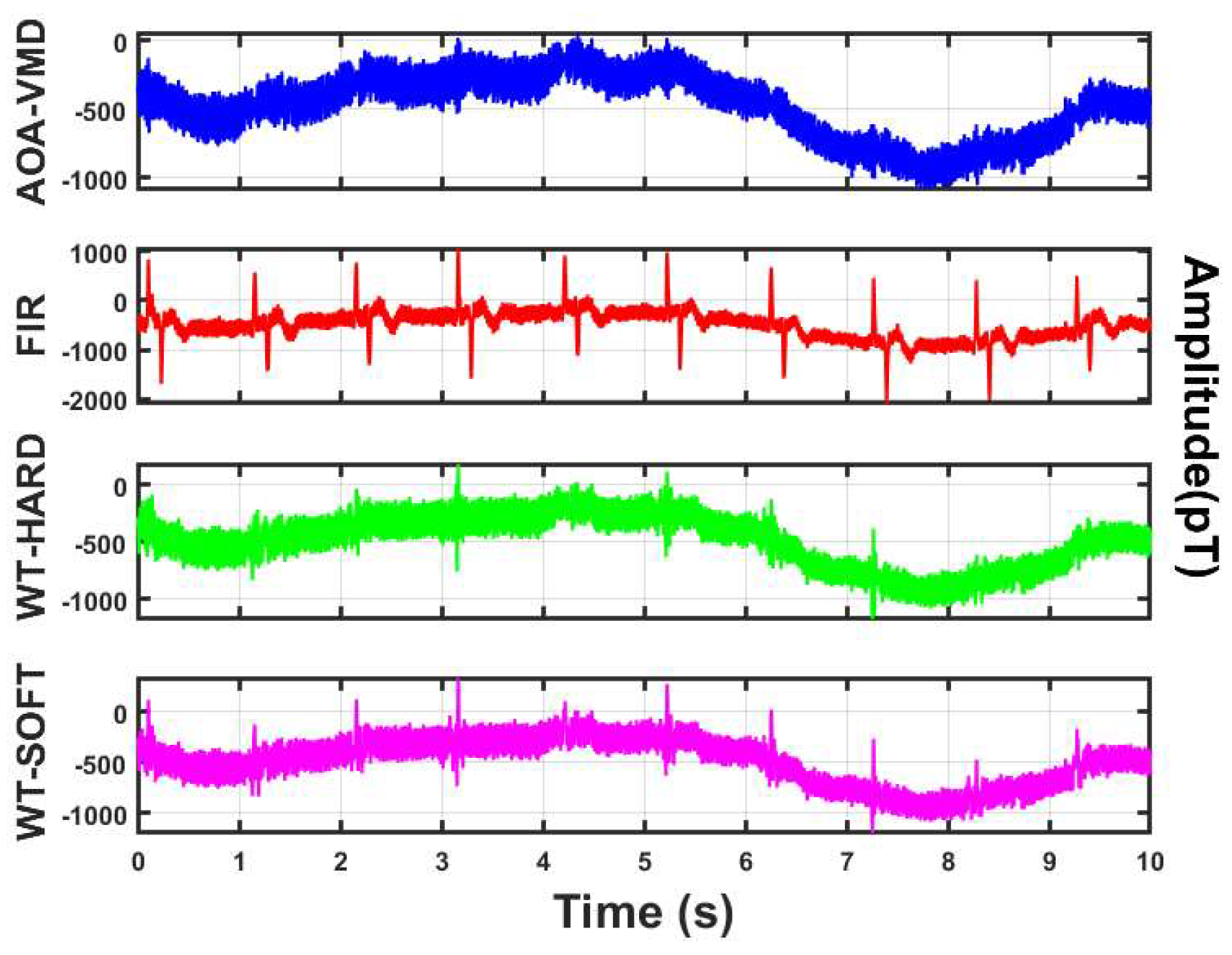

(3) Conduct spectrum analysis on each decomposed IMF component, and implement differentiated processing according to its central frequency characteristics: IMF components with a central frequency higher than 50 Hz are directly eliminated; IMF components with a central frequency lower than 50 Hz undergo wavelet threshold denoising; the lowest frequency IMF component is subjected to baseline drift correction;

(4) Reconstruct the IMF components processed as above to obtain the final denoised signal.
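The frequency-based routing in step (3) can be expressed as a small decision function. The sketch below rests on stated assumptions: a component exactly at 50 Hz is kept, the lowest-frequency component receives baseline correction instead of wavelet denoising, and soft thresholding is shown as one common shrinkage rule. The text does not specify these details, and the function names and example frequencies are invented.

```python
import math


def route_imfs(center_freqs_hz, cutoff_hz=50.0):
    """Assign a processing action to each IMF from its center frequency."""
    lowest = min(range(len(center_freqs_hz)), key=center_freqs_hz.__getitem__)
    actions = []
    for i, f in enumerate(center_freqs_hz):
        if f > cutoff_hz:
            actions.append("discard")
        elif i == lowest:
            actions.append("baseline_correction")
        else:
            actions.append("wavelet_threshold")
    return actions


def soft_threshold(coeffs, thr):
    """Soft shrinkage: pull every coefficient toward zero by thr."""
    return [math.copysign(max(abs(c) - thr, 0.0), c) for c in coeffs]


actions = route_imfs([1.2, 8.0, 23.0, 61.0, 120.0])
shrunk = soft_threshold([3.0, -0.5, -2.0], 1.0)
```

For the example frequencies, the first component is routed to baseline correction, the next two to wavelet thresholding, and the two above 50 Hz are discarded. The final reconstruction in step (4) would then sum the processed components that were kept.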

The overall flow chart of the algorithm is shown in Figure 5.