Unmanned Airborne Target Detection Method with Multi-Branch Convolution and Attention-Improved C2F Module

Abstract

1. Introduction

2. Materials and Methods

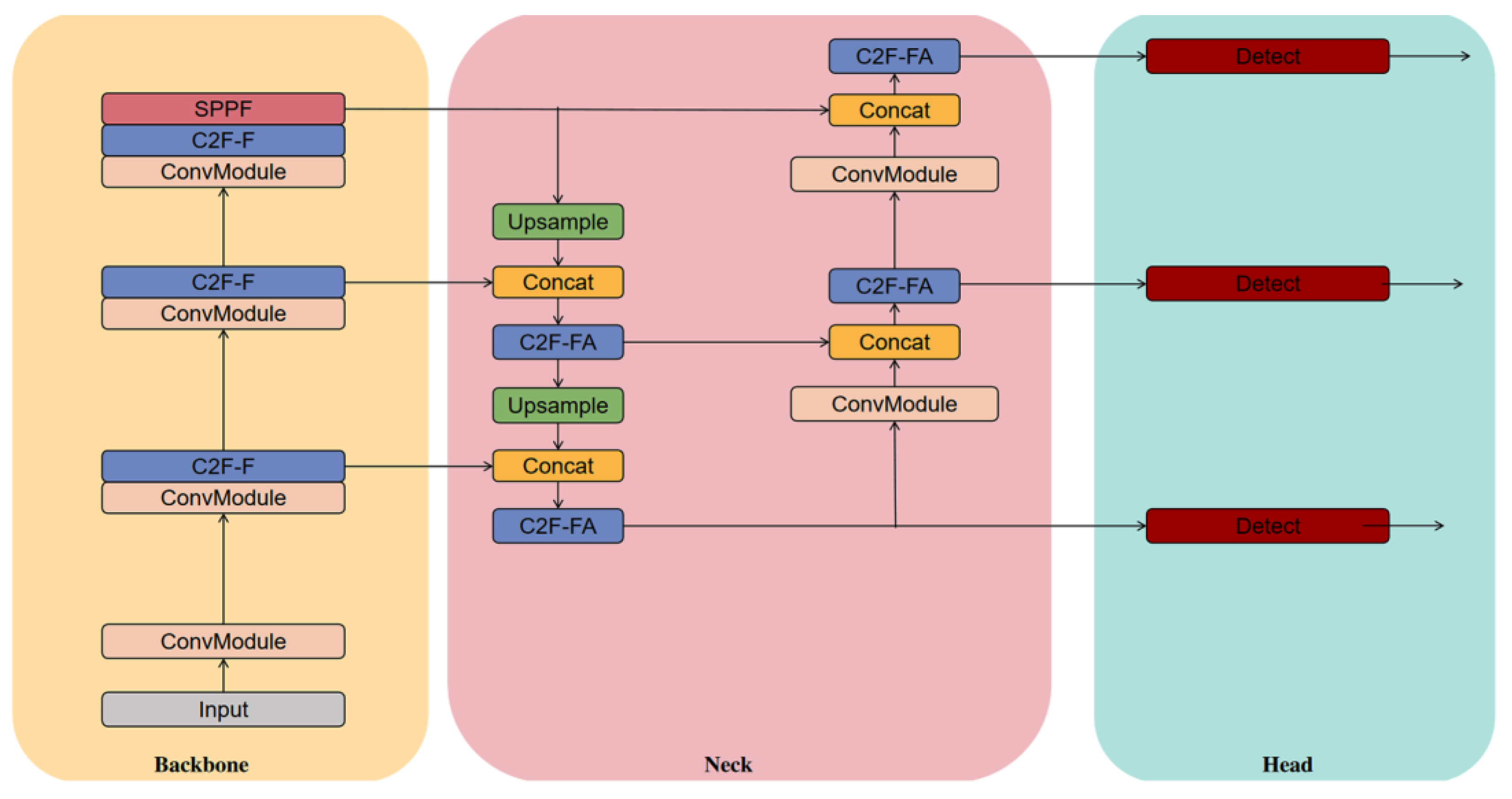

2.1. Target Detection Network Based on Multi-Branch Convolution with an Attention-Improved C2F Module

2.2. Backbone Incorporating Partial Convolution

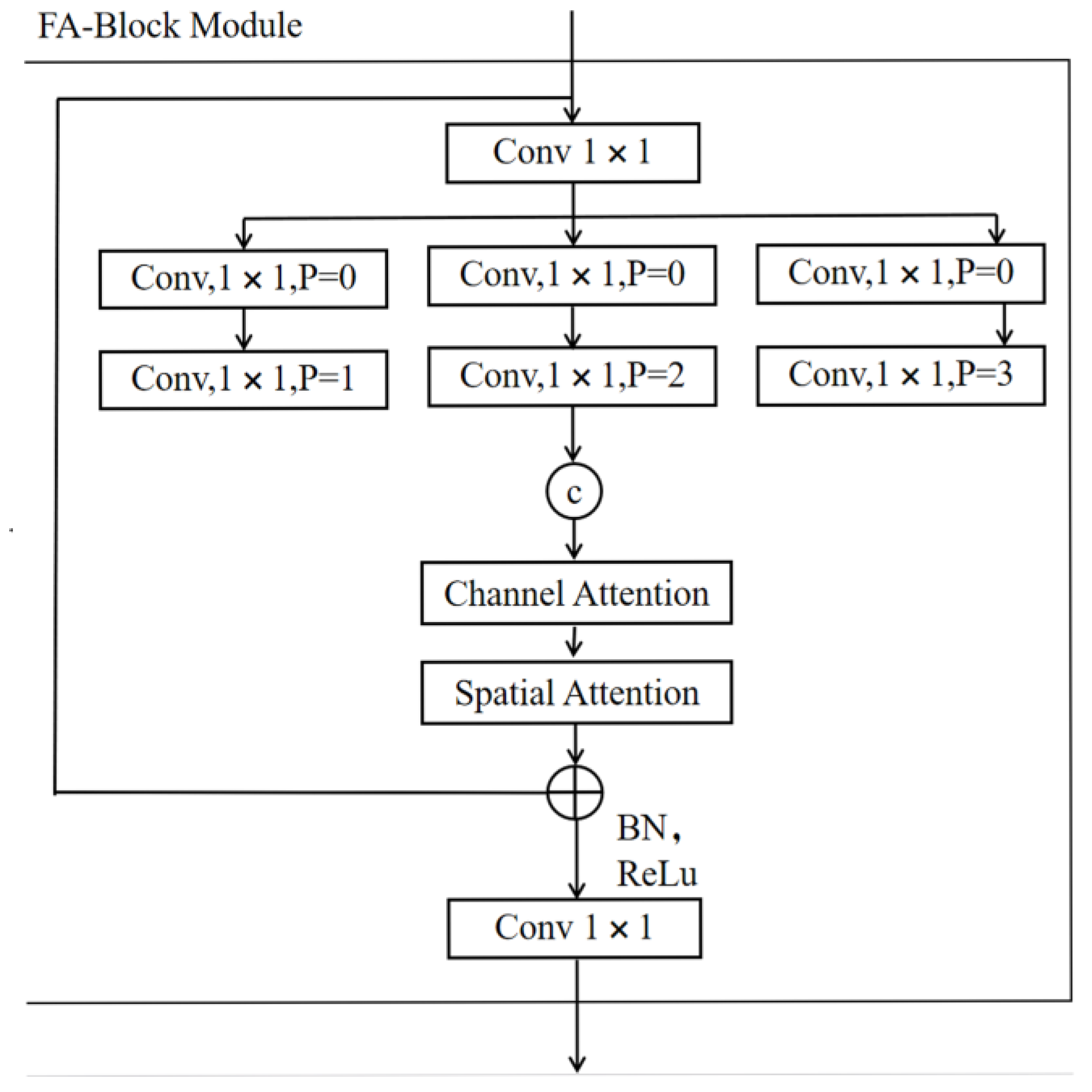
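Partial convolution (PConv) was introduced in FasterNet (Chen et al., CVPR 2023). It applies a regular k × k convolution to only the first c_p channels of the input and passes the remaining channels through unchanged, so its cost scales with c_p² rather than c².

The sketch below is a back-of-the-envelope cost model under the FasterNet convention: FLOPs are counted as h·w·k²·c², and input and output channel counts are equal. It is not the authors' backbone code. The helper names, the hypothetical `conv_fn` callback, and the 80 × 80 × 256 example shape are illustrative assumptions.

```python
def conv_flops(h: int, w: int, k: int, c: int) -> int:
    """FLOPs of a regular k x k conv with c input and c output channels (FasterNet convention)."""
    return h * w * k * k * c * c


def pconv_flops(h: int, w: int, k: int, c: int, ratio: float = 0.25) -> int:
    """PConv convolves only c_p = ratio * c channels; the other c - c_p channels are copied through."""
    c_p = int(c * ratio)
    return h * w * k * k * c_p * c_p


def conv_memory_access(h: int, w: int, k: int, c: int) -> int:
    """Feature-map reads and writes (h*w*2c) plus kernel weights (k*k*c*c)."""
    return h * w * 2 * c + k * k * c * c


def pconv_memory_access(h: int, w: int, k: int, c: int, ratio: float = 0.25) -> int:
    """Same accounting, restricted to the c_p convolved channels."""
    c_p = int(c * ratio)
    return h * w * 2 * c_p + k * k * c_p * c_p


def pconv_forward(channels: list, conv_fn, ratio: float = 0.25) -> list:
    """Functional view: conv_fn (hypothetical) maps the first c_p channels to c_p channels; the rest are kept."""
    c_p = int(len(channels) * ratio)
    return conv_fn(channels[:c_p]) + channels[c_p:]


if __name__ == "__main__":
    h = w = 80  # illustrative feature-map size (assumption)
    k, c = 3, 256
    print("FLOPs ratio (Conv / PConv):", conv_flops(h, w, k, c) / pconv_flops(h, w, k, c))
    print("Memory ratio (Conv / PConv):",
          round(conv_memory_access(h, w, k, c) / pconv_memory_access(h, w, k, c), 2))
```

With the r = 1/4 split used in FasterNet, the FLOPs ratio is exactly 16. The memory-access ratio is about 4.5 for this shape. FasterNet's figure of roughly 1/4 comes from dropping the small weight term.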

2.3. FA-Block Based on the Design of a Multi-Scale Feature Fusion and Attention Mechanism

2.4. Addition of a Tiny-Target Detection Layer

2.5. Improvements to the Up-Sampling Methodology
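The ablation tables list CARAFE (Wang et al., ICCV 2019) as the up-sampling module. CARAFE predicts a normalized k_up × k_up reassembly kernel for every output location. Each up-sampled value is then a weighted sum over the neighborhood of the corresponding source location.

The pure-Python sketch below covers only that reassembly step, for a single channel. The kernel logits are passed in directly rather than produced by CARAFE's channel compressor and content encoder. The function names `carafe_reassemble` and `constant_logits` and the toy inputs are illustrative assumptions, not the authors' implementation.

```python
import math
from typing import List

Matrix = List[List[float]]


def softmax(logits: List[float]) -> List[float]:
    """Numerically stable softmax; CARAFE normalizes each predicted kernel this way."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def carafe_reassemble(feat: Matrix, kernel_logits: List[List[List[float]]],
                      scale: int = 2, k_up: int = 5) -> Matrix:
    """Content-aware reassembly for one channel of an H x W feature map.

    kernel_logits[oi][oj] holds k_up * k_up logits for output location (oi, oj),
    i.e. its shape is (scale*H) x (scale*W) x (k_up*k_up).
    """
    h, w = len(feat), len(feat[0])
    r = k_up // 2
    out: Matrix = []
    for oi in range(scale * h):
        row = []
        for oj in range(scale * w):
            si, sj = oi // scale, oj // scale          # source location for this output pixel
            weights = softmax(kernel_logits[oi][oj])   # kernel weights sum to 1
            acc = 0.0
            for n in range(k_up):
                for m in range(k_up):
                    y, x = si + n - r, sj + m - r
                    if 0 <= y < h and 0 <= x < w:      # zero padding outside the map
                        acc += weights[n * k_up + m] * feat[y][x]
            row.append(acc)
        out.append(row)
    return out


def constant_logits(out_h: int, out_w: int, k_up: int, center_bias: float = 0.0):
    """Hypothetical helper: identical logits at every output location, optionally favoring the center."""
    center = (k_up // 2) * k_up + k_up // 2
    kernel = [center_bias if i == center else 0.0 for i in range(k_up * k_up)]
    return [[list(kernel) for _ in range(out_w)] for _ in range(out_h)]


if __name__ == "__main__":
    feat = [[1.0, 2.0], [3.0, 4.0]]
    # A kernel dominated by its center weight behaves like nearest-neighbor up-sampling.
    up = carafe_reassemble(feat, constant_logits(4, 4, k_up=3, center_bias=50.0), scale=2, k_up=3)
    for row in up:
        print([round(v, 3) for v in row])
```

With uniform logits (`center_bias=0.0`), every weight is 1/k_up², so each output becomes a zero-padded local mean. In the full operator, a small convolutional encoder predicts the logits from the input features, which is what makes the kernels content-aware. The same kernel is shared across all channels.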

3. Results

3.1. Dataset and Experimental Setup

3.2. Environmental Configuration and Evaluation Indicators
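The tables report precision (P), recall (R), mAP50 and mAP50-95. The standard definitions are given below for reference. They are the conventional detection formulas, not equations reproduced from this paper. How the integral under the precision-recall curve is evaluated numerically depends on the evaluation toolkit used.

$$
P = \frac{TP}{TP + FP}, \qquad R = \frac{TP}{TP + FN}
$$

$$
AP = \int_{0}^{1} P(R)\,\mathrm{d}R, \qquad \mathrm{mAP} = \frac{1}{N}\sum_{i=1}^{N} AP_i
$$

$$
\mathrm{mAP}_{50\text{-}95} = \frac{1}{10}\sum_{t \in \{0.50,\,0.55,\,\ldots,\,0.95\}} \mathrm{mAP}_{t}
$$

Here TP, FP and FN are counted at an IoU threshold t, N is the number of classes, and mAP50 is mAP evaluated at t = 0.50.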

3.3. Ablation Experiment

3.4. Comparison of the Results for Different Algorithms

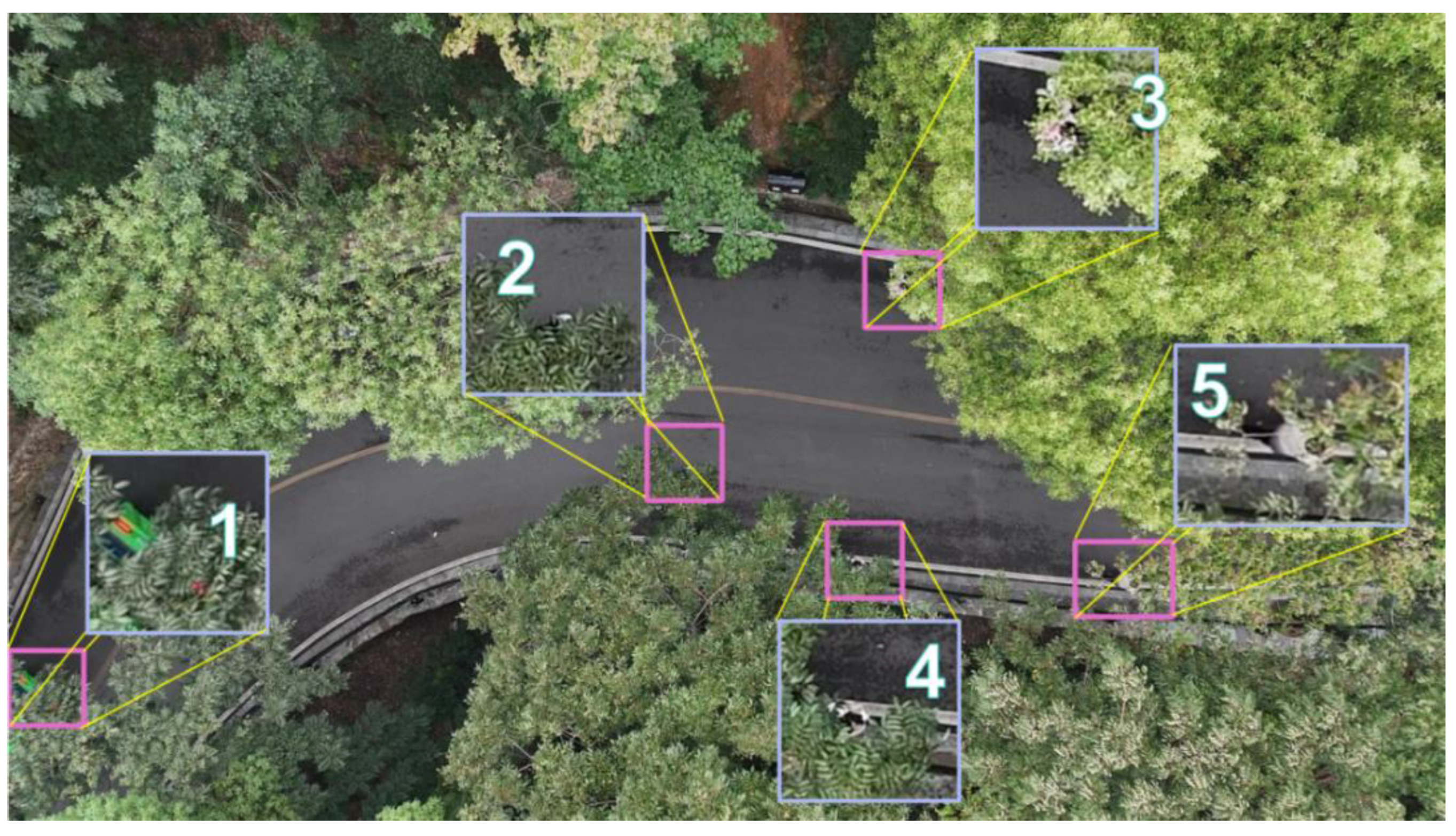

3.5. Visualization Results

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Model | Layers | Parameters | GFLOPs | FPS |
|---|---|---|---|---|
| Base | 225 | 3,157,200 | 8.9 | 11 |
| C2F-F | 231 | 2,826,960 | 8.3 | 8.72 |
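The table above implies that the C2F-F variant has 330,240 fewer parameters than the base model (about 10.5%) and 0.6 fewer GFLOPs (about 6.7%), while adding six layers. The snippet below checks that arithmetic using only values copied from the table. The FPS column is left out because its measurement setting is not stated here.

```python
def relative_change(old: float, new: float) -> float:
    """Percentage change from old to new (negative means a reduction)."""
    return (new - old) / old * 100.0


# Values copied from the table above (Base vs. C2F-F).
base = {"layers": 225, "params": 3_157_200, "gflops": 8.9}
c2f_f = {"layers": 231, "params": 2_826_960, "gflops": 8.3}

for key in ("layers", "params", "gflops"):
    delta = round(c2f_f[key] - base[key], 2)
    pct = relative_change(base[key], c2f_f[key])
    print(f"{key}: {delta:+} ({pct:+.2f} %)")
```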

| Network Stage | Feature Map | Dimensions (W × H × Channels) | Operation Description |
|---|---|---|---|
| Backbone | Input | 640 × 640 × 3 | Original input image |
| Backbone | F1 | 160 × 160 × 128 | Produced from the input by convolution |
| Backbone | F2 | 80 × 80 × 256 | Produced from F1 by convolution |
| Backbone | F3 | 40 × 40 × 512 | Produced from F2 by convolution |
| Neck | U1 | 80 × 80 × 512 | F3 up-sampled |
| Neck | C1 | 80 × 80 × 256 | U1 concatenated with F2 |
| Neck | U2 | 160 × 160 × 256 | C1 up-sampled |
| Head | T3 | 160 × 160 × 128 | U2 concatenated with F1; fed into the detection head |
| Head | T2 | 80 × 80 × 256 | T3 (down-sampled to 80 × 80) concatenated with C1; fed into the detection head |
| Head | T1 | 40 × 40 × 512 | T2 (down-sampled to 40 × 40) concatenated with F3; fed into the detection head |
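The dimensions in the table above can be traced with a few shape rules:

- Up-sampling doubles W and H.
- Channel-wise concatenation adds the channel counts and requires equal spatial size.
- A fusion block then sets the output channel count.

The sketch below tracks shapes only, with no weights. The helper names are hypothetical, and the post-fusion channel counts are taken from the table. Two further assumptions are ours: the stride-2 down-sampling step keeps the channel count, and each fusion step can be modeled as a single channel-mapping block.

```python
from typing import Tuple

Shape = Tuple[int, int, int]  # (W, H, channels)


def upsample(s: Shape, factor: int = 2) -> Shape:
    return (s[0] * factor, s[1] * factor, s[2])


def downsample(s: Shape, factor: int = 2) -> Shape:
    # Assumption: a stride-2 convolution that keeps the channel count.
    return (s[0] // factor, s[1] // factor, s[2])


def concat(a: Shape, b: Shape) -> Shape:
    # Channel-wise concatenation only works when W and H already match.
    if a[:2] != b[:2]:
        raise ValueError(f"spatial mismatch: {a} vs {b}")
    return (a[0], a[1], a[2] + b[2])


def fuse(s: Shape, out_channels: int) -> Shape:
    # Hypothetical fusion block (e.g. a C2F-style block) mapping to out_channels.
    return (s[0], s[1], out_channels)


F1, F2, F3 = (160, 160, 128), (80, 80, 256), (40, 40, 512)

U1 = upsample(F3)                           # 80 x 80 x 512
C1 = fuse(concat(U1, F2), 256)              # 768 channels before fusion
U2 = upsample(C1)                           # 160 x 160 x 256
T3 = fuse(concat(U2, F1), 128)              # highest-resolution output, 160 x 160
T2 = fuse(concat(downsample(T3), C1), 256)  # 80 x 80 output
T1 = fuse(concat(downsample(T2), F3), 512)  # 40 x 40 output

for name, shape in [("U1", U1), ("C1", C1), ("U2", U2), ("T3", T3), ("T2", T2), ("T1", T1)]:
    print(name, shape)
```

Calling `concat(T3, C1)` without the down-sampling step raises `ValueError`. This is why T3 has to be brought to 80 × 80 before it can be combined with C1.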

| Item | Configuration |
|---|---|
| Operating System | Ubuntu 20.04 |
| CPU | Intel Xeon Gold 6338 |
| GPU | NVIDIA GeForce RTX 4090 (24 GB) |
| IDE | PyCharm |
| Deep Learning Framework | PyTorch 2.4.1 + CUDA 12.1 |
| Programming Language | Python 3.8 |
| YOLO Version (Ultralytics) | v8.0.138 |

| C2F-F | C2F-FA | Tiny | CARAFE | mAP50/% | mAP50-95/% | P/% | R/% |
|---|---|---|---|---|---|---|---|
| — | — | — | — | 82.7 | 42.6 | 78.6 | 82.7 |
| √ | — | — | — | 82.8 | 43.0 | 78.7 | 80.6 |
| — | √ | — | — | 83.1 | 43.2 | 78.3 | 80.1 |
| — | — | √ | — | 84.5 | 45.0 | 79.6 | 81.4 |
| — | — | — | √ | 83.0 | 42.9 | 79.0 | 80.4 |
| √ | √ | √ | √ | 85.5 | 46.1 | 80.9 | 82.9 |

| C2F-F | C2F-FA | Tiny | CARAFE | mAP50/% | mAP50-95/% | P/% | R/% |
|---|---|---|---|---|---|---|---|
| — | — | — | — | 32.1 | 18.3 | 43.2 | 32.6 |
| √ | — | — | — | 32.3 | 18.7 | 42.7 | 32.3 |
| — | √ | — | — | 38.2 | 22.6 | 49.6 | 37.3 |
| — | — | √ | — | 35.2 | 20.6 | 44.5 | 35.5 |
| — | — | — | √ | 33.0 | 19.0 | 42.5 | 33.4 |
| √ | √ | √ | √ | 41.3 | 24.7 | 50.9 | 40.2 |
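The pattern in the two ablation tables is easier to see when each row is read as a gain over its baseline row (no module enabled):

- With all four modules, the model gains 2.8 mAP50 and 3.5 mAP50-95 in the first table, and 9.2 and 6.4 in the second.
- The strongest single module differs between the tables: the tiny-target layer in the first, C2F-FA in the second.

The snippet below recomputes those differences from the published values. The dictionary keys are only labels for the table rows.

```python
METRICS = ("mAP50", "mAP50-95", "P", "R")

# Rows copied from the two ablation tables (values in %).
ABLATION_1 = {
    "baseline": (82.7, 42.6, 78.6, 82.7),
    "C2F-F": (82.8, 43.0, 78.7, 80.6),
    "C2F-FA": (83.1, 43.2, 78.3, 80.1),
    "Tiny": (84.5, 45.0, 79.6, 81.4),
    "CARAFE": (83.0, 42.9, 79.0, 80.4),
    "all four": (85.5, 46.1, 80.9, 82.9),
}
ABLATION_2 = {
    "baseline": (32.1, 18.3, 43.2, 32.6),
    "C2F-F": (32.3, 18.7, 42.7, 32.3),
    "C2F-FA": (38.2, 22.6, 49.6, 37.3),
    "Tiny": (35.2, 20.6, 44.5, 35.5),
    "CARAFE": (33.0, 19.0, 42.5, 33.4),
    "all four": (41.3, 24.7, 50.9, 40.2),
}


def gains(table):
    """Percentage-point change of every row relative to the baseline row."""
    base = table["baseline"]
    return {
        name: tuple(round(v - b, 1) for v, b in zip(row, base))
        for name, row in table.items()
        if name != "baseline"
    }


def best_single_module(table, metric="mAP50"):
    """Single-module row with the largest gain on the chosen metric."""
    i = METRICS.index(metric)
    singles = {k: v for k, v in gains(table).items() if k != "all four"}
    return max(singles, key=lambda k: singles[k][i])


for label, table in (("first table", ABLATION_1), ("second table", ABLATION_2)):
    print(label, gains(table)["all four"], "best single:", best_single_module(table))
```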

| Model | mAP50/% | mAP50-95/% | P/% | R/% |
|---|---|---|---|---|
| YOLOv5n | 77.1 | 34.5 | 75.7 | 75.0 |
| YOLOv5s | 82.1 | 40.0 | 78.9 | 79.1 |
| YOLOv7n | 72.8 | 32.1 | 71.3 | 73.2 |
| YOLOv8n | 82.7 | 42.6 | 78.6 | 82.7 |
| YOLOv11n | 82.3 | 42.1 | 77.7 | 80.1 |
| Ours | 85.5 | 46.1 | 80.9 | 82.9 |

| Model | mAP50/% | mAP50-95/% | P/% | R/% |
|---|---|---|---|---|
| YOLOv5n | 25.3 | 13.0 | 35.5 | 27.7 |
| YOLOv5s | 32.9 | 18.2 | 44.5 | 33.2 |
| YOLOv7n | 37.9 | 19.9 | 48.9 | 39.3 |
| YOLOv8n | 32.1 | 18.3 | 43.2 | 32.6 |
| YOLOv11n | 34.2 | 19.9 | 45.1 | 33.8 |
| Ours | 41.3 | 24.7 | 50.9 | 40.2 |
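The two comparison tables can be summarized as the margin of the proposed model over the strongest competing model on each metric.

- **First table:** the strongest competitor is YOLOv8n for mAP50, mAP50-95 and R, and YOLOv5s for P. The YOLOv8n row matches the ablation baseline row.
- **Second table:** the strongest competitor is YOLOv7n on every metric, tied with YOLOv11n on mAP50-95.

The snippet below computes these margins from the published numbers only.

```python
METRICS = ("mAP50", "mAP50-95", "P", "R")

# Rows copied from the two comparison tables (values in %).
COMPARISON_1 = {
    "YOLOv5n": (77.1, 34.5, 75.7, 75.0),
    "YOLOv5s": (82.1, 40.0, 78.9, 79.1),
    "YOLOv7n": (72.8, 32.1, 71.3, 73.2),
    "YOLOv8n": (82.7, 42.6, 78.6, 82.7),
    "YOLOv11n": (82.3, 42.1, 77.7, 80.1),
    "Ours": (85.5, 46.1, 80.9, 82.9),
}
COMPARISON_2 = {
    "YOLOv5n": (25.3, 13.0, 35.5, 27.7),
    "YOLOv5s": (32.9, 18.2, 44.5, 33.2),
    "YOLOv7n": (37.9, 19.9, 48.9, 39.3),
    "YOLOv8n": (32.1, 18.3, 43.2, 32.6),
    "YOLOv11n": (34.2, 19.9, 45.1, 33.8),
    "Ours": (41.3, 24.7, 50.9, 40.2),
}


def margin_over_best(table, ours="Ours"):
    """For each metric: (strongest competitor, our value minus its value)."""
    result = {}
    for i, metric in enumerate(METRICS):
        rivals = {name: row[i] for name, row in table.items() if name != ours}
        best = max(rivals, key=rivals.get)  # on a tie, the first listed model is kept
        result[metric] = (best, round(table[ours][i] - rivals[best], 1))
    return result


for label, table in (("first table", COMPARISON_1), ("second table", COMPARISON_2)):
    print(label, margin_over_best(table))
```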

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Qin, F.; Tang, W.; Tian, H.; Chen, Y. Unmanned Airborne Target Detection Method with Multi-Branch Convolution and Attention-Improved C2F Module. Sensors 2025, 25, 6023. https://doi.org/10.3390/s25196023
