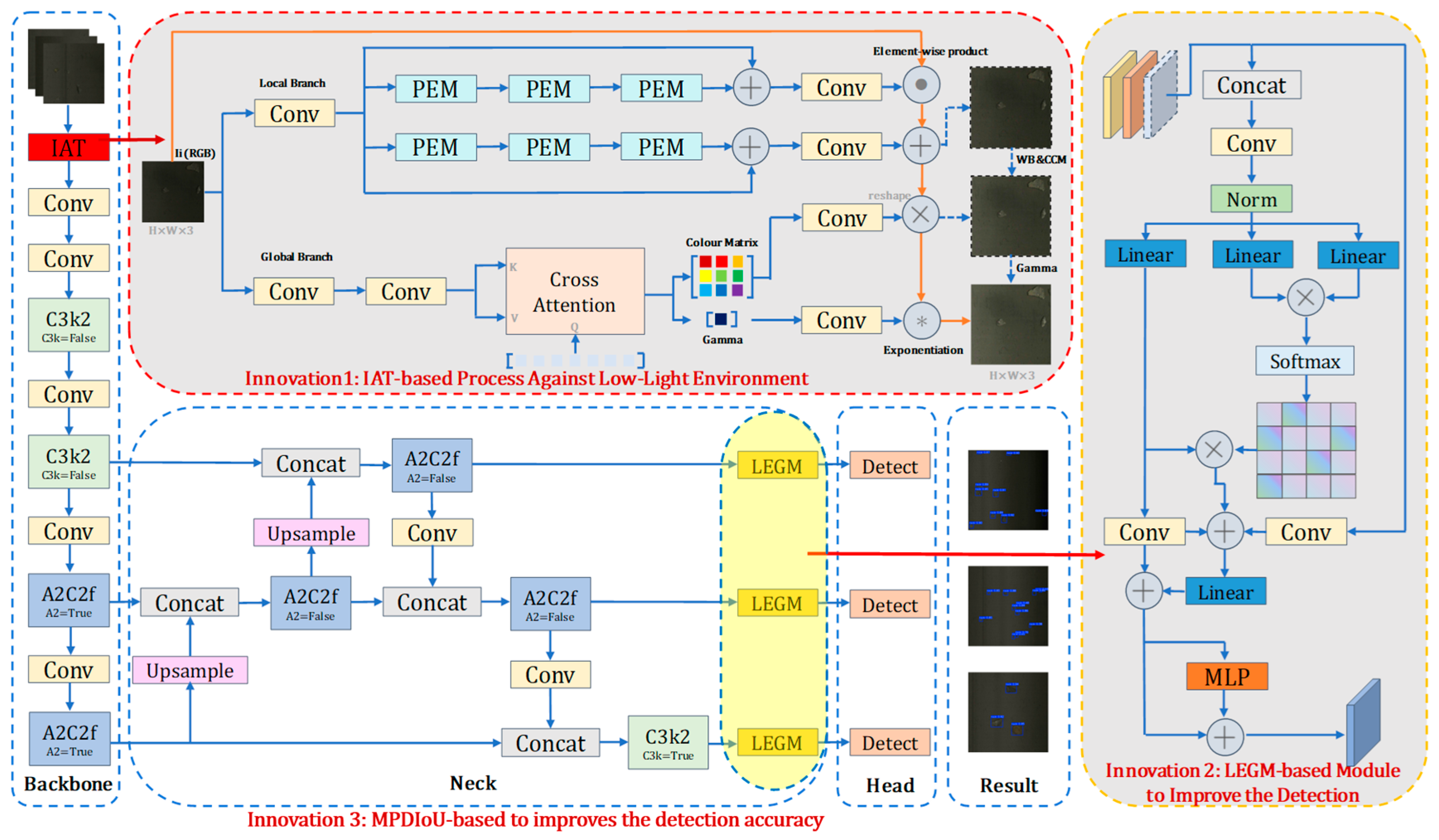

A Sensor Based Waste Rock Detection Method in Copper Mining Under Low Light Environment

Abstract

1. Introduction

- (1)

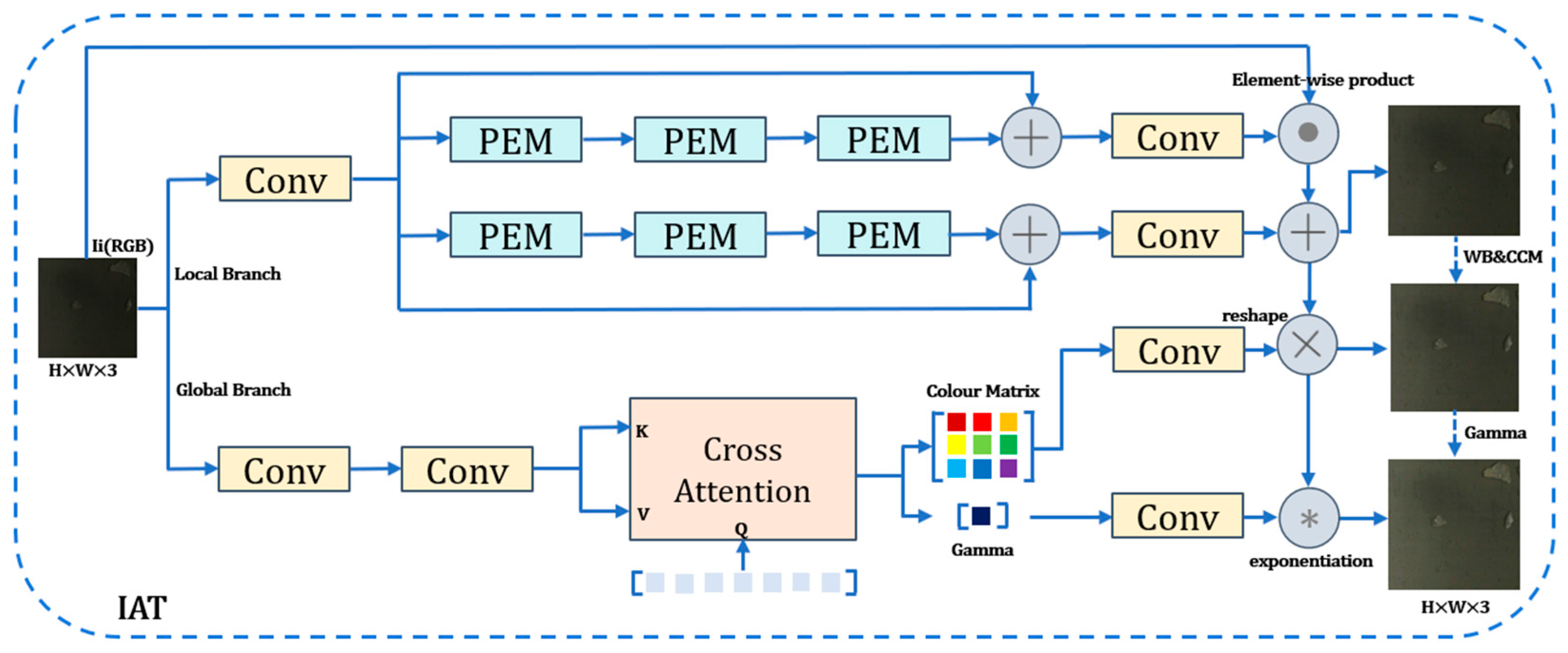

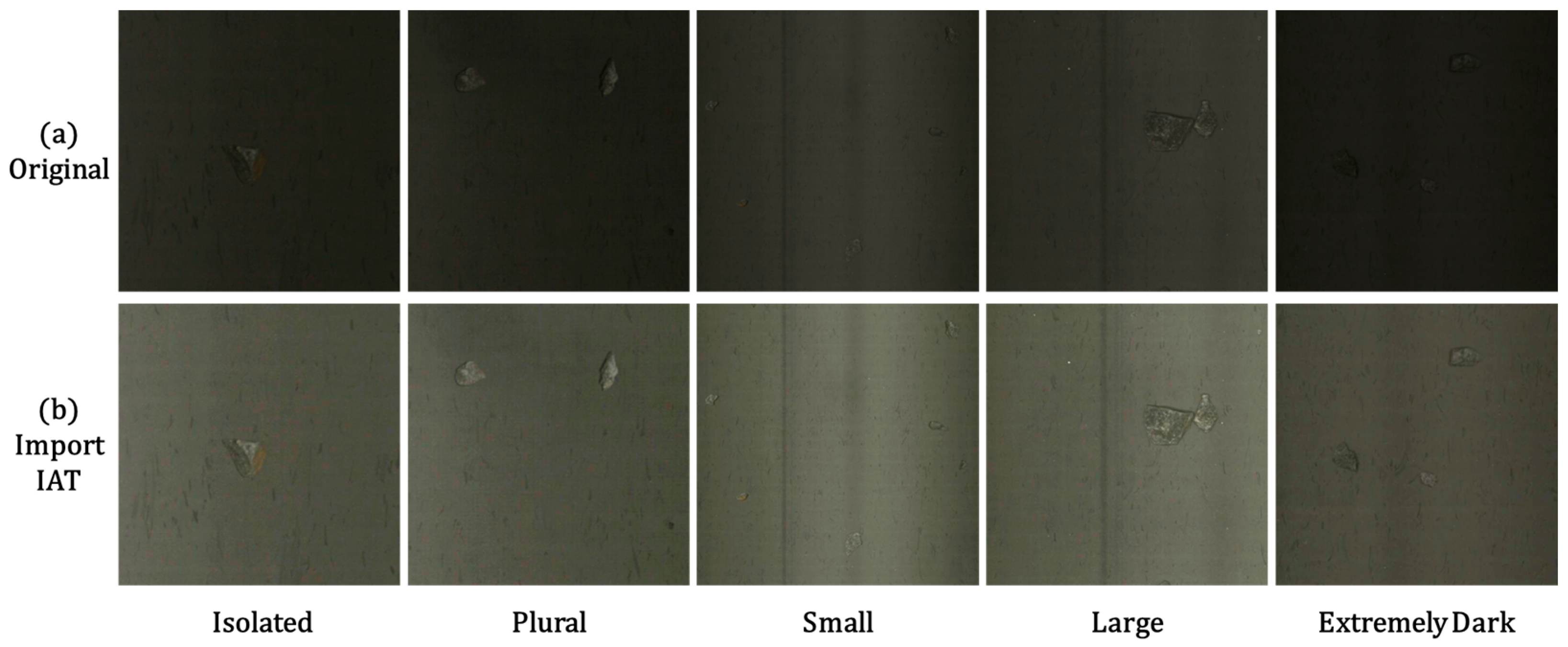

- To address image quality degradation caused by low-light conditions, an Illumination-Adaptive Transformer module is proposed and integrated as a preprocessing layer at the network front-end to enhance the brightness of subsequent input images.

- (2)

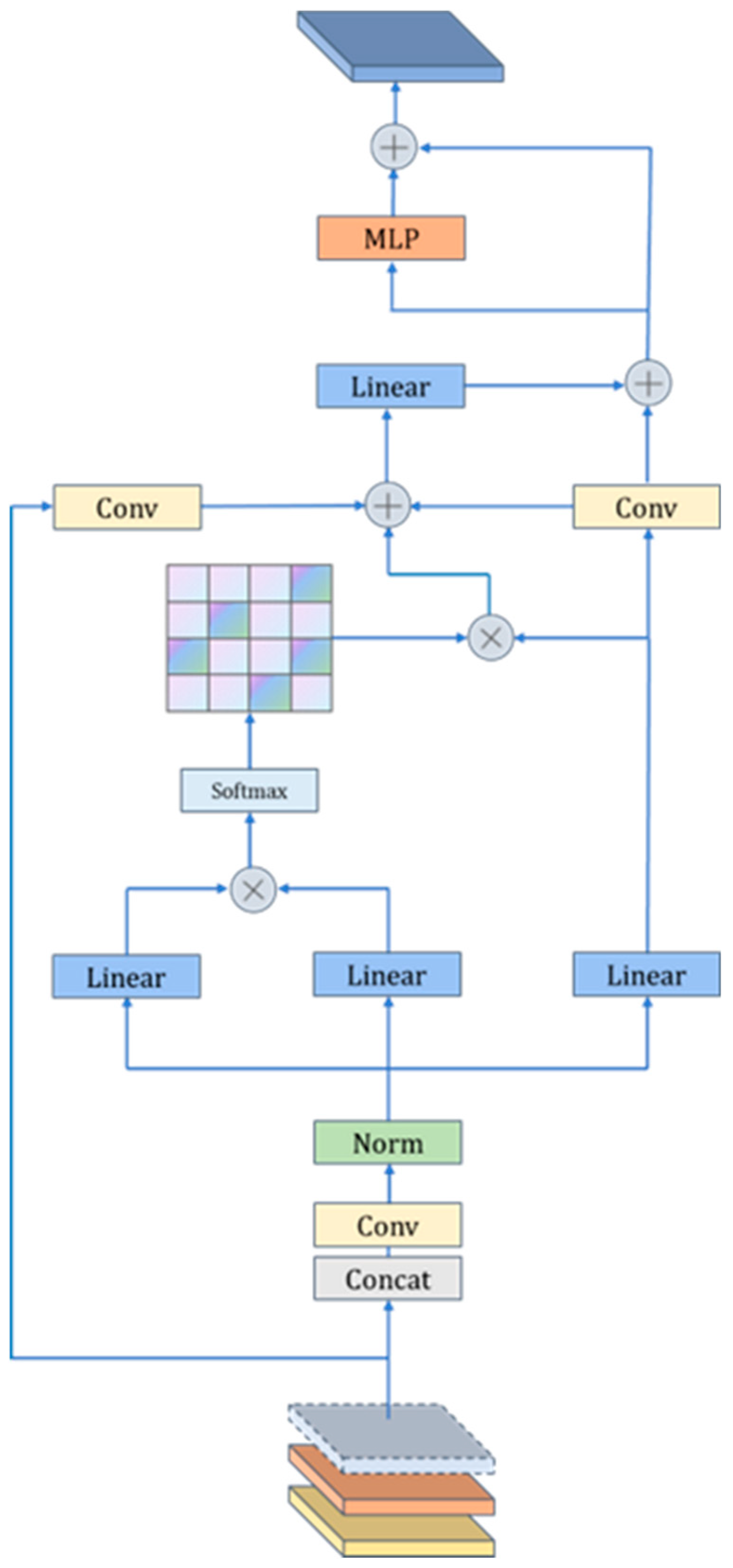

- To improve the detection accuracy of copper ore waste rocks, a method is introduced that integrates local feature embedding into global feature extraction modules following the A2C2f and C3k2 modules.

- (3)

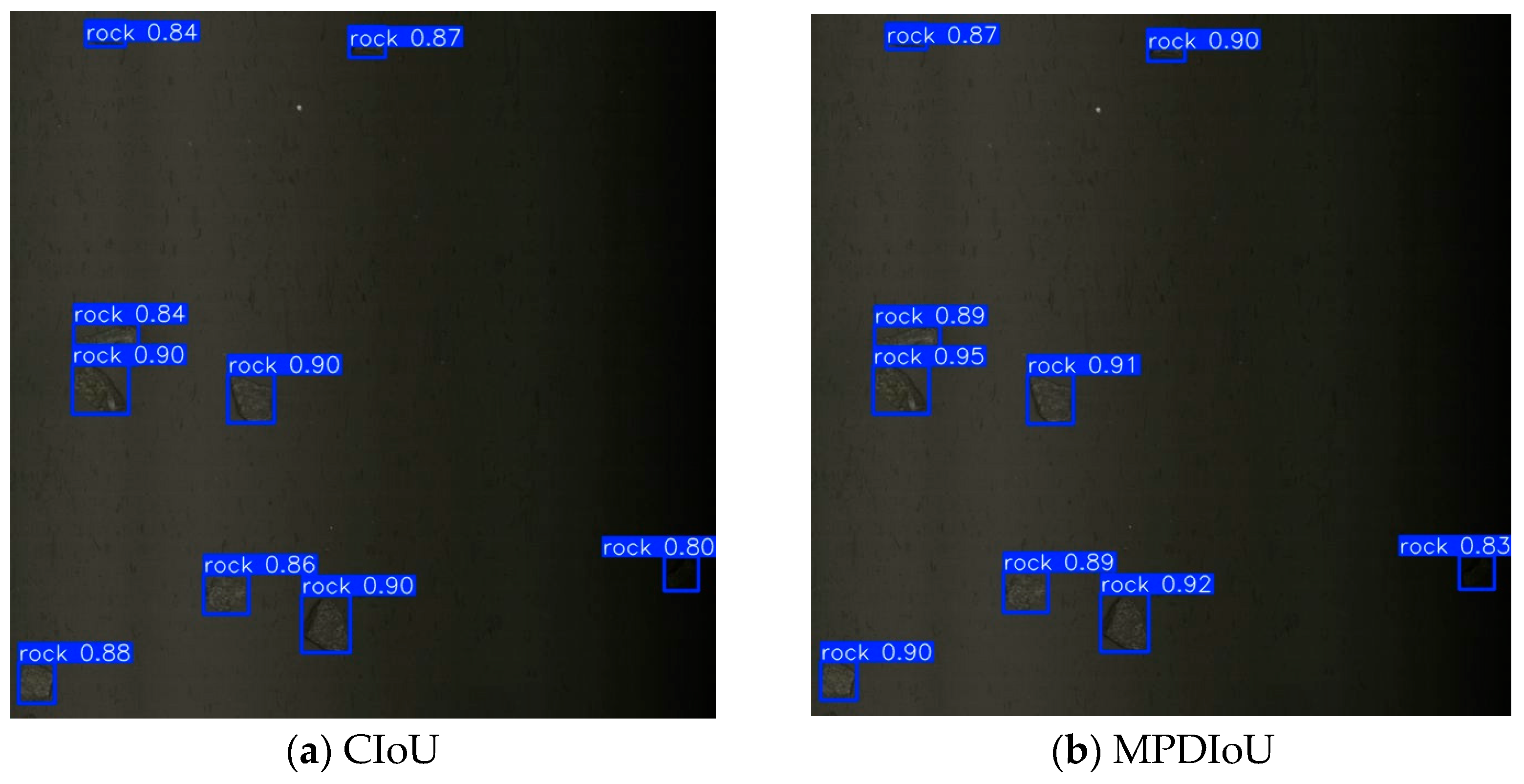

- To further enhance the detection accuracy of copper ore waste rocks, the original loss function has been refined, optimizing the network.

2. Materials and Methods

2.1. Illumination Adaptive Transformer Module

2.2. LEGM Attention Mechanism

2.3. MPDIoU Loss Function
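As a minimal sketch of the idea behind this loss, the snippet below follows the definition given in the cited MPDIoU paper (Ma and Xu): the IoU is penalized by the squared distances between the top-left corners and between the bottom-right corners of the predicted and ground-truth boxes, each normalized by the squared diagonal of the input image. The function name `mpdiou_loss`, the `(x1, y1, x2, y2)` box format, and the pure-Python scalar form are assumptions made for illustration; they are not taken from the authors' implementation.

```python
def mpdiou_loss(pred, target, img_w, img_h):
    """Return 1 - MPDIoU for two boxes given as (x1, y1, x2, y2).

    Illustrative sketch only: img_w and img_h are the width and height
    of the input image, used to normalize the corner distances.
    """
    px1, py1, px2, py2 = pred
    tx1, ty1, tx2, ty2 = target

    # Intersection rectangle (zero if the boxes do not overlap).
    inter_w = max(0.0, min(px2, tx2) - max(px1, tx1))
    inter_h = max(0.0, min(py2, ty2) - max(py1, ty1))
    inter = inter_w * inter_h

    # Union area and plain IoU.
    area_p = (px2 - px1) * (py2 - py1)
    area_t = (tx2 - tx1) * (ty2 - ty1)
    union = area_p + area_t - inter
    iou = inter / union if union > 0 else 0.0

    # Squared distances between matching corners.
    d1_sq = (px1 - tx1) ** 2 + (py1 - ty1) ** 2  # top-left corners
    d2_sq = (px2 - tx2) ** 2 + (py2 - ty2) ** 2  # bottom-right corners

    # Normalize by the squared image diagonal.
    norm = img_w ** 2 + img_h ** 2
    mpdiou = iou - d1_sq / norm - d2_sq / norm
    return 1.0 - mpdiou


if __name__ == "__main__":
    # Perfect match gives zero loss; a shifted box is penalized.
    print(mpdiou_loss((10, 10, 50, 50), (10, 10, 50, 50), 640, 640))
    print(mpdiou_loss((10, 10, 50, 50), (20, 20, 60, 60), 640, 640))
```

Because both penalty terms only need the four corner coordinates, the loss stays informative even when the two boxes do not overlap and the IoU term is zero.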

2.4. Evaluation Metrics

- (1) Precision (P) is the proportion of true positive samples among all samples the model predicts as positive. A higher precision indicates a lower probability of false detections. With TP denoting the number of true positives and FP the number of false positives, it is calculated as:

$$P = \frac{TP}{TP + FP}$$

- (2) Recall (R) is the proportion of actual positive samples that the model correctly predicts as positive. A higher recall indicates a lower probability of missed detections. With TP denoting the number of true positives and FN the number of false negatives, it is calculated as:

$$R = \frac{TP}{TP + FN}$$

- (3) Average Precision (AP) is the area under the Precision-Recall curve. A higher AP indicates a better balance of precision and recall and better overall performance. Mean Average Precision (mAP) is the average of the AP values across all N categories. AP and mAP are calculated, respectively, as:

$$AP = \int_{0}^{1} P(R)\,dR, \qquad mAP = \frac{1}{N}\sum_{i=1}^{N} AP_i$$
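The three metrics above can be sketched in a few lines of Python. This is an illustrative sketch, not the evaluation code used in the experiments: the function names are hypothetical, and the area under the Precision-Recall curve is approximated with all-point interpolation (a common convention in detection benchmarks), which is an assumption because the text does not state which interpolation is used.

```python
def precision(tp, fp):
    """Share of predicted positives that are correct."""
    return tp / (tp + fp) if (tp + fp) else 0.0


def recall(tp, fn):
    """Share of actual positives that are found."""
    return tp / (tp + fn) if (tp + fn) else 0.0


def average_precision(recalls, precisions):
    """Area under the P-R curve using all-point interpolation.

    `recalls` must be sorted in ascending order, with `precisions`
    holding the precision measured at each recall level.
    """
    r = [0.0] + list(recalls) + [1.0]
    p = [0.0] + list(precisions) + [0.0]
    # Make precision monotonically non-increasing from right to left.
    for i in range(len(p) - 2, -1, -1):
        p[i] = max(p[i], p[i + 1])
    # Sum the rectangles between consecutive recall levels.
    return sum((r[i + 1] - r[i]) * p[i + 1] for i in range(len(r) - 1))


def mean_average_precision(ap_values):
    """Mean of the per-category AP values."""
    return sum(ap_values) / len(ap_values)


if __name__ == "__main__":
    print(precision(tp=90, fp=10))                       # 0.9
    print(recall(tp=90, fn=30))                          # 0.75
    print(average_precision([0.5, 1.0], [1.0, 0.5]))     # 0.75
    print(mean_average_precision([0.75, 0.25]))          # 0.5
```

mAP@0.5 applies this computation with detections counted as true positives at an IoU threshold of 0.5, while mAP@0.5:0.95 averages the result over IoU thresholds from 0.5 to 0.95.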

3. Results

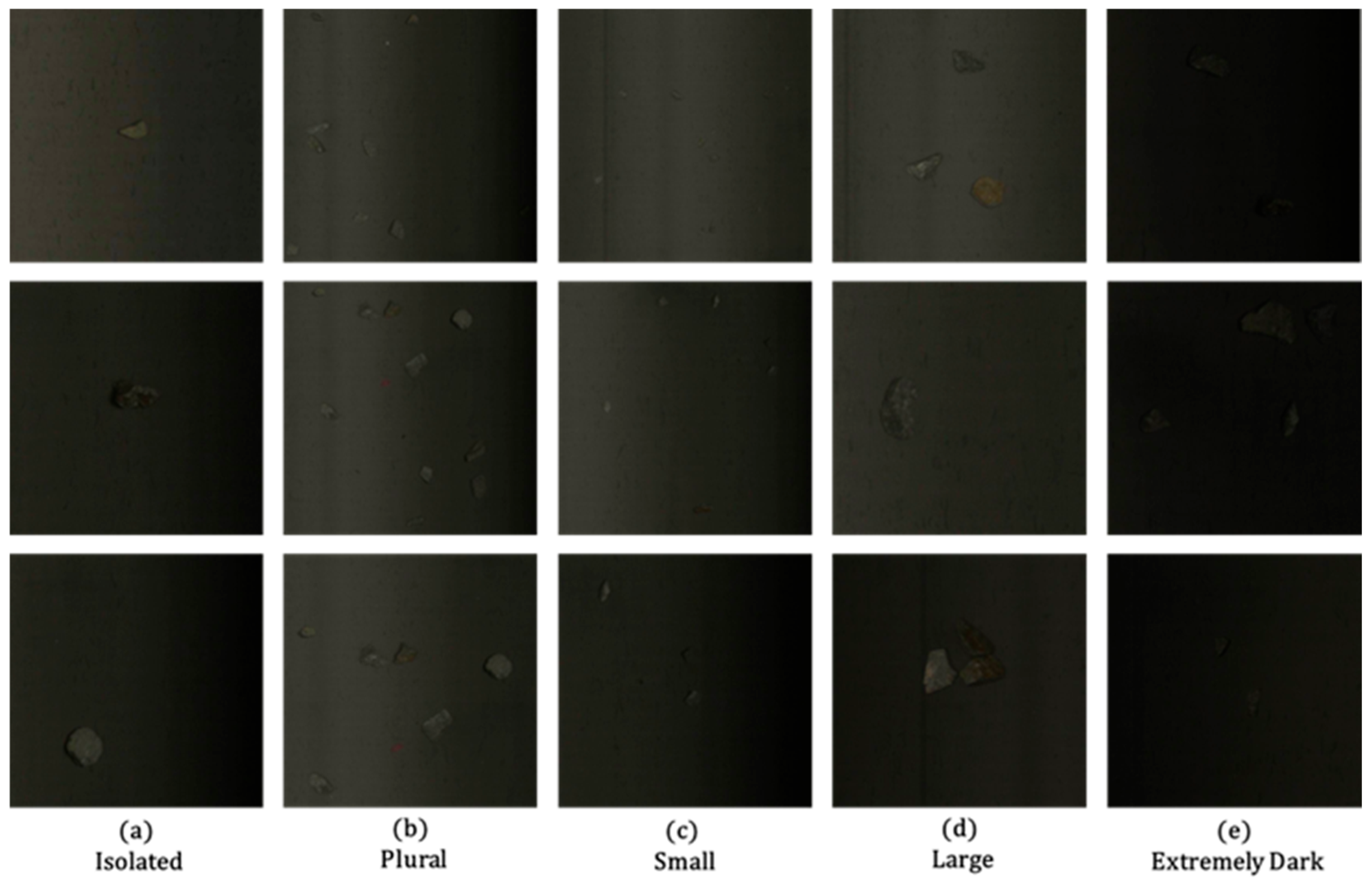

3.1. Experimental Dataset

3.2. Experimental Environment and Parameters

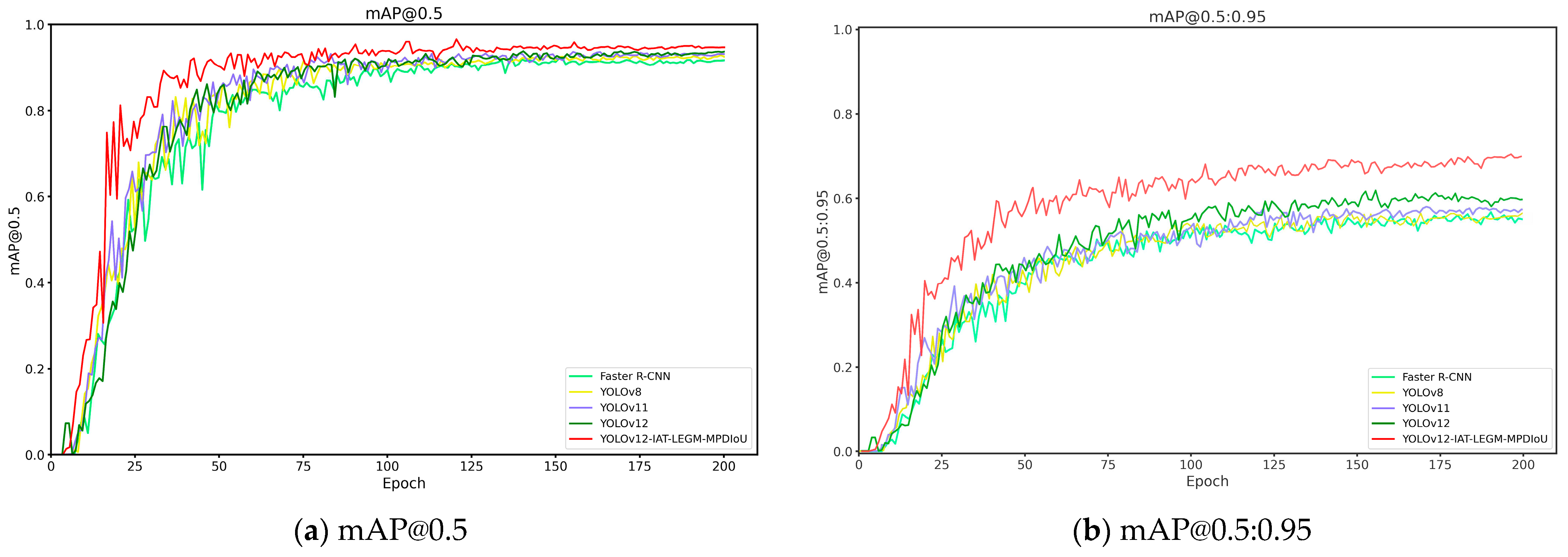

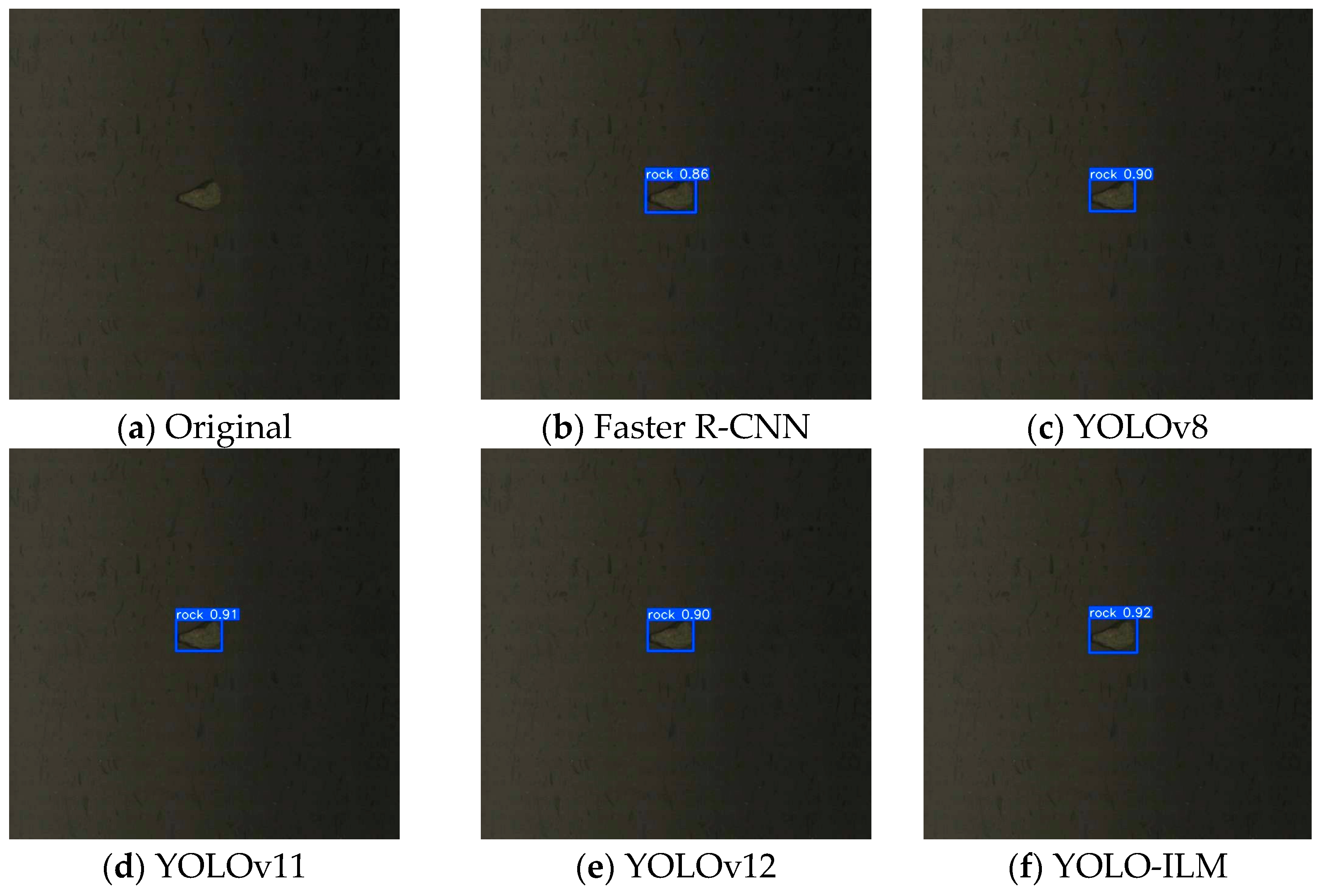

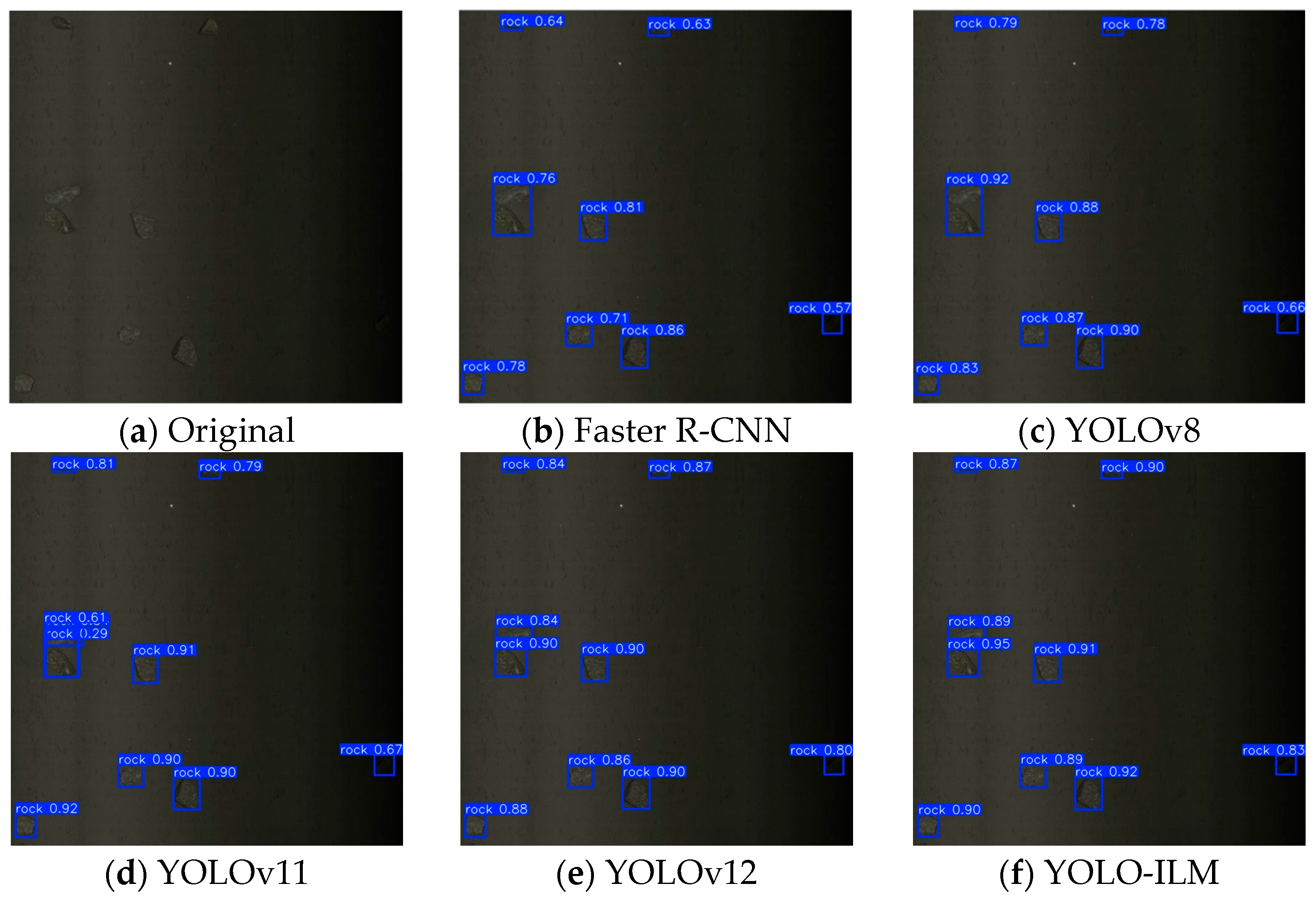

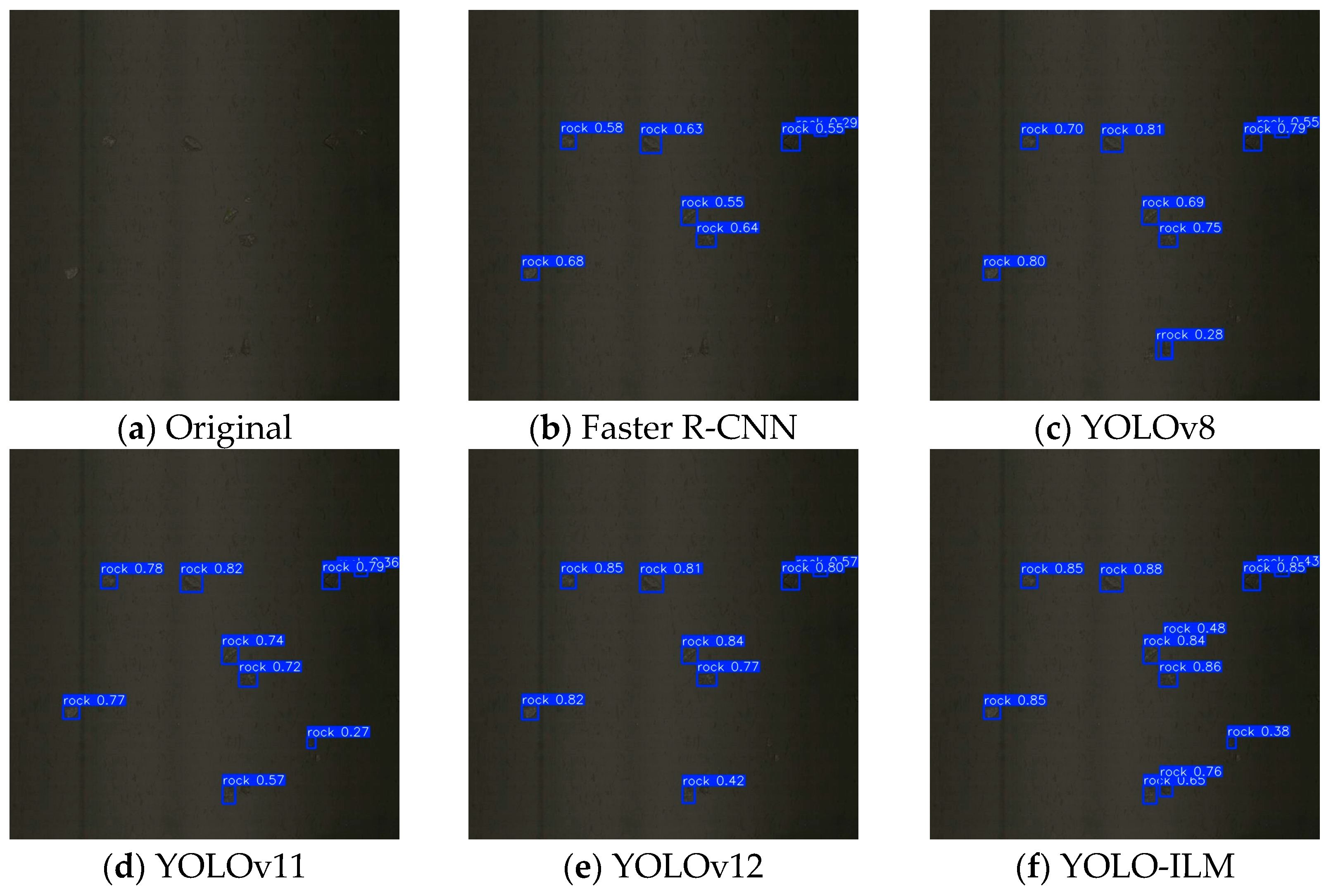

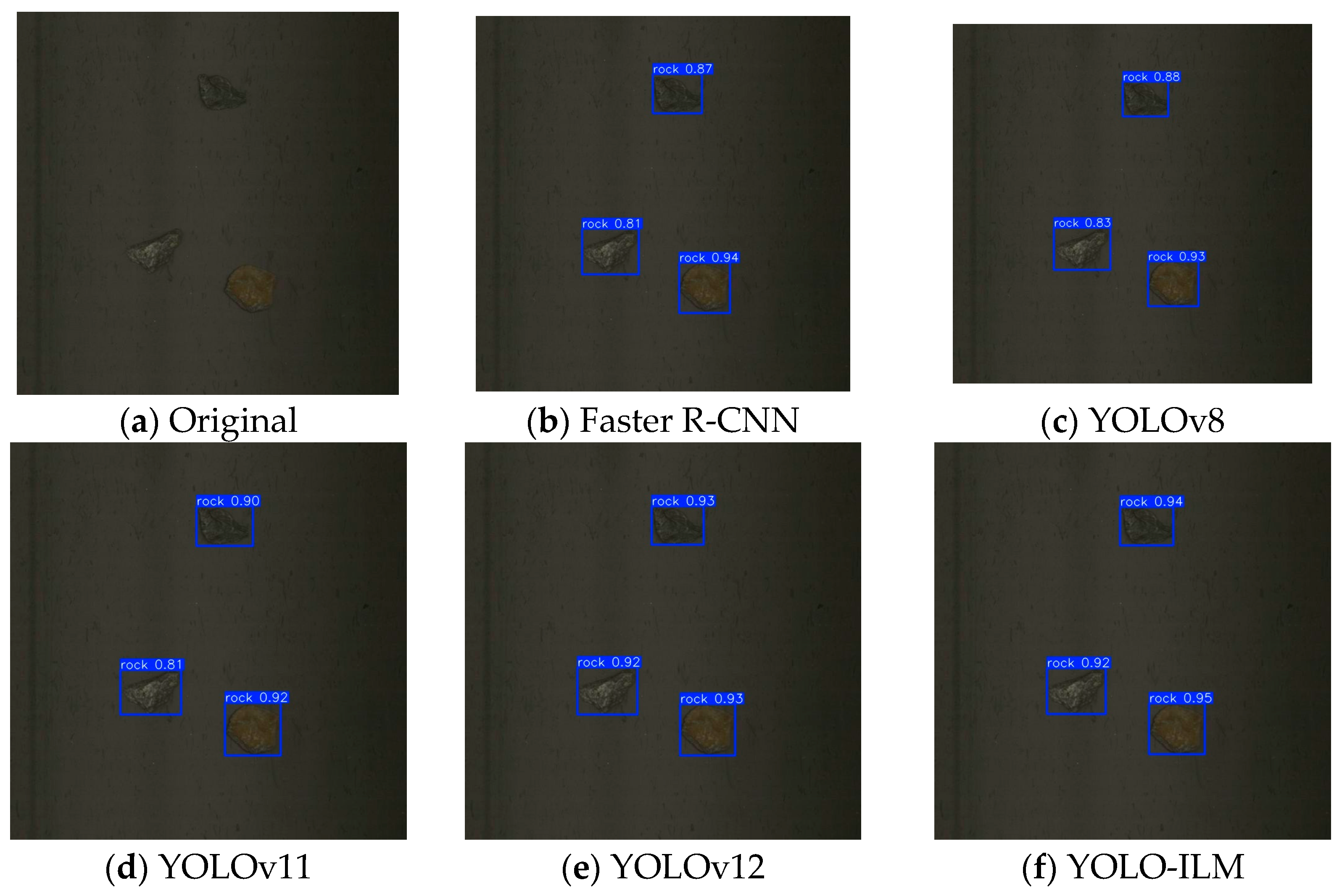

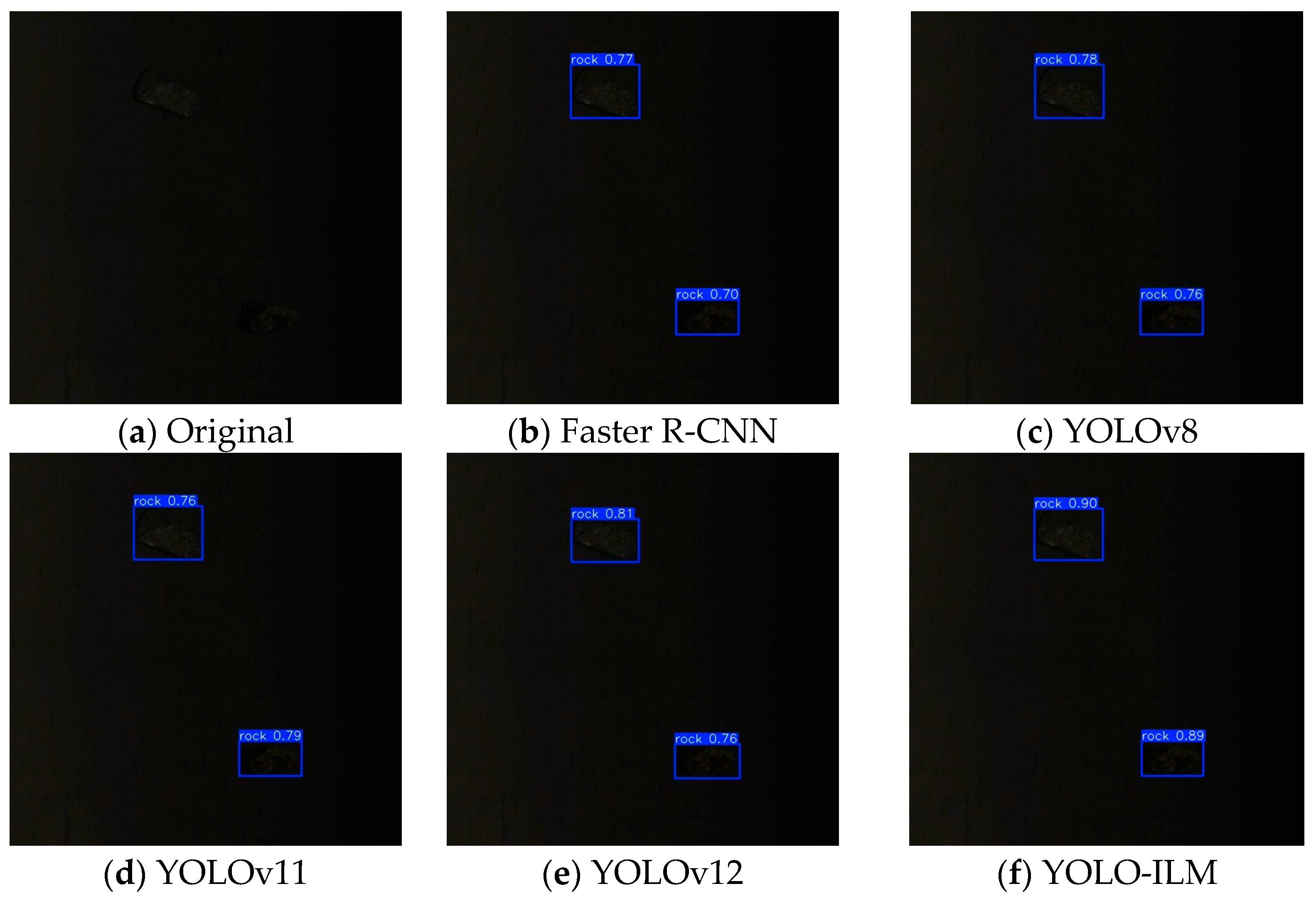

3.3. Comparative Experiments

3.4. Ablation Experiments

4. Conclusions

- (1) Integrating the Illumination Adaptive Transformer (IAT) module into the YOLOv12 network to enhance model adaptability to low-light conditions;

- (2) Introducing the Locally Embedded Global-feature attention mechanism (LEGM) to improve target feature capture under complex environmental interference;

- (3) Introducing MPDIoU, an efficient and accurate bounding box regression loss function, to reduce computational load while improving localization accuracy.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Moreau, K.; Laamanen, C.; Bose, R.; Shang, H.; Scott, J.A. Environmental impact improvements due to introducing automation into underground copper mines. Int. J. Min. Sci. Technol. 2021, 31, 1159–1167.
2. Tang, J.; Yang, H.; Chen, H.; Li, F.; Wang, C.; Liu, Q.; Zhang, Q.; Zhang, R.; Yu, L. Potential and future direction for copper resource exploration in China. Green Smart Min. Eng. 2024, 1, 127–131.
3. Wang, Y.; Chen, Q.; Dai, B.; Wang, D. Guidance and review: Advancing mining technology for enhanced production and supply of strategic minerals in China. Green Smart Min. Eng. 2024, 1, 2–11.
4. Liang, G.; Liang, Y.; Niu, D.; Shaheen, M. Balancing sustainability and innovation: The role of artificial intelligence in shaping mining practices for sustainable mining development. Resour. Policy 2024, 90, 104793.
5. Shiryayeva, O.; Suleimenov, B.; Kulakova, Y. Sustainable Mineral Processing Technologies Using Hybrid Intelligent Algorithms. Technologies 2025, 13, 269.
6. Quan, Z.; Sun, J. A Feature-Enhanced Small Object Detection Algorithm Based on Attention Mechanism. Sensors 2025, 25, 589.
7. Korie, J.; Gabriela, C.-A.; Ezeonyema, C.; Oshim, F. Machine Learning Innovations for Improving Mineral Recovery and Processing: A Comprehensive Review. Int. J. Econ. Environ. Geol. 2024, 15, 26–31.
8. Corrigan, C.C.; Ikonnikova, S.A. A review of the use of AI in the mining industry: Insights and ethical considerations for multi-objective optimization. Extr. Ind. Soc. 2024, 17, 101440.
9. Tao, M.; Lv, S.; Feng, S. Study on the Evaluation of the Development Efficiency of Smart Mine Construction and the Influencing Factors Based on the US-SBM Model. Sustainability 2023, 15, 5183.
10. Shen, X.; Li, L.; Ma, Y.; Xu, S.; Liu, J.; Yang, Z.; Shi, Y. VLCIM: A vision-language cyclic interaction model for industrial defect detection. IEEE Trans. Instrum. Meas. 2025, 74, 1–13.
11. Liu, Y.; Wang, X.; Zhang, Z.; Deng, F. LOSN: Lightweight ore sorting networks for edge device environment. Eng. Appl. Artif. Intell. 2023, 123, 106191.
12. Shatwell, D.G.; Murray, V.; Barton, A. Real-time ore sorting using color and texture analysis. Int. J. Min. Sci. Technol. 2023, 33, 659–674.
13. Tsangaratos, P.; Ilia, I.; Spanoudakis, N.; Karageorgiou, G.; Perraki, M. Machine learning approaches for real-time mineral classification and educational applications. Appl. Sci. 2025, 15, 1871.
14. Hui, Y.; Wang, J.; Li, B. WSA-YOLO: Weak-supervised and Adaptive object detection in the low-light environment for YOLOV7. IEEE Trans. Instrum. Meas. 2024, 73, 1–12.
15. Zhou, Y.; Zhang, T.; Li, Z.; Qiu, J. Improved Space Object Detection Based on YOLO11. Aerospace 2025, 12, 568.
16. Zhang, Q.; Guo, W.; Lin, M. LLD-YOLO: A multi-module network for robust vehicle detection in low-light conditions. Signal Image Video Process. 2025, 19, 271.
17. Zhang, M.; Yue, K.; Li, B.; Guo, J.; Li, Y.; Gao, X. Single-Frame Infrared Small Target Detection via Gaussian Curvature Inspired Network. IEEE Trans. Geosci. Remote Sens. 2024, 62, 1–13.
18. Wang, S.; Jiang, H.; Li, Z.; Yang, J.; Ma, X.; Chen, J.; Tang, X. PHSI-RTDETR: A Lightweight Infrared Small Target Detection Algorithm Based on UAV Aerial Photography. Drones 2024, 8, 240.
19. Wang, Z.; Su, Y.; Kang, F.; Wang, L.; Lin, Y.; Wu, Q.; Li, H.; Cai, Z. PC-YOLO11s: A Lightweight and Effective Feature Extraction Method for Small Target Image Detection. Sensors 2025, 25, 348.
20. Fang, W.; Chen, W. TBF-YOLOv8n: A Lightweight Tea Bud Detection Model Based on YOLOv8n Improvements. Sensors 2025, 25, 547.
21. Peng, D.; Ding, W.; Zhen, T. A novel low light object detection method based on the YOLOv5 fusion feature enhancement. Sci. Rep. 2024, 14, 4486.
22. Das, P.P.; Ganguly, T.; Chaudhuri, R.; Deb, S. YOLO-D: A Domain Adaptive approach towards low light object detection. Procedia Comput. Sci. 2025, 258, 3042–3051.
23. Liu, Q.; Huang, W.; Hu, T.; Duan, X.; Yu, J.; Huang, J.; Wei, J. Efficient network architecture for target detection in challenging low-light environments. Eng. Appl. Artif. Intell. 2025, 142, 109967.
24. Han, Z.; Yue, Z.; Liu, L. 3L-YOLO: A Lightweight Low-Light Object Detection Algorithm. Appl. Sci. 2025, 15, 90.
25. Ye, S.; Huang, W.; Liu, W.; Chen, L.; Wang, X.; Zhong, X. YES: You should Examine Suspect cues for low-light object detection. Comput. Vis. Image Underst. 2025, 251, 104271.
26. Qi, Z.; Wang, J.; Yang, G.; Wang, Y. Lightweight YOLOv8-Based Model for Weed Detection in Dryland Spring Wheat Fields. Sustainability 2025, 17, 6150.
27. Zhao, Y.; Li, W.; Yang, R.; Liu, Y. Real-time efficient image enhancement in low-light condition with novel supervised deep learning pipeline. Digit. Signal Process. 2025, 165, 105342.
28. Qiu, Z.; Huang, X.; Sun, Z.; Li, S.; Wang, J. GS-YOLO-Seg: A Lightweight Instance Segmentation Method for Low-Grade Graphite Ore Sorting Based on Improved YOLO11-Seg. Sustainability 2025, 17, 5663.
29. Li, J.; Meng, X.; Wang, S.; Lu, Z.; Yu, H.; Qu, Z.; Wang, J. Enhanced Parallel Convolution Architecture YOLO Photovoltaic Panel Detection Model for Remote Sensing Images. Sustainability 2025, 17, 6476.
30. Tian, Y.; Ye, Q.; Doermann, D. YOLOv12: Attention-centric real-time object detectors. arXiv 2025, arXiv:2502.12524.
31. Cui, Z.; Li, K.; Gu, L.; Su, S.; Gao, P.; Jiang, Z.; Qiao, Y.; Harada, T. You only need 90k parameters to adapt light: A light weight transformer for image enhancement and exposure correction. arXiv 2022, arXiv:2205.14871.
32. Zhang, Y.; Zhou, S.; Li, H. Depth information assisted collaborative mutual promotion network for single image dehazing. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 16–22 June 2024; pp. 2846–2855.
33. Ma, S.; Xu, Y. MPDIoU: A loss for efficient and accurate bounding box regression. arXiv 2023, arXiv:2307.07662.
34. Li, Y.; Fu, C.; Liu, T.; Hu, Z.; Song, G. Two-stage remaining useful life prediction method across operating conditions based on small samples and novel health indicators. Reliab. Eng. Syst. Saf. 2025, 264, 111290.
35. Guo, C.; Li, C.; Guo, J.; Loy, C.C.; Hou, J.; Kwong, S.; Cong, R. Zero-reference deep curve estimation for low-light image enhancement. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 14–19 June 2020; pp. 1780–1789.
36. Wei, C.; Wang, W.; Yang, W.; Liu, J. Deep retinex decomposition for low-light enhancement. arXiv 2018, arXiv:1808.04560.
37. Jha, R.; Lenka, A.; Ramanagopal, M.; Sankaranarayanan, A.; Mitra, K. RT-X Net: RGB-Thermal cross attention network for Low-Light Image Enhancement. arXiv 2025, arXiv:2505.24705.
38. Zheng, Z.; Wang, P.; Ren, D.; Liu, W.; Ye, R.; Hu, Q.; Zuo, W. Enhancing Geometric Factors in Model Learning and Inference for Object Detection and Instance Segmentation. IEEE Trans. Cybern. 2022, 52, 8574–8586.
39. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1137–1149.
40. Varghese, R.; Sambath, M. YOLOv8: A novel object detection algorithm with enhanced performance and robustness. In Proceedings of the 2024 International Conference on Advances in Data Engineering and Intelligent Computing Systems (ADICS), Chennai, India, 18–19 April 2024; pp. 1–6.
41. Khanam, R.; Hussain, M. YOLOv11: An overview of the key architectural enhancements. arXiv 2024, arXiv:2410.17725.

| Method | Test 1 PSNR | Test 1 SSIM | Test 2 PSNR | Test 2 SSIM | Test 3 PSNR | Test 3 SSIM | Test 4 PSNR | Test 4 SSIM | Test 5 PSNR | Test 5 SSIM |
|---|---|---|---|---|---|---|---|---|---|---|
| Zero-DCE [35] | 9.207 | 0.515 | 9.429 | 0.528 | 9.969 | 0.602 | 9.372 | 0.573 | 10.449 | 0.225 |
| RetinexNet [36] | 6.705 | 0.324 | 6.714 | 0.450 | 7.009 | 0.311 | 6.805 | 0.454 | 6.584 | 0.197 |
| RT-X Net [37] | 10.235 | 0.415 | 10.521 | 0.448 | 10.874 | 0.432 | 10.236 | 0.529 | 11.145 | 0.375 |
| IAT | 15.163 | 0.679 | 15.485 | 0.633 | 15.487 | 0.705 | 15.407 | 0.686 | 16.021 | 0.669 |
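For readers unfamiliar with the PSNR column, the sketch below shows the standard definition, PSNR = 10 log10(MAX² / MSE), where MSE is the mean squared error between the enhanced image and its reference. It is a hedged illustration only: the function name `psnr`, the flat-list image representation, and the 8-bit peak value of 255 are assumptions, and the sketch does not reproduce the authors' evaluation pipeline (SSIM is omitted because it needs windowed local statistics).

```python
import math


def psnr(reference, enhanced, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two equally sized images.

    Both images are flat sequences of pixel intensities (illustrative
    representation; max_value=255 assumes 8-bit data).
    """
    if len(reference) != len(enhanced):
        raise ValueError("images must have the same number of pixels")
    # Mean squared error over all pixels.
    mse = sum((a - b) ** 2 for a, b in zip(reference, enhanced)) / len(reference)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)


if __name__ == "__main__":
    ref = [100, 120, 140, 160]
    out = [101, 121, 141, 161]  # every pixel off by one intensity level
    print(round(psnr(ref, out), 2))
```

A higher PSNR means the enhanced image is closer to the reference, which is the sense in which the IAT row compares favorably with the other methods in the table.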

| Hardware/Software Used | Description |
|---|---|
| CPU | Intel Core Ultra 9-275HX |
| GPU | NVIDIA GeForce RTX 5080 |
| GPU Parallel Processing Platform | CUDA 12.8 |
| CUDA Deep Neural Network Library | cuDNN v8.9.7 |
| Operating System | Windows 11 |
| Programming Language | Python 3.12.7 |
| Deep Learning Framework | PyTorch 2.7.1 |
| Integrated Development Environment | PyCharm 2025.1.3.1 (Windows) |

| Model | Precision (P) | Recall (R) | mAP@0.5 | mAP@0.5:0.95 | GFLOPs | Memory (GB) | Inference Time (ms) |
|---|---|---|---|---|---|---|---|
| Faster R-CNN | 0.852 | 0.876 | 0.915 | 0.574 | 181.4 | 36.2 | 29.56 |
| YOLOv8 | 0.874 | 0.881 | 0.923 | 0.582 | 28.80 | 11.7 | 11.41 |
| YOLOv11 | 0.880 | 0.884 | 0.935 | 0.594 | 21.70 | 7.39 | 9.85 |
| YOLOv12 | 0.903 | 0.910 | 0.938 | 0.603 | 19.60 | 7.02 | 6.73 |
| YOLO-ILM | 0.948 | 0.941 | 0.957 | 0.689 | 45.2 | 9.92 | 9.43 |

| Test | IAT | LEGM | MPDIoU | Precision (P) | Recall (R) | mAP@0.5 | mAP@0.5:0.95 | GFLOPs | Inference Time (ms) |
|---|---|---|---|---|---|---|---|---|---|
| 1 | | | | 0.903 | 0.910 | 0.938 | 0.603 | 19.60 | 6.73 |
| 2 | √ | | | 0.909 | 0.912 | 0.942 | 0.632 | 24.20 | 6.88 |
| 3 | | √ | | 0.916 | 0.914 | 0.944 | 0.649 | 27.30 | 8.87 |
| 4 | | | √ | 0.914 | 0.915 | 0.940 | 0.641 | 19.60 | 7.13 |
| 5 | √ | √ | | 0.929 | 0.925 | 0.958 | 0.657 | 45.20 | 9.31 |
| 6 | √ | | √ | 0.930 | 0.934 | 0.952 | 0.663 | 24.20 | 8.92 |
| 7 | | √ | √ | 0.936 | 0.929 | 0.949 | 0.676 | 27.30 | 9.34 |
| 8 | √ | √ | √ | 0.948 | 0.941 | 0.957 | 0.689 | 45.20 | 9.43 |

| Module Combination | ΔPrecision (%) | ΔRecall (%) | ΔmAP@0.5 (%) | ΔmAP@0.5:0.95 (%) |
|---|---|---|---|---|
| Baseline | – | – | – | – |
| IAT | 0.60 | 0.20 | 0.40 | 2.90 |
| LEGM | 1.30 | 0.40 | 0.60 | 4.60 |
| MPDIoU | 1.10 | 0.50 | 0.20 | 3.80 |
| IAT + LEGM | 2.60 | 1.50 | 2.00 | 5.40 |
| IAT + MPDIoU | 2.70 | 2.40 | 1.40 | 6.00 |
| LEGM + MPDIoU | 3.30 | 1.90 | 1.10 | 7.30 |
| IAT + LEGM + MPDIoU | 4.50 | 3.10 | 1.90 | 8.60 |
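The gains in this table are the differences, in percentage points, between each ablation run and the YOLOv12 baseline (Test 1). The short sketch below recomputes them from the metric values reported in the ablation table; the variable and function names are illustrative, and the mapping of module combinations to Tests 2 to 8 is inferred from the matching differences rather than stated explicitly in the text.

```python
# Metric order: Precision, Recall, mAP@0.5, mAP@0.5:0.95 (values from the ablation table).
BASELINE = (0.903, 0.910, 0.938, 0.603)

RUNS = {
    "IAT": (0.909, 0.912, 0.942, 0.632),
    "LEGM": (0.916, 0.914, 0.944, 0.649),
    "MPDIoU": (0.914, 0.915, 0.940, 0.641),
    "IAT + LEGM": (0.929, 0.925, 0.958, 0.657),
    "IAT + MPDIoU": (0.930, 0.934, 0.952, 0.663),
    "LEGM + MPDIoU": (0.936, 0.929, 0.949, 0.676),
    "IAT + LEGM + MPDIoU": (0.948, 0.941, 0.957, 0.689),
}


def gains(run, baseline=BASELINE):
    """Improvement over the baseline in percentage points, rounded to 2 decimals."""
    return tuple(round((value - base) * 100, 2) for value, base in zip(run, baseline))


if __name__ == "__main__":
    for name, metrics in RUNS.items():
        print(f"{name:22s}", gains(metrics))
```

Running the sketch reproduces every row of the table, for example (4.5, 3.1, 1.9, 8.6) for the full IAT + LEGM + MPDIoU configuration.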

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Ding, J.; Qu, F.; Zhou, W.; Xu, J.; Zhao, L.; Ji, Y. A Sensor Based Waste Rock Detection Method in Copper Mining Under Low Light Environment. Sensors 2025, 25, 5961. https://doi.org/10.3390/s25195961
