Generative Sign-Description Prompts with Multi-Positive Contrastive Learning for Sign Language Recognition

Abstract

1. Introduction

- 1.

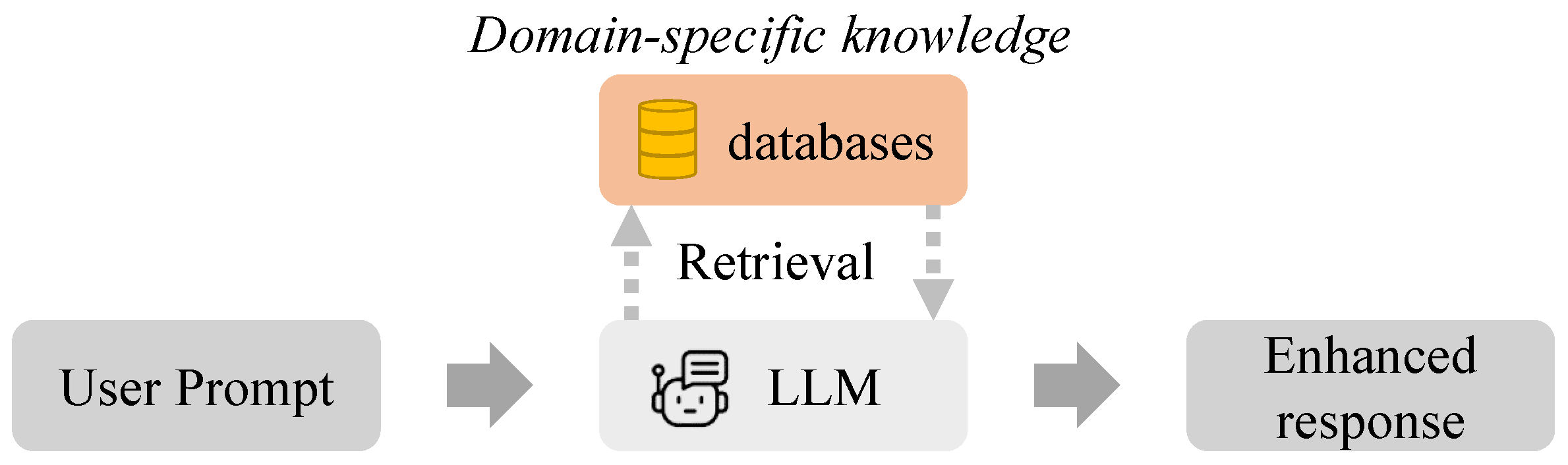

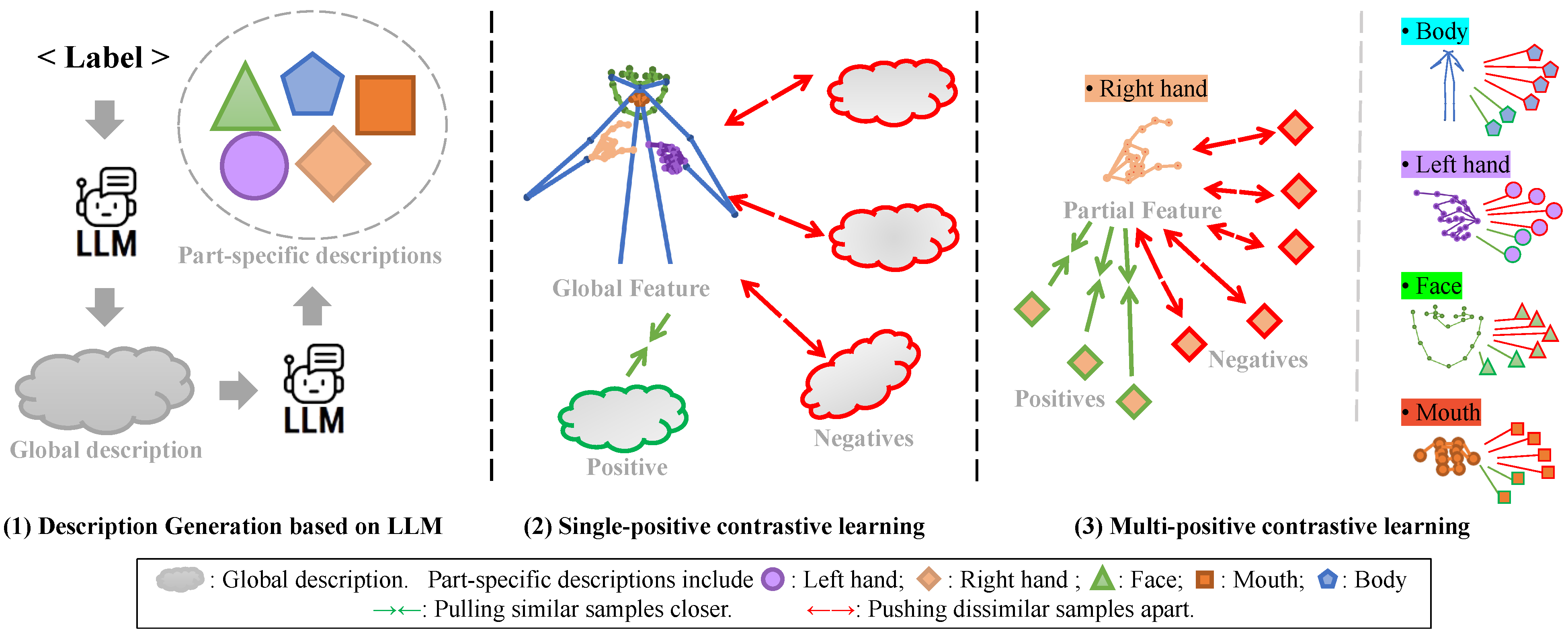

- To the best of our knowledge, we are the first to integrate generative LLMs into SLR through our Generative Sign-description Prompts (GSP). GSP employs retrieval-augmented generation with domain-specific LLMs to produce accurate multipart sign descriptions, providing reliable textual grounding for contrastive learning.

- 2.

- We design the Multi-positive Contrastive learning (MC) approach, which combines retrieval-augmented generative descriptions from expert-validated Knowledge Bases and a novel multi-positive contrastive learning paradigm.

- 3.

- Comprehensive experiments on the Chinese SLR500 and Turkish AUTSL datasets further validate the effectiveness of our method, achieving state-of-the-art accuracy (97.1% and 97.07%, respectively). The consistent and robust performance across languages demonstrates generalization capabilities.
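The multi-positive idea in the second contribution can be illustrated with a short sketch. It assumes one common formulation, in which a skeleton embedding is compared with every candidate description embedding by cosine similarity and the target distribution is uniform over all descriptions that belong to the sign. The function names, the temperature value, and the toy embeddings are illustrative assumptions, not the exact objective used in this work.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def multi_positive_loss(skeleton_emb, text_embs, positive_mask, temperature=0.1):
    """Cross-entropy between a softmax over similarities and a uniform
    target over the positive descriptions (assumed formulation)."""
    if not any(positive_mask):
        raise ValueError("at least one positive description is required")
    logits = [cosine(skeleton_emb, t) / temperature for t in text_embs]
    # Log-sum-exp with the maximum subtracted for numerical stability.
    peak = max(logits)
    log_norm = peak + math.log(sum(math.exp(x - peak) for x in logits))
    positive_log_probs = [
        x - log_norm for x, is_pos in zip(logits, positive_mask) if is_pos
    ]
    return -sum(positive_log_probs) / len(positive_log_probs)


# Toy example: two descriptions of the same sign and one of a different sign.
skeleton = [1.0, 0.0]
descriptions = [[1.0, 0.1], [0.9, -0.1], [0.0, 1.0]]
aligned = multi_positive_loss(skeleton, descriptions, [True, True, False])
misaligned = multi_positive_loss(skeleton, descriptions, [False, False, True])
print(f"aligned={aligned:.3f} misaligned={misaligned:.3f}")
```

With several positives the loss cannot reach zero, because the probability mass has to be shared among them; it is lowest when the skeleton embedding is equally close to all of its descriptions and far from the others.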

2. Related Work

2.1. Skeleton-Based Sign Language Recognition

2.2. Text-Augmented Sign Language Representation

3. Materials and Methods

3.1. Part-Specific Skeleton Encoder

3.2. Generative Sign-Description Prompts

3.2.1. Expert Dataset Construction and Standardization

3.2.2. Redundancy Elimination and Multi-Part Decomposition

3.2.3. Text Encoder

3.3. Description-Driven Sign Language Representation Learning

3.3.1. Text-Conditioned Multi-Positive Alignment

3.3.2. Hierarchical Part Contrastive Learning

3.4. Composite Training Objective

4. Experiments

4.1. Experimental Setup

4.1.1. Datasets

4.1.2. Implementation Details

4.2. Comparison with State-of-the-Art Methods

4.2.1. Performance Comparison on SLR500 Dataset

4.2.2. Performance Comparison on AUTSL Dataset

4.2.3. Comprehensive Performance Analysis

4.3. Ablation Study

4.3.1. Combining Sign Language with LLMs

4.3.2. Multipart Contrastive Learning for Sign Language Recognition

4.3.3. Keypoint Selection Analysis

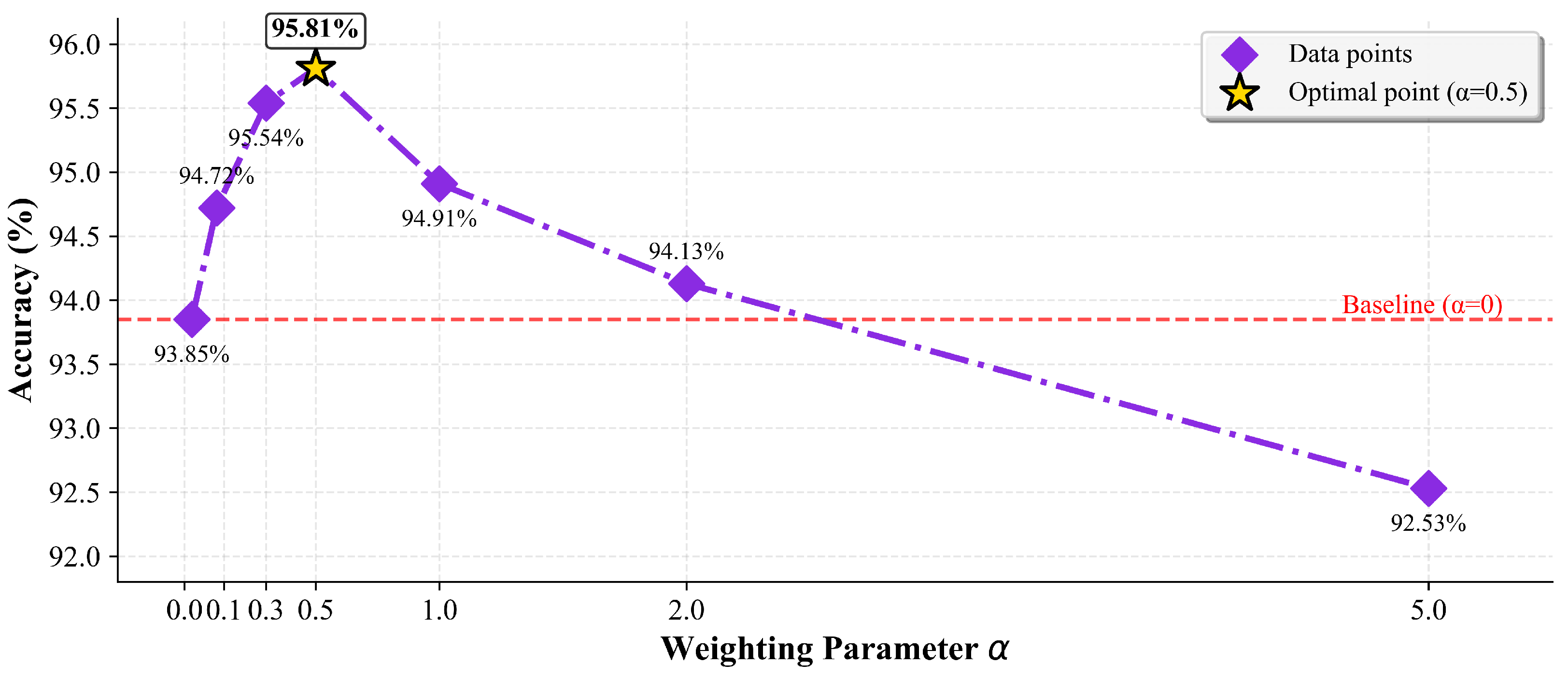

4.3.4. Analysis of the Weighting Parameter

4.3.5. Analysis of Multipart Feature Contribution

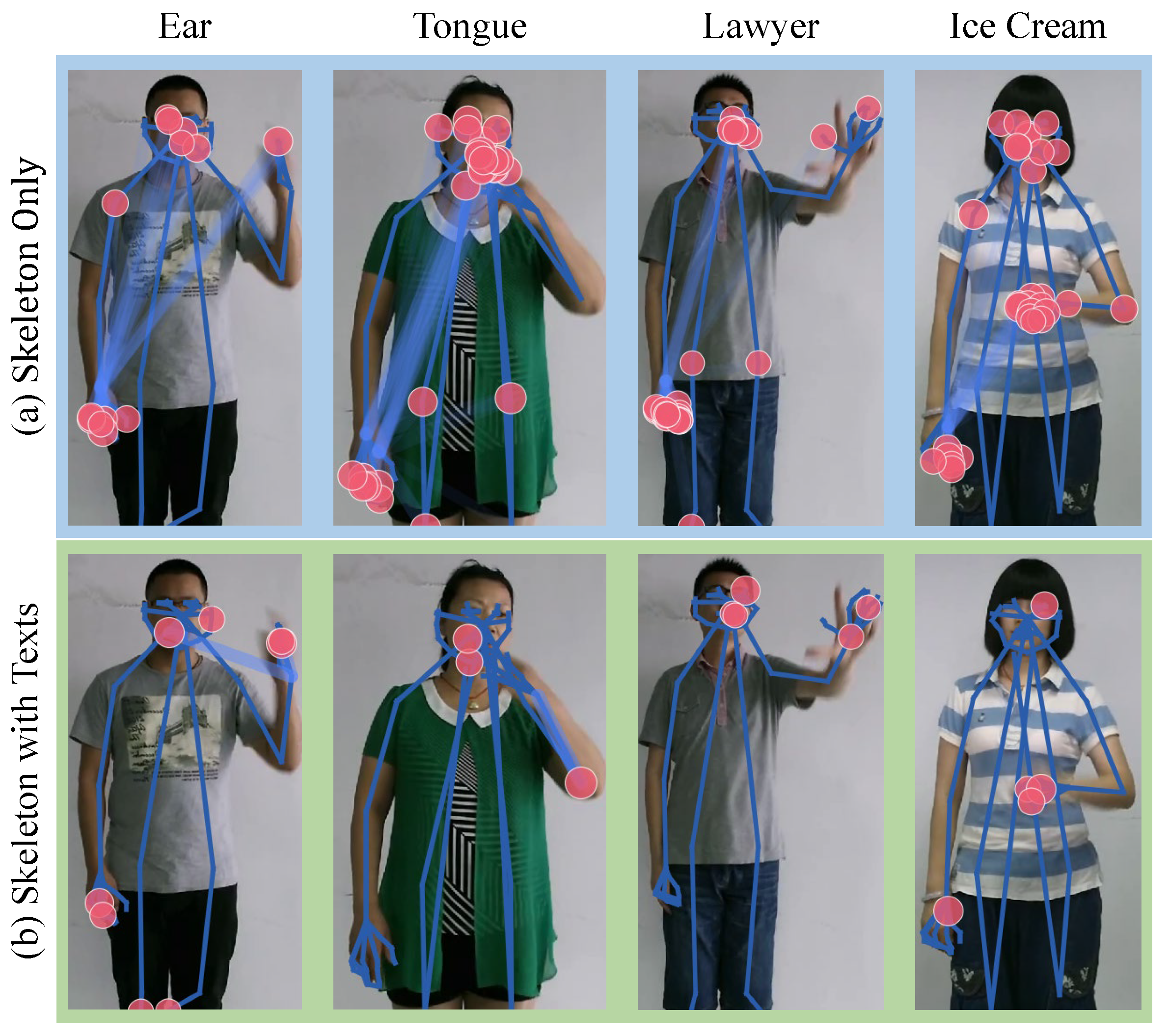

4.4. Visualized Analysis

4.4.1. Visualization of Human Keypoint Attention Weights

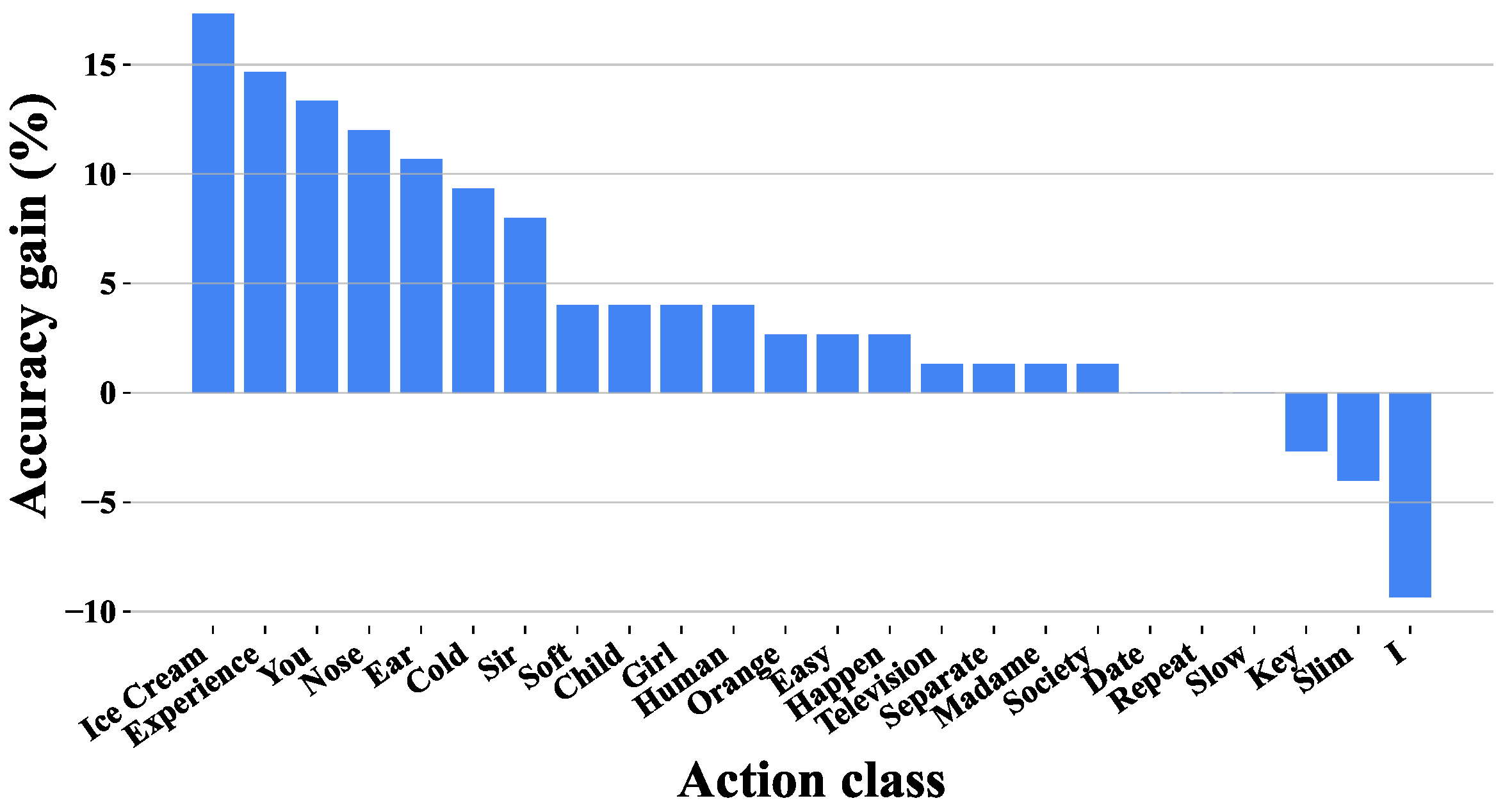

4.4.2. Class-Wise Performance Gains

4.4.3. Case Study: Illustrating the Many-to-Many Mapping

4.5. Efficiency and Computational Cost Analysis

5. Discussion

5.1. Limitations and Future Directions

1. The most significant limitation is that our evaluation is restricted to isolated sign datasets. While this provides a controlled environment to validate our core approach, real-world communication involves continuous signing. Extending our method to Continuous Sign Language Recognition (CSLR) presents substantial challenges, including (a) temporal segmentation to identify sign boundaries; (b) modeling co-articulation effects, where the appearance of a sign is influenced by adjacent signs; and (c) interpreting grammatical structures conveyed over time. Future work will focus on adapting our framework for CSLR, potentially by integrating it into a sequence-to-sequence architecture (e.g., Transformer or CTC-based models) to process continuous streams on datasets such as RWTH-PHOENIX-Weather [42] and CSL-Daily [43].

2. Our method’s performance is contingent on the accuracy of the upstream pose estimation model. Under challenging real-world conditions such as poor lighting, motion blur, or occlusions, the quality of the extracted skeleton data may degrade and reduce recognition accuracy. Improving robustness to imperfect pose estimation is a key direction for future research.

3. The LLM-based description generation introduces a one-time computational cost for each vocabulary item. Although this is an offline process that does not affect inference speed, it makes the training pipeline more complex than that of traditional methods.

4. Although we have demonstrated success on both Chinese Sign Language (SLR500) and Turkish Sign Language (AUTSL), our model has not been tested on a wider range of sign languages or on the stylistic and regional variations that exist within them. Future work should explore the model’s generalizability and the potential need for fine-tuning on diverse linguistic and signer-specific data.

5.2. Practical Significance and Deployment Considerations

1. The state-of-the-art accuracy (>97%) on large-scale isolated sign datasets makes the method suitable for deployment in applications where single-sign interaction is prevalent. These include educational software for learning sign language, assistive communication boards, and command-based control systems for smart devices.

2. By leveraging fine-grained textual descriptions, our model learns a semantically richer representation of signs than methods that rely solely on class labels. This could enable more nuanced applications, such as sign-to-text systems that can differentiate between visually similar but semantically distinct signs.

3. Importantly, the LLM and text encoder are used only during the training phase. During inference, our model operates solely on skeleton data, maintaining the same computational efficiency as standard skeleton-based classifiers. This makes it practical for real-time deployment on resource-constrained devices.
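The separation between the training-time text branch and the inference-time skeleton path can be sketched as follows. This is a structural illustration only: `SignRecognizer`, `for_deployment`, and the toy encoders are hypothetical names and stand-ins, and the real encoders are neural networks rather than the simple functions used here.

```python
def dot(u, v):
    """Inner product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


class SignRecognizer:
    """Skeleton classifier with an optional, training-only text branch."""

    def __init__(self, skeleton_encoder, classifier, text_encoder=None):
        self.skeleton_encoder = skeleton_encoder
        self.classifier = classifier
        self.text_encoder = text_encoder

    def training_similarities(self, skeleton, descriptions):
        """Skeleton-to-description similarities, needed only by the training loss."""
        if self.text_encoder is None:
            raise RuntimeError("text branch is not available in a deployed model")
        feature = self.skeleton_encoder(skeleton)
        return [dot(feature, self.text_encoder(d)) for d in descriptions]

    def predict(self, skeleton):
        """Inference path: skeleton encoder and classifier only."""
        return self.classifier(self.skeleton_encoder(skeleton))

    def for_deployment(self):
        """Return a copy that shares the skeleton path and drops the text encoder."""
        return SignRecognizer(self.skeleton_encoder, self.classifier)


# Toy stand-ins (assumptions): mean keypoint as feature, argmax as classifier.
def toy_skeleton_encoder(skeleton):
    n = len(skeleton)
    return [sum(p[0] for p in skeleton) / n, sum(p[1] for p in skeleton) / n]


def toy_classifier(feature):
    return max(range(len(feature)), key=lambda i: feature[i])


def toy_text_encoder(text):
    return [float(len(text) % 3), 1.0]


full_model = SignRecognizer(toy_skeleton_encoder, toy_classifier, toy_text_encoder)
deployed = full_model.for_deployment()
sample = [(0.2, 0.9), (0.4, 0.7)]
print(full_model.predict(sample), deployed.predict(sample))
```

Because `predict` never touches the text encoder, the deployed copy produces the same predictions as the full training-time model.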

6. Conclusions

Supplementary Materials

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

- Microsoft. Developing with Kinect. 2017. Available online: https://developer.microsoft.com/en-us/windows/kinect/develop (accessed on 19 August 2025).

- Intel. Intel® RealSense™ Technology. 2019. Available online: https://www.intel.com/content/www/us/en/architecture-and-technology/realsense-overview.html (accessed on 19 August 2025).

- Fang, H.S.; Li, J.; Tang, H.; Xu, C.; Zhu, H.; Xiu, Y.; Li, Y.L.; Lu, C. AlphaPose: Whole-body regional multi-person pose estimation and tracking in real-time. IEEE Trans. Pattern Anal. Mach. Intell. 2022, 45, 7157–7173.

- Sun, K.; Xiao, B.; Liu, D.; Wang, J. Deep high-resolution representation learning for human pose estimation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 5693–5703.

- Lin, K.; Wang, X.; Zhu, L.; Zhang, B.; Yang, Y. SKIM: Skeleton-based isolated sign language recognition with part mixing. IEEE Trans. Multimed. 2024, 26, 4271–4280.

- Jiao, P.; Min, Y.; Li, Y.; Wang, X.; Lei, L.; Chen, X. Cosign: Exploring co-occurrence signals in skeleton-based continuous sign language recognition. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 1–6 October 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 20676–20686.

- Zhao, W.; Hu, H.; Zhou, W.; Shi, J.; Li, H. BEST: BERT pre-training for sign language recognition with coupling tokenization. In Proceedings of the AAAI Conference on Artificial Intelligence, Washington, DC, USA, 7–14 February 2023; Volume 37, pp. 3597–3605.

- Zhao, W.; Hu, H.; Zhou, W.; Mao, Y.; Wang, M.; Li, H. MASA: Motion-aware Masked Autoencoder with Semantic Alignment for Sign Language Recognition. IEEE Trans. Circuits Syst. Video Technol. 2024, 34, 10793–10804.

- Liang, S.; Li, Y.; Shi, Y.; Chen, H.; Miao, Q. Integrated multi-local and global dynamic perception structure for sign language recognition. Pattern Anal. Appl. 2025, 28, 50.

- Yuan, L.; He, Z.; Wang, Q.; Xu, L.; Ma, X. SkeletonCLIP: Recognizing skeleton-based human actions with text prompts. In Proceedings of the 2022 8th International Conference on Systems and Informatics (ICSAI), Kunming, China, 10–12 December 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 1–6.

- Bao, H.; Dong, L.; Wei, F.; Wang, W.; Yang, N.; Liu, X.; Wang, Y.; Gao, J.; Piao, S.; Zhou, M.; et al. UniLMv2: Pseudo-Masked Language Models for Unified Language Model Pre-Training. In Proceedings of the 37th International Conference on Machine Learning, Virtual, 13–18 July 2020; Proceedings of Machine Learning Research. Volume 119, pp. 642–652.

- Liu, R.; Liu, Y.; Wu, M.; Xin, W.; Miao, Q.; Liu, X.; Li, L. SG-CLR: Semantic representation-guided contrastive learning for self-supervised skeleton-based action recognition. Pattern Recognit. 2025, 162, 111377.

- Xiang, W.; Li, C.; Zhou, Y.; Wang, B.; Zhang, L. Generative Action Description Prompts for Skeleton-based Action Recognition. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Paris, France, 1–6 October 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 10276–10285.

- Hwang, E.J.; Cho, S.; Lee, J.; Park, J.C. An Efficient Sign Language Translation Using Spatial Configuration and Motion Dynamics with LLMs. arXiv 2024, arXiv:2408.10593.

- Yan, T.; Zeng, W.; Xiao, Y.; Tong, X.; Tan, B.; Fang, Z.; Cao, Z.; Zhou, J.T. CrossGLG: LLM guides one-shot skeleton-based 3D action recognition in a cross-level manner. In Proceedings of the European Conference on Computer Vision, Milan, Italy, 29 September–4 October 2024; Springer: Berlin/Heidelberg, Germany, 2024; Volume 15078, pp. 113–131.

- He, K.; Fan, H.; Wu, Y.; Xie, S.; Girshick, R.B. Momentum contrast for unsupervised visual representation learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 9729–9738.

- Chen, T.; Kornblith, S.; Norouzi, M.; Hinton, G. A simple framework for contrastive learning of visual representations. In Proceedings of the 37th International Conference on Machine Learning, Online, 13–18 July 2020; ICML 2020, Virtual Event, Proceedings of Machine Learning Research. Volume 119, pp. 1597–1607. Available online: http://proceedings.mlr.press/v119/chen20j.html (accessed on 21 September 2025).

- Liu, K.; Ma, Z.; Kang, X.; Li, Y.; Xie, K.; Jiao, Z.; Miao, Q. Enhanced Contrastive Learning with Multi-view Longitudinal Data for Chest X-ray Report Generation. arXiv 2025, arXiv:2502.20056.

- Hu, H.; Zhao, W.; Zhou, W.; Wang, Y.; Li, H. SignBERT: Pre-Training of Hand-Model-Aware Representation for Sign Language Recognition. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 11087–11096.

- Zuo, R.; Wei, F.; Mak, B. Natural Language-Assisted Sign Language Recognition. In Proceedings of the 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 14890–14900.

- Zhang, H.; Guo, Z.; Yang, Y.; Liu, X.; Hu, D. C2ST: Cross-modal contextualized sequence transduction for continuous sign language recognition. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Paris, France, 1–6 October 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 21053–21062.

- Kalakonda, S.S.; Maheshwari, S.; Sarvadevabhatla, R.K. Action-GPT: Leveraging large-scale language models for improved and generalized action generation. In Proceedings of the 2023 IEEE International Conference on Multimedia and Expo (ICME), Brisbane, Australia, 10–14 July 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 31–36.

- Wong, R.; Camgöz, N.C.; Bowden, R. Sign2GPT: Leveraging Large Language Models for Gloss-Free Sign Language Translation. In Proceedings of the Twelfth International Conference on Learning Representations, Vienna, Austria, 7–11 May 2024.

- Chen, Y.; Zhang, Z.; Yuan, C.; Li, B.; Deng, Y.; Hu, W. Channel-wise topology refinement graph convolution for skeleton-based action recognition. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 13359–13368.

- Radford, A.; Kim, J.W.; Hallacy, C.; Ramesh, A.; Goh, G.; Agarwal, S.; Sastry, G.; Askell, A.; Mishkin, P.; Clark, J.; et al. Learning transferable visual models from natural language supervision. In Proceedings of the International Conference on Machine Learning, Virtual, 18–24 July 2021; pp. 8748–8763. Available online: http://proceedings.mlr.press/v139/radford21a.html (accessed on 21 September 2025).

- Oord, A.v.d.; Li, Y.; Vinyals, O. Representation learning with contrastive predictive coding. arXiv 2018, arXiv:1807.03748. Available online: http://arxiv.org/abs/1807.03748 (accessed on 21 September 2025).

- Tian, Y.; Fan, L.; Isola, P.; Chang, H.; Krishnan, D. Stablerep: Synthetic images from text-to-image models make strong visual representation learners. Adv. Neural Inf. Process. Syst. 2023, 36, 48382–48402. Available online: http://papers.nips.cc/paper_files/paper/2023/hash/971f1e59cd956cc094da4e2f78c6ea7c-Abstract-Conference.html (accessed on 21 September 2025).

- Huang, J.; Zhou, W.; Li, H.; Li, W. Attention-based 3D-CNNs for large-vocabulary sign language recognition. IEEE Trans. Circuits Syst. Video Technol. 2018, 29, 2822–2832.

- Sincan, O.M.; Keles, H.Y. AUTSL: A large scale multi-modal Turkish sign language dataset and baseline methods. IEEE Access 2020, 8, 181340–181355.

- Gu, D.; Wei, D.; Yang, Y.; Wang, C.; Yu, Y.; Gao, H.; Wu, Y.; Heng, M.; Qiu, B.; Liu, W.; et al. Chinese Sign Language (Revised Edition) (Parts 1 & 2); Huaxia Publishing House: Beijing, China, 2018.

- Dingqian, G.; Dan, W.; Yang, Y.; Chenhua, W.; Yuanyuan, Y.; Hui, G.; Yongsheng, W.; Miao, H.; Bing, Q.; Wa, L.; et al. Lexicon of Common Expressions in Chinese National Sign Language; Technical Report GF 0020–2018; National Standard; Ministry of Education of the People’s Republic of China, State Language Commission, China Disabled Persons’ Federation: Beijing, China, 2018.

- Dingqian, G.; Dan, W.; Chenhua, W.; Hui, G.; Yuanyuan, Y.; Miao, H.; Bing, Q.; Yongsheng, W. Chinese Manual Alphabet; Technical Report GF 0021–2019; National Standard; Ministry of Education of the People’s Republic of China, State Language Commission, China Disabled Persons’ Federation: Beijing, China, 2019.

- Kavak, S. Turkish Sign Language Dictionary; National Education Ministry: Ankara, Turkey, 2015.

- Yan, S.; Xiong, Y.; Lin, D. Spatial temporal graph convolutional networks for skeleton-based action recognition. In Proceedings of the AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018; Volume 32.

- Zhao, W.; Zhou, W.; Hu, H.; Wang, M.; Li, H. Self-supervised representation learning with spatial-temporal consistency for sign language recognition. IEEE Trans. Image Process. 2024, 33, 4188–4201.

- Laines, D.; Gonzalez-Mendoza, M.; Ochoa-Ruiz, G.; Bejarano, G. Isolated sign language recognition based on tree structure skeleton images. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 276–284.

- Jiang, S.; Sun, B.; Wang, L.; Bai, Y.; Li, K.; Fu, Y. Sign language recognition via skeleton-aware multi-model ensemble. arXiv 2021, arXiv:2110.06161. Available online: https://arxiv.org/abs/2110.06161 (accessed on 21 September 2025).

- Liu, Y.; Lu, F.; Cheng, X.; Yuan, Y. Asymmetric multi-branch GCN for skeleton-based sign language recognition. Multimed. Tools Appl. 2024, 83, 75293–75319.

- Jiang, S.; Sun, B.; Wang, L.; Bai, Y.; Li, K.; Fu, Y. Skeleton aware multi-modal sign language recognition. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 19–25 June 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 3413–3423.

- Deng, Z.; Leng, Y.; Chen, J.; Yu, X.; Zhang, Y.; Gao, Q. TMS-Net: A multi-feature multi-stream multi-level information sharing network for skeleton-based sign language recognition. Neurocomputing 2024, 572, 127194.

- Deng, Z.; Leng, Y.; Hu, J.; Lin, Z.; Li, X.; Gao, Q. SML: A Skeleton-based multi-feature learning method for sign language recognition. Knowl.-Based Syst. 2024, 301, 112288.

- Forster, J.; Schmidt, C.; Hoyoux, T.; Koller, O.; Zelle, U.; Piater, J.H.; Ney, H. RWTH-PHOENIX-Weather: A Large Vocabulary Sign Language Recognition and Translation Corpus. In Proceedings of the Eighth International Conference on Language Resources and Evaluation, LREC 2012, Istanbul, Turkey, 23–25 May 2012; Calzolari, N., Choukri, K., Declerck, T., Dogan, M.U., Maegaard, B., Mariani, J., Odijk, J., Piperidis, S., Eds.; European Language Resources Association (ELRA): Paris, France, 2012; pp. 3785–3789.

- Zhou, H.; Zhou, W.; Qi, W.; Pu, J.; Li, H. Improving Sign Language Translation with Monolingual Data by Sign Back-Translation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2021, Nashville, TN, USA, 19–25 June 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 1316–1325.

| Primary Descriptions | Expert-Validated Knowledge | Refined Descriptions |
|---|---|---|
| Devoted: (1) Make the sign for “love”. (2) Extend the thumb with one hand and place it on the palm of the other hand, then raise it upwards. | The sign for “love”: Gently caress the back of the thumb with one hand, expressing a feeling of “tenderness”. | Devoted: (1) Gently caress the back of the thumb with one hand, expressing a feeling of “tenderness”. (2) Extend your thumb with one hand, sit on the other palm, and lift it up. |
| Ambience: (1) One hand forms the manual sign “Q”, with the fingertips pointing inward, placed at the nostrils. (2) Extend your index finger with one hand and make a big circle with your fingertips facing down. | The manual sign “Q”: One hand with the right thumb down, the index and middle fingers together on top, the thumb, index, and middle fingers pinched together, the fingertips pointing forward and slightly to the left, the ring and little fingers bent, the fingertips touching the palm. | Ambience: (1) One hand with the right thumb down, the index and middle fingers together on top, the thumb, index, and middle fingers pinched together, the fingertips pointing forward and slightly to the left, the ring and little fingers bent, the fingertips touching the palm. The fingertips are pointing inward, placed at the nostrils. (2) Extend index finger with one hand and make a circle with your fingertips facing down. |
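The table shows a primary description that refers to another sign being rewritten with the expert-validated description of that sign. The sketch below mimics this substitution with a plain dictionary lookup and then splits the result into numbered steps. It is a deterministic stand-in for illustration only: the method itself relies on a retrieval-augmented LLM, and `KNOWLEDGE_BASE`, `expand_references`, and `split_steps` are hypothetical names with a shortened, paraphrased entry.

```python
import re

# Hypothetical miniature knowledge base; the entry paraphrases the table above.
KNOWLEDGE_BASE = {
    'the sign for "love"': "Gently caress the back of the thumb with one hand.",
}

# Matches instructions of the form: Make the sign for "X".
REFERENCE = re.compile(r'Make (the sign for "[^"]+")\.')


def expand_references(description, knowledge_base):
    """Replace references to other signs with their knowledge-base descriptions.

    References that are missing from the knowledge base are left unchanged.
    """
    def substitute(match):
        return knowledge_base.get(match.group(1), match.group(0))

    return REFERENCE.sub(substitute, description)


def split_steps(description):
    """Split 'Gloss: (1) ... (2) ...' into the gloss and a list of steps."""
    gloss, _, body = description.partition(":")
    steps = [s.strip() for s in re.split(r"\(\d+\)", body) if s.strip()]
    return gloss.strip(), steps


primary = (
    'Devoted: (1) Make the sign for "love". '
    "(2) Extend the thumb with one hand and place it on the palm of the "
    "other hand, then raise it upwards."
)
refined = expand_references(primary, KNOWLEDGE_BASE)
gloss, steps = split_steps(refined)
print(gloss)
for index, step in enumerate(steps, start=1):
    print(index, step)
```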

| Method | Accuracy (%) |
|---|---|
| ST-GCN [34] | 90.0 |
| SignBERT [19] | 94.5 |
| BEST [7] | 95.4 |
| MASA [8] | 96.3 |
| SSRL [35] | 96.9 |
| Ours (joint / joint_motion) | 96.01 / 95.28 |
| Ours (bone / bone_motion) | 94.37 / 94.19 |
| Ours (4 streams fusion) | 97.1 |
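The last three rows refer to the joint, joint-motion, bone, and bone-motion streams and to their fusion. The sketch below shows how such streams are commonly derived in skeleton-based recognition and how per-stream class scores can be combined. It assumes bones are child-minus-parent joint offsets, motion is the frame-to-frame difference, and fusion is a weighted sum of scores with equal weights; the parent list, the scores, and the weights are illustrative assumptions rather than the configuration used in our experiments.

```python
def bone_stream(frames, parents):
    """Bone vector for joint j: joint j minus its parent joint.

    A root joint listed as its own parent yields a zero vector.
    """
    return [
        [tuple(c - p for c, p in zip(frame[j], frame[parents[j]]))
         for j in range(len(frame))]
        for frame in frames
    ]


def motion_stream(frames):
    """Frame-to-frame displacement; the last frame is zero-padded."""
    motion = [
        [tuple(b - a for a, b in zip(cur, nxt)) for cur, nxt in zip(frame, following)]
        for frame, following in zip(frames, frames[1:])
    ]
    motion.append([tuple(0.0 for _ in joint) for joint in frames[-1]])
    return motion


def fuse_scores(stream_scores, weights=None):
    """Weighted sum of per-stream class scores (equal weights by default)."""
    weights = weights or {name: 1.0 for name in stream_scores}
    num_classes = len(next(iter(stream_scores.values())))
    fused = [0.0] * num_classes
    for name, scores in stream_scores.items():
        for k, score in enumerate(scores):
            fused[k] += weights[name] * score
    return fused


# Two frames, two joints, 2D coordinates; joint 0 is the root.
joints = [[(0.0, 0.0), (1.0, 0.0)], [(0.0, 0.0), (1.0, 2.0)]]
parents = [0, 0]
bones = bone_stream(joints, parents)
joint_motion = motion_stream(joints)
bone_motion = motion_stream(bones)

# Hypothetical class scores produced by four separately trained models.
scores = {
    "joint": [0.6, 0.4],
    "joint_motion": [0.4, 0.6],
    "bone": [0.7, 0.3],
    "bone_motion": [0.5, 0.5],
}
fused = fuse_scores(scores)
predicted = max(range(len(fused)), key=fused.__getitem__)
print(fused, predicted)
```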

| Method | Top-1 (%) | Top-5 (%) |
|---|---|---|
| SL-TSSI-DenseNet [36] | 93.13 | – |
| SSTCN [37] | 93.37 | – |
| SAM-SLR-V1 [37] | 95.45 | 99.25 |
| AM-GCN-A [38] | 96.27 | 99.48 |
| SAM-SLR-V2 [39] | 96.47 | 99.76 |
| TMS-Net [40] | 96.62 | 99.71 |
| SML [41] | 96.85 | 99.79 |
| Ours | 97.07 | 99.89 |

| Dataset | Accuracy | Precision | Recall | F1-Score | Top-5 Acc |
|---|---|---|---|---|---|
| SLR500 | 97.1% | 96.5% | 96.8% | 96.7% | 99.6% |
| AUTSL | 97.07% | 96.2% | 96.8% | 96.5% | 99.89% |
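For readers who want to reproduce metrics of this kind, the sketch below computes precision, recall, and F1 together with top-k accuracy on toy data. It assumes macro averaging over classes, which is an assumption on our part since the averaging mode is not stated alongside the table; the labels and scores are made up for illustration.

```python
def macro_precision_recall_f1(y_true, y_pred):
    """Per-class precision, recall, and F1, averaged with equal class weight."""
    classes = sorted(set(y_true) | set(y_pred))
    precisions, recalls, f1s = [], [], []
    for c in classes:
        tp = sum(1 for t, p in zip(y_true, y_pred) if t == c and p == c)
        fp = sum(1 for t, p in zip(y_true, y_pred) if t != c and p == c)
        fn = sum(1 for t, p in zip(y_true, y_pred) if t == c and p != c)
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        precisions.append(precision)
        recalls.append(recall)
        f1s.append(f1)
    n = len(classes)
    return sum(precisions) / n, sum(recalls) / n, sum(f1s) / n


def top_k_accuracy(y_true, scores, k):
    """Fraction of samples whose true class is among the k highest scores."""
    hits = 0
    for label, row in zip(y_true, scores):
        ranked = sorted(range(len(row)), key=lambda i: row[i], reverse=True)
        if label in ranked[:k]:
            hits += 1
    return hits / len(y_true)


# Toy labels and predictions for a two-class problem.
labels = [0, 0, 1, 1]
predictions = [0, 1, 1, 1]
precision, recall, f1 = macro_precision_recall_f1(labels, predictions)

# Toy score matrix for a three-class problem.
score_labels = [0, 2, 2]
score_rows = [[0.9, 0.1, 0.0], [0.2, 0.5, 0.3], [0.1, 0.2, 0.7]]
top1 = top_k_accuracy(score_labels, score_rows, k=1)
top2 = top_k_accuracy(score_labels, score_rows, k=2)
print(precision, recall, f1, top1, top2)
```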

| VE | LLM | KB | Optimized Prompt | Accuracy (%) |
|---|---|---|---|---|
| ✓ | – | – | – | 93.85 |
| ✓ | ✓ | – | – | 93.57 |
| ✓ | – | ✓ | – | 94.50 |
| ✓ | ✓ | ✓ | – | 94.89 |
| ✓ | ✓ | ✓ | ✓ | 95.25 |

| VE | Synonym | Prompt | Multipart | Accuracy (%) |
|---|---|---|---|---|
| ✓ | – | – | – | 93.85 |
| ✓ | ✓ | – | – | 94.50 |
| ✓ | – | ✓ | – | 94.93 |
| ✓ | – | – | ✓ | 95.25 |
| ✓ | ✓ | ✓ | – | 95.38 |
| ✓ | ✓ | ✓ | ✓ | 95.81 |

| Method | Num. Keypoints | Acc (%) | Parts | +Multipart (%) |
|---|---|---|---|---|
| all | 133 | 59.22 | – | – |
| base | 27 | 93.46 | 3 | 93.62 (0.16%↑) |
| MASA | 49 | 93.66 | 3 | 94.01 (0.35%↑) |
| Cosign | 76 | 93.57 | 5 | 94.52 (0.95%↑) |
| Ours | 87 | 93.85 | 5 | 95.25 (1.40%↑) |

| Configuration | Accuracy (%) | Performance Drop |
|---|---|---|
| VE-only (Baseline) | 93.85 | – |
| VE + Multipart (Full Model) | 95.25 | – |
| w/o Face features | 94.55 | −0.70 |
| w/o Body features | 95.04 | −0.21 |
| w/o Hands features | 93.92 | −1.33 |

| Method | Training Time (h) | GPU Memory (GB) | Inference (ms/Sample) | Parameters (M) |
|---|---|---|---|---|
| CTR-GCN (Baseline) | 8.2 | 12.4 | 4.2 | 5.6 |
| Ours (Training) | 21.3 | 24.6 | – | 69.1 |
| Ours (Inference) | – | 12.8 | 4.3 | 5.6 |
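The relative overheads implied by the table can be worked out directly. The short calculation below uses only the figures reported above; the dictionary keys and variable names are illustrative, and attributing the extra training-time parameters to the text branch is our reading of the table rather than a separately measured quantity.

```python
# Figures copied from the efficiency table above.
baseline = {"train_hours": 8.2, "memory_gb": 12.4, "latency_ms": 4.2, "params_m": 5.6}
ours_training = {"train_hours": 21.3, "memory_gb": 24.6, "params_m": 69.1}
ours_inference = {"memory_gb": 12.8, "latency_ms": 4.3, "params_m": 5.6}

# Training-time cost relative to the CTR-GCN baseline.
training_time_ratio = ours_training["train_hours"] / baseline["train_hours"]
training_memory_ratio = ours_training["memory_gb"] / baseline["memory_gb"]

# Inference-time overhead relative to the baseline, in percent.
latency_overhead_pct = (
    100 * (ours_inference["latency_ms"] - baseline["latency_ms"]) / baseline["latency_ms"]
)
memory_overhead_pct = (
    100 * (ours_inference["memory_gb"] - baseline["memory_gb"]) / baseline["memory_gb"]
)

# Parameters present only during training (presumably the text branch).
train_only_params_m = ours_training["params_m"] - ours_inference["params_m"]

print(f"training time:      {training_time_ratio:.2f}x baseline")
print(f"training memory:    {training_memory_ratio:.2f}x baseline")
print(f"inference latency:  +{latency_overhead_pct:.1f}%")
print(f"inference memory:   +{memory_overhead_pct:.1f}%")
print(f"train-only params:  {train_only_params_m:.1f} M")
```

Training therefore takes roughly 2.6 times as long and about twice the GPU memory of the baseline, whereas inference adds about 2.4% latency with an unchanged parameter count.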

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Liang, S.; Li, Y.; Xin, W.; Chen, H.; Liu, X.; Liu, K.; Miao, Q. Generative Sign-Description Prompts with Multi-Positive Contrastive Learning for Sign Language Recognition. Sensors 2025, 25, 5957. https://doi.org/10.3390/s25195957
