A Two-Step Filtering Approach for Indoor LiDAR Point Clouds: Efficient Removal of Jump Points and Misdetected Points

Abstract

1. Introduction

- Introduction of a clustering filtering algorithm based on radial distance and tangential span, achieving efficient jump point detection and removal.

- Implementation of a grid penetration model to filter misdetected points on smooth surfaces, significantly improving data quality and navigation stability.

- Strong performance and adaptability in complex indoor environments, outperforming existing methods in preserving point cloud details while minimizing noise.

2. Related Work

2.1. Image-Based Filtering Algorithms

2.2. Feature Fitting-Based Filtering Algorithms

2.3. Local Statistics-Based Filtering Algorithms

2.4. Density Clustering-Based Filtering Algorithms

2.5. Deep Learning-Based Filtering Algorithms

2.6. Vision–LiDAR Fusion-Based Filtering Algorithms

3. Point Cloud Data Analysis of Indoor Sweeping Robots

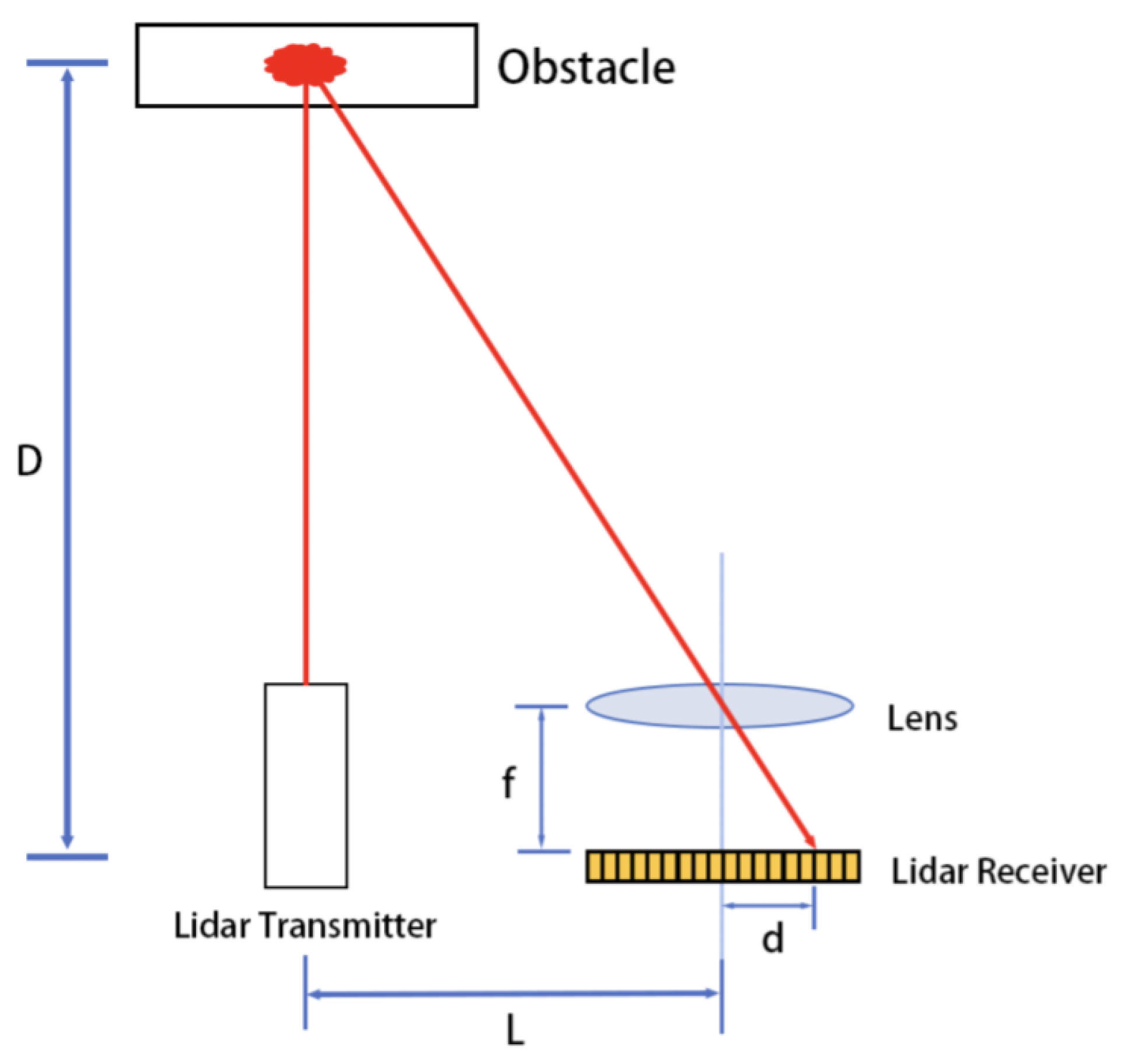

3.1. Operating Principle of Laser Range Sensors

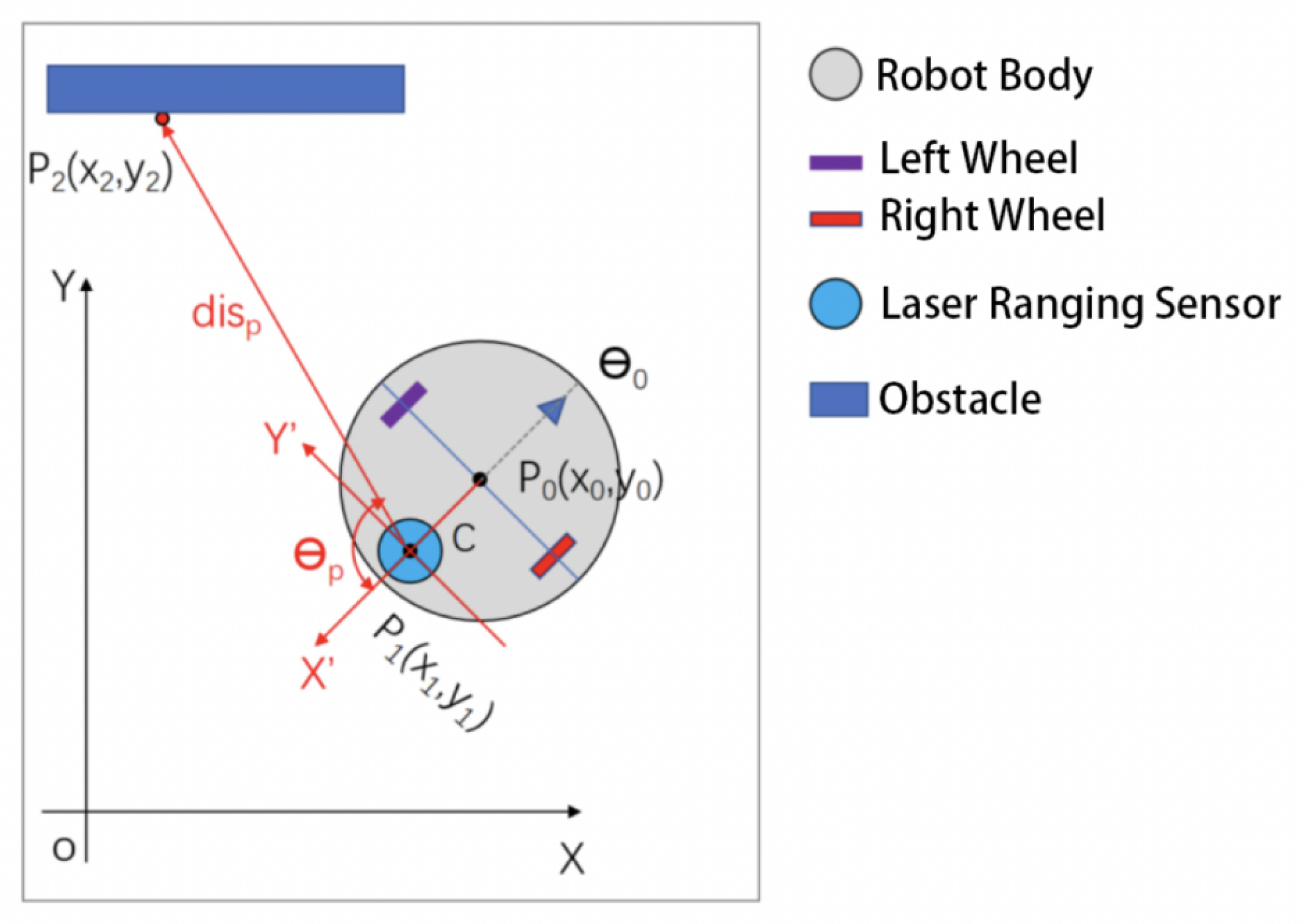

3.2. Point Cloud Data Acquisition

3.3. Jump Points and Misdetected Points

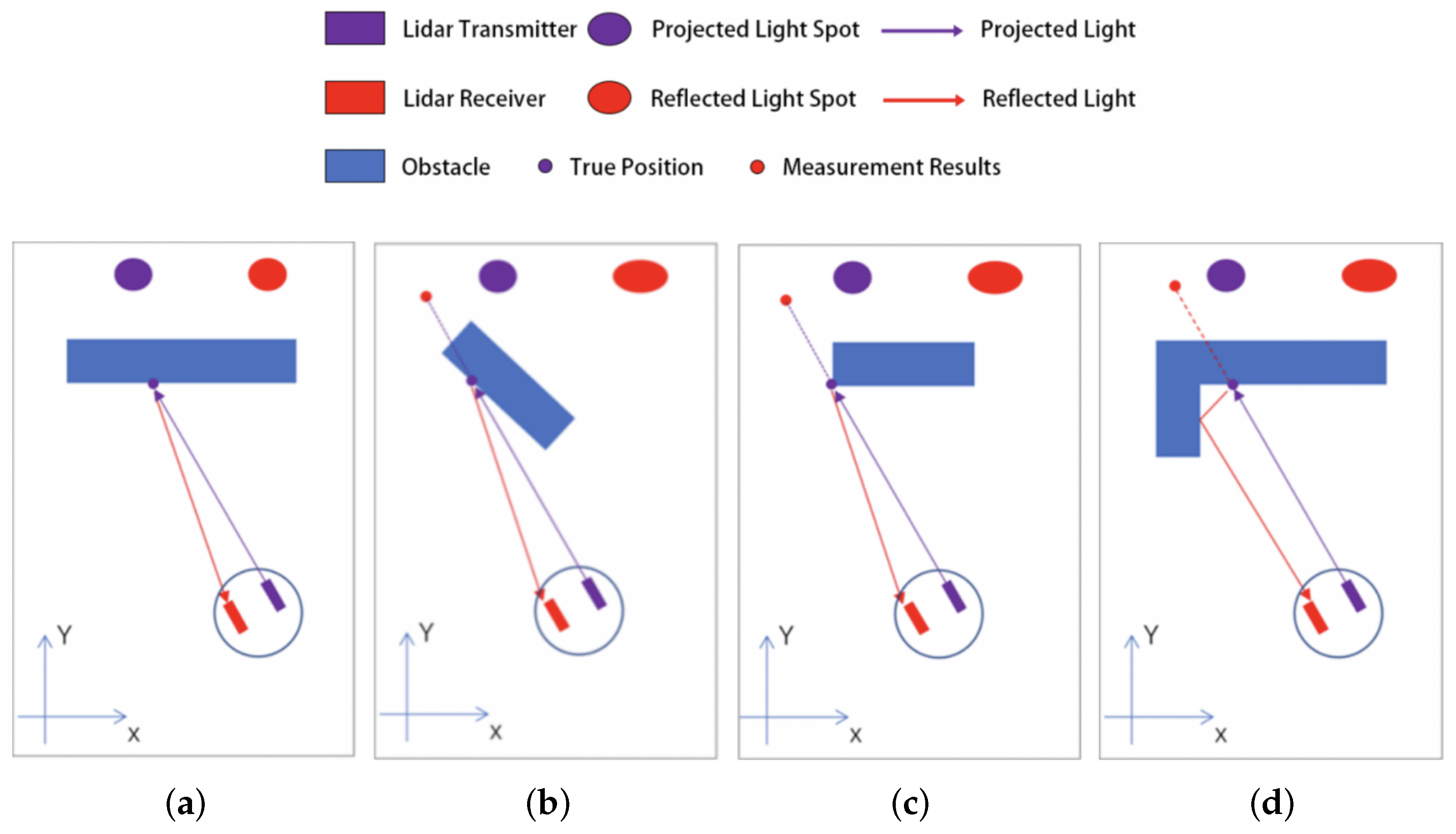

3.3.1. Jump Points

3.3.2. Misdetected Points

4. Point Cloud Filtering Algorithms for Indoor Sweeping Robots

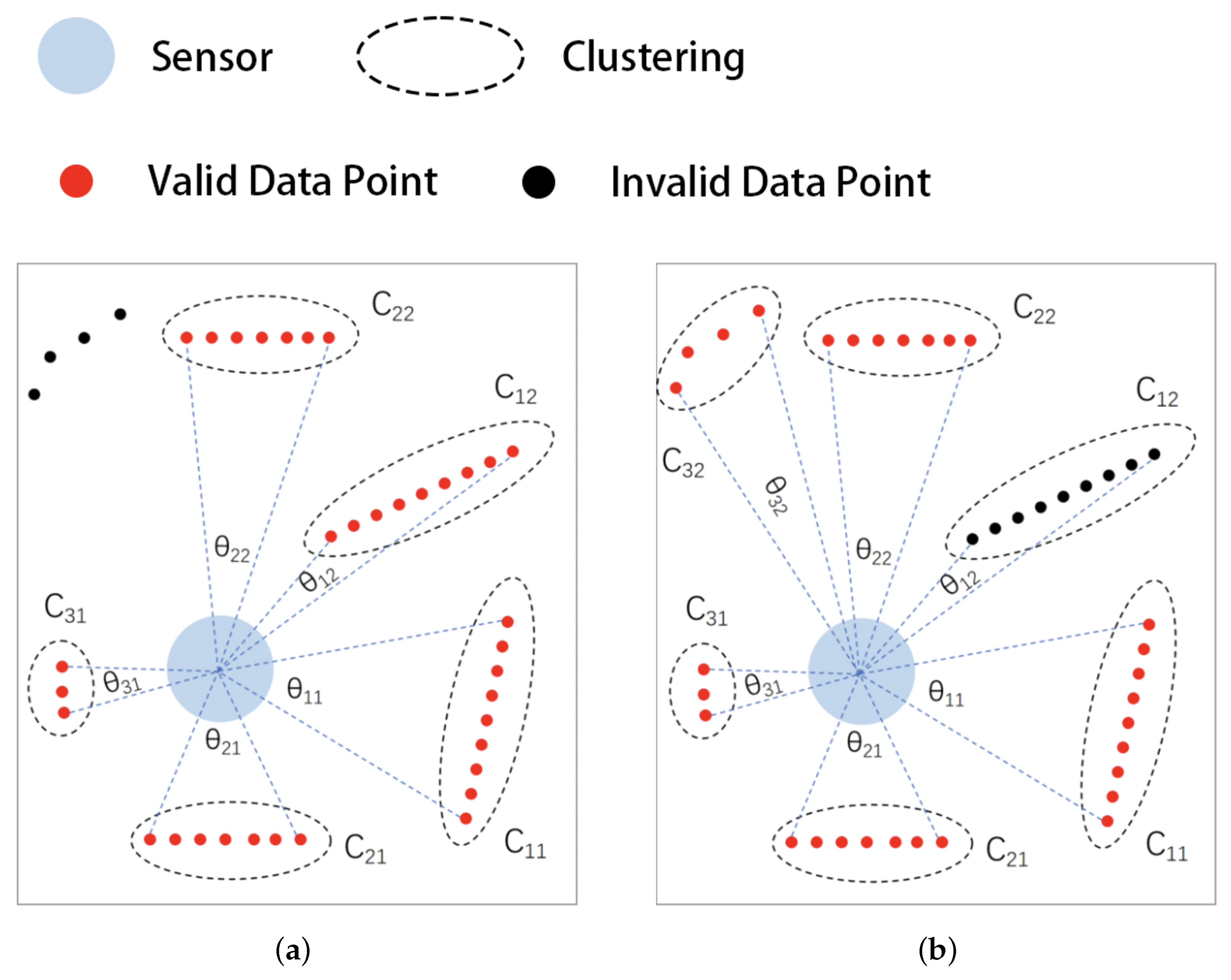

4.1. Clustering Filtering Algorithm Based on Radial Distance and Tangential Span

- (1) In the initial state, all data points are free points.

- (2) Select the free point with the smallest serial number and mark it as a non-free point.

- (3) Set the proximity distance threshold. As the analysis in Section 3.1 shows, the density of the point cloud depends on the measured distance: the larger the measured distance, the sparser the data. A fixed distance threshold therefore retains too many data points close to the robot and over-filters those far from it. For this reason, the proximity distance threshold in this paper is set dynamically according to the radial distance of each data point, as given in Equation (5). The threshold is determined by the measured distance of the data point, the angular accuracy of the sensor, the maximum angle allowed between the obstacle surface and the tangent direction of the projected light, and a magnification factor, as shown in Figure 5a.

  Figure 5. Reflected light on different surfaces: (a) proximity distance thresholds and (b) span thresholds.

- (4) Search for free points whose distance from the selected point is less than the proximity distance threshold, group them into a point cluster with the selected point, and mark them as non-free points.

- (5) Repeat steps 2 to 4 for all points in the point cluster to obtain the point cluster $C = \{p_1, p_2, \ldots, p_M\}$, where M is the number of points and $p_i$ denotes the i-th data point in the point cluster.

- (6) Calculate the tangential span of the point cluster. Traditional filtering algorithms judge point clusters by the number, distance, or density of their data points. In indoor sweeping robot scenarios, however, these indicators can easily become unreliable because of factors such as the distance and alignment direction of the sampling points. This paper therefore uses the tangential span of the point cluster as the evaluation index, computed by Equation (6) from the outgoing-light angles corresponding to data points i and j in the cluster.

- (7) Set the span threshold as given in Equation (7). The threshold depends on the maximum and minimum measurement distances of the sensor, the minimum tangential span allowed at the maximum measurement distance, the minimum tangential span allowed at the minimum measurement distance, and the average measured distance of all data points in the point cluster, as shown in Figure 5b.

- (8) If the tangential span of the point cluster is at least the span threshold, the data points in the cluster are considered valid; otherwise, they are deleted.

- (9) Repeat steps 2 to 8 until there are no more free points in the point cloud.
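A minimal Python sketch of steps 1 to 5 and step 9 is given below, assuming each scan point is stored as a (measured distance, beam angle) pair in scan order. Equation (5) is not reproduced in this text, so `proximity_threshold` uses the adaptive-breakpoint form `k * r * sin(dθ) / sin(λ - dθ)` as an assumed stand-in built from the same four quantities (measured distance r, angular accuracy dθ, maximum incidence angle λ, magnification factor k). The function names and parameter values are hypothetical and this is not the authors' code.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]  # (measured distance r in metres, beam angle in radians)


def proximity_threshold(r: float, d_theta: float, lam: float, k: float) -> float:
    """Distance-dependent proximity threshold.

    ASSUMPTION: adaptive-breakpoint form used as a stand-in for Equation (5):
    the gap between two neighbouring beams (d_theta apart) hitting a surface
    inclined at angle lam to the beam, scaled by the magnification factor k.
    """
    if lam <= d_theta:
        raise ValueError("lam must be larger than the angular accuracy d_theta")
    return k * r * math.sin(d_theta) / math.sin(lam - d_theta)


def to_xy(p: Point) -> Tuple[float, float]:
    r, theta = p
    return r * math.cos(theta), r * math.sin(theta)


def cluster_scan(points: List[Point], d_theta: float, lam: float, k: float) -> List[List[int]]:
    """Region growing over free points; returns clusters as lists of point indices."""
    xy = [to_xy(p) for p in points]
    free = [True] * len(points)                 # step 1: every point starts free
    clusters: List[List[int]] = []
    for seed in range(len(points)):             # step 2: smallest free index first
        if not free[seed]:
            continue
        free[seed] = False
        cluster = [seed]
        i = 0
        while i < len(cluster):                 # steps 4-5: grow from every member
            cur = cluster[i]
            thr = proximity_threshold(points[cur][0], d_theta, lam, k)  # step 3
            for j in range(len(points)):
                if free[j] and math.dist(xy[cur], xy[j]) < thr:
                    free[j] = False
                    cluster.append(j)
            i += 1
        clusters.append(cluster)
    return clusters                             # step 9: stops when no free points remain
```

With dθ = 1°, λ = 10° and k = 1.5, five wall points 1° apart at 2 m fall into one cluster, while an isolated return at 1 m and 10° forms a single-point cluster that the span test would then judge. The brute-force neighbour search is O(n²) overall; restricting the search to a window of neighbouring beam indices would be a natural speed-up for dense scans.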

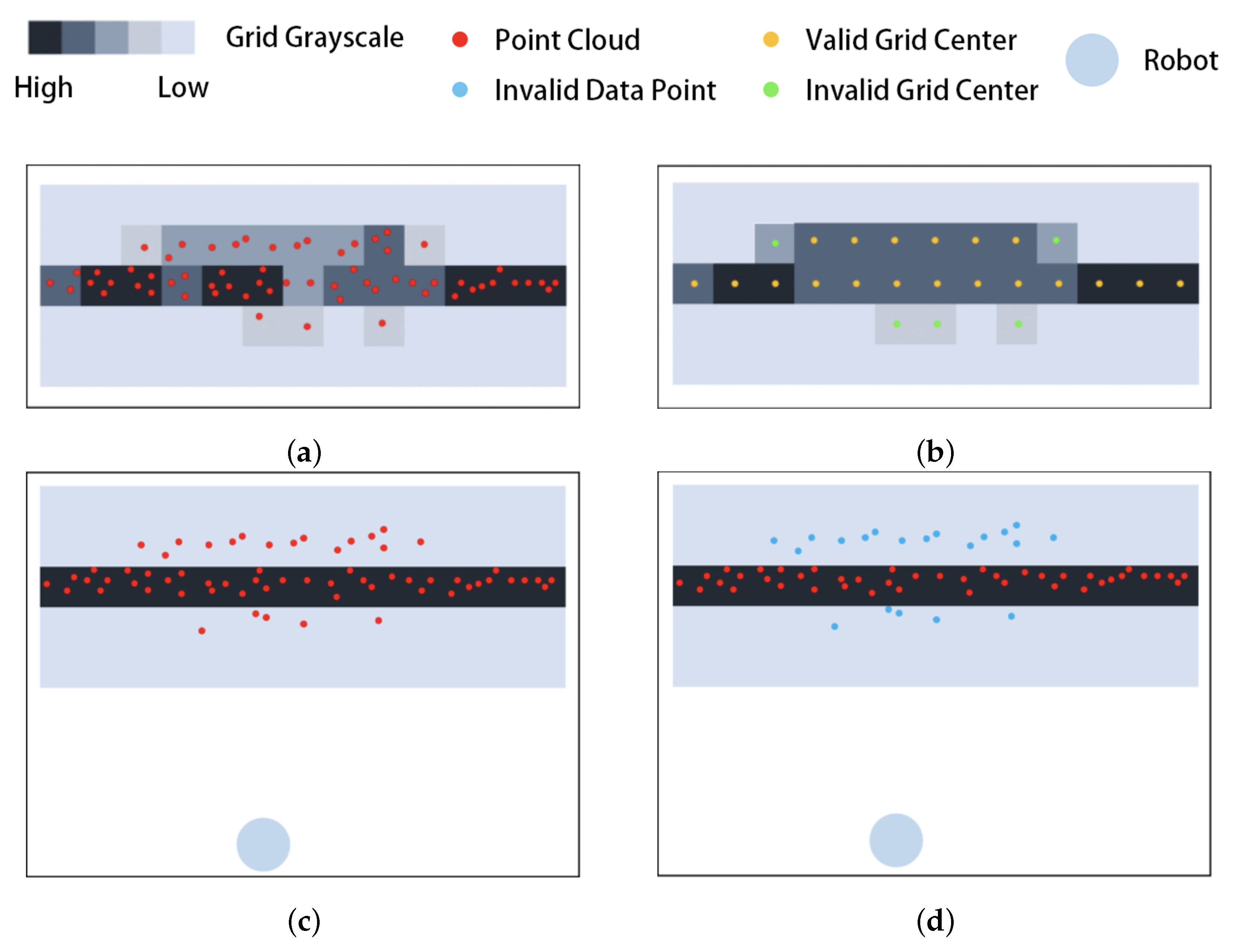
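Steps 6 to 8 can be sketched in the same hedged way. Equations (6) and (7) are not reproduced in this text, so the code assumes that the tangential span is the largest difference between the outgoing-light angles of any two points in the cluster, and that the span threshold interpolates linearly between the minimum spans allowed at the sensor's minimum and maximum measurement distances, evaluated at the cluster's mean measured distance. Both forms and all function names are illustrative assumptions, not the paper's equations.

```python
from statistics import mean
from typing import List, Tuple

Point = Tuple[float, float]  # (measured distance r, beam angle theta in radians)


def tangential_span(cluster: List[Point]) -> float:
    """ASSUMED form of Equation (6): the largest difference between the
    outgoing-light angles of any two points i, j in the cluster.
    Assumes the cluster's angles do not wrap around +/-pi."""
    angles = [theta for _, theta in cluster]
    return max(angles) - min(angles)


def span_threshold(r_mean: float, r_min: float, r_max: float,
                   s_at_rmin: float, s_at_rmax: float) -> float:
    """ASSUMED form of Equation (7): linear interpolation between the minimum
    span allowed at r_min and the minimum span allowed at r_max."""
    t = (r_mean - r_min) / (r_max - r_min)
    t = min(max(t, 0.0), 1.0)  # clamp to the sensor's measurement range
    return s_at_rmin + t * (s_at_rmax - s_at_rmin)


def keep_cluster(cluster: List[Point], r_min: float, r_max: float,
                 s_at_rmin: float, s_at_rmax: float) -> bool:
    """Step 8: keep the cluster only if its span reaches the threshold."""
    r_mean = mean(r for r, _ in cluster)
    return tangential_span(cluster) >= span_threshold(r_mean, r_min, r_max,
                                                      s_at_rmin, s_at_rmax)
```

Under these assumptions a single-point cluster always has zero span and is deleted, which matches the intent of removing isolated jump points. A cluster whose angles straddle the ±π seam would need its angles unwrapped before computing the span.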

4.2. Point Cloud Filtering Algorithm Based on Grid Penetration Modeling

- (1) Initialization: reset the frame count and create a cache array that stores the point cloud of each frame over a period of time.

- (2) Acquire a new frame of point cloud and increment the frame count by 1.

- (3) Grid update. Generate a grid map with a fixed grid width (set to 5 in the experiments reported in this paper). Map the data points in the cache array to the grid space one by one, and increment the count of the corresponding grid cell for each mapped point.

- (4) Point cloud caching. Add the new frame to the point cloud cache and record its timestamp. Any frame in the cache whose survival time exceeds the set maximum survival time is removed, so that the cached data points stay current.

- (5) Frame count judgment. During the initial period of the robot's operation, the frame count is still below the minimum number of frames required to execute the next step, so there is not enough statistical information; jump to step 2. Once the frame count reaches this minimum, the statistical information is sufficient; continue to the next step.
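Steps 1 to 5 amount to a time-limited frame cache plus per-cell hit counts. The sketch below assumes the points are already transformed into world coordinates (in metres) and that each frame carries a timestamp in seconds; the class `FrameCache` and its parameters `cell`, `max_age`, and `min_frames` are hypothetical names for the grid width, maximum survival time, and minimum frame count described above. Following the step order in the text, the counts are rebuilt from the cached frames before the new frame is added, so the newest frame is never counted against itself. `obstacle_cells` anticipates the start of step 6 by returning the centres of cells whose count exceeds an obstacle-count threshold.

```python
import math
from collections import Counter, deque
from typing import Deque, Iterable, List, Tuple

XY = Tuple[float, float]  # point in world coordinates (metres)


class FrameCache:
    """Hypothetical helper for steps 1-5: a time-limited cache of frames
    and the per-cell hit counts derived from it."""

    def __init__(self, cell: float, max_age: float, min_frames: int):
        self.cell = cell              # grid width
        self.max_age = max_age        # maximum survival time of a cached frame
        self.min_frames = min_frames  # frames needed before filtering starts
        self.frame_count = 0          # step 1: frame count starts at zero
        self.frames: Deque[Tuple[float, List[XY]]] = deque()
        self.counts: Counter = Counter()

    def cell_of(self, p: XY) -> Tuple[int, int]:
        return (math.floor(p[0] / self.cell), math.floor(p[1] / self.cell))

    def push(self, points: Iterable[XY], stamp: float) -> bool:
        """Steps 2-5 for one frame; returns True once enough frames have arrived."""
        self.frame_count += 1  # step 2
        # Step 3: rebuild the grid counts from the frames already in the cache.
        self.counts = Counter(self.cell_of(p) for _, f in self.frames for p in f)
        # Step 4: cache the new frame and drop frames older than max_age.
        self.frames.append((stamp, list(points)))
        while self.frames and stamp - self.frames[0][0] > self.max_age:
            self.frames.popleft()
        # Step 5: filtering only starts after min_frames frames.
        return self.frame_count >= self.min_frames

    def obstacle_cells(self, min_count: int) -> List[XY]:
        """Centres of cells whose count exceeds the obstacle-count threshold."""
        return [((i + 0.5) * self.cell, (j + 0.5) * self.cell)
                for (i, j), c in self.counts.items() if c > min_count]
```

Rebuilding the counter on every frame keeps the sketch short; an incremental version would add the new frame's cells and subtract the cells of evicted frames instead.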

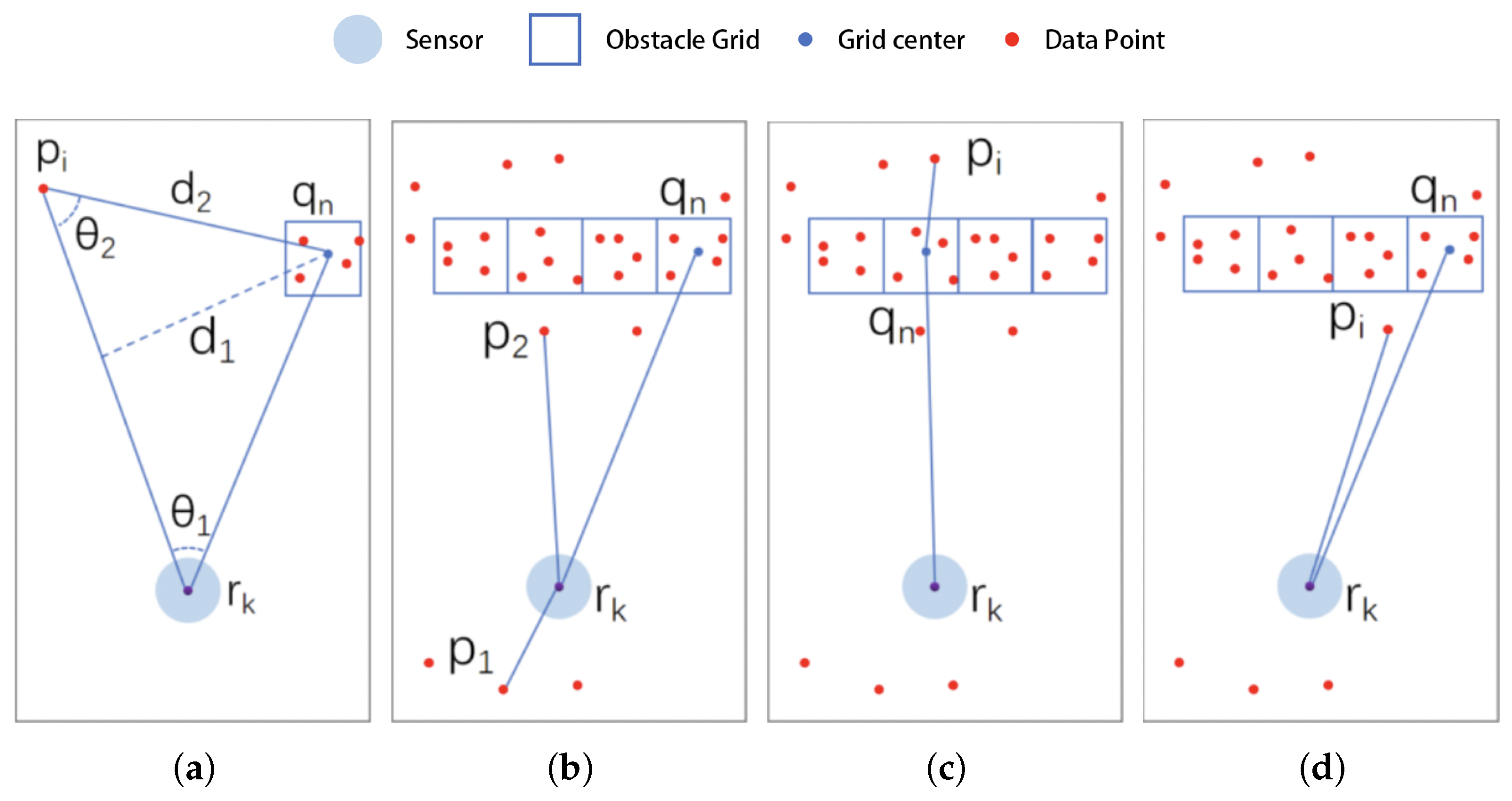

- (6) Penetration modeling. Grid cells whose count value exceeds the set obstacle-count threshold are considered obstacle grids; each obstacle grid is represented by the position of its center in the world coordinate system, and N is the number of obstacle grids. Take a data point from the latest frame of the point cloud and determine its position relative to the obstacle grids and to the robot, as shown in Figure 6.

  Figure 6. Penetration model: (a) parameters, (b) no obstacles in the way, (c) penetration of obstacles, (d) no obstacles in the way.

  In Figure 6a, two angles and two distances are defined from the relative positions of the robot, the obstacle grid, and the data point, one of the distances being that between the data point and the obstacle. For each data point, the following judgment operations are performed:

  - (a) Take the next obstacle grid point.

  - (b) Calculate the corresponding quantity from Figure 6a. If it indicates that the data point is not on the same side of the robot as the obstacle (as shown in Figure 6b), skip to step 6.

  - (c) Calculate the corresponding quantity from Figure 6a. If it indicates that the obstacle does not shade the transmitted light (as shown in Figure 6b), skip to step 6.

  - (d) Calculate the corresponding quantity from Figure 6a. If it indicates that the projected light traverses the obstacle grid, the data point is judged to be a misdetected point (as shown in Figure 6c); skip to step 7.

  - (e) Calculate the corresponding quantity from Figure 6a. If it indicates that the data point is too close to the obstacle grid, the data point is determined to be a misdetected point (as shown in Figure 6d); skip to step 7.

  - (f) Repeat steps (a) to (e) until all obstacle grids have been compared.

- (7) Repeat step 6 until all data points in the new frame have been processed.

- (8) Repeat steps 2 to 6 until all data frames have been processed.
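The per-point test of steps 6 and 7 can be approximated with plain vector geometry. Because the angles, distances, and thresholds of Figure 6a are not reproduced in this text, the criteria below are assumptions rather than the paper's: an obstacle cell is ignored if it lies behind the robot relative to the beam, or if the beam passes farther than half a cell width from the cell centre; otherwise the point is flagged as misdetected when the beam reaches the cell more than `margin` before the measured return, i.e., the light appears to have passed through a known obstacle. The separate proximity check of sub-step (e) is not modelled, and `is_misdetected`, `filter_frame`, `half_cell`, and `margin` are hypothetical names.

```python
import math
from typing import List, Sequence, Tuple

XY = Tuple[float, float]  # world coordinates in metres


def is_misdetected(robot: XY, point: XY, obstacles: Sequence[XY],
                   half_cell: float, margin: float) -> bool:
    """Assumed penetration test: does the beam robot->point pass through an
    obstacle cell lying at least `margin` in front of the measured return?"""
    vx, vy = point[0] - robot[0], point[1] - robot[1]
    rng = math.hypot(vx, vy)
    if rng == 0.0:
        return False
    for ox, oy in obstacles:                   # loop over obstacle cells, cf. (a) and (f)
        wx, wy = ox - robot[0], oy - robot[1]
        along = (vx * wx + vy * wy) / rng      # obstacle position projected onto the beam
        if along <= 0.0:                       # obstacle behind the robot w.r.t. this beam
            continue
        across = abs(vx * wy - vy * wx) / rng  # perpendicular distance, cell centre to beam
        if across > half_cell:                 # beam passes beside the cell (cell treated as a disc)
            continue
        if along < rng - margin:               # beam crosses the cell well before its return
            return True
    return False


def filter_frame(robot: XY, frame: List[XY], obstacles: Sequence[XY],
                 half_cell: float, margin: float) -> List[XY]:
    """Step 7: keep only the points of the latest frame that pass the test."""
    return [p for p in frame
            if not is_misdetected(robot, p, obstacles, half_cell, margin)]
```

For a robot at the origin and a known obstacle cell centred at (1, 0), a return at (2, 0) lies straight behind the obstacle and is removed, whereas a return at (1.01, 0), essentially on the obstacle surface, and a return at (2, 1), off to the side, are kept.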

4.3. Comparison with Existing Filtering Methods

4.3.1. Comparison of the Proposed Clustering Filtering Algorithm with the State of the Art

4.3.2. Comparison of the Proposed Grid Penetration Model-Based Filtering Algorithm with the State of the Art

5. Experiments

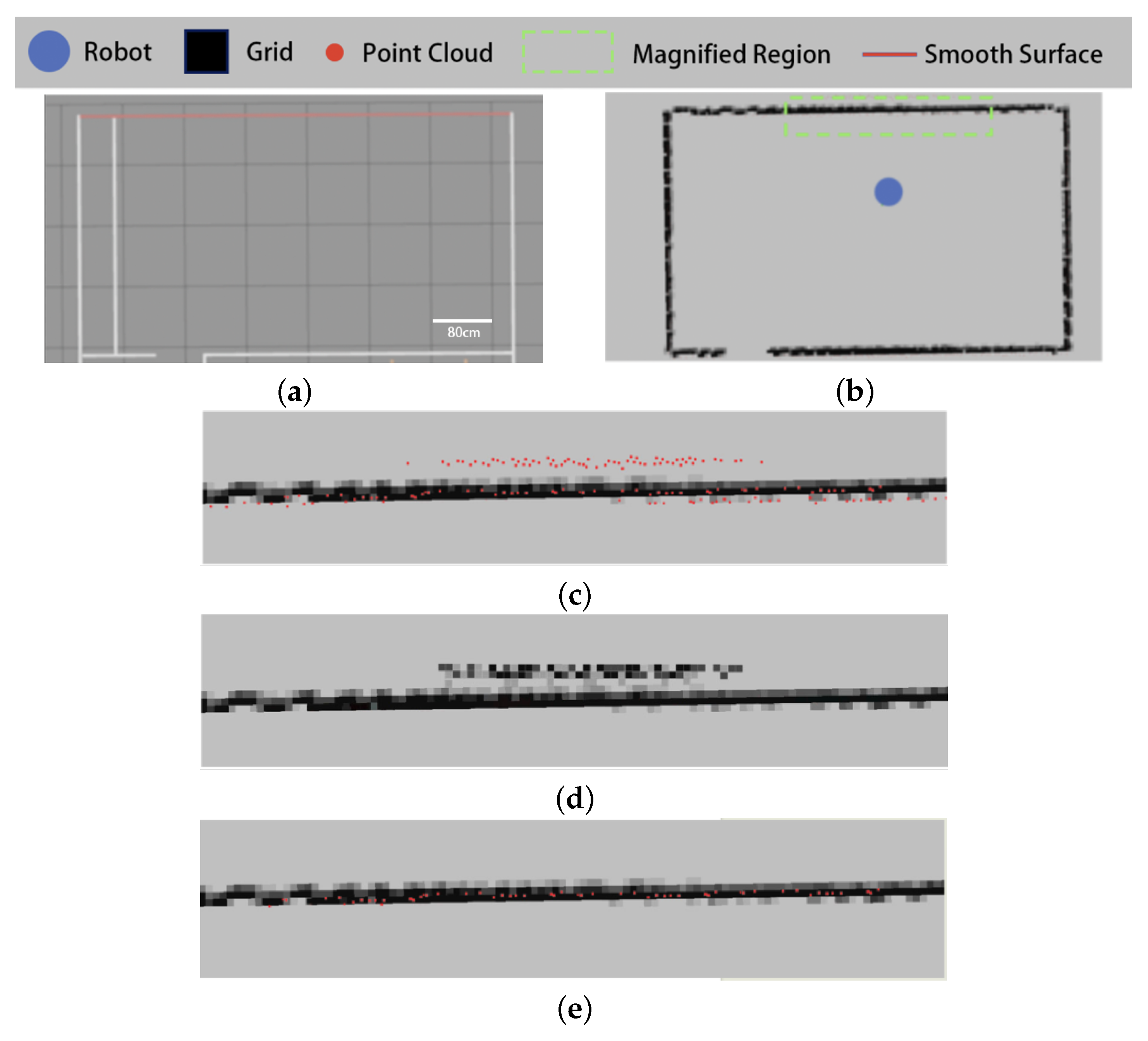

5.1. Experimental Environment

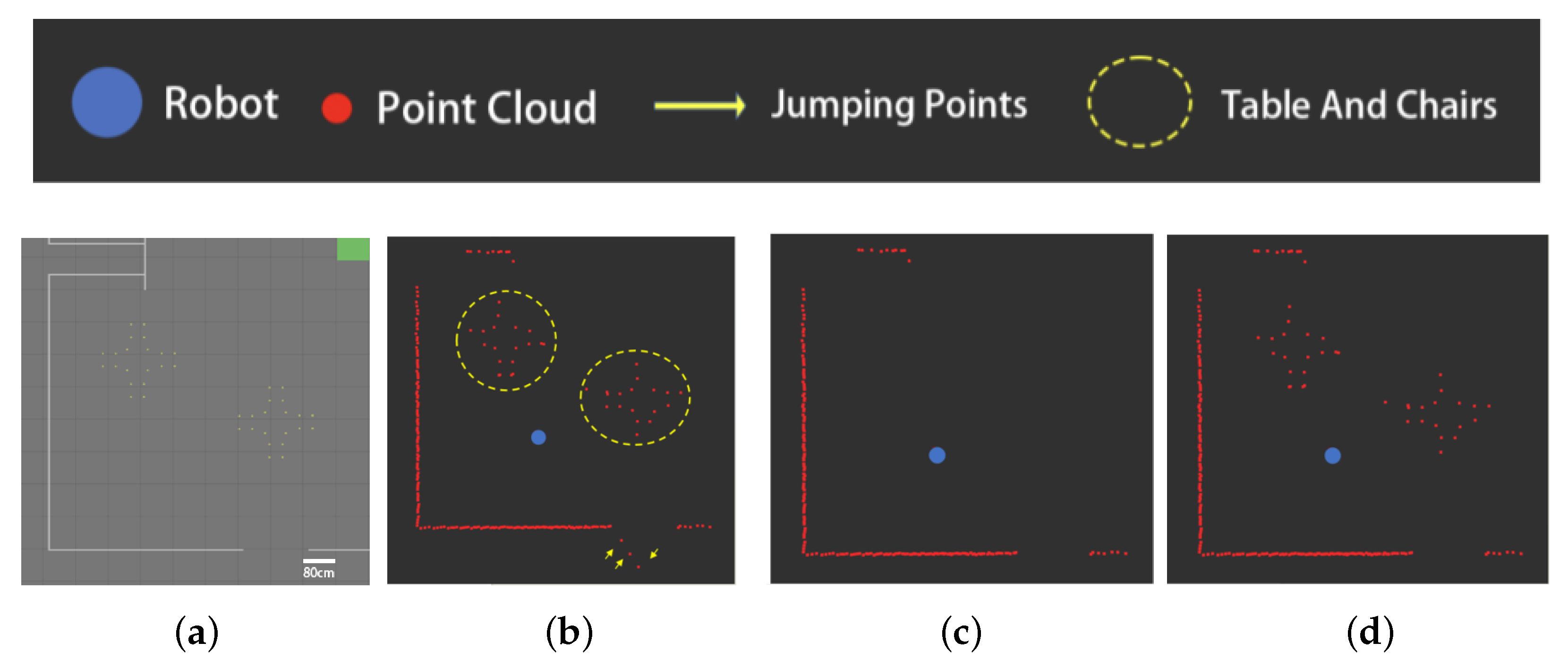

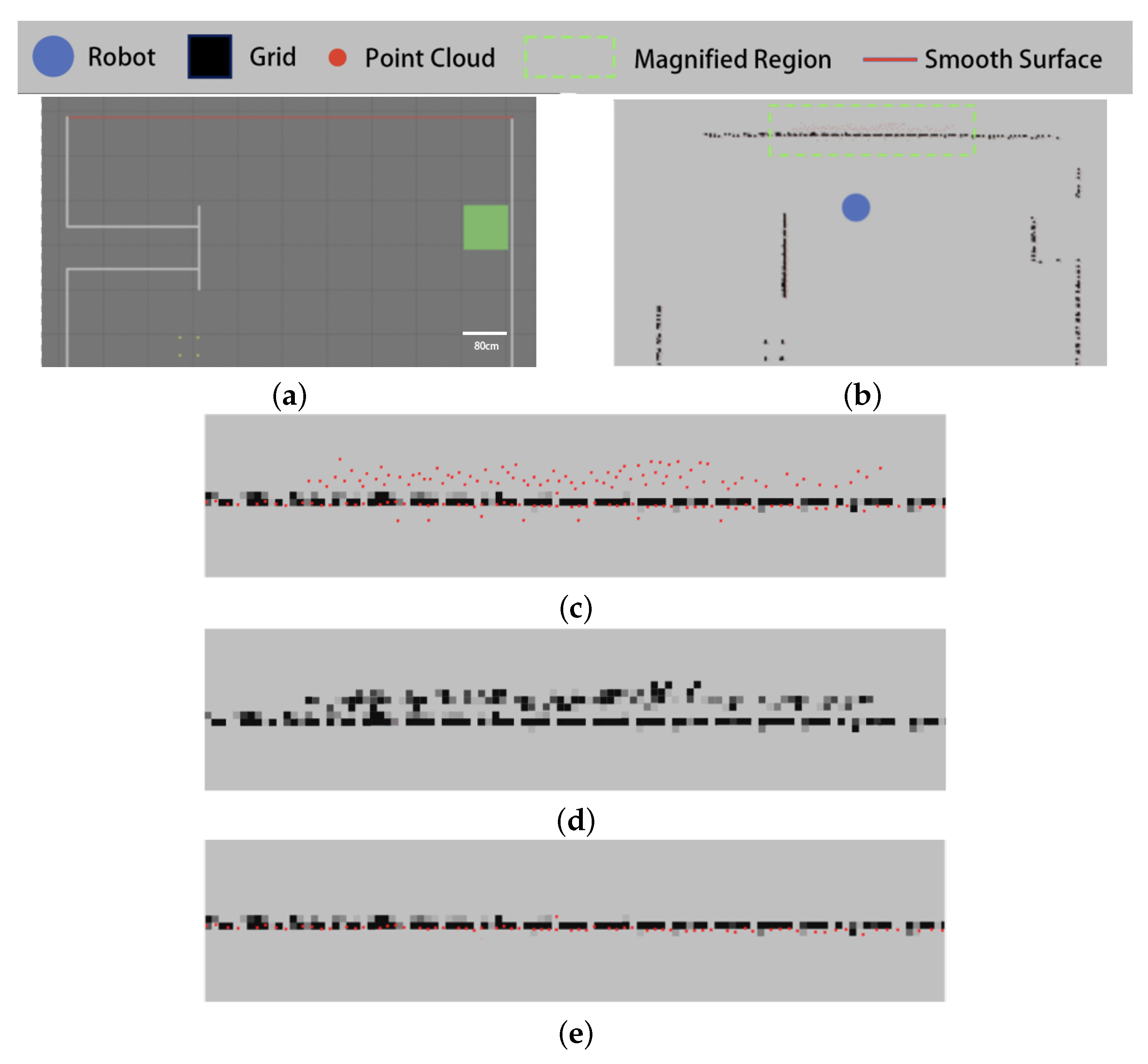

5.2. Experiments on the Clustering Filtering Algorithm Based on Radial Distance and Tangential Span

5.3. Experiments on the Filtering Algorithm Based on the Grid Penetration Model

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Cao, Y.; Huang, Y.; Ni, J. A Two-Step Filtering Approach for Indoor LiDAR Point Clouds: Efficient Removal of Jump Points and Misdetected Points. Sensors 2025, 25, 5937. https://doi.org/10.3390/s25195937

Cao Y, Huang Y, Ni J. A Two-Step Filtering Approach for Indoor LiDAR Point Clouds: Efficient Removal of Jump Points and Misdetected Points. Sensors. 2025; 25(19):5937. https://doi.org/10.3390/s25195937

Chicago/Turabian Style: Cao, Yibo, Yonghao Huang, and Junheng Ni. 2025. "A Two-Step Filtering Approach for Indoor LiDAR Point Clouds: Efficient Removal of Jump Points and Misdetected Points" Sensors 25, no. 19: 5937. https://doi.org/10.3390/s25195937

APA Style: Cao, Y., Huang, Y., & Ni, J. (2025). A Two-Step Filtering Approach for Indoor LiDAR Point Clouds: Efficient Removal of Jump Points and Misdetected Points. Sensors, 25(19), 5937. https://doi.org/10.3390/s25195937