A Lightweight Hybrid Detection System Based on the OpenMV Vision Module for an Embedded Transportation Vehicle

Abstract

1. Introduction

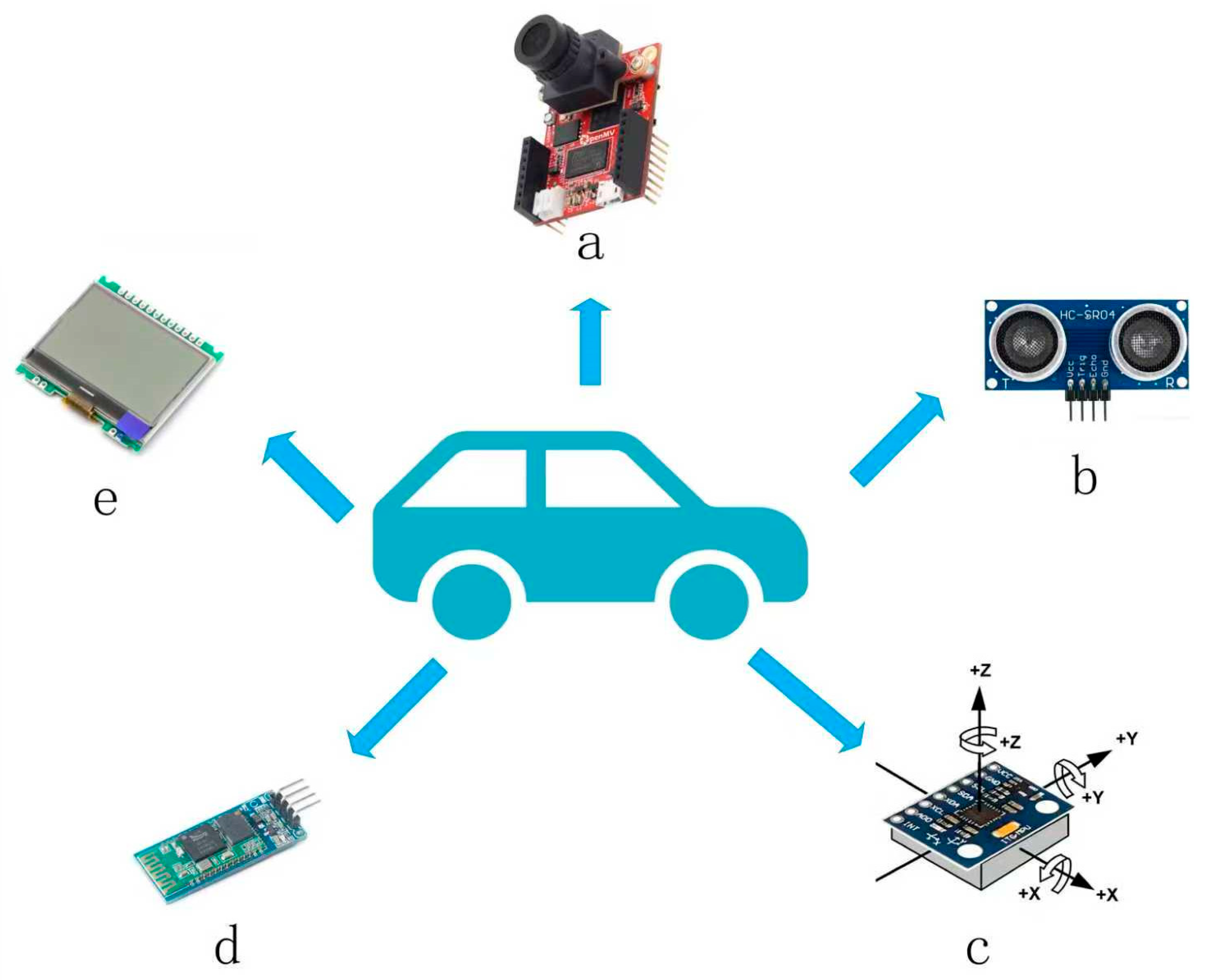

2. Hardware and System Design

- OLED Display System: Equipped with a high-contrast OLED display, it shows motion parameters, sensor data, and functional status in real time, providing an intuitive human–machine interface.

- Ultrasonic Obstacle Avoidance System: An HC-SR04 ultrasonic module scans a 180° fan-shaped area and detects obstacles within 0.02–4 m in real time. Combined with dynamic obstacle avoidance algorithms, the system automatically plans safe paths, improving operational safety in complex environments.

- MPU6050 Attitude Correction Unit: This six-axis inertial measurement unit collects three-axis acceleration and angular velocity data in real time, and the motion attitude is estimated with a complementary filtering algorithm. The unit provides dynamic trajectory correction: when an external force causes a heading deviation, the vehicle recalibrates its attitude and returns to the preset route within 200 ms. It also supports precise rotation control over the full 0–360° range.

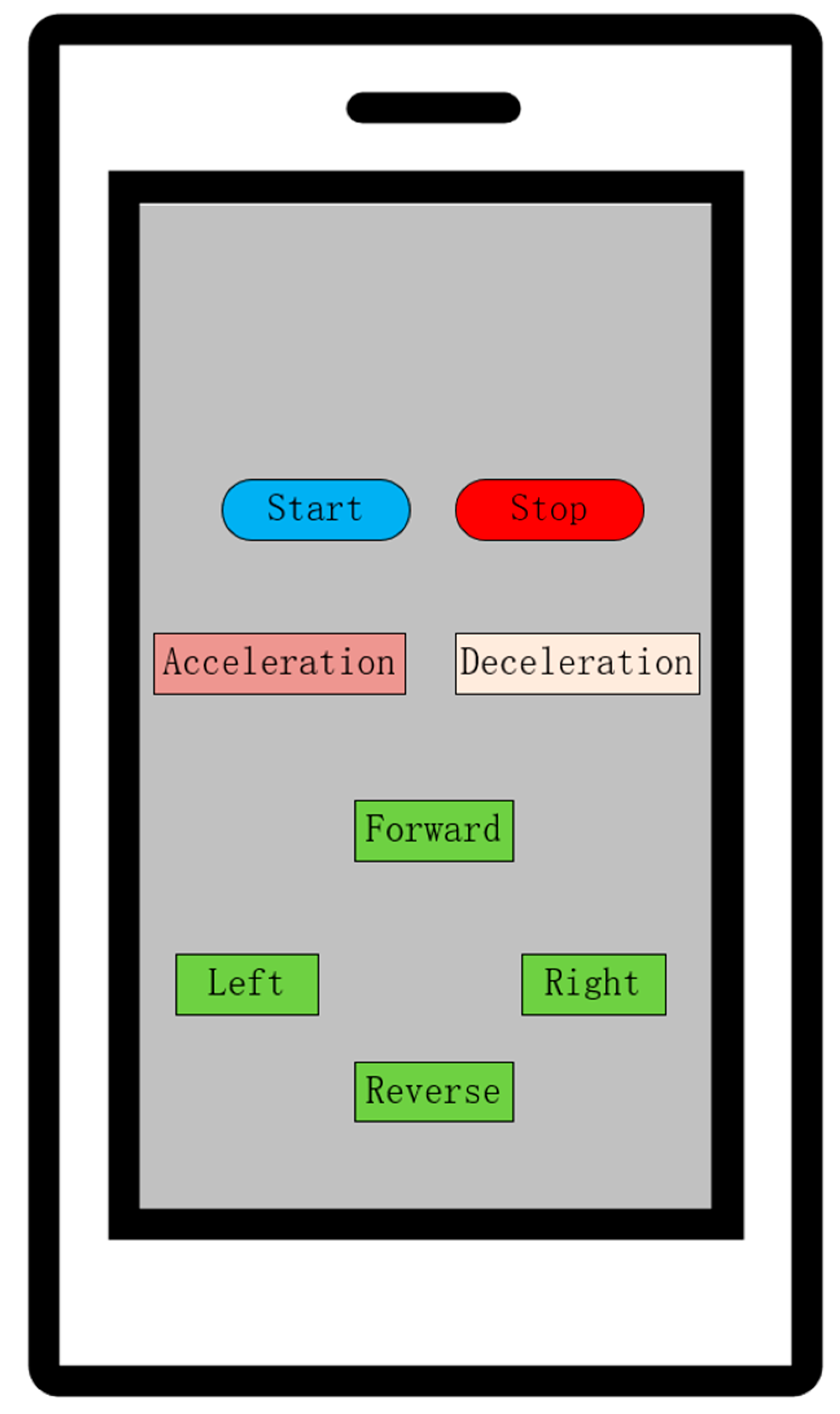

- Bluetooth Wireless Control Module: Using the BLE 5.0 communication protocol, it provides wireless control within a 10 m radius, allowing experimenters to remotely control the basic actions of the cart (start/stop, speed adjustment, and steering) from a mobile app.

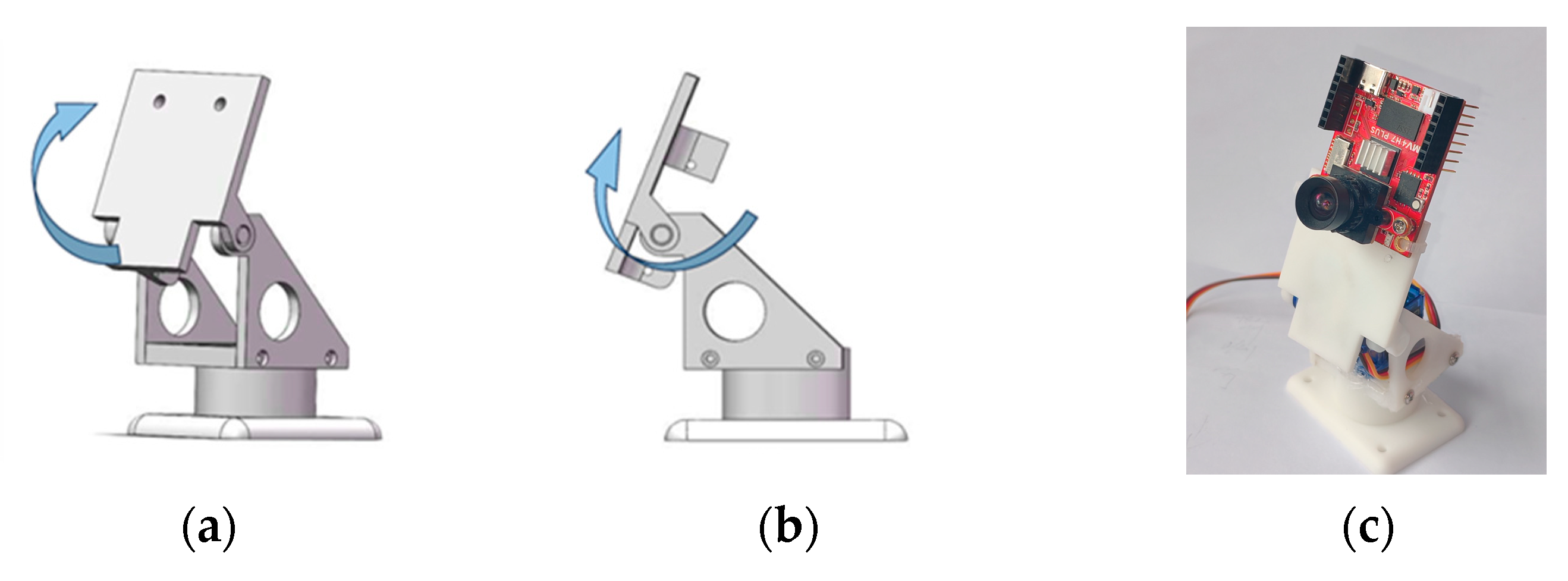

- OpenMV Visual Navigation System: Equipped with an OV5640 camera (OmniVision Technologies, Inc., Santa Clara, CA, USA), it recognizes and locates red-marked target areas in real time using machine vision algorithms. The STM32 main control chip processes the image data and generates motion control commands, forming a closed “visual perception–decision–control” loop that supports precise arrival at target areas and dynamic following in cargo transportation scenarios.
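The perception-to-decision step of this loop can be illustrated with a minimal pure-Python sketch: classify red pixels with fixed RGB thresholds, take their centroid, and turn the centroid's horizontal offset into a coarse steering command. The thresholds, the dead band, and the command names (`LEFT`, `RIGHT`, `FORWARD`, `SEARCH`) are hypothetical; on the OpenMV camera itself this step would normally rely on the firmware's built-in color blob detection rather than a Python pixel loop.

```python
from typing import List, Optional, Tuple

Pixel = Tuple[int, int, int]  # (R, G, B), each 0-255

# Hypothetical thresholds for a "red" marker; real values must be tuned on site.
R_MIN, G_MAX, B_MAX = 150, 90, 90
DEAD_BAND = 0.1  # normalized offset treated as "centered" (assumption)


def red_centroid(image: List[List[Pixel]]) -> Optional[Tuple[float, float]]:
    """Return the (x, y) centroid of pixels classified as red, or None."""
    sx = sy = n = 0
    for y, row in enumerate(image):
        for x, (r, g, b) in enumerate(row):
            if r >= R_MIN and g <= G_MAX and b <= B_MAX:
                sx += x
                sy += y
                n += 1
    if n == 0:
        return None
    return sx / n, sy / n


def steering_command(image: List[List[Pixel]]) -> str:
    """Map the red target's horizontal offset to a coarse motion command."""
    c = red_centroid(image)
    if c is None:
        return "SEARCH"  # no target visible in this frame
    half = (len(image[0]) - 1) / 2
    offset = (c[0] - half) / half  # -1 (far left) .. +1 (far right)
    if offset < -DEAD_BAND:
        return "LEFT"
    if offset > DEAD_BAND:
        return "RIGHT"
    return "FORWARD"
```

Replacing the three-way decision with a proportional term (offset multiplied by a steering gain) would be a natural refinement for smoother following.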

2.1. Speed and Mileage Display of DC Gear Reduction Encoder Motor and OLED Screen
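Speed and mileage for an encoder gear motor are typically derived from the pulse count accumulated over a fixed sampling period. The sketch below assumes ×4 quadrature decoding, a 13-line encoder, a 1:30 gear ratio, and a 65 mm wheel; all of these values are illustrative placeholders, not the parameters of the vehicle described here.

```python
import math

# Hypothetical drivetrain parameters (placeholders, not taken from this paper).
ENCODER_LINES = 13       # encoder lines per motor-shaft revolution
QUAD_FACTOR = 4          # x4 quadrature decoding (both edges of channels A and B)
GEAR_RATIO = 30          # motor revolutions per wheel revolution
WHEEL_DIAMETER_M = 0.065

COUNTS_PER_WHEEL_REV = ENCODER_LINES * QUAD_FACTOR * GEAR_RATIO
WHEEL_CIRCUMFERENCE_M = math.pi * WHEEL_DIAMETER_M


def wheel_speed_mps(delta_counts: int, dt_s: float) -> float:
    """Signed linear wheel speed from counts accumulated over one sampling period."""
    revs = delta_counts / COUNTS_PER_WHEEL_REV
    return revs * WHEEL_CIRCUMFERENCE_M / dt_s


class Odometer:
    """Accumulates travelled distance (mileage) from successive count deltas."""

    def __init__(self) -> None:
        self.distance_m = 0.0

    def update(self, delta_counts: int) -> float:
        # abs(): reverse travel also adds to mileage (a design choice)
        self.distance_m += abs(delta_counts) / COUNTS_PER_WHEEL_REV * WHEEL_CIRCUMFERENCE_M
        return self.distance_m
```

The resulting speed and distance values are what an OLED routine would format for display once per sampling period.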

2.2. MPU6050 Six-Axis Sensor Attitude Correction Technology
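The complementary filtering used by the MPU6050 attitude correction unit fuses the gyroscope, which is accurate over short intervals but drifts, with the accelerometer, which is noisy but drift-free. A minimal sketch for the pitch angle follows; the weighting α = 0.98 and the axis convention are assumptions. Because gravity gives no heading reference, yaw on a six-axis MPU6050 can only be obtained by integrating the gyroscope and will drift slowly over time.

```python
import math

ALPHA = 0.98  # gyroscope weight (assumed value)


def accel_pitch_deg(ax: float, ay: float, az: float) -> float:
    """Pitch angle in degrees implied by the measured gravity vector (units cancel)."""
    return math.degrees(math.atan2(-ax, math.sqrt(ay * ay + az * az)))


def complementary_update(angle_deg: float, gyro_rate_dps: float,
                         accel_angle_deg: float, dt_s: float,
                         alpha: float = ALPHA) -> float:
    """One filter step: integrate the gyro rate, then blend toward the accel angle."""
    gyro_estimate = angle_deg + gyro_rate_dps * dt_s
    return alpha * gyro_estimate + (1.0 - alpha) * accel_angle_deg
```

A larger α trusts the gyroscope more and suppresses accelerometer noise, at the cost of slower correction of gyroscope drift.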

2.3. Ultrasonic Module HC-SR04 and Mobile Phone Remote Control
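The HC-SR04 measures distance by timing its echo pulse, whose width covers the round trip of the ultrasonic burst. A minimal conversion sketch follows; it assumes the common linear approximation for the speed of sound in dry air, c ≈ 331.3 + 0.606·T m/s (about 343 m/s at 20 °C), and rejects readings outside the 0.02–4 m working range quoted for the module. The function names are hypothetical.

```python
from typing import Optional

RANGE_MIN_M = 0.02  # working range quoted for the HC-SR04 in this paper
RANGE_MAX_M = 4.0


def speed_of_sound_mps(temp_c: float = 20.0) -> float:
    """Linear approximation of the speed of sound in dry air."""
    return 331.3 + 0.606 * temp_c


def echo_to_distance_m(echo_us: float, temp_c: float = 20.0) -> Optional[float]:
    """Convert an echo pulse width in microseconds to a one-way distance.

    The pulse spans the round trip, hence the division by 2. Returns None
    when the result lies outside the sensor's usable range.
    """
    distance = echo_us * 1e-6 * speed_of_sound_mps(temp_c) / 2.0
    if distance < RANGE_MIN_M or distance > RANGE_MAX_M:
        return None
    return distance
```

For example, an echo of about 5.83 ms at 20 °C corresponds to roughly 1 m.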

3. Experiment and Verification

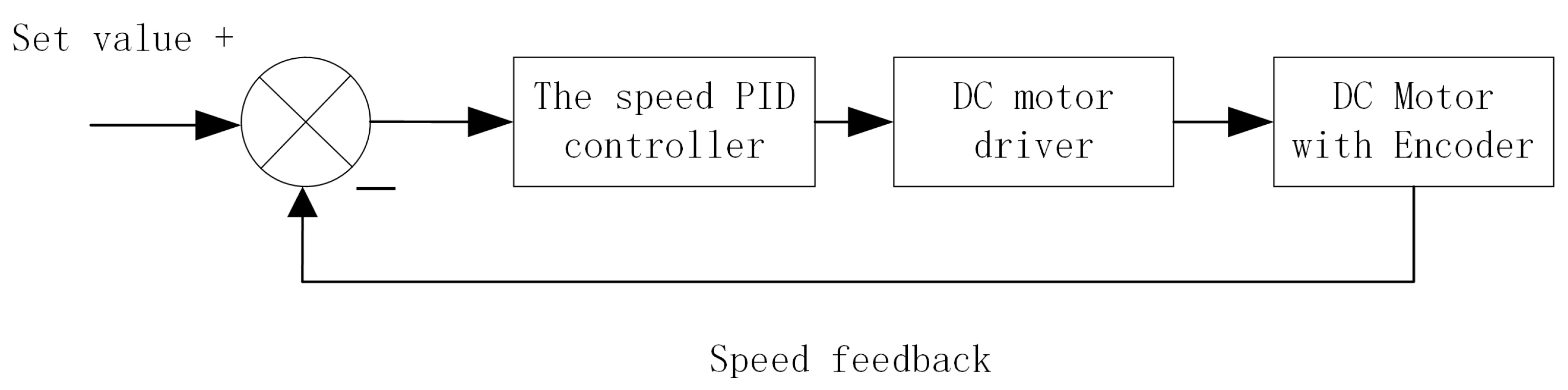

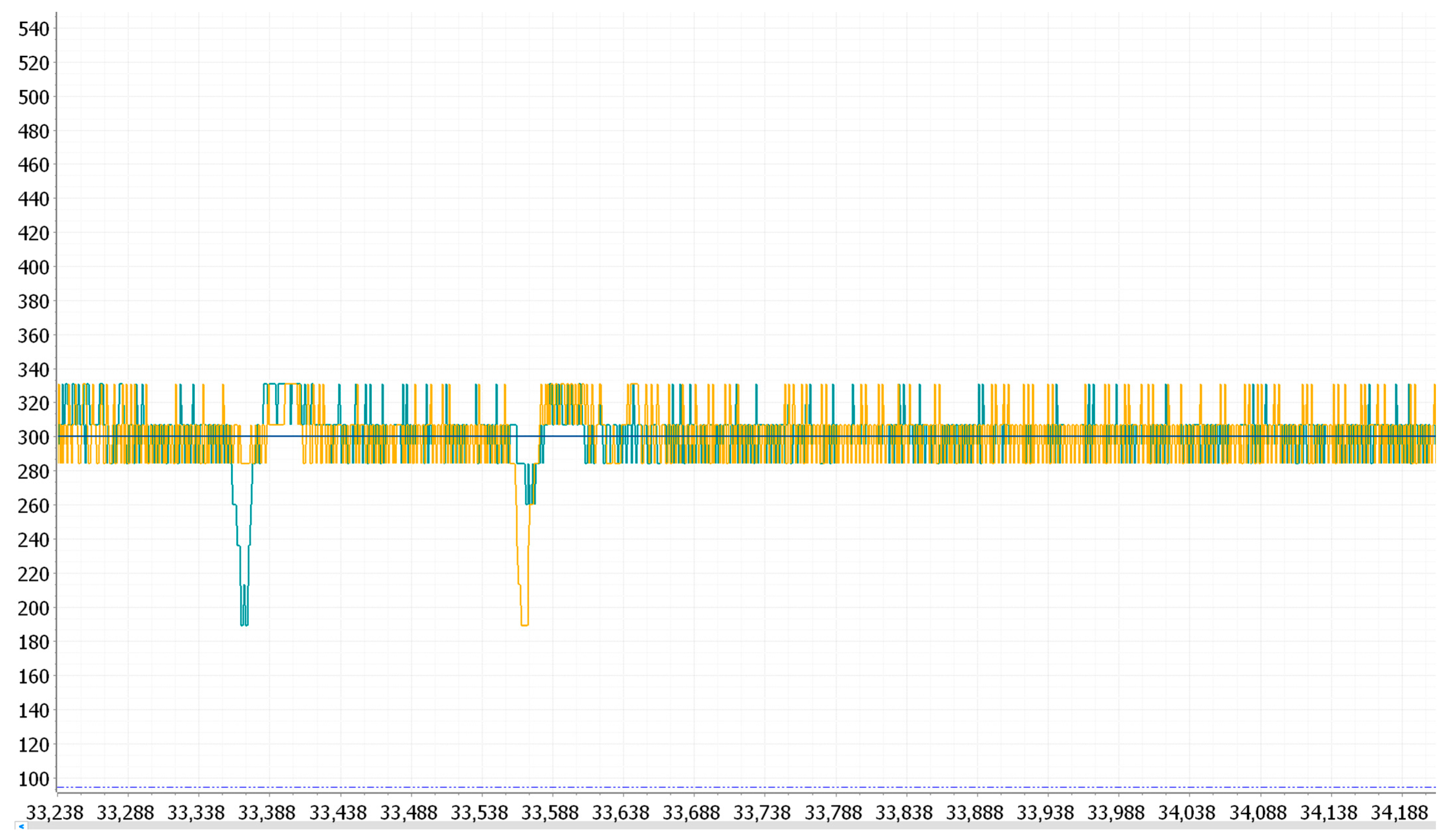

3.1. A4950 Motor Drive and PWM

3.2. Motor Speed and Direction Control
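With an A4950 full-bridge driver, speed and direction are commonly set by applying PWM to one logic input while holding the other low, and swapping the two inputs to reverse; holding both inputs low lets the motor coast. The sketch maps a signed speed command to the two timer compare values. The timer period of 7200 counts, the ±100 command scale, and the function name are hypothetical.

```python
from typing import Tuple

PWM_PERIOD = 7200  # hypothetical timer auto-reload value (counts per PWM cycle)
CMD_MAX = 100      # signed speed command range is -CMD_MAX..+CMD_MAX (assumption)


def a4950_inputs(speed_cmd: int) -> Tuple[int, int]:
    """Return (IN1_compare, IN2_compare) for a signed speed command.

    Positive -> PWM on IN1, IN2 held low (forward).
    Negative -> PWM on IN2, IN1 held low (reverse).
    Zero     -> both inputs low (coast).
    """
    cmd = max(-CMD_MAX, min(CMD_MAX, speed_cmd))  # saturate out-of-range commands
    duty = abs(cmd) * PWM_PERIOD // CMD_MAX
    if cmd > 0:
        return duty, 0
    if cmd < 0:
        return 0, duty
    return 0, 0
```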

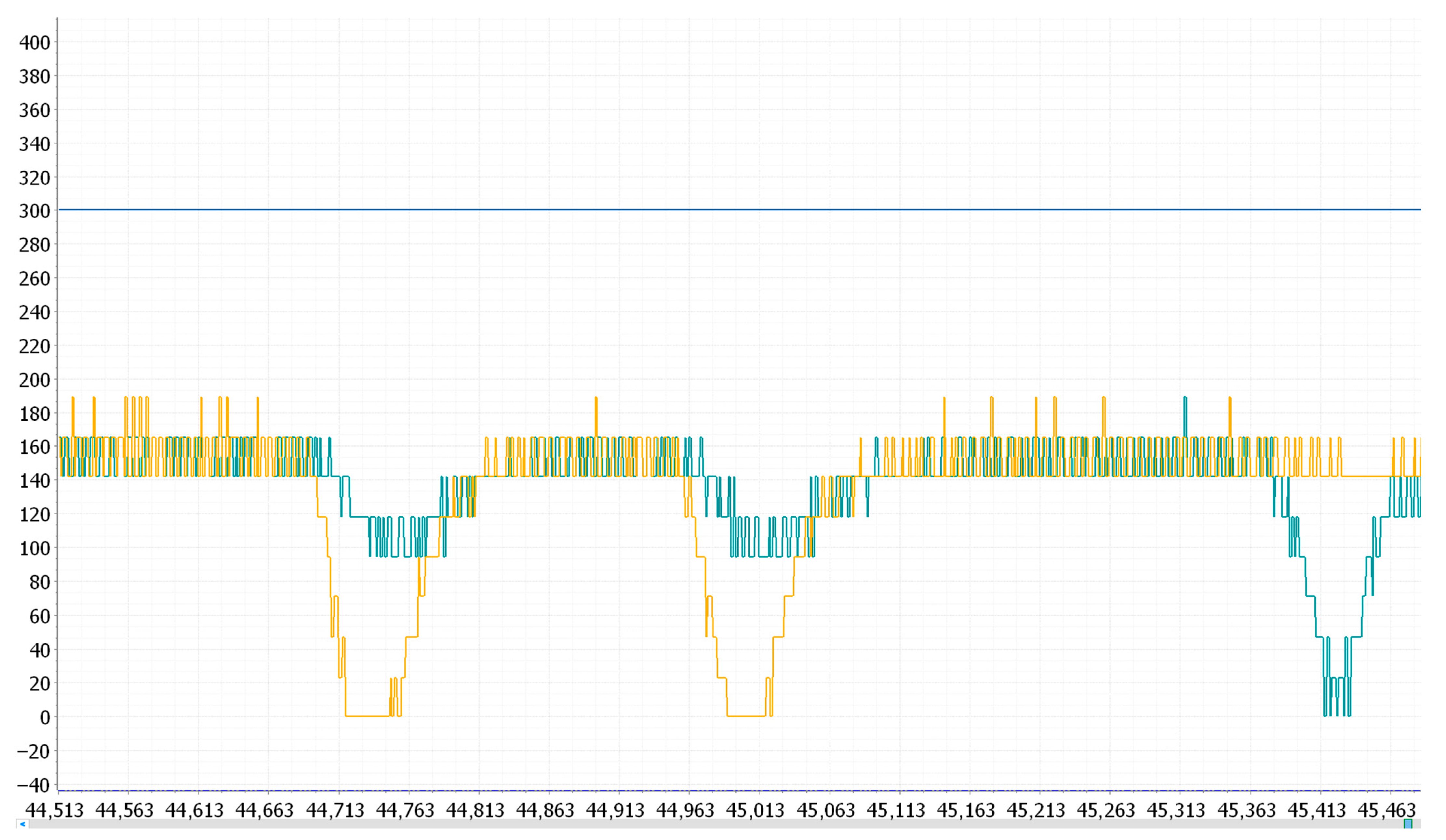

3.3. Debugging Experiment of Speed Control

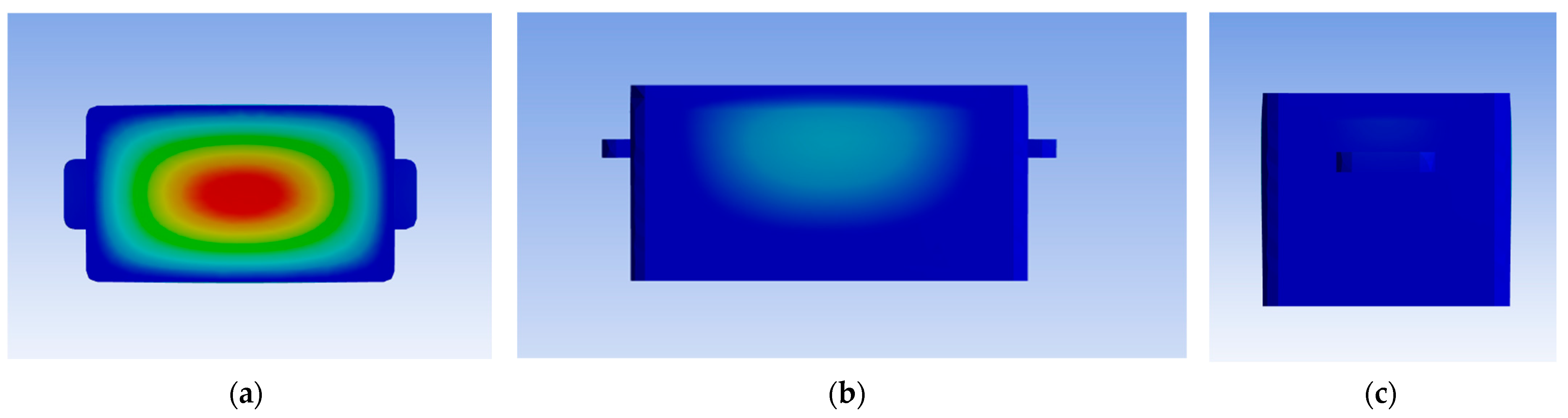

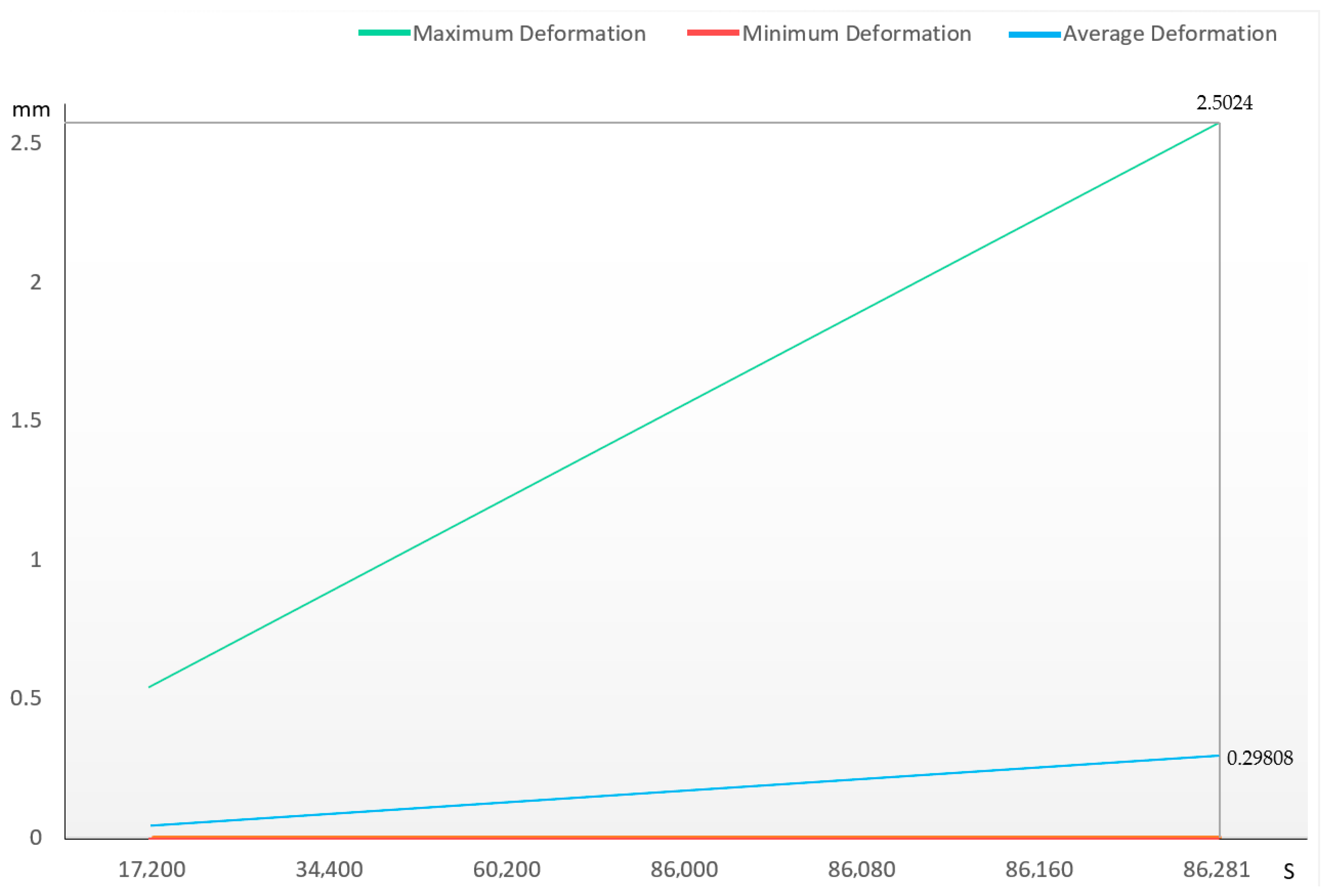

3.4. Static Load Stacking Test for Transport Boxes

1. The basic balance equation is given in Formula (8).

2. The maximum and minimum deformations are calculated with Formulas (9) and (10).

3. The average deformation is calculated with Formula (11).
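Assuming that deformation is defined as the height reduction of the box under the static load at each measurement point, the maximum, minimum, and average deformation can be computed as in the sketch below. The definition, the number of measurement points, and the millimetre units are assumptions for illustration, not a reproduction of Formulas (9)–(11).

```python
from statistics import mean
from typing import Dict, Sequence


def deformation_summary(h0_mm: Sequence[float],
                        h_loaded_mm: Sequence[float]) -> Dict[str, float]:
    """Max, min and mean deformation from paired unloaded/loaded heights.

    Deformation at point i is assumed to be h0[i] - h_loaded[i] (mm).
    """
    if len(h0_mm) != len(h_loaded_mm) or not h0_mm:
        raise ValueError("need equally long, non-empty measurement lists")
    d = [a - b for a, b in zip(h0_mm, h_loaded_mm)]
    return {"max": max(d), "min": min(d), "mean": mean(d)}
```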

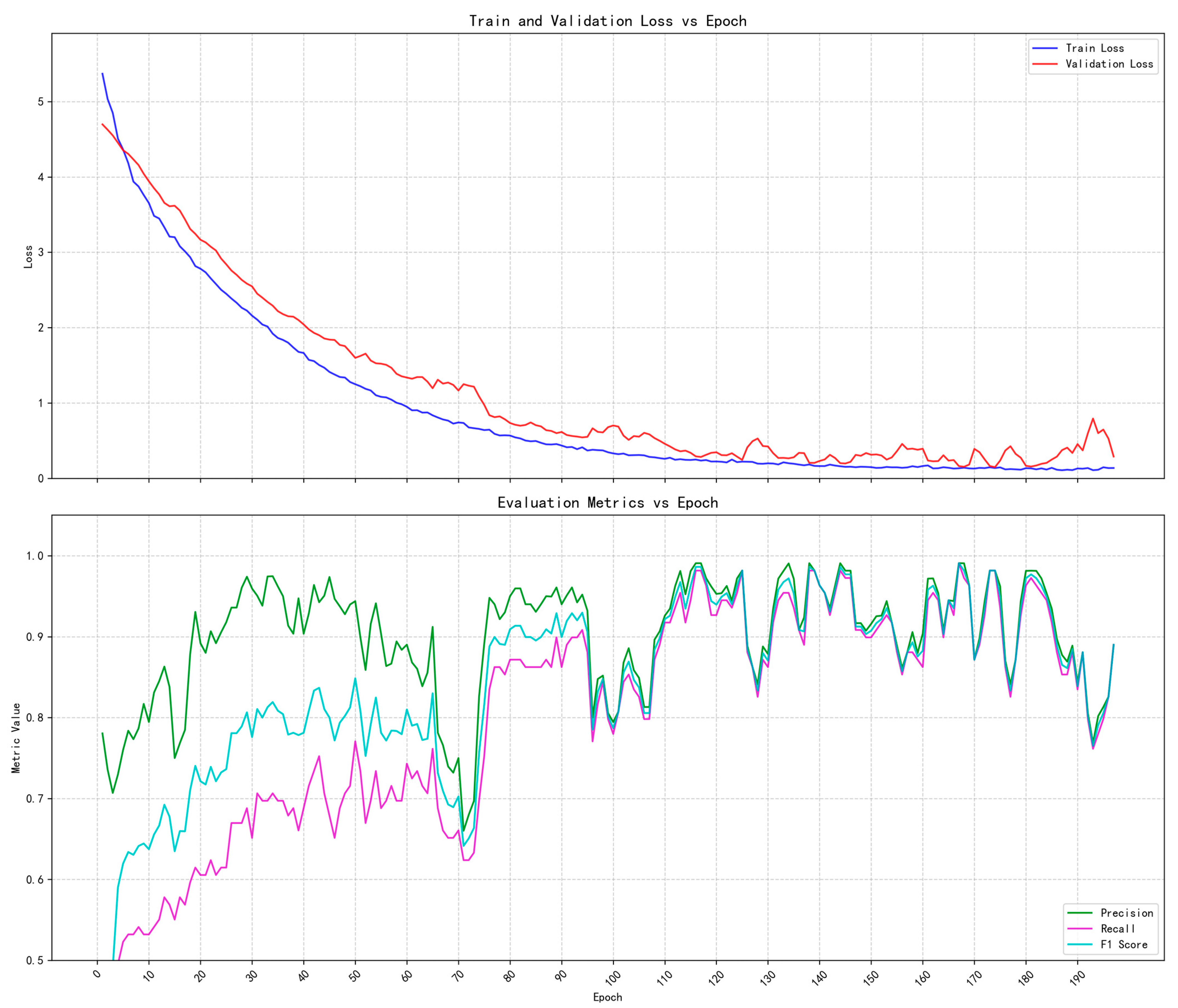

4. OpenMV Visual Processing

4.1. Device and Working Principle

4.2. Vision-Based Target Tracking

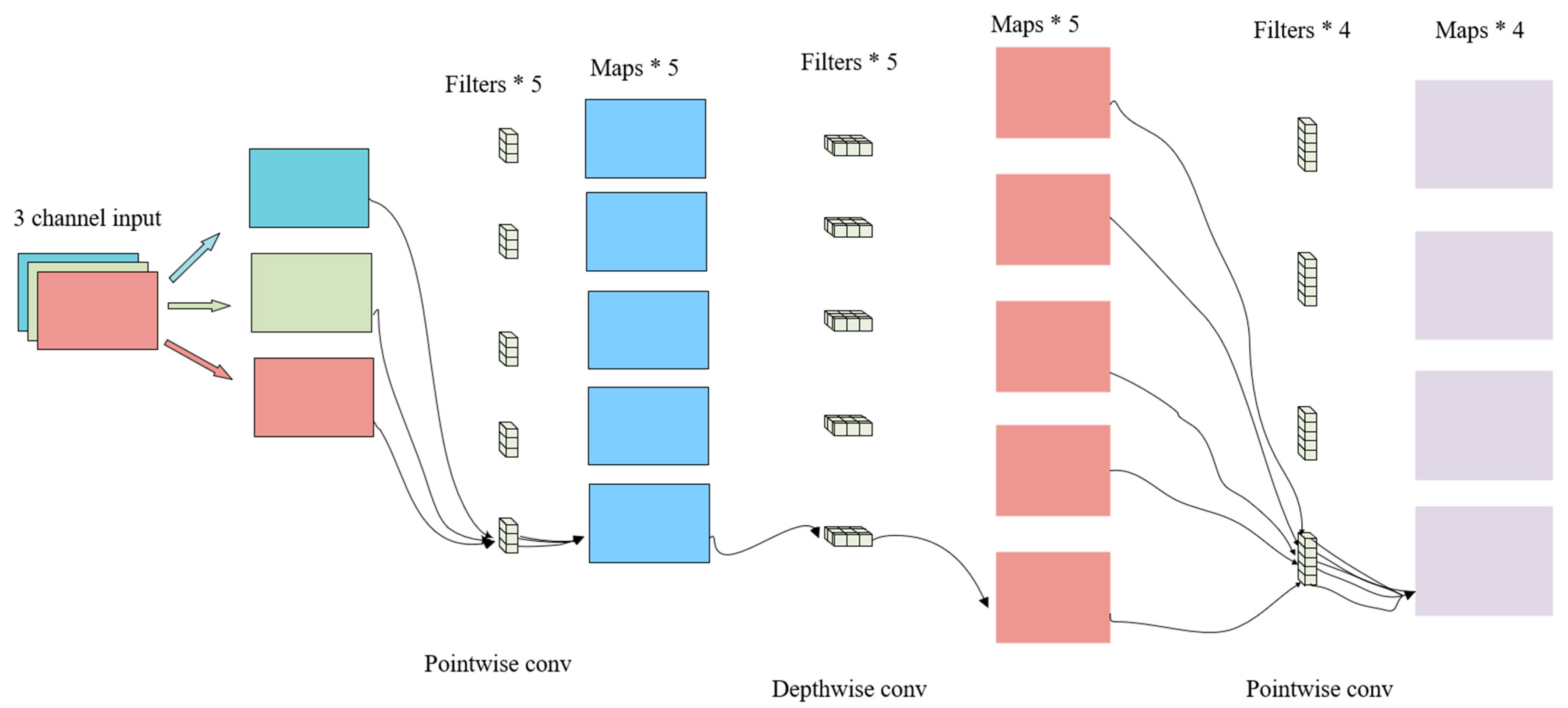

4.3. Object Detection and the Principle of FOMO MobileNetV2 Network

4.4. Comparative Analysis of Model Architecture and Technical Characteristics

4.5. OpenMV’s Real-Time Visual Tracking System

5. Finished Product Design and Limitation Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Variable Name | Initial Value | Functional Description |
|---|---|---|
| pidMPU6050YawMovement.actual_val | 0.0 | Current actual angle measured by the MPU6050 |
| pidMPU6050YawMovement.err | 0.0 | Instantaneous error (target angle − actual angle) used in the PID calculation |
| pidMPU6050YawMovement.err_last | 0.0 | Error from the previous cycle (used for the derivative term) |
| pidMPU6050YawMovement.err_sum | 0.0 | Cumulative error sum (used for the integral term) |
| pidMPU6050YawMovement.Kp | 0.02 | Proportional gain: scales the control output linearly with the current error |
| pidMPU6050YawMovement.Ki | 0 | Integral gain: currently disabled |
| pidMPU6050YawMovement.Kd | 0.1 | Derivative gain: suppresses dynamic overshoot by responding to the rate of change of the error |
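The variables in this table correspond to a positional PID loop on the yaw angle. A minimal sketch using the listed gains (Kp = 0.02, Ki = 0, Kd = 0.1) follows; the class name, the once-per-cycle call pattern, and the wrapping of the error into [−180°, 180°) so that the vehicle turns the short way across the 0°/360° boundary are assumptions rather than details from the source.

```python
class YawPID:
    """Positional PID mirroring the pidMPU6050YawMovement fields in the table."""

    def __init__(self, kp: float = 0.02, ki: float = 0.0, kd: float = 0.1) -> None:
        self.actual_val = 0.0
        self.err = 0.0
        self.err_last = 0.0
        self.err_sum = 0.0
        self.Kp, self.Ki, self.Kd = kp, ki, kd

    @staticmethod
    def wrap_deg(angle: float) -> float:
        """Map an angle difference into [-180, 180) degrees (assumed convention)."""
        return (angle + 180.0) % 360.0 - 180.0

    def update(self, target_deg: float, actual_deg: float) -> float:
        """Run one control cycle and return the correction output."""
        self.actual_val = actual_deg
        self.err = self.wrap_deg(target_deg - actual_deg)
        self.err_sum += self.err
        output = (self.Kp * self.err
                  + self.Ki * self.err_sum
                  + self.Kd * (self.err - self.err_last))
        self.err_last = self.err
        return output
```

Because Ki is 0, err_sum is still tracked but has no effect on the output, consistent with the integral term being disabled.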

| Stage | Module | Input Dim (B × H × W × C) | Output Dim (B × H × W × C) | Key Operations | Functional Description |
|---|---|---|---|---|---|
| 1 | Input | 1 × 256 × 256 × 3 | - | Image normalization | RGB input preprocessing |
| 2 | DW Conv1 | 1 × 256 × 256 × 3 | 1 × 128 × 128 × 32 | Depthwise separable conv, stride = 2 | Initial feature extraction; 2× downsampling per side (4× fewer pixels) |
| 3 | DW Conv2 | 1 × 128 × 128 × 32 | 1 × 64 × 64 × 64 | Depthwise separable conv, stride = 2 | Mid-level feature extraction; additional 2× downsampling |
| 4 | DW Conv3 | 1 × 64 × 64 × 64 | 1 × 32 × 32 × 128 | Depthwise separable conv, stride = 2 | High-level semantic features; final 2× downsampling (OS = 8) |
| 5 | Truncation | 1 × 32 × 32 × 128 | 1 × 32 × 32 × 128 | Network truncation | Preserves 32 × 32 resolution; prevents over-abstraction |
| 6 | Head | 1 × 32 × 32 × 128 | 1 × 32 × 32 × (C + 2) | 1 × 1 convolution | Channel compression and fusion; output: class logits + offsets |
| 7 | Logits | 1 × 32 × 32 × (C + 2) | 1 × 32 × 32 × (C + 2) | Raw output | First C channels: class scores; last two channels: (dx, dy) offsets |
| 8 | Sigmoid | 1 × 32 × 32 × (C + 2) | 1 × 32 × 32 × (C + 2) | Sigmoid activation | First C channels → [0, 1] probabilities; offsets unchanged |
| 9 | Heatmap | 1 × 32 × 32 × (C + 2) | 1 × 32 × 32 × 1 | max(prob) operation | Visual heatmap generation; highlights target center regions |
| 10 | Post-process | 1 × 32 × 32 × (C + 2) | Object coordinates | Coordinate conversion + NMS | Final localization |
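Stages 7–10 of this table can be sketched in pure Python. The sketch assumes the raw output is a 32 × 32 grid of per-cell vectors holding C class logits followed by (dx, dy) offsets expressed in cell units, an output stride of 8 (256 / 32), and a 0.5 confidence threshold; a greedy centre-distance suppression stands in for NMS. These conventions and constants are assumptions for illustration, not the exact decoding used by the authors or by any particular FOMO runtime.

```python
import math
from typing import List, Tuple

STRIDE = 8           # 256-pixel input / 32-cell grid (output stride)
THRESHOLD = 0.5      # assumed confidence threshold
MIN_DIST_PX = 16.0   # assumed suppression radius in pixels

Detection = Tuple[int, float, float, float]  # (class_id, x_px, y_px, score)


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def decode(grid: List[List[List[float]]], num_classes: int) -> List[Detection]:
    """Turn a [32][32][C + 2] logit grid into pixel-space detections."""
    cands: List[Detection] = []
    for gy, row in enumerate(grid):
        for gx, cell in enumerate(row):
            probs = [sigmoid(v) for v in cell[:num_classes]]
            cls = max(range(num_classes), key=lambda k: probs[k])  # heatmap = max prob
            if probs[cls] < THRESHOLD:
                continue
            dx, dy = cell[num_classes], cell[num_classes + 1]  # offsets not passed through sigmoid
            x = (gx + 0.5 + dx) * STRIDE
            y = (gy + 0.5 + dy) * STRIDE
            cands.append((cls, x, y, probs[cls]))
    # Greedy suppression: keep the highest-scoring centre, drop close same-class ones.
    cands.sort(key=lambda d: d[3], reverse=True)
    kept: List[Detection] = []
    for d in cands:
        if all(k[0] != d[0] or math.hypot(k[1] - d[1], k[2] - d[2]) >= MIN_DIST_PX
               for k in kept):
            kept.append(d)
    return kept
```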

| | BACKGROUND | LEFT | REVERSE | RIGHT | STOP |
|---|---|---|---|---|---|
| BACKGROUND | 100% | 0% | 0% | 0% | 0% |
| LEFT | 2.3% | 72.7% | 10.5% | 3.9% | 8.6% |
| REVERSE | 8.7% | 13.0% | 54.1% | 18.8% | 6.4% |
| RIGHT | 9.4% | 2.2% | 13.6% | 67.7% | 7.1% |
| STOP | 5.9% | 7.3% | 4.3% | 6.7% | 75.9% |
| F1-SCORE | 0.88 | 0.74 | 0.59 | 0.68 | 0.76 |

| | BACKGROUND | LEFT | REVERSE | RIGHT | STOP |
|---|---|---|---|---|---|
| BACKGROUND | 100% | 0% | 0% | 0% | 0% |
| LEFT | 2.8% | 93.3% | 0% | 3.9% | 0% |
| REVERSE | 3.7% | 2.0% | 94.5% | 0% | 0% |
| RIGHT | 2.5% | 0% | 3.9% | 93.6% | 0% |
| STOP | 4.9% | 0% | 0% | 0% | 95.1% |
| F1-SCORE | 1.00 | 0.95 | 0.98 | 0.77 | 0.91 |

| Lighting Conditions | Average Accuracy | Precision | Recall | F1-Score |
|---|---|---|---|---|
| Normal light | 94.44% | 0.95 | 1.00 | 0.97 |
| Darker light | 88.64% | 0.95 | 0.91 | 0.93 |
| Low light | 13.04% | 0.32 | 0.13 | 0.19 |
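The F1-scores in these tables are the harmonic mean of precision and recall, F1 = 2PR / (P + R). The sketch below computes per-class precision, recall, and F1 from a confusion matrix of raw counts (rows = true class, columns = predicted class, an assumed orientation); the `f1` helper also reproduces the normal-light and darker-light F1 values from their listed precision and recall.

```python
from typing import List, Tuple


def f1(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (0 when both are 0)."""
    s = precision + recall
    return 0.0 if s == 0 else 2.0 * precision * recall / s


def per_class_scores(cm: List[List[int]]) -> List[Tuple[float, float, float]]:
    """(precision, recall, F1) per class; cm[i][j] counts true class i predicted as j."""
    n = len(cm)
    out = []
    for k in range(n):
        tp = cm[k][k]
        predicted_k = sum(cm[i][k] for i in range(n))  # column sum
        actual_k = sum(cm[k])                          # row sum
        p = tp / predicted_k if predicted_k else 0.0
        r = tp / actual_k if actual_k else 0.0
        out.append((p, r, f1(p, r)))
    return out
```

For instance, `round(f1(0.95, 1.0), 2)` gives 0.97 and `round(f1(0.95, 0.91), 2)` gives 0.93, matching the first two rows of the lighting table.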

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wang, X.; Gao, H.; Ma, X.; Wang, L. A Lightweight Hybrid Detection System Based on the OpenMV Vision Module for an Embedded Transportation Vehicle. Sensors 2025, 25, 5724. https://doi.org/10.3390/s25185724


Chicago/Turabian Style: Wang, Xinxin, Hongfei Gao, Xiaokai Ma, and Lijun Wang. 2025. "A Lightweight Hybrid Detection System Based on the OpenMV Vision Module for an Embedded Transportation Vehicle" Sensors 25, no. 18: 5724. https://doi.org/10.3390/s25185724
