1. Introduction

In this paper, the task of detecting branches to be pruned on fruit trees is addressed. This step is essential for automating the pruning process. Once the branches requiring pruning and the cutting points are accurately identified, a robotic system can execute the pruning accordingly. To make this feasible, the robot must have 3D information about the position and orientation of these pruning points. Therefore, the detection of branches to be pruned is performed on 3D models, specifically point clouds.

Since this represents a real-world problem, an approach that automatically detects branches to be pruned directly on point cloud models of actual trees is proposed. To obtain these point clouds, RGB-D images of real trees were captured directly in orchards, facilitating the potential for the entire process to be performed by a robot in real time in the future. This work serves as a proof-of-concept, demonstrating that the proposed approach is capable of producing results sufficient for practical applications, considering both accuracy and processing speed. Limitations of the current method are acknowledged, providing a foundation for future research and improvements. Nonetheless, the pipeline developed is considered a solid starting point, offering meaningful results for real-world robotic pruning applications (the repository with code and dataset can be found here: https://github.com/ividovic/BRANCH_pipeline, accessed on 7 September 2025).

The initial step involved acquiring RGB-D images of the pear trees. Multiple viewpoints were used during image collection to ensure comprehensive coverage of each tree, which is crucial for accurate 3D reconstruction. Depth information was particularly emphasized due to its importance in robotic applications. Image acquisition took place in the orchard of the Agricultural Institute in Osijek, ensuring that the trees could be pruned by experts. RGB-D images of the same trees were also captured after pruning. Point clouds were generated from these images, followed by preprocessing to remove background elements unrelated to the tree, as well as ground segments. However, the removal of neighboring trees and supporting trellis wires was considered impractical, as manual extraction would have been very time-consuming. Furthermore, due to the sparsity of data, it was extremely difficult to distinguish at the point level which points belonged to the target tree versus adjacent neighboring trees. An approach was developed for the registration of these point clouds to create a reconstruction of the 3D model of each tree, referred to as partial 3D models, as the image acquisition did not form a closed loop. The registration was performed using the TEASER++ algorithm.

For machine learning training in pruning branch detection, ground truth points belonging to the branches to be pruned were automatically labeled. This was achieved by registering the pre- and post-pruning models; the differences between the aligned point clouds were assumed to correspond to pruned branches, which were then labeled accordingly. The neural network training utilized PointNet++, with an ablation study conducted to assess the influence of hyperparameters such as batch size, learning rate and number of points, and also voxel size and normalization of the point clouds. Model evaluation included both quantitative metrics—precision and recall—and qualitative visual inspection.

The main contributions of this work are summarized as follows:

1. BRANCH_v2 dataset: An enhanced version of the BRANCH dataset [1], containing RGB-D images and reconstructed partial 3D models of pear trees pre- and post-pruning. Points belonging to the branches to be pruned are annotated. The dataset containing pre-pruning partial models with corresponding annotations is provided in multiple variations, including normalized and centered forms with voxel sizes ranging from 2.5 mm to 2 cm, totaling ten dataset versions. This dataset can serve as a valuable resource for future research aimed at both tree reconstruction and the prediction of branches to be pruned.

2. Point Cloud Registration Method: Development of a robust point cloud registration method using the TEASER++ algorithm, enhanced with an automatic preprocessing step for background removal. This improves alignment accuracy in sparse, partially overlapping tree structures, and reduces the impact of outliers and environmental noise.

3. Automatic Branch Labeling: A method that automatically labels branches to be pruned, eliminating the necessity of manual annotation.

4. End-to-End Real-Time Pipeline: A complete pipeline that processes RGB-D images to predict pruned branches in real time under real-world conditions. The pipeline’s effectiveness has been validated through neural network training and evaluation, both quantitatively and visually, underlining its potential for future integration into robotic pruning systems.

The paper is structured as follows. Section 2 provides an overview of the research domain and a summary of prior approaches. Section 3 describes the dataset acquisition process, the preprocessing algorithm, and the overall structure of the dataset. In Section 4, the reconstruction of partial 3D tree models is presented. Section 5 explains the annotation of these models and the implementation of a deep neural network for prediction of branches to be pruned. In Section 6, the quantitative and visual evaluation of model predictions in comparison to ground truth labels is presented. Section 7 discusses the main achievements and possible improvements of the work, while the paper concludes with a summary in Section 8.

3. Dataset

Recognizing the limitations of existing data in agricultural robotics, an extended version of the BRANCH dataset, BRANCH_v2 (the complete dataset is available here: https://puh.srce.hr/s/EoPqgASGerLapne (accessed on 7 September 2025), or can be obtained upon request from the authors), was created in collaboration with the Agricultural Institute in Osijek, Croatia. In our previous work [1], the original BRANCH dataset was introduced. It comprised RGB-D images of 70 pear trees, captured both before and after pruning, which resulted in 52 reconstructed tree models with labeled points belonging to the branches for pruning. In this study, we build upon that dataset by incorporating additional RGB-D images of 114 trees, so that the dataset covers one full row of 184 pear trees in the orchard, thereby significantly expanding its scope and potential for analysis.

The RGB-D images were captured using an ASUS Xtion PRO Live RGB-D camera with a resolution of 640 × 480 pixels, which was sufficient for generating point clouds and performing 3D reconstruction. Image acquisition took place in late winter, when the trees were in a dormant state, characterized by the absence of leaves, with only bare branches and trunks visible. To ensure high-quality data, the dataset was collected at sunset, exclusively under conditions without rain or wind. The camera was positioned at approximately 10 viewpoints along the front side of each tree. Because the presence of trellis wires prevented full 360-degree scanning, only a partial 3D model of each tree could be created.

The data presented in this paper consist of three sets. The first set includes RGB-D images of pear trees taken before pruning, along with a point cloud for each view and a single partial 3D model reconstructed for each tree. The second set contains RGB-D images captured after an expert from the Agricultural Institute pruned the entire row, accompanied by corresponding point clouds for each view and a partial 3D model for each tree. In this paper, the term model specifically refers to a point cloud representation of a tree. Finally, the third set includes registered partial models from pre- and post-pruning, together with the labeled branches identified for pruning that were obtained from them. By overlapping the pre- and post-pruning models, data-driven ground truth data were generated.

This approach is more practical and cost-effective than manually annotating every point in the point cloud. It enables experts to perform pruning in the field, with the proposed method automatically labeling the relevant points, eliminating the need for time-consuming point-by-point annotation. The pruning technique applied in this orchard aimed to improve fruit quality by removing excess branches that primarily consumed water without contributing to fruit production. Note that the post-pruning images were taken once the trees had already begun to blossom.
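The idea of deriving labels from the overlap of the two models can be illustrated with a minimal sketch. It assumes that the pre- and post-pruning point clouds are already registered in a common frame and that a pre-pruning point counts as pruned when no post-pruning point lies within a distance threshold. The function name `label_pruned_points` and the 2 cm threshold are illustrative assumptions, not the exact procedure or values used to build the dataset.

```python
import numpy as np

def label_pruned_points(pre, post, dist_thresh=0.02):
    """Return 1 for pre-pruning points with no post-pruning neighbor nearby.

    pre, post: (N, 3) and (M, 3) arrays of registered points in meters.
    dist_thresh is a hypothetical value chosen for illustration only.
    """
    labels = np.zeros(len(pre), dtype=np.int64)
    for i, p in enumerate(pre):
        # Brute-force nearest-neighbor distance to the post-pruning model.
        nearest = np.linalg.norm(post - p, axis=1).min()
        labels[i] = int(nearest > dist_thresh)
    return labels

# Toy example: the third pre-pruning point has no counterpart after pruning.
pre = np.array([[0.0, 0.0, 0.0], [0.0, 0.5, 0.0], [0.3, 0.8, 0.0]])
post = np.array([[0.0, 0.005, 0.0], [0.0, 0.5, 0.005]])
labels = label_pruned_points(pre, post)
print(labels)
```

For full-size models, a spatial index such as a k-d tree would likely replace the brute-force loop.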

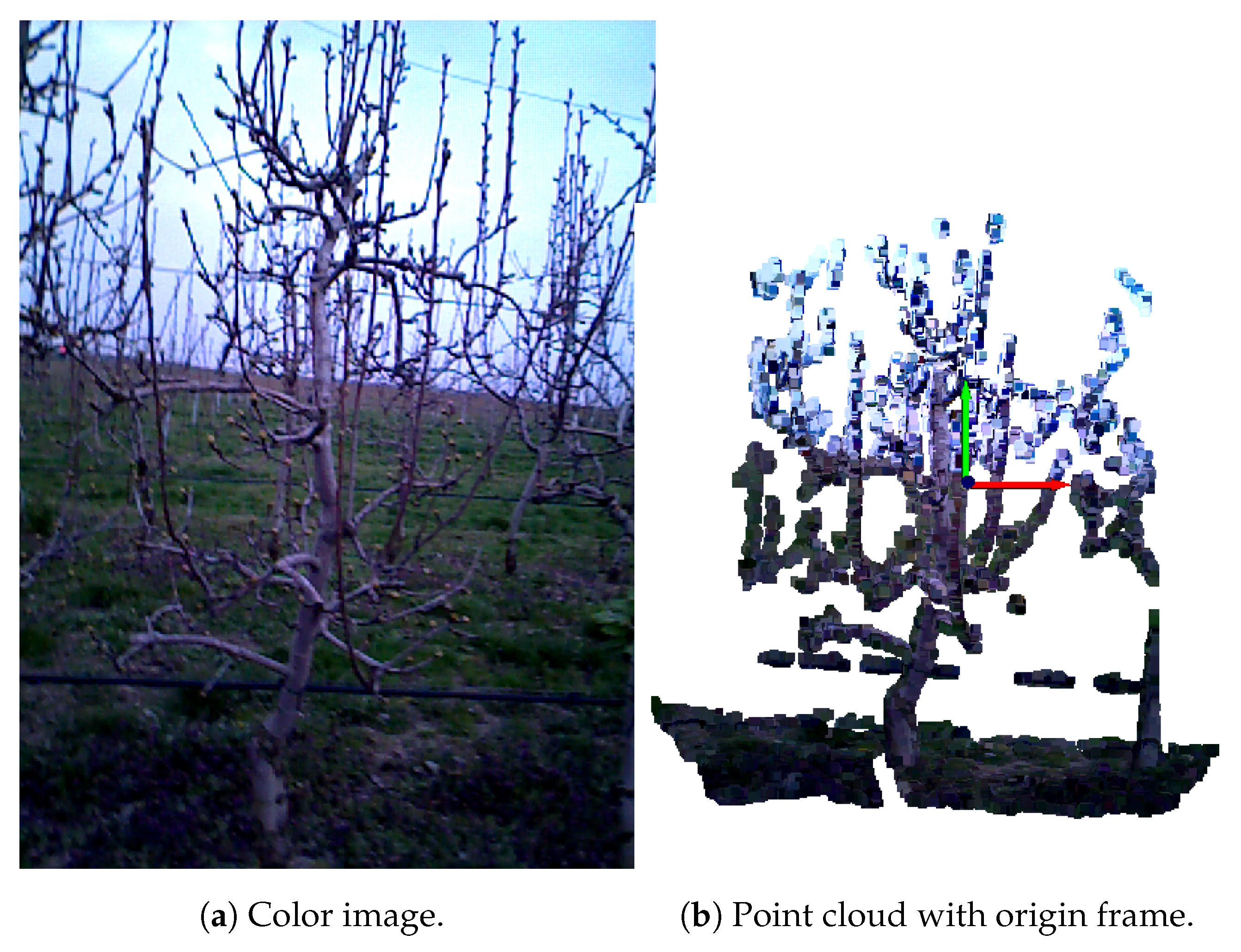

Each tree was partially reconstructed using only four RGB-D images, carefully selected to provide sufficient overlap of the point clouds and to reconstruct almost the entire tree. An example of an approximate selection is shown in Figure 1, illustrating camera positions at mid-left (colored red), center (green), mid-right (blue), and center-upper (yellow) viewpoints. The choice of using exactly four images per tree was driven by computational constraints, as the registration process is resource-intensive, and including more images did not significantly improve the final model quality. The novelty of this work, compared to [1], lies in the enhanced preprocessing approach, which includes the removal of grass and the application of the TEASER++ algorithm [15] to improve the robustness and accuracy of point cloud registration. Overall, the dataset comprises RGB-D image captures of 184 individual pear trees, providing a substantial basis for 3D reconstruction and pruning analysis. Each reconstructed 3D partial model was visually examined. If the trunk and at least two primary branch points overlapped with minimal disparity, the registration was deemed successful. On the other hand, even a slight tilt of the trunk or a lack of overlap at the branch points was considered a failed registration. Using the described approach, a total of 101 partial 3D tree models, both before and after the pruning process, were deemed successfully reconstructed, with labeled points indicating the pruned branches.

3.1. Dataset Structure

The dataset is organized into three main folders: A, B, and Merged, corresponding to the images taken after (A) pruning, before (B) pruning, and the aligned (merged) data, respectively. Within each of these folders, a subfolder is named with the prefix E, which stands for evening capture session. Each E folder contains data for several trees, which are structured as follows:

Here, <row> corresponds to the tree row in orchard, <cultivar> corresponds to the Williams pear cultivar (shortened ‘V’ in Croatian) and <iteration> indicates the specific tree order number. Inside each tree folder, there are three subfolders:

original_images/ – containing unprocessed data

filtered_noGrass/ – containing preprocessed data with removed grass

reconstruction/ – containing registered data

Both original_images/ and filtered_noGrass/ folders include:

The reconstruction folder contains the (.ply) registered partial 3D model with voxelized point clouds used for the registration process.

The Merged folder contains the final overlap of the pre- and post-pruning models, together with point-level annotations of the branches to be pruned, provided as a csv file. This file is organized as follows: voxel size, tree name, and labels. The labels correspond to the registered point cloud and are represented as a list of ones and zeros, where a value of 1 indicates that the point belongs to a pruned branch, and a value of 0 indicates that it does not. This hierarchical structure facilitates organized access to raw and preprocessed data for training and evaluation purposes. Point clouds derived from the RGB-D images before the pruning were further processed for training, including centering, normalization, and voxelization at eight different voxel sizes ranging from 2.5 mm to 2 cm. The labels were adjusted accordingly, resulting in a total of 10 dataset variations.
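How labels can be carried through centering, normalization, and voxelization is sketched below. The sketch makes three assumptions that are not stated in the text: a voxel is labeled as pruned if any of its points is pruned, each voxel is represented by the centroid of its points, and normalization scales the centered cloud to fit the unit sphere. The function names are hypothetical.

```python
import numpy as np

def center_and_normalize(points):
    """Move the origin to the mean point and scale the cloud into the unit sphere."""
    centered = points - points.mean(axis=0)
    scale = np.linalg.norm(centered, axis=1).max()
    return centered / scale if scale > 0 else centered

def voxelize_with_labels(points, labels, voxel_size):
    """Replace the points of each voxel by their centroid and merge their labels."""
    keys = np.floor(points / voxel_size).astype(np.int64)
    uniq, inverse = np.unique(keys, axis=0, return_inverse=True)
    inverse = inverse.reshape(-1)  # guard against NumPy versions returning a 2D inverse
    n_voxels = len(uniq)
    counts = np.bincount(inverse, minlength=n_voxels)
    centroids = np.zeros((n_voxels, 3))
    np.add.at(centroids, inverse, points)
    centroids /= counts[:, None]
    # Assumed rule: a voxel is pruned if at least one of its points is pruned.
    pruned = np.bincount(inverse, weights=labels, minlength=n_voxels)
    return centroids, (pruned > 0).astype(np.int64)

points = np.array([[0.001, 0.001, 0.001], [0.004, 0.002, 0.003],
                   [0.105, 0.205, 0.305], [0.106, 0.206, 0.306]])
labels = np.array([0, 1, 0, 0])
centroids, voxel_labels = voxelize_with_labels(points, labels, voxel_size=0.02)
normalized = center_and_normalize(centroids)
print(centroids.shape, voxel_labels)
```

In this sketch voxelization is applied in metric units before normalization, so that a voxel size such as 2 cm keeps its physical meaning. The order used for the published dataset variations may differ.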

3.2. Preprocessing Dataset

The process developed in this research is intended to be applicable in real-world conditions. Accordingly, the RGB-D images were collected directly in an outdoor orchard setting, capturing all environmental factors a robot would encounter during operation. The entire dataset was uniformly preprocessed and then randomly split into training and testing subsets, ensuring similar environmental conditions in both. The dataset already exhibits significant occlusion from the tree’s own branches and trunk, as well as from neighboring trees and structures like wires, present in both subsets. Since the approach relies solely on depth information, additional augmentation for lighting perturbation would offer limited benefit. Therefore, only grass removal preprocessing was implemented, as described below.

Each point cloud was generated using the corresponding color and depth images along with the camera's intrinsic parameters, as described in [1]. Due to the specific imaging setup, all point clouds shared a similar spatial layout: a tree was always positioned in the foreground, typically centered, though occasionally with overlapping branches from neighboring trees. The background sometimes contained distant trees or grass. The bottom part of the scene often included grass; however, in some cases, it was not visible because the camera was elevated to capture the upper portions of the tree. This observation motivated the development of an automated grass removal algorithm, presented in Algorithm 1. The preprocessing pipeline is demonstrated using the before-pruning image seen in Figure 2a. The input to the algorithm is a point cloud P.

Since the camera was positioned vertically to capture only one tree per frame, the resulting point cloud required reorientation to achieve an upright tree alignment. Accordingly, the initial preprocessing step involved rotating P about the Z and Y axes of the camera's reference frame to ensure proper vertical alignment of the tree.

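| Algorithm 1: GrassRemoval: Grass removal from point cloud P |
| --- |
| 1: procedure GrassRemoval(P) |
| 2: Rotate P about Z and Y axes to align vertically. |
| 3: if Z-extent of P > 1.5 m then |
| 4: Flag P as Potential_Grass |
| 5: B ← points of P with Y below (minimum Y of P) + 0.6 m |
| 6: Planes ← ∅ |
| 7: while B contains at least 30 points do |
| 8: (π, inliers) ← RANSAC_Plane_Segment(B) |
| 9: if number of inliers is below the minimum then |
| 10: break |
| 11: end if |
| 12: θ ← angle between normal of π and Y-axis |
| 13: if θ is below the angle threshold then |
| 14: Planes ← Planes ∪ {π} |
| 15: end if |
| 16: B ← B ∖ inliers |
| 17: end while |
| 18: if Planes ≠ ∅ then |
| 19: π* ← plane in Planes with maximum number of points |
| 20: B ← points of P with Y below (minimum Y of P) + 0.7 m |
| 21: G ← ∅ |
| 22: for all p ∈ B do |
| 23: D ← distance from p to π* |
| 24: if D ≤ 0.35 m then |
| 25: G ← G ∪ {p} |
| 26: end if |
| 27: end for |
| 28: P_clean ← P ∖ G |
| 29: return P_clean |
| 30: else |
| 31: B ← lower region of P |
| 32: T ← upper region of P |
| 33: z_min ← minimum Z-value in T |
| 34: G ← points of B with Z below z_min |
| 35: P_clean ← P ∖ G |
| 36: return P_clean |
| 37: end if |
| 38: else |
| 39: return P ▹ No preprocessing needed |
| 40: end if |
| 41: end procedure |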

The next preprocessing step involved removing ground-level grass and distant background points from the point clouds. In previous work [1], grass removal was performed using the K-Means clustering algorithm with two clusters to separate grass from the tree. However, due to limited computational resources at the time, further preprocessing steps were not feasible, and the selection of images for grass removal was done manually. In the current work, this process was significantly improved and automated to enhance consistency and efficiency.

To automate the detection and removal of points belonging to the grass, a heuristic method based on spatial thickness was implemented. Based on empirical measurements in the orchard, each tree was expected to occupy approximately 1.5 m in width along both the X- and Z-axes. Specifically, if the extent of the considered point cloud P along the Z-axis (the absolute difference between its largest and smallest Z-coordinates) was greater than 1.5 m, the point cloud was flagged as potentially containing grass or objects in the background. Otherwise, no further preprocessing was applied.

Given that the average grass height was empirically measured to be approximately 0.4 m during dataset acquisition, a threshold of

+ 0.6 m along the Y-axis was applied. All points below this height were considered potential grass. This additional 0.2 m provided a margin for potential errors caused by camera tilting, ensuring all grass points could be detected. Although a threshold of 0.2 m may seem large, it primarily affected the lower portion of the trunk, which was not critical for our purposes. Even if this section was mistakenly cropped, it would have minimal impact on the resulting registration. Consequently, a new point cloud containing only the bottom part of the flagged point cloud

P, up to the height of

+ 0.6 m along the Y-axis—is defined as

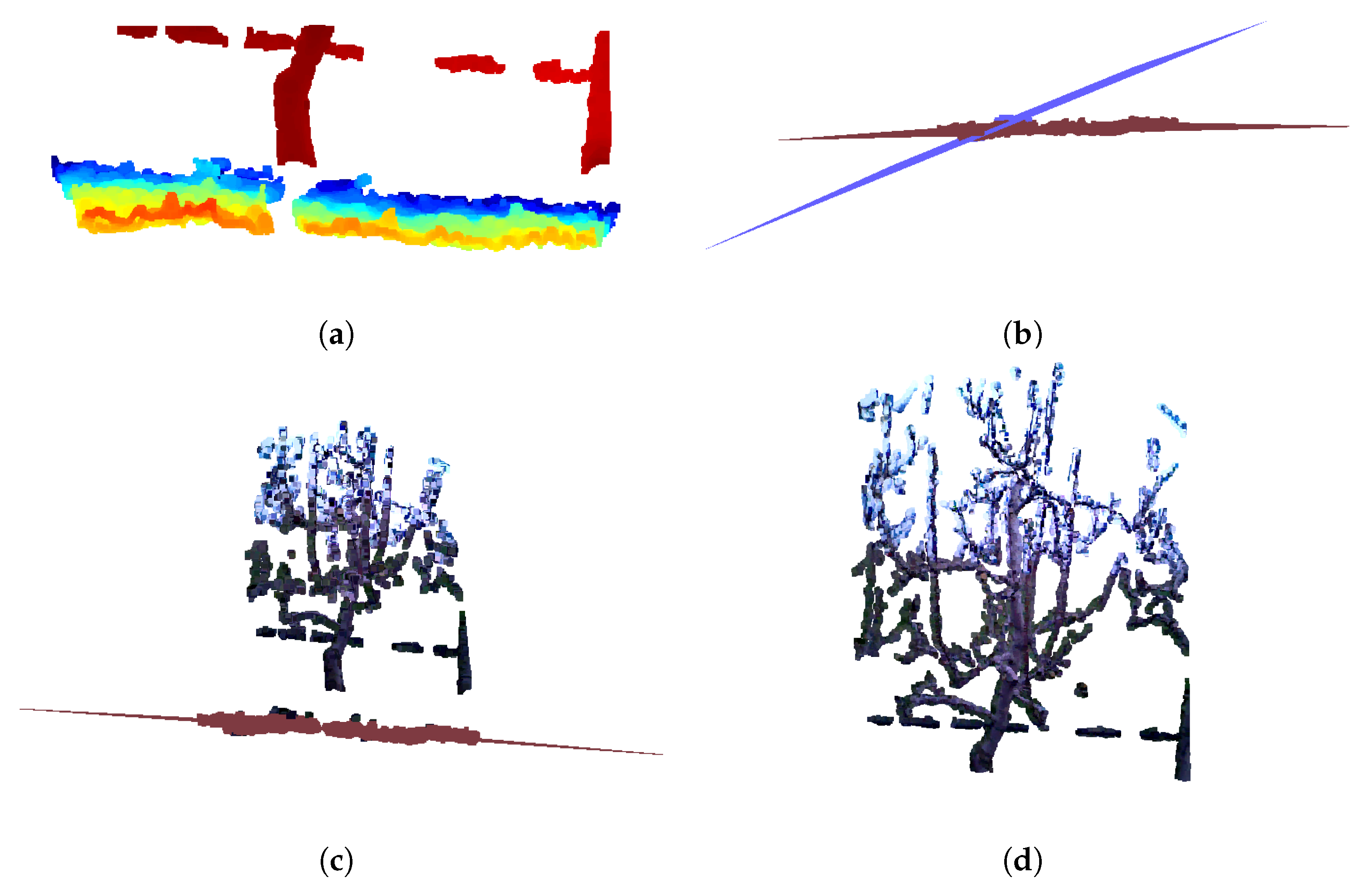

B to ensure faster grass detection, as illustrated in

Figure 3a. Points further than 1.5 m in the negative Z direction were presumed to lie outside the target tree structure and were removed accordingly.

For the point cloud B, iterative RANSAC-based plane segmentation was performed using the segment_plane() [21] method from the Open3D library. In each iteration, the detected plane inliers were removed from the remaining point cloud B. This removal step was necessary because the segment_plane() function tends to repeatedly detect the same planes if previously segmented points are not excluded. Furthermore, let $\mathbf{n}$ be the unit normal of the plane and $\mathbf{y}$ represent the vertical axis. The angle $\theta$ between $\mathbf{n}$ and $\mathbf{y}$ was computed as:

$$\theta = \arccos\left(\frac{\mathbf{n} \cdot \mathbf{y}}{\lVert \mathbf{n} \rVert \, \lVert \mathbf{y} \rVert}\right)$$

A detected plane was retained only if it met two criteria:

- 1. Minimum number of inliers: the plane had to contain at least a minimum number of inlier points,
- 2. Plane orientation: the angle $\theta$ had to stay below an angle threshold.

If both criteria were met, the plane was considered approximately horizontal and retained. The angle threshold was empirically determined based on the camera's tilt during data acquisition.
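The two retention criteria can be expressed compactly, as in the sketch below. The values `min_inliers=100` and `max_tilt_deg=20.0` are placeholders chosen only for illustration, since the thresholds are not restated here. The absolute value of the dot product is an added assumption that makes the test independent of the sign of the estimated normal.

```python
import numpy as np

def plane_tilt_deg(normal, vertical=(0.0, 1.0, 0.0)):
    """Angle in degrees between a plane normal and the vertical (Y) axis."""
    n = np.asarray(normal, dtype=float)
    v = np.asarray(vertical, dtype=float)
    # abs() treats a normal and its negation as the same plane orientation.
    cos_theta = abs(n @ v) / (np.linalg.norm(n) * np.linalg.norm(v))
    return float(np.degrees(np.arccos(np.clip(cos_theta, 0.0, 1.0))))

def keep_plane(normal, n_inliers, min_inliers=100, max_tilt_deg=20.0):
    """Retain a plane only if it is large enough and roughly horizontal.

    Both thresholds are hypothetical placeholders.
    """
    return n_inliers >= min_inliers and plane_tilt_deg(normal) <= max_tilt_deg

print(keep_plane((0.0, -1.0, 0.0), n_inliers=500))  # horizontal ground-like plane
print(keep_plane((1.0, 0.0, 0.0), n_inliers=500))   # vertical plane, e.g., along the trunk
```

In an Open3D-based implementation, `normal` would correspond to the first three coefficients of the plane model returned by segment_plane().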

The segmentation process continued until fewer than 30 points remained in the bottom point cloud B. A visualization of the segmented planes representing grass, highlighted in red and purple, can be seen in Figure 3b. In the next step, only the dominant plane, containing the highest number of inlier points, was selected, as this plane consistently corresponded to a horizontally aligned surface resembling the characteristic pattern of grass (e.g., the red plane in Figure 3c). Other segmented planes, which had fewer points and larger tilt angles, were often found intersecting lower parts of the tree structure (e.g., trunk), mistakenly including tree points as part of the grass. By focusing on the most dominant horizontal plane, this approach minimized the risk of removing valid tree geometry and improved the accuracy of the grass segmentation process. Additionally, it was necessary to incorporate points from other segmented planes that also represented the grass surface, visible as black points near the red plane in Figure 3c. To achieve this, the point cloud B was updated by selecting points from the original point cloud P that satisfied the condition of having a Y-axis value less than $y_{\min}$ + 0.7 m, where $y_{\min}$ is the minimum Y-coordinate in P. This elevated threshold accounts for potential inclinations in the grass plane. For each point $p = (x, y, z)$ in B, its orthogonal distance D to the plane was calculated as follows:

$$D = \frac{|ax + by + cz + d|}{\sqrt{a^2 + b^2 + c^2}}$$

where $a$, $b$, $c$ are the plane normal coefficients and $d$ is the plane offset ($y_{\min}$ + 0.7 m). If $D \le 0.35$ m, the point was considered part of the dominant grass plane.

Finally, all points within ±0.35 m of the dominant plane were labeled as grass and removed from the original point cloud P, while the remaining points were retained as part of the tree structure.
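A minimal sketch of this distance-based removal is given below. The plane is assumed to be given by coefficients (a, b, c, d) of the equation ax + by + cz + d = 0. The function name is hypothetical, and for brevity the test is applied to all input points rather than only to the lower subset B.

```python
import numpy as np

def remove_points_near_plane(points, plane, max_dist=0.35):
    """Drop points whose orthogonal distance to the plane is at most max_dist (m)."""
    a, b, c, d = plane
    normal = np.array([a, b, c], dtype=float)
    dist = np.abs(points @ normal + d) / np.linalg.norm(normal)
    grass_mask = dist <= max_dist
    return points[~grass_mask], grass_mask

# Toy scene with a horizontal grass plane at y = 0.
points = np.array([[0.0, 0.1, 1.0], [0.5, -0.2, 1.2],
                   [0.0, 1.0, 1.0], [0.1, 2.0, 1.1]])
tree, grass_mask = remove_points_near_plane(points, plane=(0.0, 1.0, 0.0, 0.0))
print(grass_mask)
```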

In cases where RANSAC failed to detect any valid planes, grass points were heuristically identified based on their spatial location. Specifically, the points from the original point cloud P were divided into two subsets by a height threshold along the Y-axis: B, representing the lower region of the scene, and T, representing the upper part of the point cloud. The minimum Z-value among the points in T was computed and denoted as $z_{\min}$. Then, a subset G was defined as those points in the lower region whose Z-coordinate was less than this threshold, i.e., $G = \{p \in B : p_z < z_{\min}\}$. These points in G were identified as likely belonging to distant background structures (e.g., grass or terrain behind the tree) and were removed from the original point cloud. The resulting cleaned point cloud was obtained as $P \setminus G$.
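The fallback heuristic can be sketched as follows. The split height `y_split` is a hypothetical parameter introduced for illustration, because the exact height values separating the lower and upper regions are not restated here.

```python
import numpy as np

def remove_background_fallback(points, y_split):
    """Remove lower-region points lying behind the upper part of the tree.

    y_split is a hypothetical height (m) separating lower and upper regions.
    """
    y, z = points[:, 1], points[:, 2]
    lower = y < y_split
    upper = ~lower
    if not upper.any():
        return points  # nothing to compare against, leave the cloud unchanged
    z_min_upper = z[upper].min()
    background = lower & (z < z_min_upper)
    return points[~background]

points = np.array([[0.0, 0.1, -2.0],   # low and far behind the tree: removed
                   [0.1, 0.2, -0.5],   # low, within the tree depth range: kept
                   [0.0, 1.0, -1.0],
                   [0.0, 1.5, -0.3]])
cleaned = remove_background_fallback(points, y_split=0.6)
print(cleaned)
```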

The output of the algorithm is the point cloud of the tree without grass or background structures, in cases where grass was initially detected or objects were present in the background (see Figure 3d).

4. Tree Reconstruction

Having clear, noise-free point clouds is important when applying reconstruction algorithms. Many commonly used methods, such as Iterative Closest Point (ICP), tend to perform poorly in the presence of noise and outliers [22], while RANSAC's performance degrades significantly as the noise level increases [23]. An additional challenge arises when reconstructing unordered and sparse objects, such as trees, from multiple viewpoints: the resulting point clouds often have minimal overlap, making it difficult or even impossible to establish reliable correspondences purely through mathematical means, especially in the absence of perceptual context.

The reconstruction process closely follows the methodology described in our previous work [

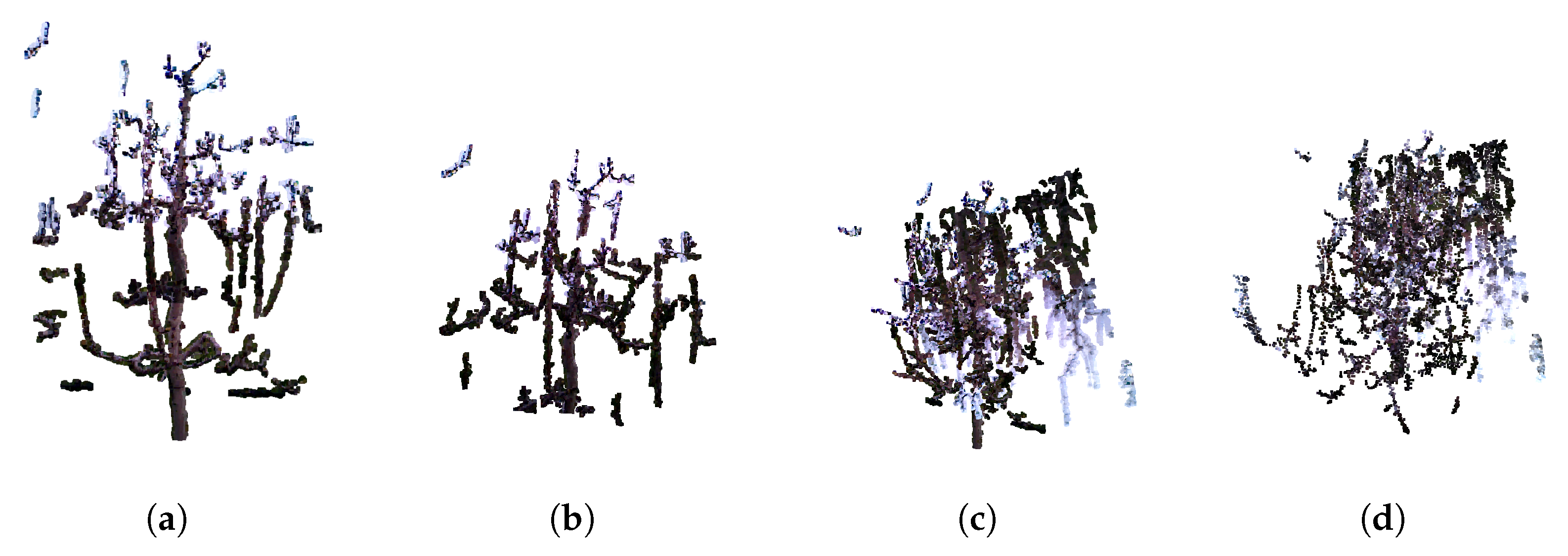

1]. For each tree, four point clouds, subjectively selected based on visual assessment as containing the most relevant structural information, were manually chosen for the reconstruction algorithm. Due to computational constraints, using more than four point clouds would have made registration overly complex; therefore, the selected four were carefully chosen to avoid redundant views and to exclude those lacking meaningful structural information (e.g., point cloud showing only a single branch). In cases where more than four high-quality point clouds were available, preference was always given to those capturing a greater number of additional branches.

Figure 4 illustrates the importance of manual point cloud selection. Successful registration using four manually chosen point clouds is shown in Figure 4a. In contrast, Figure 4b demonstrates that using fewer than four point clouds leads to incomplete reconstruction. Figure 4c shows a failed registration when four point clouds are selected at random, while Figure 4d depicts registration failure when all six available point clouds are used. These results highlight that careful manual selection of representative views is crucial for reliable reconstruction. Furthermore, by systematically observing which viewpoints consistently lead to successful registrations, it will be possible in future work to predefine optimal acquisition positions, thereby eliminating the need for manual selection and enabling a fully automated pipeline.

As detailed in Section 3.2, point clouds were first preprocessed and then sequentially registered. In our previous work [1], the registration process employed global registration (RANSAC) as an initial step for the local ICP method. The ICP algorithm has several limitations: the accuracy of the results relies on a good initial alignment and can be affected by point cloud imperfections such as noise, outliers, and partial overlaps [24]. In this paper, the objects of interest are tree structures, which are sparse and not entirely rigid due to potential wind-induced movement. These characteristics, combined with small overlapping areas in the chosen images and noise introduced by neighboring branches, pose a significant challenge for standard ICP registration. In such conditions, characterized by limited overlap, structural sparsity, and nonrigid deformations, ICP is likely to converge to incorrect alignments or fail altogether.

A review of the literature highlights a fast and certifiable algorithm called Truncated least squares Estimation And SEmidefinite Relaxation (TEASER++) for the registration of two sets of point clouds with a large number of outlier correspondences [15]. TEASER++ is designed to provide certifiable global optimality in the presence of extreme outlier rates, handling up to 99% of outliers, by formulating the rotation estimation as a semidefinite program (SDP) and solving it efficiently through convex relaxation techniques. Unlike traditional methods such as ICP, TEASER++ is not only more accurate, but also significantly faster, often completing tasks in milliseconds [15]. Its certifiability, robustness to outliers, and deterministic success bounds make it especially suitable for registering sparse, partially overlapping structures such as trees.

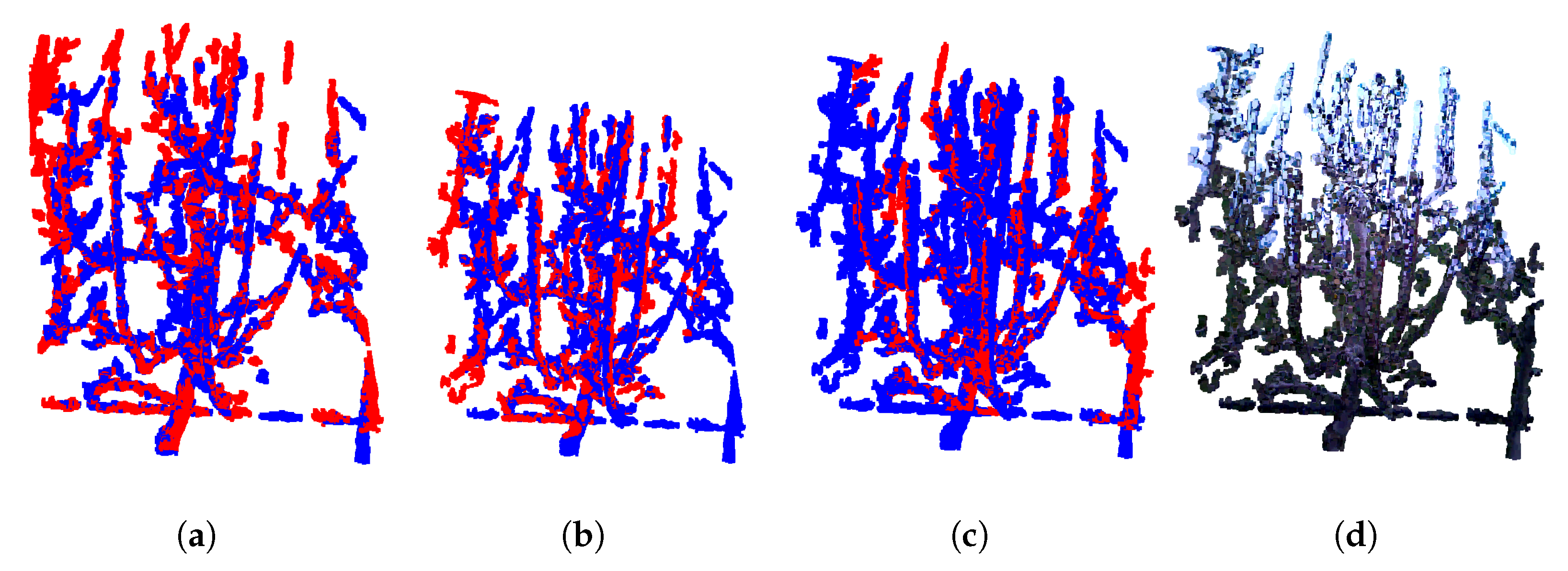

The algorithm for pear tree reconstruction is presented as Algorithm 2, which is explained using a pre-pruning dataset example seen in Figure 5. The input consists of four preprocessed point clouds representing different views of the tree. When combined, these provide an almost complete scan of the tree. The first point cloud is always designated as the stationary reference and is referred to as the target point cloud (Figure 5a), while the remaining point clouds are considered source point clouds (Figure 5b–d). The algorithm is designed so that the final integrated point cloud remains aligned within the reference frame of the initial target point cloud.

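| Algorithm 2: RegistrationTEASER: Sequential TEASER++ registration |
| --- |
| 1: procedure RegistrationTEASER(four preprocessed point clouds) |
| 2: target ← first point cloud |
| 3: for i ← 1 to 3 do |
| 4: source ← point cloud i + 1 |
| 5: Voxel-downsample source and target (voxel size 0.02 m) |
| 6: Extract FPFH features from the downsampled source and target |
| 7: (source_corr, target_corr) ← nearest-neighbor feature correspondences |
| 8: T ← TEASER++(source_corr, target_corr) |
| 9: Apply T to the full-resolution source |
| 10: target ← target ∪ transformed source |
| 11: end for |
| 12: return target |
| 13: end procedure |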

Since each preprocessed point cloud contains more than 40,000 points, voxel downsampling was performed for both the source and target clouds to improve computational efficiency. A voxel size of 0.02 m was chosen as an effective compromise between registration accuracy and runtime performance. Preliminary tests showed that smaller voxel sizes (e.g., 0.01 m) preserved fine geometric details but significantly increased computation time and occasionally led to unstable registrations. Conversely, larger voxel sizes (e.g., 0.03 m) reduced computational costs, but resulted in insufficient point density, and thus lower alignment accuracy. The visual evaluation of the registration results in combination with these computational considerations, indicated that a voxel size of 0.02 m provided the best balance between accuracy and efficiency. TEASER++ relies on Fast Point Feature Histogram (FPFH) [

25] descriptors to establish correspondences between point clouds [

15]. First, FPFH features are extracted from the downsampled source and target point clouds (

and

). Using KDTreeFlann [

21], nearest neighbor searches are conducted on the target features for each source feature, producing arrays of source and target correspondences. Next, global registration is performed using TEASER++ with the identified correspondences (

source_corr,

target_corr). The open-source TEASER++ library [

26] (C++ with Python and MATLAB bindings) is used for this step. The TEASER++ parameters employed for the registration process are summarized in

Table 2. Among them, two parameters were adjusted from their default values: the

noise_bound was set to 0.02, matching the voxel size used for downsampling, instead of the default value of 1, and the

rotation_estimation_algorithm was set to

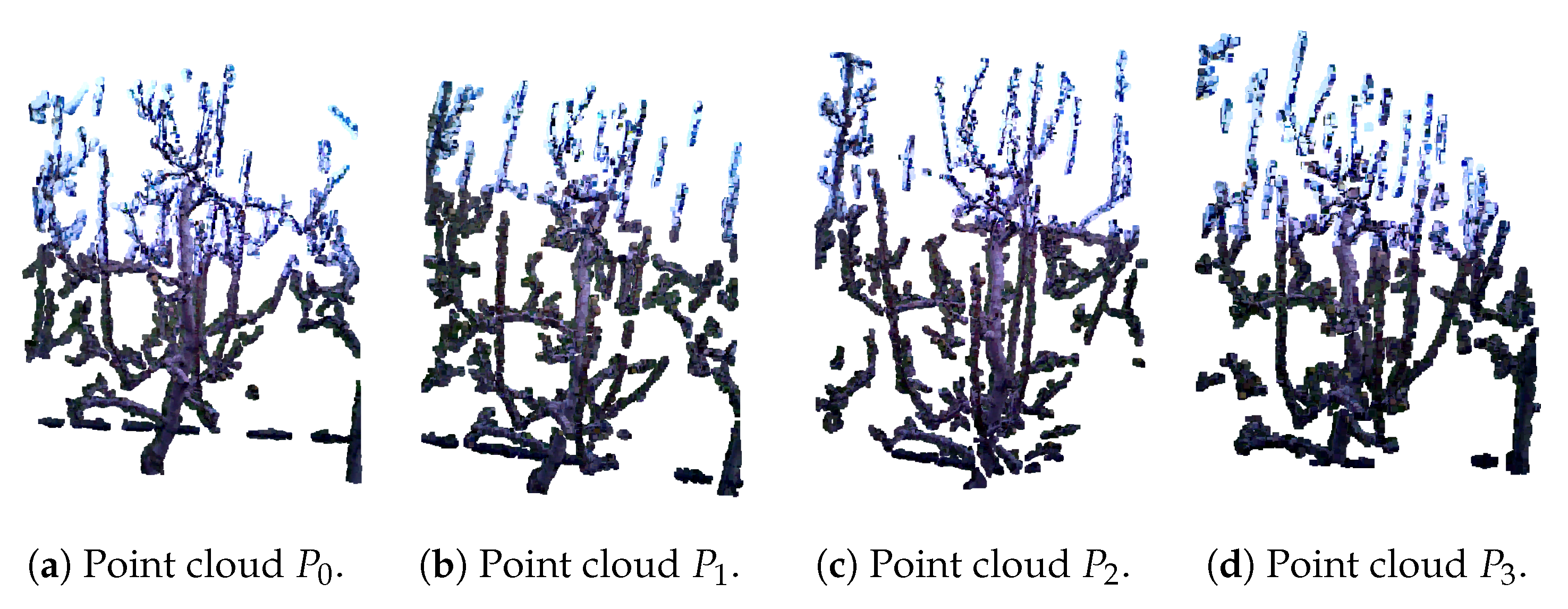

False, since additional rotation estimation was unnecessary. The output is a transformation matrix that maps the source point cloud into the target point cloud’s reference frame. In this paper, the target point cloud is consistently colored blue, and the source point cloud red. The resulting registration after applying computed transformation matrix on source point cloud, shown in

Figure 6a, demonstrates good overlap in key areas such as the tree trunk, a prominent vertical branch, and the horizontal support wire near the base of the scene. In the next step, the target point cloud is updated to include the previously registered source point cloud, with the combined target point cloud. In next iteration, the new source point cloud, shown in

Figure 5c, is registered against this updated target after finding correspondences and suitable transformation, resulting in the point cloud seen in

Figure 6b. This iterative process continues until all point clouds have been registered. The final result of combining all four point clouds, shown in their original colors, is presented in

Figure 6d. It is important to note that while the transformation matrices are computed using the downsampled point clouds, these matrices are ultimately applied to the original, full-resolution source point clouds for final storage and visualization.
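The sequential scheme, in which each new source is registered against the growing target, can be sketched independently of the specific pairwise solver. In the sketch below, the pairwise step is a closed-form rigid alignment from known one-to-one correspondences (the Kabsch method), used purely as a stand-in for the FPFH and TEASER++ stage. All function names are hypothetical, and voxel downsampling is omitted for brevity.

```python
import numpy as np

def apply_transform(points, T):
    """Apply a 4x4 homogeneous transformation to an (N, 3) point array."""
    return points @ T[:3, :3].T + T[:3, 3]

def kabsch(src, dst):
    """Rigid transform mapping src onto dst, given row-wise correspondences.

    Stand-in for the TEASER++ solver; it is not robust to outliers.
    """
    src_c, dst_c = src.mean(axis=0), dst.mean(axis=0)
    H = (src - src_c).T @ (dst - dst_c)
    U, _, Vt = np.linalg.svd(H)
    D = np.diag([1.0, 1.0, np.sign(np.linalg.det(Vt.T @ U.T))])
    R = Vt.T @ D @ U.T
    T = np.eye(4)
    T[:3, :3] = R
    T[:3, 3] = dst_c - R @ src_c
    return T

def register_sequentially(clouds, register_pair):
    """Register each cloud against the accumulated target, then merge it."""
    target = clouds[0]
    for source in clouds[1:]:
        T = register_pair(source, target)
        target = np.vstack([target, apply_transform(source, T)])
    return target

def make_view(points, angle, translation):
    """Create a rotated (about Z) and translated copy of a point set."""
    c, s = np.cos(angle), np.sin(angle)
    R = np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])
    return points @ R.T + np.asarray(translation)

rng = np.random.default_rng(0)
base = rng.uniform(-1.0, 1.0, size=(50, 3))
clouds = [base,
          make_view(base, 0.3, (0.1, 0.0, 0.2)),
          make_view(base, -0.5, (0.0, 0.3, 0.0)),
          make_view(base, 1.0, (-0.2, 0.1, 0.1))]
# Toy correspondences: the first len(source) rows of the target are the base cloud.
merged = register_sequentially(clouds, lambda s, t: kabsch(s, t[:len(s)]))
print(merged.shape)
```

Because every later registration depends on the merged target, an early misalignment in this loop affects all subsequent steps.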

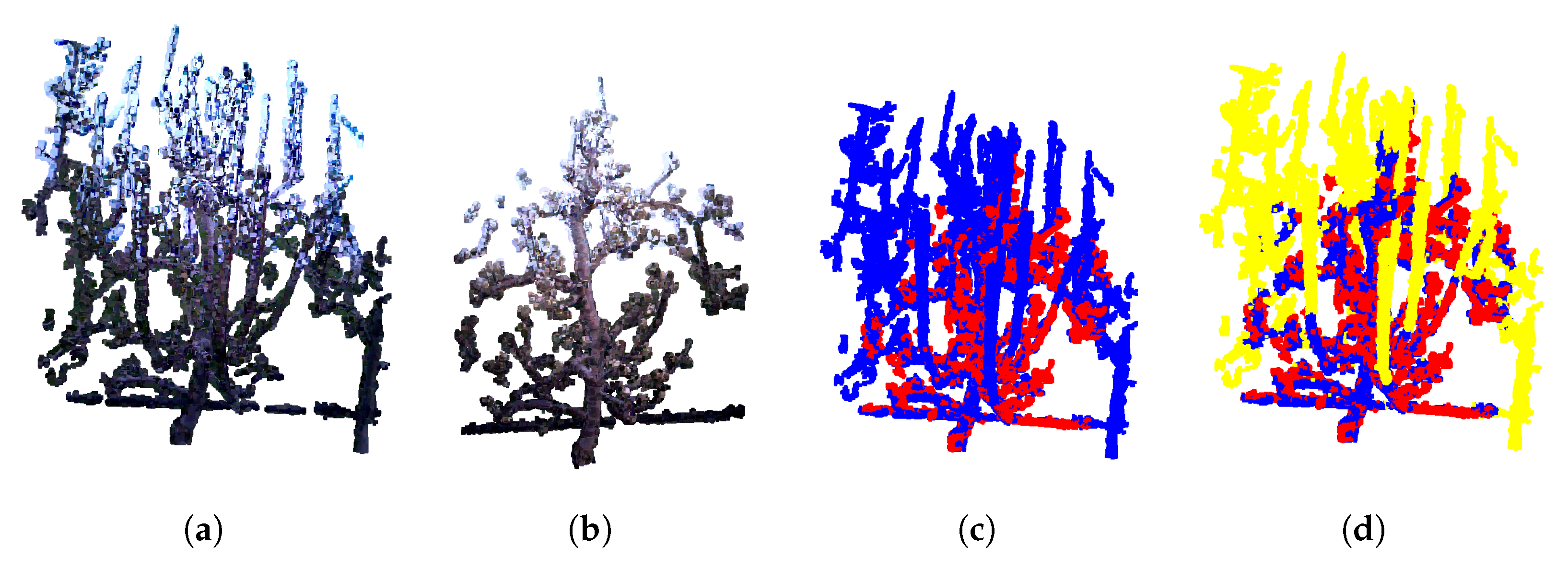

The same process was also applied to generate models from the post-pruning images. An example of a resulting post-pruning model is shown in Figure 7b. Comparing the pre-pruning model (Figure 7a) with the post-pruning model (Figure 7b) clearly illustrates the extent of branch removal due to pruning.

A limitation of this sequential point cloud registration approach is the accumulation of errors. If an error occurs early in the sequence, it can propagate and negatively affect all subsequent transformations. However, since the images were captured in a strict, consecutive order, it is reasonable to assume that each newly added point cloud shares increasing overlap with the existing model, facilitating easier registration. An alternative approach, registering only neighboring point clouds, was also tested but frequently failed when consecutive images lacked sufficient overlap, leading to incorrect registration. An additional limitation of this work is the reliance solely on visual inspection for evaluating each step of the registration. In previous work [1], Intersection over Union and Chamfer Distance were employed, but in this study, these metrics, together with RMSE, proved unreliable for detecting incorrect registrations in the absence of ground truth. The challenge of robust evaluation warrants further investigation, and the development and adoption of additional metrics will be addressed in future work.
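For reference, one common form of the Chamfer Distance is sketched below. Definitions differ in the literature (squared or unsquared distances, sums or means), so this variant is an assumption rather than the exact formulation used in [1]. The toy example hints at one possible reason why such a metric is hard to interpret for partially overlapping views: it stays above zero even for perfectly aligned clouds when one cloud covers only part of the other.

```python
import numpy as np

def chamfer_distance(a, b):
    """Symmetric Chamfer Distance: mean nearest-neighbor distance in both directions."""
    d = np.linalg.norm(a[:, None, :] - b[None, :, :], axis=2)
    return d.min(axis=1).mean() + d.min(axis=0).mean()

a = np.array([[0.0, 0.0, 0.0]])                   # partial view
b = np.array([[0.0, 0.0, 0.0], [1.0, 0.0, 0.0]])  # fuller view, perfectly aligned with a
print(chamfer_distance(b, b))
print(chamfer_distance(a, b))
```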

Another improvement over the previous work was the substitution of the ICP algorithm with TEASER++, which required only a single run per registration. In contrast, the earlier approach necessitated running ICP five times for each registration to increase the likelihood of successful alignment. This repetition significantly increased computation time, as ICP often failed to achieve satisfactory alignment on the initial attempt. Moreover, if a suitable overlap was not found within these five iterations, the registration typically failed entirely.

As mentioned in Section 3, images were captured for a total of 184 trees, representing a complete row in the orchard. These trees were captured both before and after the pruning process. All generated point clouds were preprocessed by applying rotation corrections and removing excess grass and background elements. Subsequently, the point clouds were registered using the TEASER++ algorithm for both pre- and post-pruning scans. This approach facilitates the efficient and cost-effective generation of ground truth data, eliminating the need for experts to manually label branches to be pruned, which will be explained in Section 5.

The TEASER++ registration successfully aligned all four point clouds for 133 trees pre-pruning (72.3%) and 143 trees post-pruning (77.7%), as summarized in Table 3. Among these, 103 trees (55.9%) had both pre- and post-pruning models correctly reconstructed. For these, a final registration was performed using TEASER++ to generate the overlapped pre- and post-pruning models, treating the pre-pruning model as the target (blue in Figure 7c) and the post-pruning model as the source point cloud (red in Figure 7c). After visual inspection, 101 (98.1%) of these combined models were deemed successfully created.

In Table 4, we provide a timing overview of the reconstruction, including preprocessing and registration phases. The preprocessing step includes grass removal, applied to four selected RGB-D images. Registration involves point cloud voxelization, FPFH feature extraction, correspondence finding, and matching with TEASER++. Since the registration process is performed sequentially, the table reports the elapsed times for each phase: registration of the first and second point clouds, then of the combined target and the third point cloud, and finally of the combined target and the fourth point cloud. The results indicate that registration takes only a few seconds per tree on average, while preprocessing requires a couple of minutes per tree on average, making it the main bottleneck of the overall process. Grass removal and registration experiments were conducted on a 64-bit Linux system equipped with an Intel(R) Core(TM) i7-7700 CPU at 3.60 GHz (4 cores, 8 threads, 8 MiB L3 cache) and an NVIDIA GeForce GTX 1060 6GB GPU.

6. Experimental Evaluation

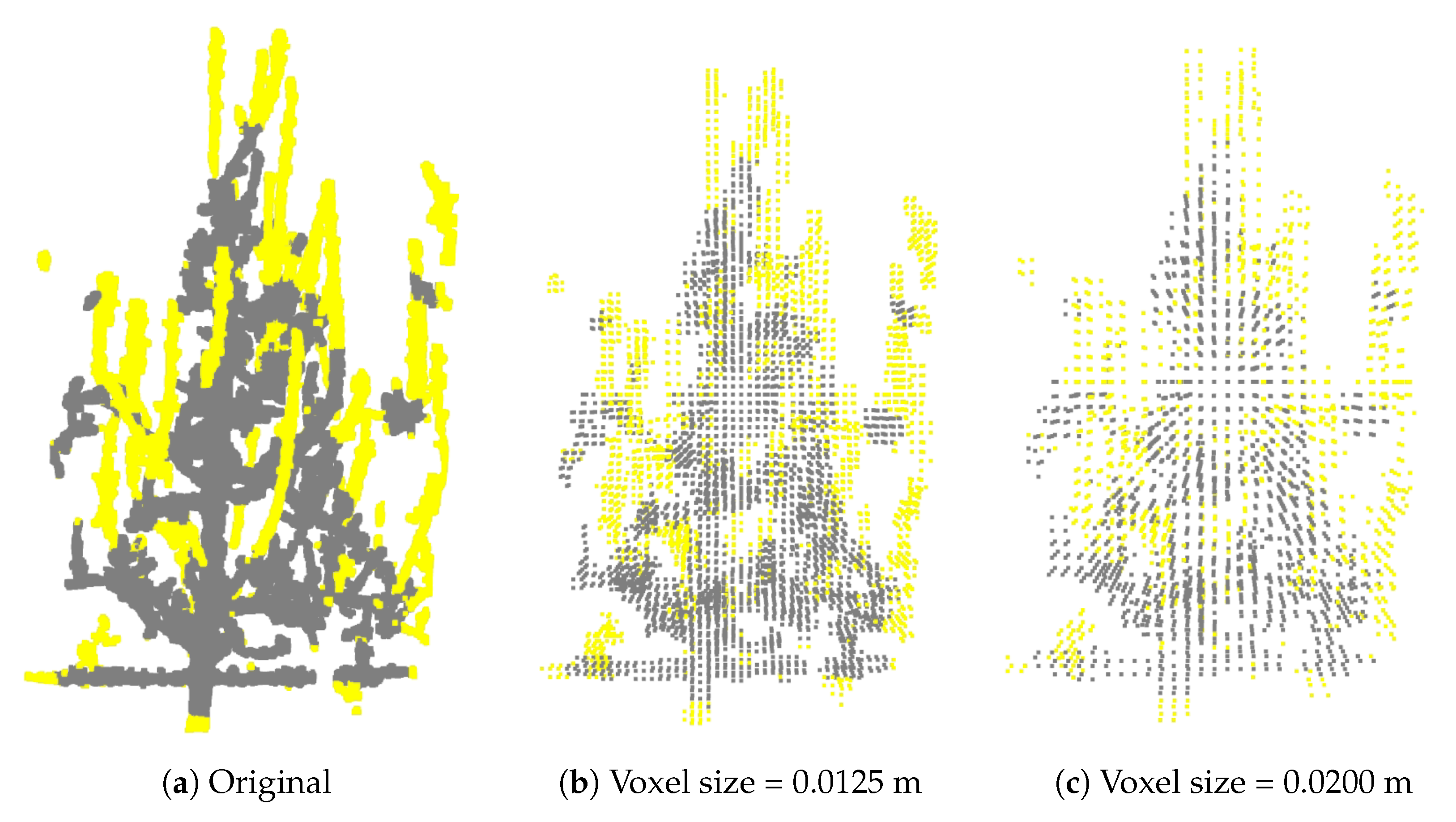

To evaluate the overall process of predicting branches to be pruned, the PointNet++ model was trained on point clouds of partial tree models, along with labeled points obtained by overlapping the reconstructed pre- and post-pruning models. Following training, the model was tested on a separate set, where it predicted the points expected to belong to branches to be pruned. Quantitative evaluation was performed by calculating precision, recall, and F1 score from comparisons between the model’s predictions and the ground truth labels. To gain a better understanding of the prediction quality, visual inspections were also conducted, allowing for qualitative comparison between predicted points and ground truth annotations.

A small ablation study was conducted to assess the impact of various parameters. The original point clouds were referenced to the camera that captured the first RGB-D image of each tree, and this viewpoint was inconsistent across the dataset (sometimes on the left, other times on the right). The point clouds were therefore centered so that their origin lies at the center of all tree points. Additionally, normalization of the point clouds was applied. To accelerate training and evaluate the network’s robustness to various voxel sizes, the normalized and centered point clouds were additionally voxelized at eight different voxel sizes, ranging from 2.5 mm to 2 cm, resulting in a total of ten dataset variations with corresponding ground truth labels. Details regarding these datasets are provided in Table 7, where dataset names indicate voxel size in meters. Examples of the 3D model with different voxel sizes are shown in Figure 8.
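The centering, normalization, and voxelization steps can be illustrated with a minimal sketch in plain Python. It is not the code used in this work: the function names are hypothetical, unit-sphere scaling is only an assumed form of normalization, and representing each occupied voxel by the mean of its points is one common choice among several.

```python
import math
from collections import defaultdict


def center(points):
    """Translate the cloud so that the centroid of all points is the origin."""
    n = len(points)
    centroid = [sum(p[i] for p in points) / n for i in range(3)]
    return [tuple(p[i] - centroid[i] for i in range(3)) for p in points]


def normalize(points):
    """Scale a centered cloud so that its farthest point lies at distance 1.

    Assumption: unit-sphere scaling; the normalization actually used may differ.
    """
    radius = max(math.sqrt(x * x + y * y + z * z) for x, y, z in points)
    if radius == 0.0:
        return list(points)
    return [(x / radius, y / radius, z / radius) for x, y, z in points]


def voxelize(points, voxel_size):
    """Replace all points that share a cubic voxel with their mean."""
    voxels = defaultdict(list)
    for p in points:
        key = tuple(math.floor(c / voxel_size) for c in p)
        voxels[key].append(p)
    return [tuple(sum(axis) / len(group) for axis in zip(*group))
            for group in voxels.values()]


# Toy cloud in meters: two tight pairs of points on either side of (5, 5, 5).
cloud = [(5.101, 5.101, 5.101), (5.103, 5.103, 5.103),
         (4.899, 4.899, 4.899), (4.897, 4.897, 4.897)]
reduced = voxelize(center(cloud), voxel_size=0.02)
print(len(cloud), "->", len(reduced), "points")
```

A larger `voxel_size` merges more points per voxel, which shortens training at the cost of geometric detail.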

Hyperparameter tuning was also performed, including variations in batch size, learning rate, and the number of points sampled, as shown in Table 6. Training was carried out on an NVIDIA GeForce RTX 4070 Ti (12 GB GDDR6X, CUDA version 12.6), which limited the convergence of certain dataset and parameter variations. To assess the stability of the results, experiments were repeated with two different random seeds (10 and 42), used for splitting the dataset into training, validation, and test sets. The results showed consistent trends, indicating that the performance of the model was not significantly affected by either the hyperparameter values or the choice of seed. The training details and test results, including precision, recall, and F1 scores, are summarized in Table 8.
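The role of the seed in the dataset split can be sketched as follows. This is an illustration only: the function name and the 70/15/15 split fractions are assumptions, and only the seed values 10 and 42 and the count of 101 usable models come from the text.

```python
import random


def split_dataset(sample_ids, seed, train_frac=0.70, val_frac=0.15):
    """Shuffle the sample ids with a fixed seed and cut them into three parts.

    The fractions are hypothetical defaults, not the values used in the paper.
    """
    ids = sorted(sample_ids)      # fixed starting order, independent of input order
    rng = random.Random(seed)     # local generator, leaves global state untouched
    rng.shuffle(ids)
    n_train = int(len(ids) * train_frac)
    n_val = int(len(ids) * val_frac)
    train = ids[:n_train]
    val = ids[n_train:n_train + n_val]
    test = ids[n_train + n_val:]
    return train, val, test


# 101 tree models, split once per seed.
tree_ids = list(range(101))
for seed in (10, 42):
    train, val, test = split_dataset(tree_ids, seed)
    print(seed, len(train), len(val), len(test))
```

Fixing the seed makes each split reproducible, so differences between runs with seeds 10 and 42 can be attributed to the composition of the subsets rather than to an uncontrolled shuffle.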

In this application, high precision is particularly crucial because it indicates that the network is not predicting false positives—meaning it is unlikely to mistakenly identify parts of the tree, such as branches or other structures, as candidates for pruning when they should not be. This is important because unnecessary cutting of healthy parts can cause damage to the tree and compromise its overall health and stability. Therefore, ensuring high precision helps minimize the risk of incorrect pruning and preserves the integrity of the tree.
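For reference, the three metrics can be computed from per-point binary labels as in the sketch below. The function name and the toy label vectors are illustrative assumptions; label 1 denotes a point on a branch to be pruned.

```python
def pruning_metrics(predicted, ground_truth):
    """Return (precision, recall, F1) for binary per-point labels."""
    pairs = list(zip(predicted, ground_truth))
    tp = sum(1 for p, g in pairs if p == 1 and g == 1)  # correctly predicted pruning points
    fp = sum(1 for p, g in pairs if p == 1 and g == 0)  # points wrongly marked for pruning
    fn = sum(1 for p, g in pairs if p == 0 and g == 1)  # pruning points the model missed
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return precision, recall, f1


# Toy example: 3 true positives, 1 false positive, 3 false negatives.
predicted    = [1, 1, 1, 1, 0, 0, 0, 0]
ground_truth = [1, 1, 1, 0, 1, 1, 1, 0]
precision, recall, f1 = pruning_metrics(predicted, ground_truth)
print(f"precision={precision:.2f} recall={recall:.2f} f1={f1:.2f}")
# prints "precision=0.75 recall=0.50 f1=0.60"
```

In the toy example, only one of the four predicted pruning points is wrong, so precision stays high even though half of the ground truth pruning points are missed.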

While quantitative metrics such as precision and recall provide valuable indicators of the model’s performance, they alone are insufficient to fully understand the causes of false predictions. These metrics do not offer insight into the specific reasons behind incorrect classifications, such as whether false positives are due to ambiguous features or ground truth inaccuracies. To gain a deeper understanding of the model’s behavior and to better assess its practical applicability, a visual inspection of the test samples was conducted. This qualitative analysis allows us to observe the pattern, context, and potential sources of errors that are not apparent from numerical metrics alone. The inspection used the test samples from the chosen training variation with batch size = 2, learning rate = 0.0025, seed = 42, and npoints = min_points. Specifically, we selected the dataset with voxelization at 2.5 mm, as this variation achieved the highest precision within this training variation. The point clouds represent trees with predicted points indicating branches for pruning, with four different colors depicting the classification results: green for true positives (correctly predicted pruning points), gray for true negatives (correctly predicted non-pruning points), red for false positives (incorrectly predicted pruning points), and blue for false negatives (ground truth pruning points missed by the model). Among the test samples, three common scenarios emerged. First, some examples, like those shown in Figure 9, exhibit high precision, with the model accurately identifying pruning points.
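The color coding used in these visualizations can be reproduced with a few lines of Python. The sketch below is illustrative only: the exact RGB values and the function names are assumptions, and only the mapping from outcome to color name follows the description above.

```python
# Assumed RGB triples for the four outcome classes.
COLORS = {
    "TP": (0, 255, 0),      # green: correctly predicted pruning point
    "TN": (128, 128, 128),  # gray: correctly predicted non-pruning point
    "FP": (255, 0, 0),      # red: incorrectly predicted pruning point
    "FN": (0, 0, 255),      # blue: ground truth pruning point missed by the model
}


def outcome(predicted, truth):
    """Classify one point from its predicted and ground truth labels (0 or 1)."""
    if predicted == 1:
        return "TP" if truth == 1 else "FP"
    return "FN" if truth == 1 else "TN"


def colorize(predicted_labels, truth_labels):
    """Return one RGB color per point, ready to attach to the point cloud."""
    return [COLORS[outcome(p, t)] for p, t in zip(predicted_labels, truth_labels)]


colors = colorize([1, 1, 0, 0], [1, 0, 1, 0])
print(colors)
```

The resulting list has one color per point and can be assigned to the point cloud in any viewer that supports per-point colors.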

Second, there are cases where the predicted cutting points are very close to the ground truth, as illustrated in Figure 10. In such cases, introducing a post-processing module that uses information about tree morphology and pruning rules could be beneficial. Designing such a module is a complex task that requires collaboration with agronomy experts, and it is one of the topics of our future research. We hope that such a post-processing module will improve the pruning predictions obtained by PointNet++ or by any other neural network or algorithm.

Third, some samples, such as those in Figure 11, demonstrate lower precision and recall, often due to inaccuracies or inconsistencies in the ground truth annotations themselves and to test models that do not resemble those in the training set.

This visual assessment provides valuable insights into the model’s practical performance and highlights the importance of careful ground truth validation.

7. Discussion

The primary motivation of this study was to develop and evaluate an automated pipeline for predicting branches to be pruned in orchards. Since the entire process is interconnected, errors tend to accumulate from one stage to the next, highlighting the potential for targeted improvements at each step.

The pipeline begins with capturing RGB-D images in the orchard. An initial refinement could involve upgrading the imaging sensor, such as employing a Time-of-Flight (ToF) camera, which is less affected by ambient sunlight. This would enable longer imaging sessions throughout the day, not just during late evening hours. Furthermore, higher-quality sensors could produce less noisy images with greater resolution, resulting in more informative point clouds.

Some form of segmentation before the registration process would be beneficial, since it may help remove structures unrelated to the considered tree, such as other trees and supporting trellis wires. The subsequent tree reconstruction could also be improved through further automation. Currently, manual selection of images is required; automating this step by capturing images in a consistent pattern and using all available images for reconstruction would be beneficial. Additionally, an automatic validation mechanism for the reconstruction process, based on metrics or consistency checks, could reduce reliance on manual visual inspection and subjective assessment. This is particularly challenging given the absence of a definitive ground truth. Validation could be performed after each registration phase and upon completion of the full scaffold model, ensuring the accuracy of each reconstruction stage.

The annotations are generated based on the differences between the pre- and post-pruning models, under the assumption that the only changes come from the pruned branches. In practice, however, some points may also be missing in the post-pruning model due to imperfect reconstruction. These missing points are not systematic: sometimes they occur on the trunk, other times on branch segments near the trunk. The network does not register them as important, so it does not attempt to predict them. As a result, they appear as false negatives, which lowers recall and creates a gap between the precision and recall values. The annotation process can be refined by segmenting the tree into its constituent parts (branches, trunk, and other structures) so that only the branches are considered for annotation. This would improve model training by reducing possible confusion and sharpening the network’s focus. Incorporating expert validation directly on the point clouds, accompanied by comparisons to the automatically obtained annotations, would enhance the reliability of the training data.
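The differencing idea behind the automatic annotation can be sketched as follows. This is a simplified illustration rather than the procedure described in Section 5: it assumes that both models are already registered in a common frame, and the function names and the distance threshold are hypothetical.

```python
import math
from collections import defaultdict


def _cell(point, size):
    """Index of the cubic grid cell of edge length `size` containing the point."""
    return tuple(math.floor(c / size) for c in point)


def label_removed_points(pre_points, post_points, threshold):
    """Label a pre-pruning point 1 if no post-pruning point lies within `threshold`.

    Assumes both clouds share one coordinate frame. A hash grid with cell size
    equal to the threshold limits each search to the 27 surrounding cells.
    """
    grid = defaultdict(list)
    for q in post_points:
        grid[_cell(q, threshold)].append(q)

    offsets = (-1, 0, 1)
    labels = []
    for p in pre_points:
        cx, cy, cz = _cell(p, threshold)
        has_neighbor = any(
            math.dist(p, q) <= threshold
            for dx in offsets for dy in offsets for dz in offsets
            for q in grid.get((cx + dx, cy + dy, cz + dz), ())
        )
        labels.append(0 if has_neighbor else 1)
    return labels


# Toy example in meters: the third pre-pruning point has no counterpart afterwards.
pre = [(0.0, 0.0, 0.0), (0.0, 0.0, 0.5), (0.3, 0.0, 1.0)]
post = [(0.001, 0.0, 0.0), (0.0, 0.001, 0.5)]
print(label_removed_points(pre, post, threshold=0.01))
```

A point that is missing from the post-pruning model only because of imperfect reconstruction receives label 1 in exactly the same way as a pruned branch point, which is the source of the label noise discussed above.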

In this dataset, the branches to be pruned are typically longer and grow vertically, a structure that the network recognizes quite effectively. The results suggest that the model’s performance is not highly sensitive to voxel size, provided that the resolution is sufficient to preserve such prominent structures. Therefore, the choice of voxel size can be optimized as a trade-off between training duration and prediction accuracy. Further improvements could be achieved through post-processing of predictions, for example by filtering out points predicted on the trunk rather than on the branches, or by enforcing pruning rules to eliminate anatomically inconsistent predictions. Additionally, fine-tuning the network’s parameters may enhance prediction quality. Expanding the dataset with more diverse and representative samples would also be advantageous, especially to capture edge cases and improve generalization.

Overall, the results demonstrate that the pipeline is meaningful and effective, achieving a precision of approximately 75%. Visual inspection of the predicted branches to be pruned indicates that the network produces plausible results, frequently identifying the correct branches with only a slight offset in the predicted cutting points.

Based on the average duration of the entire reconstruction process shown in Table 4, which includes preprocessing (grass removal) and point cloud registration, grass removal emerges as the primary bottleneck, as it can take several minutes per tree. In contrast, registration is performed in just a few seconds and is compatible with real-time applications. The annotation process, reported in Table 5, is relatively fast, taking approximately 4 s per tree; however, since it can be conducted offline, it is less critical for real-time performance. Regarding training times, as shown in Table 8, the process ranges from about 8 min to 1 h and 16 min. While this could be accelerated with higher-performance hardware, it is performed offline and thus does not impact the system’s operational efficiency. Testing durations are in the realm of real time, ranging from 2 to 22 s per test set (roughly one second per tree in the worst case), and can be further optimized with more powerful computing resources.

The entire pipeline presented in this work opens several avenues for future research. Improvements in sensor technology, automation of reconstruction validation, enhanced annotation methods, and advanced post-processing techniques all present opportunities to refine and extend this approach. Additionally, expanding the dataset to include more diverse tree structures, growth stages, and orchard conditions will further improve model robustness and generalization. Overall, the framework laid out here serves as a foundational step, encouraging ongoing development and innovation in automated tree modeling and pruning prediction.

8. Conclusions

This study outlines a comprehensive pipeline for automated prediction of branches to be pruned, consisting of four stages. First, we created a dataset comprising RGB-D images, corresponding point clouds, reconstructed tree models generated via the proposed reconstruction process, and annotations of branches to be pruned. This dataset provides a valuable resource for future research on tree model reconstruction and pruning prediction, complete with benchmark results for comparative evaluation. Then, the reconstruction process is performed, which utilizes the TEASER++ algorithm, combined with automated preprocessing steps such as background and ground removal, to produce partial 3D models of the trees. After that, branches to be pruned are automatically labeled based on registering models before and after pruning, and identifying points that differ. This step enables the generation of training data for the prediction network. Finally, a deep learning network is trained to predict branches to be pruned, with performance assessed through quantitative metrics and qualitative visual inspections. The results demonstrate the approach’s viability, with the network frequently identifying relevant branches, although some inaccuracies remain.

For each step, potential limitations—such as sensor constraints, manual selection of images used in reconstruction, data-driven annotation, lack of post-processing, and dataset size and variability—have been identified, and improvements have been suggested. These considerations lay the groundwork for future investigations aimed at refining each component, enhancing accuracy, and expanding applicability.