Towards AI-Based Strep Throat Detection and Interpretation for Remote Australian Indigenous Communities

Abstract

1. Introduction

2. Strep Throat Diagnosis in Rural and Remote Indigenous Communities

2.1. Conventional Diagnostic Methods for Streptococcal Pharyngitis

2.2. Why AI Is Essential but Challenging for Strep Throat Diagnosis

3. Proposed AI-Based Model for Strep Throat Diagnosis in Rural and Remote Indigenous Communities

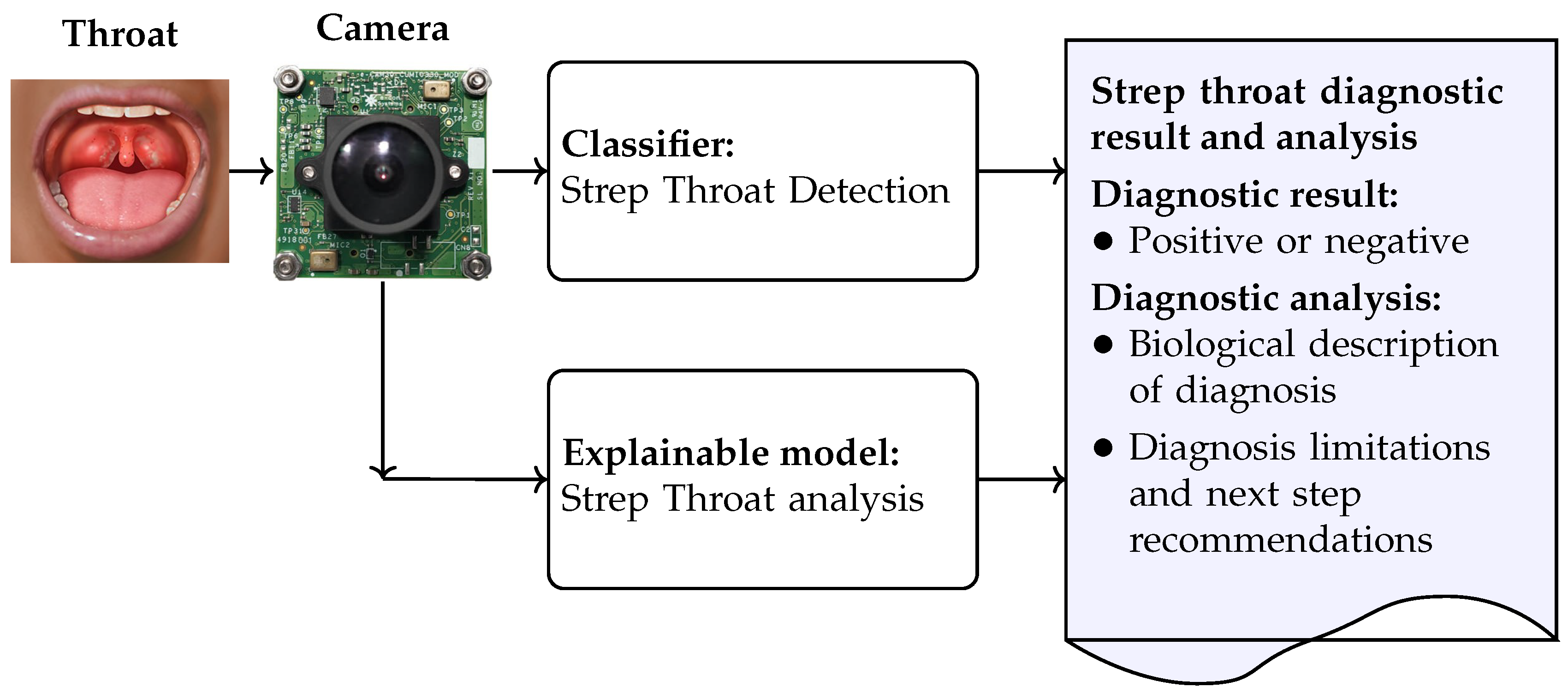

- The strep throat classifier diagnoses strep throat from a throat image captured by the camera. We developed the detection model and evaluated it in terms of detection accuracy, inference time, and model size, seeking a balance among the three so that the model can run on resource-limited devices, such as those available in rural and remote Indigenous communities. The development of the strep throat detection model is detailed in Section 3.1.

- The explainable model improves understanding and transparency in strep throat diagnosis. It generates diagnostic analyses directly from throat images and produces a report that includes both the diagnostic outcome and an explanatory narrative. These interpretable outputs enhance clinical acceptability and, in particular, address the resistance to unfamiliar AI technologies often observed in rural and remote Indigenous communities. Both model visualization and the explainable model can be deployed to foster trust and support informed clinical decision-making. The development of the explainable model is detailed in Section 3.2.
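The two components described above can be sketched as a small pipeline that combines a classifier output with an explanatory narrative in a single report. This is a minimal illustration only: the names `DiagnosticReport`, `diagnose`, `dummy_classifier`, and `dummy_explainer` are hypothetical, and the stand-in functions return fixed values rather than running any trained model.

```python
from dataclasses import dataclass
from typing import Callable, Tuple

# Hypothetical interfaces: the "image" is represented as raw bytes here.
Classifier = Callable[[bytes], Tuple[str, float]]  # returns (label, confidence)
Explainer = Callable[[bytes], str]                 # returns a narrative for the image


@dataclass
class DiagnosticReport:
    """Report holding the diagnostic outcome and an explanatory narrative."""
    label: str
    confidence: float
    narrative: str

    def render(self) -> str:
        return (f"Diagnostic outcome: {self.label} "
                f"(confidence {self.confidence:.0%})\n"
                f"Explanation: {self.narrative}")


def diagnose(image: bytes, classify: Classifier, explain: Explainer) -> DiagnosticReport:
    """Run both components on the same image and merge their outputs."""
    label, confidence = classify(image)
    narrative = explain(image)
    return DiagnosticReport(label, confidence, narrative)


# Stand-in components for illustration only; they do not inspect the image.
def dummy_classifier(image: bytes) -> Tuple[str, float]:
    return ("strep throat", 0.91)


def dummy_explainer(image: bytes) -> str:
    return "Placeholder narrative describing the visible throat findings."


report = diagnose(b"raw-image-bytes", dummy_classifier, dummy_explainer)
print(report.render())
```

In a deployment, the two stand-ins would be replaced by the trained classifier (Section 3.1) and the explainable model (Section 3.2); the report structure itself is an assumption of this sketch.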

3.1. Strep Throat Detection Classifier

3.1.1. Convolutional Neural Networks

3.1.2. Vision Transformers

3.2. Explainable Models

3.2.1. BLIP-2

3.2.2. Low-Rank Adaptation

4. Model Implementation and Validation

4.1. Datasets for Throat Analysis

4.2. Strep Throat Detection Classifier

4.2.1. Classifier Implementation

4.2.2. Detection Accuracy and ROC-AUC

4.2.3. Inference Time and Model Size

4.3. Explainable Model

4.3.1. Label Generation

4.3.2. Fine-Tuning

4.3.3. Validation

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Wiegele, S.; McKinnon, E.; van Schaijik, B.; Enkel, S.; Noonan, K.; Bowen, A.C.; Wyber, R. The epidemiology of superficial Streptococcal A (impetigo and pharyngitis) infections in Australia: A systematic review. PLoS ONE 2023, 18, e0288016.

2. Australian Institute of Health and Welfare. Acute Rheumatic Fever and Rheumatic Heart Disease in Australia, 2016–2020; AIHW: Bruce, ACT, Australia; Darlinghurst, NSW, Australia, 2021.

3. Nolan-Isles, D.; Macniven, R.; Hunter, K.; Gwynn, J.; Lincoln, M.; Moir, R.; Dimitropoulos, Y.; Taylor, D.; Agius, T.; Finlayson, H.; et al. Enablers and barriers to accessing healthcare services for Aboriginal people in New South Wales, Australia. Int. J. Environ. Res. Public Health 2021, 18, 3014.

4. American Medical Association. 2019 AMA Rural Health Issues Survey; AMA: Chicago, IL, USA, 2019.

5. Asokan, P. The Role of AI in Remote Indigenous Healthcare: Detection and Annotation of Strep Throat. Bachelor’s Thesis, Curtin University, Bentley, Australia, 2024.

6. Yoo, T.K.; Choi, J.Y.; Jang, Y.; Oh, E.; Ryu, I.H. Toward automated severe pharyngitis detection with smartphone camera using deep learning networks. Comput. Biol. Med. 2020, 125, 103980.

7. Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin Transformer: Hierarchical Vision Transformer using Shifted Windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Montreal, QC, Canada, 11–17 October 2021; pp. 10012–10022.

8. Cao, X.; Zhang, Y.; Lang, S.; Gong, Y. Swin-Transformer-Based YOLOv5 for Small-Object Detection in Remote Sensing Images. Sensors 2023, 23, 3634.

9. Simonyan, K.; Zisserman, A. Very Deep Convolutional Networks for Large-Scale Image Recognition. arXiv 2014, arXiv:1409.1556.

10. Li, J.; Li, D.; Savarese, S.; Hoi, S. BLIP-2: Bootstrapping Language-Image Pre-training with Frozen Image Encoders and Large Language Models. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023; pp. 14256–14268.

11. Ashurst, J.V.; Edgerley-Gibb, L. Streptococcal pharyngitis. In StatPearls [Internet]; StatPearls Publishing: Treasure Island, FL, USA, 2018.

12. Yeoh, D.K.; Anderson, A.; Cleland, G.; Bowen, A.C. Are scabies and impetigo “normalised”? A cross-sectional comparative study of hospitalised children in northern Australia assessing clinical recognition and treatment of skin infections. PLoS Neglected Trop. Dis. 2017, 11, e0005726.

13. Guntinas-Lichius, O.; Geißler, K.; Mäkitie, A.A.; Ronen, O.; Bradley, P.J.; Rinaldo, A.; Takes, R.P.; Ferlito, A. Treatment of recurrent acute tonsillitis—A systematic review and clinical practice recommendations. Front. Surg. 2023, 10, 1221932.

14. Willis, B.H.; Coomar, D.; Baragilly, M. Comparison of Centor and McIsaac scores in primary care: A meta-analysis over multiple thresholds. Br. J. Gen. Pract. 2020, 70, e245–e254.

15. Mizna, S.; Arora, S.; Saluja, P.; Das, G.; Alanesi, W. An analytic research and review of the literature on practice of artificial intelligence in healthcare. Eur. J. Med. Res. 2025, 30, 382.

16. Lu, J. Will medical technology deskill doctors? Int. Educ. Stud. 2016, 9, 130–134.

17. Jabbour, S.; Fouhey, D.; Shepard, S.; Valley, T.S.; Kazerooni, E.A.; Banovic, N.; Wiens, J.; Sjoding, M.W. Measuring the impact of AI in the diagnosis of hospitalized patients: A randomized clinical vignette survey study. JAMA 2023, 330, 2275–2284.

18. Selvaraju, R.R.; Cogswell, M.; Das, A.; Vedantam, R.; Parikh, D.; Batra, D. Grad-CAM: Visual explanations from deep networks via gradient-based localization. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 618–626.

19. Askarian, B.; Yoo, S.C.; Chong, J.W. Novel Image Processing Method for Detecting Strep Throat (Streptococcal Pharyngitis) Using Smartphone. Sensors 2019, 19, 3307.

20. Tobias, R.R.; De Jesus, L.C.; Mital, M.E.; Lauguico, S.; Bandala, A.; Vicerra, R.; Dadios, E. Throat Detection and Health Classification Using Neural Network. In Proceedings of the 2019 International Conference on Contemporary Computing and Informatics (IC3I), Singapore, 12–14 December 2019; pp. 38–43.

21. Vamsi, Y.; Sai, Y.; Dora, S. Throat Infection Detection Using Deep Learning. UGC Care Group I J. 2022, 12, 127–134.

22. Chng, S.Y.; Tern, P.J.W.; Kan, M.R.X.; Cheng, L.T.E. Deep Learning Model and its Application for the Diagnosis of Exudative Pharyngitis. Healthc. Inform. Res. 2024, 30, 42.

23. Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale. In Proceedings of the International Conference on Learning Representations (ICLR), Virtual Event, 3–7 May 2021.

24. Dai, Z.; Liu, H.; Le, Q.V.; Tan, M. CoAtNet: Marrying Convolution and Attention for All Data Sizes. arXiv 2021, arXiv:2106.04803.

25. Li, L.H.; Yatskar, M.; Yin, D.; Hsieh, C.J.; Chang, K.W. VisualBERT: A simple and performant baseline for vision and language. arXiv 2019, arXiv:1908.03557.

26. Radford, A.; Kim, J.W.; Hallacy, C.; Ramesh, A.; Goh, G.; Agarwal, S.; Sastry, G.; Askell, A.; Mishkin, P.; Clark, J.; et al. Learning transferable visual models from natural language supervision. In Proceedings of the International Conference on Machine Learning, PMLR, Virtual Event, 18–24 July 2021; pp. 8748–8763.

27. Alayrac, J.B.; Donahue, J.; Luc, P.; Miech, A.; Barr, I.; Hasson, Y.; Lenc, K.; Mensch, A.; Millican, K.; Reynolds, M.; et al. Flamingo: A visual language model for few-shot learning. Adv. Neural Inf. Process. Syst. 2022, 35, 23716–23736.

28. Hu, E.J.; Shen, Y.; Wallis, P.; Allen-Zhu, Z.; Li, Y.; Wang, L.; Wang, W.; Chen, W. LoRA: Low-Rank Adaptation of Large Language Models. arXiv 2021, arXiv:2106.09685.

29. Zhu, J.Y.; Park, T.; Isola, P.; Efros, A.A. Unpaired image-to-image translation using cycle-consistent adversarial networks. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2223–2232.

30. Cambay, V.Y.; Barua, P.D.; Baig, A.H.; Dogan, S.; Baygin, M.; Tuncer, T.; Acharya, U.R. Automated Detection of Gastrointestinal Diseases Using Resnet50*-Based Explainable Deep Feature Engineering Model with Endoscopy Images. Sensors 2024, 24, 7710.

31. Huang, Y.; Liang, S.; Cui, T.; Mu, X.; Luo, T.; Wang, S.; Wu, G. Edge Computing and Fault Diagnosis of Rotating Machinery Based on MobileNet in Wireless Sensor Networks for Mechanical Vibration. Sensors 2024, 24, 5156.

32. Perez, H.; Tah, J.H.M.; Mosavi, A. Deep Learning for Detecting Building Defects Using Convolutional Neural Networks. Sensors 2019, 19, 3556.

33. Nguyen, H.; Vo, M.; Hyatt, J.; Wang, Z. AI-Powered Visual Sensors and Sensing: Where We Are and Where We Are Going. Sensors 2025, 25, 1758.

34. Smith, L.N. Cyclical learning rates for training neural networks. arXiv 2015, arXiv:1506.01186.

35. Brin, D.; Sorin, V.; Barash, Y.; Konen, E.; Glicksberg, B.S.; Nadkarni, G.N.; Klang, E. Assessing GPT-4 multimodal performance in radiological image analysis. Eur. Radiol. 2025, 35, 1959–1965.

36. Zhang, S.; Roller, S.; Goyal, N.; Artetxe, M.; Chen, M.; Chen, S.; Dewan, C.; Diab, M.; Li, X.; Lin, X.V.; et al. OPT: Open pre-trained transformer language models. arXiv 2022, arXiv:2205.01068.

37. Reid, M.; Savinov, N.; Teplyashin, D.; Lepikhin, D.; Lillicrap, T.; Alayrac, J.B.; Soricut, R.; Lazaridou, A.; Firat, O.; Schrittwieser, J.; et al. Gemini 1.5: Unlocking multimodal understanding across millions of tokens of context. arXiv 2024, arXiv:2403.05530.

| Parameter | Centor Score | McIsaac Score | FeverPAIN |
|---|---|---|---|
| Validated for | GAS | GAS | β-Hemolytic Streptococcus |
| Target group, age | For patients aged ≤15 years | Primarily for patients aged 3–14 years | |
| Within 3 days after onset | - | - | 1 point |
| Body temperature > 38 °C | 1 point | 1 point | 1 point |
| No cough | 1 point | 1 point | 1 point |
| Cervical lymph node swelling | 1 point | 1 point | - |
| Tonsillar swelling/exudate | 1 point | 1 point | 1 point |
| Tonsillar redness/inflammation | - | - | 1 point |
| Age | - | <15 years: 1 point; ≥45 years: minus 1 point | - |
| Sum of points: score and probability | 0: 2.5% | 0: 2.5% | 0: 14% |
| | 1: 6–7% | 1: 4.4–5.7% | 1: 16% |
| | 2: 15% | 2: 11% | 2: 33% |
| | 3: 30–35% | 3: 28% | 3: 43% |
| | 4: 50–60% | 4–5: 38–63% | 4–5: 63% |
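The point rules in the table above are simple additive scores, which the following sketch makes explicit. It follows the rows exactly as tabulated (one point per criterion, plus the McIsaac age adjustment); the function and parameter names are hypothetical, and the code is an illustration of the arithmetic rather than a clinical tool.

```python
def centor_score(fever: bool, no_cough: bool, swollen_nodes: bool,
                 tonsillar_exudate: bool) -> int:
    """One point per criterion; `fever` means body temperature > 38 °C."""
    return sum([fever, no_cough, swollen_nodes, tonsillar_exudate])


def mcisaac_score(fever: bool, no_cough: bool, swollen_nodes: bool,
                  tonsillar_exudate: bool, age_years: int) -> int:
    """Centor criteria plus the age adjustment listed in the table."""
    score = centor_score(fever, no_cough, swollen_nodes, tonsillar_exudate)
    if age_years < 15:
        score += 1      # <15 years: 1 point
    elif age_years >= 45:
        score -= 1      # >=45 years: minus 1 point
    return score


def feverpain_score(onset_within_3_days: bool, fever: bool, no_cough: bool,
                    tonsillar_exudate: bool, tonsillar_inflammation: bool) -> int:
    """One point per FeverPAIN criterion as listed in the table."""
    return sum([onset_within_3_days, fever, no_cough,
                tonsillar_exudate, tonsillar_inflammation])


# Example: a 10-year-old with fever, no cough, swollen nodes, and exudate.
print(centor_score(True, True, True, True))        # 4
print(mcisaac_score(True, True, True, True, 10))   # 5
print(feverpain_score(True, True, True, True, True))  # 5
```

The resulting sums map to the probability bands in the last rows of the table.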

| Researcher | Method | Accuracy |
|---|---|---|
| Yoo et al. [6] | ResNet50 | 0.953 |
| Chng et al. [22] | EfficientNetB0 | 0.955 |
| Vamsi et al. [21] | CoAtNet | 0.966 |

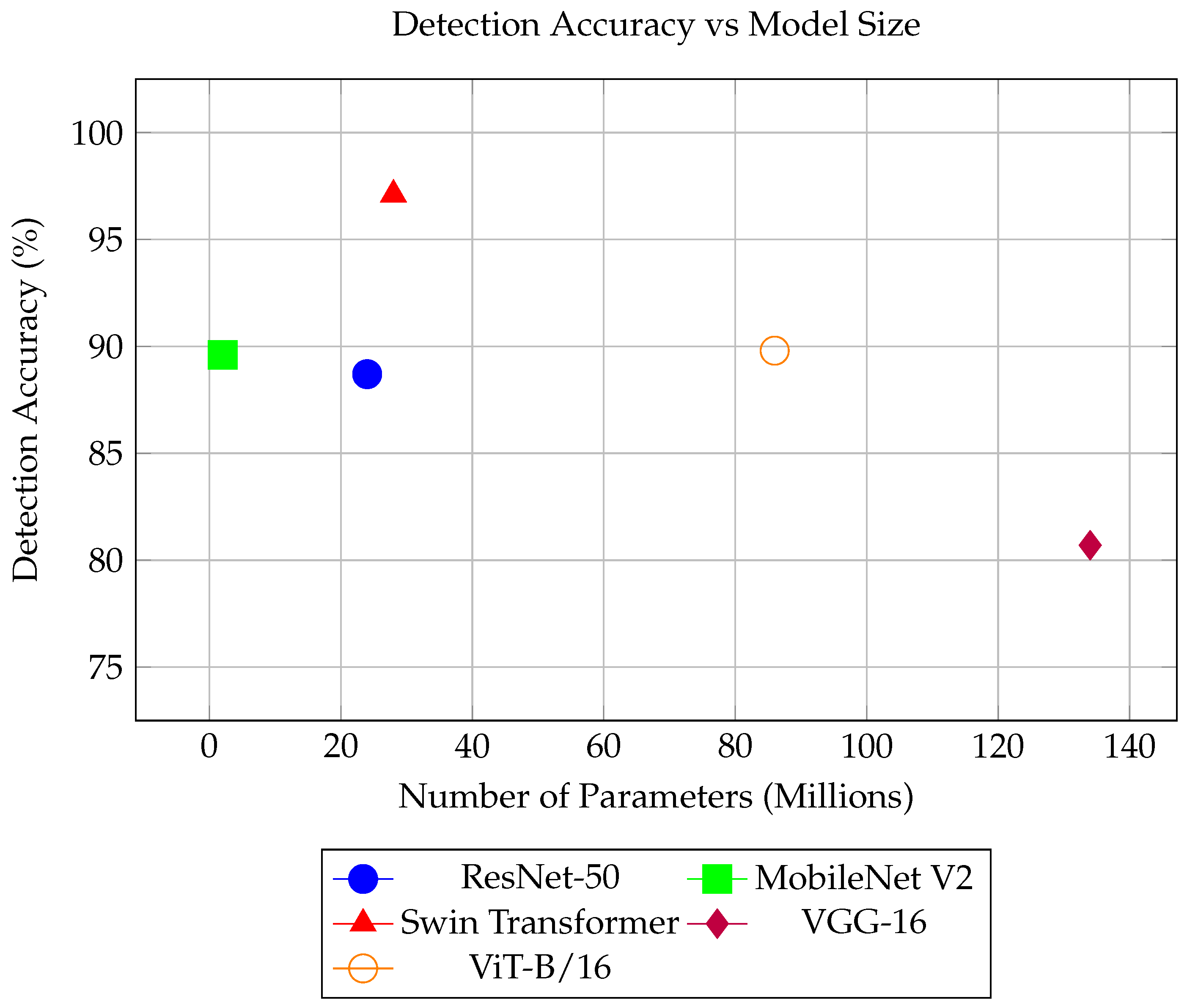

| Model | Accuracy | ROC-AUC |
|---|---|---|
| ResNet-50 | 88.7% | 0.951 |
| MobileNet V2 | 89.6% | 0.959 |
| Swin Transformer | 97.1% | 0.993 |
| VGG-16 | 80.7% | 0.909 |
| ViT-B/16 | 89.8% | 0.969 |
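For readers unfamiliar with the two metrics reported in the table above, the sketch below computes accuracy and ROC-AUC from scratch on a toy example. The labels and scores are made up for illustration and are unrelated to the paper's data; ROC-AUC is computed here through its pairwise-ranking interpretation (the fraction of positive/negative pairs in which the positive case receives the higher score), which is one standard way to obtain it and not necessarily the procedure used by the authors.

```python
from typing import Sequence


def accuracy(y_true: Sequence[int], y_pred: Sequence[int]) -> float:
    """Fraction of predictions that match the ground-truth labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def roc_auc(y_true: Sequence[int], scores: Sequence[float]) -> float:
    """ROC-AUC as the probability that a positive outranks a negative."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    if not pos or not neg:
        raise ValueError("ROC-AUC needs at least one positive and one negative")
    # Ties between a positive and a negative score count as half a win.
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0
               for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Toy data (hypothetical): 1 = strep throat, 0 = healthy.
y_true = [1, 1, 1, 0, 0, 0]
scores = [0.9, 0.8, 0.4, 0.6, 0.3, 0.1]
y_pred = [1 if s >= 0.5 else 0 for s in scores]  # assumed 0.5 threshold

print(f"accuracy = {accuracy(y_true, y_pred):.3f}")  # accuracy = 0.667
print(f"ROC-AUC  = {roc_auc(y_true, scores):.3f}")   # ROC-AUC  = 0.889
```

Accuracy depends on the chosen decision threshold, whereas ROC-AUC summarizes ranking quality across all thresholds, which is why the two columns can order models differently.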

| Model | Inference Time (ms) | Number of Parameters |
|---|---|---|
| ResNet-50 | 6.7 | 24 M |
| MobileNet V2 | 5.6 | 2 M |
| Swin Transformer | 13.8 | 28 M |
| VGG-16 | 1.5 | 134 M |
| ViT-B/16 | 5.3 | 86 M |
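The trade-off among accuracy, inference time, and model size can be made concrete with a small selection routine over the values reported in the two tables above. The sketch below picks the most accurate model that fits a given parameter and latency budget; the budgets used in the examples and the names `ModelStats` and `pick_model` are assumptions for illustration, not constraints stated in the paper.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class ModelStats:
    name: str
    accuracy_pct: float      # detection accuracy (%), from the accuracy table
    inference_ms: float      # inference time (ms), from the timing table
    params_millions: float   # number of parameters (millions)


MODELS = [
    ModelStats("ResNet-50", 88.7, 6.7, 24),
    ModelStats("MobileNet V2", 89.6, 5.6, 2),
    ModelStats("Swin Transformer", 97.1, 13.8, 28),
    ModelStats("VGG-16", 80.7, 1.5, 134),
    ModelStats("ViT-B/16", 89.8, 5.3, 86),
]


def pick_model(models: List[ModelStats], max_params_millions: float,
               max_inference_ms: float) -> Optional[ModelStats]:
    """Return the most accurate model within both budgets, or None."""
    feasible = [m for m in models
                if m.params_millions <= max_params_millions
                and m.inference_ms <= max_inference_ms]
    if not feasible:
        return None
    return max(feasible, key=lambda m: m.accuracy_pct)


# Hypothetical budgets: a mid-range device and a very constrained one.
print(pick_model(MODELS, max_params_millions=30, max_inference_ms=15).name)
print(pick_model(MODELS, max_params_millions=10, max_inference_ms=10).name)
```

With these assumed budgets, the first call selects Swin Transformer and the second selects MobileNet V2, reflecting how a tighter size limit shifts the choice toward the lighter network at a cost in accuracy.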

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Asokan, P.; Truong, T.T.; Pham, D.S.; Chan, K.Y.; Soon, S.; Maiorana, A.; Hollingsworth, C. Towards AI-Based Strep Throat Detection and Interpretation for Remote Australian Indigenous Communities. Sensors 2025, 25, 5636. https://doi.org/10.3390/s25185636
