Multi-Temporal Remote Sensing Image Matching Based on Multi-Perception and Enhanced Feature Descriptors

Abstract

1. Introduction

- We designed a feature extraction network based on multi-perception, which increases the number of effective feature points extracted in multi-temporal remote sensing image matching tasks and improves the positional accuracy of feature points to some extent.

- We introduced a feature descriptor enhancement module for multi-temporal remote sensing image matching tasks. This module optimizes feature descriptors through self-enhancement and cross-enhancement phases to improve their matching performance.

- To demonstrate the superiority of our method, we conducted comparative experiments against existing image matching methods (SIFT, SOSNet, D2-Net, SuperPoint, and LoFTR) on two multi-temporal remote sensing datasets (LEVIR-CD and CLCD), validating the accuracy and robustness of our approach.

2. Related Works

3. Method

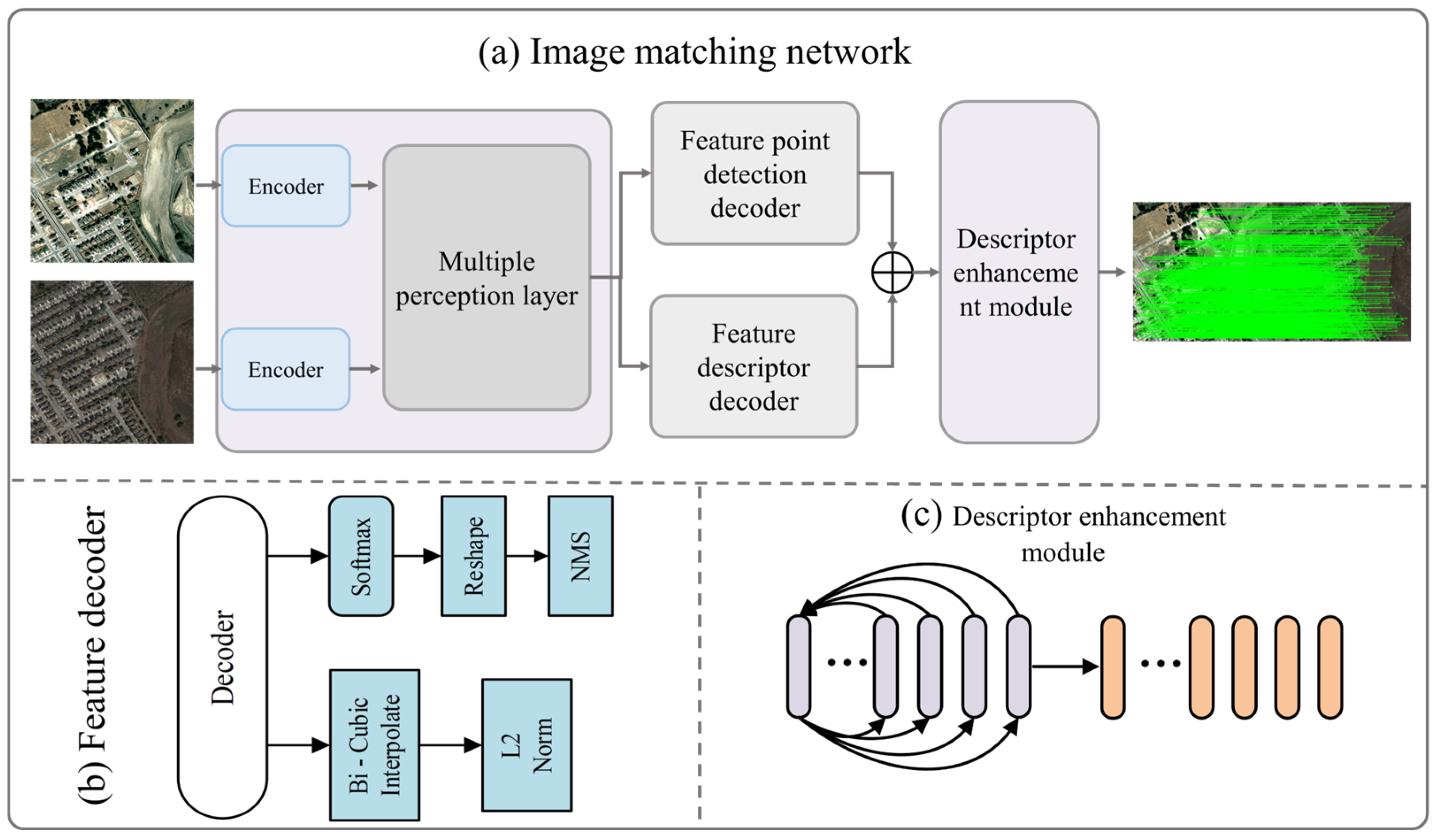

3.1. Overview of the Proposed Method

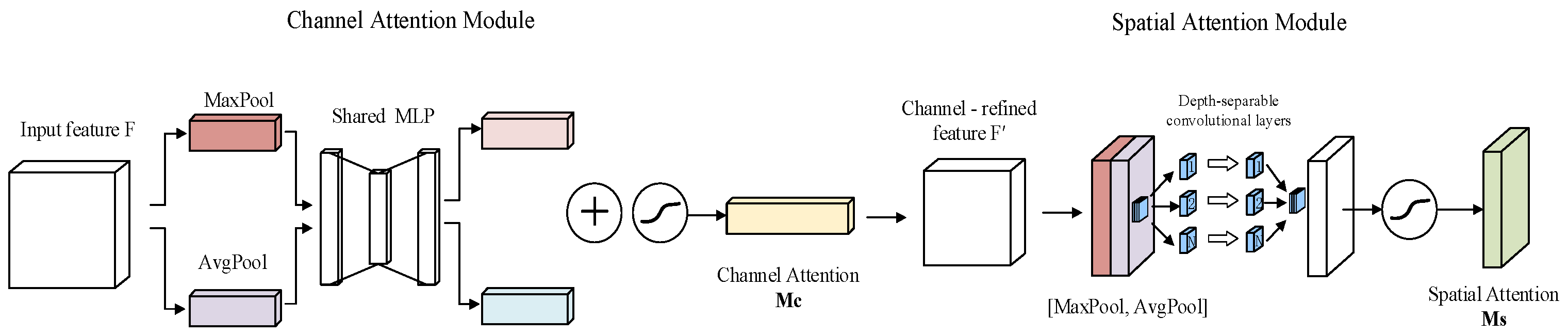

3.2. Feature Extraction Network

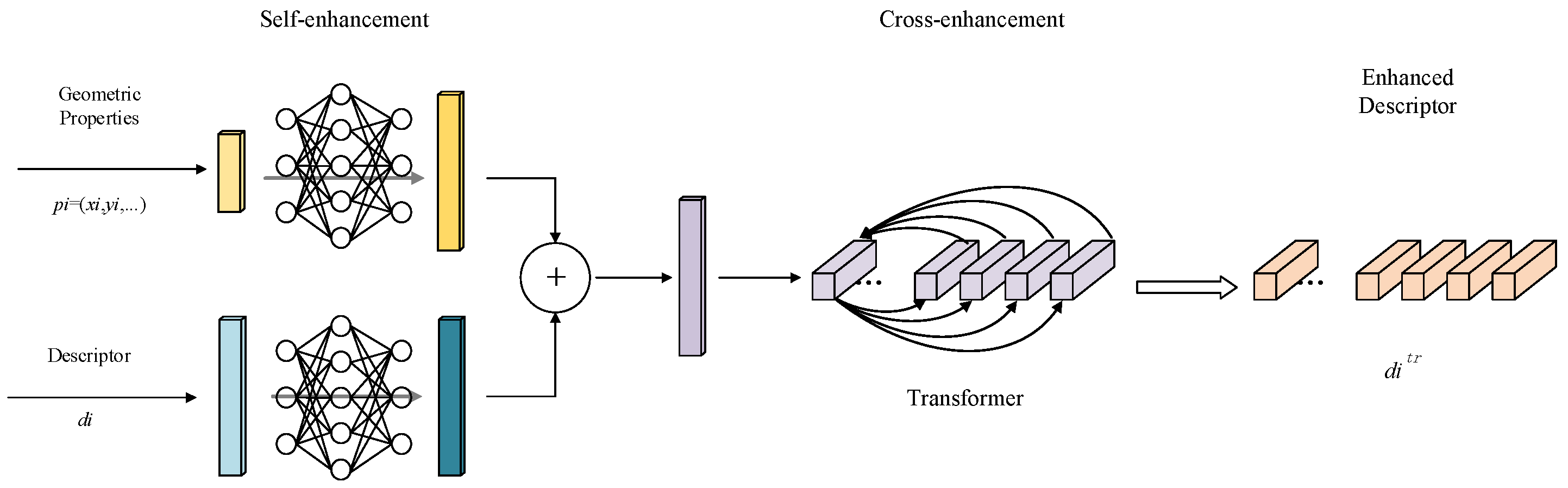

3.3. Descriptor Enhancement Module

- (1) Self-Enhancement Phase: For each feature point detected in the image, a multilayer perceptron (MLP) projects its original descriptor into a new space to obtain a preliminary enhanced descriptor. An MLP is a universal function approximator, as established by Cybenko's theorem [29], so we used an MLP, denoted $\mathrm{MLP}(\cdot)$, to approximate the projection function. It consists of two fully connected layers with a ReLU activation function between them, and layer normalization and dropout were applied as regularization to prevent overfitting. The transformed descriptor $\mathbf{d}_i'$ of key point $i$ was defined as the nonlinear projection of its extracted descriptor $\mathbf{d}_i$:

$$\mathbf{d}_i' = \mathrm{MLP}(\mathbf{d}_i)$$

- (2) Cross-Enhancement Phase: The self-enhancement phase enhances each descriptor independently and therefore ignores potential correlations among different feature points. In particular, it does not exploit the spatial relationships between these feature points, even though spatial contextual cues can significantly improve feature matching. As a result, the descriptors obtained from the self-enhancement phase alone perform poorly under changes in viewing angle and over complex terrain. To address this issue, we further processed these descriptors in a cross-enhancement phase, which takes the self-enhanced descriptors $\mathbf{d}_1', \ldots, \mathbf{d}_N'$ of the $N$ key points in an image as input and uses the attention mechanism of a Transformer to capture the spatial context among the key points. Denoting the Transformer as “Trans”, the projection is:

$$[\mathbf{d}_1'', \ldots, \mathbf{d}_N''] = \mathrm{Trans}\left([\mathbf{d}_1', \ldots, \mathbf{d}_N']\right)$$

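- (3) Loss Function: We treated descriptor matching as a nearest-neighbor retrieval task and used Average Precision (AP) to train the descriptors. Given the transformed local feature descriptors $\mathbf{d}_i''$ of the $N$ key points ($i = 1, \ldots, N$), as output by the cross-enhancement phase, our objective was to maximize the AP [34] of all descriptors. The training goal was therefore to minimize the following cost function:

$$\mathcal{L}_{\mathrm{AP}} = 1 - \frac{1}{N}\sum_{i=1}^{N} \mathrm{AP}(\mathbf{d}_i'')$$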
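The three steps above can be illustrated with a small NumPy sketch. It is not the authors' implementation, and several choices are assumptions made for illustration only: the helper names (`layer_norm`, `self_enhance`, `cross_enhance`, `ap_loss`) are hypothetical, the hidden width is arbitrary, layer normalization is placed after the second fully connected layer, and a single-head self-attention layer with a residual connection stands in for the full Transformer (which would add multi-head attention and a feed-forward block). The AP is computed exactly by ranking Euclidean distances, assuming each descriptor in one image has exactly one true match (the same row index) in the other. This exact AP is not differentiable, so actual training would need a smooth approximation of it.

```python
import numpy as np

rng = np.random.default_rng(0)


def layer_norm(x, eps=1e-5):
    # Normalize each descriptor (row) to zero mean and unit variance.
    mu = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mu) / np.sqrt(var + eps)


def self_enhance(d, W1, b1, W2, b2, drop_p=0.1, training=False):
    # Hypothetical self-enhancement: FC -> ReLU -> (dropout) -> FC -> layer norm,
    # applied to every descriptor independently (row-wise).
    h = np.maximum(0.0, d @ W1 + b1)
    if training and drop_p > 0:
        keep = rng.random(h.shape) >= drop_p  # inverted dropout mask
        h = h * keep / (1.0 - drop_p)
    return layer_norm(h @ W2 + b2)


def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def cross_enhance(d, Wq, Wk, Wv):
    # Hypothetical cross-enhancement: single-head scaled dot-product self-attention
    # over all N key points of one image, with a residual connection and layer norm.
    q, k, v = d @ Wq, d @ Wk, d @ Wv
    attn = softmax(q @ k.T / np.sqrt(q.shape[-1]), axis=-1)  # (N, N) attention weights
    return layer_norm(d + attn @ v)


def ap_loss(desc_a, desc_b):
    # 1 - mean AP, where row i of desc_a should retrieve row i of desc_b.
    # With one relevant item per query, AP = 1 / (rank of the true match).
    dists = np.linalg.norm(desc_a[:, None, :] - desc_b[None, :, :], axis=-1)  # (N, N)
    true_d = np.diag(dists)
    rank = 1 + (dists < true_d[:, None]).sum(axis=1)  # 1-based rank; ties favor the true match
    return 1.0 - float(np.mean(1.0 / rank))


# Usage with random descriptors: N key points, D-dim descriptors, hidden width Hd.
N, D, Hd = 5, 8, 16
d = rng.standard_normal((N, D))
W1, b1 = 0.1 * rng.standard_normal((D, Hd)), np.zeros(Hd)
W2, b2 = 0.1 * rng.standard_normal((Hd, D)), np.zeros(D)
Wq, Wk, Wv = (0.1 * rng.standard_normal((D, D)) for _ in range(3))

d1 = self_enhance(d, W1, b1, W2, b2)  # d_i'  (inference mode, no dropout)
d2 = cross_enhance(d1, Wq, Wk, Wv)    # d_i''
print(d2.shape)         # (5, 8)
print(ap_loss(d2, d2))  # 0.0: every descriptor retrieves itself first
```

In this sketch `self_enhance` transforms each row on its own, whereas the `(N, N)` attention matrix in `cross_enhance` mixes information across key points, which mirrors the distinction the text draws between the two phases.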

4. Experimental Results and Discussion

4.1. Experimental Data and Parameter Settings

4.2. Performance Metrics

- (1) NCM: The NCM is the total number of correctly matched points. One image in the pair is designated as the reference image and the other as the target image. Let $(x_i, y_i)$ denote the position of the $i$-th matched feature point on the target image, as returned by the matching algorithm, and let $(x_i', y_i')$ denote the corresponding ground-truth position on the reference image. Given a matching accuracy threshold $\varepsilon$ (in this experiment, $\varepsilon = 3$ pixels, so points within a 3-pixel error margin were considered correctly matched), a match is counted as correct if

$$\left\| (x_i', y_i') - H(x_i, y_i) \right\|_2 \le \varepsilon$$

where $H$ is the mapping matrix between the reference image and the target image, and $H(x_i, y_i)$ denotes the point $(x_i, y_i)$ mapped through $H$.

- (2) SR: The SR is the ratio of the number of correctly matched points ($N_c$, i.e., the NCM) to the total number of matched points $N_m$ returned by the algorithm:

$$\mathrm{SR} = \frac{N_c}{N_m} \times 100\%$$

- (3) RMSE: The RMSE is a commonly used metric for evaluating the performance of matching algorithms. It measures the deviation between the predicted positions of the matched points and their ground-truth positions; specifically, it is the square root of the mean squared coordinate error over the correctly matched points:

$$\mathrm{RMSE} = \sqrt{\frac{1}{N_c}\sum_{i=1}^{N_c} \left\| (x_i', y_i') - H(x_i, y_i) \right\|_2^2}$$

where $N_c$ is the NCM, $(x_i, y_i)$ is the $i$-th correctly matched point on the target image, $(x_i', y_i')$ is its ground-truth position on the reference image, and $H$ is the mapping matrix between the reference image and the target image.
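The three metrics can be computed together as sketched below. This is an illustrative NumPy version under assumptions not stated in the text: the mapping matrix is treated as a 3×3 homography that maps target-image coordinates to reference-image coordinates, `target_pts[i]` and `ref_pts[i]` form the $i$-th putative match, and the RMSE is averaged over the correct matches only, following the text's use of the NCM as the normalizer. The function names `apply_homography` and `matching_metrics` are hypothetical.

```python
import numpy as np


def apply_homography(H, pts):
    # Map (N, 2) points through a 3x3 matrix H using homogeneous coordinates.
    homo = np.hstack([pts, np.ones((len(pts), 1))])  # (N, 3)
    mapped = homo @ H.T
    return mapped[:, :2] / mapped[:, 2:3]


def matching_metrics(H, target_pts, ref_pts, threshold=3.0):
    # Return (NCM, SR in %, RMSE in pixels) for a set of putative matches.
    # Assumption: H maps target-image coordinates to reference-image coordinates.
    errors = np.linalg.norm(apply_homography(H, target_pts) - ref_pts, axis=1)
    correct = errors <= threshold                  # 3-pixel criterion by default
    ncm = int(correct.sum())
    sr = 100.0 * ncm / len(target_pts) if len(target_pts) else 0.0
    rmse = float(np.sqrt(np.mean(errors[correct] ** 2))) if ncm else float("nan")
    return ncm, sr, rmse


# Usage: a pure translation by (5, -2) and four putative matches.
H = np.array([[1.0, 0.0, 5.0],
              [0.0, 1.0, -2.0],
              [0.0, 0.0, 1.0]])
target = np.array([[10.0, 10.0], [20.0, 30.0], [40.0, 5.0], [0.0, 0.0]])
ref = target + [5.0, -2.0]   # exact correspondences
ref[1] += [1.0, 1.0]         # about 1.41 px error, still counted as correct
ref[3] += [10.0, 0.0]        # 10 px error, counted as a mismatch
print(matching_metrics(H, target, ref))
# NCM = 3, SR = 75.0, RMSE = sqrt(2/3), roughly 0.816
```

Because only the three matches within the threshold enter the RMSE, the 10-pixel outlier lowers the SR but does not inflate the RMSE.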

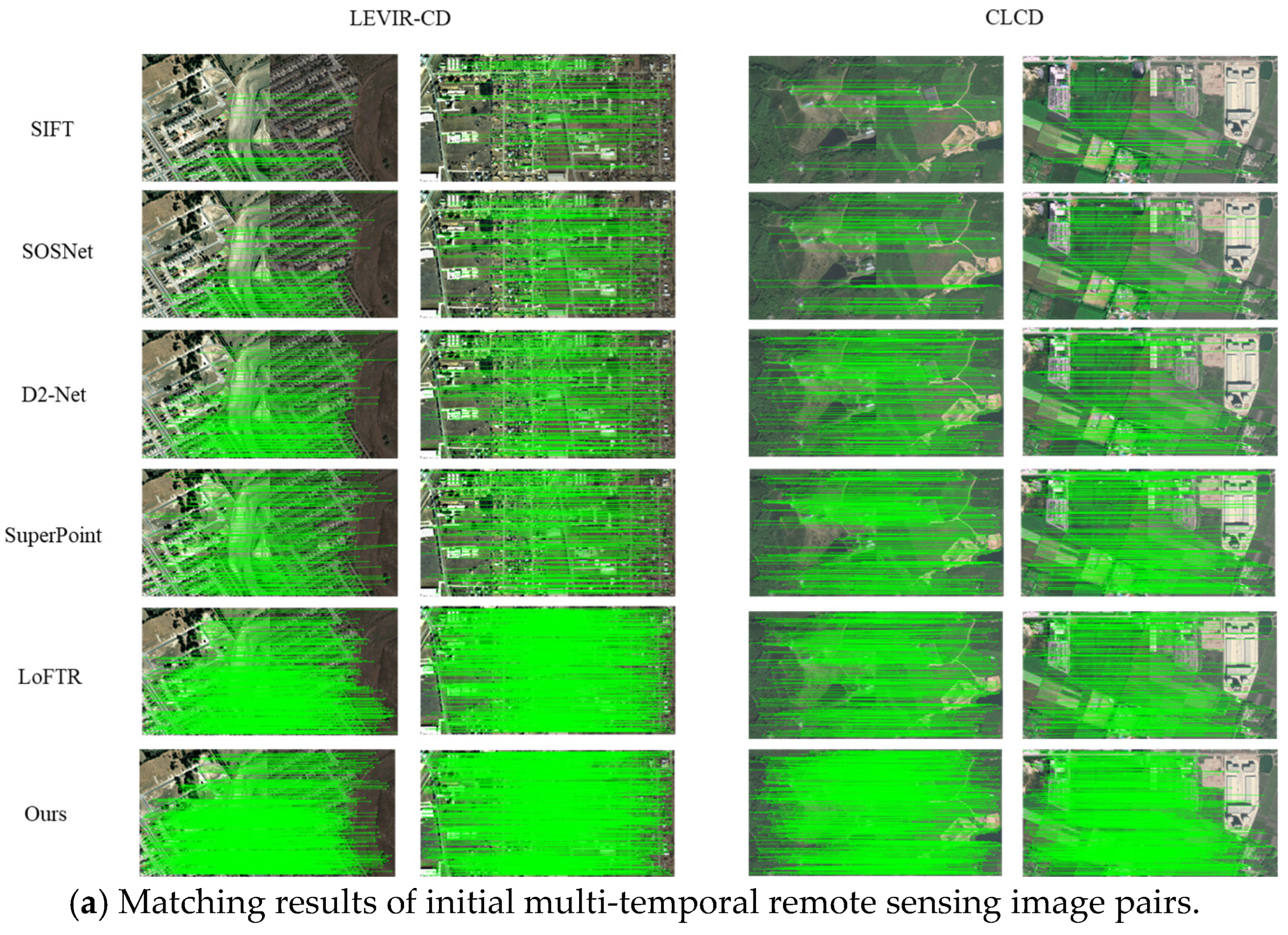

4.3. Matching Performance Evaluation

- (1)

- The original dual-temporal remote sensing image pairs;

- (2)

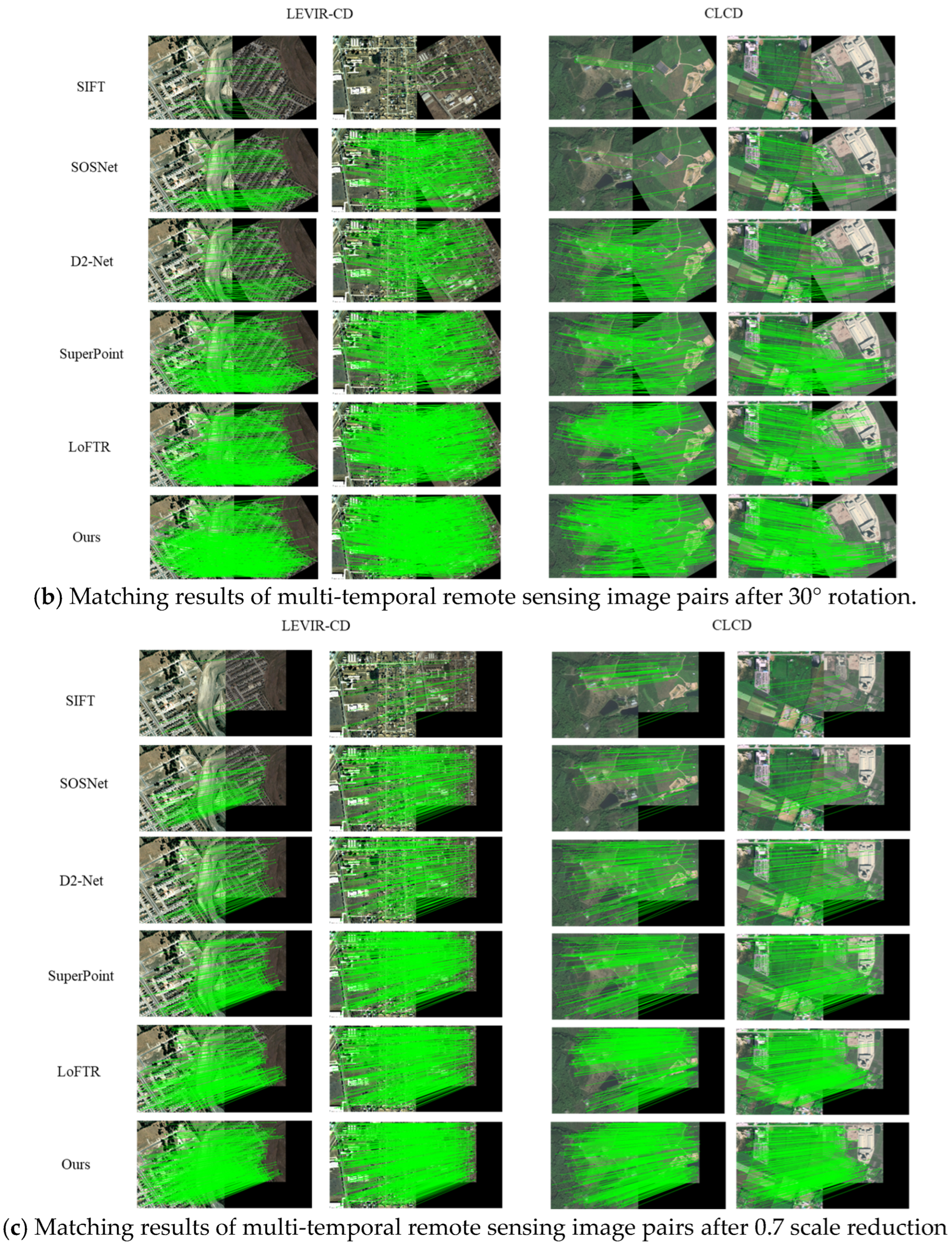

- Dual-temporal remote sensing image pairs subjected to angular transformations;

- (3)

- Dual-temporal remote sensing image pairs subjected to scale transformations.

4.4. Ablation Study

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Bovolo, F.; Bruzzone, L.; King, R.L. Introduction to the special issue on analysis of multitemporal remote sensing data. IEEE Trans. Geosci. Remote Sens. 2013, 51, 1867–1869.

- Meng, X.; Liu, Q.; Shao, F.; Li, S. Spatio–temporal–spectral collaborative learning for spatio–temporal fusion with land cover changes. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–16.

- Fu, Z.; Zhang, J.; Tang, B.H. Multi-Temporal Snow-Covered Remote Sensing Image Matching via Image Transformation and Multi-Level Feature Extraction. Optics 2024, 5, 392–405.

- Mohammadi, N.; Sedaghat, A.; Jodeiri Rad, M. Rotation-invariant self-similarity descriptor for multi-temporal remote sensing image registration. Photogramm. Rec. 2022, 37, 6–34.

- Habeeb, H.N.; Mustafa, Y.T. Deep Learning-Based Prediction of Forest Cover Change in Duhok, Iraq: Past and Future. Forestist 2025, 75, 1–13.

- Feng, S.; Fan, Y.; Tang, Y.; Cheng, H.; Zhao, C.; Zhu, Y.; Cheng, C. A change detection method based on multi-scale adaptive convolution kernel network and multimodal conditional random field for multi-temporal multispectral images. Remote Sens. 2022, 14, 5368.

- Ma, J.; Jiang, X.; Fan, A.; Jiang, J.; Yan, J. Image matching from handcrafted to deep features: A survey. Int. J. Comput. Vis. 2021, 129, 23–79.

- Ma, J.; Zhou, H.; Zhao, J.; Gao, Y.; Jiang, J.; Tian, J. Robust feature matching for remote sensing image registration via locally linear transforming. IEEE Trans. Geosci. Remote Sens. 2015, 53, 6469–6481.

- Chen, L.; Rottensteiner, F.; Heipke, C. Feature detection and description for image matching: From hand-crafted design to deep learning. Geo-Spat. Inf. Sci. 2021, 24, 58–74.

- Liu, Y.; Xu, X.; Li, F. Image feature matching based on deep learning. In Proceedings of the 2018 IEEE 4th International Conference on Computer and Communications (ICCC), Chengdu, China, 7–10 December 2018; IEEE: New York, NY, USA, 2018; pp. 1752–1756.

- DeTone, D.; Malisiewicz, T.; Rabinovich, A. SuperPoint: Self-supervised interest point detection and description. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Salt Lake City, UT, USA, 18–22 June 2018; pp. 224–236.

- Wang, X.; Liu, Z.; Hu, Y.; Xi, W.; Yu, W.; Zou, D. FeatureBooster: Boosting feature descriptors with a lightweight neural network. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Vancouver, BC, Canada, 17–24 June 2023; pp. 7630–7639.

- Zeng, L.; Du, Y.; Lin, H.; Wang, J.; Yin, J.; Yang, J. A novel region-based image registration method for multisource remote sensing images via CNN. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 14, 1821–1831.

- Zeng, Q.; Hui, B.; Liu, Z.; Xu, Z.; He, M. A Method Combining Discrete Cosine Transform with Attention for Multi-Temporal Remote Sensing Image Matching. Sensors 2025, 25, 1345.

- Chen, S.; Zhong, S.; Xue, B.; Li, X.; Zhao, L.; Chang, C.-I. Iterative scale-invariant feature transform for remote sensing image registration. IEEE Trans. Geosci. Remote Sens. 2020, 59, 3244–3265.

- Okorie, A.; Makrogiannis, S. Region-based image registration for remote sensing imagery. Comput. Vis. Image Underst. 2019, 189, 102825.

- Yang, Z.; Dan, T.; Yang, Y. Multi-temporal remote sensing image registration using deep convolutional features. IEEE Access 2018, 6, 38544–38555.

- Lowe, D.G. Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vis. 2004, 60, 91–110.

- Rublee, E.; Rabaud, V.; Konolige, K.; Bradski, G. ORB: An efficient alternative to SIFT or SURF. In Proceedings of the 2011 International Conference on Computer Vision, Barcelona, Spain, 6–13 November 2011; IEEE: New York, NY, USA, 2011; pp. 2564–2571.

- Yang, W.; Yao, Y.; Zhang, Y.; Wan, Y. Weak texture remote sensing image matching based on hybrid domain features and adaptive description method. Photogramm. Rec. 2023, 38, 537–562.

- Gong, X.; Yao, F.; Ma, J.; Jiang, J.; Lu, T.; Zhang, Y.; Zhou, H. Feature matching for remote-sensing image registration via neighborhood topological and affine consistency. Remote Sens. 2022, 14, 2606.

- Feng, R.; Shen, H.; Bai, J.; Li, X. Advances and opportunities in remote sensing image geometric registration: A systematic review of state-of-the-art approaches and future research directions. IEEE Geosci. Remote Sens. Mag. 2021, 9, 120–142.

- Sun, J.; Shen, Z.; Wang, Y.; Bao, H.; Zhou, X. LoFTR: Detector-free local feature matching with transformers. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 8922–8931.

- Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. Convolutional block attention module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.

- Chollet, F. Xception: Deep learning with depthwise separable convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1251–1258.

- Khan, Z.Y.; Niu, Z. CNN with depthwise separable convolutions and combined kernels for rating prediction. Expert Syst. Appl. 2021, 170, 114528.

- Zhao, M.; Yang, R.; Hu, M.; Liu, B. Deep learning-based technique for remote sensing image enhancement using multiscale feature fusion. Sensors 2024, 24, 673.

- Li, W.; Chen, H.; Liu, Q.; Liu, H.; Wang, Y.; Gui, G. Attention mechanism and depthwise separable convolution aided 3DCNN for hyperspectral remote sensing image classification. Remote Sens. 2022, 14, 2215.

- Dusmanu, M.; Miksik, O.; Schönberger, J.L.; Pollefeys, M. Cross-descriptor visual localization and mapping. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 6058–6067.

- Zhai, S.; Talbott, W.; Srivastava, N.; Huang, C.; Goh, H.; Zhang, R.; Susskind, J. An attention free transformer. arXiv 2021, arXiv:2105.14103.

- Wang, R.; Ma, L.; He, G.; Johnson, B.A.; Yan, Z.; Chang, M.; Liang, Y. Transformers for remote sensing: A systematic review and analysis. Sensors 2024, 24, 3495.

- Chen, H.; Qi, Z.; Shi, Z. Remote sensing image change detection with transformers. IEEE Trans. Geosci. Remote Sens. 2021, 60, 5607514.

- Zhang, J.; Liu, Y.; Liu, A.; Xie, Q.; Ward, R.; Wang, Z.J.; Chen, X. Multimodal image fusion via self-supervised transformer. IEEE Sens. J. 2023, 23, 9796–9807.

- Boyd, K.; Eng, K.H.; Page, C.D. Area under the precision-recall curve: Point estimates and confidence intervals. In Proceedings of the Joint European Conference on Machine Learning and Knowledge Discovery in Databases, Prague, Czech Republic, 23–27 September 2013; Springer: Berlin/Heidelberg, Germany, 2013; pp. 451–466.

- Cakir, F.; He, K.; Xia, X.; Kulis, B.; Sclaroff, S. Deep metric learning to rank. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 1861–1870.

- Chen, H.; Shi, Z. A spatial-temporal attention-based method and a new dataset for remote sensing image change detection. Remote Sens. 2020, 12, 1662.

- Liu, M.; Chai, Z.; Deng, H.; Liu, R. A CNN-transformer network with multiscale context aggregation for fine-grained cropland change detection. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2022, 15, 4297–4306.

- Tian, Y.; Yu, X.; Fan, B.; Wu, F.; Heijnen, H.; Balntas, V. SOSNet: Second order similarity regularization for local descriptor learning. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 11016–11025.

- Dusmanu, M.; Rocco, I.; Pajdla, T.; Pollefeys, M.; Sivic, J.; Torii, A.; Sattler, T. D2-Net: A trainable CNN for joint description and detection of local features. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 8092–8101.

| Group | Method | LEVIR-CD NCM | LEVIR-CD SR (%) | LEVIR-CD RMSE (px) | LEVIR-CD RT | CLCD NCM | CLCD SR (%) | CLCD RMSE (px) | CLCD RT |
|---|---|---|---|---|---|---|---|---|---|
| (a) | SIFT | 82 | 86.74 | 2.34 | 2.53 | 76 | 86.53 | 2.52 | 2.61 |
| | SOSNet | 121 | 89.83 | 2.08 | 0.95 | 108 | 88.25 | 2.37 | 1.25 |
| | D2-Net | 160 | 91.42 | 2.17 | 1.47 | 177 | 93.36 | 2.06 | 1.37 |
| | SuperPoint | 173 | 92.46 | 1.88 | 0.76 | 156 | 90.14 | 2.25 | 0.92 |
| | LoFTR | 256 | 97.82 | 1.58 | 2.32 | 212 | 96.24 | 1.82 | 2.15 |
| | Ours | 263 | 99.25 | 1.62 | 1.24 | 246 | 98.43 | 1.71 | 1.30 |
| (b) | SIFT | 46 | 48.73 | 2.62 | 2.44 | 38 | 41.93 | 2.77 | 2.47 |
| | SOSNet | 81 | 55.16 | 2.58 | 1.02 | 66 | 45.00 | 2.61 | 1.18 |
| | D2-Net | 117 | 88.56 | 2.36 | 1.38 | 94 | 83.27 | 2.44 | 1.42 |
| | SuperPoint | 146 | 92.50 | 2.20 | 0.82 | 122 | 87.57 | 2.32 | 0.87 |
| | LoFTR | 187 | 93.38 | 1.98 | 2.17 | 165 | 90.29 | 2.04 | 2.24 |
| | Ours | 232 | 96.74 | 1.76 | 1.15 | 218 | 94.14 | 1.82 | 1.35 |
| (c) | SIFT | 52 | 53.60 | 2.50 | 2.23 | 43 | 47.81 | 2.95 | 2.55 |
| | SOSNet | 68 | 64.71 | 2.46 | 0.89 | 55 | 58.25 | 2.87 | 1.16 |
| | D2-Net | 133 | 91.54 | 2.28 | 1.31 | 120 | 88.05 | 2.39 | 1.33 |
| | SuperPoint | 110 | 86.21 | 2.41 | 0.85 | 102 | 82.34 | 2.65 | 0.78 |
| | LoFTR | 202 | 94.67 | 2.17 | 2.08 | 198 | 92.15 | 2.23 | 2.13 |
| | Ours | 218 | 97.48 | 1.67 | 1.21 | 203 | 95.86 | 1.74 | 1.28 |

| Multi-Perspective Layer | Descriptor Enhancement Module | NCM | SR (%) | RMSE (px) | RT |
|---|---|---|---|---|---|
| × | × | 202 | 91.56 | 2.13 | 0.86 |
| √ | × | 243 | 94.83 | 1.97 | 1.14 |
| × | √ | 217 | 96.71 | 1.72 | 0.98 |
| √ | √ | 256 | 98.24 | 1.65 | 1.27 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhang, J.; Zang, W.; Tian, X. Multi-Temporal Remote Sensing Image Matching Based on Multi-Perception and Enhanced Feature Descriptors. Sensors 2025, 25, 5581. https://doi.org/10.3390/s25175581
