RST-Net: A Semantic Segmentation Network for Remote Sensing Images Based on a Dual-Branch Encoder Structure

Abstract

1. Introduction

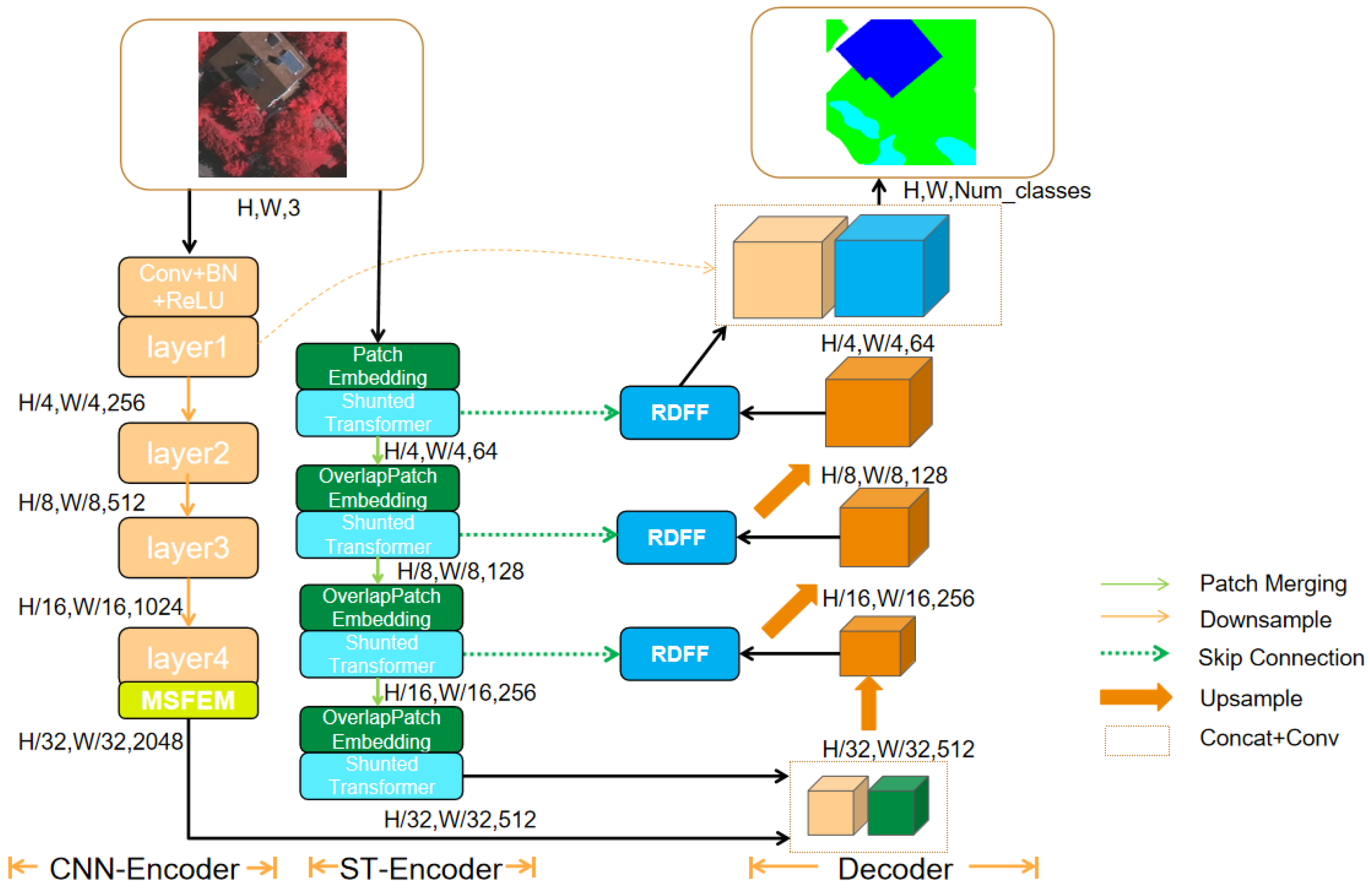

- We propose a novel dual-branch encoder that runs a ResNeXt-50-based CNN and a Transformer in parallel, combining local detail extraction with global context modeling to generate multilevel feature representations.

- We design a Multi-Scale Feature Enhancement Module (MSFEM) that integrates several convolution operations, including atrous and depthwise separable convolutions, to dynamically extract and aggregate multi-scale features, thereby addressing inadequate multi-scale representation.

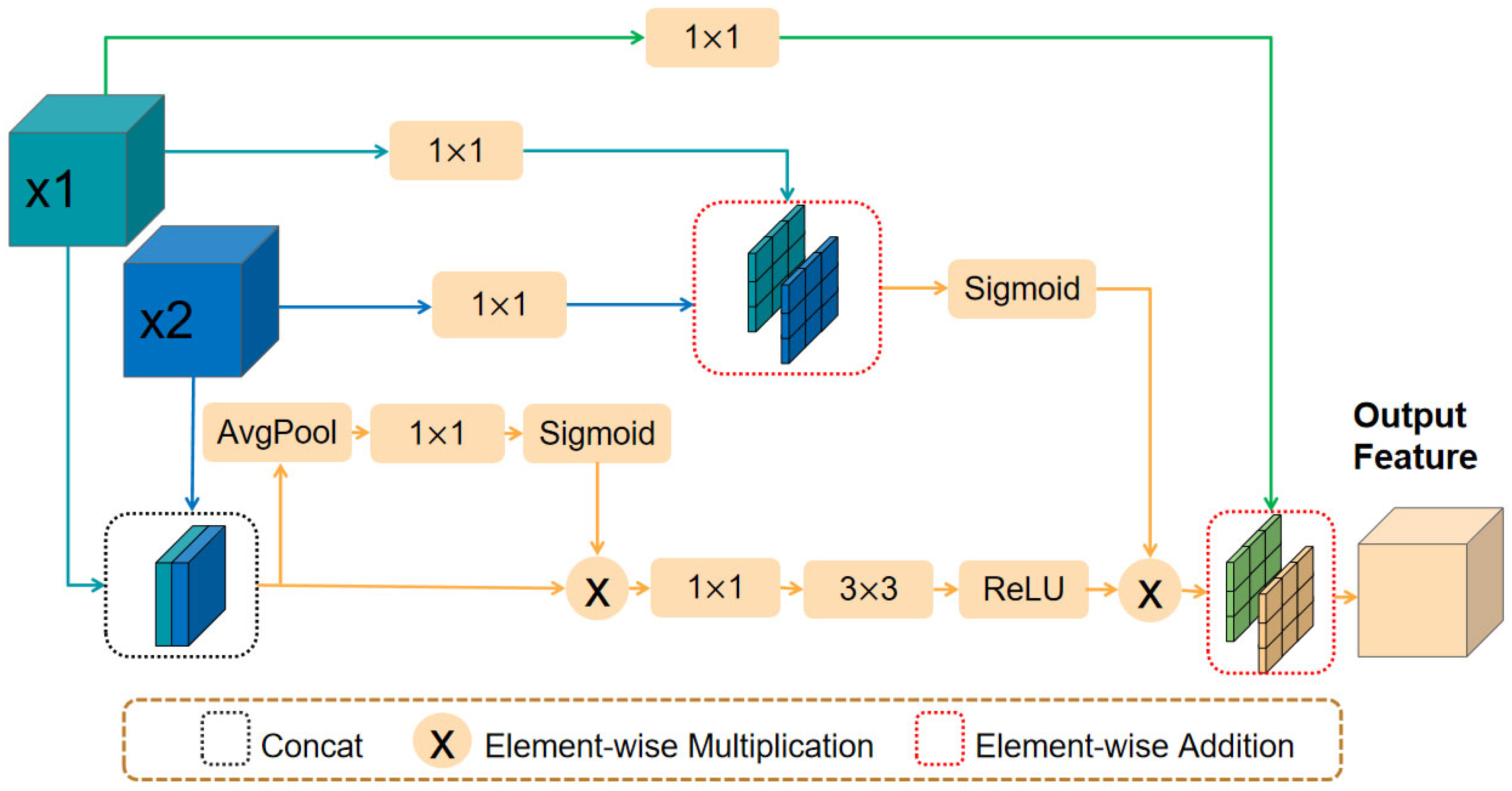

- We introduce the Residual Dynamic Feature Fusion (RDFF) module to alleviate semantic–detail conflicts in conventional skip connections. It integrates residual connections with channel–spatial dual attention, enabling adaptive fusion of deep semantic and shallow detail features.
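The two convolution types named in the MSFEM contribution can be made concrete with a little arithmetic. The sketch below is not the authors' MSFEM; it only computes the span covered by an atrous kernel and the weight savings of a depthwise separable convolution. The 3 × 3 kernel, the dilation rates (1, 6, 12, 18), and the 256-channel width are assumed values chosen for illustration, and the function names are hypothetical.

```python
def effective_kernel_size(kernel_size: int, dilation: int) -> int:
    """Span covered along one axis by a dilated (atrous) kernel."""
    return kernel_size + (kernel_size - 1) * (dilation - 1)


def standard_conv_params(c_in: int, c_out: int, kernel_size: int) -> int:
    """Weight count of a standard 2-D convolution (bias omitted)."""
    return kernel_size * kernel_size * c_in * c_out


def depthwise_separable_params(c_in: int, c_out: int, kernel_size: int) -> int:
    """Weights of a k x k depthwise conv followed by a 1 x 1 pointwise conv."""
    depthwise = kernel_size * kernel_size * c_in  # one k x k filter per input channel
    pointwise = c_in * c_out                      # 1 x 1 conv that mixes channels
    return depthwise + pointwise


if __name__ == "__main__":
    # Assumed, illustrative settings; none of these values come from the paper.
    for rate in (1, 6, 12, 18):
        print(f"dilation {rate:2d}: effective kernel {effective_kernel_size(3, rate)}")
    std = standard_conv_params(256, 256, 3)
    sep = depthwise_separable_params(256, 256, 3)
    print(f"standard: {std}, depthwise separable: {sep}, ratio: {std / sep:.2f}")
```

With these assumed settings, a 3 × 3 kernel at dilation 6 spans 13 pixels without adding weights, and the depthwise separable layer needs 67,840 weights against 589,824 for the standard one. This is why the two operations are commonly combined when multi-scale context has to be gathered at moderate cost.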

2. Related Work

2.1. Remote Sensing Image Semantic Segmentation

2.2. Multi-Scale Feature Extraction

2.3. Skip Connections

3. Proposed Method

3.1. Dual-Branch Encoder Structure

3.2. Multi-Scale Feature Enhancement Module (MSFEM)

3.3. Residual Dynamic Feature Fusion (RDFF)

4. Experiments

4.1. Datasets

4.2. Experimental Settings

4.3. Evaluation Metrics

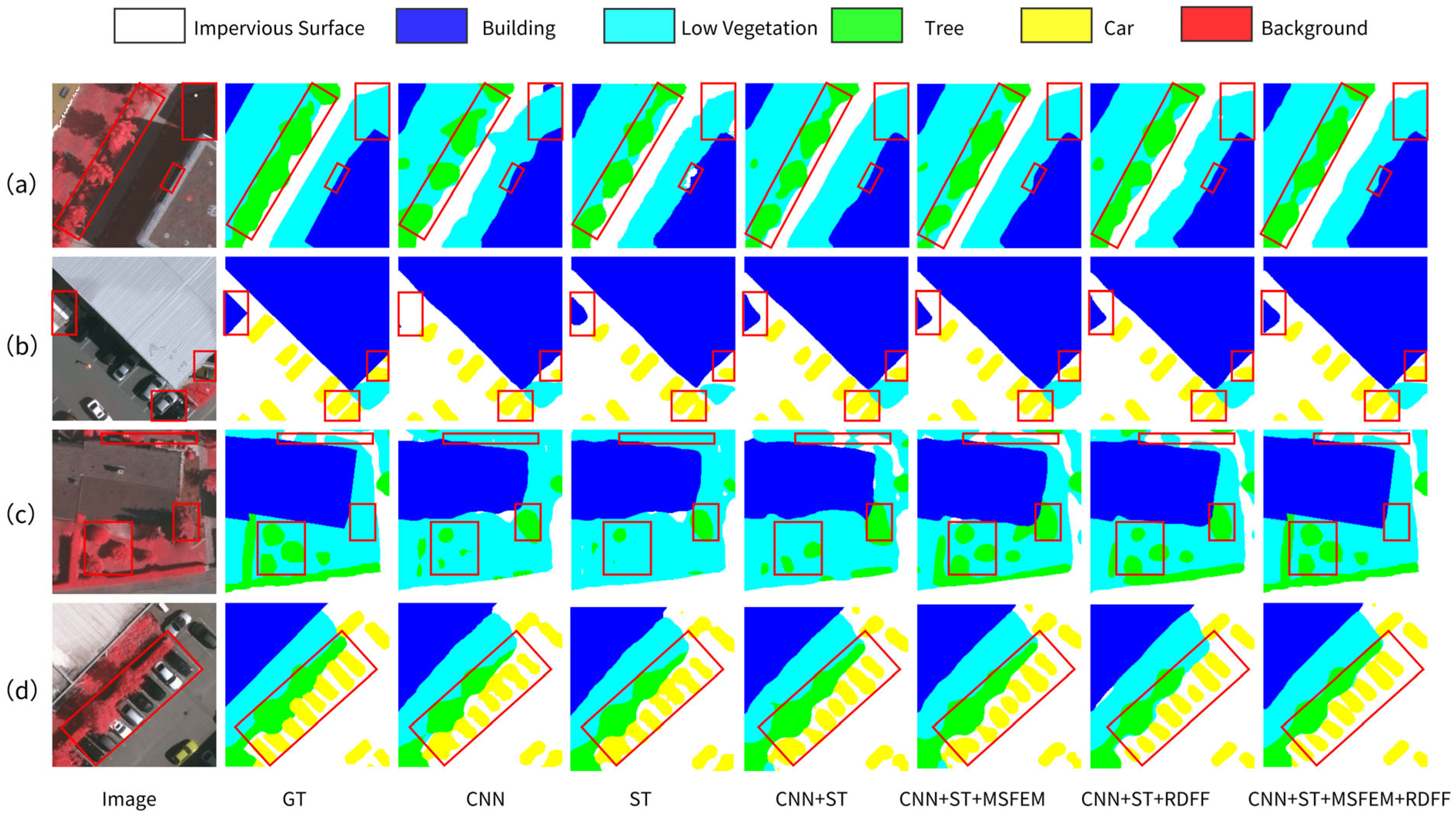

4.4. Ablation Study

4.4.1. Effectiveness of the Dual-Branch Encoder Structure

4.4.2. Effectiveness of the MSFEM

4.4.3. Effectiveness of the RDFF Module

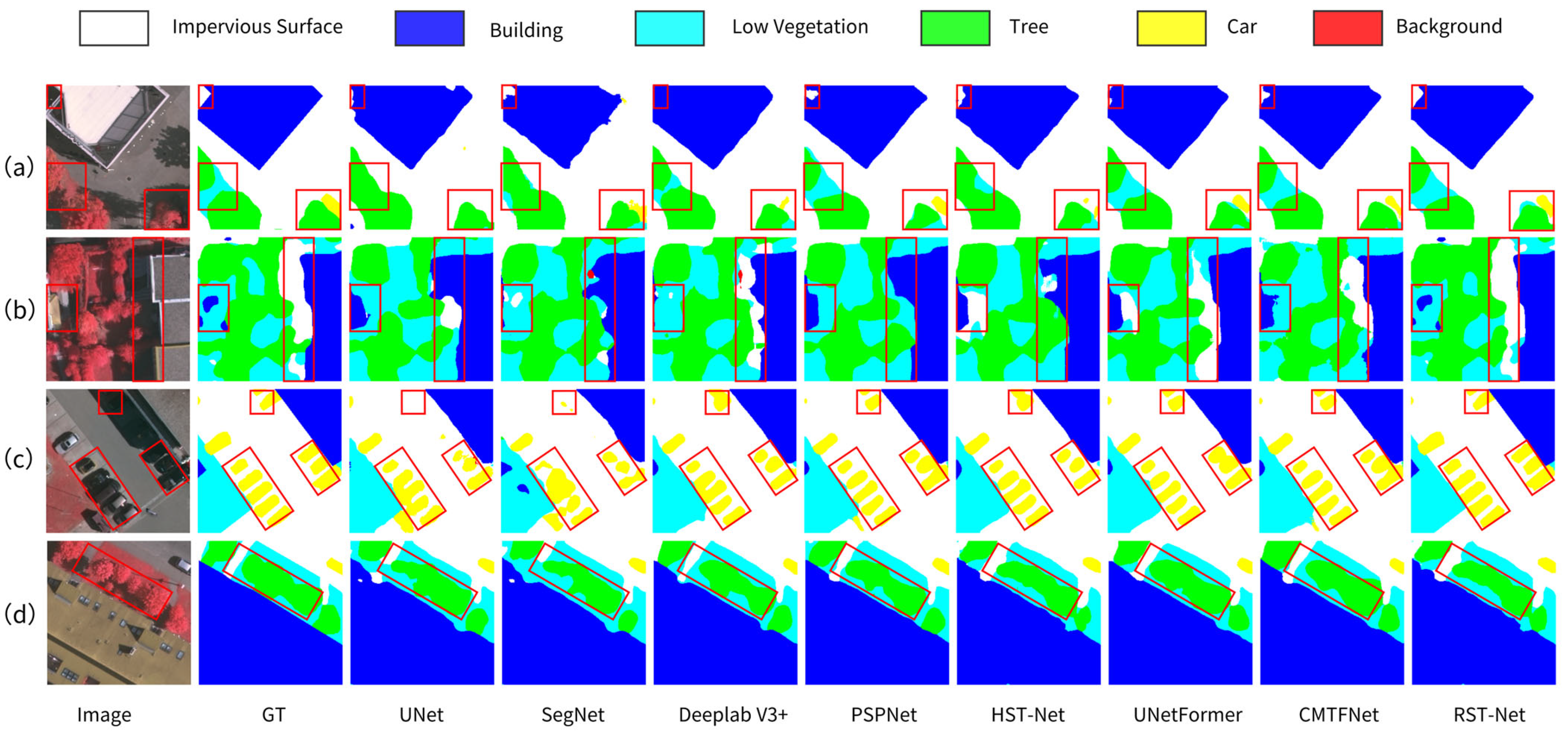

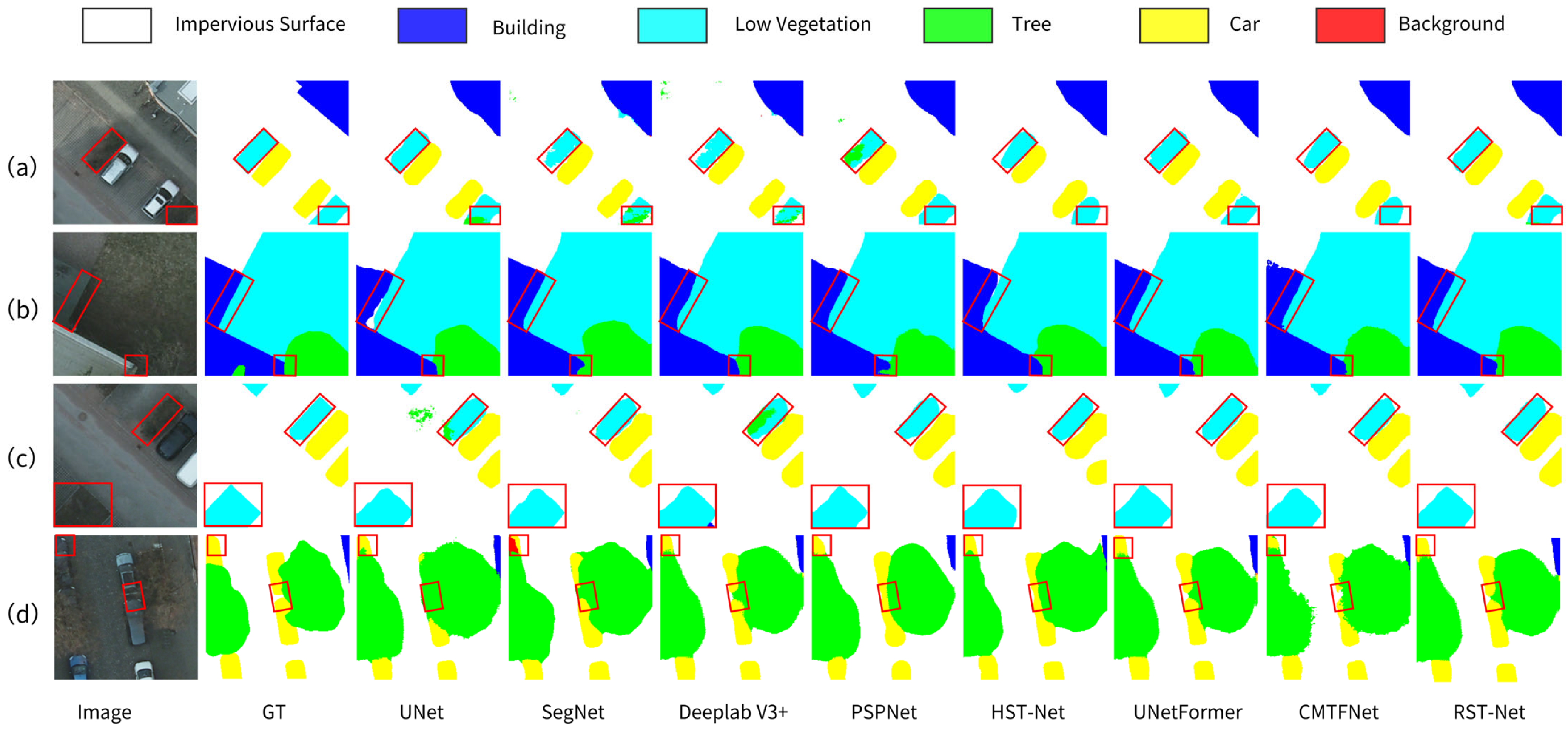

4.5. Comparative Experiments

4.5.1. Results on the Vaihingen Dataset

4.5.2. Results on the Potsdam Dataset

4.5.3. Efficiency Analysis

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

1. Fan, J.; Shi, Z.; Ren, Z.; Zhou, Y.; Ji, M. DDPM-SegFormer: Highly refined feature land use and land cover segmentation with a fused denoising diffusion probabilistic model and transformer. Int. J. Appl. Earth Obs. Geoinf. 2024, 133, 104093.

2. Shi, Z.; Fan, J.; Du, Y.; Zhou, Y.; Zhang, Y. LULC-SegNet: Enhancing Land Use and Land Cover Semantic Segmentation with Denoising Diffusion Feature Fusion. Remote Sens. 2024, 16, 4573.

3. Zhou, N.; Hong, J.; Cui, W.; Wu, S.; Zhang, Z. A Multiscale Attention Segment Network-Based Semantic Segmentation Model for Landslide Remote Sensing Images. Remote Sens. 2024, 16, 1712.

4. Kaushal, A.; Gupta, A.K.; Sehgal, V.K. A semantic segmentation framework with UNet-pyramid for landslide prediction using remote sensing data. Sci. Rep. 2024, 14, 30071.

5. Jia, P.; Chen, C.; Zhang, D.; Sang, Y.; Zhang, L. Semantic segmentation of deep learning remote sensing images based on band combination principle: Application in urban planning and land use. Comput. Commun. 2024, 217, 97–106.

6. Guo, Z.; Shengoku, H.; Wu, G.; Chen, Q.; Yuan, W.; Shi, X.; Shao, X.; Xu, Y.; Shibasaki, R.J.I. Semantic Segmentation for Urban Planning Maps based on U-Net. In Proceedings of IGARSS 2018, the 2018 IEEE International Geoscience and Remote Sensing Symposium, Valencia, Spain, 22–27 July 2018.

7. Wang, Y.; Yu, W.; Fang, Z. Multiple Kernel-Based SVM Classification of Hyperspectral Images by Combining Spectral, Spatial, and Semantic Information. Remote Sens. 2020, 12, 120.

8. Friedl, M.A.; Brodley, C.E. Decision tree classification of land cover from remotely sensed data. Remote Sens. Environ. 1997, 61, 399–409.

9. Shelhamer, E.; Long, J.; Darrell, T. Fully Convolutional Networks for Semantic Segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3431–3440.

10. Chen, G.; Zhang, X.; Wang, Q.; Dai, F.; Gong, Y.; Zhu, K. Symmetrical Dense-Shortcut Deep Fully Convolutional Networks for Semantic Segmentation of Very-High-Resolution Remote Sensing Images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 1633–1644.

11. Chen, G.; Tan, X.; Guo, B.; Zhang, X. SDFCNv2: An Improved FCN Framework for Remote Sensing Images Semantic Segmentation. Remote Sens. 2021, 13, 4902.

12. Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In Proceedings of the 18th International Conference on Medical Image Computing and Computer-Assisted Intervention (MICCAI 2015), Munich, Germany, 5–9 October 2015.

13. Badrinarayanan, V.; Kendall, A.; Cipolla, R. SegNet: A Deep Convolutional Encoder-Decoder Architecture for Image Segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 2481–2495.

14. Zhao, H.; Shi, J.; Qi, X.; Wang, X.; Jia, J. Pyramid Scene Parsing Network. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 6230–6239.

15. Chen, L.C.; Zhu, Y.; Papandreou, G.; Schroff, F.; Adam, H. Encoder-Decoder with Atrous Separable Convolution for Semantic Image Segmentation. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 14 September 2018.

16. Chen, L.C.; Papandreou, G.; Schroff, F.; Adam, H. Rethinking Atrous Convolution for Semantic Image Segmentation. arXiv 2017, arXiv:1706.05587v3.

17. Chen, L.C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. DeepLab: Semantic Image Segmentation with Deep Convolutional Nets, Atrous Convolution, and Fully Connected CRFs. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 40, 834–848.

18. Sun, K.; Xiao, B.; Liu, D.; Wang, J. Deep High-Resolution Representation Learning for Human Pose Estimation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–20 June 2019; pp. 5686–5696.

19. Lin, G.; Milan, A.; Shen, C.; Reid, I. RefineNet: Multi-path Refinement Networks for High-Resolution Semantic Segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.

20. Xie, S.; Girshick, R.; Dollár, P.; Tu, Z.; He, K. Aggregated Residual Transformations for Deep Neural Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017.

21. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep Residual Learning for Image Recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 26 June–1 July 2016.

22. Hu, J.; Shen, L.; Sun, G. Squeeze-and-Excitation Networks. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018.

23. Oktay, O.; Schlemper, J.; Folgoc, L.L.; Lee, M.; Heinrich, M.; Misawa, K.; Mori, K.; Mcdonagh, S.; Hammerla, N.Y.; Kainz, B. Attention U-Net: Learning Where to Look for the Pancreas. arXiv 2018, arXiv:1804.03999.

24. Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. CBAM: Convolutional Block Attention Module. arXiv 2018, arXiv:1807.06521.

25. Fu, J.; Liu, J.; Tian, H.; Li, Y.; Bao, Y.; Fang, Z.; Lu, H. Dual Attention Network for Scene Segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019.

26. Vaswani, A.; Shazeer, N.M.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention is All you Need. In Proceedings of the Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017.

27. Lu, S.-Y.; Zhang, Y.-D.; Yao, Y.-D. A regularized transformer with adaptive token fusion for Alzheimer’s disease diagnosis in brain magnetic resonance images. Eng. Appl. Artif. Intell. 2025, 155, 111058.

28. Lu, S.-Y.; Zhu, Z.; Zhang, Y.-D.; Yao, Y.-D. Tuberculosis and pneumonia diagnosis in chest X-rays by large adaptive filter and aligning normalized network with report-guided multi-level alignment. Eng. Appl. Artif. Intell. 2025, 158, 111575.

29. Lu, S.-Y.; Zhu, Z.; Tang, Y.; Zhang, X.; Liu, X. CTBViT: A novel ViT for tuberculosis classification with efficient block and randomized classifier. Biomed. Signal Process. Control 2025, 100, 106981.

30. Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Houlsby, N. An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale. arXiv 2020, arXiv:2010.11929.

31. Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin Transformer: Hierarchical Vision Transformer using Shifted Windows. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021.

32. Ren, S.; Zhou, D.; He, S.; Feng, J.; Wang, X. Shunted Self-Attention via Multi-Scale Token Aggregation. In Proceedings of the 2022 IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022.

33. Chen, J.; Lu, Y.; Yu, Q.; Luo, X.; Zhou, Y. TransUNet: Transformers Make Strong Encoders for Medical Image Segmentation. arXiv 2021, arXiv:2102.04306.

34. Cao, H.; Wang, Y.; Chen, J.; Jiang, D.; Zhang, X.; Tian, Q.; Wang, M. Swin-Unet: Unet-like Pure Transformer for Medical Image Segmentation. In Proceedings of the European Conference on Computer Vision 2022, Tel Aviv, Israel, 23–24 October 2022.

35. Cheng, R.; Chen, J.; Xia, Z.; Lu, C. RSFormer: Medical Image Segmentation Based on Dual Model Channel Merging; SPIE: Cergy, France, 2024; Volume 13250.

36. Wang, Q.; Wu, B.; Zhu, P.; Li, P.; Hu, Q. ECA-Net: Efficient Channel Attention for Deep Convolutional Neural Networks. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 14–19 June 2020.

37. Huang, Z.; Wang, X.; Wei, Y.; Huang, L.; Huang, C.; Wei, Y.; Liu, W. CCNet: Criss-Cross Attention for Semantic Segmentation. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 603–612.

38. Zhou, Q.; Qiang, Y.; Mo, Y.; Wu, X.; Latecki, L.J. BANet: Boundary-Assistant Encoder-Decoder Network for Semantic Segmentation. IEEE Trans. Intell. Transp. Syst. 2022, 23, 25259–25270.

39. Wang, L.; Li, R.; Zhang, C.; Fang, S.; Duan, C.; Meng, X.; Atkinson, P.M. UNetFormer: A UNet-like transformer for efficient semantic segmentation of remote sensing urban scene imagery. ISPRS J. Photogramm. Remote Sens. 2022, 190, 196–214.

40. Wu, H.; Huang, P.; Zhang, M.; Tang, W.; Yu, X. CMTFNet: CNN and Multiscale Transformer Fusion Network for Remote-Sensing Image Semantic Segmentation. IEEE Trans. Geosci. Remote Sens. 2023, 61, 1–12.

41. Shang, R.; Zhang, J.; Jiao, L.; Li, Y.; Stolkin, R. Multi-scale Adaptive Feature Fusion Network for Semantic Segmentation in Remote Sensing Images. Remote Sens. 2020, 12, 872.

42. Lin, T.Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature Pyramid Networks for Object Detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017.

43. Tan, M.; Pang, R.; Le, Q.V. EfficientDet: Scalable and Efficient Object Detection. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020.

44. Qiao, S.; Chen, L.C.; Yuille, A. DetectoRS: Detecting Objects with Recursive Feature Pyramid and Switchable Atrous Convolution. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2020.

45. Wang, W.; Wang, S.; Li, Y.; Jin, Y. Adaptive Multi-scale Dual Attention Network for Semantic Segmentation. Neurocomputing 2021, 460, 39–49.

46. Xiao, T.; Liu, Y.; Huang, Y.; Li, M.; Yang, G. Enhancing Multiscale Representations With Transformer for Remote Sensing Image Semantic Segmentation. IEEE Trans. Geosci. Remote Sens. 2023, 61, 1–16.

47. Fan, H.; Xiong, B.; Mangalam, K.; Li, Y.; Yan, Z.; Malik, J.; Feichtenhofer, C. Multiscale Vision Transformers. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 11–17 October 2021; pp. 6804–6815.

48. Ao, Y.; Shi, W.; Ji, B.; Miao, Y.; He, W.; Jiang, Z. MS-TCNet: An effective Transformer–CNN combined network using multi-scale feature learning for 3D medical image segmentation. Comput. Biol. Med. 2024, 170, 108057.

49. Zhou, H.; Xiao, X.; Li, H.; Liu, X.; Liang, P. Hybrid Shunted Transformer embedding UNet for remote sensing image semantic segmentation. Neural Comput. Appl. 2024, 36, 15705–15720.

50. Huang, G.; Liu, Z.; Maaten, L.V.D.; Weinberger, K.Q. Densely Connected Convolutional Networks. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 2261–2269.

51. Wang, T.; Xu, C.; Liu, B.; Yang, G.; Zhang, E.; Niu, D.; Zhang, H. MCAT-UNet: Convolutional and Cross-Shaped Window Attention Enhanced UNet for Efficient High-Resolution Remote Sensing Image Segmentation. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 17, 9745–9758.

52. Wang, H.; Cao, P.; Wang, J.; Zaiane, O.R. UCTransNet: Rethinking the Skip Connections in U-Net from a Channel-wise Perspective with Transformer. Proc. Conf. AAAI Artif. Intell. 2021, 36, 2442–2449.

53. Hu, Y.; Chen, Y.; Li, X.; Feng, J. Dynamic Feature Fusion for Semantic Edge Detection. In Proceedings of the International Joint Conference on Artificial Intelligence, Macao, China, 10–16 August 2019.

| Method | Impervious Surface IoU (%) | Building IoU (%) | Low Vegetation IoU (%) | Tree IoU (%) | Car IoU (%) | OA (%) | m-F1 (%) | mIoU (%) |
|---|---|---|---|---|---|---|---|---|
| CNN | 82.20 | 84.48 | 70.31 | 69.24 | 58.77 | 83.70 | 84.05 | 73.00 |
| ST | 81.83 | 84.22 | 70.60 | 70.27 | 60.08 | 86.39 | 84.36 | 73.40 |
| CNN+ST | 84.55 | 89.85 | 70.85 | 68.41 | 66.65 | 88.23 | 86.09 | 76.06 |
| CNN+ST+MSFEM | 84.19 | 89.98 | 72.15 | 71.17 | 64.51 | 88.47 | 86.31 | 76.40 |
| CNN+ST+RDFF | 84.37 | 89.85 | 71.65 | 70.13 | 67.13 | 88.40 | 86.49 | 76.62 |
| CNN+ST+MSFEM+RDFF | 88.44 | 90.20 | 71.61 | 69.69 | 69.24 | 88.48 | 86.77 | 77.04 |
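The per-module gains implied by the mIoU column of the ablation table can be read off by subtraction. A minimal sketch, with the values copied from the table; taking CNN+ST as the baseline is an assumption of this example, not something the table itself states, and `gains_over` is a hypothetical helper name.

```python
# mIoU (%) per configuration, copied from the ablation table above.
ABLATION_MIOU = {
    "CNN": 73.00,
    "ST": 73.40,
    "CNN+ST": 76.06,
    "CNN+ST+MSFEM": 76.40,
    "CNN+ST+RDFF": 76.62,
    "CNN+ST+MSFEM+RDFF": 77.04,
}


def gains_over(baseline: str, scores: dict[str, float]) -> dict[str, float]:
    """Return each configuration's mIoU difference from the baseline, in points."""
    reference = scores[baseline]
    return {name: round(value - reference, 2) for name, value in scores.items()}


if __name__ == "__main__":
    # Assumed baseline: the dual-branch encoder without MSFEM or RDFF.
    for name, delta in gains_over("CNN+ST", ABLATION_MIOU).items():
        print(f"{name:<20} {delta:+.2f}")
```

Relative to the assumed CNN+ST baseline, MSFEM alone adds 0.34 points of mIoU, RDFF alone adds 0.56, and the two together add 0.98.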

| Method | Impervious Surface IoU (%) | Building IoU (%) | Low Vegetation IoU (%) | Tree IoU (%) | Car IoU (%) | OA (%) | m-F1 (%) | mIoU (%) |
|---|---|---|---|---|---|---|---|---|
| UNet [12] | 82.20 | 84.48 | 70.31 | 69.24 | 58.77 | 83.70 | 84.05 | 73.00 |
| SegNet [13] | 81.83 | 87.33 | 69.20 | 67.35 | 59.21 | 86.80 | 83.98 | 72.98 |
| DeeplabV3+ [16] | 81.61 | 87.48 | 68.54 | 67.81 | 58.35 | 86.65 | 83.81 | 72.76 |
| PSPNet [14] | 82.32 | 86.88 | 69.65 | 69.39 | 61.34 | 87.08 | 84.68 | 73.92 |
| HST-Net [49] | 82.33 | 87.94 | 70.01 | 68.26 | 62.38 | 87.27 | 84.84 | 74.18 |
| UNetFormer [39] | 83.04 | 88.24 | 69.98 | 69.11 | 64.59 | 84.53 | 85.41 | 74.99 |
| CMTFNet [40] | 84.17 | 89.80 | 70.50 | 68.83 | 62.34 | 88.04 | 85.41 | 75.13 |
| RST-Net (Ours) | 88.44 | 90.20 | 71.61 | 69.69 | 69.24 | 88.48 | 86.77 | 77.04 |

| Method | Impervious Surface IoU (%) | Building IoU (%) | Low Vegetation IoU (%) | Tree IoU (%) | Car IoU (%) | OA (%) | m-F1 (%) | mIoU (%) |
|---|---|---|---|---|---|---|---|---|
| UNet [12] | 76.22 | 88.99 | 70.11 | 71.93 | 68.50 | 85.21 | 85.62 | 75.15 |
| SegNet [13] | 75.90 | 85.89 | 69.45 | 71.37 | 76.34 | 84.68 | 86.11 | 75.79 |
| DeeplabV3+ [16] | 75.38 | 86.59 | 68.96 | 67.92 | 74.79 | 84.05 | 85.38 | 74.73 |
| PSPNet [14] | 76.41 | 86.46 | 70.37 | 71.30 | 74.83 | 84.79 | 86.16 | 75.86 |
| HST-Net [49] | 76.84 | 87.28 | 70.99 | 71.50 | 76.61 | 85.28 | 86.66 | 76.64 |
| UNetFormer [39] | 77.94 | 88.65 | 71.09 | 72.28 | 78.00 | 85.89 | 87.31 | 77.69 |
| CMTFNet [40] | 77.05 | 87.54 | 72.18 | 74.79 | 78.57 | 87.75 | 87.56 | 78.03 |
| RST-Net (Ours) | 78.60 | 89.73 | 73.69 | 76.23 | 79.53 | 87.24 | 88.51 | 79.56 |
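The relation between the per-class IoU columns and the mIoU column can be illustrated with a short check. The sketch assumes that mIoU is the unweighted mean of the five listed class IoUs; the table does not state this, but it is the usual definition and it reproduces the reported value for the three rows used here. The IoU values are copied from the table above, and `mean_iou` is a hypothetical helper name.

```python
from statistics import mean

# Column order of the per-class IoU values in the table above.
CLASSES = ("Impervious Surface", "Building", "Low Vegetation", "Tree", "Car")

# Per-class IoU (%) for three rows, copied from the table above.
PER_CLASS_IOU = {
    "UNet": (76.22, 88.99, 70.11, 71.93, 68.50),
    "CMTFNet": (77.05, 87.54, 72.18, 74.79, 78.57),
    "RST-Net": (78.60, 89.73, 73.69, 76.23, 79.53),
}


def mean_iou(ious: tuple[float, ...]) -> float:
    """Unweighted mean of per-class IoU values, rounded to two decimals."""
    return round(mean(ious), 2)


if __name__ == "__main__":
    for method, ious in PER_CLASS_IOU.items():
        assert len(ious) == len(CLASSES)
        print(f"{method:<8} mIoU = {mean_iou(ious):.2f}")
```

For these rows the computed means are 75.15, 78.03, and 79.56, matching the mIoU column.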

| Method | Parameters (MB) | FLOPs (G) | mIoU (%) | FPS |
|---|---|---|---|---|
| UNet [12] | 23.89 | 18.85 | 75.15 | 87.35 |
| SegNet [13] | 80.63 | 28.08 | 75.79 | 60.12 |
| DeeplabV3+ [16] | 122.01 | 52.21 | 74.73 | 41.87 |
| PSPNet [14] | 92.45 | 44.54 | 75.86 | 46.05 |
| HST-Net [49] | 28.03 | 22.83 | 76.64 | 90.28 |
| UNetFormer [39] | 11.7 | 11.14 | 77.69 | 115.42 |
| CMTFNet [40] | 28.67 | 17.14 | 78.03 | 100.15 |
| RST-Net (Ours) | 57.17 | 34.11 | 79.56 | 75.20 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Yang, N.; Tian, C.; Gu, X.; Zhang, Y.; Li, X.; Zhang, F. RST-Net: A Semantic Segmentation Network for Remote Sensing Images Based on a Dual-Branch Encoder Structure. Sensors 2025, 25, 5531. https://doi.org/10.3390/s25175531
