1. Introduction

With the rapid advancement of autonomous driving technology, lane line detection, a critical component of vehicular environmental perception, is widely used in intelligent driver-assistance systems (IDAS) such as lane keeping assist, lane departure warning, blind spot monitoring, adaptive cruise control, and forward collision warning. However, robust lane line detection in complex environments remains highly challenging. The structural characteristics of lane lines (their slender morphology, their tendency to bend, merge, and bifurcate, their small footprint, and their sparse pixel distribution) make them inherently more difficult to identify than conventional objects. Furthermore, real driving scenarios are complicated by numerous uncontrollable external factors, including adverse weather, extreme illumination, traffic interference, and abnormal markings.

Early research in lane detection relied primarily on low-level image features such as color [1] and edges [2]. Although traditional methods based on handcrafted features are straightforward to implement and fast, they are not robust and resist interference poorly in complex environments, so they often fail to meet the high-precision requirements that autonomous driving systems place on lane detection. Recent advances in deep neural networks, together with the availability of large-scale annotated datasets, have opened powerful new avenues for this problem. Segmentation-based methods identify lane markings through pixel-level segmentation and then apply post-processing, such as clustering and curve fitting, to derive parametric representations of the lane lines. Depending on the format of their segmentation output, these approaches fall into semantic segmentation and instance segmentation methods. Semantic segmentation methods first perform binary segmentation to extract lane contours and then cluster the lane pixels to separate individual lane instances; they differ mainly in the clustering algorithm they employ. For instance, VPGNet [3] uses a density-based clustering algorithm, whereas LaneAF [4] introduces a direction-aware clustering technique that uses horizontal and vertical affinity fields to constrain the spatial arrangement of adjacent lane points, making the clustering process more interpretable. In contrast, instance segmentation methods directly predict a segmentation map for each lane instance and derive the lane geometry through subsequent curve fitting. For example, ConvLSTM [5] exploits temporal information from consecutive frames through a hybrid convolutional-recurrent architecture, further improving lane segmentation accuracy. While segmentation-based approaches are conceptually simple, dense pixel-wise prediction incurs substantial computational cost and inference time.

Inspired by rectangular anchor boxes in object detection, researchers introduced dense linear anchors as structural priors for lane detection. These methods predict lane point offsets relative to predefined linear anchors, which span diverse image regions to accommodate lanes with varying spatial distributions and provide dedicated reference structures for feature extraction. LineCNN [6] pioneered this paradigm by enumerating potential lane origins at the image boundaries to generate predictions, rather than explicitly sampling features along linear anchors. Conversely, PointLaneNet [7] and CurveLanesNAS [8] use vertical linear anchors to associate each feature map cell with ground-truth lane annotations and then regress positional offsets relative to these anchors. Despite their efficacy, the fixed geometry and predetermined placement of linear anchors limit adaptability to lanes of arbitrary shape and orientation, which can lead to suboptimal feature sampling.

Parameter prediction-based lane detection methods represent lane markings as parameterized curves and directly regress the curve parameters with end-to-end networks. Bert et al. [9] proposed a differentiable least-squares fitting module to optimize lane curve parameters. Compared with the conventional two-stage segment-then-fit pipeline, this end-to-end paradigm makes fitting more stable and yields better performance and interpretability. To handle complex lane geometries, PRNet [10] represents lanes with piecewise polynomials, each segment having its own coefficients. LSTR [11] uses a hybrid convolution-Transformer architecture to capture global contextual information about lanes and directly regresses lane parameters. However, LSTR's curve formulation, which is derived from specific vehicle camera parameters, is highly complex and complicates model training.

In summary, identifying lane lines by pixel-wise classification requires high-resolution feature maps, which increases model size and inference latency and makes it difficult to meet the real-time requirements of in-vehicle systems. The required clustering step is also prone to failure when lane pixels are broken by occlusion or at intersections, causing lanes to be merged or fragmented. Anchor-based methods sample lane points along predefined anchor lines and predict offsets; in curved scenes the sampled points are unevenly distributed, which biases curvature estimates. Horizontal anchors adapt poorly to steep curves, and while dense anchors improve coverage, they increase computation, whereas sparse anchors may miss lane lines. Methods that directly regress parametric curves are insufficiently sensitive to local slender structures, which leads to large regression errors at points near the ends of the curves. Moreover, it is difficult to strike a balance between the number of model parameters and feature extraction accuracy.

Based on the preceding analysis, we propose ODVT-Net, a novel lane detection model that integrates omni-dimensional dynamic convolution with a Vision Transformer. To address the limited representational capacity of conventional deep learning models for lane detection, we introduce an Omni-Dimensional Dynamic Non-local Fusion Network as the core feature extraction module. Because lane detection depends critically on robust spatial relationships and global context, we also incorporate a Transformer-based feature weighting mechanism and a vanishing point detection module. This design enables ODVT-Net to capture rich global information across both the spatial and channel dimensions, significantly enhancing the model's global perception. Furthermore, ODVT-Net performs end-to-end pixel-level lane detection and adapts effectively to complex topologies, including bifurcations and discontinuities. The principal contributions of this work are fourfold:

- (1)

To extract features with richer contextual information and to improve the model's adaptability under extreme conditions, we propose an omni-dimensional dynamic non-local feature extraction module. Omni-Dimensional Dynamic Convolution (ODConv) lets the model adapt dynamically to inputs from diverse, complex lane scenarios; an improved Non-local layer helps the model capture long-range dependencies in the spatial dimensions; and a Feature Flip Fusion module exploits the horizontal symmetry of lane lines to aggregate features of symmetric lanes.

- (2)

To aggregate global features, we design a Transformer-based feature weight generation mechanism. It uses the self-attention mechanism of the Transformer encoder and adds a cross-attention mechanism between the feature map and the lane queries in the decoder, so that the network aggregates global feature information while directly capturing the correlation between the feature sequence and the prior sequence of lane lines, thereby reducing network complexity.

- (3)

To further enhance the model's global perception, we introduce an auxiliary vanishing point detection task after feature extraction. This module is jointly optimized with the primary lane detection task through shared weights. The design exploits vanishing point localization as a global positional prior while enriching the extracted features and improving model generalization.

- (4)

To enhance detection performance for complex lane topologies, our method directly predicts pixel-level lane representations and employs bipartite matching against ground truth annotations. The final loss function combines the bipartite matching loss with the vanishing point detection loss in a weighted formulation.

The remainder of this paper is organized as follows:

Section 2 reviews existing deep learning-based lane detection methods, including approaches based on semantic segmentation, object detection, and parameter prediction.

Section 3 describes the proposed lane detection model, which combines omni-dimensional dynamic convolution with a Vision Transformer (ViT), and details the design of its main modules.

Section 4 presents our comparative and ablation experiments.

Finally, Section 5 summarizes our contributions.

2. Related Work

2.1. Semantic Segmentation-Based Methods

Representative semantic segmentation-based methods include the Spatial Convolutional Neural Network (SpatialCNN) [12], which extends conventional layer-by-layer convolution to slice-by-slice convolution within feature maps, enabling message passing between pixels across rows and columns within a layer; SAD [13], which uses self-attention distillation to learn lightweight lane detection networks; GCLNet [14], a method based on group channel local connections for efficient and accurate lane detection and tracking in automated vehicle systems; and CurveLanesNAS [8], which uses neural architecture search for lane detection. Neven et al. proposed LaneNet [15]. Similar to the clustering method Deep Clustering [16], LaneNet deploys two decoders, one for segmentation and one for instance embedding, which allow it to cluster lane lines within the model itself without tedious post-processing. Earlier segmentation-based methods such as CooNet [17] and SCNN [18] rigidly limit the maximum number of lane lines to four, which reduces their flexibility, whereas LaneNet adaptively detects a varying number of lane lines depending on the scene. Wang et al. proposed FENET [19], inspired by the way human drivers concentrate their attention; it uses focus sampling and partial field-of-view evaluation to emphasize important long-distance details. GroupLane [20] was the first to apply the row classification strategy to 3D lane detection: it performs row classification in bird's-eye-view (BEV) space and uses two groups of detection heads to process lanes in different directions, overcoming the limitation of traditional methods that support only vertical lanes. Han et al. [20] proposed a new detection head structure that processes lane geometry information and environmental information (height regression of discrete key points) separately, regards 2D lanes as projections of 3D lanes in perspective space, and achieves a unified representation through camera parameters, effectively combining global integrity with local flexibility.

Early segmentation-based methods typically preset a fixed maximum number of lane lines and were unable to flexibly adapt to changes in the number of lane lines in actual road scenarios. Standard segmentation methods work in perspective image space and are primarily designed for vertical lane lines, making it difficult to effectively model the three-dimensional geometric information of lanes. They also perform poorly for detecting curved lanes, horizontal lanes, or other non-vertical lanes. More importantly, in complex scenarios (such as occlusion, lighting changes, and road wear) or when focusing on distant, small lane lines, the model may struggle to capture sufficiently clear and robust features.

2.2. Object Detection-Based Methods

Object detection-based methods include PointLaneNet [7], a point-based network that identifies and tracks lane lines by detecting lane points in images; LineCNN [6], a line-based convolutional neural network that detects lane lines as line segments in images; IntRA-KD [21], which uses inter-region affinity knowledge distillation to capture the relationships between different image regions; UFLDV2 [22], which jointly learns image features and detector parameters for accurate lane detection and tracking; SGNet [23], which improves detection accuracy by grouping image pixels into semantic regions; and CondLaneNet [8], a conditional network that improves accuracy and robustness by introducing conditional information. These methods first generate a set of predefined anchor lines, feed them into a deep network to extract anchor features, compute the offsets between the lane lines in the image and the anchor lines, and finally regress the predictions relative to the predefined anchors to obtain the detected lanes. DecoupleLane [20] proposes a new detection head structure that processes lane geometry information (third-order polynomial curve modeling in BEV space) and environmental information (height regression of discrete key points) separately; it regards 2D lanes as projections of 3D lanes in perspective space and achieves a unified representation through camera parameters, effectively combining integrity with local flexibility. LaneCorrect [24] proposes a fully label-free lane detection framework: exploiting the high reflectivity of lane-marking paint in point clouds, it extracts candidate lane points from the ground point cloud by threshold segmentation, aggregates the candidates into 3D lane instances, and projects these onto 2D images to generate noisy pseudo-labels. No manual labeling is required from pseudo-label generation to final detection, which greatly reduces annotation cost. Compared with segmentation-based methods, object detection-based methods detect lanes faster, because their anchor lines encode prior knowledge of lane topology, and they are more resistant to interference. However, although this approach simplifies modeling, it performs poorly on special lane configurations such as bifurcations, discontinuities, and sharp bends.

Although object detection-based methods offer advantages in detection speed, topology preservation, and interference resistance by introducing prior knowledge such as anchor lines, regional relationships, conditional information, or geometric models, predefined models and anchor lines adapt poorly to irregular structures that fall outside the preset patterns. Methods such as DecoupleLane, which treat 2D lanes as projections of 3D lanes, depend heavily on accurate camera intrinsic and extrinsic parameters for the perspective transformation, so calibration errors or dynamic changes in these parameters directly degrade detection accuracy. For LaneCorrect, which generates unsupervised pseudo-labels from point clouds, detection quality is limited by the noise introduced in the point cloud segmentation and aggregation steps: inaccurate threshold segmentation and errors in aggregating points into 3D instances leave noise in the pseudo-labels projected onto the image, which in turn degrades the final supervised training.

2.3. Parameter Prediction-Based Methods

Parameter prediction-based methods, such as PolyLaneNet [25], LSTR [11], and BezierLaneNet [26], differ from the point-based methods above in that they directly output lanes as parametric curves. This makes the model more end-to-end, reduces its complexity, and makes it easy to obtain a lightweight model. However, parametric methods often underperform point-based methods, because modeling lane lines as parametric curves restricts the degrees of freedom of the lane points and is incompatible with special lane configurations such as irregular shapes and bifurcations. PolyLaneNet, proposed by Tabelini et al., is a convolutional neural network for end-to-end lane detection that outputs a polynomial for each lane marking in the image, together with the vertical extent of each lane and a confidence score for each lane. However, its simple network structure and polynomial curves are insufficient for complex lane topologies, which severely limits its performance. Ma et al. proposed BezierLaneNet which, unlike the above methods, fits lane lines with third-order Bézier curves; it also introduces a feature flip fusion module based on deformable convolution to incorporate the symmetry of lanes in driving scenes into the features.
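To illustrate the curve model that BezierLaneNet builds on, the pure-Python sketch below evaluates a Bézier curve from its control points through the Bernstein basis. The control points are arbitrary example values, and sampling uniformly in the curve parameter t is a simplifying choice for illustration, not BezierLaneNet's exact sampling scheme.

```python
from math import comb
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def bezier_point(ctrl: Sequence[Point], t: float) -> Point:
    """Evaluate a Bezier curve of degree len(ctrl) - 1 at parameter t in [0, 1]."""
    n = len(ctrl) - 1
    x = y = 0.0
    for i, (px, py) in enumerate(ctrl):
        # Bernstein basis b_{i,n}(t) = C(n, i) * (1 - t)^(n - i) * t^i
        basis = comb(n, i) * (1.0 - t) ** (n - i) * t ** i
        x += basis * px
        y += basis * py
    return x, y


def sample_lane(ctrl: Sequence[Point], num: int = 5) -> List[Point]:
    """Sample `num` points at evenly spaced parameter values t = 0 .. 1."""
    return [bezier_point(ctrl, k / (num - 1)) for k in range(num)]


# A cubic (third-order) curve needs exactly four control points.
cubic = [(0.0, 0.0), (0.0, 1.0), (1.0, 1.0), (1.0, 0.0)]
lane_points = sample_lane(cubic, num=5)
```

Because only four control points describe the whole lane, the representation is compact, but it also shows the rigidity discussed above: every sampled point is tied to the same four parameters.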

Lane detection methods based on parameter prediction offer advantages in terms of model lightweightness and end-to-end deployment. However, parameterized methods force lane lines to conform to a predefined curve equation, limiting the degrees of freedom of lane points and resulting in overly strong geometric constraints. Compared with methods based on object detection, parameterized models often struggle to achieve comparable detection accuracy. The fundamental reason is that the curve equation requires a finite number of parameters to summarize the global shape, while local details of lane lines (such as worn markings and shadow interference) are easily ignored during smoothing, resulting in keypoint offsets. Slight deviations in curve parameters can significantly distort the predicted lane shape, making model training more dependent on fine-tuning parameters and increasing the difficulty of optimization.

3. Method

3.1. OTVLD-Net Framework

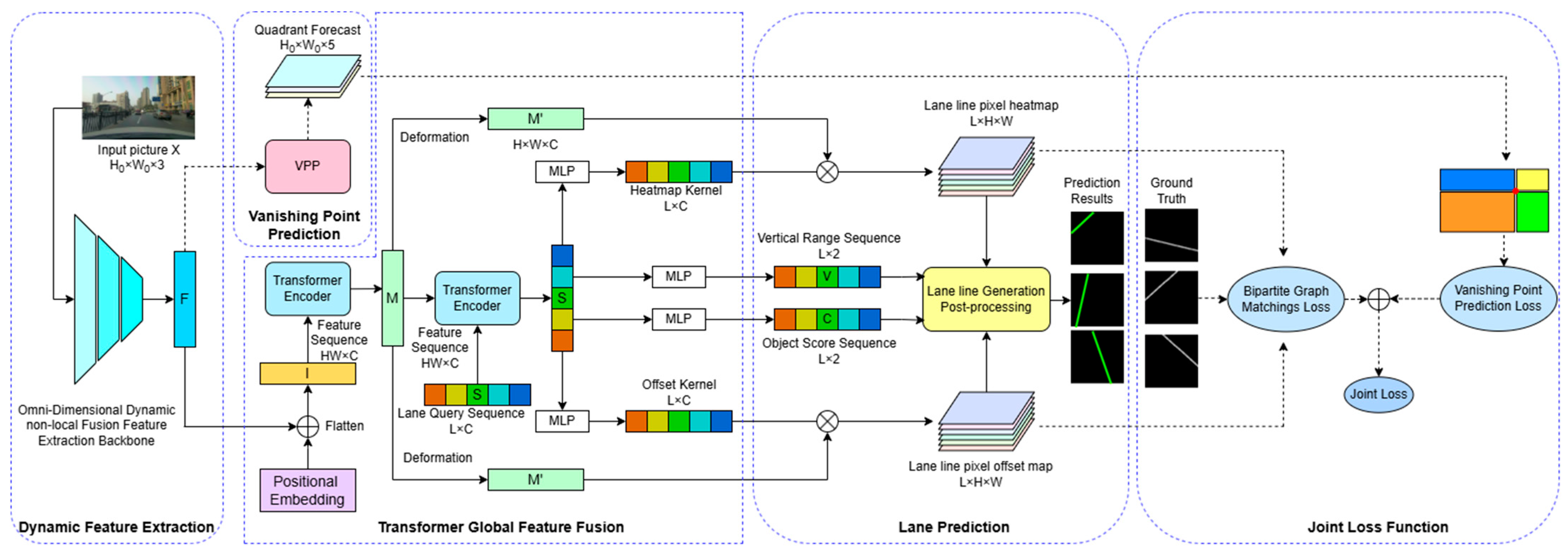

The architecture of OTVLD-Net, shown in Figure 1, consists of the dynamic feature extraction network ODVT-Net, the vanishing point detection module, the Transformer global feature aggregation module, the lane detection module, and the joint loss module. These modules process information as follows:

- (1)

Dynamic feature extraction module: We propose an omni-dimensional dynamic non-local fusion feature extraction network that combines omni-dimensional dynamic convolution, fusion of lane line symmetry features, and non-local layers that capture a global view of the feature map in the spatial dimension.

- (2)

Vanishing point detection module: Uses the feature map output by the feature extraction stage to detect vanishing points and generates a vanishing point quadrant prediction map.

- (3)

Transformer global feature aggregation module: The Transformer encoder performs global feature aggregation on the input feature map in the channel dimension, and the Transformer decoder computes cross-attention between the feature sequence and the lane query sequence to further expand the global field of view of the feature sequence.

- (4)

Lane detection module: Uses the outputs carrying global feature information from the preceding modules to perform pixel-level lane detection.

- (5)

Joint loss module: Computes the weighted sum of the vanishing point detection loss and the lane matching loss, which is used to train the model.

3.2. Omni-Dimensional Dynamic Non-Local Fusion Feature Extraction Network ODVT-Net

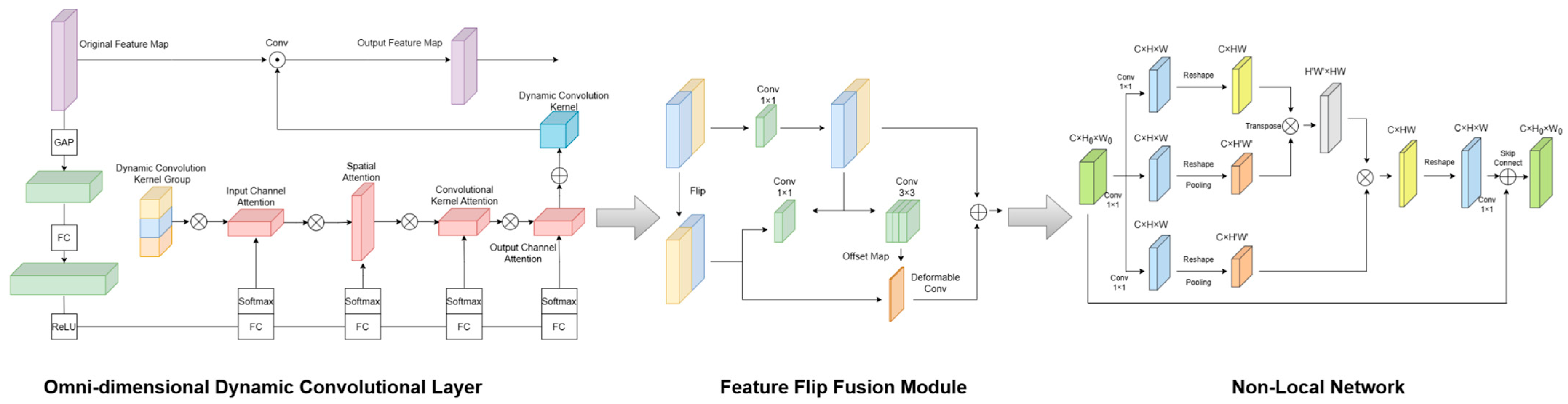

A plain CNN is limited by its narrow receptive field and by applying the same convolution kernels to every input, so the feature representations it produces are often simple and limited. This fixed feature extraction mechanism restricts the model's ability to adapt to complex scenes. We therefore design an omni-dimensional dynamic non-local fusion feature extraction network, ODVT-Net, whose main structure is shown in Figure 2. First, ODVT-Net replaces the convolutions in the first two layers of residual blocks in ResNet with omni-dimensional dynamic convolutions, forming an omni-dimensional dynamic convolution layer that lets the model adapt dynamically to lane images from different scenarios. Second, ODVT-Net adds a feature flip fusion module that introduces the horizontal symmetry of lane lines into the model. Finally, ODVT-Net replaces the convolutions in the last two layers of residual blocks in ResNet with improved Non-local Blocks, giving the model a global view in the spatial dimension.

The omni-dimensional dynamic convolution (Figure 2, left) uses a multi-dimensional attention mechanism with a parallel strategy. ODConv learns attention for the convolution kernels along all four dimensions of the kernel space in a convolutional layer (spatial size, input channels, output channels, and number of kernels), which lets it better capture the feature information of the input, adapt to different inputs, and improve the model's perception and generalization without greatly increasing computation. Dynamic convolution can be regarded as a dynamic perceptron $y = g\big(\tilde{W}^{\top}(x)\,x + \tilde{b}(x)\big)$, computed as follows:

$$
\tilde{W}(x) = \sum_{k=1}^{K} \pi_k(x)\,\tilde{W}_k,\qquad
\tilde{b}(x) = \sum_{k=1}^{K} \pi_k(x)\,\tilde{b}_k,\qquad
\text{s.t.}\quad 0 \le \pi_k(x) \le 1,\quad \sum_{k=1}^{K} \pi_k(x) = 1,
$$

where $\tilde{W}_k$, $\tilde{b}_k$, and $g$ denote the weights, biases, and activation function, respectively, and $\pi_k(x)$ denotes the attention weight of the $k$-th kernel. Because the attention weights are not fixed but vary with the input, dynamic convolution has stronger representational power than static convolution, while the additional computation it introduces is much smaller than that of the convolution itself:

$$
O\big(\pi(x)\big) + O\Big(\sum_{k=1}^{K} \pi_k\,\tilde{W}_k\Big) + O\Big(\sum_{k=1}^{K} \pi_k\,\tilde{b}_k\Big) \ll O\big(\tilde{W}^{\top}(x)\,x + \tilde{b}(x)\big).
$$

Therefore, applying ODConv to lane detection models can improve performance without compromising their real-time requirements.
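The kernel aggregation behind dynamic convolution can be made concrete with a minimal pure-Python sketch of a dynamic perceptron for a single output unit. It assumes a linear attention branch followed by a softmax (`attn_proj` is a hypothetical parameter, not part of the original model) and a ReLU activation. It models only the kernel-number attention of standard dynamic convolution; ODConv additionally learns spatial, input-channel, and output-channel attentions, which this sketch omits.

```python
import math
from typing import Callable, List, Sequence, Tuple


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax."""
    top = max(logits)
    exps = [math.exp(z - top) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def dynamic_perceptron(
    x: Sequence[float],
    kernels: Sequence[Sequence[float]],    # K candidate weight vectors W_k
    biases: Sequence[float],               # K candidate biases b_k
    attn_proj: Sequence[Sequence[float]],  # hypothetical K x D attention projection
    g: Callable[[float], float] = lambda v: max(0.0, v),  # ReLU activation
) -> Tuple[float, List[float]]:
    """Return g(W(x)^T x + b(x)) and the input-dependent attention weights pi(x)."""
    # Attention branch: pi_k(x) lies in [0, 1] and sums to 1 thanks to the softmax.
    pi = softmax([sum(a * xi for a, xi in zip(row, x)) for row in attn_proj])
    # Aggregate the K kernels and biases into one input-dependent kernel and bias.
    w = [sum(p * k[d] for p, k in zip(pi, kernels)) for d in range(len(x))]
    b = sum(p * bk for p, bk in zip(pi, biases))
    # A single dot product with the aggregated kernel (the "convolution" itself).
    y = g(sum(wd * xd for wd, xd in zip(w, x)) + b)
    return y, pi


# Zero attention projection -> uniform attention over two kernels.
y_uniform, pi_uniform = dynamic_perceptron(
    [2.0, 4.0], [[1.0, 0.0], [0.0, 1.0]], [0.0, 0.0], [[0.0, 0.0], [0.0, 0.0]]
)
# An attention projection that strongly favors the first kernel for this input.
y_first, pi_first = dynamic_perceptron(
    [2.0, 4.0], [[1.0, 0.0], [0.0, 1.0]], [0.0, 0.0], [[10.0, 0.0], [0.0, 0.0]]
)
```

The extra work is the attention branch plus the weighted sum over K kernels. In a real convolution, that cost depends on the kernel size but not on the spatial size of the feature map, which is why the overhead stays small relative to the convolution itself.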

Lane lines on the road often exhibit horizontal symmetry: they are distributed symmetrically on the left and right sides of the lane, and in most scenarios the two symmetric lane lines have similar shapes. Inspired by BezierLaneNet [27], ODVT-Net adds a feature flip fusion module (Figure 2, middle) so that the model accounts for the horizontal symmetry of lane lines during inference and can use the symmetric counterpart of a lane as a reference when detecting it. Because lane lines in camera images may not be perfectly aligned (for example, due to camera rotation, vehicle turning, or unpaired lane lines), a 3 × 3 deformable convolution is applied to the flipped feature map, and the features aligned by the learned offsets are fused with the original feature map through a residual connection. Since OTVLD-Net produces pixel-level lane predictions, precise pixel localization yields a more accurate spatial feature map, which supports accurate fusion of the flipped features in this module, helps the model adapt to different road conditions, and improves its performance in complex road environments.
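A minimal sketch of flip-and-fuse on a C × H × W feature map stored as nested lists is shown below. As an illustrative assumption, the learned 3 × 3 deformable convolution is replaced by a single global horizontal shift (`shift`, hypothetical) and a scalar weight; this is far simpler than per-position learned offsets, but it shows why alignment is needed before the residual fusion.

```python
from typing import List

FeatureMap = List[List[List[float]]]  # C x H x W nested lists


def hflip(feat: FeatureMap) -> FeatureMap:
    """Mirror every row of every channel (horizontal flip)."""
    return [[row[::-1] for row in ch] for ch in feat]


def shift_columns(feat: FeatureMap, shift: int) -> FeatureMap:
    """Translate columns by `shift` (positive = right), zero-filling vacated columns.
    Hypothetical stand-in for the learned offsets of a deformable convolution."""
    out = []
    for ch in feat:
        new_ch = []
        for row in ch:
            width = len(row)
            new_row = [0.0] * width
            for c, v in enumerate(row):
                if 0 <= c + shift < width:
                    new_row[c + shift] = v
            new_ch.append(new_row)
        out.append(new_ch)
    return out


def flip_fusion(feat: FeatureMap, shift: int = 0, weight: float = 1.0) -> FeatureMap:
    """Residual fusion: original features + weight * aligned flipped features."""
    aligned = shift_columns(hflip(feat), shift)
    return [
        [[x + weight * y for x, y in zip(r0, r1)] for r0, r1 in zip(c0, c1)]
        for c0, c1 in zip(feat, aligned)
    ]


# One channel, one row, width 6: a lane response at column 1.
feat = [[[0.0, 1.0, 0.0, 0.0, 0.0, 0.0]]]
fused_centered = flip_fusion(feat)         # mirror lands at column 4
fused_shifted = flip_fusion(feat, shift=1)  # off-center camera: mirror moved to column 5
```

With a centered camera the mirrored response lands exactly where the symmetric lane would be; when the vehicle is off-center, the flipped features must first be shifted, which is the role the learned deformable offsets play in the actual module.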

To obtain a global view of the spatial dimension at this stage, ODVT-Net focuses on extracting non-local information along the height H and width W directions. Unlike the classic Non-Local Block, the Non-Local Block in ODVT-Net (Figure 2, right) takes an input feature map of size H × W × C, which suits image detection and concentrates global information extraction on the spatial dimensions. To reduce computation and preserve the real-time performance of the lane detection model, the feature maps produced by the two 1 × 1 convolutions of the Non-Local Block are spatially downsampled by max pooling with a stride of 2. This reduces the pairwise computation to 1/4 of the original, with an acceptable impact on network performance. With the Non-Local Block, ODVT-Net captures long-range dependencies in the spatial dimension more easily, while the Transformer global feature aggregation module focuses on attention in the channel dimension, so that OTVLD-Net obtains a more comprehensive global view. This makes the model better suited to lane detection in complex scenes and improves detection accuracy and robustness.
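The 1/4 cost reduction can be checked with a small pure-Python sketch of an embedded-Gaussian-style non-local operation. As simplifying assumptions, the θ, φ, and g 1 × 1 convolutions and the output projection are replaced by identity maps, and 2 × 2 max pooling with stride 2 is applied only to the key/value positions, so each of the H·W queries attends to H·W/4 positions.

```python
import math
from typing import List, Tuple

Vec = List[float]
Grid = List[List[Vec]]  # H x W grid of C-dimensional feature vectors


def max_pool_2x2(grid: Grid) -> Grid:
    """2 x 2 max pooling with stride 2 (a trailing odd row/column is dropped)."""
    height, width = len(grid), len(grid[0])
    pooled = []
    for r in range(0, height - 1, 2):
        row = []
        for c in range(0, width - 1, 2):
            cells = (grid[r][c], grid[r][c + 1], grid[r + 1][c], grid[r + 1][c + 1])
            row.append([max(vals) for vals in zip(*cells)])
        pooled.append(row)
    return pooled


def non_local(grid: Grid, subsample: bool = True) -> Tuple[List[Vec], int]:
    """Non-local block with identity theta/phi/g (assumption).
    Returns the H*W output vectors and the number of pairwise affinities computed."""
    queries = [v for row in grid for v in row]
    kv_grid = max_pool_2x2(grid) if subsample else grid
    keys = [v for row in kv_grid for v in row]  # keys double as values here
    outputs = []
    for q in queries:
        logits = [sum(a * b for a, b in zip(q, k)) for k in keys]
        top = max(logits)
        weights = [math.exp(z - top) for z in logits]  # softmax numerators
        total = sum(weights)
        y = [sum(w * k[d] for w, k in zip(weights, keys)) / total for d in range(len(q))]
        outputs.append([qd + yd for qd, yd in zip(q, y)])  # residual connection
    return outputs, len(queries) * len(keys)


grid = [[[float(r), float(c)] for c in range(4)] for r in range(4)]  # 4 x 4, C = 2
out_full, pairs_full = non_local(grid, subsample=False)
out_pooled, pairs_pooled = non_local(grid)
```

Pooling only the keys and values keeps one output per input position, so the block still produces a full-resolution H × W result while the affinity matrix shrinks from (HW)² to (HW)²/4 entries.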

3.3. Vanishing Point Prediction Module

Adverse conditions, including inclement weather, poor illumination, and occlusion, degrade lane visibility. To address this, OTVLD-Net adopts the four-quadrant vanishing point localization method of VPGNet [3]. This approach partitions the image plane into quadrant masks and defines the vanishing point (VP) as the intersection of the four regions. Through this structural decomposition, the vanishing point prediction (VPP) module infers the VP position by analyzing quadrant-specific features within the segmented global scene structure.

OTVLD-Net uses a lightweight semantic segmentation module as the vanishing point prediction head. It contains a 6 × 6 residual block and two 1 × 1 residual blocks, followed by a tiling upsampling operation on the resulting feature map, which produces a 5-channel four-quadrant vanishing point mask (four quadrant channels plus one background channel). In this mask, the VP is the intersection of the four quadrants, so its confidence values in the four quadrant channels are roughly equal. Based on this, the VP is computed as follows:

$$
P_{\text{avg}} = 1 - \frac{1}{4}\sum_{q=1}^{4} P_q(x, y),
\qquad
\text{loc}_{\text{vp}} = \operatorname*{arg\,min}_{(x, y) \in m \times n} \sum_{q=1}^{4} \big| P_{\text{avg}} - P_q(x, y) \big|^{2},
$$

where $P_{\text{avg}}$ represents the probability that a VP exists in the image, $P_q(x, y)$ represents the confidence of point $(x, y)$ in quadrant channel $q$, $m \times n$ is the size of the confidence map, and $\text{loc}_{\text{vp}}$ is the position of the final predicted VP.

In OTVLD-Net, the VPP module serves as an auxiliary component exclusively during training to enhance spatial perception. This module is omitted during inference to optimize computational efficiency.
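A brute-force sketch of the VP localization step in pure Python follows. It assumes the four quadrant confidence maps are given as nested H × W lists and uses a VPGNet-style criterion, P_avg = 1 − ΣP_q/4 and loc_vp = argmin Σ_q (P_avg − P_q)², which is smallest where the four quadrant confidences are roughly equal and jointly high. The 3 × 3 example maps are made up for illustration.

```python
from typing import Optional, Sequence, Tuple

ConfMap = Sequence[Sequence[float]]  # H x W confidence map of one quadrant channel


def locate_vp(quadrants: Sequence[ConfMap]) -> Optional[Tuple[int, int]]:
    """Return the (x, y) position minimizing the quadrant-disagreement cost."""
    height, width = len(quadrants[0]), len(quadrants[0][0])
    best, best_cost = None, float("inf")
    for y in range(height):
        for x in range(width):
            probs = [q[y][x] for q in quadrants]
            p_avg = 1.0 - sum(probs) / 4.0
            cost = sum((p_avg - p) ** 2 for p in probs)
            if cost < best_cost:
                best, best_cost = (x, y), cost
    return best


quadrant_maps = [[[0.0] * 3 for _ in range(3)] for _ in range(4)]
for k in range(4):
    quadrant_maps[k][1][1] = 0.25  # VP pixel: all four quadrants equally likely
for k, (yy, xx) in enumerate([(0, 2), (0, 0), (2, 0), (2, 2)]):
    quadrant_maps[k][yy][xx] = 1.0  # pixels deep inside a single quadrant
vp = locate_vp(quadrant_maps)
```

In this example the VP pixel has cost 1.0, a pixel inside one quadrant has cost 1.75, and a background pixel (all quadrant confidences near zero) has cost 4.0, so the criterion rejects both single-quadrant and background pixels.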

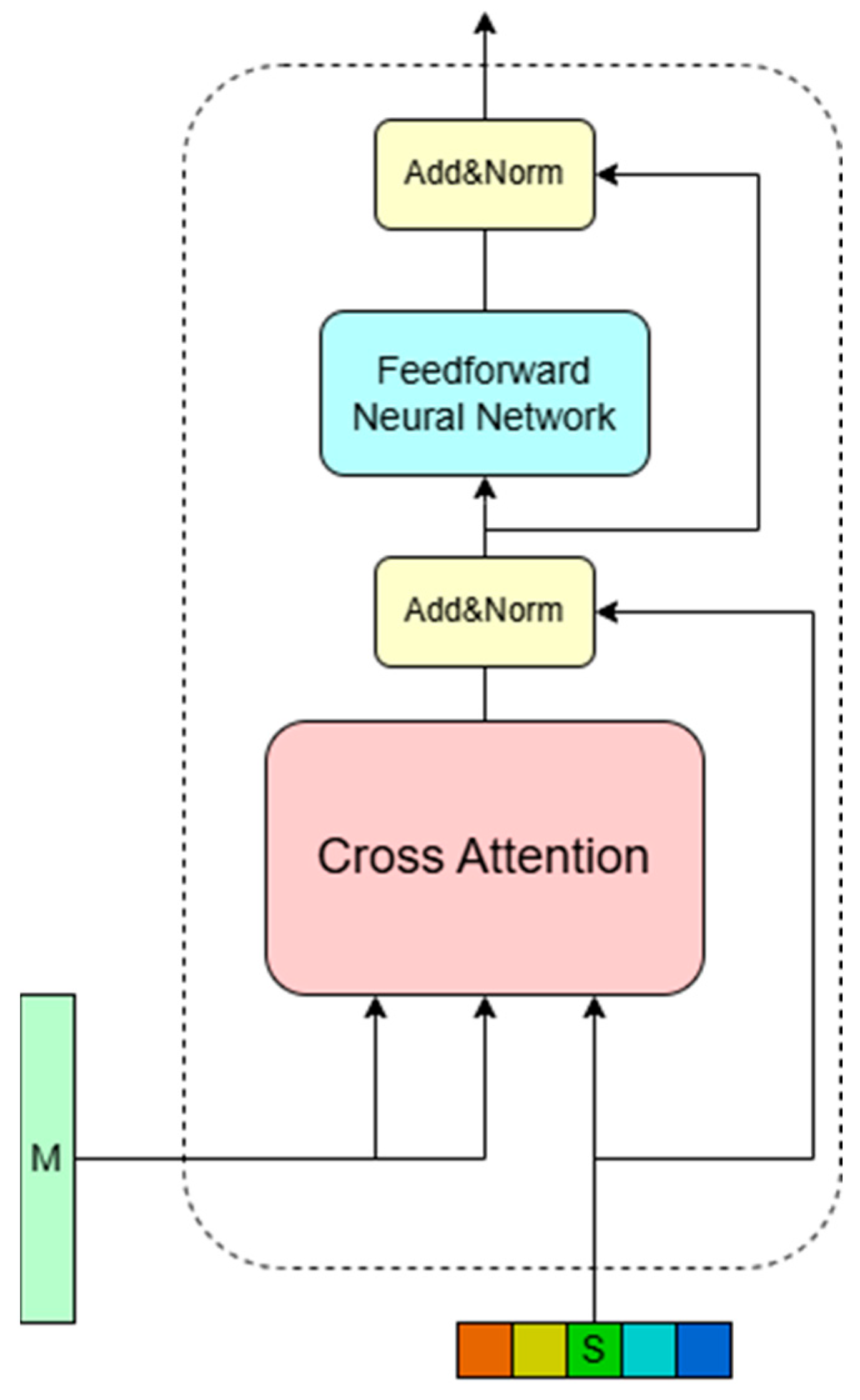

3.4. Transformer Global Feature Aggregation

To generate dynamic feature weights for each lane candidate, we design the Transformer global feature aggregation module shown in Figure 3. The encoder takes the feature sequence $\mathbf{I} \in \mathbb{R}^{HW \times C}$ as input, uses Transformer self-attention to capture the most relevant input features for each element of $\mathbf{I}$, and outputs the feature sequence $\mathbf{F} \in \mathbb{R}^{HW \times C}$. Unlike a typical Transformer decoder, this module discards the masked self-attention sublayer and instead defines a learnable lane query sequence $\mathbf{L} \in \mathbb{R}^{N \times C}$, whose $N$ vectors of length $C$ represent different lane candidates. After a linear transformation, $\mathbf{L}$ is used directly as the query sequence $\mathbf{Q}$ of the decoder's attention module, which therefore acts as cross-attention and captures, for each lane query in $\mathbf{Q}$, the most relevant features in $\mathbf{F}$. Finally, the decoder outputs the dynamic lane feature sequence $\mathbf{O} \in \mathbb{R}^{N \times C}$. The relationship between the query sequence $\mathbf{Q}$ and the feature sequence $\mathbf{F}$ in the cross-attention mechanism is:

$$
A_{ij} = \frac{\exp\big(\mathbf{q}_i^{\top}\mathbf{k}_j\big)}{\sum_{j'=1}^{HW}\exp\big(\mathbf{q}_i^{\top}\mathbf{k}_{j'}\big)},
\qquad
\mathbf{o}_i = f\Big(\sum_{j=1}^{HW} A_{ij}\,\mathbf{v}_j\Big),
$$

where $\mathbf{K}$ and $\mathbf{V}$ are the key and value matrices obtained by linear transformations of $\mathbf{F}$; $\mathbf{q}_i$ and $\mathbf{k}_j$ are the $i$-th and $j$-th feature vectors of $\mathbf{Q}$ and $\mathbf{K}$, respectively; $A$ is the attention map describing the pairwise relationships between $\mathbf{Q}$ and $\mathbf{K}$; $\mathbf{o}_i$ is the lane feature in the output $\mathbf{O}$ corresponding to lane query $\mathbf{q}_i$; and $f$ is a nonlinear mapping.

Within this architecture, the dynamic lane feature sequence is generated through cross-attention between the lane query sequence and the globally enhanced pixel feature sequence output by the encoder. Consequently, each lane feature captures channel-wise global context for its corresponding lane. Combined with the spatial global features from the Non-Local Blocks in the feature extraction module, this gives OTVLD-Net comprehensive global perception across dimensions and enables it to precisely locate the image pixels most relevant to each lane query, making it robust to challenging conditions such as occlusion and complex topological variations.
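The cross-attention between lane queries and encoder features can be illustrated with a minimal pure-Python sketch of N lane queries attending over HW feature tokens. Three simplifications are assumptions made for illustration: keys and values are passed in directly (the linear projections that produce them from the encoder output are omitted), no 1/√C scaling is applied, and ReLU stands in for the unspecified nonlinear mapping f.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vec = Sequence[float]


def relu(v: Vec) -> List[float]:
    return [max(0.0, t) for t in v]


def cross_attention(
    queries: Sequence[Vec],  # N lane queries q_i
    keys: Sequence[Vec],     # HW keys k_j (projected encoder features)
    values: Sequence[Vec],   # HW values v_j (projected encoder features)
    f: Callable[[Vec], List[float]] = relu,  # stand-in for the nonlinear mapping
) -> Tuple[List[List[float]], List[List[float]]]:
    """Return the N lane features o_i and the N x HW attention map A."""
    outputs, attn_map = [], []
    dim = len(values[0])
    for q in queries:
        logits = [sum(a * b for a, b in zip(q, k)) for k in keys]
        top = max(logits)
        exps = [math.exp(z - top) for z in logits]
        total = sum(exps)
        row = [e / total for e in exps]  # A_i: softmax over the HW positions
        attn_map.append(row)
        agg = [sum(a * v[d] for a, v in zip(row, values)) for d in range(dim)]
        outputs.append(f(agg))  # o_i = f(sum_j A_ij v_j)
    return outputs, attn_map


keys = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0]]
values = [[1.0, 0.0], [0.0, 1.0], [0.0, 0.0]]
queries = [[10.0, 0.0], [0.0, 10.0]]  # two lane candidates
lane_feats, attn = cross_attention(queries, keys, values)
```

Each row of the attention map is a distribution over all pixel positions, so every lane feature can draw on the entire image rather than a local neighborhood; here each query concentrates almost all of its weight on the key it is aligned with.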

3.5. Lane Detection Module

To generate pixel-level lane predictions, the detection pipeline uses the weights generated dynamically by the preceding modules. At this stage, the model reshapes the output feature sequence $\mathbf{F}$ of the Transformer encoder into a feature map $\mathbf{F}' \in \mathbb{R}^{C \times H \times W}$ and multiplies it by the heat map kernel $\mathbf{K}_{h} \in \mathbb{R}^{N \times C}$ and the offset kernel $\mathbf{K}_{o} \in \mathbb{R}^{N \times C}$ to obtain the lane pixel heat map $\mathbf{M} \in \mathbb{R}^{N \times H \times W}$ and the offset map $\mathbf{E} \in \mathbb{R}^{N \times H \times W}$. The model then applies a row-wise softmax to the heat map $\mathbf{M}$:

$$
\hat{M}_{n,r,c} = \frac{\exp\big(M_{n,r,c}\big)}{\sum_{c'}\exp\big(M_{n,r,c'}\big)},
$$

where $\hat{M}_{n,r,c}$ represents the probability that predicted lane $n$ passes through row $r$ at column $c$ of the heat map. For simplicity, $\mathbf{M}$ denotes this normalized heat map in what follows.

Each lane feature $\mathbf{o}_n$ corresponds to a heat map $\mathbf{M}_n$ and an offset map $\mathbf{E}_n$. The heat map $\mathbf{M}_n$ predicts the probability that each pixel is a point (foreground) of the $n$-th predicted lane, and the offset map $\mathbf{E}_n$ predicts, for each pixel, the horizontal offset to the point of lane $n$ in the same row (assuming that each lane has at most one lane pixel per row). The model passes the heat map and offset map to a post-processing step that, combined with the vertical range vector (start rows $s_n$ and end rows $e_n$) and the object score vector $\boldsymbol{\sigma}$, yields the final lane points:

$$
\bar{x}_{n,r} = \sum_{c} c \cdot M_{n,r,c},
\qquad
\hat{x}_{n,r} = \bar{x}_{n,r} + E_{n,r}\big(\bar{x}_{n,r}\big),
$$

where $\bar{x}_{n,r}$ is the expected horizontal coordinate of lane $n$ in row $r$, computed from the lane pixel heat map $\mathbf{M}$, and $\hat{x}_{n,r}$ is the resulting horizontal coordinate obtained by adding to $\bar{x}_{n,r}$ the offset of lane $n$ read from the offset map $\mathbf{E}$ at that position, denoted $E_{n,r}(\bar{x}_{n,r})$. The final lane points are then obtained as:

$$
\mathcal{P}_n = \big\{ (\hat{x}_{n,r},\, r) \;\big|\; s_n \le r \le e_n \big\},
\qquad
\mathcal{P} = \big\{ \mathcal{P}_n \;\big|\; \sigma_n > \tau \big\},
$$

where $\mathcal{P}_n$ is the point set of the $n$-th predicted lane, keeping only the points between the starting row $s_n$ and the ending row $e_n$, and $\mathcal{P}$ is the set of lanes finally predicted by the model, keeping only lanes whose foreground probability $\sigma_n$ exceeds the threshold $\tau$.
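The decoding steps of this section can be sketched in pure Python as follows. The inputs are raw heat map logits, so the row-wise softmax is applied inside the function; the expected column is a soft-argmax over each row. Reading the offset at the nearest valid column to the expected coordinate, the 0-based column indices, and the default threshold of 0.5 are assumptions made for illustration, not details confirmed by the model description.

```python
import math
from typing import List, Sequence, Tuple


def row_softmax(row: Sequence[float]) -> List[float]:
    top = max(row)
    exps = [math.exp(v - top) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def decode_lanes(
    heat_logits: Sequence[Sequence[Sequence[float]]],  # N x H x W raw heat map
    offsets: Sequence[Sequence[Sequence[float]]],      # N x H x W horizontal offsets
    starts: Sequence[int],                             # start row s_n per lane
    ends: Sequence[int],                               # end row e_n per lane (inclusive)
    scores: Sequence[float],                           # foreground probability per lane
    thresh: float = 0.5,                               # hypothetical threshold tau
) -> List[List[Tuple[float, int]]]:
    lanes = []
    for n in range(len(heat_logits)):
        if scores[n] <= thresh:  # keep only lanes whose score exceeds tau
            continue
        points = []
        for r in range(starts[n], ends[n] + 1):
            probs = row_softmax(heat_logits[n][r])           # row-wise softmax
            x_exp = sum(c * p for c, p in enumerate(probs))  # expected column
            col = min(len(probs) - 1, max(0, round(x_exp)))  # nearest valid column
            points.append((x_exp + offsets[n][r][col], r))   # offset refinement
        lanes.append(points)
    return lanes


heat = [[[0.0, 0.0, 20.0, 0.0, 0.0] for _ in range(3)],
        [[0.0, 20.0, 0.0, 0.0, 0.0] for _ in range(3)]]
offs = [[[0.0, 0.0, 0.3, 0.0, 0.0] for _ in range(3)],
        [[0.0] * 5 for _ in range(3)]]
lanes = decode_lanes(heat, offs, starts=[0, 0], ends=[1, 2], scores=[0.9, 0.2])
```

In this example the second lane is dropped by its low score, and the first lane keeps only rows 0 and 1 with sub-pixel coordinates near 2.3, showing how the soft-argmax and the offset map together give positions finer than the heat map grid.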

3.6. Joint Loss Function

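The proposed OTVLD-Net jointly trains its two complementary subtasks, lane detection and vanishing point detection. Accordingly, we design a joint loss function to evaluate the model and optimize its parameters. The overall loss of OTVLD-Net is formulated as:

$$
\mathcal{L} = \lambda_{\text{lane}}\,\mathcal{L}_{\text{lane}} + \lambda_{\text{vp}}\,\mathcal{L}_{\text{vp}},
$$

where $\mathcal{L}_{\text{lane}}$ is the bipartite matching loss of the lane detection task, $\mathcal{L}_{\text{vp}}$ is the vanishing point prediction loss, and $\lambda_{\text{lane}}$ and $\lambda_{\text{vp}}$ are their weights.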

The vanishing point prediction (VPP) loss is based on the Euclidean distance between the predicted vanishing point (VP) and the ground-truth VP, which is converted into a probability distribution. Given the predicted point and the ground-truth point, their Euclidean distance in the two-dimensional image plane is computed first. With a preset standard deviation, the VPP loss maps the squared Euclidean distance to the probability density of a Gaussian distribution and uses the negative log-likelihood of this density as the loss.
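A minimal sketch of the VPP loss, assuming an isotropic two-dimensional Gaussian centred on the predicted VP (the paper may instead use a one-dimensional density over the distance; the exact normalization constant is not given here). The name `vpp_loss` and its default `sigma` are hypothetical:

```python
import math
from typing import Tuple


def vpp_loss(pred: Tuple[float, float], gt: Tuple[float, float], sigma: float = 1.0) -> float:
    """Negative log-likelihood of the ground-truth VP under an isotropic 2-D
    Gaussian centred on the predicted VP (assumed form; sigma is preset)."""
    d2 = (pred[0] - gt[0]) ** 2 + (pred[1] - gt[1]) ** 2  # squared Euclidean distance
    # density = exp(-d2 / (2 sigma^2)) / (2 pi sigma^2); return -log(density)
    return d2 / (2.0 * sigma ** 2) + math.log(2.0 * math.pi * sigma ** 2)


print(round(vpp_loss((1.0, 1.0), (0.0, 0.0)), 4))  # → 2.8379
```

Because the log-normalizer does not depend on the prediction, minimizing this loss with a fixed standard deviation is equivalent to minimizing the squared distance scaled by $1/(2\sigma^2)$.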

In the lane detection stage, the model generates the final predicted lane pixel set, the lane pixel heat map, the offset map, the vertical range vector, and the object score vector. First, the model computes the pairwise bipartite matching loss between each predicted lane line and each ground-truth lane line, expressed as the weighted sum of the object score loss, the lane pixel heat map loss, the lane pixel offset map loss, and the vertical range loss.

The object score loss is computed from the predicted probability that the i-th lane line is a foreground lane line.

The lane pixel heat map loss is computed from the predicted probability that the pixel of the i-th lane line at a given row and column is a foreground pixel, together with the horizontal coordinate of the ground-truth lane line in that row. It is accumulated only between the vertical coordinates of the starting point and the ending point of the ground-truth lane line; that is, the heat map loss is only calculated within the valid range of the ground-truth lane.

The lane pixel offset map loss is computed from the predicted horizontal offset of the pixel at a given row and column of the i-th lane line, together with the horizontal coordinate of the i-th lane line predicted by the model in that row. Like the heat map loss, it is only calculated within the valid range of the ground-truth lane.

The vertical range loss is computed from the predicted starting and ending ordinates of the i-th predicted lane line. Together, these four terms form the final pairwise bipartite matching loss for lane lines.

The four balance coefficients weight the object score loss, the lane pixel heat map loss, the lane pixel offset map loss, and the vertical range loss, respectively. Next, the model uses a mapping function to represent the optimal prediction-to-ground-truth match, that is, the index of the predicted lane line assigned to each ground-truth lane line in the optimal match. This optimal match is the one that minimizes the total matching loss.
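The matching procedure can be sketched as below. The individual cost forms are assumptions made for illustration, since the formulas are missing: a negative log-likelihood for the object score and heat map terms, an L1 distance for the horizontal coordinates and the vertical range, and row averaging inside the ground-truth valid range. The dictionary layout and the names `pair_cost` and `optimal_match` are hypothetical. The assignment is found by exhaustive search, which reaches the same optimum as the Hungarian algorithm commonly used for bipartite matching but is practical only for a handful of lanes.

```python
import math
from itertools import permutations
from typing import Dict, List, Sequence, Tuple

EPS = 1e-9

# Hypothetical layouts (not the paper's data structures):
#   pred: {"score": fg prob, "heat": [row][col] probs, "x": [row] refined x, "range": (start, end)}
#   gt:   {"x": [row] x coordinate, "range": (start, end)}


def pair_cost(pred: Dict, gt: Dict, w: Sequence[float] = (1.0, 1.0, 1.0, 1.0)) -> float:
    """Weighted sum of object-score, heat-map, offset and vertical-range costs."""
    s, e = gt["range"]
    rows = range(s, e + 1)  # heat/offset terms use only the GT valid range
    cost_score = -math.log(pred["score"] + EPS)
    cost_heat = sum(
        -math.log(pred["heat"][r][int(round(gt["x"][r]))] + EPS) for r in rows
    ) / len(rows)
    cost_offset = sum(abs(pred["x"][r] - gt["x"][r]) for r in rows) / len(rows)
    ps, pe = pred["range"]
    cost_range = abs(ps - s) + abs(pe - e)
    return w[0] * cost_score + w[1] * cost_heat + w[2] * cost_offset + w[3] * cost_range


def optimal_match(cost: List[List[float]]) -> Tuple[List[int], float]:
    """cost[i][j] compares prediction i with GT j (needs N >= M).

    Returns sigma, where sigma[j] is the prediction assigned to GT j, and the total cost.
    """
    n, m = len(cost), len(cost[0])
    best: List[int] = []
    best_total = math.inf
    for perm in permutations(range(n), m):  # exhaustive search over injective assignments
        total = sum(cost[perm[j]][j] for j in range(m))
        if total < best_total:
            best, best_total = list(perm), total
    return best, best_total


sigma, total = optimal_match([[1.0, 5.0], [4.0, 2.0], [0.5, 0.5]])
print(sigma, total)  # → [0, 2] 1.5
```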

After the optimal match between predictions and ground truth is obtained, the final bipartite matching loss for lane lines is computed; this loss also uses the probability that the i-th predicted lane line is background.
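Once the optimal assignment is fixed, the final loss can be sketched as below. Assumptions: the background probability is taken as one minus the foreground probability (as in a two-class softmax), matched predictions are supervised toward foreground and unmatched ones toward background, the lane-specific terms of each matched pair are supplied precomputed, and the joint objective adds the VPP loss with a single weight. All names are hypothetical.

```python
import math
from typing import List

EPS = 1e-9


def set_loss(fg_prob: List[float], sigma: List[int], matched_lane_terms: List[float]) -> float:
    """Final matching loss under a fixed assignment (assumed form).

    fg_prob[i]: foreground probability of prediction i
    sigma[j]: index of the prediction assigned to GT lane j
    matched_lane_terms[j]: precomputed weighted heat/offset/range loss for pair (sigma[j], j)
    """
    matched = set(sigma)
    loss = 0.0
    for i, p in enumerate(fg_prob):
        if i in matched:
            loss -= math.log(p + EPS)  # matched prediction: supervise as foreground
        else:
            loss -= math.log(1.0 - p + EPS)  # unmatched: background prob taken as 1 - p
    return loss + sum(matched_lane_terms)


def joint_loss(lane_loss: float, vpp: float, lambda_vp: float = 1.0) -> float:
    """Total objective: lane loss plus weighted VPP loss (hypothetical single weight)."""
    return lane_loss + lambda_vp * vpp


lane = set_loss([0.9, 0.2, 0.7], sigma=[0, 2], matched_lane_terms=[0.5, 0.5])
print(round(joint_loss(lane, vpp=2.0, lambda_vp=0.5), 4))  # → 2.6852
```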

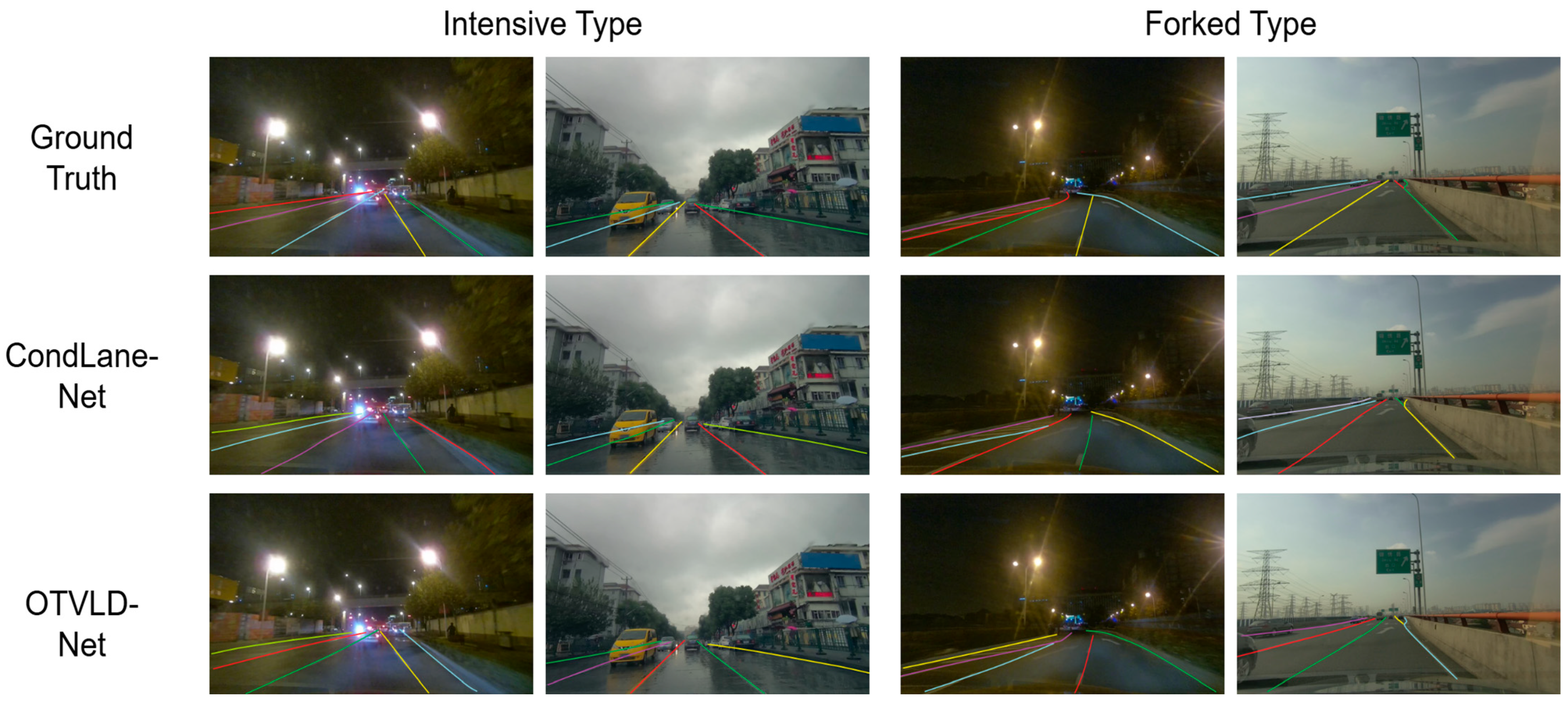

5. Conclusions

This paper proposes OTVLD-Net, a lane detection model based on a full-dimensional dynamic convolutional Transformer. In the feature extraction stage, the model uses a full-dimensional dynamic non-local feature extraction network, ODVT-Net, which adapts dynamically to different types of complex lane inputs through ODConv and combines the Non-Local Level and the Feature Flip Fusion Level to exploit the horizontal symmetry of lanes and obtain richer contextual information. In addition to the self-attention mechanism of the Transformer encoder, a cross-attention mechanism between the feature map and the lane requests is added in the decoder, and a Transformer-based feature weight generation mechanism is designed; this mechanism directly captures the correlation between the feature sequence and the lane prior sequence while reducing network complexity. A vanishing point detection module is introduced to assist the lane detection task, and a weighted loss function combining the bipartite matching loss and the vanishing point detection loss is designed. Because the model is trained jointly with vanishing point detection using shared weights, its generalization ability is enhanced. On the OpenLane dataset, OTVLD-Net outperforms the second-ranked model by 6.4%. It also runs at 103 FPS with a computational cost of only 14.2 GFLOPs, which substantially improves real-time performance. Comparative and ablation experiments verify the performance and design of the model. However, the vanishing point detection module relies on the geometric structure of the image plane, so it is less robust in low-visibility scenarios such as extreme rainstorms or heavy fog, and in severely occluded environments.

Furthermore, the model outputs only two-dimensional lane pixel positions and lacks three-dimensional geometric information such as lane height and curvature radius, so it cannot meet the requirements for lane spatial topology (such as slopes and curves) in actual driving. In addition, training relies on the OpenLane and CurveLanes datasets, which do not cover unstructured roads. To address these limitations, in future work we will integrate radar/LiDAR point cloud data to compensate for the limitations of vision under low visibility, draw on mainstream 3D lane detection and BEV lane map methods to achieve 3D perception, and evaluate on the RSUD20K dataset to verify generalization to roads in developing countries (such as narrow streets in Bangladesh with mixed rickshaw traffic).