A Study on Detection of Prohibited Items Based on X-Ray Images with Lightweight Model

Abstract

1. Introduction

- (1) A sample set of typical prohibited items is established;
- (2) An improved lightweight detection model for typical prohibited items, based on an attention mechanism and a dilated convolution spatial pyramid module, is proposed.
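The second contribution names a dilated convolution spatial pyramid module but does not give its configuration in this text. The following is a minimal pure-Python sketch, not the authors' module. It assumes a single-channel feature map, one shared 3×3 kernel and dilation rates 1, 2 and 3 (all hypothetical choices). The point is to show how parallel dilated convolutions widen the receptive field without adding weights: a k×k kernel with dilation d spans k + (k - 1)(d - 1) pixels.

```python
from typing import List, Sequence

Matrix = List[List[float]]


def dilated_conv2d(x: Matrix, k: Matrix, dilation: int) -> Matrix:
    """'Same'-size 2D cross-correlation (the CNN convention) with zero padding."""
    h, w = len(x), len(x[0])
    kh, kw = len(k), len(k[0])
    cy, cx = kh // 2, kw // 2  # kernel centre
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            acc = 0.0
            for a in range(kh):
                for b in range(kw):
                    # Taps are spread 'dilation' pixels apart around (i, j).
                    y = i + (a - cy) * dilation
                    xx = j + (b - cx) * dilation
                    if 0 <= y < h and 0 <= xx < w:  # outside the map counts as 0
                        acc += x[y][xx] * k[a][b]
            out[i][j] = acc
    return out


def effective_kernel_size(k: int, dilation: int) -> int:
    """Spatial extent of a k x k kernel at the given dilation rate."""
    return k + (k - 1) * (dilation - 1)


def spatial_pyramid(x: Matrix, k: Matrix, rates: Sequence[int] = (1, 2, 3)) -> List[Matrix]:
    """Hypothetical pyramid: one branch per dilation rate, outputs stacked.

    A trained module would learn a separate kernel per branch and fuse the
    branches (for example concatenation followed by a 1x1 convolution).
    """
    return [dilated_conv2d(x, k, r) for r in rates]


if __name__ == "__main__":
    impulse = [[1.0 if (i, j) == (3, 3) else 0.0 for j in range(7)] for i in range(7)]
    ones = [[1.0] * 3 for _ in range(3)]
    for r, m in zip((1, 2, 3), spatial_pyramid(impulse, ones)):
        hits = [(i, j) for i in range(7) for j in range(7) if m[i][j]]
        print(f"dilation {r}: span {effective_kernel_size(3, r)}, nonzero at {hits}")
```

Feeding a single bright pixel through `spatial_pyramid` with an all-ones kernel makes the effect visible. At dilation d the response lands on a 3×3 grid of pixels spaced d apart, so the three branches cover 3×3, 5×5 and 7×7 neighbourhoods while each branch still uses only nine weights.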

2. Principle and Experimental Setup

2.1. X-Ray Imaging Principle

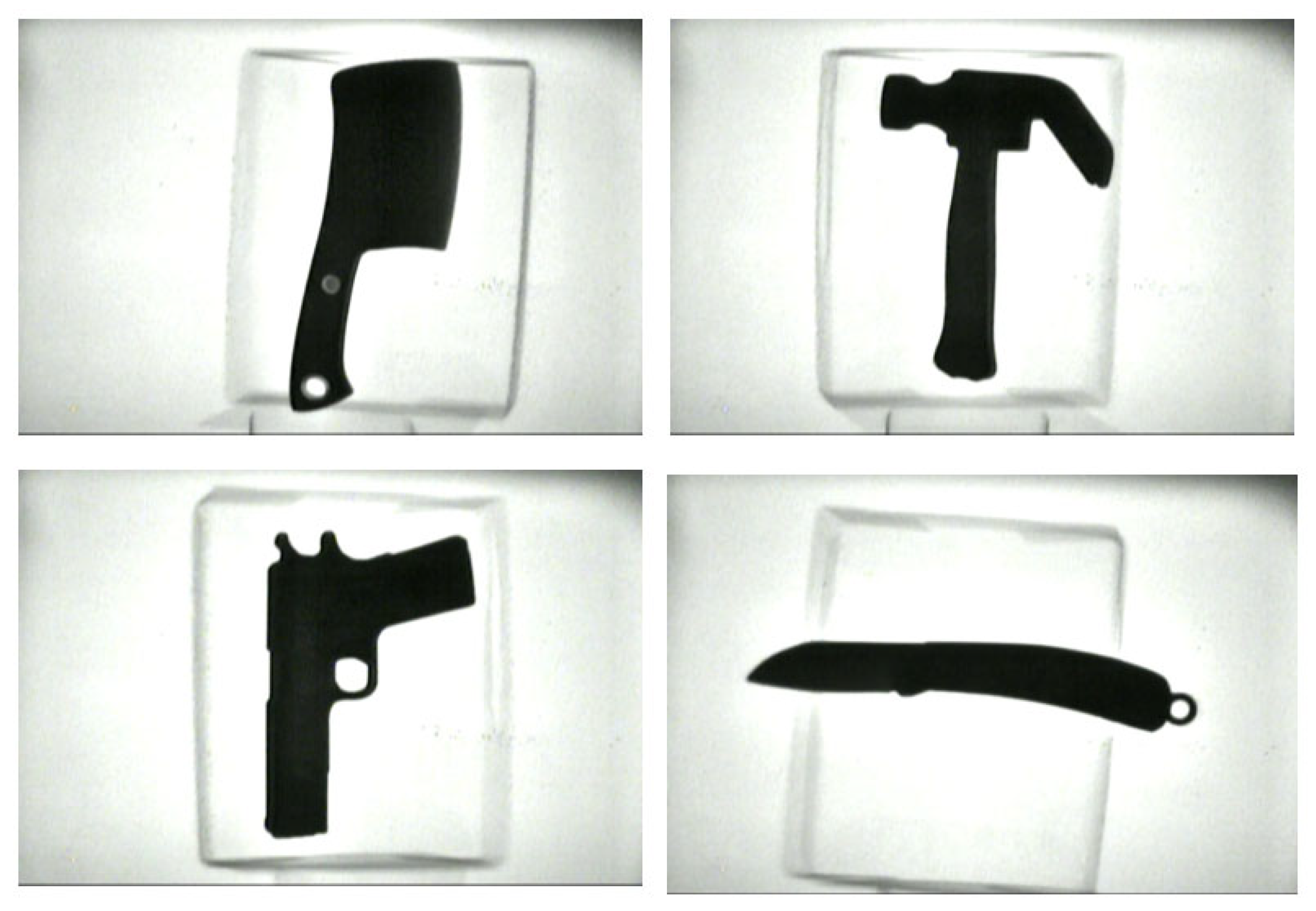
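The imaging principle is given only as a figure here. Transmission X-ray imaging is commonly modelled with the Beer-Lambert attenuation law, I = I0·exp(-Σ μ_i t_i). In this expression, μ_i is the linear attenuation coefficient of material i and t_i is its thickness along the ray. The sketch below assumes that law and uses hypothetical round-number coefficients rather than measured values. It shows why overlapping items in a bag appear darker: the attenuations multiply, so the exponents add.

```python
import math
from typing import Iterable, Tuple


def transmitted_intensity(i0: float, layers: Iterable[Tuple[float, float]]) -> float:
    """Beer-Lambert law for a ray crossing several layers.

    layers: (mu, thickness) pairs, mu in 1/cm and thickness in cm.
    Returns I = I0 * exp(-sum(mu * t)).
    """
    total = sum(mu * t for mu, t in layers)
    return i0 * math.exp(-total)


# Hypothetical, illustrative (mu, thickness) pairs; not values from the paper.
STEEL = (2.0, 0.3)    # e.g. a knife blade
PLASTIC = (0.2, 1.0)  # e.g. a lighter body

blade = transmitted_intensity(1.0, [STEEL])
overlap = transmitted_intensity(1.0, [STEEL, PLASTIC])
print(f"blade alone: {blade:.3f}, blade behind plastic: {overlap:.3f}")
```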

2.2. Analysis of Prohibited Items in X-Ray Images and Dataset Augmentation

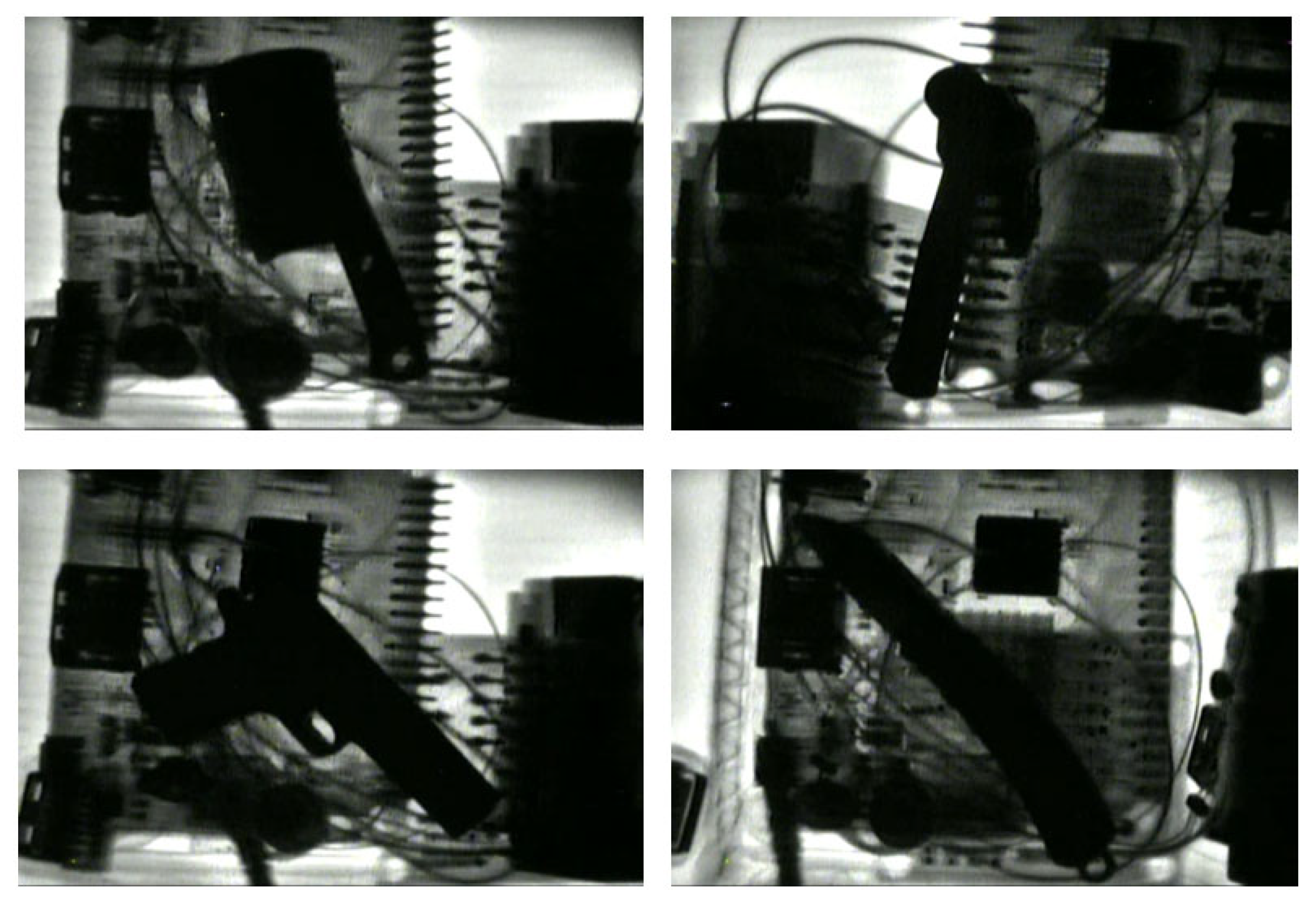

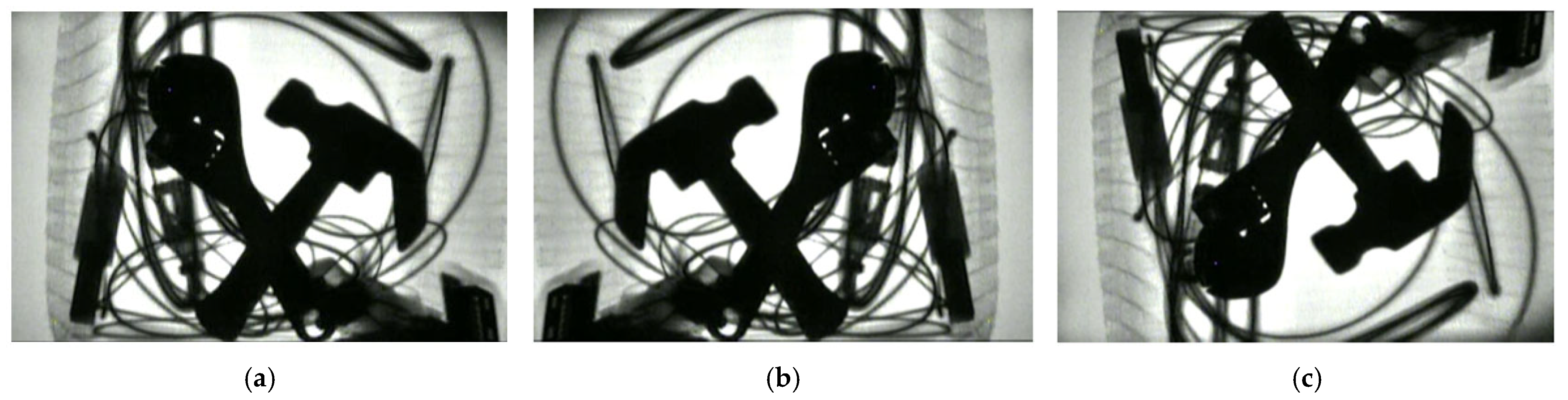

2.2.1. Image Flipping of X-Ray Images of Prohibited Items

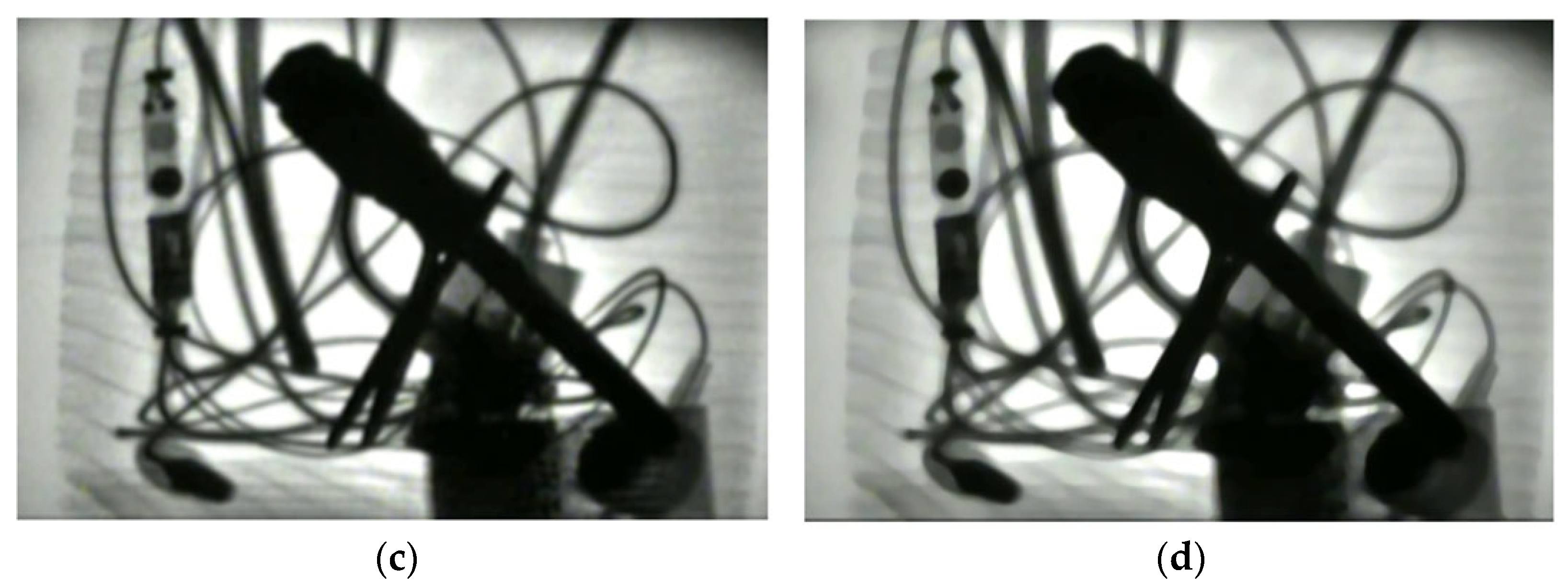
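For detection, flipping an X-ray image during augmentation must be paired with flipping its bounding-box annotations. The minimal sketch below assumes that images are row-major lists of pixel rows. It also assumes that boxes are (x_min, y_min, x_max, y_max) in pixel coordinates with exclusive max edges. This is a hypothetical convention, since the annotation format is not stated here.

```python
from typing import List, Tuple

Box = Tuple[int, int, int, int]  # (x_min, y_min, x_max, y_max), max edges exclusive


def hflip(image: List[list], boxes: List[Box]) -> Tuple[List[list], List[Box]]:
    """Mirror left-right; a box spanning [x0, x1) moves to [W - x1, W - x0)."""
    w = len(image[0])
    flipped = [row[::-1] for row in image]
    new_boxes = [(w - x1, y0, w - x0, y1) for x0, y0, x1, y1 in boxes]
    return flipped, new_boxes


def vflip(image: List[list], boxes: List[Box]) -> Tuple[List[list], List[Box]]:
    """Mirror top-bottom; a box spanning [y0, y1) moves to [H - y1, H - y0)."""
    h = len(image)
    flipped = image[::-1]
    new_boxes = [(x0, h - y1, x1, h - y0) for x0, y0, x1, y1 in boxes]
    return flipped, new_boxes
```

Flipping twice along the same axis restores both the image and its boxes, which is a cheap sanity check for an augmentation pipeline.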

2.2.2. Image Blurring of the X-Ray Images of Prohibited Items

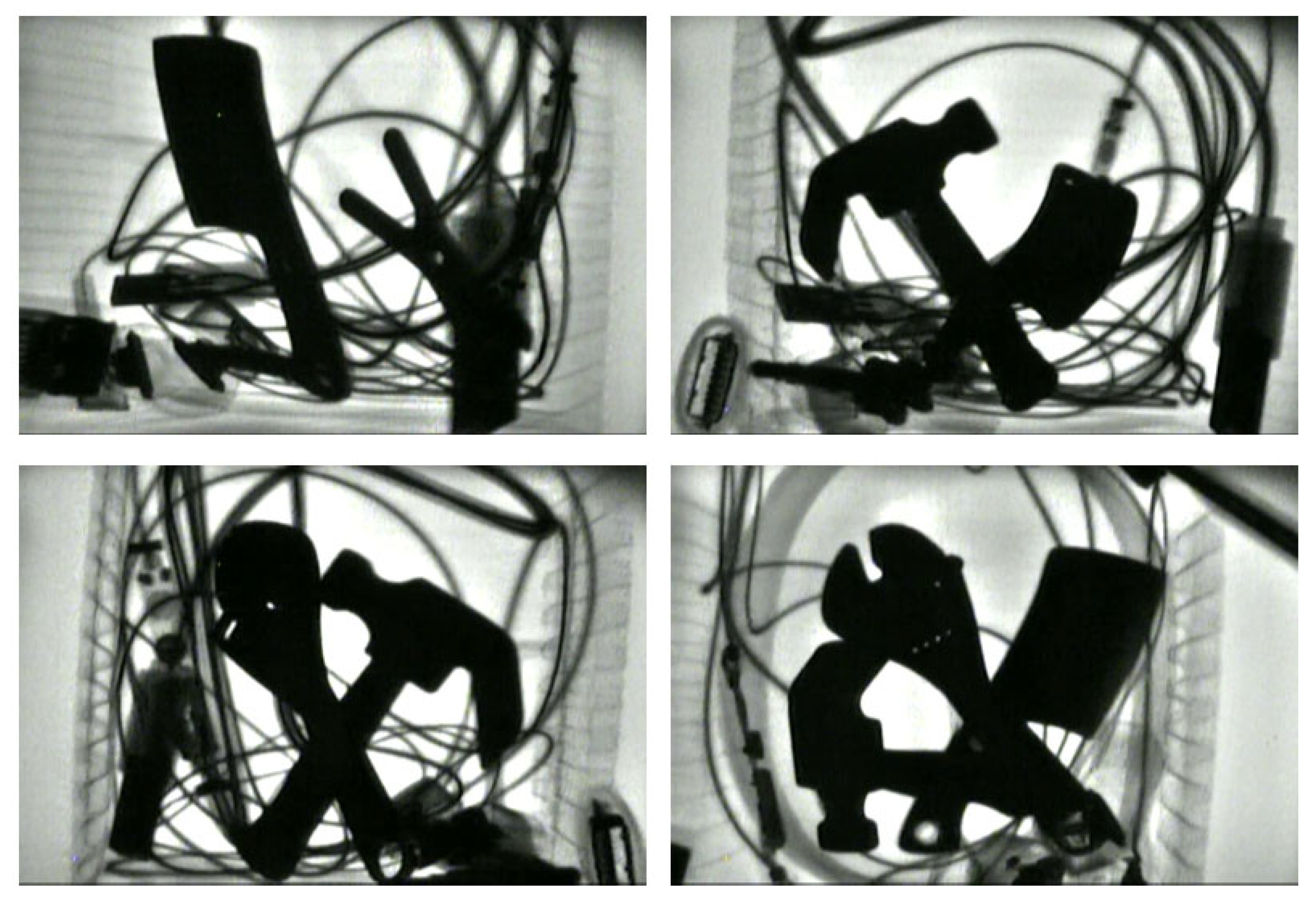

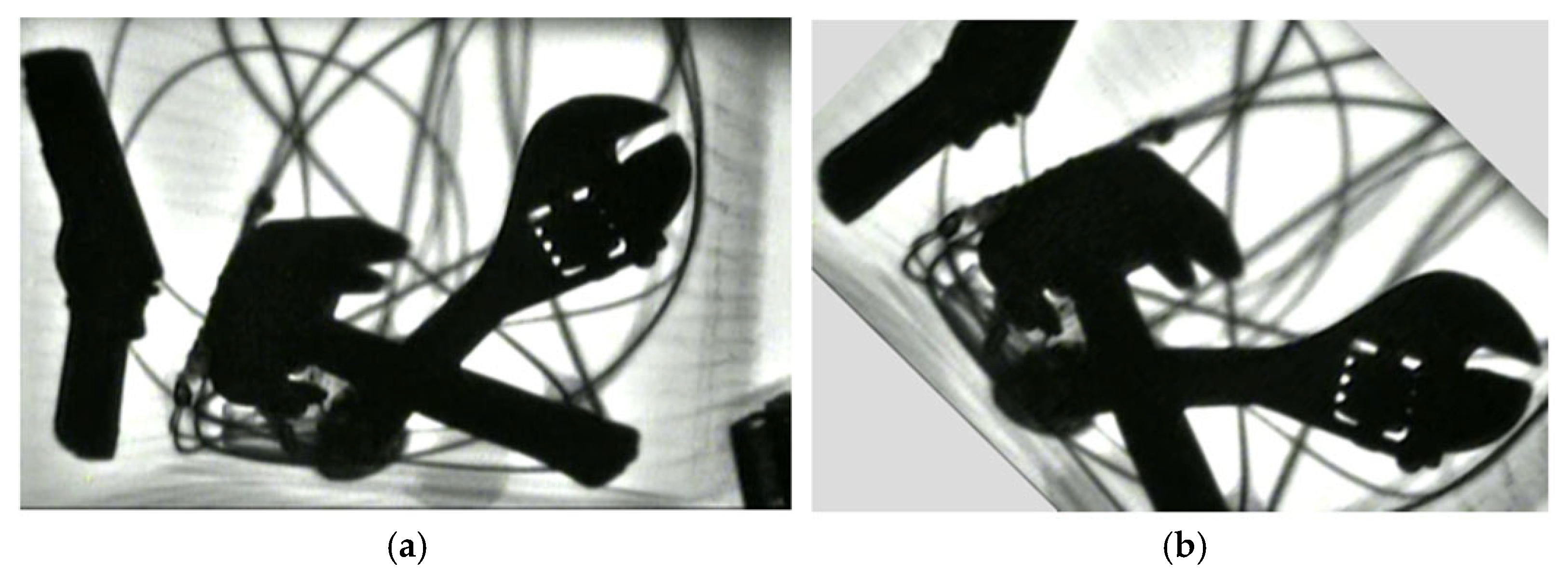
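The text does not state which blur the augmentation uses. Assuming a Gaussian blur, which is a common choice for imitating defocus or low-resolution detectors, a separable implementation on a grayscale image stored as nested lists can be sketched as follows. `sigma`, `radius` and the edge-replication border are hypothetical parameters. Blurring changes pixel values only, so the bounding-box labels can be reused unchanged.

```python
import math
from typing import List

Image = List[List[float]]


def gaussian_kernel_1d(sigma: float, radius: int) -> List[float]:
    """Normalized 1D Gaussian weights for offsets -radius..radius."""
    weights = [math.exp(-(d * d) / (2 * sigma * sigma)) for d in range(-radius, radius + 1)]
    s = sum(weights)
    return [wt / s for wt in weights]


def _blur_rows(img: Image, kernel: List[float]) -> Image:
    """Convolve every row with the 1D kernel."""
    r = len(kernel) // 2
    w = len(img[0])
    out = []
    for row in img:
        new_row = []
        for j in range(w):
            acc = 0.0
            for k, wt in enumerate(kernel):
                # Clamp to the border (edge replication) instead of zero padding.
                jj = min(max(j + k - r, 0), w - 1)
                acc += wt * row[jj]
            new_row.append(acc)
        out.append(new_row)
    return out


def gaussian_blur(img: Image, sigma: float = 1.0, radius: int = 2) -> Image:
    """Separable Gaussian blur: filter rows, then columns (via transposition)."""
    kernel = gaussian_kernel_1d(sigma, radius)
    tmp = _blur_rows(img, kernel)
    transposed = [list(col) for col in zip(*tmp)]
    blurred_t = _blur_rows(transposed, kernel)
    return [list(col) for col in zip(*blurred_t)]
```

Separating the 2D Gaussian into a row pass and a column pass gives the same result as a full 2D kernel, but it costs 2(2r + 1) multiplications per pixel instead of (2r + 1)².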

2.2.3. Affine Transformation of X-Ray Images of Prohibited Items

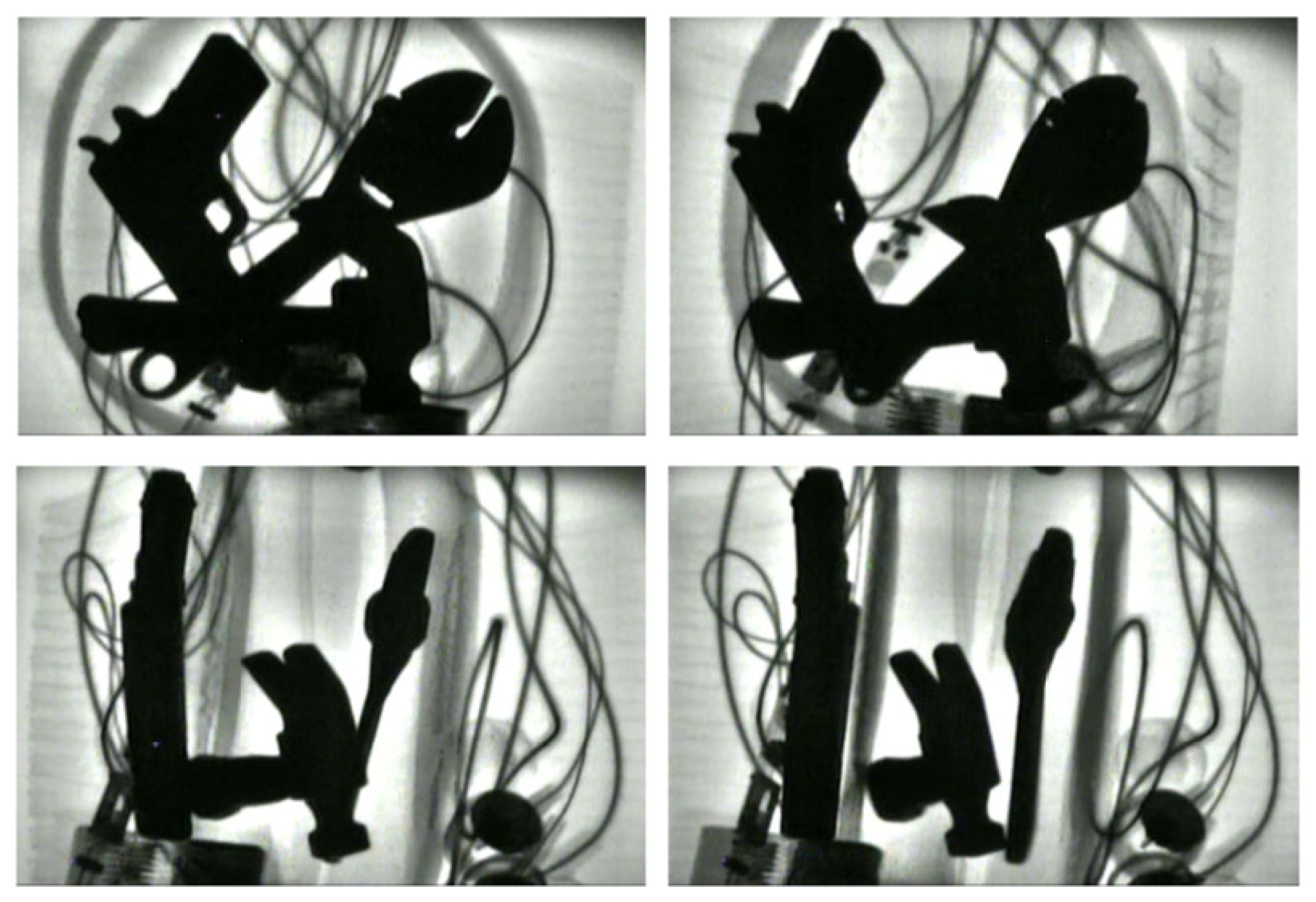
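After an affine transformation (rotation, scaling, translation or shear), each axis-aligned box has to be re-fitted. This sketch assumes a common approach rather than one taken from the paper: map the four box corners through a 2×3 affine matrix, take their axis-aligned bounding rectangle, and clip it to the image. The matrix layout and the helper names are hypothetical.

```python
import math
from typing import Optional, Tuple

Affine = Tuple[Tuple[float, float, float], Tuple[float, float, float]]  # 2x3 matrix
Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def rotate_scale_about(cx: float, cy: float, angle_deg: float, scale: float) -> Affine:
    """Matrix for x' = a(x-cx) - b(y-cy) + cx, y' = b(x-cx) + a(y-cy) + cy."""
    a = scale * math.cos(math.radians(angle_deg))
    b = scale * math.sin(math.radians(angle_deg))
    return ((a, -b, cx - a * cx + b * cy),
            (b, a, cy - b * cx - a * cy))


def apply_point(m: Affine, x: float, y: float) -> Tuple[float, float]:
    """Map one point through the 2x3 matrix."""
    return (m[0][0] * x + m[0][1] * y + m[0][2],
            m[1][0] * x + m[1][1] * y + m[1][2])


def transform_box(m: Affine, box: Box, width: float, height: float) -> Optional[Box]:
    """Enclosing axis-aligned box of the mapped corners, clipped to the image."""
    x0, y0, x1, y1 = box
    corners = [apply_point(m, x, y) for x, y in ((x0, y0), (x1, y0), (x0, y1), (x1, y1))]
    xs = [p[0] for p in corners]
    ys = [p[1] for p in corners]
    nx0, nx1 = max(min(xs), 0.0), min(max(xs), width)
    ny0, ny1 = max(min(ys), 0.0), min(max(ys), height)
    if nx1 <= nx0 or ny1 <= ny0:
        return None  # the box left the image entirely; drop the label
    return (nx0, ny0, nx1, ny1)
```

For rotations, the enclosing rectangle of the rotated corners is looser than the object itself. This is one reason to keep rotation angles modest when augmenting data for box-based detectors.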

2.2.4. Mosaic Transformation
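Mosaic augmentation, introduced with YOLOv4, stitches four training images into one canvas around a split point. Each resulting sample then shows objects at several positions, with more varied background. The minimal sketch below makes several simplifying assumptions: four grayscale images of at least `size`×`size` pixels, a fixed split point (cx, cy), and each quadrant filled with the top-left crop of its image. Real implementations also rescale and randomly crop each image, and they usually discard very thin clipped boxes. The helper names are hypothetical.

```python
from typing import List, Optional, Sequence, Tuple

Image = List[List[int]]
Box = Tuple[int, int, int, int, str]  # (x_min, y_min, x_max, y_max, label), max exclusive


def _clip(box: Box, rx0: int, ry0: int, rx1: int, ry1: int) -> Optional[Box]:
    """Clip a box to a rectangle; return None if nothing is left."""
    x0, y0, x1, y1, label = box
    x0, y0 = max(x0, rx0), max(y0, ry0)
    x1, y1 = min(x1, rx1), min(y1, ry1)
    return (x0, y0, x1, y1, label) if x1 > x0 and y1 > y0 else None


def mosaic(images: Sequence[Image], boxes: Sequence[List[Box]],
           size: int, cx: int, cy: int) -> Tuple[Image, List[Box]]:
    """Place four images in the quadrants around (cx, cy); shift and clip their boxes."""
    if len(images) != 4 or len(boxes) != 4:
        raise ValueError("mosaic needs exactly four images and four box lists")
    if not (0 < cx < size and 0 < cy < size):
        raise ValueError("split point must lie strictly inside the canvas")
    canvas = [[0] * size for _ in range(size)]
    # Quadrants (x0, y0, x1, y1): top-left, top-right, bottom-left, bottom-right.
    regions = [(0, 0, cx, cy), (cx, 0, size, cy), (0, cy, cx, size), (cx, cy, size, size)]
    out_boxes: List[Box] = []
    for img, bxs, (rx0, ry0, rx1, ry1) in zip(images, boxes, regions):
        for y in range(ry0, ry1):
            for x in range(rx0, rx1):
                canvas[y][x] = img[y - ry0][x - rx0]
        for b in bxs:
            # Move the box by the quadrant offset, then keep only the visible part.
            shifted = (b[0] + rx0, b[1] + ry0, b[2] + rx0, b[3] + ry0, b[4])
            clipped = _clip(shifted, rx0, ry0, rx1, ry1)
            if clipped is not None:
                out_boxes.append(clipped)
    return canvas, out_boxes
```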

3. The Proposed Model and Its Training

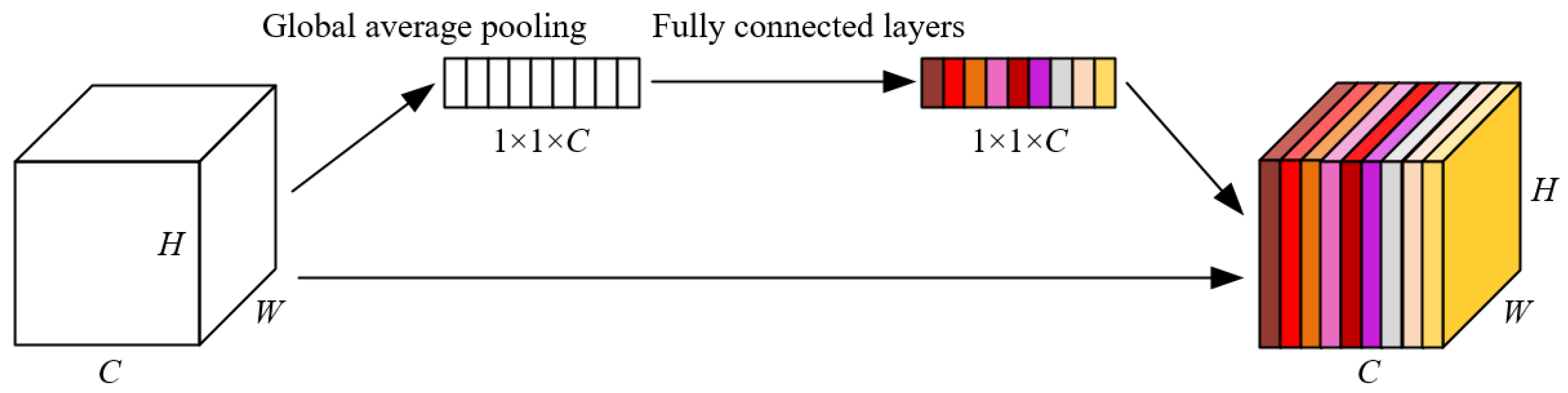

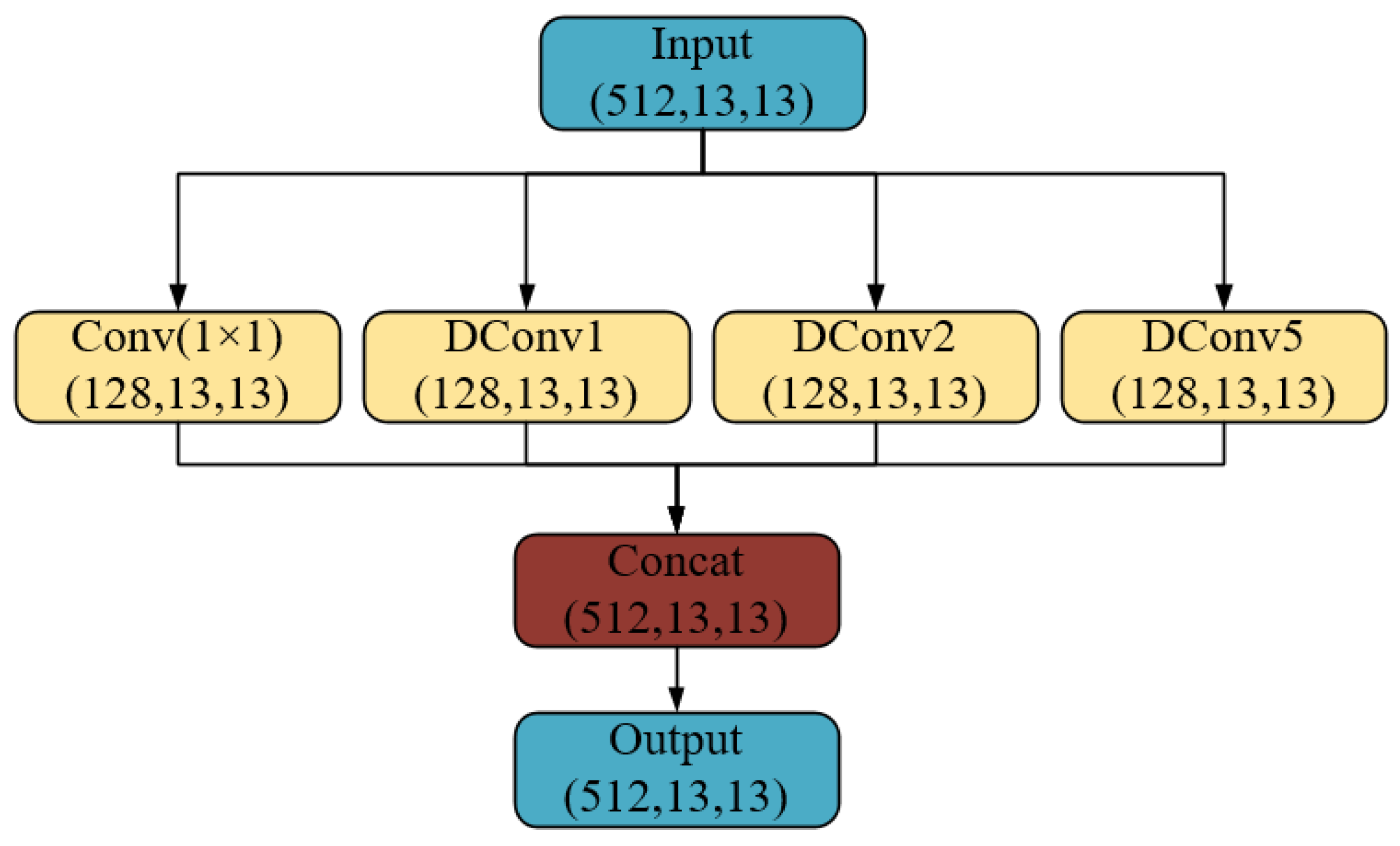

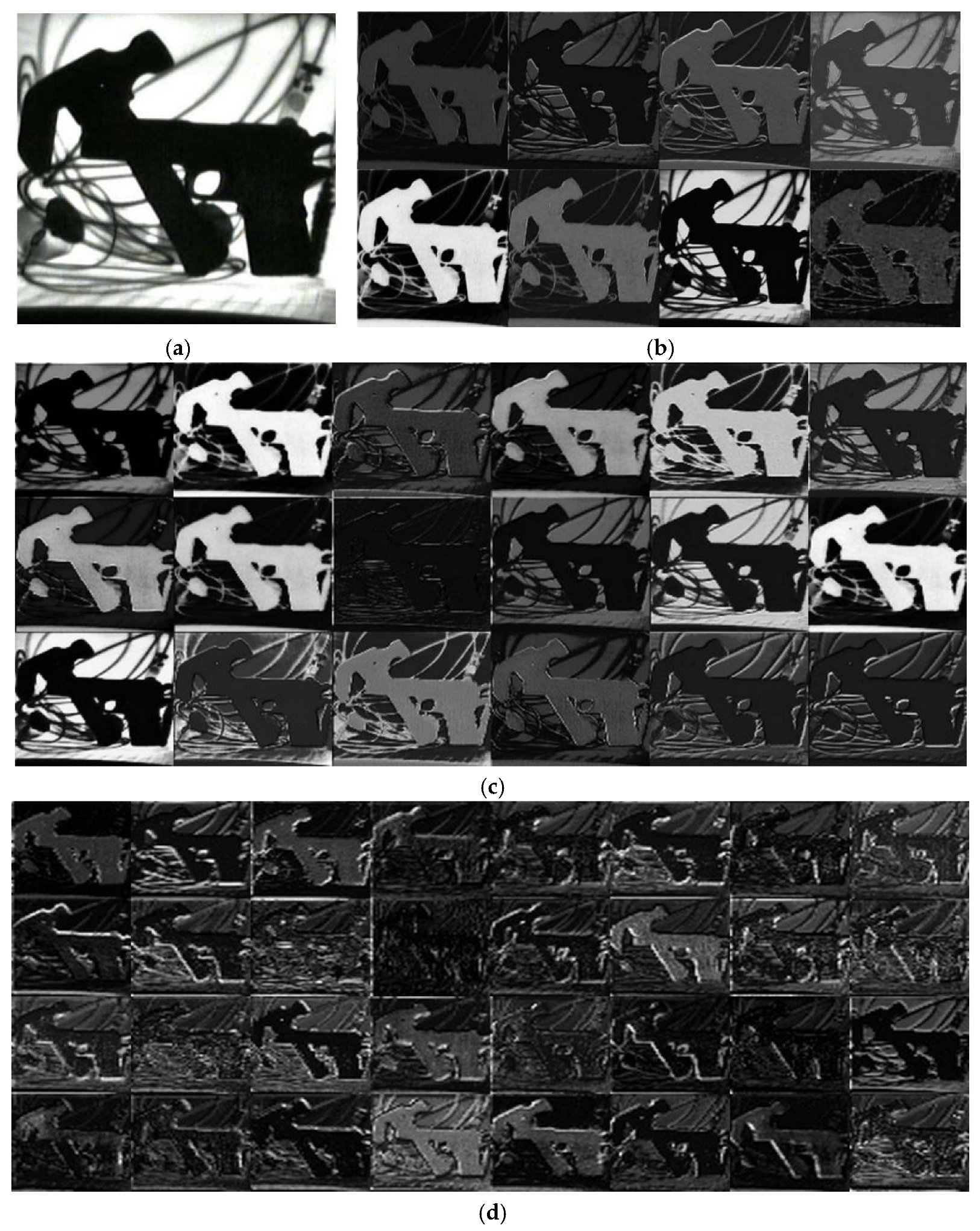

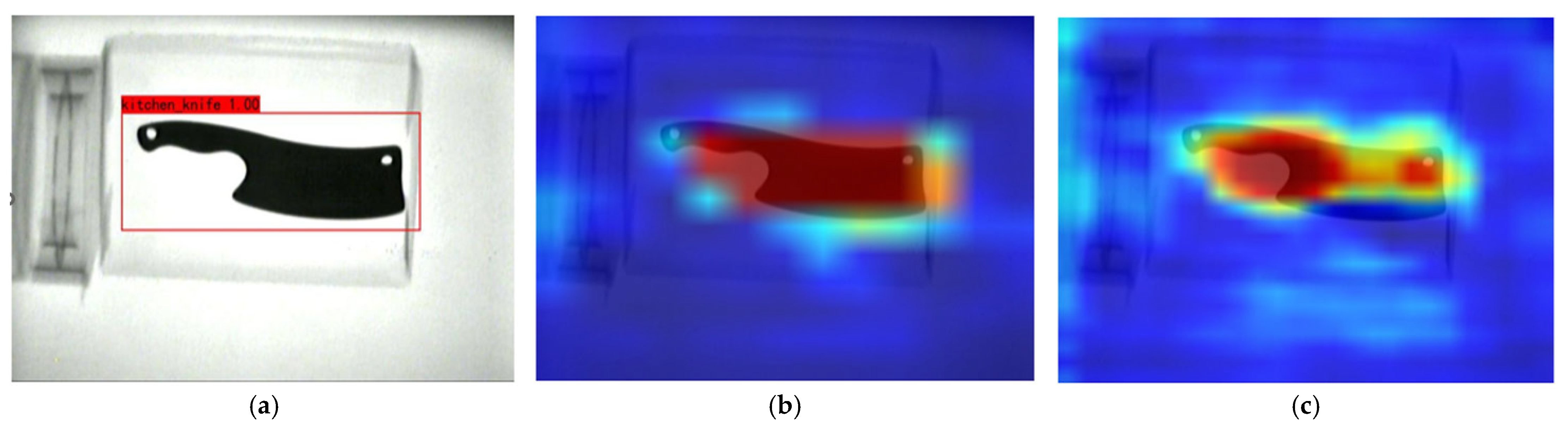

3.1. The Structure of the Proposed Model
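The contribution list states that the model uses an attention mechanism, but the variant is not specified in this text. Purely as an assumption, the sketch below shows squeeze-and-excitation (SE) style channel attention, one widely used lightweight form. It global-average-pools each channel and passes the channel descriptor through a two-layer bottleneck with ReLU and sigmoid. It then rescales each channel by the resulting weight. The weights used with it are fixed illustrative numbers, not trained parameters, and biases are omitted.

```python
import math
from typing import List

FeatureMap = List[List[List[float]]]  # indexed [channel][row][col]


def _sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def se_attention(x: FeatureMap, w1: List[List[float]], w2: List[List[float]]) -> FeatureMap:
    """SE-style channel attention without biases.

    w1: r x C matrix (squeeze C channel means into r hidden units).
    w2: C x r matrix (expand back to one gate per channel).
    """
    # Squeeze: global average pooling per channel.
    s = [sum(map(sum, ch)) / (len(ch) * len(ch[0])) for ch in x]
    # Excitation: FC -> ReLU -> FC -> sigmoid, giving one gate in (0, 1) per channel.
    hidden = [max(0.0, sum(wi * si for wi, si in zip(row, s))) for row in w1]
    gates = [_sigmoid(sum(wi * hi for wi, hi in zip(row, hidden))) for row in w2]
    # Scale: multiply every activation of channel c by gates[c].
    return [[[v * g for v in r] for r in ch] for ch, g in zip(x, gates)]
```

The extra cost is two small matrix products per image, independent of the spatial resolution. This is why channel attention of this kind is often paired with lightweight detectors.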

3.2. Training of the Proposed Model

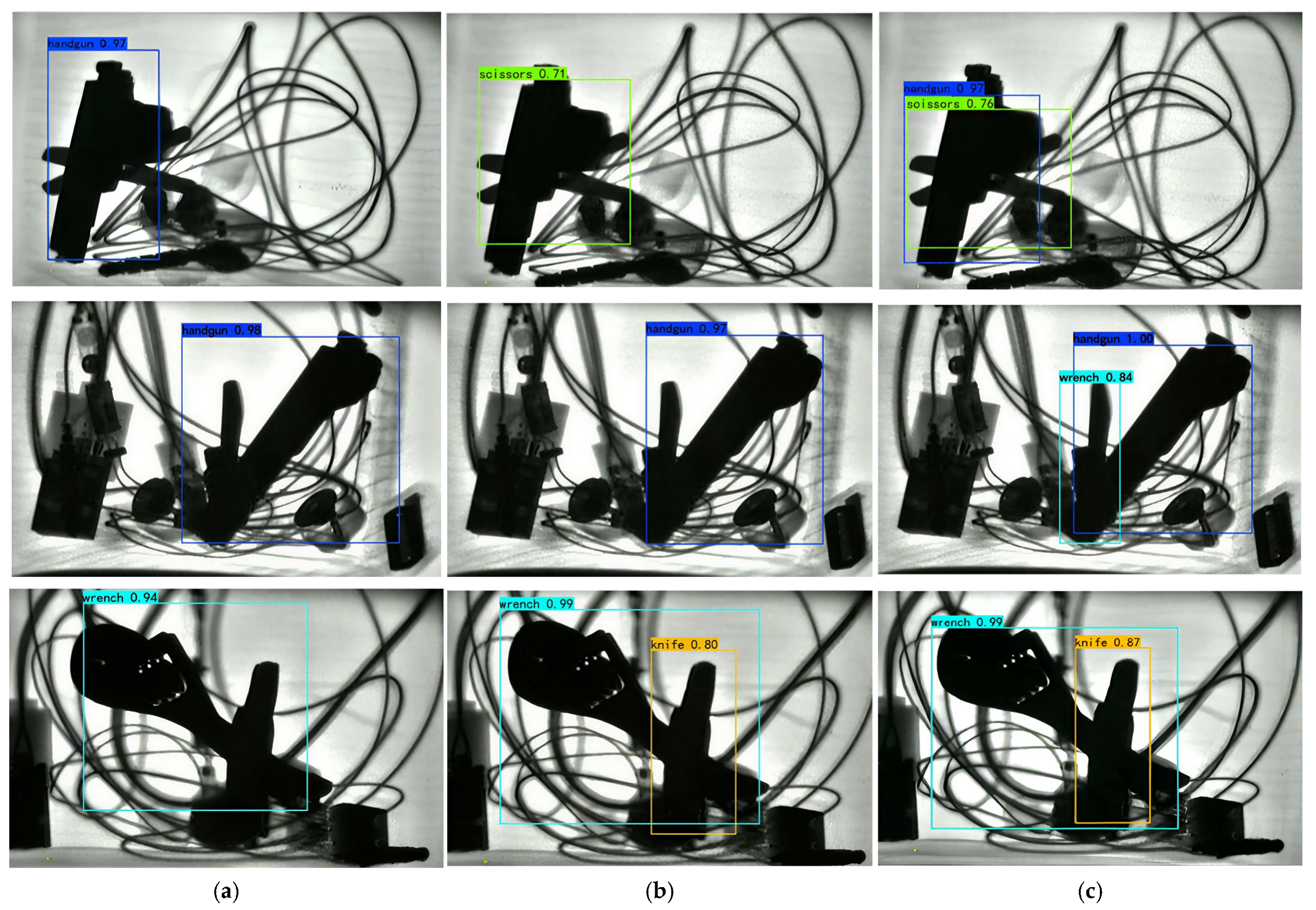

4. Results and Analysis

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Sample Set | Hammers | Pistols | Kitchen Knives | Small Knives | Lighters | Scissors | Screwdrivers | Wrenches | Total |
|---|---|---|---|---|---|---|---|---|---|
| Training Set | 722 | 801 | 1217 | 580 | 628 | 571 | 647 | 725 | 5891 |
| Testing Set | 324 | 385 | 500 | 240 | 271 | 233 | 252 | 317 | 2522 |
| Total number | 1046 | 1186 | 1717 | 820 | 899 | 804 | 899 | 1042 | 8413 |
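The per-class counts in the sample-set table are internally consistent. Each class total equals its training count plus its testing count, and the totals sum to 8413. The short script below only re-derives figures from that table. It shows that every class is split at roughly 70/30 between training and testing (between about 67% and 72% training per class), so the test set roughly preserves the class proportions of the full sample set.

```python
# Image counts per class, copied from the sample-set table.
TRAIN = {"Hammers": 722, "Pistols": 801, "Kitchen Knives": 1217, "Small Knives": 580,
         "Lighters": 628, "Scissors": 571, "Screwdrivers": 647, "Wrenches": 725}
TEST = {"Hammers": 324, "Pistols": 385, "Kitchen Knives": 500, "Small Knives": 240,
        "Lighters": 271, "Scissors": 233, "Screwdrivers": 252, "Wrenches": 317}


def train_fractions(train: dict, test: dict) -> dict:
    """Fraction of each class's images that went to the training set."""
    return {c: train[c] / (train[c] + test[c]) for c in train}


fractions = train_fractions(TRAIN, TEST)
overall = sum(TRAIN.values()) / (sum(TRAIN.values()) + sum(TEST.values()))
for name, frac in fractions.items():
    print(f"{name:15s} {frac:.3f}")
print(f"{'Overall':15s} {overall:.3f}")
```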

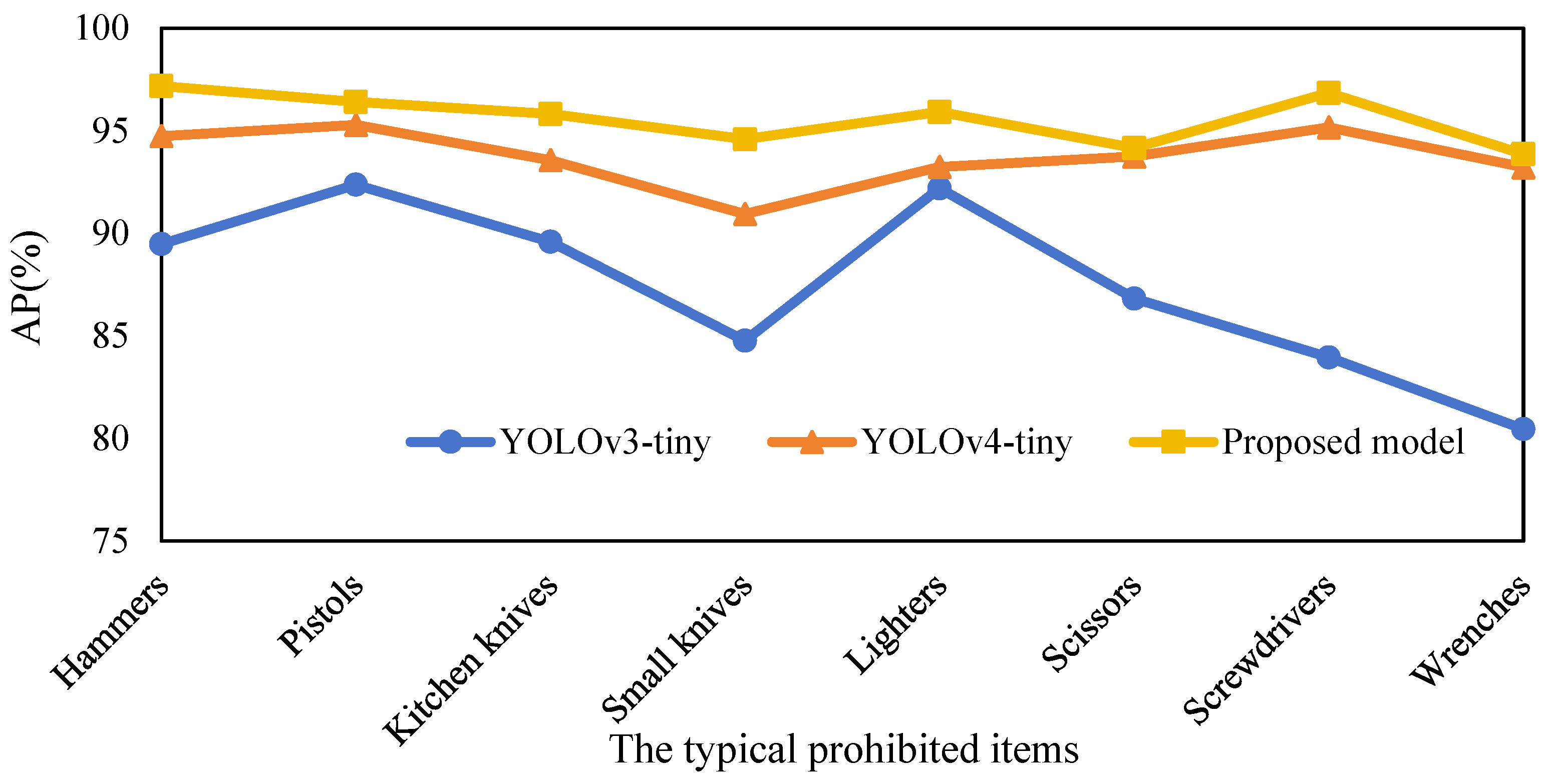

| Algorithms | Hammers | Pistols | Kitchen Knives | Small Knives | Lighters | Scissors | Screwdrivers | Wrenches | mAP |
|---|---|---|---|---|---|---|---|---|---|
| YOLOv3-tiny | 89.47 | 92.37 | 89.58 | 84.76 | 92.19 | 86.82 | 83.95 | 80.46 | 87.45 |
| YOLOv4-tiny | 94.73 | 95.28 | 93.56 | 90.94 | 93.23 | 93.74 | 95.16 | 93.21 | 93.73 |
| Proposed model | 97.17 | 96.4 | 95.81 | 94.58 | 95.91 | 94.15 | 96.84 | 93.86 | 95.59 |
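In the comparison table above, each mAP value equals the arithmetic mean of the eight per-class AP values to within rounding, that is, mAP = (1/N)·Σ AP_c with N = 8. The per-class APs themselves come from precision-recall curves at an IoU threshold that is not stated in this text. The sketch below therefore does two things under stated assumptions. First, it shows the all-point interpolated AP used by PASCAL VOC-style evaluation, which is an assumed protocol, not necessarily the authors'. Second, it re-derives the reported mAP from the per-class values.

```python
from typing import Sequence, Tuple


def average_precision(dets: Sequence[Tuple[float, bool]], num_gt: int) -> float:
    """All-point interpolated AP for one class.

    dets: (confidence, is_true_positive) pairs; is_true_positive is assumed to
    come from an earlier IoU-based matching against the ground-truth boxes.
    """
    if num_gt <= 0:
        raise ValueError("num_gt must be positive")
    ranked = sorted(dets, key=lambda d: d[0], reverse=True)
    tp = fp = 0
    recalls, precisions = [], []
    for _, is_tp in ranked:
        if is_tp:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_gt)
        precisions.append(tp / (tp + fp))
    r = [0.0] + recalls + [1.0]
    p = [0.0] + precisions + [0.0]
    # Precision envelope: p[i] becomes the best precision reached at recall >= r[i].
    for i in range(len(p) - 2, -1, -1):
        p[i] = max(p[i], p[i + 1])
    # Area under the stepwise precision-recall curve.
    return sum((r[i + 1] - r[i]) * p[i + 1] for i in range(len(r) - 1))


# Per-class AP values (%) from the table, columns Hammers ... Wrenches.
PER_CLASS_AP = {
    "YOLOv3-tiny": [89.47, 92.37, 89.58, 84.76, 92.19, 86.82, 83.95, 80.46],
    "YOLOv4-tiny": [94.73, 95.28, 93.56, 90.94, 93.23, 93.74, 95.16, 93.21],
    "Proposed model": [97.17, 96.4, 95.81, 94.58, 95.91, 94.15, 96.84, 93.86],
}
REPORTED_MAP = {"YOLOv3-tiny": 87.45, "YOLOv4-tiny": 93.73, "Proposed model": 95.59}

for name, aps in PER_CLASS_AP.items():
    print(f"{name}: mean of class APs = {sum(aps) / len(aps):.2f}, reported {REPORTED_MAP[name]}")
```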

| Algorithms | Hammers | Pistols | Kitchen Knives | Small Knives | Lighters | Scissors | Screwdrivers | Wrenches | mAP |
|---|---|---|---|---|---|---|---|---|---|
| YOLOv4-tiny | 94.73 | 95.28 | 93.56 | 90.94 | 93.23 | 93.74 | 95.16 | 93.21 | 93.73 |
| YOLOv4-tiny + A | 96.22 | 96.28 | 95.27 | 91.77 | 95.49 | 91.76 | 94.68 | 92.52 | 94.25 |
| YOLOv4-tiny + B | 97.19 | 96.7 | 95.48 | 93.36 | 95.42 | 95.5 | 94.9 | 93.51 | 95.26 |
| YOLOv4-tiny + C | 96.56 | 96.77 | 94.57 | 93.59 | 95.89 | 92.25 | 95.48 | 93.8 | 94.86 |
| Proposed model | 97.17 | 96.4 | 95.81 | 94.58 | 95.91 | 94.15 | 96.84 | 93.86 | 95.59 |

| Algorithms | mAP (%), No Mosaic | mAP (%), Mosaic | FPS | Storage Space (MB) |
|---|---|---|---|---|
| YOLOv4-tiny | 91.83 | 93.73 | 168 | 23 |
| YOLOv4-tiny + A | 93.08 | 94.25 | 142 | 19 |
| YOLOv4-tiny + B | 93.88 | 95.26 | 131 | 30 |
| YOLOv4-tiny + C | 93.04 | 94.86 | 152 | 23 |
| Proposed Model | 94.43 | 95.59 | 122 | 27 |
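Two quantities implicit in the speed and size comparison can be re-derived directly. One is the average per-image inference time, 1000 / FPS milliseconds. The other is the mAP gain from Mosaic augmentation for each variant. The script below only rearranges the reported numbers. The hardware and batch size behind the FPS figures are not stated in this text, so the latencies are comparable only to one another.

```python
# (name, mAP without Mosaic, mAP with Mosaic, FPS, storage in MB), copied from the table.
ROWS = [
    ("YOLOv4-tiny", 91.83, 93.73, 168, 23),
    ("YOLOv4-tiny + A", 93.08, 94.25, 142, 19),
    ("YOLOv4-tiny + B", 93.88, 95.26, 131, 30),
    ("YOLOv4-tiny + C", 93.04, 94.86, 152, 23),
    ("Proposed Model", 94.43, 95.59, 122, 27),
]


def latency_ms(fps: float) -> float:
    """Average time per image implied by a throughput in frames per second."""
    return 1000.0 / fps


summary = {
    name: {"mosaic_gain": mosaic - plain, "latency_ms": latency_ms(fps), "size_mb": mb}
    for name, plain, mosaic, fps, mb in ROWS
}

for name, s in summary.items():
    print(f"{name:16s} Mosaic gain {s['mosaic_gain']:+.2f}  "
          f"latency {s['latency_ms']:.2f} ms  {s['size_mb']} MB")
```

On these numbers, Mosaic improves every variant by between about 1.2 and 1.9 points of mAP. The proposed model's 95.59% comes at roughly 8.2 ms per image, compared with about 6.0 ms for the YOLOv4-tiny baseline.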

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Liang, T.; Wen, H.; Huang, B.; Zhang, N.; Zhang, Y. A Study on Detection of Prohibited Items Based on X-Ray Images with Lightweight Model. Sensors 2025, 25, 5462. https://doi.org/10.3390/s25175462

Liang T, Wen H, Huang B, Zhang N, Zhang Y. A Study on Detection of Prohibited Items Based on X-Ray Images with Lightweight Model. Sensors. 2025; 25(17):5462. https://doi.org/10.3390/s25175462

Chicago/Turabian Style: Liang, Tianfen, Hao Wen, Binyu Huang, Nanfeng Zhang, and Yanxi Zhang. 2025. "A Study on Detection of Prohibited Items Based on X-Ray Images with Lightweight Model" Sensors 25, no. 17: 5462. https://doi.org/10.3390/s25175462

APA Style: Liang, T., Wen, H., Huang, B., Zhang, N., & Zhang, Y. (2025). A Study on Detection of Prohibited Items Based on X-Ray Images with Lightweight Model. Sensors, 25(17), 5462. https://doi.org/10.3390/s25175462