A Rapid Segmentation Method Based on Few-Shot Learning: A Case Study on Roadways

Abstract

1. Introduction

2. Related Works

2.1. Road Segmentation Method

2.2. Few-Shot Semantic Segmentation

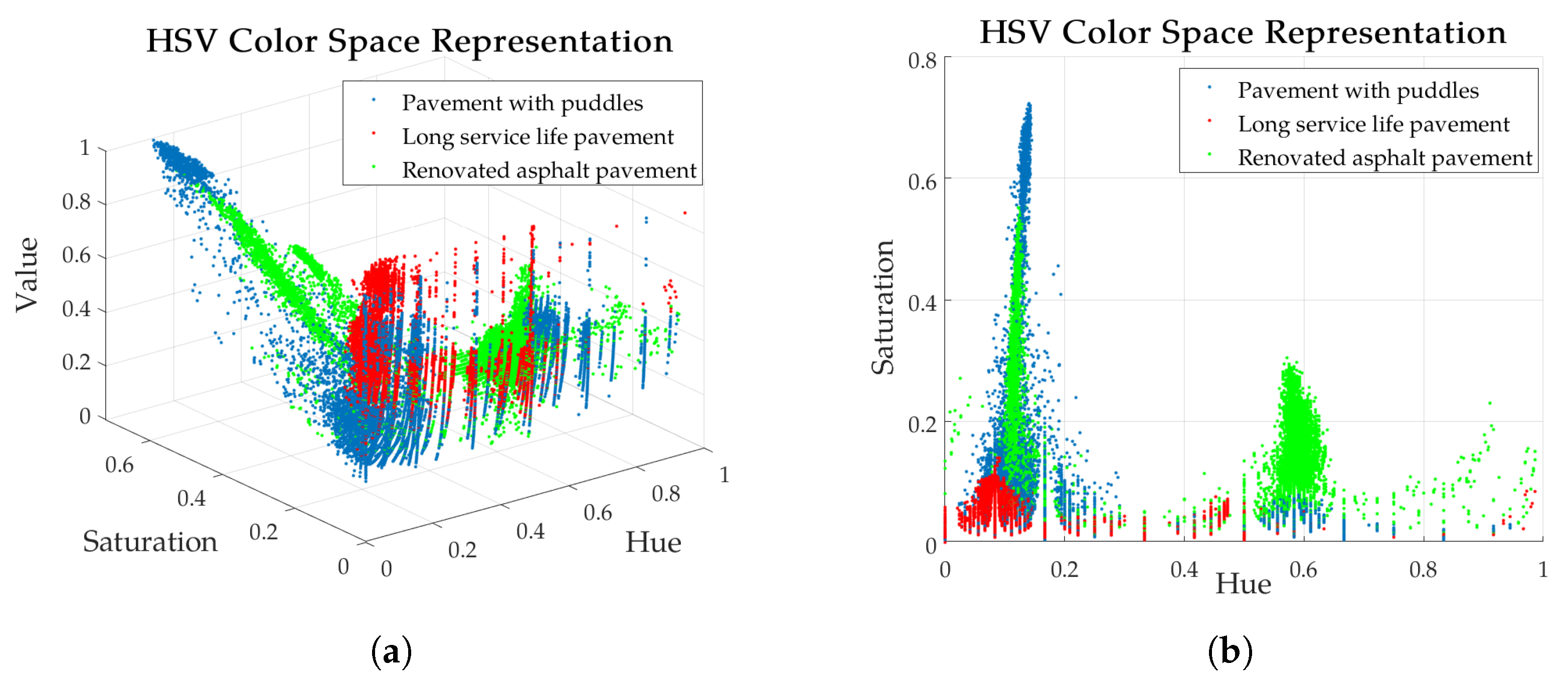

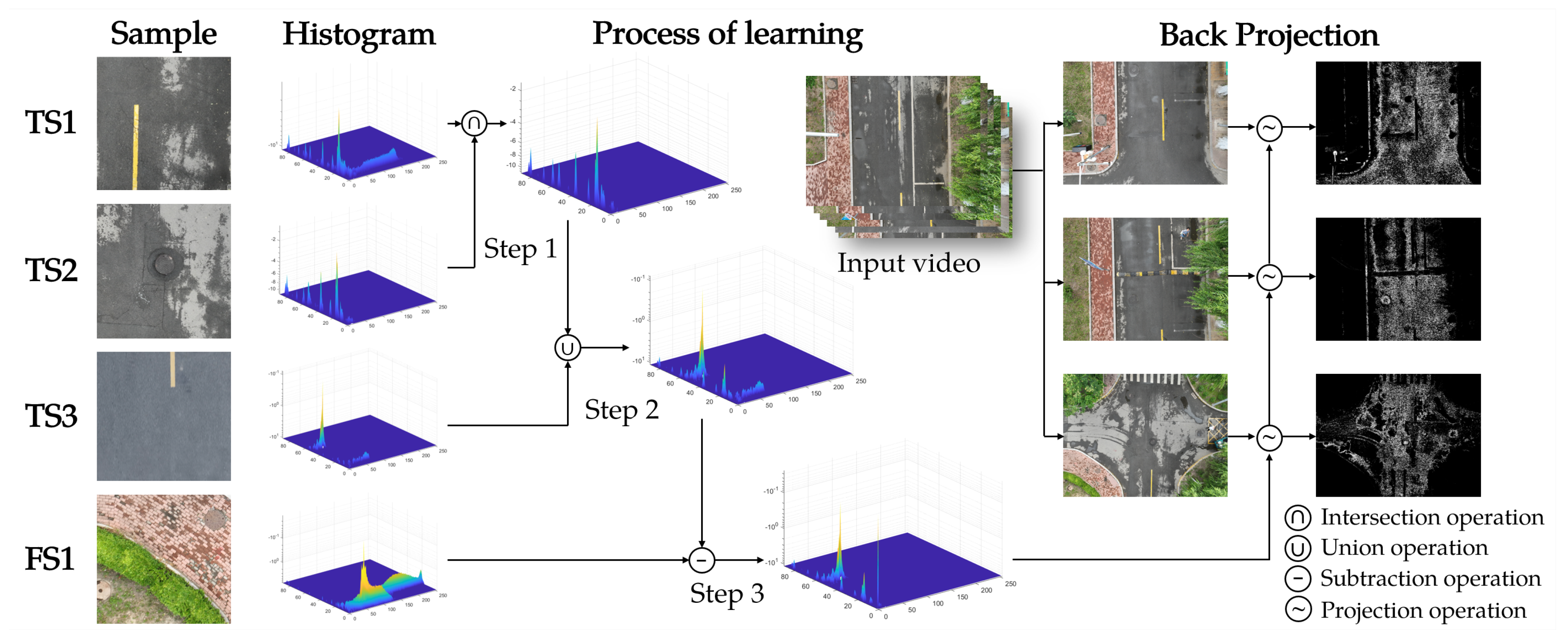

3. Road Segmentation Based on Improved Back-Projection Algorithm

3.1. Histogram Learning Mechanism

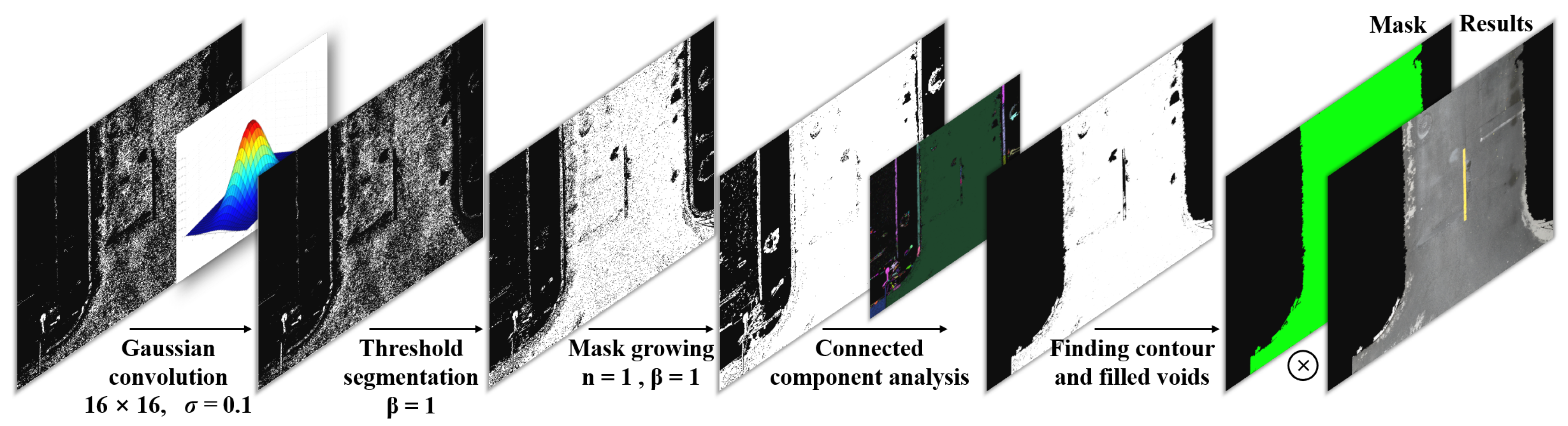

3.2. Mask Generation and Growth

- In the above equation, W is a sliding window of fixed size placed at the given window coordinates, and the mean and variance are those of the probabilities in the intersection of W and the current map. The map is grown in three steps. First, the mean and variance of the probabilities of the retained pixels within the window are calculated. Then, pixels not yet in the map whose probability lies within a set multiple of the variance from the mean are retained, and the corresponding map entries are filled. Finally, the whole process is repeated N times to expand the map.
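The growth step described above can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the authors' implementation: the function name `grow_mask`, the parameters `win`, `stride`, `k`, and `n_iter`, their default values, and the use of overlapping windows are all hypothetical choices made here.

```python
import numpy as np

def grow_mask(prob, mask, win=4, stride=2, k=2.0, n_iter=3):
    """Grow a boolean mask over a probability map, one window at a time.

    prob   : 2-D float array of per-pixel road probabilities
    mask   : 2-D bool array of currently retained pixels
    win    : window side length (assumed value)
    stride : step between windows; stride < win makes windows overlap (assumption)
    k      : multiple of the variance used as the acceptance band (assumed value)
    n_iter : number of growth passes, N in the text
    """
    prob = np.asarray(prob, dtype=float)
    mask = np.asarray(mask, dtype=bool).copy()
    h, w = prob.shape
    for _ in range(n_iter):
        grown = mask.copy()
        for y in range(0, h, stride):
            for x in range(0, w, stride):
                p = prob[y:y + win, x:x + win]
                m = mask[y:y + win, x:x + win]
                if not m.any():
                    continue  # no retained pixels here, so no statistics
                mean = p[m].mean()
                var = p[m].var()
                # Accept pixels outside the mask whose probability is
                # within k times the variance of the window mean.
                accept = ~m & (np.abs(p - mean) <= k * var)
                grown[y:y + win, x:x + win] |= accept
        mask = grown
    return mask

# Toy example: six "road" columns at 0.75, two background columns at 0.25.
prob = np.full((8, 8), 0.75)
prob[:, 6:] = 0.25
seed = np.zeros((8, 8), dtype=bool)
seed[0, 0] = True
grown = grow_mask(prob, seed)
```

Statistics are taken from the mask as it stood at the start of each pass, so the result does not depend on the order in which windows are visited. Because the acceptance band scales with the variance, a seed whose pixels all share one probability has zero variance and only admits pixels with exactly that value.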

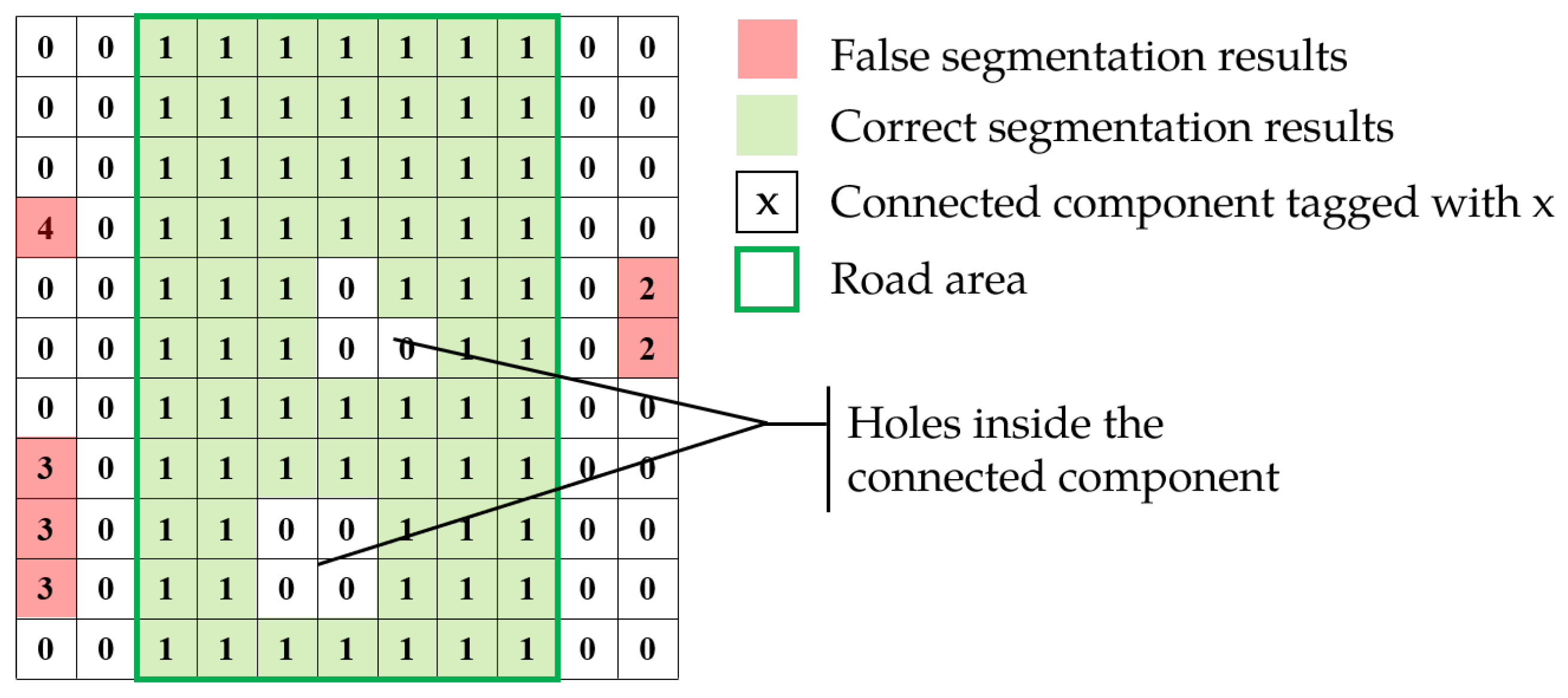

3.3. Connected Component Reservation and Filling

3.4. Parameter Optimization Methods

- Note that in Equation (8), the first term represents the recognition accuracy of the model: the smaller the probability or area of incorrect segmentation, the larger its value. The second term represents the recall of the probability and measures how completely the model recognizes pavement areas.
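A two-term score of this kind might be computed as in the sketch below. Equation (8) is not reproduced in this section, so the exact form here is an assumption: the function name `objective`, the probability-weighted definitions of both terms, and their combination by a plain sum are hypothetical and only mirror the verbal description above.

```python
import numpy as np

def objective(prob, pred_mask, true_mask, eps=1e-12):
    """Score a segmentation with an accuracy term plus a recall term.

    prob      : 2-D float array of per-pixel road probabilities
    pred_mask : 2-D bool array, the segmentation being scored
    true_mask : 2-D bool array, the labeled pavement region
    eps       : small constant that avoids division by zero (assumption)
    """
    prob = np.asarray(prob, dtype=float)
    pred = np.asarray(pred_mask, dtype=bool)
    true = np.asarray(true_mask, dtype=bool)

    # Accuracy term (assumed form): large when little probability mass
    # is assigned to pixels that were segmented incorrectly.
    wrong = prob[pred & ~true].sum()
    kept = prob[pred].sum()
    accuracy_term = 1.0 - wrong / (kept + eps)

    # Recall term (assumed form): share of the pavement probability
    # mass that the segmentation recovers.
    recall_term = prob[pred & true].sum() / (prob[true].sum() + eps)

    # Combining the terms by a plain sum is an assumption.
    return accuracy_term + recall_term

# Toy example: the pavement is the left half of a 4x4 image.
prob = np.ones((4, 4))
truth = np.zeros((4, 4), dtype=bool)
truth[:, :2] = True
perfect = objective(prob, truth, truth)
oversegmented = objective(prob, np.ones((4, 4), dtype=bool), truth)
```

In this toy case a perfect segmentation scores close to 2, while marking the whole image as road keeps full recall but halves the accuracy term, giving a score close to 1.5.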

4. Experimental Results

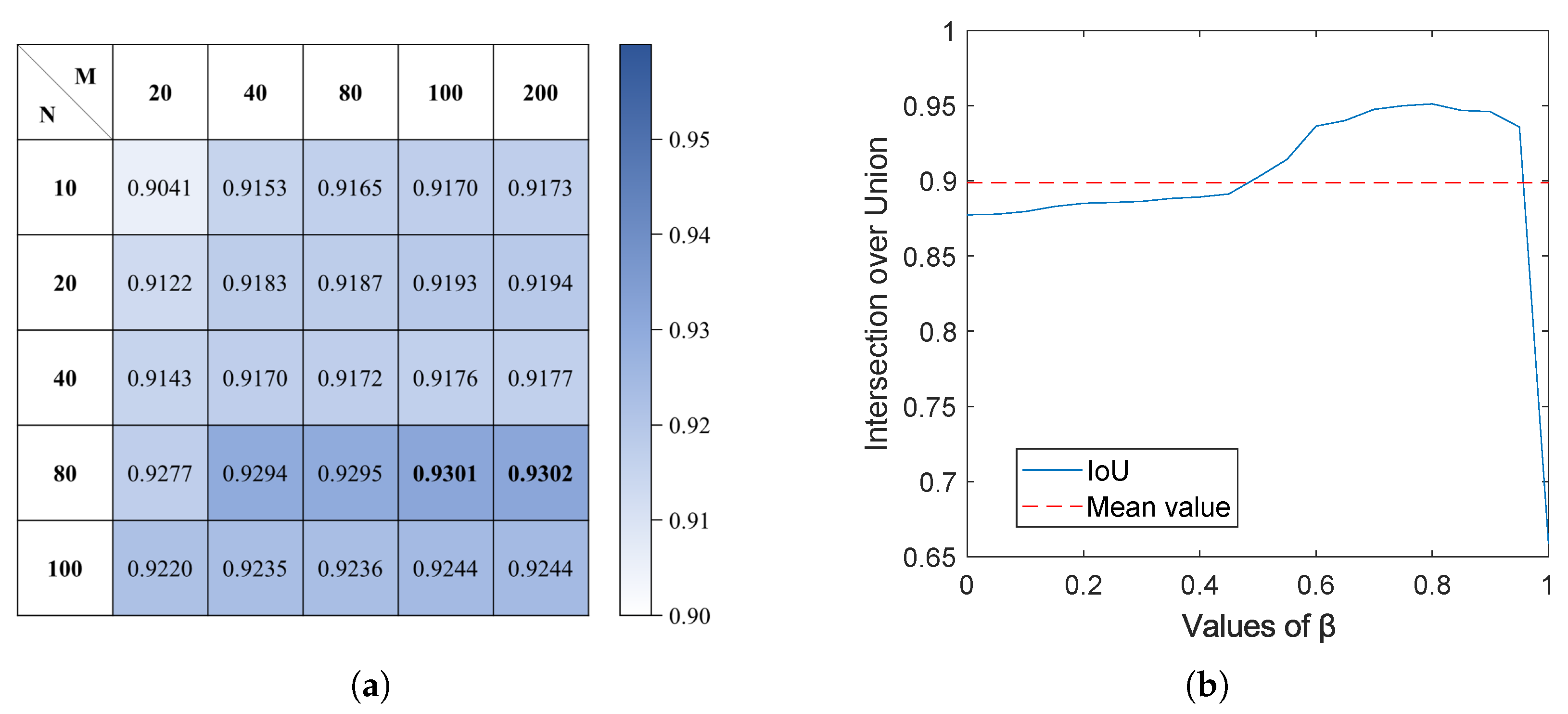

4.1. Experimental Parameter Optimization

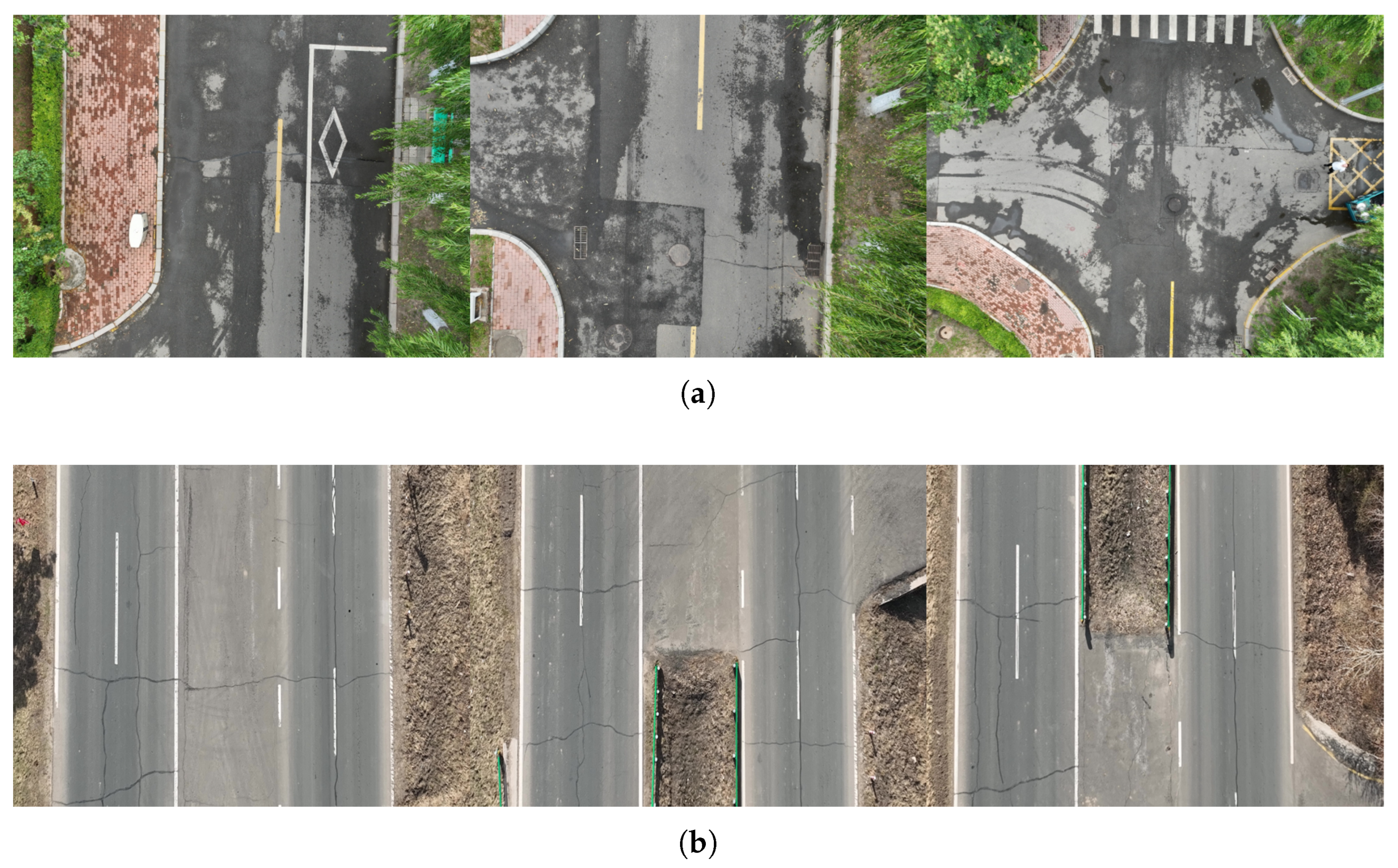

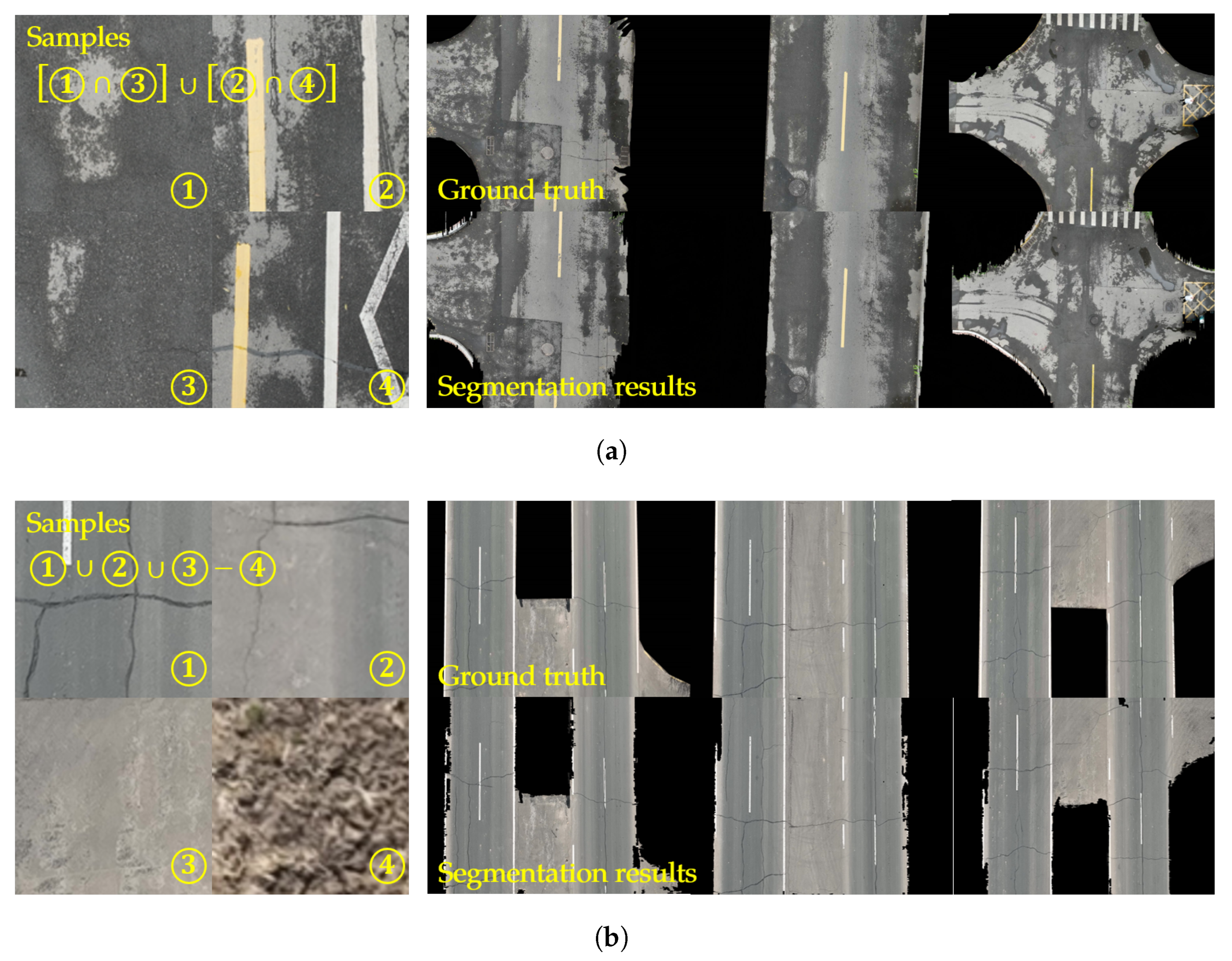

4.2. Algorithm Testing Under Complex Environment

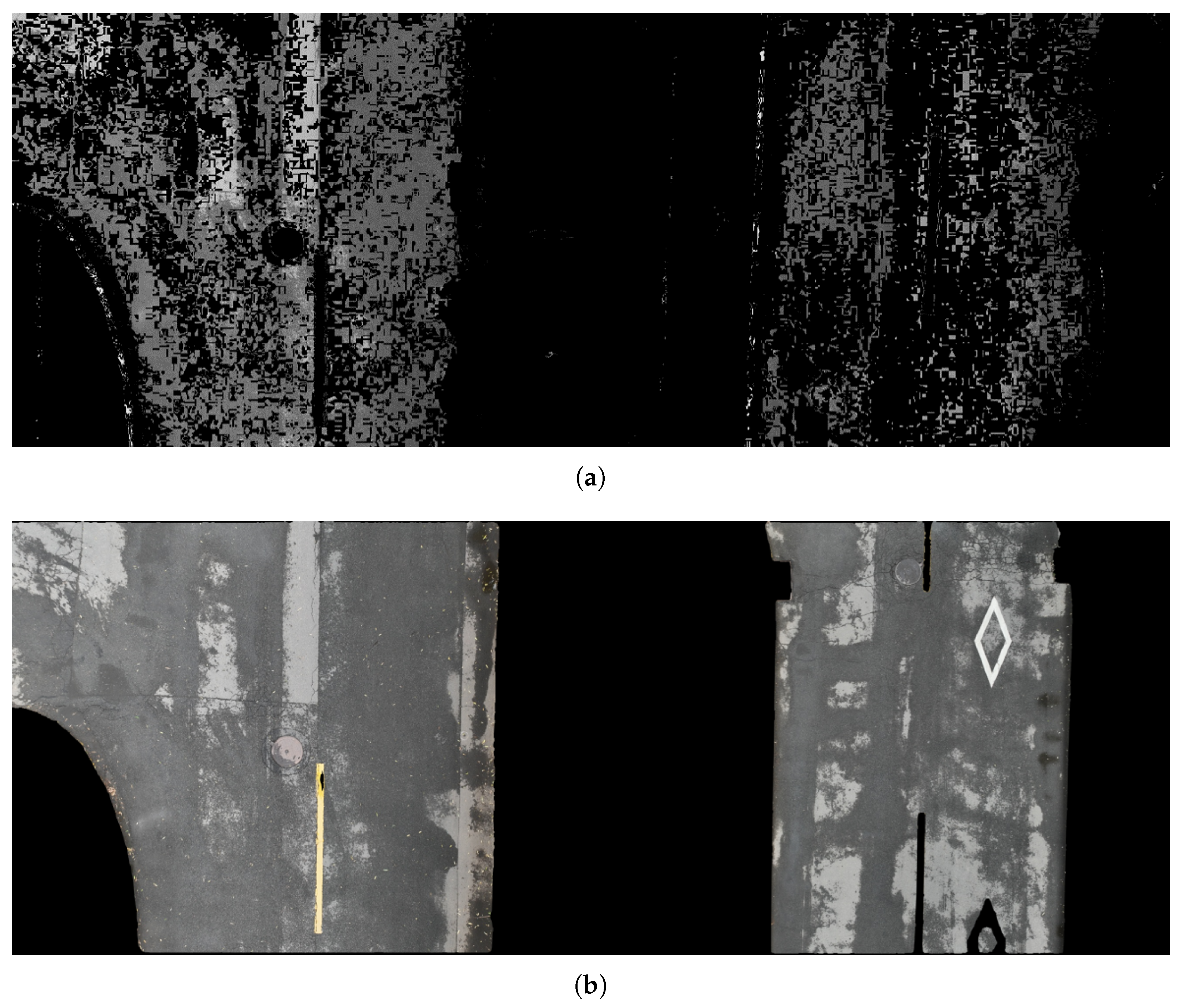

4.3. Comparative Experiments

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Scenes | Minimum IoU | Minimum Precision | Average IoU | Average Precision |
|---|---|---|---|---|
| Roads with puddles | 0.8437 | 0.8633 | 0.9273 | 0.9489 |
| Roads with similar color | 0.9277 | 0.93022 | 0.9473 | 0.9489 |

| Method | Average IoU | Average Precision | FLOPs | Parameters (M) |
|---|---|---|---|---|
| Proposed algorithm | 0.9273 | 0.9489 | 146 M | 0.008 |
| Traditional algorithm | 0.2139 | 0.2143 | - | - |
| SAM | 0.9685 | 0.9809 | 746.4 G | 93.7 |
| SAM2 | 0.9692 | 0.9813 | 533.9 G | 80.8 |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Cai, H.; Chen, J.; Yin, Y.; Yu, J.; Dong, Z. A Rapid Segmentation Method Based on Few-Shot Learning: A Case Study on Roadways. Sensors 2025, 25, 5290. https://doi.org/10.3390/s25175290
