Efficient Caching Strategies in NDN-Enabled IoT Networks: Strategies, Constraints, and Future Directions

Abstract

1. Introduction

1.1. Related Work

1.2. Motivation and Contribution

- This article analyzes and summarizes NDN in conjunction with the IoT, focusing on content delivery from providers to end users through cache-enabled networks.

- We evaluate the various caching strategies used in NDN-based IoT networks, drawing on current and relevant surveys.

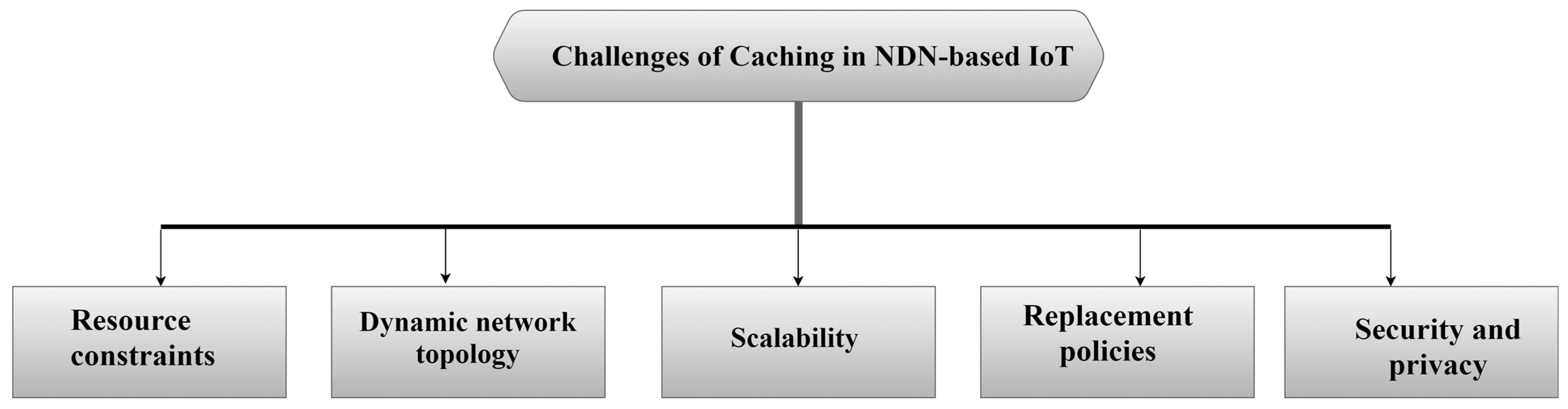

- This survey examines the challenges facing NDN-based IoT networks and identifies how caching strategies address them.

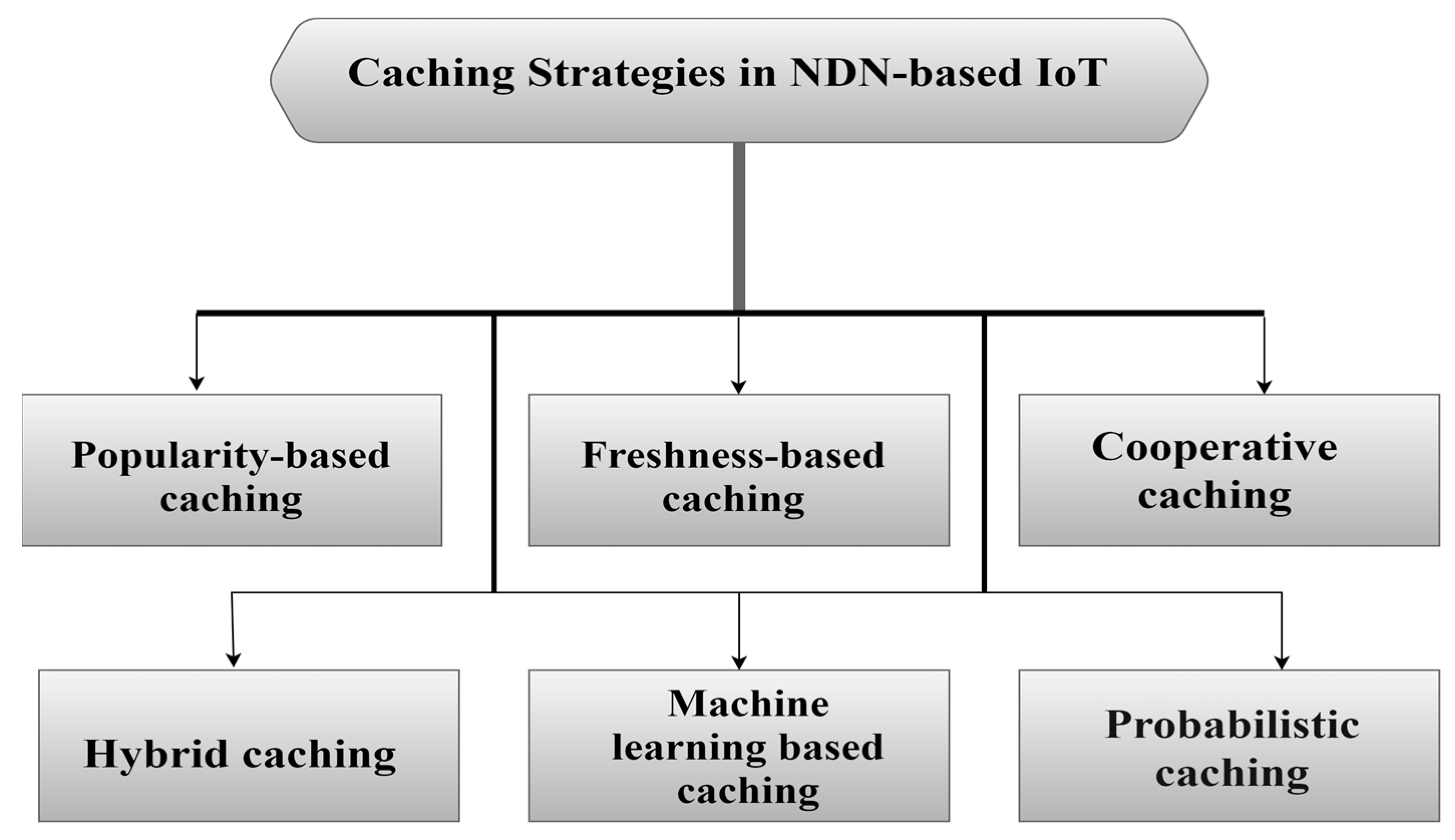

- This paper summarizes NDN-based IoT caching strategies, classified into six categories: popularity-based, freshness-aware, collaborative, hybrid, probabilistic, and machine learning-based.

1.3. Paper Structure

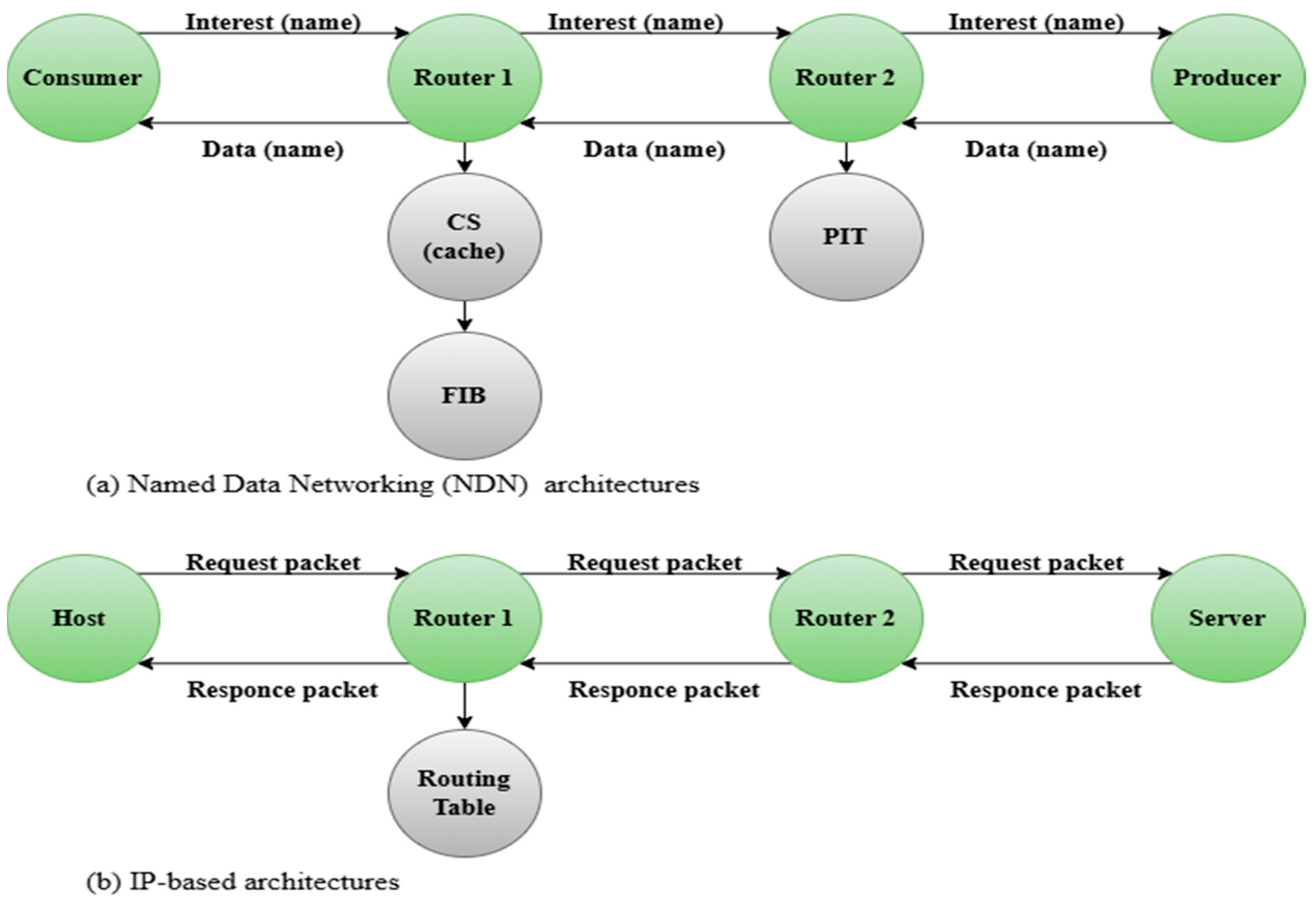

2. Caching in NDN-IoT Networks

3. Caching Strategies in NDN-IoT

3.1. Popularity-Based Caching

3.1.1. Popularity with Network Centrality Awareness

3.1.2. Traffic and Energy Efficiency-Oriented Caching

3.1.3. Cache Consistency and Data Freshness Mechanisms

3.1.4. Dynamic Popularity Windows and Threshold-Based Schemes

| Category | Study | Proposed Strategy | Key Features | Evaluation and Results | Tool |
|---|---|---|---|---|---|
| Popularity with Network Centrality Awareness | [59] | PT-Cache | Uses Interest packet forwarding to update closeness centrality values and content popularity ratings | Compared with PT-default and LCD: improved cache efficiency and network performance, and reduced response time. The strategy is defined by an equation. | ndnSIM |
| Popularity with Network Centrality Awareness | [58] | Popularity-Aware Closeness Centrality (PACC) and Efficient Popularity-Aware Probabilistic Caching (EPPC) | PACC and EPPC give better results than the compared strategies | Compared with CCS, PCS, CCC, and ABC. | Icarus |
| Popularity with Network Centrality Awareness | [60] | Popularity and Betweenness-based Replacement Scheme (PBRS) | Uses content popularity and node importance for cache replacement | Compared with LRU and LFU: improved CHR and reduced delay. | ndnSIM |
| Traffic and Energy Efficiency-Oriented Caching | [62] | Traffic-aware Caching Mechanism (TCM) | Caches popular content near the sink node to improve energy efficiency | Compared with CPCCS and MPC: reduced energy consumption and response time. | COOJA |
| Traffic and Energy Efficiency-Oriented Caching | [63] | Collaborative Caching Strategy Distance and Degree aware (CSDD) for CCN-WSNs | Balances content accessibility and energy efficiency; selectively stores data in neighboring nodes | Compared with LCE and LCD: reduced energy consumption and improved CHR. | CCNx Contiki |
| Traffic and Energy Efficiency-Oriented Caching | [64] | An architecture that includes edge computing | Increases content availability; reduces access time | Improved response time, content availability, and bandwidth. | NS-3 |
| Cache Consistency and Data Freshness Mechanisms | [67] | PoSiF | Relies on content freshness, popularity, and size | Outperformed CCS/CES, LCD, and CEE in CHR and reduced the hop ratio. | ndnSIM |
| Cache Consistency and Data Freshness Mechanisms | [68] | Popularity-based Cache Consistency Management (PCCM) | Ensures cache consistency by prioritizing high-freshness popular data; removes outdated cache content | Compared with traditional methods: improved cache consistency and reduced latency. | ndnSIM |
| Dynamic Popularity Windows and Threshold-based Schemes | [70] | Dynamic Popularity Window and Distance-based Efficient Caching | Uses a dynamic popularity window and content distance for caching decisions and LRU for replacement; improves QoS | Compared with LCE: improved hit ratio, network traffic, and response time. | - |
| Dynamic Popularity Windows and Threshold-based Schemes | [71] | Dynamic Popularity Window-based Caching Scheme (DPWCS) | Reduces redundant storage and network load by caching highly popular content based on request rate and threshold | Compared with LCE and ProbCache: improved CHR, hop count, and network load. | - |
| Dynamic Popularity Windows and Threshold-based Schemes | [56] | Compound Popular Content Caching Strategy (CPCCS) | Caches less popular and optimal popular content; LRU for replacement | Compared with WAVE, MAGIC, HPC, CCAC, MPC, and ProbCache: improved CHR, stretch, and content diversity. | SocialCCNSim-Master |
| Dynamic Popularity Windows and Threshold-based Schemes | [72] | Periodic Caching Strategy (PCS) | Threshold-based statistical table; uses LRU for replacement; reduces redundancy and congestion | Compared with CCS and TCS: improved CHR, retrieval time, and stretch. | SocialCCN |

| Category | Study | Equations | Description |

|---|---|---|---|

| Popularity with Network Centrality Awareness | [59] | . ). : Weighting factor that adjusts responsiveness to new data. | |

| . . | |||

| . : Total number of nodes in the network. . | |||

| [58] | . , respectively. | ||

| [60] | . | ||

. | |||

| . . . | |||

| . . : Set of all requested content. | |||

| Traffic and Energy Efficiency-Oriented Caching | [62] | . : Total number of interest messages sent by all consumers. : Total number of nodes in the network. | |

| : Total number of interest packets transmitted in the network. . . | |||

| . : Total number of interest messages. | |||

| Each term represents the duty cycle of different radio states: : Listening, : Transmitting, : Receiving, : Overhearing, : Additional operations. | |||

| . : Total number of nodes. | |||

| [63] | -th most popular content. : Zipf exponent controlling the skewness of popularity (higher = more skewed). : Rank of the content (1 is most popular). : Normalization constant defined as: is the total number of contents. | ||

| : The threshold hop-distance from the source node where caching starts. : Total number of hops from the source node to the requester. : Caching threshold percentage (e.g., 30%, 50%). | |||

| : Total number of generated content interests. . : total possible hop count to source. | |||

| : Total number of nodes. . The numerator counts unique content items; the denominator counts total stored items. | |||

| [64] | Where (PC): popularity of content. is set to 0.01. | ||

| : Content popularity of content i. : Number of content types. : Caching resource allocated to content i on device j. : Computing resource allocated to content i on device j. : Coverage area of mobile device. : Speed of mobile device. : Time to retrieve a unit content from a mobility-supported content provider. : Time to retrieve a unit content from Tier 4 devices (content server). : Processing latency. : Number of caching devices that can cache content i. | |||

| : Size of content i. | |||

| : Total caching capacity available in Tier 2 devices. | |||

| : Computing requirement for content i. : Total computing resource available in Tier 2 devices. | |||

| Cache Consistency and Data Freshness Mechanisms | [67] | at the caching node. at the producer node. . This equation ensures content is still fresh if the arrival time minus the generation time is less than its lifetime. | |

where: | : Popularity weight of incoming content. : Size weight of incoming content. : Request rate of incoming content. : Request rates of cached items. : Size of incoming content. : Sizes of cached items. | ||

where: | : Request rates of cached items to evict. : Number of cached items to evict. : Sizes of cached items to evict. | ||

| : Cache limit (maximum cache size). | |||

| : Number of content requests in the time window. : Last and first timestamps of the request window. | |||

| [68] | is the total number of interest packets received for the content during an estimation period. | ||

| . : Proportion of popular contents. : Impact ratios related to TTL and update frequency. : Characteristic time of cache. : Update time of content. | |||

| Dynamic Popularity Windows and Threshold-based Schemes | [70] | : Coefficient to regulate window size. : Content catalogue size. . | |

| : Threshold value for incoming content Dj on Ri. : Normalized hop-count navigated by Dj. | |||

| : Number of distinct interests in the window. | |||

| : Hop-count from the previous caching router to current. : Hop-count of Interest packet to content provider. | |||

| Combining the above equation. | |||

| : Rank of the content. : Skewness factor (typically 0.7 in simulations). : Total number of contents. | |||

| [71] | This indicates that the maximum popularity window size should grow proportionally with the total number of distinct contents in the network. | ||

| The window size should also increase as the request rate grows to keep tracking accurate popularity. | |||

| : Coefficient for window size control. : Number of distinct contents. : Content request rate. | |||

| As the window size increases, the threshold to consider content as “popular” must also increase. | |||

| [56] | : Number of hops between a content provider and a consumer. : A constant. | ||

| : Mean Residence Time. : Expected Mean Residence Time. | |||

| : Compensation value. : Recent caching load. : Size of cache at node i. | |||

| : Total number of content. : Rank of the content. | |||

| Defines a threshold to classify content as “popular”. | |||

| Dynamic Popularity Windows and Threshold-based Schemes | [72] | $\text{Stretch} = \dfrac{\text{hop-traveled}}{\text{total-hop}}$ | This metric measures the efficiency of content retrieval in terms of the number of hops. It compares the actual number of hops traveled to retrieve content (hop-traveled) with the total number of hops (total-hop) between user and server. |
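
The popularity-based schemes above share three ingredients: a Zipf popularity model, a request-count threshold that decides when content counts as "popular", and LRU replacement. The following is a minimal sketch of how these pieces could fit together, not a reproduction of any surveyed scheme. The names `zipf_probabilities`, `ThresholdLRUCache`, and `stretch` are hypothetical, and the fixed integer threshold is an assumption; the surveyed schemes adapt the threshold and window size dynamically.

```python
from collections import Counter, OrderedDict


def zipf_probabilities(num_contents: int, alpha: float) -> list:
    """Request probability for ranks 1..num_contents under a Zipf law."""
    weights = [1.0 / (rank ** alpha) for rank in range(1, num_contents + 1)]
    total = sum(weights)  # normalization so the probabilities sum to 1
    return [w / total for w in weights]


class ThresholdLRUCache:
    """Illustrative cache (assumed design): admit a content only after its
    request count reaches a threshold; evict the least recently used entry."""

    def __init__(self, capacity: int, threshold: int) -> None:
        self.capacity = capacity
        self.threshold = threshold
        self.requests = Counter()   # per-content request counts
        self.store = OrderedDict()  # key order tracks recency of use

    def request(self, name: str) -> bool:
        """Return True on a cache hit, False on a miss."""
        self.requests[name] += 1
        if name in self.store:
            self.store.move_to_end(name)  # refresh recency on a hit
            return True
        if self.capacity > 0 and self.requests[name] >= self.threshold:
            if len(self.store) >= self.capacity:
                self.store.popitem(last=False)  # drop least recently used
            self.store[name] = True
        return False


def stretch(hops_traveled: int, total_hops: int) -> float:
    """Ratio of hops actually traveled to hops between user and server."""
    return hops_traveled / total_hops
```

A lower stretch means content was served closer to the consumer; a value of 1.0 means the request traveled all the way to the server.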

3.2. Freshness-Based Caching

3.2.1. Machine Learning and Prediction-Based Freshness Management

3.2.2. Freshness-Aware Replacement Policies

| Category | Study | Proposed Strategy | Key Features | Evaluation and Results | Tool |
|---|---|---|---|---|---|
| Machine Learning and Prediction-Based Freshness Management | [76] | Cache Aging with Learning (CAL) | Cache aging mechanism combined with artificial neural networks (ANNs) to address the challenge of keeping data fresh | Outperformed VLRU in CHR; reduced latency and network load. | MATLAB-based simulation |
| Machine Learning and Prediction-Based Freshness Management | [74] | Least Fresh First (LFF) policy | Uses an ARMA model for time-series prediction; removes outdated content based on estimated remaining freshness; ensures fresh data caching | Outperformed RR, LRU, LFU, and FIFO in reducing hops, pressure on the service provider, and response time. | ccnSIM |
| Freshness-Aware Replacement Policies | [79] | - | Discusses the role of data freshness in the replacement of cached content | LRU with freshness awareness enhances CHR and reduces RTT compared with the FIFO replacement policy. | Mini-NDN simulator |
| Freshness-Aware Replacement Policies | [80] | Smart caching (SCTSmart) | Employs the SCTSmart caching table to track and manage cached data freshness | Reduced energy consumption while maintaining high data freshness levels. | ndnSIM |

| Category | Study | Equations | Description |

|---|---|---|---|

| Machine Learning and Prediction-Based Freshness Management | [76] | = Round-trip time delay. = Processing time at network nodes. = Queuing delay. = Transmission time. | |

| = Number of observations. . . | |||

| = Incoming message. = Set of data request messages. = Set of data response messages. | |||

| Where: = Request time. = Cached data timestamp. = Requested data type refresh rate. | |||

| = New data timestamp. = Cached data timestamp. = New data type refresh rate. | |||

| Where: = Weighting coefficient. = Freshness rate. | |||

| = Same request number (specific data type requests). = Total requests. | |||

| = Predicted same request number. = Predicted total requests. | |||

| [74] | : Number of consumers. : Number of requests per consumer. . : Number of hops to producer for the same request. | ||

| that were served by the producer. . | |||

| . | |||

| : Probability of cache hit. : Delay for content from cache. : Delay from producer. : Number of caching nodes. : Number of times content cached. : Number of caching decisions. : Number of evictions. | |||

| . . | |||

| Freshness-Aware Replacement Policies | [79] | is the time taken by routers to process packets. is the delay caused by packets waiting in queues. is the time taken to push all packet bits onto the link. is the time for the signal to propagate through the medium. | |

| [80] | = Caching utility function value. = Energy level of the node. = Cache occupancy (how full the cache is). = Freshness of the data. ). ). | ||

| (energy required for transaction). (energy required for data caching). (energy required for amplification). |
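
The freshness-aware policies above evict content according to how much validity it has left. The sketch below illustrates that idea under simplifying assumptions: each item carries a known generation time and lifetime, whereas the LFF policy in [74] estimates remaining freshness with an ARMA model. The class and field names (`CachedItem`, `LeastFreshFirstCache`, `generated_at`, `lifetime`) are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class CachedItem:
    name: str
    generated_at: float  # time the producer created the data (seconds)
    lifetime: float      # validity period of the data (seconds)

    def remaining_freshness(self, now: float) -> float:
        """Lifetime left after subtracting the item's current age."""
        return self.lifetime - (now - self.generated_at)

    def is_fresh(self, now: float) -> bool:
        return self.remaining_freshness(now) > 0


class LeastFreshFirstCache:
    """Illustrative cache: when full, evict the item closest to expiry."""

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity
        self.items: Dict[str, CachedItem] = {}

    def insert(self, item: CachedItem, now: float) -> None:
        # Purge expired entries before deciding whether eviction is needed.
        self.items = {n: i for n, i in self.items.items() if i.is_fresh(now)}
        if self.capacity <= 0 or not item.is_fresh(now):
            return
        if item.name not in self.items and len(self.items) >= self.capacity:
            victim = min(self.items.values(),
                         key=lambda i: i.remaining_freshness(now))
            del self.items[victim.name]
        self.items[item.name] = item

    def lookup(self, name: str, now: float) -> Optional[CachedItem]:
        """Return the item only if it is cached and still fresh."""
        item = self.items.get(name)
        if item is not None and item.is_fresh(now):
            return item
        return None
```

Checking freshness at lookup time as well as at insertion keeps a stale item from being served between insertions.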

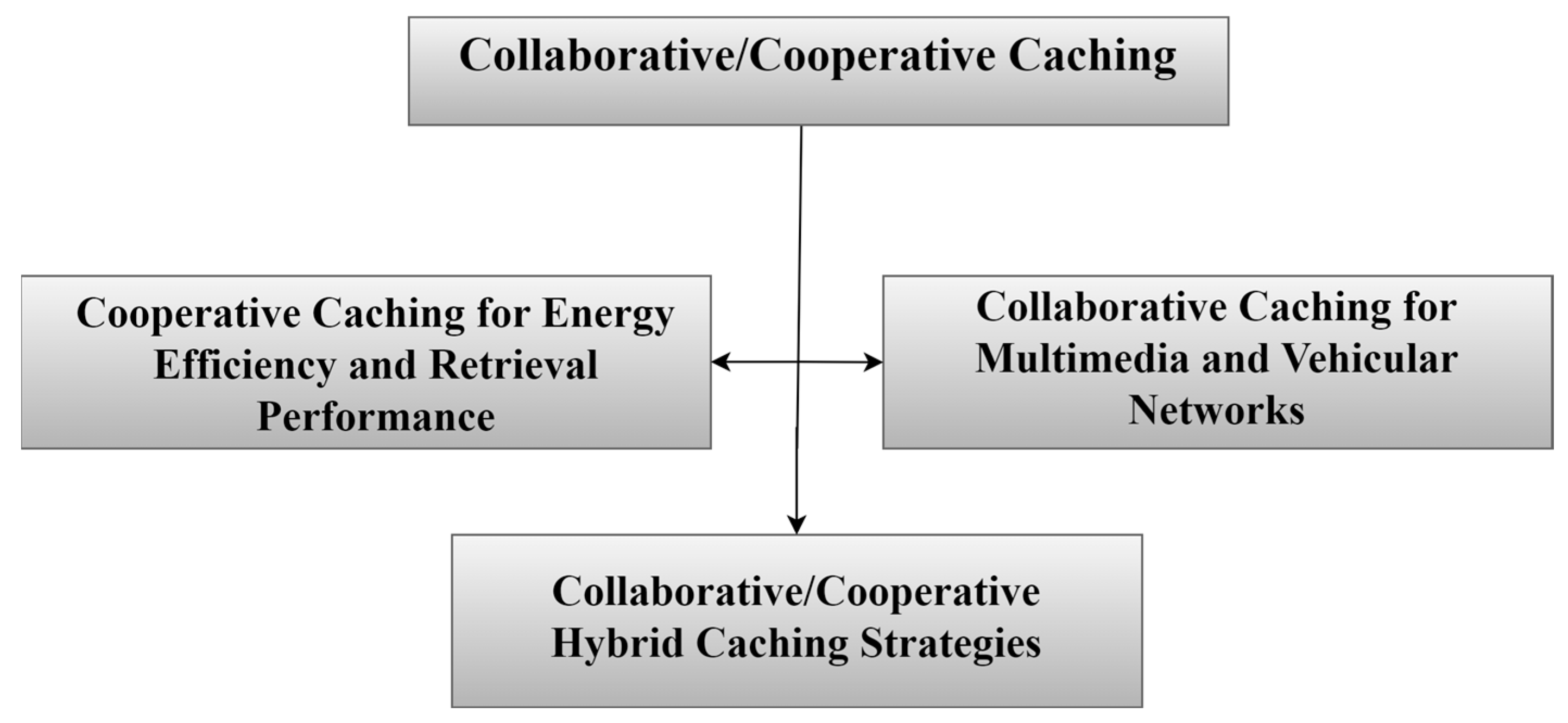

3.3. Collaborative/Cooperative Caching

3.3.1. Cooperative Caching for Energy Efficiency and Retrieval Performance

3.3.2. Collaborative Caching for Multimedia and Vehicular Networks

3.3.3. Collaborative/Cooperative Hybrid Caching Strategies

| Category | Study | Proposed Strategy | Key Features | Evaluation and Results | Tool |
|---|---|---|---|---|---|
| Cooperative Caching for Energy Efficiency and Retrieval Performance | [84] | Cooperative Multi-Hop Caching (SMCC) | Balances content retrieval time and energy consumption; regional storage within α-hop distance; enables multi-hop cooperative caching | Demonstrated energy savings and reduced content retrieval time compared with CoCa and AA. | - |
| Cooperative Caching for Energy Efficiency and Retrieval Performance | [85] | Green Cooperative Caching Strategy | Proactive caching in auxiliary nodes; reduces non-renewable energy consumption while maintaining QoE standards | Reduced non-renewable energy consumption while improving CHR. | IBM ILOG CPLEX and MATLAB R2016b |
| Cooperative Caching for Energy Efficiency and Retrieval Performance | [86] | Cooperative Caching (CoCa) with NDN | Integrates cooperative caching with deep sleep mechanisms; uses MDMR for content replacement | Improved energy efficiency, response time, and content diversity. | - |
| Collaborative Caching for Multimedia and Vehicular Networks | [88] | Enhanced Collaborative Caching for Multimedia Streaming | Uses a hierarchical edge-core structure; clustering principle for balancing traffic and storage; supports chunk-level caching for efficiency | Improved CHR, reduced hops, and enhanced QoE in vehicular networks compared with LCE and WAVE. | MATLAB Parallel Simulation Toolkit |
| Collaborative/Cooperative Hybrid Caching Strategies | [90] | - | Discusses the impact of combining cache placement and replacement operations in NDN networks | Combining LCE with LFU outperforms combining LCE with LRU. | Icarus |
| Collaborative/Cooperative Hybrid Caching Strategies | [89] | Hybrid Caching Strategy (On-Path and Off-Path) | Combines on-path and off-path caching to optimize storage efficiency; heuristic mechanism prioritizes frequently requested content | Improved CHR, reduced redundancy, and decreased retrieval delay. | MATLAB |

| Category | Study | Equations | Description |

|---|---|---|---|

| Cooperative Caching for Energy Efficiency and Retrieval Performance | [84] | : Total energy consumption without cooperative caching. : Energy consumption per time unit in active mode. : Energy consumption per time unit in light-sleep mode. : Number of hops from the producer to the consumer (original transmission path). : Total number of sensors in the region. . : Waiting phase duration (in time slots). | |

| : Total energy consumption with cooperative caching. : Number of hops from the cooperator to the consumer (shortened path). (cooperation scope). -hop region. : Energy consumption per time unit in deep-sleep mode. Remaining terms same as above. | |||

| . : Risk coefficient of cooperation (probability that a cooperator cannot serve a request). | |||

| : Net reduction in data retrieval delay due to cooperative caching. : Average transmission delay per hop. : Deep-sleep schedule time (delay incurred when waiting for a sensor to wake up). | |||

| [85] | . in terms of popularity (1 being the most popular). : Skewness parameter of the Zipf distribution (controls how steep the popularity curve is). : Total number of content objects. | ||

| . . . . . | |||

| . : Maximum battery capacity. : Energy remaining from the previous slot after usage. . | |||

| . . | |||

| . . : Delay per hop. to the node closest to the data center. : Additional delay from the data center to the network. | |||

| [86] | : Total energy consumed by the node. : Power consumed in a particular state (e.g., sleeping, active listening, unicast sending, broadcast sending). : Duration the node spends in that state. : Number of replicas for the most recent sensor value from source i. : Total number of nodes. ). | ||

| Represents the average number of replicas for each content item across the network. | |||

| : Probability that content is available in the network. : Lifetime (freshness window) of the data. | |||

| . | |||

| : Total number of content sources. | |||

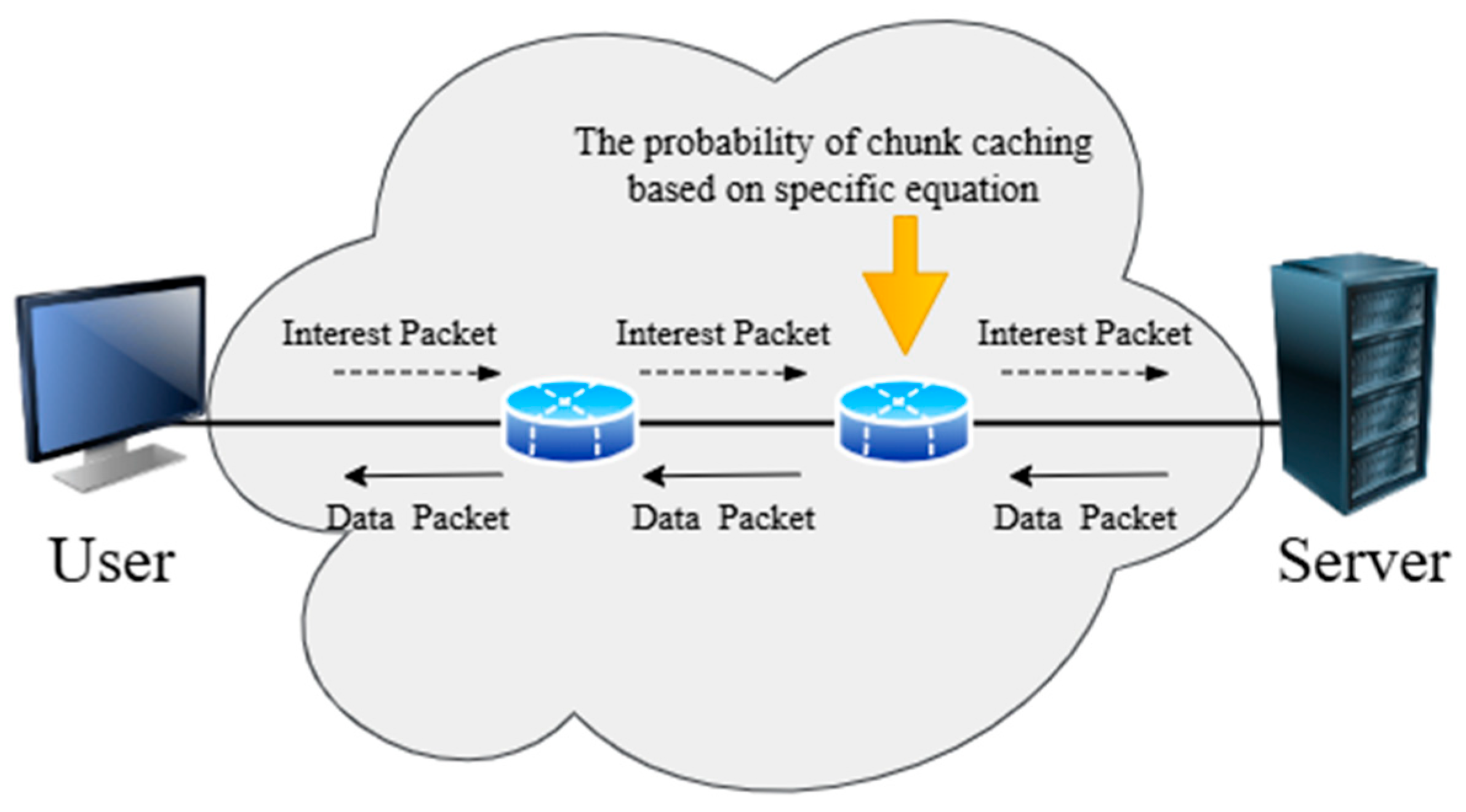

| Collaborative Caching for Multimedia and Vehicular Networks | [88] | . . is the chunk size. | |

| . . : Weighted sum of neighbor hop distances. . . : corresponding average values across all nodes. | |||

| Collaborative/Cooperative Hybrid Caching Strategies | [89] | : Diversity index of incoming requests at router i : Entropy of the incoming request set X : Average rate of requests at router i . | |

| : Expected (average) cache hit probability at router i. : Expected end-to-end delay while serving requests. : Available cache space at router i. | |||

| : Caching score of content k at router i. : Normalized distance (in hop count) from the source to router i. : Normalized frequency of access for content k. : Constants to weigh distance and frequency. | |||

| : Delay threshold required by service agreement. Turns the previous bi-objective problem into a constrained optimization for practical deployment. | |||

| : Expected cache hit ratio at the central router. : Available cache space at the central router. : Average delay from each router i. | |||

| : Boolean variable, 1 if content from router i is chosen, 0 otherwise where: : Represents the rate of non-repetitive request traffic from router i. : Score to prioritize edge routers based on delay and traffic diversity. |
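
The description for [89] mentions a caching score built from a normalized hop distance, a normalized access frequency, and constants that weigh the two. A minimal sketch follows, assuming the score is a simple weighted sum; the exact form used in [89] is not recoverable from the table, so the linear combination, the default weights, and the names `caching_score` and `select_contents` are all assumptions.

```python
from typing import Dict, List, Tuple


def caching_score(norm_distance: float, norm_frequency: float,
                  w_distance: float = 0.5, w_frequency: float = 0.5) -> float:
    """Assumed linear score: weighted sum of distance and access frequency,
    both normalized to the range [0, 1]."""
    return w_distance * norm_distance + w_frequency * norm_frequency


def select_contents(candidates: Dict[str, Tuple[float, float]],
                    slots: int) -> List[str]:
    """Pick the `slots` highest-scoring contents for a router's cache.

    `candidates` maps a content name to (norm_distance, norm_frequency).
    """
    ranked = sorted(candidates,
                    key=lambda name: caching_score(*candidates[name]),
                    reverse=True)
    return ranked[:slots]
```

With equal weights, content that is both far from its source and frequently requested ranks first, which matches the stated goal of prioritizing frequently requested content while cutting retrieval delay.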

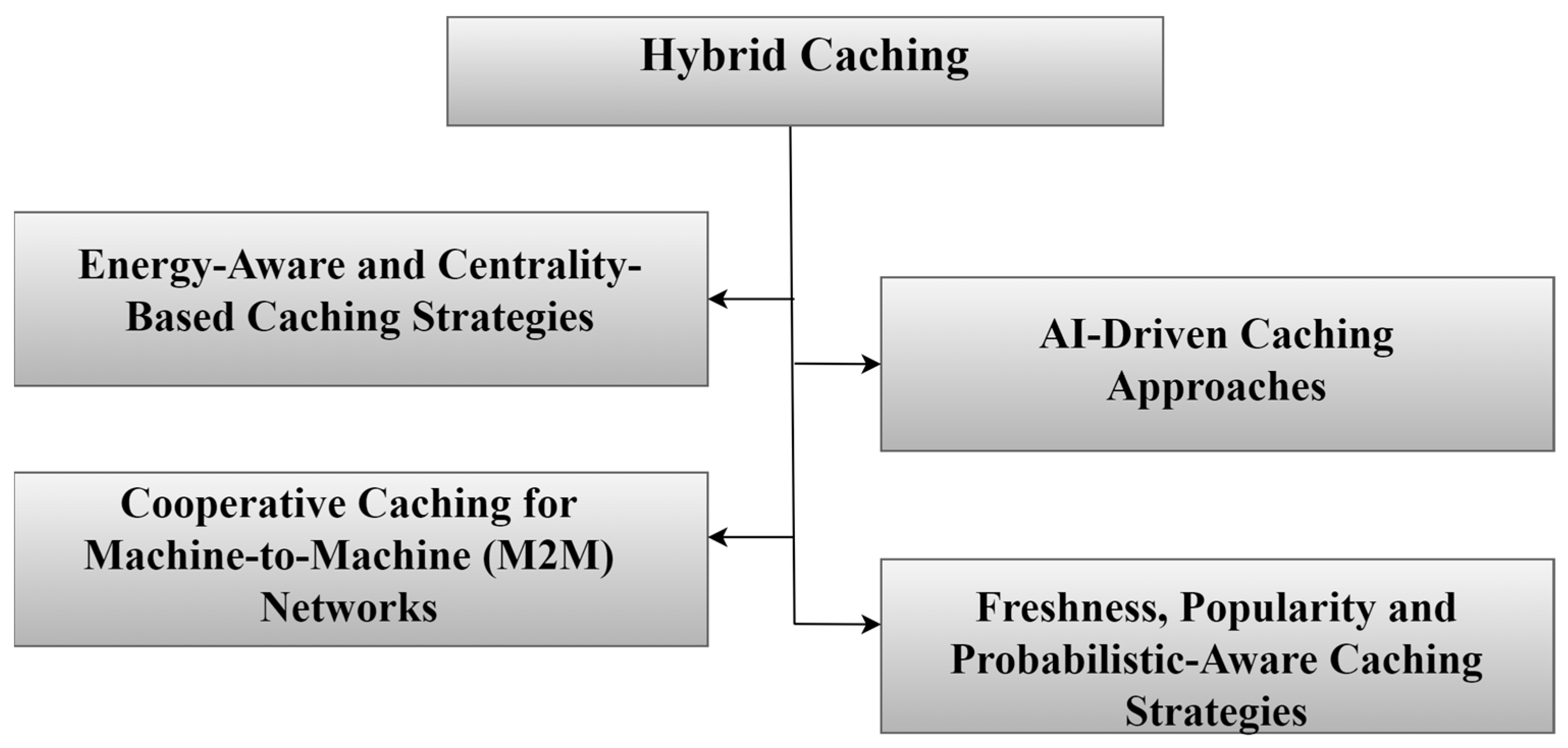

3.4. Hybrid Caching

3.4.1. Energy-Aware and Centrality-Based Caching Strategies

3.4.2. AI-Driven Caching Approaches

3.4.3. Cooperative Caching for Machine-to-Machine (M2M) Networks

3.4.4. Freshness, Popularity, and Probabilistic-Aware Caching Strategies

| Category | Study | Proposed Strategy | Key Features | Evaluation and Results | Tool |
|---|---|---|---|---|---|
| Energy-Aware and Centrality-Based Caching Strategies | [92] | Energy-aware Centrality-Based Caching (EABC) | Balances content delivery latency and energy efficiency | Improved cache hit rates, retrieval delay, and network lifetime compared with CEE, Prob, pCASTING, and ABC. | ndnSIM |
| Energy-Aware and Centrality-Based Caching Strategies | [93] | Lifetime Cooperative Caching (LCC) | Caches content based on lifetime and request rate | Improved energy consumption and retrieval time and reduced hops compared with LCE and a probabilistic caching strategy. | C++ Simulator |
| AI-Driven Caching Approaches | [95] | Heterogeneous Edge Caching Scheme | Uses AI, cloud computing, and collaborative filtering to predict and cache high-demand content at edge nodes | Reduced retrieval time, improved CHR, and decreased number of hops. | Icarus Simulator |
| Cooperative Caching for Machine-to-Machine (M2M) Networks | [37] | Core and Edge Layer Caching (CCS and CES) | Core caching for long-lived data; edge caching to reduce redundancy; enhances QoE | Superior CHR and reduced hops and retrieval time compared with LRU, RC, and BEP. | ndnSIM |
| Cooperative Caching for Machine-to-Machine (M2M) Networks | [97] | Cooperative Caching for M2M | Prioritizes popular content in M2M environments; reduces duplication and improves efficiency | Improved energy efficiency and access to cached content compared with LCE and LRU. | TOSSIM |
| Freshness, Popularity, and Probabilistic-Aware Caching Strategies | [98] | Efficient Popularity-Aware Probabilistic Caching (EPPC) | Hybrid approach combining content selection, placement, and replacement | Improved CHR and reduced path stretch and retrieval time compared with ProbCache, MPC, and Client-Cache. | Icarus Simulator |
| Freshness, Popularity, and Probabilistic-Aware Caching Strategies | [99] | PF-ClusterCache | Merges storage resources in clusters; prioritizes the most recent and most requested content; uses LPF for content replacement | Improved CHR and reduced retrieval time compared with LCE, PoolCache, and CFPC. | ndnSIM |
| Freshness, Popularity, and Probabilistic-Aware Caching Strategies | [100] | Caching for Freshness and Popularity Content (CFPC) | Independently stores popular and fresh content; ensures simplicity and scalability in NDN-IoT; enhances QoE | Enhanced retrieval time and CHR and fewer hops than CEE and RC. | ndnSIM |

| Category | Study | Equations | Description |

|---|---|---|---|

| Energy-Aware and Centrality-Based Caching Strategies | [92] | where: | .Betweenness centrality of node v. This measures how central or important a node is within the network by counting how many shortest paths between other nodes pass through v. : The set of all nodes in the network. ). , and 0 otherwise. |

| [93] | = Freshness of the data. = Current time. = Time when the data was generated by the content producer. | ||

| . = Threshold functions for root, middle, and edge nodes. = Node type indicators (only one is 1, others are 0 depending on the node type). | |||

| Caching Threshold Adjustment (Auto-Configuration Mechanism) If request rate is increasing: If request rate is decreasing: | = Adjustable weights. = Set of data lifetimes in a sliding time window. | ||

| to transmit one bit. = Base transmission energy per bit. = Energy cost of the transmitter amplifier. and the gateway. = Path loss factor. | |||

| = Simulation time. = Packet size. = Energy for sensing one bit. = Number of IoT devices. = Energy to wake up from sleep mode. = Number of times IoT devices are activated. | |||

| = Number of activations. . = Requests served from cache. | |||

| = Number of hops for each request. | |||

| AI-Driven Caching Approaches | [95] | . . . J: Total number of content attributes. | |

| Size_L: Cache size allocated to each edge node. vol: Total capacity of the cloud server database. : Cache allocation ratio (between 0 and 1). | |||

| . . N: Total number of content items. | |||

| . . J: Total number of content attributes. | |||

| . . . . | |||

| Cooperative Caching for Machine-to-Machine (M2M) Networks | [37] | : The current popularity threshold. : The previous value of the popularity threshold. : The current average number of received Interest packets per content. : A smoothing factor (set to 0.125 in the study). | |

| : The current freshness threshold. : The previous value of the freshness threshold. : The current average freshness period of the received IoT data packets. : Same smoothing factor (0.125). | |||

| at the core nodes. . . : Popularity threshold. : Freshness threshold. | |||

| at the edge nodes. . in the given time window. | |||

| . . . | |||

| [97] | Be | . . in the current request path. | |

| BetwRepRate(v): Ratio indicating node importance, factoring in both centrality and replacement rate. . . : Maximum number of replacements among all nodes. | |||

| . . . . : Total number of all content requests in the previous cycle. | |||

| Freshness, Popularity and Probabilistic-Aware Caching Strategies | [98] | : The path length (number of hops) from the requesting node r to the serving node s. : The path length from the requesting node r to the content provider p (publisher). : Total number. | |

| [99] | . , which balances between recent observations and past popularity. . : The popularity count of content. | ||

| [100] | . . . | ||

| . . in this paper). : Popularity sample from the previous equation. | |||

| . : Popularity threshold from the previous interval. (see next equation). : Smoothing factor (same as before). | |||

| . . . | |||

| . : Freshness threshold from the previous interval. : Average freshness period of the cached contents. | |||

| . . . |
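
Several hybrid schemes above, such as [37] and [100], update their popularity and freshness thresholds from a previous threshold, a current average, and a smoothing factor of 0.125. The sketch below assumes this is a standard exponentially weighted moving average; the exact update rule is not recoverable from the table. The function names and the core-node admission rule (cache only content that is both popular and long-lived) are illustrative assumptions.

```python
ALPHA = 0.125  # smoothing factor reported for [37]


def update_threshold(previous: float, sample: float,
                     alpha: float = ALPHA) -> float:
    """Assumed EWMA update: blend the previous threshold with the
    current average (of Interest counts or of freshness periods)."""
    return (1.0 - alpha) * previous + alpha * sample


def should_cache_at_core(popularity: float, freshness_period: float,
                         popularity_threshold: float,
                         freshness_threshold: float) -> bool:
    """Assumed admission rule for core nodes: content must meet both the
    popularity threshold and the freshness threshold."""
    return (popularity >= popularity_threshold
            and freshness_period >= freshness_threshold)


if __name__ == "__main__":
    # Threshold moves one eighth of the way toward the new sample.
    print(update_threshold(previous=8.0, sample=16.0))
```

A small smoothing factor makes each threshold react slowly to bursts, so a brief spike in requests does not immediately change what qualifies as popular.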

3.5. Machine Learning-Based Caching

3.5.1. Reinforcement Learning-Based Caching Strategies

Deep Q Networks (DQNs)

Genetic Algorithms (GA)

Deep Q-Learning (DQL)

Reinforcement Learning (RL)

3.5.2. Supervised and Unsupervised Learning-Based Caching

K-Nearest Neighbors (KNNs)

Deep Neural Networks (DNNs)

Recurrent Neural Networks (RNNs)

| Category | Study | Proposed Strategy | Key Features | Evaluation and Results | Tool |
|---|---|---|---|---|---|
| Reinforcement Learning-Based Caching Strategies | [105] | iCache (DQN-based Intelligent Caching) | Deep Q Networks (DQNs) and a Markov decision process (MDP) for intelligent caching; uses the LFF policy | Reduced retrieval delay and energy consumption compared with LCE, MPC, and CTD. | Python 3.8 and PyTorch 1.9 |
| Reinforcement Learning-Based Caching Strategies | [106] | Multi-Round Parallel Genetic Algorithm (MRPGA) | Genetic algorithm-based strategy for cache allocation using SDN; improves storage resource utilization (SUR) | Improved CHR and reduced retrieval time compared with LCE and ProbCache. | MATLAB |
| Reinforcement Learning-Based Caching Strategies | [107] | Deep Q-Learning (DQL) for IC-IoT | Combines caching with computing resources; optimizes resource allocation in dynamic IoT environments such as smart cities and IIoT | Simulation results show increased CHR, improved energy efficiency, and reduced retrieval time. | - |
| Reinforcement Learning-Based Caching Strategies | [108] | Reinforcement learning-based proactive caching | Uses an MDP framework, policy gradient methods (LRM and FDM), and caching policies (LISO, LFA) for proactive caching | Simulation results show reduced energy consumption and improved caching performance. | - |
| Supervised and Unsupervised Learning-Based Caching | [110] | KNN-LRU | KNN machine learning model combined with LRU | Improved CHR and reduced network load and response time compared with LFU, LRU, and FIFO. | Icarus Simulator |
| Supervised and Unsupervised Learning-Based Caching | [111] | IoTCache (popularity-based caching framework) | Utilizes deep neural networks (DNNs) and LSTM for popularity prediction | Simulation results show reduced data retrieval time, increased caching efficiency, and reduced traffic load. | - |
| Supervised and Unsupervised Learning-Based Caching | [112] | DeepCache (Deep RNN-based Caching) | Uses deep recurrent neural networks (RNNs) with LSTM to predict content popularity and optimize caching decisions | Simulation results show improved CHR, reduced response time, and better scalability compared with LRU and LFU. | - |

| Category | Study | Equations | Description |

|---|---|---|---|

| Reinforcement Learning-Based Caching Strategies | [105] | . . : Current time. | |

| . . | |||

| . : Rank of the IoT data packet. : Skewness parameter of the Zipf distribution (higher values mean more skewed popularity). : Total number of data packets. | |||

| : Total requests for IoT data packets. . : Set of edge caching nodes. | |||

| . , otherwise 0. . . to transmit one bit. to sense one bit. to wake up. | |||

| : Loss function for the DQN model. : Immediate reward (or cost). : Discount factor. : Q-value from the target network. : Q-value from the prediction network. | |||

| : Total energy consumed by IoT nodes. . : Indicator function (1 if request can be served from cache, else 0). | |||

| : Average number of hops. . | |||

| [106] | : Total transportation cost. . . . , 0 otherwise). | ||

Subject to: | : Optimal cache matrix. : Vector of block sizes. . , must not exceed its capacity. | ||

| Assigns higher fitness to solutions with lower transportation costs but penalizes infeasible solutions (that exceed cache capacity). | |||

| to be cached. : Adjustable scaling parameter. . . | |||

| Ensures that the total allocated cache volume does not exceed the total available cache capacity. | |||

| Ensures that each content block category i is cached exactly times across the network. | |||

| [107] | λ: Total arrival rate of user requests (Poisson process). i: Index of AI model, arranged by decreasing popularity. α: Zipf distribution slope (0 < α ≤ 1). . | ||

| : Probability that the caching status of model i transitions from state x to y at time t. State 0: Not cached, State 1: Cached. | |||

| : CPU cycles needed for training the AI model for user u. : Computation capability of MEC gateway m at time t for user u. | |||

| [108] | These equations reflect the transition probability and the expected cost under the MDP with side information (MDP-SI). | ||

| Supervised and Unsupervised Learning-Based Caching | [110] | $d(x, y) = \sqrt{\sum_{i=1}^{n} (y_i - x_i)^2}$ | $d(x, y)$: The Euclidean distance between two data points. $n$: The number of features (dimensions) in the dataset. $y_i$: The $i$-th feature in the second data point (test data). $x_i$: The $i$-th feature in the first data point (training data). |

| [111] | . , defined as: ). | ||

| [112] | is a feature vector of dimension d, where d is the number of unique objects. Each vector captures the probability (popularity) of each object within a predefined window (either time-based or request-based). is an output vector of dimension p (where p = d, the number of unique objects). The output represents future probabilities (popularities) of these objects over k future time steps. m is the number of past probability windows (e.g., 20 time steps), and d is the number of unique objects. |
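
The KNN-based approach in [110] relies on the Euclidean distance between a test point and the training points. The sketch below shows a plain k-nearest-neighbors majority vote built on that distance. The feature layout (for example, request count and recency) and the labels are hypothetical, and how [110] couples the prediction with LRU is not specified here, so that step is left out.

```python
import math
from collections import Counter
from typing import List, Sequence, Tuple

Sample = Tuple[Sequence[float], str]  # (feature vector, label)


def euclidean(x: Sequence[float], y: Sequence[float]) -> float:
    """Euclidean distance between a training point x and a test point y."""
    return math.sqrt(sum((yi - xi) ** 2 for xi, yi in zip(x, y)))


def knn_predict(train: List[Sample], query: Sequence[float],
                k: int = 3) -> str:
    """Label the query by majority vote among its k nearest neighbors."""
    neighbors = sorted(train, key=lambda s: euclidean(s[0], query))[:k]
    votes = Counter(label for _, label in neighbors)
    return votes.most_common(1)[0][0]


if __name__ == "__main__":
    # Hypothetical features: (requests in window, hours since last request)
    train = [((1.0, 1.0), "cold"), ((1.2, 0.8), "cold"),
             ((8.0, 9.0), "hot"), ((9.0, 8.5), "hot"), ((8.5, 9.5), "hot")]
    print(knn_predict(train, (8.2, 9.1), k=3))
```

Because the distance is sensitive to feature scale, features would normally be normalized before use; that step is omitted to keep the sketch short.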

3.6. Probabilistic Caching

4. Findings

4.1. Comparative Analysis of Caching Strategies in NDN-Based IoT Networks

4.1.1. Popularity-Based Caching

4.1.2. Freshness-Based Caching

4.1.3. Collaborative/Cooperative Caching

4.1.4. Hybrid Caching

4.1.5. Machine Learning-Based Caching

4.1.6. Probabilistic Caching

4.2. Evaluation Metrics Used in NDN-IoT Caching Strategies

4.3. Simulation Environments for Evaluating NDN-Based Caching Strategies

4.3.1. ndnSIM

4.3.2. SocialCCNSim

4.3.3. Icarus

4.3.4. Mininet

5. Future Directions

5.1. Developing a Caching Strategy That Combines Data Freshness and Popularity

5.2. Caching Strategies Based on Machine Learning (ML)

5.3. Caching Strategies That Support Energy Saving

5.4. Caching Strategies Based on Enhancing Security and Privacy

5.5. Caching Strategies That Support Mobility Management

5.6. Caching Strategies That Support Digital Twin Integration

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Islam, R.U.; Savaglio, C.; Fortino, G. Leading Smart Environments towards the Future Internet through Name Data Networking: A survey. Future Gener. Comput. Syst. 2025, 167, 107754. [Google Scholar] [CrossRef]

- Kumar, S.; Tiwari, P.; Zymbler, M. Internet of Things is a revolutionary approach for future technology enhancement: A review. J. Big Data 2019, 6, 111. [Google Scholar] [CrossRef]

- Alubady, R.; Hassan, S.; Habbal, A. Pending interest table control management in Named Data Network. J. Netw. Comput. Appl. 2018, 111, 99–116. [Google Scholar] [CrossRef]

- Gupta, D.; Rani, S.; Ahmed, S.H.; Hussain, R. Caching policies in NDN-IoT architecture. In Integration of WSN and IoT for Smart Cities; Springer International Publishing: Cham, Switzerland, 2020; pp. 43–64. [Google Scholar]

- Anjum, A.; Agbaje, P.; Hounsinou, S.; Guizani, N.; Olufowobi, H. D-NDNoT: Deterministic Named Data Networking for Time-Sensitive IoT Applications. IEEE Internet Things J. 2024, 11, 24872–24885. [Google Scholar] [CrossRef]

- Chand, M. A Comparative Survey on Different Caching Mechanisms in Named Data. J. Emerg. Technol. Innov. Res. 2019, 6, 264–271. [Google Scholar]

- Abane, A.; Daoui, M.; Bouzefrane, S.; Muhlethaler, P. A lightweight forwarding strategy for named data networking in low-end IoT. J. Netw. Comput. Appl. 2019, 148, 102445. [Google Scholar] [CrossRef]

- Hail, M.A.M.; Matthes, A.; Fischer, S. Comparative Performance Analysis of NDN Protocol in IoT Environments. In Proceedings of the 7th Conference on Cloud and Internet of Things, CioT, Montreal, QC, Canada, 29–31 October 2024; pp. 1–8. [Google Scholar]

- Azamuddin, W.M.H.; Aman, A.H.M.; Hassan, R.; Mansor, N. Comparison of named data networking mobility methodology in a merged cloud internet of things and artificial intelligence environment. Sensors 2022, 22, 6668.

- Alwakeel, A.M. Enhancing IoT performance in wireless and mobile networks through named data networking (NDN) and edge computing integration. Comput. Netw. 2025, 264, 111267.

- Amadeo, M.; Campolo, C.; Ruggeri, G.; Molinaro, A. Edge Caching in IoT Smart Environments: Benefits, Challenges, and Research Perspectives Toward 6G. Internet Things 2023, 53–73.

- Alahmri, B.; Al-Ahmadi, S.; Belghith, A. Efficient Pooling and Collaborative Cache Management for NDN/IoT Networks. IEEE Access 2021, 9, 43228–43240.

- Asghari, P.; Rahmani, A.M.; Javadi, H.H.S. Internet of Things applications: A systematic review. Comput. Netw. 2019, 148, 241–261.

- Khalid, A.; Rehman, R.A.; Kim, B.S. Caching Strategies in NDN Based Wireless Ad Hoc Network: A Survey. Comput. Mater. Contin. 2024, 80, 61–103.

- Yovita, L.V.; Syambas, N.R. Caching on named data network: A survey and future research. Int. J. Electr. Comput. Eng. 2018, 8, 4456–4466.

- Abdullahi, I.; Arif, S.; Hassan, S. Survey on caching approaches in information centric networking. J. Netw. Comput. Appl. 2015, 56, 48–59.

- Alkwai, L.; Belghith, A.; Gazdar, A.; AlAhmadi, S. Awareness of user mobility in Named Data Networking for IoT traffic under the push communication mode. J. Netw. Comput. Appl. 2023, 213, 103598.

- Mishra, S.; Jain, V.K.; Gyoda, K.; Jain, S. Distance-based dynamic caching and replacement strategy in NDN-IoT networks. Internet Things 2024, 27, 101264.

- Muralidharan, S.; Roy, A.; Saxena, N. MDP-IoT: MDP based interest forwarding for heterogeneous traffic in IoT-NDN environment. Future Gener. Comput. Syst. 2018, 79, 892–908.

- Shailendra, S.; Sengottuvelan, S.; Rath, H.K.; Panigrahi, B.; Simha, A. Performance evaluation of caching policies in NDN-an ICN architecture. In Proceedings of the IEEE Region 10 Annual International Conference, Proceedings/TENCON, Singapore, 22–25 November 2016; pp. 1117–1121.

- Lehmann, M.B.; Barcellos, M.P.; Mauthe, A. Providing producer mobility support in NDN through proactive data replication. In Proceedings of the NOMS 2016—2016 IEEE/IFIP Network Operations and Management Symposium, Istanbul, Turkey, 25–29 April 2016; pp. 383–391.

- Dhawan, G.; Mazumdar, A.P.; Meena, Y.K. CNCP: A Candidate Node Selection for Cache Placement in ICN-IoT. In Proceedings of the 2022 IEEE 6th Conference on Information and Communication Technology, CICT, Gwalior, India, 18–20 November 2022; pp. 1–6.

- Arshad, S.; Azam, M.A.; Rehmani, M.H.; Loo, J. Recent advances in information-centric networking-based internet of things (ICN-IoT). IEEE Internet Things J. 2019, 6, 2128–2158.

- Atzori, L.; Iera, A.; Morabito, G. Understanding the Internet of Things: Definition, potentials, and societal role of a fast evolving paradigm. Ad Hoc Netw. 2017, 56, 122–140.

- Pruthvi, C.N.; Vimala, H.S.; Shreyas, J. A systematic survey on content caching in ICN and ICN-IoT: Challenges, approaches and strategies. Comput. Netw. 2023, 233, 109896.

- Zhang, Z.; Lung, C.-H.; Wei, X.; Chen, M.; Zhang, Z. In-network Caching for ICN-based IoT (ICN-IoT): A comprehensive survey. IEEE Internet Things J. 2023, 10, 14595–14620.

- Naeem, M.A.; Din, I.U.; Meng, Y.; Almogren, A.; Rodrigues, J.J.P.C. Centrality-Based On-Path Caching Strategies in NDN-Based Internet of Things: A Survey. IEEE Commun. Surv. Tutor. 2024, 27, 2621–2657.

- Meng, Y.; Ahmad, A.B.; Naeem, M.A. Comparative analysis of popularity aware caching strategies in wireless-based NDN based IoT environment. IEEE Access 2024, 12, 136466–136484.

- Alubady, R.; Salman, M.; Mohamed, A.S. A review of modern caching strategies in named data network: Overview, classification, and research directions. Telecommun. Syst. 2023, 84, 581–626.

- Serhane, O.; Yahyaoui, K.; Nour, B.; Moungla, H. A survey of ICN content naming and in-network caching in 5G and beyond networks. IEEE Internet Things J. 2020, 8, 4081–4104.

- Aboodi, A.; Wan, T.C.; Sodhy, G.C. Survey on the Incorporation of NDN/CCN in IoT. IEEE Access 2019, 7, 71827–71858.

- Din, I.U.; Hassan, S.; Khan, M.K.; Guizani, M.; Ghazali, O.; Habbal, A. Caching in Information-Centric Networking: Strategies, Challenges, and Future Research Directions. IEEE Commun. Surv. Tutor. 2018, 20, 1443–1474.

- Bilal, M.; Kang, S.G. A Cache Management Scheme for Efficient Content Eviction and Replication in Cache Networks. IEEE Access 2017, 5, 1692–1701.

- Zhao, Q.; Peng, Z.; Hong, X. A named data networking architecture implementation to internet of underwater things. In Proceedings of the 14th International Conference on Underwater Networks & Systems, Atlanta, GA, USA, 23–25 October 2019.

- Shrisha, H.S.; Boregowda, U. An energy efficient and scalable endpoint linked green content caching for Named Data Network based Internet of Things. Results Eng. 2022, 13, 100345.

- Amadeo, M.; Campolo, C.; Ruggeri, G.; Molinaro, A. Beyond Edge Caching: Freshness and Popularity Aware IoT Data Caching via NDN at Internet-Scale. IEEE Trans. Green Commun. Netw. 2022, 6, 352–364.

- Kumar, S.; Tiwari, R. Navigating transient content: PFC caching approach for NDN-based IoT networks. Pervasive Mob. Comput. 2025, 109, 102031.

- Dehkordi, I.F.; Manochehri, K.; Aghazarian, V. Internet of Things (IoT) Intrusion Detection by Machine Learning (ML): A Review. Asia-Pac. J. Inf. Technol. Multimed. 2023, 12, 13–38.

- Naeem, M.A.; Ullah, R.; Meng, Y.; Ali, R.; Lodhi, B.A. Caching Content on the Network Layer: A Performance Analysis of Caching Schemes in ICN-Based Internet of Things. IEEE Internet Things J. 2022, 9, 6477–6495.

- Ahed, K.; Benamar, M.; El Ouazzani, R. Content delivery in named data networking based internet of things. In Proceedings of the 2019 15th International Wireless Communications and Mobile Computing Conference, IWCMC, Tangier, Morocco, 24–28 June 2019; pp. 1397–1402.

- Wang, X.; Wang, X.; Li, Y. NDN-based IoT with Edge computing. Future Gener. Comput. Syst. 2021, 115, 397–405.

- Meng, Y.; Naeem, M.A.; Ali, R.; Zikria, Y.B.; Kim, S.W. DCS: Distributed Caching Strategy at the Edge of Vehicular Sensor Networks in Information-Centric Networking. Sensors 2019, 19, 4407.

- Meddeb, M.; Dhraief, A.; Belghith, A.; Monteil, T.; Drira, K.; Mathkour, H. Least fresh first cache replacement policy for NDN-based IoT networks. Pervasive Mob. Comput. 2019, 52, 60–70.

- Kumamoto, Y.; Nakazato, H. Implementation of NDN function chaining using caching for IoT environments. In Proceedings of the CCIoT 2020—Proceedings of the 2020 Cloud Continuum Services for Smart IoT Systems, Part of SenSys, Virtual, 16–19 November 2020; pp. 20–25.

- Kazmi, S.H.A.; Qamar, F.; Hassan, R.; Nisar, K. Improved QoS in internet of things (IoTs) through short messages encryption scheme for wireless sensor communication. In Proceedings of the 2022 International Symposium on Intelligent Signal Processing and Communication Systems (ISPACS), Penang, Malaysia, 22–25 November 2022; pp. 1–6.

- Alabadi, M.; Habbal, A.; Wei, X. Industrial Internet of Things: Requirements, Architecture, Challenges, and Future Research Directions. IEEE Access 2022, 10, 66374–66400.

- Hassan, R.; Qamar, F.; Hasan, M.K.; Aman, A.H.M.; Ahmed, A.S. Internet of Things and its applications: A comprehensive survey. Symmetry 2020, 12, 1674.

- Liwen, Z.; Qamar, F.; Liaqat, M.; Hindia, M.N.; Ariffin, K.A.Z. Toward efficient 6G IoT networks: A perspective on resource optimization strategies, challenges, and future directions. IEEE Access 2024, 12, 76606–76633.

- Djama, A.; Djamaa, B.; Senouci, M.R. TCP/IP and ICN Networking Technologies for the Internet of Things: A Comparative Study. In Proceedings of the ICNAS 2019: 4th International Conference on Networking and Advanced Systems, Annaba, Algeria, 26–27 June 2019; pp. 1–6.

- Zahedinia, M.S.; Khayyambashi, M.R.; Bohlooli, A. IoT data management for caching performance improvement in NDN. Clust. Comput. 2024, 27, 4537–4550.

- Zhang, Z.; Yu, Y.; Zhang, H.; Newberry, E.; Mastorakis, S.; Li, Y.; Afanasyev, A.; Zhang, L. An Overview of Security Support in Named Data Networking. IEEE Commun. Mag. 2018, 56, 62–68.

- Djama, A.; Djamaa, B.; Senouci, M.R. Information-Centric Networking solutions for the Internet of Things: A systematic mapping review. Comput. Commun. 2020, 159, 37–59.

- Ullah, S.S.; Ullah, I.; Khattak, H.; Khan, M.A.; Adnan, M.; Hussain, S.; Amin, N.U.; Khattak, M.A.K. A Lightweight Identity-Based Signature Scheme for Mitigation of Content Poisoning Attack in Named Data Networking with Internet of Things. IEEE Access 2020, 8, 98910–98928.

- Abraham, H.B.; Crowley, P. Controlling strategy retransmissions in named data networking. In Proceedings of the 2017 ACM/IEEE Symposium on Architectures for Networking and Communications Systems (ANCS), Beijing, China, 18–19 May 2017; pp. 70–81.

- Ahlgren, B.; Dannewitz, C.; Imbrenda, C.; Kutscher, D.; Ohlman, B. A survey of information-centric networking. IEEE Commun. Mag. 2012, 50, 26–36.

- Ali Naeem, M.; Awang Nor, S.; Hassan, S.; Kim, B.S. Compound popular content caching strategy in named data networking. Electronics 2019, 8, 771.

- Amadeo, M.; Ruggeri, G.; Campolo, C.; Molinaro, A. Content-Driven Closeness Centrality Based Caching in Softwarized Edge Networks. In Proceedings of the ICC 2023-IEEE International Conference on Communications, Rome, Italy, 28 May–1 June 2023; pp. 3264–3269.

- Gui, Y.; Chen, Y. A cache placement strategy based on compound popularity in named data networking. IEEE Access 2020, 8, 196002–196012.

- Koide, M.; Matsumoto, N.; Matsuzawa, T. Caching Method for Information-Centric Ad Hoc Networks Based on Content Popularity and Node Centrality. Electronics 2024, 13, 2416.

- Liu, Y.; Zhi, T.; Zhou, H.; Xi, H. PBRS: A Content Popularity and Betweenness Based Cache Replacement Scheme in ICN-IoT. J. Internet Technol. 2021, 22, 1495–1508.

- Nour, B.; Sharif, K.; Li, F.; Moungla, H.; Kamal, A.E.; Afifi, H. NCP: A near ICN cache placement scheme for IoT-based traffic class. In Proceedings of the 2018 IEEE Global Communications Conference (GLOBECOM), Abu Dhabi, United Arab Emirates, 9–13 December 2018.

- Dinh, N.-t. An efficient traffic-aware caching mechanism for information-centric wireless sensor networks. EAI Endorsed Trans. Ind. Netw. Intell. Syst. 2022, 9, e5.

- Jaber, G.; Kacimi, R. A collaborative caching strategy for content-centric enabled wireless sensor networks. Comput. Commun. 2020, 159, 60–70.

- Hasan, K.; Jeong, S.H. Efficient caching for data-driven IoT applications and fast content delivery with low latency in ICN. Appl. Sci. 2019, 9, 4730.

- Xu, Y.; Li, J. Cache Benefit-Based Cache Placement Scheme for IoT Data in ICN by Using Ranking. Available online: https://papers.ssrn.com/sol3/papers.cfm?abstract_id=4132289 (accessed on 20 April 2025).

- Tarnoi, S.; Kumwilaisak, W.; Suppakitpaisarn, V.; Fukuda, K.; Ji, Y. Adaptive probabilistic caching technique for caching networks with dynamic content popularity. Comput. Commun. 2019, 139, 1–15.

- Asmat, H.; Din, I.U.; Ullah, F.; Talha, M.; Khan, M.; Guizani, M. ELC: Edge Linked Caching for content updating in information-centric Internet of Things. Comput. Commun. 2020, 156, 174–182.

- Dhawan, G.; Mazumdar, A.P.; Meena, Y.K. PoSiF: A Transient Content Caching and Replacement Scheme for ICN-IoT. Wirel. Commun. Mob. Comput. 2023, 2023, 1–18.

- Feng, B.; Tian, A.; Yu, S.; Li, J.; Zhou, H.; Zhang, H. Efficient Cache Consistency Management for Transient IoT Data in Content-Centric Networking. IEEE Internet Things J. 2022, 9, 12931–12944.

- Han, G.; Liu, L.; Jiang, J.; Shu, L.; Hancke, G. Analysis of energy-efficient connected target coverage algorithms for industrial wireless sensor networks. IEEE Trans. Ind. Inform. 2015, 13, 135–143.

- Kumar, S.; Tiwari, R. Dynamic popularity window and distance-based efficient caching for fast content delivery applications in CCN. Eng. Sci. Technol. Int. J. 2021, 24, 829–837.

- Kumar, S.; Tiwari, R. Optimized content centric networking for future internet: Dynamic popularity window based caching scheme. Comput. Netw. 2020, 179, 107434.

- Naeem, M.A.; Ali, R.; Kim, B.S.; Nor, S.A.; Hassan, S. A Periodic Caching Strategy Solution for the Smart City in Information-Centric Internet of Things. Sustainability 2018, 10, 2576.

- Vural, S.; Wang, N.; Navaratnam, P.; Tafazolli, R. Caching Transient Data in Internet Content Routers. IEEE/ACM Trans. Netw. (TON) 2017, 25, 1048–1061.

- Meddeb, M.; Dhraief, A.; Belghith, A.; Monteil, T.; Drira, K.; Alahmadi, S. Cache freshness in named data networking for the internet of things. Comput. J. 2018, 61, 1496–1511.

- Buchipalli, T.; Mahendran, V.; Badarla, V. How Fresh is the Data? An Optimal Learning-Based End-to-End Pull-Based Forwarding Framework for NDNoTs. In Proceedings of the Int’l ACM Conference on Modeling Analysis and Simulation of Wireless and Mobile Systems, Montreal, QC, Canada, 30 October–3 November 2023; pp. 285–289.

- Hazrati, N.; Pirahesh, S.; Arasteh, B.; Sefati, S.S.; Fratu, O.; Halunga, S. Cache Aging with Learning (CAL): A Freshness-Based Data Caching Method for Information-Centric Networking on the Internet of Things (IoT). Future Internet 2025, 17, 11.

- Zahed, M.I.A.; Ahmad, I.; Habibi, D.; Phung, Q.V.; Mowla, M.M.; Waqas, M. A review on green caching strategies for next generation communication networks. IEEE Access 2020, 8, 212709–212737.

- Podlipnig, S.; Böszörmenyi, L. A survey of web cache replacement strategies. ACM Comput. Surv. (CSUR) 2003, 35, 374–398.

- Sekardefi, K.P.; Negara, R.M. Impact of Data Freshness-aware in Cache Replacement Policy for NDN-based IoT Network. In Proceedings of the ICCoSITE 2023—International Conference on Computer Science, Information Technology and Engineering: Digital Transformation Strategy in Facing the VUCA and TUNA Era, Jakarta, Indonesia, 16 February 2023; pp. 156–161.

- Shrimali, R.; Shah, H.; Chauhan, R. Proposed Caching Scheme for Optimizing Trade-off between Freshness and Energy Consumption in Name Data Networking Based IoT. Adv. Internet Things 2017, 7, 11–24.

- Ioannou, A.; Weber, S. A Survey of Caching Policies and Forwarding Mechanisms in Information-Centric Networking. IEEE Commun. Surv. Tutor. 2016, 18, 2847–2886.

- Naeem, M.A.; Bashir, A.K.; Meng, Y. Dynamic cluster-based cooperative cache management at the network edges in NDN-based Internet of Things. Alex. Eng. J. 2025, 125, 297–310.

- Gui, Y.; Chen, Y. A Cache Placement Strategy Based on Entropy Weighting Method and TOPSIS in Named Data Networking. IEEE Access 2021, 9, 56240–56252.

- Yang, Y.; Song, T. Energy-Efficient Cooperative Caching for Information-Centric Wireless Sensor Networking. IEEE Internet Things J. 2022, 9, 846–857.

- Zahed, M.I.A.; Ahmad, I.; Habibi, D.; Phung, Q.V.; Zhang, L. A Cooperative Green Content Caching Technique for Next Generation Communication Networks. IEEE Trans. Netw. Serv. Manag. 2020, 17, 375–388.

- Hahm, O.; Baccelli, E.; Schmidt, T.C.; Wählisch, M.; Adjih, C.; Massoulié, L. Low-power internet of things with NDN and cooperative caching. In Proceedings of the ICN 2017—Proceedings of the 4th ACM Conference on Information Centric Networking, Berlin, Germany, 26–28 September 2017; pp. 98–108.

- Yao, L.; Chen, A.; Deng, J.; Wang, J.; Wu, G. A cooperative caching scheme based on mobility prediction in vehicular content centric networks. IEEE Trans. Veh. Technol. 2017, 67, 5435–5444.

- Gupta, D.; Rani, S.; Ahmed, S.H.; Garg, S.; Piran, M.J.; Alrashoud, M. ICN-Based Enhanced Cooperative Caching for Multimedia Streaming in Resource Constrained Vehicular Environment. IEEE Trans. Intell. Transp. Syst. 2021, 22, 4588–4600.

- Rath, H.K.; Panigrahi, B.; Simha, A. On cooperative on-path and off-path caching policy for information centric networks (ICN). In Proceedings of the International Conference on Advanced Information Networking and Applications, AINA, Crans-Montana, Switzerland, 23–25 March 2016; pp. 842–849.

- Zaki Hamidi, E.A.; Akbar, K.M.; Negara, R.M.; Payangan, I.P.; Puspitaningsih, M.D.; Putri As’ari, A.Z. NDN Collaborative Caching Replacement and Placement Policy Performance Evaluation. In Proceedings of the 2024 10th International Conference on Wireless and Telematics, ICWT, Batam, Indonesia, 4–5 July 2024; pp. 1–5.

- Dinh, N.; Kim, Y. An energy reward-based caching mechanism for information-centric internet of things. Sensors 2022, 22, 743.

- He, X.; Liu, H.; Li, W.; Valera, A.; Seah, W.K.G. EABC: Energy-aware Centrality-based Caching for Named Data Networking in the IoT. In Proceedings of the 2024 IEEE 25th International Symposium on a World of Wireless, Mobile and Multimedia Networks, WoWMoM, Perth, Australia, 4–7 June 2024; pp. 259–268.

- Zhang, Z.; Lung, C.H.; Lambadaris, I.; St-Hilaire, M. IoT data lifetime-based cooperative caching scheme for ICN-IoT networks. In Proceedings of the IEEE International Conference on Communications, Kansas City, MO, USA, 20–24 May 2018; pp. 1–7.

- Hail, M.A.M.; Bin-Salem, A.A.; Munassar, W. AI for IoT-NDN: Enhancing IoT with Named Data Networking and Artificial Intelligence. In Proceedings of the 2024 ASU International Conference in Emerging Technologies for Sustainability and Intelligent Systems, ICETSIS, Manama, Bahrain, 28–29 January 2024; pp. 1020–1026.

- Gupta, D.; Rani, S.; Ahmed, S.H.; Verma, S.; Ijaz, M.F.; Shafi, J. Edge caching based on collaborative filtering for heterogeneous ICN-IoT applications. Sensors 2021, 21, 5491.

- Meddeb, M.; Dhraief, A.; Belghith, A.; Monteil, T.; Drira, K. Cache coherence in machine-to-machine information centric networks. In Proceedings of the 2015 IEEE 40th Conference on Local Computer Networks (LCN), Clearwater Beach, FL, USA, 26–29 October 2015; pp. 430–433.

- Anamalamudi, S.; Alkatheiri, M.S.; Solami, E.A.; Sangi, A.R. Cooperative Caching Scheme for Machine-to-Machine Information-Centric IoT Networks. IEEE Can. J. Electr. Comput. Eng. 2021, 44, 228–237.

- Naeem, M.A.; Nguyen, T.N.; Ali, R.; Cengiz, K.; Meng, Y.; Khurshaid, T. Hybrid Cache Management in IoT-Based Named Data Networking. IEEE Internet Things J. 2022, 9, 7140–7150.

- Alduayji, S.; Belghith, A.; Gazdar, A.; Al-Ahmadi, S. PF-ClusterCache: Popularity and Freshness-Aware Collaborative Cache Clustering for Named Data Networking of Things. Appl. Sci. 2022, 12, 6706.

- Amadeo, M.; Ruggeri, G.; Campolo, C.; Molinaro, A.; Mangiullo, G. Caching popular and fresh IoT contents at the edge via named data networking. In Proceedings of the IEEE INFOCOM 2020—IEEE Conference on Computer Communications Workshops, INFOCOM WKSHPS, Toronto, ON, Canada, 6–9 July 2020; pp. 610–615.

- Khattab, A.; Youssry, N. Machine Learning for IoT Systems; Springer: Berlin/Heidelberg, Germany, 2020; pp. 105–127.

- Zhang, Y.; Muniyandi, R.C.; Qamar, F. A Review of Deep Learning Applications in Intrusion Detection Systems: Overcoming Challenges in Spatiotemporal Feature Extraction and Data Imbalance. Appl. Sci. 2025, 15, 1552.

- Hou, J.; Lu, H.; Nayak, A. A GNN-based proactive caching strategy in NDN networks. Peer-Peer Netw. Appl. 2023, 16, 997–1009.

- Wu, H.; Nasehzadeh, A.; Wang, P. A Deep Reinforcement Learning-Based Caching Strategy for IoT Networks With Transient Data. IEEE Trans. Veh. Technol. 2022, 71, 13310–13319.

- Zhang, Z.; Wei, X.; Lung, C.H.; Zhao, Y. iCache: An Intelligent Caching Scheme for Dynamic Network Environments in ICN-Based IoT Networks. IEEE Internet Things J. 2023, 10, 1787–1799.

- Yang, F.; Tian, Z. MRPGA: A Genetic-Algorithm-based In-network Caching for Information-Centric Networking. In Proceedings of the International Conference on Network Protocols, ICNP, Dallas, TX, USA, 1–5 November 2021; pp. 1–6.

- Xu, F.; Yang, F.; Bao, S.; Zhao, C. DQN Inspired Joint Computing and Caching Resource Allocation Approach for Software Defined Information-Centric Internet of Things Network. IEEE Access 2019, 7, 61987–61996.

- Somuyiwa, S.O.; Gyorgy, A.; Gunduz, D. A Reinforcement-Learning Approach to Proactive Caching in Wireless Networks. IEEE J. Sel. Areas Commun. 2018, 36, 1331–1344.

- Ihsan, A.; Rainarli, E. Optimization of k-nearest neighbour to categorize Indonesian’s news articles. Asia-Pac. J. Inf. Technol. Multimed. 2021, 10, 43–51.

- Negara, R.M.; Syambas, N.R.; Mulyana, E.; Wasesa, N.P. Maximizing Router Efficiency in Named Data Networking with Machine Learning-Driven Caching Placement Strategy. In Proceedings of the IEEE International Conference on Computer Communication and the Internet, ICCCI, Tokyo, Japan, 14–16 June 2024; pp. 118–123.

- Chen, B.; Liu, L.; Sun, M.; Ma, H. IoTCache: Toward Data-Driven Network Caching for Internet of Things. IEEE Internet Things J. 2019, 6, 10064–10076.

- Narayanan, A.; Verma, S.; Ramadan, E.; Babaie, P.; Zhang, Z.L. DEEPCACHE: A deep learning based framework for content caching. In Proceedings of the NetAI 2018—Proceedings of the 2018 Workshop on Network Meets AI and ML, Part of SIGCOMM, Budapest, Hungary, 24 August 2018; pp. 48–53.

- Naeem, M.A.; Nor, S.A.; Hassan, S.; Kim, B.-S. Performances of probabilistic caching strategies in content centric networking. IEEE Access 2018, 6, 58807–58825.

- Gao, Y.; Zhou, J. Probabilistic caching mechanism based on software defined content centric network. In Proceedings of the 2019 IEEE 11th International Conference on Communication Software and Networks (ICCSN), Chongqing, China, 12–15 June 2019.

- Qin, Y.; Yang, W.; Liu, W. A probability-based caching strategy with consistent hash in named data networking. In Proceedings of the 2018 1st IEEE International Conference on Hot Information-Centric Networking (HotICN), Shenzhen, China, 15–17 August 2018.

- Mishra, S.; Jain, V.K.; Gyoda, K.; Jain, S. A novel content eviction strategy to retain vital contents in NDN-IoT networks. Wirel. Netw. 2024, 31, 2327–2349.

- Iqbal, S.M.A.; Asaduzzaman. Cache-MAB: A reinforcement learning-based hybrid caching scheme in named data networks. Future Gener. Comput. Syst. 2023, 147, 163–178.

- Safitri, C.; Mandala, R.; Nguyen, Q.N.; Sato, T. Artificial Intelligence Approach for Name Classification in Information-Centric Networking-based Internet of Things. In Proceedings of the 2020 IEEE International Conference on Sustainable Engineering and Creative Computing (ICSECC), Cikarang, Indonesia, 16–17 December 2020; pp. 158–163.

- Meng, Y.; Ahmad, A.B. Performance Measurement Through Caching in Named Data Networking Based Internet of Things. IEEE Access 2023, 11, 120569–120584.

- Afanasyev, A.; Burke, J.; Refaei, T.; Wang, L.; Zhang, B.; Zhang, L. A Brief Introduction to Named Data Networking. In Proceedings of the IEEE Military Communications Conference MILCOM, Los Angeles, CA, USA, 29–31 October 2018; pp. 605–611.

- Afanasyev, A.; Moiseenko, I.; Zhang, L. ndnSIM: NDN Simulator for NS-3. 2012. Available online: https://www.researchgate.net/publication/265359778_ndnSIM_ndn_simulator_for_NS-3 (accessed on 20 April 2025).

- Mesarpe. SocialCCNSim: Social CCN Sim is a CCN Simulator, Which Represents Interaction of Users in a CCN Network. GitHub, 2017. Available online: https://github.com/mesarpe/Socialccnsim (accessed on 21 July 2025).

- Saino, L.P.; Psaras, I.; Pavlou, G. Icarus: A caching simulator for information centric networking (ICN). In Proceedings of the SIMUTools 2014—7th International Conference on Simulation Tools and Techniques, Lisbon, Portugal, 17–19 March 2014.

- Mininet. Mininet Overview. 2015. Available online: http://mininet.org/overview/ (accessed on 7 May 2025).

- Kim, D.; Bi, J.; Vasilakos, A.V.; Yeom, I. Security of cached content in NDN. IEEE Trans. Inf. Forensics Secur. 2017, 12, 2933–2944.

- Kumar, N.; Singh, A.K.; Aleem, A.; Srivastava, S. Security attacks in named data networking: A review and research directions. J. Comput. Sci. Technol. 2019, 34, 1319–1350.

- Azamuddin, W.M.H.; Aman, A.H.M.; Sallehuddin, H.; Abualsaud, K.; Mansor, N. The Emerging of Named Data Networking: Architecture, Application, and Technology. IEEE Access 2023, 11, 23620–23633.

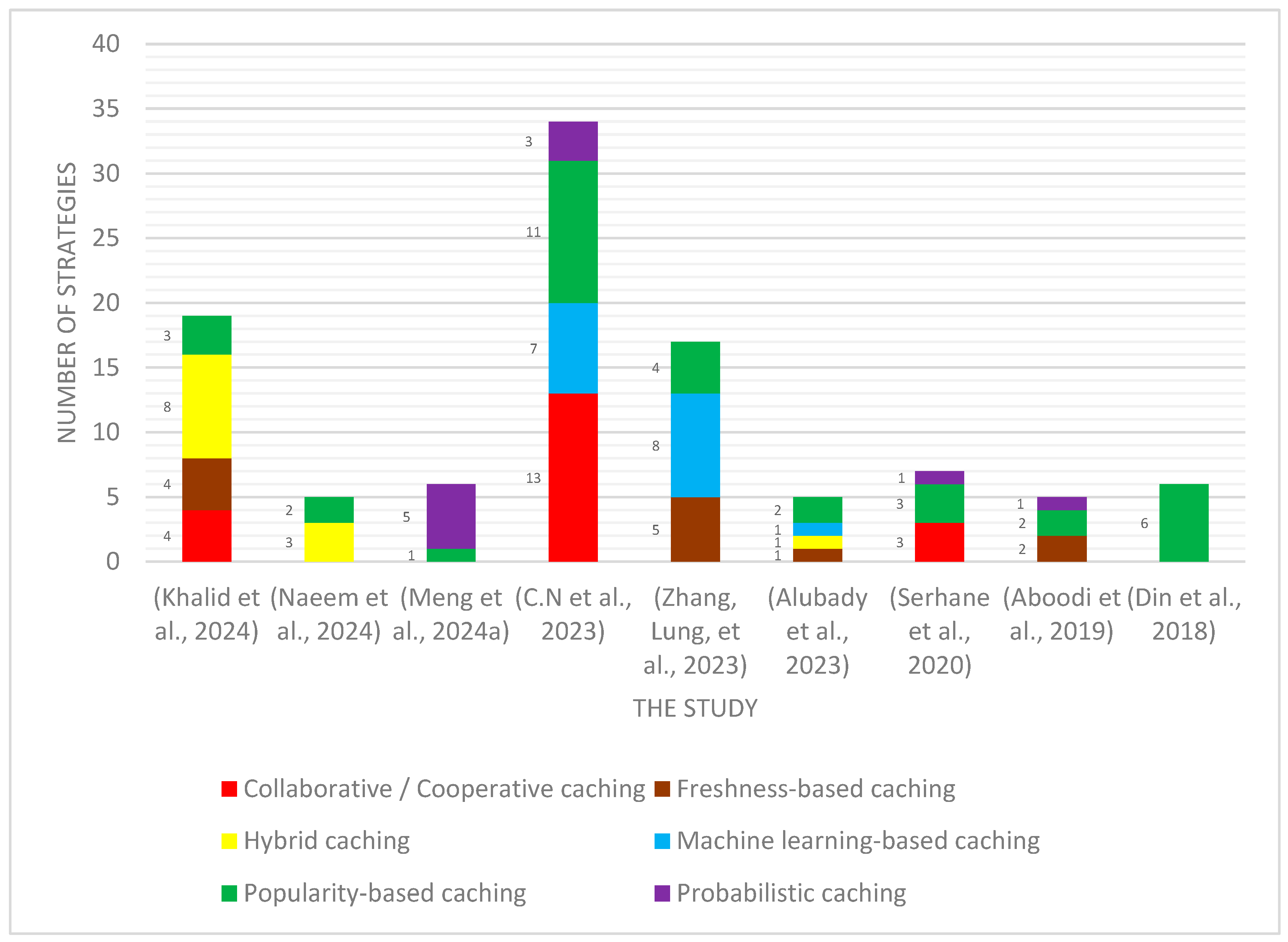

| The Study | Year | Collaborative/Cooperative Caching | Freshness-Based Caching | Hybrid Caching | Machine Learning-Based Caching | Popularity-Based Caching | Probabilistic Caching |

|---|---|---|---|---|---|---|---|

| Number of Strategies | |||||||

| [14] Khalid | 2024 | 4 | 4 | 8 | 3 | ||

| [27] Naeem | 2024 | 3 | 2 | ||||

| [28] Meng | 2024 | 1 | 5 | ||||

| [29] Pruthvi | 2023 | 13 | 7 | 11 | 3 | ||

| [26] Zhang | 2023 | 5 | 8 | 4 | |||

| [30] Alubady | 2023 | 1 | 1 | 1 | 2 | ||

| [31] Serhane | 2020 | 3 | 3 | 1 | |||

| [32] Aboodi | 2019 | 2 | 2 | 1 | |||

| [33] Din | 2018 | 6 | |||||

| Percentage (%) | | 19.23 | 11.53 | 11.53 | 15.38 | 32.69 | 9.61 |

| Abbreviations | Full Form |

|---|---|

| AA | Always Active |

| ABC | Approximate Betweenness Centrality |

| AI | Artificial intelligence |

| ANNs | Artificial neural networks |

| ARMA | Autoregressive Moving Average |

| ARs | Adaptive Routers |

| BF | Bloom filter |

| CCC | Centrally Controlled Caching |

| CCN | Content-centric network |

| CCN-WSNs | Collaborative Caching Strategy for Content-Centric Enabled Wireless Sensor Networks |

| CCS | Client Cache Strategy |

| CEE | Cache Everything Everywhere |

| CES | Caching At the Edge Strategy |

| CFPC | Caching strategy for freshness and popularity content |

| CHR | Cache hit rate |

| CL4M | Cache Less for More |

| CoCa | Cooperative caching in ICN |

| CPCCS | Cluster-Based Popularity and Centrality-Aware Caching Strategy |

| CS | Content Store |

| CSDD | Caching Strategy Distance and Degree |

| CTD | Caching Transient Data |

| DNNs | Deep neural networks |

| DPWCS | Dynamic Popularity Window-Based Caching Scheme |

| DQL | Deep Q-Learning |

| DQNs | Deep Q Networks |

| EABC | Energy-Aware Centrality-Based Caching |

| EC | Edge computing |

| EPPC | Efficient popularity-aware probabilistic caching |

| FIB | Forwarding Information Base |

| FIFO | First In First Out |

| GAs | Genetic algorithms |

| ICANETs | Information-Centric ad hoc Networks |

| ICN | Information-centric networking |

| ICWSNs | Information-centric wireless sensor networks |

| IIoT | Industrial IoT |

| IoT | Internet of Things |

| IP | Internet Protocol |

| KNNs | K-Nearest Neighbors |

| LCC | Lifetime Cooperative Caching |

| LCD | Leave Copy Down |

| LCE | Leave Copy Everywhere |

| LFF | Least Fresh First |

| LFU | Least Frequently Used |

| LPC | Less popular content |

| LPF | Least Popular First |

| LRU | Least Recently Used |

| LSTM | Long short-term memory |

| M2M | Machine-to-machine |

| MAGIC | Max-gain in-network caching |

| MANET | Mobile Ad Hoc Network |

| MDMR | Max Diversity Most Recent |

| MDP | Markov Decision Process |

| ML | Machine learning |

| MPC | Most popular content |

| MRPGA | Multi-Round Parallel Genetic Algorithm |

| NDN | Named Data Networking |

| ndnSIM | Named Data Networking Simulator |

| NMF | Non-Negative Matrix Factorization |

| NS-3 | Network Simulator-3 |

| OPC | Optimal popular content |

| PACC | Popularity-Aware Closeness Centrality |

| PBRS | Popularity and betweenness-based replacement scheme |

| PCCM | Popularity-Based Cache Consistency Management |

| PCS | Periodic caching strategy |

| PEM | Popularity Evolving Model |

| PIT | Pending Interest Table |

| PoSiF | Popularity, size, and freshness-based |

| QoE | Quality of Experience |

| QoS | Quality of service |

| RARS | Resource adaptation resolving server |

| RC | Random Caching |

| RL | Reinforcement learning |

| RNNs | Recurrent neural networks |

| RTT | Round Trip Time |

| SBPC | Software-defined probabilistic caching |

| SCT | Smart caching |

| SDN | Software-defined networking |

| SMCC | Cooperative multi-hop caching |

| SUR | Storage resource utilization |

| TCM | Traffic-aware caching mechanism |

| TCS | Tag-Based Caching Strategy |

| TTL | Time-to-live |

| VANETs | Vehicular Ad Hoc Networks |

| VLRU | Variable Least Recently Used |

| WAVE | Weighted Popularity |

| Study | Proposed Strategy | Key Features | Evaluation and Results | Tool |

|---|---|---|---|---|

| [114] | SBPC (software-defined probabilistic caching) | | | Standard CCN simulation; 50 nodes, 156 links; Zipf and Poisson distributions |

| [115] | Prob-CH (probabilistic caching + consistent hashing) | | | ndnSIM (NS-3) |

| Study | Equations | Description |

|---|---|---|

| [114] | | Terms: the maximum node importance on the path; the transmission delay. |

| [115] | | Terms: the normalized hash value (between 0 and 1) for the content name; the pre-defined caching probability. |
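The two quantities described for [115], a normalized hash of the content name and a pre-defined caching probability, suggest a deterministic threshold test. The following is a minimal sketch, not the authors' implementation. It assumes that content is cached when its normalized hash falls below the caching probability, which the table does not state. The function names, the use of SHA-256, and the example content names are all hypothetical.

```python
import hashlib


def normalized_hash(content_name: str) -> float:
    """Map a content name to a deterministic value in [0, 1).

    Assumption: SHA-256 stands in for whatever hash the scheme uses.
    """
    digest = hashlib.sha256(content_name.encode("utf-8")).digest()
    # Interpret the first 8 bytes as an unsigned integer and scale by 2**64.
    return int.from_bytes(digest[:8], "big") / 2**64


def should_cache(content_name: str, caching_probability: float) -> bool:
    """Assumed rule: cache when the normalized hash is below the threshold."""
    if not 0.0 <= caching_probability <= 1.0:
        raise ValueError("caching_probability must lie in [0, 1]")
    return normalized_hash(content_name) < caching_probability


if __name__ == "__main__":
    # Hypothetical NDN-style names, used only for illustration.
    names = [f"/home/sensor{i}/temperature" for i in range(5)]
    for name in names:
        print(name, should_cache(name, caching_probability=0.3))
```

Because the hash depends only on the name, every node that applies the same function and threshold reaches the same decision for a given content item without exchanging any state. Over many distinct names, the cached fraction approaches the configured probability.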

| Caching Strategy | Application Domain | Theories | Strengths | Critical Weaknesses | Performance Metrics | Tool |

|---|---|---|---|---|---|---|

| Popularity-Based Caching | Smart homes; Industrial monitoring; Static IoT deployments; Consistent access patterns | Stable content access patterns; Sufficient local memory/processing; Historical data predicts future demand; Relatively static network topology | High cache hit ratio for stable patterns; Significant latency reduction; Effective for predictable content demand; Well-suited for static environments | Poor performance in dynamic environments; Outdated content remains cached; Requires local computation of popularity; Assumes stable access patterns | CHR: High; Latency: Low; Energy: Medium; Scalability: Medium | ndnSIM, SocialCCNSim, Icarus |

| Freshness-Based Caching | Health monitoring; Environmental sensing; Smart traffic systems; Time-critical applications | Content tagged with freshness indicators; Sufficient network resources for validation; Stale data more harmful than cache misses; Regular content updates available | Ensures content validity; Supports real-time applications; High CHR with valid content; Reduces energy via proactive eviction | Frequent updates consume bandwidth; High control overhead; Reduced caching efficiency; Energy consumption for validation | CHR: High; Latency: Low; Energy: Medium; Scalability: Medium | ndnSIM |

| Collaborative/Cooperative Caching | Smart campuses; Smart cities; Industrial IoT setups; Dense, well-connected networks | Stable communication between peers; Trust relationships or common protocols; Sufficient bandwidth for coordination; Redundancy is undesirable | Enhanced performance through sharing; Significant energy savings; Avoids duplicate caching; Excellent scalability in dense networks | Complex coordination mechanisms; High signaling overhead; Requires trust between devices; Impractical in heterogeneous networks | CHR: High; Latency: Low; Energy: High; Scalability: High | ndnSIM, Icarus |

| Hybrid Caching | Diverse IoT environments; Edge-cloud networks; Mixed content types; Adaptive systems | Multiple content types present; Heterogeneous node capabilities; Network supports lightweight intelligence; No single policy sufficient | High adaptability; Combines multiple mechanisms; Excellent scalability; Balances diverse objectives | Increased implementation complexity; Challenging parameter fine-tuning; Potential scalability issues; Requires careful coordination management | CHR: High; Latency: Low; Energy: High; Scalability: High | ndnSIM, Icarus |

| Machine Learning-Based Caching | Edge data centers; AI-enabled routers; Large-scale networks; High-traffic environments | Sufficient computational capabilities; Access to historical training data; Stable learning environments; Models can generalize well | Powerful prediction capabilities; Dynamic decision-making; Significant CHR improvements; Optimizes energy consumption | High computational cost; Barrier for real-time deployment; Requires sufficient training data; May not work in new environments | CHR: High; Latency: Low; Energy: High; Scalability: Medium | ndnSIM, Icarus |

| Probabilistic Caching | Large-scale distributed networks; Vehicular networks; Mobile IoT applications; High content diversity scenarios | Lightweight decisions preferable; Detailed metrics too costly to maintain; High content diversity; Intermittent connectivity acceptance | Simple implementation; Excellent load balancing; Highly scalable; Works in dynamic environments | Suboptimal cache utilization; May miss popular content; Can cache rarely accessed content; Performance depends on configuration | CHR: Medium; Latency: Medium; Energy: Variable; Scalability: High | ndnSIM |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Alahmad, A.A.; Mohd Aman, A.H.; Qamar, F.; Mardini, W. Efficient Caching Strategies in NDN-Enabled IoT Networks: Strategies, Constraints, and Future Directions. Sensors 2025, 25, 5203. https://doi.org/10.3390/s25165203
