4.1. Experimental Setup

Training Strategy. We trained our model on the DUTS dataset (accessed on 3 July 2025) [61], which provides 15,572 images. We allocated 10,553 images for training and 5019 for validation, resizing all of them to

pixels. To create multi-focus image pairs, we converted the ground-truth saliency annotations into binary masks. These masks then guided the application of Gaussian blur, with kernel sizes from 3 to 21, to generate realistic training samples (see SwinMFF [21] for details). For network optimization, we used the AdamW optimizer with an initial learning rate of

,

parameters set to

, an

of

, and a weight decay of

. A CosineAnnealingLR scheduler was used to progressively reduce the learning rate over the course of training. The model was trained for 20 epochs with a batch size of 16 on an Nvidia A6000 GPU system operating at 2.90 GHz. The entire framework was implemented in PyTorch 2.6.0.
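The mask-guided data synthesis and the learning-rate schedule described above can be sketched as follows. This is a minimal NumPy sketch under stated assumptions, not the authors' implementation. The helper names are hypothetical. The blur sigma follows OpenCV's default rule for `sigma=0`, which is an assumption. Kernel sizes are drawn uniformly from the odd values 3 to 21, and source A keeps the masked foreground sharp while source B keeps the background sharp. The schedule uses the closed form documented for `torch.optim.lr_scheduler.CosineAnnealingLR`, assuming one step per epoch, `t_max = 20`, and `eta_min = 0`. The initial learning rate is not given in this text, so `base_lr` is only a placeholder.

```python
import math

import numpy as np


def gaussian_kernel_1d(ksize: int) -> np.ndarray:
    """Normalized 1-D Gaussian kernel of odd length `ksize`.

    Assumption: sigma follows OpenCV's rule for sigma=0,
    sigma = 0.3 * ((ksize - 1) * 0.5 - 1) + 0.8.
    """
    sigma = 0.3 * ((ksize - 1) * 0.5 - 1) + 0.8
    x = np.arange(ksize) - (ksize - 1) / 2.0
    k = np.exp(-(x ** 2) / (2.0 * sigma ** 2))
    return k / k.sum()


def gaussian_blur(img: np.ndarray, ksize: int) -> np.ndarray:
    """Separable Gaussian blur of an (H, W) or (H, W, C) image with reflect padding."""
    k = gaussian_kernel_1d(ksize)
    r = ksize // 2
    out = img.astype(np.float64)
    for axis in (0, 1):  # filter along rows, then along columns
        pad = [(0, 0)] * out.ndim
        pad[axis] = (r, r)
        padded = np.pad(out, pad, mode="reflect")
        # 'valid' convolution of the padded signal returns the original length
        out = np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), axis, padded)
    return out


def make_multifocus_pair(img, mask, rng):
    """Hypothetical helper: split an all-in-focus image into two partially blurred sources.

    `mask` is a binary (H, W) array (1 = foreground). Source A keeps the foreground
    sharp and blurs the background; source B does the opposite.
    """
    ksize = int(rng.choice(np.arange(3, 22, 2)))  # odd kernel sizes 3, 5, ..., 21
    blurred = gaussian_blur(img, ksize)
    m = mask.astype(np.float64)
    if img.ndim == 3:
        m = m[..., None]  # broadcast the mask over color channels
    src_a = m * img + (1.0 - m) * blurred
    src_b = (1.0 - m) * img + m * blurred
    return src_a, src_b, ksize


def cosine_annealing_lr(epoch: int, base_lr: float, t_max: int, eta_min: float = 0.0) -> float:
    """Closed-form cosine schedule documented for torch.optim.lr_scheduler.CosineAnnealingLR."""
    return eta_min + (base_lr - eta_min) * (1.0 + math.cos(math.pi * epoch / t_max)) / 2.0


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    image = rng.random((64, 64, 3))
    fg = np.zeros((64, 64))
    fg[16:48, 16:48] = 1.0
    a, b, k = make_multifocus_pair(image, fg, rng)
    print(a.shape, b.shape, k)
    # base_lr=1e-4 is a placeholder; the paper's value is not reproduced here
    print([cosine_annealing_lr(e, 1e-4, t_max=20) for e in (0, 10, 20)])
```

In a real pipeline, an optimized blur such as OpenCV's `cv2.GaussianBlur` would normally replace the explicit separable convolution. The NumPy version is shown only to keep the sketch dependency-light.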

Datasets for Evaluation. The performance of MLP-MFF was evaluated on three prominent MFF benchmarks: Lytro [28], MFFW [62], and MFI-WHU [41]. The Lytro dataset, consisting of 20 image pairs captured with a light-field camera, was used for both qualitative and quantitative analysis. MFFW, whose 13 image pairs exhibit pronounced defocus, and MFI-WHU, which offers 120 image pairs synthetically generated via Gaussian blur, were likewise used for both qualitative and quantitative assessment.

Methods for Comparison. To comprehensively evaluate the performance of the proposed MLP-MFF, we compare it with state-of-the-art methods from several categories. For traditional methods, we include both spatial-domain and transform-domain approaches. The spatial-domain methods are SSSDI [63], QUADTREE [64], DSIFT [65], SRCF [28], GFDF [66], BRW [67], MISF [68], and MDLSR_RFM [69]. The transform-domain methods are DWT [14], DTCWT [70], NSCT [71], CVT [72], GFF [73], SR [74], ASR [75], and ICA [76]. For decision map-based deep learning methods, we compare with CNN [19], ECNN [77], DRPL [78], SESF [31], MFIF-GAN [33], MSFIN [20], GACN [79], and ZMFF [35]. For end-to-end deep learning methods, we compare with IFCNN-MAX [39], U2Fusion [40], MFF-GAN [41], SwinFusion [22], FusionDiff [23], SwinMFF [21], and DDBFusion [42]. These methods represent the current state of the art in MFF and cover a range of technical approaches and architectural designs. Comparing against them allows us to thoroughly evaluate the effectiveness and advantages of MLP-MFF.

Evaluation metrics. To comprehensively evaluate the performance of different fusion methods, we employ eight widely-used metrics that can be categorized based on their theoretical foundations. Information theory-based metrics include Entropy (EN) and Mutual Information (MI), which measure the information content and transfer in the fused image. Edge and gradient-based metrics consist of Edge Information (EI), Spatial Frequency (SF), Average Gradient (AVG), and , which assess the preservation of edge details and image clarity. Structure and visual quality-based metrics include Structural Similarity Index Measure (SSIM) and Visual Information Fidelity (VIF), which evaluate the structural preservation and visual quality of the fused image. These metrics provide a comprehensive evaluation framework that considers different aspects of fusion quality, including information content, edge preservation, structural similarity, and visual quality.
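For readers unfamiliar with the metrics, several of them can be computed as shown below. This is a minimal NumPy sketch of commonly used textbook definitions of EN, MI, SF, and AVG, not the exact evaluation code behind the reported tables. Normalization conventions vary between toolboxes. For example, some SF implementations divide by M·N rather than by the number of differences, so absolute values may not match published numbers. The function names are illustrative.

```python
import numpy as np


def entropy(img: np.ndarray) -> float:
    """EN: Shannon entropy (bits) of the 256-bin grey-level histogram of a uint8 image."""
    hist = np.bincount(img.ravel(), minlength=256).astype(np.float64)
    p = hist / hist.sum()
    p = p[p > 0]  # 0 * log(0) is treated as 0
    return float(-(p * np.log2(p)).sum())


def mutual_information(x: np.ndarray, y: np.ndarray) -> float:
    """MI (bits) between two uint8 images, estimated from their 256x256 joint histogram."""
    joint, _, _ = np.histogram2d(x.ravel(), y.ravel(), bins=256, range=[[0, 256], [0, 256]])
    pxy = joint / joint.sum()
    px = pxy.sum(axis=1, keepdims=True)  # marginal of x, shape (256, 1)
    py = pxy.sum(axis=0, keepdims=True)  # marginal of y, shape (1, 256)
    nz = pxy > 0
    return float((pxy[nz] * np.log2(pxy[nz] / (px @ py)[nz])).sum())


def fusion_mi(src_a: np.ndarray, src_b: np.ndarray, fused: np.ndarray) -> float:
    """Fusion MI: information transferred from both sources, MI(A, F) + MI(B, F)."""
    return mutual_information(src_a, fused) + mutual_information(src_b, fused)


def spatial_frequency(img: np.ndarray) -> float:
    """SF = sqrt(RF^2 + CF^2) from horizontal and vertical first differences."""
    x = img.astype(np.float64)
    rf2 = np.mean(np.diff(x, axis=1) ** 2)  # row frequency (horizontal differences)
    cf2 = np.mean(np.diff(x, axis=0) ** 2)  # column frequency (vertical differences)
    return float(np.sqrt(rf2 + cf2))


def average_gradient(img: np.ndarray) -> float:
    """AVG: mean of sqrt((gx^2 + gy^2) / 2) over the (H-1, W-1) forward-difference grid."""
    x = img.astype(np.float64)
    gx = np.diff(x, axis=1)[:-1, :]  # crop both gradients to a common grid
    gy = np.diff(x, axis=0)[:, :-1]
    return float(np.mean(np.sqrt((gx ** 2 + gy ** 2) / 2.0)))
```

All four metrics are "higher is better" in the sense used in the tables. A constant image scores zero on EN, SF, and AVG.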

4.2. Experimental Results

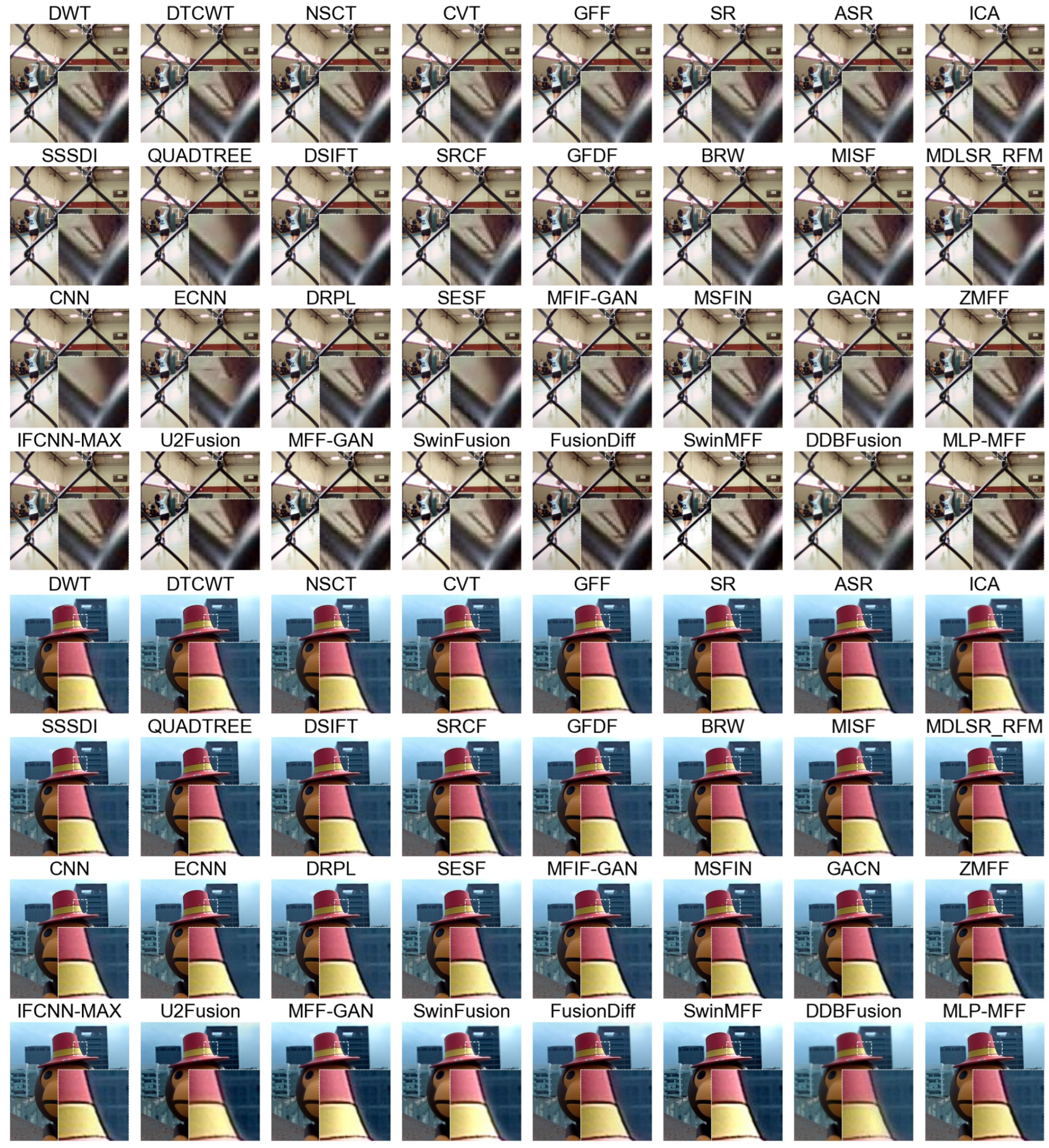

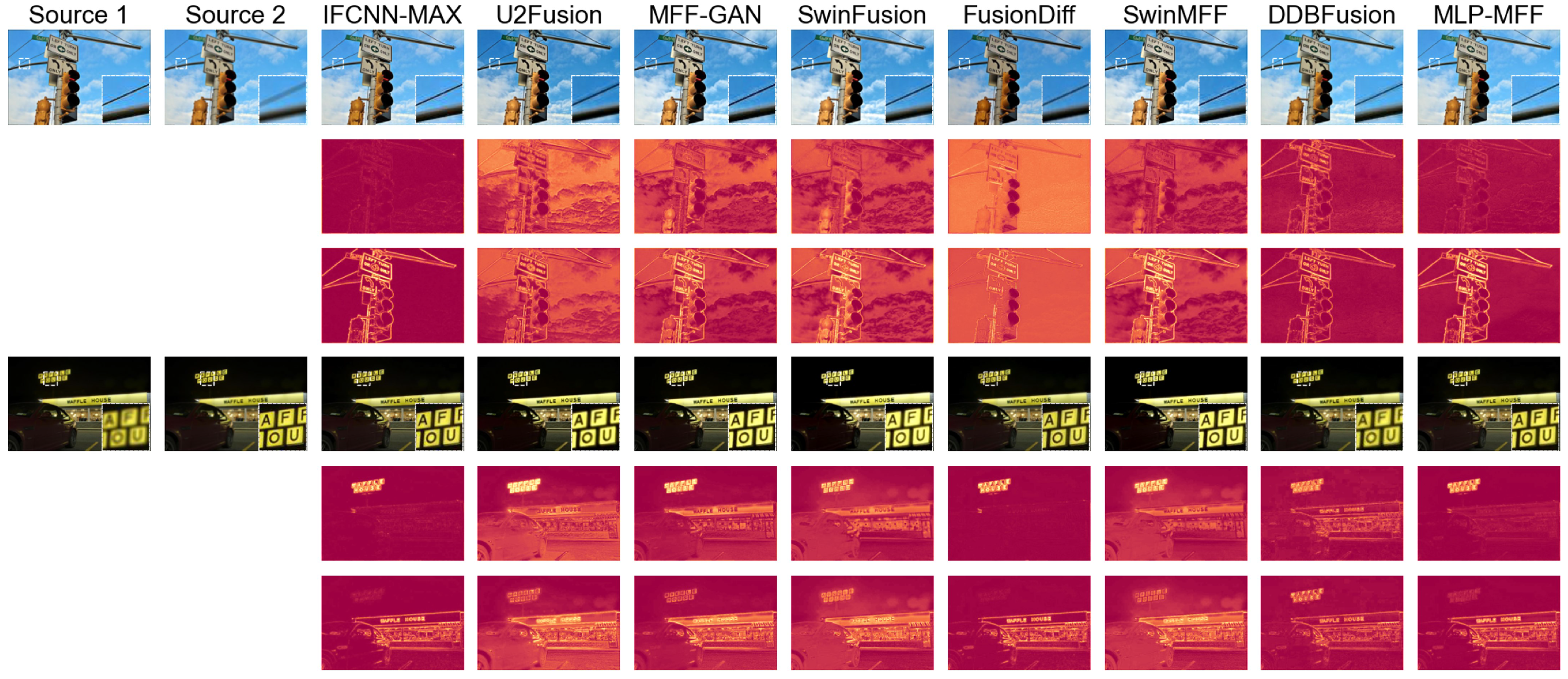

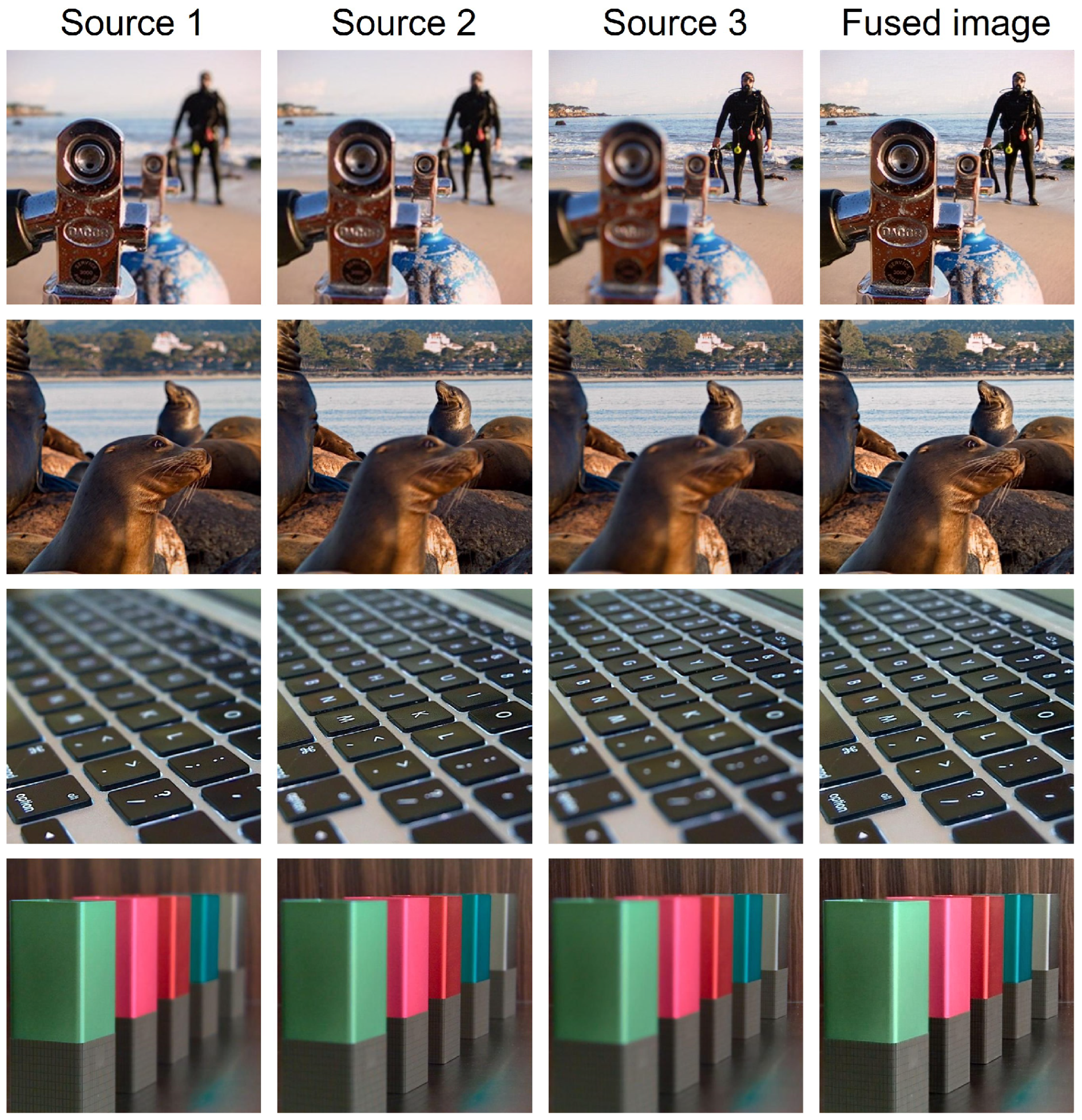

Qualitative comparison. In Figure 5, we present a comparison of various MFF methods on the Lytro dataset [28]. The figure is organized into four rows, each corresponding to one category of fusion approaches: transform-domain methods, spatial-domain methods, decision map-based deep learning methods, and end-to-end deep learning methods. The first example shows that transform-domain methods and end-to-end deep learning methods preserve intricate details in complex scenes better than the other categories and exhibit noticeably fewer fusion artifacts. The second example highlights the advantage of our proposed method over existing end-to-end deep learning approaches. Our method effectively suppresses artifacts along object edges, a common weakness of the other end-to-end techniques, which show varying degrees of such artifacts in these examples.

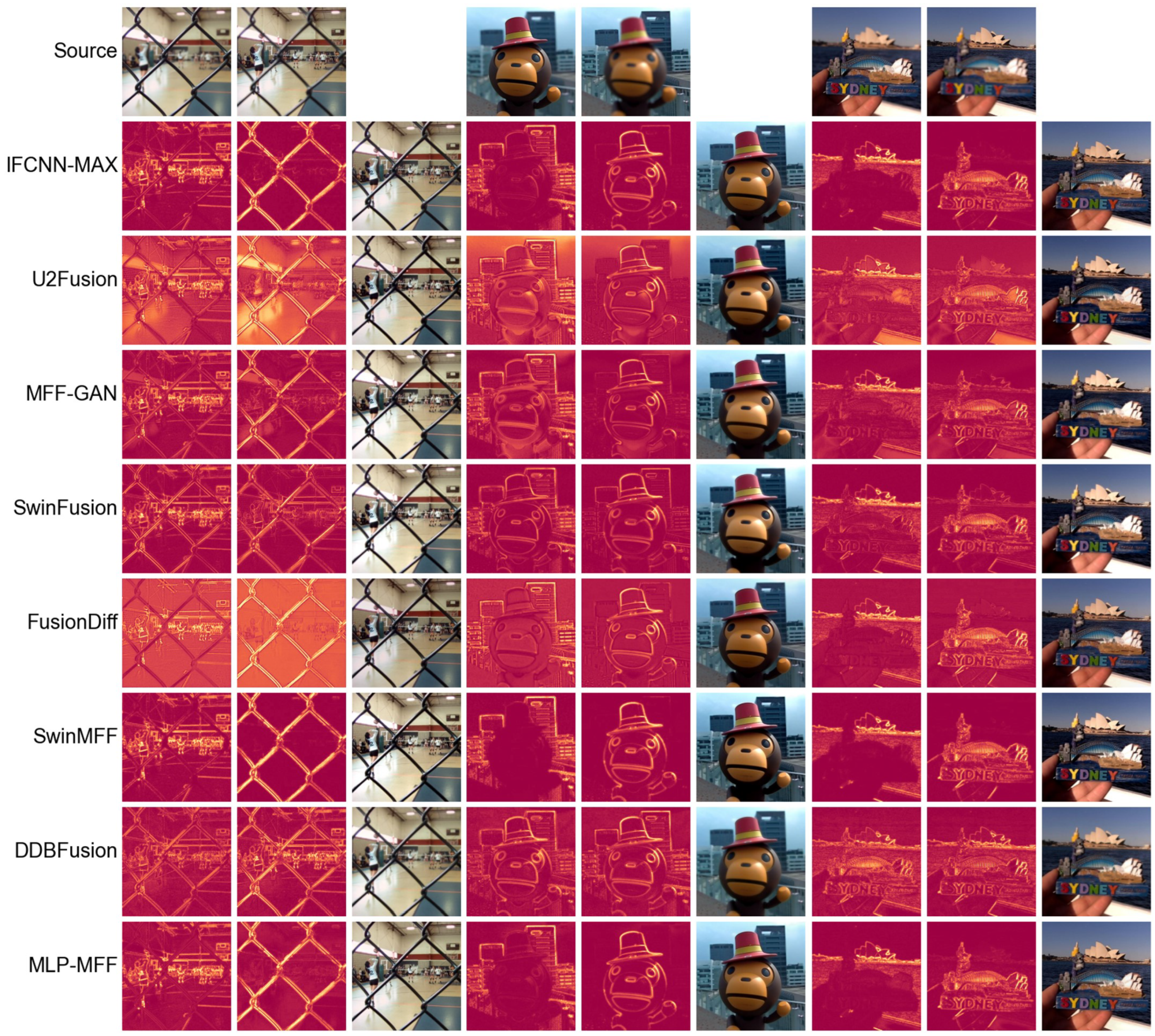

To compare the methods more intuitively, we further use the difference maps between each fusion result and the two source images. Ideally, the fused image coincides with each source image in that source's in-focus region, so each difference map should be close to zero where the other is not. A greater discrepancy between the two difference maps therefore indicates a better fusion result [21]. First, we compare our method with decision map-based deep learning approaches in Figure 6. Our method is an end-to-end approach, in which the pixel values of the fused image are inferred by the network rather than sampled from the source images. Even so, its difference maps are comparable to those of decision map-based methods. This shows that, like SwinMFF [21], our method achieves excellent pixel-level fidelity. Next, we compare our method with other end-to-end approaches in Figure 7. Our method, along with IFCNN-MAX and SwinMFF, clearly outperforms the other methods in this comparison. Although IFCNN-MAX and SwinMFF produce slightly better results than our method, their computational costs are approximately 16 and 43 times higher, respectively. Our method therefore strikes a favorable balance between computational efficiency and fusion quality.
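The difference-map comparison can be illustrated with a toy example. This is a minimal sketch assuming grey-level images as NumPy arrays. The scalar `map_discrepancy` is a hypothetical summary introduced here for illustration; the comparison in the text is visual, not based on this number. In the toy setup, a perfect fusion reproduces the sharp image, which makes the two maps complementary. Plain averaging of the sources instead makes the two maps identical.

```python
import numpy as np


def difference_maps(fused, src_a, src_b):
    """Return (|F - A|, |F - B|) as float arrays."""
    f = np.asarray(fused, dtype=np.float64)
    d_a = np.abs(f - np.asarray(src_a, dtype=np.float64))
    d_b = np.abs(f - np.asarray(src_b, dtype=np.float64))
    return d_a, d_b


def map_discrepancy(d_a, d_b) -> float:
    """Hypothetical scalar summary: mean absolute gap between the two difference maps.

    0 means the maps are identical (e.g. F = (A + B) / 2); larger values mean the
    maps are more complementary, which the text associates with better fusion.
    """
    return float(np.mean(np.abs(d_a - d_b)))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    sharp = rng.random((32, 32))
    soft = np.full_like(sharp, sharp.mean())  # stand-in for a heavily blurred copy
    mask = np.zeros_like(sharp)
    mask[:, :16] = 1.0  # left half in focus in A, right half in focus in B
    src_a = mask * sharp + (1 - mask) * soft
    src_b = (1 - mask) * sharp + mask * soft
    for name, fused in [("ideal", sharp), ("average", (src_a + src_b) / 2)]:
        print(name, round(map_discrepancy(*difference_maps(fused, src_a, src_b)), 4))
```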

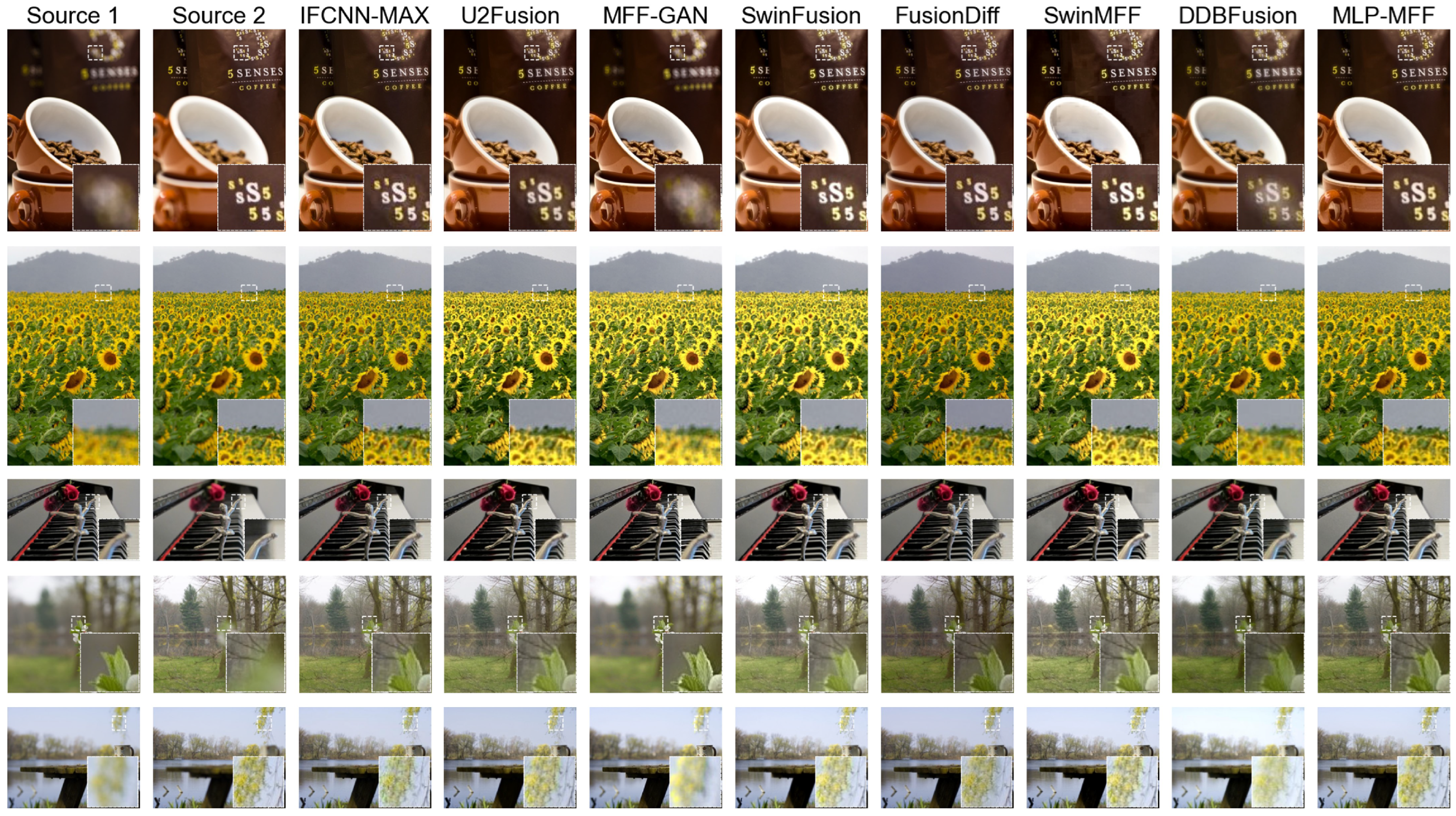

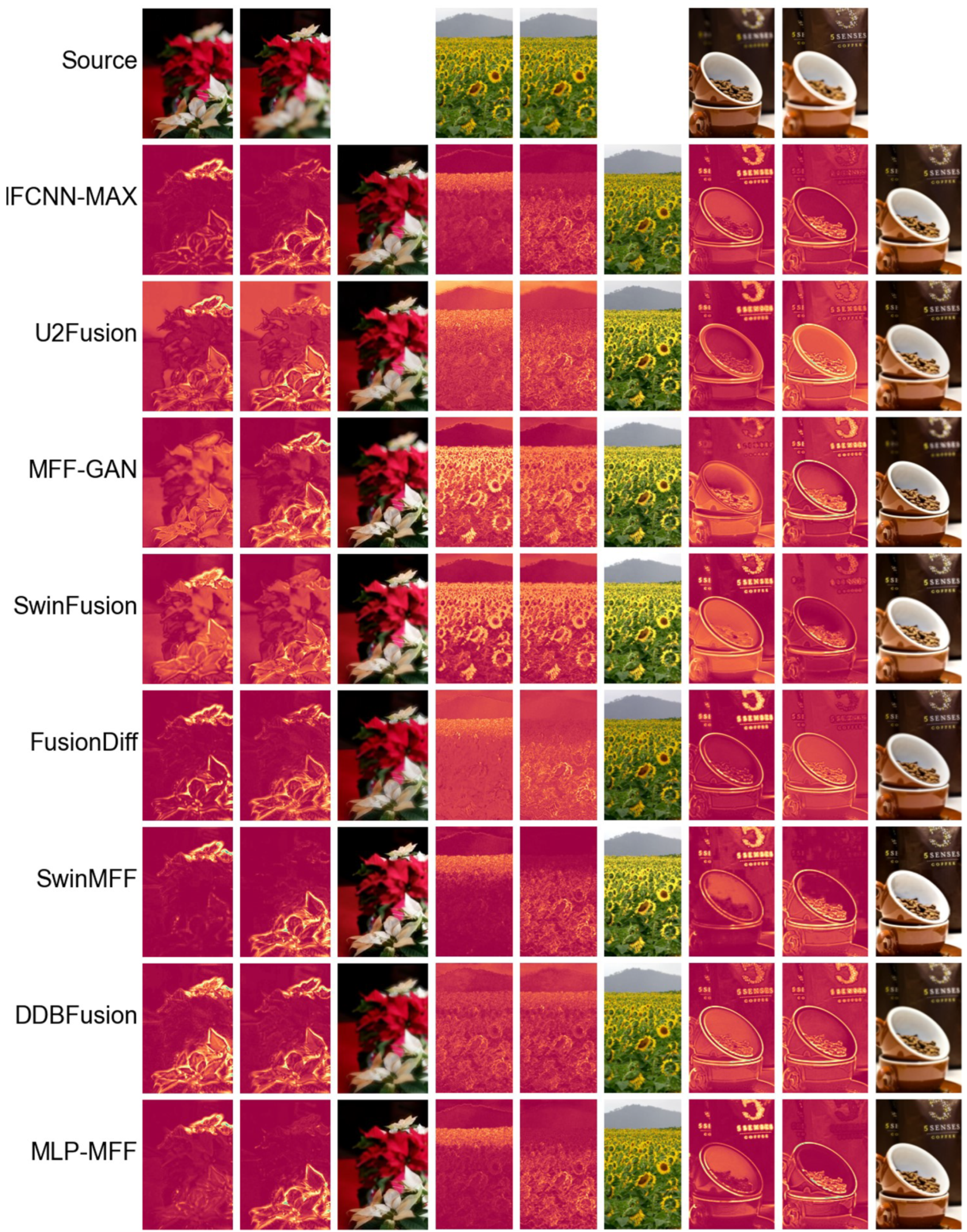

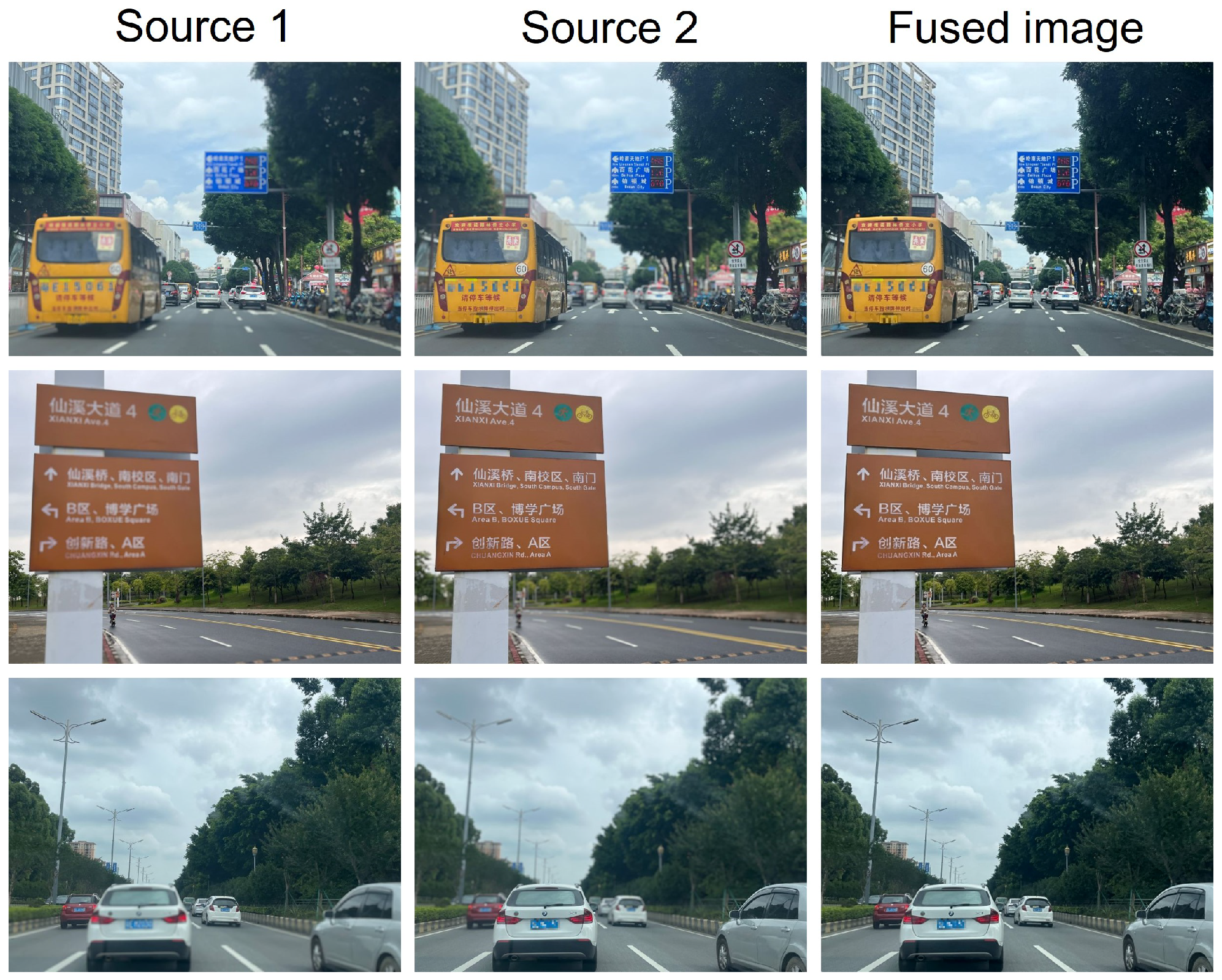

To further evaluate the end-to-end methods under strong defocus, we conducted an additional comparison on the MFFW dataset [62], shown in Figure 8. Some methods, such as MFF-GAN [41] and DDBFusion [42], exhibit noticeable fusion errors in strongly defocused scenes. In contrast, our proposed method maintains top-tier fusion quality across all examples, with virtually no visible artifacts at object edges. We again use difference maps for further comparison in Figure 9. Several methods, including MFF-GAN [41], DDBFusion [42], and SwinFusion [22], produce two quite similar difference maps. This suggests that their fusion results fail to adequately distinguish and fuse the foreground and background in strongly defocused scenes. By contrast, our proposed method, along with SwinMFF [21] and IFCNN-MAX [39], performs markedly better under these challenging conditions.

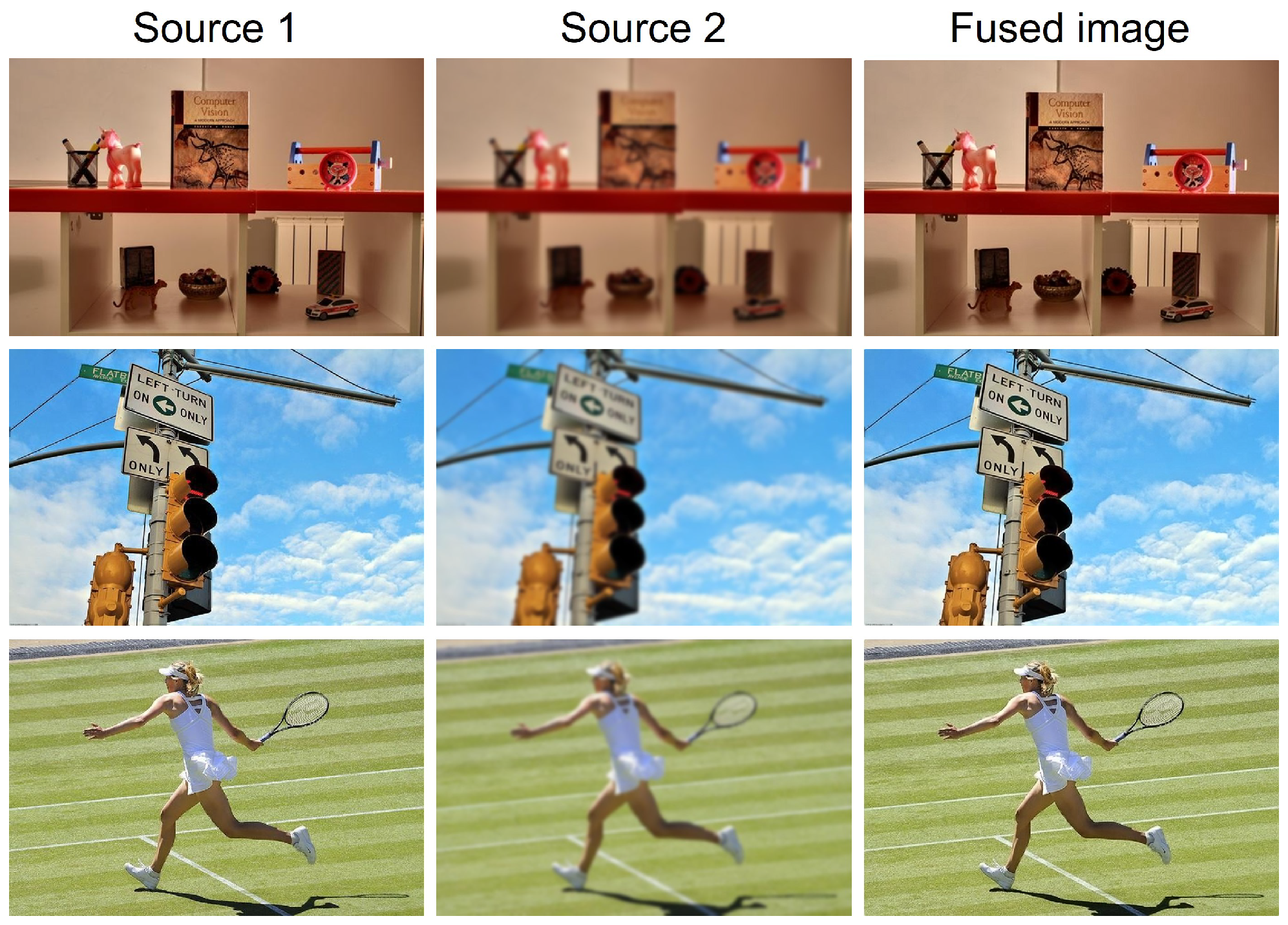

In Figure 10, we further visualize the fusion results of various end-to-end deep learning methods on the MFI-WHU dataset [41], together with the corresponding difference maps. As Figure 10 shows, the MFI-WHU dataset offers a more diverse range of examples, including small objects and high-contrast scenes. The first example demonstrates that our proposed method remains robust even with small objects. Many other methods, such as DDBFusion [42] and U2Fusion [40], are susceptible to noise and artifacts in this case. Interestingly, the diffusion model-based FusionDiff [23] generally performs poorly and often produces noticeable color casts in the previous examples, yet it performs exceptionally well in high-contrast scenes.

Across all three datasets, our proposed method consistently delivers high-quality fusion results. It handles complex scenes effectively, preserves fine details, and maintains excellent pixel-level fidelity. It also distinguishes and fuses foreground and background elements well, even under challenging conditions such as strong defocus.

Quantitative comparison. Table 1 presents a comprehensive quantitative comparison of MFF methods on the Lytro dataset [28]. The best result for each metric is shown in bold and the second-best is underlined. A colored background highlights the proposed method, and an upward-pointing arrow (↑) next to a metric's name indicates that higher values are better. This formatting convention is used in all tables in this paper. MLP-MFF achieves the highest scores in six of the eight metrics. The end-to-end deep learning methods show varying performance levels. DDBFusion achieves the highest SSIM score (0.8661) but performs poorly on other metrics such as EI (48.1600) and SF (12.1484). SwinMFF shows balanced performance across multiple metrics but still falls short of MLP-MFF overall.
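The bold/underline convention and the "six of eight metrics" count amount to a per-metric ranking. The following is a small sketch of that bookkeeping, assuming every metric is higher-is-better as marked by ↑. The function name and the toy scores in the test are hypothetical and are not values from Table 1.

```python
def rank_table(scores):
    """scores: {method: {metric: value}}, every metric higher-is-better (↑).

    Returns the best and second-best method per metric (bold / underline in the
    tables) and the number of metrics each method wins. Ties keep insertion order
    because Python's sort is stable; a real table would need an explicit tie rule.
    """
    metrics = list(next(iter(scores.values())))
    best, second = {}, {}
    wins = {method: 0 for method in scores}
    for metric in metrics:
        ordered = sorted(scores, key=lambda m: scores[m][metric], reverse=True)
        best[metric], second[metric] = ordered[0], ordered[1]
        wins[ordered[0]] += 1
    return best, second, wins
```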

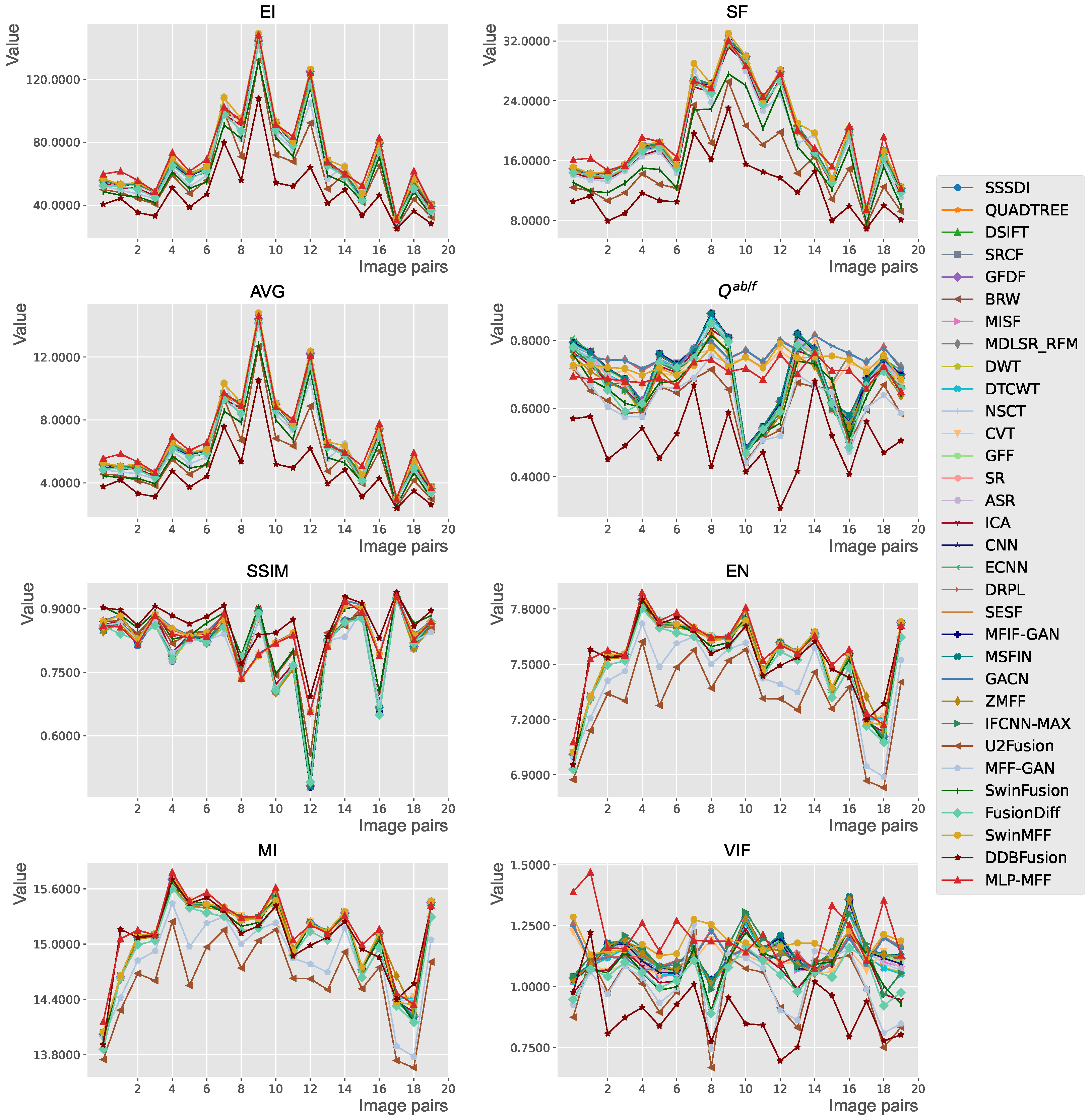

In Figure 11, we present the quantitative metrics of each method's fusion results for every image pair in the Lytro dataset [28]. The red line represents our proposed method. The results show that the quantitative advantage of our method extends across nearly the entire dataset, rather than being concentrated in a few isolated examples that could skew the averaged metrics. This consistency across diverse examples indicates that our method generalizes well.
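The claim that the advantage "extends across nearly the entire dataset" can be checked per metric by counting the image pairs on which a method scores strictly highest. This is a minimal sketch assuming per-image scores for one higher-is-better metric. The function name and the toy numbers in the test are hypothetical.

```python
import numpy as np


def per_image_win_rate(per_image, method):
    """Fraction of image pairs on which `method` scores strictly highest.

    per_image: {method_name: sequence of per-pair scores} for ONE higher-is-better
    metric; every sequence must have the same length (one entry per image pair).
    """
    names = list(per_image)
    stacked = np.stack([np.asarray(per_image[n], dtype=float) for n in names])  # (methods, pairs)
    idx = names.index(method)
    others = np.delete(stacked, idx, axis=0)
    return float(np.mean(stacked[idx] > others.max(axis=0)))
```

A win rate near 1.0 across metrics would support the "nearly the entire dataset" reading. A high mean score combined with a low win rate would instead point to a few outlier pairs driving the average.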

In Table 2 and Table 3, we provide further quantitative comparisons of deep learning-based methods on the MFFW dataset [62] and the MFI-WHU dataset [41], respectively. On both datasets, our proposed method ranks first or second on multiple metrics. This further demonstrates that it maintains excellent fusion performance across a variety of scenarios.

Taken together, the quantitative results validate the advantages of MLP-MFF across multiple evaluation metrics. MLP-MFF performs strongly in terms of information entropy, edge information, structural similarity, and visual information fidelity, outperforming both traditional methods and existing deep learning approaches on most metrics. Its stable performance across different public datasets further underscores its generalization capability and robustness.

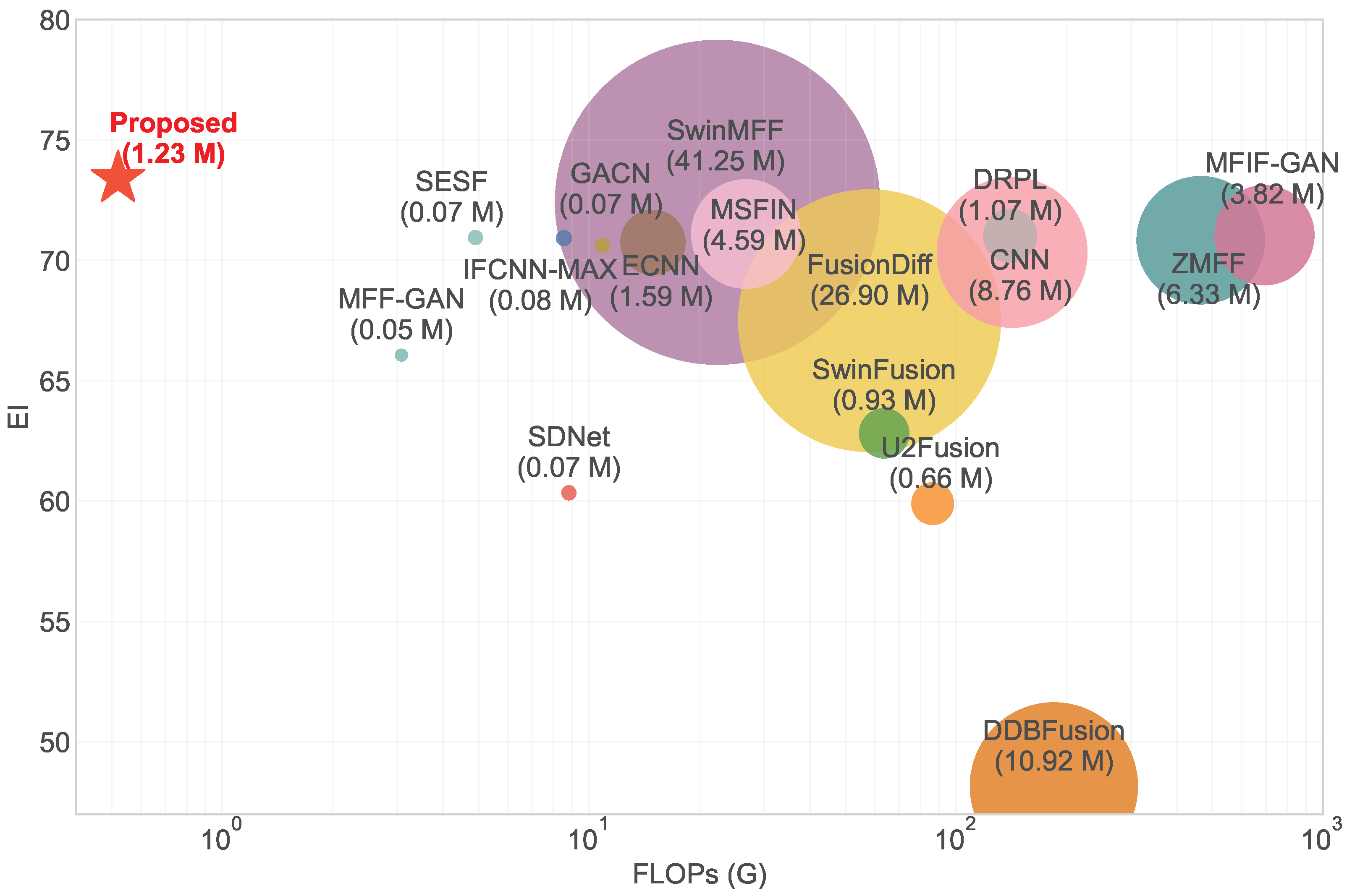

Efficiency Analysis. To evaluate the computational efficiency of MLP-MFF, we compare it with state-of-the-art learning-based MFF methods in terms of model size, computational complexity (FLOPs), and inference time. All FLOPs are calculated on

input images to ensure a fair comparison, and inference time is measured as the average processing time per image on the MFI-WHU dataset [41]. As shown in Table 4, MLP-MFF has clear efficiency advantages. It requires the fewest FLOPs (0.52G) among all compared methods, an 83.12% reduction relative to the previous most efficient method (MFF-GAN, 3.08G FLOPs). It also has the shortest inference time (0.01s), an 83.33% reduction relative to the previous fastest method (MFF-GAN, 0.06s). MLP-MFF's model size (1.23M parameters) is not the smallest among all methods, but it remains highly competitive, especially given the fusion performance shown in Table 1. It is also far smaller than recent Transformer-based methods such as SwinMFF (41.25M), which makes MLP-MFF more practical for deployment in resource-constrained environments. Overall, MLP-MFF achieves a favorable balance between computational efficiency and fusion performance, making it a practical solution for real-world applications.
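The percentages above follow from a simple relative-reduction formula, and per-image timing is an average over a dataset. The sketch below reproduces both under stated assumptions. The helper names are hypothetical, and the timing loop is a generic harness, not the authors' benchmarking script. Note that an 83.33% reduction in time (0.06s to 0.01s) corresponds to roughly a 6× speed-up, given the rounded values.

```python
import time


def relative_reduction(ours: float, baseline: float) -> float:
    """Percentage reduction of `ours` relative to `baseline`."""
    return (baseline - ours) / baseline * 100.0


def mean_inference_time(fn, inputs, warmup: int = 3) -> float:
    """Average wall-clock seconds per call of `fn` over `inputs`, after a few warm-up calls.

    For GPU models, call torch.cuda.synchronize() inside `fn` (or before reading
    the timer), because CUDA kernels are launched asynchronously.
    """
    for x in inputs[:warmup]:
        fn(x)
    start = time.perf_counter()
    for x in inputs:
        fn(x)
    return (time.perf_counter() - start) / len(inputs)


if __name__ == "__main__":
    print(round(relative_reduction(0.52, 3.08), 2))  # FLOPs (G), MLP-MFF vs MFF-GAN: 83.12
    print(round(relative_reduction(0.01, 0.06), 2))  # s/image, MLP-MFF vs MFF-GAN: 83.33
```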

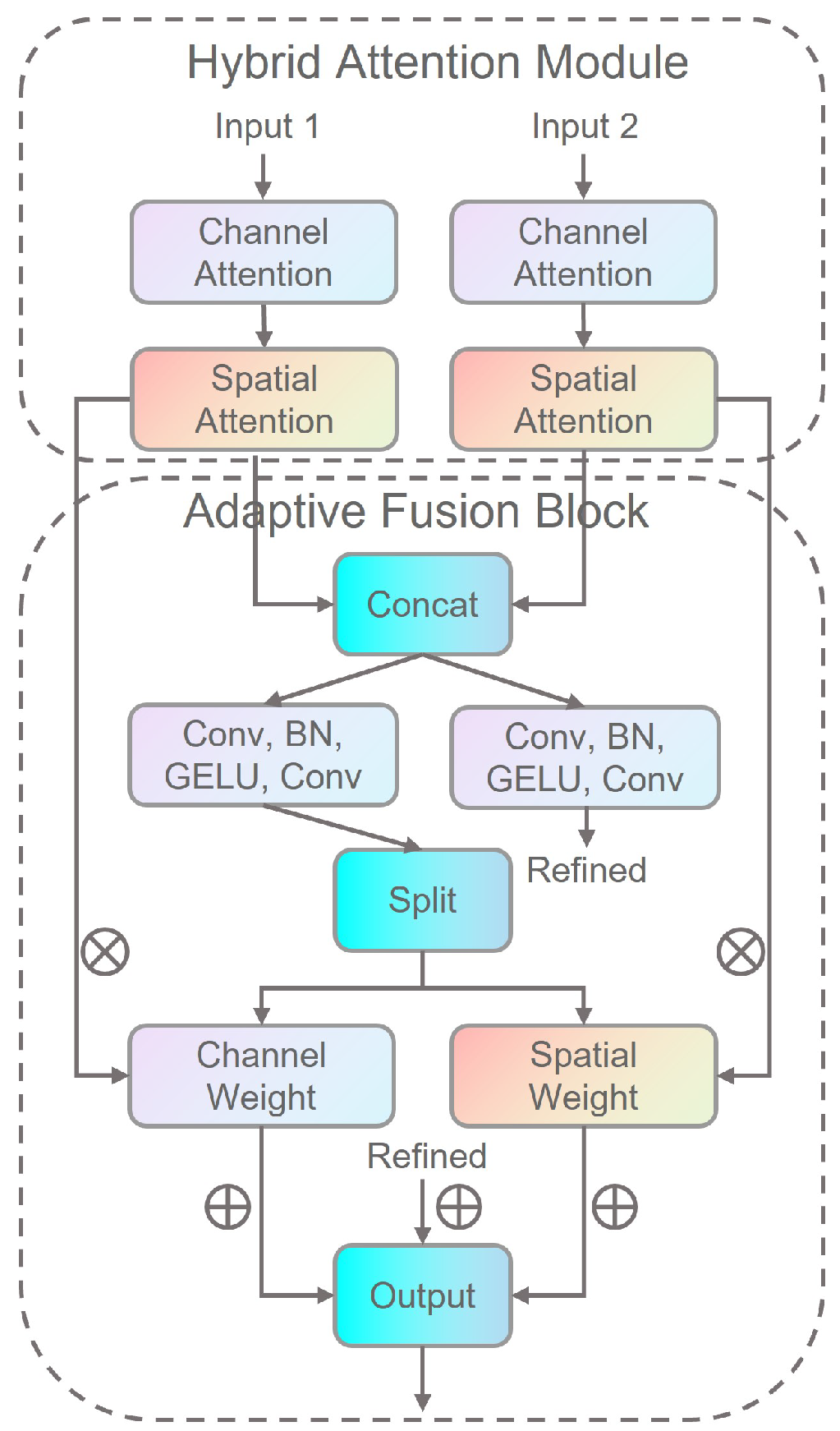

4.7. Ablation Study

To validate the effectiveness of the proposed DAMFFM-HA module, we conducted an ablation study on the Lytro dataset, with results presented in Table 5. When the DAMFFM-HA module is removed and a pixel-wise dot product is used directly as the fusion scheme, performance declines across the metrics. Conversely, incorporating DAMFFM-HA improves all metrics. This demonstrates the module's substantial role in enhancing the edge information, structural similarity, and visual information fidelity of the fused image.

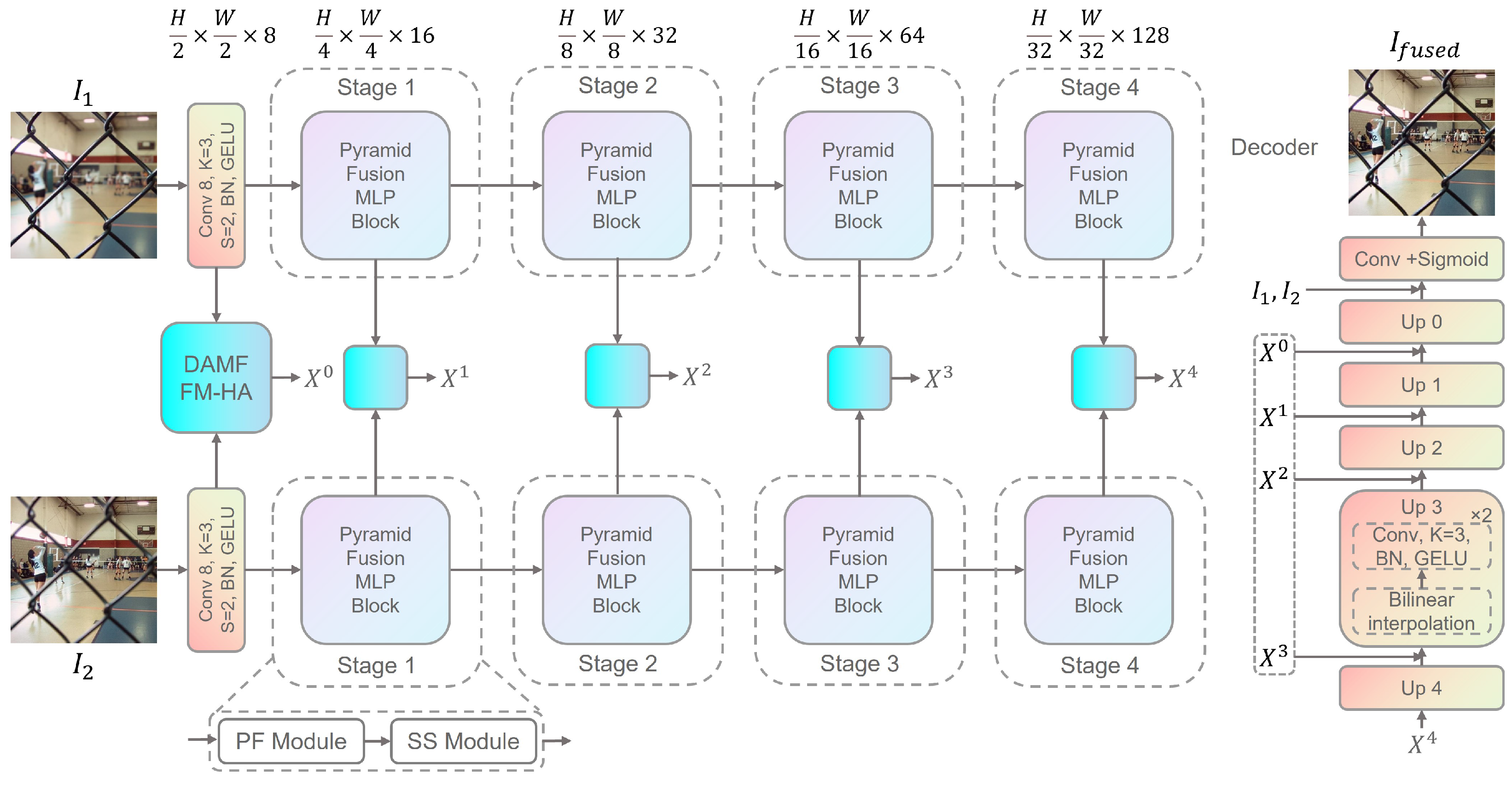

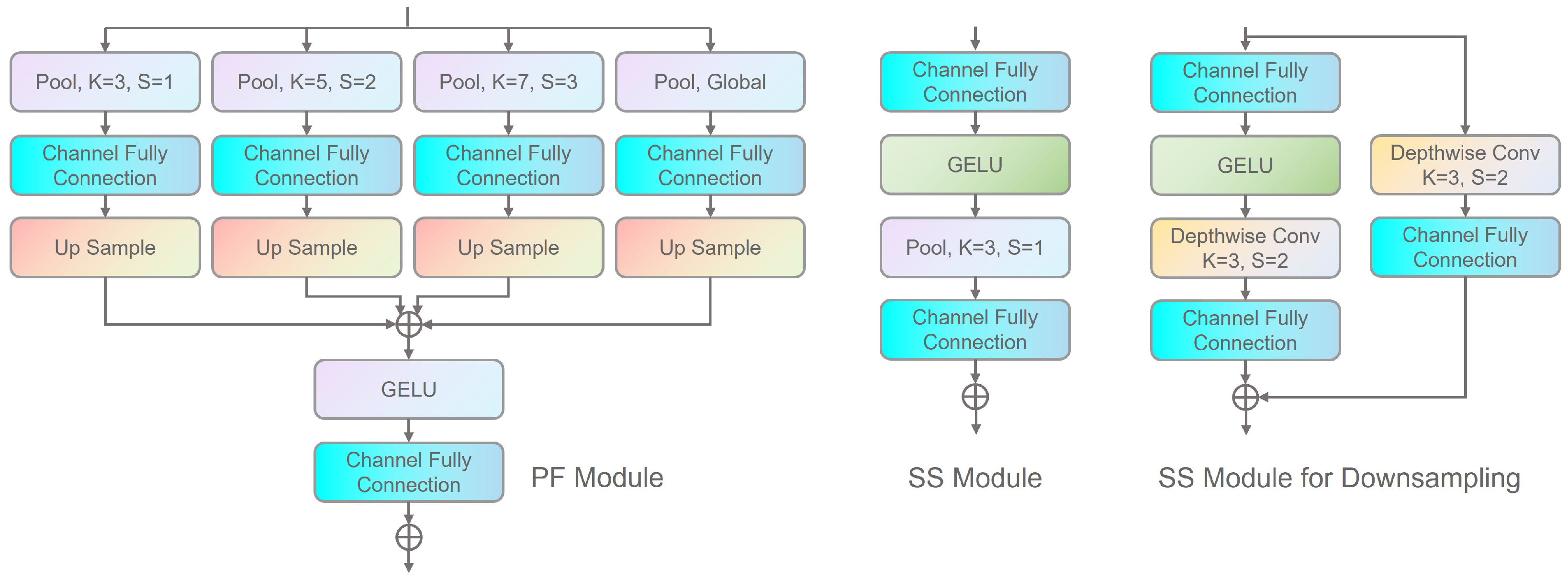

Additionally, we compared different backbone architectures for end-to-end MFF: UniRepLKNet [80], one of the most advanced CNNs; Swin Transformer [22], representing Transformer-based networks; EVMamba [81], representing vision state space models; and MAXIM [59], another widely used MLP architecture. The results in Table 6 show that MLP-MFF, which uses PFMLP as its backbone, achieves the best results on most metrics compared with these mainstream architectures. This further validates the effectiveness of PFMLP for MFF tasks.

To further examine the architectural design choices, particularly the number of blocks within each stage, we compared our configuration with the variants defined in the original PFMLP paper. Varying the number of blocks per stage, as the original PFMLP work does, is a more common and effective way to analyze the influence of network depth than varying the number of stages. As shown in Table 7, we compare our model (“PFMLP-Ours”) with four versions of the PFMLP backbone: PFMLP-N, PFMLP-T, PFMLP-S, and PFMLP-B. Increasing the number of blocks (from N to T, S, and B) yields only marginal improvements in fusion quality. These gains come at the cost of a significant increase in model size (Params) and computational cost (FLOPs). For instance, PFMLP-B achieves the highest scores but with a substantial increase in complexity. Our model, which uses a single block per stage, strikes a satisfactory balance between performance and efficiency, supporting our architectural choice.
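Why extra blocks inflate Params and FLOPs can be seen with a back-of-the-envelope count. The following is a sketch for a hypothetical residual channel-MLP block: Linear(C → rC), activation, Linear(rC → C), with biases. This is not PFMLP's actual block, and the stage widths, feature sizes, and depths below are illustrative assumptions. The point is only that both parameter count and multiply-accumulates (MACs) grow linearly with the number of blocks per stage. FLOPs are often reported as about 2 × MACs, although conventions differ.

```python
def channel_mlp_block_cost(channels: int, ratio: int = 4):
    """Params and per-pixel MACs of a hypothetical Linear(C->rC) + Linear(rC->C) block."""
    hidden = ratio * channels
    params = channels * hidden + hidden + hidden * channels + channels  # weights + biases
    macs_per_pixel = 2 * channels * hidden  # C*rC + rC*C multiply-accumulates
    return params, macs_per_pixel


def backbone_cost(depths, widths, resolutions, ratio: int = 4):
    """Total params and MACs over stages; resolutions are per-stage (H, W) feature sizes."""
    total_params, total_macs = 0, 0
    for depth, width, (h, w) in zip(depths, widths, resolutions):
        params, macs = channel_mlp_block_cost(width, ratio)
        total_params += depth * params
        total_macs += depth * macs * h * w
    return total_params, total_macs


if __name__ == "__main__":
    widths = [32, 64, 128, 256]                   # hypothetical stage widths
    res = [(64, 64), (32, 32), (16, 16), (8, 8)]  # hypothetical feature-map sizes
    for name, depths in [("1 block/stage", [1, 1, 1, 1]), ("deeper", [2, 2, 6, 2])]:
        p, m = backbone_cost(depths, widths, res)
        print(f"{name}: {p / 1e6:.2f}M params, {m / 1e9:.3f} GMACs")
```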

In summary, the ablation experiments conclusively demonstrate the significant contribution of both the proposed DAMFFM-HA module and the PFMLP backbone network in enhancing MFF performance.