Development and Implementation of an IoT-Enabled Smart Poultry Slaughtering System Using Dynamic Object Tracking and Recognition

Abstract

Highlights

- Developed an IoT-enabled, AI-driven humane poultry slaughtering system for red-feathered Taiwan chickens based on YOLO-v4 dynamic object tracking, and implemented it in an operating slaughterhouse, demonstrating a practical application of AI to humane poultry slaughter.

- Achieved a mean average precision (mAP) of 94% at a real-time detection speed of 39 fps, with the YOLO-v4 model and image enhancement accurately distinguishing stunned from unstunned chickens.

- Provides a scalable, automation-ready solution for enhancing animal welfare compliance, reducing labor dependency, and improving hygiene standards in poultry slaughterhouses.

- Demonstrates the viability of integrating deep learning and sensor networks into closed-loop, IoT-monitored smart agriculture systems for real-time decision making.
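
By the usual definition, the mAP figure is the mean, over the detection classes (here stunned and unstunned chickens), of each class's average precision (AP). At 39 fps, each frame has a processing budget of about 25.6 ms (1000 / 39). The source does not state which AP interpolation it uses, so the sketch below assumes the all-point interpolation of PASCAL VOC (area under the monotone precision envelope). It also assumes each detection has already been flagged as a true or false positive by IoU matching. The function names and the example detections are hypothetical, not data from the study.

```python
from typing import Dict, List, Tuple

Detection = Tuple[float, bool]  # (confidence, matched a ground-truth box at the IoU threshold)


def average_precision(detections: List[Detection], num_ground_truth: int) -> float:
    """All-point interpolated AP for a single class."""
    if num_ground_truth <= 0:
        raise ValueError("num_ground_truth must be positive")
    # Rank detections from most to least confident.
    ranked = sorted(detections, key=lambda d: d[0], reverse=True)
    recalls: List[float] = []
    precisions: List[float] = []
    tp = fp = 0
    for _, is_tp in ranked:
        if is_tp:
            tp += 1
        else:
            fp += 1
        recalls.append(tp / num_ground_truth)
        precisions.append(tp / (tp + fp))
    # Sentinels at recall 0 and 1, as in the PASCAL VOC all-point scheme.
    mrec = [0.0] + recalls + [1.0]
    mpre = [0.0] + precisions + [0.0]
    # Precision envelope: make precision non-increasing as recall grows.
    for i in range(len(mpre) - 2, -1, -1):
        mpre[i] = max(mpre[i], mpre[i + 1])
    # Area under the envelope, summed wherever recall changes.
    return sum(
        (mrec[i + 1] - mrec[i]) * mpre[i + 1]
        for i in range(len(mrec) - 1)
        if mrec[i + 1] != mrec[i]
    )


def mean_average_precision(per_class: Dict[str, Tuple[List[Detection], int]]) -> float:
    """Mean of the per-class APs."""
    aps = [average_precision(dets, n) for dets, n in per_class.values()]
    return sum(aps) / len(aps)


# Hypothetical detections for illustration only.
example = {
    "stunned": ([(0.95, True), (0.80, True)], 2),
    "unstunned": ([(0.90, True), (0.70, False), (0.60, True)], 2),
}
print(round(mean_average_precision(example), 4))  # 0.9167
```

In this toy case the stunned class has AP = 1.0 and the unstunned class has AP = 5/6, because of the false positive ranked between its two true positives.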

1. Introduction

2. Literature Review

3. Materials and Methods

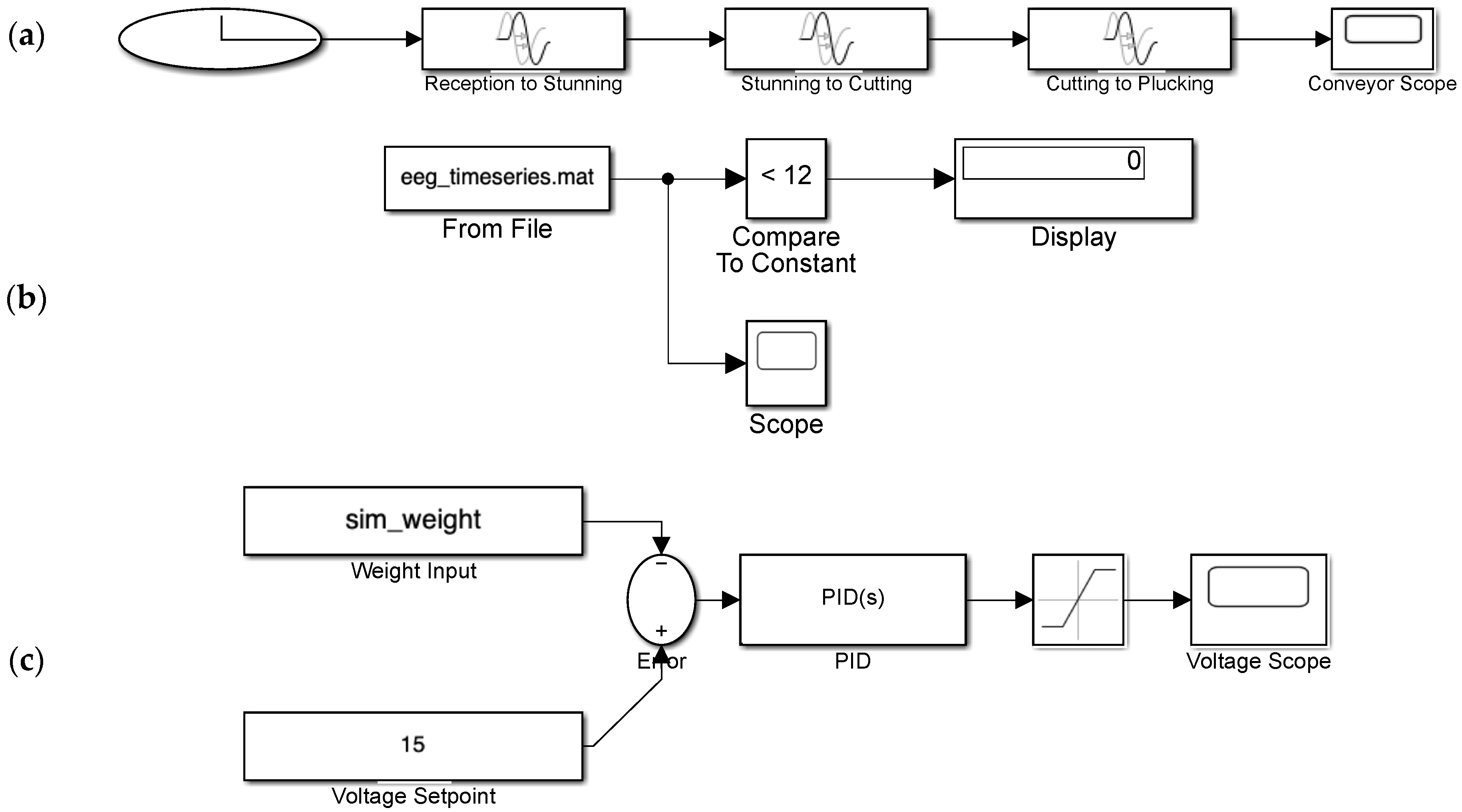

3.1. Smart Humane Poultry Slaughter System Processes

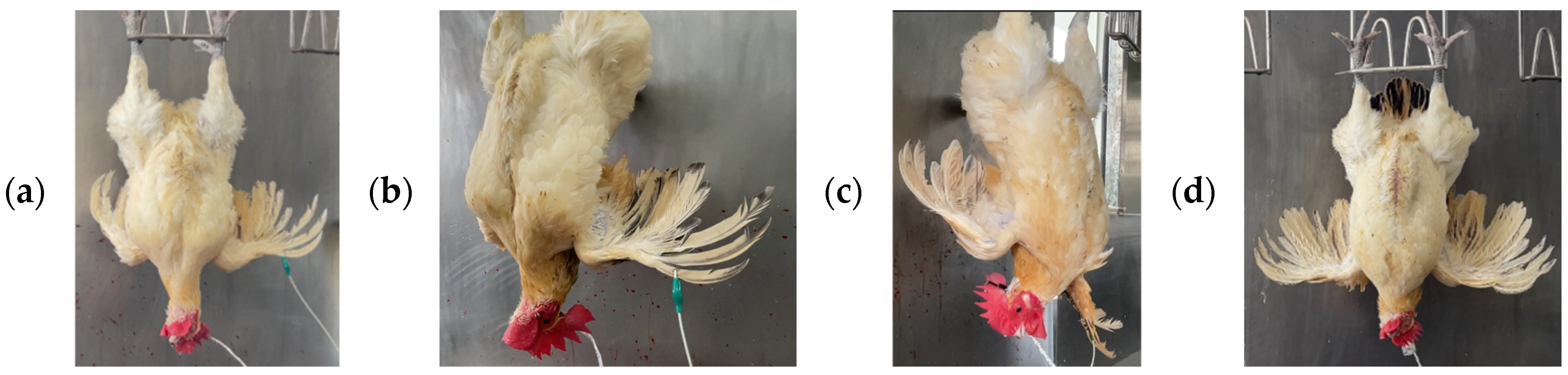

- During reception, red-feathered Taiwan chickens awaiting slaughter are shackled by their feet to an overhead conveyor and transported upside down to the water bath for electrical stunning. The shackles hold the chickens firmly on the conveyor. This prevents them from falling off when immersed in the electrified water bath, where a falling chicken could damage equipment or injure personnel. Securing the feet and confirming that each body hangs in the proper position improves the safety of the slaughter process and maximizes work efficiency. As a result, the chickens move smoothly through the slaughter line with minimal risk.

- The red-feathered Taiwan chickens are electrically stunned in the electrified water bath. Specifically, each chicken is shocked with a constant-voltage direct current (DC) for 7 ± 0.6 s to render it unconscious. The positive electrode of the DC supply is connected to the water bath and the negative electrode to the overhead conveyor, so the current flows from the immersed head through the body to the shackled feet. This arrangement ensures that the chickens are fully stunned and prevents any chicken from remaining conscious because the current did not pass completely through its body. It also reduces the risk of electric shock to operators, improving the safety of the slaughter process.

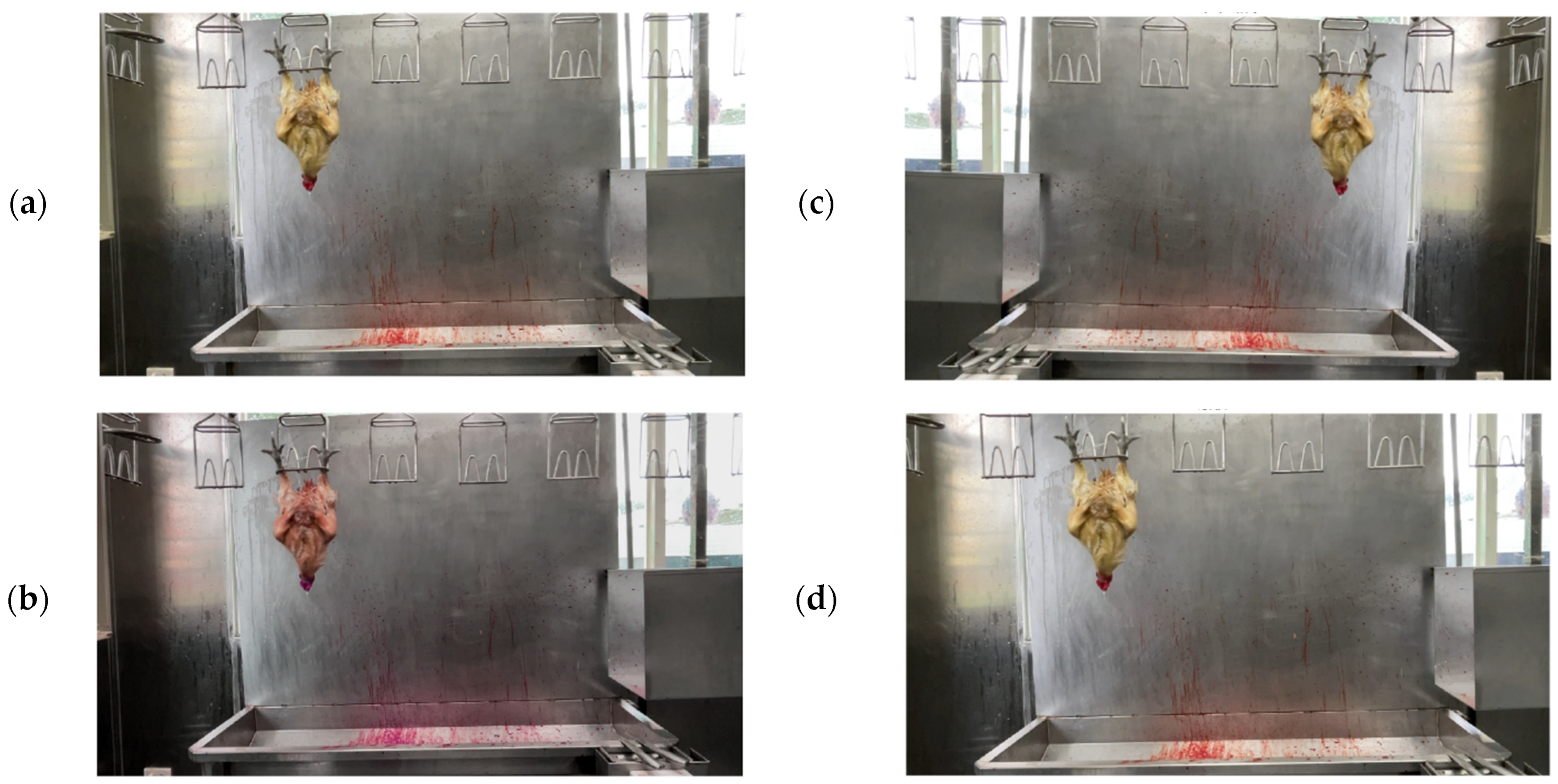

- The head-cutting area is where slaughterers cut the throats of the red-feathered Taiwan chickens: after electrical stunning, the unconscious chickens are killed and bled here. In this study, Camera B was positioned in this area to capture images of successfully and unsuccessfully stunned chickens, and these images were used to train the identification model. The trained model was then applied for real-time stunning identification, to verify before slaughter that the chickens' treatment met animal welfare requirements. Brainwave data were also collected in the head-cutting area. These data give insight into the chickens' physiological responses during electrical stunning and help verify the stunning effect. To support data collection and real-time monitoring, high-resolution cameras and a high-speed router were used so that images and data could be transmitted to the computer via Wi-Fi instantly and accurately. Analyzing these data can help refine the electrical stunning process so that each chicken is properly rendered unconscious before slaughter, minimizing its suffering. The same measures also improve the safety and efficiency of the slaughter process and reduce risks to operators.

- Red-feathered chickens that are confirmed to have no vital signs are transported by the overhead conveyor to the complete bloodletting area and continue to bleed during transport. All red-feathered chickens in this area are dead and are released automatically from the overhead conveyor onto a bench.

- The slaughterers place the bled chickens into the chicken pluckers for feather removal. The carcasses are first scalded to heat them, which facilitates defeathering. In the plucker, each carcass is tumbled continuously in a drum lined with soft plastic columns so that it is heated sufficiently. As the drum rotates at high speed, the columns strike the carcass, and the resulting force plucks out the feathers. When feather removal is complete, the carcass is ejected from the plucker.
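
In the head-cutting area, the trained model labels chickens as stunned or unstunned in real time. Because the overhead conveyor typically carries each chicken through Camera B's field of view over several frames, per-frame detections are usually linked into tracks so that each chicken receives a single decision. The sketch below is a generic greedy IoU tracker with majority voting, not the authors' tracking method. It assumes a hypothetical detector output of `(label, confidence, box)` tuples per frame. The `StunningTracker` class, the 0.3 matching threshold, and the `max_missed` rule for ending a track are illustrative assumptions.

```python
from collections import Counter
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels
Detection = Tuple[str, float, Box]       # (label, confidence, box), hypothetical format


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


@dataclass
class Track:
    box: Box
    votes: Counter = field(default_factory=Counter)  # per-frame label counts
    missed: int = 0                                  # consecutive frames without a match


class StunningTracker:
    """Hypothetical greedy IoU tracker that votes a label for each chicken."""

    def __init__(self, match_iou: float = 0.3, max_missed: int = 5):
        self.match_iou = match_iou
        self.max_missed = max_missed
        self.tracks: Dict[int, Track] = {}
        self.next_id = 0

    def update(self, detections: List[Detection]) -> Dict[int, str]:
        """Process one frame; return {track_id: majority label} for tracks that ended."""
        unmatched = set(self.tracks)
        # Match the most confident detections first.
        for label, _, box in sorted(detections, key=lambda d: d[1], reverse=True):
            best_id, best_iou = None, self.match_iou
            for tid in unmatched:
                score = iou(self.tracks[tid].box, box)
                if score >= best_iou:
                    best_id, best_iou = tid, score
            if best_id is None:  # no overlap with an existing track: start a new one
                best_id = self.next_id
                self.next_id += 1
                self.tracks[best_id] = Track(box)
            else:
                unmatched.discard(best_id)
            track = self.tracks[best_id]
            track.box, track.missed = box, 0
            track.votes[label] += 1
        finished: Dict[int, str] = {}
        for tid in unmatched:  # tracks not seen in this frame
            track = self.tracks[tid]
            track.missed += 1
            if track.missed > self.max_missed:
                finished[tid] = track.votes.most_common(1)[0][0]
                del self.tracks[tid]
        return finished


tracker = StunningTracker(max_missed=1)
frames = [
    [("stunned", 0.95, (10, 10, 60, 110))],
    [("stunned", 0.90, (20, 10, 70, 110))],
    [("unstunned", 0.55, (30, 10, 80, 110))],
    [],
    [],
]
for frame in frames:
    for tid, label in tracker.update(frame).items():
        if label == "unstunned":
            print(f"ALERT: track {tid} appears unstunned")
        else:
            print(f"track {tid}: {label}")
```

Voting over a track means that a single misclassified frame (the third frame above) does not trigger a welfare alert on its own. The trade-off is that a decision is only issued once the chicken leaves the field of view.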

3.2. Image Recognition System Equipment and Architecture

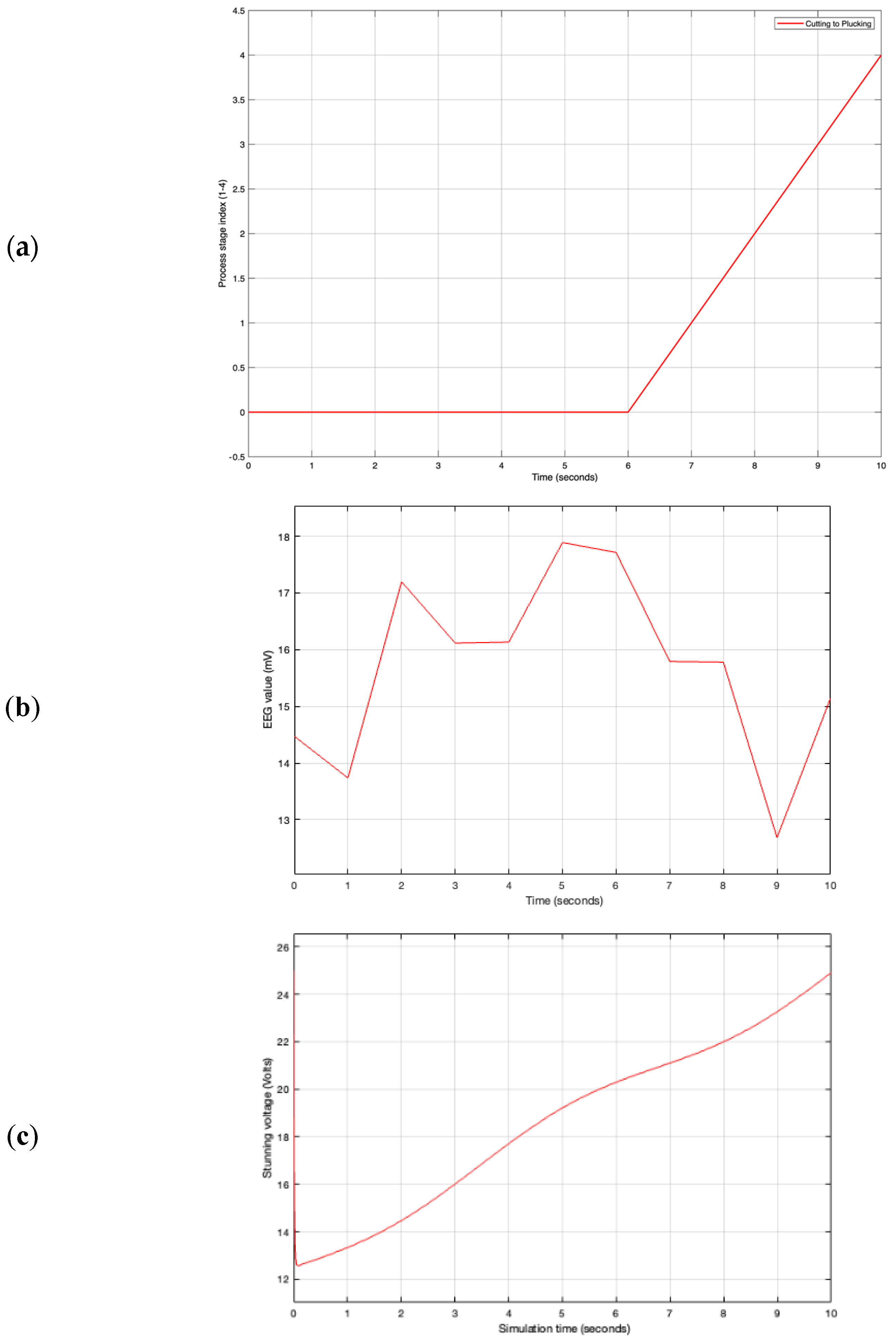

3.3. IoT Integration and Digital Twin Simulation

3.4. Building the Stunned Red-Feathered Taiwan Chicken Dataset

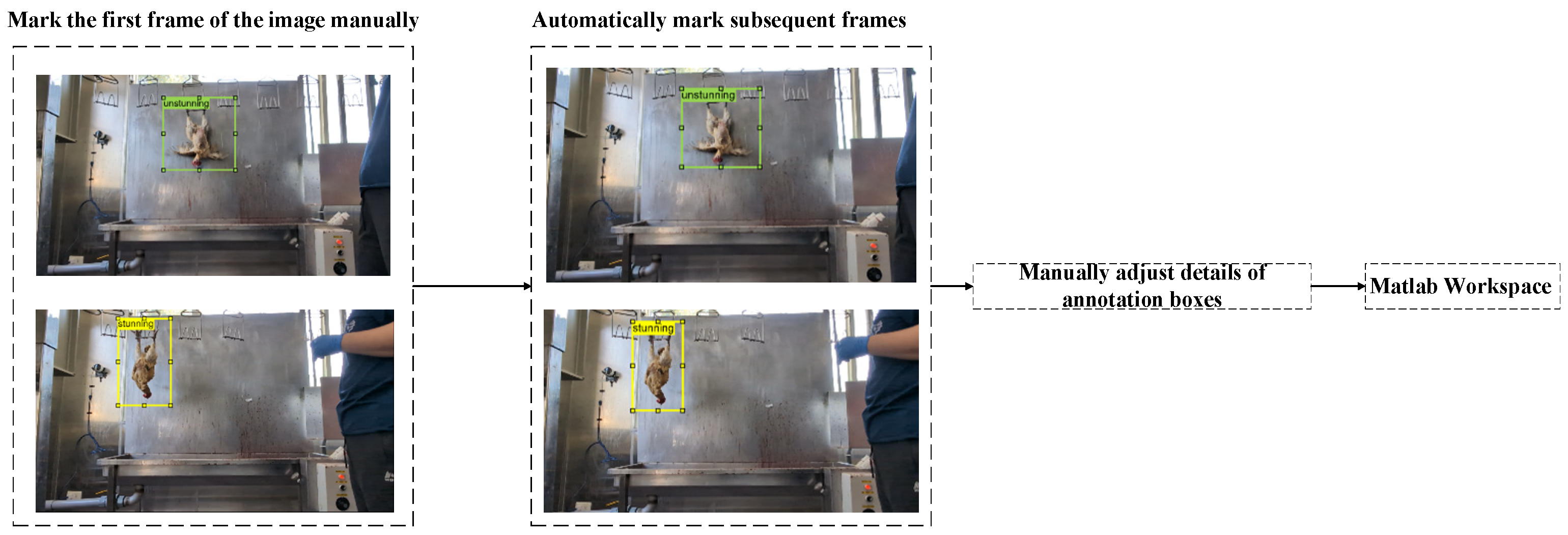

3.5. Dynamic Tracking Object Recognition for the YOLO-v4 Red-Feathered Taiwan Chicken Image Recognition Model

3.6. Enhancing Images of Stunned Red-Feathered Taiwan Chickens

4. Results and Discussion

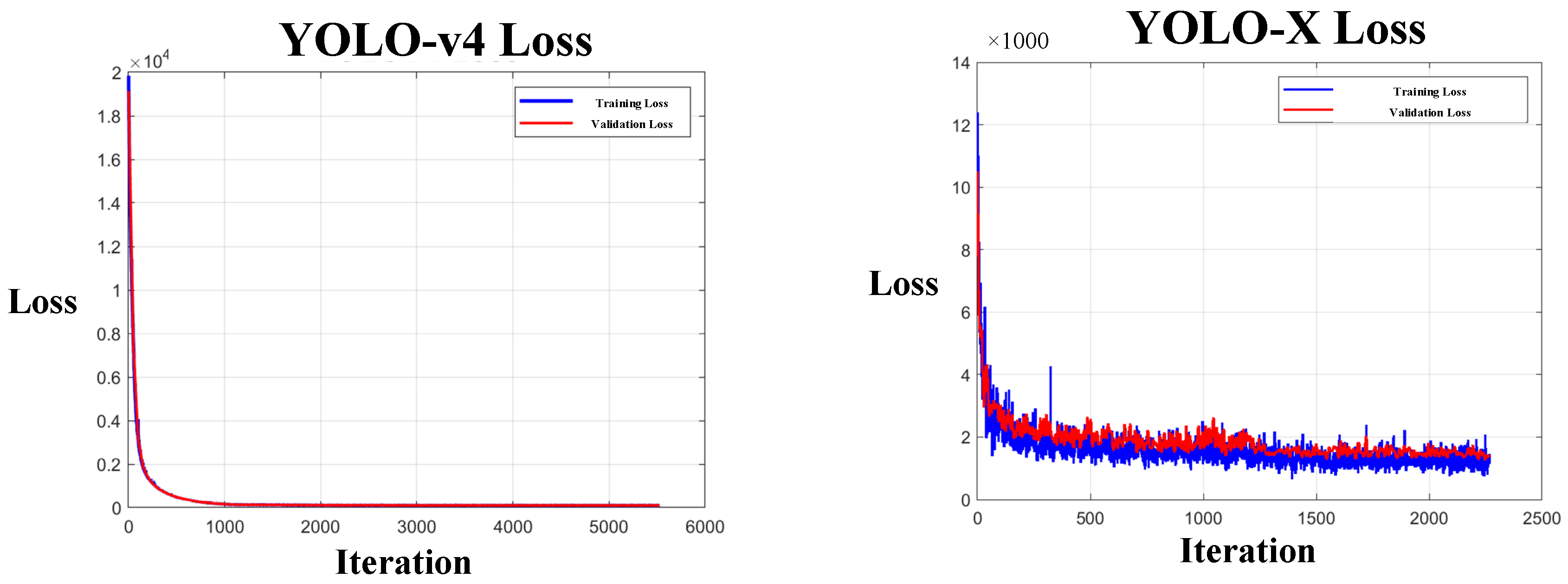

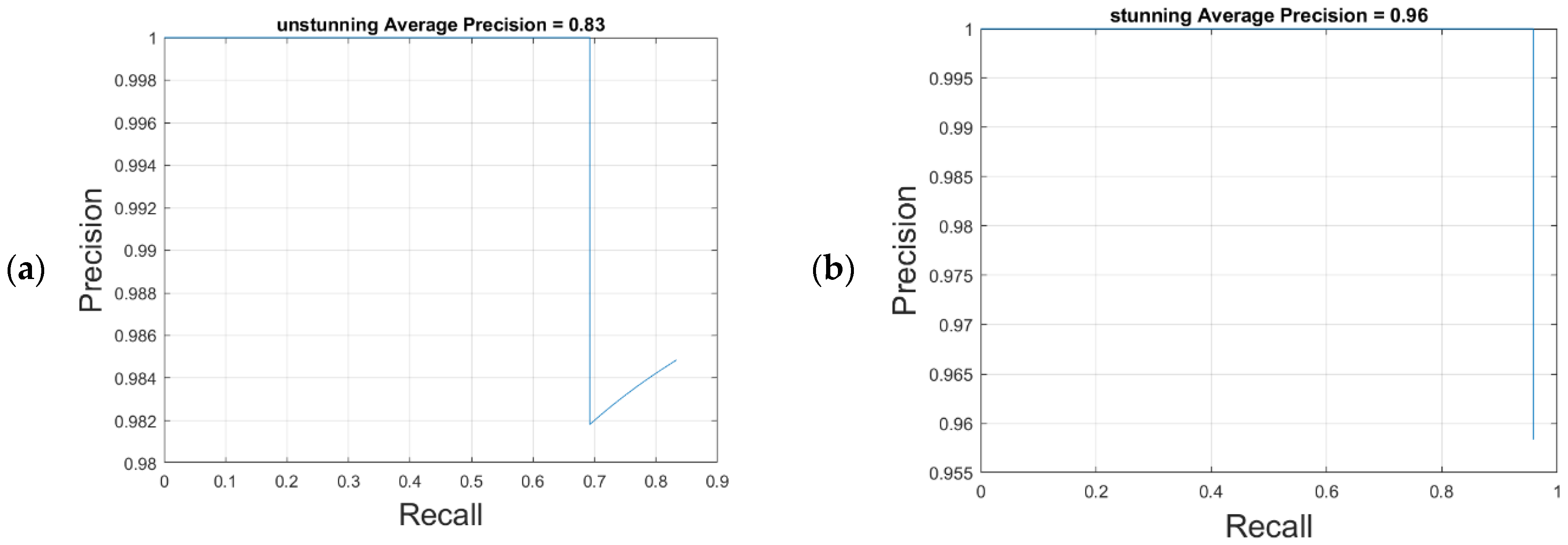

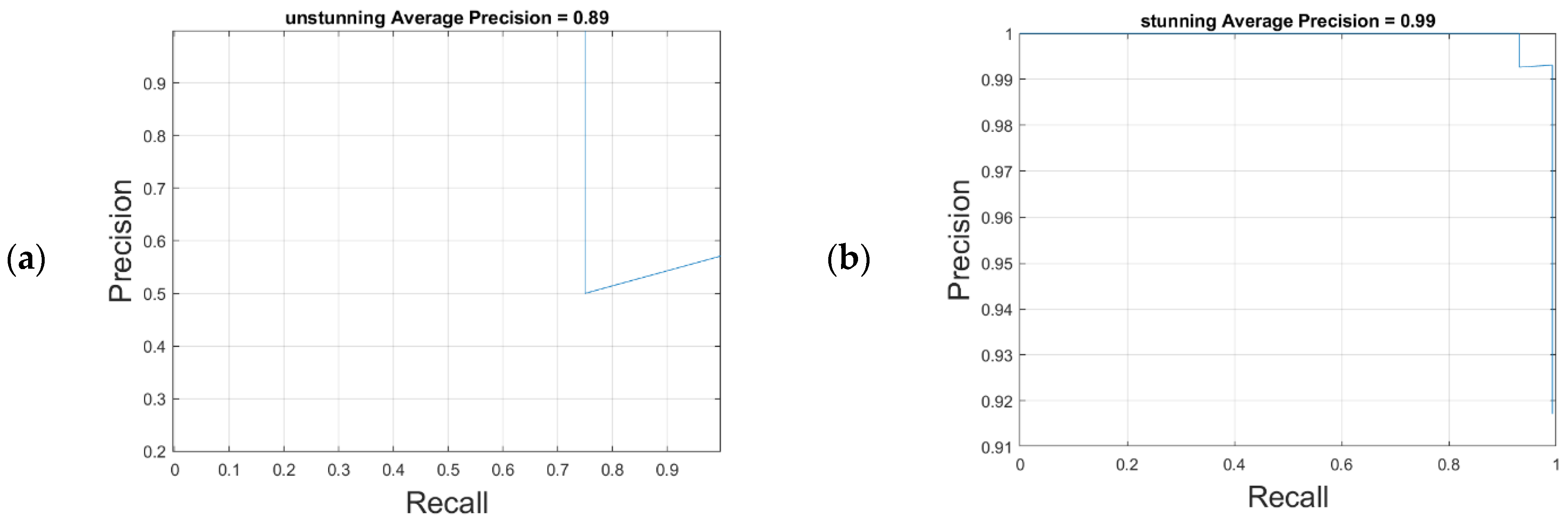

4.1. Performance Criteria of the Stunned Red-Feathered Taiwan Chicken Identification Model

4.2. Results of the YOLO-v4 Stunned Red-Feathered Taiwan Chicken Identification Model

5. Conclusions

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Equipment | Brand | Model | Specification |
|---|---|---|---|
| CPU | Intel, USA | i7-14700K | Cores: 20; threads: 28 |
| Graphics Processing Unit (GPU) | NVIDIA, USA | RTX 4080 Super | Memory: 16 GB GDDR6X; memory speed: 23 Gbps; memory bandwidth: 736 GB/s; base clock rate: 2295 MHz |
| Random Access Memory (RAM) | Kingston, Taiwan | DDR5-5600 | 32 GB |
| Hard Drive | Western Digital, China | SN770 SSD | 1 TB |
| Operating System | Microsoft, USA | Windows 11 | Business Editions |
| Wi-Fi Router | D-Link, Taiwan | DWR-953 | Integrated SIM card slot; 4G LTE; fail-safe Internet with fixed-line and mobile Internet support |
| Camera A | Xiaomi, China | AW200 | Resolution: 1920 × 1080; camera angle: 120°; memory: 256 GB |
| Camera B | Xiaomi, China | C300 | Resolution: 2304 × 1296; lens movement: 360° horizontal, 108° vertical; memory: 256 GB |

| Scenario | Total Brainwave Energy Before Stunning | Post-Stunning Criterion of Unconsciousness (Brainwave Energy) | P1 Brainwave Energy | P2 Brainwave Energy | P3 Brainwave Energy |
|---|---|---|---|---|---|
| 1 | 4.81 | 0.48 | 0.03 | 0.04 | 0.07 |
| 2 | 3.22 | 0.32 | 0.04 | 0.15 | 0.06 |
| 3 | 6.23 | 0.62 | 3.05 | 2.11 | 3.05 |
| 4 | 2.77 | 0.28 | 5.58 | 6.95 | 7.15 |
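
In every scenario of the table, the post-stunning criterion of unconsciousness equals 10% of the total pre-stunning brainwave energy, rounded to two decimals (for example, 4.81 × 0.1 ≈ 0.48). The sketch below reproduces that criterion and then classifies the four scenarios. It assumes that P1 to P3 are post-stunning readings and that a chicken is judged unconscious only if all three fall below the criterion. The table implies this decision rule but does not state it. The 10% ratio is inferred from the tabulated values.

```python
# Inferred from the table: criterion = 10% of the pre-stunning brainwave energy.
CRITERION_RATIO = 0.10

# scenario -> (total energy before stunning, [P1, P2, P3]) as tabulated
scenarios = {
    1: (4.81, [0.03, 0.04, 0.07]),
    2: (3.22, [0.04, 0.15, 0.06]),
    3: (6.23, [3.05, 2.11, 3.05]),
    4: (2.77, [5.58, 6.95, 7.15]),
}


def unconsciousness_criterion(pre_energy: float) -> float:
    """Brainwave-energy threshold below which a reading counts as unconscious."""
    return CRITERION_RATIO * pre_energy


def is_unconscious(pre_energy: float, post_energies: list) -> bool:
    """Assumed rule: every post-stunning reading must be below the criterion."""
    threshold = unconsciousness_criterion(pre_energy)
    return all(p < threshold for p in post_energies)


for sid, (pre, post) in scenarios.items():
    crit = unconsciousness_criterion(pre)
    print(f"Scenario {sid}: criterion {crit:.2f}, unconscious: {is_unconscious(pre, post)}")
```

Under this rule, scenarios 1 and 2 are classified as unconscious, and scenarios 3 and 4 as conscious, since all of their readings exceed the criterion.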

| Raise Head | Open Eyes | Move Wing | Weight | Voltage |
|---|---|---|---|---|
| No | No | No | 2.33 kg | 160 V |
| No | Yes | No | 3.01 kg | 100 V |
| Yes | Yes | No | 2.84 kg | 80 V |
| No | No | No | 2.82 kg | 100 V |

| Parameter | Settings |
|---|---|
| Input Size | |
| Gradient Decay Factor | 0.9 |
| Squared Gradient Decay Factor | 0.999 |
| Initial Learn Rate | 0.0001 |
| Learn Rate Schedule | Piecewise |
| Learn Rate Drop Period | 90 |
| Learn Rate Drop Factor | 0.1 |
| Mini Batch Size | 3 |
| L2 Regularization | 0.0005 |
| Max Epochs | 120 |
| Shuffle | every-epoch |
| Verbose Frequency | 10 |
| Plots | training-progress |
| Output Network | best-validation |
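
The parameter names in this table match the option names of MATLAB's `trainingOptions`. The gradient and squared-gradient decay factors (0.9 and 0.999) are those of the Adam optimizer. As an illustration, the Python sketch below shows two pieces:

- The piecewise schedule: the learning rate is multiplied by 0.1 every 90 epochs, so epochs 1 to 90 use 1e-4 and epochs 91 to 120 use 1e-5.
- One standard bias-corrected Adam update, with the L2 term added to the gradient.

This is the textbook formulation, not a claim about any toolbox's internals. The epsilon of 1e-8 and the plain-list parameter representation are assumptions.

```python
import math

INITIAL_LR = 1e-4
DROP_FACTOR = 0.1
DROP_PERIOD = 90            # epochs between learning-rate drops
BETA1, BETA2 = 0.9, 0.999   # gradient / squared-gradient decay factors
L2 = 5e-4                   # L2 regularization factor
EPS = 1e-8                  # assumption: not given in the table


def piecewise_lr(epoch: int) -> float:
    """Learning rate for a 1-indexed epoch under the piecewise (step) schedule."""
    return INITIAL_LR * DROP_FACTOR ** ((epoch - 1) // DROP_PERIOD)


def adam_step(w, g, m, v, t, lr):
    """One bias-corrected Adam update with L2 regularization.

    w, g, m, v are equal-length lists (weights, gradients, first and second
    moments); t is the 1-indexed iteration count. Returns the new (w, m, v).
    """
    new_w, new_m, new_v = [], [], []
    for wi, gi, mi, vi in zip(w, g, m, v):
        gi = gi + L2 * wi                        # gradient of (L2 / 2) * w^2
        mi = BETA1 * mi + (1 - BETA1) * gi       # first-moment estimate
        vi = BETA2 * vi + (1 - BETA2) * gi * gi  # second-moment estimate
        m_hat = mi / (1 - BETA1 ** t)            # bias corrections
        v_hat = vi / (1 - BETA2 ** t)
        new_w.append(wi - lr * m_hat / (math.sqrt(v_hat) + EPS))
        new_m.append(mi)
        new_v.append(vi)
    return new_w, new_m, new_v


for epoch in (1, 90, 91, 120):
    print(epoch, piecewise_lr(epoch))

w, m, v = [1.0, -2.0], [0.0, 0.0], [0.0, 0.0]
w, m, v = adam_step(w, [0.5, -0.1], m, v, t=1, lr=piecewise_lr(1))
print(w)
```

On the first iteration, the bias-corrected Adam step has magnitude close to the learning rate regardless of the gradient's scale. This is one reason a small initial rate such as 1e-4 is common when fine-tuning a detector.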

| Determination Result | Actual Target | Predicted Target |
|---|---|---|
| TP_1 | Stunned chicken | Stunned chicken |
| TP_2 | Unstunned chicken | Unstunned chicken |
| FP_1 | Unstunned chicken | Stunned chicken |
| FP_2 | Stunned chicken | Unstunned chicken |
| FP_3 | Background | Stunned chicken |
| FP_4 | Background | Unstunned chicken |
| FN_1 | Stunned chicken | Background |
| FN_2 | Unstunned chicken | Background |
| FN_3 | Background | Stunned chicken |
| FN_4 | Background | Unstunned chicken |
| TN | Background | Background |
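
Assigning detections to these outcomes requires matching predicted boxes to ground-truth boxes. The confusion matrix in this section is reported at an IoU threshold of 0.75. The sketch below shows one common procedure, greedy matching in order of confidence, which the source does not itself describe:

- A prediction that overlaps an unused ground-truth box with IoU ≥ 0.75 is paired with that box's actual class.
- An unmatched prediction is recorded as background predicted as a chicken.
- An unmatched ground-truth box is recorded as a chicken predicted as background.

Results are counted as `(actual, predicted)` pairs, so each pair corresponds to a row of the table above. The function name and box format are hypothetical.

```python
from collections import Counter
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def match_outcomes(predictions: List[Tuple[str, float, Box]],
                   ground_truth: List[Tuple[str, Box]],
                   iou_threshold: float = 0.75) -> Counter:
    """Count (actual, predicted) pairs; "background" marks an unmatched side."""
    outcomes: Counter = Counter()
    used = [False] * len(ground_truth)
    # Higher-confidence predictions claim ground-truth boxes first.
    for label, _, box in sorted(predictions, key=lambda p: p[1], reverse=True):
        best_j, best_iou = -1, iou_threshold
        for j, (_, gt_box) in enumerate(ground_truth):
            if not used[j]:
                score = iou(box, gt_box)
                if score >= best_iou:
                    best_j, best_iou = j, score
        if best_j >= 0:
            used[best_j] = True
            outcomes[(ground_truth[best_j][0], label)] += 1
        else:
            outcomes[("background", label)] += 1
    # Ground-truth chickens that no prediction matched.
    for j, (gt_label, _) in enumerate(ground_truth):
        if not used[j]:
            outcomes[(gt_label, "background")] += 1
    return outcomes


gt = [("stunned", (0, 0, 100, 100)), ("unstunned", (200, 0, 300, 100))]
preds = [
    ("stunned", 0.9, (0, 0, 100, 95)),      # correct class, IoU 0.95
    ("stunned", 0.8, (200, 0, 300, 100)),   # overlaps the unstunned chicken
    ("unstunned", 0.4, (400, 0, 500, 100)), # no ground-truth overlap
]
print(match_outcomes(preds, gt))
```

With the strict 0.75 threshold, a loosely placed box on a correctly classified chicken can be counted as both a background detection and a missed chicken. Higher IoU thresholds therefore generally produce lower reported scores.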

| YOLO-v4 IoU = 0.75 | Actual Stunned Chicken | Actual Unstunned Chicken | Actual Background |
|---|---|---|---|
| Predicted stunned chicken | TP_1 = 104 | FP_1 = 1 | FN_3 = 0 |
| Predicted unstunned chicken | FP_2 = 1 | TP_2 = 99 | FN_4 = 0 |
| Predicted background | FN_1 = 0 | FN_2 = 0 | TN = 0 |
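
Per-class precision and recall follow directly from this matrix. Precision divides a class's diagonal count by its row total (all predictions of that class), and recall divides it by its column total (all actual instances of that class). The sketch below computes these values and overall accuracy from the reported counts. The results are arithmetic on the table, not figures stated by the source. They are also distinct from the 94% mAP, which is computed from confidence-ranked detections rather than from a single confusion matrix.

```python
CLASSES = ["stunned", "unstunned", "background"]
# Rows: predicted class; columns: actual class (same layout as the table).
MATRIX = [
    [104, 1, 0],  # predicted stunned chicken
    [1, 99, 0],   # predicted unstunned chicken
    [0, 0, 0],    # predicted background
]


def per_class_metrics(matrix, k):
    """Precision, recall, and F1 for class index k."""
    tp = matrix[k][k]
    predicted = sum(matrix[k])              # row total
    actual = sum(row[k] for row in matrix)  # column total
    precision = tp / predicted if predicted else float("nan")
    recall = tp / actual if actual else float("nan")
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else float("nan")
    return precision, recall, f1


def accuracy(matrix):
    """Share of all counted outcomes that lie on the diagonal."""
    total = sum(sum(row) for row in matrix)
    return sum(matrix[i][i] for i in range(len(matrix))) / total


for k in (0, 1):  # chicken classes only; the background row and column are all zero
    p, r, f1 = per_class_metrics(MATRIX, k)
    print(f"{CLASSES[k]}: precision={p:.4f} recall={r:.4f} F1={f1:.4f}")
print(f"accuracy={accuracy(MATRIX):.4f}")
```

For the reported counts, this gives precision and recall of 104/105 ≈ 0.990 for stunned chickens, 99/100 = 0.99 for unstunned chickens, and an overall accuracy of 203/205 ≈ 0.990.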

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Lin, H.-T.; Suhendra. Development and Implementation of an IoT-Enabled Smart Poultry Slaughtering System Using Dynamic Object Tracking and Recognition. Sensors 2025, 25, 5028. https://doi.org/10.3390/s25165028