A Graph-Based Superpixel Segmentation Approach Applied to Pansharpening

Abstract

1. Introduction

1.1. Background

1.2. Related Works

1.3. Motivation and Contributions

- The guidance image (for detail injection) is modeled as a graph: a region adjacency graph (RAG) is built over an initial over-segmentation obtained by means of SLIC and is followed by a hierarchical merging step that yields a meaningful regional map.

- A graph-driven pansharpening method is proposed that combines the local simplex algorithm for gain estimation with the graph-based guidance image (map) for detail injection, with the aim of refining the fused outcomes at the region level.

- Extensive experimental comparisons are presented between pixel- and region-wise pansharpening methods, as well as between superpixel and graph-driven superpixel guided injection schemes. The performance of the proposed method is highlighted in comparison with traditional, variational optimization, and deep learning methods.

2. Preliminaries and Background

2.1. Pansharpening as a Unified Model for Fusion

2.1.1. Global Injection Scheme

2.1.2. Multiplicative Injection Scheme

2.1.3. Projective Injection Scheme

2.2. Simple Linear Iterative Clustering Method

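- Initialize the k cluster centres at the positions $(x_i, y_i)$, which denote the pixel position. The pixel intensity $(l_i, a_i, b_i)$, expressed in the CIELAB space, is incorporated into the feature vector $C_i = [l_i, a_i, b_i, x_i, y_i]^T$. The grid interval $S = \sqrt{N/k}$ approximately and uniformly determines the superpixel size, where N is the number of pixels.

- Each pixel at the position $(x_j, y_j)$ is associated with the nearest cluster centre ($C_i$) at the position $(x_i, y_i)$ according to the spatial distance ($d_s$) estimated by the formula:

  $$d_s = \sqrt{(x_j - x_i)^2 + (y_j - y_i)^2} \tag{5}$$

  and the color CIELAB distance is defined as:

  $$d_c = \sqrt{(l_j - l_i)^2 + (a_j - a_i)^2 + (b_j - b_i)^2} \tag{6}$$

  such that the clustering metric can be formulated:

  $$D = \sqrt{d_c^2 + \left(\frac{d_s}{S}\right)^2 m^2} \tag{7}$$

  where m is the compactness parameter.

- By specifying the clustering metric as in Equation (7), the parameter m sets the relative importance of intensity similarity versus spatial proximity: a larger m gives more weight to spatial proximity and yields more compact superpixels.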
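The role of the compactness parameter can be illustrated with a minimal sketch of the clustering metric. It assumes the commonly used SLIC form of [46], $D = \sqrt{d_c^2 + (d_s/S)^2 m^2}$; the function name `slic_distance` and the `(l, a, b, x, y)` tuple layout are illustrative choices, not taken from the paper.

```python
import numpy as np

def slic_distance(pixel, centre, S, m):
    """Combined SLIC distance between one pixel and one cluster centre.

    pixel, centre: sequences (l, a, b, x, y), i.e. CIELAB colour plus position
    (an assumed layout). S: grid interval, m: compactness parameter.
    """
    pixel = np.asarray(pixel, dtype=float)
    centre = np.asarray(centre, dtype=float)
    d_c = np.linalg.norm(pixel[:3] - centre[:3])  # colour distance in CIELAB
    d_s = np.linalg.norm(pixel[3:] - centre[3:])  # spatial (Euclidean) distance
    return np.sqrt(d_c**2 + (d_s / S) ** 2 * m**2)

# Grid interval for N pixels and k superpixels.
N, k = 10_000, 100
S = np.sqrt(N / k)

# Same colour, 5 pixels apart: only the spatial term contributes.
pixel = (50.0, 0.0, 0.0, 3.0, 4.0)
centre = (50.0, 0.0, 0.0, 0.0, 0.0)
d_low = slic_distance(pixel, centre, S, m=1.0)
d_high = slic_distance(pixel, centre, S, m=10.0)
```

Raising m from 1 to 10 scales the spatial contribution by the same factor, which is why large m values favour compact, grid-like superpixels.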

| Algorithm 1: Pseudocode of SLIC method applied to MS image. |

Input: MS image (R, G, B bands); k: number of initial clusters; m: compactness parameter.

Output: Label matrix resulting from SLIC applied to the RGB input image.

1. Represent the RGB image in the CIELAB color space.
2. Initialize k cluster centres by sampling pixels on a uniform grid of interval S; each centre is a feature vector made of the pixel intensity and its coordinates.
3. for each cluster centre and each given pixel coordinates do
   Compute the spatial distance using Equation (5).
   end
4. Compute the new cluster centre according to the lowest distance estimated in the grid.
5. Update the SLIC map by computing the distance between the previous and the newest cluster centres.
6. Repeat steps (3)-(5) as long as the distance between the previous and the newest cluster centres is not null.
7. Label the resulting segmentation map, assigning each superpixel its label.
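The loop of Algorithm 1 can be sketched as follows. This is a toy version under stated assumptions, not the paper's implementation: it works on a single grayscale band instead of CIELAB, compares every pixel with every centre instead of searching a 2S × 2S window, and the name `simple_slic` and the `n_iter` cap are hypothetical.

```python
import numpy as np

def simple_slic(image, k, m, n_iter=10):
    """Toy SLIC on a 2-D grayscale image; returns an integer label map."""
    h, w = image.shape
    S = np.sqrt(h * w / k)  # grid interval
    # Centres [intensity, x, y] sampled on a regular grid of step S.
    ys = np.arange(S / 2, h, S).astype(int)
    xs = np.arange(S / 2, w, S).astype(int)
    centres = np.array([[image[y, x], x, y] for y in ys for x in xs], dtype=float)
    yy, xx = np.mgrid[0:h, 0:w]
    feats = np.stack([image.ravel(), xx.ravel(), yy.ravel()], axis=1).astype(float)
    labels = np.zeros(h * w, dtype=int)
    for _ in range(n_iter):
        # Distance of every pixel to every centre (exhaustive, for brevity).
        d_c = np.abs(feats[:, None, 0] - centres[None, :, 0])
        d_s = np.linalg.norm(feats[:, None, 1:] - centres[None, :, 1:], axis=2)
        D = np.sqrt(d_c**2 + (d_s / S) ** 2 * m**2)
        labels = D.argmin(axis=1)
        # Move each centre to the mean feature vector of its pixels.
        new_centres = centres.copy()
        for c in range(len(centres)):
            mask = labels == c
            if mask.any():
                new_centres[c] = feats[mask].mean(axis=0)
        shift = np.abs(new_centres - centres).sum()
        centres = new_centres
        if shift == 0:  # stop once the centres no longer move
            break
    return labels.reshape(h, w)

# Two-tone test image: left half dark, right half bright.
image = np.zeros((20, 20))
image[:, 10:] = 1.0
labels = simple_slic(image, k=4, m=1.0)
```

With a small m the intensity term dominates, so no superpixel straddles the boundary between the two halves of the test image.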

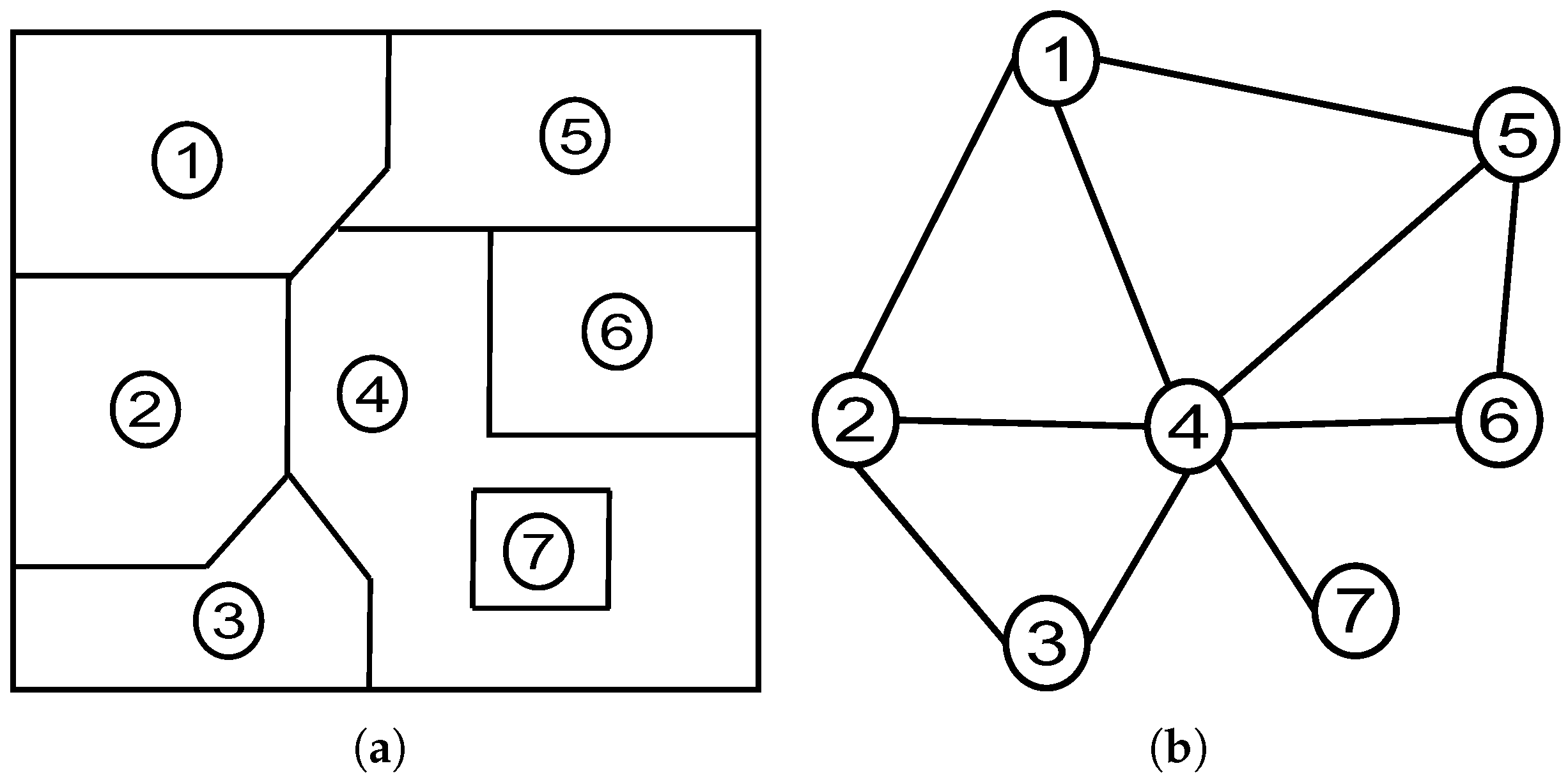

2.3. Image Graph Representation via Region Adjacency Graph

| Algorithm 2: Pseudocode of RAG method. |

Input: A greyscale image.

Output: RAG image-based graph.

1. Define a set of vertices from an over-segmented image using Equation (11).
2. for each pair of labels (p, q) do
   Define the respective vertices.
   end
3. Compute new vertices and edges by repeating steps (1) and (2) until the distance between two edges is equal to zero.
4. Obtain the RAG segmented image defined by Equation (10).
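As a rough illustration of how a RAG can be derived from a label map, the sketch below creates one vertex per region and one edge per pair of 4-connected neighbouring regions. The edge weight used here (absolute difference of mean region intensities) is an assumption made for the example, and `build_rag` is a hypothetical helper rather than the paper's routine.

```python
import numpy as np

def build_rag(labels, image):
    """Build a region adjacency graph from a label map.

    Returns (nodes, edges): nodes maps label -> mean intensity,
    edges maps (p, q) with p < q -> |mean_p - mean_q| (assumed weight).
    """
    nodes = {int(l): float(image[labels == l].mean()) for l in np.unique(labels)}
    edges = {}
    # Compare each pixel with its right and bottom neighbour (4-connectivity).
    pairs = [(labels[:, :-1], labels[:, 1:]), (labels[:-1, :], labels[1:, :])]
    for a, b in pairs:
        diff = a != b
        for p, q in zip(a[diff], b[diff]):
            key = (int(min(p, q)), int(max(p, q)))
            edges[key] = abs(nodes[key[0]] - nodes[key[1]])
    return nodes, edges

labels = np.array([[0, 0, 1],
                   [0, 2, 1],
                   [2, 2, 1]])
image = np.array([[1.0, 1.0, 5.0],
                  [1.0, 3.0, 5.0],
                  [3.0, 3.0, 5.0]])
nodes, edges = build_rag(labels, image)
```

A merging step would then typically fuse the two regions joined by the lowest-weight edge and update the graph, repeating until no edge falls below a chosen threshold.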

3. Materials and Methods

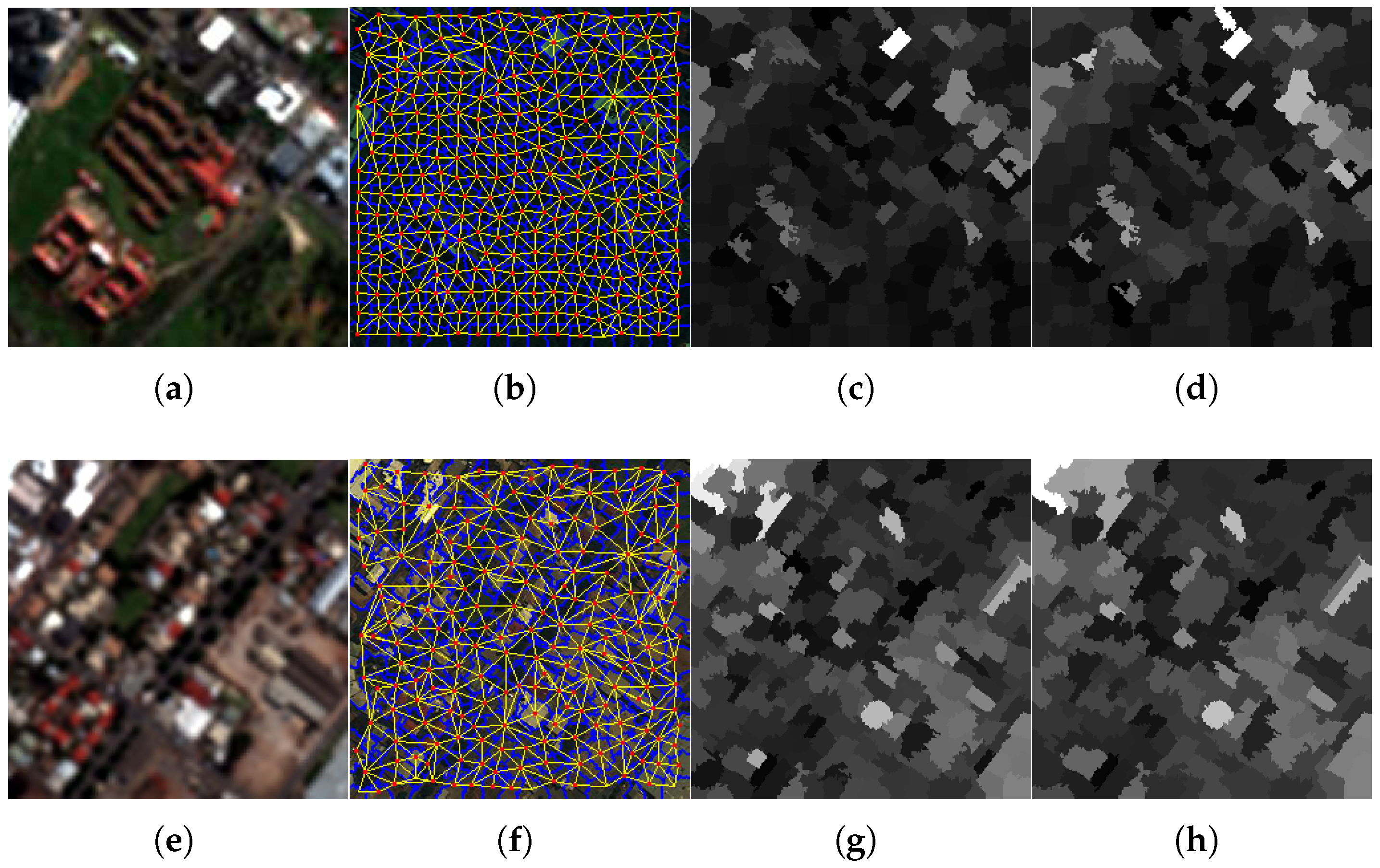

3.1. RAG-Based SLIC Performed on MS Image

3.1.1. SLIC Segmentation

3.1.2. RAG on SLIC

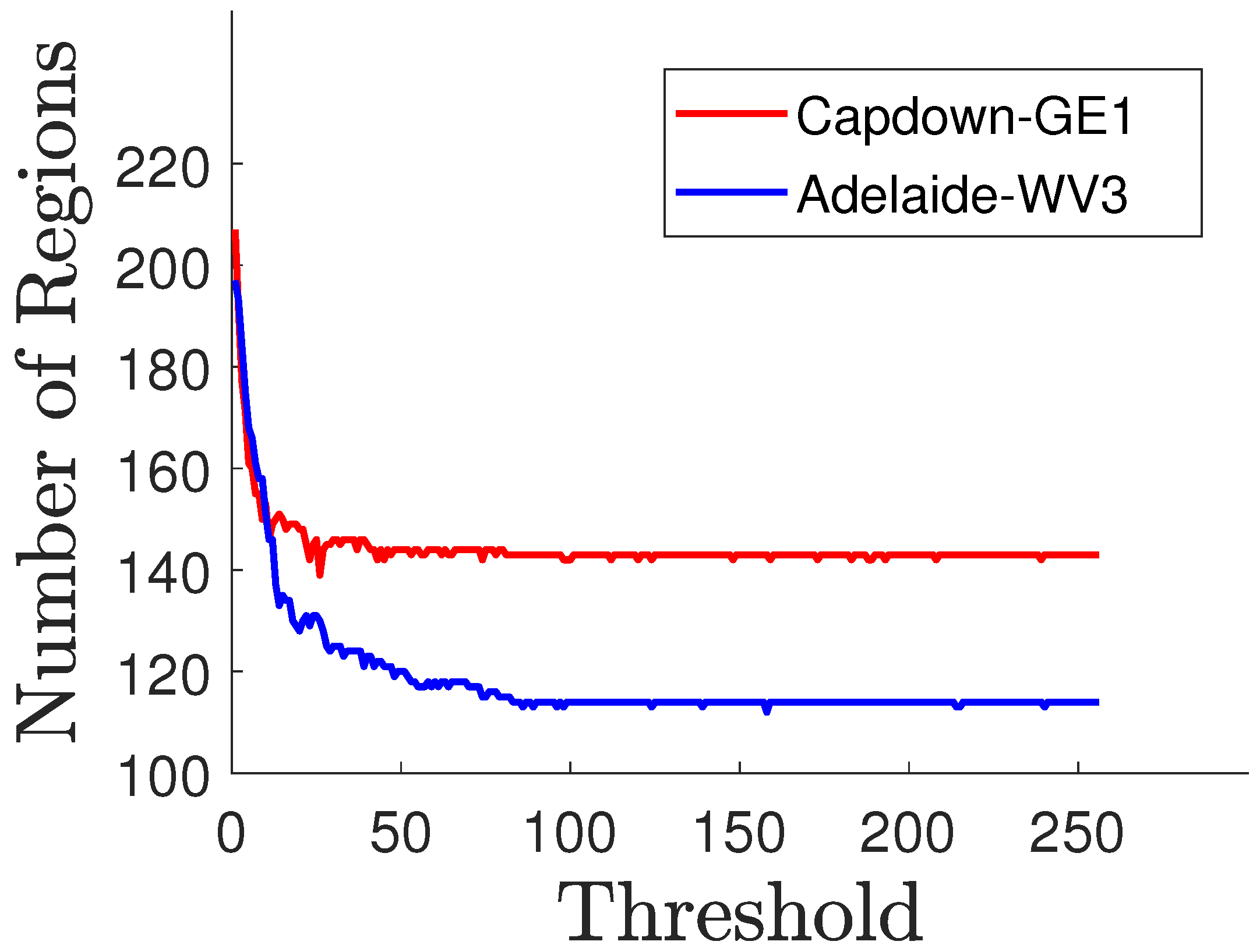

3.1.3. Node Merging

3.2. Graph-Based Superpixel Guided Regression Fusion Rule

4. Experiments and Results

4.1. Benchmarks and Implementation Details

4.2. Implementation Details

4.3. Datasets

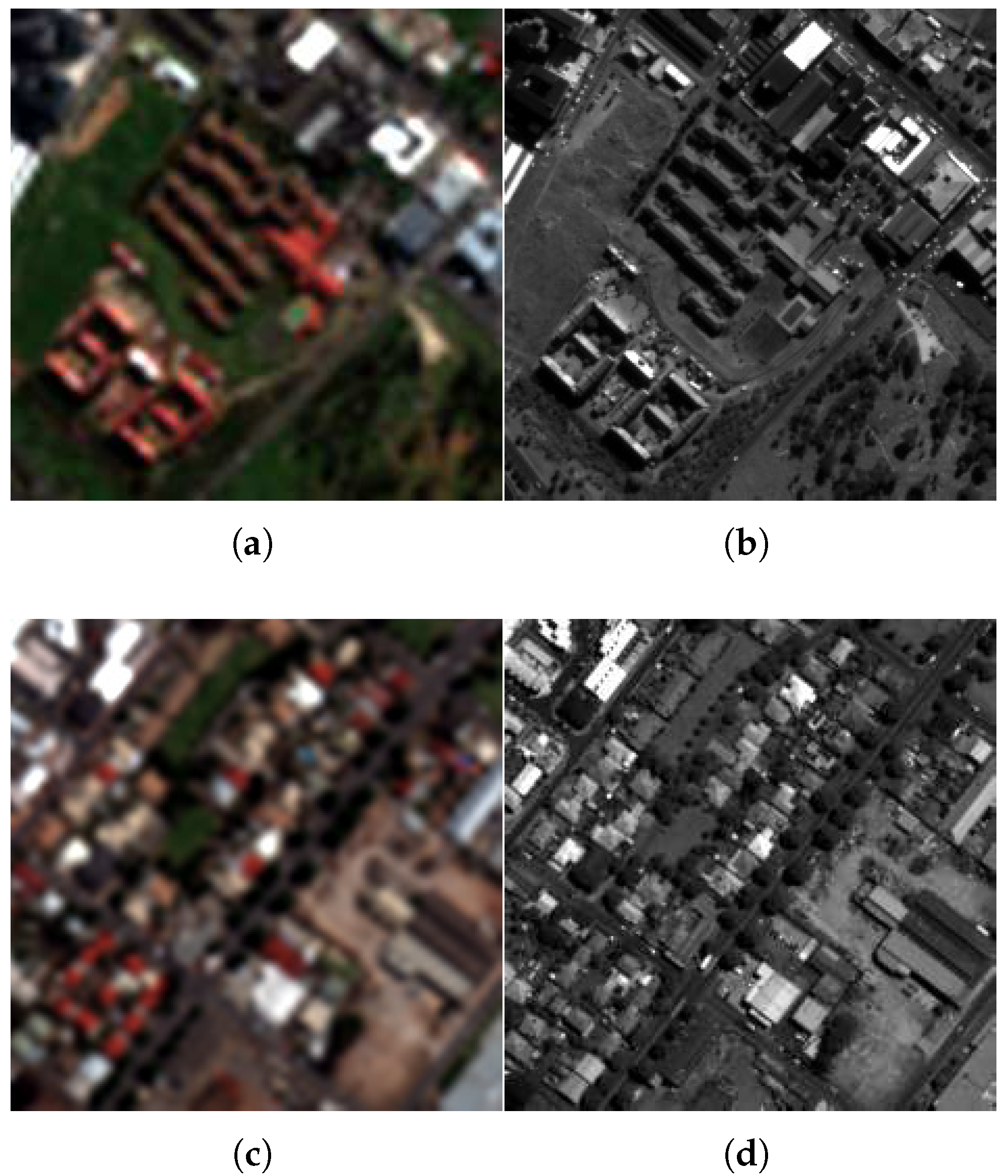

1. Capetown dataset: This dataset is displayed in Figure 6a,b. It represents a suburban area of the city of Cape Town (South Africa). It was acquired by the GeoEye-1 (GE-1) satellite, which operates in the visible and near-infrared (NIR) spectral range. It is composed of a PAN image and a four-band MS image (red, green, blue, and NIR). The spatial sampling interval (SSI) is 2 m for the MS image and 0.5 m for the PAN image. The size of the dataset is for an MS image and for PAN. The resolution ratio is equal to four. The radiometric resolution is 16 bits. The scene includes buildings, vegetation, and grass soil.

2. Adelaide dataset: This dataset is exhibited in Figure 6c,d. It shows an urban area of the city of Adelaide (Australia). It was acquired by the WorldView-3 (WV-3) satellite, which operates in the visible and near-infrared (NIR) spectral range. The data contain an eight-band MS image, covering four standard (blue, green, red, and NIR1) and four new (coastal, yellow, red edge, and NIR2) bands, and a PAN image, with a m and a m SSI, respectively. The size of the PAN image is pixels and that of the MS image is pixels. The resolution ratio is equal to four. The radiometric resolution is 16 bits. The scene includes dense buildings, trees, soil, and some cars.

4.4. Quality Metrics

1. The Quaternion-based index, $Q2^n$, is a global multiband extension [1] of the Universal Image Quality Index [53]. The metric was first introduced for evaluating a four-band pansharpened MS image, and later extended to MS images with $2^n$ bands. Taking an image with N spectral bands, a pixel is defined by a hypercomplex number having one real part and $N-1$ imaginary parts. Let us consider two hypercomplex numbers $z = z(c, r)$ and $\hat{z} = \hat{z}(c, r)$, which represent the reference and the fused spectral images at pixel coordinates $(c, r)$. The index is written as follows:

   $$Q2^n = \frac{|\sigma_{z\hat{z}}|}{\sigma_z \sigma_{\hat{z}}} \cdot \frac{2 \sigma_z \sigma_{\hat{z}}}{\sigma_z^2 + \sigma_{\hat{z}}^2} \cdot \frac{2 |\bar{z}| |\bar{\hat{z}}|}{|\bar{z}|^2 + |\bar{\hat{z}}|^2}$$

   where $\sigma_{z\hat{z}}$ denotes the covariance between the two hypercomplex numbers $z$ and $\hat{z}$, $\sigma_z$ (similarly $\sigma_{\hat{z}}$) denotes the standard deviation of $z$ (similarly $\hat{z}$), and $\bar{z}$ (similarly $\bar{\hat{z}}$) denotes the mean value of $z$ (similarly $\hat{z}$). The metric is the product of three terms which are, in order, the hypercomplex correlation coefficient, the contrast changes, and the mean bias between $z$ and $\hat{z}$.

2. The Spectral Angle Mapper (SAM) is a global spectral dissimilarity or distortion index. It was proposed for discriminating materials based on their reflectance spectra [56]. Given two vectors, $v$ and $\hat{v}$, which define the reference spectral pixel vector and the corresponding fused spectral pixel vector, respectively, the SAM index denotes the absolute value of the spectral angle between the two vectors, given by:

   $$SAM(v, \hat{v}) = \arccos\left(\frac{\langle v, \hat{v} \rangle}{\|v\|_2 \, \|\hat{v}\|_2}\right)$$

   The SAM metric is expressed in degrees (°), and its optimal value is equal to zero, reached when the reference and the fused vectors are spectrally identical.

3. The Erreur Relative Globale Adimensionnelle de Synthèse (ERGAS) [44] is a normalized dissimilarity index estimated between the reference and the multi-band fused images. It is defined as:

   $$ERGAS = 100 \, \frac{d_h}{d_l} \sqrt{\frac{1}{N} \sum_{k=1}^{N} \left(\frac{RMSE(k)}{\mu(k)}\right)^2}$$

   where $d_h / d_l$ is the ratio between the pixel sizes of PAN and MS (generally equal to $1/4$ for many sensors in the pansharpening case study), $\mu(k)$ is the mean (i.e., average) of the $k$-th band of the reference image, N is the number of bands, and $RMSE(k)$ is the root mean square error of the $k$-th band. Low values of ERGAS indicate high similarity between the reference and the fused images.

4. The Spatial Correlation Coefficient (SCC) is used for assessing the similarity between the spatial details of the pansharpened and the reference images. It uses a high-pass filter (a Laplacian filter) to extract the high-frequency information from both the reference and fused images, and computes the correlation coefficient between them. It is usually known as the Zhou protocol [54]. The kernel of the Laplacian filter involved in this procedure is given by:

   $$\begin{bmatrix} -1 & -1 & -1 \\ -1 & 8 & -1 \\ -1 & -1 & -1 \end{bmatrix}$$

   and the Pearson correlation coefficient is computed as:

   $$CC(X, Y) = \frac{\sum_{i=1}^{w} \sum_{j=1}^{h} (X_{i,j} - \mu_X)(Y_{i,j} - \mu_Y)}{\sqrt{\sum_{i=1}^{w} \sum_{j=1}^{h} (X_{i,j} - \mu_X)^2 \, \sum_{i=1}^{w} \sum_{j=1}^{h} (Y_{i,j} - \mu_Y)^2}}$$

   where X and Y are the reference and fused images in pansharpening, respectively, w and h are the width and height of the image, and $\mu_X$ represents the average of the image X (similarly $\mu_Y$ for the image Y). A high SCC value indicates that most of the spatial details of the PAN image are injected into the MS bands during the fusion process.

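5. The Structural Similarity Index Measure (SSIM) [57] estimates the overall fusion quality by computing the first- and second-order statistics (the mean, variance, and covariance) of the reference and its corresponding fused MS image. The SSIM index is the combination of three comparison modules, called brightness, contrast, and structure, given by:

   $$SSIM(X, Y) = l(X, Y) \cdot c(X, Y) \cdot s(X, Y)$$

   where

   $$l(X, Y) = \frac{2 \mu_X \mu_Y + C_1}{\mu_X^2 + \mu_Y^2 + C_1}, \quad c(X, Y) = \frac{2 \sigma_X \sigma_Y + C_2}{\sigma_X^2 + \sigma_Y^2 + C_2}, \quad s(X, Y) = \frac{\sigma_{XY} + C_3}{\sigma_X \sigma_Y + C_3}$$

   and $\mu_X$, $\mu_Y$, $\sigma_X^2$, $\sigma_Y^2$, and $\sigma_{XY}$ are the means, variances, and covariance of X and Y, respectively; $C_1$, $C_2$, and $C_3$ are small constants that stabilize the divisions. The optimal value of SSIM is equal to one; a high value indicates high similarity between the images.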

6. The Peak Signal-to-Noise Ratio (PSNR) was originally introduced to estimate the amount of noise in images [57]. It is computed from the Mean Squared Error (MSE) between the original and distorted images, and is expressed as:

   $$PSNR = 10 \log_{10}\left(\frac{MAX_I^2}{MSE}\right)$$

   where $MAX_I$ denotes the maximum value that a pixel of an image I can take. In the context of image pansharpening, MSE is defined as:

   $$MSE = \frac{1}{w h} \sum_{i=1}^{w} \sum_{j=1}^{h} (X_{i,j} - Y_{i,j})^2$$

   where X and Y are the reference and pansharpened images of size $w \times h$. A high PSNR value indicates that the reconstructed image presents less distortion with respect to the reference MS image.

7. The Quality with no Reference (QNR) protocol [55] was proposed for the assessment of fused images at full resolution, at the scale of the PAN band. This metric tackles the problem of the unavailability of the true MS image at the full PAN scale. The QNR index is computed as the product of two normalized measurements of the spectral and spatial consistencies. In more detail, two distortion indexes, called the spectral distortion $D_\lambda$ and the spatial distortion $D_S$, are combined to define the QNR index, as follows:

   $$QNR = (1 - D_\lambda)^{\alpha} (1 - D_S)^{\beta}$$

   where $\alpha = \beta = 1$ (for most applications). The optimal value of QNR is equal to one.
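Several of the metrics listed above can be sketched compactly in NumPy. The functions below assume the standard textbook definitions (SAM as the arccosine of the normalized inner product, ERGAS with a 1/4 resolution ratio by default, PSNR from the MSE, SCC as the Pearson correlation of 3 × 3 Laplacian responses with an 8-centred kernel, and QNR as a product of complements); all function names are illustrative, and the distortion indexes $D_\lambda$ and $D_S$ are taken as given inputs rather than computed.

```python
import numpy as np

def sam_degrees(ref, fused, eps=1e-12):
    """Mean spectral angle (degrees) between images of shape (H, W, bands)."""
    dot = np.sum(ref * fused, axis=2)
    norms = np.linalg.norm(ref, axis=2) * np.linalg.norm(fused, axis=2)
    cos = np.clip(dot / (norms + eps), -1.0, 1.0)
    return float(np.degrees(np.arccos(cos)).mean())

def ergas(ref, fused, ratio=4):
    """ERGAS for a PAN/MS pixel-size ratio of 1/ratio."""
    rmse = np.sqrt(np.mean((ref - fused) ** 2, axis=(0, 1)))  # per band
    mu = np.mean(ref, axis=(0, 1))                            # per band
    return float(100.0 / ratio * np.sqrt(np.mean((rmse / mu) ** 2)))

def psnr(ref, fused, max_value):
    """PSNR in dB; undefined (infinite) when the images are identical."""
    mse = np.mean((ref - fused) ** 2)
    return float(10.0 * np.log10(max_value**2 / mse))

def laplacian(band):
    """3x3 Laplacian response (8 at the centre, -1 elsewhere), valid region only."""
    h, w = band.shape
    centre = band[1:-1, 1:-1]
    window_sum = sum(band[1 + dy:h - 1 + dy, 1 + dx:w - 1 + dx]
                     for dy in (-1, 0, 1) for dx in (-1, 0, 1))
    return 8.0 * centre - (window_sum - centre)

def scc(ref_band, fused_band):
    """Pearson correlation between the Laplacian details of two single bands."""
    a = laplacian(ref_band).ravel()
    b = laplacian(fused_band).ravel()
    return float(np.corrcoef(a, b)[0, 1])

def qnr(d_lambda, d_s, alpha=1.0, beta=1.0):
    """QNR from given spectral (d_lambda) and spatial (d_s) distortions."""
    return (1.0 - d_lambda) ** alpha * (1.0 - d_s) ** beta

# Tiny synthetic check: a constant 3-band reference and a fused image offset by +1.
ref = np.full((4, 4, 3), 2.0)
fused = ref + 1.0
scores = (sam_degrees(ref, fused), ergas(ref, fused), psnr(ref, fused, max_value=10.0))
```

In the synthetic check the fused spectra are parallel to the reference spectra, so the spectral angle is essentially zero even though ERGAS and PSNR register the radiometric offset; this is the usual reason for reporting the metrics together rather than in isolation.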

4.5. Results and Discussion

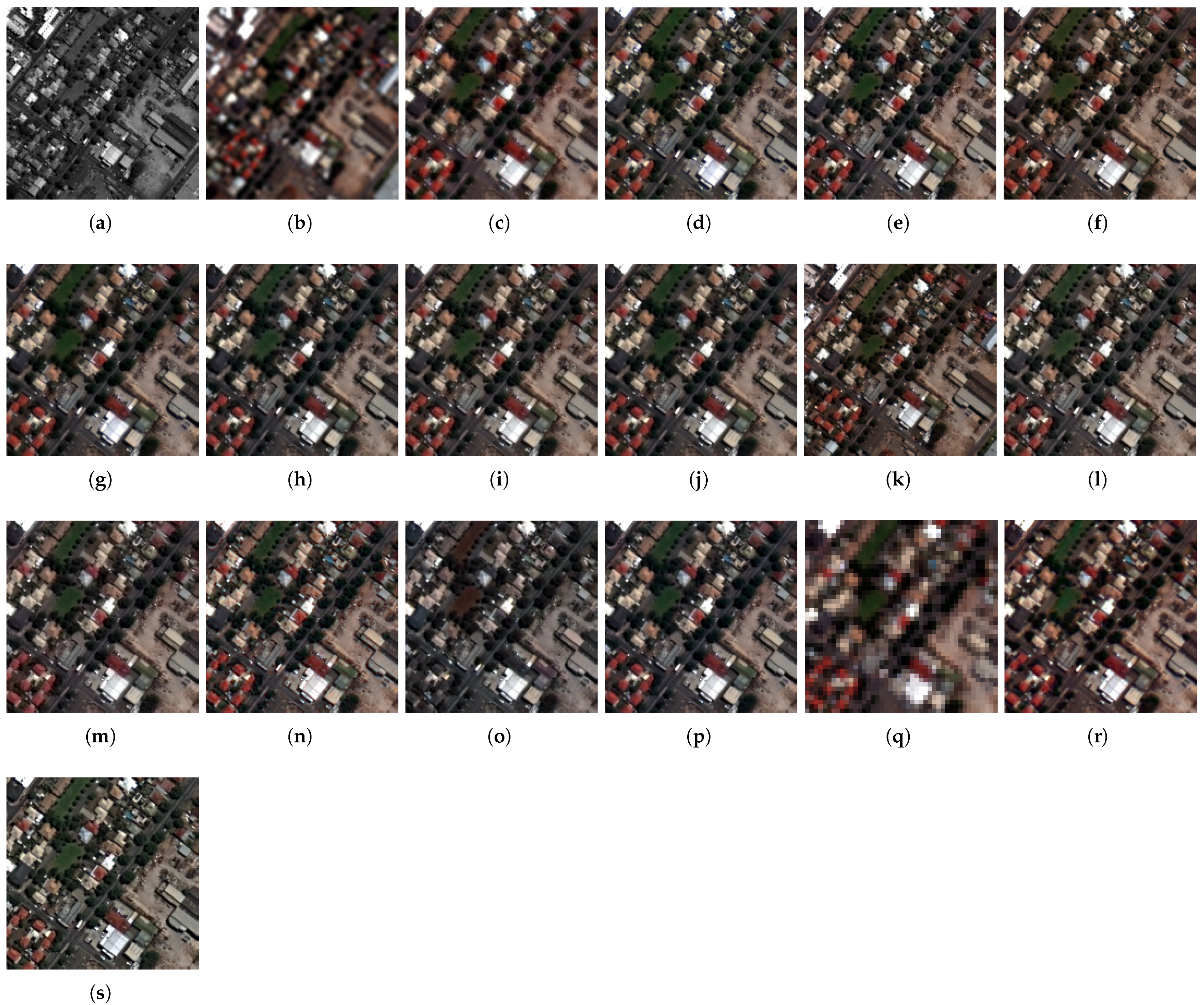

4.5.1. General Fusion Results

4.5.2. Full-Scale Image Results

4.5.3. Quantitative Performances

5. Conclusions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| AWLP | Additive wavelet luminance proportion |

| BDSD | Band-dependent spatial detail |

| BT-H | Brovey (BT) with haze correction |

| CS | Component substitution |

| CNN | Pansharpening based on convolutional neural network |

| CS-D | Pansharpening based on sparse representation of injected details |

| ERGAS | Erreur Relative Globale Adimensionnelle de Synthèse |

| EXP | Expanded multispectral image |

| FE-HPM | Filter estimation based on a semi-blind deconvolution framework and HPM model |

| GLP | Generalized Laplacian pyramid |

| GLP-BPT | GLP based on binary partition tree |

| GLP-MTF | GLP tailored to the MTF |

| GLP-SDM | GLP based on spectral distortion minimization |

| GLP-OLS | GLP based on ordinary least squares model |

| GraphGLP | Pansharpening based on LP and graph model |

| GSA | Gram–Schmidt adaptive algorithm |

| GSA-BPT | GSA with binary partition tree |

| MF-HG | Half-gradient morphological operator |

| MRA | Multi-resolution analysis |

| MMs | Model-based methods |

| MS | Multispectral image |

| MTF | Modulation transfer function |

| NIR | Near-infrared band |

| PAN | Panchromatic image |

| PRACS | Partial replacement adaptive CS |

| PSNR | Peak signal-to-noise ratio |

| PNN | Pansharpening based on neural networks |

| PWMBF | Model-based fusion using PCA and wavelets |

| RAG | Region adjacency graph |

| SAM | Spectral angle mapper |

| SCC | Spatial correlation coefficient |

| SLIC | Simple linear iterative clustering |

| SSIM | Structural similarity index measure |

| TV | Pansharpening based on total variation |

| $Q2^n$ | Quaternion-based index |

| QNR | Quality with no reference |

References

1. Vivone, G.; Alparone, L.; Chanussot, J.; Dalla Mura, M.; Garzelli, A.; Licciardi, G.A.; Restaino, R.; Wald, L. A Critical Comparison Among Pansharpening Algorithms. IEEE Trans. Geosci. Remote Sens. 2015, 53, 2565–2586.
2. Vivone, G.; Mura, M.D.; Garzelli, A.; Pacifici, F. A Benchmarking Protocol for Pansharpening: Dataset, Preprocessing, and Quality Assessment. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 6102–6118.
3. Vivone, G.; Mura, M.D.; Garzelli, A.; Restaino, R.; Scarpa, G.; Ulfarsson, M.O.; Alparone, L.; Chanussot, J. A New Benchmark Based on Recent Advances in Multispectral Pansharpening: Revisiting Pansharpening with Classical and Emerging Pansharpening Methods. IEEE Geosci. Remote Sens. Mag. 2021, 9, 53–81.
4. Hallabia, H. Advanced Trends in Optical Remotely Sensed Data Fusion: Pansharpening Case Study. Iris J. Astron. Satell. Commun. 2025, 1, 1–3.
5. Alparone, L.; Garzelli, A. Benchmarking of Multispectral Pansharpening: Reproducibility, Assessment, and Meta-Analysis. J. Imaging 2025, 11, 1.
6. Hallabia, H.; Kallel, A.; Hamida, A.B.; Hégarat-Mascle, S.L. High Spectral Quality Pansharpening Approach based on MTF-matched Filter Banks. Multidimens. Syst. Signal Process. 2016, 27, 831–861.
7. Hallabia, H.; Hamam, H.; Hamida, A.B. An Optimal Use of SCE-UA Method Cooperated With Superpixel Segmentation for Pansharpening. IEEE Geosci. Remote Sens. Lett. 2020, 18, 1620–1624.
8. Meng, X.; Shen, H.; Li, H.; Zhang, L.; Fu, R. Review of the pansharpening methods for remote sensing images based on the idea of meta-analysis: Practical discussion and challenges. Inf. Fusion 2019, 46, 102–113.
9. Benzenati, T.; Kessentini, Y.; Kallel, A.; Hallabia, H. Generalized Laplacian Pyramid Pan-Sharpening Gain Injection Prediction Based on CNN. IEEE Geosci. Remote Sens. Lett. 2019, 17, 651–655.
10. Restaino, R.; Vivone, G.; Addesso, P.; Chanussot, J. A Pansharpening Approach Based on Multiple Linear Regression Estimation of Injection Coefficients. IEEE Geosci. Remote Sens. Lett. 2019, 17, 102–106.
11. Vivone, G.; Marano, S.; Chanussot, J. Pansharpening: Context-Based Generalized Laplacian Pyramids by Robust Regression. IEEE Trans. Geosci. Remote Sens. 2020, 58, 6152–6167.
12. Addesso, P.; Vivone, G.; Restaino, R.; Chanussot, J. A Data-Driven Model-Based Regression Applied to Panchromatic Sharpening. IEEE Trans. Image Process. 2020, 29, 7779–7794.
13. Gillespie, A.R.; Kahle, A.B.; Walker, R.E. Color enhancement of highly correlated images. II. Channel ratio and “chromaticity” transformation techniques. Remote Sens. Environ. 1987, 22, 343–365.
14. Lolli, S.; Alparone, L.; Garzelli, A.; Vivone, G. Haze Correction for Contrast-Based Multispectral Pansharpening. IEEE Geosci. Remote Sens. Lett. 2017, 14, 2255–2259.
15. Chavez, P.S.; Sides, S.C.; Anderson, J.A. Comparison of Three Different Methods to Merge Multiresolution and Multispectral Data: Landsat TM and SPOT Panchromatic. Photogramm. Eng. Remote Sens. 1991, 57, 295–303.
16. Tu, T.M.; Su, S.C.; Shyu, H.C.; Huang, P.S. A new look at IHS-like image fusion methods. Inf. Fusion 2001, 2, 177–186.
17. Aiazzi, B.; Baronti, S.; Selva, M. Improving Component Substitution Pansharpening Through Multivariate Regression of MS +Pan Data. IEEE Trans. Geosci. Remote Sens. 2007, 45, 3230–3239.
18. Choi, J.; Yu, K.; Kim, Y. A New Adaptive Component-Substitution-Based Satellite Image Fusion by Using Partial Replacement. IEEE Trans. Geosci. Remote Sens. 2011, 49, 295–309.
19. Garzelli, A.; Nencini, F.; Capobianco, L. Optimal MMSE Pan Sharpening of Very High Resolution Multispectral Images. IEEE Trans. Geosci. Remote Sens. 2008, 46, 228–236.
20. Ghahremani, M.; Ghassemian, H. Nonlinear IHS: A Promising Method for Pan-Sharpening. IEEE Geosci. Remote Sens. Lett. 2016, 13, 1606–1610.
21. Khan, M.; Chanussot, J.; Condat, L.; Montavert, A. Indusion: Fusion of multispectral and panchromatic images using the induction scaling technique. IEEE Geosci. Remote Sens. Lett. 2008, 5, 98–102.
22. Aiazzi, B.; Alparone, L.; Baronti, S.; Garzelli, A. Context-driven fusion of high spatial and spectral resolution images based on oversampled multiresolution analysis. IEEE Trans. Geosci. Remote Sens. 2002, 40, 2300–2312.
23. Aiazzi, B.; Alparone, L.; Baronti, S.; Garzelli, A.; Selva, M. MTF-tailored Multiscale Fusion of High-resolution MS and Pan Imagery. Photogramm. Eng. Remote Sens. 2006, 72, 591–596.
24. Hallabia, H.; Hamam, H.; Hamida, A.B. A context-driven pansharpening method using superpixel based texture analysis. Int. J. Image Data Fusion 2021, 12, 1–22.
25. Vivone, G.; Restaino, R.; Chanussot, J. A Regression-Based High-Pass Modulation Pansharpening Approach. IEEE Trans. Geosci. Remote Sens. 2018, 56, 984–996.
26. Vivone, G.; Restaino, R.; Chanussot, J. Full Scale Regression-Based Injection Coefficients for Panchromatic Sharpening. IEEE Trans. Image Process. 2018, 27, 3418–3431.
27. Nunez, J.; Otazu, X.; Fors, O.; Prades, A.; Pala, V.; Arbiol, R. Multiresolution-based image fusion with additive wavelet decomposition. IEEE Trans. Geosci. Remote Sens. 1999, 37, 1204–1211.
28. Restaino, R.; Vivone, G.; Mura, M.D.; Chanussot, J. Fusion of Multispectral and Panchromatic Images Based on Morphological Operators. IEEE Trans. Image Process. 2016, 25, 2882–2895.
29. Otazu, X.; González-Audícana, M.; Fors, O.; Nunez, J. Introduction of sensor spectral response into image fusion methods. Application to wavelet-based methods. IEEE Trans. Geosci. Remote Sens. 2005, 43, 2376–2385.
30. Kallel, A. MTF-Adjusted Pansharpening Approach Based on Coupled Multiresolution Decompositions. IEEE Trans. Geosci. Remote Sens. 2015, 53, 3124–3145.
31. Liao, W.; Huang, X.; Coillie, F.V.; Gautama, S.; Pižurica, A.; Philips, W.; Liu, H.; Zhu, T.; Shimoni, M.; Moser, G.; et al. Processing of Multiresolution Thermal Hyperspectral and Digital Color Data: Outcome of the 2014 IEEE GRSS Data Fusion Contest. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2015, 8, 2984–2996.
32. He, X.; Condat, L.; Bioucas-Dias, J.; Chanussot, J.; Xia, J. A New Pansharpening Method Based on Spatial and Spectral Sparsity Priors. IEEE Trans. Image Process. 2014, 23, 4160–4174.
33. Masi, G.; Cozzolino, D.; Verdoliva, L.; Scarpa, G. CNN-based pansharpening of multi-resolution remote-sensing images. In Proceedings of the 2017 Joint Urban Remote Sensing Event (JURSE), Dubai, United Arab Emirates, 6–8 March 2017; pp. 1–4.
34. Wei, Y.; Yuan, Q.; Shen, H.; Zhang, L. Boosting the Accuracy of Multispectral Image Pansharpening by Learning a Deep Residual Network. IEEE Geosci. Remote Sens. Lett. 2017, 14, 1795–1799.
35. Vivone, G.; Deng, L.J.; Deng, S.; Hong, D.; Jiang, M.; Li, C.; Li, W.; Shen, H.; Wu, X.; Xiao, J.L.; et al. Deep Learning in Remote Sensing Image Fusion: Methods, protocols, data, and future perspectives. IEEE Geosci. Remote Sens. Mag. 2025, 13, 269–310.
36. Palsson, F.; Sveinsson, J.R.; Ulfarsson, M.O.; Benediktsson, J.A. Model-Based Fusion of Multi- and Hyperspectral Images Using PCA and Wavelets. IEEE Trans. Geosci. Remote Sens. 2015, 53, 2652–2663.
37. Vivone, G.; Restaino, R.; Mura, M.D.; Chanussot, J. Multi-band semiblind deconvolution for pansharpening applications. In Proceedings of the 2015 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Milan, Italy, 26–31 July 2015; pp. 41–44.
38. Palsson, F.; Sveinsson, J.R.; Ulfarsson, M.O. A New Pansharpening Algorithm Based on Total Variation. IEEE Geosci. Remote Sens. Lett. 2014, 11, 318–322.
39. Vicinanza, M.R.; Restaino, R.; Vivone, G.; Mura, M.D.; Chanussot, J. A Pansharpening Method Based on the Sparse Representation of Injected Details. IEEE Geosci. Remote Sens. Lett. 2015, 12, 180–184.
40. Scarpa, G.; Vitale, S.; Cozzolino, D. Target-Adaptive CNN-Based Pansharpening. IEEE Trans. Geosci. Remote Sens. 2018, 56, 5443–5457.
41. Ma, J.; Yu, W.; Chen, C.; Liang, P.; Guo, X.; Jiang, J. Pan-GAN: An unsupervised pan-sharpening method for remote sensing image fusion. Inf. Fusion 2020, 62, 110–120.
42. Azarang, A.; Manoochehri, H.E.; Kehtarnavaz, N. Convolutional Autoencoder-Based Multispectral Image Fusion. IEEE Access 2019, 7, 35673–35683.
43. Masi, G.; Cozzolino, D.; Verdoliva, L.; Scarpa, G. Pansharpening by Convolutional Neural Networks. Remote Sens. 2016, 8, 594.
44. Wald, L.; Ranchin, T.; Mangolini, M. Fusion of satellite images of different spatial resolutions: Assessing the quality of resulting images. Photogramm. Eng. Remote Sens. 1997, 63, 691–699.
45. Restaino, R.; Mura, M.D.; Vivone, G.; Chanussot, J. Context-Adaptive Pansharpening Based on Image Segmentation. IEEE Trans. Geosci. Remote Sens. 2017, 55, 753–766.
46. Achanta, R.; Shaji, A.; Smith, K.; Lucchi, A.; Fua, P.; Süsstrunk, S. SLIC superpixels compared to state-of-the-art superpixel methods. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 34, 2274–2282.
47. Aiazzi, B.; Baronti, S.; Lotti, F.; Selva, M. A Comparison Between Global and Context-Adaptive Pansharpening of Multispectral Images. IEEE Geosci. Remote Sens. Lett. 2009, 6, 302–306.
48. Bampis, C.G.; Maragos, P.; Bovik, A.C. Graph-Driven Diffusion and Random Walk Schemes for Image Segmentation. IEEE Trans. Image Process. 2017, 26, 35–50.
49. Xiao, J.L.; Huang, T.Z.; Deng, L.J.; Wu, Z.C.; Vivone, G. A New Context-Aware Details Injection Fidelity with Adaptive Coefficients Estimation for Variational Pansharpening. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–15.
50. Selva, M.; Santurri, L.; Baronti, S. On the Use of the Expanded Image in Quality Assessment of Pansharpened Images. IEEE Geosci. Remote Sens. Lett. 2018, 15, 320–324.
51. Tu, T.M.; Huang, P.S.; Hung, C.L.; Chang, C.P. A fast intensity-hue-saturation fusion technique with spectral adjustment for IKONOS imagery. IEEE Geosci. Remote Sens. Lett. 2004, 1, 309–312.
52. Liu, J.G. Smoothing Filter-based Intensity Modulation: A spectral preserve image fusion technique for improving spatial details. Int. J. Remote Sens. 2000, 21, 3461–3472.
53. Wang, Z.; Bovik, A. A universal image quality index. IEEE Signal Process. Lett. 2002, 9, 81–84.
54. Zhou, J.; Civco, D.L.; Silander, J.A. A wavelet transform method to merge Landsat TM and SPOT panchromatic data. Int. J. Remote Sens. 1998, 19, 743–757.
55. Alparone, L.; Aiazzi, B.; Baronti, S.; Garzelli, A.; Nencini, F.; Selva, M. Multispectral and panchromatic data fusion assessment without reference. Photogramm. Eng. Remote Sens. 2008, 74, 193–200.
56. Yuhas, R.H.; Goetz, A.F.; Boardman, J.W. Discrimination among semi-arid landscape endmembers using the Spectral Angle Mapper (SAM) algorithm. In Proceedings of the JPL, Summaries of the Third Annual JPL Airborne Geoscience Workshop, Pasadena, CA, USA, 1–5 June 1992; pp. 147–149.
57. Wang, Z.; Bovik, A.C.; Sheikh, H.R.; Simoncelli, E.P. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.
58. Huynh-Thu, Q.; Ghanbari, M. Scope of validity of PSNR in image/video quality assessment. Electron. Lett. 2008, 44, 800–801.
59. Hallabia, H.; Hamam, H. A Graph-Based Textural Superpixel Segmentation Method for Pansharpening Application. In Proceedings of the 2021 IEEE International Geoscience and Remote Sensing Symposium IGARSS, Brussels, Belgium, 11–16 July 2021; pp. 2640–2643.
60. Hallabia, H.; Hamam, H. An Enhanced Pansharpening Approach Based on Second-Order Polynomial Regression. In Proceedings of the 2021 International Wireless Communications and Mobile Computing (IWCMC), Harbin City, China, 28 June–2 July 2021; pp. 1489–1493.

| Method | Q4 | SAM | ERGAS | SCC | SSIM | PSNR | QNR |
|---|---|---|---|---|---|---|---|
| EXP | 0.741 | 9.474 | 10.389 | 0.243 | 0.734 | −39.318 | 0.921 |
| BDSD | 0.879 | 8.788 | 7.526 | 0.636 | 0.850 | −36.985 | 0.926 |
| PRACS | 0.839 | 9.380 | 8.549 | 0.586 | 0.819 | −37.751 | 0.931 |
| GSA | 0.853 | 9.456 | 7.888 | 0.637 | 0.837 | −37.441 | 0.881 |
| GSA-BPT | 0.901 | 8.498 | 6.887 | 0.657 | 0.884 | −36.047 | 0.897 |
| AWLP | 0.898 | 8.377 | 7.399 | 0.661 | 0.892 | −36.085 | 0.919 |
| MF-HG | 0.911 | 8.762 | 6.656 | 0.676 | 0.874 | −36.222 | 0.846 |
| CS-D | 0.861 | 12.557 | 7.961 | 0.661 | 0.810 | −38.375 | 0.675 |
| GLP-MTF | 0.901 | 8.689 | 6.863 | 0.658 | 0.880 | −36.261 | 0.839 |
| GLP-SDM | 0.912 | 8.812 | 6.465 | 0.682 | 0.875 | −36.095 | 0.858 |
| GLP-OLS | 0.905 | 9.184 | 6.725 | 0.652 | 0.869 | −36.504 | 0.835 |
| GLP-BPT | 0.902 | 8.492 | 6.823 | 0.656 | 0.886 | −35.974 | 0.935 |
| PWMBF | 0.874 | 10.133 | 7.489 | 0.645 | 0.836 | −37.550 | 0.764 |
| TV | 0.862 | 9.371 | 7.874 | 0.413 | 0.809 | −37.910 | 0.942 |
| FE-HPM | 0.914 | 8.804 | 6.419 | 0.681 | 0.876 | −36.066 | 0.839 |
| BT-H | 0.910 | 8.168 | 6.591 | 0.690 | 0.887 | −35.877 | 0.913 |
| PNN | 0.889 | 8.016 | 6.905 | 0.624 | 0.873 | −36.013 | 0.919 |
| CNN | 0.899 | 8.285 | 6.684 | 0.644 | 0.872 | −35.992 | 0.941 |
| GraphGLP-OLS | 0.903 | 8.999 | 6.749 | 0.655 | 0.873 | −36.392 | 0.935 |
| GraphGLP-Simplex | 0.924 | 8.954 | 6.381 | 0.665 | 0.895 | −36.061 | 0.935 |

| Method | Q8 | SAM | ERGAS | SCC | SSIM | PSNR | QNR |
|---|---|---|---|---|---|---|---|
| EXP | 0.706 | 8.205 | 8.121 | 0.249 | 0.601 | −43.713 | 0.915 |
| BDSD | 0.845 | 7.957 | 6.161 | 0.646 | 0.770 | −41.506 | 0.943 |
| PRACS | 0.847 | 8.174 | 5.871 | 0.665 | 0.794 | −41.167 | 0.917 |
| GSA | 0.838 | 8.178 | 5.970 | 0.685 | 0.792 | −41.326 | 0.884 |
| GSA-BPT | 0.885 | 7.811 | 5.266 | 0.709 | 0.834 | −40.210 | 0.847 |
| AWLP | 0.875 | 7.950 | 5.466 | 0.704 | 0.820 | −40.410 | 0.890 |
| MF-HG | 0.880 | 8.040 | 5.512 | 0.698 | 0.817 | −40.661 | 0.835 |
| CS-D | 0.803 | 11.520 | 6.628 | 0.700 | 0.734 | −42.486 | 0.660 |
| GLP-MTF | 0.880 | 7.956 | 5.391 | 0.703 | 0.822 | −40.457 | 0.838 |
| GLP-SDM | 0.881 | 8.028 | 5.355 | 0.705 | 0.820 | −40.441 | 0.845 |
| GLP-OLS | 0.887 | 8.174 | 5.250 | 0.698 | 0.830 | −40.364 | 0.850 |
| GLP-BPT | 0.886 | 7.795 | 5.236 | 0.708 | 0.835 | −40.164 | 0.879 |
| PWMBF | 0.855 | 8.985 | 5.788 | 0.701 | 0.796 | −41.239 | 0.753 |
| TV | 0.895 | 7.853 | 5.206 | 0.708 | 0.839 | −40.203 | 0.949 |
| FE-HPM | 0.882 | 8.030 | 5.347 | 0.705 | 0.821 | −40.429 | 0.839 |
| BT-H | 0.894 | 8.049 | 5.225 | 0.713 | 0.835 | −40.197 | 0.816 |
| PNN | 0.860 | 8.611 | 7.037 | 0.540 | 0.785 | −42.137 | 0.908 |
| CNN | 0.763 | 8.910 | 7.283 | 0.403 | 0.651 | −43.048 | 0.920 |
| GraphGLP-OLS | 0.886 | 8.187 | 5.260 | 0.697 | 0.829 | −40.381 | 0.922 |
| GraphGLP-Simplex | 0.909 | 8.183 | 4.935 | 0.861 | 0.720 | −39.792 | 0.922 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Hallabia, H. A Graph-Based Superpixel Segmentation Approach Applied to Pansharpening. Sensors 2025, 25, 4992. https://doi.org/10.3390/s25164992
