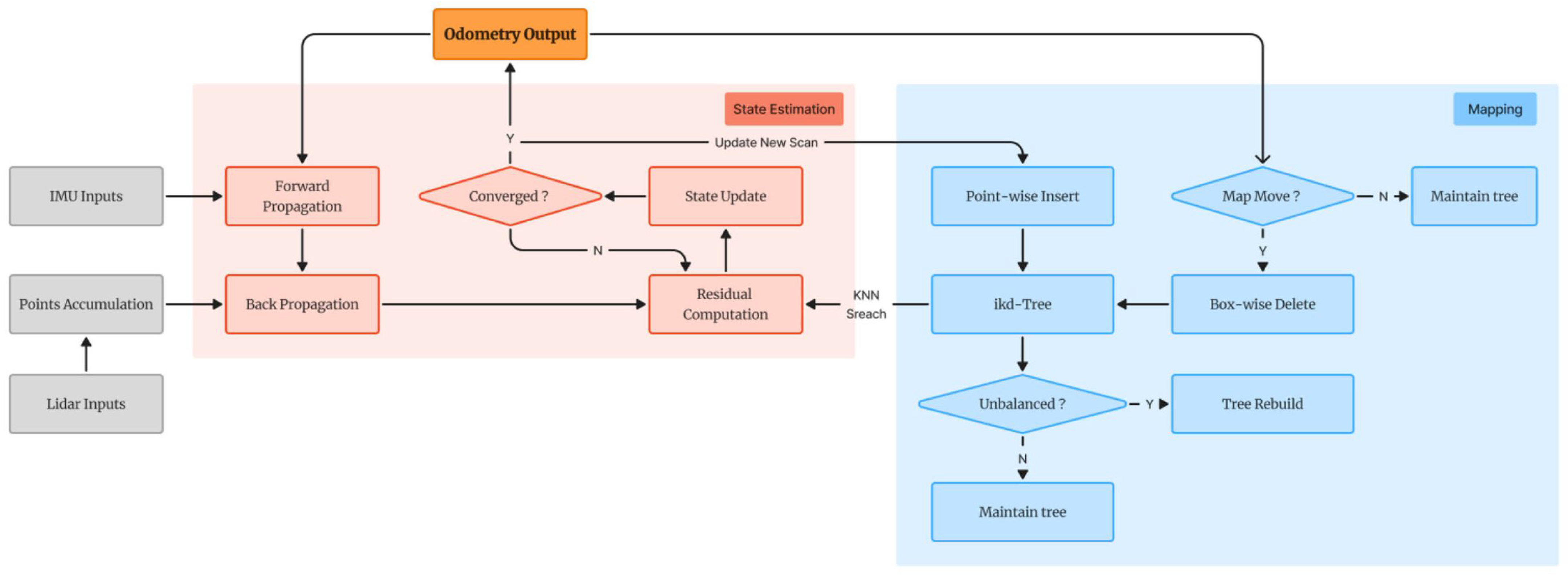

2.1. FAST-LIO2 Framework

FAST-LIO2 is a computationally efficient LiDAR–inertial odometry (LIO) system based on tightly-coupled iterative Kalman filtering, proposed by Wei Xu et al [

6]. Its state estimation module integrates a multi-stage pipeline encompassing IMU-LiDAR data fusion, motion prediction, motion distortion compensation, residual propagation and correction, and incremental state updates, ultimately delivering robust state estimation for real-time SLAM systems.

FAST-LIO2 introduces two key innovations. Firstly, it aligns the raw point clouds directly with the map, eliminating the need for feature extraction and instead using all point cloud data. This approach fully utilizes the subtle features in the environment, enhances the registration accuracy, and saves computational resources and time by omitting additional feature extraction steps, enabling the system to operate more efficiently in real-time. Secondly, it employs an incremental kd-Tree structure (ikd-Tree). The traditional kd-Tree typically requires rebuilding the entire tree with each new point insertion or deletion, which demands significant computational resources and time [

13]. The ikd-Tree adopts an incremental updating approach, allowing gradual updates to the tree structure as new point cloud data is continually received, without the need for complete reconstruction, thus avoiding the waste of computational resources in traditional spatial data structures when dealing with large-scale and dynamic data. The system architecture of FAST-LIO2 is shown in

Figure 1 [

6], which is mainly divided into the state estimation module and the mapping module.

2.1.1. Forward Propagation

Forward propagation predicts the current pose and velocity of the system based on the acceleration and angular velocity from the IMU. This process begins with the state estimation from the previous time step, and updates the state using the current IMU observations. The predicted state from the IMU provides an initial estimate for the next time step, which is then used as the initial value for the LiDAR data optimization. Through forward propagation, the system can rapidly update the robot’s position and orientation, reducing the reliance on LiDAR data and enhancing both computational efficiency and real-time performance. Forward propagation provides a good initial estimate for LiDAR optimization, and the point cloud information provided by the LiDAR further refines the result of the forward propagation, thereby enabling the fusion of IMU and LiDAR data.

2.1.2. Back Propagation

Backward propagation begins by comparing the state estimates obtained from forward propagation with the actual LiDAR data and map to calculate the residuals. A residual function is then constructed, and the residual function is minimized using an optimization algorithm (Gaussian–Newton method). Through iterative optimization, the algorithm adjusts the estimates based on the results from each iteration until the residual function converges, ultimately yielding an accurate state estimate. During LiDAR data acquisition, point clouds are accumulated over each scanning cycle. Consequently, different feature points are scanned at different times, and if the robot is in motion during this phase, its reference frame also moves. The scene observed by the LiDAR is affected by this motion, leading to motion distortions that impact the accuracy of the data. In backward propagation, the IMU-provided acceleration and angular velocity data are used to estimate and compensate for the motion distortions in the LiDAR data [

14].

2.1.3. Incremental Update and Rebalancing of ikd-Tree

In FAST-LIO2, incremental updates to the kd-tree are primarily achieved through point insertion and box deletion operations. Point insertion utilizes a tree downsampling algorithm. Initially, based on the estimated state and the given resolution l, the space is divided into cubes with side length l, and the cube C containing the point P is identified. Within C, the point , which is closest to the center , is located, and all other points are deleted. The point is then inserted into the ikd-tree. The insertion process begins with a recursive search from the root node, comparing the point in question with the tree nodes, recursively partitioning along the axis until an empty node is found, at which point the insertion point is stored and a new leaf node is created with initialized properties. Following the insertion, balance checks are performed to ensure the tree’s structural integrity.

Box deletion is carried out using lazy tags and range information, specifically the states of “deleted” and “treedeleted” in the node structure. Points marked as “deleted” are not immediately removed; instead, they are deleted during the tree reconstruction process. When all child nodes of a root node are marked as “deleted”, the root node itself is tagged as “treedeleted”. In the box deletion operation, if the bounding box containing the points to be deleted does not intersect with the bounding box , no update occurs. If completely contains , the states of “deleted” and “treedeleted” are set to true. If intersects with and the point P is within , point P is deleted, followed by attribute updates and rebalancing.

The balancing criteria for the ikd-tree consist of the

-balancing criterion and the

-deletion criterion. For a node

T and its children, the

-balancing criterion is satisfied when:

where

represents the treesize attribute of node

T, and

is a balancing factor for the tree, with

. This condition limits the number of points in the left and right subtrees, thereby controlling the height of the tree. When the following conditions are met, the tree satisfies the

-deletion criterion:

where

denotes the number of ineffective points in node

T, and

is the deletion factor that controls the number of ineffective points to be removed, with

. This condition ensures that ineffective points are removed from the tree without disturbing its structure. The tree is considered balanced when both conditions in Equations (

1) and (

2) are satisfied. If any node fails to meet these criteria, a rebalancing operation is performed to restore the tree’s balance.

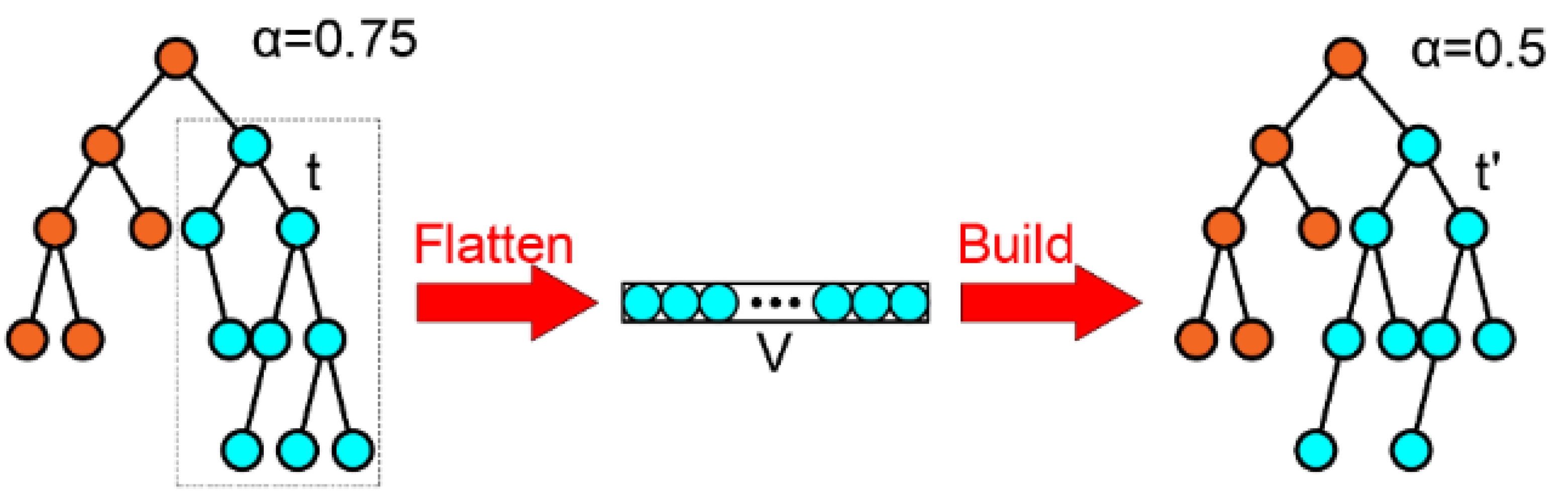

2.1.4. Tree Rebuild

When the reconstruction scale is large and time becomes a bottleneck, the ikd-tree may be monopolized. To address this, a dual-thread reconstruction method is used to improve system efficiency. When the tree’s size is smaller than the threshold

, reconstruction is performed using the main thread. Otherwise, reconstruction is handled by a secondary thread to ensure a balanced tree. During this process, insertions are temporarily suspended, and updates are stored in a vector V of active points from child nodes. The balance increment updates are logged into the OperationLogger. After completing all requests, the new balanced subtree

replaces the original subtree

t. Specifically, when the balance criterion detects that a certain subtree is unbalanced, all the nodes of the subtree will be randomly rearranged. After removing the nodes that have been marked for deletion, the remaining nodes will be reconstructed and then replaced back to their original positions, as shown in

Figure 2. If the balance criterion determines that this subtree is balanced, the the tree is kept in its original form.

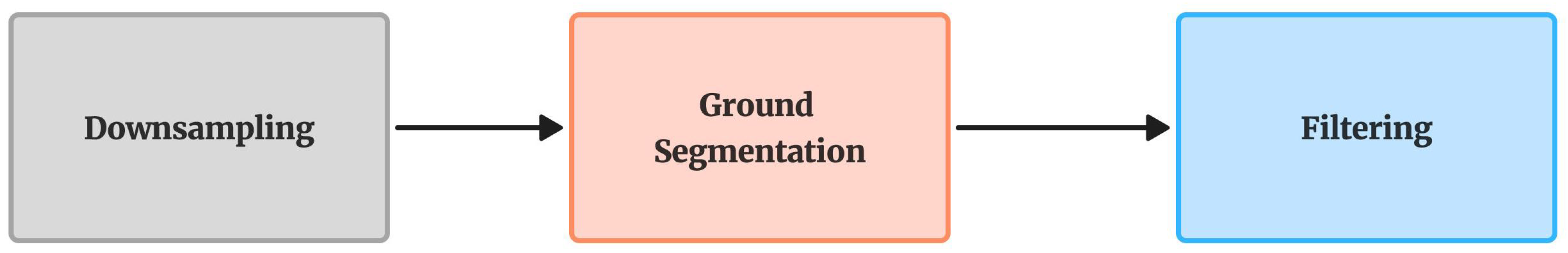

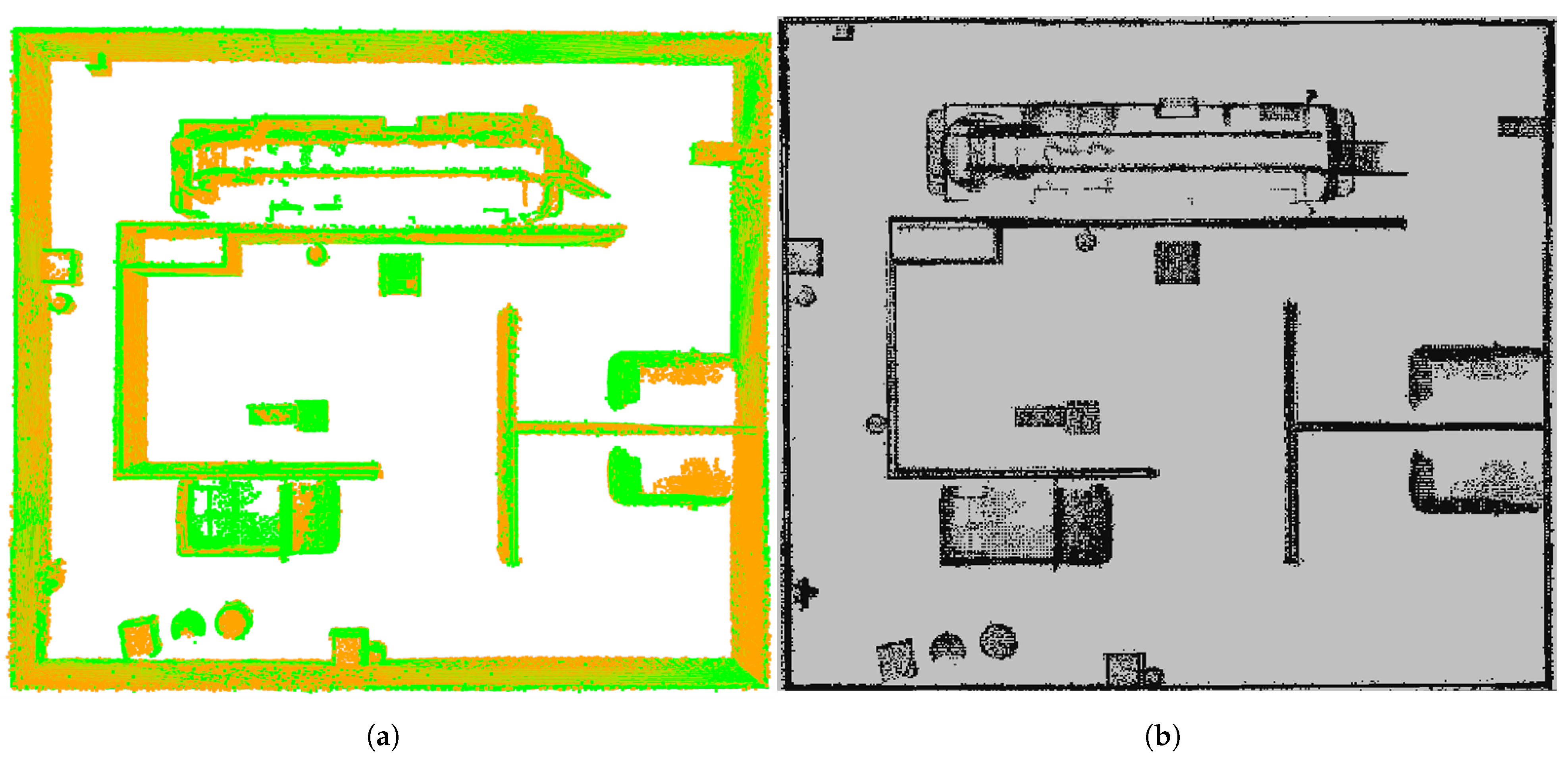

2.2. Preprocess

To enhance the quality and efficiency of the original point cloud data, as well as to provide a streamlined and reliable dataset for subsequent registration processes [

15], a point cloud preprocessing workflow is illustrated in

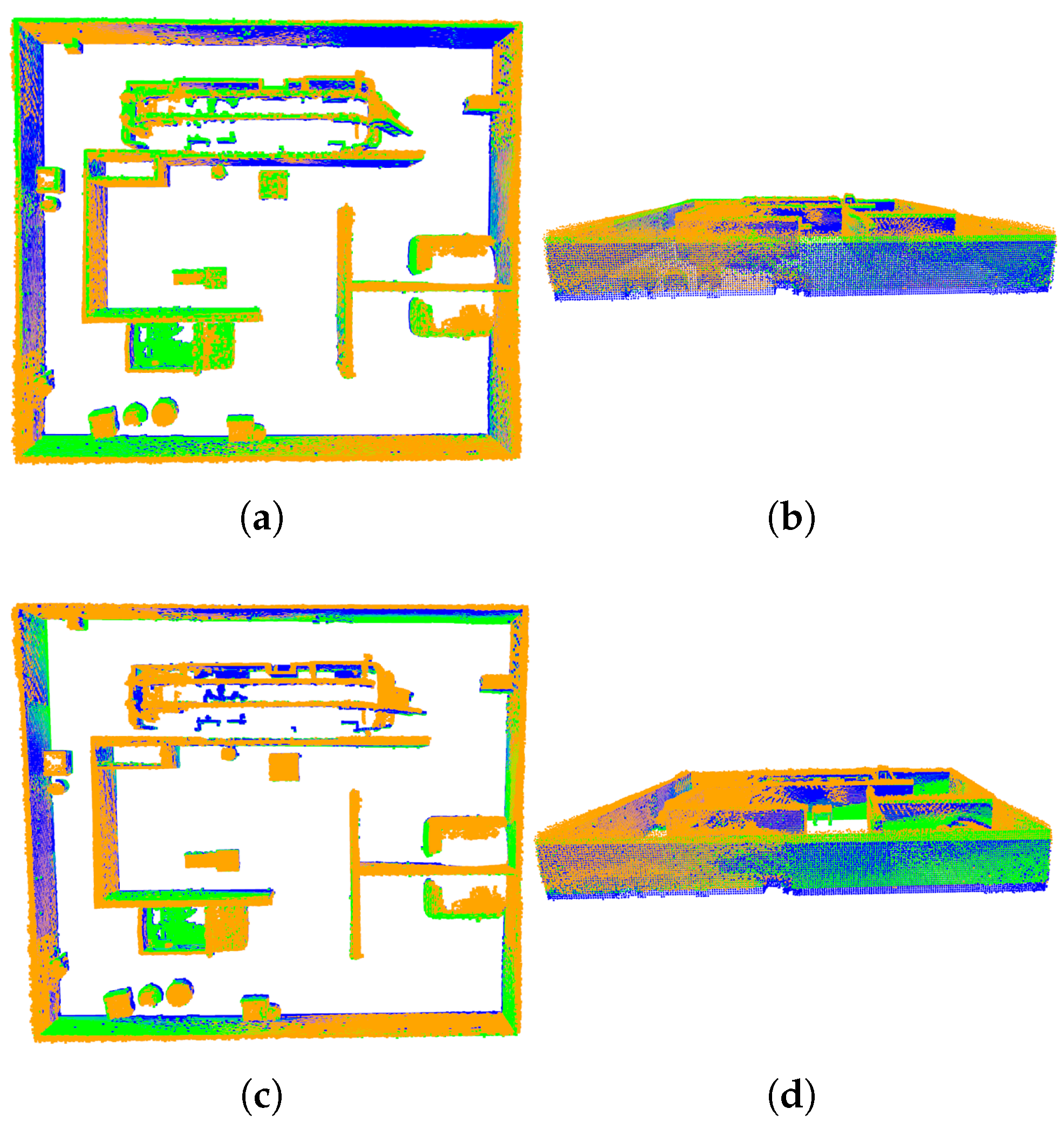

Figure 3. Initially, point cloud downsampling is performed, which reduces the number of data points, thereby lowering computational load and storage requirements while preserving the principal geometric features. This is followed by point cloud segmentation, which divides complex point cloud data into independent regions or objects with similar characteristics, thus providing a more precise and efficient data foundation for subsequent target recognition, scene reconstruction, and feature extraction. Finally, point cloud filtering is conducted to remove noise, outliers, and redundant data, while retaining critical geometric information, thereby reducing data volume and computational complexity.

In this paper, we employ voxel downsampling, a commonly used method for simplifying point cloud data. The fundamental concept involves partitioning the three-dimensional space into a series of equally-sized voxels (cubes), and replacing all points within each voxel with a representative point, typically the centroid. The aim of voxel downsampling is to transform the original point cloud

into a new point cloud

, which has fewer points but still approximately preserves the overall structure of the original cloud. We begin by defining a voxel as a cube with edges

. The index

of the voxel unit to which each point

in point cloud

P belongs can be calculated by

where notation

represents the floor function, which rounds down to the nearest integer. The term

can be understood as the voxel containing the point

. Thus, the set of points contained within a voxel unit

can be determined as

In the voxel unit

, all points are replaced by the centroid

of the points contained within. The formula for calculating the centroid is as follows:

The ground plane fitting (GPF) algorithm is well suited for applications such as autonomous driving and robotic navigation, where large-scale LiDAR point cloud data must be processed in real time [

16]. Its key advantages include fast computation, a simple structure, stable iterative convergence, and a deterministic approach that avoids random sampling. GPF effectively filters out ground points, providing a reliable foundation for subsequent obstacle detection and scene understanding. In this work, the GPF algorithm is adopted for ground segmentation.

To enhance the robustness of plane fitting, GPF introduces the concept of the lowest point representative (LPR). The algorithm begins by sorting the input point cloud

P in ascending order based on height. It then selects the lowest

points and computes their average height as follows:

where

denotes the height coordinate of a point. A threshold

is then applied to ensure that the selected seed points are sufficiently close to the actual ground surface. Specifically, all points satisfying

are selected to form the initial ground seed set S. Assuming the ground surface can be approximated by a planar model, the mathematical representation of the plane is given by:

where

denotes the normal vector of the plane, and

represents the coordinates of a point in 3D space. To estimate the plane parameters, a set of seed points

is first selected. The covariance matrix

of the seed points is then computed as:

where

denote the mean of the seed point set. The covariance matrix

is then decomposed using singular value decomposition (SVD), yielding a set of eigenvectors. The eigenvector corresponding to the smallest eigenvalue represents the normal vector

of the estimated ground plane. Subsequently, the plane offset

can be computed, allowing the distance from any point

to the fitted plane be expressed as:

A threshold is set for the point-to-plane distance. If the distance of point to the plane satisfies , the point is classified as a ground point. Otherwise, it is labeled as a non-ground point. The newly identified ground points are then used to update the seed set, and the above process is repeated for iterations to iteratively refine the plane model until convergence.

Statistical filtering is a critical step in point cloud preprocessing, aiming to effectively eliminate noise by analyzing the geometric distribution within local neighborhoods [

17,

18]. The core principle is grounded in the assumption of a Gaussian distribution: Points that are consistent with the local surface typically exhibit neighborhood distances concentrated around a mean value, while outliers deviate significantly from this pattern. This section presents a detailed description of the statistical filtering model, algorithmic workflow, and parameter optimization strategies.

Let

P denote the original point cloud. For a point

, its local neighborhood

is defined by its

k-nearest neighbors. The Euclidean distances from

to all points in

are then computed using the standard formula:

where

is a point in the neighborhood

. Subsequently, the neighborhood statistics are computed by evaluating the mean

and standard deviation

of the Euclidean distances from each point to its neighboring points, as defined by:

where

represents the average neighborhood density for point

i, while

characterizes the degree of dispersion within the local neighborhood. A global statistical threshold is then established by computing the overall mean

and standard deviation

of these local mean distances across all data points, as defined by:

The outlier rejection threshold is defined as . A point is classified as an outlier and subsequently removed if its average neighborhood distance exceeds the threshold T. The parameter is an empirical constant that controls the strictness of the filtering process. A larger value of results in a more conservative filter, retaining most points and removing only those with significantly large deviations, whereas a smaller leads to a more aggressive filter, eliminating a greater number of potential outliers.

2.3. Coarse Registration

After point cloud preprocessing, it becomes feasible to extract geometrically consistent and structured representations from low-quality raw data. However, the core challenge of point cloud registration lies in estimating the rigid transformation between two point sets, especially in scenarios where the initial pose is completely unknown. Traditional single-stage optimization methods, such as the iterative closest point (ICP) algorithm, are prone to local minima due to their sensitivity to initial alignment. To address this, a hierarchical registration strategy is typically adopted: coarse registration performs a global search for plausible alignment hypotheses, while fine registration refines the transformation locally based on the initial alignment [

19]. In the following, we present a detailed investigation of a coarse registration method based on an improved sample consensus initial alignment (SAC-IA), followed by fine registration using the VGICP algorithm.

In this study, we adopt the improved sample consensus initial alignment (SAC-IA) algorithm proposed by Zhang et al [

7]. Building upon the conventional SAC-IA framework, this method introduces scanning angle constraints and geometric shape filtering to effectively reduce the randomness and collinearity issues in feature point selection, thereby enhancing the stability of feature matching. Furthermore, the algorithm employs a three-part error evaluation strategy based on differences in FPFH descriptors [

20], Euclidean distances [

21], and geometric slopes [

22]. This enhances the ability to detect and correct mismatches, avoids convergence to local minima, and reduces the time of the algorithm. The transformation matrix is accepted only when it meets the threshold conditions for initial rotation and translation, thus providing a more accurate initialization for subsequent fine registration. The improved algorithm significantly outperforms the traditional SAC-IA method in terms of computational efficiency, particularly in scenarios involving sparse point clouds or substantial feature variation.

2.3.1. Feature Point Selection in the Target Point Cloud

In the conventional SAC-IA algorithm, the randomness in feature point selection often leads to mismatches due to incorrectly or poorly selected feature points. To address this issue, the improved SAC-IA algorithm introduces constraints on both the scanning angle of the feature set and the projection shape of feature points. Regarding the scanning angle constraint, it is assumed that for a point

in the point cloud, the elevation angle

between the point and the 2D-plane can be calculated as follows:

Next, the scanning angle

is defined, and the set of scanning angles

is denoted as:

The elevation angle range is divided into several intervals corresponding to the elements in . For each point in the point cloud, its elevation angle is compared with each in the set. If , the point is considered to correspond to and is selected as a candidate feature point, where A is a threshold for the allowable difference between the elevation angle and the scanning angle.

To avoid selecting points that are overly concentrated or overly sparse, and to impose constraints on the spatial projection of feature points, one candidate point is randomly selected from the group corresponding to

, denoted as

. Then, two additional points are selected from the candidate groups corresponding to

, satisfying:

where

and

are the upper and lower bounds for the vertical (

y-axis) spacing of the selected points, respectively. The process is repeated for

, and so on, to iteratively construct a set of feature points.

The projection of this feature point set onto the plane is constrained to form an approximate triangle, which effectively mitigates the issues of point degeneracy along the same direction and local point cloud sparsity.

2.3.2. Calculation of FPFH Values

The FPFH algorithm introduces the concept of the simplified point feature histogram (SPFH), which computes geometric relationships only between each point and its neighbors [

6]. Compared to PFH, this reduces the computational complexity significantly. The SPFH is first computed by evaluating a set of angular features between the source point

and its neighbor

using the following expressions:

where the three orthogonal basis vectors are defined as

.

measures the angle between the neighbor’s normal vector

and the direction vector from

to

.

represents the angle between the vector

u and the line segment connecting

and

.

captures the angular difference between the normal vectors of

and

.

Once the SPFH is calculated for each point, the FPFH descriptor of the source point

is computed by aggregating its own SPFH and the weighted SPFH contributions from its neighbors, as given by:

2.3.3. Computation of Transformation Matrix

To estimate the transformation between the source and target point clouds, point pairs

and

with similar FPFH descriptors are first selected. The rotation matrix and translation vector are then computed using singular value decomposition (SVD). The covariance matrix

H between the source and target point sets is constructed as:

where

and

represent the centroids of all source and target points, respectively.

U and

V are

orthogonal matrices, and

is the diagonal matrix of singular values of

H. The rotation matrix

R is then computed by:

Once the rotation matrix

R is obtained, the translation vector

t can be derived to complete the rigid alignment. It is calculated based on the centroid difference between the point sets:

And the full transformation matrix

T is expressed as:

2.3.4. Mismatch Correction

In point cloud registration, mismatches are inevitable, especially when the point cloud contains noise or when feature point selection is suboptimal. The presence of mismatches can lead to inaccurate registration results, thereby affecting the subsequent fine registration process. To address this issue, the improved SAC-IA algorithm incorporates a mismatch correction mechanism. In this process, three evaluation criteria are introduced: FPFH histogram difference, Euclidean distance deviation, and geometric slope deviation. After initial registration, for each matched point pair, the differences in their FPFH descriptors, Euclidean distances, and geometric slopes are calculated. If all three deviations are below their respective thresholds, the match is considered valid and the algorithm proceeds to the next step. Otherwise, the target feature point in the point cloud is reselected.

2.4. Fine Registration

After performing coarse registration between the source and target point clouds, an initial alignment is obtained. However, due to factors such as noise, low overlap ratio, or large initial pose deviation, only a sub-optimal alignment may be achieved. Therefore, fine registration is required to further refine the alignment. Based on the initial pose provided by the coarse registration, the algorithm computes the nearest neighbor correspondences and minimizes the distance errors to eliminate local misalignment. The VGICP algorithm, which replaces traditional nearest neighbor search with a voxel-based definition of closest points, avoids expensive computations, significantly improves efficiency and robustness, and is well-suited for real-time SLAM applications. Accordingly, this paper adopts the VGICP algorithm to achieve fine registration between the source and target point clouds.

In the GICP algorithm, suppose the source point cloud is

and the target point cloud is

, with the goal of estimating the transformation matrix T between them. It is assumed that each point follows a Gaussian distribution, namely,

, where

and

are the mean values, and

and

are the corresponding covariance matrices. The transformation error between the corresponding points

and

is defined as:

According to the properties of Gaussian error propagation, the distribution of

is given by:

To solve for the optimal transformation matrix, GICP employs the maximum likelihood estimation:

Unlike traditional GICP, the VGICP algorithm avoids reliance on precise nearest neighbor search by utilizing voxel-based distribution comparison. First, the distribution model is extended to multiple neighboring points. For each

and its neighborhood

, the error term is defined as:

This can be viewed as a smoothing process over the target distribution. Similar to GICP, the mean and covariance of the transformed error distribution are:

Then, the transformation matrix

T is estimated via maximum likelihood as:

The estimated transformation matrix is applied iteratively to update the source point cloud. This process continues until the transformation error is smaller than a predefined threshold or convergence is achieved, yielding the optimal transformation matrix.

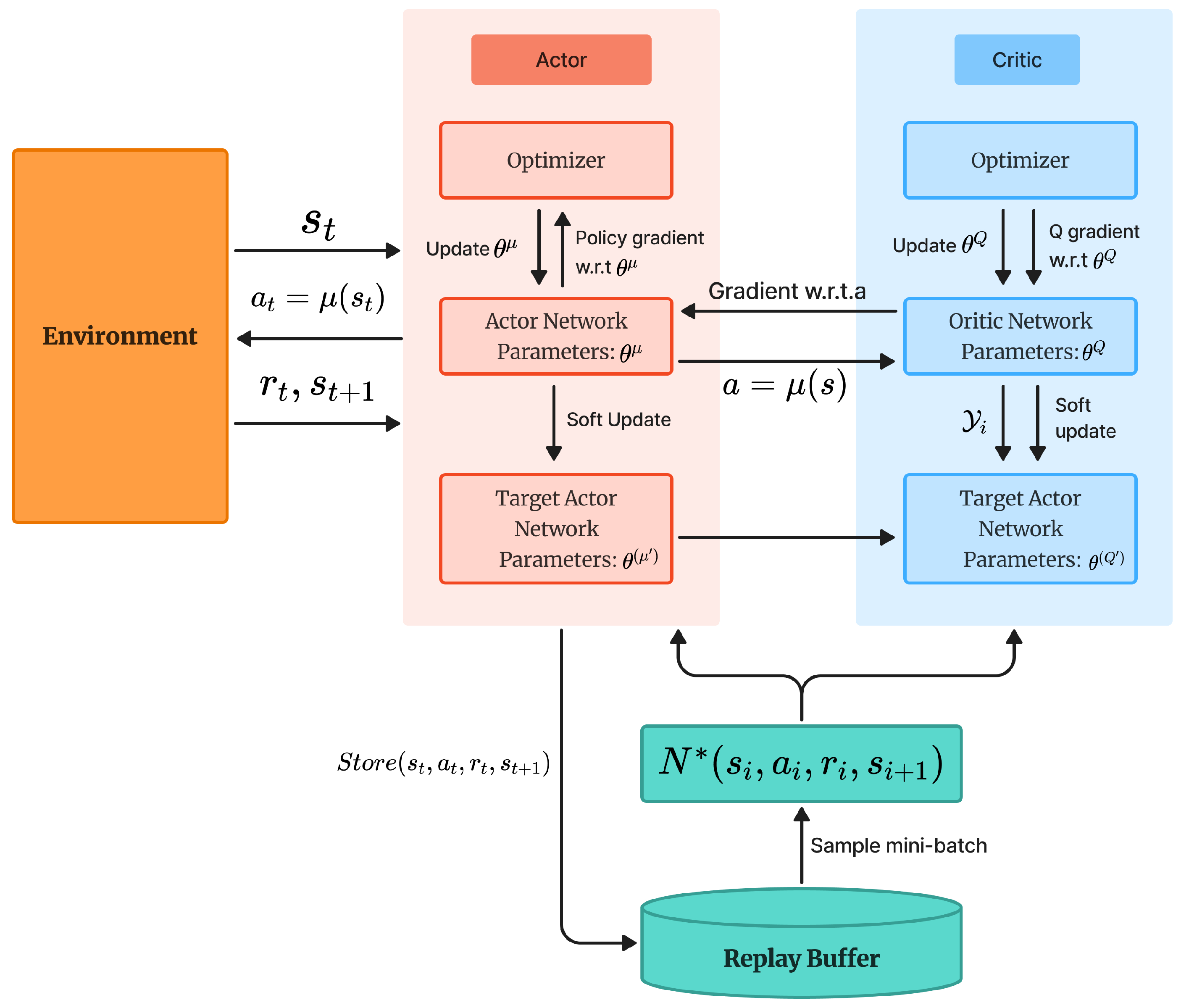

2.5. DDPG-Based Reinforcement Learning Path Planning

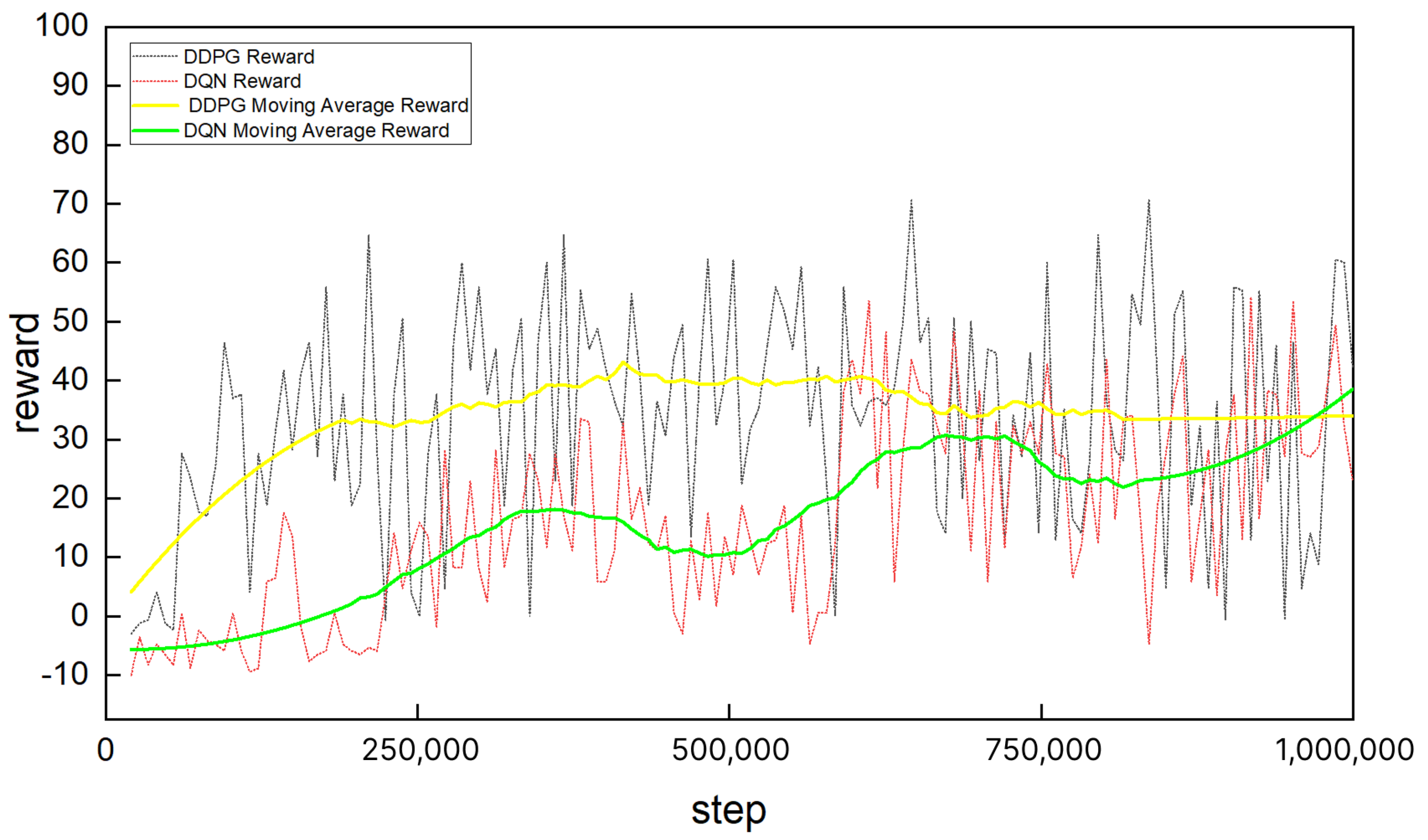

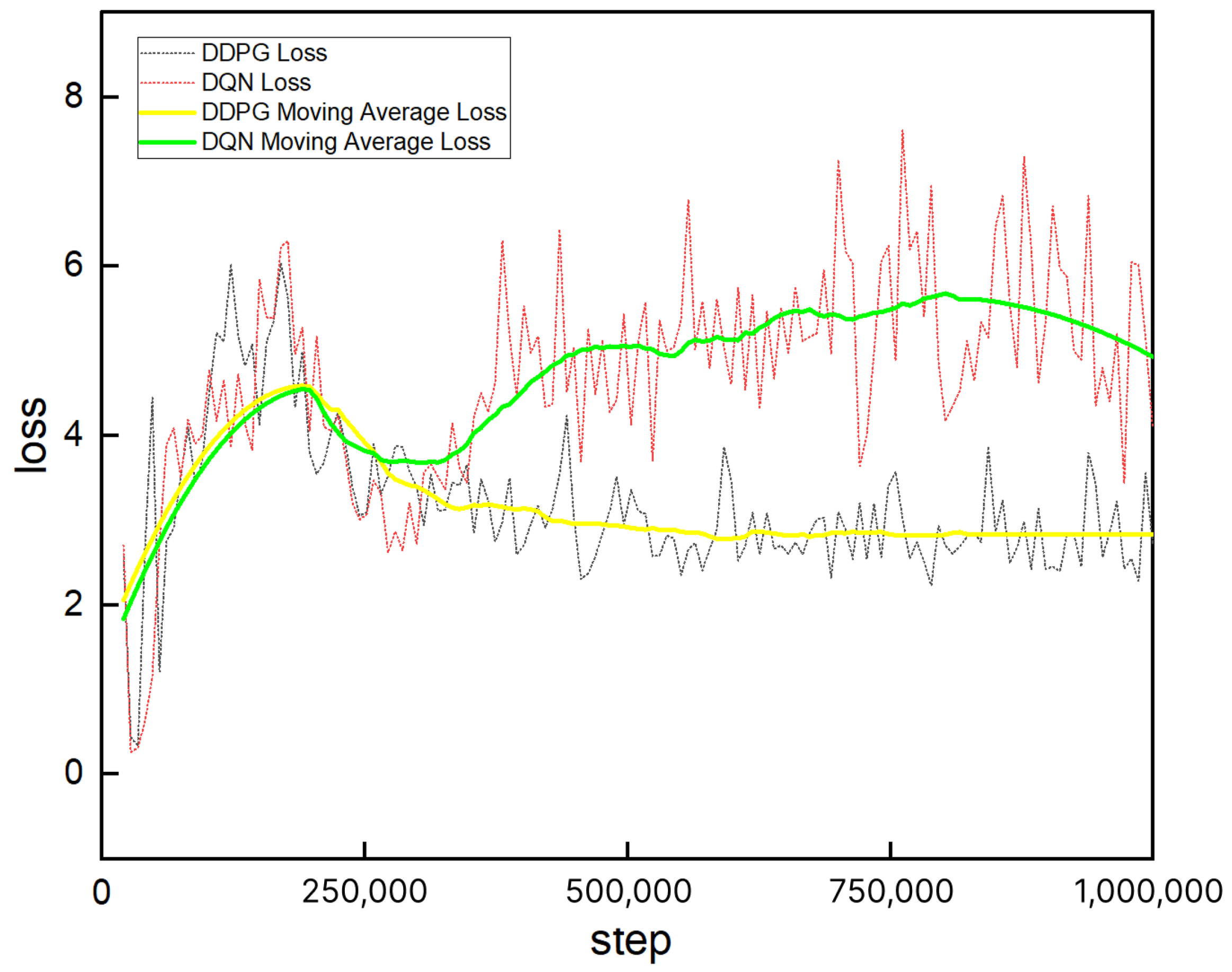

The deep deterministic policy gradient (DDPG) algorithm improves upon DQN primarily by enabling the effective handling of continuous action spaces [

10]. It adopts a deterministic policy and leverages the Actor–Critic architecture. Unlike DQN, which operates in discrete action spaces and relies on the

-greedy strategy, DDPG employs an actor network to output continuous deterministic actions and a critic network to evaluate these actions by estimating their Q-values.

Moreover, DDPG inherits the experience replay and target network mechanisms from DQN. To encourage exploration, noise is added to the deterministic actions, enabling stochastic behavior in continuous action spaces. Additionally, DDPG uses a soft update mechanism to gradually update the target networks, which contributes to improved training stability. The overall workflow of DDPG is illustrated in

Figure 4.

During the DDPG process, the Actor and Critic networks are first initialized similarly to DQN, with the Actor network containing policy network parameters , and the Critic network containing Q-network parameters , along with their corresponding target networks and . The target networks are updated softly to track the parameters of their respective networks.

The Actor network takes the current state as input and outputs a deterministic action . To encourage exploration, noise is added to the action. The interaction tuple is then stored in the replay buffer. During training, a minibatch of experiences is sampled for network updates.

Within the Critic network framework, the target Q-value is computed as follows:

where

is the discount factor,

denotes the target Critic network, and

is the target Actor network. The Critic network is updated by minimizing the mean squared error loss between the estimated Q-value and the target:

The Actor network is updated using the policy gradient, computed as:

Here,

denotes the gradient of the Actor network’s output action with respect to its parameters, and

is the gradient of the Critic network’s output Q-value with respect to the action input.

To further improve training stability, DDPG employs soft updates to gradually adjust the target network parameters as follows:

where

is a small positive constant that ensures smooth target updates. This mechanism helps DDPG maintain structural consistency while improving stability during training.