Smart Safety Helmets with Integrated Vision Systems for Industrial Infrastructure Inspection: A Comprehensive Review of VSLAM-Enabled Technologies

Abstract

1. Introduction

1.1. Research Objectives

1.2. Historical Evolution of Smart Helmet Technology

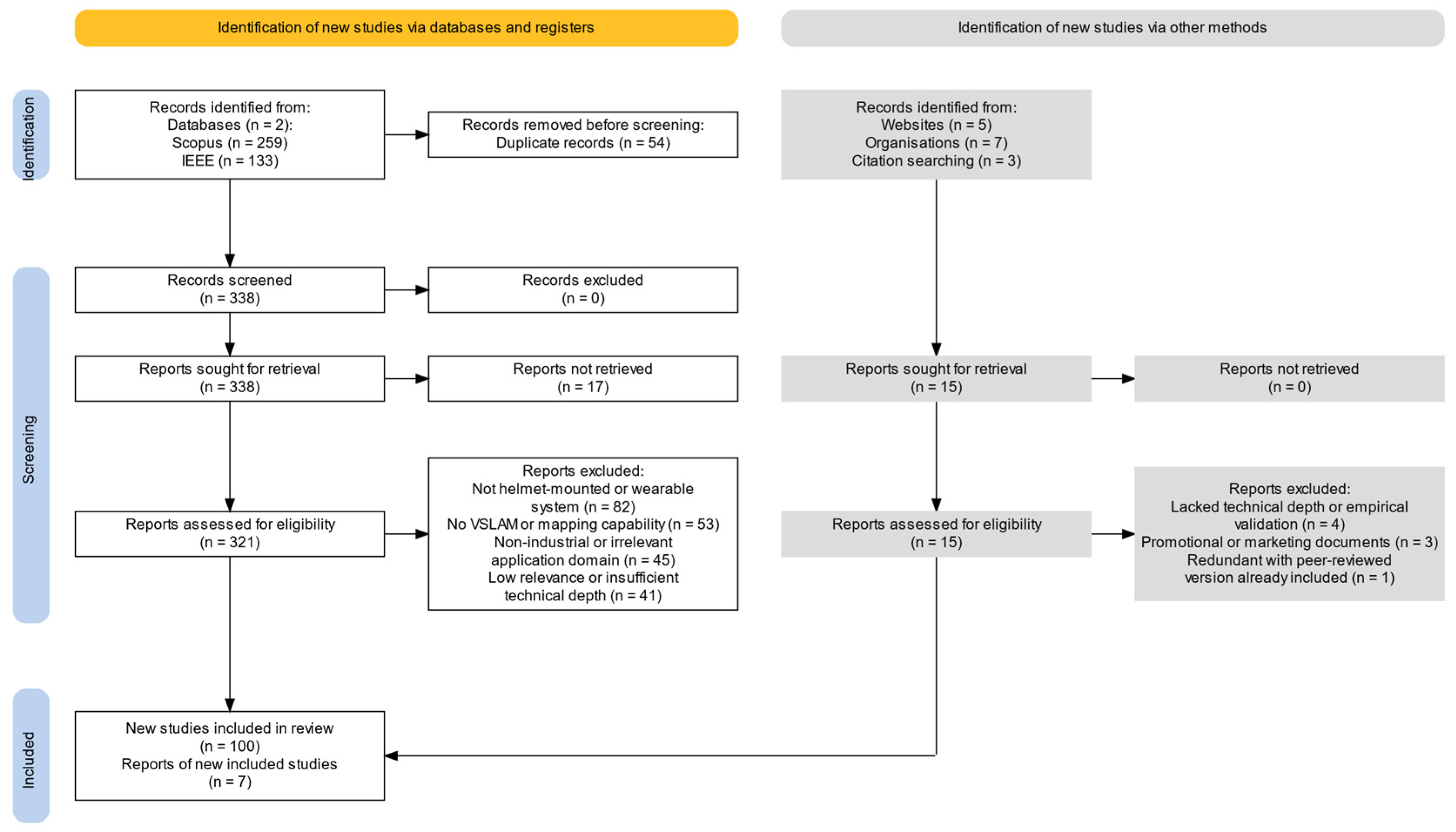

2. Methodology

2.1. Search Strategy and Paper Selection Criteria

- I. “smart helmet” OR “wearable AR helmet”.

- II. “helmet camera SLAM” OR “visual SLAM inspection”.

- III. “wearable vision system” OR “industrial augmented reality”.

- Not helmet-mounted or wearable: excluded if the focus was not on helmet-mounted or wearable systems.

- No VSLAM or mapping capability: excluded if the work did not address visual SLAM or the mapping functionalities central to this review.

- Non-industrial or irrelevant application domain: excluded if the work focused solely on non-industrial domains (e.g., gaming and recreational VR/AR), unless it introduced transferable technologies.

- Insufficient technical or methodological depth: excluded if the work lacked empirical results, technical reproducibility, or an in-depth methodological description.

- Promotional or marketing focus: excluded if the material was primarily promotional, lacked objectivity, or was produced for marketing purposes without substantive technical content.

- Lack of empirical or practical evidence: excluded if the source did not provide real-world deployment evidence, technical results, or data relevant to inspection/maintenance use cases.

- Redundant with peer-reviewed publication: excluded if the same information was available in a peer-reviewed publication.
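To make the screening procedure concrete, the exclusion criteria above can be expressed as an ordered list of predicates, where a source is excluded by the first criterion it fails. The sketch below is illustrative only: the `Record` fields, the `EXCLUSION_CRITERIA` list, and the `screen` function are hypothetical names, and nothing here implies that the review automated its screening; in practice each boolean attribute would be a reviewer's judgment.

```python
from dataclasses import dataclass


@dataclass
class Record:
    """Hypothetical screening attributes for one candidate source."""
    title: str
    helmet_or_wearable: bool
    vslam_or_mapping: bool
    industrial_or_transferable: bool
    technical_depth: bool
    promotional: bool
    empirical_evidence: bool
    peer_reviewed_version_exists: bool


# Ordered (reason, predicate) pairs; a predicate returns True when the record is excluded.
EXCLUSION_CRITERIA = [
    ("not helmet-mounted or wearable", lambda r: not r.helmet_or_wearable),
    ("no VSLAM or mapping capability", lambda r: not r.vslam_or_mapping),
    ("non-industrial or irrelevant domain", lambda r: not r.industrial_or_transferable),
    ("insufficient technical or methodological depth", lambda r: not r.technical_depth),
    ("promotional or marketing focus", lambda r: r.promotional),
    ("lack of empirical or practical evidence", lambda r: not r.empirical_evidence),
    ("redundant with peer-reviewed publication", lambda r: r.peer_reviewed_version_exists),
]


def screen(record):
    """Return None if the record passes every criterion, else the first exclusion reason."""
    for reason, excluded in EXCLUSION_CRITERIA:
        if excluded(record):
            return reason
    return None


study = Record("Helmet VIO field trial", True, True, True, True, False, True, False)
brochure = Record("Vendor product sheet", True, True, True, False, True, False, False)
print(screen(study), "|", screen(brochure))
```

Keeping the criteria as data rather than nested conditionals makes the order of evaluation explicit and lets each exclusion be logged with its reason, which is what a PRISMA-style flow diagram needs.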

2.2. Classification Framework for Technologies

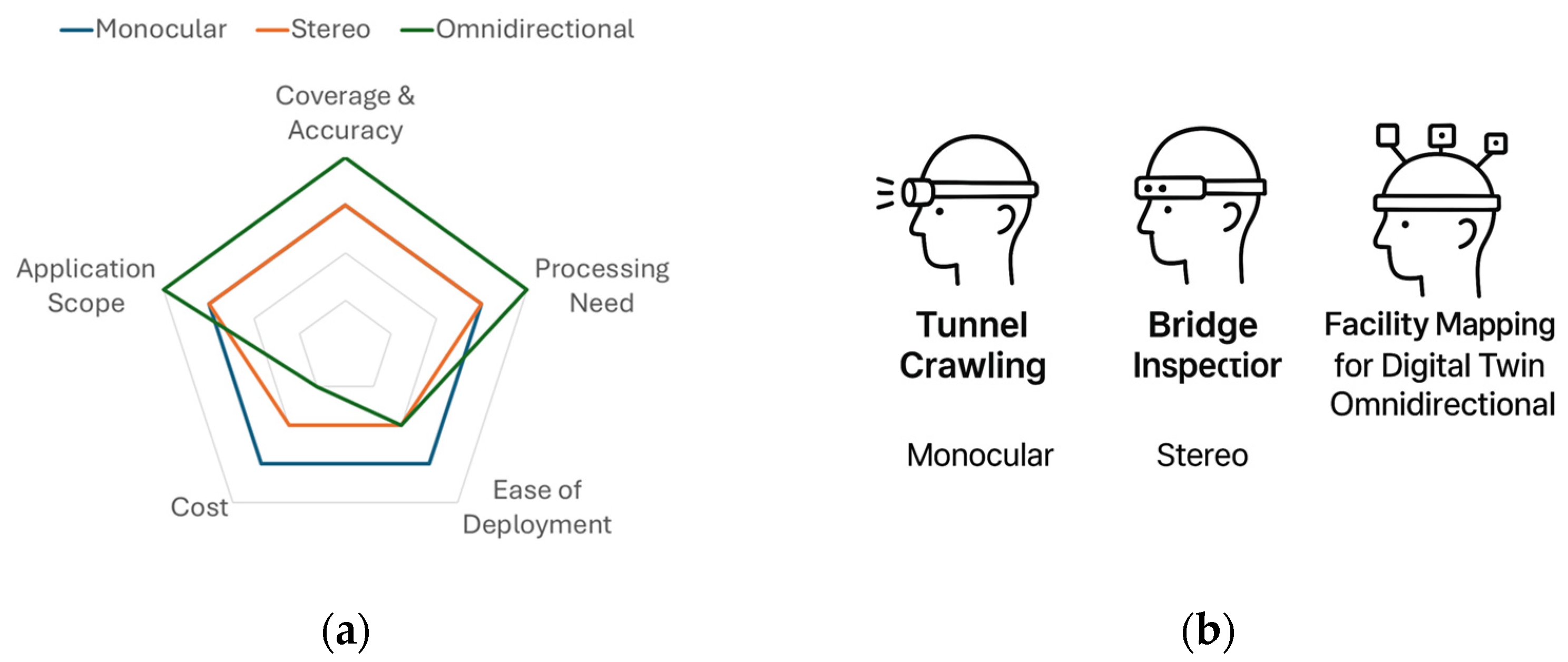

3. Camera System Typologies

3.1. Monocular Camera Systems

3.2. Stereo Vision Systems

3.3. Omnidirectional (360°) Camera Systems

3.4. Comparative Analysis

4. VSLAM Technologies and Implementations

4.1. Core VSLAM Algorithm Categories

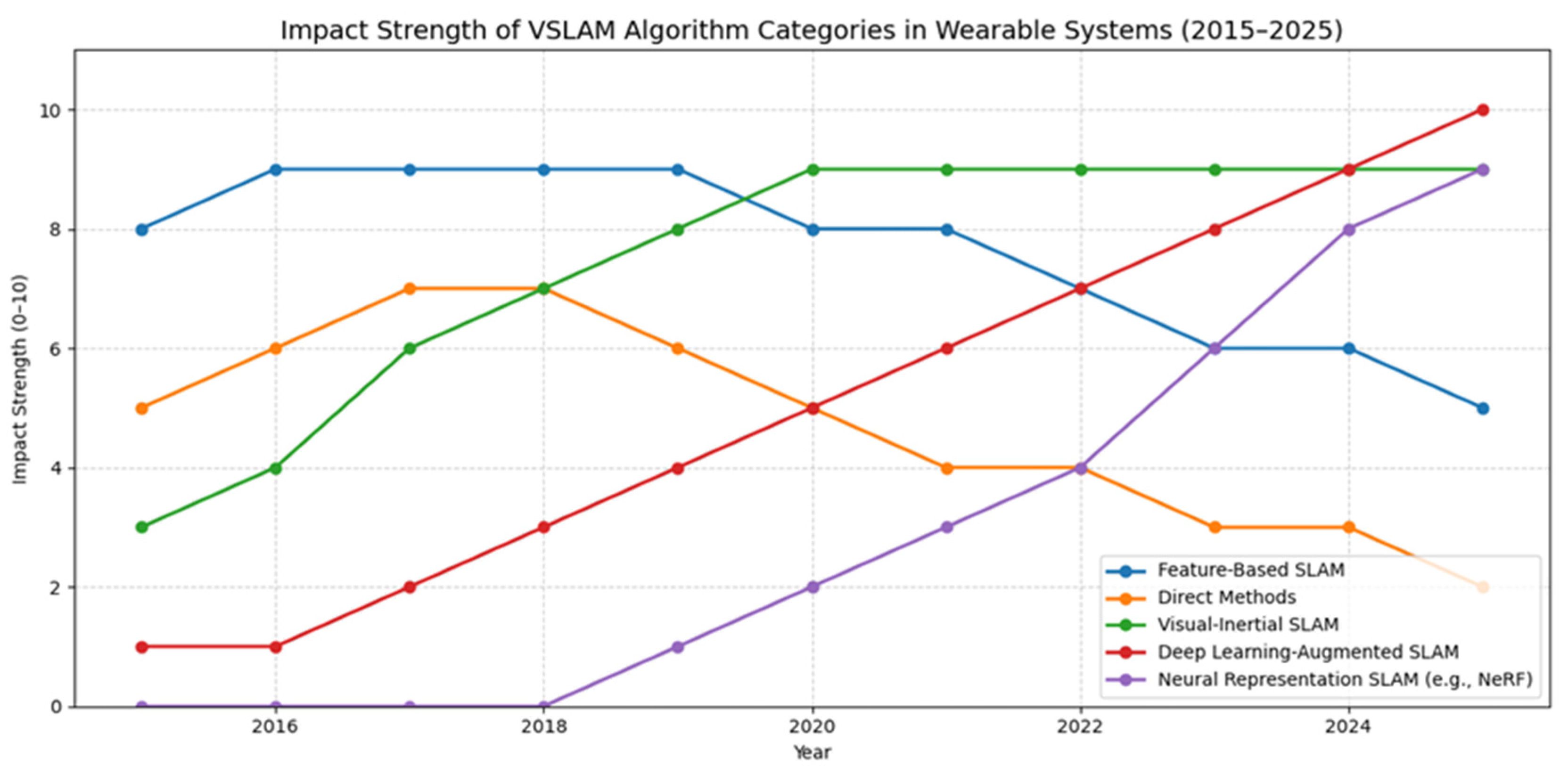

4.2. Algorithm Impact and Trends (2015–2025)

4.3. Sensor Fusion Approaches for Enhanced Robustness

- Visual–inertial fusion (camera + IMU): Widely regarded as the standard approach for head-worn tracking. Combining visual data with inertial measurements substantially reduces positional drift, improves robustness to rapid head movements, and maintains tracking through brief visual occlusions. However, it requires accurate camera–IMU calibration and tight time synchronization between the sensors; errors in either directly degrade pose accuracy.

- Visual–LiDAR fusion: LiDAR sensors provide accurate geometric structure even in poor lighting, greatly improving spatial accuracy. Although valuable for high-fidelity maps (e.g., digital twins), LiDAR's cost, weight, and energy consumption currently limit it mainly to specialized industrial helmets or backpack-mounted configurations.

- Visual–GNSS fusion: Integrating GNSS (GPS/RTK) positioning georeferences outdoor inspections and bounds drift over large areas. However, GNSS signals are degraded or unavailable indoors and in covered structures, so this approach needs supplemental positioning methods in such settings.

- Additional sensors (thermal camera, barometer, and magnetometer): Thermal imaging supports inspection in low-visibility conditions (smoke and darkness), while barometers provide relative altitude (e.g., floor level) and magnetometers provide heading. These benefits are niche, however, and practical deployments must account for interference such as magnetic distortion near steel structures and the low texture of thermal images, which hampers feature tracking.
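The drift-reduction argument for visual–inertial fusion can be illustrated with a deliberately simplified, loosely coupled Kalman filter. The sketch below assumes a 1-D motion model with state [position, velocity], propagates it with biased accelerometer samples, and corrects it with periodic position fixes standing in for VSLAM output; the function names, bias, and noise values are hypothetical. Full visual–inertial systems such as ORB-SLAM3 instead use tightly coupled optimization over 3-D pose, so this is a conceptual illustration, not a helmet-grade estimator.

```python
def kf_predict(x, P, accel, dt, q):
    """Propagate state x = [position, velocity] and covariance P with one IMU sample.

    Uses F = [[1, dt], [0, 1]] and a piecewise-constant acceleration noise model
    with per-step variance q, i.e. Q = q * [[dt^4/4, dt^3/2], [dt^3/2, dt^2]].
    """
    p, v = x
    x_new = [p + v * dt + 0.5 * accel * dt * dt, v + accel * dt]
    a, b, d = P[0][0], P[0][1], P[1][1]
    p00 = a + 2.0 * dt * b + dt * dt * d + q * dt ** 4 / 4.0
    p01 = b + dt * d + q * dt ** 3 / 2.0
    p11 = d + q * dt * dt
    return x_new, [[p00, p01], [p01, p11]]


def kf_update(x, P, z, r):
    """Correct the state with a position measurement z (variance r), H = [1, 0]."""
    s = P[0][0] + r                      # innovation variance
    k0, k1 = P[0][0] / s, P[0][1] / s    # Kalman gain
    y = z - x[0]                         # innovation
    x_new = [x[0] + k0 * y, x[1] + k1 * y]
    p00 = (1.0 - k0) * P[0][0]
    p01 = (1.0 - k0) * P[0][1]
    p11 = P[1][1] - k1 * P[0][1]
    return x_new, [[p00, p01], [p01, p11]]


def simulate(duration=10.0, dt=0.01, accel_bias=0.05, vision_every=10):
    """Return (IMU-only position error, fused position error) at the end of a walk."""
    true_p = true_v = 0.0
    dr_p = dr_v = 0.0                    # IMU-only dead reckoning
    x, P = [0.0, 0.0], [[0.01, 0.0], [0.0, 0.01]]
    steps = int(round(duration / dt))
    for k in range(steps):
        true_a = 0.2 if k < steps // 2 else -0.2    # speed up, then slow down
        meas_a = true_a + accel_bias                 # biased accelerometer reading
        true_p += true_v * dt + 0.5 * true_a * dt * dt
        true_v += true_a * dt
        dr_p += dr_v * dt + 0.5 * meas_a * dt * dt
        dr_v += meas_a * dt
        x, P = kf_predict(x, P, meas_a, dt, q=0.1)
        if (k + 1) % vision_every == 0:              # 10 Hz visual position fix
            z = true_p + (0.02 if k % 20 < 10 else -0.02)  # small fix error
            x, P = kf_update(x, P, z, r=0.02 ** 2)
    return abs(dr_p - true_p), abs(x[0] - true_p)


imu_only_err, fused_err = simulate()
print(f"IMU-only drift: {imu_only_err:.2f} m, fused error: {fused_err:.3f} m")
```

With the assumed 0.05 m/s² bias, the IMU-only estimate drifts by about 2.5 m over 10 s (0.5 × 0.05 × 10²), growing quadratically with time, whereas the fused error stays bounded because each visual fix pulls the estimate back.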

4.4. Processing Architectures and Computational Strategies

- Edge computing: On-device processing provides low-latency operation that does not depend on network connectivity, which is critical for safety-sensitive tasks. Devices such as Microsoft HoloLens combine CPUs, GPUs, and custom ASICs optimized for energy efficiency. However, battery life, heat dissipation, and device bulk remain significant engineering challenges.

- Cloud or remote processing: Offloading computation to cloud infrastructure enables computationally demanding algorithms (deep learning, photogrammetry). Systems such as the Kiber Helmet demonstrate practical use in remote-expert scenarios. However, cloud reliance introduces network latency, data-security concerns, and dependence on reliable connectivity.

- Hybrid architectures (edge–cloud synergy): Combining local edge processing with remote cloud computation balances the two: latency-critical tasks such as pose tracking run on-device, while complex or data-intensive analysis is offloaded. This requires careful task-partitioning strategies and robust synchronization mechanisms to cope with real-world operational constraints.

- Hardware acceleration and optimization: GPUs, DSPs, and custom processors substantially accelerate real-time VSLAM workloads, lowering latency and power consumption. However, integrating specialized hardware adds software-development complexity, cost, and device-integration challenges.
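The partitioning decision in a hybrid architecture can be sketched as a per-task latency comparison. The model below is a simplification under stated assumptions: compute time is demand divided by processor throughput, offloading adds a round-trip time plus upload time, and a task stays on the helmet whenever the edge alone meets its deadline. The `Task` fields, task names, cycle counts, and link parameters are hypothetical illustration values, not measurements from any cited system.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    gcycles: float     # compute demand, in billions of CPU cycles (hypothetical)
    payload_mb: float  # data uploaded if the task is offloaded, in megabytes
    deadline_s: float  # latency budget for a useful result, in seconds


def edge_latency(task, edge_ghz):
    """Time to run the task on the helmet processor."""
    return task.gcycles / edge_ghz


def cloud_latency(task, cloud_ghz, uplink_mbps, rtt_s):
    """Round trip + upload (MB converted to Mbit) + remote compute time."""
    upload_s = task.payload_mb * 8.0 / uplink_mbps
    return rtt_s + upload_s + task.gcycles / cloud_ghz


def place(task, edge_ghz=2.0, cloud_ghz=20.0, uplink_mbps=20.0, rtt_s=0.05):
    """Return 'edge' or 'cloud' for a task under the simple latency model."""
    t_edge = edge_latency(task, edge_ghz)
    if t_edge <= task.deadline_s:
        # Keep work local whenever possible: no network dependency or data exposure.
        return "edge"
    t_cloud = cloud_latency(task, cloud_ghz, uplink_mbps, rtt_s)
    # The edge misses its deadline: offload only if the remote path is faster.
    return "cloud" if t_cloud < t_edge else "edge"


tracking = Task("pose tracking", gcycles=0.02, payload_mb=0.1, deadline_s=1 / 30)
meshing = Task("dense meshing", gcycles=40.0, payload_mb=5.0, deadline_s=10.0)
for t in (tracking, meshing):
    print(t.name, "->", place(t))
```

Under these assumed numbers, pose tracking (0.01 s on the edge against a 33 ms budget) stays on the helmet, while dense meshing (20 s locally versus about 4 s remotely) is offloaded. A real partitioner would also weigh energy, privacy, and link reliability, which this model ignores.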

5. Industrial Implementations

- Spatial awareness: helping workers understand their position in complex or GPS-denied environments.

- Augmented visualization: overlaying relevant data in the user's field of view.

- Automation: providing guided procedures and live alerts, or enabling remote expert support.
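Augmented visualization depends on the VSLAM pose: to draw an annotation at a known asset location, the system transforms that 3-D point into the camera frame and projects it with the camera intrinsics. The sketch below assumes an ideal pinhole camera without lens distortion and a world-to-camera pose (R, t) supplied by the tracker; the function name `project_anchor` and the intrinsic values are hypothetical. Optical see-through displays would additionally need a camera-to-eye calibration, which is omitted here.

```python
def project_anchor(p_world, R, t, fx, fy, cx, cy, width, height):
    """Project a world-frame 3-D point to pixel coordinates, or return None.

    R (3x3 nested lists) and t (length 3) map world to camera coordinates:
    p_cam = R @ p_world + t, with the camera looking along +Z
    (x to the right, y downward, as in the common computer-vision convention).
    """
    p_cam = [sum(R[i][j] * p_world[j] for j in range(3)) + t[i] for i in range(3)]
    x, y, z = p_cam
    if z <= 0.0:
        return None                      # behind the camera: nothing to draw
    u = fx * x / z + cx
    v = fy * y / z + cy
    if not (0.0 <= u < width and 0.0 <= v < height):
        return None                      # outside the field of view
    return u, v


IDENTITY = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
K = dict(fx=500.0, fy=500.0, cx=320.0, cy=240.0, width=640, height=480)

# A valve label 2 m ahead of the camera and 0.5 m to its right.
print(project_anchor([0.5, 0.0, 2.0], IDENTITY, [0.0, 0.0, 0.0], **K))  # (445.0, 240.0)
```

Because the overlay position is recomputed from the current pose every frame, any VSLAM drift appears directly as annotation misregistration, which is one reason the fusion approaches discussed in Section 4.3 matter for AR inspection.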

6. Challenges and Limitations

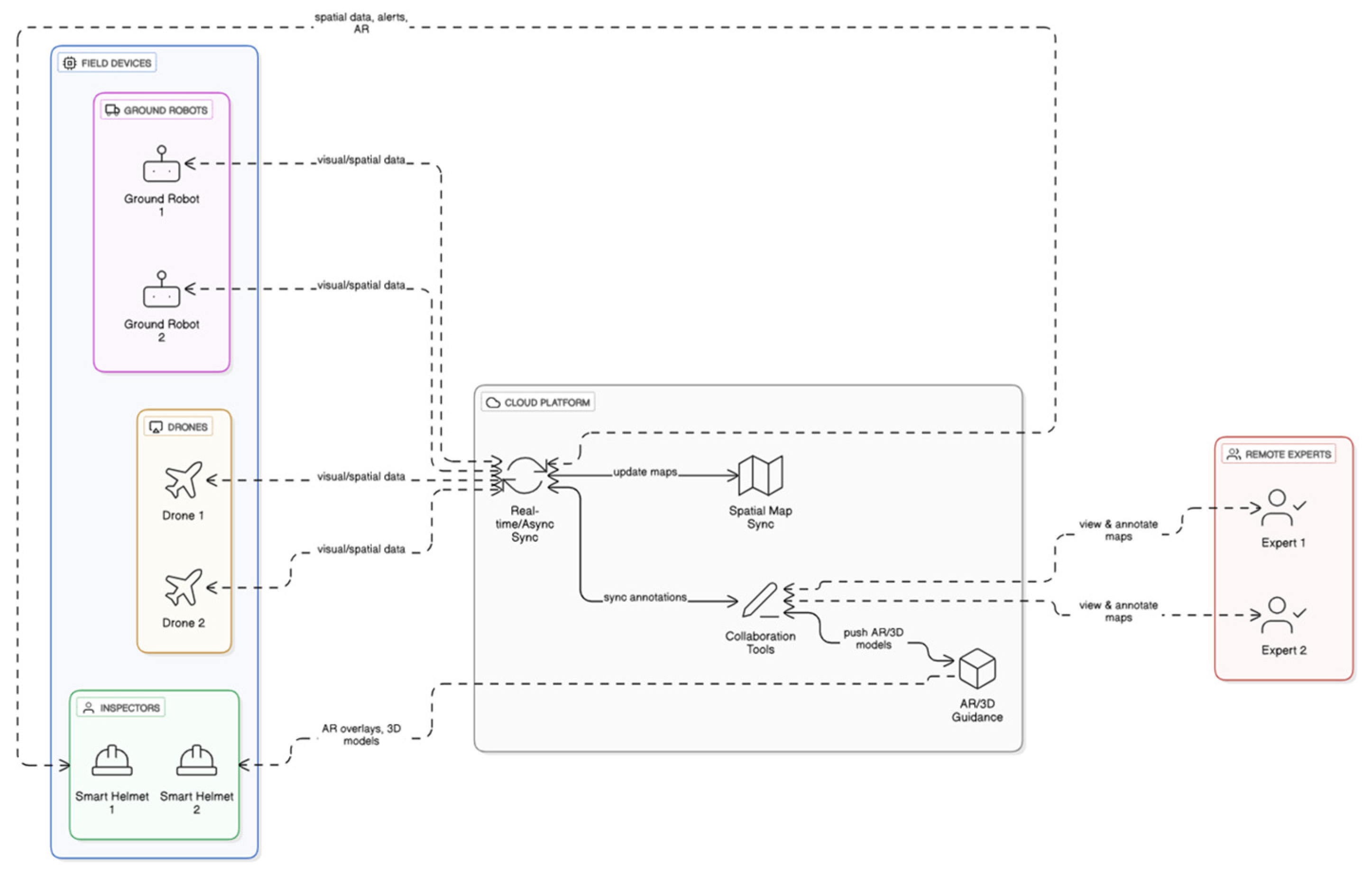

7. Collaborative and Multi-Agent Systems

8. Conclusions and Recommendations

8.1. Research Question Synthesis

8.2. Strategic Implementation Guidance and Recommendations

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Torres-Barriuso, J.; Lasarte, N.; Piñero, I.; Roji, E.; Elguezabal, P. Digitalization of the Workflow for Drone-Assisted Inspection and Automated Assessment of Industrial Buildings for Effective Maintenance Management. Buildings 2025, 15, 242.

- Cannella, F.; Di Francia, G. PV Large Utility Visual Inspection via Unmanned Vehicles. In Proceedings of the 2024 20th IEEE/ASME International Conference on Mechatronic and Embedded Systems and Applications (MESA), Genova, Italy, 2 September 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 1–8.

- Halder, S.; Afsari, K. Robots in Inspection and Monitoring of Buildings and Infrastructure: A Systematic Review. Appl. Sci. 2023, 13, 2304.

- Lee, P.; Kim, H.; Zitouni, M.S.; Khandoker, A.; Jelinek, H.F.; Hadjileontiadis, L.; Lee, U.; Jeong, Y. Trends in Smart Helmets With Multimodal Sensing for Health and Safety: Scoping Review. JMIR mHealth uHealth 2022, 10, e40797.

- Nnaji, C.; Awolusi, I.; Park, J.; Albert, A. Wearable Sensing Devices: Towards the Development of a Personalized System for Construction Safety and Health Risk Mitigation. Sensors 2021, 21, 682.

- Rane, N.; Choudhary, S.; Rane, J. Leading-Edge Wearable Technologies in Enhancing Personalized Safety on Construction Sites: A Review. SSRN J. 2023, 4641480.

- Babalola, A.; Manu, P.; Cheung, C.; Yunusa-Kaltungo, A.; Bartolo, P. Applications of Immersive Technologies for Occupational Safety and Health Training and Education: A Systematic Review. Saf. Sci. 2023, 166, 106214.

- Obeidat, M.S.; Dweiri, H.Q.; Smadi, H.J. Unveiling Workplace Safety and Health Empowerment: Unraveling the Key Elements Influencing Occupational Injuries. J. Saf. Res. 2024, 91, 126–135.

- Bogdan, S.; Alisher, U.; Togmanov, M. Development of System Using Computer Vision to Detect Personal Protective Equipment Violations. In Proceedings of the 2024 5th International Conference on Communications, Information, Electronic and Energy Systems (CIEES), Veliko Tarnovo, Bulgaria, 20 November 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 1–4.

- Thomas, L.; Lajitha, C.S.; Mathew, J.T.; Jithin, S.; Mohan, A.; Kumar, K.S. Design and Development of Industrial Vision Sensor (IVIS) for Next Generation Industrial Applications. In Proceedings of the 2022 IEEE 19th India Council International Conference (INDICON), Kochi, India, 24 November 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 1–6.

- Rizia, M.; Reyes-Munoz, J.A.; Ortega, A.G.; Choudhuri, A.; Flores-Abad, A. Autonomous Aerial Flight Path Inspection Using Advanced Manufacturing Techniques. Robotica 2022, 40, 2128–2151.

- Sarieva, M.; Yao, L.; Sugawara, K.; Egami, T. Synchronous Position Control of Robotics System for Infrastructure Inspection Moving on Rope Tether. J. Rehabil. Med. 2019, 31, 317–328.

- Wang, Z.; Li, M.; Da, H. Research on the Application of AR in the Safety Inspection of Opening and Closing Stations. In Proceedings of the 2024 7th International Conference on Computer Information Science and Application Technology (CISAT), Hangzhou, China, 12 July 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 1193–1197.

- Kong, J.; Luo, X.; Lv, Y.; Xiao, J.; Wang, Y. Research on Intelligent Inspection Technology Based on Augmented Reality. In Proceedings of the 2024 Boao New Power System International Forum—Power System and New Energy Technology Innovation Forum (NPSIF), Qionghai, China, 8 December 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 688–695.

- Kanwal, A.; Anjum, Z.; Muhammad, W. Visual Simultaneous Localization and Mapping (vSLAM) of Driverless Car in GPS-Denied Areas. Eng. Proc. 2021, 12, 49.

- Mishra, B.; Griffin, R.; Sevil, H.E. Modelling Software Architecture for Visual Simultaneous Localization and Mapping. Automation 2021, 2, 48–61.

- Bala, J.A.; Adeshina, S.A.; Aibinu, A.M. Advances in Visual Simultaneous Localisation and Mapping Techniques for Autonomous Vehicles: A Review. Sensors 2022, 22, 8943.

- Chen, Z. Research on Localization and Mapping Algorithm of Industrial Inspection Robot Based on ORB-SLAM2. In Proceedings of the 2023 International Conference on Electronics and Devices, Computational Science (ICEDCS), Marseille, France, 22 September 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 310–315.

- Zhang, X.; Zhang, Y.; Zhang, H.; Cao, C. Research on Visual SLAM Technology of Industrial Inspection Robot Based on RGB-D. In Proceedings of the 2023 3rd International Symposium on Computer Technology and Information Science (ISCTIS), Chengdu, China, 7 July 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 51–55.

- Shahraki, M.; Elamin, A.; El-Rabbany, A. Event-Based Visual Simultaneous Localization and Mapping (EVSLAM) Techniques: State of the Art and Future Directions. J. Sens. Actuator Netw. 2025, 14, 7.

- Chen, W.; Shang, G.; Ji, A.; Zhou, C.; Wang, X.; Xu, C.; Li, Z.; Hu, K. An Overview on Visual SLAM: From Tradition to Semantic. Remote Sens. 2022, 14, 3010.

- Umezu, N.; Koizumi, S.; Nakagawa, K.; Nishida, S. Potential of Low-Cost Light Detection and Ranging (LiDAR) Sensors: Case Studies for Enhancing Visitor Experience at a Science Museum. Electronics 2023, 12, 3351.

- Martin, T.; Starner, T.; Siewiorek, D.; Kunze, K.; Laerhoven, K.V. 25 Years of ISWC: Time Flies When You’re Having Fun. IEEE Pervasive Comput. 2021, 20, 72–78.

- Mann, S. Phenomenal Augmented Reality: Advancing Technology for the Future of Humanity. IEEE Consum. Electron. Mag. 2015, 4, 92–97.

- Thomas, B.H. Have We Achieved the Ultimate Wearable Computer? In Proceedings of the 2012 16th International Symposium on Wearable Computers, Newcastle, UK, 18–22 June 2012; IEEE: Piscataway, NJ, USA, 2012; pp. 104–107.

- Hartwell, P.G.; Brug, J.A. Smart Helmet. U.S. Patent 6,798,392, 28 September 2004.

- García, A.F.; Biain, X.O.; Lingos, K.; Konstantoudakis, K.; Hernández, A.B.; Iragorri, I.A.; Zarpalas, D. Smart Helmet: Combining Sensors, AI, Augmented Reality, and Personal Protection to Enhance First Responders’ Situational Awareness. IT Prof. 2023, 25, 45–53.

- Foster, R.R.; Gallinat, G.D.; Nedinsky, R.A. Completely Integrated Helmet Camera. U.S. Patent 6,819,354, 16 November 2004.

- Trujillo, J.-C.; Munguia, R.; Urzua, S.; Guerra, E.; Grau, A. Monocular Visual SLAM Based on a Cooperative UAV–Target System. Sensors 2020, 20, 3531.

- Ometov, A.; Shubina, V.; Klus, L.; Skibińska, J.; Saafi, S.; Pascacio, P.; Flueratoru, L.; Gaibor, D.Q.; Chukhno, N.; Chukhno, O.; et al. A Survey on Wearable Technology: History, State-of-the-Art and Current Challenges. Comput. Netw. 2021, 193, 108074.

- Mayol-Cuevas, W.W.; Tordoff, B.J.; Murray, D.W. On the Choice and Placement of Wearable Vision Sensors. IEEE Trans. Syst. Man Cybern.-Part A Syst. Hum. 2009, 39, 414–425.

- Gutiérrez-Gómez, D.; Puig, L.; Guerrero, J.J. Full Scaled 3D Visual Odometry from a Single Wearable Omnidirectional Camera. In Proceedings of the 2012 IEEE/RSJ International Conference on Intelligent Robots and Systems, Vilamoura-Algarve, Portugal, 7 October 2012; pp. 4276–4281.

- Mohd Rasli, M.K.A.; Madzhi, N.K.; Johari, J. Smart Helmet with Sensors for Accident Prevention. In Proceedings of the 2013 International Conference on Electrical, Electronics and System Engineering (ICEESE), Kuala Lumpur, Malaysia, 4 December 2013; pp. 21–26.

- Zhang, Y.; Wu, Y.; Tong, K.; Chen, H.; Yuan, Y. Review of Visual Simultaneous Localization and Mapping Based on Deep Learning. Remote Sens. 2023, 15, 2740.

- Asgari, Z.; Rahimian, F.P. Advanced Virtual Reality Applications and Intelligent Agents for Construction Process Optimisation and Defect Prevention. Procedia Eng. 2017, 196, 1130–1137.

- Campos, C.; Elvira, R.; Rodriguez, J.J.G.; Montiel, J.M.; Tardos, J.D. ORB-SLAM3: An Accurate Open-Source Library for Visual, Visual–Inertial, and Multimap SLAM. IEEE Trans. Robot. 2021, 37, 1874–1890.

- Mark, F. Fiducial Marker Patterns, Their Automatic Detection In Images, And Applications Thereof. U.S. Patent 20200090338A1, 23 February 2021.

- Écorchard, G.; Košnar, K.; Přeučil, L. Wearable Camera-Based Human Absolute Localization in Large Warehouses. In Proceedings of the Twelfth International Conference on Machine Vision (ICMV 2019), Amsterdam, The Netherlands, 31 January 2020; Osten, W., Nikolaev, D.P., Eds.; SPIE: Cergy-Pontoise, France, 2020; p. 96.

- Ballor, J.P.; McClain, O.L.; Mellor, M.A.; Cattaneo, A.; Harden, T.A.; Shelton, P.; Martinez, E.; Narushof, B.; Moreu, F.; Mascareñas, D.D.L. Augmented Reality for Next Generation Infrastructure Inspections. In Model Validation and Uncertainty Quantification, Proceedings of the Conference Society for Experimental Mechanics Series, Florida, FL, USA, 12–15 March 2018; Springer International Publishing: Cham, Switzerland, 2019; pp. 185–192. ISBN 978-3-319-74792-7.

- Choi, Y.; Kim, Y. Applications of Smart Helmet in Applied Sciences: A Systematic Review. Appl. Sci. 2021, 11, 5039.

- RINA Innovative Solutions for Remote Inspection: RINA and Kiber Team Up. Available online: https://www.rina.org/en/media/press/2019/11/12/vr-media (accessed on 1 August 2025).

- VRMedia. 5 Reasons Why You Should Choose Augmented Reality Solutions; VRMedia s.r.l.: Pisa, Italy, 2020.

- Xu, Z.; Lin, Z.; Liu, H.; Ren, Q.; Gao, A. Augmented Reality Enabled Telepresence Framework for Intuitive Robotic Teleoperation. In Proceedings of the 2023 IEEE International Conference on Robotics and Biomimetics (ROBIO), Koh Samui, Thailand, 4 December 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 1–6.

- Barz, M.; Daiber, F.; Bulling, A. Prediction of Gaze Estimation Error for Error-Aware Gaze-Based Interfaces. In Proceedings of the Ninth Biennial ACM Symposium on Eye Tracking Research & Applications, Charleston, SC, USA, 14 March 2016; ACM: New York, NY, USA, 2016; pp. 275–278.

- Kumar, S.; Micheloni, C.; Piciarelli, C.; Foresti, G.L. Stereo Localization Based on Network’s Uncalibrated Camera Pairs. In Proceedings of the 2009 Sixth IEEE International Conference on Advanced Video and Signal Based Surveillance, Genova, Italy, 2–4 September 2009; IEEE: Piscataway, NJ, USA, 2009; pp. 502–507.

- Zhang, T.; Zhang, L.; Chen, Y.; Zhou, Y. CVIDS: A Collaborative Localization and Dense Mapping Framework for Multi-Agent Based Visual-Inertial SLAM. IEEE Trans. Image Process. 2022, 31, 6562–6576.

- Mante, N.; Weiland, J.D. Visually Impaired Users Can Locate and Grasp Objects Under the Guidance of Computer Vision and Non-Visual Feedback. In Proceedings of the 2018 40th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Honolulu, HI, USA, 18–21 July 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 1–4.

- Elmadjian, C.; Shukla, P.; Tula, A.D.; Morimoto, C.H. 3D Gaze Estimation in the Scene Volume with a Head-Mounted Eye Tracker. In Proceedings of the Workshop on Communication by Gaze Interaction, Warsaw, Poland, 15 June 2018; ACM: New York, NY, USA, 2018; pp. 1–9.

- Hasegawa, R.; Sakaue, F.; Sato, J. 3D Human Body Reconstruction from Head-Mounted Omnidirectional Camera and Light Sources. In Proceedings of the 18th International Joint Conference on Computer Vision, Imaging and Computer Graphics Theory and Applications, Nagoya, Japan, 1 January 2023; SCITEPRESS—Science and Technology Publications: Lisbon, Portugal, 2023; pp. 982–989.

- Yang, Y.; Yuan, S.; Yang, J.; Nguyen, T.H.; Cao, M.; Nguyen, T.-M.; Wang, H.; Xie, L. AV-FDTI: Audio-Visual Fusion for Drone Threat Identification. J. Autom. Intell. 2024, 3, 144–151.

- Jiang, L.; Xiong, Y.; Wang, Q.; Chen, T.; Wu, W.; Zhou, Z. SADNet: Generating Immersive Virtual Reality Avatars by Real-time Monocular Pose Estimation. Comput. Animat. Virtual Worlds 2024, 35, e2233.

- Carozza, L.; Bosche, F.; Abdel-Wahab, M. [Poster] Visual-Inertial 6-DOF Localization for a Wearable Immersive VR/AR System. In Proceedings of the 2014 IEEE International Symposium on Mixed and Augmented Reality (ISMAR), Munich, Germany, 10–12 September 2014; IEEE: Piscataway, NJ, USA, 2014; pp. 257–258.

- Piao, J.-C.; Kim, S.-D. Adaptive Monocular Visual-Inertial SLAM for Real-Time Augmented Reality Applications in Mobile Devices. Sensors 2017, 17, 2567.

- Cutolo, F.; Cattari, N.; Carbone, M.; D’Amato, R.; Ferrari, V. Device-Agnostic Augmented Reality Rendering Pipeline for AR in Medicine. In Proceedings of the 2021 IEEE International Symposium on Mixed and Augmented Reality Adjunct (ISMAR-Adjunct), Bari, Italy, 4–8 October 2021; pp. 340–345.

- Javed, Z.; Kim, G.-W. Publisher Correction to: PanoVILD: A Challenging Panoramic Vision, Inertial and LiDAR Dataset for Simultaneous Localization and Mapping. J. Supercomput. 2022, 78, 13864.

- Lai, T. A Review on Visual-SLAM: Advancements from Geometric Modelling to Learning-Based Semantic Scene Understanding Using Multi-Modal Sensor Fusion. Sensors 2022, 22, 7265.

- Berrio, J.S.; Shan, M.; Worrall, S.; Nebot, E. Camera-LIDAR Integration: Probabilistic Sensor Fusion for Semantic Mapping. IEEE Trans. Intell. Transp. Syst. 2022, 23, 7637–7652.

- Huang, P.; Zeng, L.; Chen, X.; Luo, K.; Zhou, Z.; Yu, S. Edge Robotics: Edge-Computing-Accelerated Multirobot Simultaneous Localization and Mapping. IEEE Internet Things J. 2022, 9, 14087–14102.

- Wu, X.; Li, Y.; Long, J.; Zhang, S.; Wan, S.; Mei, S. A Remote-Vision-Based Safety Helmet and Harness Monitoring System Based on Attribute Knowledge Modeling. Remote Sens. 2023, 15, 347.

- Jiang, J.; Yang, Z.; Wu, C.; Guo, Y.; Yang, M.; Feng, W. A Compatible Detector Based on Improved YOLOv5 for Hydropower Device Detection in AR Inspection System. Expert Syst. Appl. 2023, 225, 120065.

- Zang, Q.; Zhang, K.; Wang, L.; Wu, L. An Adaptive ORB-SLAM3 System for Outdoor Dynamic Environments. Sensors 2023, 23, 1359.

- Sahili, A.R.; Hassan, S.; Sakhrieh, S.M.; Mounsef, J.; Maalouf, N.; Arain, B.; Taha, T. A Survey of Visual SLAM Methods. IEEE Access 2023, 11, 139643–139677.

- Zhang, Q.; Li, C. Semantic SLAM for Mobile Robots in Dynamic Environments Based on Visual Camera Sensors. Meas. Sci. Technol. 2023, 34, 085202.

- Zhao, Y.; Vela, P.A. Good Feature Matching: Toward Accurate, Robust VO/VSLAM With Low Latency. IEEE Trans. Robot. 2020, 36, 657–675.

- Servières, M.; Renaudin, V.; Dupuis, A.; Antigny, N. Visual and Visual-Inertial SLAM: State of the Art, Classification, and Experimental Benchmarking. J. Sens. 2021, 2021, 2054828.

- Zhao, Z.; Wu, C.; Kong, X.; Li, Q.; Guo, Z.; Lv, Z.; Du, X. Light-SLAM: A Robust Deep-Learning Visual SLAM System Based on LightGlue Under Challenging Lighting Conditions. IEEE Trans. Intell. Transp. Syst. 2025, 26, 9918–9931.

- Beghdadi, A.; Mallem, M. A Comprehensive Overview of Dynamic Visual SLAM and Deep Learning: Concepts, Methods and Challenges. Mach. Vis. Appl. 2022, 33, 54.

- Jacobs, J.V.; Hettinger, L.J.; Huang, Y.-H.; Jeffries, S.; Lesch, M.F.; Simmons, L.A.; Verma, S.K.; Willetts, J.L. Employee Acceptance of Wearable Technology in the Workplace. Appl. Ergon. 2019, 78, 148–156.

- Hyzy, M.; Bond, R.; Mulvenna, M.; Bai, L.; Dix, A.; Leigh, S.; Hunt, S. System Usability Scale Benchmarking for Digital Health Apps: Meta-Analysis. JMIR mHealth uHealth 2022, 10, e37290.

- Li, J.; Wu, W.; Yang, B.; Zou, X.; Yang, Y.; Zhao, X.; Dong, Z. WHU-Helmet: A Helmet-Based Multisensor SLAM Dataset for the Evaluation of Real-Time 3-D Mapping in Large-Scale GNSS-Denied Environments. IEEE Trans. Geosci. Remote Sens. 2023, 61, 1–16.

- Herrera-Granda, E.P.; Torres-Cantero, J.C.; Peluffo-Ordoñez, D.H. Monocular Visual SLAM, Visual Odometry, and Structure from Motion Methods Applied to 3D Reconstruction: A Comprehensive Survey. Heliyon 2023, 10, e37356.

- Pearkao, C.; Suthisopapan, P.; Jaitieng, A.; Homvisetvongsa, S.; Wicharit, L. Development of an AI—Integrated Smart Helmet for Motorcycle Accident Prevention: A Feasibility Study. J. Multidiscip. Healthc. 2025, 18, 957–968.

- Campero-Jurado, I.; Márquez-Sánchez, S.; Quintanar-Gómez, J.; Rodríguez, S.; Corchado, J. Smart Helmet 5.0 for Industrial Internet of Things Using Artificial Intelligence. Sensors 2020, 20, 6241.

- Kamali Mohammadzadeh, A.; Eghbalizarch, M.; Jahanmahin, R.; Masoud, S. Defining the Criteria for Selecting the Right Extended Reality Systems in Healthcare Using Fuzzy Analytic Network Process. Sensors 2025, 25, 3133.

- Tan, C.S. A Smart Helmet Framework Based on Visual-Inertial SLAM and Multi-Sensor Fusion to Improve Situational Awareness and Reduce Hazards in Mountaineering. Int. J. Softw. Sci. Comput. Intell. 2023, 15, 1–19.

- Zhu, Y.; Zhang, D.; Zhou, Y.; Jin, W.; Zhou, L.; Wu, G.; Li, Y. A Binocular Stereo-Imaging-Perception System with a Wide Field-of-View and Infrared- and Visible Light-Dual-Band Fusion. Sensors 2024, 24, 676.

- Keselman, L.; Woodfill, J.I.; Grunnet-Jepsen, A.; Bhowmik, A. Intel® RealSense™ Stereoscopic Depth Cameras. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Honolulu, HI, USA, 21–26 July 2017; pp. 1267–1276.

- Hübner, P.; Clintworth, K.; Liu, Q.; Weinmann, M.; Wursthorn, S. Evaluation of HoloLens Tracking and Depth Sensing for Indoor Mapping Applications. Sensors 2020, 20, 1021.

- Schischmanow, A.; Dahlke, D.; Baumbach, D.; Ernst, I.; Linkiewicz, M. Seamless Navigation, 3D Reconstruction, Thermographic and Semantic Mapping for Building Inspection. Sensors 2022, 22, 4745.

- Zaremba, A.; Nitkiewicz, S. Distance Estimation with a Stereo Camera and Accuracy Determination. Appl. Sci. 2024, 14, 11444.

- Biswanath, M.K.; Hoegner, L.; Stilla, U. Thermal Mapping from Point Clouds to 3D Building Model Facades. Remote Sens. 2023, 15, 4830.

- Li, J.; Yang, B.; Chen, Y.; Wu, W.; Yang, Y.; Zhao, X.; Chen, R. Evaluation of a Compact Helmet-Based Laser Scanning System for Aboveground and Underground 3D Mapping. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2022, XLIII-B2-2022, 215–220.

- Hausamann, P.; Sinnott, C.B.; Daumer, M.; MacNeilage, P.R. Evaluation of the Intel RealSense T265 for Tracking Natural Human Head Motion. Sci. Rep. 2021, 11, 12486.

- Zhang, G.; Yang, S.; Hu, P.; Deng, H. Advances and Prospects of Vision-Based 3D Shape Measurement Methods. Machines 2022, 10, 124.

- Muhovič, J.; Perš, J. Correcting Decalibration of Stereo Cameras in Self-Driving Vehicles. Sensors 2020, 20, 3241.

- Di Salvo, E.; Bellucci, S.; Celidonio, V.; Rossini, I.; Colonnese, S.; Cattai, T. Visual Localization Domain for Accurate V-SLAM from Stereo Cameras. Sensors 2025, 25, 739.

- Tani, S.; Ruscio, F.; Bresciani, M.; Costanzi, R. Comparison of Monocular and Stereo Vision Approaches for Structure Inspection Using Autonomous Underwater Vehicles. In Proceedings of the OCEANS 2023—Limerick, Limerick, Ireland, 5–8 June 2023; pp. 1–7.

- Marcato Junior, J.; Antunes De Moraes, M.V.; Garcia Tommaselli, A.M. Experimental Assessment of Techniques for Fisheye Camera Calibration. Bol. Ciênc. Geod. 2015, 21, 637–651.

- Murillo, A.C.; Gutierrez-Gomez, D.; Rituerto, A.; Puig, L.; Guerrero, J.J. Wearable Omnidirectional Vision System for Personal Localization and Guidance. In Proceedings of the 2012 IEEE Computer Society Conference on Computer Vision and Pattern Recognition Workshops, Providence, RI, USA, 16–21 June 2012; IEEE: Piscataway, NJ, USA, 2012; pp. 8–14.

- Gamallo, C.; Mucientes, M.; Regueiro, C.V. Omnidirectional Visual SLAM under Severe Occlusions. Robot. Auton. Syst. 2015, 65, 76–87.

- Lin, B.-H.; Shivanna, V.M.; Chen, J.-S.; Guo, J.-I. 360° Map Establishment and Real-Time Simultaneous Localization and Mapping Based on Equirectangular Projection for Autonomous Driving Vehicles. Sensors 2023, 23, 5560.

- Houba, R.; Constein, H.P.; Arend, R.; Kampf, A.; Gilen, T.; Pfeil, J. Camera System for Capturing Images and Methods Thereof. U.S. Patent 10187555B2, 22 January 2019.

- Ardouin, J.; Lécuyer, A.; Marchal, M.; Riant, C.; Marchand, E. FlyVIZ: A Novel Display Device to Provide Humans with 360° Vision by Coupling Catadioptric Camera with HMD. In Proceedings of the 18th ACM Symposium on Virtual Reality Software and Technology, Toronto, ON, Canada, 10 December 2012; ACM: New York, NY, USA, 2012; pp. 41–44.

- Campos, M.B.; Tommaselli, A.M.; Honkavaara, E.; Prol, F.D.; Kaartinen, H.; El Issaoui, A.; Hakala, T. A Backpack-Mounted Omnidirectional Camera with Off-the-Shelf Navigation Sensors for Mobile Terrestrial Mapping: Development and Forest Application. Sensors 2018, 18, 827.

- Chen, H.; Hu, W.; Yang, K.; Bai, J.; Wang, K. Panoramic Annular SLAM with Loop Closure and Global Optimization. Appl. Opt. 2021, 60, 6264.

- Bonetto, E.; Goldschmid, P.; Pabst, M.; Black, M.J.; Ahmad, A. iRotate: Active Visual SLAM for Omnidirectional Robots. Robot. Auton. Syst. 2022, 154, 104102.

- Li, J.; Zhao, Y.; Ye, W.; Yu, K.; Ge, S. Attentive Deep Stitching and Quality Assessment for 360° Omnidirectional Images. IEEE J. Sel. Top. Signal Process. 2020, 14, 209–221.

- Zhang, W.; Wang, S.; Dong, X.; Guo, R.; Haala, N. BAMF-SLAM: Bundle Adjusted Multi-Fisheye Visual-Inertial SLAM Using Recurrent Field Transforms. In Proceedings of the 2023 IEEE International Conference on Robotics and Automation (ICRA), London, UK, 29 June 2023; pp. 6232–6238.

- Maohai, L.; Han, W.; Lining, S.; Zesu, C. Robust Omnidirectional Mobile Robot Topological Navigation System Using Omnidirectional Vision. Eng. Appl. Artif. Intell. 2013, 26, 1942–1952.

- Browne, M.P.; Larroque, S. Electronic See-through Head Mounted Display with Minimal Peripheral Obscuration. In Proceedings of the Optical Architectures for Displays and Sensing in Augmented, Virtual, and Mixed Reality (AR, VR, MR), San Francisco, CA, USA, 19 February 2020; Kress, B.C., Peroz, C., Eds.; SPIE: Cergy-Pontoise, France, 2020; p. 45.

- Hottner, L.; Bachlmair, E.; Zeppetzauer, M.; Wirth, C.; Ferscha, A. Design of a Smart Helmet. In Proceedings of the Seventh International Conference on the Internet of Things, Linz, Austria, 22 October 2017; ACM: New York, NY, USA, 2017; pp. 1–2.

- Akturk, S.; Valentine, J.; Ahmad, J.; Jagersand, M. Immersive Human-in-the-Loop Control: Real-Time 3D Surface Meshing and Physics Simulation. In Proceedings of the 2024 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Abu Dhabi, United Arab Emirates, 14 October 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 12176–12182.

- Shivaanivarsha, N.; Vijayendiran, A.G.; Sriram, A. LoRa WAN Based Smart Safety Helmet with Protection Mask for Miners. In Proceedings of the 2024 International Conference on Communication, Computing and Internet of Things (IC3IoT), Chennai, India, 17 April 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 1–6.

- Zhang, Y.; Li, J.; Zhao, X.; Liao, Y.; Dong, Z.; Yang, B. NeRF-Based Localization and Meshing with Wearable Laser Scanning System: A Case Study in Underground Environment. ISPRS Ann. Photogramm. Remote Sens. Spat. Inf. Sci. 2024, X-4–2024, 501–506.

- Jin, X.; Li, L.; Dang, F.; Chen, X.; Liu, Y. A Survey on Edge Computing for Wearable Technology. Digit. Signal Process. 2022, 125, 103146.

- Ramasubramanian, A.K.; Mathew, R.; Preet, I.; Papakostas, N. Review and Application of Edge AI Solutions for Mobile Collaborative Robotic Platforms. Procedia CIRP 2022, 107, 1083–1088.

- Szajna, A.; Stryjski, R.; Woźniak, W.; Chamier-Gliszczyński, N.; Kostrzewski, M. Assessment of Augmented Reality in Manual Wiring Production Process with Use of Mobile AR Glasses. Sensors 2020, 20, 4755.

- Jang, J.; Lee, K.-H.; Joo, S.; Kwon, O.; Yi, H.; Lee, D. Smart Helmet for Vital Sign-Based Heatstroke Detection Using Support Vector Machine. J. Sens. Sci. Technol. 2022, 31, 433–440.

- Ferraris, D.; Fernandez-Gago, C.; Lopez, J. A Trust-by-Design Framework for the Internet of Things. In Proceedings of the 2018 9th IFIP International Conference on New Technologies, Mobility and Security (NTMS), Malaga, Spain, 26 February 2018; pp. 1–4.

- Merchán-Cruz, E.A.; Gabelaia, I.; Savrasovs, M.; Hansen, M.F.; Soe, S.; Rodriguez-Cañizo, R.G.; Aragón-Camarasa, G. Trust by Design: An Ethical Framework for Collaborative Intelligence Systems in Industry 5.0. Electronics 2025, 14, 1952.

- Chen, W.; Wang, X.; Gao, S.; Shang, G.; Zhou, C.; Li, Z.; Xu, C.; Hu, K. Overview of Multi-Robot Collaborative SLAM from the Perspective of Data Fusion. Machines 2023, 11, 653.

- Horberry, T.; Burgess-Limerick, R. Applying a Human-Centred Process to Re-Design Equipment and Work Environments. Safety 2015, 1, 7–15.

- Ajmal, M.; Isha, A.S.; Nordin, S.M.; Al-Mekhlafi, A.-B.A. Safety-Management Practices and the Occurrence of Occupational Accidents: Assessing the Mediating Role of Safety Compliance. Sustainability 2022, 14, 4569.

- Wang, H.; Zhang, G.; Cao, H.; Hu, K.; Wang, Q.; Deng, Y.; Gao, J.; Tang, Y. Geometry-Aware 3D Point Cloud Learning for Precise Cutting-Point Detection in Unstructured Field Environments. J. Field Robot. 2025, 42, 514–531.

- Li, X.; Hu, Y.; Jie, Y.; Zhao, C.; Zhang, Z. Dual-Frequency LiDAR for Compressed Sensing 3D Imaging Based on All-Phase Fast Fourier Transform. J. Opt. Photonics Res. 2023, 1, 74–81.

| Facet | Description | Relevant Reference |
|---|---|---|
| Camera System Typology | Categorizes helmet-mounted vision systems into monocular, stereo, and omnidirectional types by camera configuration; this choice influences the VSLAM algorithm and depth perception. | [43,44,45] |
| VSLAM and Sensor Fusion Techniques | Classifies SLAM algorithms and sensor fusion techniques (e.g., visual–inertial and LiDAR fusion). Covers processing architectures such as edge computing and cloud offloading. | [46,47,48,49] |
| Application and Deployment | Categorizes industrial applications: inspection, maintenance, AR guidance, and remote collaboration. Maps combinations of camera systems and SLAM approaches to application domains. | [50,51,52] |

| Facet | Subcategory | Description | Example from References |
|---|---|---|---|
| Camera System Typology | Monocular | Single forward-facing camera | AR headset using a monocular camera [53] |
| | Stereo | Dual cameras for depth estimation | Stereo vision in medical AR applications [54] |
| | Omnidirectional | 360° coverage with multiple or fisheye lenses | Panoramic vision sensors for navigation [55] |
| VSLAM and Sensor Fusion | SLAM algorithms | Feature-based, direct, and deep-learning SLAM | Learning-based VSLAM [56] |
| | Sensor fusion | Visual–inertial, LiDAR, and GNSS/IMU | LiDAR + vision integration [57] |
| | Processing architectures | On-device vs. cloud offloading | Edge computing for real-time SLAM [58] |
| Application and Deployment | Industrial sectors | Construction, plants, and tunnels | Construction monitoring with helmet-enabled vision [59] |
| | Use cases | AR guidance, fault detection, and inspection | Detection and guidance in plant inspection [60] |

| Evaluation Category | Metric Type | Description | Example/Typical Values | Reference |
|---|---|---|---|---|
| SLAM Accuracy and Robustness | ATE/RPE/precision–recall/cloud error | ATE (Absolute Trajectory Error) and RPE (Relative Pose Error) measure trajectory drift; cloud-to-cloud and Hausdorff distances measure map fidelity | ATE below 0.1–0.3 m; cloud-to-cloud error of a few cm (e.g., 2–5 cm) | [36,61,62,63] |
| Real-Time Performance | Frame rate, latency, and CPU/GPU usage | Evaluates SLAM throughput and feasibility on mobile hardware; the goal is real-time performance on embedded processors | 15–30 FPS; <100 ms latency | [64,65] |
| Environmental Robustness | Qualitative stress conditions | Performance under motion blur, low light, smoke, vibration, etc. | Performance maintained under <20 lux lighting and moderate blur | [66,67] |
| Human Factors | Usability surveys, ergonomics, and cognitive load | SUS (System Usability Scale) score, operator feedback, and fatigue levels after use | SUS score > 70 (acceptable usability); low fatigue over 2 h | [68,69] |
| Benchmark Datasets | Dataset usage | Use of EuRoC MAV, WHU Helmet, etc., to evaluate accuracy and robustness | The WHU Helmet dataset yielded <5 cm error | [70] |
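
The accuracy and usability metrics in the table above have standard definitions that are easy to get subtly wrong. What follows is a minimal NumPy sketch with hypothetical function names. It is not the evaluation code used in the cited studies.

* ATE is computed as the RMSE of position errors after a least-squares rigid (Kabsch) alignment. This assumes a metric-scale trajectory from stereo or visual–inertial SLAM; monocular output would also need a scale factor.
* RPE is taken as the translational error of relative motions over a fixed frame offset.
* The map-fidelity metrics use brute-force nearest neighbours.
* The SUS score follows the standard ten-item scoring rule.

```python
import numpy as np


def ate_rmse(est_xyz, gt_xyz):
    """Absolute Trajectory Error: RMSE after rigid (rotation + translation) alignment.

    est_xyz, gt_xyz: (N, 3) arrays of time-associated positions.
    """
    mu_e, mu_g = est_xyz.mean(axis=0), gt_xyz.mean(axis=0)
    H = (est_xyz - mu_e).T @ (gt_xyz - mu_g)          # 3x3 cross-covariance
    U, _, Vt = np.linalg.svd(H)
    d = np.sign(np.linalg.det(Vt.T @ U.T))            # -1 would mean a reflection
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = mu_g - R @ mu_e
    err = np.linalg.norm(est_xyz @ R.T + t - gt_xyz, axis=1)
    return float(np.sqrt(np.mean(err ** 2)))


def rpe_trans_rmse(est_T, gt_T, delta=1):
    """Translational Relative Pose Error over a fixed frame offset `delta`.

    est_T, gt_T: (N, 4, 4) homogeneous sensor-to-world poses.
    """
    errs = []
    for i in range(len(gt_T) - delta):
        rel_gt = np.linalg.inv(gt_T[i]) @ gt_T[i + delta]
        rel_est = np.linalg.inv(est_T[i]) @ est_T[i + delta]
        E = np.linalg.inv(rel_gt) @ rel_est           # residual relative motion
        errs.append(np.linalg.norm(E[:3, 3]))
    return float(np.sqrt(np.mean(np.square(errs))))


def cloud_errors(A, B):
    """Mean cloud-to-cloud distance (A -> B) and symmetric Hausdorff distance.

    Brute force, O(len(A) * len(B)); a k-d tree is preferable for real scans.
    """
    D = np.linalg.norm(A[:, None, :] - B[None, :, :], axis=2)
    a_to_b, b_to_a = D.min(axis=1), D.min(axis=0)
    return float(a_to_b.mean()), float(max(a_to_b.max(), b_to_a.max()))


def sus_score(responses):
    """System Usability Scale: ten items rated 1-5, rescaled to 0-100."""
    if len(responses) != 10 or not all(1 <= r <= 5 for r in responses):
        raise ValueError("SUS expects ten responses in the range 1-5")
    # Odd-numbered items (index 0, 2, ...) contribute r - 1; even-numbered items 5 - r.
    total = sum(r - 1 if i % 2 == 0 else 5 - r for i, r in enumerate(responses))
    return total * 2.5


rng = np.random.default_rng(0)
gt = rng.normal(size=(50, 3))
c, s = np.cos(0.3), np.sin(0.3)
Rz = np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])
est = gt @ Rz.T + np.array([1.0, -2.0, 0.5])   # same path expressed in another frame
print(ate_rmse(est, gt))                       # ~0 after alignment
print(sus_score([4, 2] * 5))                   # 75.0
```

Because ATE is computed after alignment, a trajectory that differs from ground truth only by a global rotation and translation scores essentially zero. RPE is invariant to such a transform by construction, because it only compares relative motions.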

| Category | Details |
|---|---|
| Technical Specs and Capabilities | |
| Implementations and Use Cases | |
| Strengths | |
| Limitations | |
| VSLAM Performance (Monocular) | |

| Category | Details |
|---|---|
| Depth Perception and Accuracy | |
| Notable Implementations | |
| Strengths | High precision in dimensional measurements (acts like a portable 3D scanner) [84] |
| Limitations | |
| VSLAM Characteristics | Performance: stereo SLAM systems (e.g., ORB-SLAM2/3) outperform monocular systems in localization accuracy and map fidelity [86] |
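
The depth-perception advantage of stereo rigs comes from triangulation. Consider a rectified pair with focal length f (in pixels), baseline B, and disparity d. Depth is Z = fB/d. A disparity error δd propagates to a depth error of roughly Z²δd/(fB), so precision degrades quadratically with range. The sketch below encodes these two textbook relations. The function names and the 700 px / 12 cm rig are hypothetical values chosen for illustration, not parameters of any helmet cited here.

```python
def stereo_depth(f_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth of a point seen in a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return f_px * baseline_m / disparity_px


def depth_error(f_px: float, baseline_m: float, depth_m: float,
                disparity_err_px: float = 0.5) -> float:
    """First-order depth uncertainty: dZ = Z**2 / (f * B) * dd."""
    return depth_m ** 2 / (f_px * baseline_m) * disparity_err_px


# Hypothetical helmet rig: 700 px focal length, 12 cm baseline, 0.5 px disparity error.
for z in (1.0, 2.0, 4.0, 8.0):
    print(f"Z = {z:.0f} m -> +/- {100 * depth_error(700, 0.12, z):.1f} cm")
```

Under these assumed parameters, the uncertainty is about 2.4 cm at 2 m but about 38 cm at 8 m. This is one reason a short helmet-width baseline favours close-range inspection.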

| Category | Details |
|---|---|
| Typical Configurations | |
| Image Stitching and Processing | |
| Complete Environment Capture Benefits | |
| Notable VSLAM Implementations | |
| Challenges Specific to 360° Systems | |
| VSLAM Performance | |
| Inspection and Field Use Benefits | Wide-area documentation: captures scenes behind or beside the user |
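
Omnidirectional cameras commonly deliver each frame as an equirectangular panorama. SLAM on such images works with unit bearing vectors rather than pinhole projections, which is what lets a single frame cover the area behind the user. The sketch below maps a panorama pixel to a bearing vector. The axis and angle conventions are assumptions, and real cameras and libraries differ. Dual-fisheye rigs would also need stitching and calibration first.

```python
import math


def equirect_to_bearing(u: float, v: float, width: int, height: int):
    """Map equirectangular pixel (u, v) to a unit bearing vector (x, y, z).

    Assumed conventions: u spans longitude -pi..pi from left to right, v spans
    latitude +pi/2..-pi/2 from top to bottom; x right, y down, z forward.
    """
    lon = (u / width) * 2.0 * math.pi - math.pi
    lat = math.pi / 2.0 - (v / height) * math.pi
    return (math.cos(lat) * math.sin(lon),
            -math.sin(lat),
            math.cos(lat) * math.cos(lon))


W, H = 3840, 1920
print(equirect_to_bearing(W / 2, H / 2, W, H))   # image centre: straight ahead (z ~ +1)
print(equirect_to_bearing(0, H / 2, W, H))       # left edge: directly behind (z ~ -1)
```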

| Criteria | Monocular | Stereo | Omnidirectional |
|---|---|---|---|
| Mapping Accuracy and Coverage | | | |
| Processing Requirements | | | |
| Practical Deployment | | | |
| Cost–Benefit | | | |

| Category | Description | Example Algorithms | Strengths | Challenges/Considerations for Wearables |
|---|---|---|---|---|
| Feature-Based (Indirect) | Extracts and matches visual features, such as corners and edges, across frames | | | |
| Direct Methods | Operates on raw pixel intensities and estimates motion by minimizing photometric error | | | |
| Hybrid Approaches | Combines feature-based and direct methods or integrates inertial/other sensors | | | |
| Deep-Learning-Enhanced | Applies deep networks for features, depth, or end-to-end SLAM | | | |
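
Feature-based (indirect) pipelines such as ORB-SLAM describe keypoints with compact binary descriptors and match them between frames by Hamming distance. The sketch below shows that matching step in isolation: brute-force nearest-neighbour search with Lowe's ratio test to reject ambiguous matches. Descriptors are represented as plain Python integers. The function names and thresholds (ratio 0.8, maximum distance 64 bits) are illustrative assumptions. Real systems add keypoint detection, orientation, spatial gating, and optimized matchers.

```python
def hamming(a: int, b: int) -> int:
    """Number of differing bits between two binary descriptors."""
    return bin(a ^ b).count("1")


def match_binary(desc_a, desc_b, ratio=0.8, max_dist=64):
    """Match each descriptor in desc_a to its nearest neighbour in desc_b.

    A match is kept only if it is close enough (max_dist) and clearly better than
    the second-best candidate (Lowe's ratio test). Returns (i, j, distance) tuples.
    """
    matches = []
    for i, da in enumerate(desc_a):
        ranked = sorted((hamming(da, db), j) for j, db in enumerate(desc_b))
        if not ranked:
            continue
        best, j = ranked[0]
        second = ranked[1][0] if len(ranked) > 1 else None
        if best <= max_dist and (second is None or best < ratio * second):
            matches.append((i, j, best))
    return matches


prev_frame = [0b1111_0000, 0b0000_1111, 0b1010_1010]
curr_frame = [0b1111_0001, 0b1111_1111]
print(match_binary(curr_frame, prev_frame))   # [(0, 0, 1)]; the second is ambiguous
```

The second descriptor in the current frame is equally far (4 bits) from every candidate, so the ratio test discards it. Discarding such ambiguous matches, rather than guessing, is what keeps repetitive industrial textures from corrupting the pose estimate.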

| Year | Feature-Based SLAM | Direct Methods | Visual–Inertial SLAM | DL-Augmented SLAM | Neural Representation SLAM |
|---|---|---|---|---|---|
| 2015 | 8 | 5 | 3 | 1 | 0 |
| 2016 | 9 | 6 | 5 | 1 | 0 |
| 2017 | 9 | 7 | 7 | 2 | 0 |
| 2018 | 9 | 7 | 8 | 3 | 1 |
| 2019 | 9 | 6 | 9 | 4 | 2 |
| 2020 | 8 | 5 | 9 | 5 | 3 |
| 2021 | 8 | 4 | 9 | 6 | 4 |
| 2022 | 7 | 4 | 9 | 7 | 5 |
| 2023 | 6 | 3 | 9 | 8 | 7 |
| 2024 | 5 | 2 | 9 | 9 | 9 |
| 2025 | 4 | 1 | 9 | 10 | 10 |
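
The year-by-year values above can be queried directly to make the trends explicit, for example when each paradigm peaked and when learning-based categories overtook classical ones. The sketch below simply transcribes the table into a dictionary. It treats the values as relative scores on the table's own scale and adds no data of its own. The helper names are hypothetical.

```python
YEARS = list(range(2015, 2026))

# Scores transcribed from the table above, one value per year from 2015 to 2025.
TRENDS = {
    "Feature-Based": [8, 9, 9, 9, 9, 8, 8, 7, 6, 5, 4],
    "Direct": [5, 6, 7, 7, 6, 5, 4, 4, 3, 2, 1],
    "Visual-Inertial": [3, 5, 7, 8, 9, 9, 9, 9, 9, 9, 9],
    "DL-Augmented": [1, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10],
    "Neural Representation": [0, 0, 0, 1, 2, 3, 4, 5, 7, 9, 10],
}


def first_year_above(a: str, b: str):
    """First year in which category a scores strictly higher than category b (None if never)."""
    for year, score_a, score_b in zip(YEARS, TRENDS[a], TRENDS[b]):
        if score_a > score_b:
            return year
    return None


def peak_year(cat: str) -> int:
    """Earliest year at which a category reaches its maximum score."""
    scores = TRENDS[cat]
    return YEARS[scores.index(max(scores))]


for cat, scores in TRENDS.items():
    print(f"{cat:22s} peak {peak_year(cat)}, change 2015->2025: {scores[-1] - scores[0]:+d}")
print(first_year_above("DL-Augmented", "Feature-Based"))     # 2023
print(first_year_above("Neural Representation", "Direct"))   # 2022
```

With the table as given, feature-based SLAM peaks in 2016 and direct methods in 2017. DL-augmented SLAM first scores above feature-based SLAM in 2023. Visual–inertial SLAM plateaus from 2019 onward.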

| Fusion Approach | Fused Sensors | Key Algorithms/Systems | Benefits | Challenges/Considerations for Wearables |
|---|---|---|---|---|
| Visual–Inertial Odometry (VIO) | Camera + IMU (accelerometer + gyroscope) | | | |
| Visual–LiDAR Fusion | Camera + LiDAR (2D or 3D) | | | |
| Visual–GNSS Fusion | Camera + GPS/GNSS (RTK or standard) | | | |
| Other Sensors (Barometer, Magnetometer, etc.) | Barometer, magnetometer, digital compass | | | |
| Thermal/RGB/Depth Fusion | Multi-modal cameras (RGB + thermal, RGB-D) | | | |
| Fusion Level | Varies by system | | | |
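
The benefit of visual–inertial fusion can be illustrated with a deliberately simplified, loosely coupled 1-D Kalman filter. High-rate IMU acceleration propagates position and velocity, and lower-rate visual position fixes (standing in for SLAM output) correct the drift. This is an assumption-laden toy, not the tightly coupled, 6-DoF estimation used by practical VIO systems. The class name, noise values, accelerometer bias, and the 100 Hz IMU / 2 Hz vision rates are all hypothetical.

```python
class LooseVIO1D:
    """Toy loosely coupled visual-inertial filter in one dimension.

    State x = [position, velocity]. IMU acceleration drives the prediction step;
    occasional visual position fixes (standing in for SLAM output) correct drift.
    """

    def __init__(self, p0=0.0, v0=0.0, accel_noise=0.5, vision_noise=0.05):
        self.x = [p0, v0]
        self.P = [[1.0, 0.0], [0.0, 1.0]]
        self.q = accel_noise ** 2      # accelerometer noise variance
        self.r = vision_noise ** 2     # visual position-fix noise variance

    def predict(self, accel, dt):
        p, v = self.x
        self.x = [p + v * dt + 0.5 * accel * dt * dt, v + accel * dt]
        # P <- F P F^T + G q G^T, with F = [[1, dt], [0, 1]] and G = [dt^2 / 2, dt]
        (a, b), (c, d) = self.P
        g0, g1 = 0.5 * dt * dt, dt
        self.P = [[a + dt * (b + c) + dt * dt * d + g0 * g0 * self.q,
                   b + dt * d + g0 * g1 * self.q],
                  [c + dt * d + g1 * g0 * self.q,
                   d + g1 * g1 * self.q]]

    def update(self, z_pos):
        (a, b), (c, d) = self.P
        s = a + self.r                 # innovation variance (H = [1, 0])
        k0, k1 = a / s, c / s          # Kalman gain
        y = z_pos - self.x[0]          # innovation
        self.x = [self.x[0] + k0 * y, self.x[1] + k1 * y]
        self.P = [[(1 - k0) * a, (1 - k0) * b],   # P <- (I - K H) P
                  [c - k1 * a, d - k1 * b]]


# Walk at a constant 1 m/s for 10 s; the accelerometer has a hypothetical 0.05 m/s^2 bias.
dt, bias = 0.01, 0.05
fused, imu_only = LooseVIO1D(v0=1.0), LooseVIO1D(v0=1.0)
for k in range(1, 1001):
    fused.predict(bias, dt)            # true acceleration is zero; only the bias is sensed
    imu_only.predict(bias, dt)
    if k % 50 == 0:                    # 2 Hz visual fixes at the true position k * dt
        fused.update(k * dt)
true_p = 10.0
print(f"IMU only: {abs(imu_only.x[0] - true_p):.2f} m, fused: {abs(fused.x[0] - true_p):.3f} m")
```

Dead reckoning accumulates the bias quadratically (0.5 × 0.05 × 10² = 2.5 m after 10 s). The periodic visual fixes hold the fused estimate to a small residual lag. Real systems go further and estimate the bias as part of the state.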

| Architecture Type | Key Features | Examples | Benefits | Challenges/Considerations for Helmets |
|---|---|---|---|---|
| Edge Computing (On-Device) | All processing is performed on or very near the helmet (CPU, GPU, and SoC in the helmet or a wearable module) | | | |
| Cloud/Remote Processing | Processing is offloaded to cloud or remote servers; the helmet streams video and sensor data for processing | | | |
| Hardware Acceleration | Use of GPUs, DSPs, NPUs, FPGAs, or ASICs to speed up SLAM, vision, and AI tasks | | | |
| Real Time vs. Post-Processing | Trade-off between immediate on-site feedback and later high-fidelity analysis | | | |
| Edge–Cloud Synergy (Hybrid) | Combines local and remote processing to balance performance and responsiveness | | | |
| Software Frameworks | Middleware/platforms for managing sensor data and processing pipelines | | | |
| Power and Battery Optimization | Strategies to manage compute and power load to extend operating time | | | |
| Security and Privacy | Ensures that inspection data are handled securely | | | |
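
The choice between edge, cloud, and post-processing can be framed as a latency budget. Local compute time is compared with upload time plus server compute plus network round trip, and both are checked against a deadline such as the <100 ms real-time target in the evaluation table. The sketch below is a simple model under stated assumptions. The function names, task timings, payload size, and link figures are hypothetical. The model ignores energy, jitter, result size, and privacy, all of which matter in practice.

```python
from dataclasses import dataclass


@dataclass
class Link:
    uplink_mbps: float   # sustained helmet-to-server throughput
    rtt_ms: float        # network round-trip time


def offload_latency_ms(payload_kb: float, server_ms: float, link: Link) -> float:
    """Upload time + server compute + round trip (result download folded into RTT)."""
    upload_ms = payload_kb * 8.0 / link.uplink_mbps   # kB -> kbit; 1 Mbit/s = 1 kbit/ms
    return upload_ms + server_ms + link.rtt_ms


def choose_placement(onboard_ms, payload_kb, server_ms, link, deadline_ms=100.0):
    """Pick the faster placement that meets the deadline, else defer to post-processing."""
    candidates = [(onboard_ms, "edge"),
                  (offload_latency_ms(payload_kb, server_ms, link), "cloud")]
    feasible = [c for c in candidates if c[0] <= deadline_ms]
    return min(feasible)[1] if feasible else "post-process"


good_wifi, weak_link = Link(50.0, 15.0), Link(2.0, 80.0)
print(choose_placement(30, 60, 20, good_wifi))    # pose tracking: edge
print(choose_placement(180, 60, 20, good_wifi))   # dense mapping: cloud
print(choose_placement(180, 60, 20, weak_link))   # dense mapping: post-process
```

The same dense-mapping task moves from the cloud to post-processing when the link degrades. This is the behaviour a hybrid edge–cloud design has to plan for in tunnels and plant areas with poor connectivity.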

| Inspection Type | Traditional Methods | Smart Helmet Enhancements | Benefits |
|---|---|---|---|
| Bridge and Structural Inspections | Manual visual inspection, rope/lift access, paper notes, handheld cameras | SLAM-based geo-tagged mapping, hands-free video/data capture, AR overlays of crack maps/BIM, voice or gaze-based defect tagging | Increased safety (hands-free), accurate location-based defect logs, overlay of design data, reduced post-processing |
| Industrial Plant Inspection | Clipboard-based rounds, manual gauge checks, heavy reference manuals | Camera-based OCR of gauges, AR display of machine data, voice-guided procedures, live remote expert support | Faster decision making, reduced cognitive load, real-time alerts, reduced need for expert travel |
| Underground Infrastructure (Tunnels, Mines, Sewers) | Flashlights, GPS-denied mapping, environmental monitors, manual sketching | SLAM with LiDAR/360° cameras, AR navigation cues, real-time 3D thermal/visual mapping, environmental data overlays | Improved situational awareness, immediate model generation, navigation aid in zero visibility, hazard alerts in context |
| Power Generation and Energy Facilities | Paper-based records, handheld inspections, physical marking, high-risk confined space entry | Location-aware data capture, AR component labeling, visual overlays of future installations, interior mapping for turbines/boilers | Safer confined space entry, error prevention in maintenance, planning aid for upgrades, objective image logs |
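
The "SLAM-based geo-tagged mapping" and "location-based defect logs" above rest on one rigid transform. A defect is first located in the helmet camera frame, for example by stereo depth. It is then moved into the SLAM map frame using the current camera-to-map pose, p_map = R·p_cam + t. The sketch below shows that step with a hypothetical `DefectTag` record. The pose, axis conventions, and keyframe link are illustrative assumptions, not the data model of any system reviewed here.

```python
import math
from dataclasses import dataclass


@dataclass
class DefectTag:
    label: str
    map_xyz: tuple      # defect position in the SLAM map frame (m)
    keyframe_id: int    # hypothetical link back to the image evidence


def camera_to_map(R, t, p_cam):
    """p_map = R @ p_cam + t, where (R, t) is the camera-to-map pose from SLAM."""
    return tuple(sum(R[i][j] * p_cam[j] for j in range(3)) + t[i] for i in range(3))


def tag_defect(label, p_cam, R, t, keyframe_id):
    """Create a defect record anchored in the map frame rather than the camera frame."""
    return DefectTag(label, camera_to_map(R, t, p_cam), keyframe_id)


# Hypothetical pose: helmet turned 90 degrees about the map's vertical (z) axis.
yaw = math.pi / 2
R = [[math.cos(yaw), -math.sin(yaw), 0.0],
     [math.sin(yaw), math.cos(yaw), 0.0],
     [0.0, 0.0, 1.0]]
t = (10.0, 5.0, 1.6)
tag = tag_defect("crack", (2.0, 0.0, 0.0), R, t, keyframe_id=412)
print(tag)   # map_xyz is approximately (10.0, 7.0, 1.6)
```

Storing the map-frame position, rather than a raw image, is what allows the same crack to be revisited, compared across inspections, or overlaid on BIM. Any pose drift in the SLAM estimate propagates directly into the logged defect location.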

| Transformation Category | Bridge and Structural | Industrial Plant | Underground Infrastructure | Power Generation |
|---|---|---|---|---|
| Spatial Awareness | 4 | 3 | 5 | 4 |
| Augmented Visualization | 3 | 4 | 3 | 4 |
| Automation | 2 | 4 | 3 | 3 |

| Industrial Implementation | Application Domain | Helmet/Headset Role | Reported Benefits | Technologies Used |
|---|---|---|---|---|
| RINA Marine Surveys (Italy) [41] | Marine vessel inspection | Remote expert support through live helmet video and AR markup | Reduced inspection time, reduced travel cost, and improved safety and accuracy | Kiber 3 Helmet, live video streaming, AR annotations |
| Boeing Wiring Assembly (USA) [107] | Aerospace manufacturing, harness assembly | AR-guided step-by-step wiring layout on the work surface | Reduced assembly time, near-zero error rate | AR wearable headsets, visual task guidance |
| WHU Underground Mine Mapping (China) [70,82] | Mining/tunnel infrastructure mapping | Real-time and post-processed 3D SLAM mapping of tunnels | Survey-grade 3D point clouds, mapping in minutes rather than hours, centimeter-level accuracy | Helmet with LiDAR, IMU, cameras, and SLAM algorithms |
| Worker Safety Monitoring (South Korea) [108] | Construction site safety compliance | Real-time detection of hazards, PPE compliance, and environmental monitoring | Near elimination of heatstroke cases, reduction in unsafe behavior, and safety incident prevention | AI-enabled smart helmets, environmental sensors, cameras |

| Category | Challenge | Description |
|---|---|---|
| Technical Challenges | Battery Life Constraints | High-performance cameras, processors, and transmitters consume significant power, limiting battery life to 2–3 h, which is insufficient for a full work shift. Belt-mounted battery packs are one solution, but they add a tether and may be impractical in remote settings. |
| | Processing Power Limitations | Wearable devices have limited capacity to run complex real-time algorithms such as 3D reconstruction or AI detection, forcing trade-offs between functionality and performance (e.g., reduced mapping or slower responsiveness). |
| | Environmental Factors Affecting Performance | Harsh environments (variable lighting, dust, rain, vibration, and EMI) degrade sensor performance and can cause SLAM failures. Additional ruggedization or fallback sensors (LiDAR and radar) are required but add cost and weight. |
| | Data Management and Storage | High data volumes (video, 3D scans, and HD images) strain storage and bandwidth, especially in remote locations. Managing the data lifecycle, archiving, and compliance adds complexity, and privacy safeguards such as encryption are needed. |
| | Localization in Degraded Situations | SLAM can fail in low-feature or dynamic environments, causing tracking errors or map failures. Remedies include manual reinitialization or fallback systems, but these degrade the user experience. |
| | Connectivity in Real-Time Systems | Smart helmets that depend on cloud services face network challenges in industrial environments with limited connectivity, requiring offline autonomy and local networks. |
| Human Factors | Ergonomics and User Acceptance | Helmets can be uncomfortable (heavy, warm, and restrictive), leading to user resistance. Balancing comfort, safety standards, and system functionality is essential to encourage adoption. |
| | Training Requirements | Workers need extensive training to use the system effectively, which may be challenging for less tech-savvy workers. Intuitive interfaces and short learning curves are key. |
| | Cognitive Load during Operation | AR systems can overwhelm users with excessive information, causing distraction or fatigue. The interface must provide contextually relevant information without causing mental overload. |
| | User Interface Considerations | Hands-free control via voice or gestures can fail in noisy or restrictive environments. Clear feedback and simple, quick task execution are necessary to avoid user frustration and ensure adoption. |
| | Cultural and Workflow Changes | Resistance to technology due to concerns over autonomy or surveillance can hinder adoption. Change management, trust-building, and clear communication of the system’s role as an assistant rather than a replacement are critical. |
| Regulatory and Standardization Issues | Safety Certifications for Industrial Environments | Helmets must meet strict safety standards (e.g., impact resistance, and ATEX for explosive atmospheres). Certification is expensive and time-consuming, which limits deployment in hazardous environments. |
| | Data Privacy and Security Concerns | Continuous data collection (video/audio) raises privacy concerns. Companies must establish clear data usage policies and secure communication to prevent misuse and comply with privacy laws. |
| | Integration with Existing Safety Protocols | Smart helmets must integrate with traditional safety protocols (e.g., lockout–tagout), and regulations may need to change before digital tools are accepted as equivalent to physical safety measures. |
| | Lack of Industry Standards for AR Data and Interoperability | The lack of standardized AR inspection data formats leads to vendor lock-in and interoperability challenges. Industry guidelines are evolving but not yet fully established. |
| | Ethical and Legal Issues of Recording | Continuous video/audio recording, especially without consent, has legal implications that require clear policies and user consent. Intellectual property and worker privacy must also be protected. |
| | Workforce Acceptance and Labor Relations | Resistance to helmet technology due to concerns over surveillance or loss of autonomy requires thoughtful communication and, potentially, labor agreements to build trust and support adoption. |
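
Two of the technical challenges above, battery life and data volume, reduce to simple arithmetic. Runtime is stored energy divided by average draw, and recorded volume is bitrate multiplied by time. The sketch below makes these estimates explicit. The component loads, 30 Wh pack, 8 h shift, 20% reserve, and 8 Mbit/s bitrate are all hypothetical figures chosen for illustration, not measurements from the reviewed systems.

```python
# Hypothetical power budget for a vision-enabled helmet (watts); not measured values.
LOADS_W = {"cameras": 1.5, "soc_slam": 6.0, "radio": 2.0, "display": 1.5}


def runtime_h(battery_wh: float, loads_w: dict) -> float:
    """Runtime = stored energy / average power draw."""
    return battery_wh / sum(loads_w.values())


def capacity_for_shift_wh(shift_h: float, loads_w: dict, reserve: float = 0.2) -> float:
    """Energy needed for a shift, with a fractional safety reserve (assumed 20%)."""
    return shift_h * sum(loads_w.values()) * (1.0 + reserve)


def video_volume_gb(bitrate_mbps: float, hours: float) -> float:
    """Recorded data volume in decimal gigabytes: Mbit/s * s / 8 bits -> MB -> GB."""
    return bitrate_mbps * 3600.0 * hours / 8.0 / 1000.0


print(f"{runtime_h(30.0, LOADS_W):.1f} h on a 30 Wh pack")
print(f"{capacity_for_shift_wh(8.0, LOADS_W):.0f} Wh for an 8 h shift")
print(f"{video_volume_gb(8.0, 8.0):.1f} GB of 8 Mbit/s video per shift")
```

With these assumed figures, an 11 W draw empties a 30 Wh pack in about 2.7 h. Covering an 8 h shift with margin needs roughly 106 Wh. A single 8 Mbit/s camera stream produces about 29 GB per shift. These numbers show why belt packs, duty-cycling, and on-device compression recur as mitigation strategies.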

| Category | Recommendations | Future Research Directions |
|---|---|---|
| SLAM and Perception | Use proven SLAM solutions (e.g., ORB-SLAM3) and begin with stable indoor deployments | Develop robust SLAM that adapts to challenging conditions using event cameras, radar, and sensor fusion |
| AI and Semantic Analysis | Start integrating pre-trained models for basic object detection and labeling | Build semantic SLAM systems that understand environments (pipes, defects, etc.) and support automated defect tagging |
| Human–Computer Interaction | Involve inspectors in UI/UX design and train them to use AR effectively | Study AR content delivery to reduce distraction and cognitive load, and personalize content via adaptive AI |
| Hardware and Integration | Select ergonomic helmet designs with reliable battery life; plan for regular calibration | Research miniaturized components, edge AI chips, and seamless AR displays such as micro-LED or visor projection |
| Data and Digital Twins | Centralize data storage and begin fusing helmet outputs with BIM/IoT dashboards | Enable dynamic digital twins updated in real time with wearable and IoT data |
| Collaboration Tools | Deploy remote expert systems (e.g., RealWear, Kiber) for guidance and training | Research shared spatial maps, swarm SLAM, and AR/VR platforms for team coordination and multi-user annotation |
| Networking and Infrastructure | Ensure secure, robust connectivity (Wi-Fi or 5G) in inspection areas | Develop decentralized SLAM, compression methods for SLAM data, and robust map-merging protocols |
| Standardization and Policy | Define clear IT and privacy policies; maintain devices and data proactively | Create benchmarks and datasets for helmet-based inspections; collaborate on open standards and IP-aware design |
| Industry Adoption | Launch pilot projects with clear metrics; document “quick win” successes to justify scaling | Study the cost–benefit and impact of helmet deployment in large-scale industrial maintenance cycles |
| XR for Training | Use AR helmets to assist live tasks; link training to operations when possible | Explore using helmet-collected 3D models for VR-based training scenarios and study training-transfer effectiveness |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Merchán-Cruz, E.A.; Moveh, S.; Pasha, O.; Tocelovskis, R.; Grakovski, A.; Krainyukov, A.; Ostrovenecs, N.; Gercevs, I.; Petrovs, V. Smart Safety Helmets with Integrated Vision Systems for Industrial Infrastructure Inspection: A Comprehensive Review of VSLAM-Enabled Technologies. Sensors 2025, 25, 4834. https://doi.org/10.3390/s25154834
