Abstract

Accurately identifying driver fatigue in complex driving environments plays a crucial role in road traffic safety. To address the challenge of reduced fatigue detection accuracy in complex cabin environments caused by lighting variations, we propose YOLO-FDCL, a novel algorithm specifically designed for driver fatigue detection under complex lighting conditions. This algorithm introduces MobileNetV4 into the backbone network to enhance the model’s ability to extract fatigue-related features in complex driving environments while reducing the model’s parameter size. Additionally, by incorporating the concept of structural re-parameterization, RepFPN is introduced into the neck section of the algorithm to strengthen the network’s multi-scale feature fusion capabilities, further improving the model’s detection performance. Experimental results show that on the YAWDD dataset, compared to the baseline YOLOv8-S, precision increased from 97.4% to 98.8%, recall improved from 96.3% to 97.5%, mAP@0.5 increased from 98.0% to 98.8%, and mAP@0.5:0.95 increased from 92.4% to 94.2%. This algorithm has made significant progress in the task of fatigue detection under complex lighting conditions. At the same time, this model shows outstanding performance on our self-developed Complex Lighting Driving Fatigue Dataset (CLDFD), with precision and recall improving by 2.8% and 2.2%, respectively, and improvements of 3.1% and 3.6% in mAP@0.5 and mAP@0.5:0.95 compared to the baseline model, respectively.

1. Introduction

With the rapid advancement of socioeconomic development and accelerating urbanization, Advanced Driver Assistance Systems (ADASs) have emerged as a pivotal research focus in automotive engineering and artificial intelligence domains [1]. As a critical technological component of intelligent driving systems, driver fatigue state monitoring plays an essential role in traffic safety assurance. Statistical data from the U.S. transportation safety authorities indicate that fatigue-related driving incidents resulted in 633 fatalities in 2020 alone [2]. Comprehensive traffic accident investigations reveal that driver fatigue contributes to 8.8–9.5% of vehicle collisions, with this proportion escalating to 10.6–10.8% in crashes involving substantial property damage [3].

Driver fatigue refers to the physiological and psychological imbalance after long periods of driving, leading to decreased attention, slow reactions, and poor decision making. With the development of technology, driver fatigue is mainly detected through the following three methods.

Physiological signal-based methods require intrusive sensors but have demonstrated promising accuracy. Fu [4] explored ECG signals by utilizing cross-correlation between surface EMG and ECG peak factors for fatigue assessment. Oviyaa [5] proposed EEG sensor-embedded helmet systems that trigger automatic drowsiness alerts. Fan [6] employed prefrontal EEG analysis, extracting features such as energy and entropy for machine learning-based classification. Wu [7] created long-term memory EEG power maps that were subsequently fed into CNN-LSTM models for enhanced fatigue detection. While these methods achieve high accuracy, their intrusive nature and equipment requirements limit practical deployment.

Vehicle behavior-based methods offer non-intrusive monitoring but suffer from delayed response characteristics. Sandberg [8] utilized time series analysis to detect fatigue through driving indicators such as speed and steering wheel angle, employing neural networks trained via particle swarm optimization. Wang et al. [9] developed random forest models based on vehicle lateral and longitudinal accelerations combined with steering wheel angle measurements. The AutoVue system [10], developed by the I-teris company, detects lane deviation using highway-facing cameras for real-time monitoring. Ma Yongfeng et al. [11] investigated truck driver fatigue by analyzing travel speed data as key fatigue indicators. However, these approaches are constrained by individual driving habits and environmental conditions.

Computer vision-based methods have emerged as the most promising approach due to their non-intrusive nature, real-time capabilities, and cost-effectiveness. Zhang et al. [12] developed yawning detection systems integrating deep learning algorithms with Kalman filters and Track-Learning-Detection trackers. Hsu et al. [13] extracted the driver’s mouth and eye features for fatigue early warning using a deep CNN, achieving an average accuracy of 91.9%, though with slightly lower precision. Yan Zhu et al. [14] constructed a fatigue recognition framework utilizing least squares curve fitting for ocular and oral contours, incorporating statistical sequence-based CNN architectures. Xianguo L et al. [15] designed a YOLO-integrated algorithm for monitoring driver eye conditions, demonstrating 99.0% mAP performance on the CEW benchmark. Shugang Liu et al. [16] developed a dynamic tracking solution based on the YOLO architecture to identify small-scale fatigue indicators, effectively tackling facial feature degradation issues in practical driving scenarios.

Although existing driver fatigue detection methods achieve good performance under normal conditions, maintaining robust performance under complex lighting conditions remains a critical challenge. Real-world driving environments present varying lighting conditions—including strong light, weak light, sudden illumination changes, and glare interference—which severely degrade detection accuracy in current methods [17,18]. These dynamic lighting variations make it particularly challenging to reliably detect driver drowsiness states, significantly limiting the practical effectiveness of existing fatigue detection systems in ADAS applications. This paper presents YOLO-FDCL, an enhanced fatigue detection system specifically designed to address the complex lighting challenge. Our main contributions are as follows:

A MobileNetV4-enhanced backbone network that leverages the Universal Inverted Bottleneck (UIB) modules and MobileMQA attention mechanisms to improve feature extraction under varying illumination conditions, demonstrating superior performance in maintaining feature quality when traditional CNN backbones struggle with lighting-induced degradation in fatigue detection scenarios.

A novel RepFPN feature fusion architecture that uniquely integrates three complementary components—RepBlock reparameterization for efficient multi-branch learning, SimConv upsampling with enhanced nonlinear representation, and learnable Transpose modules for detail preservation—creating the first feature pyramid network specifically optimized for fatigue detection under complex lighting scenarios while achieving superior computational efficiency compared to existing FPN variants.

A comprehensive Complex Lighting Driving Fatigue Dataset (CLDFD) that addresses the critical gap in existing datasets by systematically simulating real-world lighting challenges through strategic data augmentation techniques. This dataset expands from 8849 to 44,245 images using targeted image sharpening, saturation adjustment, brightness variation, and color shifting, providing the first standardized benchmark for evaluating fatigue detection robustness across diverse lighting conditions encountered in practical driving scenarios, enabling comprehensive validation that was previously impossible with existing datasets.

2. Related Works

2.1. Computer Vision-Based Fatigue Detection

In early computer vision-based driver fatigue detection, most studies utilized single features for determining driver fatigue states. Alioua et al. [19] proposed a method using Support Vector Machine (SVM) and Circular Hough Transform (CHT) that achieved 98% accuracy in yawning recognition. Zhang and Wang [20] proposed an algorithm using image processing technology to process the images, which was evaluated using the SVM model. Finally, the sequence floating forward selection algorithm is used to select the optimal parameters, and the fatigue detection model was established. Zhuang et al. [21] proposed an eye opening and closing fatigue detection method based on pupil and iris segmentation. This method extracts eye images through the Dlib algorithm, uses a segmentation network to extract pupil and iris features from eye images, and employs a decision network to evaluate eye opening and closing degrees. Finally, PERCLOS values are calculated based on eye opening and closing degrees to predict driver states. This method was tested on the NTHUDDD dataset, achieving a fatigue detection accuracy of 96.72%. However, the aforementioned methods only perform fatigue detection through single facial fatigue features, resulting in poor accuracy and robustness. To address these issues, researchers proposed multi-feature fusion-based fatigue detection methods.

Zhao [22] proposed a fatigue detection method based on cascaded multi-task convolutional neural networks (MTCNNs) and eye-mouth convolutional neural networks (EM-CNNs). MTCNNs are is used for detecting and locating the driver’s facial area and facial landmarks. Then, EM-CNNs are used to classify the state of the eyes and the degree of mouth opening to assess the driver’s fatigue state. Guo [23] proposed a real-time efficient fatigue detection method based on YOLOV5, which achieved an average precision (mAP) of 75.44% on the BioID-Face dataset and 80.61% on the GI4E dataset. You et al. [24] constructed a driver fatigue recognition algorithm based on facial motion information entropy, employing an improved YOLOv3 tiny algorithm to locate driver facial information in various scenarios. The study used the Dlib algorithm combined with facial feature point coordinates to establish FFT (Face Feature Triangle) and generated facial feature vectors based on FFT information. Finally, facial motion information entropy was obtained through a sampler, and driver fatigue states were determined by comparing the information entropy with preset thresholds. Experimental results showed that this algorithm achieved a detection accuracy of 94.32%.

In summary, fatigue detection methods based on object detection can effectively identify driver fatigue states. However, existing models do not adequately consider the complex lighting environments in real-world cockpit scenarios, which leads to reduced accuracy and robustness. Therefore, to address these issues, this paper improves the YOLOv8s model and proposes a high-precision driver fatigue detection model YOLO-FDCL. First, this paper introduces the advanced MobileNetV4 to reconstruct the backbone network of the original model, optimizing the network structure and improving the feature extraction capability. Meanwhile, the neck network of YOLOv8s is modified by introducing the Rep-FPN feature fusion network, which effectively improves the inference speed and detection accuracy of the model while maintaining computational efficiency. Experimental results show that the model achieves high robustness and can accurately detect driver fatigue states in complex lighting environments.

2.2. The Challenge of Determining Actual Fatigue State

Despite significant advances in visual feature detection accuracy, determining a driver’s actual state of fatigue rather than merely detecting visual symptoms has emerged as the most critical challenge in recent years. As emphasized by Hu et al., “a single information cannot precisely reflect the actual state of the driver in different fatigue phases,” highlighting the inherent uncertainty in visual-based assessment methods [25]. This fundamental limitation stems from the complex, nonlinear relationship between observable facial features and actual driving impairment capacity.

The temporal dimension significantly compounds this challenge. EEG-based studies consistently demonstrate that cognitive fatigue manifests in brain activity patterns several minutes before becoming visually apparent [26]. This temporal lag creates a dangerous window where visual-based systems may provide false reassurance while drivers are experiencing diminished cognitive capacity. Furthermore, the progression from cognitive to visual fatigue is highly individual-dependent, with variations influenced by age, sleep debt, and individual fatigue resistance patterns [27].

Validation challenges arise from the absence of universally accepted ground truth for “dangerous” fatigue states. While subjective measures such as the Karolinska Sleepiness Scale provide standardized assessment, studies have documented significant discrepancies between self-reported sleepiness and objective performance metrics [28]. EEG-based methods, recognized as the “gold standard” for fatigue detection, demonstrate superior accuracy but require intrusive sensors impractical for widespread deployment [29]. Multi-modal approaches combining visual features with physiological signals have shown improved reliability but face complexity and cost barriers for commercial adoption [30].

The threshold-setting problem represents perhaps the most critical unsolved challenge in practical deployment. Unlike typical computer vision applications, fatigue detection systems must balance dramatically different risk profiles: false negatives can result in accidents, while false positives may lead to system abandonment [31]. Environmental factors including varying lighting conditions and the transition from laboratory to real-world settings introduce additional uncertainties that current literature has inadequately addressed. As noted in recent reviews, “the gap between achieving high detection accuracy in controlled settings and providing reliable fatigue warnings in diverse real-world conditions represents the next frontier in driver safety research” [32]. These considerations highlight the need for approaches that improve visual detection robustness while acknowledging the inherent uncertainties in translating visual observations to functional safety assessments.

2.3. Feature Fusion

Deep learning-based object detection methods typically rely on backbone networks to extract features, but effective representation requires capturing both fine-grained details and semantic information across multiple scales. Lin et al. [33] developed the Feature Pyramid Network (FPN), which strengthens feature representation by integrating low-level high-resolution features with high-level semantic features through top-down pathways and lateral connections.

Building upon FPN, advanced architectures including PANet [34], NAS-FPN [35], ASFF [36], BiFPN [37], and AugFPN [38] have been proposed to further enhance detection performance through improved multi-scale feature fusion strategies.

These studies demonstrate that multi-scale feature fusion is crucial for robust object detection, particularly in challenging lighting environments. Different feature levels exhibit varying sensitivities to illumination changes: shallow layers capture details that may be affected by shadows and glare, while deeper layers extract semantic features more robust to lighting variations. Multi-scale fusion enables networks to compensate for lighting-induced degradation by leveraging complementary information across scales, maintaining detection accuracy under adverse lighting conditions. However, existing FPN variants often suffer from computational overhead and suboptimal feature integration under extreme lighting variations. Therefore, we propose Rep-FPN, which employs reparametrized convolutions to enhance feature fusion efficiency while providing stronger multi-scale feature integration specifically optimized for complex lighting scenarios, enabling more robust fatigue detection with improved computational efficiency.

3. Methods

3.1. YOLOv8 Model

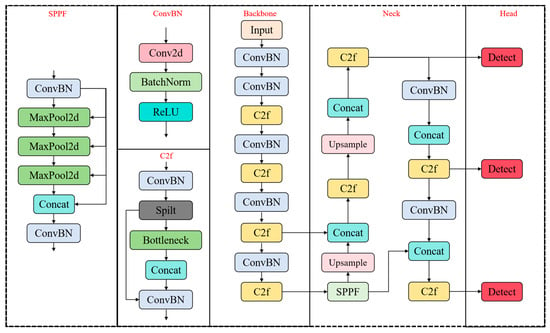

The YOLOv8 model architecture consists of four components: Backbone, Neck, and Detection Head. Figure 1 illustrates the structure of the YOLOv8 network.

Figure 1.

The network structure of YOLOV8.

- Input Layer:

The input layer adjusts the dimensions of the input images to meet training requirements. It also performs operations such as scaling, tone adjustments, and Mosaic data augmentation to enhance the diversity of the training data.

- Backbone Network:

The backbone network adopts a Cross-Stage Partial Network (CSPNet) architecture, which is designed to enhance feature extraction capabilities. It improves overall model performance by balancing computational efficiency and feature representation capacity.

- Neck:

The neck performs feature fusion, employing both the Feature Pyramid Network (FPN) and Path Aggregation Network (PAN). By integrating features across different scales, these networks enhance the model’s ability to detect objects in diverse sizes and positions.

- Detection Head:

The detection head adopts a decoupled structure, dividing the detection and classification tasks. This separation reduces the model’s parameter count and complexity, which in turn boosts its generalization and robustness. It outputs confidence scores and position details for objects, facilitating accurate detection.

3.2. YOLO-FDCL Detection Model

This paper proposes a YOLO-FDCL model based on the YOLOv8s series, which is specialized for driver fatigue detection. The model improves the detection accuracy and, at the same time, enhances the detection capability of the model for complex lighting environments.

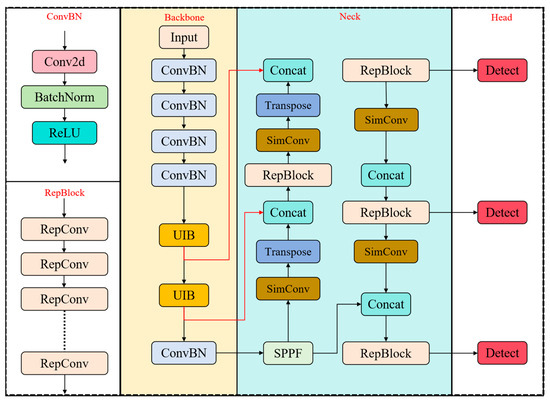

The overall structure of YOLO-FDCL is shown in Figure 2. The overall with YOLO-FDCL is similar to the overall structure of YOLOv8, which is mainly composed of backbone, neck, and head. In order to solve the complex driving noise (different driving habits of drivers, complex lighting environment while driving) on the network to extract features interference, this study selected the MobileNetv4 network [39] as the backbone network, which effectively improves the feature extraction ability of the model, as well as the robustness of the network.

Figure 2.

The network structure of YOLO-FDCL.

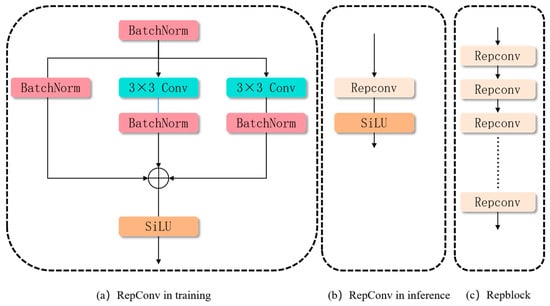

At the same time, this paper refers to the RepVGG algorithm to modify the network’s neck layer to add a reparameterization block (RepBlock) [40] to form the unique feature pyramid structure of this paper can effectively improve the feature fusion ability to improve the accuracy and speed of the driver fatigue detection.

RepBlock is a network module designed based on the re-parameterization concept, consisting of multiple stacked or combined RepConv units with enhanced feature representation capabilities and optimized inference performance. Each RepConv unit utilizes a multi-branch structure during the training phase to achieve diverse feature learning. These branches include a 3 × 3 convolution branch as the main branch, along with auxiliary 1 × 1 convolution, Batch Normalization (BN), and identity mapping branches. The multi-branch structure independently learns parameters during training, effectively enhancing the model’s feature representation ability and generalization capability, thus better adapting to complex task requirements. During the inference phase, all branch parameters are mathematically re-parameterized and fused into an equivalent 3 × 3 convolution operation.

This design significantly reduces the computational overhead during inference while preserving the feature expression effects learned during training. The overall structure of RepBlock, through the stacking of multiple RepConv units, further improves the network’s feature extraction capabilities. Its re-parameterization process ensures higher efficiency and optimal accuracy during inference.

3.2.1. Improved MobileNetv4 Backbone

The traditional YOLOv 8 backbone uses the CSPDarknet architecture to extract multi-scale and high-level features through multiple convolutions and pooling, but it is a deep architecture, and complex convolutions consume a lot of computing resources, limiting deployment on low-performance hardware. In addition, the feature extraction ability of this architecture is slightly insufficient in response to fatigue detection under complex lighting conditions, so we introduce the Mobilenetv4 network as the backbone network of YOLO-FDCL, which can effectively improve the feature extraction ability of the model while realizing the lightweight of the network.

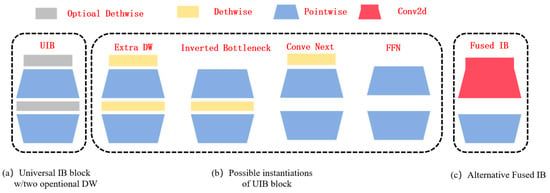

MobileNetV4 is an efficient neural network architecture designed for mobile devices. As shown in Figure 2, it demonstrates excellent performance on various hardware platforms by integrating the Universal Inverted Bottleneck (UIB) and MobileMQA attention mechanisms. The feed-forward network (FFN) by the UIB module unifies the Inverted Bottleneck (IB), ConvNext, and Visual Transformer (VIT), and introduces the Extra Depth IB (ExtraDW) block, which integrates the strengths of the above modules to increase the network’s depth and sense field and maximize the computational utilization at less computational cost. The possible architecture of the universal UIB is shown in Figure 3 below.

Figure 3.

The network structure of UIB.

Compared with the traditional inverted bottleneck structure, the UIB module adopts two deep convolutions before the expansion layer and between the expansion layer and the projection layer, so that the clever structural design can achieve more detailed adjustment of the feature extraction process, so as to improve the flexibility of feature extraction and meet the needs of different tasks. Based on these two variable depth convolutions, the UIB module can form four different instances, namely Extra dw, Inverted bottleneck, Conv next, and FFN (Feed Forward Network). Through two deep convolutions, Extra dw can capture deeper spatial features and extract these features at different levels, effectively enhancing the depth and receptive field of the network to meet the input and output requirements of the network. Inverted bottleneck increases the capacity of the model by performing a spatial blending operation after expanding the feature. Conv next performs the blending of spatial information in advance before the feature map expansion, making the spatial blending of larger convolution kernels more efficient. The FFN module consists of two point-by-point convolutions, and inserts an activation function and a normalization layer between the two layers, which is suitable for the global feature integration of the network backend. Through the information fusion between spatial features and channel features, the UIB module can adaptively adjust the size of the sensing field of the model and efficiently use computing resources, thereby improving the performance of the model. The four possible UIB module instances and built UIB blocks are shown in Figure 3 below.

The module also introduces mobile MQA, which is an optimizer for the attention module and improves inference speed by more than 39%. Unlike traditional Multi-Headed Self-Attention (MHSA), Mobile’s MQA shares keys and values across all heads, reduces memory access requirements while maintaining high-resolution queries through asymmetric spatial subsampling, and significantly improves computational efficiency. The overall network architecture of the MobileNetV4 feature extraction backbone used in this paper is shown in Table 1. YOLO-FDCL efficiently extracts driver fatigue features in complex lighting environments using UIB, while mobile MQA attention further reduces the interference of background noise, thus achieving efficient extraction of fatigue features.

Table 1.

Network architecture of MobileNetV4.

3.2.2. Design of the Neck Section-RepFPN

Although the traditional YOLOv8 feature gold tower structure can effectively integrate multi-scale features, in strong backlight, direct sunlight or low light environments, the traditional FPN may not be able to effectively capture key facial features such as eyes and mouth due to overexposure or underexposure, resulting in false detection and increased missed detection rate of the model. At the same time, under low-light conditions, it is difficult for the traditional FPN downsampling layer to capture enough detailed features, which limits the overall detection accuracy. To this end, we proposed RepFPN to improve the robustness of the model. RepFPN is mainly composed of three parts: Repblock, simconv, and Transpose. Here, we refer to the idea of structural reparameterization proposed by Ding et al. (during training, the network retains multiple branches to learn more subsets. In the inference process, the network merges multiple branches into one path, thereby improving the inference speed of the model), and proposes a reparameterization module (Repblock) in the structure of the model, as shown in Figure 4 below, to improve the inference speed of the model and the information extraction ability of features.

Figure 4.

Repconv training as well as inference schematics, and RepBlock structure diagrams.

The reparameterization procedure is explained below. As shown in Equation (1), the output Qout of RepConv is calculated without activation as follows:

In this context, Dk1 and Dk3 denote the weights of the 1 × 1 and 3 × 3 convolutions, respectively. We use μk3, ωk3, εk3, δk3 to denote the accumulated mean, standard deviation, learned scaling factor, and bias of the Batch Normalization (BN) after the 3 × 3 convolution. Similarly, μk1, ωk1, εk1, δk1 are utilized for the 1 × 1 convolution, while μkd, ωkd, εkd, δkd are designated for the identity branch.

The input feature map is denoted by Qin. The identity branch can be treated as a 1 × 1 branch with a constant weight of 1, and the 1 × 1 convolution can be equivalently considered a 3 × 3 convolution by zero-padding. Next, we show the reparameterization process using the 3 × 3 branch as an example:

As a result, the weight and bias for the 3 × 3 branch can be transformed into and . A similar derivation applies to the other two branches. The final output of RepConv during inference is represented by Equation (3).

where Q is the output of RepConv with SiLU activation function; and are the weights of 1 × 1 convolution and identity branch, respectively; and are the bias of 1 × 1 convolution and identity branch, respectively. In this case, Q denotes the output of RepConv with the SiLU activation function, and , are the weights for the 1 × 1 convolution and identity branch, respectively. and are the biases for these respective branches.

Although the RepConv structure design helps reduce the model’s parameter count and improve inference speed, a single RepConv module is insufficient to effectively capture subtle facial expression changes in drivers under complex lighting conditions, especially when fatigued. Therefore, by cascading multiple RepConv modules to form a RepBlock, the model’s depth is increased, enhancing its generalization ability and flexibility. This enables the model to extract deeper semantic information from feature maps, thus improving detection accuracy and inference speed while reducing the model’s parameter count.

Secondly, we will replace the upsampling operation in the original model with SImconv and the Transpose module. Simconv mainly contains three operations, Conv2d, batch normalization operation, and Relu activation function, and the custom Simconv replaces the original Silu layer with the Relu layer, which enhances the nonlinear representation ability of the model. By adjusting the number of channels and feature representations of the feature map, Simconv can more effectively handle the complex features caused by lighting changes, especially in low-light or high-light environments, and still accurately extract the driver’s subtle features (such as eye closures, etc.). Transpose is a learnable upsampling method, which can learn more complex feature mapping relationships than the original closest collar or interpolation upsampling methods, especially under complex lighting conditions. This learnable upsampling method avoids the image distortion or blurring problems that may be brought about by traditional methods, so that the details of the feature map can be better preserved and enhanced, and the accuracy of driver fatigue detection is further improved. By optimizing the inference speed, enhancing the nonlinear expression ability and improving the feature upsampling method, RepFPN effectively improves the driver fatigue detection ability in complex lighting environments.

3.3. Fatigue Detection Mechanism

Unlike traditional fatigue detection methods that rely on explicitly extracted facial features such as PERCLOS (Percentage of Eye Closure), eye aspect ratio (EAR), or mouth aspect ratio (MAR), our YOLO-FDCL model adopts an end-to-end deep learning approach that directly learns fatigue patterns from holistic facial images. Our model processes the entire facial region as a unified input without requiring manual feature engineering or specific facial landmark detection. The deep convolutional architecture automatically learns discriminative features that distinguish between fatigue and non-fatigue states through several key mechanisms.

The MobileNetV4 backbone extracts hierarchical features from the entire facial image, capturing both local details and global patterns that correlate with fatigue states. Rather than explicitly detecting eye closure or yawning, the model learns implicit representations of fatigue through supervised training on labeled data. These learned features may include subtle changes in facial muscle tension, head pose variations, and overall facial expressions that humans might not easily quantify. The model classification is based on the annotation standards defined in our datasets, where facial images in the YAWDD dataset are categorized into “drowsy” and “undrowsy” states based on observable driver behaviors. The labeling criteria follow established fatigue indicators but are applied at the image level rather than feature level, with no explicit facial region segmentation or feature point detection performed.

This holistic approach offers several advantages. By learning from the entire facial region, the model is less susceptible to partial occlusions or failures in specific feature detection, providing enhanced robustness. The model can capture complex fatigue patterns that might not be captured by predefined features, demonstrating superior adaptability. Additionally, this approach eliminates the computational overhead of multiple feature extractors and fusion mechanisms, resulting in improved efficiency. While traditional approaches decompose the fatigue detection task into multiple sub-problems (eye detection → eye state classification → PERCLOS calculation), our YOLO-FDCL model directly maps facial images to fatigue states, allowing the deep neural network to discover the most discriminative features automatically. This end-to-end learning paradigm is particularly advantageous under complex lighting conditions, where traditional feature extraction methods often fail due to poor visibility of specific facial landmarks.

4. Experiments and Results

This section presents comprehensive experimental validation of our YOLO-FDCL model through multiple complementary analyses. Our evaluation framework systematically demonstrates the effectiveness, robustness, and practical applicability of our approach across diverse scenarios and challenging lighting conditions.

The experimental validation proceeds through three main phases. First, we describe our datasets, including the novel Complex Lighting Driving Fatigue Dataset (CLDFD), experimental setup, and evaluation metrics (Section 4.1, Section 4.2, Section 4.3 and Section 4.4). Second, we conduct comparative analysis against state-of-the-art detection methods and systematic ablation studies to validate individual component contributions (Section 4.5 and Section 4.6). Finally, we perform specialized validation under complex lighting environments and provide visual analysis demonstrating model attention mechanisms (Section 4.7 and Section 4.8).

This multi-faceted approach ensures thorough validation of our claims regarding improved fatigue detection accuracy and robustness under challenging lighting conditions encountered in real-world driving scenarios.

4.1. Datasets

To comprehensively evaluate our YOLO-FDCL model’s performance on driver fatigue detection, we utilize multiple datasets that provide diverse and challenging scenarios. The YAWDD (yawning detection dataset) [41] is the main dataset for us to verify the performance of fatigue detection, and some images of the dataset are shown in Figure 5 below. This dataset comprises videos of drivers’ faces captured under various lighting conditions and is organized into two distinct sets based on camera placement. The first set contains 322 videos recorded with cameras mounted under the front and rearview mirrors, featuring 3–4 videos per participant, while the second set consists of 29 videos captured from dashboard-mounted cameras. The dataset encompasses diverse participant demographics, including both male and female drivers of different ethnicities, with varying eyewear conditions (no glasses, regular eyeglasses, and sunglasses). All videos are in AVI format at 640 × 480 resolution with 30 fps, and each video captures three distinct states: (1) normal driving without talking, (2) talking or singing, and (3) yawning. From this dataset, we extracted a total of 10,611 images, which were divided into training and validation sets at an 8:2 ratio.

Figure 5.

Sample frames from the YAWDD dataset.

The UTA-RLDD (University of Texas at Arlington Real-Life Drowsiness Dataset) [42] provides complementary data with its unique naturalistic approach. This dataset contains approximately 30 h of RGB videos from 60 healthy participants (51 men and 9 women) representing diverse ethnic backgrounds: 10 Caucasian, 5 non-white Hispanic, 30 Indo-Aryan and Dravidian, 8 Middle Eastern, and 7 East Asian individuals, aged 20–59 years. Unlike controlled laboratory recordings, participants self-recorded videos using their personal cell phones or web cameras in their chosen indoor environments, resulting in authentic variations in backgrounds, camera angles, and recording quality. Each participant provided three 10 min videos corresponding to different alertness levels (alert, low vigilant, and drowsy) based on the Karolinska Sleepiness Scale, totaling 180 videos with frame rates below 30 fps.

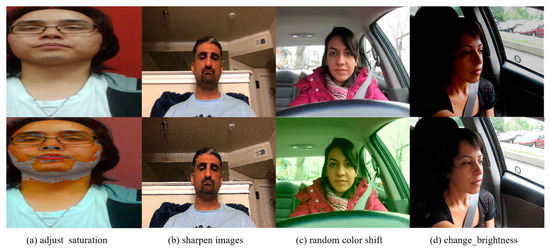

To specifically address the challenge of fatigue detection under complex lighting conditions and validate our model’s robustness, we created the Complex Lighting Driving Fatigue Dataset (CLDFD). This custom dataset was constructed through careful integration and augmentation of samples from both YAWDD and UTA-RLDD datasets. Our dataset construction process involved three key steps. First, we strategically selected 8849 facial images from both source datasets, prioritizing samples that represent diverse lighting conditions, participant characteristics, and fatigue states. Second, we applied comprehensive image enhancement techniques, including image sharpening, saturation adjustment, brightness variation, and color shifting, to simulate the challenging lighting conditions encountered in real-world driving scenarios such as strong backlighting, direct sunlight, shadows, and low-light environments. Through these augmentation techniques, we expanded the dataset to 44,245 images, ensuring adequate representation of various lighting challenges.

For annotation consistency, we employed the LabelImg tool to manually label each image according to two primary fatigue states: “drowsy” and “undrowsy” (alert). Our annotation protocol followed strict classification standards to ensure accurate correspondence between visual fatigue indicators and assigned labels. Multiple annotators reviewed ambiguous cases to maintain labeling quality. The final dataset was divided into training, validation, and test sets at a ratio of 8:1:1, providing sufficient data for both model training and comprehensive evaluation. Sample images from CLDFD, shown in Figure 6, demonstrate the variety of lighting conditions and fatigue states represented in our dataset.

Figure 6.

Sample frames from the CLDFD.

4.2. Experimental Environment

This study was conducted in an environment featuring a 13th Gen Intel Core i9-13900H processor, 32 GB of RAM, and an NVIDIA GeForce RTX 4070 GPU with 8 GB of VRAM. The software environment consisted of Python 3.8, Windows 11 operating system, and PyTorch 2.2.2 deep learning framework. The detailed configurations are listed in Table 2.

Table 2.

Experiment environment configuration.

4.3. Training Parameters

The training strategy we employ follows a similar pattern to YOLOv8, using the stochastic gradient descent (SGD) optimizer with momentum and applying cosine annealing for learning rate adjustments. The initial learning rate is set to 0.01. We apply traditional data augmentation methods, including Mosaic, Mixup, and random flipping. The model was trained for 300 epochs on an RTX 4070, with a batch size of 16, and trained from scratch on the YAWDD dataset. For training, we set the size of each input image 640 × 640.

4.4. Evaluating Indicator

To evaluate the detection performance of the proposed improved model YOLO-FDCL, this paper uses the mean average precision (mAP@0.5) at an IoU threshold of 0.5, and the mean average precision (mAP@0.5:0.95) over the IoU range of 0.5 to 0.95 as metrics for model detection accuracy. The number of parameters and GFLOPs are used as indicators of model complexity. These metrics effectively reflect the performance of the model in terms of both accuracy and complexity in the driver fatigue detection task.

Precision (P) refers to the fraction of true positive samples among those predicted as positive by the model, and is mathematically defined as follows:

where True Positives (TPs) represent the number of correctly predicted positive samples, and False Positives (FPs) represent the number of incorrectly predicted positive samples.

The Mean Average Precision (mAP) is the average of the precision values across different classes and various IoU (Intersection over Union) thresholds. First, for each class and predicted box, whether it is a correct detection is determined based on its IoU with the ground truth box. Then, the area under the Precision–Recall curve was calculated for each class, and finally, these areas were averaged across all classes. The mathematical expression is as follows:

where Nc is the total number of classes, and APi is the average precision for the i-th class.

4.5. Comparisons

To evaluate the performance of the YOLO-FDCL model, we compared it with multiple mainstream models, including the YOLOv8 series, YOLOv10, Faster RCNN, Dynamic RCNN [43], RT-DETR [44], and Deformable-DETR [45]. Training was performed on the YAWDD dataset while maintaining consistency of individual training parameters and hyperparameters, and the training results are shown in Table 3.

Table 3.

Comparison of advanced detection algorithms on the YAWDD Test.

From the results in Table 3, it can be seen that YOLO-FDCL demonstrates excellent performance on key metrics, including precision, recall, mAP@0.5, and mAP@0.5:0.95. Specifically, YOLO-FDCL achieves a precision of 98.8%, a recall of 97.5%, a mAP@0.5 of 98.8%, and a mAP@0.5:0.95 of 94.2%. These values are all superior to the comparison models. For example, compared to the classic two-stage detection models Faster R-CNN and Dynamic R-CNN, YOLO-FDCL not only outperforms them in terms of accuracy and recall, but also features a more compact model with significantly reduced computational overhead and notably lower FLOPs.

Compared to traditional lightweight models such as YOLOv5-S and YOLOv10-S, YOLO-FDCL not only outperforms them in precision and recall, but also improves mAP@0.5 by 0.6% for both models, and achieves improvements of 2.7% and 2.8%, respectively, on the more stringent mAP@0.5:0.95 metric, while maintaining similar computational overhead.

Within the YOLOv8 series, YOLO-FDCL demonstrates comprehensive performance advantages. Compared to the baseline model YOLOv8-S, YOLO-FDCL achieves improvements of 1.4%, 1.2%, 0.8%, and 1.9% in precision, recall, mAP@0.5, and mAP@0.5:0.95, respectively, while slightly reducing the parameter count.

Compared to other YOLOv8 variants, YOLO-FDCL also performs excellently. Compared to the lightweight YOLOv8-N, although the parameter count has increased, all performance metrics show significant improvements. Compared to the medium-scale YOLOv8-M and large-scale YOLOv8-L, YOLO-FDCL not only surpasses them in detection accuracy, but also significantly reduces computational complexity—compared to YOLOv8-M, the parameter count is reduced by half and FLOPs are reduced by a factor of 2, and compared to YOLOv8-L, resource consumption is even more dramatically reduced.

It is worth noting that although the largest YOLOv8-X model has slightly higher recall than YOLO-FDCL (97.9% vs. 97.5%), this minor difference is a reasonable design trade-off. Our model design philosophy is to maintain high detection accuracy while significantly reducing computational complexity and model size to meet the practical requirements of resource-constrained scenarios such as in-vehicle systems. YOLO-FDCL has only 10.96 M parameters and 32.3G FLOPs, compared to YOLOv8-X’s 61.60 M parameters and 226.7G FLOPs, demonstrating significant advantages in computational efficiency. This indicates that YOLO-FDCL successfully achieves the dual goals of performance improvement and efficiency optimization.

Furthermore, compared to the Transformer-based model RT-DETR (precision 98.3%, recall 95.6%, mAP@0.5 96.5%, FLOPs 103.4G), YOLO-FDCL not only achieves 2.3% higher mAP@0.5 and 4.4% higher mAP@0.5:0.95, but also reduces computational complexity by 3.2 times. This balance between maintaining high accuracy and achieving high efficiency makes YOLO-FDCL particularly suitable for deployment on in-vehicle embedded devices.

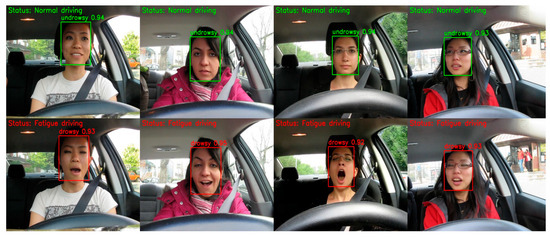

In automotive safety applications such as driver fatigue detection, ensuring driver safety is the primary goal. Experimental results demonstrate that YOLO-FDCL performs excellently in both accuracy and recall, capable of precisely identifying driver fatigue states while effectively reducing false positives and false negatives. This reliable detection performance enables timely warnings at critical moments, providing a more practical and trustworthy technical solution for automotive safety systems. Figure 7 shows partial results of driver fatigue detection on the YAWDD dataset, further confirming the accuracy of this model’s detection.

Figure 7.

YOLO-FDCL detection results.

4.6. Ablation Experiments

4.6.1. Backbone Ablation Experiment

In order to verify the performance improvement effect of MobileNetV4-backbone in the driver fatigue detection model based on YOLO-FDCL, the original YOLOv8 backbone model was replaced with other lightweight (such as Fasternet, EfficientVIT, Starnet, timm backbone), and the YOLO-FDCL model using MobileNetV4-backbone was compared under the same test conditions. The experimental results are shown in Table 3. As can be seen from the experimental results in Table 4, the YOLO-FDCL model using MobileNetV4 as the backbone network shows significant advantages in several key strict metrics. Compared to the relatively new lightweight backbones Fasternet, EfficientVIT, and Starnet, the map50 increases by 0.6%, 0.5%, and 0.4%, respectively. The more stringent experimental requirements of map50:95 increase by 1%, 1%, and 0.8%, respectively. Meanwhile, compared to the Transformer series backbone TIMM, this model improves by 0.6% and 1.1% on map50 and map95, respectively.

Table 4.

Comparison of ablation experiments on different trunks.

4.6.2. FPN Ablation Experiment

Similarly, to validate the performance improvement of RepFPN in the YOLO-FDCL model, the original YOLOv8 NECK part was replaced with other common feature pyramid structures (such as BiFPN, MAFPN, HSFPN), and the YOLO-FDCL model using RepFPN was compared under the same testing conditions. The specific experimental results are shown in Table 5. From the experimental results, we can see that models using the RepFPN structure have higher accuracy compared to those using other traditional FPN structures, with improvements of 1%, 1.2%, and 0.9% on map50:95, respectively.

Table 5.

Comparison of ablation experiments on different FPN.

4.6.3. Integral Ablation Experiment

To validate the effectiveness of the proposed YOLO-FDCL model for driver fatigue detection under complex lighting conditions, an ablation study was conducted. The original YOLOv8s network and its improved versions with each enhanced module were tested, and the experimental results are shown in Table 6.

Table 6.

Ablation study results.

When the backbone network is substituted with the Mobilenetv4 model, the precision, recall, mAP@0.5, and mAP@50:95 improve by 0.9%, 0.5%, 0.8% and 0.9%, respectively. These performance improvements stem from Mobilenetv4’s advanced architectural design. Mobilenetv4 employs optimized depthwise separable convolutions and Universal Inverted Bottleneck (UIB) modules to more effectively extract multi-scale features while reducing information loss. Additionally, its efficient attention mechanism adaptively focuses on critical facial regions, including eyes and mouth, ensuring precise localization of key fatigue detection features even under uneven illumination conditions.

The integration of RepFPN in the neck architecture yields precision, recall, mAP@0.5, and mAP@50:95 improvements of 1.2%, 0.1%, 0.7% and 1.0%, respectively, while achieving an 11% reduction in parameter count. These improvements stem from the synergistic effect of RepFPN’s three core components. RepBlock learns rich feature representations through a multi-branch structure during training and reparameterizes into equivalent 3 × 3 convolutions during inference to enhance efficiency. The SimConv module replaces traditional upsampling with its Conv2d-BN-ReLU structure enhancing nonlinear expression capabilities to better handle complex features caused by lighting variations. Transpose learnable upsampling avoids image distortion issues of traditional interpolation methods, better preserving and enhancing feature map details under complex lighting conditions, thereby significantly improving precision performance.

The synergistic integration of Mobilenetv4 backbone and RepFPN neck achieves optimal performance improvements of 1.4%, 1.2%, 0.8%, and 1.8% in precision, recall, mAP@0.5 and mAP@50:95, respectively. This synergistic effect stems from the complementarity of the two components in the feature processing pipeline: Mobilenetv4 is responsible for extracting rich multi-scale feature representations from input images, with its UIB modules and MQA mechanism ensuring discriminative ability even under adverse lighting conditions; while RepFPN optimally integrates these multi-scale features through its reparameterized feature fusion strategy. This end-to-end optimization enables the entire network to maintain high-precision detection while enhancing sensitivity to subtle fatigue signs when facing complex lighting challenges, achieving balanced improvements in both precision and recall.

4.7. Lighting Environment Experiment

To comprehensively evaluate the robustness and generalization capability of YOLO-FDCL under challenging lighting conditions encountered in real-world driving scenarios, we conducted specialized experiments on our self-constructed Complex Lighting Driving Fatigue Dataset (CLDFD). While the YAWDD dataset provides a valuable benchmark for fatigue detection research, its limited representation of diverse lighting conditions necessitates additional validation under more challenging and realistic lighting scenarios. The CLDFD, as described in Section 4.1, was specifically designed to address this limitation by incorporating systematic data augmentation techniques that simulate various lighting challenges, including strong backlighting, direct sunlight exposure, shadow variations, and low-light conditions. This dataset expansion from 8849 to 44,245 images ensures comprehensive coverage of lighting conditions that drivers encounter in real-world scenarios. The comparative experimental results on the CLDFD are presented in Table 7, where we evaluate both the baseline YOLOv8-S and our proposed YOLO-FDCL under these challenging conditions.

Table 7.

Comparison of advanced detection algorithms on the CLDFD Test.

The experimental results demonstrate that YOLO-FDCL maintains superior performance even under the more challenging lighting conditions represented in the CLDFD. Specifically, our model achieves significant improvements of 2.8% in precision (89.7% vs. 86.9%), 2.2% in recall (88.6% vs. 86.4%), 3.1% in mAP@0.5 (90.5% vs. 87.4%), and 3.6% in mAP@0.5:0.95 (84.6% vs. 81.0%) compared to the baseline YOLOv8-S model.

These improvements are particularly critical for safety-critical fatigue detection applications. The enhanced precision reduces false alarms that could lead to driver distraction or system disabling, while the improved recall ensures fewer missed fatigue events—a crucial factor since undetected drowsiness poses significant safety risks. In complex lighting scenarios where traditional systems often fail, maintaining high precision and recall becomes even more vital as drivers are already challenged by poor visibility conditions that increase accident risk. The consistent performance gains across all metrics under challenging lighting conditions validate that YOLO-FDCL possesses the robustness necessary for reliable fatigue detection in real-world driving scenarios.

4.8. Visual Analysis

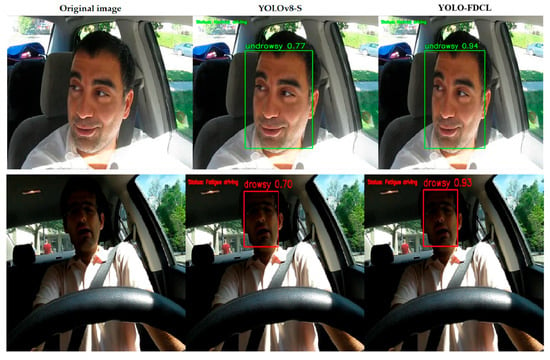

Figure 8 presents a comparison of the YOLOv8s algorithm and the proposed YOLO-FDCL algorithm for the fatigue detection task under complex lighting conditions. The complex lighting conditions and the exposure of the driver’s face to variable lighting environments during driving make it more challenging for the model to extract effective facial fatigue features. In typical detection scenarios, YOLO-FDCL surpasses the original YOLOv8s network in accuracy and exhibits enhanced robustness. In the figure, the green and red boxes correspond to anchor boxes, and the numbers within them denote the confidence scores.

Figure 8.

Comparing the performance results of the YOLOv8-S and YOLO-FDCL algorithms.

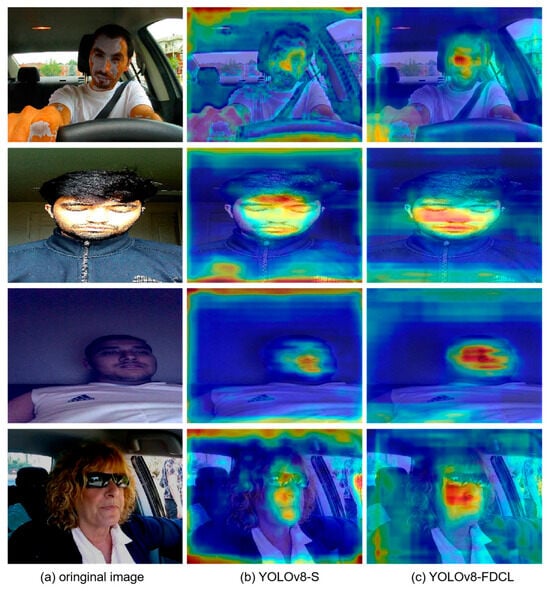

To further validate the validity of our model, we performed a visual comparative analysis of the YOLO-FDCL model and the baseline model, as shown in the heat map of Figure 9. After four operations—image sharpening, saturation adjustment, color shift, and brightness adjustment—YOLO-FDCL simulates the complex care environment of the driver in a real driving environment. It can be clearly seen that in the case of the alternation of light caused by the surrounding environment to the driver’s face, our model can focus on the driver’s face better and detect the fatigue state of the baseline, while the baseline model is disturbed by the external environment, and the detection ability is greatly reduced. In Figure 9, our model is still able to accurately identify the driver’s fatigue state when the driving environment is at low brightness. This fully demonstrates the robustness of our model.

Figure 9.

Comparison of heatmap results between YOLOv8-S and YOLO-FDCL algorithms.

5. Conclusions

To address the challenges of complex lighting conditions in driver fatigue detection, we propose the YOLO-FDCL algorithm that combines the MobileNetV4 architecture with RepFPN multi-scale feature fusion to enhance fatigue feature capture capability in complex scenes. Experimental results demonstrate that YOLO-FDCL achieves significant improvements of 1.4% in precision (from 97.4% to 98.8%), 1.2% in recall (from 96.3% to 97.5%), 0.8% in mAP@0.5 (from 98.0% to 98.8%), and 1.8% in mAP@0.5:0.95 (from 92.4% to 94.2%) compared to the baseline, proving its practical value in complex driving scenarios. This work addresses the critical lighting robustness challenge that has limited ADAS fatigue detection effectiveness in real-world deployment, enabling automotive manufacturers to deploy reliable fatigue monitoring systems with reduced false alarms and improved detection consistency across diverse driving environments. Although the model demonstrates good accuracy and robustness, limitations remain: reliance on single-frame facial features, lack of temporal modeling for dynamic fatigue evolution, and absence of physiological signal integration. Future research will focus on developing multimodal fusion algorithms that integrate temporal dynamics, physiological features, and vehicle behavior data to enhance generalization performance and practical application value.

Author Contributions

Methodology, G.L. and K.W.; software, G.L. and K.W.; validation, G.L. and K.W.; investigation, W.L. and Y.W.; writing—original draft preparation, G.L. and K.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data supporting the findings of this study are available from publicly accessible repositories. The Yawning Detection Dataset (YAWDD) is available at the IEEE Dataport (https://ieee-dataport.org/open-access/yawdd-yawningdetection-dataset, accessed on 11 October 2024). The UTA Real-Life Drowsiness Dataset (UTA-RLDD) is officially hosted by the UTA Vision-Learning-Mining Laboratory and accessible at https://sites.google.com/view/utarldd/home (accessed on 11 October 2024). The Complex Lighting Driving Fatigue Dataset (CLDFD) constructed in this study through systematic augmentation of the aforementioned datasets is available upon reasonable request to the corresponding author. These datasets provide the foundation for the comprehensive analysis and validation of the proposed YOLO-FDCL model under diverse lighting conditions. Further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Muhammad, K.; Ullah, A.; Lloret, J.; Del Ser, J.; De Albuquerque, V.H.C. Deep learning for safe autonomous driving: Current challenges and future directions. IEEE Trans. Intell. Transp. Syst. 2021, 22, 4316–4336. [Google Scholar] [CrossRef]

- NHTSA. Overviewof Motor Vehicle Crashes; NHTSA: Washington, DC, USA, 2022. Available online: https://crashstats.nhtsa.dot.gov/Api/Public/ViewPublication/813266 (accessed on 15 March 2025).

- Owens, J. Prevalence of Drowsy Driving Crashes: Estimates from a Large-Scale Naturalistic Driving Study; AAA Foundation for Traffic Safety: Washington, DC, USA, 2018. [Google Scholar]

- Fu, R.; Wang, H. Detection of driving fatigue by using noncontact emg and ecg signals measurement system. Int. J. Neural Syst. 2013, 24, 1450006. [Google Scholar] [CrossRef]

- Oviyaa, M.; Renvitha, P.; Swathika, R.; Paul, I.J.L.; Sasirekha, S. Arduino based Real Time Drowsiness and Fatigue Detection for Bikers using Helmet. In Proceedings of the 2020 2nd International Conference on Innovative Mechanisms for Industry Applications (ICIMIA), Washington, DC, USA, 5–7 March 2020; pp. 573–577. [Google Scholar]

- Fan, C.; Peng, Y.; Peng, S.; Zhang, H.; Wu, Y.; Kwong, S. Detection of Train Driver Fatigue and Distraction Based on Forehead EEG: A Time-Series Ensemble Learning Method. IEEE Trans. Intell. Transp. Syst. 2022, 23, 13559–13569. [Google Scholar] [CrossRef]

- Wu, E.Q.; Xiong, P.; Tang, Z.R.; Li, G.J.; Song, A.; Zhu, L.M. Detecting Dynamic Behavior of Brain Fatigue Through 3-D-CNN-LSTM. IEEE Trans. Syst. Man Cybern. Syst. 2022, 52, 90–100. [Google Scholar] [CrossRef]

- Sandberg, D.; Wahde, M. Particle swarm optimization of feedforward neural networks for the detection of drowsy driving. In Proceedings of the 2008 IEEE International Joint Conference on Neural Networks (IEEE World Congress on Computational Intelligence), Hong Kong, China, 1–8 June 2008; pp. 788–793. [Google Scholar]

- Wang, M.S.; Jeong, N.T.; Kim, K.S.; Choi, S.B.; Yang, S.M.; You, S.H.; Lee, J.H.; Suh, M.W. Drowsy behavior detection based on driving information. Int. J. Automot. Technol. 2016, 17, 165–173. [Google Scholar] [CrossRef]

- Zhang, F.; Dai, F. Research and Application of Fatigue Driving Monitoring and Emergency Treatment. In Proceedings of the 2017 International Conference on Computer Technology, Electronics and Communication (ICCTEC), Dalian, China, 19–21 December 2017; pp. 1072–1075. [Google Scholar]

- Wang, K.; Ma, Y.; Huang, J.; Zhang, C. Driving Performance of Heavy-Duty Truck Drivers under Different Fatigue Levels at Signalized Intersections. In Proceedings of the 19th COTA International Conference of Transportation Professionals (CICTP 2019), Nanjing, China, 6–8 July 2019. [Google Scholar]

- Zhang, W.; Murphey, Y.L.; Wang, T.; Xu, Q. Driver Yawning Detection Based on Deep Convolutional Neural Learning and Robust Nose Tracking; IEEE: New York City, NY, USA, 2015. [Google Scholar]

- Hsu, G.; Kang, J.H.; Huang, W.F. Deep hierarchical network with line segment learning for quantitative analysis of facial palsy. IEEE Access 2018, 7, 4833–4842. [Google Scholar] [CrossRef]

- Zhu, Y.; Xie, Z.; Yu, W. Fatigue Driving Recognition Technology Based on Facial Feature Points in Low-Light Environments. J. Automot. Saf. Energy 2022, 13, 282–289. [Google Scholar]

- Li, X.; Li, X.; Shen, Z.; Qian, G. Driver fatigue detection based on improved YOLOv7. J. Real Time Image Process. 2024, 21, 75. [Google Scholar] [CrossRef]

- Liu, S.; Wang, Y.; Yu, Q.; Zhan, J.; Liu, H.; Liu, J. A Driver Fatigue Detection Algorithm Based on Dynamic Tracking of Small Facial Targets Using YOLOv7. IEICE Trans. Inf. Syst. 2023, 106, 1881–1890. [Google Scholar] [CrossRef]

- Abe, T. PERCLOS-based technologies for detecting drowsiness: Current evidence and future directions. Sleep Adv. 2023, 4, zpad006. [Google Scholar] [CrossRef] [PubMed]

- Chirra, V.; Reddy, U.S.; KishoreKolli, V. Deep cnn: A machine learning approach for driver drowsiness detection based on eye state. Rev. d’Intell. Artif. 2019, 33, 461–466. [Google Scholar] [CrossRef]

- Alioua, N.; Amine, A.; Rziza, M. Driver’s Fatigue Detection Based on Yawning Extraction. Int. J. Veh. Technol. 2014, 2014, 47–75. [Google Scholar] [CrossRef]

- Zhang, F.; Wang, F. Exercise fatigue detection algorithm based on video image information extraction. IEEE Access 2020, 8, 199696–199709. [Google Scholar] [CrossRef]

- Zhuang, Q.; Kehua, Z.; Wang, J.; Chen, Q. Driver Fatigue Detection Method Based on Eye States With Pupil and Iris Segmentation. IEEE Access 2020, 8, 173440–173449. [Google Scholar] [CrossRef]

- Zhao, Z.; Zhou, N.; Zhang, L.; Yan, H.; Xu, Y.; Zhang, Z. Driver Fatigue Detection Based on Convolutional Neural Networks Using EM-CNN. Comput. Intell. Neurosci. 2020, 2020, 7251280. [Google Scholar] [CrossRef]

- Guo, Z.; Wang, G.; Zhou, M.; Li, G. Monitoring and Detection of Driver Fatigue from Monocular Cameras Based on Yolo v5. In Proceedings of the 2022 6th CAA International Conference on Vehicular Control and Intelligence (CVCI), Nanjing, China, 28–30 October 2022; pp. 1–6. [Google Scholar]

- Feng, Y.; Gong, Y.; Tu, H.; Liang, J.; Wang, H. A Fatigue Driving Detection Algorithm Based on Facial Motion Information Entropy. J. Adv. Transp. 2020, 2020, 8851485. [Google Scholar] [CrossRef]

- Hu, S.; Gao, Q.; Xie, K.; Wen, C.; Zhang, W.; He, J. Efficient detection of driver fatigue state based on all-weather illumination scenarios. Sci. Rep. 2024, 14, 17075. [Google Scholar] [CrossRef]

- Zeng, C.; Mu, Z.; Wang, Q. Classifying driving fatigue by using EEG signals. Comput. Intell. Neurosci. 2022, 2022, 1885677. [Google Scholar] [CrossRef]

- Sheykhivand, S.; Danishvar, S. Deep Learning for Detecting Multi-Level Driver Fatigue Using Physiological Signals: A Comprehensive Approach. Sensors 2023, 23, 8171. [Google Scholar] [CrossRef]

- Schmidt, E.A.; Schrauf, M.; Simon, M.; Fritzsche, M.; Buchner, A.; Kincses, W.E. Drivers’ misjudgement of vigilance state during prolonged monotonous daytime driving. Accid. Anal. Prev. 2009, 41, 1087–1093. [Google Scholar] [CrossRef]

- Liu, C.; Wang, Y.; Zhang, K.; Chen, Y.; Zhang, C. A Novel Fatigue Driving State Recognition and Warning Method Based on EEG and EOG Signals. J. Healthc. Eng. 2021, 2021, 7799793. [Google Scholar] [CrossRef] [PubMed]

- Du, G.; Li, T.; Li, C.; Liu, P.X.; Li, D. Vision-based fatigue driving recognition method integrating heart rate and facial features. IEEE Trans. Intell. Transp. Syst. 2020, 22, 3089–3100. [Google Scholar] [CrossRef]

- Zhang, Z.; Ning, H.; Zhou, F. A systematic survey of driving fatigue monitoring. IEEE Trans. Intell. Transp. Syst. 2022, 23, 19999–20020. [Google Scholar] [CrossRef]

- Sikander, G.; Anwar, S. Driver Fatigue Detection Systems: A Review. IEEE Trans. Intell. Transp. Syst. 2019, 20, 2339–2352. [Google Scholar] [CrossRef]

- Lin, T.Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature Pyramid Networks for Object Detection. In Proceedings of the 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 936–944. [Google Scholar]

- Liu, S.; Qi, L.; Qin, H.; Shi, J.; Jia, J. Path Aggregation Network for Instance Segmentation. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 8759–8768. [Google Scholar]

- Ghiasi, G.; Lin, T.Y.; Le, Q.V. NAS-FPN: Learning Scalable Feature Pyramid Architecture for Object Detection. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 15–20 June 2019; pp. 7029–7038. [Google Scholar]

- Liu, S.; Huang, D.; Wang, Y. Learning Spatial Fusion for Single-Shot Object Detection. arXiv 2019, arXiv:1911.09516. [Google Scholar] [CrossRef]

- Tan, M.; Pang, R.; Le, Q.V. EfficientDet: Scalable and Efficient Object Detection. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 13–19 June 2020; pp. 10778–10787. [Google Scholar]

- Guo, C.; Fan, B.; Zhang, Q.; Xiang, S.; Pan, C. AugFPN: Improving Multi-Scale Feature Learning for Object Detection. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 13–19 June 2020; pp. 10778–10787. [Google Scholar]

- Qin, D.; Leichner, C.; Delakis, M.; Fornoni, M.; Luo, S.; Yang, F.; Wang, W.; Banbury, C.; Ye, C.; Akin, B.; et al. MobileNetV4—Universal Models for the Mobile Ecosystem. In European Conference on Computer Vision; Springer Nature: Cham, Switzerland, 2024. [Google Scholar]

- Ding, X.; Zhang, X.; Ma, N.; Han, J.; Ding, G.; Sun, J. RepVGG: Making VGG-style ConvNets Great Again. In Proceedings of the 2021 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20–25 June 2021; pp. 13728–13737. [Google Scholar]

- Abtahi, S.; Omidyeganeh, M.; Shirmohammadi, S.; Hariri, B. YawDD: A yawning detection dataset. In Proceedings of the ACM SIGMM Conference on Multimedia Systems, Stellenbosch, South Africa, 19–21 March 2014. [Google Scholar]

- Ghoddoosian, R.; Galib, M.; Athitsos, V.A. Realistic Dataset and Baseline Temporal Model for Early Drowsiness Detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Long Beach, CA, USA, 16–20 June 2019. [Google Scholar]

- Zhang, H.; Chang, H.; Ma, B.; Wang, N.; Chen, X. Dynamic R-CNN: Towards High Quality Object Detection via Dynamic Training. In Proceedings of the European Conference on Computer Vision, Glasgow, UK, 23–28 August 2020; Springer International Publishing: Cham, Switzerland, 2020. [Google Scholar]

- Zhao, Y.; Lv, W.; Xu, S.; Wei, J.; Wang, G.; Dang, Q.; Liu, Y.; Chen, J. DETRs Beat YOLOs on Real-time Object Detection. In Proceedings of the 2024 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA; 2024; pp. 16965–16974. [Google Scholar]

- Zhu, X.; Su, W.; Lu, L.; Li, B.; Wang, X.; Dai, J. Deformable DETR: Deformable Transformers for End-to-End Object Detection. arXiv 2020, arXiv:2010.04159. [Google Scholar]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).