Empowering Independence for Visually Impaired Museum Visitors Through Enhanced Accessibility

Abstract

Highlights

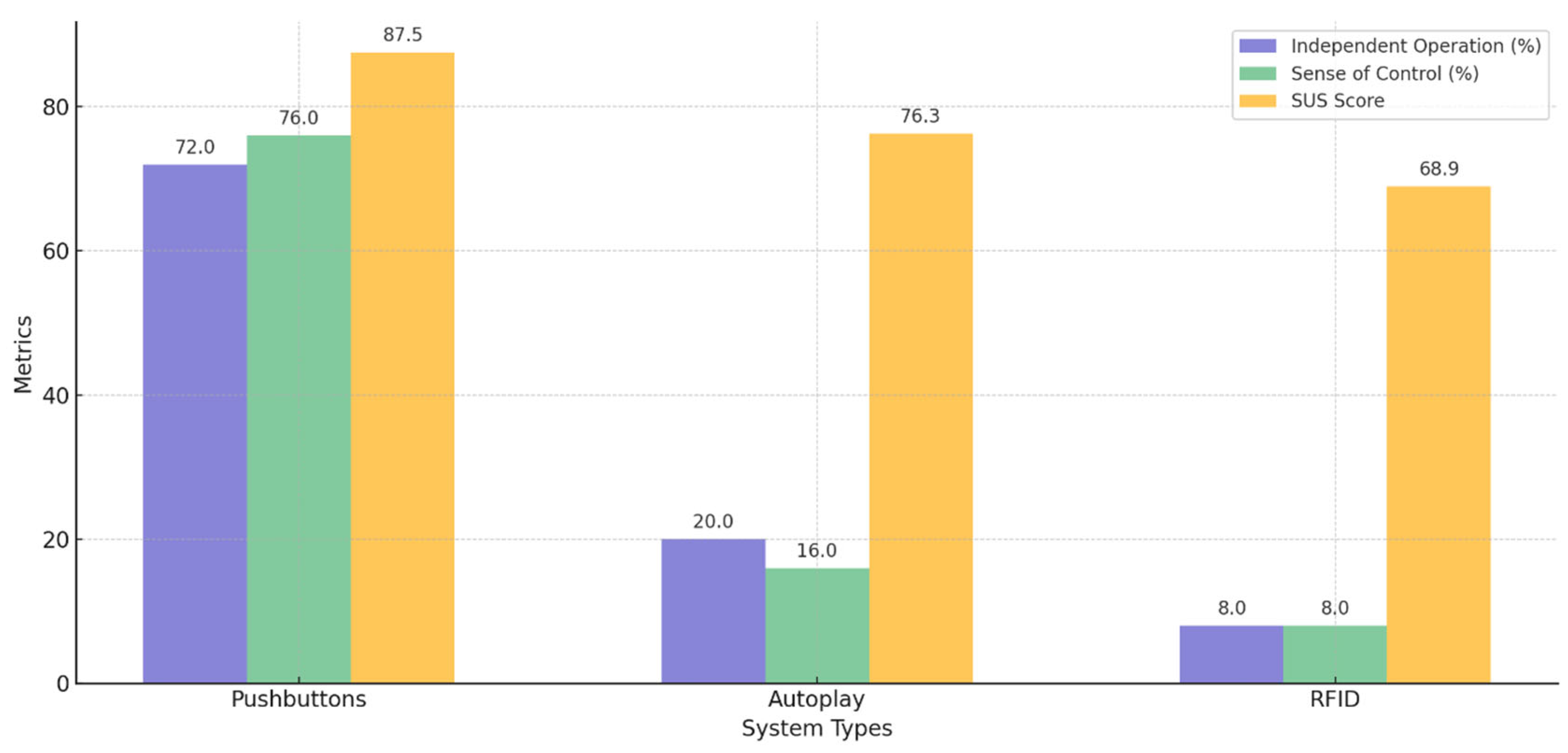

- Enhanced interactive tangible user interfaces (ITUIs) with customizable audio controls significantly improved usability, perceived independence, and user satisfaction among blind and partially sighted (BPS) museum visitors.

- The Pushbutton-based ITUI outperformed Autoplay- and RFID-based systems, achieving the highest ratings in user control (76%) and perceived independence (72%).

- Providing BPS users with control over audio playback speed and volume, along with clear tactile feedback, is essential to fostering independent exploration in museums.

- Simple, user-centered interface design may be more effective than complex technologies in supporting accessible and autonomous cultural engagement of BPS visitors.

1. Introduction

2. Background and Related Work

2.1. Museum Accessibility Technologies

2.1.1. Standalone Tactile Replicas

2.1.2. Integrated Multimodal Systems

2.1.3. Interactive, User-Controlled Systems

2.2. Interactive Tangible User Interfaces (ITUIs)

2.3. Development Considerations and Frameworks for User Independence and Control

2.4. Evaluation of Design Frameworks

2.5. Existing Challenges

3. Materials and Methods

3.1. Participants

3.2. Experimental Prototypes

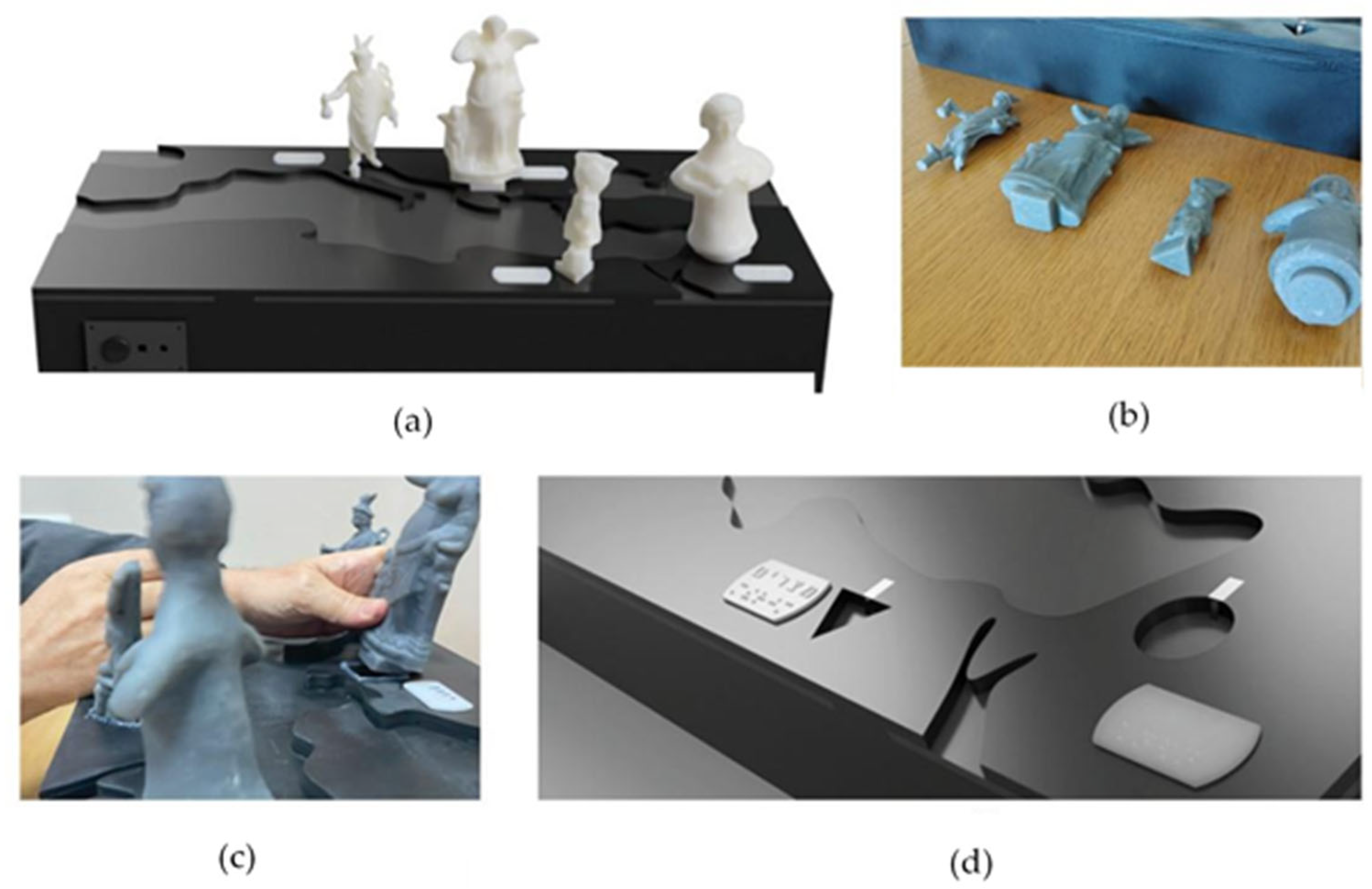

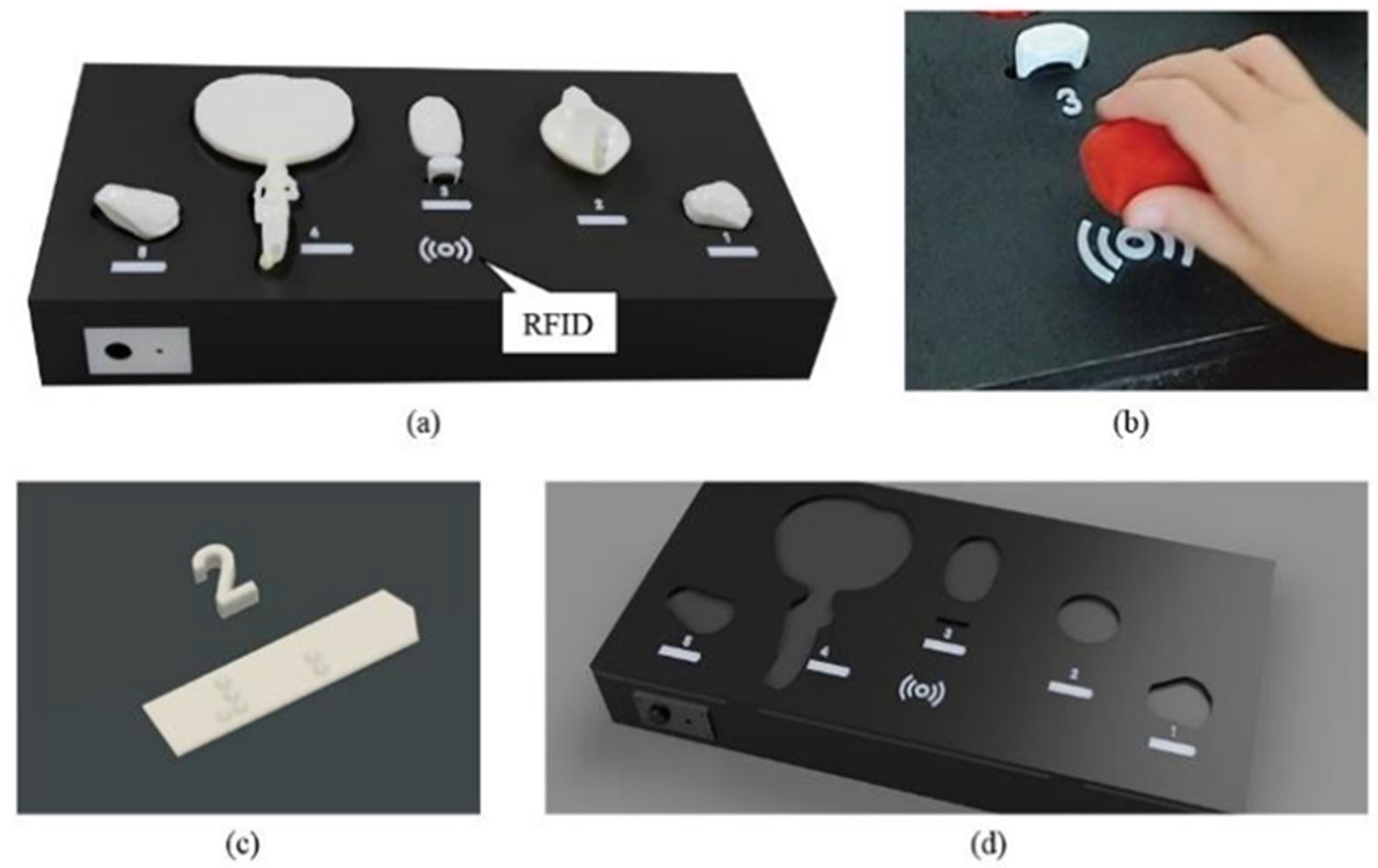

3.2.1. The First Prototype

3.2.2. The Second Prototype

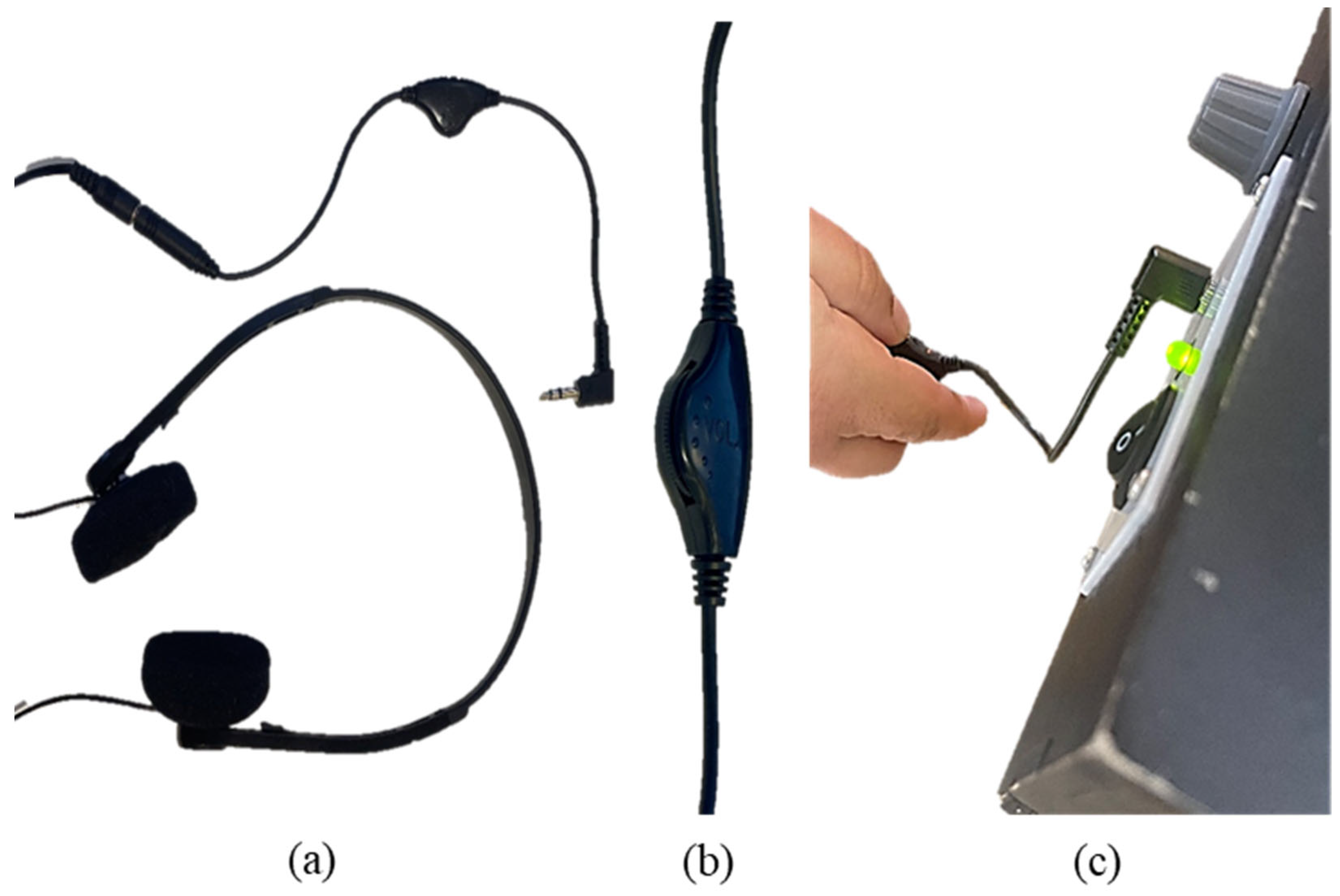

3.2.3. The Third Prototype

3.3. Enhancements

3.4. Measures

3.5. Procedure

3.6. Data Analysis

4. Results

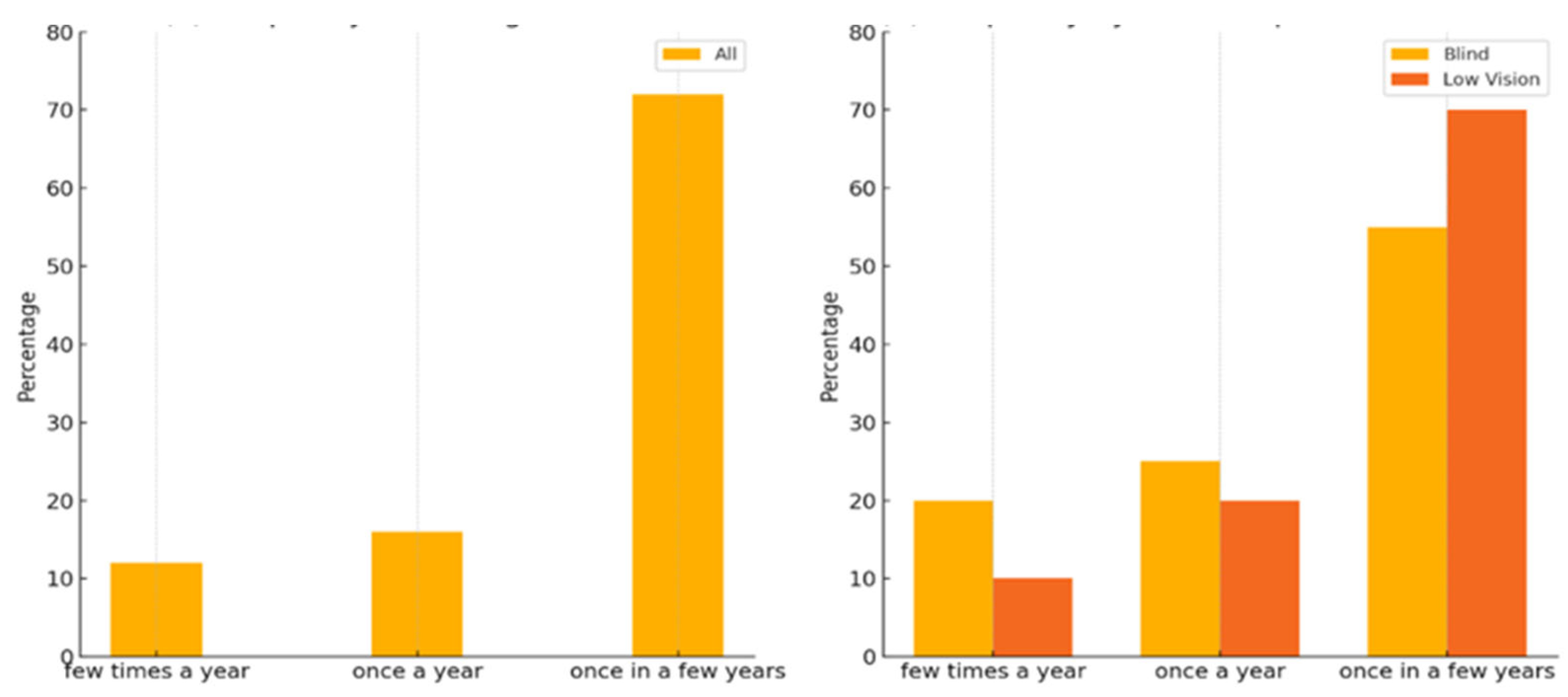

4.1. Descriptive Findings: Museum Visit Behavior

4.2. Quantitative Analysis

4.3. Qualitative Feedback Analysis

5. Discussion

5.1. Interaction Methods and User Independence

5.2. Enhanced Audio Control Features

5.3. Beyond Physical Access: Toward Cognitive and Narrative Accessibility

5.4. Limitations

6. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| BPS | Blind and Partially Sighted |
| ITUI | Interactive Tangible User Interface |
| SUS | System Usability Scale |
| RFID | Radio-Frequency Identification |
| AUSQ | Additional User Satisfaction Questions |
| TPQ | Two-Part Questionnaire |
| QUEST | Quebec User Evaluation of Satisfaction with Assistive Technology |
| IRB | Institutional Review Board |
| SPSS | Statistical Package for the Social Sciences |
| ANOVA | Analysis of Variance |
| HSD | Honestly Significant Difference (Tukey’s HSD) |
| STL | Stereolithography (3D printing file format) |
| WHO | World Health Organization |

Appendix A. Demographics

Appendix A.1. Demographics and Museum Visit Preferences

| ID | Age | Gender | Years of BPS | Visit Museums? | Frequency | Accompanied by | BPS/Not Accompany | Guided Tour | Family or Friend | Auditory Guide | No Assistant | Touch Screens | App | NFC Tech. Familiarity |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 1 | 58 | F | 58 | yes | few times a year | couple | no preference | V | V | V | V | V | Yes | |

| 2 | 44 | F | 15 | no | V | V | Yes | |||||||

| 3 | 34 | F | 34 | no | V | V | V | No | ||||||

| 4 | 27 | F | 27 | no | V | V | V | V | Yes | |||||

| 5 | 23 | F | 23 | no | V | V | V | V | Yes | |||||

| 6 | 26 | F | 26 | no | V | V | V | Yes | ||||||

| 7 | 21 | F | 21 | yes | once every few years | family or friends | no preference | V | V | V | Yes | |||

| 8 | 21 | M | 21 | no | V | Yes | ||||||||

| 9 | 21 | F | 21 | yes | once every few years | family or friends | not blind partner | V | V | V | V | Yes | ||

| 10 | 25 | F | 25 | no | V | V | V | V | V | Yes | ||||

| 11 | 19 | M | 19 | no | V | V | V | V | Yes | |||||

| 12 | 23 | M | 23 | yes | once every few years | family or friends | not blind partner | V | V | Yes | ||||

| 13 | 29 | M | 29 | no | V | V | V | V | Yes | |||||

| 14 | 49 | F | 49 | yes | once every few years | family or friends | blind partner | V | Yes | |||||

| 15 | 23 | M | 23 | yes | once every few years | family or friends | not blind partner | V | V | Yes | ||||

| 16 | 21 | M | 21 | no | V | V | No | |||||||

| 17 | 21 | M | 21 | yes | once a year | family or friends | blind partner | V | V | Yes | ||||

| 18 | 21 | F | 13 | no | V | V | V | V | Yes | |||||

| 19 | 48 | F | 30 | yes | once a year | family or friends | not blind partner | V | V | V | Yes | |||

| 20 | 50 | F | 32 | no | V | Yes | ||||||||

| 21 | 23 | F | 23 | no | V | Yes | ||||||||

| 22 | 32 | F | 26 | no | V | V | No | |||||||

| 23 | 36 | M | 36 | yes | once every few years | family or friends | V | V | V | V | V | No | ||

| 24 | 31 | M | 31 | yes | once a year | family or friends | no preference | V | V | V | V | Yes | ||

| 25 | 30 | F | 30 | yes | once every few years | family or friends | not blind partner | V | V | V | V | Yes |

Appendix A.2. Education and Profession Demographics

| Education Level * | Number of Participants | Common Professions |

|---|---|---|

| Primary or lower | 3 | Psychometric exam preparation, programming courses, unemployed |

| Secondary/tertiary (BA) | 17 | Social workers, engineers, teachers, instructors, business owners |

| Graduate and above (MA) | 5 | Special education teachers, project coordinators, graduate students |

Appendix B. Research Questionnaires

Appendix B.1. System-Specific Satisfaction and Usability Questionnaire

- I do not think I would want to use this ITUI often.

- I think the ITUI is unnecessarily complex.

- I found the ITUI easy to use.

- I think I would need help to use the kit.

- I think the different functions of the ITUI are well integrated.

- I encountered many inconsistencies throughout the use of the kit.

- I think most people can learn to use the ITUI quickly.

- I think the ITUI is cumbersome to use.

- I felt very confident in how I used the kit.

- I had to learn a lot before I was able to use the kit.

- I am satisfied with the kit’s dimensions (size, height, length, width) and exhibits.

- I am satisfied with the weight of the exhibits.

- I am satisfied with the ease with which the exhibits can be removed and returned to their place.

- I am satisfied with how safe and secure the accessory is.

- I am satisfied with the durability of the exhibits (that they will not break in your hand).

- I am satisfied with the easy use of the ITUI (starting and stopping the audio files).

- I am satisfied with how comfortable the ITUI is.

- I am satisfied with the kit’s effectiveness (how well this device fulfills your needs).

Appendix B.2. Comparative Assessment Questionnaire

- Overall, which ITUI was easier to operate?

- Overall, which ITUI was more convenient to operate?

- Overall, which ITUI was more efficient to operate?

- Overall, which ITUI was more independent to operate?

- Which ITUI gave you a sense of control over the situation?

- Which ITUI was easy to learn?

- Overall, which ITUI do you prefer?

Appendix B.3. General Preferences Questionnaire

- I generally prefer products and devices with buttons that can be pressed.

- I generally prefer methods of interaction with the ITUI using RFID technology.

- I prefer to listen to explanations in the museum with headphones.

- I prefer to listen to explanations in the museum with speakers that those visiting with me can also hear.

- The ability to change the reading speed of the audio system is important.

- The ability to control the volume is important.

- To the extent that the audio file can be started, there should be an option to stop the recording.

- I would prefer a similar application that is installed on my phone.

- The ability to touch and hold the printed exhibits enhances understanding and contributes to the experience.

- The ability to hear the audio descriptions contributed to a high level of independence in understanding the cultural and historical contexts of the exhibits in the ITUIs.

- Kits of this type can enrich and upgrade the experience of visiting the museum independently for me.

Appendix C. Qualitative Feedback Analysis

Appendix C.1. Participant (P) Comments by Theme

Appendix C.1.1. Feedback Clarity

Autoplay ITUI

- P3: Sometimes I was unsure if I had placed the object correctly to trigger the audio.

- P11: The automatic activation was nice, but I wished there was clearer feedback when the ITUI recognized the object.

- P25: It worked well when it worked, but sometimes I was uncertain if I had positioned things correctly.

RFID ITUI

- P9: The scanning feedback was inconsistent—sometimes I could not tell if I was holding the tag in the right place.

- P13: I spent too much time finding the correct scanning position.

- P20: The lack of immediate feedback made it difficult to know if I was using it correctly.

Appendix C.1.2. Control Granularity

Autoplay ITUI

- P6: I wished I had more control over the playback without removing and replacing the object.

- P14: The automatic playing was convenient but inflexible. Sometimes, I needed to hear something again.

- P21: It would be better to pause or restart without manipulating the object.

RFID ITUI

- P2: The scanning concept was interesting but needed more precise control options.

- P16: I found it difficult to scan specific sections when I wanted to review information.

- P23: The ITUI could benefit from more granular control over the audio playback.

Appendix C.1.3. Spatial Organization

Autoplay ITUI

- P7: The fixed positions of objects helped with orientation, but the lack of interactive elements made it less engaging.

- P15: I sometimes lost track of where I was in the exhibit space.

- P22: The spatial relationship between objects was unclear.

RFID ITUI

- P4: Finding the correct scanning location was challenging without clear physical markers.

- P11: The scanning zones were not intuitive to locate.

- P18: I needed more tactile cues to understand the spatial layout.

Appendix C.2. Additional Observations and Suggestions

Appendix C.2.1. ITUI Improvements

- P8: Adding audio confirmation of speed and volume changes would be helpful.

- P13: Some form of tactile marking to indicate optimal scanning positions would improve the RFID ITUI.

- P16: A way to bookmark or flag specific sections for later review would be useful.

- P20: The ability to customize button sensitivity would help users with different motor control abilities.

Appendix C.2.2. General Comments on Independence

- P2: The Pushbutton ITUI gave me the most confidence to explore independently.

- P9: Being able to control the pace and review content made me feel more autonomous.

- P17: Clear feedback was essential for independent navigation and learning.

- P23: The more control I had over the interaction, the more comfortable I felt exploring on my own.

Appendix D. SUS Scores by Item and ITUI

| Item | RFID M | RFID SD | RFID Median | Autoplay M | Autoplay SD | Autoplay Median | Pushbutton M | Pushbutton SD | Pushbutton Median |
|---|---|---|---|---|---|---|---|---|---|
| 1 | 4.48 | 0.82 | 5.00 | 4.12 | 1.17 | 5.00 | 4.52 | 0.77 | 5.00 |
| 2 | 1.44 | 0.65 | 1.00 | 1.40 | 0.87 | 1.00 | 1.16 | 0.67 | 1.00 |
| 3 | 4.72 | 0.54 | 5.00 | 4.48 | 0.87 | 5.00 | 4.84 | 0.37 | 5.00 |
| 4 | 1.76 | 0.93 | 1.00 | 1.96 | 1.21 | 1.00 | 1.12 | 0.33 | 1.00 |
| 5 | 4.84 | 0.37 | 5.00 | 4.68 | 0.90 | 5.00 | 4.92 | 0.28 | 5.00 |
| 6 | 1.48 | 0.77 | 1.00 | 1.64 | 1.22 | 1.00 | 1.24 | 0.72 | 1.00 |
| 7 | 4.72 | 0.54 | 5.00 | 4.56 | 0.71 | 5.00 | 4.88 | 0.33 | 5.00 |
| 8 | 1.12 | 0.33 | 1.00 | 1.28 | 0.79 | 1.00 | 1.08 | 0.28 | 1.00 |
| 9 | 4.60 | 0.82 | 5.00 | 4.32 | 0.90 | 5.00 | 4.76 | 0.83 | 5.00 |
| 10 | 1.28 | 0.79 | 1.00 | 1.77 | 1.04 | 1.00 | 1.12 | 0.33 | 1.00 |
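The item-level ratings above are typically combined into a single 0 to 100 SUS score per participant. The following is a minimal sketch of the standard SUS scoring rule, assuming ten ratings on a 1 to 5 scale in which odd-numbered items are positively worded and even-numbered items are negatively worded. The function name `sus_score` and the example ratings are hypothetical and are not study data; if an item was worded with the opposite polarity in the administered questionnaire, its contribution would need to be flipped accordingly.

```python
def sus_score(responses):
    """Return the 0-100 SUS score for one participant.

    `responses` holds ten ratings (1-5), in item order.
    Assumes the standard SUS layout: odd items positive, even items negative.
    """
    if len(responses) != 10:
        raise ValueError("SUS requires exactly 10 item responses")
    total = 0
    for item_number, rating in enumerate(responses, start=1):
        if not 1 <= rating <= 5:
            raise ValueError("each rating must be between 1 and 5")
        if item_number % 2 == 1:
            total += rating - 1   # positive item: higher rating is better
        else:
            total += 5 - rating   # negative item: lower rating is better
    return total * 2.5            # rescale the 0-40 sum to 0-100


# Illustrative ratings only (not taken from the study)
example = [4, 2, 5, 1, 4, 2, 5, 1, 4, 2]
print(sus_score(example))
```

Averaging the per-participant scores for each ITUI would then give a system-level mean and standard deviation of the kind usually reported for SUS.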

| Measure | Pushbutton ITUI | Autoplay ITUI | RFID ITUI | Test Statistic | p-Value |
|---|---|---|---|---|---|
| SUS score, mean (SD) | 87.5 (±8.2) | 76.3 (±9.4) | 68.9 (±11.2) | F = 12.34 | <0.001 * |
| Independent operation, n (%) | 18 (72%) | 5 (20%) | 2 (8%) | χ² = 19.28 | <0.001 * |
| Sense of control, n (%) | 19 (76%) | 4 (16%) | 2 (8%) | χ² = 21.44 | <0.001 * |
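The preference counts in the table above (for example, 18, 5, and 2 of 25 participants for independent operation) can be compared against a no-preference baseline. The sketch below shows one possible approach, a Pearson chi-square goodness-of-fit test against equal expected counts. The source does not state which exact test produced the reported statistics, so the value computed here is an illustration and need not coincide with the table. The function names `chi_square_gof` and `p_value_three_categories` are hypothetical.

```python
import math


def chi_square_gof(observed):
    """Pearson chi-square statistic against equal expected counts."""
    n = sum(observed)
    expected = n / len(observed)  # assumption: no preference among options
    return sum((o - expected) ** 2 / expected for o in observed)


def p_value_three_categories(chi2):
    """Upper-tail p-value for 2 degrees of freedom (three categories).

    With 2 degrees of freedom the chi-square survival function
    has the closed form exp(-chi2 / 2).
    """
    return math.exp(-chi2 / 2)


# Counts taken from the "Independent operation" row: Pushbutton, Autoplay, RFID
counts = [18, 5, 2]
chi2 = chi_square_gof(counts)
print(round(chi2, 2), p_value_three_categories(chi2) < 0.001)
```

Under this equal-preference assumption the counts remain significant at the 0.001 level, in line with the direction of the reported result.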

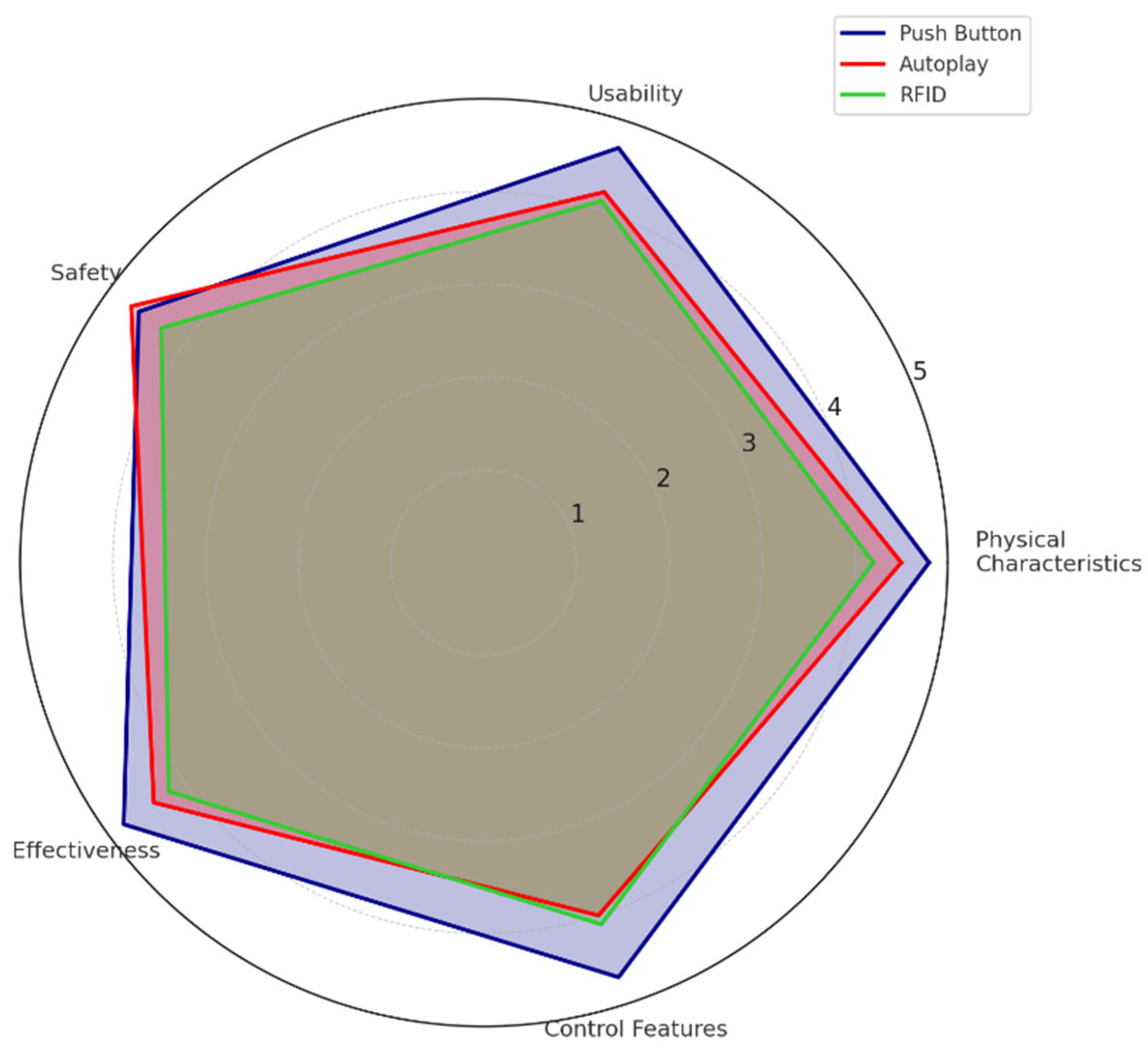

| Dimension | Pushbutton | Autoplay | RFID | F(2,72) | p-Value |
|---|---|---|---|---|---|
| Physical characteristics | 4.8 (±0.2) | 4.5 (±0.3) | 4.2 (±0.4) | 9.76 | <0.001 * |
| Usability | 4.7 (±0.3) | 4.2 (±0.4) | 4.1 (±0.5) | 11.23 | <0.001 * |
| Safety | 4.6 (±0.3) | 4.7 (±0.2) | 4.3 (±0.4) | 5.87 | <0.004 * |
| Effectiveness | 4.8 (±0.2) | 4.4 (±0.3) | 4.2 (±0.4) | 10.45 | <0.001 * |
| Control features | 4.7 (±0.3) | 4.0 (±0.5) | 4.1 (±0.4) | 12.78 | <0.001 * |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Nasser, T.Z.; Kuflik, T.; Danial-Saad, A. Empowering Independence for Visually Impaired Museum Visitors Through Enhanced Accessibility. Sensors 2025, 25, 4811. https://doi.org/10.3390/s25154811
