Research on Ground Point Cloud Segmentation Algorithm Based on Local Density Plane Fitting in Road Scene

Abstract

1. Introduction

- (1) A ground point cloud segmentation algorithm suited to road scenes is proposed. Combined with downstream algorithms, it effectively alleviates the interference caused by noise points, which in road scenes consist mainly of ground points, and so provides a sound basis for more efficient analysis and further application of point cloud data;

- (2) A 3D local density algorithm and a spatial segmentation algorithm are designed. Together they effectively alleviate the impact of uneven point density and scale well. Even in non-road scenes, they can achieve different noise reduction and clustering effects by screening and optimizing specific voxels;

- (3) A plane fitting and anomaly plane screening algorithm is designed. Unlike deep learning strategies, it iterates toward a better ground point cloud fit without pre-training on data, which greatly reduces training cost while retaining versatility and effectively improves deployment efficiency.
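To make contributions (2) and (3) more concrete, the following is a minimal sketch of the general idea only, not the authors' implementation: points are grouped into cubic voxels, sparse voxels are skipped as a crude local density filter, a plane is fitted to each remaining voxel by SVD, and planes that tilt too far from horizontal are screened out as anomalous. All function names, the voxel size, and the thresholds (`min_points`, `max_tilt_deg`, `dist_thresh`) are assumptions chosen for illustration.

```python
import numpy as np


def voxelize(points, voxel_size):
    """Group point indices by cubic voxel; returns {voxel_index: index_array}."""
    keys = np.floor(points / voxel_size).astype(np.int64)
    voxels = {}
    for i, key in enumerate(map(tuple, keys)):
        voxels.setdefault(key, []).append(i)
    return {k: np.asarray(v) for k, v in voxels.items()}


def fit_plane(points):
    """Least-squares plane via SVD; returns (unit normal, centroid)."""
    centroid = points.mean(axis=0)
    # The right singular vector of the smallest singular value is the normal.
    _, _, vt = np.linalg.svd(points - centroid, full_matrices=False)
    normal = vt[-1]
    if normal[2] < 0:  # orient the normal upward for a consistent tilt test
        normal = -normal
    return normal, centroid


def segment_ground(points, voxel_size=1.0, min_points=10,
                   max_tilt_deg=15.0, dist_thresh=0.1):
    """Return a boolean mask marking points labelled as ground.

    Hypothetical parameters (not taken from the paper):
      min_points   - voxels with fewer points are treated as low density
                     (must be at least 3 for the plane fit to be defined)
      max_tilt_deg - planes tilted further from horizontal are rejected
      dist_thresh  - maximum point-to-plane distance for a ground point
    """
    ground = np.zeros(len(points), dtype=bool)
    cos_limit = np.cos(np.radians(max_tilt_deg))
    for idx in voxelize(points, voxel_size).values():
        if len(idx) < min_points:
            continue  # low local density: skip this voxel
        normal, centroid = fit_plane(points[idx])
        if normal[2] < cos_limit:
            continue  # anomalous plane: too steep to be road surface
        dist = np.abs((points[idx] - centroid) @ normal)
        ground[idx[dist < dist_thresh]] = True
    return ground
```

Calling `segment_ground(points)` on an `(N, 3)` array with the z axis pointing up returns a mask of length `N`. The sketch uses a fixed voxel grid and a single pass; it does not attempt the iterative refinement or the density weighting described in the contributions.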

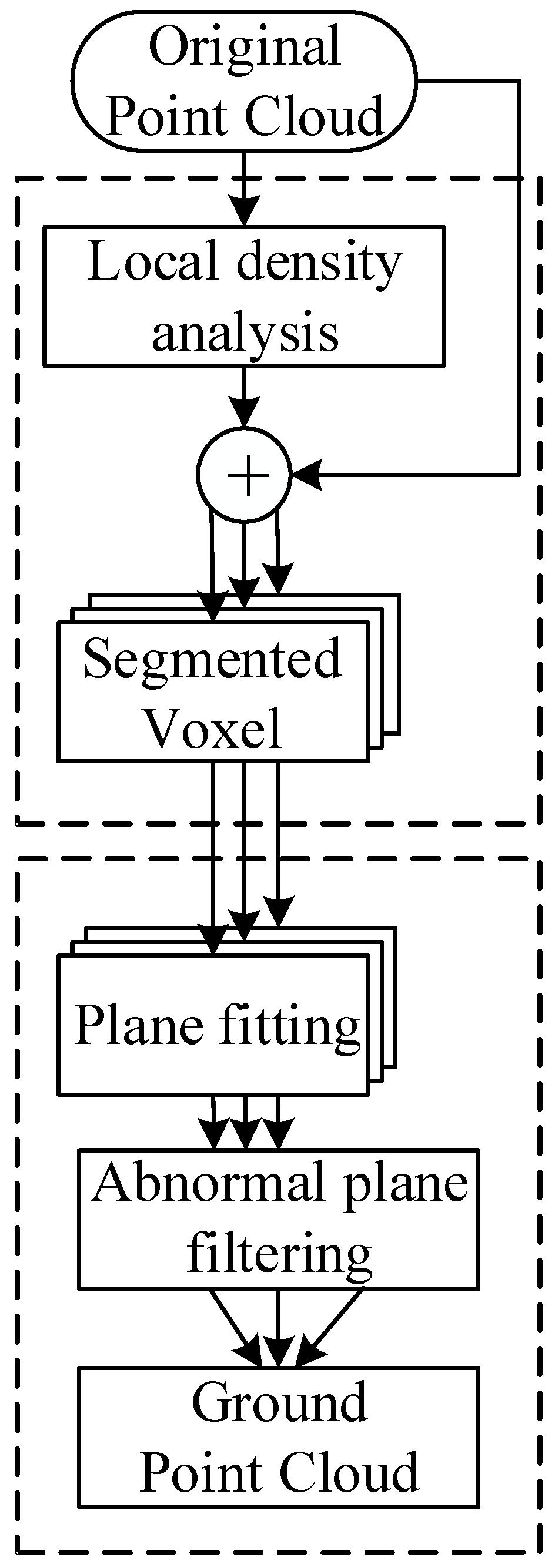

2. Methodologies

2.1. Classic DBSCAN Algorithm and Its Defects

2.2. 3D Local Density Algorithm and Spatial Segmentation Algorithm

2.3. Plane Fitting and Anomaly Plane Screening Algorithm

3. Experiment Results and Discussion

3.1. Dataset and Evaluation Metrics

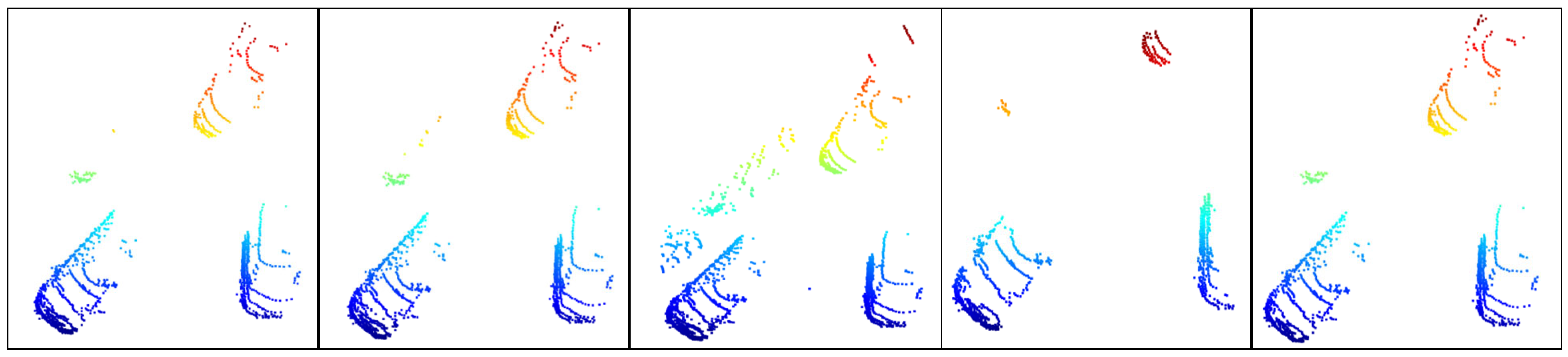

3.2. Data Processing

3.3. Performance Comparison and Experimental Results

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Wang, J.; Wang, Z.; Yu, B.; Tang, J.; Song, S.L.; Liu, C. Data Fusion in Infrastructure-Augmented Autonomous Driving System: Why, Where and How. IEEE Internet Things J. 2023, 10, 15857–15871.

- Lu, B.; Sun, Y.; Yang, Z. Grid self-attention mechanism 3D object detection method based on raw point cloud. J. Commun. 2023, 44, 72–84.

- Zhou, Y.; Peng, G.; Duan, H.; Wu, Z.; Zhu, X. A Point Cloud Segmentation Method Based on Ground Point Cloud Removal and Multi-Scale Twin Range Image. In Proceedings of the Chinese Control Conference, Tianjin, China, 24–26 July 2023; pp. 4602–4609.

- Qian, Y.; Wang, X.; Chen, Z.; Wang, C.; Yang, M. Hy-Seg: A Hybrid Method for Ground Segmentation Using Point Clouds. IEEE Trans. Intell. Veh. 2023, 8, 1597–1606.

- Ren, Y.; Meng, S.; Huang, M.; Hou, Y. A robust ground segmentation method for vehicle lidar. In Proceedings of the International Conference on Electronic Information Technology and Computer Engineering, Xiamen, China, 20–22 October 2023; pp. 338–344.

- Lim, H.; Hwang, S.; Myung, H. ERASOR: Egocentric Ratio of Pseudo Occupancy-Based Dynamic Object Removal for Static 3D Point Cloud Map Building. IEEE Robot. Autom. Lett. 2021, 6, 2272–2279.

- Hu, Q.; Yang, B.; Xie, L.; Rosa, S.; Guo, Y.; Wang, Z.; Trigoni, N.; Markham, A. RandLA-Net: Efficient Semantic Segmentation of Large-Scale Point Clouds. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 11105–11114.

- Paigwar, A.; Erkent, Ö.; Sierra-Gonzalez, D.; Laugier, C. Gnd-Net: Fast Ground Plane Estimation and Point Cloud Segmentation for Autonomous Vehicles. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, Las Vegas, NV, USA, 25–29 October 2020; pp. 2150–2156.

- Lee, S.; Lim, H.; Myung, H. Patchwork++: Fast and Robust Ground Segmentation Solving Partial Under-Segmentation Using 3D Point Cloud. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, Kyoto, Japan, 23–27 October 2022; pp. 13276–13283.

- Oh, M.; Jung, E.; Lim, H.; Song, W.; Hu, S.; Lee, E.M.; Park, J.; Kim, J.; Lee, J.; Myung, H. TRAVEL: Traversable Ground and Above-Ground Object Segmentation Using Graph Representation of 3D LiDAR Scans. IEEE Robot. Autom. Lett. 2022, 7, 7255–7262.

- Cheng, J.; He, D.; Lee, C. A simple ground segmentation method for LiDAR 3D point clouds. In Proceedings of the International Conference on Advances in Computer Technology, Information Science and Communications, Suzhou, China, 10–12 July 2020; pp. 171–175.

- Jiménez, V.; Godoy, J.; Artuñedo, A.; Villagra, J. Ground Segmentation Algorithm for Sloped Terrain and Sparse LiDAR Point Cloud. IEEE Access 2021, 9, 132914–132927.

- Lim, H.; Oh, M.; Myung, H. Patchwork: Concentric Zone-Based Region-Wise Ground Segmentation with Ground Likelihood Estimation Using a 3D LiDAR Sensor. IEEE Robot. Autom. Lett. 2021, 6, 6458–6465.

- Zhang, H.; Wei, S.; Liu, G.; Pang, F.; Yu, F. SLAM ground point extraction algorithm combining Depth Image and Semantic Segmentation Network. In Proceedings of the International Conference on Image Processing, Computer Vision and Machine Learning, Chengdu, China, 3–5 November 2023; pp. 348–352.

- Liu, Z.; Yi, Z.; Cheng, C. A Robust Ground Point Cloud Segmentation Algorithm Based on Region Growing in a Fan-shaped Grid Map. In Proceedings of the IEEE International Conference on Robotics and Biomimetics, Xishuangbanna, China, 5–9 December 2022; pp. 1359–1364.

- Zuo, Z.; Fu, Z.; Li, Z.; Wang, Y.; Ren, Y. Ground Segmentation of 3D LiDAR Point Cloud with Adaptive Threshold. In Proceedings of the Chinese Control Conference, Tianjin, China, 24–26 July 2023; pp. 8032–8037.

- Wang, F.; Fan, Z.; Li, Y.; Xu, L.; Shi, L.; Qian, K. Ground Plane Segmentation and Outliers Removal in Point Clouds of Electrical Substations. In Proceedings of the IEEE International Conference on Information Technology, Big Data and Artificial Intelligence, Chongqing, China, 26–28 May 2023; pp. 299–303.

- Mijit, T.; Firkat, E.; Yuan, X.; Liang, Y.; Zhu, J.; Hamdulla, A. LR-Seg: A Ground Segmentation Method for Low-Resolution LiDAR Point Clouds. IEEE Trans. Intell. Veh. 2024, 9, 347–356.

- Huang, L.; Guo, S.; Li, C.; Lei, Q.; Nie, J. Research on Segmentation and Clustering Algorithms of 3D Point Clouds for Mobile Robot Navigation. In Proceedings of the IEEE International Conference on Mechatronics and Automation, Tianjin, China, 4–7 August 2024; pp. 1308–1313.

- Wang, M.; Wang, F.; Liu, C.; Ai, M.; Yan, G.; Fu, Q. DBSCAN Clustering Algorithm of Millimeter Wave Radar Based on Multi Frame Joint. In Proceedings of the International Conference on Intelligent Control, Measurement and Signal Processing, Hangzhou, China, 8–10 July 2022; pp. 1049–1053.

- Zhang, X.; Su, J.; Zhang, H.; Zhang, X.; Chen, X.; Cui, Y. Traffic accident location study based on AD-DBSCAN Algorithm with Adaptive Parameters. In Proceedings of the International Conference on Computer Supported Cooperative Work in Design, Rio de Janeiro, Brazil, 24–26 May 2023; pp. 1160–1165.

- Raj, S.; Ghosh, D. Optimized DBSCAN with Improved Static clutter removal for High Resolution Automotive Radars. In Proceedings of the European Radar Conference, Locarno, Switzerland, 29 August–2 September 2022; pp. 1–4.

- Peng, C.; Jin, L.; Yuan, X.; Chai, L. Vehicle Point Cloud Segmentation Method Based on Improved Euclidean Clustering. In Proceedings of the Chinese Control and Decision Conference, Yichang, China, 20–22 May 2023; pp. 4870–4874.

| Method (Road Corner) | Precision | Recall | F1 Score |
|---|---|---|---|
| LR-Seg | 0.8723 | 0.9127 | 0.892 |
| Patchwork++ | 0.8381 | 0.9219 | 0.878 |
| RANSAC | 0.8737 | 0.8091 | 0.8403 |
| Improved Euclidean Clustering | 0.8428 | 0.863 | 0.8528 |
| Ours | 0.9015 | 0.9182 | 0.9098 |

| Method (Parking Space) | Precision | Recall | F1 Score |
|---|---|---|---|
| LR-Seg | 0.8904 | 0.9312 | 0.9101 |
| Patchwork++ | 0.8419 | 0.9423 | 0.8881 |
| RANSAC | 0.8818 | 0.7646 | 0.8191 |
| Improved Euclidean Clustering | 0.8567 | 0.8754 | 0.8659 |
| Ours | 0.9118 | 0.9225 | 0.9171 |

| Method (Long Road) | Precision | Recall | F1 Score |
|---|---|---|---|
| LR-Seg | 0.9227 | 0.9525 | 0.9365 |
| Patchwork++ | 0.874 | 0.9598 | 0.9137 |
| RANSAC | 0.8971 | 0.838 | 0.8665 |
| Improved Euclidean Clustering | 0.8945 | 0.8867 | 0.8906 |
| Ours | 0.936 | 0.9552 | 0.9455 |
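As a quick consistency check on the tables above, the F1 column can be recomputed from the precision and recall columns. The snippet below assumes the standard definition of F1 as the harmonic mean of precision and recall, which the tables do not state explicitly; the function name `f1_score` is illustrative.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (standard F1 definition)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# (precision, recall, reported F1) for the "Ours" row of each scene table
reported = {
    "Road Corner": (0.9015, 0.9182, 0.9098),
    "Parking Space": (0.9118, 0.9225, 0.9171),
    "Long Road": (0.9360, 0.9552, 0.9455),
}

for scene, (p, r, f1) in reported.items():
    print(f"{scene}: recomputed F1 = {f1_score(p, r):.4f}, reported = {f1}")
```

Under that assumption, the recomputed values for the "Ours" rows agree with the reported F1 scores to within rounding.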

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wang, T.; Fu, Y.; Zhang, Z.; Cheng, X.; Li, L.; He, Z.; Wang, H.; Gong, K. Research on Ground Point Cloud Segmentation Algorithm Based on Local Density Plane Fitting in Road Scene. Sensors 2025, 25, 4781. https://doi.org/10.3390/s25154781