Comprehensive Analysis of Neural Network Inference on Embedded Systems: Response Time, Calibration, and Model Optimisation

Abstract

1. Introduction

- The lack of systematic benchmarks for generic ANN architectures. While prior work predominantly benchmarked specific pre-trained models for vision tasks, we evaluate generic and application-independent networks on three different embedded systems. This systematic evaluation varies input/output dimensions, network depth, width, and activation functions to provide empirical insights into how these factors affect response time. These findings underscore the importance of lightweight and optimised models, which motivates our investigation into a second challenge.

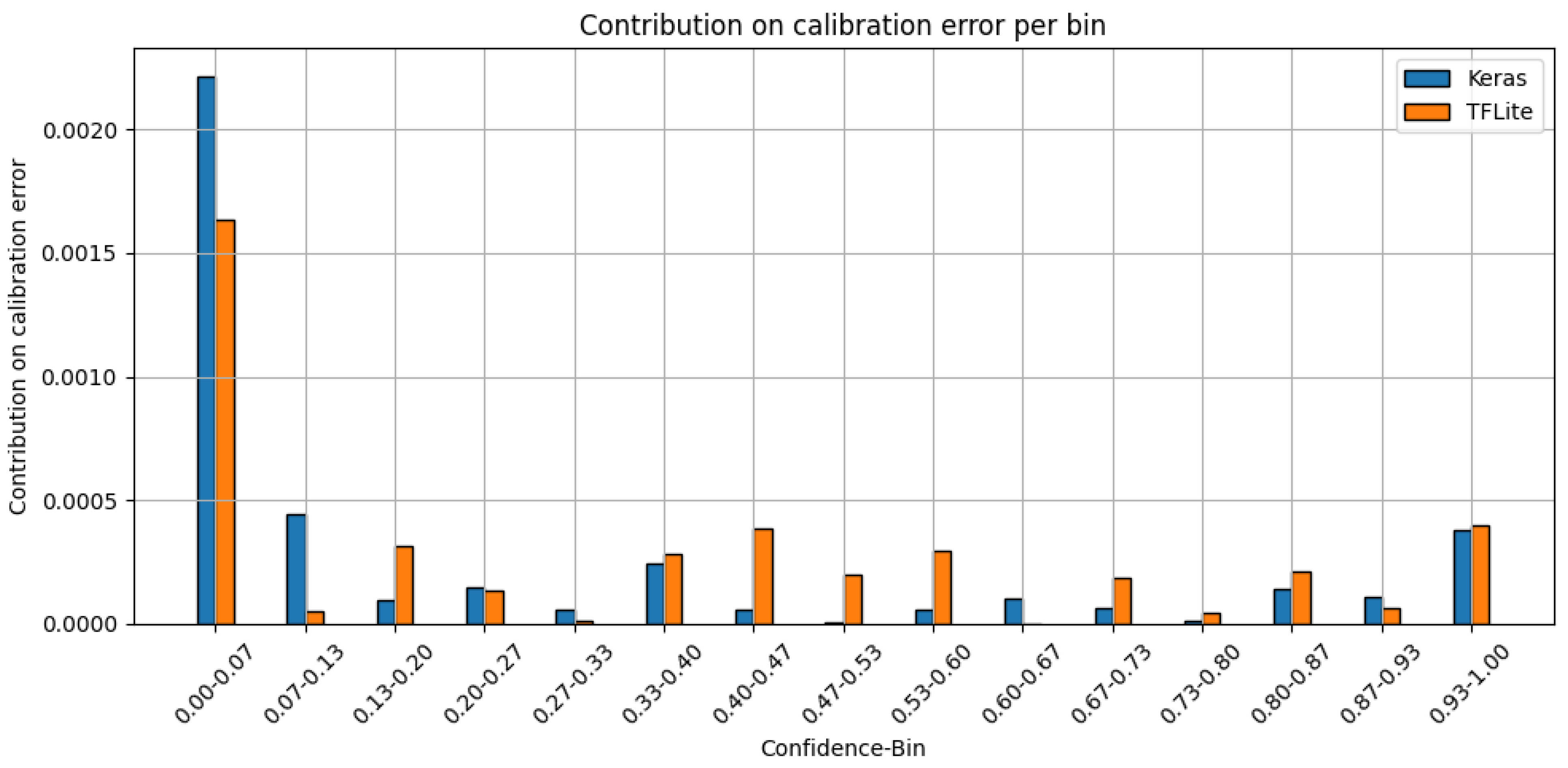

- Limited insight into the effects of model conversion on calibration. Using the ECE as a calibration metric, we investigate how the conversion of Keras models to TFLite impacts the reliability of predicted confidences. While accuracy preservation during conversion has been well studied, e.g., [5], the impact on calibration remains insufficiently addressed.

2. Net Dimensions

2.1. Review of Net Dimensions and Problem Complexity

2.2. Sizing of the Experiments’ Net Dimensions

- Input/output dimensions between 1 and 91 (10 variations);

- Neurons per layer between 2 and 192 (20 variations);

- Layers between 2 and 192 (20 variations).
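The three ranges above can be enumerated as a parameter grid. The following is a minimal sketch, assuming evenly spaced values with a step of 10; that spacing reproduces the stated ranges and variation counts, but it is an assumption and not taken from the text.

```python
from itertools import product

# Assumption: evenly spaced values with a step of 10. This matches the stated
# bounds and counts, but the actual spacing is not given in the text.
io_dims = list(range(1, 92, 10))    # 1, 11, ..., 91
neurons = list(range(2, 193, 10))   # 2, 12, ..., 192
layers = list(range(2, 193, 10))    # 2, 12, ..., 192

# Full Cartesian grid of (input/output dimension, neurons per layer, layers).
grid = list(product(io_dims, neurons, layers))

print(len(io_dims), len(neurons), len(layers))  # 10 20 20
print(len(grid))                                # 4000
```

The full grid is an upper bound on the number of configurations; the reductions described in Section 5.1 shrink it further.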

3. Experimentation Setup

3.1. Time Measurement

3.2. Dataset

3.3. Evaluation Hardware

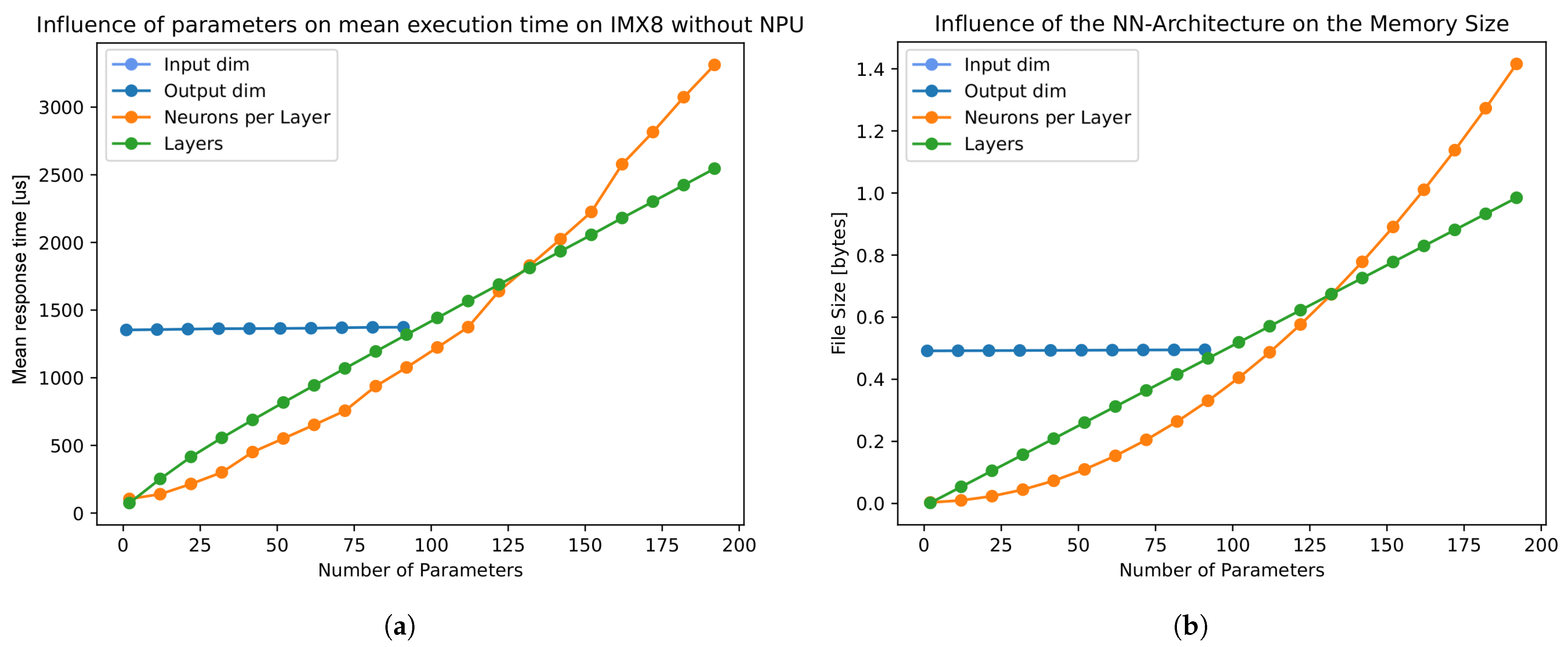

4. Analysis of the Influence of Net Dimensions and Structure

5. Conducting the Experiments

5.1. Experimentation Method

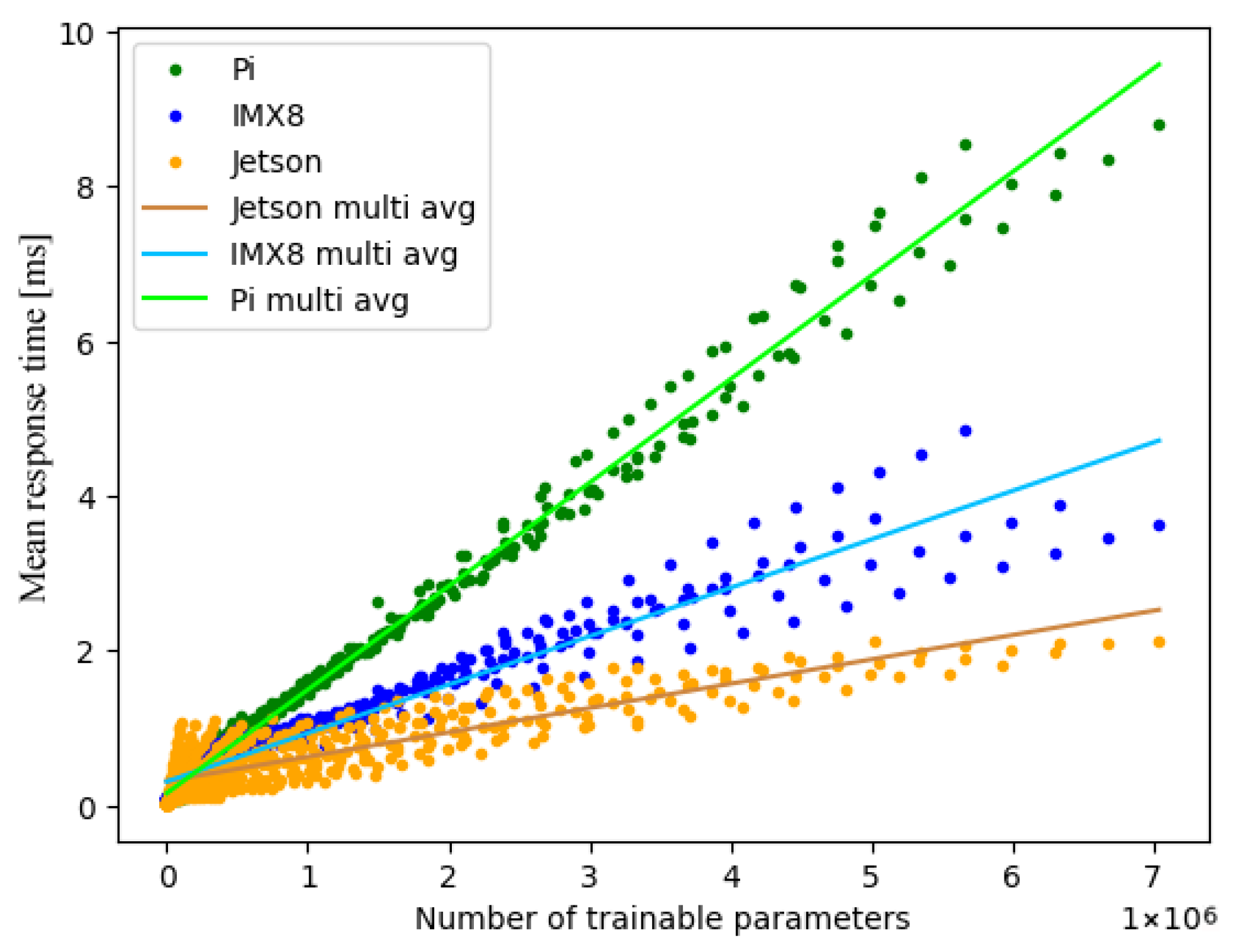

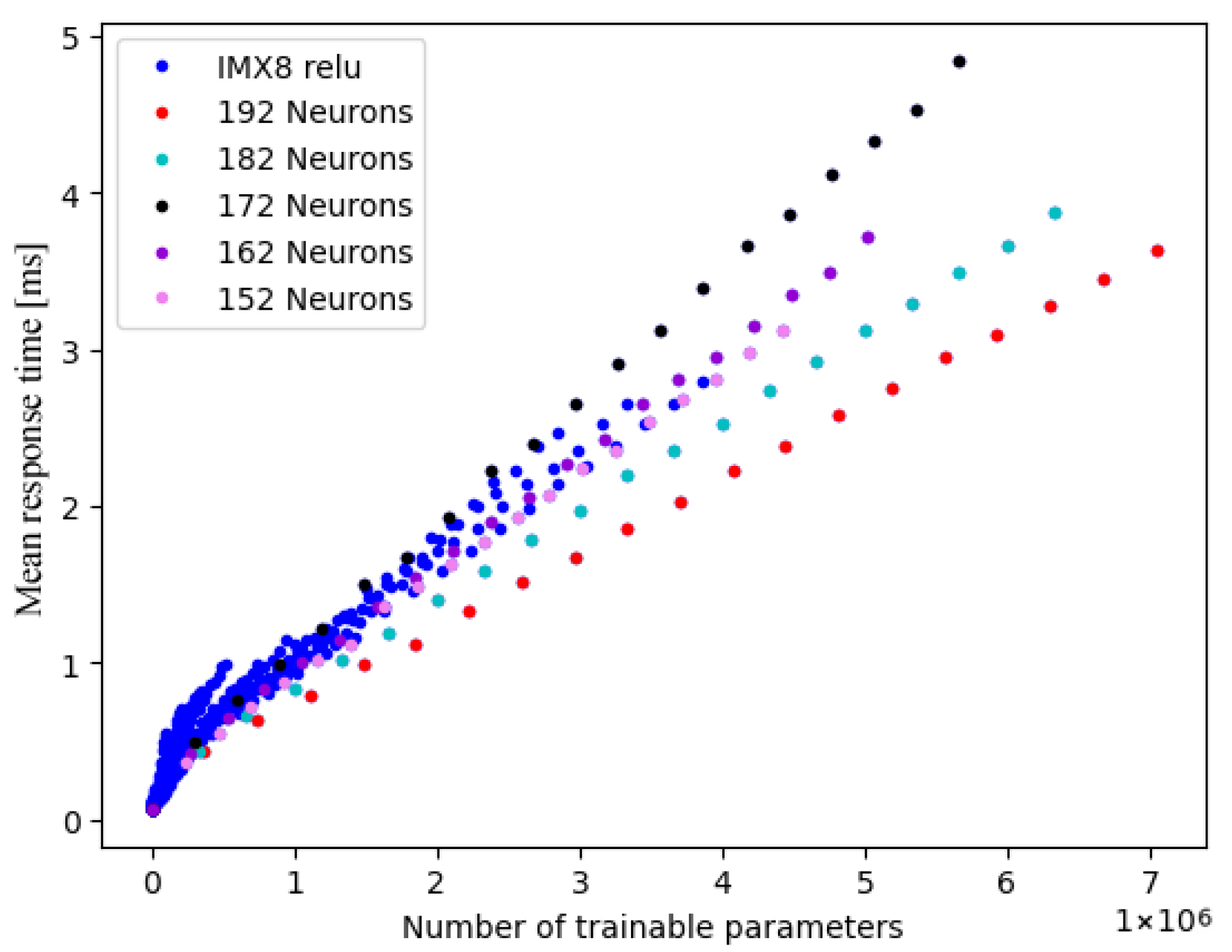

- As response times depend strongly on both the total number of trainable parameters in a network and the hardware platform, the experiments compare these two dimensions.

- To reduce the number of variations, all networks are rectangular, i.e., every hidden layer has the same number of neurons. The numbers of trainable parameters and the response times of other shapes, e.g., pyramidal networks, fall within the range spanned by rectangular nets.

- To reduce the number of experiments further, the input and output dimensions were not varied but fixed at one, since their influence on response times is marginal.

- Networks with the same total number of trainable parameters are measured only once. For example, a network consisting of twelve layers with two neurons per layer has the same number of trainable parameters (67 in total) as one consisting of two layers with twenty-two neurons per layer.

- To minimise the impact of outliers (e.g., interrupts), no processes other than those required by the operating system ran concurrently with the benchmark, and each benchmark comprised 100,000 calculations.
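The parameter equivalence mentioned above can be checked with a short calculation. This is a sketch under one assumption: the layer count includes the output layer, so a network with `layers` layers has `layers - 1` hidden layers of equal width. With input and output dimensions fixed at one, this counting reproduces the stated total of 67. The function name `trainable_params` is illustrative.

```python
def trainable_params(layers: int, neurons: int, n_in: int = 1, n_out: int = 1) -> int:
    """Weights plus biases of a fully connected rectangular network.

    Assumption: `layers` counts the hidden layers plus the output layer,
    so there are `layers - 1` hidden layers of width `neurons`.
    """
    widths = [n_in] + [neurons] * (layers - 1) + [n_out]
    # Each dense layer has fan_in * fan_out weights and fan_out biases.
    return sum(fan_in * fan_out + fan_out for fan_in, fan_out in zip(widths, widths[1:]))


deep_narrow = trainable_params(layers=12, neurons=2)
shallow_wide = trainable_params(layers=2, neurons=22)
print(deep_narrow, shallow_wide)  # 67 67

# Keep only the first configuration seen for each parameter count,
# mirroring the rule that equivalent networks are not measured anew.
to_measure = {}
for n_layers, n_neurons in [(12, 2), (2, 22), (2, 2)]:
    to_measure.setdefault(trainable_params(n_layers, n_neurons), (n_layers, n_neurons))
print(to_measure)  # {67: (12, 2), 7: (2, 2)}
```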

5.2. Empirical Results

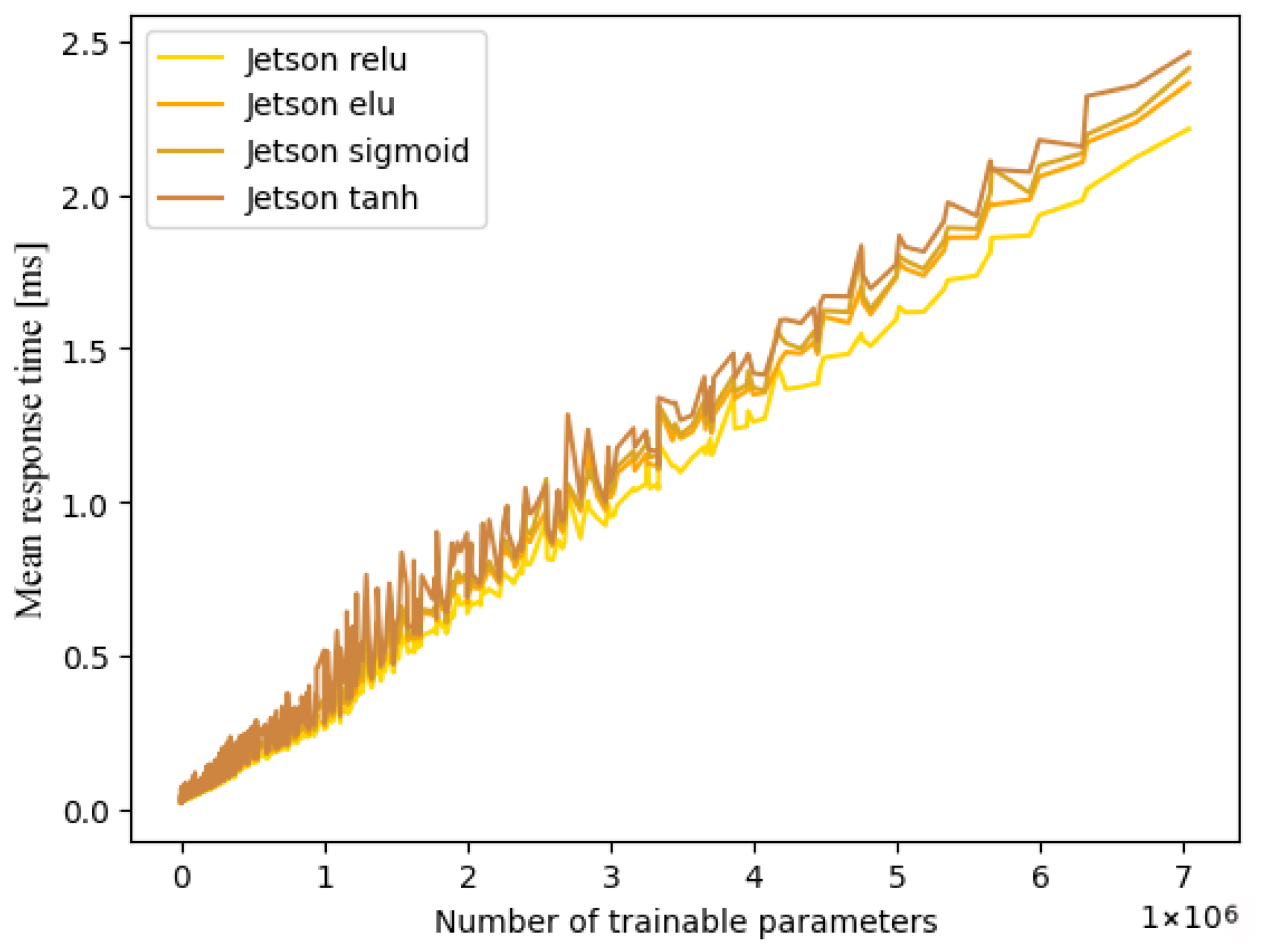

5.2.1. Single-Threading

- ReLU;

- ELU;

- Sigmoid;

- TanH.

5.2.2. Multi-Threading

5.3. Comparison Between Multi- and Single-Threading

5.4. Side Effects

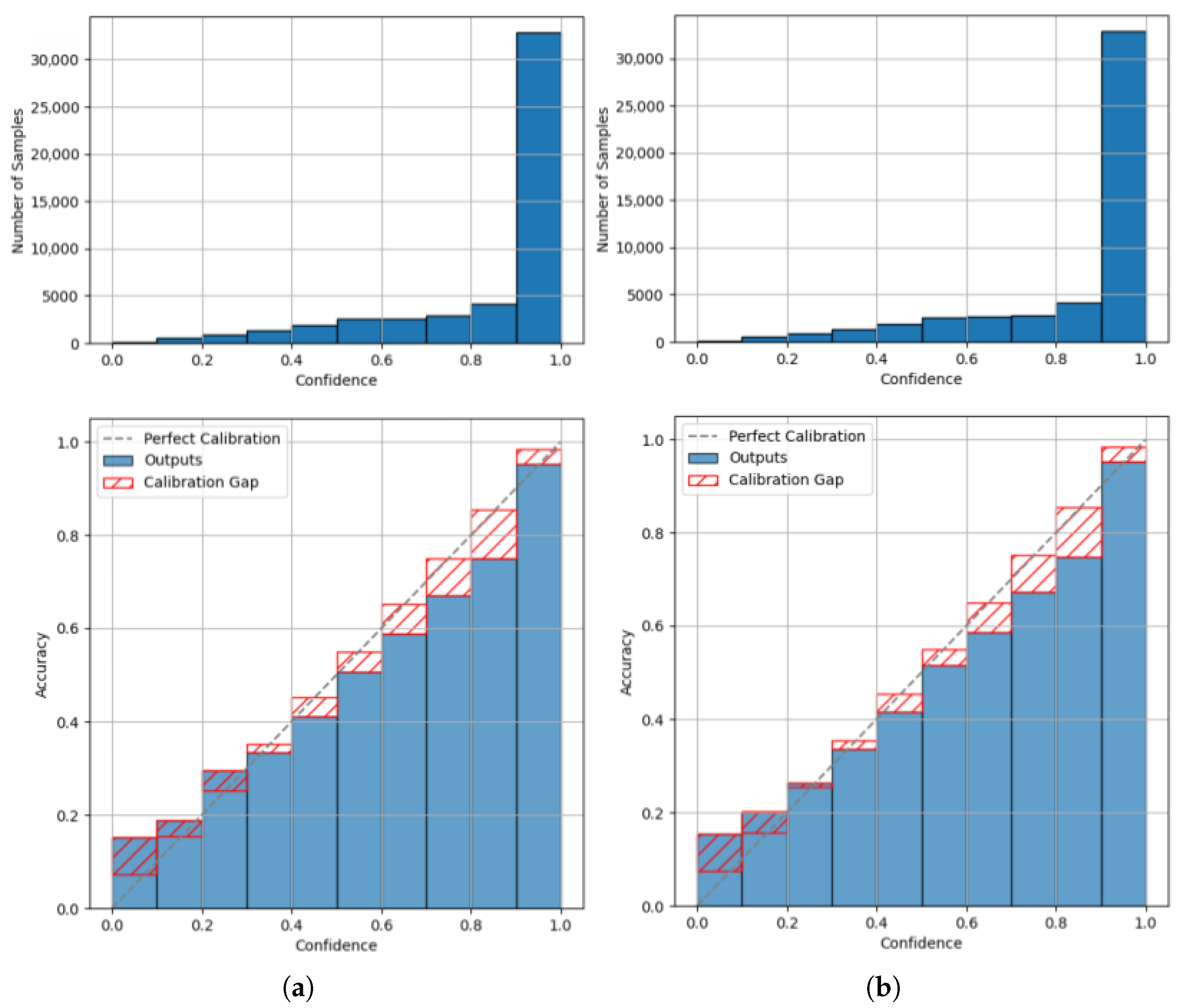

6. Impact of the Network Structure on the Model Calibration

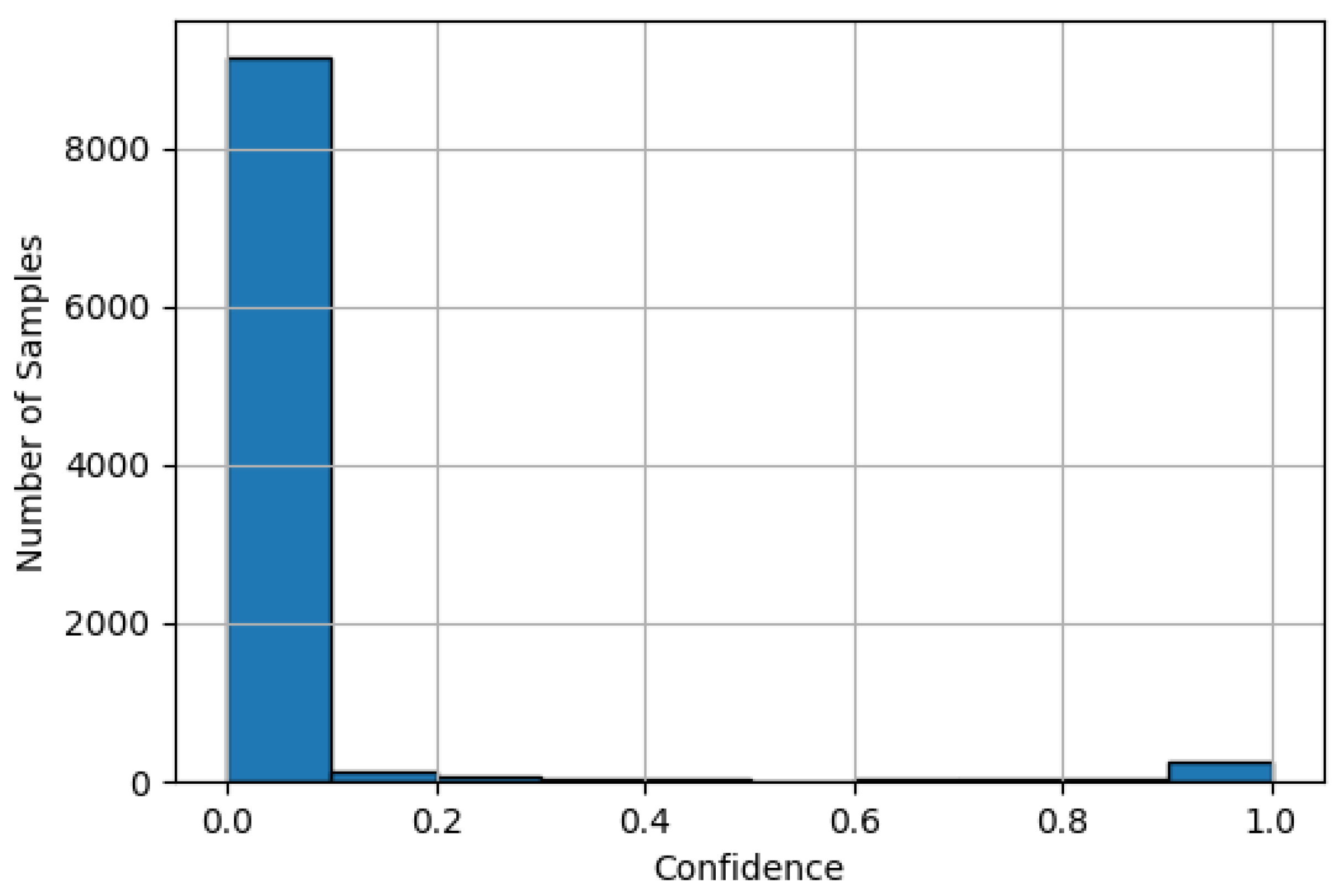

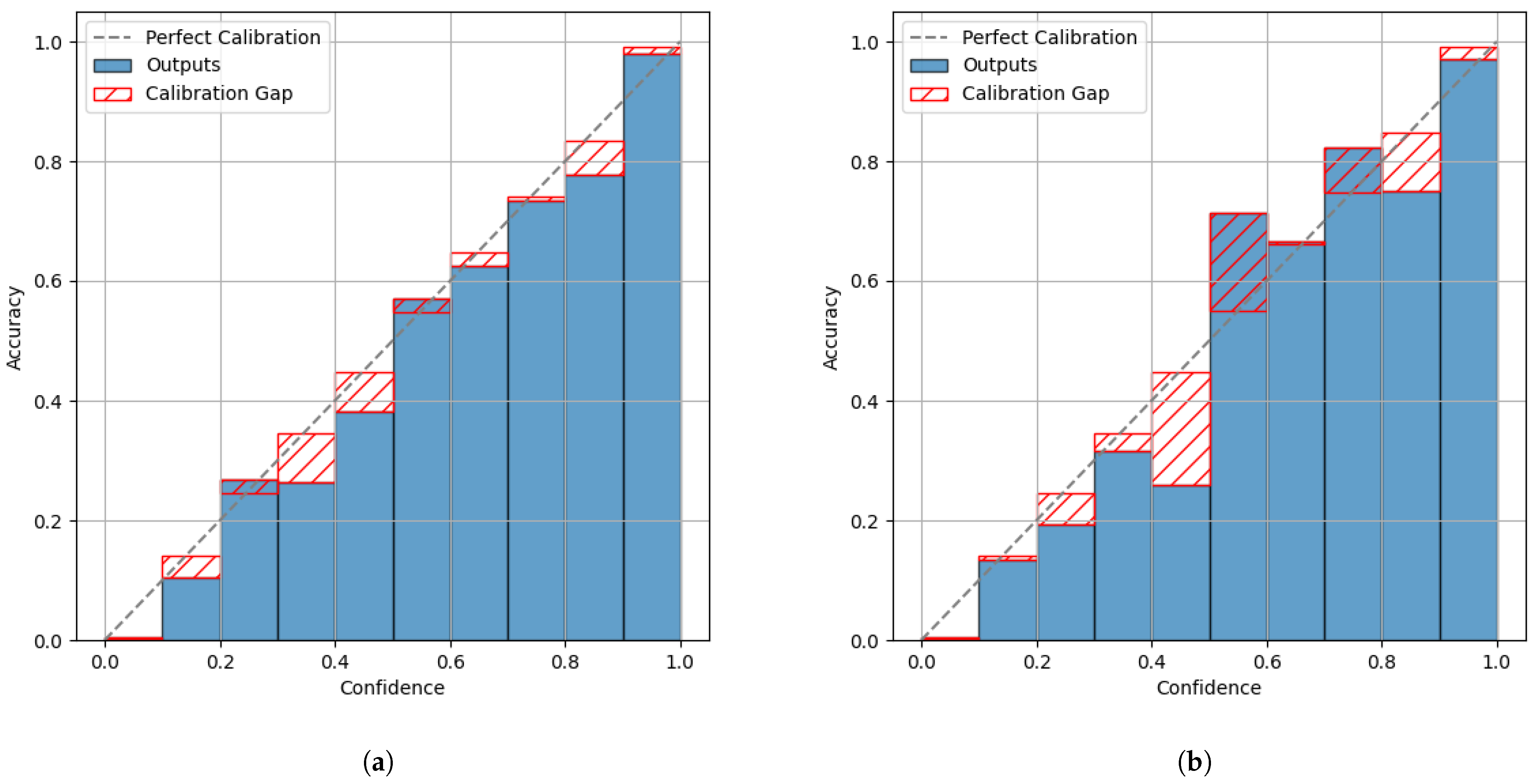

7. Impact of Conversion to TFLite on Model Calibration

7.1. Model and Dataset Selection

- Relevancy to current research;

- Availability and reproducibility;

- TFLite compatibility;

- Comparability to existing benchmarks.

7.1.1. Use Case: Image Recognition

7.1.2. Use Case: Signal Processing

7.2. Empirical Results

7.2.1. Use Case: Image Recognition

7.2.2. Use Case: Signal Processing

8. Conclusions

8.1. Lessons Learned

- Activation functions influence thread-level performance. Beyond arithmetic complexity, different activation functions affect how efficiently computations scale under multi-threaded execution. ReLU consistently shows stable performance, whereas TanH suffers from degraded parallel efficiency, which suggests a less favourable interaction with the runtime scheduling (cf. Section 5.2).

- Multi-threading behaviour is strongly platform-dependent. Contrary to expectations, multi-threading sometimes leads to significantly higher response times instead of improvements. This unexpected degradation points to inefficiencies in current partitioning and scheduling strategies of state-of-the-art libraries, highlighting an urgent need for optimisation tailored to specific hardware and model characteristics.

  - IMX8 profits from thread-level parallelism;

  - Raspberry Pi exhibits performance degradation under multi-threaded execution;

  - Jetson reacts variably depending on model configuration (Section 5.2.2 and Section 5.3).

- Thread scheduling behaviour lacks transparency. Identical models yield inconsistent results across platforms despite uniform conditions. These effects indicate a complex interaction between runtime-level scheduling and model structure that is not visible or controllable at the user level (Section 5.4).

- TFLite model conversion preserves calibration globally but alters local confidence patterns. Post-conversion evaluation is recommended, particularly for applications relying on confidence-based decisions (Section 7). This applies especially to systems where sensors act as autonomous decision triggers in real-world environments.
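The distinction between global and local calibration in the last point can be illustrated with a small ECE calculation. This is a minimal sketch, not the evaluation code used in this work: it assumes ten equal-width confidence bins with half-open intervals, and the labels and confidences are invented so that both models reach the same ECE while populating different bins. The names `keras_conf` and `tflite_conf` are illustrative.

```python
def calibration_bins(confidences, correct, n_bins=10):
    """Per-bin (count, mean confidence, accuracy) over equal-width bins."""
    stats = [[0, 0.0, 0] for _ in range(n_bins)]  # count, confidence sum, hits
    for conf, hit in zip(confidences, correct):
        idx = min(int(conf * n_bins), n_bins - 1)  # confidence 1.0 goes to the last bin
        stats[idx][0] += 1
        stats[idx][1] += conf
        stats[idx][2] += int(hit)
    return [(n, s / n, h / n) if n else (0, 0.0, 0.0) for n, s, h in stats]


def ece(confidences, correct, n_bins=10):
    """Expected calibration error: count-weighted mean of |accuracy - confidence|."""
    bins = calibration_bins(confidences, correct, n_bins)
    total = sum(n for n, _, _ in bins)
    return sum(n / total * abs(acc - conf) for n, conf, acc in bins)


def bin_gaps(confidences, correct, n_bins=10):
    """Map bin index -> |accuracy - confidence| for non-empty bins."""
    bins = calibration_bins(confidences, correct, n_bins)
    return {i: abs(acc - conf) for i, (n, conf, acc) in enumerate(bins) if n}


# Invented data: 1 marks a correct prediction, 0 an incorrect one.
labels = [1, 1, 1, 0, 1, 1, 0, 0]
keras_conf = [0.95, 0.95, 0.95, 0.95, 0.55, 0.55, 0.55, 0.55]
tflite_conf = [0.85, 0.85, 0.85, 0.85, 0.65, 0.65, 0.65, 0.65]

# Global view: both models have the same ECE.
print(round(ece(keras_conf, labels), 3), round(ece(tflite_conf, labels), 3))  # 0.125 0.125
# Local view: the calibration gaps sit in different confidence bins.
print(sorted(bin_gaps(keras_conf, labels)), sorted(bin_gaps(tflite_conf, labels)))  # [5, 9] [6, 8]
```

Comparing the per-bin gaps alongside the scalar ECE is one way to make such local shifts visible in a post-conversion evaluation.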

8.2. Outlook

- Targeted use of pruning for calibration improvement. Prior work suggests that larger networks tend to be overconfident. Based on this, we hypothesise that systematic pruning may help regularise confidence by removing such overconfident subnets. Future work could explore pruning strategies optimised for calibration error, enabling smaller and better-calibrated models for embedded deployment.

- Extension to other model types. To assess the structural generalisability of response time modelling, model types not covered in this work, such as recurrent or attention-based networks, are natural candidates for further study.

- Development of adaptive scheduling mechanisms. Our analysis shows that the effectiveness of multi-threaded execution varies with model and hardware characteristics. To address this, future frameworks could monitor runtime behaviour and dynamically adjust scheduling strategies when inefficiencies are detected, improving inference performance under changing conditions.

- Reverse-engineering runtime scheduling via algorithm pattern detection. Due to limited transparency in TFLite’s multi-threading, future work could apply automated algorithmic pattern recognition to source code and runtime data [33]. This would help to uncover scheduling structures and dependencies, revealing bottlenecks and guiding optimisations.
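As a simple precursor to the adaptive scheduling idea above, the thread count could be selected by measurement on the target device instead of being fixed in advance. The sketch below is hypothetical and not part of this work: `make_runner` is an assumed factory that returns a zero-argument callable performing one inference with the given number of threads (for TFLite, this could wrap an interpreter created with the desired `num_threads` setting). The stand-in workload at the end only demonstrates the call pattern.

```python
import statistics
import time


def measure(run_once, repetitions=1000, clock=time.perf_counter):
    """Return the response times (in clock units) of repeated run_once() calls."""
    times = []
    for _ in range(repetitions):
        start = clock()
        run_once()
        times.append(clock() - start)
    return times


def pick_thread_count(make_runner, candidates=(1, 2, 4), repetitions=1000,
                      clock=time.perf_counter):
    """Choose the candidate thread count with the lowest median response time.

    `make_runner(n)` is a hypothetical factory returning a callable that
    performs a single inference using n threads.
    """
    medians = {
        n: statistics.median(measure(make_runner(n), repetitions, clock))
        for n in candidates
    }
    return min(medians, key=medians.get), medians


# Stand-in workload (not a real inference): it ignores the thread count
# and only illustrates how the functions are called.
def make_dummy_runner(num_threads):
    return lambda: sum(range(1000))


best, medians = pick_thread_count(make_dummy_runner, candidates=(1, 2, 4), repetitions=20)
print(best in (1, 2, 4))  # True
```

The median is used here because it is less sensitive to isolated outliers such as interrupts; a worst-case-oriented application might compare maxima instead.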

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| ANN | Artificial Neural Network |
| CPU | Central Processing Unit |
| CSV | Comma-Separated Values |
| ECE | Expected Calibration Error |
| ELU | Exponential Linear Unit |
| KPI | Key Performance Indicator |
| LLM | Large Language Model |
| MLP | Multilayer Perceptron |
| MFCC | Mel-Frequency Cepstral Coefficients |
| NLL | Negative Log-Likelihood |
| NPU | Neural Processing Unit |
| ReLU | Rectified Linear Unit |
| ResNet50 | Residual Neural Network with 50 Layers |
| TanH | Hyperbolic Tangent |
| TFLite | TensorFlow Lite |
| VGG16 | 16-Layer CNN from the Visual Geometry Group (Oxford) |
| WCET | Worst-Case Execution Time |

References

1. Khandelwal, R. A Basic Introduction to TensorFlow Lite—Towards Data Science. 2021. Available online: http://archive.today/IPnrr (accessed on 15 December 2021).

2. Baller, S.P.; Jindal, A.; Chadha, M.; Gerndt, M. DeepEdgeBench: Benchmarking deep neural networks on edge devices. In Proceedings of the 2021 IEEE International Conference on Cloud Engineering (IC2E), San Francisco, CA, USA, 4–8 October 2021; pp. 20–30.

3. Luo, C.; He, X.; Zhan, J.; Wang, L.; Gao, W.; Dai, J. Comparison and benchmarking of ai models and frameworks on mobile devices. arXiv 2020, arXiv:2005.05085.

4. Elhanashi, A.; Dini, P.; Saponara, S.; Zheng, Q. Integration of Deep Learning into the IoT: A Survey of Techniques and Challenges for Real-World Applications. Electronics 2023, 12, 4925.

5. Rashidi, M. Application of TensorFlow Lite on Embedded Devices: A Hands-on Practice of TensorFlow Model Conversion to TensorFlow Lite Model and Its Deployment on Smartphone to Compare Model’s Performance. 2022. Available online: https://www.diva-portal.org/smash/record.jsf?pid=diva2%3A1698946 (accessed on 20 July 2025).

6. Barral Vales, V.; Fernández, O.C.; Domínguez-Bolaño, T.; Escudero, C.J.; García-Naya, J.A. Fine Time Measurement for the Internet of Things: A Practical Approach Using ESP32. IEEE Internet Things J. 2022, 9, 18305–18318.

7. Tran, T.T.K.; Lee, T.; Kim, J.S. Increasing neurons or deepening layers in forecasting maximum temperature time series? Atmosphere 2020, 11, 1072.

8. Acker, A.; Wittkopp, T.; Nedelkoski, S.; Bogatinovski, J.; Kao, O. Superiority of simplicity: A lightweight model for network device workload prediction. In Proceedings of the 2020 15th Conference on Computer Science and Information Systems (FedCSIS), Sofia, Bulgaria, 6–9 September 2020; pp. 7–10.

9. Krasteva, V.; Ménétré, S.; Didon, J.P.; Jekova, I. Fully convolutional deep neural networks with optimized hyperparameters for detection of shockable and non-shockable rhythms. Sensors 2020, 20, 2875.

10. Ullah, I.; Yang, F.; Khan, R.; Liu, L.; Yang, H.; Gao, B.; Sun, K. Predictive maintenance of power substation equipment by infrared thermography using a machine-learning approach. Energies 2017, 10, 1987.

11. Adolf, R.; Rama, S.; Reagen, B.; Wei, G.Y.; Brooks, D. Fathom: Reference workloads for modern deep learning methods. In Proceedings of the 2016 IEEE International Symposium on Workload Characterization (IISWC), Providence, RI, USA, 25–27 September 2016; pp. 1–10.

12. Liu, J.W.S. Real-Time Systems; Prentice Hall: Upper Saddle River, NJ, USA, 2000.

13. Model Optimization, 2024. Available online: https://ai.google.dev/edge/litert/models/model_optimization (accessed on 18 March 2025).

14. Blalock, D.W.; Ortiz, J.J.G.; Frankle, J.; Guttag, J.V. What is the State of Neural Network Pruning? arXiv 2020, arXiv:2003.03033.

15. Verma, G.; Gupta, Y.; Malik, A.M.; Chapman, B. Performance Evaluation of Deep Learning Compilers for Edge Inference. In Proceedings of the 2021 IEEE International Parallel and Distributed Processing Symposium Workshops (IPDPSW), Portland, OR, USA, 17–21 May 2021; pp. 858–865.

16. PassMark Software Inc. ARM Cortex-A53 4 Core 1800 MHz vs ARM Cortex-A72 4 Core 1500 MHz [cpubenchmark.net] by PassMark Software, 2022. Available online: https://www.cpubenchmark.net/compare/4128vs3917/ARM-Cortex-A53-4-Core-1800-MHz-vs-ARM-Cortex-A72-4-Core-1500-MHz (accessed on 26 October 2022).

17. TensorFlow. Performance Measurement. 2022. Available online: https://www.tensorflow.org/lite/performance/measurement (accessed on 6 December 2023).

18. Performance Best Practices. 2024. Available online: https://ai.google.dev/edge/litert/models/best_practices (accessed on 17 July 2025).

19. Guo, C.; Pleiss, G.; Sun, Y.; Weinberger, K.Q. On Calibration of Modern Neural Networks. arXiv 2017, arXiv:1706.04599.

20. Goodfellow, I.; Bengio, Y.; Courville, A. Deep Learning; MIT Press: Cambridge, MA, USA, 2016; Available online: http://www.deeplearningbook.org (accessed on 18 March 2025).

21. Zhu, C.; Xu, B.; Wang, Q.; Zhang, Y.; Mao, Z. On the Calibration of Large Language Models and Alignment. In Proceedings of the Findings of the Association for Computational Linguistics: EMNLP 2023, Singapore, 6–10 December 2023; Bouamor, H., Pino, J., Bali, K., Eds.; Association for Computational Linguistics: Stroudsburg, PA, USA, 2023; pp. 9778–9795.

22. Minderer, M.; Djolonga, J.; Romijnders, R.; Hubis, F.; Zhai, X.; Houlsby, N.; Tran, D.; Lucic, M. Revisiting the Calibration of Modern Neural Networks. arXiv 2021, arXiv:2106.07998.

23. Mitra, P.; Schwalbe, G.; Klein, N. Investigating Calibration and Corruption Robustness of Post-hoc Pruned Perception CNNs: An Image Classification Benchmark Study. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) Workshops, Vancouver, BC, Canada, 17–24 June 2023; pp. 3542–3552.

24. Ko, V.; Oehmcke, S.; Gieseke, F. Magnitude and Uncertainty Pruning Criterion for Neural Networks. In Proceedings of the 2019 IEEE International Conference on Big Data (Big Data), Los Angeles, CA, USA, 9–12 December 2019; pp. 2317–2326.

25. Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An Image is Worth 16x16 Words: Transformers for Image Recognition at Scale. arXiv 2020, arXiv:2010.11929.

26. Vit-Keras. 2025. Available online: https://github.com/faustomorales/vit-keras (accessed on 7 May 2025).

27. Russakovsky, O.; Deng, J.; Su, H.; Krause, J.; Satheesh, S.; Ma, S.; Huang, Z.; Karpathy, A.; Khosla, A.; Bernstein, M.; et al. ImageNet Large Scale Visual Recognition Challenge. Int. J. Comput. Vis. 2015, 115, 211–252.

28. Tflite-Speech-Recognition. 2025. Available online: https://github.com/ShawnHymel/tflite-speech-recognition (accessed on 8 May 2025).

29. Warden, P. Speech Commands: A Dataset for Limited-Vocabulary Speech Recognition. arXiv 2018, arXiv:1804.03209.

30. Detlefsen, N.S.; Borovec, J.; Schock, J.; Jha, A.H.; Koker, T.; Liello, L.D.; Stancl, D.; Quan, C.; Grechkin, M.; Falcon, W. TorchMetrics-Measuring Reproducibility in PyTorch. J. Open Source Softw. 2022, 7, 4101.

31. Calculating Expected Calibration Error for Binary Classification. 2024. Available online: https://jamesmccaffrey.wordpress.com/2021/01/06/calculating-expected-calibration-error-for-binary-classification (accessed on 8 May 2025).

32. Nixon, J.; Dusenberry, M.; Jerfel, G.; Nguyen, T.; Liu, J.; Zhang, L.; Tran, D. Measuring Calibration in Deep Learning. arXiv 2020, arXiv:1904.01685.

33. Neumüller, D.; Sihler, F.; Straub, R.; Tichy, M. Exploring the Effectiveness of Abstract Syntax Tree Patterns for Algorithm Recognition. In Proceedings of the 2024 4th International Conference on Code Quality (ICCQ), Innopolis, Russia, 22 June 2024; pp. 1–18.

| Name | NXP 8MPLUSLPD4-EVK | Raspberry Pi 4 Model B | NVIDIA Jetson AGX XAVIER |
|---|---|---|---|
| Processor | ARM Cortex-A53 4 Core | ARM Cortex-A72 4 Core | NVIDIA Carmel ARM 8 Core |
| Clock Speed | 1.80 GHz | 1.50 GHz | 2.20 GHz |
| Operating System | Yocto 5.15 (kirkstone) | Debian 11 (bullseye) | Ubuntu 20.04.6 (focal) |
| Manufacturer | NXP Semiconductors | Raspberry Pi Ltd. | NVIDIA Corp. |
| City, Country | Eindhoven, NL | Cambridge, UK | Santa Clara, CA, USA |

| Hardware Platform | Activation Function | Gradient | Y-Axis Intercept | Maximum Deviation |
|---|---|---|---|---|
| IMX8 | ReLU | 1.469174 × 10⁻⁶ | 2.303959 × 10⁻¹ | 0.298859 |
| IMX8 | ELU | 1.520086 × 10⁻⁶ | 3.118073 × 10⁻¹ | 0.365074 |
| IMX8 | Sigmoid | 1.524141 × 10⁻⁶ | 3.135349 × 10⁻¹ | 0.378036 |
| IMX8 | TanH | 1.649902 × 10⁻⁶ | 4.050808 × 10⁻¹ | 0.553396 |
| Raspberry Pi | ReLU | 1.071348 × 10⁻⁶ | 1.578266 × 10⁻¹ | 0.286284 |
| Raspberry Pi | ELU | 1.112801 × 10⁻⁶ | 1.998119 × 10⁻¹ | 0.345382 |
| Raspberry Pi | Sigmoid | 1.114504 × 10⁻⁶ | 2.092044 × 10⁻¹ | 0.326310 |
| Raspberry Pi | TanH | 1.151102 × 10⁻⁶ | 2.668625 × 10⁻¹ | 0.392679 |
| Jetson | ReLU | 3.188076 × 10⁻⁷ | 1.063447 × 10⁻² | 0.153478 |
| Jetson | ELU | 3.409927 × 10⁻⁷ | 2.067891 × 10⁻² | 0.192275 |
| Jetson | Sigmoid | 3.475815 × 10⁻⁷ | 2.299473 × 10⁻² | 0.164944 |
| Jetson | TanH | 3.579973 × 10⁻⁷ | 4.256916 × 10⁻² | 0.274846 |
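The columns of the table above read as the parameters of a straight-line model of response time over the number of trainable parameters. The sketch below shows how such a gradient, intercept, and maximum deviation could be obtained with an ordinary least-squares fit. It rests on assumptions: that the maximum deviation is the largest absolute residual of the fit, and that the fit is unweighted. The measurement values are invented, and the time unit is left open because the table does not state it.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit y = gradient * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    gradient = sxy / sxx
    intercept = mean_y - gradient * mean_x
    return gradient, intercept


def max_deviation(xs, ys, gradient, intercept):
    """Largest absolute residual (assumed meaning of 'Maximum Deviation')."""
    return max(abs(y - (gradient * x + intercept)) for x, y in zip(xs, ys))


def predict(n_params, gradient, intercept):
    """Response time predicted by the linear model for a given parameter count."""
    return gradient * n_params + intercept


# Invented measurements: (trainable parameters, response time in arbitrary units).
params = [67, 1_000, 10_000, 100_000, 1_000_000]
times = [0.25, 0.23, 0.26, 0.36, 1.71]

gradient, intercept = fit_line(params, times)
print(gradient, intercept, max_deviation(params, times, gradient, intercept))

# Evaluating a tabulated line, here the IMX8/ReLU row, for one million parameters.
print(predict(1_000_000, 1.469174e-6, 2.303959e-1))
```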

| Rank | Single-Threading | Multi-Threading |
|---|---|---|
| 1 | Jetson | Jetson |
| 2 | Raspberry Pi | IMX8 |
| 3 | IMX8 | Raspberry Pi |

| Hardware Platform | Activation Function | Gradient | Y-Axis Intercept | Maximum Deviation |
|---|---|---|---|---|
| IMX8 | ReLU | 6.260788 × 10⁻⁷ | 3.092691 × 10⁻¹ | 1.075668 |
| IMX8 | ELU | 6.764540 × 10⁻⁷ | 3.874742 × 10⁻¹ | 1.168902 |
| IMX8 | Sigmoid | 6.788531 × 10⁻⁷ | 3.905559 × 10⁻¹ | 1.129849 |
| IMX8 | TanH | 9.248984 × 10⁻⁷ | 1.003794 × 10⁰ | 1.746782 |
| Raspberry Pi | ReLU | 1.337886 × 10⁻⁶ | 1.586471 × 10⁻¹ | 0.815641 |
| Raspberry Pi | ELU | 1.415562 × 10⁻⁶ | 2.039669 × 10⁻¹ | 1.476009 |
| Raspberry Pi | Sigmoid | 1.399550 × 10⁻⁶ | 2.106196 × 10⁻¹ | 1.007297 |
| Raspberry Pi | TanH | 1.450218 × 10⁻⁶ | 9.194122 × 10⁻¹ | 2.250828 |
| Jetson | ReLU | 3.143797 × 10⁻⁷ | 3.164306 × 10⁻¹ | 0.715131 |
| Jetson | ELU | 3.473014 × 10⁻⁷ | 3.510930 × 10⁻¹ | 0.769705 |
| Jetson | Sigmoid | 3.550585 × 10⁻⁷ | 3.552569 × 10⁻¹ | 0.814380 |
| Jetson | TanH | 6.556785 × 10⁻⁷ | 1.190182 × 10⁰ | 2.846824 |

| | Cortex-A72 | Cortex-A53 |
|---|---|---|
| CPU max MHz | 1500 | 1800 |
| CPU min MHz | 600 | 1200 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Huber, P.; Göhner, U.; Trapp, M.; Zender, J.; Lichtenberg, R. Comprehensive Analysis of Neural Network Inference on Embedded Systems: Response Time, Calibration, and Model Optimisation. Sensors 2025, 25, 4769. https://doi.org/10.3390/s25154769
