Abstract

Research on image sensor (IS)-based visible light positioning systems has attracted widespread attention. However, when the receiver is tilted or under a single LED, the positioning system can only achieve two-dimensional (2D) positioning and requires the assistance of inertial measurement units (IMU). When the LED is not captured or decoding fails, the system’s positioning error increases further. Thus, we propose a novel three-dimensional (3D) visible light positioning system based on image sensors for various environments. Specifically, (1) we use IMU to obtain the receiver’s state and calculate the receiver’s 2D position. Then, we fit the height–size curve to calculate the receiver’s height, avoiding the coordinate iteration error in traditional 3D positioning methods. (2) When no LED or decoding fails, we propose a firefly-assisted unscented particle filter (FA-UPF) algorithm to predict the receiver’s position, achieving high-precision dynamic positioning. The experimental results show that the system positioning error under a single LED is within 10 cm, and the average positioning error through FA-UPF under no light source is 6.45 cm.

1. Introduction

With the development of the internet and the continuous improvement of smart buildings, the high-precision location-based service (HPLBS) has become an indispensable part of urban modernization, and the increase in indoor activities has further increased the demand for indoor positioning [1]. Existing technologies such as wireless local area network (WLAN) [2], Bluetooth [3], infrared [4], ultrasonic (UT) [5], ultra-wideband (UWB) [6], and radio frequency (RF) [7] can be used to achieve indoor positioning. However, these technologies have some accuracy, cost, and robustness disadvantages. Visible light positioning (VLP) has the advantages of high accuracy and low deployment cost. It is not susceptible to electromagnetic interference and is used in various electromagnetic-sensitive positioning scenarios, such as hospitals and mines [8]. The visible light positioning system uses a light-emitting diode (LED) as the transmitter, which can provide positioning while considering the lighting function. Therefore, it has attracted widespread attention from scholars and has become a powerful alternative to traditional indoor positioning technologies [9]. Figure 1 shows the positioning of visible light in the house used in a supermarket, which makes it more efficient for people to find the goods.

Figure 1.

Application of VLP in supermarkets.

Most research on visible light positioning systems based on image sensors uses multiple LED information for positioning. However, in complex indoor environments, the field of view of image sensors may be blocked by many obstacles, leading to positioning failure. In addition, this research also lacks height information of the receiver. Specifically as follows.

- (1)

- Traditional visible light positioning algorithms require the image sensor to capture three or more complete LED images for high-precision positioning. When the light source layout is sparse or only one LED is decoded, the existing method uses positioning parameter information and inertial measurement unit (IMU) data to calculate the receiver position. However, the IMU has a drift error, seriously affecting the positioning accuracy.

- (2)

- When the existing visible light positioning system realizes three-dimensional positioning, its algorithm simultaneously includes the receiver’s X, Y, and Z coordinates in the three-variable nonlinear equation system to solve the position. Slight parameter errors during the least squares iteration process will accumulate and amplify error in the final three-dimensional positioning result. In addition, solving nonlinear equations takes a long time.

- (3)

- Some existing indoor visible light positioning systems use particle filter (PF) or unscented particle filter (UPF) combined with inertial measurement units (IMU) to achieve positioning. However, when the receiver moves, the LED image will be missed from the image sensor’s field of view (FoV). The particle filter algorithm cannot work correctly. In addition, the particle dilution problem in the later stage of the particle filter will decrease positioning accuracy [10].

To address the above issues, we propose a single LED three-dimensional visible light positioning system based on an image sensor. This system separates the height from the three-dimensional information and calculates it separately, avoiding the transmission and amplification of errors in solving nonlinear equations. Furthermore, when no LED is in the image sensor’s field of view (FoV) or decoding fails, we propose the firefly-assisted unscented particle filter (FA-UPF) algorithm to predict the receiver position, which improves the system’s positioning accuracy and robustness. The details are as follows:

- (1)

- We propose a single LED three-dimensional positioning algorithm. It uses the geometric projection relationship of the image to list the similar triangles proportional equation for calculating the receiver’s X and Y coordinates, achieving high-precision two-dimensional positioning. Regarding height calculation, we establish a height–size database and fit the linear curve between the receiver’s height and the LED’s pixel width. After calculating the two-dimensional positioning, the pixel width of the LED is input into the linear curve to obtain the receiver’s height and achieve three-dimensional positioning. The positioning algorithm that separates the horizontal and vertical coordinates from the height avoids the accumulation and transmission of errors when solving nonlinear equation systems in traditional techniques.

- (2)

- We propose the firefly-assisted unscented particle filter algorithm. It uses the firefly algorithm to optimize the particle distribution of the unscented particle filter, which prevents the particles from gathering at the local optimum after resampling and dramatically reduces the IMU’s error. When no LED exists in the image sensor’s field of view (FoV) or decoding fails, the algorithm can accurately predict the receiver’s position, solving the problem of dynamic positioning and increasing system robustness.

- (3)

- Finally, we build an experimental platform to test the system’s performance. The experiment shows that in the 2 m × 2 m × 2.65 m positioning area, the average error of the system is 5.34 cm. The minimum positioning error is 0.76 cm, and the maximum positioning error is 15.89 cm. In addition, more than 70% of the test points have a positioning error within 10 cm. We also test the position prediction without LED; the results show that the system can achieve centimeter-level positioning accuracy.

In summary, this paper addresses key limitations in image sensor-based visible light positioning systems, including the inability to perform accurate 3D positioning with sparse LEDs and the degradation of prediction accuracy when LEDs are absent. To overcome these challenges, we propose a novel single-LED 3D positioning algorithm and a firefly-assisted unscented particle filter (FA-UPF) for dynamic positioning. The remainder of this paper is organized as follows: Section 2 reviews related work on visible light positioning and filtering methods. Section 3 introduces the system architecture and coordinate transformations. Section 4 details the proposed single LED positioning algorithm. Section 5 describes the FA-UPF algorithm for dynamic positioning. Section 6 presents the experimental setup and evaluation results, and Section 7 concludes the paper.

2. Related Work

In this section, we introduce visible light positioning and particle filter.

2.1. Visible Light Positioning

- (1)

- Multiple LEDs case.

Liu et al. designed a real-time high-precision visible light positioning algorithm, which utilizes the trilateration algorithm to determine the receiver’s position when three LED beacons are within the field of view [11]. Li et al. proposed a three-LED positioning method that does not require encoding. The proposed algorithm arranges LEDs with different spacings in a straight line and determines the receiver’s position from the geometric relationship of the LEDs captured by the camera [12]. Zhu et al. proposed a positioning algorithm for circular LEDs, which calculates the camera position by utilizing the feature points of the LEDs and their arc-shaped geometric characteristics. The algorithm achieves a 10 cm positioning accuracy using only two circular LEDs [13]. Yazar et al. proposed a pulse optimization design method for LEDs as transmitters. The method quantifies positioning accuracy using the Cramér-Rao Lower Bound (CRLB) and investigates the maximization of positioning performance in both asynchronous and synchronous visible light positioning systems. It can reduce energy consumption by approximately 45% or improve positioning accuracy by 25% [14]. Huang et al. proposed an RSS-based VLP method that transforms time-domain signals to the frequency domain and jointly estimates receiver position, LED visibility indicators, and signal parameters to achieve robust positioning under limited FOV [15]. Cappelli et al. proposed a low-power Visible Light Localization (VLL) system using three modulated LEDs and a photodiode receiver, where embedded machine learning regressors on a microcontroller process light intensities to achieve energy-efficient indoor positioning with accuracy satisfying predefined error constraints [16].

- (2)

- Single LED case.

Cheng et al. proposed an optical camera communication (OCC)-based visible light positioning system that improves positioning accuracy using a plane intersection method from the geometric features of LED projections. Experimental results show that the system achieves a 3D average positioning error of 5.5 cm under different heights and tilt angles, enabling high-precision positioning [17]. Mao et al. proposed a visible light positioning method using an improved Camshift algorithm and a 2D HSV histogram to enhance LED tracking accuracy and robustness. Experiments show a positioning accuracy of 0.95 cm, with 90% of errors below 1.79 cm, demonstrating high precision and real-time performance for dynamic positioning [18]. Hao et al. proposed an improved angle-of-arrival (AOA) method, in which the LED is at known locations as the transmitter, projecting the LED onto an image sensor with a camera acting as the receiver to capture the LED signals. The system can determine accurate position and orientation, achieving decimeter-level positioning [19]. Kim et al. proposed a tracking system based on a single LED and a single PD, which reduces the computational burden of the particle filter by applying a novel Bayesian method [20]. Chen et al. proposed a VLP positioning method that uses only a single LED light. This method measures the rotation angle by using an IMU and calibrates the IMU data with visual projection geometry, achieving sub-meter positioning accuracy [21]. Wang et al. proposed a single-LED visible light positioning system using a tilted rotatable photodetector (STRP) that accounts for first-order reflections, deriving the Cramer-Rao lower bound for optimal receiver angle selection and introducing an LS-assisted improved gray wolf optimization (LS-IGWO) algorithm to achieve centimeter-level positioning accuracy [22].

2.2. Filter Methods for Positioning

Liang et al. proposed the visible light positioning system with the tightly coupled extended Kalman filter (EKF) and IMU. The system fuses inertial measurements and visual information to achieve lightweight, real-time, and high-precision global positioning, maintaining stable performance even in sparse LED environments [23]. Guan et al. proposed an improved Camshift-Kalman algorithm, which combines the Camshift algorithm with a Kalman filter and innovatively introduces the Bhattacharyya coefficient. Experimental results demonstrate that the algorithm significantly enhances positioning accuracy, real-time performance, and robustness [24]. Liang et al. proposed a tightly coupled vision-inertial fusion method based on EKF, leveraging IMU and CMOS image sensors to enhance the system robustness [25]. Xie et al. proposed a novel visible light positioning method based on the Mean Shift (MS) algorithm and the Unscented Kalman Filter (UKF). In this method, the Unscented Kalman Filter enables the Mean Shift algorithm to track high-speed targets, improving positioning accuracy when the LED is obstructed [26]. Poompat et al. employed EKF to estimate joint position and orientation in an indoor visible light positioning system. The evaluation shows that 95% of the time, the position error is within 0.18 m and the orientation error is less than or equal to 12.5, demonstrating the effectiveness of EKF in dynamic tracking [27].

3. System Overview

In this section, we introduce the composition of the visible light positioning system and the relationship between coordinate system transformations.

3.1. Visible Light Positioning System

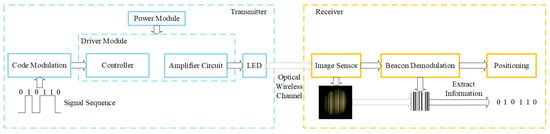

The framework of the visible light positioning system is shown in Figure 2. It can be divided into three parts: the transmitter, the channel, and the receiver [28]. First, the transmitter encodes and modulates the LED beacon data. Then, the driving circuit controls the LED to transmit the beacon information. The light signals carry the beacon information to propagate in the physical space through the visible light channel. The signal arrives at the photosensitive element of the image sensor at the receiver. The photosensitive element converts the received light signals into electrical signals. Then, the receiver performs signal processing, such as noise reduction, demodulation, decoding, etc., to restore the original signal. Finally, we calculate the receiver’s position according to the LED beacon information and the positioning algorithms, such as triangulation, the dual-LED method, and fingerprint database.

Figure 2.

Framework of image sensor-based visible light positioning.

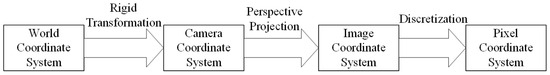

3.2. Coordinate System Transformation Relationship

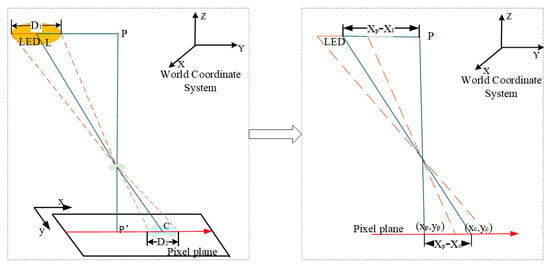

We use the image sensor as the receiver of the visible light positioning system. Under ideal conditions, we can use the pinhole imaging model to describe the imaging principle of the image sensor. The pinhole imaging model can determine the position of the LED on the two-dimensional image. Four coordinate systems are involved in the imaging process: the world coordinate system, camera coordinate system, image coordinate system, and pixel coordinate system. The conversion relationship among them is shown in Figure 3.

Figure 3.

The visible light positioning system includes four coordinate systems.

First, we convert the LED coordinate from the world coordinate system to the camera coordinate system. The transformation process is a rigid body transformation. There is only rotation and translation without deformation. For example, the world coordinates of one LED are , and its coordinates in the camera coordinate system are . The relationship between the two coordinates is , T is the offset in the coordinate system, and is the rotation matrix, as follows:

where , , and are the three-dimensional rotation angles of the camera coordinate system relative to the world coordinate system. represents the pitch angle, represents the roll angle, and represents the azimuth angle.

Then, we convert in the camera coordinate system into in the image coordinate system, according to the camera imaging principle, as follows:

where s is the scale factor, , a and r are the diameters of the LED in the image coordinate system and the world coordinate system, respectively. f is the focal length of the camera.

Finally, we convert LED coordinates in the image coordinate system to the pixel coordinate system. The difference between them lies in the origin coordinate and the unit of measurement.

4. Single LED Positioning Algorithm

In this section, we introduce our positioning algorithm.

4.1. Positioning Algorithm Overview

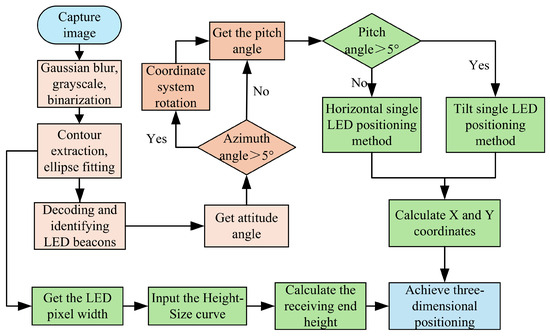

In actual application scenarios, if the number of LEDs in the camera field of view is less than 3, it cannot provide sufficient positioning information, resulting in positioning failure. Therefore, we design a single LED three-dimensional positioning algorithm. The algorithm calculates the horizontal and vertical coordinates and height of the receiver separately, avoiding the accumulation and transmission of errors in traditional positioning algorithms and improving the three-dimensional positioning accuracy. In order to cope with the tilted state of the receiver, we add an IMU to the receiver to obtain the receiver state and achieve positioning when the receiver is tilted. The system’s three-dimensional positioning is shown in Figure 4.

Figure 4.

Three-dimensional positioning system under a single LED.

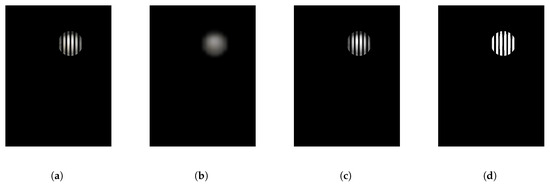

After the transmitter transmits the beacon data, the image sensor in the receiver starts to capture the LED image. We preprocess the captured image, including Gaussian blur, grayscale, binarization, and other processes. The processed image is shown in Figure 5. We extract the LED outline from the preprocessed image, find the center of the centroid after ellipse fitting, and then decode the LED and identify its frequency.

Figure 5.

Image preprocessing results. (a) Original image. (b) Blurred image. (c) Grayscale image. (d) Binarized image.

In Figure 5d, we assume the image has pixels, which means the image consists of m rows and n columns of pixels. The camera exposure time is T, the post-exposure processing time for each row is d, the LED frequency is f, and the width of each LED stripe generated in the image is W. We know as follows.

After calculating the LED frequency, we must determine the receiver state to select different positioning algorithms.

First, we obtain the receiver’s azimuth and determine whether the camera coordinate system is parallel to the world coordinate system based on the value of the receiver’s azimuth angle. If the azimuth angle is greater than 5, the receiver is considered to be in a non-parallel state. The camera coordinate system must be re-rotated to make it parallel to the world coordinate system. After rotating the coordinate system, the receiver can be in a parallel state; that is, the horizontal and vertical coordinates of the camera coordinate system are parallel to the world coordinate system.

Then, we determine whether the receiver is in a tilted state based on the pitch angle obtained by the IMU. If the pitch angle of the receiver is less than 5, the receiver is considered to be placed horizontally. At this moment, the LED image is a perfect circle, and the center of the centroid in the image can be directly used to calculate the two-dimensional coordinates of the receiver. If the pitch angle is greater than 5, the receiver is in a tilted state, and the LED image in the image is an ellipse. The image must be projected onto the horizontal plane to calculate the receiver’s two-dimensional coordinates.

Finally, to calculate the receiver’s height, we use the Gaussian blurred image of the LED to obtain the LED pixel width and input it into the height–size curve to obtain the corresponding receiver height. When both two-dimensional coordinates and height are obtained, the system achieves the three-dimensional visible light positioning.

4.2. Calculating Two-Dimensional Coordinate of Receiver

- (1)

- Receiver horizontal placement.

When the receiver is placed horizontally, the calculation method of the X and Y coordinates of the receiver is shown in Figure 6. denotes the LED’s known position in the world coordinate system, and is the receiver’s unknown position in the world coordinates, and are the external dimensions of the LED and the diameter of the circular imaging area, respectively, and and are the central pixel coordinates of the LED and the image center coordinates, respectively. When the image coordinate system and the world coordinate system are parallel, according to the principle of similar triangles, as follows:

further,

we can calculate the receiver’s positioning as follows.

Figure 6.

Receiver horizontal positioning principle.

- (2)

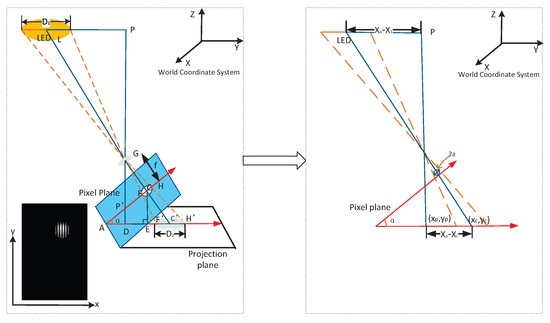

- Receiver tilted placement.

When the pitch angle of the receiver is greater than 5, we consider the receiver to be tilted, and the positioning principle is shown in Figure 7. Due to the receiver’s tilt, the LED’s contour in the captured image is elliptical, affecting the positioning accuracy. We perform the ellipse fitting [29] on the LED image, find the centroid, and project the tilted pixel plane onto the horizontal plane. Then, we use the receiver horizontal placement method to calculate the receiver’s position.

Figure 7.

Receiver tilt positioning principle.

In Figure 7, is the world coordinate system of the positioning system. Point is the coordinate of the center of the LED in the world coordinate system. is the diameter of the LED. is the coordinate of the center of the LED in the captured image, and is the center coordinate in the pixel plane. The coordinates of the receiver in the world coordinate system are . Thus,

- (1)

- Solve . In , , where a is the semi-major axis of the elliptical LED imaging and f is the focal length of the camera. According to the angular relationship in Figure 7, the . Meanwhile, , and is as follows,

- (2)

- Solve . From Figure 7, we find that . Let . We get the following:

- (3)

- Solve the positioning results . Associating the above equations, the positioning coordinates are as follows:Until now, we have found the X and Y coordinates of the receiver, achieving the high-precision two-dimensional positioning.

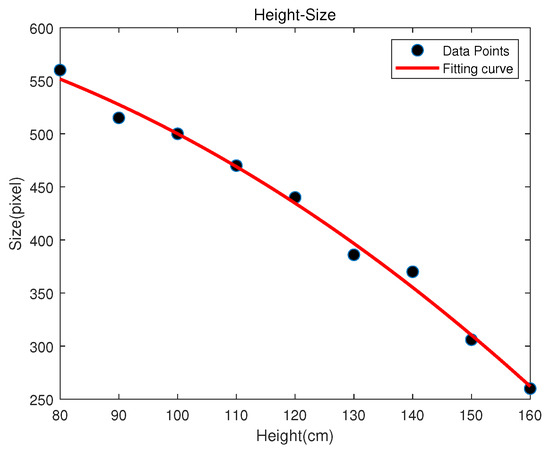

4.3. Calculating the Receiver’s Height

In order to obtain the Z coordinate of the receiver, we use a height calculation algorithm through the second-order polynomial fitting method. We first use the second-order polynomial fitting curve to establish a linear relationship between the LED width in the image and the height collected by the database. Specifically, when the receiver is not tilted, we collect the LED images at different heights to establish the discrete height–size database. The database stores the LED’s pixel width and corresponding receiver height in the world coordinate system. These discrete data are fitted with a second-order polynomial to obtain a continuous height–size curve, and a mapping function relationship between the LED pixel width and the vertical distance from the LED to the receiver is established, as shown in Figure 8.

Figure 8.

Height–size fitting curve.

In Figure 8, the red represents the fitting curve, and the black point represents the collected discrete data. The fitted curve expression is . It can express the linear relationship between the receiver height and LED pixel width.

- (1)

- When the receiver is placed horizontally, the pixel width of the LED in the image is input into the curve, and the corresponding height is obtained. Since the height of our experimental site is 2.65 m, the Z coordinate of the receiver can be expressed as follows.

- (2)

- When the receiver is tilted, the image is projected onto a plane parallel to the ground, as shown in Figure 7. is approximately the diameter of the LED in the image carried in a non-tilted state, which is obtained according to Equation (7). Substitute into the fitting curve to calculate the height of the receiver.

5. Dynamic Positioning Algorithm Through FA-UPF

In this section, we focus on the dynamic positioning algorithm with a firefly-assisted unscented particle filter.

5.1. Unscented Particle Filter Analysis

For the case where the system has no light source, most visible light positioning systems use the Kalman filter [30] to fuse visible light positioning with IMU data for achieving the positioning. However, the Kalman filter uses a linear approximation to calculate the Jacobian matrix nonlinearly and requires a manual initial position setting. Therefore, we use the unscented particle filter [31], which combines the advantages of the Kalman filter and the particle filter.

The unscented particle filter combines the nonlinear processing capability of the unscented transform with the particle filter’s strong robustness, enabling better state estimation capabilities in complex nonlinear systems. It still has shortcomings in dynamic visible light positioning without light sources. There are two reasons: (1) IMU’s noise and drift error grow rapidly over time. Without light sources for a long time, the prediction results of the unscented particle filter will deviate from the actual trajectory. (2) In the absence of light sources, due to insufficient observations, the particle degradation phenomenon of unscented particle filters is more serious, resulting in a decrease in estimation accuracy, and the computational cost increases significantly with the increase in the number of particles, which is not suitable for visible light positioning systems with high real-time requirements.

To compensate for the dynamic positioning error from the unscented particle filter in the absence of light sources, we use the firefly algorithm (FA) to assist the unscented particle filter in performing the receiver positioning. The firefly-assisted unscented particle filter algorithm can dynamically adjust the particle sampling distribution through FA and optimize the resampling of the unscented particle filter, improving the prediction accuracy. In addition, the error correction mechanism from FA can also effectively reduce the impact of IMU cumulative errors on unscented particle filter prediction results.

5.2. Firefly-Assisted Unscented Particle Filter for Dynamic Positioning

In the later step of the unscented particle filter, the problem of particle depletion leads to reduced positioning accuracy. We introduce the firefly algorithm [32] to optimize and improve the unscented particle filter.

The position update of the firefly algorithm requires each firefly to interact with all other fireflies at the current moment. If this algorithm is applied to unscented particle filter positioning, the calculation time will significantly increase, seriously affecting the real-time positioning performance. Therefore, the firefly algorithm needs to be appropriately improved.

- (1)

- Update the firefly brightness.

In the unscented particle filter algorithm, particles with larger weights are closer to the actual value. In the firefly algorithm, the brighter the firefly, the better its position in space. Therefore, each particle can be regarded as an individual firefly. The calculated particle weight represents the firefly’s brightness as follows:

- (2)

- Improve the firefly attraction.

In the standard firefly algorithm, each firefly is attracted by adjacent fireflies to update its position. The farther away the fireflies are, the smaller their relative attraction and role in guiding position updates. We design each particle to update its position only by comparing it with the global optimal particle at the current moment. Suppose the distance from the global optimal value is too large. In that case, the degree of attraction will be minimal, causing the particles to be unable to move to the high-likelihood area or move slowly. So the attraction is improved as follows:

where is the maximum attraction and is the minimum attraction.

The improved method still satisfies the requirement that the closer the distance between particles, the greater the relative attraction. At the same time, particles far from the global optimal value can be guided by the minimum attraction to update their positions.

- (3)

- Improve the firefly position updating.

In the standard firefly algorithm, each firefly needs to calculate the distance to all other fireflies and update its position interactively with them, respectively. Suppose it is directly substituted into the particle filter. In that case, it will greatly increase the complexity of the calculation and the position estimation time, making it difficult to meet the positioning requirements. Therefore, the particles at each moment are only moved closer to the particles at the global optimal position to reduce the algorithm calculation time. The improvement is as follows:

where is the state of particle i at time k after the m iteration and is the state of particle i at time k after the iteration. is the particle state with the largest weight among all particles after the iteration at time k.

6. Evaluation

In this section, we briefly introduce the system components and verify the performance of the localization system. In the paper, all positioning results shown in the figures are calculated and presented in the world coordinate system.

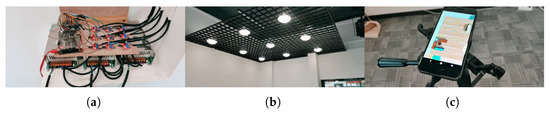

6.1. Platform of Hardware

The system’s transmitter and receiver model is shown in Table 1, and the frequency allocation is shown in Table 2. We modulate the LED to different frequencies to give different beacon information. In the positioning process, the world coordinates of the LED can be obtained according to the calculated LED frequency for positioning.

Table 1.

Hardware model.

Table 2.

LED frequency allocation.

Figure 9 shows the experimental test scenario. Figure 9a shows the LED source at the transmitter, and Figure 9b shows the ceiling LED layout. Figure 9c shows the receiver of the smartphone. In the system, the software system takes 350 ms to perform positioning, with 150 ms for capturing and storing the image, 150 ms for performing the image processing, and 20–30 ms for the positioning algorithm. There is a time redundancy gap between the steps for removing the effect of possible errors and bugs in the software code.

Figure 9.

Scenario of the experiment. (a) Transmitter. (b) Ceiling layout. (c) Smartphone receiver.

6.2. Positioning Error

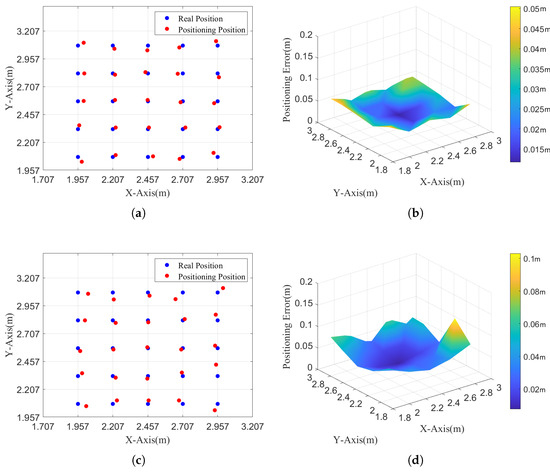

We measure the system’s static positioning error for a receiver at different tilt states. points are selected within the 2 m × 2m area in the experiment. Under our experimental setup, the effective horizontal positioning range of a single LED is approximately 0.5 m × 0.5 m, within which the positioning error remains below 10 cm in most cases. We perform tests at tilt angles of and , respectively. The positioning results under the tilt angles of and are shown in Figure 10.

Figure 10.

The positioning result and error under different tilt angles. (a) 2D positioning results under 0 tilt angle. (b) 3D positioning errors under 0 tilt angle. (c) 2D positioning results under 10 tilt angle. (d) 3D positioning errors under 10 tilt angle.

Figure 10a,c show the two-dimensional positioning results and three-dimensional positioning errors of the single-LED positioning algorithm under the tilt angle of 0. The average positioning error is 3.08 cm. The minimum positioning error is 1.17 cm, and the maximum positioning error is 5.05 cm.

Figure 10b,d show the two-dimensional positioning results and three-dimensional positioning errors of the single-LED positioning optimization algorithm at a tilt angle of 10. The average positioning error is 4.13 cm, which is higher than that at a tilt angle of 0. The minimum positioning error is 0.76 cm, and the maximum positioning error is 10.3 cm.

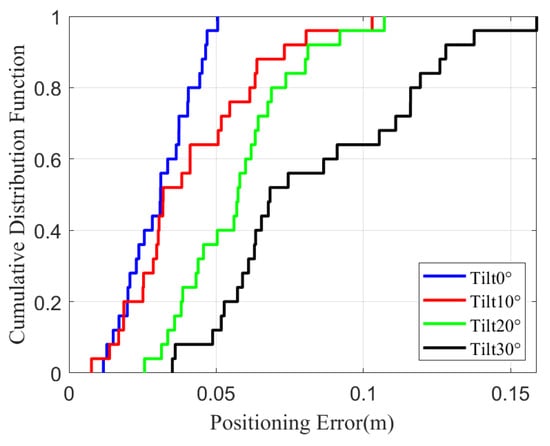

In Figure 11, we show the cumulative distribution function (CDF) of positioning error at different tilt angles. From Figure 11, we can find that the more parallel the receiver surface is to the horizontal, the smaller the system positioning error is. It is because the smaller the smartphone’s tilt angle, the smaller the deformation of the captured LED pixels, so more accurate image processing and positioning accuracy are achieved. Meanwhile, the average positioning error of the system is 5.34 cm, and 60% of positioning errors are within 10 cm, which means high-precision design requirements are completed.

Figure 11.

Cumulative distribution function of positioning error at different tilt angles.

6.3. Dynamic Trajectory Analysis

In this section, we analyze the system’s dynamic positioning performance from two perspectives: (1) path-wise tracking accuracy; (2) the statistical distribution of dynamic positioning errors.

6.3.1. Path-Wise Tracking Accuracy

- (1)

- Dynamic positioning error under the single LED.

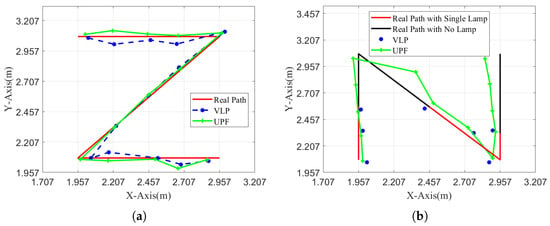

In Figure 12a, we show the system’s positioning accuracy with the FA-UPF under the single LED. During the experiment, we select a Z path within the 2 m × 2 m area and let the smartphone move along the path Z. The red line is the actual path of the smartphone. The blue and green lines are drawn according to the positioning results, respectively, without FA-UPF and with FA-UPF. From Figure 12a, we can find that the green path is closer to the red path, which means the FA-UPF brings the system higher positioning precision.

Figure 12.

Dynamic positioning performance based on FA-UPF and VLP under different paths. (a) The Z path. (b) The N path.

- (2)

- Dynamic positioning errors under the occasional no-LED.

Figure 12b shows the system’s positioning accuracy with FA-UPF in the occasional no-LED. During the experiment, we select an N path within the area and temporarily turn off some LEDs to simulate the situations without LEDs. We record the results of dynamic positioning with FA-UPF. The red path represents the actual path of the smartphone’s movement when only one LED in FoV exists. The black path represents the actual path of the smartphone’s movement in a no-LED situation. From Figure 12b, we can find that the visible light positioning system is disabled immediately when there is no LED in FoV, but the FA-UPF can predict the current position according to the previous state and inertia data to provide positioning service.

6.3.2. Error Distribution in Dynamic Scenarios

- (1)

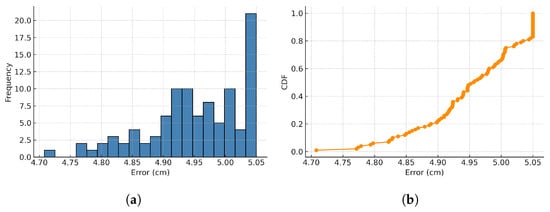

- Histogram and CDF of positioning error for Z path.

Figure 13 shows the histogram and cumulative distribution function (CDF) of the positioning error during Z-path movement. When the LED was turned on, the errors have a mean of approximately 4.97 cm, a minimum of 1.17 cm, and a maximum of 5.05 cm. The histogram shows that most of the positioning errors are concentrated around 4.9 cm to 5.0 cm, while the CDF curve rises steeply, with 90% of errors falling below 5.05 cm. This confirms that, in dynamic conditions, the system maintains centimeter-level accuracy under an LED turned on stably.

Figure 13.

Z path positioning error distribution. (a) Positioning error histogram. (b) Cumulative distribution function.

- (2)

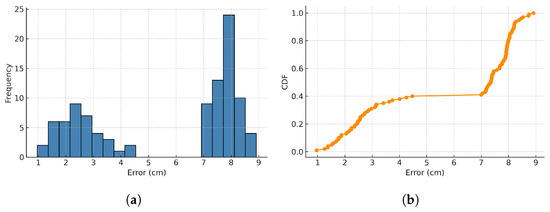

- Histogram and CDF of positioning error for N path.

Figure 14 presents the histogram and CDF of the positioning error under the N path, where some LEDs are intentionally turned off to simulate periods of LED absence. The error distribution exhibits two clusters: one in the low-error region (0.8–3 cm) corresponding to normal LED detection, and another in the high-error region (7–9 cm) when LED signals are unavailable. Although the errors increase during LED outages, the FA-UPF algorithm effectively constrains the error growth by relying on IMU prediction. The system still achieves acceptable accuracy when partially LED signals are turned off.

Figure 14.

N path positioning error distribution. (a) Positioning error histogram. (b) Cumulative distribution function.

Further, our data analysis reveals that when the smartphone suddenly changes the direction of movement and there is no LED in the FoV of the receiver, it leads to a significant error between the predicted position and the actual position after the change of direction. The angle of the FA-UPF can only be obtained from the IMU, and the prediction effect is significantly reduced. The average positioning error with FA-UPF in path N is 6.45 cm, and the average error of the no-LED part of the path is 8.91 cm. The system can keep centimeter-level high-precision positioning in the occasional no-LED situation.

While our experiments include two representative motion trajectories (Z and N), the system is expected to maintain robust performance across other types of trajectories due to the FA-UPF’s ability to compensate for temporary LED absence and motion uncertainty. However, sharp turns or irregular motion may lead to slightly higher prediction errors, especially when LED signals are unavailable. Future work will include more complex and realistic movement paths to evaluate the generalization of the proposed approach.

6.4. Discussion on Ambient Light Robustness

In practical environments, visible light positioning systems must operate reliably under various ambient lighting conditions. Our system is designed to be robust to both natural light (e.g., sunlight) and artificial light sources (e.g., ceiling lamps), as the LED transmitters are frequency-modulated and uniquely identifiable through image-based frequency decoding. Furthermore, Gaussian blurring and binarization preprocessing techniques help suppress background illumination and enhance LED signal isolation. Nevertheless, in extremely bright environments, overexposure may hinder LED detection. In such cases, adaptive exposure control and optical filters (e.g., bandpass filters) could be employed to improve robustness.

6.5. Impact of LED Frequency on Positioning Performance

The proposed system relies on frequency-modulated LED signals to identify and distinguish different beacons. The driving frequency of the LEDs determines the width of the LED stripes captured in the image sensor. In our system, the frequency is calculated based on the number and width of these stripes. Therefore, significant changes in driving frequency may affect stripe clarity and the accuracy of frequency decoding, especially if the frequency is too high or too low relative to the camera’s exposure settings. However, within a reasonable operating range (e.g., 600 Hz to 2.2 kHz in our experiments), the decoding algorithm maintains stable performance. This demonstrates that the system is robust to moderate changes in LED driving frequencies. For extreme cases, adjusting the camera’s exposure time or employing adaptive frequency calibration may be necessary.

6.6. Comparative Analysis with Related Works

To evaluate the effectiveness of the proposed system, we compare it with several representative image sensor-based VLP methods from recent literature. Table 3 summarizes the comparison in terms of LED requirements, dimensionality, need for angle sensors, and robustness to LED absence. Unlike prior works [13,18], which require multiple LEDs or fail under sparse lighting, our method achieves 3D positioning using only a single LED and maintains accurate tracking when the LED is not visible by leveraging the FA-UPF algorithm. Moreover, the system does not require angle sensors, which reduces hardware cost and complexity. These advancements demonstrate that our method extends the applicability of VLP systems in constrained or dynamic environments.

Table 3.

Comparison with representative image sensor-based VLP methods.

7. Conclusions

In this paper, we have solved typical issues in visible light positioning systems, such as sparse LED layouts, IMU drift errors, and the inability to locate in the absence of light sources. The proposed system achieves an average static positioning error of 5.34 cm within a 2 m × 2 m × 2.65 m area. Under a 10 tilt angle, the error remains within 10.3 cm. In addition, to enhance the system’s performance, we have proposed a single-LED 3D visible light positioning algorithm and a firefly-assisted unscented particle filter algorithm. The FA-UPF algorithm enables position prediction in no-LED scenarios with an average error of 6.45 cm and a worst-case error of 8.91 cm. Over 70% of the test points yield errors below 10 cm, demonstrating the system’s high accuracy and robustness. Experimental results have demonstrated that the proposed algorithms can achieve centimeter-level indoor positioning accuracy under various environments.

Despite the promising results, the proposed system has several limitations. First, although the system achieves 3D positioning with a single LED, the accuracy may degrade under strong ambient light or when the LED image is significantly occluded. Second, the FA-UPF algorithm depends on the IMU data for prediction in no-light conditions, and its performance may decline under long-term drift or sudden acceleration. Finally, the current implementation is tested in controlled indoor environments, and generalization to larger, dynamic spaces remains unverified.

To address these limitations and further improve system performance, we plan to pursue the following directions in future work:

- (1)

- Multi-sensor fusion: Integrating UWB or WiFi to compensate for IMU drift in prolonged LED absence scenarios.

- (2)

- Dynamic environmental adaptation: Developing real-time calibration for ambient light interference and reflective surfaces (e.g., mirrors).

- (3)

- Large-scale deployment: Extending the algorithm to multi-floor 3D positioning with sparse LED layouts.

- (4)

- Theoretical analysis: Modeling the impact of LED driving frequency fluctuations on positioning robustness under mobile camera conditions.

Author Contributions

Methodology, X.L. software, J.Z. writing—original draft preparation, J.Z. validation, J.Z., S.S. and L.G. All authors have read and agreed to the published version of the manuscript.

Funding

National Natural Science Foundation of China (62202215, 62401092, 62025105); Chongqing Natural Science Foundation General Project (CSTB2023NSCQ-MSX1088); Shenyang Science and Technology Talent Special Project (RC230794); Basic Scientific Research Project of Liaoning Provincial Department of Education (JYTQN2023050).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The original contributions presented in this study are included in the article. Further inquiries can be directed to the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

References

- Lyu, Y.; Wang, W.; Wu, Y.; Zhang, J. How does digital economy affect green total factor productivity? Evidence from China. Sci. Total. Environ. 2023, 857, 159428. [Google Scholar] [CrossRef] [PubMed]

- Shang, S.; Wang, L. Overview of WiFi fingerprinting-based indoor positioning. IET Commun. 2022, 16, 725–733. [Google Scholar] [CrossRef]

- Zhao, X.; Yang, Y. An AOA indoor positioning system based on bluetooth 5.1. In Proceedings of the 2022 11th International Conference of Information and Communication Technology (ICTech), Wuhan, China, 4–6 February 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 511–515. [Google Scholar]

- Aparicio-Esteve, E.; Hernández, Á.; Ureña, J. Design, calibration, and evaluation of a long-range 3-D infrared positioning system based on encoding techniques. IEEE Trans. Instrum. Meas. 2021, 70, 7005113. [Google Scholar] [CrossRef]

- Haigh, S.; Kulon, J.; Partlow, A.; Gibson, C. Comparison of objectives in multiobjective optimization of ultrasonic positioning anchor placement. IEEE Trans. Instrum. Meas. 2022, 71, 2513212. [Google Scholar] [CrossRef]

- Ridolfi, M.; Kaya, A.; Berkvens, R.; Weyn, M.; Joseph, W.; Poorter, E.D. Self-calibration and Collaborative Localization for UWB Positioning Systems: A Survey and Future Research Directions. ACM Comput. Surv. 2021, 54, 1–27. [Google Scholar] [CrossRef]

- Robinson, A.K.; Prajapati, N.; Senic, D.; Simons, M.T.; Holloway, C.L. Determining the angle-of-arrival of a radio-frequency source with a Rydberg atom-based sensor. Appl. Phys. Lett. 2021, 118, 114001. [Google Scholar] [CrossRef]

- He, J.; Liu, Y. Vehicle positioning scheme based on particle filter assisted single LED visible light positioning and inertial fusion. Opt. Express 2023, 31, 7742–7752. [Google Scholar] [CrossRef]

- Jin, L.; Jiang, Q.; Yu, S.; Xu, H.; Xie, M.; Li, X.; Ma, D. Lightweight RSS-Based Visible Light Positioning Considering Dust Accumulation. J. Light. Technol. 2025, 43, 4693–4702. [Google Scholar] [CrossRef]

- Cao, X.; Zhuang, Y.; Chen, G.; Wang, X.; Yang, X.; Zhou, B. A visible light positioning system based on particle filter and deep learning. IEEE Trans. Aerosp. Electron. Syst. 2023, 60, 2735–2748. [Google Scholar] [CrossRef]

- Liu, X.; Guo, L.; Yang, H.; Wei, X. Visible Light Positioning Based on Collaborative LEDs and Edge Computing. IEEE Trans. Comput. Soc. Syst. 2021, 9, 324–335. [Google Scholar] [CrossRef]

- Li, M.; Jiang, F.; Pei, C. Research on visible light indoor positioning algorithm based on fire safety. Opt. Appl. 2020, 50, 209–222. [Google Scholar] [CrossRef]

- Zhu, Z.; Yang, Y.; Guo, C.; Feng, C.; Jia, B. Visible light communication assisted perspective circle and arc algorithm for indoor positioning. In Proceedings of the IEEE International Conference on Communications, Dublin, Ireland, 7–11 June 2020; pp. 3832–3837. [Google Scholar]

- Amsters, R.; Demeester, E.; Slaets, P.; Holm, D.; Joly, J.; Stevens, N. Towards automated calibration of visible light positioning systems. In Proceedings of the IEEE International Conference on Indoor Positioning and Indoor Navigation, Pisa, Italy, 28 November 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 1–8. [Google Scholar]

- Huang, N.; Chen, F.; Liu, C.; Zhang, Y.Y.; Zhu, Y.J.; Gong, C. Robust Design and Experimental Demonstration of RSS-Based Visible Light Positioning under Limited Receiving FOV. IEEE Trans. Green Commun. Netw. 2024, 9, 392–403. [Google Scholar] [CrossRef]

- Cappelli, I.; Carli, F.; Intravaia, M.; Micheletti, F.; Peruzzi, G. A Machine Learning Model for Microcontrollers Enabling Low Power Indoor Positioning Systems via Visible Light Communication. In Proceedings of the 2022 IEEE International Symposium on Measurements & Networking (M&N), Padua, Italy, 18–20 July 2022; IEEE: Piscataway, NJ, USA, 2022; pp. 1–6. [Google Scholar]

- Cheng, H.; Xiao, C.; Ji, Y.; Ni, J.; Wang, T. A single LED visible light positioning system based on geometric features and CMOS camera. IEEE Photonics Technol. Lett. 2020, 32, 1097–1100. [Google Scholar] [CrossRef]

- Mao, W.; Xie, H.; Tan, Z.; Liu, Z.; Liu, M. High precision indoor positioning method based on visible light communication using improved Camshift tracking algorithm. Opt. Commun. 2020, 468, 125599–125621. [Google Scholar] [CrossRef]

- Hao, J.; Chen, J.; Wang, R. Visible light positioning using a single LED luminaire. IEEE Photonics J. 2019, 11, 7905113. [Google Scholar] [CrossRef]

- Kim, D.; Park, J.K.; Kim, J.T. Single LED, Single PD-Based Adaptive Bayesian Tracking Method. Sensors 2022, 22, 6488. [Google Scholar] [CrossRef]

- Chen, J.; Hao, J.; Wang, R.; Shen, A.; Yu, Z. A VLP Approach Based on a Single LED Lamp. In Proceedings of the International Conference on Machine Learning and Intelligent Communications, Nanjing, China, 24–25 August 2019; Springer: Cham, Switzerland, 2019; pp. 305–312. [Google Scholar]

- Wang, Z.; Liang, Z.; Liu, R.; Li, X.; Li, H. Design and Performance Analysis for Indoor Visible Light Positioning with Single-LED and Single-Tilted Rotatable-PD. IEEE Trans. Instrum. Meas. 2024, 73, 5502414. [Google Scholar] [CrossRef]

- Liang, Q.; Liu, M. A Tightly Coupled VLC-Inertial Localization System by EKF. IEEE Robot. Autom. Lett. 2020, 5, 3129–3136. [Google Scholar] [CrossRef]

- Guan, W.; Liu, Z.; Wen, S.; Xie, H.; Zhang, X. Visible light dynamic positioning method using improved camshift-Kalman algorithm. IEEE Photonics J. 2019, 11, 7906922. [Google Scholar] [CrossRef]

- Liang, Q.; Lin, J.; Liu, M. Towards robust visible light positioning under LED shortage by visual-inertial fusion. In Proceedings of the IEEE International Conference on Indoor Positioning and Indoor Navigation, Pisa, Italy, 30 September–3 October 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 1–8. [Google Scholar]

- Xie, Z.; Guan, W.; Zheng, J.; Zhang, X.; Chen, S.; Chen, B. A high-precision, real-time, and robust indoor visible light positioning method based on mean shift algorithm and unscented Kalman filter. Sensors 2019, 19, 1094. [Google Scholar] [CrossRef]

- Poompat, S.; Pakorn, U. Joint Position and Orientation Estimation for Visible Light Positioning Using Extended Kalman Filters. In Proceedings of the IEEE International Conference on Electrical Engineering/Electronics, Computer, Telecommunications and Information Technology, Khon Kaen, Thailand, 27–30 May 2024; IEEE: Piscataway, NJ, USA, 2024; pp. 1–5. [Google Scholar]

- Liu, X.; Wei, X.; Guo, L. DIMLOC: Enabling high-precision visible light localization under dimmable LEDs in smart buildings. IEEE Internet Things J. 2019, 6, 3912–3924. [Google Scholar] [CrossRef]

- Zhu, Z.; Guo, C.; Bao, R.; Chen, M.; Saad, W.; Yang, Y. Positioning using visible light communications: A perspective arcs approach. IEEE Trans. Wirel. Commun. 2023, 22, 6962–6977. [Google Scholar] [CrossRef]

- Amsters, R.; Holm, D.; Joly, J.; Demeester, E.; Stevens, N.; Slaets, P. Visible light positioning using Bayesian filters. J. Light. Technol. 2020, 38, 5925–5936. [Google Scholar] [CrossRef]

- Ullah, I.; Shen, Y.; Su, X.; Esposito, C.; Choi, C. A Localization Based on Unscented Kalman Filter and Particle Filter Localization Algorithms. IEEE Access 2020, 8, 2233–2246. [Google Scholar] [CrossRef]

- Wei, Z.; Hu, H.; Huang, H. Optimization of location, power allocation and orientation for lighting lamps in a visible light communication system using the firefly algorithm. Opt. Express 2021, 29, 8796–8808. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content. |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).