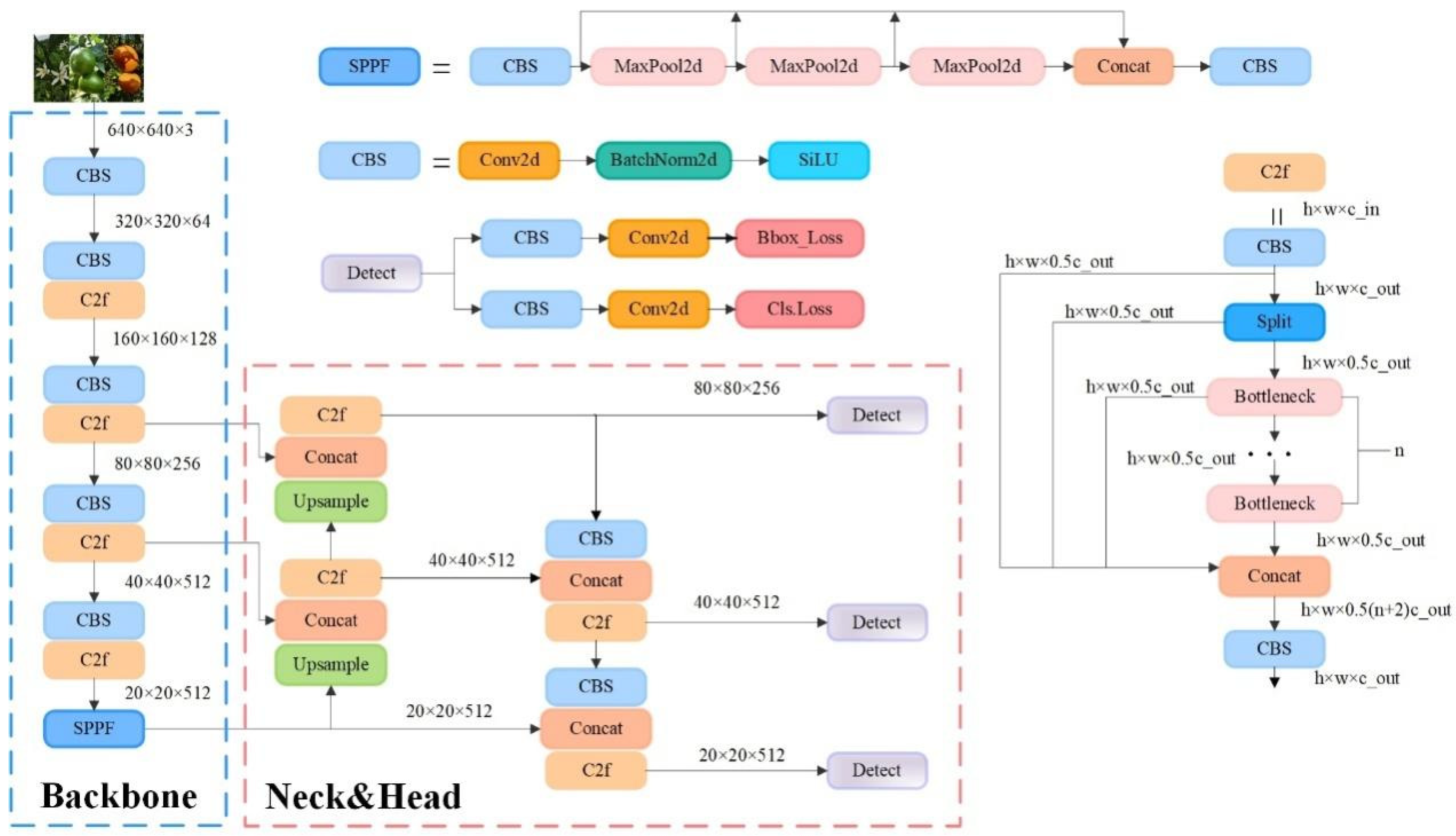

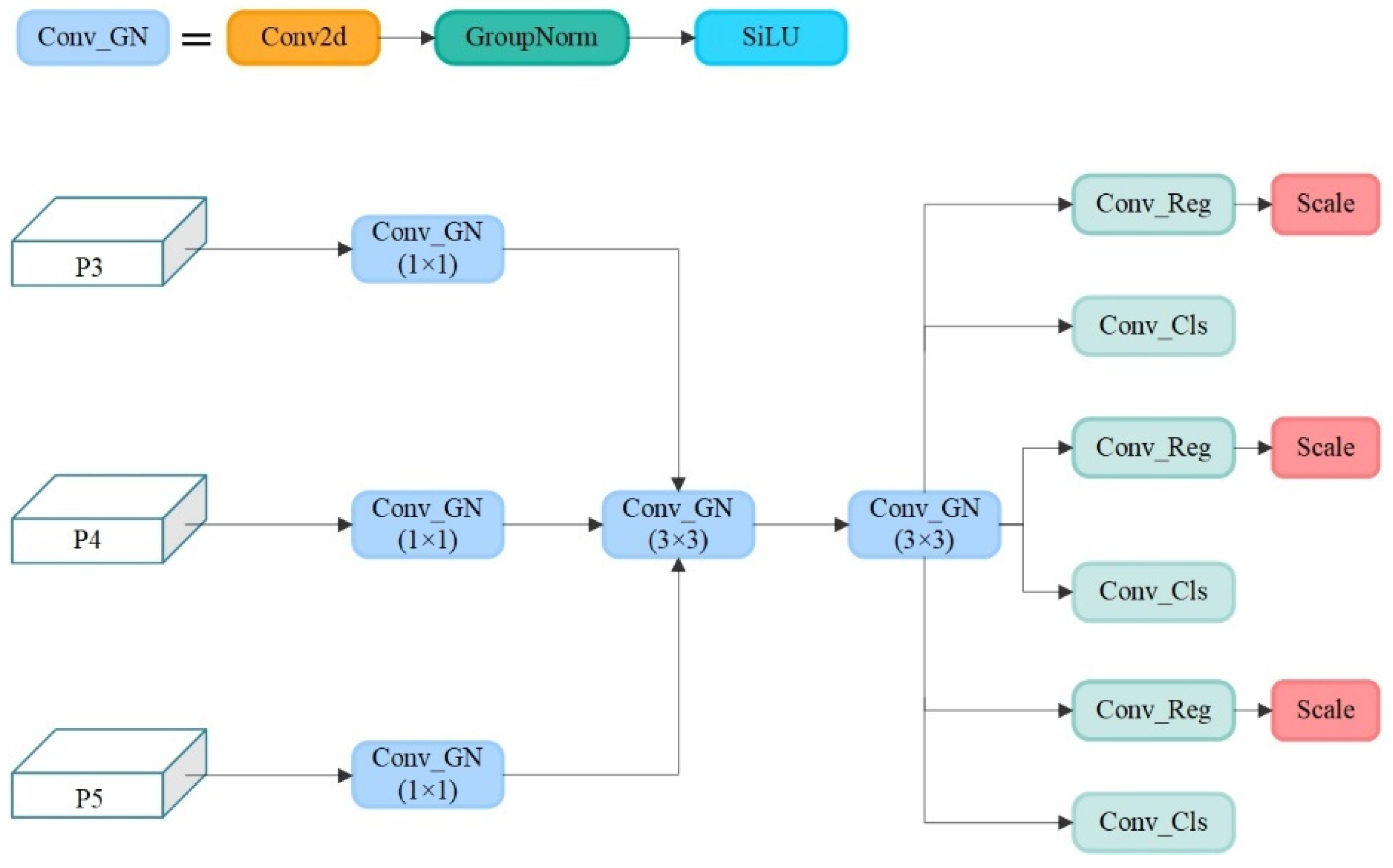

4.1. Comparison of Different Network Models

To verify that the improved YOLOv8-RL model is lightweight while retaining accurate recognition of citrus images across the full growth period, this study compares it with current mainstream small detection networks, the whole YOLOv8 series, and the latest target detection networks: YOLOv5s, YOLOv7-tiny [27], YOLOv8n/s/m/l/x [24], YOLOv9t [29], and YOLOv10n [30]. All network models were trained on the same dataset without loading official default weights, and all parameters were kept consistent. After training, the weights with the highest accuracy were selected, and each model was evaluated on the same test set of 650 citrus full-growth-period images. In this work, FPS is calculated at BatchSize = 1 by dividing 1000 ms by the sum of the image preprocessing time, inference time, and postprocessing time. The performance metrics of these detection models are shown in Table 2; all metrics are averages over the three citrus classes (blossom, green fruit, and orange fruit).
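The FPS definition above can be written as a short helper. This is a minimal sketch, not the authors' evaluation script: the function name and the example timings are hypothetical and are not taken from Table 2.

```python
def frames_per_second(preprocess_ms: float, inference_ms: float, postprocess_ms: float) -> float:
    """FPS at batch size 1: 1000 ms divided by the total per-image latency."""
    total_ms = preprocess_ms + inference_ms + postprocess_ms
    if total_ms <= 0:
        raise ValueError("total latency must be positive")
    return 1000.0 / total_ms


# Hypothetical timings in milliseconds (illustrative only, not measured values).
example_fps = frames_per_second(preprocess_ms=1.0, inference_ms=2.0, postprocess_ms=1.0)
```

Because preprocessing and postprocessing are included in the denominator, a model's FPS under this definition is lower than the reciprocal of its inference time alone.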

The analysis in Table 2 shows that YOLOv5s still performs well on citrus full-growth-period images. Its precision is high, and its parameter count, floating-point operations, and model size are not inferior to those of current mainstream detection networks; however, owing to its long inference time and relatively low FPS, it loses the advantage of real-time detection. YOLOv7-tiny is a detector proposed by the Alexey Bochkovskiy team in July 2022 [27]. The accuracy of the model on the test set is 97%, and the mAP@.5 index also reaches 96.6%. Although its F1 value reaches 0.95, its parameter count, computational complexity, and model size compare far less favorably with those of the optimal model. As an improved version of the YOLOv5 network, the YOLOv8 [24] series not only maintains excellent performance but also improves inference time and real-time detection efficiency. On the citrus dataset, the whole YOLOv8 series performs well. The data in the table show that detection performance improves gradually as the depth and width of the network increase, but at a high cost in computing resources. Taking YOLOv8n and YOLOv8x as examples, the precision, mAP@.5, and F1 values differ by 3.1%, 3.6%, and 4%, respectively, but the parameter count, floating-point operations, and model size of the former are much smaller than those of the latter. Although YOLOv8x has the highest detection performance, its high computational cost and large model size make it unsuitable for current real-time detection requirements. YOLOv8n, with its moderate parameter count, achieves better inference time and FPS. YOLOv9t [29] and YOLOv10n [30] are the latest detection networks published at present. According to the data in the table, although they perform well in terms of parameter count and model size on the citrus dataset, they show little advantage on the other citrus detection indices.

Finally, the best-performing YOLOv8n is selected for a comprehensive comparison with the YOLOv8-RL proposed in this study. The performance of the improved network is close to that of the original network in terms of P, mAP@.5, and the F1 value, which indicates that the improvement causes no loss of detection accuracy. Moreover, YOLOv8-RL reduces the number of parameters by 50.7% and the number of floating-point operations by 49.4%, and its model size is only 3.2 MB, approximately one-half of the original model. In addition, the inference time of YOLOv8-RL is only 2.3 ms and its real-time detection frame rate is 123.1 FPS, both better than those of the original network. In summary, the experimental results show that the improved YOLOv8-RL network maintains high detection performance after a large number of parameters are removed to lighten the network. It achieves high detection accuracy with the smallest model size, low computational cost, and high detection efficiency, and it is better suited to edge devices with scarce computational resources, which provides technical support for applying the lightweight network to real-time citrus detection.
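The percentage reductions quoted above are relative reductions with respect to the original network. A minimal sketch of that arithmetic follows; the function name and the example inputs are hypothetical placeholders, not values from Table 2.

```python
def relative_reduction(baseline: float, improved: float) -> float:
    """Percentage by which `improved` is smaller than `baseline`."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return (baseline - improved) / baseline * 100.0


# Hypothetical model sizes in MB (illustrative only).
reduction = relative_reduction(baseline=6.0, improved=3.0)
```

The same helper applies to parameter counts and floating-point operations, provided both values use the same unit.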

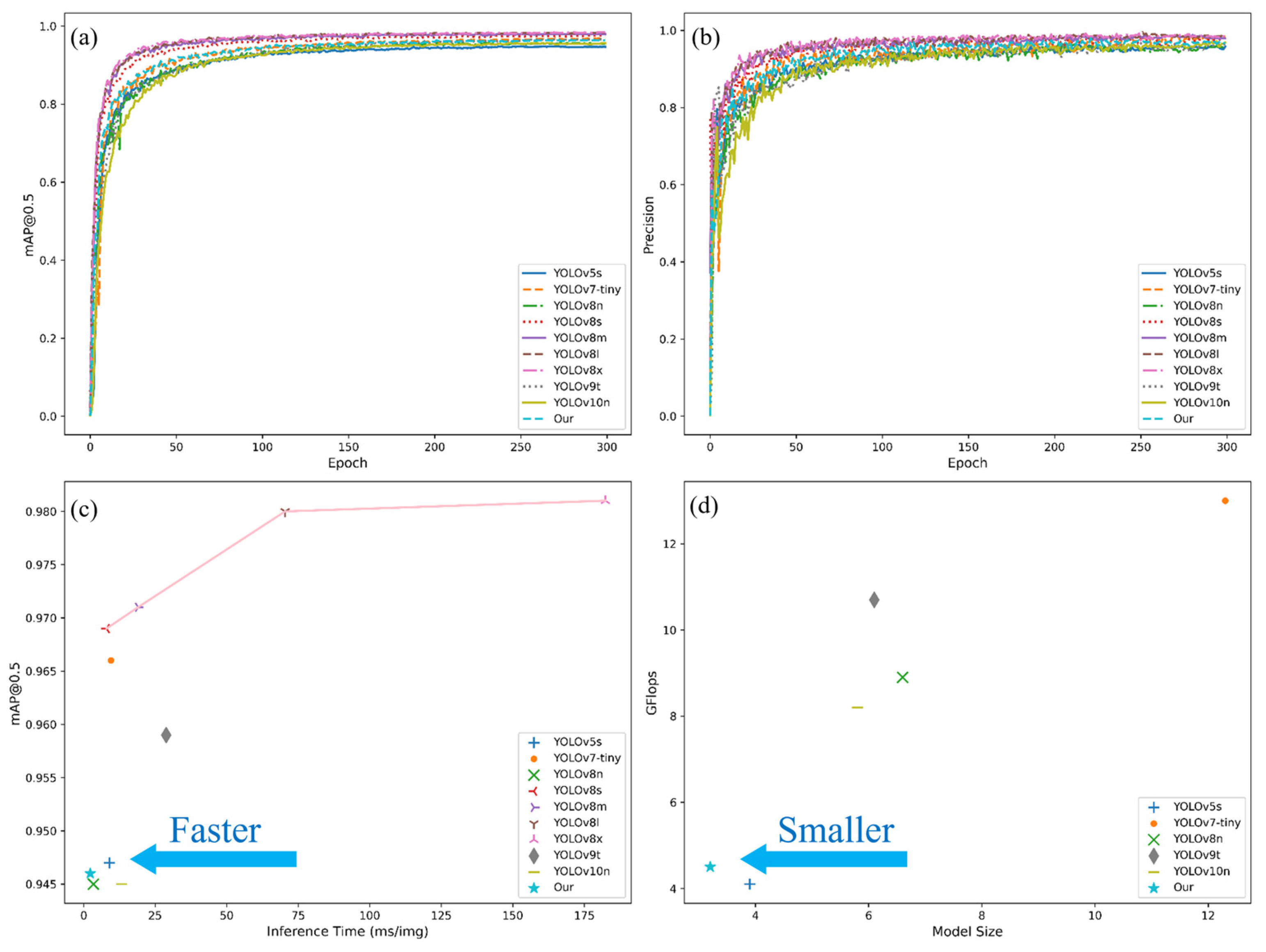

To analyze the differences in network performance more intuitively, the two most important metrics, mAP@.5 and precision P, are selected for visual analysis, and the curves of all network models over 300 epochs are shown in Figure 7a,b. All the detection networks start to converge after about 100 epochs and then level off. Owing to their greater network width and depth, the large models perform very well on citrus detection in complex environments and outperform the smaller models after the same number of training epochs. YOLOv8n and the lightweight YOLOv8-RL model proposed in this study have similar detection performance.

Figure 7c,d show the scatterplot of model inference time versus mAP@.5 and the scatterplot of model size versus floating-point operation count, respectively. Figure 7c shows that YOLOv8-RL has the shortest inference time while maintaining high detection performance. Figure 7d shows that YOLOv8-RL not only has the smallest model size but also has the lowest computational cost.

4.2. Comparative Analysis of Detection in Complex Scenarios over the Full Growth Period

Orchard environments under natural conditions are complex: varying light and darkness, shading by branches and leaves, and the prevalence of overlapping fruits all make fruit identification and counting difficult. Accurate identification of citrus in complex scenes is therefore highly important for informed yield estimation. For these real scenarios, this study selects the most balanced YOLOv8n network, the latest detection networks YOLOv9t and YOLOv10n, and the new YOLOv8-RL network proposed in this paper for comparative analysis. Each network is used to detect citrus at the blossom, green fruit, and ripening stages to explore the recognition ability of the new network in real field environments. The red circles in the figures indicate missed detections in different scenarios.

As shown in Figure 8, the YOLOv8n network recognizes citrus targets effectively in all natural scenes. During the flowering period, citrus flower clusters are densely distributed on the branches and the leaves cover each other, which makes the flowers harder for the network to recognize. In addition, the network is not sensitive to small targets at the edge of the image under backlight conditions, and there are a few missed detections. In the green fruit period, the network's detection rate of the fruit improves significantly. The analysis suggests that although the green fruits and green leaves are similar in color, the trained network retains the ability to judge the characteristics of green fruit, which allows it to identify the target accurately even when fruits overlap and leaves and branches are misshapen. As shown in the figure, the brightness of the light has a large effect on the detection ability of the network. In direct sunlight, the surface color of the green fruit is extremely similar to that of the green leaves, and the network misses some targets. During the ripening period, the network has the highest detection rate of the fruit. The analysis suggests that although fruits still overlap and are shaded by leaves, the citrus color features are distinct and the network can effectively extract the texture features. In addition, with sufficient light, the network more readily obtains the color semantic information of the fruits, which improves the detection rate.

Under the same detection scenarios, both the YOLOv9t and YOLOv10n networks are effective at recognizing citrus full-growth-period images. As shown in Figure 9 and Figure 10, the two current state-of-the-art detection networks are very close to each other in citrus detection ability. During the flowering period, individual flowers are all effectively detected, but when leaves occlude the flowers or flowers stick together, the networks lose the features of the target, and both have missed detections. During green fruit detection, the YOLOv9t detection rate is significantly greater than that of YOLOv10n, and it detects more green fruits in the same scenario; however, individual fruits are still missed in direct-light scenarios where the background has the same color as the fruit. During the ripening period, the detection rates of the two networks are the same, and both recognize the fruits in elevated and close-up images. In summary, as the latest target detection networks, YOLOv9t and YOLOv10n perform very well in terms of detection accuracy and target detection rate alone, but their lower frame rate and large computational overhead make them unsuitable for real-time detection of citrus in complex environments.

In actual field detection, unavoidable factors such as dim light, fruit overlap, and leaves covering each other present considerable challenges for fruit identification and counting. A network that is both accurate and lightweight reduces the difficulty of real-time citrus monitoring and counting to a certain extent, as it can be deployed on portable devices such as mechanical vehicles or cell phones. The detection results of the YOLOv8-RL network proposed in this study in real scenarios are shown in Figure 11. With the number of parameters and the number of floating-point operations reduced to about half of the original, the recognition rate and target detection rate of the network are consistent with those of the YOLOv8n, YOLOv9t, and YOLOv10n networks. The lightweight model accurately identifies the target at the flowering, fruiting, and ripening stages, which shows that the reduction in parameters does not affect the detection ability of the network. This again verifies the effectiveness of the network improvement and shows that the network is better suited to real-time monitoring of citrus in complex scenarios.

4.4. Estimation Studies

To validate the usefulness of the YOLOv8-RL network, the Pengyu Brothers Citrus Demonstration Base in Gongcheng County was selected as the study area for field validation. Sixteen citrus trees were randomly selected from the orchard for constructing and validating the yield estimation model: 10 trees were used for model construction and 6 for model validation. The detection network can count the fruits in a single image, which provides a basis for predicting the number of fruits on a whole tree. Therefore, unilateral (single-side) images of the 16 trees were collected during two growth periods, the green fruit stage and the ripening stage, for the network to count. When each unilateral image was collected, the true number of fruits hanging on the tree was counted visually, and the average of three visual counts was taken as the true value for the tree. A regression equation was then established between the true number of fruits on the tree and the number of fruits recognized by the network, so that the number of fruits on the whole tree could be predicted from the partial count [31]. The green fruit stage estimation model allows early estimation of the final number, and the maturity stage estimation model can effectively reduce the counting error and improve the accuracy of the estimate.

4.4.1. Estimation Modeling

Table 4 and Table 5 show the network recognition counts and the manual visual true values of the 10 fruit trees for the green fruit stage and the ripening stage, respectively. As shown in the tables, the unilateral recognition count for each tree is between 40 and 90, and the true value for each tree is approximately 50–140. Network identification on unilateral images is prone to missed and false detections, which creates a gap between the recognition count and the true value. Analysis of the orchard reveals several factors that affect the network detection rate: the spacing between fruit trees is not uniform, the branches and leaves of neighboring trees intersect, the fruits overlap and adhere to each other, and the light is dim in places. The differences between the true values of different trees are due to the random selection of trees and to external factors that affect growth and yield, such as light conditions, nutrients, and soil moisture [32]. Finally, the detection counts in the tables were fitted to the true values to explore the relationship between the two. After linear and nonlinear fitting, the optimal fitting equations are shown in Figure 12. During the green fruit stage, the best result was achieved when the fruit detection count was linearly fitted to the actual value, with a fitting equation of y = 1.43885x + 0.89779 and a determination coefficient R² of 0.91992. During the mature stage, the best result was achieved when the fruit detection count was nonlinearly fitted to the actual value, with a fitting equation of y = 0.111x² + 0.04029x + 39.22014 and a determination coefficient R² of 0.95639, indicating a strong correlation between the recognized and true values.
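The fitting step can be illustrated with an ordinary least-squares line and the coefficient of determination. This is a minimal sketch under stated assumptions: the source does not say which fitting tool was used, the function names are hypothetical, and the sample counts are invented for illustration rather than taken from Table 4 or Table 5.

```python
from statistics import mean


def fit_line(x, y):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    x_bar, y_bar = mean(x), mean(y)
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    if sxx == 0:
        raise ValueError("x values must not all be equal")
    sxy = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = y_bar - slope * x_bar
    return slope, intercept


def r_squared(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    y_bar = mean(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - y_bar) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


# Hypothetical detected counts (x) and visually counted true values (y).
detected = [40, 52, 61, 70, 78, 90]
true_counts = [58, 76, 88, 101, 113, 130]

slope, intercept = fit_line(detected, true_counts)
fitted = [slope * d + intercept for d in detected]
r2 = r_squared(true_counts, fitted)
```

`r_squared` accepts predictions from any model, so the same function can be used to compare a linear fit against a nonlinear one on the same trees.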

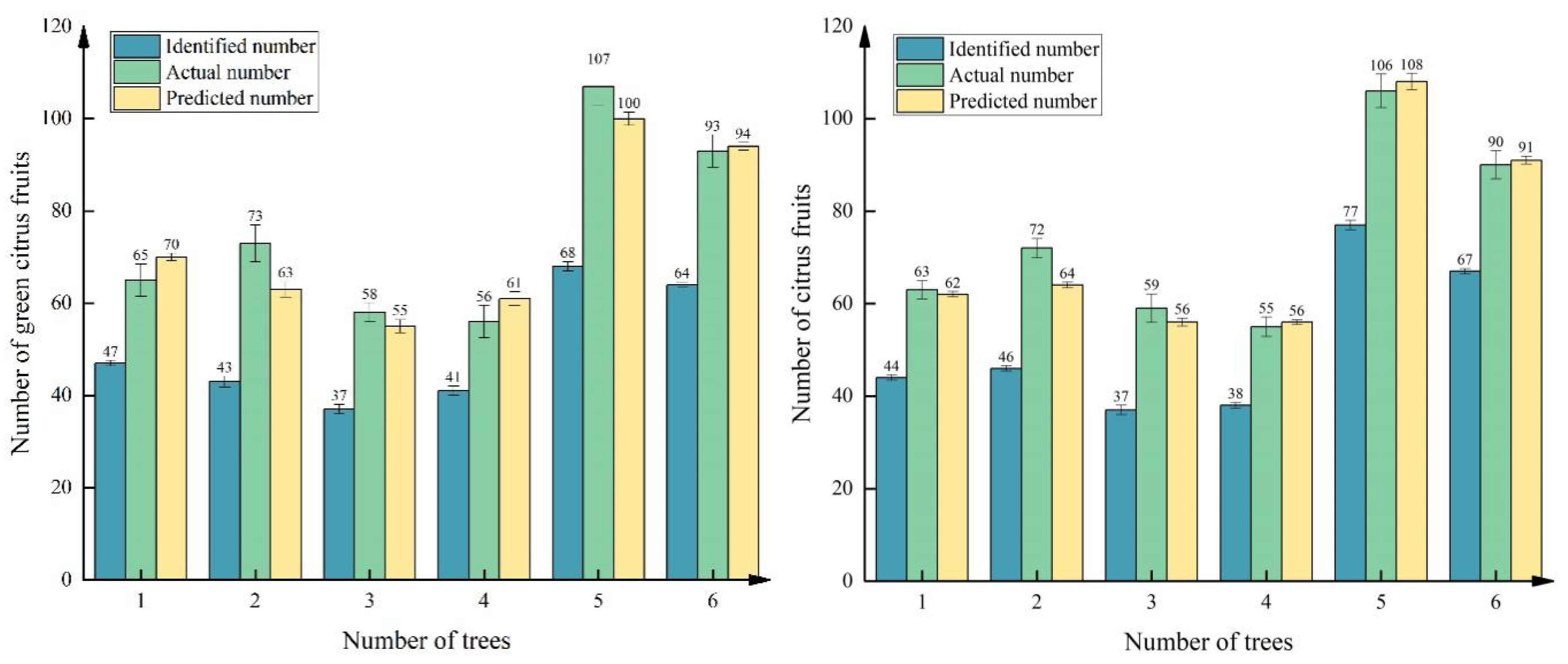

4.4.2. Validation and Analysis of Estimated Production Models

To validate the applicability of the two estimation models in Section 4.4.1, six fruit trees were selected for model validation, and the true numbers of citrus fruits were obtained via manual counting. The process is as follows: the side views of the six trees at the green fruit stage and the ripening stage were input into the detection network three times, and each recognition count was substituted into the estimation model for prediction. The average of the three detection results was taken as the detection value of the network, and the average of the three prediction values was taken as the final model prediction value. Figure 13 shows the recognition results for three of the trees at the green fruit stage and the maturity stage, from which it can be seen that the number of fruits in the side view of a complete tree roughly represents the overall yield of the tree.
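The validation procedure for one tree can be sketched as follows. The linear coefficients are those of the green fruit stage equation reported in Section 4.4.1; the function names and the three detection counts are hypothetical and serve only to illustrate the averaging.

```python
from statistics import mean


def green_stage_model(detected_count: float) -> float:
    """Green fruit stage estimation model reported in Section 4.4.1."""
    return 1.43885 * detected_count + 0.89779


def validate_tree(run_counts, model):
    """Return (detection value, prediction value) for one tree.

    The detection value is the mean of the per-run counts; the prediction
    value is the mean of the per-run model predictions.
    """
    predictions = [model(count) for count in run_counts]
    return mean(run_counts), mean(predictions)


# Hypothetical counts from three detection runs on one side-view image.
detected_value, predicted_value = validate_tree([60, 62, 61], green_stage_model)
```

For a linear model, averaging the three predictions gives the same result as applying the model to the averaged count; for a nonlinear model the two can differ, so the order of operations described in the text matters there.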

The detected, true, and predicted values of the six citrus trees during the two growth periods are shown in Table 6 and Table 7, where each value is the average of three measurements. The absolute difference between the true value and the detected value is recorded as error E1, the absolute difference between the true value and the predicted value is recorded as error E2, and each error expressed as a percentage of the true value is used as the error rate of the fruit count for the tree. The tables indicate that, for the same citrus tree, the numbers of fruits at the green fruit stage and at maturity are very similar. The analysis suggests that, to promote fruit development, farmers prune the trees or artificially control the number of fruits during the green fruit stage, so the number of fruits at that stage is not very high. Moreover, in both the green fruit period and the ripening period, there is a large error between the network recognition count on unilateral images and the true value, as shown in Column E1 of the tables. There are two main sources of this error. First, detection on a unilateral image gives only the approximate number of fruits on one side of the tree, and many fruits are hidden among the branches and leaves. Second, the network misses some fruits when fruits overlap or are covered by foliage. Therefore, using only the network count as the final yield estimate for a tree is inaccurate.
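The error definitions above reduce to a few lines of arithmetic. The sketch below uses hypothetical function names and invented example values; it does not reproduce any row of Table 6 or Table 7.

```python
def absolute_error(true_value: float, estimate: float) -> float:
    """Absolute difference between the true count and an estimate."""
    return abs(true_value - estimate)


def error_rate(true_value: float, estimate: float) -> float:
    """Absolute error as a percentage of the true count."""
    if true_value == 0:
        raise ValueError("true value must be nonzero")
    return absolute_error(true_value, estimate) / true_value * 100.0


# Hypothetical values for one tree (illustrative only).
true_value, detected_value, predicted_value = 100.0, 65.0, 96.0

e1 = absolute_error(true_value, detected_value)    # error of the raw network count
e2 = absolute_error(true_value, predicted_value)   # error after the estimation model
e2_rate = error_rate(true_value, predicted_value)  # E2 as a percentage of the true value
```

An average error rate for a growth stage would then be the mean of the per-tree error rates over the validation trees.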

On this basis, the predicted value for each tree was obtained by substituting the network identification value into the estimation model, and the error between the predicted value and the true value was recalculated, as shown in Column E2. In both growth periods, the error after correction by the estimation model is significantly smaller than the error of the network detection count alone, which shows that the estimation model further improves the accuracy of fruit yield prediction. The error rate of the corrected fruit counts relative to the true values was also calculated, and the results are shown in the E2 error rate columns of the tables, with an average error rate of 6.96% at the green fruit stage and 3.71% at the ripening stage. Although there are many fruits in the green fruit period, the network detection rate is low because the fruits and the green leaves are similar in color, and the difference between the detection count and the true value is large. In the ripening period, the fruits are orange, and the detection network learns their characteristics more easily, so the detection count is close to the true value. In addition, the coefficient of determination of the ripening period estimation model is higher than that of the green fruit period model, so the average error rate of the ripening period fruits after correction is lower.

In summary, the detected, true, and predicted fruit counts of the six citrus trees at the green fruit stage and the maturity stage are shown in Figure 14. The error between the network identification value and the true value is large, so using only the network fruit count as the final yield of a tree is not accurate. After correction by the two estimation models, the predicted values are much closer to the true values, with lower error, which makes the approach better suited to estimating fruit yield in complex environments. Estimating fruit yield during the green fruit period can help farmers anticipate yield and income and adjust agricultural production and resource allocation in time; estimating fruit yield during the ripening period is highly important for rationally arranging production and marketing plans and improving fruit quality.