Three-Dimensional Extended Target Tracking and Shape Learning Based on Double Fourier Series and Expectation Maximization

Abstract

1. Introduction

- (1) The unknown but fixed 3D shape is represented by a radial function in spherical coordinates, which is modeled with a Double Fourier Series (DFS) expansion. This converts the continuous radial function into a parametric representation defined by a finite set of coefficients.

- (2) The orientation is parameterized with the axis-angle representation, a compact description of 3D rotation that avoids the gimbal lock of Euler angles. A rotation is specified by a unit vector, which defines the rotation axis, and a scalar angle, which gives the magnitude of the rotation about that axis. The representation is geometrically intuitive and computationally efficient.

- (3) The target's kinematic state and shape parameters are estimated jointly within the Expectation Conditional Maximization (ECM) framework. The E-step infers the kinematic state with an unscented Kalman smoother (a forward unscented Kalman filter followed by a backward smoothing pass), while the M-step estimates the shape and rotation parameters by minimizing separate cost functions augmented with regularization terms for robustness and smoothness.
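To make contribution (1) concrete, the sketch below evaluates a real-valued double Fourier series r(θ, φ) and maps a direction to a surface point c + r(θ, φ)·u(θ, φ), where u is the unit direction vector. The basis (products of cosines and sines in the azimuth φ and polar angle θ), the coefficient layout `a, b, c, d`, and the helpers `zero_coeffs`, `dfs_radius` and `surface_point` are illustrative assumptions; the paper's exact DFS expansion and truncation orders are not reproduced here.

```python
import math


def zero_coeffs(M, N):
    """Four M x N coefficient arrays (a, b, c, d), all zero (hypothetical layout)."""
    return tuple([[0.0] * N for _ in range(M)] for _ in range(4))


def dfs_radius(theta, phi, a, b, c, d):
    """Evaluate an assumed real double Fourier series at polar angle theta
    and azimuth phi:
        r = sum_{m,n} a[m][n] cos(m phi) cos(n theta) + b[m][n] cos(m phi) sin(n theta)
                    + c[m][n] sin(m phi) cos(n theta) + d[m][n] sin(m phi) sin(n theta)
    Terms containing sin(0 * .) vanish, so those coefficients have no effect.
    """
    r = 0.0
    for m in range(len(a)):
        cm, sm = math.cos(m * phi), math.sin(m * phi)
        for n in range(len(a[m])):
            cn, sn = math.cos(n * theta), math.sin(n * theta)
            r += (a[m][n] * cm * cn + b[m][n] * cm * sn
                  + c[m][n] * sm * cn + d[m][n] * sm * sn)
    return r


def surface_point(center, theta, phi, coeffs):
    """Surface point center + r(theta, phi) * u(theta, phi) in the body frame."""
    r = dfs_radius(theta, phi, *coeffs)
    u = (math.sin(theta) * math.cos(phi),
         math.sin(theta) * math.sin(phi),
         math.cos(theta))
    return tuple(ci + r * ui for ci, ui in zip(center, u))


# Usage: a sphere of radius 2 (constant term only), then a shape that is
# elongated along z after adding a cos(2 theta) term.
a, b, c, d = zero_coeffs(3, 3)
a[0][0] = 2.0
print(surface_point((1.0, 0.0, 0.0), math.pi / 2, 0.0, (a, b, c, d)))
a[0][2] = 0.5
print(dfs_radius(0.0, 0.0, a, b, c, d), dfs_radius(math.pi / 2, 0.0, a, b, c, d))
```

With only a[0][0] set, the radius is constant and the surface is a sphere; each additional coefficient adds one angular frequency, which is what turns the continuous radial function into a finite parameter vector.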

2. Problem Formulation

2.1. DFS-Based Shape Model for 3D Target

2.2. Orientation Representation (Axis-Angle)
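For the axis-angle parameterization described in contribution (2), the standard way to obtain a rotation matrix is Rodrigues' formula, R = I + sin(θ)K + (1 - cos θ)K², where K is the skew-symmetric matrix of the unit axis k. The sketch below is a generic pure-Python implementation; the function names and the rotation-vector convention ω = θk used in `rotvec_to_matrix` are assumptions rather than the paper's notation. The axis is undefined when θ = 0, so that case is handled separately by returning the identity.

```python
import math


def axis_angle_to_matrix(axis, angle):
    """Rodrigues' formula: rotation by `angle` (rad) about the normalized `axis`."""
    norm = math.sqrt(sum(x * x for x in axis))
    if norm == 0.0:
        raise ValueError("rotation axis must be non-zero")
    kx, ky, kz = (x / norm for x in axis)
    c, s = math.cos(angle), math.sin(angle)
    v = 1.0 - c
    return [
        [c + kx * kx * v, kx * ky * v - kz * s, kx * kz * v + ky * s],
        [ky * kx * v + kz * s, c + ky * ky * v, ky * kz * v - kx * s],
        [kz * kx * v - ky * s, kz * ky * v + kx * s, c + kz * kz * v],
    ]


def rotvec_to_matrix(omega, eps=1e-12):
    """Rotation vector omega = angle * axis; identity when the angle is ~0."""
    angle = math.sqrt(sum(w * w for w in omega))
    if angle < eps:
        return [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    return axis_angle_to_matrix(omega, angle)


def rotate(R, p):
    """Apply a 3x3 rotation matrix to a 3D point."""
    return tuple(sum(R[i][j] * p[j] for j in range(3)) for i in range(3))


# Usage: a quarter turn about z maps the x-axis onto the y-axis.
R = rotvec_to_matrix((0.0, 0.0, math.pi / 2))
print(rotate(R, (1.0, 0.0, 0.0)))
```

Storing the orientation as a three-component rotation vector keeps the parameter count minimal, while the matrix form is convenient for transforming measurements between the world and body frames.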

2.3. Kinematic State Model

- A. Linear kinematic model

- B. Nonlinear kinematic model
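As an illustration of the linear case (A), the sketch below builds the transition matrix F and process-noise covariance Q of a 3D constant-velocity model driven by white-noise acceleration with power spectral density q, a standard choice in the maneuvering-target literature, and runs one Kalman prediction step. Whether the paper uses exactly this model, and which nonlinear model it uses for case (B), is not stated in this section; `cv_matrices`, `predict` and the state ordering [x, y, z, vx, vy, vz] are assumptions.

```python
def cv_matrices(T, q):
    """F and Q of a 3D constant-velocity model, state [x, y, z, vx, vy, vz].

    Q is the discretized continuous white-noise-acceleration covariance with
    power spectral density q (an assumed noise model).
    """
    F = [[0.0] * 6 for _ in range(6)]
    Q = [[0.0] * 6 for _ in range(6)]
    for i in range(3):
        F[i][i] = F[i + 3][i + 3] = 1.0
        F[i][i + 3] = T
        Q[i][i] = q * T ** 3 / 3.0
        Q[i][i + 3] = Q[i + 3][i] = q * T ** 2 / 2.0
        Q[i + 3][i + 3] = q * T
    return F, Q


def predict(x, P, F, Q):
    """Kalman prediction: x_pred = F x, P_pred = F P F^T + Q."""
    n = len(x)
    x_pred = [sum(F[i][j] * x[j] for j in range(n)) for i in range(n)]
    FP = [[sum(F[i][k] * P[k][j] for k in range(n)) for j in range(n)]
          for i in range(n)]
    P_pred = [[sum(FP[i][k] * F[j][k] for k in range(n)) + Q[i][j]
               for j in range(n)] for i in range(n)]
    return x_pred, P_pred


# Usage: one prediction step of 0.5 s from a known state.
F, Q = cv_matrices(T=0.5, q=0.1)
x1, P1 = predict([0.0, 0.0, 0.0, 1.0, 2.0, 3.0], [[0.0] * 6 for _ in range(6)], F, Q)
print(x1)
```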

2.4. Measurement Model

3. ECM Tracking Algorithm Based on DFS

3.1. E-Step
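The E-step's unscented Kalman smoother propagates means and covariances through nonlinear models with the unscented transform. The sketch below implements the standard scaled unscented transform: sigma points are built from a Cholesky factor of (n + λ)P with λ = α²(n + κ) - n. The defaults α = 1, β = 2, κ = 0 are an assumption chosen for numerical robustness in this small example, not the paper's settings, and the forward filtering and backward smoothing recursions of Algorithm 1 are not reproduced.

```python
import math


def cholesky(A):
    """Lower-triangular L with L L^T = A (A symmetric positive definite)."""
    n = len(A)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = A[i][j] - sum(L[i][k] * L[j][k] for k in range(j))
            if i == j:
                if s <= 0.0:
                    raise ValueError("matrix is not positive definite")
                L[i][j] = math.sqrt(s)
            else:
                L[i][j] = s / L[j][j]
    return L


def sigma_points(x, P, alpha=1.0, beta=2.0, kappa=0.0):
    """2n + 1 scaled sigma points with mean weights wm and covariance weights wc."""
    n = len(x)
    lam = alpha ** 2 * (n + kappa) - n
    L = cholesky([[(n + lam) * P[i][j] for j in range(n)] for i in range(n)])
    pts = [list(x)]
    for sign in (1.0, -1.0):
        for i in range(n):  # columns of L act as the matrix square root
            pts.append([x[r] + sign * L[r][i] for r in range(n)])
    wm = [lam / (n + lam)] + [1.0 / (2.0 * (n + lam))] * (2 * n)
    wc = [wm[0] + 1.0 - alpha ** 2 + beta] + wm[1:]
    return pts, wm, wc


def unscented_transform(f, x, P, **kw):
    """Approximate mean and covariance of f(X) for X ~ N(x, P)."""
    pts, wm, wc = sigma_points(x, P, **kw)
    ys = [f(p) for p in pts]
    m = len(ys[0])
    mean = [sum(w * y[r] for w, y in zip(wm, ys)) for r in range(m)]
    cov = [[sum(w * (y[r] - mean[r]) * (y[c] - mean[c]) for w, y in zip(wc, ys))
            for c in range(m)] for r in range(m)]
    return mean, cov


# Usage: a scalar standard normal pushed through x**2 (true mean 1, variance 2).
print(unscented_transform(lambda v: [v[0] ** 2], [0.0], [[1.0]]))
```

For this scalar example, β = 2 recovers the exact variance of x² for a Gaussian input; for linear maps the transform is exact for any valid parameter choice.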

3.2. M-Step

- (1) Shape parameter optimization

- (2) Orientation parameter optimization

- (3) Optimization constraints

  - A. Shape parameter constraints

  - B. Rotation parameter constraints
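The shape-parameter update minimizes a cost function that includes a smoothness penalty term. A minimal sketch, assuming (i) the measurements have already been mapped into the target's body frame as radius samples (θ_j, φ_j, r_j) using the smoothed state and current orientation, (ii) a quadratic penalty λ Σ_k (m_k² + n_k²) c_k² on the DFS coefficients that discourages high angular frequencies, and (iii) no robust loss. Under these assumptions the cost is a ridge-type least-squares problem with the closed-form solution (HᵀH + λW)c = Hᵀr. The basis, the weights and the helper names are hypothetical, and the paper's actual update equations may differ.

```python
import math


def dfs_features(theta, phi, M, N):
    """Non-redundant DFS basis values and their smoothness weights m^2 + n^2."""
    feats, weights = [], []
    for m in range(M + 1):
        for n in range(N + 1):
            cm, sm = math.cos(m * phi), math.sin(m * phi)
            cn, sn = math.cos(n * theta), math.sin(n * theta)
            terms = [cm * cn]
            if n > 0:
                terms.append(cm * sn)
            if m > 0:
                terms.append(sm * cn)
            if m > 0 and n > 0:
                terms.append(sm * sn)
            feats.extend(terms)
            weights.extend([float(m * m + n * n)] * len(terms))
    return feats, weights


def solve(A, b):
    """Solve A x = b by Gaussian elimination with partial pivoting."""
    n = len(A)
    aug = [row[:] + [bi] for row, bi in zip(A, b)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[piv] = aug[piv], aug[col]
        for r in range(col + 1, n):
            f = aug[r][col] / aug[col][col]
            for k in range(col, n + 1):
                aug[r][k] -= f * aug[col][k]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (aug[r][n] - sum(aug[r][k] * x[k] for k in range(r + 1, n))) / aug[r][r]
    return x


def fit_shape(samples, M, N, lam):
    """Minimize sum_j (r_j - h_j . c)^2 + lam * sum_k w_k c_k^2 in closed form."""
    _, w = dfs_features(0.0, 0.0, M, N)
    H = [dfs_features(theta, phi, M, N)[0] for theta, phi, _ in samples]
    r = [rj for _, _, rj in samples]
    K = len(w)
    A = [[sum(h[i] * h[j] for h in H) + (lam * w[i] if i == j else 0.0)
          for j in range(K)] for i in range(K)]
    b = [sum(h[i] * rj for h, rj in zip(H, r)) for i in range(K)]
    return solve(A, b)


# Usage: noise-free samples of a sphere with radius 2 on a coarse grid.
samples = [(t, p, 2.0) for t in (0.3, 0.9, 1.5, 2.1, 2.7)
           for p in (0.0, 1.2, 2.4, 3.6, 4.8)]
coef = fit_shape(samples, 1, 1, 1e-3)
print(round(coef[0], 6))
```

Because the constant term carries zero penalty weight, the mean radius is not shrunk, while higher-frequency coefficients are pulled toward zero unless the data support them.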

| Algorithm 1. DFS-ECM |
|---|
| Initial parameters: measurement set batch …, state batch …, orientation and extent parameters …, maximum number of iterations … |
| begin Setup |
| 1: while not converged and … do |
| 2: expectation: |
| 3: for … do |
| 4: for … do |
| 5: Calculate … and … according to (28–29) |
| 6: end for |
| 7: end for |
| 8: for … do |
| 9: Calculate smoothed … and … according to (37–38) |
| 10: end for |
| 11: maximization: |
| 12: Calculate … and … according to (45) and (47) |
| 13: …; |
| 14: end while |
| end |
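The control flow of Algorithm 1 (an expectation step followed by conditional maximizations, repeated until convergence or the iteration limit) can be sketched generically as below. `ecm`, `e_step` and the `cm_steps` callables are hypothetical stand-ins for equations (28–29), (37–38), (45) and (47), which are not reproduced here. The toy usage has no latent variables, so ECM reduces to coordinate ascent on a Gaussian log-likelihood; it only demonstrates the loop and the stopping rule.

```python
def ecm(e_step, cm_steps, params, max_iter=50, tol=1e-8):
    """Generic ECM loop: one E-step, then one CM-step per parameter block.

    Parameter blocks are scalars here for brevity.
    """
    params = dict(params)
    for it in range(1, max_iter + 1):
        stats = e_step(params)                    # expectation
        old = dict(params)
        for name, cm in cm_steps:                 # conditional maximizations
            params[name] = cm(stats, params)      # update one block, others fixed
        change = max(abs(params[k] - old[k]) for k in params)
        if change < tol:                          # converged
            return params, it
    return params, max_iter


# Toy usage (hypothetical): estimate mean and variance of fixed data block-wise.
data = [1.0, 2.0, 3.0, 4.0]


def e_step(params):
    return data  # no latent variables in this toy problem


def cm_mean(stats, params):
    return sum(stats) / len(stats)


def cm_var(stats, params):
    mu = params["mu"]
    return sum((x - mu) ** 2 for x in stats) / len(stats)


est, iters = ecm(e_step, [("mu", cm_mean), ("var", cm_var)], {"mu": 0.0, "var": 1.0})
print(est, iters)
```

Each CM-step uses the most recent values of the other blocks, which mirrors the separate shape and orientation updates in the maximization step of Algorithm 1.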

4. Simulation Results

4.1. Evaluation Index
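The results below report centroid RMSE, velocity RMSE and IoU. The exact definitions used in the paper are not reproduced in this section; the sketch below assumes the common ones: RMSE as the square root of the mean squared Euclidean error over time steps, and volume IoU between the estimated and true shapes, estimated by uniform Monte Carlo sampling inside a bounding box that contains both. The helpers `centroid_rmse`, `mc_iou` and `box` are illustrative.

```python
import math
import random


def centroid_rmse(estimates, truths):
    """RMSE of the Euclidean centroid error over all time steps."""
    sq = [sum((e - t) ** 2 for e, t in zip(pe, pt))
          for pe, pt in zip(estimates, truths)]
    return math.sqrt(sum(sq) / len(sq))


def box(lo, hi):
    """Membership test for an axis-aligned box (illustrative shape)."""
    return lambda p: all(lo_i <= x <= hi_i for x, lo_i, hi_i in zip(p, lo, hi))


def mc_iou(inside_a, inside_b, lo, hi, n=20000, seed=0):
    """Monte Carlo volume IoU of two shapes given membership tests.

    Points are drawn uniformly in the box [lo, hi], which must contain both shapes.
    """
    rng = random.Random(seed)
    inter = union = 0
    for _ in range(n):
        p = tuple(rng.uniform(lo_i, hi_i) for lo_i, hi_i in zip(lo, hi))
        a, b = inside_a(p), inside_b(p)
        if a and b:
            inter += 1
        if a or b:
            union += 1
    return inter / union if union else 0.0


# Usage: unit cube vs. the same cube shifted by 0.5 along x (true IoU = 1/3).
A = box((0.0, 0.0, 0.0), (1.0, 1.0, 1.0))
B = box((0.5, 0.0, 0.0), (1.5, 1.0, 1.0))
print(mc_iou(A, B, (0.0, 0.0, 0.0), (1.5, 1.0, 1.0)))
print(centroid_rmse([(0, 0, 0), (1, 1, 1)], [(0, 0, 0), (1, 1, 0)]))
```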

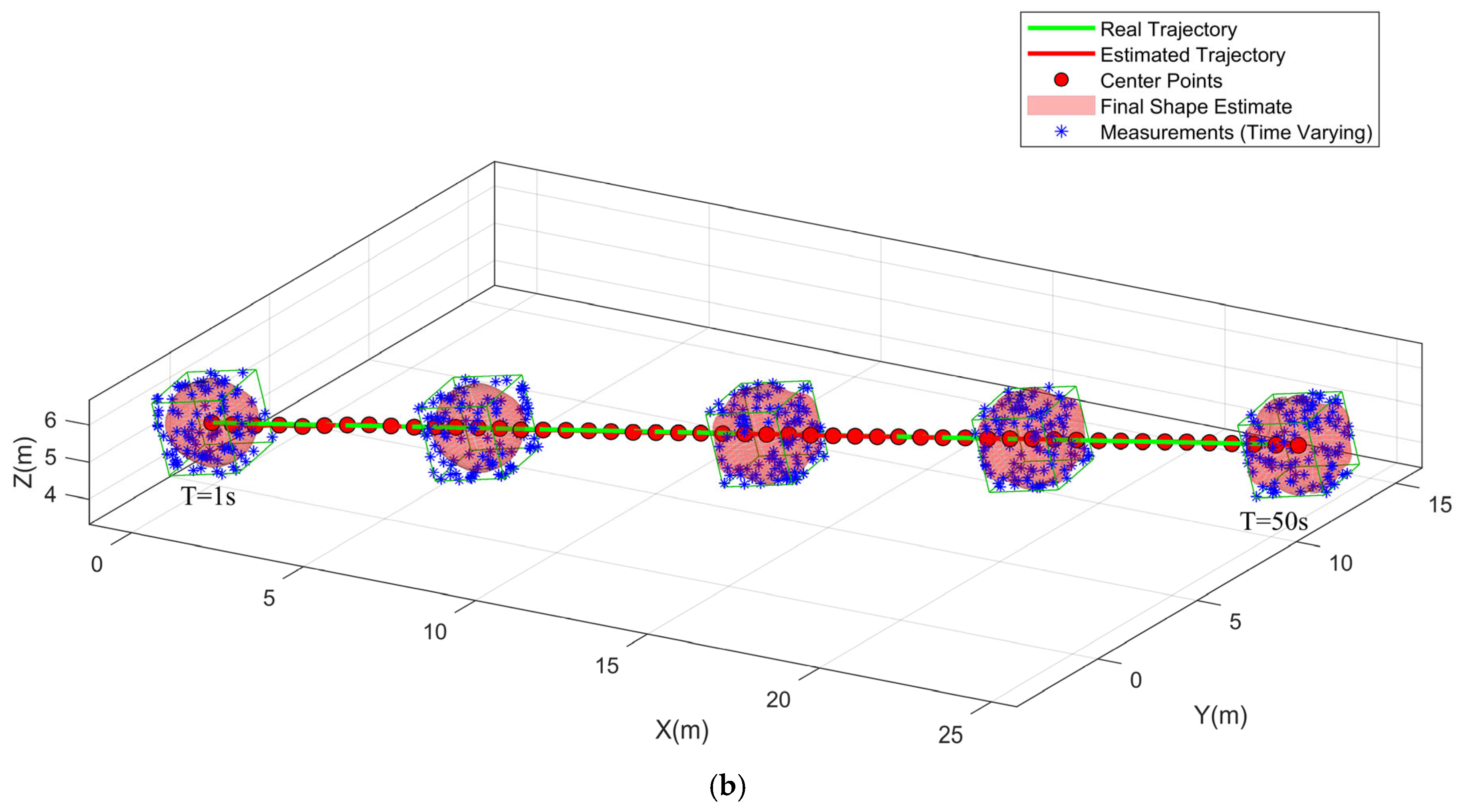

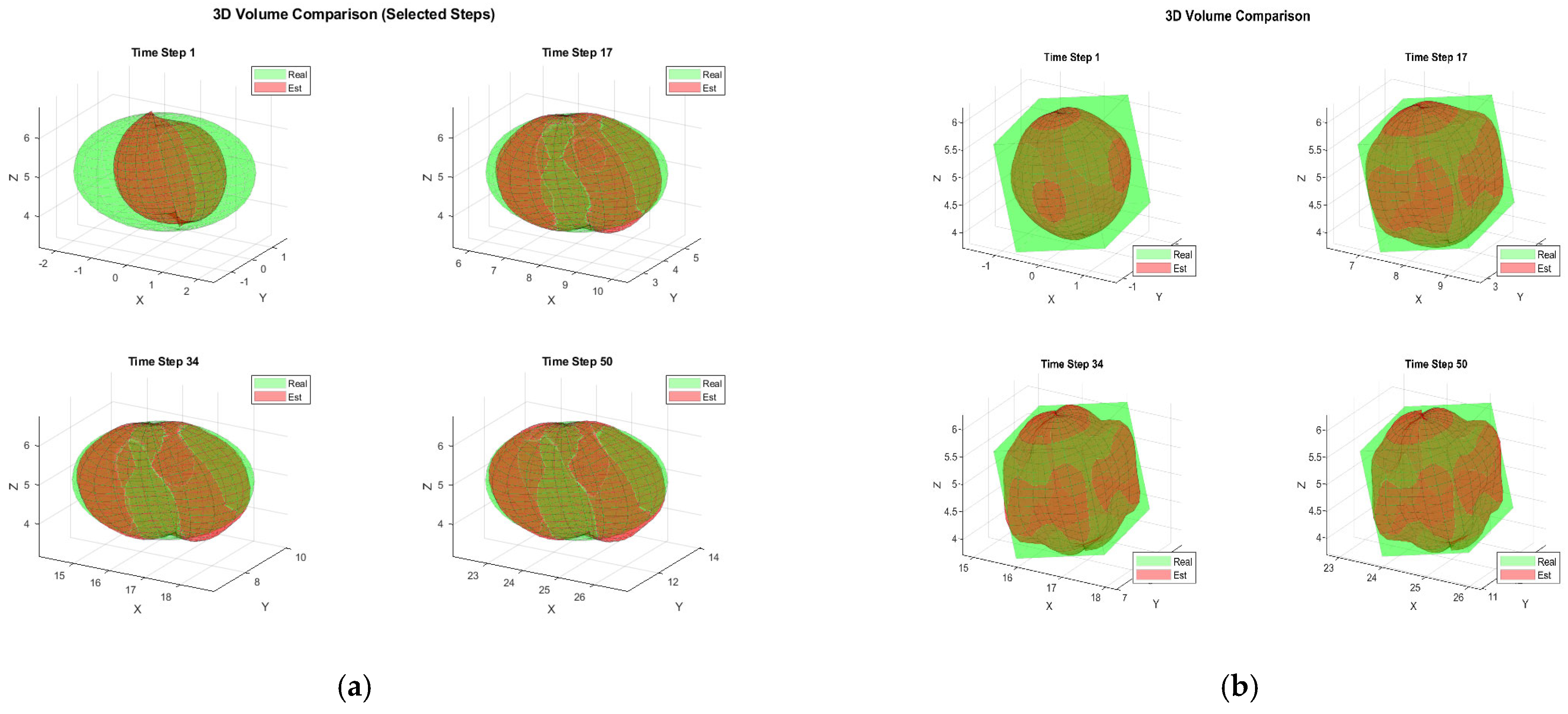

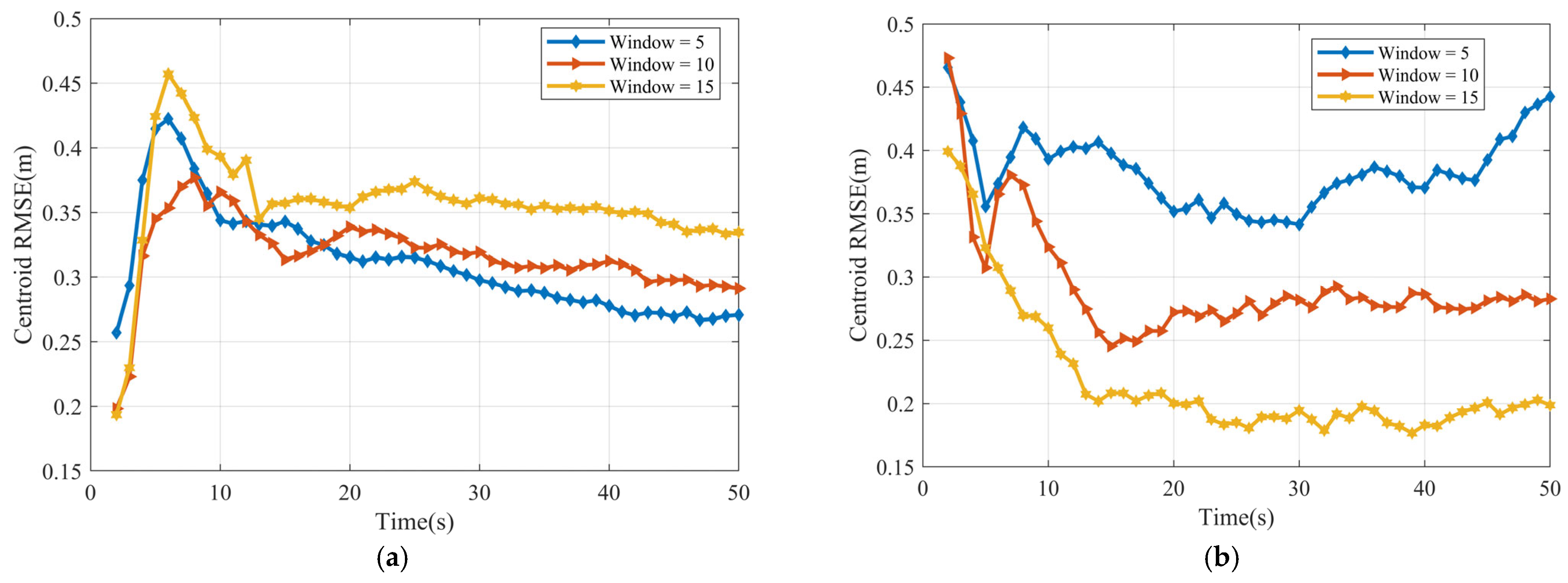

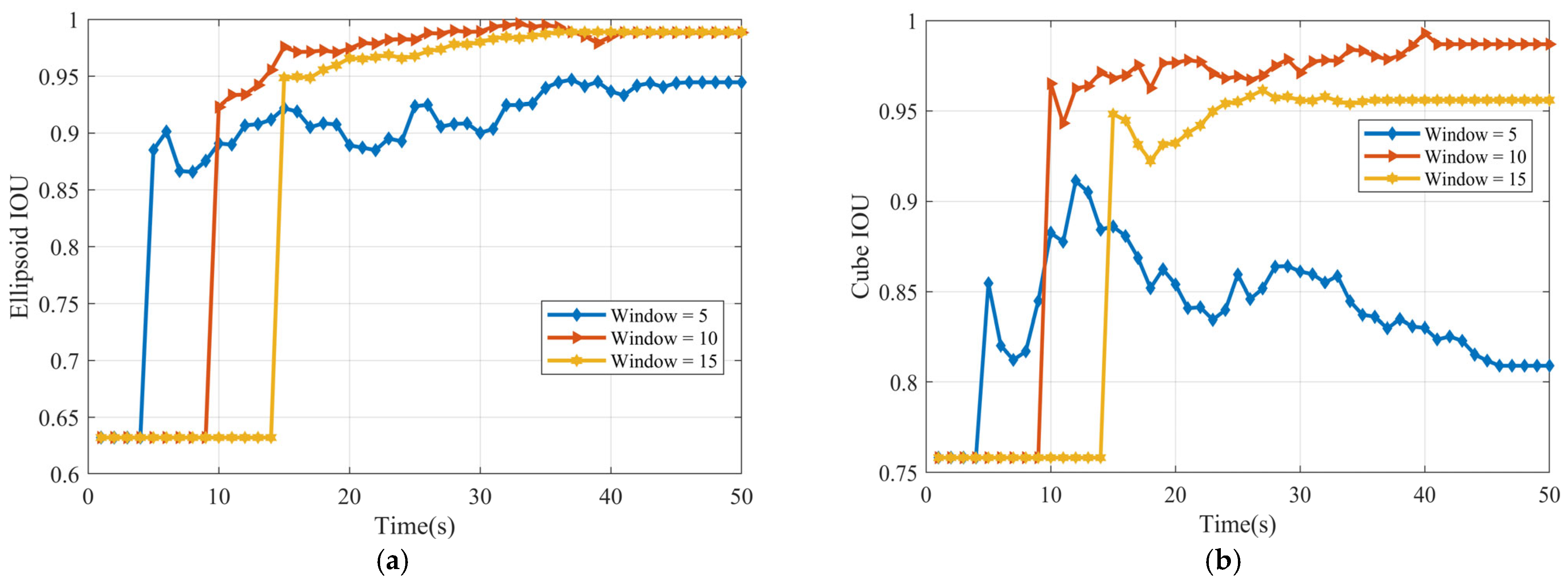

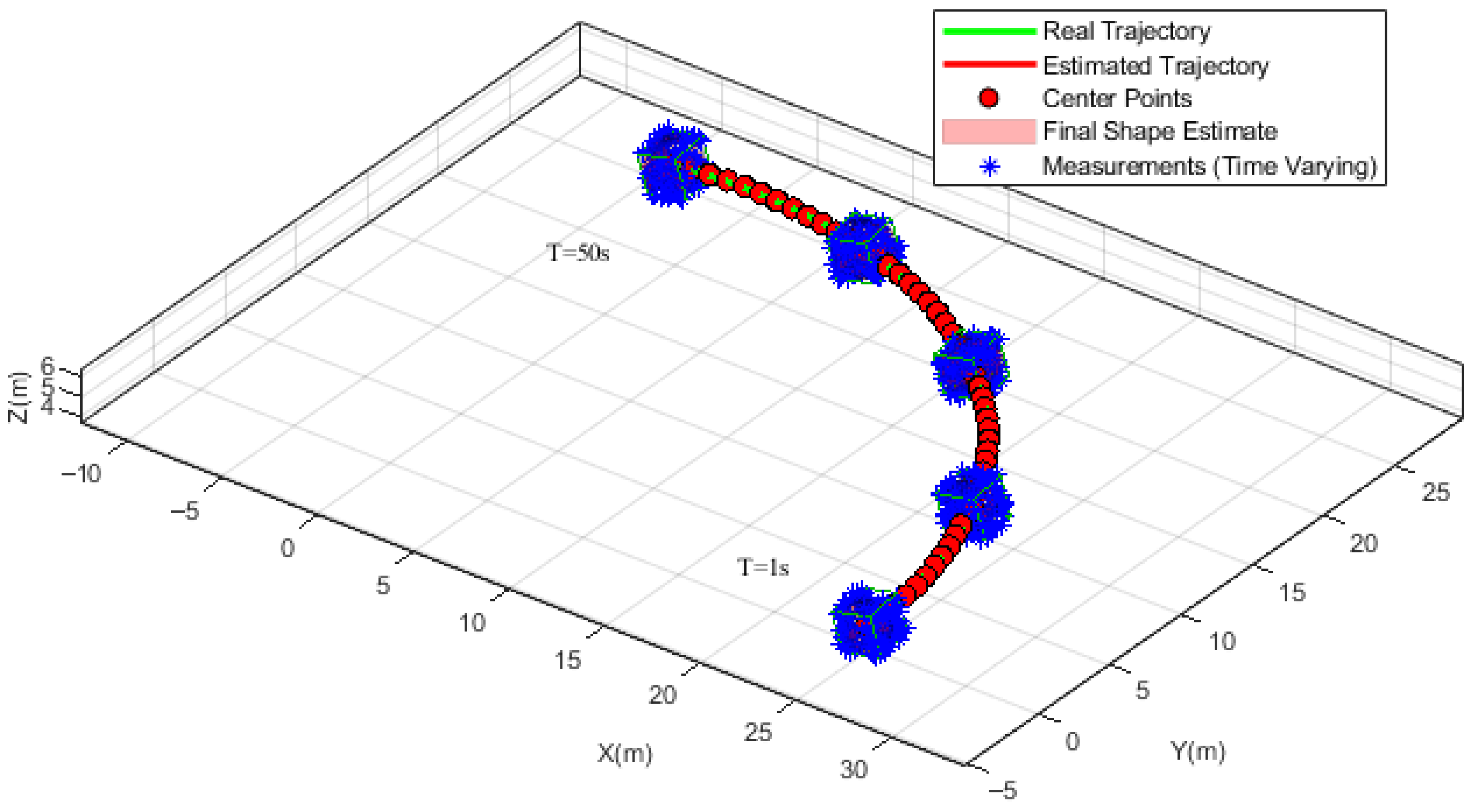

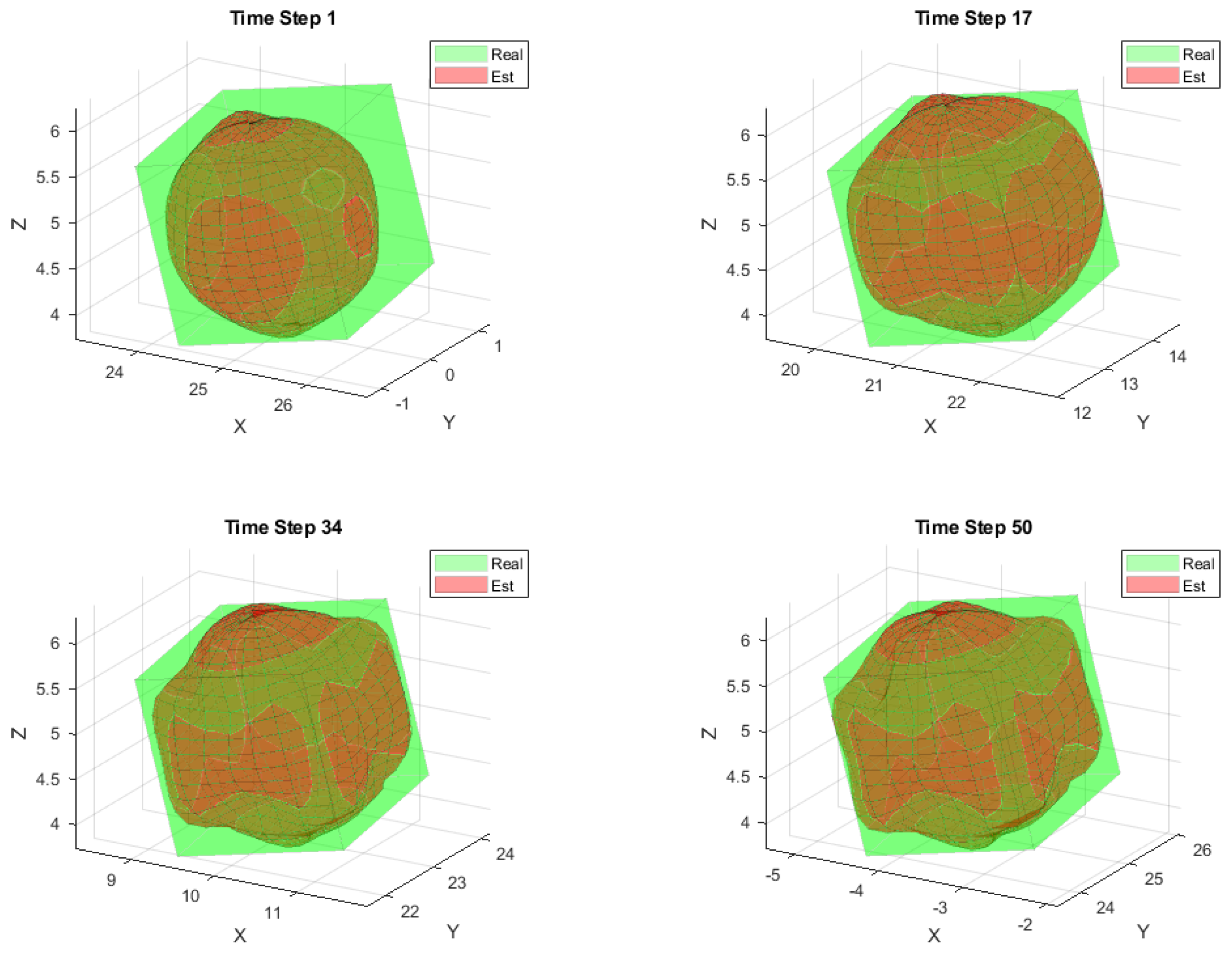

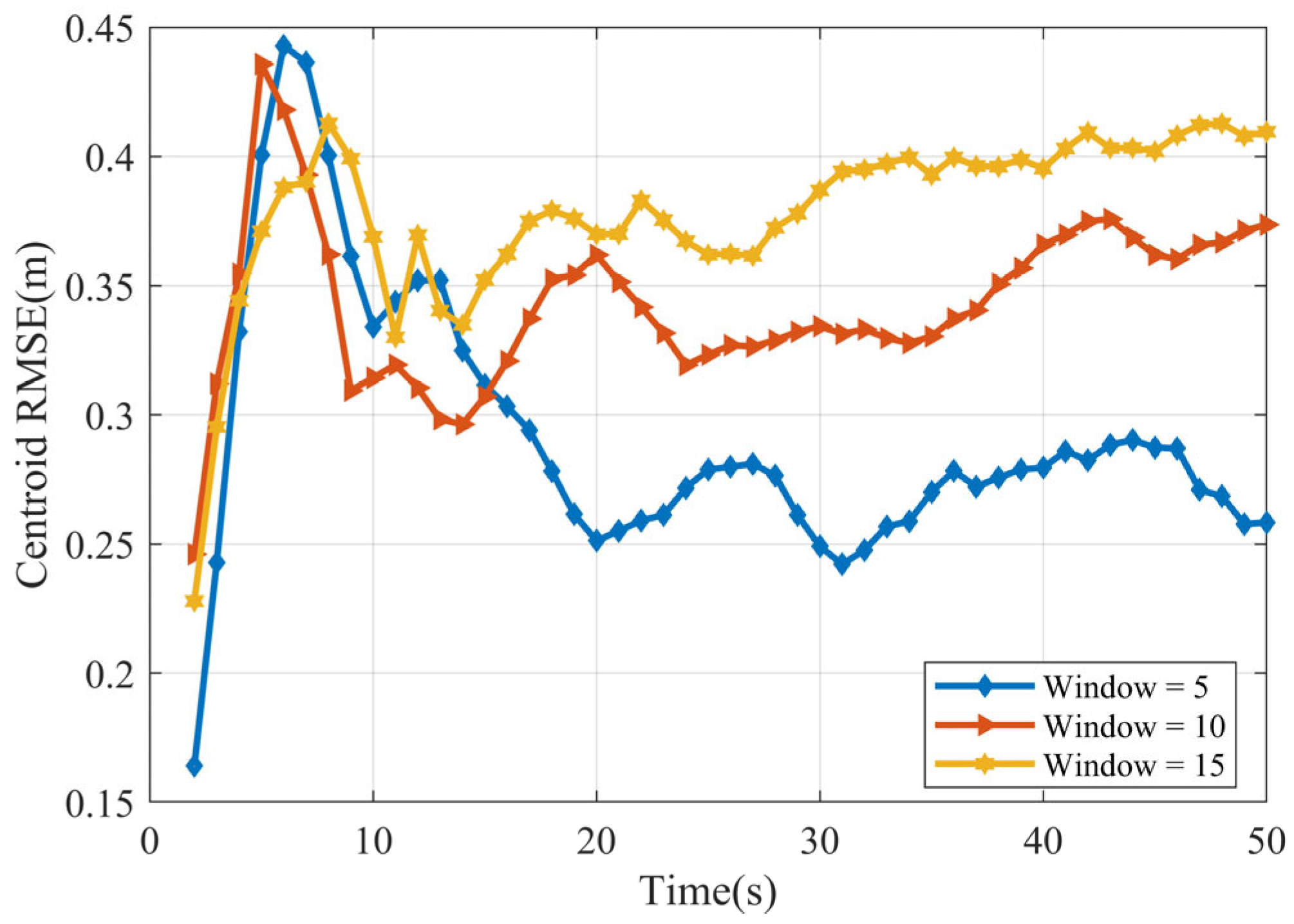

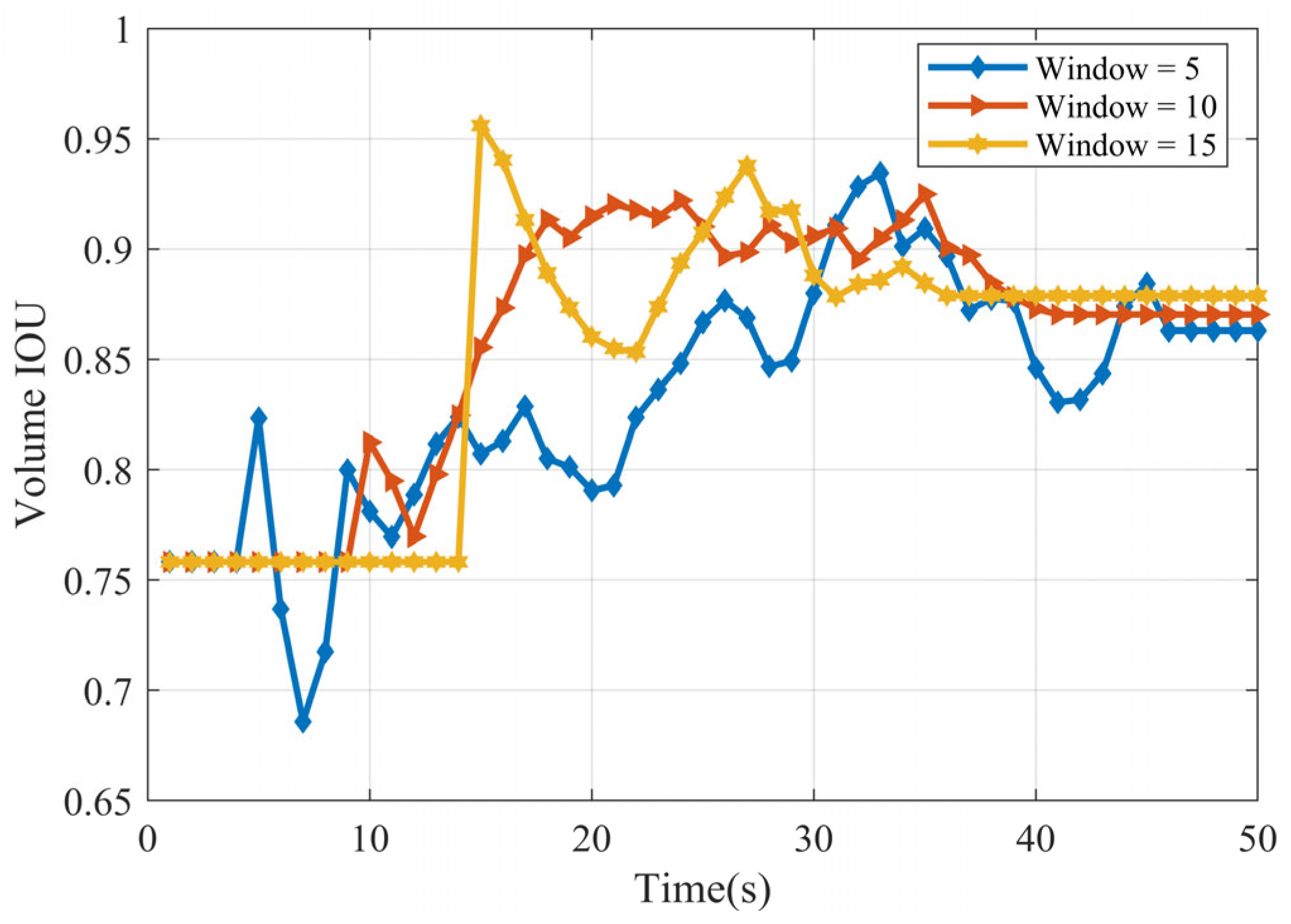

4.2. Scenario I

4.3. Scenario II

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| The error covariance of the target state at time | |

| The predicted error covariance of the target state at time | |

| The th innovation covariance at time | |

| The th cross-covariance at time | |

| The th error covariance at time | |

| The th smoothed covariance at time | |

| The smoothed error covariance at time | |

| Transition probability density of the state | |

| The likelihood function | |

| The likelihood function of the complete data | |

| The prior probability density of the state | |

| The smoothness penalty term of the cost function | |

| Number of ECM iterations | |

| Measurement index | |

| Target | Angle | T = 5 (rad) | T = 10 (rad) | T = 15 (rad) |
|---|---|---|---|---|
| Ellipsoid | | 0.020 | 0.030 | 0.030 |
| | | 0.080 | 0.050 | 0.060 |
| | | 0.050 | 0.050 | 0.050 |
| Cube | | 0.043 | 0.042 | 0.043 |
| | | 0.004 | 0.004 | 0.003 |
| | | 0.015 | 0.012 | 0.016 |

| Target | Algorithm | Centroid RMSE (m) | Velocity RMSE (m/s) | IoU |
|---|---|---|---|---|
| Ellipsoid | RM | 0.27 | 0.10 | 0.90 |
| | DFS-ECM | 0.26 | 0.07 | 0.96 |
| Cube | RM | 0.27 | 0.10 | 0.70 |
| | DFS-ECM | 0.20 | 0.07 | 0.95 |

| Target | Number of Measurements | Centroid RMSE (m) | IoU |
|---|---|---|---|
| Ellipsoid | 10 | 0.29 | 0.91 |
| | 20 | 0.28 | 0.92 |
| | 40 | 0.27 | 0.96 |
| Cube | 10 | 0.30 | 0.90 |
| | 20 | 0.28 | 0.92 |
| | 40 | 0.288 | 0.95 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Mao, H.; Yang, X. Three-Dimensional Extended Target Tracking and Shape Learning Based on Double Fourier Series and Expectation Maximization. Sensors 2025, 25, 4671. https://doi.org/10.3390/s25154671
