Digital Twin for Analog Mars Missions: Investigating Local Positioning Alternatives for GNSS-Denied Environments

Abstract

1. Introduction

2. Related Work

2.1. Digital Twin Technology in Space Exploration

2.2. Localization of Astronauts and Rovers

3. Methodology

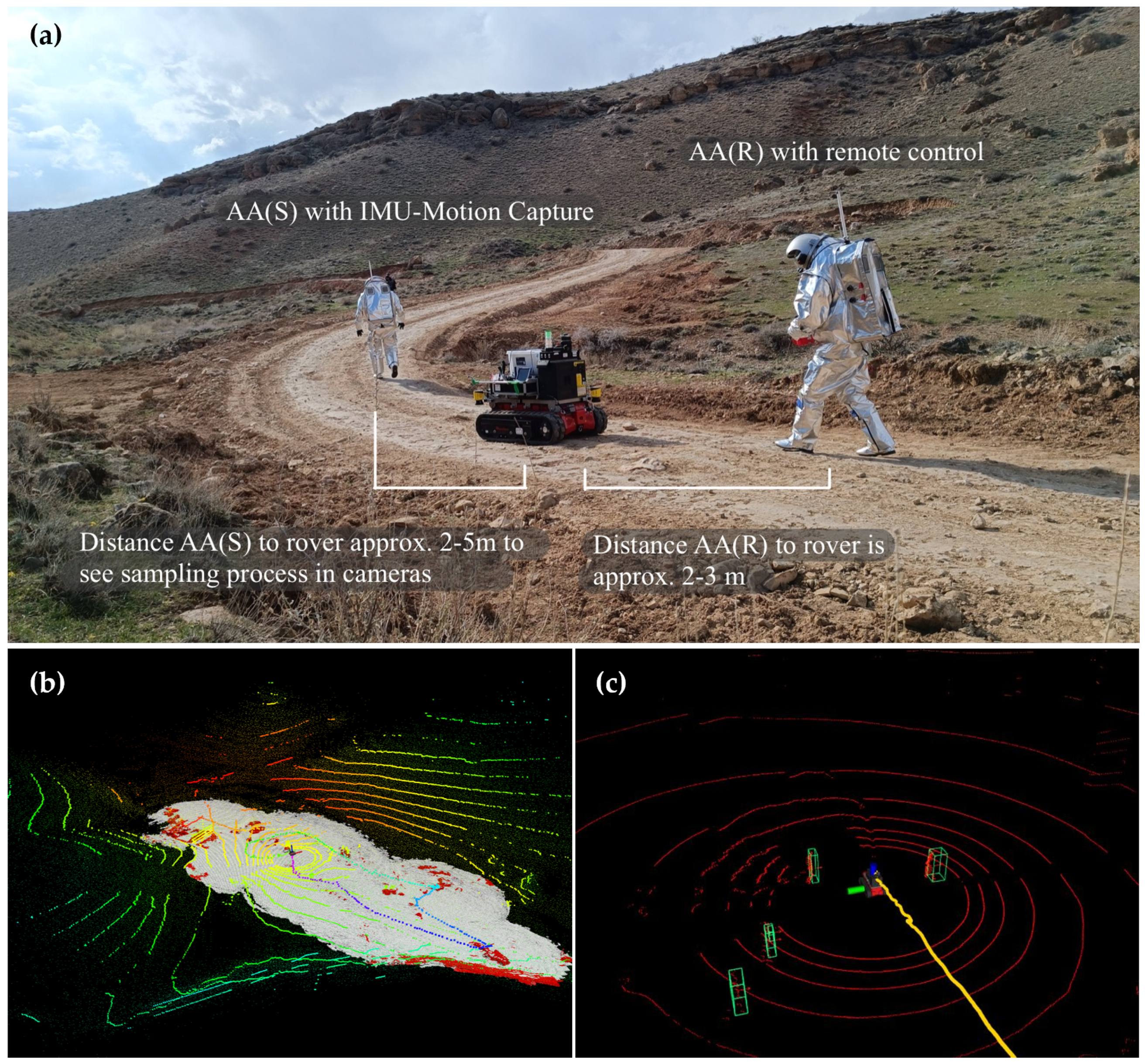

3.1. Human–Robotic Analog Mars Mission AMADEE-24

3.2. Study Design: Digital Twin Integration and Operator Localization

3.3. Data Acquisition

3.3.1. Drone Scans and Aerial Imaging

3.3.2. iROCS Rover

3.3.3. Aouda Space Suit Simulators

3.3.4. Xsens Awinda

3.4. Data Analysis: Alternative Astronaut and Rover Localization

3.4.1. iROCS Localization Using LeGO-LOAM-BOR

3.4.2. Human Detection with Adaptive Clustering

1. Data Acquisition: The sensor network collects position data points at discrete time intervals.

2. Cluster Formation: Data points are grouped based on their proximity in space. The distance between points is computed as

   $$d(\mathbf{p}_i, \mathbf{p}_j) = \lVert \mathbf{p}_i - \mathbf{p}_j \rVert_2,$$

   where $d(\mathbf{p}_i, \mathbf{p}_j)$ is the Euclidean distance between two data points $\mathbf{p}_i$ and $\mathbf{p}_j$.

3. Adaptive Thresholding: The algorithm adapts the clustering radius based on the distribution of the points. If the distance between two points exceeds the threshold, they are assigned to different clusters. The threshold depends on n, the number of points, and on a scaling factor.

4. Cluster Centroid Calculation: The centroid of each cluster is computed as the average of the positions in the cluster:

   $$\mathbf{c}_k = \frac{1}{|C_k|} \sum_{\mathbf{p}_i \in C_k} \mathbf{p}_i,$$

   where $C_k$ is the set of points in cluster k, and $|C_k|$ is the number of points in that cluster.

5. Localization Refinement: The astronaut’s final position is estimated as the centroid of the cluster that contains the most sensor data points within a given time window.

To map the astronaut’s position into a global coordinate system, the position vector of the astronaut in the local coordinate frame of the sensor network, $\mathbf{p}_{\mathrm{local}}$, is transformed by applying a rotation matrix and a translation vector. The transformation is given by

$$\mathbf{p}_{\mathrm{global}} = \mathbf{R}\,\mathbf{p}_{\mathrm{local}} + \mathbf{t},$$

where

- $\mathbf{p}_{\mathrm{global}}$ is the astronaut’s position in the global coordinate system;

- $\mathbf{R}$ is the rotation matrix that accounts for the orientation difference between the local sensor frame and the global frame;

- $\mathbf{t}$ is the translation vector representing the offset between the origin of the local and global coordinate systems.
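The five steps and the local-to-global transformation described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the implementation used in the study: the function names (`adaptive_threshold`, `cluster_points`, `localize`) are hypothetical, and the threshold rule (a scaling factor `alpha` times the mean nearest-neighbour distance) is an assumed stand-in, since the text only states that the threshold depends on the number of points and a scaling factor.

```python
import numpy as np


def adaptive_threshold(points: np.ndarray, alpha: float = 2.0) -> float:
    """ASSUMED rule: alpha times the mean nearest-neighbour distance.

    Requires at least two points.
    """
    diffs = points[:, None, :] - points[None, :, :]
    dists = np.linalg.norm(diffs, axis=-1)  # pairwise Euclidean distances
    np.fill_diagonal(dists, np.inf)         # ignore self-distances
    return alpha * float(dists.min(axis=1).mean())


def cluster_points(points: np.ndarray, radius: float) -> np.ndarray:
    """Label points so that chains of neighbours within `radius` share a cluster."""
    n = len(points)
    labels = np.full(n, -1, dtype=int)      # -1 marks "not yet assigned"
    current = 0
    for seed in range(n):
        if labels[seed] != -1:
            continue
        labels[seed] = current
        stack = [seed]
        while stack:                        # grow the cluster from the seed
            i = stack.pop()
            d = np.linalg.norm(points - points[i], axis=1)
            for j in np.flatnonzero((d <= radius) & (labels == -1)):
                labels[j] = current
                stack.append(j)
        current += 1
    return labels


def localize(points: np.ndarray, rotation: np.ndarray,
             translation: np.ndarray, alpha: float = 2.0) -> np.ndarray:
    """Centroid of the largest cluster, mapped into the global frame."""
    radius = adaptive_threshold(points, alpha)
    labels = cluster_points(points, radius)
    largest = np.bincount(labels).argmax()            # cluster with most points
    centroid_local = points[labels == largest].mean(axis=0)
    return rotation @ centroid_local + translation    # rotate, then translate
```

For example, four points scattered around (1, 1) plus one outlier at (10, 10) form two clusters; with a 90° rotation and a translation of (5, 0), `localize` returns approximately (4, 1). A mean-based threshold is sensitive to outliers, so a median or a density-based rule may be preferable in practice.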

3.4.3. IMU-Motion Capture

3.5. Statistical Analysis

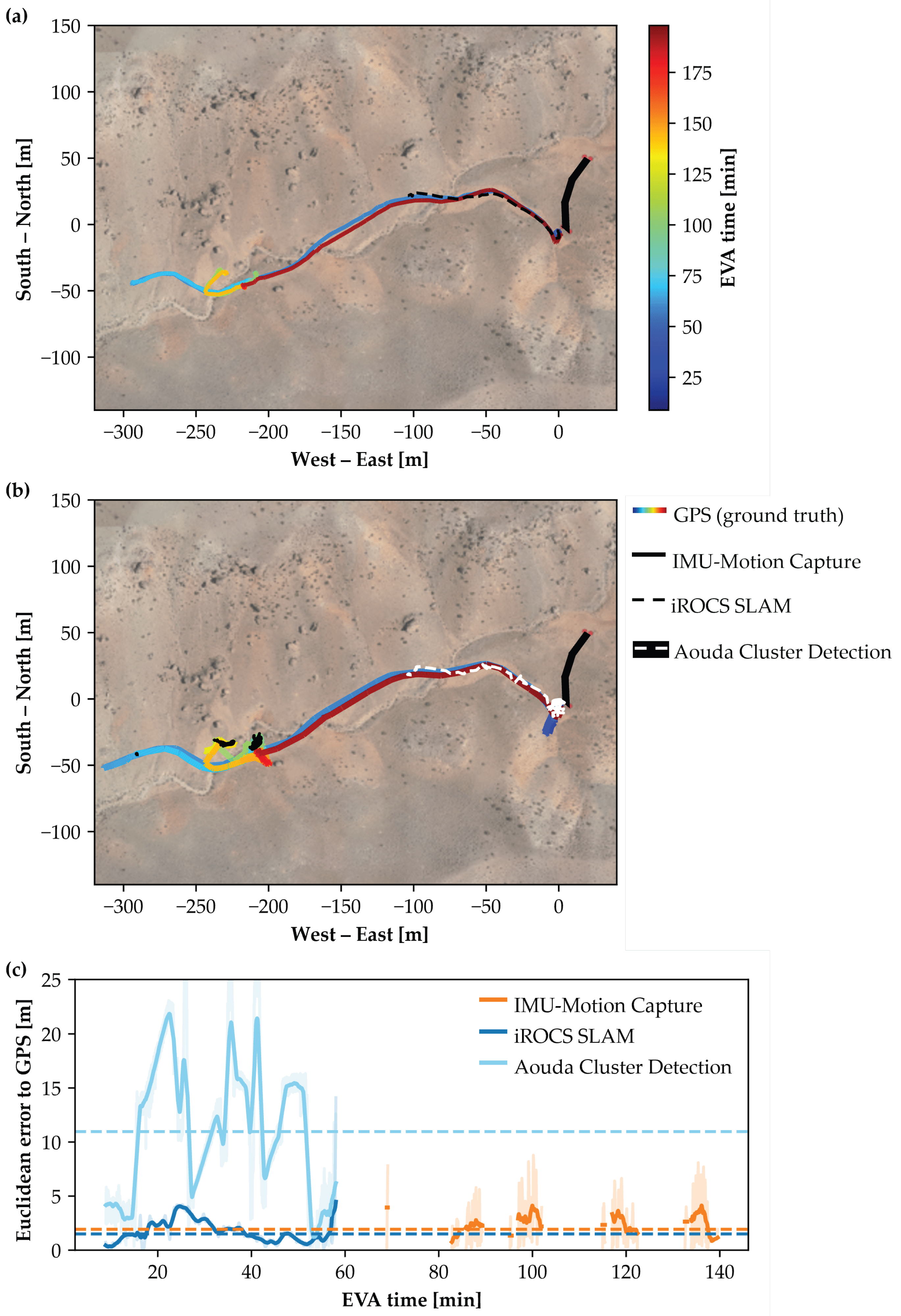

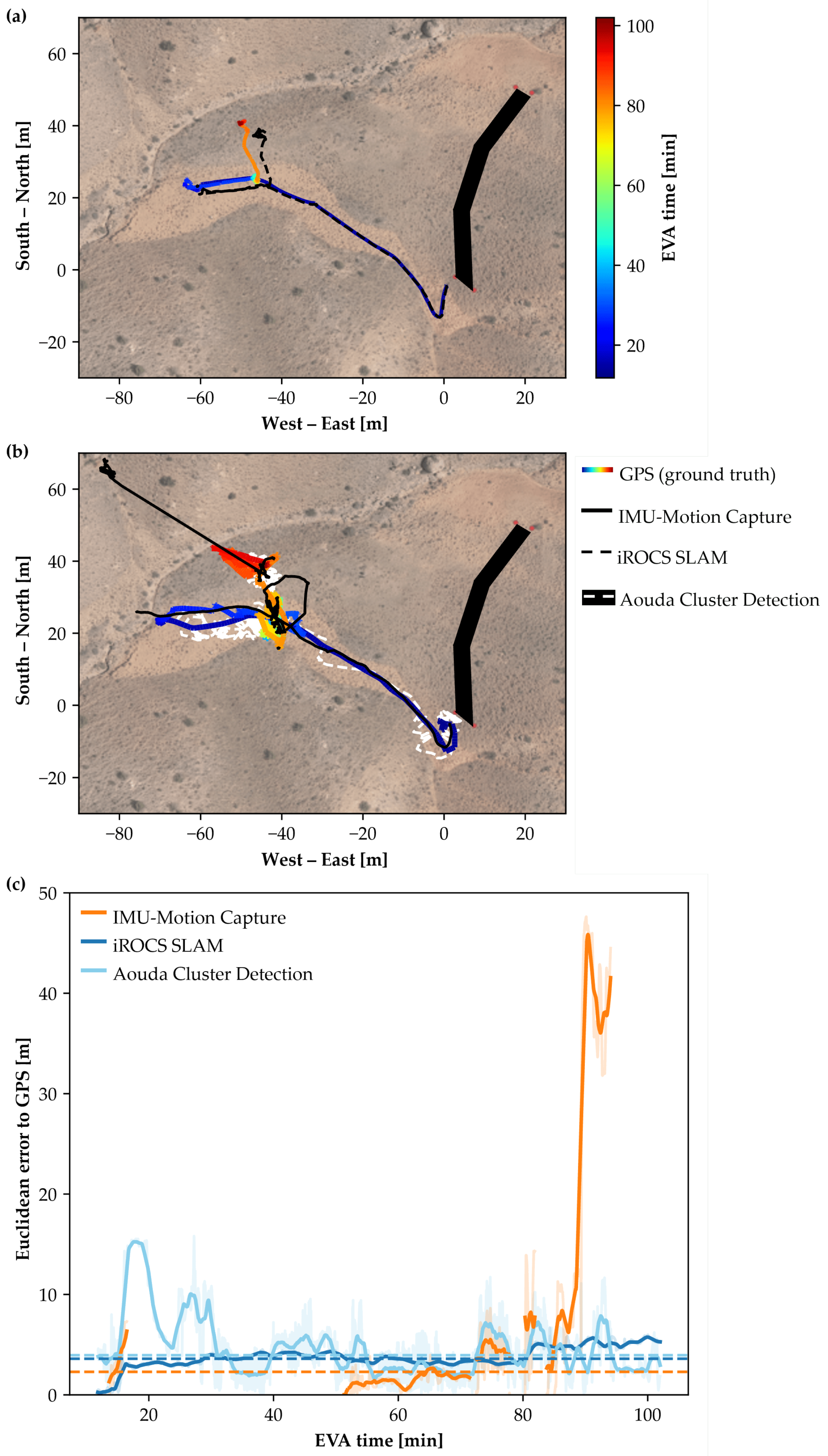

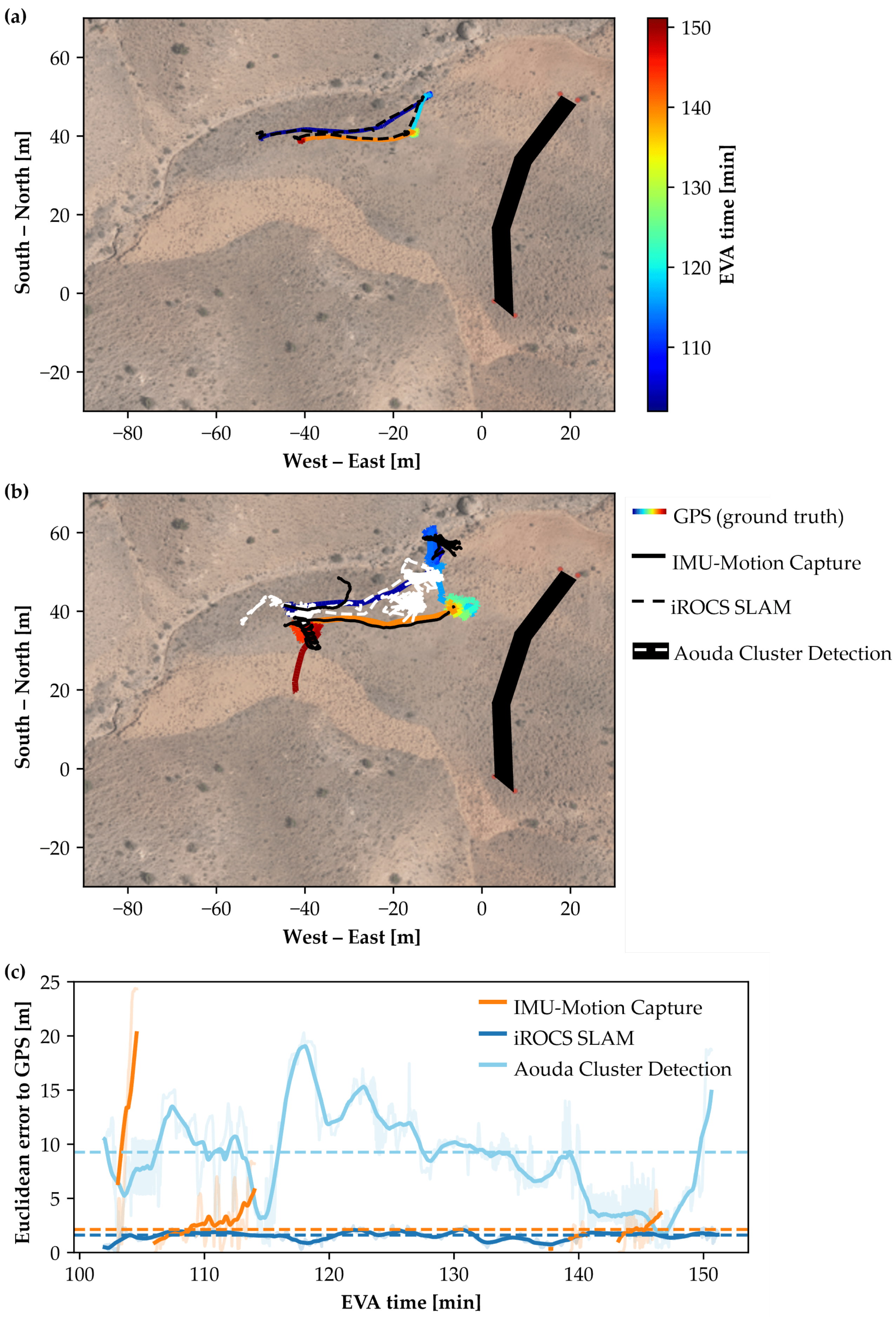

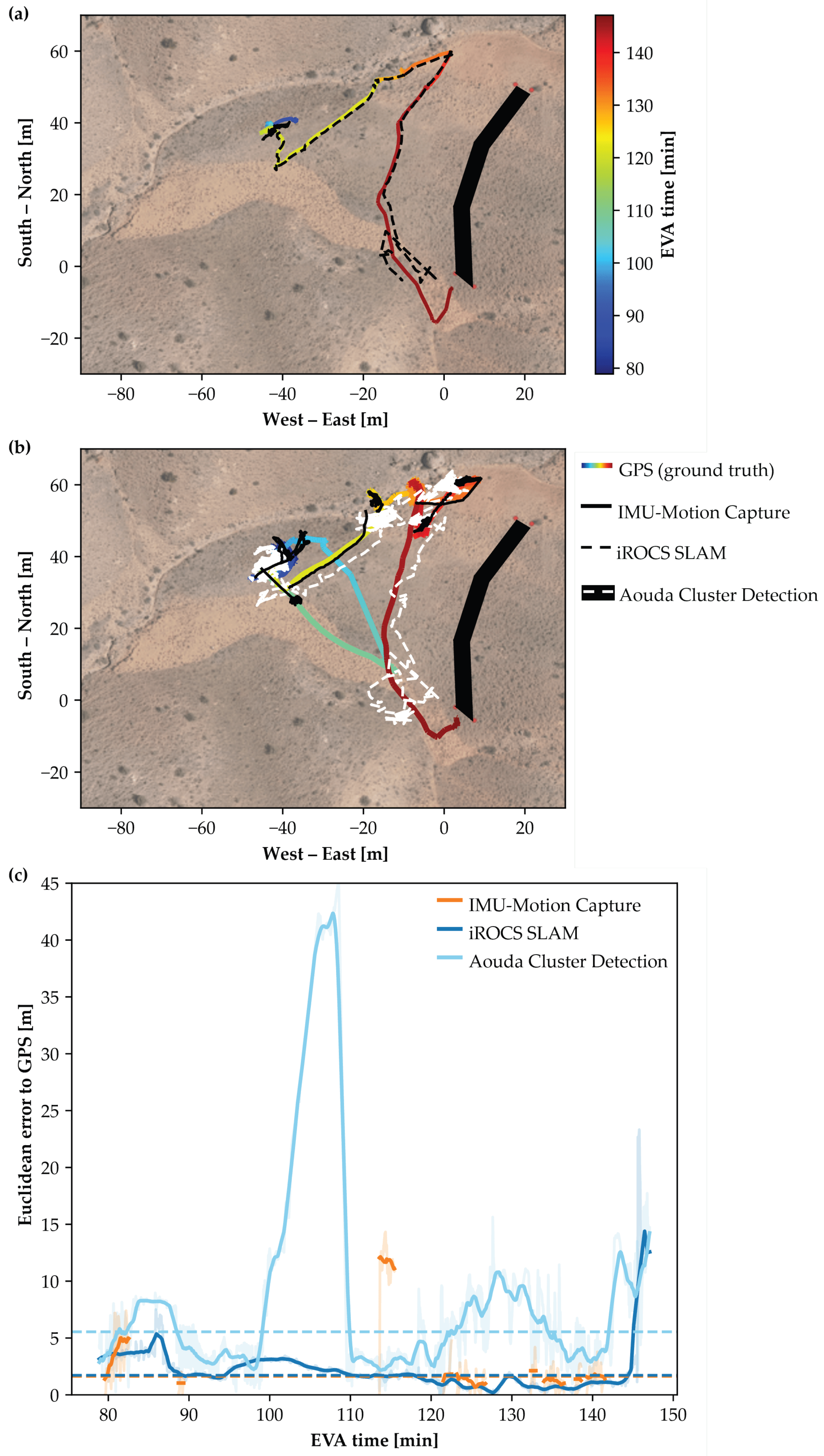

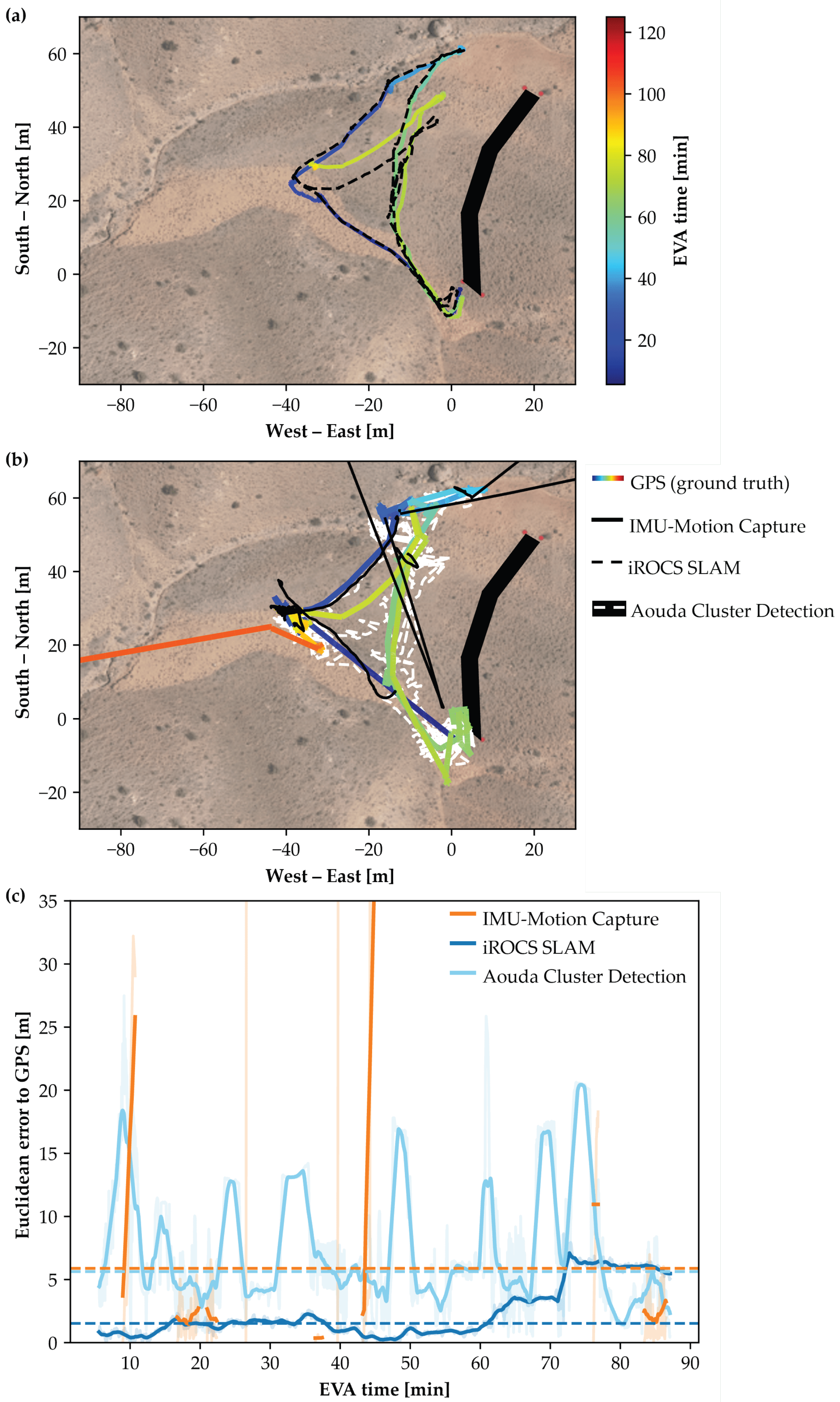

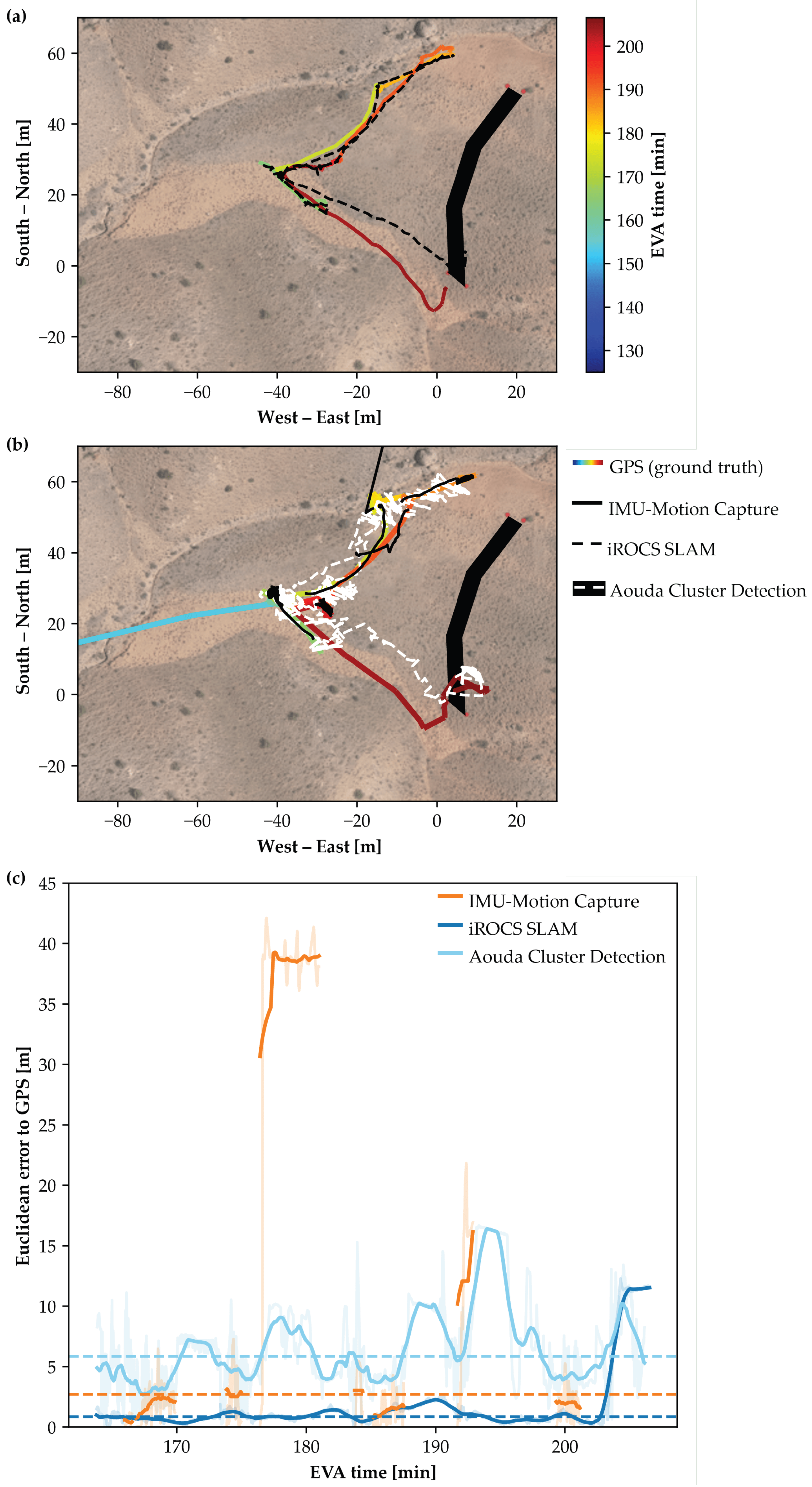

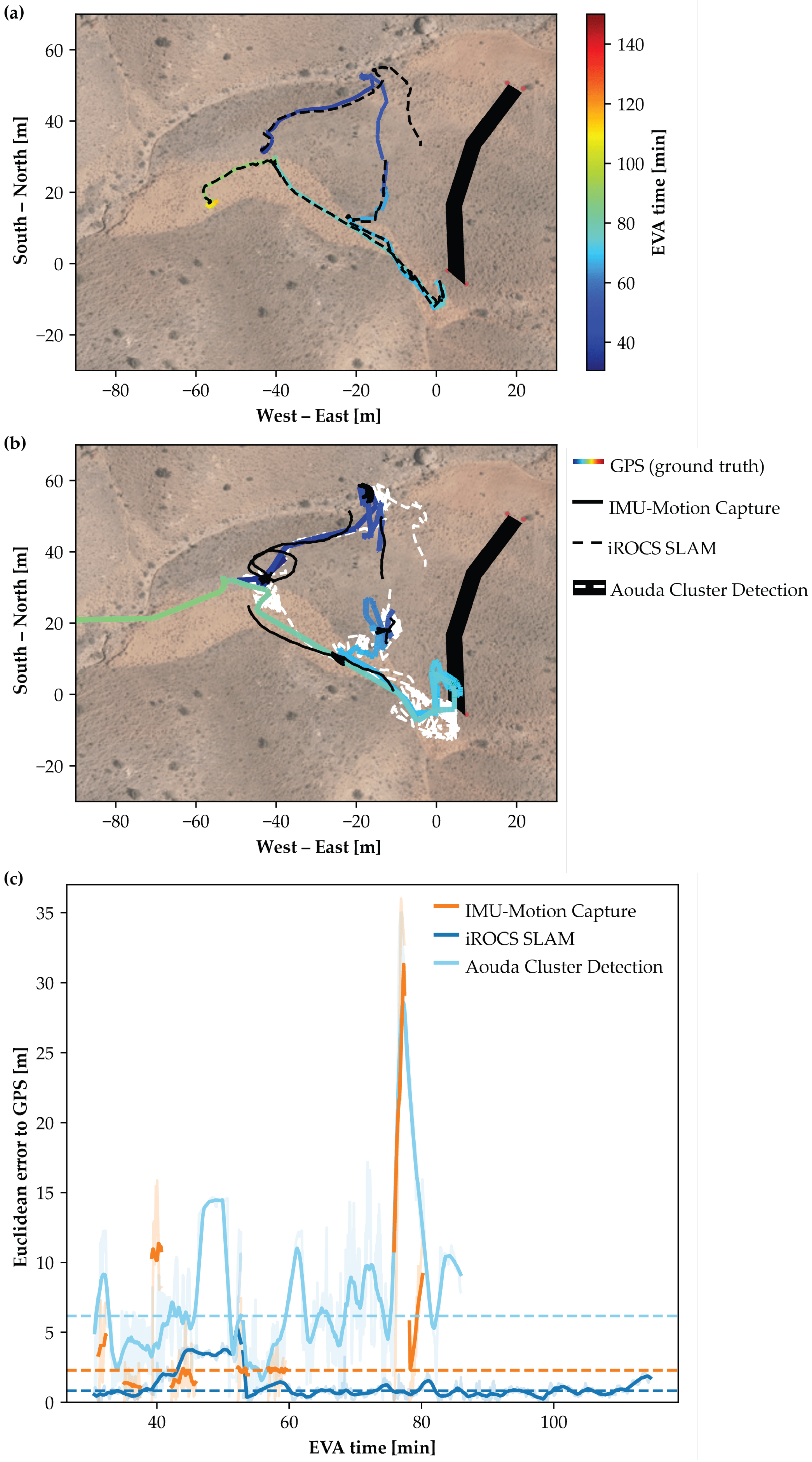

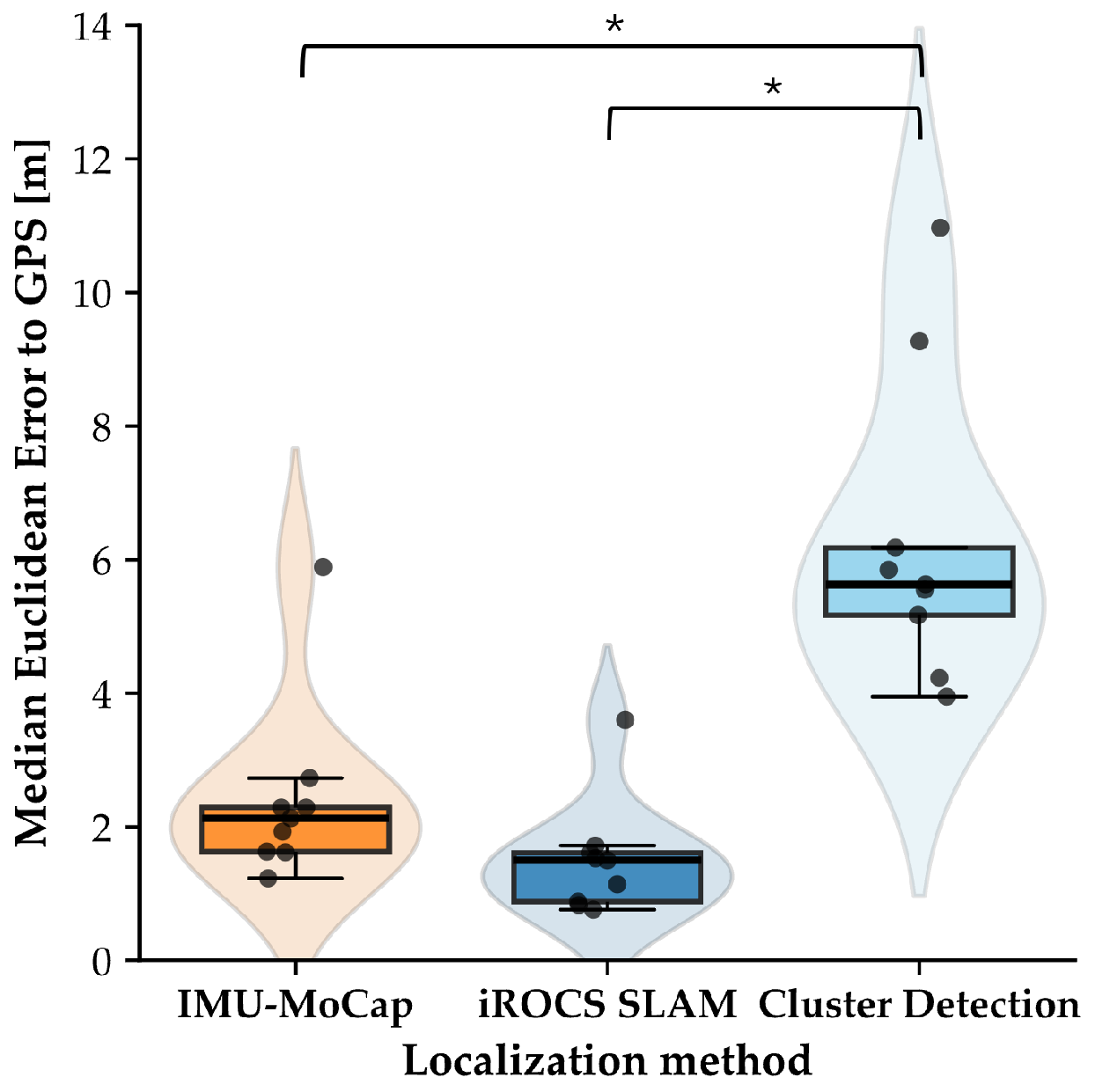

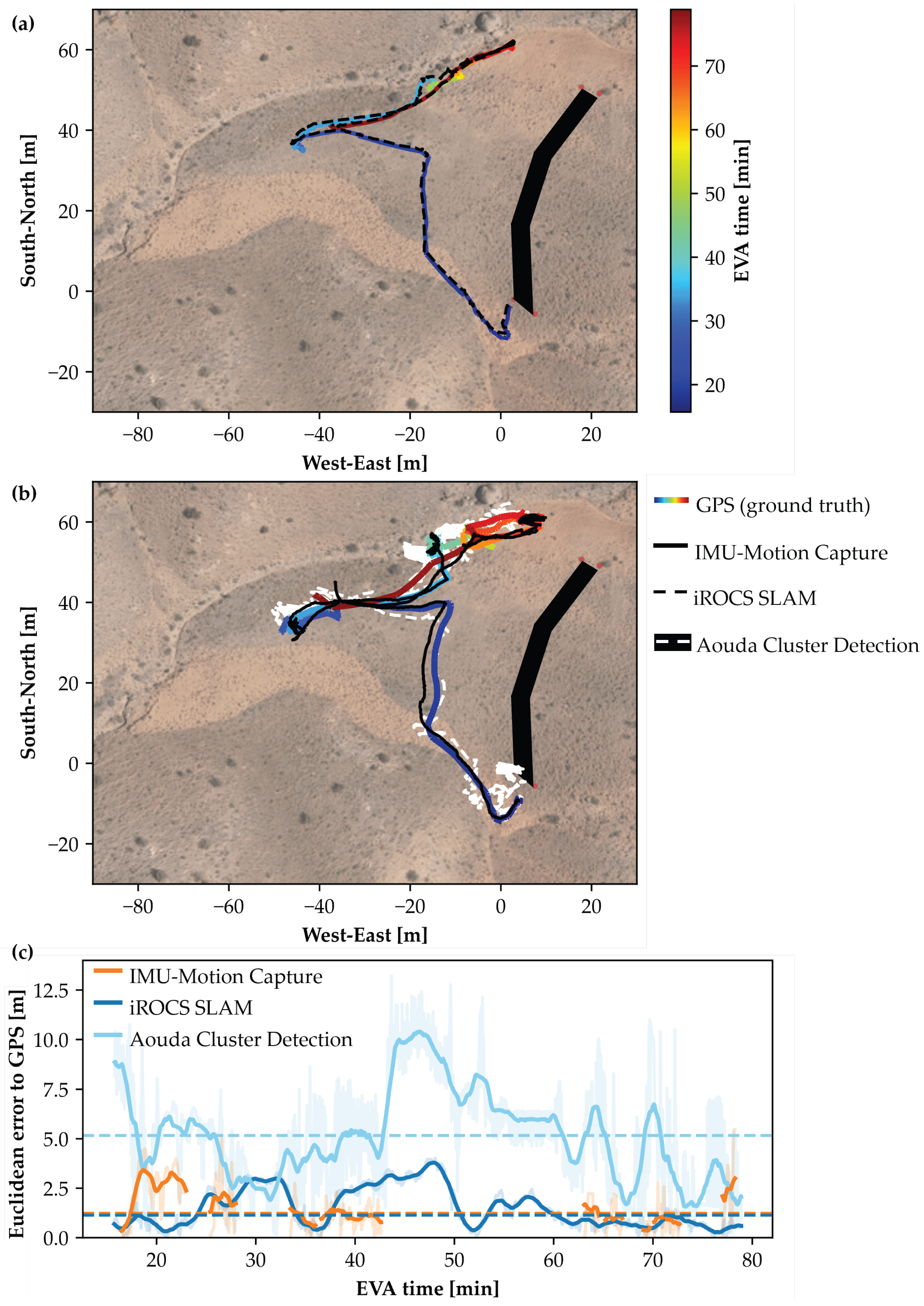

4. Results

5. Discussion

5.1. Alternative Local Positioning Approaches

5.2. Advanced Mission Planning Using Digital Twins

- Pre-mission planning: The digital twin enables the Remote Science Team and Flight Planning Team to virtually explore the terrain and pre-select POI and traverse paths. However, practical usability must be evaluated in future deployments.

- Situational awareness: The digital representation of terrain and surface conditions may enhance situational awareness for the MSC. This includes a clearer understanding of operator position, environmental hazards, and spatial relationships between POI.

- Post-EVA analysis: The system facilitates detailed EVA reconstruction, enabling the analysis of traverse paths, task durations, time allocation, HRI, and potentially hazardous situations. When combined with physiological telemetry, such analyses support refining operational procedures and time scheduling.

- Mission evaluation: The digital twin contributes to post-mission assessment by supporting the evaluation of critical performance metrics [42].

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| AA(R) | Analog Astronaut (Rover operator) |
| AA(S) | Analog Astronaut (Geological sampling) |
| ANOVA | Analysis of Variance |
| BOR | Bundle Optimization Refinement |
| CEP | Circular Error Probable |
| CoM | Center of Mass |
| DEM | Digital Elevation Model |
| ECG | Electrocardiogram |
| EVA | Extravehicular Activities |
| GNSS | Global Navigation Satellite System |
| GPS | Global Positioning System |
| HRI | Human–Robot Interaction |
| ICP | Iterative Closest Point |
| IMU | Inertial Measurement Unit |
| iROCS | Intuitive Rover Operation and Collecting Samples |
| LeGO-LOAM | Lightweight and Ground-Optimized LiDAR Odometry and Mapping |
| LiDAR | Light Detection and Ranging |
| MEE | Median Euclidean Error |
| MoCap | Motion Capture |
| MSC | Mission Control Center |
| OBDH | On-Board Data Handling |
| OeWF | Österreichisches Weltraum Forum (Austrian Space Forum) |
| POI | Point of Interest |
| RANSAC | Random Sample Consensus |
| RTE | Rosenbauer Technical Equipment |
| SLAM | Simultaneous Localization and Mapping |
| UMM | Universal Multi-Layer Map |
| WGS84 | World Geodetic System 1984 |
| XKF3 | Extended Kalman Filter (version 3, used by Xsens Awinda) |

Appendix A. Experimental Results of All EVAs

References

1. Patel, D.D.; Geiskkovitch, D.Y. The Space Between Us: Bridging Human and Robotic Worlds in Space Exploration. In Proceedings of the Companion of the 2024 ACM/IEEE International Conference on Human-Robot Interaction, Boulder, CO, USA, 11–15 March 2024; pp. 833–836.

2. Crawford, I.A. Dispelling the myth of robotic efficiency. Astron. Geophys. 2012, 53, 2–22.

3. Hambuchen, K.; Marquez, J.; Fong, T. A review of NASA human-robot interaction in space. Curr. Robot. Rep. 2021, 2, 265–272.

4. Sajeev, S.; Aloor, J.; Shakya, A. Space Robotics versus Humans in Space. In Proceedings of the IAA-ISRO-ASI Symposium on Human Spaceflight Programme, Bengaluru, India, 22–24 January 2020.

5. Ravanis, E.; Sejkora, N.; Groemer, G.; Gruber, S. Preparing a Mars analogue mission-Flight Planning for AMADEE-20. In Proceedings of the 43rd COSPAR Scientific Assembly, Sydney, Australia, 28 January–4 February 2021; Volume 43, p. 162.

6. Liu, W.; Wu, M.; Wan, G.; Xu, M. Digital twin of space environment: Development, challenges, applications, and future outlook. Remote Sens. 2024, 16, 3023.

7. Schmidt, C.M.; Paterson, T.; Schmidt, M.A. The Astronaut Digital Twin: Accelerating discovery and countermeasure development in the optimization of human space exploration. In Building a Space-Faring Civilization; Academic Press: Cambridge, MA, USA, 2025; pp. 245–256.

8. Hamza, B.; Nebylov, A.; Yatsevitch, G. Original solutions for localization and navigation on the surface of mars planet. In Proceedings of the 2010 IEEE Aerospace Conference, Big Sky, MT, USA, 6–13 March 2010; pp. 1–13.

9. Li, R.; He, S.; Skopljak, B.; Meng, X.; Tang, P.; Yilmaz, A.; Jiang, J.; Oman, C.M.; Banks, M.; Kim, S. A multisensor integration approach toward astronaut navigation for landed lunar missions. J. Field Robot. 2014, 31, 245–262.

10. Ziegler, J.; Kretzschmar, H.; Stachniss, C.; Grisetti, G.; Burgard, W. Accurate human motion capture in large areas by combining IMU-and laser-based people tracking. In Proceedings of the 2011 IEEE/RSJ International Conference on Intelligent Robots and Systems, San Francisco, CA, USA, 25–30 September 2011; pp. 86–91.

11. Shan, T.; Englot, B. LeGO-LOAM: Lightweight and Ground-Optimized Lidar Odometry and Mapping on Variable Terrain. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; pp. 4758–4765.

12. Battler, M.; Auclair, S.; Osinski, G.R.; Bamsey, M.T.; Binsted, K.; Bywaters, K.; Kobrick, R.L.; Harris, J.; Barry, N. Surface exploration metrics of a long duration polar analogue study: Implications for future Moon and Mars missions. Proc. Int. Astronaut. Congr. 2008, 4, 548–557.

13. Groemer, G.; Ozdemir, S. Planetary analog field operations as a learning tool. Front. Astron. Space Sci. 2020, 7, 32.

14. Kereszturi, A. Geologic field work on Mars: Distance and time issues during surface exploration. Acta Astronaut. 2011, 68, 1686–1701.

15. Johnson, B.J.; Buffington, J.A. EVA systems technology gaps and priorities 2017. In Proceedings of the International Conference on Environmental Systems (No. JSC-CN-39609), Singapore, 16–20 July 2017.

16. Johnson, A.W.; Newman, D.J.; Waldie, J.M.; Hoffman, J.A. An EVA mission planning tool based on metabolic cost optimization. In SAE Technical Paper (No. 2009-01-2562); SAE International: Warrendale, PA, USA, 2009.

17. Pinello, L.; Brancato, L.; Giglio, M.; Cadini, F.; De Luca, G.F. Enhancing Planetary Exploration through Digital Twins: A Tool for Virtual Prototyping and HUMS Design. Aerospace 2024, 11, 73.

18. Yang, W.; Zheng, Y.; Li, S. Application Status and Prospect of Digital Twin for On-Orbit Spacecraft. IEEE Access 2021, 9, 106499.

19. Reimeir, B.; Wargel, A.; Riedl, F.; Maach, S.; Weidner, R.; Grömer, G.; Federolf, P. Neil Armstrong’s digital twin: An integrative approach for movement analysis in simulated space missions. Curr. Issues Sport Sci. (CISS) 2024, 9, 018.

20. Ozdemir, S.; Groemer, G.; Garnitschnigg, S. Introduction to mars analog mission: Amadee20 and exploration cascade. In Proceedings of the EGU General Assembly Conference Abstracts, Vienna, Austria, 3–8 May 2020; p. 12937.

21. Yuan, Q.; Chen, I.M. Localization and velocity tracking of human via 3 IMU sensors. Sens. Actuators Phys. 2014, 212, 25–33.

22. Strozzi, N.; Parisi, F.; Ferrari, G. Impact of on-body imu placement on inertial navigation. IET Wirel. Sens. Syst. 2018, 8, 3–9.

23. Hernandez, Y.; Kim, K.H.; Benson, E.; Jarvis, S.; Meginnis, I.; Rajulu, S. Underwater space suit performance assessments part 1: Motion capture system development and validation. Int. J. Ind. Ergon. 2019, 72, 119–127.

24. Kobrick, R.L.; Lopac, N.; Schuman, J.; Covello, C.; French, J.; Gould, A.; Meyer, M.; Southern, T.; Lones, J.; Ehrlich, J.W. Spacesuit Range of Motion Investigations Using Video and Motion Capture Systems at Spaceflight Analogue Expeditions and Within the Erau Suit Lab. 2018. Available online: https://commons.erau.edu/publication/1436 (accessed on 22 July 2025).

25. Di Capua, M.; Akin, D. Body Pose Measurement System (BPMS): An Inertial Motion Capture System for Biomechanics Analysis and Robot Control from Within a Pressure Suit. In Proceedings of the 42nd International Conference on Environmental Systems, San Diego, CA, USA, 15–19 July 2012; p. 3643.

26. Bertrand, P.J.; Anderson, A.; Hilbert, A.; Newman, D.J. Feasibility of spacesuit kinematics and human-suit interactions. In Proceedings of the 44th International Conference on Environmental Systems, Tucson, AZ, USA, 13–17 July 2014.

27. Tian, X.; Wang, X.; Wang, S.; Wei, Y.; Zhang, Y. Human Center of Mass Trajectory Estimation Based on the IMU System. In Proceedings of the 2024 IEEE 19th Conference on Industrial Electronics and Applications (ICIEA), Kristiansand, Norway, 5–8 August 2024; pp. 1–5.

28. Gong, W.; Zhang, B.; Wang, C.; Yue, H.; Li, C.; Xing, L.; Qiao, Y.; Zhang, W.; Gong, F. A Literature Review: Geometric Methods and Their Applications in Human-Related Analysis. Sensors 2019, 19, 2809.

29. Manakitsa, N.; Maraslidis, G.S.; Moysis, L.; Fragulis, G.F. A review of machine learning and deep learning for object detection, semantic segmentation, and human action recognition in machine and robotic vision. Technologies 2024, 12, 15.

30. Li, J.; Smith, A. Histogram-based Feature Descriptors for Pedestrian Detection using LiDAR. IEEE Trans. Intell. Veh. 2016, 2, 345–357.

31. Yan, X.; Wang, Y. Learning-based Human Detection by Fusing LiDAR and Camera Data. Robot. Auton. Syst. 2018, 107, 20–31.

32. Paulus, R.; Meyer, J. End-to-End Deep Learning for Human Detection in 3D LiDAR Scans. IEEE Robot. Autom. Lett. 2019, 4, 1234–1241.

33. Bormann, R.; Jordan, F. Adaptive Clustering with Dynamic Distance Threshold for Robust Object Detection. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Brisbane, Australia, 21–25 May 2018; pp. 4562–4569.

34. Magnusson, M.; Gustafsson, P. Spatial Clustering of LiDAR Data with Environmental Adaptation. J. Field Robot. 2017, 34, 789–804.

35. Edlinger, R.; Nüchter, A. Feel the Point Clouds: Traversability Prediction and Tactile Terrain Detection Information for an Improved Human-Robot Interaction. In Proceedings of the IEEE International Conference on Robot and Human Interactive Communication (RO-MAN), Busan, Republic of Korea, 28–31 August 2023; pp. 1121–1128.

36. Edlinger, R.; Nüchter, A. Universal Multi-Layer Map Display and Improved Situational Awareness in Real-World Facilities. In Proceedings of the IEEE International Symposium on Safety Security Rescue Robotics (SSRR), New York, NY, USA, 12–14 November 2024; pp. 255–260.

37. Groemer, G.E.; Hauth, S.; Luger, U.; Bickert, K.; Sattler, B.; Hauth, E.; Föger, D.; Schildhammer, D.; Agerer, C.; Ragonig, C.; et al. The Aouda.X space suit simulator and its applications to astrobiology. Astrobiology 2012, 12, 125–134.

38. Thomas, K.S.; McMann, H.J. US Spacesuits; Springer: Berlin/Heidelberg, Germany, 2006; pp. 25–60.

39. Schepers, M.; Giuberti, M.; Bellusci, G. Xsens MVN: Consistent Tracking of Human Motion Using Inertial Sensing; Xsens Technologies: Enschede, The Netherlands, 2018; Volume 1, pp. 1–8.

40. Paulich, M.; Schepers, M.; Rudigkeit, N.; Bellusci, G. Xsens MTw Awinda: Miniature Wireless Inertial-Magnetic Motion Tracker for Highly Accurate 3D Kinematic Applications; Xsens: Enschede, The Netherlands, 2018; pp. 1–9.

41. Nelson, W. Use of Circular Error Probability in Target Detection; Technical Report (No. MTR10293); MITRE Corporation: Bedford, MA, USA, 1988.

42. Gruber, S.; Groemer, G.; Paternostro, S.; Larose, T.L. AMADEE-18 and the analog mission performance metrics analysis: A benchmarking tool for mission planning and evaluation. Astrobiology 2020, 20, 1295–1302.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Reimeir, B.; Leininger, A.; Edlinger, R.; Nüchter, A.; Grömer, G. Digital Twin for Analog Mars Missions: Investigating Local Positioning Alternatives for GNSS-Denied Environments. Sensors 2025, 25, 4615. https://doi.org/10.3390/s25154615
